Oscillating Neural Synchrony

Abstract

This chapter applied stable information theory and Reduced Error Logistic Regression (RELR) to oscillating neural synchrony phenomena in neural computation. The concept of oscillating neural synchrony was introduced as measured by electroencephalography (EEG). The relationship of the EEG and local field potentials to the probability of binary spiking in neural populations was then introduced, with the suggestion that local field potentials are good proxies for the probability of binary spiking in an underlying neural population. The relationship of EEG frequency and rhythmic structure to cognitive effects related to consciousness was also reviewed. The Hubel and Wiesel hierarchical integration model of visual perception was contrasted with Singer's binding by synchrony proposal, and the Gestalt psychology roots of Singer's proposal were summarized. An RELR model of square perception was then shown to be consistent with binding by synchrony in the case of the illusory Kanizsa square. The concept of spike-timing-dependent plasticity was also connected to oscillating neural synchrony models of brain learning, and this connection was suggested to be consistent with RELR. Finally, evidence on the timing of cognitive operations as reflected in rhythmic oscillating neural synchrony patterns in the EEG was reviewed, and similarities to the rhythmic structure of music were suggested.

Keywords

Alpha rhythm; Attention; Beta waves; Binding by synchrony; Delta waves; Electroencephalography (EEG); Gamma frequency range; Gestalt; Hierarchical integration model of perception; Law of Pragnanz; Magnetoencephalography (MEG); Theta frequency range; Alpha frequency range; Delta frequency range; Beta frequency range

“In the melody, in the high singing principal voice, leading the whole and progressing with unrestrained freedom, in the uninterrupted significant connection of one thought from beginning to end, and expressing a whole, I recognize the highest grade of the Will's objectification, the intellectual life and endeavor of man.”

Arthur Schopenhauer, The World as Will and Representation, 1818.1

Contents

1. The EEG and Neural Synchrony

2. Neural Synchrony, Parsimony, and Grandmother Cells

3. Gestalt Pragnanz and Oscillating Neural Synchrony

4. RELR and Spike-Timing-Dependent Plasticity

5. Attention and Neural Synchrony

There is an enormous gap today between knowledge at the level of individual neurons and knowledge at the level of neural ensembles. The technology simply has not existed to fill this gap with good empirical measurement. Instead, computational modeling methods have attempted to bridge this divide, but their insights, including those of Reduced Error Logistic Regression (RELR) and its associated information theory, are quite fallible unless they are supported by empirical data. Promising new imaging methods are now available that may finally bridge this chasm, and this is a major focus of a new initiative led by the National Institutes of Health (NIH) in the United States called the Brain Activity Map (BAM) initiative. The BAM initiative eventually could match the NIH Human Genome Project in scale and impact. These new imaging methods are based upon nanotechnology and will allow activity from large collections of neurons to be recorded simultaneously.2

While the BAM proposal acknowledges that computational modeling approaches will still have a place, all computational models of neural function will be greatly enhanced by these data. In many cases, these data may falsify current proposals that attempt to bridge the enormous gap between individual neural function and populations of neurons including the RELR models reviewed in this book. One may wonder how helpful better data on neural computation and consciousness would be for machine learning applications. Much smarter machines should be possible with better observations of how the massively parallel brain may achieve the intricate timing necessary for accurate and rapid cognitive prediction and explanation. Yet, this knowledge also could be humbling and suggest limitations to cognitive machines designed to simulate these neural ensemble processes.

This chapter introduces the idea of oscillating neural synchrony as a basic organizing principle in ensembles of neurons. It draws on abundant research, at both the individual neural level and the population level, concerning how information is rapidly coded and computed in the brain's massively parallel processing. The importance of oscillating neural synchrony in neural computation is unlikely to change as new measurements become available through the BAM initiative, but a much better understanding of how oscillating neural synchrony arises in neural ensembles and how it may reflect cognition and consciousness is likely. Oscillating neural synchrony is the most obvious observation when the brain's electromagnetic fields are measured with electroencephalography (EEG) and magnetoencephalography (MEG). These measurements are quite noisy and thus require significant averaging or filtering to remove noise. Yet, the resulting signals often have substantial rhythmic and periodic structure that invites comparison to another phenomenon that seems to reflect cognition and consciousness. This is music.

The nineteenth-century German philosopher Arthur Schopenhauer saw evidence for intelligence in all forms of matter. To Schopenhauer, there is a natural hierarchical order to intelligence, with human thought simply at the highest level. Schopenhauer suggested that the art form of music is our means to represent and understand this fundamental intelligence process, which he called the Will. Whereas all the other art forms represent the objective expressions of the Will, music, he argued, represents the inner or subjective dynamics of the Will. Low bass tones represent the Will in inorganic forms and high tones represent the Will in organic forms, but Schopenhauer speculated that melody is the aspect of music that represents human thought, because it leads with the most informative highest tones and thus connects underlying patterns into a meaningful harmonic whole. Whether or not one accepts the deep mysticism that Schopenhauer borrowed from Eastern philosophical traditions, it is hard not to agree that music can represent and move something very deep within us. Later sections of this chapter will explore striking similarities between structures in musical forms and the brain's neural signaling at an ensemble level. But first, let us examine the concept of neural synchrony and how it is measured with the EEG.

1 The EEG and Neural Synchrony

The scalp-recorded EEG, along with local field potentials in the brain, is generated by excitatory and inhibitory postsynaptic graded potentials that are synchronized across a large number of neurons in close proximity.3 While the scalp EEG and local field potentials do not directly reflect the faster spiking responses that constitute neural binary signals, the time histogram of spiking responses in a local brain region closely matches the temporal course of local field potentials.4 Thus, local field potentials can be thought of as a nonrandom summation that roughly reflects the probability of a binary spiking response in a local neural ensemble, whereas the EEG is a cruder and noisier far-field reflection of these local field potentials.5,6
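To make the proxy relationship concrete, here is a minimal simulation under stated assumptions: each hypothetical neuron in an ensemble spikes independently (Bernoulli) in each 1 ms bin with a shared, oscillating probability `p_t` that stands in for the local field potential. Averaging the binary spikes across the ensemble, a population time histogram, then recovers `p_t` closely. The 10 Hz oscillation, the ensemble size, and the helper name `population_rate` are illustrative choices, not values from the cited studies.

```python
import numpy as np

def population_rate(p_t, n_neurons, rng):
    """Fraction of a hypothetical ensemble spiking in each time bin.

    Each neuron spikes independently (Bernoulli) with probability p_t[k] in
    bin k, so the ensemble average is an estimate of p_t."""
    spikes = rng.random((n_neurons, p_t.size)) < p_t   # boolean spike raster
    return spikes.mean(axis=0)

rng = np.random.default_rng(0)
t = np.arange(500) / 1000.0                     # 500 bins of 1 ms
# Hypothetical 10 Hz field oscillation mapped to a spike probability in [0.05, 0.45].
p_t = 0.25 + 0.2 * np.sin(2 * np.pi * 10 * t)
rate = population_rate(p_t, n_neurons=2000, rng=rng)
r = np.corrcoef(rate, p_t)[0, 1]
print(f"correlation between ensemble histogram and spike probability: {r:.3f}")
```

With 2,000 neurons the histogram tracks the underlying probability almost perfectly; reducing `n_neurons` to a few dozen makes the histogram visibly noisier.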

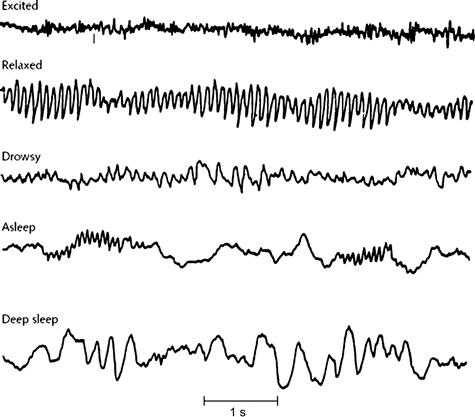

Neural synchrony as reflected by the EEG often oscillates in very regular rhythms, and these EEG patterns are strikingly different across conscious states. The predominant frequency of the EEG is a rough indicator of the level of consciousness, as shown in Fig. 6.1: faster rhythms imply a more awake brain. However, slower rhythms are often mixed with faster rhythms during intense periods of awake cognitive processing, and faster frequencies can be superimposed upon the slowest delta waves during deep sleep.

Figure 6.1 This early EEG recording depicts the basic levels of consciousness and human cognitive states that are associated with the EEG. Excited conscious states happen during aroused wakefulness and are associated with faster beta- (15–30 Hz) and gamma-EEG frequencies (>30 Hz). Relaxed conscious states occur during reflective wakefulness and are associated with alpha rhythms (8–15 Hz). More drowsy conscious states are associated with theta-range frequencies (4–8 Hz). Sleep and especially deep sleep are associated with slower delta-range frequencies (0–4 Hz). Source: Penfield and Erickson (1941).7
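The idea that the predominant EEG frequency tracks the level of consciousness can be illustrated by estimating a signal's dominant frequency and mapping it onto the bands in the Fig. 6.1 caption. The sketch below uses a plain NumPy FFT power spectrum on a synthetic signal, a 10 Hz sine plus Gaussian noise standing in for a relaxed, eyes-closed recording; real EEG analysis would typically use windowed spectral estimates and artifact rejection. The band edges follow the caption (other sources draw them slightly differently), and the sampling rate and helper names are assumptions.

```python
import numpy as np

# Band edges (Hz) as listed in the Fig. 6.1 caption.
BANDS = [("delta", 0.0, 4.0), ("theta", 4.0, 8.0), ("alpha", 8.0, 15.0),
         ("beta", 15.0, 30.0), ("gamma", 30.0, float("inf"))]

def dominant_frequency(signal, fs):
    """Frequency (Hz) of the largest non-DC peak in the power spectrum."""
    signal = signal - signal.mean()                  # remove DC offset
    power = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    return freqs[1:][np.argmax(power[1:])]           # skip the 0 Hz bin

def band_of(freq):
    """Name of the Fig. 6.1 band containing `freq`."""
    for name, lo, hi in BANDS:
        if lo <= freq < hi:
            return name
    raise ValueError(f"no band for {freq} Hz")

fs = 256                                             # hypothetical sampling rate (Hz)
t = np.arange(0, 4, 1 / fs)                          # 4 s of synthetic "EEG"
rng = np.random.default_rng(1)
eeg = np.sin(2 * np.pi * 10 * t) + 0.5 * rng.standard_normal(t.size)
f_peak = dominant_frequency(eeg, fs)
print(f_peak, band_of(f_peak))                       # expected: about 10 Hz, alpha
```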

The most influential current ideas about the neural basis of cognition and consciousness suggest that these correlate with oscillating neural synchrony. Yet, the greatest degree of neural synchrony occurs during deep sleep. In fact, the high-amplitude delta waves of deep sleep, which produce the largest scalp EEG recordings, may be described as reflecting a cerebral cortex that exhibits the greatest amount of synchrony. How then does one reconcile neural synchrony theories of cognition with the fact that the greatest neural synchrony actually occurs during deep sleep, when there seems to be the least cognition and consciousness? While large in amplitude, the slow delta waves of deep sleep are not obviously as structured and periodic as more rhythmic waves such as the alpha rhythm shown during the relaxed, wakeful period in Fig. 6.1.

Thus, the predominant periodicity and rhythmic structure of these oscillating brain fields may be an important indicator of the degree to which brain activity is organized into meaningful cognition. For example, sleep studies show that when theta waves without any obvious rhythmic structure are prominent, as in drowsiness and very light sleep, people may report isolated mental images that are not connected into a coherent story, such as an isolated image of a face or the sound of a certain word,8,9 and subjects may report no memory of any mental activity during deep sleep, when delta waves predominate.10 In contrast, when a sleep research subject reports a connected train of thought, as in dreams or wakefulness, the associated EEG is almost always much faster in frequency and often shows the more rhythmic structure seen in alpha rhythms.11 Very slow EEG frequencies like delta and theta do occur in tandem with much higher frequencies during wakefulness and may then have obvious rhythmic structure, as will be reviewed in this chapter.

The effects shown in Fig. 6.1 corresponding to levels of arousal in humans are best seen in the more posterior scalp electrodes that overlie the occipital, parietal, and temporal lobes shown in Fig. 6.2. These underlying brain areas contain the primary visual, auditory, and somatosensory cortices, along with secondary and association cortex areas that are sensitive to higher levels of sensory processing and to mixtures of these modalities. For this reason, more posterior scalp electrodes are especially sensitive to the influx of sensory information, which is most prominent during the excited state shown in Fig. 6.1. Because the posterior cortex is so closely tied to sensory inputs, the alpha rhythm in humans is most readily observed in these posterior areas during resting, eyes-closed wakefulness, when visual input is minimal. Differentiation of underlying rhythms is also seen during relaxed wakefulness, as the alpha rhythm is much less obvious in more frontal regions, where faster rhythms are more apparent.

Figure 6.2 Principal areas of the human brain seen from (a) a lateral view and (b) a medial view that shows the Limbic System. Notice that structures within the medial temporal lobe including Hippocampus and Parahippocampal Gyrus are depicted on the right. Source: Public Domain License Granted at Wikimedia.org.12

The greatest differentiation of underlying rhythms is reflected in the excited EEG state that is associated with higher levels of arousal in an awake or dreaming brain. This excited state was originally referred to as a desynchronized EEG because the original pen recordings effectively low-pass filtered out the higher frequency synchronized gamma oscillations. However, very periodic higher frequency signals, often in the gamma frequency range (>30 Hz), are now observed in today's digital scalp recordings. Whereas the slower EEG rhythms are thought to reflect oscillating neural synchrony over larger spatial regions, faster EEG signals reflect much less global synchrony and many more isolated pockets of oscillating synchrony, which may even be locally out of phase so that the voltage trace in the scalp recording shows very low amplitude.13 Thus, very fast gamma oscillating synchrony may still be difficult to detect in many cases in today's scalp recordings and may be seen only in invasively recorded EEG, such as that used to monitor epileptic patients. Long-range synchrony of periodic gamma activity, such as between frontal and posterior cortical regions, is the best marker of consciousness in animals and humans undergoing general anesthesia: it subsides during loss of consciousness and returns only with wakefulness.14
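Why isolated, locally out-of-phase gamma pockets yield a low-amplitude scalp trace can be sketched by summing many identical 40 Hz oscillators: with aligned phases the sum grows in proportion to their number, whereas with random phases it grows only roughly with the square root of that number. Treating the scalp signal as a simple unweighted sum of local sources is a simplifying assumption; volume conduction and source geometry are ignored, and the number of pockets is hypothetical.

```python
import numpy as np

def summed_amplitude(phases, freq=40.0, fs=1000, duration=0.5):
    """Peak amplitude of the sum of unit-amplitude oscillators at `freq` Hz,
    one per entry in `phases` (radians)."""
    t = np.arange(0, duration, 1 / fs)
    field = np.sin(2 * np.pi * freq * t[None, :] + phases[:, None]).sum(axis=0)
    return np.abs(field).max()

rng = np.random.default_rng(2)
n_pockets = 100                                      # hypothetical local gamma pockets
in_phase = summed_amplitude(np.zeros(n_pockets))     # globally synchronized
random_phase = summed_amplitude(rng.uniform(0, 2 * np.pi, n_pockets))
print(f"in phase: {in_phase:.1f}, random phases: {random_phase:.1f}")
```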

2 Neural Synchrony, Parsimony, and Grandmother Cells

At some level, neural synchrony must be present in how the brain represents information. For example, when synchronously activated presynaptic neurons converge and cause a postsynaptic neuron to fire, the postsynaptic neuron's firing binds together information from the input neurons. Defined in this way, by synchronous presynaptic inputs that converge to make a postsynaptic neuron fire, neural synchrony is similar to the concept of hierarchical convergence advanced by Nobel Prize winners David Hubel and Torsten Wiesel. Based upon their neural recording data, Hubel and Wiesel proposed that visual cortical neurons at higher levels of processing simply sum the inputs from lower levels to create ever sparser representations that express ever higher levels of sensory and perceptual constructs. In their proposed hierarchy, simple cells code for basic features such as lines of a certain spatial frequency and orientation in specific regions of the visual field. Yet, there is still tremendous redundancy in the responses of simple cells in visual cortex, as many neurons respond to similar features.15 Complex cells code over a larger spatial region, and some respond to movement; their firing activity would appear to result from the summation of lower level simple cell inputs.16 The effect of such processing is that fewer total units may need to be active to code representations at the higher levels, so parsimony is a natural outcome.
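The convergence idea above, where a postsynaptic neuron fires only when synchronously activated inputs arrive together, can be sketched as a crude coincidence detector. The 5 ms integration window and the threshold of 8 input spikes are hypothetical parameters; real neurons integrate decaying graded postsynaptic potentials rather than counting spikes in a fixed window.

```python
import numpy as np

def fires(spike_times_ms, window_ms=5.0, threshold=8):
    """Crude coincidence detector: True if at least `threshold` presynaptic
    spikes fall within some interval [t, t + window_ms)."""
    times = np.sort(np.asarray(spike_times_ms, dtype=float))
    # For each spike i, count the spikes in [times[i], times[i] + window_ms).
    ends = np.searchsorted(times, times + window_ms, side="left")
    counts = ends - np.arange(times.size)
    return bool(counts.max() >= threshold)

n_inputs = 10                                          # hypothetical presynaptic cells
synchronous = 50.0 + np.linspace(-1.5, 1.5, n_inputs)  # all within 3 ms of each other
dispersed = np.linspace(0.0, 90.0, n_inputs)           # one spike every 10 ms
print(fires(synchronous), fires(dispersed))            # True False
```

The same ten input spikes drive the postsynaptic unit only when they are synchronous, which is the sense in which convergent firing "binds" its inputs.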

Yet, if this idea of ever greater sparsity at higher levels of representation were taken to the extreme, the activity of one single neuron would code for the image of your grandmother. Such extreme sparsity appears unlikely, though; instead, an ensemble of synchronously activated or inactivated neurons, operating as a unit that may be called the neural ensemble, would seem to represent information at each stage. In fact, there is now evidence for an ensemble-level neural code that discriminates well between different orientations of visual sine wave gratings, using logistic regression with the relative responses of neurons in the primary visual cortex as inputs.17 While a specific grandmother cell that uniquely codes for the image of a grandmother has never been found, much greater sparsity does seem to exist at higher levels of representation. That is, ever greater selectivity of representations is found in ever sparser populations of neurons in higher cognitive processing. Thus, in later perceptual processing in inferior temporal cortex, there is a relatively sparse group of neurons that increase their firing more to the face of a grandmother, but this response may vary with the orientation of the grandmother's face, and these cells also may respond somewhat to other faces.18 At even higher levels of representation in the brain's medial temporal lobe explicit memory system, involving the hippocampus and entorhinal cortex, more specific and even sparser coding has been found. In the medial temporal lobe, cells have been found to respond specifically to the face of actress Jennifer Aniston in various orientations, her name, and her famous costar, Brad Pitt, but not to another famous actress, Julia Roberts. In the same study, another medial temporal lobe ensemble of cells showed similar specific firing to another Hollywood actress, Halle Berry.19
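The ensemble-level decoding described above (reference 17) used logistic regression on the relative responses of primary visual cortex neurons. A minimal NumPy sketch of that kind of analysis on simulated data follows. The tuning curves, Poisson noise, population size, the two orientations 20° apart, and the use of plain gradient descent are all assumptions for illustration, not the cited study's methods or data; accuracy is measured on a separately simulated test set.

```python
import numpy as np

rng = np.random.default_rng(4)
n_neurons = 30
preferred = np.linspace(0, np.pi, n_neurons, endpoint=False)  # preferred orientations (rad)

def responses(theta, n_trials):
    """Hypothetical orientation-tuned Poisson spike counts, divided by each
    trial's total count so every row holds relative responses."""
    rate = 5 + 20 * np.exp(2 * (np.cos(2 * (theta - preferred)) - 1))
    counts = rng.poisson(rate, size=(n_trials, n_neurons)).astype(float)
    return counts / counts.sum(axis=1, keepdims=True)

def make_set(n_per_class):
    """Trials for two gratings (40 and 60 degrees), labeled 0 and 1."""
    X = np.vstack([responses(np.deg2rad(40), n_per_class),
                   responses(np.deg2rad(60), n_per_class)])
    return X, np.repeat([0.0, 1.0], n_per_class)

X_train, y_train = make_set(100)
X_test, y_test = make_set(100)
mu, sd = X_train.mean(axis=0), X_train.std(axis=0)
Z_train, Z_test = (X_train - mu) / sd, (X_test - mu) / sd     # standardize with training stats

w, b = np.zeros(n_neurons), 0.0
for _ in range(2000):                                         # gradient descent on log-loss
    p = 1 / (1 + np.exp(-(Z_train @ w + b)))
    w -= 0.1 * Z_train.T @ (p - y_train) / y_train.size
    b -= 0.1 * np.mean(p - y_train)

test_accuracy = np.mean(((Z_test @ w + b) > 0) == (y_test == 1))
print(f"held-out accuracy: {test_accuracy:.2f}")
```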

3 Gestalt Pragnanz and Oscillating Neural Synchrony

This idea of synchronous, data-driven hierarchical convergence as a mechanism of cognitive processing, such as perceptual representation or explicit memory reconstruction, would at first glance seem reasonable, as long as it is accomplished in a sparse population of neurons in a neural ensemble rather than through single neurons. However, the Gestalt psychologists in the early twentieth century pointed out that our perception of the world cannot simply be a data-driven process and must instead also result from a higher level binding process in which the unitary whole is not simply the sum of the parts. The most basic Gestalt law is the Law of Pragnanz, often rendered as the law of “good figure”. The idea is that we always tend to see the world in the simplest and most organized way possible, and this may in fact distort physical reality. This Gestalt principle implies that our conscious brains always try to force parsimony and good organization onto representations, resulting in relatively few meaningful high-level perceptual components rather than more complex, nonsensical representations.

The Kanizsa Square depicted in Fig. 6.3 is a prime example of what the Gestalt psychologists meant by their Law of Pragnanz. The white square is actually a perceptual illusion and a distortion of physical reality, as the elementary features needed to code for a white square are not present: the sides of the square are missing. For this reason, data-driven machine learning may fail completely to represent what humans perceive. A parsimony principle could help to explain human perception in this case. There are five regularly shaped objects in our perception: a bright-white square in the foreground and four circles in the background. However, the physical picture actually contains many separate irregular and fuzzy objects, namely four sets of numerous fuzzy concentric rings, each set missing a right-angled quarter of its structure. Yet the brain perceives a far simpler, more meaningful, and more organized image: a white square in front of four fuzzy circular objects. Thus, the brain constructs a more parsimonious and meaningful perceptual interpretation that is not the actual physical reality. The Gestalt psychologists proposed that this Law of Pragnanz is a fundamental process in everything that we perceive. Max Wertheimer, one of the three prominent Gestalt theorists of the early twentieth century, used the following example to illustrate the Pragnanz principle:

Figure 6.3 The Kanizsa Square.20

I stand at the window and see a house, trees, sky. Theoretically I might say there were 327 brightnesses and nuances of color. Do I have “327”? No. I have sky, house, and trees.21

The principle of parsimony, along with hierarchical integration, could explain the Kanizsa Square illusion, as well as why the brain sees a sky, house, and trees. This explanation would have to assume that a relatively sparse ensemble of neurons in the brain is somehow able to represent the Kanizsa Square, or the sky, house, and trees, through mutually synchronized oscillations that ensure that the perception is composed of meaningful features. These parsimonious conscious representations could originate with highly redundant feed-forward signals that code lower level unconscious sensory input, such as simple cell activity in the primary visual cortex. As processing moves to secondary visual cortex and association areas, the redundancy would lessen, as the brain gradually increases its specificity by selecting the most meaningful features through feedback that removes the most redundant features. Ultimately, this neural ensemble would code for a complex perception, as reflected by momentary, highly periodic oscillating local field standing wave signals that allow mutual synchrony across multiple regions, including primary visual cortex, secondary visual cortex, and association cortex. This oscillating neural synchrony ensemble represents a unitary perception built from higher level meaningful features that have prior memory weights, including a white square in the foreground and concentric dark fuzzy circles in the background.

This is one idea about how perception could work. Yet, it is not really the Hubel and Wiesel idea of feed-forward hierarchical integration, because it requires feedback to select the final important features and explain their mutually synchronized oscillatory representation, and because it superimposes a principle of parsimony, along with meaningful prior memory weights, on the final selected features that are the basis of the perception. On this point, György Buzsáki's insightful book Rhythms of the Brain argues that a basic failing of Hubel and Wiesel's hierarchical integration model of perceptual binding is that it does not consider the extensive oscillating synchrony feedback signals that are obviously involved in much of the brain's conscious processing.

In fact, Wolf Singer has proposed such a sparse oscillating neural synchrony mechanism as a very basic and general conscious organizing principle that he calls “binding by synchrony”.22,23 This brain process would bind elementary attributes like the right angles and sides of a square to create the experience of a bright-white square in the Kanizsa illusion. Singer has pointed out that Gestalt ideas about perception played a large role in his thinking about perceptual binding.24 Singer's ideas are based upon findings, originally reported in the 1980s, that highly periodic local field oscillations in the gamma frequency range were synchronized with zero phase lag across spatially separate primary and secondary visual cortical regions in cats viewing coherent stimuli such as a single long moving light bar.25 Such perfect oscillating synchrony did not occur in control conditions in which two light bars were moved in opposite directions or in the same direction, even though these light bars excited the same visual cortex neurons as the single moving light bar. What was remarkable about these observations is that the gamma frequency oscillations were so periodic, and that synchrony between these very periodic gamma oscillations occurred across spatially distinct regions specifically in the one condition in which the conscious experience of seeing one unitary stimulus with coherent and continuous motion should occur. This work suggested that such perfect oscillating synchrony across spatially distinct brain regions represented global properties of the stimulus, such as coherent continuous motion, that were perceived in a unified conscious experience. This work also suggested that this long-range oscillating synchrony in the gamma frequency range across spatially distinct cortical regions simply could not be explained by hierarchical convergence.
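Zero-phase-lag synchrony of the kind described above is commonly quantified from field recordings with cross-correlation or phase-coherence measures. A minimal sketch: find the lag at which the cross-correlation of two simulated 40 Hz field signals peaks. The signals, noise level, and 1 kHz sampling rate are hypothetical, and the lag search is limited to ±10 ms, less than half of a 25 ms gamma cycle, so that neighboring periodic correlation peaks are not confused with one another.

```python
import numpy as np

def peak_lag_ms(x, y, fs, max_lag_ms=10.0):
    """Lag (ms) at which the normalized cross-correlation of x and y peaks.

    A positive lag means y trails x."""
    x = (x - x.mean()) / x.std()
    y = (y - y.mean()) / y.std()
    max_lag = int(round(max_lag_ms * fs / 1000))
    lags = np.arange(-max_lag, max_lag + 1)
    n = x.size
    # For lag k, pair x[t] with y[t + k] over the overlapping samples.
    cc = [np.mean(x[max(0, -k):n - max(0, k)] * y[max(0, k):n - max(0, -k)])
          for k in lags]
    return 1000.0 * lags[int(np.argmax(cc))] / fs

fs = 1000                                        # hypothetical sampling rate (Hz)
t = np.arange(2 * fs) / fs                       # 2 s of simulated field potential
rng = np.random.default_rng(6)

def noisy(signal):
    """Add independent Gaussian noise (sd 0.2) to a simulated field signal."""
    return signal + 0.2 * rng.standard_normal(signal.size)

region_a = noisy(np.sin(2 * np.pi * 40 * t))
region_b = noisy(np.sin(2 * np.pi * 40 * t))              # same phase as A
region_c = noisy(np.sin(2 * np.pi * 40 * (t - 0.004)))    # trails A by 4 ms
print(peak_lag_ms(region_a, region_b, fs), peak_lag_ms(region_a, region_c, fs))
```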

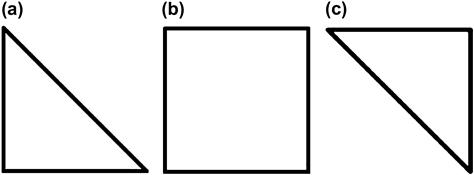

The following demonstrates how RELR may model a neural ensemble in the representation of a square that may have missing sides, as in the Kanizsa Square illusion. First, an RELR model trains on input data that present a square on half of the learning trials and, on the other half, a right triangle oriented with its 90° angle at either the bottom left or the top right. Figure 6.4 shows these square and triangle training stimuli. The input signals to the neural ensemble here could be the ensemble of neurons that code the important visual features of squares and triangles, like the presence of 90° or 45° angles and the presence of the sides of the figures, along with the important auditory feature “square” or “not square”, which is the “verbalization” feature driven by an external instructor or by reading. Note that this verbalization signal is simply a neural representation of the subjective auditory verbalization of the word “square” or “triangle”, as might be expected to occur when performing a task to discriminate between squares and triangles. The output signals from this neural ensemble would be measured in a population of neurons that produce binary signals in synchrony with these input features, although the probability of the binary output responses in this ensemble would more closely represent the local field potentials. This is because the scalp EEG and other measures, including intracranial measures of local field potentials, are not sensitive to actual binary spiking responses and instead represent the probability of binary responses in local neural ensembles.26 Together, this neural ensemble, encompassing both input features and output responses, may represent the perceived and labeled figure, whether it is a “square” or a “triangle”. The behavior of a single model neuron within this ensemble is simulated in the toy model training conditions illustrated in Tables 6.1 and 6.2.

Figure 6.4 Stimuli used to train RELR's square perception. The stimuli in (a) and (c) represent the right triangles used, and (b) represents the square that was used.

Table 6.1

Initial training episode for RELR square perception. Each row corresponds to one training observation in which either a Square or a Triangle is presented, with the physical features indicated as “Present” 1 (Y) or “Not Present” 0 (N) and the Verbalization feature indicating “Square” 1 (S) or “No Square” 0 (T). The output is a binary response that represents either a neural spike (1) or no spike (0), indicating Square Perception (1) or No Square Perception (0).

Table 6.2

Initial training and update weights and output probabilities corresponding to square perception training conditions. The top panel shows the RELR model's regression coefficient weights as initial training weights; as update weights (no missing data) with a training sample identical to the initial training; and as update weights (missing sides and verbalization) when the features corresponding to the square sides (top line, bottom line, right line, and left line) and the verbalization have missing values but the training sample is otherwise identical to the initial training. The bottom panel shows output probabilities for the binary response for all 10 observations in these conditions.

Table 6.1 shows the training observations for this bursting neuron: 10 training observations equally split between triangle and square presentations, along with the input visual features, the auditory verbalization feature, and the binary response output. The binary response is purposefully designed here not to depend completely upon the input features, by randomly setting two binary output signals to be incorrect. This is meant to model the possibility, based upon evidence reviewed in the last chapter, that local field potentials have a causal effect on binary spiking responses that is independent of the effects of input features.27 The top panel of Table 6.2 shows the regression weights for this model neuron across the input features after the initial training condition, after the retraining condition with identical features, and after the retraining condition with missing sides and verbalization features. Both retraining models are updated with RELR's sequential online learning method based upon minimal K–L divergence/maximum likelihood, as previously outlined in Chapter 3, where the posterior weights from the initial training episode serve as the prior weights for the retraining/updating conditions. The bottom panel of Table 6.2 shows the binary output probabilities corresponding to these 10 observations in the three training conditions. Notice that because RELR easily handles missing values, it also easily perceives squares instead of triangles when sides are missing as input features. In the “missing sides and verbalization” condition, the update weights for the missing features are all zero because RELR fills missing values with zeroes in its standardized features, and the update weights for the other features of the square are minimally changed. As reviewed in Appendix A4, the new posterior weights after the update/retraining condition are the sum of the prior and update weights.
So, it would take many such retraining conditions with missing sides and verbalization before this RELR model would substantially change the relative posterior weights of the features that are present. These training and retraining episodes require the assumption that all training observations within an episode are independent, in the sense that one observation cannot cause another; such an assumption might be reasonable, for example, for a neuron with rapidly bursting output signals. If this assumption is not met, then both training and retraining would need to proceed one observation at a time.
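The missing-value behavior described above can be mimicked, under clearly stated assumptions, with ordinary L2-penalized logistic regression. This is not RELR: RELR's own objective and its minimal K–L divergence update (Chapter 3, Appendix A4) are replaced here by a simple penalty on the update weights. The sketch keeps three ideas from the text: features are standardized, missing values become zero after standardization, and the posterior weights are the prior weights plus the update weights. The eight binary features and their codings for the Fig. 6.4 stimuli are hypothetical, not the values in Table 6.1, and the two deliberately incorrect outputs are omitted for simplicity.

```python
import numpy as np

# Hypothetical codings (not Table 6.1): angle90, angle45, top, bottom, left,
# right, diagonal (hypotenuse), verbal_square.
SQUARE = [1, 0, 1, 1, 1, 1, 0, 1]
TRI_LL = [1, 1, 0, 1, 1, 0, 1, 0]    # right angle at bottom left
TRI_UR = [1, 1, 1, 0, 0, 1, 1, 0]    # right angle at top right
MISSING = [2, 3, 4, 5, 7]            # the four sides and the verbalization

X = np.array([SQUARE] * 5 + [TRI_LL] * 3 + [TRI_UR] * 2, dtype=float)
y = np.array([1.0] * 5 + [0.0] * 5)  # 1 = spike ("square"), 0 = no spike

mu, sd = X.mean(axis=0), X.std(axis=0)
sd[sd == 0] = 1.0                    # constant column (angle90) standardizes to 0

def standardize(X):
    """Standardize with the initial-training statistics; missing (NaN) -> 0."""
    Z = (X - mu) / sd
    return np.where(np.isnan(Z), 0.0, Z)

def fit_update(Z, y, prior, lam=1.0, lr=0.1, steps=3000):
    """Fit update weights u for the linear predictor Z @ (prior + u).

    u starts at zero and carries an L2 penalty as a stand-in for staying close
    to the prior; with a zero prior this is plain penalized logistic regression."""
    u = np.zeros(Z.shape[1])
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(Z @ (prior + u))))
        u -= lr * (Z.T @ (p - y) + lam * u) / len(y)
    return u

prior = fit_update(standardize(X), y, prior=np.zeros(X.shape[1]))  # initial episode

X_missing = X.copy()
X_missing[:, MISSING] = np.nan       # sides and verbalization unobserved
update = fit_update(standardize(X_missing), y, prior)
posterior = prior + update           # posterior = prior + update weights

p_missing = 1.0 / (1.0 + np.exp(-(standardize(X_missing) @ posterior)))
print(np.round(update, 3))           # zeros in the MISSING columns
print(np.round(p_missing, 3))        # first five rows (squares) above 0.5
```

Because an all-zero feature column contributes nothing to the gradient, the update weights for the missing sides and verbalization stay exactly zero, while the remaining angle and hypotenuse features still push the squares above 0.5.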

Perceptual binding by synchronous oscillating feedback loops in the gamma frequency range would require a large number of different synchronized, oscillating neural ensembles to represent every possible perception, but that is possible. In fact, a scaled-down model has been built by Werning and Maye.28 In this model, neural ensembles that oscillate in synchrony represent a common object, and ensembles whose oscillations are desynchronized do not. Though simultaneously activated, the different oscillating components are almost uncorrelated across time, and there is one larger, more holistic oscillating component that contains evidence of all the other components. For example, one oscillating component may represent head and arms, another may represent body and legs, and the holistic component contains evidence of an entire body. The Werning and Maye model is unlike the artificial neural network models of a generation ago, as it does not operate at the level of single neurons but instead represents the activation of ensembles of neurons in local synchronous oscillating circuits within visual cortex. Whether or not this model is ultimately accurate, it is useful in showing how approximately orthogonal oscillations can be synchronized to generate a cognitive representation that seems to obey the Gestalt principle that the whole is greater than the sum of the parts.
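The idea that synchrony marks membership in a common object can be illustrated with a generic Kuramoto phase-oscillator model, a swapped-in textbook technique and not Werning and Maye's model. Ten hypothetical gamma-band oscillators are split into two groups (say, "head and arms" and "body and legs"), each coupled only within its own group. Within each group the phases lock (order parameter near 1), while the two uncoupled groups, with mean frequencies of 40 and 47 Hz, drift relative to each other (phase-locking value near 0). The coupling strength, frequencies, and Euler step size are illustrative assumptions.

```python
import numpy as np

def simulate(freqs_hz, groups, coupling=200.0, dt=1e-4, duration=1.0, seed=5):
    """Euler-integrate Kuramoto phase oscillators coupled only within a group:

        dtheta_i/dt = 2*pi*f_i + (K / n_g) * sum_{j in group(i)} sin(theta_j - theta_i)

    Returns the phase history with shape (steps, n_oscillators)."""
    rng = np.random.default_rng(seed)
    theta = rng.uniform(0, 2 * np.pi, len(freqs_hz))    # random initial phases
    omega = 2 * np.pi * np.asarray(freqs_hz, dtype=float)
    same = groups[:, None] == groups[None, :]            # within-group coupling mask
    n_g = same.sum(axis=1)
    history = np.empty((round(duration / dt), theta.size))
    for step in range(history.shape[0]):
        pull = np.sin(theta[None, :] - theta[:, None])   # pull[i, j] = sin(theta_j - theta_i)
        theta = theta + dt * (omega + coupling * (pull * same).sum(axis=1) / n_g)
        history[step] = theta
    return history

def order_parameter(phases):
    """Kuramoto order parameter |mean(exp(i*theta))| across the last axis."""
    return np.abs(np.exp(1j * phases).mean(axis=-1))

# Hypothetical "head and arms" group near 40 Hz and "body and legs" group near 47 Hz.
freqs = np.array([39.0, 39.5, 40.0, 40.5, 41.0, 46.0, 46.5, 47.0, 47.5, 48.0])
groups = np.array([0] * 5 + [1] * 5)
late = simulate(freqs, groups)[2000:]                    # drop the first 0.2 s transient

r_within = [order_parameter(late[:, groups == g]).mean() for g in (0, 1)]
group_phase = [np.angle(np.exp(1j * late[:, groups == g]).mean(axis=1)) for g in (0, 1)]
plv_between = np.abs(np.mean(np.exp(1j * (group_phase[0] - group_phase[1]))))
print(np.round(r_within, 3), round(float(plv_between), 3))
```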

Long-range oscillating gamma frequency synchronization between neural populations in very distinct regions of the brain, such as across hemispheres, has also been observed during visual perceptual binding.29 There is, however, controversy about the extent to which long-range synchrony could explain the cognitive binding of elementary attributes into larger attributes. Some studies that looked for long-range synchrony in spike response recordings during visual perception have not found it.30,31 Singer has recently commented that all evidence indicates that binding by synchrony effects are much easier to see in EEG field recordings than in single neuron spike-response recordings.32 This makes sense, because oscillating and synchronous activity in general is much easier to see in EEG field recordings than in spike responses, which are much noisier. Note that traveling waves of synchronized EEG field correlations across spatially distinct cortical regions have actually been observed during visual processing, so strict long-range synchrony in perfectly standing waves may not always be involved in binding.33 Instead, some models have allowed for slight phase lags between spatially distinct regions, consistent with traveling waves that do not always combine to create perfectly stable standing waves.34,35 This is similar to how some parts of an orchestra often echo other parts with a slight delay, yet the effect is clearly experienced as a unified musical theme.

Singer has suggested that oscillating neural synchrony provides shorter-term and more flexible synchrony than is afforded by fixed anatomical connections between neurons. While Singer agrees that much oscillating gamma frequency synchrony could occur through fixed short-range anatomical connections, such as those of interneurons in cerebral cortex, he argues that the critical binding mechanism of longer range synchrony must rely upon the periodicity of the oscillations. This is because the longest range neural synchrony effects occur too rapidly in the higher gamma frequency range (>30 Hz) to be determined by fixed neuronal connections.36 As an example of how this periodicity may be used to achieve long-range synchrony, Buzsáki has argued that periodic oscillations would be a very inexpensive means of accomplishing synchronous parallel processing between two distant neural regions. If two brain regions have coordinated oscillation periodicities, then they are naturally synchronized and do not have to communicate with one another in real time, but instead could rely upon intermittent and slower long-range communication to maintain synchrony. This mechanism of long-range oscillating synchrony achieved through intermittent communication also might explain brain rhythms slower than the gamma frequency range, like those in the theta (4–8 Hz) and alpha (8–12 Hz) ranges, which are known to depend upon fixed corticohippocampal or corticothalamic neural connections.
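Buzsáki's point that periodicity lets distant regions stay synchronized with only occasional communication can be illustrated with a toy simulation. The sketch below is a minimal illustration under stated assumptions, not a model from the literature: two oscillators with slightly mismatched natural frequencies (hypothetical 40.0 Hz and 40.4 Hz) drift apart when left alone, but a brief phase correction every 100 ms, standing in for an intermittent long-range message, keeps their phase error small. The function name `max_phase_error` and all parameter values are hypothetical.

```python
def max_phase_error(f_a_hz, f_b_hz, duration_s, dt_s, sync_interval_s=None):
    """Largest phase mismatch (in cycles, 0 to 0.5) between two free-running oscillators.

    If sync_interval_s is given, oscillator B's phase is reset to A's at that
    interval, a hypothetical stand-in for intermittent long-range communication.
    """
    phase_a = phase_b = 0.0  # phases measured in cycles
    worst = 0.0
    steps = int(round(duration_s / dt_s))
    sync_every = None if sync_interval_s is None else int(round(sync_interval_s / dt_s))
    for step in range(1, steps + 1):
        phase_a += f_a_hz * dt_s
        phase_b += f_b_hz * dt_s
        if sync_every is not None and step % sync_every == 0:
            phase_b = phase_a  # brief corrective message realigns B with A
        diff = (phase_b - phase_a) % 1.0
        worst = max(worst, min(diff, 1.0 - diff))  # wrap-around distance in cycles
    return worst

free_running = max_phase_error(40.0, 40.4, 1.0, 0.001)                   # drifts to ~0.4 cycle
intermittent = max_phase_error(40.0, 40.4, 1.0, 0.001, sync_interval_s=0.1)  # stays near 0.04 cycle
```

With no correction the mismatch grows to about 0.4 cycle within one second; with a correction only every four cycles of the faster rhythm, it never exceeds about a tenth of that.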

The only way that coordinated, repetitive temporal patterns could arise in oscillating neural synchrony involving two or more independent oscillators is if there is an approximately orthogonal relationship between the different oscillators, which would manifest as small whole number frequency ratios like 1:1, 2:1, 3:2, or 4:1. In fact, there is abundant evidence for such small whole number ratio structure in oscillating neural synchrony, as will be reviewed shortly. In perceptual processing, these highly periodic and harmonic oscillating neural synchronies could function to organize a large amount of disconnected sensory information into a few meaningful conscious chunks of perceptual information like sky, trees, and house. In higher level explicit working memory processing, oscillating neural synchrony between different elements in the neural ensemble could explain how the mental representation of Brad Pitt can occur simultaneously or in close temporal succession with the image of Jennifer Aniston, perhaps because of an explicit recollection that Jennifer Aniston was married to Brad Pitt or that she was in the same movie as him.
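The arithmetic behind small whole number frequency ratios can be made concrete. If a faster oscillator runs at p/q times the frequency of a slower one (in lowest terms), the combined pattern repeats after q cycles of the slower oscillator, and sinusoids at distinct frequencies that both complete whole numbers of cycles within that window are orthogonal over it. The following Python sketch, with hypothetical function names and a 10 Hz slow rhythm chosen only for illustration, computes the joint repeat period and checks orthogonality numerically.

```python
import math
from fractions import Fraction

def joint_period_s(f_slow_hz, ratio):
    """Time after which oscillators at f_slow_hz and ratio * f_slow_hz both complete
    whole numbers of cycles, so their combined pattern repeats.

    ratio = fast/slow = p/q in lowest terms -> repeat after q slow (= p fast) cycles.
    """
    r = Fraction(ratio).limit_denominator(1000)
    return r.denominator / f_slow_hz

def mean_product(f1_hz, f2_hz, window_s, n=10000):
    """Time average of sin(2*pi*f1*t) * sin(2*pi*f2*t) over window_s (Riemann sum)."""
    dt = window_s / n
    return sum(math.sin(2 * math.pi * f1_hz * k * dt) * math.sin(2 * math.pi * f2_hz * k * dt)
               for k in range(n)) / n

# 1:1 gives the shared power (0.5); the other ratios give products near 0 (orthogonal)
for ratio in (Fraction(1), Fraction(2), Fraction(3, 2), Fraction(4)):
    period = joint_period_s(10.0, ratio)  # hypothetical 10 Hz slow rhythm
    print(ratio, period, round(mean_product(10.0, 10.0 * float(ratio), period), 6))
```

For a 3:2 ratio the joint pattern repeats every 0.2 s (two slow cycles, three fast cycles); any ratio of small whole numbers gives a short repeat time of this kind.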

In building up parsimonious explicit cognitive representations from simple oscillating feature elements that exhibit small whole number ratio structure, this music-like neural ensemble orchestra may be an embodiment of the Law of Pragnanz, which requires that conscious representations be as simple and meaningful as possible. Explicit RELR's parsimonious feature selection will tend to discard highly multicollinear feature elements and instead return features that are as uncorrelated or orthogonal as possible while still fitting the data. This was exemplified back in Fig. 3.4(b), where the final Explicit RELR model discarded a feature that was highly correlated with the final selected feature. So, Explicit RELR will also tend to return as simple and meaningful a representation as possible while still fitting the data, in accord with Pragnanz, especially because its selections also can be determined by meaningful prior weight parameters in online sequential learning. Yet, a big difference between Explicit RELR's treatment of multicollinear features and that of standard methods like principal component analysis (PCA) is that RELR does not force features to be orthogonal in advance. In fact, more complicated Explicit RELR models often will contain highly multicollinear features if they are needed to fit the data. In contrast, Implicit RELR will almost always contain highly multicollinear features if they are predictive, because its objective is not determined by parsimony principles. The problem with forcing orthogonal features is that the solution might be entirely an artifact of the orthogonality assumption. For example, an algorithm like Fourier analysis that forces orthogonal solutions will find orthogonal frequency components in perfect whole number ratios even in white noise observations. On the other hand, when Explicit RELR finds simple structure that is built from relatively uncorrelated features, that structure is much more likely to be stable, in the sense that it would be similar if the model had been trained with independent data.
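The claim that Fourier analysis imposes whole number structure even on noise can be checked directly. A discrete Fourier transform of N samples spanning T seconds represents the signal only with components at k/T Hz for integer k, so every component it reports is an integer multiple of one fundamental, regardless of the data. The sketch below uses a naive DFT written with the Python standard library and a hypothetical 1 s, 256-sample white noise record; it shows that every harmonic bin receives nonzero power and that these components reconstruct the noise exactly.

```python
import cmath
import math
import random

def dft(x):
    """Naive O(N^2) discrete Fourier transform; bin k corresponds to k cycles per record."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]

def idft(spectrum):
    """Inverse transform: rebuild the signal from its harmonic components."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)).real / n
            for t in range(n)]

rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(256)]  # white noise, hypothetical 1 s record
spectrum = dft(noise)
record_s = 1.0
half = len(noise) // 2 + 1
bin_freqs_hz = [k / record_s for k in range(half)]  # 0, 1, 2, ... Hz: whole number multiples
power = [abs(c) ** 2 / len(noise) for c in spectrum[:half]]
rebuilt = idft(spectrum)                            # equals the noise up to rounding error
```

Nothing in this output distinguishes the noise from a genuinely harmonic signal; the whole number structure comes from the basis, not from the data.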

4 RELR and Spike-Timing-Dependent Plasticity

Spike-timing-dependent plasticity effects are observed quite widely in neural learning. These effects are evidence for the Hebbian learning principle introduced in the last chapter.37 The basic effect is that if a presynaptic neuron spikes within a brief window of time before a postsynaptic neuron spikes, there is a higher probability of long-term potentiation (LTP), which is a long-term positive change in an input learning weight. On the other hand, if the presynaptic spike occurs within a brief window of time after the postsynaptic spike, synaptic long-term depression (LTD), a more negative learning weight change, is likely. Spike-timing-dependent processes were originally observed in excitatory synapses, yet these processes are now known to exist in inhibitory synapses as well.38

The relative timing, or temporal coincidence, of pre- and postsynaptic spikes underlies spike-timing-dependent learning effects. The rapid learning of new connections through the temporal coincidence of pre- and postsynaptic activation would allow for flexible rerouting of information that does not depend upon prior fixed anatomical connections. The resulting spike-timing plasticity effects could lead to LTP and LTD and have the effect of boosting short-term activity in those synapses that are most active and important for memory consolidation. Consistent with these results is the observation that human memory is strongest when there is strong coupling between local theta oscillations and neuronal spiking in the medial temporal lobe.39

Abundant empirical evidence indicates that neural learning weight changes are related to tight temporal correlations between pre- and postsynaptic spikes. Yet, the relative positivity or negativity of graded potentials in dendrites appears to be the critical effect, rather than the timing of spikes in presynaptic neurons relative to spikes in postsynaptic neurons.40,41 When spikes in postsynaptic neurons occur during greater positivity in their dendritically measured potentials, LTP appears to be more likely in those same dendritic synapses. In contrast, when spikes in postsynaptic neurons occur during greater negativity in their dendritically measured potentials, LTD appears to be more likely. The precise mechanisms that cause these LTP and LTD weight changes are still being worked out and may relate to a back-propagation mechanism in dendrites.42
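The timing window described at the start of this section is often summarized with a pair-based exponential rule, a standard textbook idealization of spike-timing-dependent plasticity. The sketch below assumes that form; the amplitudes and 20 ms time constants are illustrative values rather than measurements, and the function name is hypothetical. Note that it encodes the spike-timing view, not the dendritic-potential account just discussed.

```python
import math

def stdp_weight_change(delta_t_ms, a_plus=0.010, a_minus=0.012,
                       tau_plus_ms=20.0, tau_minus_ms=20.0):
    """Pair-based exponential STDP window (illustrative parameter values).

    delta_t_ms = t_post - t_pre. Pre-before-post (delta_t_ms > 0) gives a positive
    weight change (LTP); post-before-pre (delta_t_ms < 0) gives a negative one (LTD).
    The effect decays exponentially as the two spikes move apart in time.
    """
    if delta_t_ms > 0:
        return a_plus * math.exp(-delta_t_ms / tau_plus_ms)
    if delta_t_ms < 0:
        return -a_minus * math.exp(delta_t_ms / tau_minus_ms)
    return 0.0

for dt in (-40, -10, 10, 40):
    print(dt, round(stdp_weight_change(dt), 5))
```

Making `a_minus` slightly larger than `a_plus` is a common modeling choice that keeps uncorrelated spiking from inflating weights, but it is an assumption here.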

Like this neural spike-timing-plasticity mechanism, RELR's learning is based upon the tight correlation between input features and binary signals. The features considered viable candidates tend to be those with the highest magnitude correlations, in either a positive or a negative direction. Likewise, RELR's maximum entropy logistic regression learning mechanism is based upon a cross-product sum across binary responses and coincident independent variable feature values (Appendix A1, Eqns A1–A5). This cross-product sum is computed for each independent variable feature to form a set of basic constraints in the maximum likelihood logistic regression optimization. This mechanism also updates prior memory weights through minimal K–L divergence learning (Appendix A4). This RELR learning mechanism is consistent with spike-timing-dependent plasticity that is caused by greater relative positivity or negativity in the features. In a RELR neural mechanism, postsynaptic spiking responses that occur during greater feature positivity would be more likely to produce a greater positive weight for a given effect, whereas a greater negative weight would result from spiking that occurs during greater feature negativity.
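The cross-product constraint can be seen in ordinary maximum likelihood logistic regression. At the maximum likelihood solution, the observed cross-product sum of binary responses and feature values equals the sum expected under the model's fitted probabilities, and the gradient being climbed is exactly the difference between the two. The sketch below is a generic gradient-ascent fit with one feature and an intercept on a small hypothetical data set; it is a stand-in to illustrate the constraint, not RELR's own estimation procedure, which the text describes in Appendix A.

```python
import math

def fit_logistic(xs, ys, lr=0.5, iters=5000):
    """Maximum likelihood logistic regression (intercept + one feature) by gradient ascent.

    Generic stand-in for illustration, not RELR's estimation procedure.
    """
    b0 = b1 = 0.0
    n = len(xs)
    for _ in range(iters):
        ps = [1.0 / (1.0 + math.exp(-(b0 + b1 * x))) for x in xs]
        # Each gradient component = observed cross-product sum - model-expected sum
        g0 = sum(y - p for y, p in zip(ys, ps))
        g1 = sum((y - p) * x for x, y, p in zip(xs, ys, ps))
        b0 += lr * g0 / n
        b1 += lr * g1 / n
    return b0, b1

# Hypothetical feature values and binary responses (not separable, so the MLE is finite)
xs = [-2.0, -1.5, -1.0, -0.5, 0.0, 0.5, 1.0, 1.5, 2.0, 2.5]
ys = [0, 0, 0, 1, 0, 1, 0, 1, 1, 1]
b0, b1 = fit_logistic(xs, ys)

observed = sum(y * x for x, y in zip(xs, ys))                      # cross-product sum = 6.0
expected = sum(x / (1.0 + math.exp(-(b0 + b1 * x))) for x in xs)  # matches observed at the MLE
```

A positive `b1` arises because responses of 1 coincide mostly with positive feature values, which parallels the positivity argument in the paragraph above.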

Thus, RELR's logistic regression learning may bear reasonable similarity to spike-timing-dependent plasticity in real neural learning, at least in an aggregate probabilistic sense. The sum of the cross-products between a graded potential input effect and the binary spike signal at the axon hillock across a training episode would be greater for more synchronous input effects. This cross-product sum is identical to the cross-product sum that is the basic constraint determining weight learning in RELR. This is depicted in Fig. 6.5, which shows that the advantage of synchronous graded potential inputs is that they produce more positivity in tandem. Likewise, if they occurred during negative phases of the local field potentials, they would produce more negativity in tandem. Therefore, as also happens in neural spike-timing learning mechanisms, the RELR learning mechanism is general enough to handle spike-timing-dependent processes that are excitatory, inhibitory, or that change direction during the course of development.43 This is because RELR's learning is entirely data-driven and caused by correlations between independent variable features and binary responses, which may change direction over time.
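The claim that synchronous graded inputs yield a larger cross-product sum can be illustrated with a toy neuron. In the sketch below, a minimal illustration with hypothetical parameters (10 Hz sinusoidal inputs sampled at 1 kHz for 1 s, a threshold of 1.2 on the summed input), the neuron emits a binary spike whenever the sum of two graded inputs exceeds threshold, and the function accumulates the cross-product between one input and that spike signal. The function name is hypothetical.

```python
import math

def cross_product_sum(phase_offset_rad, threshold=1.2, freq_hz=10.0,
                      fs_hz=1000.0, duration_s=1.0):
    """Sum over time of (input-1 value x binary spike) for a toy neuron that spikes
    whenever the summed graded input from two sinusoidal inputs exceeds threshold."""
    total = 0.0
    for k in range(int(fs_hz * duration_s)):
        t = k / fs_hz
        x1 = math.sin(2 * math.pi * freq_hz * t)
        x2 = math.sin(2 * math.pi * freq_hz * t + phase_offset_rad)
        spike = 1 if x1 + x2 > threshold else 0  # all-or-none output
        total += x1 * spike                      # cross-product of feature and response
    return total

synchronous = cross_product_sum(0.0)         # inputs peak together
quarter_cycle = cross_product_sum(math.pi / 2)  # partially overlapping peaks
antiphase = cross_product_sum(math.pi)       # inputs cancel, no spikes
```

In-phase inputs give the largest sum, a quarter-cycle offset gives a smaller one, and antiphase inputs, which cancel, give none.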

5 Attention and Neural Synchrony

The sparse oscillating neural representations of conscious human perception, memory and thinking are ultimately a function of what is amplified by the attention system. Attention can be described as a form of filtering in which certain signals are allowed to pass through to conscious processing and others are blocked. A more aesthetically pleasing metaphor sometimes used by psychologists is that attention is the conductor in the orchestra of explicit cognitive experience, as it allows explicit cognition to exert control over implicit cognitive information and focus on what is truly important. In essence, this conductor is constantly directing all the instruments in the brain's neural ensemble orchestra, while also extemporaneously composing its own oscillating synchronies, periodicities, and rhythms.

Psychologists can measure attention with reaction time and signal detection performance. During the excited EEG state depicted in Fig. 6.1, attention to external stimuli is heightened, as measured by faster reaction times and better signal detection, compared with relaxed wakefulness, when the alpha rhythm predominates. Attention also fluctuates as a function of the phase of the resting posterior alpha rhythm, as measured by auditory and visual performance in humans. The resting alpha rhythm is thought to reflect extensive synchronous feedback oscillatory signaling between the thalamus and the cortex. As shown in Fig. 6.2, the thalamus sits in the center of the brain as a passageway between the sensory organs and the cerebral cortex. Different thalamic nuclei serve as major relay stations for sensory information on its way to the primary cortex of each modality. Yet, the thalamus is not simply a passive responder to incoming sensory inputs. Instead, the oscillating neural synchrony measured in the alpha rhythm appears to gate input information from the physical environment. This is essentially a sampling mechanism: while we direct attention inward, it does not monitor the external world continuously and equally at all times, but instead samples the external environment with a sensitivity to incoming information that varies with the phase and frequency of the alpha rhythm at roughly 8–12 Hz.44 Even during the excited EEG state, the evidence indicates that EEG phase still modulates attention, as detection performance has been observed to vary with the phase of a 7 Hz theta rhythm in this case.45 Thus, oscillating neural synchrony may serve as an attention shutter rhythm that opens and closes to determine which sensory information is processed by higher cognitive functions in the brain.

This attention mechanism also can be studied at a lower level, such as a single neuron or a small ensemble of neurons. This research suggests that attention is regulated by neural synchrony, and that the same synchrony principles that may apply to a larger ensemble of neurons also apply to the individual neuron. In particular, input neurons that exert synchronized effects on an output neuron appear more influential and have an amplified effect on the output; a similar mechanism can be assumed to produce attention in a neural ensemble representing groups of neurons.46 These results would be predicted at a neuronal level, because synchronously spiking presynaptic neurons that produce synchronized graded potentials in the postsynaptic output neuron would be expected to exert much stronger effects on the output neuron's axonal spiking than asynchronous inputs. Thus, greater synchrony of excitatory neural inputs should be associated with enhanced spiking compared to asynchronous inputs. In neural computation, binary spiking and input feature signals that are perfectly in phase or perfectly out of phase would imply a strong positive or strong negative correlation, respectively, between binary spiking and features. On the other hand, random phase relationships between input features and binary spiking would be associated with much smaller magnitude correlations and correspondingly lower t-value magnitudes. So, this attention mechanism may be similar to RELR's feature reduction based upon t-value magnitudes, which serves to focus on the most important candidate features for later processing. These computational effects of neural synchrony on attention are simulated in Fig. 6.6.
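The link between phase relationships and t-value magnitudes can be sketched numerically. Below, a 10 Hz feature is paired with a binary spike train containing one spike per cycle, placed either at the feature's peak, at its trough, or at a random phase. The Pearson correlation and its usual t statistic, t = r·√(n − 2)/√(1 − r²), are computed for each case. The sampling parameters and helper names are hypothetical, and this is a generic correlation screen rather than RELR's exact t-value computation.

```python
import math
import random

def pearson_t(xs, ys):
    """Pearson correlation r and its t statistic for n paired observations."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    r = sxy / math.sqrt(sxx * syy)
    return r, r * math.sqrt(n - 2) / math.sqrt(1 - r * r)

def spike_train(n_cycles, samples_per_cycle, spike_index):
    """Binary train with one spike per cycle, at the sample chosen by spike_index(cycle)."""
    train = [0] * (n_cycles * samples_per_cycle)
    for c in range(n_cycles):
        train[c * samples_per_cycle + spike_index(c)] = 1
    return train

N_CYCLES, SPC = 100, 100  # hypothetical: 10 Hz feature sampled at 1 kHz for 10 s
feature = [math.sin(2 * math.pi * k / SPC) for k in range(N_CYCLES * SPC)]
rng = random.Random(1)

r_in, t_in = pearson_t(feature, spike_train(N_CYCLES, SPC, lambda c: SPC // 4))        # at peaks
r_out, t_out = pearson_t(feature, spike_train(N_CYCLES, SPC, lambda c: 3 * SPC // 4))  # at troughs
r_rand, t_rand = pearson_t(feature, spike_train(N_CYCLES, SPC, lambda c: rng.randrange(SPC)))
```

In-phase and antiphase spiking give large positive and negative t values of similar size, while random-phase spiking gives a t value near zero, so a screen on |t| keeps the phase-locked feature and drops the other.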

Figure 6.6 At the top is a simple simulation of the compound output signal at the soma that would arise from excitatory synchronous neural spiking inputs. At the bottom is the corresponding output signal arising from excitatory asynchronous neural spiking inputs. This is designed to be a simple integrate-and-fire response with an all-or-none spiking output. Notice that much greater spiking can occur in response to two excitatory synchronous inputs than to two excitatory asynchronous inputs. The 2:1 frequency ratio between the synchronous inputs in the top panel is not accidental, as oscillating neural synchrony would arise through simple whole number frequency ratios in the firing of the neurons that form the inputs.
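A leaky integrate-and-fire unit reproduces the qualitative effect in Fig. 6.6. The sketch below assumes hypothetical parameters (each input spike adds 0.6 to the membrane potential, a 5 ms leak time constant, a threshold of 1.0, 1 ms time steps) and two regular inputs at 20 Hz and 40 Hz, matching the 2:1 ratio in the caption. When the two trains are aligned, coincident arrivals cross threshold; shifting the faster train by 12 ms leaves every arrival subthreshold. These are not the parameters used to generate the figure, and the function name is hypothetical.

```python
import math

def lif_output_spikes(input_trains, weight=0.6, tau_ms=5.0, threshold=1.0, duration_ms=1000):
    """Count output spikes of a leaky integrate-and-fire unit simulated in 1 ms steps.

    input_trains: list of (period_ms, offset_ms) pairs, each a regular presynaptic spike train.
    """
    decay = math.exp(-1.0 / tau_ms)
    v, spikes = 0.0, 0
    for t in range(duration_ms):
        v *= decay  # passive leak toward rest (0)
        arrivals = sum(1 for period, offset in input_trains
                       if t >= offset and (t - offset) % period == 0)
        v += weight * arrivals  # each input spike adds a graded EPSP
        if v >= threshold:      # all-or-none output
            spikes += 1
            v = 0.0             # reset after firing
    return spikes

synchronous = lif_output_spikes([(50, 0), (25, 0)])    # 20 Hz and 40 Hz trains, aligned
asynchronous = lif_output_spikes([(50, 0), (25, 12)])  # same rates, 40 Hz train shifted 12 ms
```

The aligned inputs drive one output spike on every 20 Hz cycle, while the same two inputs delivered out of register never fire the cell, even though the total input is identical.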

Another well-studied attention phenomenon is that the brain habituates very rapidly to monotonous stimuli, whether a monotonous tone, a monotonous spoken word, or the monotonous visual imagery of driving along a highway. This indicates that the attention mechanism greatly prefers novel stimuli and will ignore monotonous stimuli whenever possible. When only monotonous sensory inputs are available, as when driving on a monotonous highway, people often become drowsy and even fall asleep. A similar preference for novel or rare events is seen in RELR's preference for balancing rare versus frequent target outcome events in training samples, as a sample composed entirely of outcomes of one value would be meaningless.
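Balancing rare and frequent outcomes in a training sample can be done in several ways; one simple approach is to downsample the more frequent outcome. The sketch below assumes that approach and uses a hypothetical data set in which 1 in 20 records has a positive outcome; it is a generic illustration with a hypothetical function name, and RELR's own balancing scheme may differ.

```python
import random

def balanced_sample(records, label_of, seed=0):
    """Downsample the more frequent binary outcome so both outcomes are equally represented.

    Generic illustration only; RELR's own balancing scheme may differ.
    """
    rng = random.Random(seed)
    positives = [r for r in records if label_of(r) == 1]
    negatives = [r for r in records if label_of(r) == 0]
    k = min(len(positives), len(negatives))
    sample = rng.sample(positives, k) + rng.sample(negatives, k)
    rng.shuffle(sample)  # avoid ordering the sample by outcome
    return sample

# Hypothetical data: (record id, outcome), with a rare positive outcome in 1 of 20 records
records = [(i, 1 if i % 20 == 0 else 0) for i in range(1000)]
training = balanced_sample(records, label_of=lambda r: r[1])
```

Downsampling discards many frequent-outcome records; weighting observations instead would keep them, at the cost of a more complex likelihood.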

Whether attention is considered at the neural ensemble or the individual neuron level, it still may be considered a feature reduction process that also may sample the learning episode to prefer more novel observations. Attention seems to work something like caching in hard disk processing. It breaks incoming data into learning episodes that are the basic data epochs, and it is those episodes that are processed in working memory. To some extent, it is driven by external events, as when a relatively rare event in the presence of monotonous stimuli awakens attention. Yet, the brain's attention is also inextricably connected with explicit prior memory, as in the square perception example. In addition, the brain's attention is under the control of the central executive function in working memory, as in the top-down processing that originates in the frontal eye fields of prefrontal cortex and gradually restricts visual inputs in structures that eventually include the primary visual cortex.47 Attending to cached samples of incoming data allows the brain to engage in sequential online learning in which memory weights are constantly updated using small samples of new observations, in ways that could be similar to RELR's sequential online learning. The human brain clearly does not perceive information in real time. This is exemplified by film, which is built from roughly 24 discrete frames per second but is perceived as continuous motion. Thus, real-time learning might not be necessary to simulate the brain. Instead, rapid cached sequential working memory updates might be all that is necessary.

The important point is that the brain does not seem to perform batch learning across huge numbers of observations, which is a lesson that might be of value to current machine learning notions about how Big Data might be used to yield smarter artificial intelligence. Instead of using enormous Big Data observation samples across lengthy historical periods, the brain seems to sample brief episodes in time that are the basis of working memory. The memories of these episodes are likely to become longer term episodic memories if rehearsed or processed more deeply, although the consolidation of recent explicit memories into substantially longer term explicit memories also may depend upon effects observed during sleep.48

6 Metrical Rhythm in Oscillating Neural Synchrony

The lower frequency EEG rhythms that are typically prominent during human wakefulness are <30 Hz and include theta, alpha, beta, and lower frequency gamma rhythms, although very low-frequency rhythms in the delta range are also observed at times. These low-frequency brain signals often seem to reflect the duration and rhythm of separate conscious processing events, much like the duration and rhythm of musical notes. In fact, separate low-frequency brain signals can have small whole number duration ratios, akin to the rhythmic structure in many forms of music. An example is the 4:1 ratio between the durations of quarter and sixteenth notes in metrical rhythm in western music.

A striking example of this low-frequency rhythmic structure in the EEG comes from a study of hippocampal activity in epileptic patients by Axmacher and associates.49 Patients were engaged in a working memory task that is a modified form of the well-known Sternberg memory task. Novel faces were first presented serially to the patients, with one, two or four items in a to-be-remembered set. Next, there was a 3.5 s maintenance period during which these items were to be rehearsed. Finally, a probe face was presented, and patients had to indicate whether or not the probe face was in the preceding set of to-be-remembered faces. During the maintenance phase of this task, there was significant phase and frequency coupling between rhythms in the theta range, with peak spectral power at 7 Hz, and rhythms in the beta/gamma range, with peak spectral power at 28 Hz. That is, the faster gamma rhythm was amplitude modulated by, and phase locked to, the positive phase of the slower theta rhythm. Most interestingly, the rhythmic structure varied with the number of items to be remembered: one item related to one gamma cycle per theta cycle, two items related to two gamma cycles per theta cycle, and four items related to four gamma cycles per theta cycle. Also very notably, the frequency ratio of gamma to theta rhythms remained locked at 4:1 across these memory conditions. As memory load increased from one to four items, there was a shift toward slower theta rhythms, but the gamma signals also trended toward slower rhythms. This simultaneous slowing of both theta and gamma rhythms with increasing memory load is why the 4:1 frequency ratio remained in effect across the memory load conditions. A 7 Hz theta rhythm corresponds to a very rapid, extreme tempo of 420 beats per minute during this challenging memory task, but this is close to the upper range of what might be observed in American jazz.

Based upon other data involving this coupling between theta and gamma rhythms during working memory, Jensen and Lisman had earlier proposed that the number of gamma cycles coupled to the theta rhythm should reflect the number of items in a working memory maintenance rehearsal process. That is, the processing of each to-be-remembered item would be reflected in a different gamma cycle of a gamma rhythm that is itself locked to the theta rhythm. Thus, if a person rehearses a seven-digit phone number, there should be seven gamma cycles per theta cycle, and each gamma cycle would represent the timing of the brain's processing of a different digit in the series. Likewise, the rehearsal of four facial images would be associated with four gamma cycles per theta cycle, whereas the rehearsal of one facial image would be associated with only one gamma cycle per theta cycle. The Axmacher et al. findings are interpreted as supporting this model, in which every gamma cycle in a sequence reflects the processing of an independent object of consciousness in the underlying neural ensemble. These rehearsed neural ensemble objects are then synchronized to the theta rhythm in the hippocampus during the brain's working memory processing.50

However, the Axmacher et al. finding of an invariant small whole number frequency ratio across the memory load conditions does contradict one detail of the earlier Jensen and Lisman model. Jensen and Lisman had proposed that the theta rhythm should slow to accommodate more rehearsed items but the gamma rhythm should not, as gamma was assumed to have a constant frequency in this model, so whole number ratios would not be maintained between these two frequencies. In contrast, the Axmacher et al. finding that the theta and gamma rhythms are organized in invariant whole number frequency ratios suggests that this EEG rhythmic structure is similar to metric rhythmic structures seen, for example, in western music, where quarter and sixteenth notes exhibit an approximately constant 4:1 duration ratio across varying tempos. Wavelet analysis is typically used in studies like Axmacher et al. because it is sensitive to such temporal organization and variation in rhythms.
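The difference between the two models can be expressed as simple ratio arithmetic. The sketch below contrasts a fixed 28 Hz gamma rhythm (the Jensen and Lisman assumption) with a gamma rhythm locked at four times theta (the Axmacher et al. pattern), using a hypothetical slowing of theta from 7 Hz to 6 Hz. It also shows the musical analogue, in which quarter and sixteenth notes keep a 4:1 duration ratio at any tempo; at 420 beats per minute a quarter note lasts 1/7 s, the same as one cycle of a 7 Hz theta rhythm. Function names are hypothetical.

```python
from fractions import Fraction

def gamma_cycles_per_theta(theta_hz, gamma_hz):
    """Number of gamma cycles per theta cycle, kept as an exact fraction."""
    return Fraction(gamma_hz) / Fraction(theta_hz)

def note_durations_s(bpm):
    """Quarter-note and sixteenth-note durations (s) at a tempo in beats per minute."""
    quarter = Fraction(60, bpm)
    return quarter, quarter / 4

# Hypothetical slowing of theta from 7 Hz to 6 Hz as memory load increases
for theta_hz in (7, 6):
    fixed = gamma_cycles_per_theta(theta_hz, 28)             # fixed-gamma model
    locked = gamma_cycles_per_theta(theta_hz, 4 * theta_hz)  # gamma locked at 4 x theta
    print(theta_hz, fixed, locked)
# prints:
# 7 4 4
# 6 14/3 4
```

Under the fixed-gamma model, any slowing of theta breaks the whole number ratio (14/3 here), whereas the locked model keeps it at 4, just as a change of musical tempo leaves the quarter-to-sixteenth ratio unchanged.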

Another interesting finding in the Axmacher et al. study was significant coupling between very low-frequency delta (1–4 Hz) and beta (14–20 Hz) rhythms, although this coupling did not increase during the Sternberg maintenance rehearsal phase compared to baseline. Thus, other faster rhythms may be organized relative to slower beat rhythms, in analogy with metrical rhythms in music, so this metrical rhythm structure would not be expected to be unique to gamma and theta synchrony. Indeed, this metrical rhythm organization may be present across diverse cognitive operations in the brain and may not be unique to the brain's working memory rehearsal.

The Axmacher et al. findings are based upon within-subject comparisons of hippocampal recordings during working memory rehearsal in humans. Kaminski, Brzezicka and Wróbel51 also studied the association between human working memory and the gamma/theta ratio, but they used scalp recordings and were interested in between-subject comparisons. Kaminski et al. report that individual differences in working memory capacity, as measured in a modified Wechsler digit span task, are directly correlated with the gamma/theta ratio. This digit span test assesses one's skill in successfully reproducing serially presented digits such as a phone number, so it is also a measure of working memory rehearsal ability. Lisman and Idiart52 (1995) had originally proposed that the gamma/theta ratio determines the capacity of human working memory, and Kaminski et al. interpreted their finding as supporting the Lisman and Idiart hypothesis. Across Kaminski et al.'s 17 subjects, digit span capacity varied between four and eight, and there was close to a one-to-one relationship with the gamma/theta ratio, although the relationship was more variable at the higher ratios. The EEG was recorded from mid-frontal scalp electrodes, which would be expected to yield more noise than the hippocampal measure of Axmacher et al. The higher gamma/theta ratios also would be expected to be noisier, and between-subject comparisons in general have greater noise than within-subject comparisons. Thus, greater noise could explain the lack of a perfect 1:1 relationship between the number of memory items and the number of gamma cycles coupled to theta in this study. The other possibility is that the best performers chunked two or more digits together, so that they could rehearse more information more rapidly while each gamma cycle still represented one rehearsal item. Still, overall these findings support the notion that gamma/theta coupling reflects the rhythm of working memory processing, including rehearsal processing.

Oscillating neural synchrony mechanisms, as reflected in rhythmic coupling between theta (4–8 Hz) and gamma rhythms in the 25–30 Hz range, are proposed to reflect a mechanism that allows the hippocampus and associated medial temporal lobe structures to place shorter term working memory patterns into a longer term store and to retrieve such patterns when needed.53 When the brain rehearses or maintains items like faces in working memory, it likely does not store all features in rote detail. Instead, it likely stores specific, more pronounced features such as a large nose. In addition, it may chunk many facial features by storing larger associations to a face, such as whom the person resembles. Thus, it is reasonable to expect that the brain stores the features and associations that it processes most deeply. In other words, this feedback process, which ultimately stores the most important and most meaningful information, may be a feature selection storage process akin to an Explicit RELR online sequential learning process that is driven very much by prior meaningful memories. In fact, the learning of new episodic memories has long been known to be enhanced by depth of processing that can utilize the prior meaningfulness of the to-be-remembered items.54

A similar rhythmic organization in lower frequency brain signals below roughly 30 Hz has been observed in brain structures outside the medial temporal lobe and during a variety of tasks that involve more than just working memory. For example, Carlqvist and collaborators55 extracted alpha (7.5–12.5 Hz) and beta (15–25 Hz) rhythms from resting scalp-recorded EEG in human subjects. They found approximately a 1:2 frequency ratio between the alpha and beta oscillations. The spectral power correlation between these rhythms was strongest at posterior electrodes, where both rhythms also had the most power. Thus, the alpha and beta rhythms were coupled much like theta and gamma in invasive hippocampal recordings, but with a different whole number frequency ratio. Nikulin and Brismar also report 1:2 ratios between alpha and beta frequencies in a larger study of 176 adults during resting EEG.56 Palva, Palva and Kaila studied noninvasive MEG in humans at rest and during mental arithmetic with two or three digits.57 They found that phase synchrony between alpha and beta or gamma was prominent mainly in the right parietal region during the mental arithmetic task, where there was a 2:1 or 3:1 frequency ratio of beta (20 Hz) or gamma (30 Hz) to alpha (10 Hz), with phase locking between the two rhythms. The higher frequency ratio was most prominent in the more difficult mental arithmetic task involving three digits. This Palva et al. finding again supports the idea that the number of gamma cycles per lower frequency cycle may reflect the neural ensemble's processing rhythm for separate conscious objects computed through oscillating synchrony mechanisms. In this case, however, the lower frequency rhythm is the alpha rhythm, the objects are mental arithmetic items, and the oscillating synchrony appears in the right parietal region.
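Cross-frequency phase locking of the kind reported by Palva et al. is commonly quantified with an n:m phase-locking value, the magnitude of the average of exp(i(n·φ_slow − m·φ_fast)), which approaches 1 when the fast rhythm completes n cycles for every m cycles of the slow rhythm with a stable phase relation. The sketch below computes it for a synthetic 10 Hz alpha phase paired with a 20 Hz beta phase that is either 2:1 locked (with small jitter) or drifting independently. It assumes the instantaneous phases are already known; with real recordings they would first be extracted, for example by band-pass filtering followed by a Hilbert or wavelet transform. Signal parameters and function names are hypothetical.

```python
import cmath
import math
import random

def nm_phase_locking(phase_slow, phase_fast, n, m):
    """n:m phase-locking value in [0, 1] for a fast:slow frequency ratio of n:m.

    Phases are in radians; values near 1 indicate a stable n:m phase relation.
    """
    terms = [cmath.exp(1j * (n * ps - m * pf)) for ps, pf in zip(phase_slow, phase_fast)]
    return abs(sum(terms) / len(terms))

rng = random.Random(0)
fs_hz, duration_s = 1000, 2.0
times = [k / fs_hz for k in range(int(fs_hz * duration_s))]
alpha_phase = [2 * math.pi * 10 * t for t in times]               # 10 Hz alpha
beta_locked = [2 * a + rng.gauss(0.0, 0.3) for a in alpha_phase]  # 20 Hz, 2:1 locked with jitter

beta_free, drift = [], 0.0
for t in times:
    drift += rng.gauss(0.0, 0.5)  # independent random-walk phase drift
    beta_free.append(2 * math.pi * 20 * t + drift)

plv_locked = nm_phase_locking(alpha_phase, beta_locked, n=2, m=1)  # close to 1
plv_free = nm_phase_locking(alpha_phase, beta_free, n=2, m=1)      # much smaller
```

Both beta signals have the same average frequency; only the stability of the 2:1 phase relation distinguishes them, which is what the n:m index measures.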

Roopun58 and collaborators comment that mental arithmetic effects like those observed by Palva et al. might be explained by a memory matching and attention model proposed by Sauseng and associates.59 Sauseng et al. find that during attention to a visual target, there is enhanced synchrony between a gamma rhythm (e.g. a 30 Hz signal) and a lower frequency rhythm (e.g. an alpha or theta rhythm). They suggest that the gamma and lower frequency alpha or theta rhythms represent bottom-up and top-down processing, respectively. This is interpreted as memory matching between incoming visual information and stored top-down information. Such a mechanism would highlight the role of oscillatory brain activity in integrating attention and memory processes in working memory. In this mechanism, the increased interregional synchrony of the lower frequency theta or alpha rhythm seems to be important in setting the temporal rhythm and duration of this conscious cognitive process, whereas each cycle of the higher frequency beta or gamma rhythms up to about 30 Hz may represent a separate object of working memory. Small whole number frequency ratios between these rhythms would be needed to give rise to stable, repeating standing wave oscillating synchrony effects in an explicit cognitive mechanism. If bottom-up processing is taken to be the implicit cognitive processing in attention that performs feature reduction, and top-down processing is assumed to be the explicit cognitive processing that recalls a given object or feature from memory, then this type of matching mechanism possibly could resemble RELR's sequential online learning or RELR's outcome score matching process. This is because both of these RELR matching processes can be modeled as interplays between implicit and explicit processes. This suggestion is speculative, and establishing any resemblance to the brain's sequential and simultaneous matching processes would require empirical data such as those that may be obtained in the BAM project over the coming years. But RELR at least allows putative neural ensemble matching mechanisms to be imagined, which may be a starting point for hypothesis testing with the better data afforded by the BAM project.

Roopun et al. also comment that irrational frequency ratios often exist between lower and higher frequency rhythms in EEG field potentials, and thus give rise to nonstationary and nonrepeating signals. If one accepts the Buzsáki hypothesis outlined previously, that feedback signaling in the brain represents conscious processing whereas feed-forward signaling represents nonconscious processing, then a simple interpretation is possible. The irrational frequency ratios might reflect underlying feed-forward traveling waves in implicit unconscious cognitive processes that never resonate with the more stable, explicit, conscious periodic standing wave signals. On the other hand, the low-frequency brain rhythms that are related by simple whole number frequency ratios to higher frequency rhythms would be implicit unconscious signals that do resonate with conscious object perception and memory events to produce explicit learning. This could occur through a bottom-up/top-down matching mechanism like the one discussed by Roopun et al., which is also akin to RELR's model of online sequential learning. Again, empirical tests of these hypothetical neural computation mechanisms might be possible with BAM data in coming years.
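Why rational ratios yield repeating patterns and irrational ones do not can be checked directly: at the end of each slow cycle k, the fast oscillator has completed k·r cycles, and the joint pattern repeats only when k·r is a whole number. The sketch below, with a hypothetical function name, tracks how close k·r comes to a whole number for a 3:2 ratio and for √2. Strictly, a floating-point √2 is itself rational, but its repeat time lies far beyond any horizon examined here.

```python
import math
from fractions import Fraction

def closest_return(ratio, max_slow_cycles=1000):
    """Smallest distance (in fast cycles) from a whole number of fast cycles,
    checked at the end of each slow cycle k = 1..max_slow_cycles.
    A result of 0 means the two-oscillator pattern repeats exactly."""
    best = 1.0
    for k in range(1, max_slow_cycles + 1):
        fast_cycles = k * ratio
        best = min(best, abs(fast_cycles - round(fast_cycles)))
    return best

print(closest_return(Fraction(3, 2)))  # 0: the pattern repeats every 2 slow cycles
print(closest_return(math.sqrt(2)))    # small but never 0 within 1000 cycles
```

With an irrational ratio the pattern comes ever closer to repeating without ever doing so, which is the nonrepeating behavior described above.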

7 Higher Frequency Gamma Oscillations

The available evidence suggests that lower frequency brain electromagnetic field signals, roughly at or below 30 Hz, can have a music-like metric rhythm structure and may correspond to the temporal events in conscious cognitive experience. However, low-frequency brain signals could not carry information at a rate that even closely approximates the maximal neural firing rate of 1000 Hz. On the other hand, higher frequency gamma signals above 30 Hz could carry information close to this rate, as invasive recordings suggest that gamma signals extend to at least 500 Hz in humans. Since middle C in Western music has a fundamental frequency of approximately 264 Hz, higher frequency gamma signals are in the same frequency range as the tones that form the harmonic and melodic structure of music. This high-frequency tonal structure is experienced as the most informative aspect of music, and it seems to express conscious emotional and cognitive events in a pure language of oscillating synchrony. This view is echoed in Schopenhauer's famous suggestion that the higher tones embodied in the melodic voice represent the “uninterrupted significant connection of one thought from beginning to end” in the “intellectual life and endeavor of man”. Thus, a very intriguing question is whether the brain's very high-frequency gamma field potential signals are rhythmically organized relative to lower frequency rhythms in a way that is analogous to how higher frequency musical tones are organized with respect to rhythmic lower frequency note patterns. A related question is whether this music-like structure can be observed during informative explicit cognitive processing.
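As a quick check on the musical frequencies mentioned above, the Python sketch below computes middle C (C4) from the common A4 = 440 Hz reference in two tuning systems. The 264 Hz value corresponds to just intonation, where A4 lies a 5:3 major sixth above C4, while 12-tone equal temperament gives about 261.6 Hz; either way the note falls inside the 30 to 500 Hz gamma range quoted in the text. The A4 reference and the function name are assumptions for illustration.

```python
import math

A4_HZ = 440.0  # common concert-pitch reference (assumption)


def equal_tempered(semitones_from_a4: int, a4_hz: float = A4_HZ) -> float:
    """Frequency of a note n semitones from A4 in 12-tone equal temperament."""
    return a4_hz * 2 ** (semitones_from_a4 / 12)


middle_c_equal = equal_tempered(-9)  # C4 is 9 semitones below A4
middle_c_just = A4_HZ * 3 / 5        # just major sixth: A4 / C4 = 5 / 3

gamma_low_hz, gamma_high_hz = 30.0, 500.0  # gamma range quoted in the text
octaves_spanned = math.log2(gamma_high_hz / gamma_low_hz)

print(f"{middle_c_equal:.2f} Hz, {middle_c_just:.0f} Hz, {octaves_spanned:.2f} octaves")
```

This prints 261.63 Hz, 264 Hz, and 4.06 octaves: the gamma range quoted in the text spans roughly four octaves and comfortably contains the register of a melodic voice.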

The problem is that higher frequency gamma signals are the hardest to record, either invasively or noninvasively, because of the low-pass filtering properties of brain and scalp tissue. Despite this constraint, there is a large and growing body of evidence that higher frequency gamma signals do reflect higher order explicit cognitive processing that would properly be labeled perception or thought. Recall that the original observation in the 1980s that led to Singer's proposal that gamma oscillations represent perception was based on highly periodic, synchronized 40 Hz gamma oscillations in cat visual cortex. Similar highly periodic gamma oscillations are observed at higher cognitive levels in humans, but they often have much higher frequencies.
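Why low-pass filtering hits gamma hardest can be illustrated with the simplest textbook model, a first-order low-pass filter whose amplitude gain falls as 1/sqrt(1 + (f/fc)^2). This is only a toy: real brain and scalp tissue is not a single RC stage, and the 40 Hz cutoff below is a hypothetical value rather than a measured one. The sketch simply shows that, under such a filter, an 80 Hz or 288 Hz component is attenuated far more than an alpha-band component.

```python
import math

CUTOFF_HZ = 40.0  # hypothetical cutoff frequency, chosen only for illustration


def first_order_lowpass_gain(f_hz: float, fc_hz: float = CUTOFF_HZ) -> float:
    """Amplitude gain |H(f)| of an ideal first-order low-pass filter."""
    return 1.0 / math.sqrt(1.0 + (f_hz / fc_hz) ** 2)


# An alpha-band frequency, then gamma frequencies mentioned in the text
for f in (10.0, 40.0, 80.0, 288.0):
    g = first_order_lowpass_gain(f)
    print(f"{f:6.0f} Hz: gain {g:.3f} ({20 * math.log10(g):.1f} dB)")
```

Under this toy model the 288 Hz component keeps under 14% of its amplitude while the 10 Hz component keeps about 97%, which is one way to see why the highest gamma frequencies are usually recorded invasively.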

As one example, Gaona and associates show that, in recordings from subdural and dural electrodes placed over left hemisphere cortical language areas, gamma activity around 80 Hz is more pronounced while speaking a set of words, whereas gamma activity around 288 Hz is more pronounced while hearing the same set of words.60 Higher frequency gamma signals may also show aspects of music-like organization, in that they are rhythmically organized relative to lower frequency rhythms. For example, Jacobs and Kahana61 show that the neural representation of individual letter stimuli in humans may be revealed by higher gamma-band invasive EEG activity (up to 128 Hz). Gamma-band activity phase locked to theta increased at specific electrodes and in response to specific letters. The gamma activation in the occipital cortex seemed specific to the physical properties of the letter, because the activation pattern across electrodes was similar for physically similar letters. In other brain regions such as the temporal cortex, this physical specificity was not observed; instead, the activation seemed to be specific to the abstract symbol. This is evidence that high-frequency gamma signals are phase locked to the underlying theta rhythm and are activated during both data-driven physical stimulus processing and semantic processing in the cerebral cortex.
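The comparison of activation patterns across electrodes can be sketched as a correlation between per-letter vectors of gamma power. The Python example below is not Jacobs and Kahana's analysis; the four-electrode values are invented purely to show the computation, with the letters E and F standing in for a physically similar pair and O for a physically dissimilar letter.

```python
import math


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two equal-length activation patterns."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    cov = sum(a * b for a, b in zip(dx, dy))
    return cov / math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))


# Hypothetical gamma-power increases at four occipital electrodes (arbitrary units)
patterns = {
    "E": [0.9, 0.2, 0.7, 0.1],
    "F": [0.8, 0.3, 0.6, 0.1],  # shares most strokes with E
    "O": [0.1, 0.9, 0.2, 0.8],  # shares few strokes with E
}

print(f"r(E, F) = {pearson(patterns['E'], patterns['F']):.2f}")
print(f"r(E, O) = {pearson(patterns['E'], patterns['O']):.2f}")
```

In this toy setup a region whose activation tracks physical form yields a high correlation for E and F and a negative one for E and O; a region tracking the abstract symbol would not be expected to follow stroke overlap in this way.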

Other studies also show that high-frequency gamma signals are phase locked to lower frequency theta or alpha rhythms and that this coupling varies with the cognitive task. For example, Canolty and colleagues62 found that high gamma activity (80–150 Hz) in invasive EEG recordings from neocortical temporal, parietal, and frontal regions was phase locked to theta (4–8 Hz), with stronger modulation occurring at higher theta amplitudes. Different tasks elicited different spatial patterns of theta/gamma coupling. The tasks included an auditory Sternberg phoneme working memory task, passive listening to tones or phonemes, auditory-tactile target detection, linguistic target detection, and verb generation. Another important finding was that tasks with overlapping behavioral components exhibited more similar spatial patterns of theta/gamma coupling. Voytek and associates63 replicated this Canolty et al. finding on gamma/theta coupling. They also studied gamma/alpha coupling and found that it has broadly similar properties. The exception was that gamma/theta coupling was more anterior and more likely to be observed in nonvisual tasks than gamma/alpha coupling. These studies add to the impressive body of evidence that high-frequency gamma signals are temporally organized with respect to lower frequency rhythms during conscious cognitive processing.
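Theta/gamma phase-amplitude coupling of the kind Canolty and colleagues reported is commonly quantified with a mean-vector-length measure: each sample of high gamma amplitude A(t) is treated as a vector pointing at the theta phase φ(t), and the modulation index is |mean(A(t)·exp(iφ(t)))|. The Python sketch below assumes the phase and amplitude series have already been extracted (in practice by band-pass filtering and a Hilbert transform, both omitted here) and uses synthetic, noise-free data; it also leaves out the surrogate-data normalization that published analyses typically add. The sampling rate, duration, and modulation depths are hypothetical.

```python
import cmath
import math

FS = 1000         # sampling rate in Hz (hypothetical)
DURATION_S = 2.0  # chosen so every rhythm below completes whole cycles
THETA_HZ = 6.0


def modulation_index(theta_phase: list[float], gamma_amp: list[float]) -> float:
    """Mean vector length |mean(A(t) * exp(i * phi(t)))| for phase-amplitude coupling."""
    z = sum(a * cmath.exp(1j * p) for p, a in zip(theta_phase, gamma_amp))
    return abs(z) / len(theta_phase)


t = [k / FS for k in range(int(FS * DURATION_S))]
phase = [2 * math.pi * THETA_HZ * tk for tk in t]

# Coupled: gamma amplitude peaks at theta phase 0
coupled_amp = [1.0 + 0.8 * math.cos(p) for p in phase]
# Uncoupled: gamma amplitude waxes and wanes at 1.5 Hz, independent of theta phase
uncoupled_amp = [1.0 + 0.8 * math.cos(2 * math.pi * 1.5 * tk) for tk in t]

mi_coupled = modulation_index(phase, coupled_amp)
mi_uncoupled = modulation_index(phase, uncoupled_amp)
print(f"coupled MI   = {mi_coupled:.3f}")
print(f"uncoupled MI = {mi_uncoupled:.3f}")
```

For an amplitude of the form 1 + m·cos φ the index equals m/2 (0.4 here), while the uncoupled series gives essentially zero even though its gamma amplitude fluctuates just as much.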

One notable problem in today's Big Data machine learning is that parallel processing mechanisms are much more difficult to implement than serial processing. This is largely because the rhythm and timing of the separate computational components need to be well synchronized, in much the same way that a conductor must ensure that the various sections of a symphony orchestra play together. Perhaps the brain's constant exchange between explicit and implicit cognitive processing overcomes this problem through rhythmic structure across time that keeps its parallel components well harmonized, in ways that could be a good model for machine learning.
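In conventional parallel programming, the closest analogue of the conductor is a barrier: no worker may begin the next step until every worker has finished the current one. The Python sketch below uses the standard library's `threading.Barrier` to keep a few hypothetical workers in lockstep over several "beats". It illustrates only the coordination constraint mentioned in the text, not any brain mechanism, and CPython threads interleave rather than execute Python code truly in parallel.

```python
import threading


def run_in_lockstep(n_workers: int, n_beats: int) -> list[tuple[int, int]]:
    """Each worker logs (beat, worker_id) and then waits at a barrier, so every
    worker finishes beat b before any worker starts beat b + 1."""
    barrier = threading.Barrier(n_workers)
    log_lock = threading.Lock()
    log: list[tuple[int, int]] = []

    def worker(worker_id: int) -> None:
        for beat in range(n_beats):
            with log_lock:  # guard the shared log; real work for this step would go here
                log.append((beat, worker_id))
            barrier.wait()  # the "conductor": all workers align before the next beat

    threads = [threading.Thread(target=worker, args=(w,)) for w in range(n_workers)]
    for th in threads:
        th.start()
    for th in threads:
        th.join()
    return log


log = run_in_lockstep(n_workers=4, n_beats=3)
print([beat for beat, _ in log])  # beats never interleave: all 0s, then 1s, then 2s
```

Removing the `barrier.wait()` call lets fast workers run ahead, so beat numbers can interleave in the log; keeping every component aligned is the synchronization cost that makes parallel designs harder than serial ones.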

So the brain may use a recognizably music-like structure to organize its massively parallel signaling into some semblance of conscious cognitive order. These raw field potential signals certainly do not sound like music when they are recorded, because a large amount of noise is always present. Yet when they are filtered, averaged, or recorded locally in a way that amplifies the signal and reduces the noise, rhythmic and periodic properties become clear. These surprising discoveries of oscillating neural synchrony with essentially music-like structure during conscious human cognition seem to harmonize the most grandiose mystical speculations of Pythagoras and Schopenhauer in a leitmotif that is central to modern neuroscience. A constant interplay between consonance and dissonance of underlying explicit and implicit neural ensembles throughout the brain is also consistent with recent evidence that emotion seems to be a property of many simultaneously activated brain regions.64 Unfortunately, the working memory neural ensemble is not always capable of resolving underlying irrationality and dissonance in ways that even remotely compare to a well-harmonized string ensemble playing a Bach fugue. That is the subject of the next chapter.