Management and configuration

This chapter describes enhancements in z/OS that help to simplify storage management by leaving it to the system to manage storage automatically and autonomically. The foundation for this goal is system-managed storage, which has been available since 1989. Enhancements over the years, up to today’s z/OS Version 2 level, have refined the storage software within z/OS.

The focus of this chapter is on storage pool designs and specific enhancements that were jointly developed by the z/OS and DS8880 storage system development teams to achieve more synergy between both units.

This chapter includes the following topics:

4.1 Storage pool design considerations

Storage pool design considerations were always a source of debate. Discussions originated in the early days when customers discovered that they could not manually manage the growing number of their disk-based volumes.

IBM responded to this challenge by introducing system-managed storage and its corresponding system storage software. The approach was to no longer focus on volume awareness and instead turn to a pool concept. The pool was the container for many volumes and disk storage was managed on a pool level.

Eventually, storage pool design considerations also evolved with the introduction of storage systems, such as the DS8000 storage system, which offered other possibilities.

This section covers the system-managed storage and DS8000 views, and how both are combined to contribute to the synergy between the IBM Z server and DS8880 storage systems.

4.1.1 Storage pool design considerations within z/OS

System storage software, such as Data Facility Storage Management Subsystem (DFSMS), manages information by creating a file or data set, setting its initial placement in the storage hierarchy, and managing it through its entire lifecycle until the file is deleted. The z/OS Storage Management Subsystem (SMS) can automate storage management tasks and reduce the related costs. This automation is achieved through policy-based data management, availability management, space management, and even performance management, which DFSMS provides autonomically.

Ultimately, it is complemented by the DS8000 storage system and its rich variety of functions that work well with DFSMS and its components, as described in 4.1.4, “Combining SMS storage groups and DS8000 extent pools” on page 48.

This storage management starts with the initial placement of a newly allocated file within a storage hierarchy. It includes consideration for storage tiers in the DS8000 storage system where the data is stored. SMS assigns policy-based attributes to each file. Those attributes might change over the lifecycle of that file.

In addition to logically grouping attributes, such as the SMS Data Class (DC), SMS Storage Class (SC), and SMS Management Class (MC) constructs, SMS uses the concept of Storage Group (SG).

Finally, files are assigned to an SG or a set of SGs. Ideally, the criteria for where to place the file are dictated solely by the SC attributes, and the placement is controlled by the last Automatic Class Selection (ACS) routine.

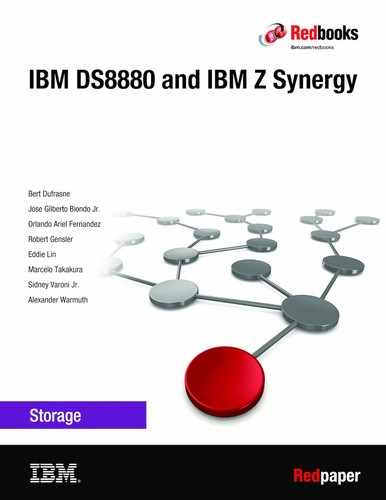

A chain of ACS routines begins with an optional DC ACS routine, and a mandatory SC ACS routine that is followed by another optional MC ACS routine. This chain of routines is concluded by the SG ACS routine. The result of the SG ACS routine culminates in a list of candidate volumes where this file is placed.

Sophisticated logic builds this candidate volume list. z/OS components and measurements are then used to review the list and identify the optimal volume for the file.

Therefore, the key construct from a pooling viewpoint is the SMS SG. The preferred approach is to create only a few SMS SGs and populate each SMS SG with as many volumes as possible. This approach delegates to the system software the control for how to use and populate each single volume within the SMS SG.

The system software includes all of the information about the configuration and the capabilities of each storage technology within the SMS SG. Performance-related service-level requirements can be addressed by SC attributes.

The SMS ACS routines and their execution sequence are shown in Figure 4-1, along with a typical SMS environment. The SC ACS routine is typically responsible for satisfying performance requirements for each file by assigning a particular SC to each file. The SC ACS routine fragment (see Figure 4-1) selects, through filter lists, certain data set name structures to identify files that need a particular initial placement within the SMS storage hierarchy. Also shown in Figure 4-1 are the storage technology tiers.

Figure 4-1 SMS ACS routines and SMS storage hierarchy

For more information about SMS constructs and ACS routines, see z/OS DFSMSdfp Storage Administration Version 2 Release 2, SC23-6860-02 or IBM Knowledge Center.

How the SG ACS routine assigns one or more SGs to a newly allocated file is shown in Figure 4-2. Also shown is that, in this hypothetical scenario, an SG contains volumes that are based on a homogeneous storage technology only.

Figure 4-2 SMS SG ACS routine

Typically, you use this kind of storage pool management when a specific application always needs the fastest available storage. Independently of the I/O rate and size of each I/O, you want to protect the data from being moved to a less powerful storage level.

In this example, you might assign an SC CRIT and place the corresponding files in the FLASH SG. The FLASH SG contains only volumes that are in an extent pool with flash storage exclusively. This case might be extreme, but it is justified for an important application that requires the highest available performance in any respect.
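The ACS chain and the CRIT/FLASH example can be sketched in a few lines of Python. This is only an illustration of the control flow, not ACS language: the filter patterns, the `STANDARD` SC, and the `HYBRID` SG are hypothetical names that are introduced here, and real ACS routines are written and activated through SMS.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import Optional, Tuple

# Hypothetical filter list of data set name patterns for critical data.
CRITICAL_FILTER = ("PROD.PAYROLL.*", "PROD.DB.LOG.*")


@dataclass
class Allocation:
    dsname: str
    data_class: Optional[str] = None
    storage_class: Optional[str] = None
    management_class: Optional[str] = None
    storage_groups: Tuple[str, ...] = ()


def dc_routine(alloc: Allocation) -> None:
    """Optional DC routine: this sketch assigns no data class."""


def sc_routine(alloc: Allocation) -> None:
    """SC routine: choose the storage class from the data set name."""
    critical = any(fnmatchcase(alloc.dsname, p) for p in CRITICAL_FILTER)
    alloc.storage_class = "CRIT" if critical else "STANDARD"  # STANDARD is hypothetical


def mc_routine(alloc: Allocation) -> None:
    """Optional MC routine: this sketch assigns no management class."""


def sg_routine(alloc: Allocation) -> None:
    """SG routine: placement is driven by the SC only."""
    if alloc.storage_class == "CRIT":
        alloc.storage_groups = ("FLASH",)
    else:
        alloc.storage_groups = ("HYBRID",)  # hypothetical default SG


def run_acs_chain(dsname: str) -> Allocation:
    """Run the routines in the order DC, SC, MC, SG."""
    alloc = Allocation(dsname)
    for routine in (dc_routine, sc_routine, mc_routine, sg_routine):
        routine(alloc)
    return alloc


if __name__ == "__main__":
    print(run_acs_chain("PROD.PAYROLL.MASTER"))
    print(run_acs_chain("TEST.SCRATCH.DATA"))
```

Keeping the SG decision dependent on the SC alone mirrors the recommendation that placement criteria be dictated by the SC attributes.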

Note: In the newer DS8880 storage systems, a best practice is to create hybrid extent pools and enable Easy Tier on them for systems with multitier hybrid configurations. By doing so, the DS8880 microcode handles the data placement within the multiple storage tiers that belong to the extent pools for best performance results.

4.1.2 z/OS DFSMS class transition

Starting with z/OS 2.1, DFSMS (and specifically Data Facility Storage Management Subsystem Hierarchical Storage Manager (DFSMShsm)) is enhanced to support a potential class transition in an automatic fashion, which enables relocating a file within its L0 storage level from an SG into another SG. This relocation is performed during DFSMShsm automatic space management and is called class transition because ACS routines are exercised again during this transition process.

Based on a potentially newly assigned SC, MC, or a combination of both, the SG ACS routine then assigns a new SG. This group might be different from the previous SG because of the SC that was newly assigned within this transition process. This policy-based data movement between SCs and SGs is a powerful function that is performed during DFSMShsm primary space management and by on-demand migration and interval migration.

Note: During initial file allocation, SMS can select the correct SG. However, the performance requirements might change for this file over time and a different SG might be more appropriate.

For more information, see the following publications:

•z/OS DFSMS Using the New Functions Version 2 Release 1, SC23-6857

•z/OS DFSMS Using the New Functions Version 2 Release 2, SC23-6857

With z/OS V2.2, various migrate commands are enhanced to support class transitions at the data set, volume, and SG level. For more information, see IBM z/OS V2R2: Storage Management and Utilities, SG24-8289.

4.1.3 DS8000 storage pools

The DS8000 architecture also understands the concept of pools or SGs as extent pools.

The concept of extent pools within the DS8000 storage system also evolved over time from homogeneous drive technologies within an extent pool to what today is referred to as a hybrid extent pool, with heterogeneous storage technology within the same extent pool. The use of hybrid extent pool is made possible and efficient through the Easy Tier functions.

With Easy Tier, you can autonomically use the various storage tiers in the most optimal fashion, based on workload characteristics. This goal is ambitious, but Easy Tier for the DS8880 storage system evolved over the years.

In contrast to SMS, where the unit of management is a file or data set, the unit of management within Easy Tier is a DS8880 extent. When large extents are used, the Count Key Data (CKD) extent size is the equivalent of a 3390-1 volume: 1113 cylinders, or approximately 0.946 GB.

When small extents are used, these extents are 21 cylinders, or approximately 17.85 MB, but are grouped in extent groups of 64 small extents for Easy Tier management. Automatic Easy Tier management within the DS8880 storage system migrates extents between technologies within the same extent pool or within an extent pool with homogeneous storage technology.
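The extent sizes quoted above follow from the 3390 geometry. The sketch below assumes the standard 3390 values of 15 tracks per cylinder and 56,664 bytes per track (neither value is stated in this section) and uses decimal GB and MB.

```python
# Assumed 3390 geometry (not stated in the text above).
TRACKS_PER_CYLINDER = 15
BYTES_PER_TRACK = 56_664

LARGE_EXTENT_CYLINDERS = 1113
SMALL_EXTENT_CYLINDERS = 21
SMALL_EXTENTS_PER_GROUP = 64  # Easy Tier manages small extents in groups of 64


def cylinders_to_bytes(cylinders: int) -> int:
    """Convert a cylinder count to bytes by using the assumed 3390 geometry."""
    return cylinders * TRACKS_PER_CYLINDER * BYTES_PER_TRACK


large_extent_bytes = cylinders_to_bytes(LARGE_EXTENT_CYLINDERS)
small_extent_bytes = cylinders_to_bytes(SMALL_EXTENT_CYLINDERS)
extent_group_bytes = SMALL_EXTENTS_PER_GROUP * small_extent_bytes

if __name__ == "__main__":
    print(f"large extent: {large_extent_bytes / 1e9:.3f} GB")
    print(f"small extent: {small_extent_bytes / 1e6:.2f} MB")
    print(f"extent group: {extent_group_bytes / 1e9:.3f} GB")
```

Under these assumptions, one large extent corresponds to exactly 53 small extents (53 x 21 = 1113 cylinders).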

For more information, see IBM DS8000 Easy Tier (for DS8880 R8.5 or later), REDP-4667.

4.1.4 Combining SMS storage groups and DS8000 extent pools

When comparing SMS storage tiering and DS8000 storage tiering, each approach has its own strength. SMS-based tiering addresses application and availability needs through initial data set or file placement according to service levels and policy-based management. It also gives control to the user and application regarding where to place the data within the storage hierarchy.

With DFSMS class transition, files can automatically transition between different SMS SGs. This capability currently requires that the file is not open to an active application. From that standpoint, Easy Tier can relocate DS8000 extents without affecting a file that might be in use by an application.

Easy Tier does not understand an application’s needs when the data set is created. Its intention is to use the available storage technology in an optimal fashion by using flash memory when the performance effect of hard disk drive (HDD)-based technology with its latency might negatively affect an application.

SMS storage tiering and DS8000 storage tiering are compared in Figure 4-3.

Figure 4-3 Comparison of SMS tiering and DS8000 tiering

Combining both tiering approaches is possible, as shown in Figure 4-4.

Figure 4-4 SMS storage groups with and without mixed storage technology

Four potential SMS SGs are shown in Figure 4-4 on page 48. In this example, SG Flash is intended to store crucial and important files that are latency sensitive. Therefore, this SG has its volumes solely defined in a DS8000 extent pool that consists of only flash memory, only solid-state drive (SSD) technology, or both.

Through SMS class transition, storage administration can automatically relocate files according to the application’s needs and requirements. This feature might be especially helpful for important applications that run only at month-end or quarterly but then must have the best available performance that the configuration can provide.

The Ent SG has all of its volumes defined within DS8000 extent pools that contain enterprise level HDDs (SAS-based 10 K / 15 K RPM). Typically, HDDs provide sufficient performance and IBM Z controlled I/O uses the DS8000 cache storage so efficiently that it can provide good I/O performance because of high cache-hit ratios.

A nearline SMS SG uses high-capacity 7200 RPM SAS-based HDDs. All related volumes are defined in DS8000 extent pools that contain 7.2 K HDDs only. To that storage tier, SMS can direct files that do not need high performance and do not require the least I/O service time.

The fourth SMS SG has all its volumes defined in DS8000 hybrid extent pools, which might contain SAS HDDs and also some flash technology (see Figure 4-4 on page 48). SMS can allocate files here that show a regular load pattern but with different I/O behavior and I/O skews.

However, Easy Tier in automatic mode can see the I/O skew over time and might start moving extents within the pool. The Easy Tier intratier capability relocates certain extents from overloaded ranks to ranks with less activity within the affected extent pool, which balances the back-end workload as much as possible.

Combining the SMS SG and DS8000 Easy Tier tiering approaches might lead to the best achievable results from a total systems perspective. This combination is another example of the tight integration between IBM Z and the DS8000 storage system, which achieves the best possible results in an economical fashion with highly automated and transparent functions that serve IBM Z customers.

4.2 Extended address volume enhancements

Today’s large storage facilities tend to expand to larger CKD volume capacities. Some installations are nearing or are beyond the z/OS limit of 64 K addressable unit control blocks (UCBs) for disk storage. Because of the four-digit device addressing limitation, more capacity must be provided by defining larger CKD volumes, that is, by increasing the number of cylinders per volume.

Currently, an extended address volume (EAV) supports volumes with up to 1,182,006 cylinders (approximately 1 TB).

With the introduction of EAVs, the addressing changed from track to cylinder addressing. The partial change from track to cylinder addressing creates the following address areas on EAVs:

•Track-Managed Space: The area on an EAV that is within the first 65,520 cylinders. The use of the 16-bit cylinder addressing allows a theoretical maximum address of 65,535 cylinders. To allocate more cylinders, you must have a new format to address the area above 65,520 cylinders.

For 16-bit cylinder numbers, the track address format is CCCCHHHH, where:

– CCCC: 16-bit cylinder number

– HHHH: 16-bit track number

•Cylinder-Managed Space: The area on an EAV that is above the first 65,520 cylinders. This space is allocated in multicylinder units (MCUs), which currently have a size of 21 cylinders. A new cylinder-track address format addresses the extended capacity on an EAV.

For 28-bit cylinder numbers, the format is CCCCcccH, where:

– CCCC: The low order 16 bits of a 28-bit cylinder number

– ccc: The high order 12 bits of a 28-bit cylinder number

– H: A 4-bit track number (0 - 14)
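The CCCCcccH layout can be illustrated with a few bit operations. The following sketch treats the address as a 32-bit integer in which the first 16 bits hold the low-order cylinder bits, the next 12 bits hold the high-order cylinder bits, and the last 4 bits hold the track number. The function names are hypothetical and are not part of any z/OS service.

```python
MAX_CYLINDER = (1 << 28) - 1  # largest 28-bit cylinder number
MAX_HEAD = 14                 # track numbers 0 - 14


def encode_track_address(cylinder: int, head: int) -> int:
    """Pack a 28-bit cylinder number and a 4-bit track number as CCCCcccH."""
    if not 0 <= cylinder <= MAX_CYLINDER:
        raise ValueError("cylinder does not fit in 28 bits")
    if not 0 <= head <= MAX_HEAD:
        raise ValueError("track number must be 0 - 14")
    low16 = cylinder & 0xFFFF   # CCCC: low-order 16 bits
    high12 = cylinder >> 16     # ccc: high-order 12 bits
    return (low16 << 16) | (high12 << 4) | head


def decode_track_address(address: int) -> tuple:
    """Unpack a CCCCcccH value into (cylinder, track number)."""
    low16 = (address >> 16) & 0xFFFF
    high12 = (address >> 4) & 0xFFF
    head = address & 0xF
    return (high12 << 16) | low16, head


if __name__ == "__main__":
    for cyl, head in ((65519, 14), (65536, 0), (1_182_005, 14)):
        addr = encode_track_address(cyl, head)
        print(f"cylinder {cyl:>9}, track {head:>2} -> {addr:08X}")
```

For cylinders below 65,536 the high-order 12 bits are zero, so the packed value is identical to the older CCCCHHHH form. This property is why addresses in track-managed space are unaffected by the new format.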

The following z/OS components and products now support 1,182,006 cylinders:

•DS8000 storage system and z/OS V1.12 or later support CKD EAV volume sizes: 3390 Model A: 1 - 1,182,006 cylinders (approximately 1.004 TB addressable storage).

•Configuration granularity:

– 1-cylinder boundary sizes: 1 - 56,520 cylinders

– 1113-cylinder boundary sizes: 56,763 (51 x 1113) - 1,182,006 (1062 x 1113) cylinders
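The granularity rules above can be expressed as a small check. This is a sketch that encodes only the two ranges listed in this section; the function names are hypothetical, and the capacity calculation assumes the standard 3390 geometry of 15 tracks per cylinder and 56,664 bytes per track.

```python
# Assumed 3390 geometry (not stated in the text above).
TRACKS_PER_CYLINDER = 15
BYTES_PER_TRACK = 56_664

ONE_CYLINDER_LIMIT = 56_520   # sizes up to here are on 1-cylinder boundaries
LARGE_BOUNDARY = 1113         # larger sizes are on 1113-cylinder boundaries
LARGE_RANGE_START = 51 * LARGE_BOUNDARY
MAX_CYLINDERS = 1062 * LARGE_BOUNDARY


def is_valid_3390a_size(cylinders: int) -> bool:
    """Check a requested size against the two ranges listed above."""
    if 1 <= cylinders <= ONE_CYLINDER_LIMIT:
        return True
    if LARGE_RANGE_START <= cylinders <= MAX_CYLINDERS:
        return cylinders % LARGE_BOUNDARY == 0
    return False


def capacity_tb(cylinders: int) -> float:
    """Capacity in decimal TB by using the assumed 3390 geometry."""
    return cylinders * TRACKS_PER_CYLINDER * BYTES_PER_TRACK / 1e12


if __name__ == "__main__":
    for size in (56_520, 56_521, 56_763, 1_182_006):
        print(size, is_valid_3390a_size(size))
    print(f"maximum EAV: {capacity_tb(MAX_CYLINDERS):.4f} TB")
```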

The size of a Mod 3/9/A volume can be increased to its maximum supported size by using dynamic volume expansion (DVE). For more information, see 4.3, “Dynamic volume expansion” on page 54.

The volume table of contents (VTOC) allocation method for an EAV volume was changed compared to the VTOC that is used for traditional smaller volumes. The size of an EAV VTOC index was increased four-fold, and now has 8192 blocks instead of 2048 blocks.

Because no space remains inside the Format 1 data set control block (DSCB), new DSCB formats (Format 8 and Format 9) were created to protect programs from seeing unexpected track addresses. These DSCBs are known as extended attribute DSCBs. Format 8 and 9 DSCBs are new for EAV. The Format 4 DSCB also was changed to point to the new Format 8 DSCB.

4.2.1 Data set type dependencies on an EAV

EAV includes several data set type dependencies.

All Virtual Storage Access Method (VSAM) data set types; sequential data sets in Extended, Basic, and Large format; basic direct-access method (BDAM) data sets; partitioned data sets (PDS); partitioned data sets extended (PDSE); VSAM volume data sets (VVDS); and basic catalog structures (BCS) can be placed in the extended addressing space (EAS). This space is the cylinder-managed space of an EAV volume on a system that is running z/OS V1.12 or later.

EAV includes all VSAM data types, such as key-sequenced data set (KSDS); relative record data set (RRDS); entry-sequenced data set (ESDS); linear data set; and IBM Db2, IBM IMS, IBM CICS®, and IBM z/OS File System (zFS) data sets.

The VSAM data sets that are placed on an EAV volume can be SMS or non-SMS managed.

For an EAV volume, the following data sets might exist, but are not eligible to have extents in the EAS (cylinder-managed space):

•VSAM data sets with incompatible control area sizes.

•VTOC (it is still restricted to the first 64 K - 1 tracks).

•VTOC index.

•Page data sets.

•VSAM data sets with IMBED or KEYRANGE attributes.

•Hierarchical file system (HFS) data sets.

•SYS1.NUCLEUS.

All other data sets can be placed on an EAV EAS.

In the current releases, you can expand all Mod 3/9/A volumes to a large EAV by using DVE. For a sequential data set, VTOC reformat is run automatically if REFVTOC=ENABLE is specified in the DEVSUPxx parmlib member.

The data set placement on EAV as supported on z/OS V1 R12 and later is shown in Figure 4-5.

Figure 4-5 Data set placement on EAV

4.2.2 z/OS prerequisites for EAV volumes

The following prerequisites must be met for EAV volumes:

•EAV volumes with 1 TB sizes are supported only on z/OS V1.12 and later. A non-VSAM data set that is allocated with an extended attribute DSCB (EADSCB) on z/OS V1.12 cannot be opened on versions of z/OS earlier than V1.12.

•After a large volume is upgraded to a 3390 Model A volume (an EAV with up to 1,182,006 cylinders) and the system is granted permission, an automatic VTOC refresh and index rebuild are run. The permission is granted by REFVTOC=ENABLE in parmlib member DEVSUPxx. The trigger to the system is a State Change Interrupt (SCI) that occurs after the volume expansion, which is presented by the storage system to z/OS.

•No additional hardware configuration definition (HCD) considerations apply to the 3390 Model A definitions.

•In parmlib member IGDSMSxx, the USEEAV(YES) parameter must be set to allow data set allocations on EAV volumes. The default value is NO, which prevents data sets from being allocated on an EAV volume. Example 4-1 shows the message that you receive when you try to allocate a data set on an EAV volume and USEEAV(NO) is set.

Example 4-1 Message IEF021I with USEEAV set to NO

IEF021I TEAM142 STEP1 DD1 EXTENDED ADDRESS VOLUME USE PREVENTED DUE TO SMS USEEAV (NO) SPECIFICATION.

•The Break Point Value (BPV) parameter determines how large a data set must be to be allocated in the cylinder-managed area. The default is 10 cylinders. BPV can be set in parmlib member IGDSMSxx and in the SG definition (the SG BPV overrides the system-level BPV). The BPV value can be 0 - 65520, where 0 means that the cylinder-managed area is always preferred and 65520 means that the track-managed area is always preferred.
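The interaction of the system-level BPV, the SG-level BPV, and the request size can be sketched as follows. The function names are hypothetical, and the comparison (a request of BPV cylinders or more prefers cylinder-managed space) is an assumption about the exact boundary. The result is a preference only; the system can still use the other area when space is short.

```python
from typing import Optional

BPV_MIN, BPV_MAX = 0, 65_520
DEFAULT_BPV = 10  # cylinders


def effective_bpv(system_bpv: int = DEFAULT_BPV, sg_bpv: Optional[int] = None) -> int:
    """The SG-level BPV, when defined, overrides the system-level BPV."""
    bpv = system_bpv if sg_bpv is None else sg_bpv
    if not BPV_MIN <= bpv <= BPV_MAX:
        raise ValueError("BPV must be 0 - 65520")
    return bpv


def preferred_area(request_cylinders: int, bpv: int) -> str:
    """Return the area that is preferred for a space request."""
    if bpv == BPV_MAX:
        return "track-managed"      # 65520: track-managed is always preferred
    if request_cylinders >= bpv:    # assumed boundary; 0 always lands here
        return "cylinder-managed"
    return "track-managed"


if __name__ == "__main__":
    bpv = effective_bpv(system_bpv=10, sg_bpv=None)
    for size in (1, 9, 10, 500):
        print(f"{size:>4} cylinders -> {preferred_area(size, bpv)}")
```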

4.2.3 Identifying an EAV volume

Any EAV has more than 65,520 cylinders. To address this volume, the Format 4 DSCB was updated to x’FFFE’, and Format 8 and Format 9 DSCBs are used for the cylinder-managed address space. Most of the eligible EAV data sets were modified by software with EADSCB=YES.

An easy way to identify any EAV that is used is to list the VTOC summary in TSO/ISPF option 3.4. Example 4-2 shows the VTOC summary of a 3390 Model A with a size of 1 TB CKD usage.

Example 4-2 TSO/ISPF 3.4 pane for a 1 TB EAV volume: VTOC summary

Menu Reflist Refmode Utilities Help

When the data set list is displayed, enter either:

"/" on the data set list command field for the command prompt pop-up,

an ISPF line command, the name of a TSO command, CLIST, or REXX exec, or

"=" to execute the previous command.

Important: Before EAV volumes are implemented, apply the latest z/OS maintenance levels, especially when z/OS levels below V1.13 are used. For more information, see the Preventive Service Planning buckets for mainframe operating environments page.

4.2.4 EAV migration considerations

When you are planning to migrate to EAV volumes, consider the following items:

•Assistance

Migration assistance is provided by using the Application Migration Assistance Tracker. For more information about Assistance Tracker, see APAR II13752: CONSOLE ID TRACKING FACILITY INFORMATION.

•Suggested actions:

– Review your programs and look for calls for the following macros:

• OBTAIN

• REALLOC

• CVAFDIR

• CVAFSEQ

• CVAFDSM

• CVAFFILT

These macros were modified and you must update your program to reflect those changes.

– Look for programs that calculate volume or data set size by any means, including reading a VTOC or VTOC index directly with a basic sequential access method (BSAM) or EXCP DCB. This task is important because now you have new values that are returning for the volume size.

– Review your programs and look for the EXCP and STARTIO macros for direct access storage device (DASD) channel programs and other programs that examine DASD channel programs or track addresses. Now that a new addressing mode exists, programs must be updated.

– Look for programs that examine any of the many operator messages that contain a DASD track, block address, data set, or volume size. The messages now show new values.

•Migrating data:

– Define new EAVs by creating them on the DS8880 storage system or expanding volumes by using DVE.

– Add new EAV volumes to SGs and storage pools, and update ACS routines.

– Copy data at the volume level:

• IBM Transparent Data Migration Facility (IBM TDMF)

• Data Facility Storage Management Subsystem Data Set Services (DFSMSdss)

• IBM DS8000 Copy Services Metro Mirror (MM) (formerly known as Peer-to-Peer Remote Copy (PPRC))

• Global Mirror (GM)

• Global Copy

• FlashCopy

– Copy data at the data set level:

• DS8000 FlashCopy

• SMS attrition

• IBM z/OS Dataset Migration Facility (IBM zDMF)

• DFSMSdss

• DFSMShsm

All data set types are currently good candidates for EAV except for the following types:

•Work data sets

•TSO batch and load libraries

•System volumes

4.3 Dynamic volume expansion

DVE simplifies management by enabling easier online volume expansion for IBM Z to support application data growth. It also supports data center migration and consolidation to larger volumes to ease addressing constraints.

The size of a Mod 3/9/A volume can be increased to its maximum supported size by using DVE. The volume can be dynamically increased in size on a DS8000 storage system by using the GUI or DSCLI.

Example 4-3 shows how the volume can be increased by using the DS8000 DSCLI interface.

Example 4-3 Dynamically expand CKD volume

dscli> chckdvol -cap 262268 -captype cyl 9ab0

CMUC00022I chckdvol: CKD Volume 9AB0 successfully modified.

DVE can be done while the volume remains online to the z/OS host system. When a volume is dynamically expanded, the VTOC and VTOC index must be reformatted to map the extra space. With z/OS V1.11 and later, an increase in volume size is detected by the system, which then performs an automatic VTOC refresh and index rebuild.

The following options are available:

•DEVSUPxx parmlib options

The system is informed by SCIs, which are controlled by the following parameters:

– REFVTOC=ENABLE

With this option, the device manager causes the volume VTOC to be automatically rebuilt when a volume expansion is detected.

– REFVTOC=DISABLE

This parameter is the default. An IBM Device Support Facilities (ICKDSF) batch job must be submitted to rebuild the VTOC before the newly added space on the volume can be used. Start ICKDSF with REFORMAT/REFVTOC to update the VTOC and index to reflect the real device capacity. The following message is issued when the volume expansion is detected:

IEA019I dev, volser, VOLUME CAPACITY CHANGE,OLD=xxxxxxxx,NEW=yyyyyyyy

•Use the SET DEVSUP=xx command to enable or disable automatic VTOC and index reformatting after an IPL.

•Use the F DEVMAN,ENABLE(REFVTOC) command to communicate with the device manager address space to rebuild the VTOC. However, update the DEVSUPxx parmlib member to ensure it remains enabled across subsequent IPLs.
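For automation that watches the console log, the capacity-change message and the REFVTOC setting can be combined to decide the follow-up action. The sketch below parses only the message template that is shown above; the function names are hypothetical, the real message spacing might differ, and the OLD and NEW values are kept as text because their encoding is not described here.

```python
import re
from typing import Dict, Optional

# Pattern derived from the IEA019I template; tolerant of spaces after commas.
_IEA019I = re.compile(
    r"IEA019I\s+(?P<dev>\w+)\s*,\s*(?P<volser>\w+)\s*,\s*"
    r"VOLUME CAPACITY CHANGE\s*,\s*OLD=(?P<old>\w+)\s*,\s*NEW=(?P<new>\w+)"
)


def parse_capacity_change(message: str) -> Optional[Dict[str, str]]:
    """Return the message fields as text, or None for any other message."""
    match = _IEA019I.search(message)
    return match.groupdict() if match else None


def follow_up_action(refvtoc: str) -> str:
    """Describe what happens next for a given DEVSUPxx REFVTOC setting."""
    setting = refvtoc.upper()
    if setting == "ENABLE":
        return "automatic VTOC refresh and index rebuild"
    if setting == "DISABLE":
        return "submit an ICKDSF REFORMAT REFVTOC job"
    raise ValueError("REFVTOC must be ENABLE or DISABLE")


if __name__ == "__main__":
    template = "IEA019I dev, volser, VOLUME CAPACITY CHANGE,OLD=xxxxxxxx,NEW=yyyyyyyy"
    print(parse_capacity_change(template))
    print(follow_up_action("DISABLE"))
```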

Note: For the DVE function, volumes cannot be in Copy Services relationships (point-in-time copy or FlashCopy, MM, GM, Metro/Global Mirror (MGM), and IBM z/OS Global Mirror (IBM zGM)) during expansion. Copy Services relationships must be stopped until the source and target volumes are at their new capacity, and then the Copy Service pair can be reestablished.

4.4 Quick initialization

Whenever new volumes are assigned to a host, any new capacity that is allocated to them should be initialized. On a CKD logical volume, any CKD logical track that is read before it is written is formatted with a default record 0, which contains a count field with the physical cylinder and head of the logical track, record (R) = 0, key length (KL) = 0, and data length (DL) = 8. The data field contains 8 bytes of zeros.
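The default record 0 can be written out byte by byte. The sketch below assumes the conventional CKD count field layout of a 2-byte cylinder, a 2-byte head, a 1-byte record number, a 1-byte key length, and a 2-byte data length in big-endian order, and it covers only cylinder numbers that fit in 16 bits. The function name is hypothetical.

```python
import struct


def default_record0(cylinder: int, head: int) -> bytes:
    """Build the default record 0 image for one logical track.

    Count field: CC HH R KL DL with R = 0, KL = 0, DL = 8,
    followed by a data field of 8 bytes of zeros.
    """
    if not 0 <= cylinder <= 0xFFFF:
        raise ValueError("this sketch handles 16-bit cylinder numbers only")
    if not 0 <= head <= 14:
        raise ValueError("head must be 0 - 14")
    count = struct.pack(">HHBBH", cylinder, head, 0, 0, 8)
    data = bytes(8)
    return count + data


if __name__ == "__main__":
    print(default_record0(1, 2).hex())
```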

A DS8000 storage system supports the quick volume initialization function (Quick Init) for IBM Z environments, which is designed to make the newly provisioned CKD volumes accessible to the host immediately after being created and assigned to it. The Quick Init function is automatically started whenever the volume is created or the existing volume is expanded.

It initializes the newly allocated space dynamically, which allows logical volumes to be configured and placed online to the host more quickly. Therefore, manually initializing a volume from the host side is not necessary.

If the volume is expanded by using the DS8000 DVE function, normal read and write access to the logical volume is allowed during the initialization process. Depending on the operation, the Quick Init function can be started for the entire logical volume or for an extent range on the logical volume.

Quick Init improves device initialization speeds, simplifies the host storage provisioning process, and allows a Copy Services relationship to be established soon after a device is created.

4.5 Volume formatting overwrite protection

ICKDSF is the main z/OS utility to manage disk volumes (for example, for the initialize and reformat actions). In a complex IBM Z environment with many logical partitions (LPARs) in which volumes are assigned and accessible to more than one z/OS system, it is easy to mistakenly erase or rewrite the contents of a volume that is used by another z/OS image.

The DS8880 storage system addresses this exposure through the Query Host Access function, which is used to determine whether target devices for certain script verbs or commands are online to systems where they should not be online. Query Host Access provides more useful information to ICKDSF about every system (including various sysplexes, virtual machine (VM), Linux, and other LPARs) that has a path to the volume that you are about to alter by using the ICKDSF utility.

The ICKDSF VERIFYOFFLINE parameter was introduced for that purpose. It fails an INIT or REFORMAT job if the volume is being accessed by any system other than the one performing the INIT or REFORMAT operation (as shown in Figure 4-6). The VERIFYOFFLINE parameter is set when ICKDSF reads the volume label.

Figure 4-6 ICKDSF volume formatting overwrite protection

The messages that are generated soon after the ICKDSF REFORMAT starts and the volume is found to be online to some other system are shown in Example 4-4.

Example 4-4 ICKDSF REFORMAT volume

REFORMAT UNIT(8000) NVFY VOLID(DS8000) VERIFYOFFLINE

ICK00700I DEVICE INFORMATION FOR 8000 IS CURRENTLY AS FOLLOWS:

PHYSICAL DEVICE = 3390

STORAGE CONTROLLER = 2107

STORAGE CONTROL DESCRIPTOR = E8

DEVICE DESCRIPTOR = 0E

ADDITIONAL DEVICE INFORMATION = 4A00003C

TRKS/CYL = 15, # PRIMARY CYLS = 65520

ICK04000I DEVICE IS IN SIMPLEX STATE

ICK00091I 9042 NED=002107.900.IBM.75.0000000xxxxx

ICK31306I VERIFICATION FAILED: DEVICE FOUND TO BE GROUPED

ICK30003I FUNCTION TERMINATED. CONDITION CODE IS 12

If this condition is found, the Query Host Access command from ICKDSF (ANALYZE) or DEVSERV (with the QHA option) can be used to determine what other z/OS systems have the volume online.

Example 4-5 DEVSERV with the QHA option

-DS QD,01800,QHA

IEE459I 10.34.09 DEVSERV QDASD 455

UNIT VOLSER SCUTYPE DEVTYPE CYL SSID SCU-SERIAL DEV-SERIAL EFC

01800 XX1800 2107951 2107900 10017 1800 0175-TV181 0175-TV181 *OK

QUERY HOST ACCESS TO VOLUME

PATH-GROUP-ID FL STATUS SYSPLEX MAX-CYLS

80000D3C672828D1626AB7 50 ON 1182006

8000043C672828D16736C2* 50 ON 1182006

8000053C672828D16737CB 50 ON 1182006

**** 3 PATH GROUP ID(S) MET THE SELECTION CRITERIA

**** 1 DEVICE(S) MET THE SELECTION CRITERIA

**** 0 DEVICE(S) FAILED EXTENDED FUNCTION CHECKING
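When many volumes must be checked, the path group rows of the DEVSERV QHA output can be extracted by a script. The sketch below parses the rows from Example 4-5. The row layout is inferred from that single example, the SYSPLEX column is treated as optional because it is blank there, and the asterisk is only recorded as a flag because its meaning is not explained in this section.

```python
import re
from typing import List, NamedTuple, Optional


class PathGroup(NamedTuple):
    path_group_id: str
    flagged: bool          # True when the ID is followed by "*"
    fl: str
    status: str
    sysplex: Optional[str]
    max_cyls: int


# A row starts with a 22-digit hexadecimal path group ID, as in Example 4-5.
_ROW = re.compile(r"^\s*([0-9A-F]{22})(\*?)\s+([0-9A-F]{2})\s+(\S+)\s+(.*)$")


def parse_qha(output: str) -> List[PathGroup]:
    """Extract the path group rows from DEVSERV QHA output text."""
    rows = []
    for line in output.splitlines():
        match = _ROW.match(line)
        if not match:
            continue
        pgid, star, fl, status, rest = match.groups()
        tokens = rest.split()
        if not tokens:
            continue
        sysplex = tokens[0] if len(tokens) > 1 else None
        rows.append(PathGroup(pgid, star == "*", fl, status, sysplex, int(tokens[-1])))
    return rows


SAMPLE = """\
PATH-GROUP-ID FL STATUS SYSPLEX MAX-CYLS
80000D3C672828D1626AB7 50 ON 1182006
8000043C672828D16736C2* 50 ON 1182006
8000053C672828D16737CB 50 ON 1182006
**** 3 PATH GROUP ID(S) MET THE SELECTION CRITERIA
"""

if __name__ == "__main__":
    groups = parse_qha(SAMPLE)
    online = [g for g in groups if g.status == "ON"]
    print(f"{len(online)} path group(s) have the volume online")
```

A result with more than one online path group indicates that an INIT or REFORMAT with VERIFYOFFLINE would be expected to fail for that volume.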

This synergy between a DS8000 storage system and ICKDSF prevents accidental data loss and some unpredictable results. In addition, it simplifies storage management by reducing the need for manual control.

The DS8000 Query Host Access function is used by IBM Geographically Dispersed Parallel Sysplex (GDPS), as described in 2.4, “Geographically Dispersed Parallel Sysplex” on page 24.

4.6 Channel paths and a control-unit-initiated reconfiguration

In the IBM Z environment, the normal practice is to provide multiple paths from each host to a storage system. Typically, four or eight paths are installed. The channels in each host that can access each logical control unit (LCU) in the DS8000 storage system are defined in the HCD or I/O configuration data set (IOCDS) for that host.

Dynamic Path Selection (DPS) allows the channel subsystem to select any available (non-busy) path to start an operation to the disk subsystem. Dynamic Path Reconnect (DPR) allows the DS8880 storage system to select any available path to a host to reconnect and resume a disconnected operation, for example, to transfer data after disconnection because of a cache miss.

These functions are part of IBM z/Architecture® and are managed by the channel subsystem on the host and the DS8000 storage system.

A physical FICON path is established when the DS8000 port sees light on the fiber, for example, when a cable is plugged in to a DS8880 host adapter, a processor or the DS8880 storage system is powered on, or a path is configured online by z/OS. Then, logical paths are established through the port between the host and some or all of the LCUs in the DS8880 storage system, as controlled by the HCD definition for that host. This configuration occurs for each physical path between an IBM Z host and the DS8880 storage system.

Multiple system images can be in a CPU. Logical paths are established for each system image. The DS8880 storage system then knows which paths can be used to communicate between each LCU and each host.

Control-unit initiated reconfiguration (CUIR) varies a path or paths offline to all IBM Z hosts to allow service for an I/O enclosure or host adapter. Then, it varies on the paths to all host systems when the host adapter ports are available. This function automates channel path management in IBM Z environments in support of selected DS8000 service actions.

CUIR is available for the DS8880 storage system when it operates in the z/OS and IBM z/VM environments. CUIR provides automatic channel path vary on and vary off actions to minimize manual operator intervention during selected DS8880 storage system service actions.

CUIR also allows the DS8880 storage system to request that all attached system images set all paths that are required for a particular service action to the offline state. System images with the appropriate level of software support respond to such requests by varying off the affected paths, and notifying the DS8880 storage system that the paths are offline or that it cannot take the paths offline. CUIR reduces manual operator intervention and the possibility of human error during maintenance actions, and reduces the time that is required for the maintenance. This function is useful in environments in which many z/OS or z/VM systems are attached to a DS8880 storage system.

4.7 CKD thin provisioning

Starting with DS8880 R8.1.1 (bundles 88.11 and later), the DS8880 storage system allows CKD volumes to be formatted as thin-provisioned extent space-efficient (ESE) volumes. These ESE volumes perform physical allocation only on writes and only when another new extent is needed to satisfy the capacity of the incoming write block.

The allocation granularity and the size of these extents is 1113 cylinders or 21 cylinders, depending on how the extent pool was formatted. The use of small extents makes more sense in the context of thin provisioning.
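The effect of the extent size on physical allocation can be illustrated with simple arithmetic. The following Python sketch is illustrative only: the function names are hypothetical, and it assumes that the written cylinders are contiguous and start on an extent boundary. In practice, the space that is consumed depends on which extents the writes touch.

```python
# Extent sizes named in the text, in 3390 cylinders.
LARGE_EXTENT_CYL = 1113
SMALL_EXTENT_CYL = 21


def extents_needed(cylinders: int, extent_cyl: int) -> int:
    """Whole extents required to back `cylinders` of written data."""
    if cylinders < 0 or extent_cyl <= 0:
        raise ValueError("cylinders must be >= 0 and extent size must be > 0")
    # Integer ceiling division: a partially used extent is allocated in full.
    return (cylinders + extent_cyl - 1) // extent_cyl


def allocated_cylinders(written_cyl: int, extent_cyl: int) -> int:
    """Physical cylinders consumed when `written_cyl` cylinders are written
    (assumption: contiguous writes that start on an extent boundary)."""
    return extents_needed(written_cyl, extent_cyl) * extent_cyl


if __name__ == "__main__":
    written = 100  # hypothetical amount of data written to an ESE volume
    for size in (SMALL_EXTENT_CYL, LARGE_EXTENT_CYL):
        print(size, extents_needed(written, size), allocated_cylinders(written, size))
```

Under these assumptions, a small write consumes far less physical capacity in a 21-cylinder extent pool than in a 1113-cylinder extent pool, which is why small extents suit thin provisioning.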

One scenario to use such thinly provisioned volumes is for FlashCopy target volumes or GM Journal volumes, which allows them to be space-efficient while maintaining standard (thick) volume sizes for the operational source volumes. ESE volumes that are preferably placed on 21-cylinder extent pools are the replacement for the former track-space efficient (TSE) volumes, which are no longer supported.

Another scenario is to create all volumes as ESE volumes. In PPRC relationships, this approach has the advantage that, on initial replication, extents that are not yet allocated in a primary volume do not need to be replicated, which also saves bandwidth.

Thin provisioning in general requires tight control of the physical capacity that is still free in the specific extent pool, especially when overprovisioning is performed. These controls are available, along with alert thresholds and alerts that can be set.

4.7.1 Advantages

Thin provisioning can make storage administration easier. You can provision large volumes when you configure a new DS8880 storage system. You do not have to manage different volume sizes, such as 3390 Model 1, Model 9, or Model 27. All volumes can be large and of the same size.

At times, a volume or device address is required to communicate with the control unit, such as the utility device for Extended Remote Copy (XRC). Such a volume can have a minimum capacity. With thin provisioning, you can still use a large volume: because little data is written to such a volume, its actual physical size remains small, and no storage capacity is wasted.

For many z/OS customers, migrating to larger volumes is a task they avoid because it involves substantial work. As a result, many customers have too many small 3390 Model 3 volumes. With thin provisioning, they can define large volumes and migrate data from other storage systems to a DS8880 storage system that is defined with thin-provisioned volumes and likely use even less space. Most migration methods facilitate copying small volumes to a larger volume. You refresh the VTOC of the volume to recognize the new size.

4.7.2 APARs

Check the following APARs for thin provisioning. The initial z/OS support, together with the corresponding software use of the function, was provided as a small programming enhancement (SPE) for z/OS V2.1 and later:

•PI47180

•OA48710

•OA48723

•OA48711

•OA48709

•OA48707

•OA50453

•OA50675

4.7.3 Space release

Space is released when either of the following conditions is met:

•A volume is deleted.

•A full-volume FlashCopy relationship is withdrawn and reestablished.

Note: This condition is not true when working with data-set- or extent-level FlashCopy with z/VM minidisks. Therefore, use caution when you are working with the DFSMSdss utility because it might use data-set-level FlashCopy depending on the parameters that are used.

A space release is also done on the target of an MM or Global Copy when the relationship is established if the source and target are thin-provisioned.

Introduced with DS8880 R8.2 and APAR OA50675 is the ability for storage administrators to perform an extent-level space release with the new DFSMSdss SPACEREL command. It is a volume-level command that is used to scan and release free extents from volumes back to the extent pool. The SPACEREL command can be issued for volumes or SGs and uses the following format:

SPACERel

DDName(ddn)

DYNam(volser,unit)

STORGRP(groupname)

An enhancement was also provided with DS8880 R8.3 to release space on the secondary volume when the SPACEREL command is issued to an MM duplex primary device.

If not prevented by other Copy Services relationship restrictions that are described in this book, the SPACEREL command can now also be issued to suspended primary devices. In this case, the space is released on the primary device and, when the PPRC relationship is reestablished, the extents that were freed on the primary device are also released on the secondary device. The pair remains in the DUPLEX PENDING state until the extents on the secondary device are freed; the synchronization process resumes afterward.

To use this enhancement, the source and target DS8880 storage systems must use the R8.3 code or higher.

Note: At the time of writing, Global Copy primary devices must be suspended to allow the use of the SPACEREL command. The SPACEREL command is not supported for Global Copy Duplex Pending devices or for devices in FlashCopy relationships.

For Multiple Target Peer-to-Peer Remote Copy (MT-PPRC) relationships, each relationship on the wanted device must allow the SPACEREL command to run for the release to be allowed on the primary device (that is, the primary must be in a Suspended state for Global Copy or GM relationships, and in a Duplex or Suspended state in an MM relationship).

Suspended primary devices in a GM session are supported. Cascaded devices follow the same rules as non-cascaded devices, although space is not released on the target because these devices are FlashCopy source devices.
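The state rules in this note can be expressed as a small lookup table. The following Python sketch is a hypothetical model only: the type and function names are illustrative and are not part of DFSMSdss or DSCLI. It covers only the remote-copy state rules that are stated above for Metro Mirror, Global Copy, and Global Mirror primaries, and no other Copy Services restrictions.

```python
from enum import Enum
from typing import Iterable, Tuple


class RelType(Enum):
    METRO_MIRROR = "MM"
    GLOBAL_COPY = "GC"
    GLOBAL_MIRROR = "GM"


class State(Enum):
    DUPLEX = "duplex"
    DUPLEX_PENDING = "duplex pending"
    SUSPENDED = "suspended"


# Primary-device states in which the text says SPACEREL is allowed.
ALLOWED_STATES = {
    RelType.METRO_MIRROR: {State.DUPLEX, State.SUSPENDED},
    RelType.GLOBAL_COPY: {State.SUSPENDED},
    RelType.GLOBAL_MIRROR: {State.SUSPENDED},
}


def spacerel_allowed(relationships: Iterable[Tuple[RelType, State]]) -> bool:
    """True only if every relationship on the primary device permits the release.

    For MT-PPRC, a single relationship in a disallowed state blocks the release.
    A device with no remote-copy relationships is treated as allowed here
    (an assumption of this sketch).
    """
    return all(state in ALLOWED_STATES[rel] for rel, state in relationships)


if __name__ == "__main__":
    mt_pprc = [
        (RelType.METRO_MIRROR, State.DUPLEX),
        (RelType.GLOBAL_COPY, State.DUPLEX_PENDING),
    ]
    print(spacerel_allowed(mt_pprc))
```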

For more information, see IBM DS8880 Thin Provisioning (Updated for Release 8.5), REDP-5343.

4.7.4 Overprovisioning controls

Overprovisioning a storage system with thin provisioning brings with it the risk of running out of space in the storage system, which causes a loss of access to the data when applications cannot allocate space that was presented to the servers. To avoid this situation, clients typically use a policy regarding the amount of overprovisioning that they allow in an environment and monitor the growth of allocated space with predefined thresholds and warning alerts.

DS8880 R8.3 provides clients with an enhanced method of enforcing such policies: overprovisioning can be capped at a chosen overprovisioning ratio (see Figure 4-7), beyond which the system does not allow further space allocations.

Figure 4-7 Overprovisioning ratio formula

As part of the implementation project or permanently in a production environment, some clients might want to enforce an overprovisioning ratio of 100%, which means that no overprovisioning is allowed. With this ratio, production cannot be affected by the DS8000 storage system running out of space. The Easy Tier and replication benefits of thin provisioning can still be realized without the risk of accidentally overprovisioning the underlying storage. The overprovisioning ratio can be changed dynamically later if wanted.

Implementing overprovisioning control results in the following changes to the standard behavior to prevent an extent pool from exceeding the overprovisioning ratio:

•Prevents volume creation, expansion, and migration.

•Prevents rank depopulation.

•Prevents pool merging.

•Prevents turning on Easy Tier space reservation.
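The kind of check that these controls imply can be sketched as follows. This Python sketch is not the DS8880 implementation. It assumes that the overprovisioning ratio is the provisioned (virtual) capacity divided by the physical capacity of the extent pool, which matches the statement that a ratio of 100% allows no overprovisioning, but the exact formula is the one in Figure 4-7. The function names, and the choice to allow a request that lands exactly on the limit, are assumptions.

```python
def overprovisioning_ratio(virtual_capacity: float, physical_capacity: float) -> float:
    """Assumed definition: provisioned (virtual) capacity / physical pool capacity."""
    if physical_capacity <= 0:
        raise ValueError("physical capacity must be positive")
    return virtual_capacity / physical_capacity


def request_allowed(current_virtual: float, requested: float,
                    physical_capacity: float, ratio_limit: float) -> bool:
    """Would a volume creation or expansion keep the pool within its limit?

    `ratio_limit` is a plain ratio, as in the DSCLI examples (3.5 means 350%).
    Reaching the limit exactly is treated as allowed (an assumption).
    """
    new_ratio = overprovisioning_ratio(current_virtual + requested, physical_capacity)
    return new_ratio <= ratio_limit


if __name__ == "__main__":
    # Hypothetical pool: 1000 units physical, 3000 units already provisioned.
    print(request_allowed(3000, 500, 1000, 3.5))
    print(request_allowed(3000, 501, 1000, 3.5))
```

The same comparison applies to the other operations in the list, such as rank depopulation or pool merging, because each of them changes the virtual or the physical capacity of a pool.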

Overprovisioning controls can be implemented at the extent pool level, as shown in Example 4-6.

Example 4-6 Creating an extent pool with a 350% overprovisioning ratio limit

dscli> mkextpool -rankgrp 0 -stgtype fb -opratiolimit 3.5 -encryptgrp 1 test_create_fb

Date/Time: April 19, 2017 2:15:24 AM PDT IBM DSCLI Version: 0.0.0.0 DS: IBM.2107-75xxxxx

CMUC00000I mkextpool: Extent pool P8 successfully created.

An extent pool overprovisioning ratio can be changed by using the chextpool DSCLI command, as shown in Example 4-7.

Example 4-7 Changing the overprovisioning ratio limit on P3 to 3.125 (312.5%)

dscli> chextpool -opratiolimit 3.125 p3

Date/Time: April 7, 2017 4:26:39 AM PDT IBM DSCLI Version: 0.0.0.0 DS: IBM.2107-75xxxxx

CMUC00001I chextpool: Extent pool P3 successfully modified.

To display the overprovisioning ratio of an extent pool, use the showextpool DSCLI command, as shown in Example 4-8.

Example 4-8 Displaying the current overprovisioning ratio of extent pool P3 and the limit set

dscli> showextpool p3

Date/Time: April 7, 2017 4:26:45 AM PDT IBM DSCLI Version: 0.0.0.0 DS: IBM.2107-75xxxxx

...

%limit 100

%threshold 15

...

opratio 0.76

opratiolimit 3.13

%allocated(ese) 0

%allocated(rep) 0

%allocated(std) 75

%allocated(over) 0

%virallocated(ese) -

%virallocated(tse) -

%virallocated(init) -

...
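For monitoring, the two ratio fields in this output can be extracted by a script. The following Python sketch is a minimal example that assumes the output consists of whitespace-separated name and value pairs, as in the excerpt in Example 4-8. The function names are hypothetical, and how the text is captured from DSCLI is left open.

```python
from typing import Dict, Optional

# Excerpt of the showextpool output from Example 4-8.
SAMPLE = """\
dscli> showextpool p3
Date/Time: April 7, 2017 4:26:45 AM PDT IBM DSCLI Version: 0.0.0.0 DS: IBM.2107-75xxxxx
...
%limit 100
%threshold 15
...
opratio 0.76
opratiolimit 3.13
%allocated(std) 75
%virallocated(ese) -
"""


def parse_showextpool(text: str) -> Dict[str, str]:
    """Collect 'name value' lines; skip blanks, banners, and '...' elisions."""
    fields: Dict[str, str] = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2:
            fields[parts[0]] = parts[1]
    return fields


def opratio_headroom(fields: Dict[str, str]) -> Optional[float]:
    """Distance between the configured limit and the current ratio.

    Returns None when either field is missing or not numeric (for example '-').
    """
    try:
        return float(fields["opratiolimit"]) - float(fields["opratio"])
    except (KeyError, ValueError):
        return None


if __name__ == "__main__":
    pool = parse_showextpool(SAMPLE)
    print(pool["opratio"], pool["opratiolimit"], opratio_headroom(pool))
```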

4.8 DSCLI on z/OS

Another synergy item between IBM Z and a DS8000 storage system is that you can now install the DSCLI along with IBM Copy Services Manager (CSM) on a z/OS system. It is a regular SMP/E for z/OS installation.

The DSCLI runs under UNIX System Services for z/OS and has a separate FMID HIWN61K. You can also install the DSCLI separately from CSM.

For more information, see the IBM DSCLI on z/OS Program Directory. Search for this publication by entering the publication number (GI13-3563) at the IBM Publications Center website.

After the installation is complete, access UNIX System Services for z/OS. The access method can vary among installations. One common way is to use ISPF option 6 (ISPF Command Shell) under TSO and enter the OMVS command. For more information, contact your z/OS system programmer.

Access to DSCLI in z/OS is shown in Figure 4-8. It requests the same information that you supply when you are accessing DSCLI on other platforms.

$ cd /opt/IBM/CSMDSCLI

$ ./dscli

Enter the primary management console IP address: <enter-your-DS8K-machine-ip-address>

Enter the secondary management console IP address:

Enter your username: <enter-your-user-name-as-defined-on-the-machine>

Enter your password: <enter-your-user-password-to-access-the-machine>

dscli> ver -l

...

dscli>

===>

INPUT

ESC=¢ 1=Help 2=SubCmd 3=HlpRetrn 4=Top 5=Bottom 6=TSO 7=BackScr 8=Scroll 9=NextSess 10=Refresh 11=FwdRetr 12=Retrieve

Figure 4-8 Accessing DSCLI on z/OS

Some DSCLI commands that are run in z/OS are shown in Figure 4-9.

dscli> lssi

Name ID Storage Unit Model WWNN State ESSNet

========================================================================================

IBM.2107-75ACA91 IBM.2107-75ACA91 IBM.2107-75ACA90 980 5005076303FFD13E Online Enabled

dscli> lsckdvol -lcu EF

Name ID accstate datastate configstate deviceMTM voltype orgbvols extpool cap (cyl)

===========================================================================================

ITSO_EF00 EF00 Online Normal Normal 3390-A CKD Base - P1 262668

ITSO_EF01 EF01 Online Normal Normal 3390-9 CKD Base - P1 10017

dscli> mkckdvol -dev IBM.2107-75ACA91 -cap 3339 -datatype 3390 -eam rotateexts -name ITSO_#h -extpool P1 EF02-EF02

CMUC00021I mkckdvol: CKD Volume EF02 successfully created.

dscli> lsckdvol -lcu EF

Name ID accstate datastate configstate deviceMTM voltype orgbvols extpool cap (cyl)

===========================================================================================

ITSO_EF00 EF00 Online Normal Normal 3390-A CKD Base - P1 262668

ITSO_EF01 EF01 Online Normal Normal 3390-9 CKD Base - P1 10017

ITSO_EF02 EF02 Online Normal Normal 3390-3 CKD Base - P1 3339

dscli> rmckdvol EF02

CMUC00023W rmckdvol: The alias volumes associated with a CKD base volume are automatically deleted before deletion of the CKD base volume. Are you sure you want to delete CKD volume EF02? [y/n]: y

CMUC00024I rmckdvol: CKD volume EF02 successfully deleted.

dscli>

Figure 4-9 Common commands on DSCLI

With this synergy, you can use all z/OS capabilities to submit batch jobs and perform DSCLI functions, such as creating disks or LCUs.

For more information about the DS8880 storage system, see the following resources:

•IBM DS8880 Architecture and Implementation (Release 8.51), SG24-8323
