Chapter 23

Case Study: A Programmatic Example

WHAT’S IN THIS CHAPTER?

- Understanding the business situation

- Architecting a data quality solution

- Setting up the case study

- Implementing the ETL

Usually, a book like this has to cover so much material that there is not enough space to really dig into some of the typical issues that can arise when you put the book down and begin your first solution. You end up returning to the book to flip through all the one-off examples, but they just don’t seem to provide the insight you need or to apply to your current project or deadline. Looking at a case study enables you to get specific — that is, to get hands-on experience with a real-world business issue and run with it. By working through the example provided in this chapter, you will gain the fundamental knowledge and skills you need to tackle your own project.

You will use the SSIS environment to solve a payment processing problem; you have payment data of varying levels of quality that has to be validated against corporate billing records. This example is a little different from the typical data warehouse-type ETL case study; it’s a little more programmatic. That’s not to say that there is no ETL. You’ll need to import three heterogeneous data formats, but the interesting part is the use of the SSIS Data Flow Transformations that allow for the development of smart validation and matching programming logic. This will all combine into a solid learning opportunity that showcases the real capabilities of SSIS.

The principal advantages of this case study are multiple opportunities to examine specific techniques and use cases that you can add to your SSIS toolkit. Specifically, you’ll learn all of the following:

- How to use expressions in variables to create uniquely named files

- How to use expressions in package properties

- How to use expressions in variables to dynamically configure OLE DB Connection Managers

- How to set variables within Control Flow Script Tasks and Data Flow Script Components

- How to retrieve and set variables in Control Flows with Execute SQL Tasks with output parameters and result sets for both OLE DB and.NET connections

- How to create conditional workflows with expressions and precedence constraints

- How to retrieve currency amounts from a database into a Double variable data type within an Execute SQL Task

- How to retrieve row counts using the Row Count Transformation

- How to iterate through a set of XML files and import using the Data Flow XML Source

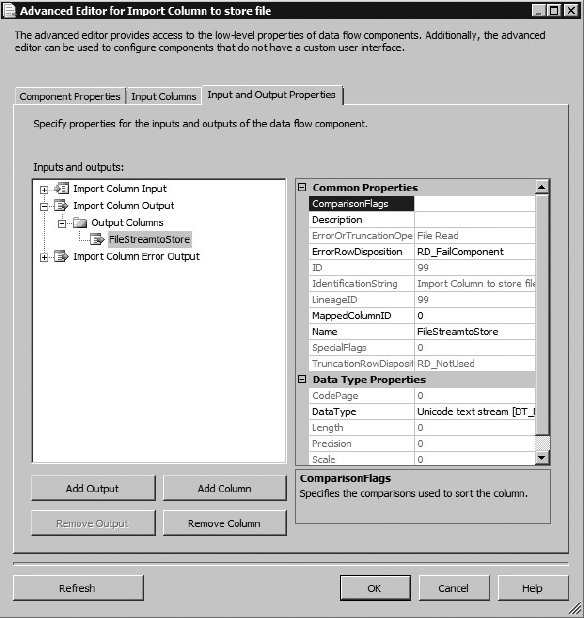

- How to use the Import Column Transformation to save complete files into a database

- How to create a parent package to manage child packages

- How to use the Lookup and Fuzzy Lookup Transformations to compare data elements

Company ABC is a small benefits company that sells several niche products to other small business owners. They offer these products directly to the employees of the small businesses, but the employers are billed, rather than the employees. Company ABC considers the employers to be their customers and creates monthly invoices for the employee-selected services. Each invoice contains an invoice number for reference purposes. Company ABC’s customers deduct the cost of the products from the employee paychecks. These payments are then submitted back to Company ABC in a lump sum, but because of timing issues and ever-changing worker populations, the payment doesn’t always match the billed amount. Customers have the option to pay invoices using one of the following payment methods:

- PayPal or an e-mail payment service: These services directly credit a corporate bank account and typically provide a small description of the service being paid, the amount, and an e-mail address or other type of surrogate user identity. These entries are downloaded daily from an online bank account and are available within an OLE DB–compliant Data Source.

- By check: The customer sends a copy of the invoice and a check in the mail to a special address that is serviced by a bank. The invoice could match fully or partially or not even be provided with the payment. The bank credits the account for each check received and provides an output file containing as much data as practicable from the supporting material to help the company identify and apply the payment. A payment that is serviced like this is commonly known as a lockbox.

- By wire: Payments can be made by direct debit of customer bank accounts or direct credit to the corporate account. These payments are known as wires. This type of payment entry provided through a large bank or an automated clearinghouse is also known as ACH processing.

Working with the low-quality payment data involves a significant amount of manual labor to match payments to customers and invoices. Because Company ABC is growing, the volume of payments is exceeding its capacity to continue to process payments manually. If the invoice number were always received with the payment, an automated process could easily identify the customer from the invoice and make some decisions about the payment by comparing the paid and billed amounts. So far, attempts at automating even the paper invoices sent through the mail have failed because customers don’t always send copies of invoices, or they resend old, outdated invoices. Using a bank lockbox has helped ease the burden of processing the deposits, but the bank makes mistakes too, truncating and transposing customer name or invoice data. Adding the options of wires and PayPal accounts has really complicated matters, because very little corroborating data is provided in these transactions.

Approximately 60 percent of the incoming payments can’t be automatically identified using a strict comparison of invoice number and payment amount. The good news is that they can almost all be manually identified by a small group of subject matter experts (SMEs) who understand the process and know how to use the corporate data. The bad news is that once a customer and invoice are identified by the SMEs, the method of making the match is not recorded. The next month, the process of identification starts all over again. Company ABC needs a way to wade as far as possible through the payments automatically to take the place of the SMEs. This process should match items by invoice number, name, and e-mail address with some measurable certainty and leave only the most troublesome payments for research activity. The company needs a solution that runs continuously to meet the demands of the standard 24-hour turnaround time for its industry.

An alternative to using the pure Integration Services approach discussed in this chapter is to use Data Quality Services. Newly introduced in SQL Server 2012, Data Quality Services creates a knowledge base of data definitions and potential matches to provide a data-cleansing solution. There is a cleansing component provided in Integration Services that can use the Data Quality Services server to manage some of what we are covering here.

Company ABC has made the need to resolve this payment processing hurdle its top priority. It already has a custom software application that enables users to break the bulk payments down to an employee level, but the application requires that the customer and invoice be identified. The solution is to use SSIS to develop a package that can process data from these heterogeneous Data Sources. This solution should require no human intervention on items that can be identified as paid-as-billed items. The solution should be as “smart” as possible and be able to identify bank items that have been manually identified before. Items that can’t be identified will still need to be processed manually, but it is expected that this number should drop 20 to 40 percent.

In preparation for the design of the SSIS package, specification documents for the two input files, from ACH and lockbox processing, have been gathered. Each file provided by the bank contains a batch of multiple payment transactions. These files can be sent by either the bank or the ACH clearinghouse to specific folders. The process should be able to access these folders and continuously look for new files to process. When a file is located in the input folder, it needs to be validated for three items: to ensure proper format, to ensure it has not been previously processed, and to ensure the aggregation of all payments add up to the total deposit. Files not meeting these criteria will be rejected. Once a file is verified, each payment in the file should be examined for matches to existing invoices. If no match is found, the data should be examined against previously matched data. Matched data will flow into a completed queue to be broken into employee-level charges by another piece of software. Unmatched data will flow into a working queue that requires user intervention. Successful customer matches will be stored for future matching. Finally, statistics on the input will be created for reporting purposes. Figure 23-1 is a diagram of the business solution.

Before you jump into building this integration process, you should understand the big picture of what you will accomplish. You have two sets of tasks:

- First, to import files of three different formats, to validate the data, and to load them into your data structures

- Second, to process the payments to find customer and invoice matches

Figure 23-2 shows a design in which the importing logic is divided into three packages, each one specific to the type of file that you need to process. You’ve learned that breaking SSIS packages into service-oriented units simplifies maintenance and troubleshooting, so you will use that method here. Another benefit of this architecture is that it makes it easy for you to choose just one of these three packages to create and still follow along with the case study. Don’t worry about creating these packages at this point; just get the big picture about where you need to go. You can either create the packages for this solution one at a time, as you walk through the case study instructions, or, alternatively, download the complete solution from www.wrox.com.

Because the main job of the first core task is to load, it makes sense to name the three separate packages CaseStudy_Load_Bank, CaseStudy_Load_ACH, and CaseStudy_Load_Email. This enables you to separate the load processes from the identification processes. The identification logic to apply to each transaction in the payment data is universal, so it makes sense to put this logic into a separate package. You can name this package CaseStudy_Process. The final package, named CaseStudy_Driver, is the master package that will coordinate the running of each process using precedence constraints.

When you are building packages that have external dependencies on things such as file hierarchies, it is a good idea to programmatically validate the locations for existence before processing. Therefore, you’ll check for default paths and create them if they don’t exist. If files for the lockbox or ACH processes exist, you should read the files, parse the transaction information, validate totals against a control record in the file, and then persist the information from the file into permanent storage. The toughest part of this processing logic is that the validation routines have to validate file formats, proper data types, and file balances and check for file duplication. When processing any flat file from an external source, be aware of how that import file was generated and what you might find in it. Don’t be surprised to find that some systems allow an invalid date of 02/30/2012 or incomprehensible amount fields with data like 0023E2.

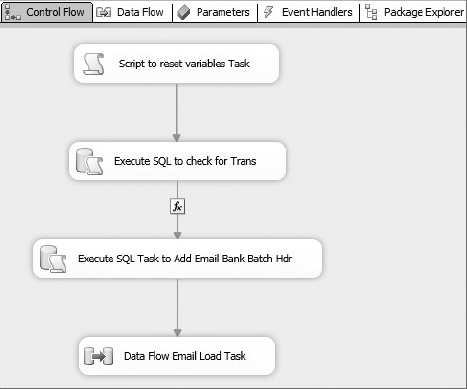

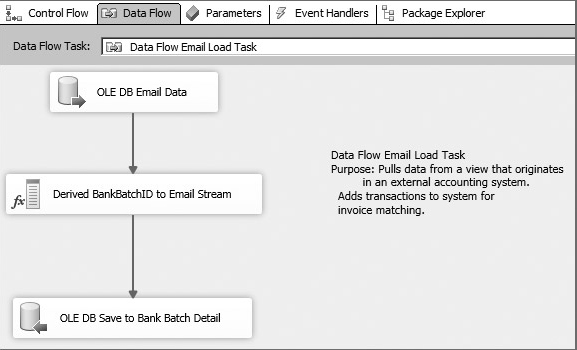

The downloaded bank transactions for the PayPal or e-mail payment transactions will be easier to process — at least from an import standpoint. You only need to read information from a table in another OLE DB–compliant Data Source. You’ll be creating a batch from the transactions, so balancing shouldn’t be an issue either. The hardest part will be identifying these payments, because usually only an amount and an e-mail address are embedded in the transaction. All this information can be summarized in a flowchart like the one in Figure 23-3.

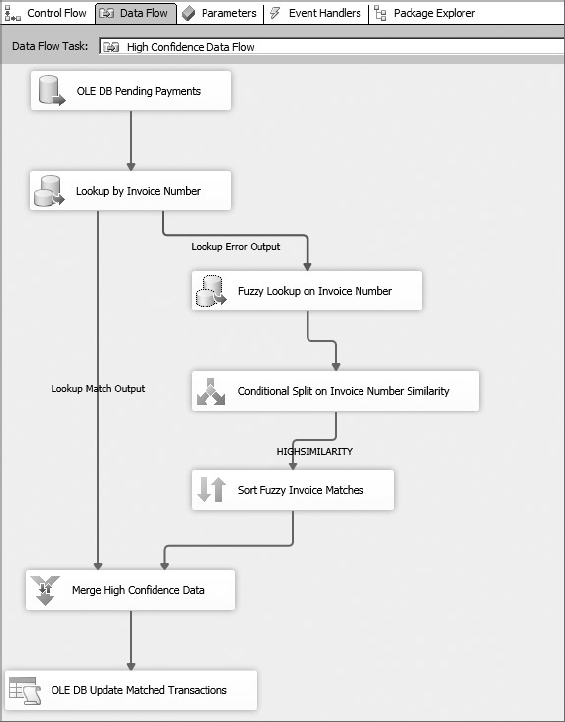

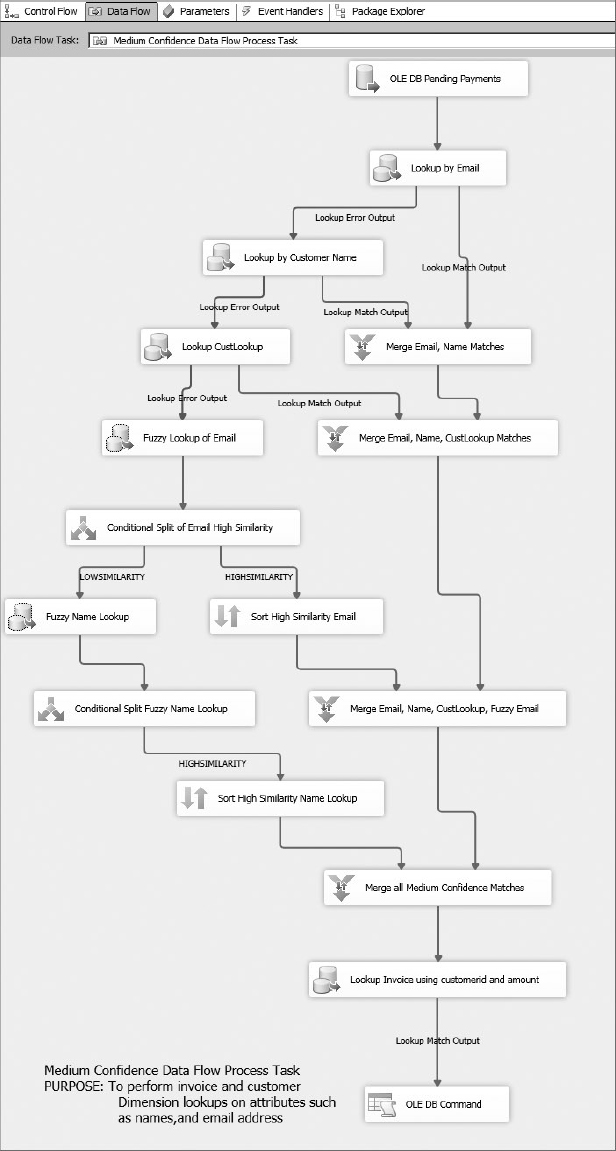

In the CaseStudy_Process package, you will complete a matching process of the payment information to find customers and invoices. You will first attempt a high-confidence match using an exact match to the invoice number. If a match is not made, you’ll move to a Fuzzy Lookup on the invoice number. If a match is still not made, you’ll keep moving down to lower confidence matches until you can retrieve an invoice or at least customer identification. Transactions identifiable only by customer will be checked against available invoices for a match within a billed-to-paid tolerance of 5 percent. Transactions lacking enough certainty to be identified will be left at this point to subject matter experts, who will individually review and research the transactions to identify the customer or refund the payment. Research can be saved via software outside this SSIS project in the CustomerLookup table. A summary flowchart for the CaseStudy_Process package is shown in Figure 23-4.

Naming Conventions and Tips

There’s nothing like opening up a package that fails in production and seeing tasks named Execute SQL Task, Execute SQL Task 1, and Execute SQL Task 2. There’s no way to tell what those tasks actually do without manually examining their properties. On the other hand, too much annotation can be a nightmare to maintain. The right balance depends on your philosophy and your team, but follow these rules for this case study:

- Name the package. Name it something other than Package.dtsx. This matters later when you deploy the packages.

- Name packages with ETL verb extensions. Package Name_Extract, Package Name_Transformation, or Package Name_Load. The extension _Process seems to be explicit enough for those packages that don’t fall into the other three categories.

- Provide a brief annotation about what the package does, where it gets inputs and outputs, and what to do if it fails. Annotations can include answers to the following questions: Can it be rerun again? Is it part of a larger set of packages? Should it be restarted on checkpoints?

- Add short descriptive words to SSIS tasks and components, but don’t alter the name altogether. For example, change an Execute SQL Task to Execute SQL Task to Retrieve Invoice ID. Use the Description field on the object to provide the detailed information. (This information is in a tooltip when the mouse hovers over the object.) It is important to document, but completely changing the name of an Execute SQL Task to Retrieve Invoice ID obscures the “how” that is implied by knowing that the task is an Execute SQL Task. You could, of course, learn what the pictures stand for or use abbreviations, but our stance is that you should use a convention that takes into account the person arriving after you, who has to maintain your package.

Additional SSIS Tips Before You Start a Large Project

This solution has many parts, so don’t feel overwhelmed if you are creating them from scratch or attempting to troubleshoot something. Here are some additional tips for this case study and SSIS package development in general:

- Be aware that packages save themselves when you run them.

- Packages don’t save themselves as you are working, so save periodically as you work on large development packages. There is a nice recovery feature that sometimes will save you — don’t depend on it.

- Data viewers, which are like grid message boxes, are your friends. Add them temporarily to see what is in your transformation stream.

- Default data types are not your friends. If your tables don’t use Unicode text fields, watch your settings when you add columns or source data.

- If you are at a point where you want to troubleshoot or experiment, either use source control or stop and save a copy of the SSIS project directory for the package you are working on. Experiment with the copy until you figure out what is wrong. Go back to the original project folder, make your corrections, and continue.

- Disable tasks or groups of tasks as you work through large packages to focus only on specific functional areas until they work. To disable a task, right-click it and select Disable from the pop-up menu. (Note, however, that you can’t disable a component in the Data Flow.)

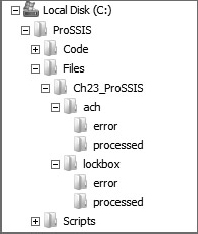

This section details the data sources both in SQL Server and in the format of each of the input files. First you’ll create default locations to simulate your receiving area for the external files, and then you’ll take a closer look at each of the input files that are part of the business requirements. All the sample data and complete set of scripts for this step can be downloaded from www.wrox.com. When you unzip the sample files for this chapter to your C:ProSSIS folder, a directory structure will be created, starting with the parent directory C:ProSSIS. The folder structure contains database scripts, sample import files, and all the packages. There is one solution for all packages in this chapter, which contains the process packages, driver packages, and two sets of load packages: one in which all Script Tasks are done in VB.NET, and another for C#, so you can follow along with the .NET language of your choice.

You’ll need to run the database scripts to create a database called CaseStudy, the table structures, the procedures and other database objects, and specific customer and invoice data. The downloaded zip file also puts all the sample import files into the directories so that you can follow along. Therefore, you can work through the chapter and piece this solution together, or you can download the packages and explore.

File Storage Location Setup

Create a base directory or use the download files at www.wrox.com to store the file-based imports to this project. Throughout the case study, the base location is referred to as C:ProSSISFilesCh23_ProSSIS. In the base directory, you’ll need two subdirectories: ach and lockbox. You will use these locations to store the files you are about to create in the next few sections.

Bank ACH Payments

Customers make payments either within their own bank or by using electronic payment systems to Company ABC through an automated clearinghouse. The automated clearinghouse bundles up all the payments for the day and sends one XML file through an encrypted VPN connection to an encrypted folder. The bank wires contain only a minimum amount of information at the transactional level. Each XML file contains a header row with a unique identifier that identifies the file transmission. The header also contains a total deposit amount and a transaction count that can be used to further verify the file transmission.

Each transactional detail row represents a deposit and contains two date fields: the date the deposit item was received, and the date the deposit item was posted to Company ABC’s deposit account. Each payment contains the amount of the deposit and a free-form field that can contain additional information, such as the customer’s name on a bank account, an e-mail address, or anything the customer adds to the wire. More commonly, the description contains the name on the customer’s bank account — which is often very different from the name in Company ABC’s customer data.

To make the sample ACH file, and for each file in this example, you’ll need to recreate these files manually or download the files from this book’s page at www.wrox.com. The bank ACH file looks like the following:

<BATCH> <HEADER><ID>AAS22119289</ID> <TOTALDEPOSIT>180553.00</TOTALDEPOSIT> <DEPOSITDATE>07/15/2012</DEPOSITDATE> <TOTALTRANS>6</TOTALTRANS> </HEADER> <DETAIL><AMOUNT>23318.00</AMOUNT> <DESC>Complete Enterprises</DESC> <RECEIVEDDATE>07/15/2012</RECEIVEDDATE> <POSTEDDATE>07/15/2012</POSTEDDATE></DETAIL> <DETAIL><AMOUNT>37054.00</AMOUNT> <DESC>Premier Sport</DESC> <RECEIVEDDATE>07/15/2012</RECEIVEDDATE> <POSTEDDATE>07/15/2012</POSTEDDATE></DETAIL> <DETAIL><AMOUNT>34953.00</AMOUNT> <DESC>Intl Sports Association</DESC> <RECEIVEDDATE>07/15/2012</RECEIVEDDATE> <POSTEDDATE>07/15/2012</POSTEDDATE></DETAIL> <DETAIL><AMOUNT>22660.00</AMOUNT> <DESC>Arthur Datum</DESC> <RECEIVEDDATE>07/15/2012</RECEIVEDDATE> <POSTEDDATE>07/15/2012</POSTEDDATE></DETAIL> <DETAIL><AMOUNT>24759.00</AMOUNT> <DESC>Northwind Traders</DESC> <RECEIVEDDATE>07/15/2012</RECEIVEDDATE> <POSTEDDATE>07/15/2012</POSTEDDATE></DETAIL> <DETAIL><AMOUNT>37809.00</AMOUNT> <DESC>Wood Fitness</DESC> <RECEIVEDDATE>07/15/2012</RECEIVEDDATE> <POSTEDDATE>07/15/2012</POSTEDDATE></DETAIL> </BATCH>

Files/Ch23_ProSSIS/ach/sampleach.xml

Lockbox Files

Company ABC has started using a lockbox service that their bank provides for a nominal fee. This service images all check and invoice stubs sent to a specific address that the bank monitors. The bank provides a data file containing the following data attributes for each deposit item:

- The amount

- A reference number for the invoice

- An image key that can be used to review images of the item online

The terms of the service dictate that if the bank can’t determine the invoice number because of legibility issues or the invoice is not sent in with the deposit item, either a customer account number or a customer name might be used in place of the invoice number. Periodically during the day, the bank will batch a series of payments into one file containing a header that includes a batch number, the posted deposit date for all deposit items, and an expected total for the batch.

The structure of the file from the bank is as follows:

HEADER: TYPE 1A TYPE OF LINE H-HEADER POSTDATE 6A DATE DEPOSIT POSTED FILLER 1A SPACE(1) BATCHID 12A UNIQUE BATCH NBR DETAIL (TYPE I): TYPE 1A TYPE OF LINE I-INVOICE IMGID 10A IMAGE LOOK UP ID (2-6 IS ID) DESC 25A INVOICE OR DESC INFO DETAIL (TYPE C) TYPE 1A TYPE OF LINE C-CHECK IMGID 10A IMAGE LOOK UP ID (2-6 IS ID) DESC 8S 2 CHECK AMOUNT

Using the following data, download or create a file named C:ProSSISFilesCh23_ProSSISlockboxsamplelockbox.txt to simulate the lockbox transmission in this example:

H080108 B1239-99Z-99 0058730760 I4001010003 181INTERNAT C4001010004 01844400 I4002020005 151METROSPOOO1 C4002020006 02331800 I4003030009 MAGIC CYCLES C4003030010 02697000 I4004040013 LINDELL C4004040014 02131800 I4005040017 151GMASKI0001 C4005040019 01938800

Files/Ch23_ProSSIS/lockbox/samplelockbox.txt

PayPal or Direct Credits to the Corporate Account

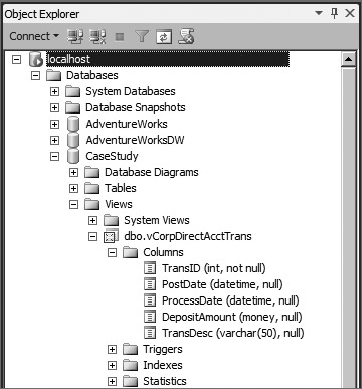

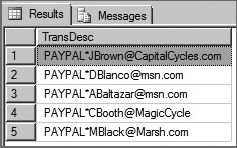

Company ABC has started a pilot program that allows customers to make payments using PayPal and other similar online electronic payment services. Customers like this option because it is easy to use. However, these payments are difficult to process for the Accounting group, because not all e-mail addresses have been collected for the customers, and that is the most common description on the transactions. Accounting personnel have to do some research in their CRM solution to determine who the customer is and to release the deposit to the payment systems. Once they have matched the customer to the transaction description (e-mail address), they would like to be able to save the matching criteria as data to be used in future processing. Currently, the accounting department uses a data synchronization process in its accounting software to periodically download these transactions directly from a special bank account during the day. This information is available through a read-only view in the database called vCorpDirectAcctTrans. Figure 23-5 shows the structure of this view.

Case Study Database Model

The case study database model (see Figure 23-6) is limited to only the information relevant to the case study. The core entities are as follows:

- Customer: An entity that utilizes products and services from Company ABC. To keep it simple, only the customer name, account number, and e-mail address attributes are represented in the table.

- Invoice: A monthly listing of total billed products and services for each customer. Each invoice is represented by a unique invoice number. Invoice details are not shown in the case study data model for simplicity.

- BankBatch: Any set of transactions from a bank or deposit institution that is combined. Auditable information expected for the exchange of monetary data is a major part of this entity. Files, or batches, of transactions must be validated in terms of number of transaction lines and, most important, amount. Care must be taken not to load a batch more than once. Recording the bank batch number or BankBatchNbr field and comparing incoming batches should enable you to prevent this from happening.

- BankBatchDetail: Each bank batch will be composed of many transactions that can be broken down into essentially a check and an invoice. You could receive as much as both pieces of information or as little as none of this information. For auditing purposes, you should record exactly what you received from the input source. You’ll also store in this table logically determined foreign keys for the customer and invoice dimension.

- CustomerLookup: This lookup table will be populated by your SSIS package and an external application. This enables users to store matching information to identify customers for future processing. It enables the data import processes to “learn” good matches from bad data.

Database Setup

To get started, you need to set up the database named CaseStudy. The database and all objects in it will become the basis for your solution to this business issue. Of course, as mentioned earlier, all input files and scripts are available from www.wrox.com. This database is not a consistent data model in the strictest sense. There are places where NVARCHAR and VARCHAR data fields are being used for demonstration purposes, which will be pointed out as you work through this case study. Working with NVARCHAR and VARCHAR fields has been mentioned as one of the difficulties that new SSIS developers struggle with, so you’ll learn how to deal with this issue in this case study.

There are two ways to create a new database. Use Microsoft SQL Server Management Studio to connect to a server or database engine of your choice. On the Databases Node, right-click and select the pop-up menu option New Database. In the New Database editor, provide the database name CaseStudy. Click OK to accept the other defaults. The second easy option is to run the following SQL script in a new query editor, as provided in the file “Step 1. Create Database.sql”:

USE [master] GO CREATE DATABASE [CaseStudy] ON PRIMARY ( NAME = N'CaseStudy', FILENAME = N'C:Program FilesMicrosoft SQL ServerMSSQL11.MSSQLSERVERMSSQLDATACaseStudy.mdf', SIZE = 3072KB, MAXSIZE = UNLIMITED, FILEGROWTH = 1024KB ) LOG ON ( NAME = N'CaseStudy_log', FILENAME = N'C:Program FilesMicrosoft SQL ServerMSSQL11.MSSQLSERVERMSSQLDATACaseStudy_log.ldf', SIZE = 1024KB, MAXSIZE = 2048GB, FILEGROWTH = 10%) COLLATE Latin1_General_CI_AI GO EXEC dbo.sp_dbcmptlevel @dbname=N'CaseStudy', @new_cmptlevel=110 GO

Scripts/Ch23_ProSSIS/Database_Scripts/Step 1. Create Database.sql

Customer Table

The Customer table can be created in Microsoft SQL Server Management Studio. Click the New Query button in the toolbar to open a New Query window. Run the following SQL statement:

USE CaseStudy GO CREATE TABLE [dbo].[Customer]( [CustomerID] [int] IDENTITY(1,1) NOT NULL, [AccountNbr] [char](15) NOT NULL, [Name] [varchar](50) NOT NULL, [Email] [varchar](50) NULL, [SendEmailConfirm] [bit] NOT NULL CONSTRAINT [DF_Customer_SendEmailConfirm] DEFAULT ((0)), CONSTRAINT [PK_Customer] PRIMARY KEY CLUSTERED ( [CustomerID] ASC ) ON [PRIMARY] ) ON [PRIMARY]

Scripts/Ch23_ProSSIS/Database_Scripts/Step 2. Create Customer Table and Data.sql

To fill the table with potential customers, you can manufacture some data using the AdventureWorks database. Don’t worry; you don’t have to have AdventureWorks installed. Use the script “Step 2. Create Customer Table and Data.sql” from the downloaded zip file to populate the Customer table. This script doesn’t need access to the AdventureWorks database to load the customer data into the database. Here is a partial listing of the full script:

--NOTE: THIS IS ONLY A PARTIAL LISTING --THERE ARE 104 CUSTOMERS TO ENTER. EITHER DOWNLOAD --THE FULL SCRIPT OR FOLLOW ALONG TO GENERATE THE --CUSTOMER DATA FROM ADVENTUREWORKS DATA INSERT INTO CaseStudy.dbo.Customer (AccountNbr, [Name], email, SendEmailConfirm) SELECT 'INTERNAT0001', 'International', '[email protected]',1 INSERT INTO CaseStudy.dbo.Customer (AccountNbr, [Name], email, SendEmailConfirm) SELECT 'ELECTRON0002', 'Electronic Bike Repair & Supplies', '[email protected]',1 INSERT INTO CaseStudy.dbo.Customer (AccountNbr, [Name], email, SendEmailConfirm) SELECT 'PREMIER0001', 'Premier Sport, Inc.', NULL, 0 INSERT INTO CaseStudy.dbo.Customer (AccountNbr, [Name], email, SendEmailConfirm) SELECT 'COMFORT0001', 'Comfort Road Bicycles', NULL, 0

Scripts/Ch23_ProSSIS/Database_Scripts/Step 2. Create Customer Table and Data.sql

If you are curious, the queries that generated this data from the AdventureWorks database are commented out at the bottom of the script file.

Invoice Table

To create the Invoice table, run the following SQL statement:

USE [CaseStudy] GO CREATE TABLE [dbo].[Invoice]( [InvoiceID] [int] IDENTITY(1,1) NOT NULL, [InvoiceNbr] [varchar](50) NOT NULL, [CustomerID] [int] NOT NULL, [TotalBilledAmt] [money] NOT NULL, [BilledDate] [datetime] NOT NULL, [PaidFlag] [smallint] NOT NULL CONSTRAINT [DF_Invoice_PaidFlag] DEFAULT (0), CONSTRAINT [PK_Invoice] PRIMARY KEY CLUSTERED ( [InvoiceID] ASC ) ON [PRIMARY] ) ON [PRIMARY] GO ALTER TABLE [dbo].[Invoice] WITH NOCHECK ADD CONSTRAINT [FK_Invoice_CustomerID] FOREIGN KEY([CustomerID]) REFERENCES [dbo].[Customer] ([CustomerID]) GO ALTER TABLE [dbo].[Invoice] CHECK CONSTRAINT [FK_Invoice_CustomerID]

Scripts/Ch23_ProSSIS/Database_Scripts/Step 3. Create Invoice Table and Data.sql

You will use the Customer table to generate three months’ worth of invoice data. In doing so, you are creating invoice numbers that have the customer account number embedded in the invoice number. Companies commonly do this because it provides an extra piece of identification as a cross-check in an environment where there is very limited data. Use the following SQL snippet to simulate and create a set of invoice entries or use the downloaded script “Step 3. Create Invoice Table and Data.sql” to load this data statically:

INSERT INTO Invoice(InvoiceNbr, CustomerID, TotalBilledAmt, BilledDate, PaidFlag)

SELECT InvoiceNbr = '151' + Accountnbr,

CustomerID,

TotalBilledAmt = cast(131 * (ascii(left(name, 1)) +

ascii(substring(name,2, 1))) as money),

BilledDate = '06/01/2012 00:00:00',

PaidFlag = 0

FROM customer

UNION

SELECT InvoiceNbr = '181' + Accountnbr,

CustomerID,

TotalBilledAmt = case

when left(Accountnbr, 1) in ('A', 'B', 'C', 'D', 'E', 'F', 'G')

then cast(131 * (ascii(left(name, 1)) + ascii(substring(name,

2, 1)))

as money)

else

cast(191 * (ascii(left(name, 1)) + ascii(substring(name,

2, 1)))

as money)

end,

BilledDate = '07/01/2012 00:00:00',

PaidFlag = 0

FROM customer

UNION

SELECT InvoiceNbr = '212' + Accountnbr,

CustomerID,

TotalBilledAmt = case

when left(Accountnbr, 1) in ('A', 'F', 'G',)

then cast(132 * (ascii(left(name, 1)) + ascii(substring(name, 2, 1)))

as money)

else

cast(155 * (ascii(left(name, 1)) + ascii(substring(name, 2,

1)))

as money)

end,

BilledDate = '08/01/2012 00:00:00',

PaidFlag = 0

FROM customer

GO

UPDATE invoice set totalbilledamt = 18444.00

WHERE invoicenbr = '151INTERNAT0002' and totalbilledamt = 23973

Scripts/Ch23_ProSSIS/Database_Scripts/Step 3. Create Invoice Table and Data.sql

CustomerLookup Table

The CustomerLookup table will be used to store resolutions of bad customer identification data that continues to be sent through the accounting feeds. Data that the auto-processes can’t match would be matched manually, and the bad data string for an existing customer can be stored for each import type for future matching purposes. The structure can be created using the following SQL script, found in the “Step 4. Create CustLookup Table.sql” file:

USE [CaseStudy] GO CREATE TABLE [dbo].[CustomerLookup]( [RawDataToMatch] [varchar](50) NOT NULL, [ImportType] [char](10) NOT NULL, [CustomerID] [int] NOT NULL, CONSTRAINT [PK_CustomerLookup] PRIMARY KEY CLUSTERED ( [RawDataToMatch] ASC, [ImportType] ASC ) ON [PRIMARY] ) ON [PRIMARY] GO ALTER TABLE [dbo].[CustomerLookup] WITH NOCHECK ADD CONSTRAINT [FK_CustomerLookup_CustomerID] FOREIGN KEY([CustomerID]) REFERENCES [dbo].[Customer] ([CustomerID]) GO ALTER TABLE [dbo].[CustomerLookup] CHECK CONSTRAINT [FK_CustomerLookup_CustomerID]

Scripts/Ch23_ProSSIS/Database_Scripts/Step 4. Create CustLookup Table.sql

BankBatch Table

The BankBatch table will store not only the summary data from the batch file but also the file itself — in the BatchFile field. This table can be created using either the code in the “Step 5. Create Bank Batch Tables.sql” file or the following SQL statement:

USE [CaseStudy] GO CREATE TABLE [dbo].[BankBatch]( [BankBatchID] [int] IDENTITY(1,1) NOT NULL, [BankBatchNbr] [nvarchar](50) NULL, [DepositDate] [datetime] NULL, [ReceivedDate] [datetime] NULL, [BalancedDate] [datetime] NULL, [PostedDate] [datetime] NULL, [BatchTotal] [money] NULL, [BatchItems] [int] NULL, [BatchItemsComplete] [int] NULL, [FileBytes] [int] NULL, [FullFilePath] [nvarchar](1080) NULL, [ImportType] [char](10) NULL, [ErrMsg] [varchar](1080) NULL, [BatchFile] [ntext] NULL, CONSTRAINT [PK_BankBatch] PRIMARY KEY CLUSTERED ( BankBatchID] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY] ) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY] GO CREATE NONCLUSTERED INDEX [IX_BatchNumber_ImportType] ON [dbo].[BankBatch] ( [BankBatchNbr] ASC, [ImportType] ASC ) GO

Scripts/Ch23_ProSSIS/Database_Scripts/Step 5. Create Bank Batch Tables.sql

BankBatchDetail Table

The detail to the BankBatch table can be created using the following SQL script:

USE [CaseStudy] GO CREATE TABLE [dbo].[BankBatchDetail]( [BankBatchDtlID] [int] IDENTITY(1,1) NOT NULL, [BankBatchID] [int] NOT NULL, [RawInvoiceNbr] [nvarchar](50) NULL, [RawAccountNbr] [nvarchar](50) NULL, [ReferenceData1] [nvarchar](50) NULL, [ReferenceData2] [nvarchar](50) NULL, [MatchedInvoiceID] [int] NULL, [MatchedCustomerID] [int] NULL, [MatchedDate] [datetime] NULL, [PaymentAmount] [money] NULL, CONSTRAINT [PK_BankBatchDtlID] PRIMARY KEY CLUSTERED ( [BankBatchDtlID] ASC ) ON [PRIMARY] ) ON [PRIMARY] LTER TABLE [dbo].[BankBatchDetail] WITH NOCHECK ADD CONSTRAINT [FK_BankBatchDetail_BankBatchID] FOREIGN KEY([BankBatchID]) REFERENCES [dbo].[BankBatch] ([BankBatchID]) GO ALTER TABLE [dbo].[BankBatchDetail] CHECK CONSTRAINT [FK_BankBatchDetail_BankBatchID] GO ALTER TABLE [dbo].[BankBatchDetail] WITH CHECK ADD CONSTRAINT [FK_BankBatchDetail_CustomerID] FOREIGN KEY([MatchedCustomerID]) REFERENCES [dbo].[Customer] ([CustomerID]) GO ALTER TABLE [dbo].[BankBatchDetail] WITH CHECK ADD CONSTRAINT [FK_BankBatchDetail_InvoiceID] FOREIGN KEY([MatchedInvoiceID]) REFERENCES [dbo].[Invoice] ([InvoiceID])

Scripts/Ch23_ProSSIS/Database_Scripts/Step 5. Create Bank Batch Tables.sql

Corporate Ledger Data

To simulate a view into your direct credits to the corporate account, you need to create the GLAccountData structure and your view [vCorpDirectAcctTrans]. All the corporate ledger structure scripts can be found in “Step 6. Create Corporate Ledger Table and Data.sql.” Run the following SQL to create the physical table:

USE [CaseStudy] GO CREATE TABLE [dbo].[GLAccountData]( [TransID] [int] IDENTITY(1,1) NOT NULL, [PostDate] [datetime] NULL, [ProcessDate] [datetime] NULL, [DepositAmount] [money] NULL, [TransDesc] [varchar](50) NULL, [GLAccount] [char](10) NULL, CONSTRAINT [PK_GLAccountData] PRIMARY KEY CLUSTERED ( [TransID] ASC ) ON [PRIMARY] ) ON [PRIMARY]

Scripts/Ch23_ProSSIS/Database_Scripts/Step 6. Create Corporate Ledger Table and Data.sql

Run the following to create the logical view to this data:

USE [CaseStudy] GO CREATE VIEW dbo.vCorpDirectAcctTrans AS SELECT TransID, PostDate, ProcessDate, DepositAmount, TransDesc FROM dbo.GLAccountData

Scripts/Ch23_ProSSIS/Database_Scripts/Step 6. Create Corporate Ledger Table and Data.sql

Run this SQL batch to load the GLAccountData with some sample deposit transactions from the direct-pay customers:

INSERT INTO GLACCOUNTDATA (postdate, processdate, depositamount, transdesc, glaccount) SELECT '08/09/12', '08/10/12', 22794.00, 'PAYPAL*[email protected]', 'BANK' UNION SELECT '08/09/12', '08/10/12', 21484.00, 'PAYPAL*[email protected]', 'BANK' UNION SELECT '08/09/12', '08/10/12', 22008.00, 'PAYPAL*[email protected]', 'BANK' UNION SELECT '08/09/12', '08/10/12', 22794.00, 'PAYPAL*CBooth@MagicCycle', 'BANK' UNION SELECT '08/09/012', '08/10/12', 22401.00, 'PAYPAL*[email protected]', 'BANK'

Scripts/Ch23_ProSSIS/Database_Scripts/Step 6. Create Corporate Ledger Table and Data.sql

ErrorDetail Table

There are some great logging options in SSIS, and the case study will log detailed errors that can occur at the column level when processing. This table will enable you to store that information; by storing the Execution ID, you can later join the custom-logged error detail with the step-level error information logged during package execution. You can create the table using the following SQL script, also found in “Step 7. Create Error Detail Table.sql”:

USE [CaseStudy] GO CREATE TABLE [dbo].[ErrorDetail]( [ExecutionID] [nchar](38) NOT NULL, [ErrorEvent] [nchar](20) NULL, [ErrorCode] [int] NULL, [ErrorColumn] [int] NULL, [ErrorDesc] [nvarchar](1048) NULL, [ErrorDate] [datetime] NULL, [RawData] [varchar](2048) NULL )ON [PRIMARY]

Scripts/Ch23_ProSSIS/Database_Scripts/Step 7. Create Error Detail Table.sql

Stored Procedure to Add Batches

Because this is a rigorous financial processing environment, separating the logic that performs basic data insert from core matching logic provides advantages for auditing purposes and enables the process of creating new batches to be more modular. The other advantage to using stored procedures is higher performance. A stored procedure enables you to place all the T-SQL logic in one place, and it can be optimized by the Query Optimizer. A stored procedure can also be placed under separate execution rights and managed separately, instead of embedding the T-SQL into, and applying the rights to, the package itself. The following three stored procedures can be found in steps 8–10 in the downloadable files. This first stored procedure will be used to add a new bank batch to the payment processing system. Run the script to add it to CaseStudy database:

USE [CaseStudy] GO CREATE PROC usp_BankBatch_Add( @BankBatchID int OUTPUT, @BankBatchNbr nvarchar(50)=NULL, @DepositDate datetime=NULL, @ReceivedDate datetime=NULL, @BatchTotal money=NULL, @BatchItems int=NULL, @FileBytes int=NULL, @FullFilePath nvarchar(100)=NULL, @ImportType char(10) ) AS /*======================================================= PROC: usp_BankBatch_Add PURPOSE: To Add BankBatch Header Basic info and to validate that the batch is new. OUTPUT: Will return BankBatchID if new or 0 if exists HISTORY: 04/01/12 Created =======================================================*/ SET NOCOUNT ON If @ReceivedDate is null SET @ReceivedDate = getdate() IF LEN(@BankBatchNbr) <= 1 OR Exists(Select top 1 * FROM BankBatch WHERE BankBatchNbr = @BankBatchNbr AND ImportType = @ImportType) BEGIN SET @BANKBATCHID = 0 RETURN -1 END ELSE BEGIN INSERT INTO BankBatch(BankBatchNbr, DepositDate, ReceivedDate, BatchTotal, BatchItems, FileBytes, FullFilePath, ImportType) SELECT UPPER(@BankBatchNbr), @DepositDate, @ReceivedDate, @BatchTotal, @BatchItems, @FileBytes, UPPER(@FullFilePath), UPPER(@ImportType) SET @BANKBATCHID = Scope_Identity() END SET NOCOUNT OFF GO

Scripts/Ch23_ProSSIS/Database_Scripts/Step 8. usp_BankBatch_Add.sql

Stored Procedure to Update a Batch with Invoice and Customer ID

This stored procedure will be used to update a payment with a matching invoice or customer identification number relating back to the dimension tables. Run the script to add this procedure to the CaseStudy database:

CREATE PROC dbo.usp_BankBatchDetail_Match( @BankBatchDtlID int, @InvoiceID int=NULL, @CustomerID int=NULL) AS /*=============================================== PROC: usp_BankBatchDetail_Match PURPOSE: To update as paid an incoming payment with matched invoice and customerid HISTORY: 04/01/12 Created */ SET NOCOUNT ON --UPDATE BANKBATCH DETAIL WITH INVOICE AND CUSTOMERID --NOTE: IF EITHER IS NULL THEN DON'T UPDATE --MATCHED DATE. THIS WILL PUSH THE ITEM INTO A SUBJECT- --MATTER-EXPERT'S QUEUE TO IDENTIFY. UPDATE BankBatchDetail SET MatchedInvoiceID = @InvoiceID, MatchedCustomerID = @CustomerID, MatchedDate = case when @InvoiceID is NULL or @CustomerID is NULL then NULL else getdate() end WHERE BankBatchDtlID = @BankBatchDtlID SET NOCOUNT OFF

Scripts/Ch23_ProSSIS/Database_Scripts/Step 9. usp_BankBatchDetail_Match.sql

Stored Procedure to Balance a Batch

This stored procedure is used to examine all payments in a batch and to mark the batch as complete when all payments have been identified with an invoice and a customer. Again, you use a stored procedure for all the reasons explained previously for auditing, modularity, and performance:

CREATE PROC usp_BankBatch_Balance AS /*====================================================== PROC: usp_BankBatch_Balance PURPOSE: To update batchdetails when they are matched Then keep BankBatches balanced by matching all line items */ UPDATE bankbatchdetail SET MatchedDate = GetDate() where (matchedinvoiceid is not null and matchedcustomerid is not null) and (matchedinvoiceid <> 0 and matchedcustomerid <> 0) UPDATE BANKBATCH SET BatchItemsComplete = BatchItems - b.NotComplete FROM BANKBATCH A INNER JOIN ( select bankbatchid, count(*) as NotComplete from bankbatchdetail where (matchedinvoiceid is null OR matchedcustomerid is null OR matcheddate is null) group by bankbatchid ) B on A.BankBatchID = B.BankBatchID UPDATE BankBatch SET BalancedDate = getdate() WHERE BalancedDate IS NULL and BatchItems = BatchItemsComplete

Scripts/Ch23_ProSSIS/Database_Scripts/Step 10. usp_BankBatch_Balance.sql

The import integration process, as discussed earlier, will contain three distinct packages (of the four worker packages) that need to be built. To keep this from becoming a 100-step process, you’ll put each together separately, and then within each package, you’ll break up the setup into several sections: Package Setup and File System Tasks, Control Flow Processing, Data Flow Validation, and Data Flow ETL. Each step is explained in detail the first time; then, as things become repetitive, you can see the details on the screenshots to pick up some speed. You can also walk through just one of these load packages and then download the complete solution from www.wrox.com to see and explore the final result.

Bank File Load Package

The bank batch load package will be set up to look in specific file directories for lockbox flat files. External dependencies such as file folders can be a headache during package deployment if you hardcode them, because you have to remember to set them up identically in each environment. Instead, you are going to enable your package to get these paths from variables and build them as needed. You can then use configuration files to set up the package in each environment without any further intervention. However, you could still have some issues if the directories that you provide are not created, so you need to consider this as you set up the precedence and control of flow in your package. It means adding a few extra steps, but it will enable your package to adjust during initial deployment and any future changes to these file locations.

Bank File Package and Variable Setup Tasks

To get started, you need to create a new SSIS project. Create a project named CaseStudy in C:ProSSISCodeCh23_ProSSISCaseStudy. When the project is built, go to Solution Explorer and click the Package.dtsx file. In the Property window, find the Name property and change the name from Package.dtsx to CaseStudy_Load_Bank.dtsx. Alternatively, you can right-click on the Package.dtsx name, select the Rename option, and then type in CaseStudy_Load_Bank.

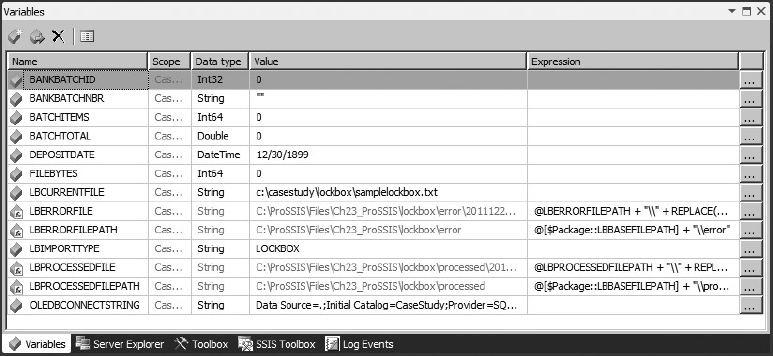

Begin by creating a parameter in the package by going to the Parameters tab on the package. Click the icon on the far left of the tab to add a new parameter. Set the Name of the parameter to LBBASEFILEPATH with a data type of String and an initial value of C:ProSSISFilesCh23_ProSSISlockbox. Because this is a parameter, it can be set external to the package if the directory location needs to change. Processes and variables within the package will use this parameter to build the appropriate file locations. Because all the other file paths and filenames for processed files are based on this parameter, the package can be easily configured for different server environments.

Use the menu named SSIS Variables to access the Variables editor and add the variables at the package level that are shown in the following table. Be sure to use all uppercase letters because variables are case sensitive; when you are new to SSIS, this will help you avoid some frustration if you are not used to case sensitivity. Variable names with all-caps are not required for SSIS package development. Most of these values will be set automatically to default values within each load package, so values are here to aid in understanding.

| VARIABLE NAME | DATA TYPE | VALUE |

| BANKBATCHID | Int32 | 0 |

| BANKBATCHNBR | String | |

| BATCHITEMS | Int64 | 0 |

| BATCHTOTAL | Double | 0 |

| DEPOSITDATE | DateTime | 12/30/1899 |

| FILEBYTES | Int64 | 0 |

| LBCURRENTFILE | String | C:ProSSISFilesCh23_ProSSISlockboxsamplelockbox.txt |

| LBERRORFILE | String | |

| LBERRORFILEPATH | String | |

| LBIMPORTTYPE | String | LOCKBOX |

| LBPROCESSEDFILE | String | |

| LBPROCESSEDFILEPATH | String |

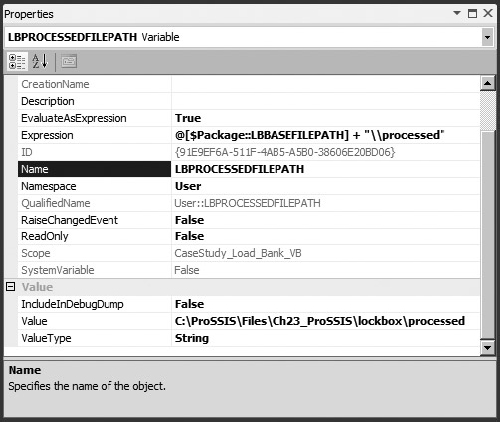

Because the File System Tasks only allow the source and destination properties to be set to variables — not expressions derived from variable values — you need to create a few variables that “go the other way” and instead are derived from expressions.

The variables @LBPROCESSEDFILEPATH and @LBERRORFILEPATH need to retrieve their values relative to the base file paths (see the following table). For example, the variable @LBPROCESSEDFILEPATH should be set up relative to the base lockbox file path in a subdirectory called processed. To do this, you’ll use an expression to generate the value of the variable. Click the variable in the Variables Editor and add the expression to match Figure 23-7. The double backslash (\) is required as an escape sequence for the backslash in the Expressions Editor. Set both variables up to be evaluated as expressions the same way. Notice that in the Variables Editor, and as shown in Figure 23-8, the values change immediately.

| FOR VARIABLE NAME: | SET EXPRESSION TO: |

| LBERRORFILEPATH | @[$Package::LBBASEFILEPATH] + "\error" |

| LBPROCESSEDFILEPATH | @[$Package::LBBASEFILEPATH] + "\processed" |

The variables for specific processed and error versions of the current file being processed need to retrieve a unique value that can be used to rename the file into its respective destination file path. Set up the @LBERRORFILE and @LBPROCESSEDFILE variables to be evaluated using expressions similar to the following formula:

@LBERRORFILEPATH + "" + REPLACE(REPLACE(REPLACE(REPLACE((DT_WSTR,50) GETUTCDATE(),"-","")," ", ""),".", ""),":", "") +(DT_WSTR, 50)@FILEBYTES + ".txt"

This formula will generate a name similar to 201208160552080160000000.txt for the file to be moved into an offline storage area.

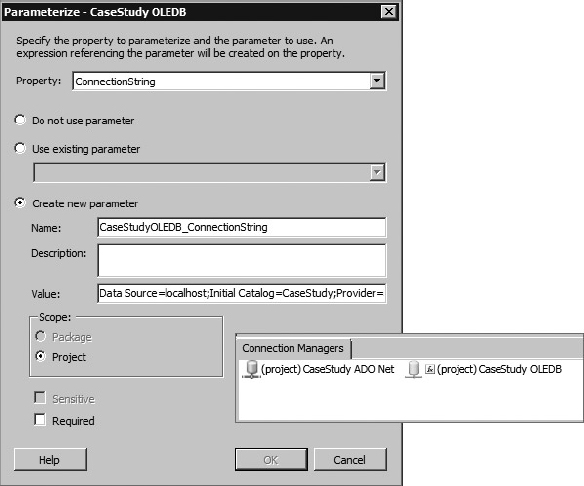

To connect to the database, you will use a new feature in SSIS 2012 called Project Connection Managers. In previous versions of Integration Services, you may recall a folder in Solution Explorer called Data Sources. Unfortunately, the data connection reuse this provided could only be used at design time, so this option was essentially useless. Project Connection Managers have replaced the old Data Sources folder. You can create a connection manager for your project, and it will automatically be included in all packages.

Another helpful feature in SSIS 2012 is parameterization, which we already covered in Chapter 6. Combining Project Connection Managers and parameters will make this case study’s development and configuration even easier than before.

Begin by adding a new OLE DB Connection in Solution Explorer. Name the connection CaseStudy OLEDB. You will use this connection for all control and Data Flow activities that interact with the database. Notice that once it is created, it is immediately added to the package you are developing.

To enable this connection to be configurable during runtime, right-click on the connection manager in the package and select the menu option called Parameterize. The Parameterize menu allows you to modify the value that will initially be used for the connection string.

Notice earlier that this variable was set to the default instance using this connection string value:

Data Source=localhost;Initial Catalog=CaseStudy;Provider=SQLNCLI11.1;Integrated Security=SSPI;Auto Translate=False;

You may need to specifically name your server instance instead of using the “localhost” identifier if the SSIS package will be executed on a separate server from the database. Once confirmed, the connection manager will have a function “adorner” signifying that the connection manager will be set using an expression based on the parameter. Figure 23-9 shows how the connection and connection manager should appear when complete.

Finally, add a New Flat File Connection in the Connection Managers tab on the package. Because this connection will be used only within this one package, you do not need to add it to the Project Connection Managers collection. Configure the connection properties as shown in the following table. Note that instead of parsing out each individual column in the file, here you are bringing the whole 80-character line into the data stream so that you can parse out each data element later. The reason for doing this is that the lockbox flat file will attempt to cast parsed text values into specific data types, resulting in import errors if there are unexpected non-ASCII characters or nonvalid elements like 00000000 for dates. By bringing in the whole line, you’ll be able to parse and test each element, providing more control in your ETL process. This is the preferred ETL technique if the data quality is found to be poor or inconsistent or if you are not using a staging table.

| PROPERTY | SETTING |

| Name | Lockbox Flat File |

| Description | Flat File for Lockbox processing |

| File Name | C:ProSSISFilesCh23_ProSSISlockboxsamplelockbox.txt |

| Format | Ragged right |

| Advanced:OutputColumnWidth | 80 |

| Advanced:DataType | string[DT_STR] |

| Advanced:Name | line (case is important) |

The only problem with the previous step is that you had to set a filename to a literal in order to set up the connection. However, at runtime you want to retrieve the filename that you will be processing from your variable LBCURRENTFILE. Save the Flat File Connection, and then access the Expressions collection in the Properties tab. To enable this connection to be configurable during runtime, set the connection string property of the connection to the variable @LBCURRENTFILE using the Expressions collection on the Connection Properties window.

At this point, you should have a package named CaseStudy_Load_Bank with two connections and a bunch of variables. In the next section, you’ll start adding to the Control Flow.

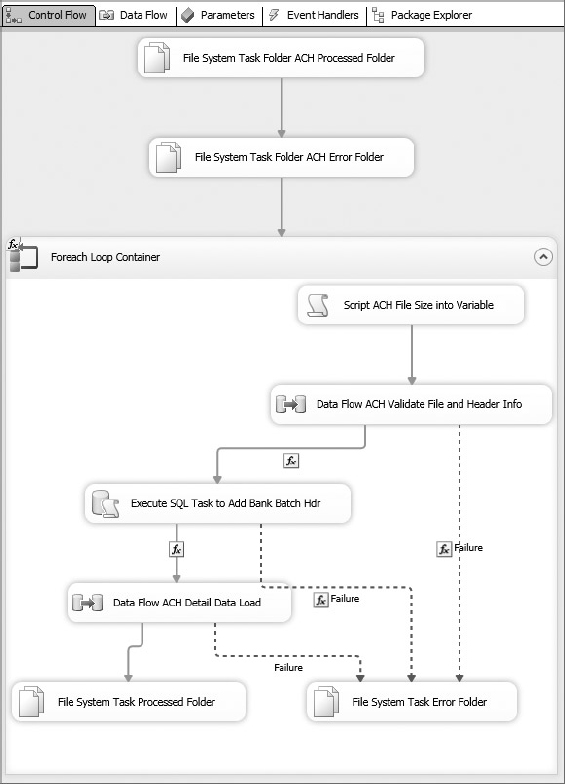

Bank File Control Flow Processing

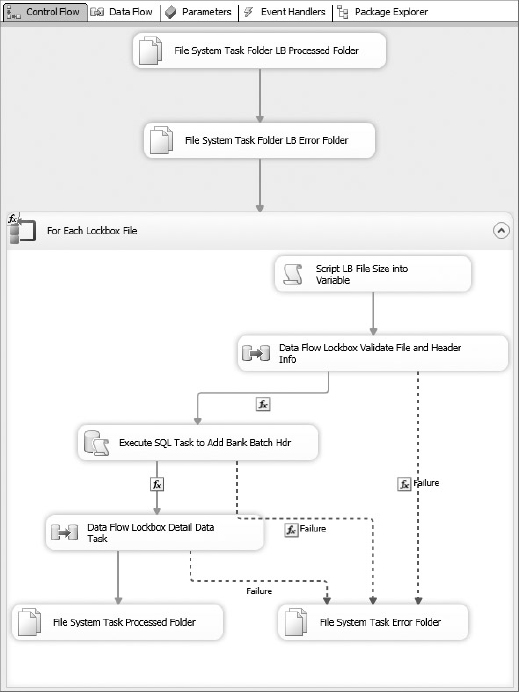

You want the CaseStudy_Load_Bank package to process these flat files streaming in from the bank, but before you start, you need to ensure that the directories needed for your load package exist. You’ll use a File System Task to do this because it can perform the operation of checking for and creating a directory. One nifty thing that it will do by default is create all the subdirectories in the hierarchy, down to the last one, when you create a directory. This is why you’ll set up a File System Task to check for and create a directory using lowest subdirectory path values — LBPROCESSEDFILEPATH and LBERRORFILEPATH. You won’t need to create a path explicitly for the parameter LBBASEFILEPATH. You’ll get this free, when you check for and create the subdirectories. Use this to get started on laying out the Control Flow for the package.

Bank File Control Flow File Loop

Add two File System Tasks to the Control Flow design surface of the package — one for checking and adding the lockbox processed file path, and another for the lockbox error-file path. These two paths represent where the package will move incoming lockbox files depending upon how they are processed. Change the name and description properties to the following:

| NAME | DESCRIPTION |

| File System Task Folder LB Processed Folder | Ensures that the LB Processed Folder exists |

| File System Task Folder LB Error Folder | Ensures that the LB Error Folder exists |

For each File System Task, set the following properties:

| PROPERTY | SETTING |

| Operation | Create Directory |

| UseDirectoryIfExists | True |

| IsSourcePathVariable | True |

| SourceVariable | Choose the corresponding variable for each task (Notice how easy this is when the task is named properly) |

Now connect the two lockbox File System Tasks together by setting a precedence constraint between the File System Task Folder LB Processed Folder Task and the File System Task Folder LB Error Folder Task. The precedence constraint should automatically be set to Success. If you run the package now, you should see a file hierarchy created on your machine resembling Figure 23-10.

Add a Foreach Loop Container named For Each Lockbox File. Connect the precedence from the last Lockbox File System Task to the Foreach Loop Container so that the Foreach Loop Container is not executed unless the File System Task completes successfully.

The Foreach Loop is expecting a literal path to poll. You want the loop to rely on a variable, so you have to use an expression. This task object is a little confusing because there are actually two sets of expression collections: one set, in the left tab, is for the container; the second set appears only when the Collections tab is selected. The second set of expressions is the collection of properties for the Foreach enumerator. It is this second set of expressions that you want to alter. Click the ellipsis to the right of this Expressions collection.

In the Expressions Editor, the property folder doesn’t exist with this name. Don’t worry, it does exist; it is just named Directory instead. Select the Directory property and set its value to the parameter @[$Package::LBBASEFILEPATH]. Evaluate the expression to ensure that it matches the base lockbox path. Close the Expressions Editor. Set the property Files to *.txt. Leave the Retrieve File name property as Fully Qualified. The Collection tab of the Foreach Loop Container should look like Figure 23-11.

To store the name of the file you are processing into a variable, click the Variable Mappings tab on the left side of the Foreach Loop Container. Select the variable named LBCURRENTFILE to retrieve the value of the Foreach Loop for each file found. Leave the index on the variable mapping set to zero (0). This represents the first position in a files collection or the filename returned by the loop. Click OK to complete this task.

Bank File Control Flow Retrieval of File Properties

One of the things you have to save into the BankBatch data table is the filename and the number of bytes in the file. The Foreach Loop Container did the work of storing the filename into the variable LBCURRENTFILE. Now, with the filename, it would be easy to retrieve the file size using a Script Task and some VB.NET or C# code, and then set the value of the variable. You also need to reset the value of some of the other variables, so this is a good spot to add this logic as well. For a more detailed explanation of setting variables within Script Tasks, see Chapter 9.

Getting back to the Control Flow of the Bank File package, add a Script Task within the Foreach Loop. Change the name to “Script LB File Size into Variable.” Provide the variable LBCURRENTFILE for the ReadOnlyVariables property. Select the variables BANKBATCHID, BANKBATCHNBR, BATCHITEMS, BATCHTOTAL, DEPOSITDATE, and FILEBYTES from the dropdown list of variables for the ReadWriteVariables property. Note that if you choose to hand-key variables into this property, that when passing variables into the Script Task, the @ sign should not be used, but you can fully qualify the variables with the namespace, such as User::BATCHITEMS.

Select the Script language of your choice. Click the Edit Script button. This opens the .NET development environment. Add either an Imports or a using reference to the System.IO namespace depending on your selected .NET language, and update the script to pull the file bytes from the filename provided in the DTS object Variables collection. First include a reference to the System.IO library by adding the last line you see in this code:

C#

using System; using System.Data; using Microsoft.SqlServer.Dts.Runtime; using System.IO; //<--Added Input/Output library

VB

Imports System Imports System.Data Imports System.Math Imports Microsoft.SqlServer.Dts.Runtime Imports System.IO '<--Added Input/Output library

Then add a VB Sub or C# void function to reset the variables that you can call in the Script Task Main() function. This is done only to provide code separation, rather than to work with the large procedural code typically found in the Main() function. Note that the conversion of default values is explicit. This is required. Simply assigning the value of 0 to one of these variables would not cast properly.

C#

public void ResetVariables()

{

//Resets variables

Dts.Variables["BANKBATCHID"].Value = System.Convert.ToInt32(0);

Dts.Variables["BANKBATCHNBR"].Value = "";

Dts.Variables["BATCHITEMS"].Value = System.Convert.ToInt64(0);

Dts.Variables["BATCHTOTAL"].Value = System.Convert.ToDouble(0);

Dts.Variables["DEPOSITDATE"].Value = DateTime.MinValue;

Dts.Variables["FILEBYTES"].Value = System.Convert.ToInt64(0);

}

VB

Public Sub ResetVariables()

'Resets variables

Dts.Variables("BANKBATCHID").Value = System.Convert.ToInt32(0)

Dts.Variables("BANKBATCHNBR").Value = ""

Dts.Variables("BATCHITEMS").Value = System.Convert.ToInt64(0)

Dts.Variables("BATCHTOTAL").Value = System.Convert.ToDouble(0)

Dts.Variables("DEPOSITDATE").Value = DateTime.MinValue

Dts.Variables("FILEBYTES").Value = System.Convert.ToInt64(0)

End Sub

Then replace the Main() function within the Script Task with this one:

C#

public void Main()

{

//'**

//'SCRIPT

//'PURPOSE: To take file bytes and save to global variable

//'==================================================================

Int64 lDefault = 0;

Boolean bVal;

Try

{

//Reset Variables

ResetVariables();

//Use.Net IO Library to examine file bytes

FileInfo oFile = new

FileInfo(Dts.Variables["LBCURRENTFILE"].Value.ToString());

Dts.Variables["FILEBYTES"].Value = oFile.Length;

Dts.Events.FireInformation(0, "Script Task to Vars", _

"File Bytes Found:" +

Dts.Variables["FILEBYTES"].Value.ToString(), "", 0, ref bVal);

//Alternative Troubleshooter

//System.Windows.Forms.MessageBox.Show("File Bytes Found:" +

//Dts.Variables["FILEBYTES"].Value.ToString());

Dts.TaskResult = (int)ScriptResults.Success;

}

catch(Exception ex)

{

Dts.Events.FireError(0, "Script Task To Vars", ex.ToString(),

"", 0);

Dts.Variables["FILEBYTES"].Value = lDefault;

Dts.TaskResult = (int)ScriptResults.Failure;

}

}

VB

Public Sub Main()

'**

'SCRIPT

'PURPOSE: To take file bytes and save to global variable

'==================================================================

Dim oFile As FileInfo

Dim lDefault As Int64

Dim bVal As Boolean

lDefault = 0

Try

'Reset Variables

ResetVariables()

'Use.Net IO Library to examine file bytes

oFile = New FileInfo(Dts.Variables("LBCURRENTFILE").Value.ToString)

Dts.Variables("FILEBYTES").Value = oFile.Length

Dts.Events.FireInformation(0, "Script Task to Vars", _

"File Bytes Found:" +

Dts.Variables("FILEBYTES").Value.ToString(), _

"", 0, bVal)

'Alternative Troubleshooter

'System.Windows.Forms.MessageBox.Show("File Bytes Found:" _'

+ Dts.Variables("FILEBYTES").Value.ToString())

Dts.TaskResult = ScriptResults.Success

Catch ex As Exception

Dts.Events.FireError(0, "Script Task To Vars", ex.ToString(), "", 0)

Dts.Variables("FILEBYTES").Value = lDefault

Dts.TaskResult = ScriptResults.Failure

End Try

End Sub

Now close the Script Editor and save this task. Be sure to click OK to save. If you open the script after you edit and have not saved the task, then your earlier script changes are lost. On that same note, another good practice in SSIS is to code and test smaller units of work.

Back to the code, note the use of the FireInformation method in the script. This method will stream an informational entry into the Execution Results tab containing the value of file bytes found in the file to process.

At this point, you would know the filename and file size. The Foreach Loop stored the filename into a variable. The Script Task retrieved the file size and stored the data into the FILEBYTES variable. You still need to figure out whether you have seen this file before. A unique batch number by import type is embedded in the header of the file. There are a few ways to retrieve that information. One way is to use the System.IO library in the Script Task you just created to open and examine the file header row. Another way is to use a Data Flow Task to open and examine the file. Although you could do the same thing in the Script Task, the Data Flow Task enables you to turn the file into a stream to examine the contents easily. It also provides the added advantage of failure upon encountering a bad format at a column level. You can then alter your Control Flow to push this file to the error folder.

To finish the retrieval Control Flow for now, add a Data Flow Task. Connect the successful completion of the Script Task to this task. Change the Name property to “Data Flow Lockbox Validate File and Header Info.” This task will parse out the batch header information into variables, validate the data contents, and then perform a lookup for a similar batch. An existing BankBatchID will be returned in the BankBatchID variable. You’ll configure the Data Flow in the section “Bank File Data Flow Validation.” Disable the Data Flow Task for now by right-clicking the task and selecting Disable in the pop-up menu. (After you have run the package and tested it, follow the same steps and select Enable to reenable the task.) Save the entire package and run it to ensure that everything is working so far.

Bank File Control Flow Batch Creation

The last task for the Bank File Control Flow is to lay out the workflow to validate that key values are in the Batch file and that the batch has not already been processed. This ultimately will be determined by the Data Flow Task that you added but have not yet completed. For now, you know that if certain basic elements in the batch file, such as the batch number, are missing or the batch amount is zero, then the package should move the file to the error folder.

To enable moving the file, add a File System Task named File System Task Error Folder. Instead of choosing a move file operation in the File System Task, select the option to rename the file. This may not be intuitive, but to move the file you need the filename stored separately. Because the variable @LBERRORFILE is a full file path and a unique filename, it is easier to move the file by simply renaming it. The File System Task properties should be set to the values shown in the following table:

| PROPERTY | VALUE |

| IsDestinationPathVariable | True |

| Destination Variable | User::LBERRORFILE |

| OverwriteDestination | True |

| Name | File System Task Error Folder |

| Description | Moves bad files to an error folder |

| Operation | Rename File |

| IsSourcePathVariable | True |

| SourceVariable | User::LBCURRENTFILE |

The File System Task here will complain if the value for User::LBCurrentFile is empty or if it doesn’t have a default value, so make sure you set this up initially as described in the earlier setup section.

To connect the Data Flow Task and File System Task together, add a precedence constraint that looks for the existence of a Bank Batch identifier and amount. On the constraint, select the Multiple constraint option of Logical OR and set the Evaluation Operation to Expression OR Constraint. Set the Value to Failure and the Expression as follows:

@BANKBATCHID!=0 || @BATCHTOTAL == 0.00

If either Data Flow fails, the Data Flow finds an existing BankBatchId, or there is no valid amount in the amount field in the bank file, the precedence constraint will send the workflow to the File System Task, which archives the file in the error folder.

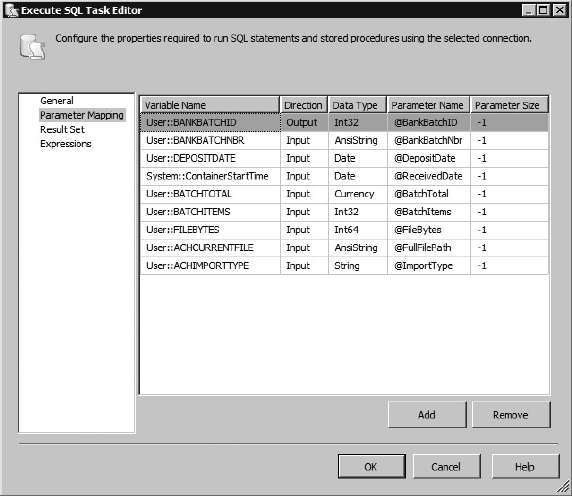

If the elements are all present, and there is no existing bank batch by batch number, the batch needs to be persisted to the database. To do this, add an Execute SQL Task. This task will use a stored procedure, usp_BankBatch_Add, to add the parsed information in the lockbox file as a row in the BankBatch table to represent a new batch file. The procedure usp_BankBatch_Add will return the new BankBatchId if it could be successfully added. Because you are using an OLE DB Connection Manager, set up the Execute SQL Task properties like this:

| PROPERTY | VALUE |

| Name | Execute SQL task to add Bank Batch Hdr |

| ConnectionType | OLE DB |

| Connection | CaseStudy OLEDB |

| SQLStatement | EXEC dbo.usp_BankBatch_Add ? OUTPUT, ?, ?, ?, ?, ?, ?, ?, ? |

| IsQueryStoredProcedure | (Will be grayed out) |

When you use the OLE DB provider, the parameters have to be marked as ? because of the different provider implementations for handling parameters. Map the input parameters to the procedure parameters on the Parameter Mapping tab. Note that the OLE DB provider uses more generic variable mapping than what you’ll do later with the ADO.NET provider. The finished Execute SQL Task Editor should look like Figure 23-12.

If this Execute SQL Task doesn’t return a new BankBatchId indicating that the batch header has been persisted, you don’t want to execute any other tasks connected to it. Furthermore, the offending file needs to be moved into an error folder to be examined because something is wrong. Create another precedence constraint between the Execute SQL Task and the File System Task Error Folder. The Control Flow should take this path if either the Execute SQL Task fails or the BankBatchId is zero (0). Set up the Precedence Constraint Editor to look like Figure 23-13.

Add a second new Data Flow Task to the Foreach Loop. Change the name property to Data Flow Lockbox Detail Data Load. You’ll configure the Data Flow in the next section. Connect the successful completion of the Execute SQL Task to this task. Add an expression to check for a nonzero BankBatchID, and set the constraint to apply when there is a successful completion and an evaluation of the constraint as true between the Execute SQL Task and this new Data Flow Task.

Now if the Data Flow Lockbox Detail Data Load fails to extract, transform, and load the batch details, you still have an issue. Add a simple Failure constraint between the Data Flow Lockbox Detail Data Load and the previously created File System Task Error Folder.

If the file is processed successfully in the Data Flow Lockbox Detail Data Load, you need to move it to the “processed” folder. Add another new File System Task and connect it to the successful completion of the second Data Flow Task. Set up this task just like the Error Folder File System Task but point everything to the processed folder.

| PROPERTY | VALUE |

| IsDestinationPathVariable | True |

| Destination Variable | User::LBPROCESSEDFILE |

| OverwriteDestination | True |

| Name | File System Task Processed Folder |

| Description | Moves completed files to an error folder |

| Operation | Rename File |

| IsSourcePathVariable | True |

| SourceVariable | User::LBCURRENTFILE |

You now have the basic structure set up for the Bank File Lockbox Control Flow. You still need to build your components in the Data Flow Tasks, but you’ll get to that in the next sections. If you are following along, go ahead and save the package at this point. If you want to test the package, you can set up the variables and test the different workflows. Just remember to watch the movement of the sample file into the processed and error folders, and make sure you put it back after each run. The CaseStudy_Load_Bank package at this point should look like Figure 23-14.

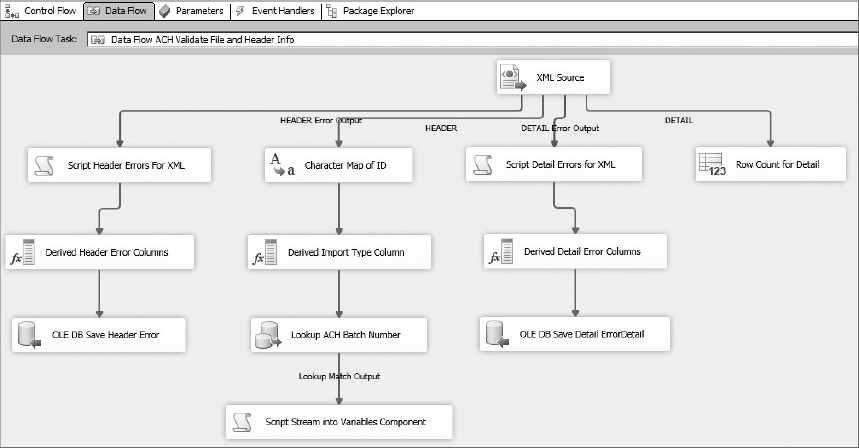

Bank File Data Flow Validation

In this section of the package, you are going to fill in the details of the Data Flow Task for validating the lockbox file. The strategy will be to open the lockbox file and retrieve information from the header to pass back to the Control Flow via variables. You’ll use a Flat File Connection to read the file, a Conditional Split Transformation to separate out the header and the check lines, Derived Column Components to parse out the header line, and an Aggregate Count Transformation to count the check transactions. You’ll use Script Component Transformations to pull this information from the transformation stream and store it in your variables to return them back to the Control Flow. Recall that the Control Flow determines whether the file is good or not, and moves the file into either an error or a processed folder.

Bank File Data Flow Parsing and Error Handling

To use the Flat File Connection you defined earlier in the Data Flow, add a Flat File Source to the Data Flow design surface. Select the Flat File Connection created in the previous step named Lockbox Flat File. Name this source component Flat File Lockbox.

One of the main purposes of this Data Flow is to perform an extraction of the header information and then perform a lookup on the batch number. You will use the Lookup Transformation for this task, and one of the “gotchas” to using this task is that it is case sensitive. Because at this point your source contains all the data in a single column, it makes sense to go ahead and run the data through a component that can convert the data to uppercase in one step. Add a Character Map Transformation to the Data Flow. It should be connected to the output of the Flat File Source and be configured to perform an in-line change to the incoming data. Select the incoming column named line and set the Destination to In-Place Change. Set the operation type to Uppercase and leave the output alias as line. Save the Character Map Transformation.

The lockbox file contains three types of data formats: header, invoice, and check. At this stage, you are trying to determine whether this batch has been previously processed, so you only need the information from the header and a count of the check transactions. To split the one-column flat file input, add a Conditional Split Transformation to the Data Flow. Set up the component to use the leftmost character of the input stream to split the line into two outputs: Header and Check. The transformation should look like Figure 23-15.

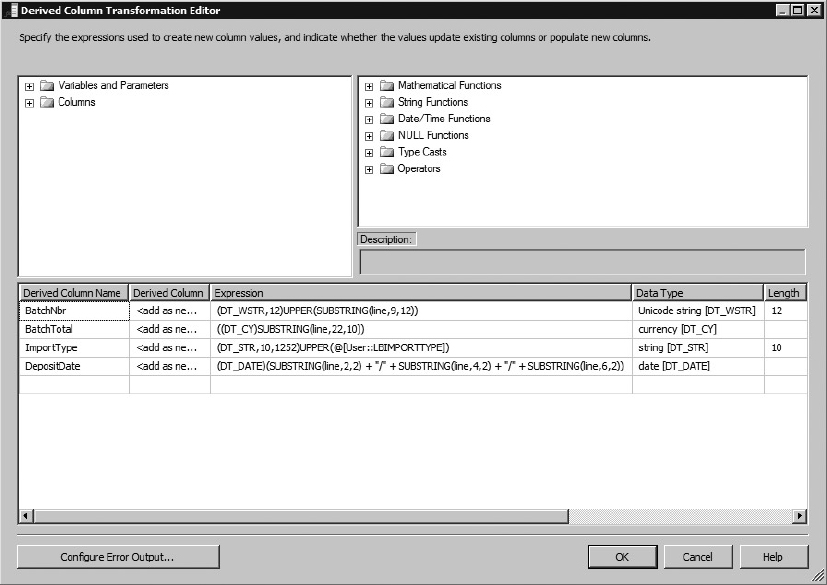

Add a Derived Column Task to the Data Flow and name it Derived Columns from Header. Connect to the Header output of the Conditional Split. This task is where the individual data elements are parsed from the line into the data fields they represent. With the Derived Column Task, you also get the conversion utilities as an added benefit. Because the import stream is a string type, you will convert the data type; this is where you think ahead as to what the final data type should be. Downstream, if you ultimately want to add a row to the BankBatch table, the Batch Number you extract from this input stream must be converted into a Unicode variable text field. If you parse the text string into the data type of [DT_WSTR] at this stage, you will match the destination field. Paying attention to data types early will save you many headaches further into the package. Set up the derived columns to match Figure 23-16.

Wait a minute! These explicit castings of string data could be disastrous. What if the bank provides some bad data in the Batch Total field? Good question. If you just left the default error handling in place, the package would fail. You don’t want that to happen; you just want to reject the file. To do that, you need the Control Flow to recognize that something is wrong and divert the file to the error folder. Notice that we said Control Flow — not Data Flow. This is why the precedence constraint you set up between this Data Flow Task and the Execute SQL Task to add a Bank Batch header is set up to reject the file if the Data Flow Task fails.

To ensure that this happens, click the Configure Error Output button, and make sure that for each derived column the component is set to fail and Redirect Row if there are any errors in creating the columns. See Figure 23-17 for the completed error output.

There are many different options for handling errors in SSIS. In this Data Flow, if there is an error parsing the lockbox header, it is probably an invalid format-type error, so you want to be able to capture information about that error in that column, so the file can be fixed and resubmitted. To do this, add a Script Component to make use of the error stream you created from the Derived Column Component. Set up the Script Component as a transformation. The error stream currently contains your raw data, an error code, and a column number for each error generated. You can use the Script Component to add the error description to your stream, and then in another Component you can log the information into your [ErrorDetail] table. Connect the error output of the Derived Column Component to the Script Component to capture the original input stream. Name this task Script Component Get Error Desc. Open the Script Transformation Editor and select all the input columns on the Input Columns tab. Then, in the Inputs and Outputs tab, expand the Output0 collection and add an output column (not an output) named ErrorDesc. Set the type to [DT_WSTR] with a length of 1048. Open the design environment for the Script Component. Change your ProcessInputRow() method to the following:

C#

public override void Input0_ProcessInputRow(Input0Buffer Row)

{

//'Script

//'Purpose: To retrieve the error description to write to error log

Row.ErrorDesc = ComponentMetaData.GetErrorDescription(Row.ErrorCode);

}

VB

Public Overrides Sub Input0_ProcessInputRow(ByVal Row As Input0Buffer) 'SCRIPT 'PURPOSE: To retrieve the error description to write to error log Row.ErrorDesc= ComponentMetaData.GetErrorDescription(Row.ErrorCode) End Sub

Close the Script Editor and then the Script Transformation Editor. Now add a Derived Column Component and name it Derived Column System Variables. Along with the detailed error message, it will be helpful to add other information such as the ExecutionInstanceGUID to log in to your custom ErrorDetail table. The ExecutionInstanceGUID is a unique identifier given to each run of an SSIS package. It enables you to combine your custom error logging with other package error logging to give you a complete picture of what occurred when a package failed. Create the Derived Columns shown in Figure 23-18.

Add an OLE DB Destination to save this data into the ErrorDetail table. Name the component OLE DB Destination Error Log. Set up the OLE DB Connection and set the name of the table to ErrorDetail. Map the columns. Most input columns should match the destination columns in the table. Map the column Line to the RawData column.

Now you’ve handled the worst-case scenario for bad batch header data. Not only do you store the error of a bad conversion or batch header, but the flow of data will stop at this component. This leaves the value of the BankBatchID to the default of 0, which causes the Control Flow to divert the file to the error folder — just what you want.

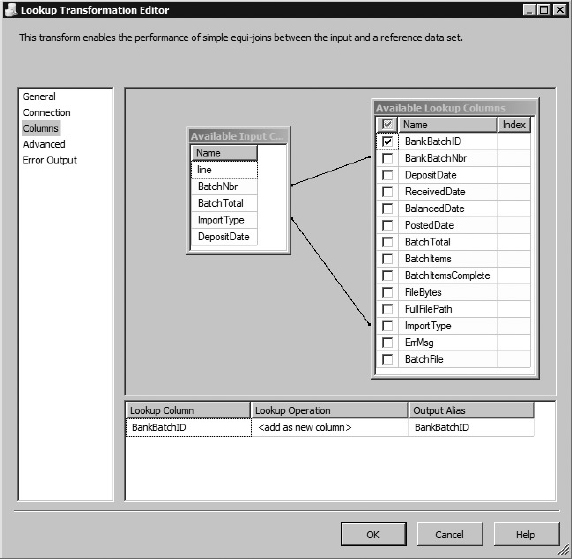

Bank File Data Flow Validation

Now, if all the data elements of the Bank Batch file parse correctly, the Derived Columns from Header Component should contain data that was successfully converted to proper data types. You now have to determine if the BatchNbr parsed from the file has already been processed. This can be accomplished by checking whether it matches any existing BatchNbr in the BankBatch table by import type. Add a Lookup Component to the flow of the Derived Column. Change the name to Lookup BankBatchID. Connect the CaseStudy OLEDB connection. In the Reference tab, select BankBatch table. The Lookup Component is case sensitive, which is why the derived column converted the contents to uppercase.