12 Selecting and Implementing Test Tools

Organizations often invest a great deal of hope and money in acquiring tools; the sad truth is that all too often these tools end up unused in some office cabinet. This chapter offers advice and guidance on identifying possible areas of tool application, selecting appropriate tools, and introducing them into the test process.

12.1 Why Test Tools?

Test tools can be a means to increase the efficiency of individual test activities in a well-defined and effective test process. This is achieved if the tool saves time and money or if it increases the scope or quality of the results. Tools for automated test execution are a typical example: they “merely” make the execution of already defined test cases cheaper, faster, and more accurate, without adding any new dimension to testing. To run such tools efficiently, existing processes usually need to be extended with activities for identifying the test cases to be automated and for executing them automatically. The test process as such remains more or less unchanged.

Increase efficiency

Beyond that, some tools create new technological or organizational possibilities, so that new processes, or new activities within an existing test process, become possible only through their deployment.

Create new capabilities

Tools for the automated generation of test cases from abstract models of the system under test fall into this second category (e.g., [URL: AGEDIS]). The introduction of such tools has an impact on the entire test process:

• Individual test cases can no longer be planned, because they are created only during testing and are no longer individually identifiable. The specification phase no longer produces descriptions of test cases; instead, it provides generation strategies for the required test cases.

• The execution of generated tests must be automated in order not to lose the advantage of automated test generation, i.e., the creation of a very large number of test cases at little cost.

• The evaluation of test coverage and product quality must refer to the system’s abstract model from which the test cases are generated.

This shows that one should be quite careful when introducing a tool; otherwise, one may very quickly face the dilemma of having to adapt existing processes to the tool rather than vice versa.

12.2 Evaluating and Selecting Test Tools

Systematic tool selection is done in several stages or phases:

1. Principal decision to use a tool

2. Identification of the requirements

3. Evaluation

4. Selection

Two different standards may help in the selection of tools: [IEEE 1209] and [ISO 14102]. Both standards are suited for practical use and similar in content; however, both name and structure the individual phases of the process differently. ISO 14102 is noticeably more detailed than IEEE 1209 and more oriented toward an organization-wide selection process. IEEE 1209 is more compact and probably better suited for smaller companies or where decision making is limited to individual teams. The techniques described here are the result of years of practical experience and essentially compatible with both standards.

Standards for tool selection

The following sections will deal in detail with each of the main phases mentioned above and the subphases they contain.

12.2.1 Principal Decision Whether to Use a Tool

Identifying and Quantifying the Goals

Prior to the procurement of a tool, the purpose of its deployment must be identified:

• Which test activities are to be improved or supported?

• What are the expected benefits, savings, and/or improvements?

These objectives must be quantified and prioritized. In addition, the following questions must be answered:

• Are new processes or activities to be introduced as a result of the tool?

• What are the consequences?

Here, too, the objectives must be quantified.

These objectives must be understood by all stakeholders. If goals are unclear, badly communicated, or contradictory, or if a tool is acquired without the knowledge of the person who is supposed to work with it, it is quite likely that the tool will not be used but will instead be assigned a permanent place in some dusty cabinet.

Traceable objectives

Considering Possible Alternative Solutions

Once the problems to be addressed have been accurately identified, the following question must be raised: Can these objectives be achieved only with a tool, or are there alternatives?

• Are the objectives feasible, and can they be met only by a tool? Or could the root causes of the problems we want to solve lie in incomplete or outdated process documents, insufficient staff qualification, or poor communication channels? In that case, we should of course eliminate these shortcomings directly rather than try to conceal them by introducing a tool.

Evaluate alternatives.

• Could the objectives be met by extending or parameterizing an existing tool, or by providing suitable working instructions that make it easier to use? If we cannot answer this question with a clear no, it makes sense to at least include the existing tool and these additional measures in the subsequent selection process.

• Would in-house or commissioned development of a tool be a viable alternative to a tool already on the market? This need not prevent us from continuing the selection process; a decision in favor of new development may well be the end result of our evaluation.

• Would it perhaps be best to accept the status quo and save the effort of introducing a new tool? To answer this question, we need to do a cost-benefit analysis.

Cost-Benefit Analysis

In principle, the expected benefits or savings from deploying the tool, derived from the quantified goals, are set against the various direct and indirect costs. Some of these costs are known early on, whereas others can be determined only in later steps. Consequently, the cost-benefit analysis may need to be questioned and revised several times during the selection phase.

First, the direct costs of acquiring and deploying the tool must be identified. Since these costs have to be determined for each evaluation candidate, in practice this step can be completed only during the evaluation phase:

Direct costs

• How much does one license cost?

• What licensing model is used? Does it allow one nontransferable installation per user or workstation, or is it based on the maximum number of concurrent users? What is the expected usage profile in the organization, i.e., the expected number of users and the average operating life?

Caution: Some tool producers have started to sell licenses that are valid for only one specific project, thus limiting the use of the tool and making amortization much more difficult.

• What is the average update or upgrade cost and what is the update or upgrade frequency?

• Is a service maintenance contract offered for support, updates, and, where required, upgrades? If so, what are the costs?

Economically, tool leasing may make more sense than buying, particularly if maintenance is included in the leasing agreement. Has such a model been offered?

• Is there a volume discount based on the number of licenses purchased?

• Depending on how the tool is used, the number of users may vary considerably between the minimum, the average, and the maximum (for example, tools supporting test execution are needed only during test execution cycles). Is there a model for peak licenses that can be used temporarily during peak periods?
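The licensing questions above often come down to arithmetic over the expected usage profile. The following Python sketch compares three licensing models under stated assumptions. All prices, the yearly maintenance rate, the monthly peak-license price, and the usage profile are invented for illustration, and the function names are hypothetical. Real price lists and license terms vary from vendor to vendor.

```python
from dataclasses import dataclass


@dataclass
class UsageProfile:
    named_users: int      # everyone who needs the tool at some point
    avg_concurrent: int   # typical number of simultaneous users
    peak_concurrent: int  # simultaneous users during test execution cycles
    peak_months: int      # months per year spent in such peak periods
    years: int            # expected operating life of the tool


def named_user_cost(p: UsageProfile, seat_price: float, maint_rate: float) -> float:
    """One nontransferable license per user, plus yearly maintenance."""
    licenses = p.named_users * seat_price
    return licenses + licenses * maint_rate * p.years


def concurrent_cost(p: UsageProfile, floating_price: float, maint_rate: float) -> float:
    """Enough floating licenses to cover the peak number of simultaneous users."""
    licenses = p.peak_concurrent * floating_price
    return licenses + licenses * maint_rate * p.years


def base_plus_peak_cost(p: UsageProfile, floating_price: float, maint_rate: float,
                        peak_price_per_month: float) -> float:
    """Floating licenses for average load; temporary peak licenses for the rest."""
    base = p.avg_concurrent * floating_price
    extra_users = max(p.peak_concurrent - p.avg_concurrent, 0)
    peak = extra_users * peak_price_per_month * p.peak_months * p.years
    return base + base * maint_rate * p.years + peak


if __name__ == "__main__":
    # Hypothetical profile: 20 named users, 5 concurrent on average, 12 at peak.
    profile = UsageProfile(named_users=20, avg_concurrent=5, peak_concurrent=12,
                           peak_months=3, years=4)
    print("named user:    ", named_user_cost(profile, 1000, 0.2))
    print("concurrent:    ", concurrent_cost(profile, 2500, 0.2))
    print("base plus peak:", base_plus_peak_cost(profile, 2500, 0.2, 150))
```

With these invented figures, pure concurrent licensing (54,000) is the most expensive option. Named-user licenses (36,000) and a base of floating licenses topped up with peak licenses (35,100) come out close to each other. A different usage profile can easily reverse the ranking.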

Cost considerations are also necessary for the evaluation itself:

• How much staff is tied up for this task and for how long?

• What kind of hardware and software environment must be set up?

• Is there a need to purchase software licenses for the evaluation period or can they be obtained from the toolmakers free of charge?

Now the indirect costs need to be considered:

Indirect costs

• Do the tool’s system requirements call for hardware or software upgrades of the testers’ workstations?

• How expensive is a new installation, an update or upgrade installation, and its configuration? Is there support for centralized installation management?

• What training costs are involved in qualifying the users?

• What effort is needed to set up an operational concept, i.e., to make the necessary adaptations to and enhancements of the process documentation?

• Are customer-specific adaptations to interfaces necessary, or do we need to create new interfaces? How much effort do such customizations require? Can the tool manufacturer provide this kind of support, or can it recommend a competent partner?

The costs must be weighed against the expected benefits, which should be quantified as far as possible. Benefits include, for example, the following:

Quantify the benefits.

• Resource savings through more efficient task performance

• Possible extension of test coverage without spending more resources

• Improved repeatability and precision of test task execution

• Standardization of test documentation

• Economical generation of complete, standards-compliant documentation

• More effective use of test teams, which are less tied up in repetitive routine activities

• Increased test team satisfaction
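Once costs and benefits are quantified, the comparison can be expressed as a simple cumulative calculation. The sketch below rests on several assumptions. It assumes that all benefits can be converted into money (for example, tester hours saved times an hourly rate). It ignores discounting, and it uses invented figures. Benefits such as team satisfaction are hard to express this way and need separate, qualitative consideration. The function names are hypothetical.

```python
from __future__ import annotations

from itertools import accumulate
from typing import Optional


def cumulative_net(one_time_cost: float, yearly_cost: float,
                   yearly_benefit: float, years: int) -> list[float]:
    """Benefits minus all costs incurred so far, at the end of each year."""
    yearly_net = [yearly_benefit - yearly_cost] * years
    return [total - one_time_cost for total in accumulate(yearly_net)]


def payback_year(one_time_cost: float, yearly_cost: float,
                 yearly_benefit: float, years: int) -> Optional[int]:
    """First year in which the cumulative net position is no longer negative."""
    positions = cumulative_net(one_time_cost, yearly_cost, yearly_benefit, years)
    for year, position in enumerate(positions, start=1):
        if position >= 0:
            return year
    return None  # the tool does not pay for itself within the planning horizon


if __name__ == "__main__":
    # Hypothetical one-time costs: licenses, evaluation, installation,
    # training, and adapting the process documentation.
    one_time = 20_000 + 8_000 + 2_000 + 6_000 + 4_000
    maintenance_per_year = 4_000
    # Hypothetical benefit: 300 tester hours saved per year at 60 per hour.
    benefit_per_year = 300 * 60
    print(cumulative_net(one_time, maintenance_per_year, benefit_per_year, 5))
    print(payback_year(one_time, maintenance_per_year, benefit_per_year, 5))
```

With these hypothetical numbers, the cumulative net position is negative for the first two years and turns positive in year 3.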

Identification of Constraints

Finally, we need to identify further possible cost factors, constraints, and negative effects of introducing the tool that we have not yet considered. This can be done, for example, in a brainstorming session:

• Could the introduction of the tool negatively affect other processes, e.g., through the loss of an interface that was supported by the new tool’s predecessor?

• Which factors or persons could negatively affect tool selection? Can we guarantee that the selection is made objectively? Some people in an organization are generally biased against innovations, and against new tools in particular, and would rather develop their own tool than buy one off the shelf.

• What could cause the implementation to fail after the tool has been chosen? Would the implementation put a running project at such risk that the required quality or the planned schedules can no longer be achieved?

Such risk factors must be documented as constraints on the tool selection and implementation processes and must be handled by consistent risk management.

Use risk management to deal with possible constraints.

12.2.2 Identifying the Requirements

One can assume that in most cases several tool alternatives can be used to achieve a particular goal. Without detailed preparation and careful comparison of the alternatives, we run a serious risk of wasting a lot of money on acquiring and implementing a tool that will be used little or, worse, not at all.

The comparison must be fair and traceable. During the preparatory stages, the set of requirements collected from the different interest groups must be as comprehensive as possible. The required functional and nonfunctional features, such as performance and usability, are derived from the tool’s deployment goals:

• Collection of the user requirements: Clear identification of the goals of all stakeholders involved; classification and prioritization of these requirements

Same requirements process as in software development

• Analysis: Merging of similar requirements and identification of contradictory ones; conflict resolution

• Specification: Breakdown of the user requirements into a set of selection criteria; written summary of the criteria, adopting the priorities already assigned to the user requirements

• Review: Presentation of the requirements to the stakeholders for review and joint release

Prioritization and traceability are very important in this process. At each step it must be absolutely clear who raised a requirement, so that later requirement conflicts or cases of doubt can be resolved. It must also be clear how important the requirement is to that person or to the entire future user group. Since it is very unlikely that any single product will fulfill all requirements, the final selection must be based on the importance attributed to each requirement and on how often it was raised.
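To keep requirements traceable, each raised requirement can be recorded together with the stakeholder who raised it and the priority that stakeholder gave it. The following sketch shows one possible way to condense such records. Several elements are assumptions, not a prescribed method: the 1 to 3 priority scale, the requirement identifiers, and the rule of summing per-stakeholder priorities into a single weight (so that both importance and frequency count).

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class RaisedRequirement:
    req_id: str       # identifier assigned during analysis; merged duplicates share it
    stakeholder: str  # who raised it, kept for traceability
    priority: int     # hypothetical scale: 1 = nice to have, 2 = important, 3 = essential


def consolidate(raised: list[RaisedRequirement]) -> dict[str, dict]:
    """Per requirement: who raised it, how often, and a combined weight."""
    votes: dict[str, dict[str, int]] = defaultdict(dict)
    for r in raised:
        # If a stakeholder raises the same requirement twice, keep the higher priority.
        previous = votes[r.req_id].get(r.stakeholder, 0)
        votes[r.req_id][r.stakeholder] = max(previous, r.priority)
    return {
        req_id: {
            "raised_by": sorted(by_whom),
            "frequency": len(by_whom),
            # Sum of per-stakeholder priorities: grows with importance and frequency.
            "weight": sum(by_whom.values()),
        }
        for req_id, by_whom in votes.items()
    }


if __name__ == "__main__":
    raised = [
        RaisedRequirement("R1-requirements-import", "test manager", 3),
        RaisedRequirement("R1-requirements-import", "test designer", 2),
        RaisedRequirement("R2-swing-gui-support", "tester", 3),
        RaisedRequirement("R3-istqb-terminology", "test manager", 1),
        RaisedRequirement("R3-istqb-terminology", "test designer", 1),
        RaisedRequirement("R3-istqb-terminology", "tester", 1),
    ]
    summary = consolidate(raised)
    for req_id in sorted(summary, key=lambda k: summary[k]["weight"], reverse=True):
        print(req_id, summary[req_id])
```

Because every entry keeps the list of stakeholders who raised it, a later conflict can always be traced back to the people who need to resolve it.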

Functional Requirements of the Test Tool

Typical functional requirements regarding a tool could look like this:

Examples of functional requirements

• “The test management system requires an interface for importing requirement objects from the requirements management database; besides the requirements’ content, all predefined and user-defined attributes must also be importable.”

• “The test automation system must be able to recognize and control all objects of the test object’s graphical user interface (e.g., test objects implemented in Java Swing).”

Nonfunctional Requirements of the Test Tool

In principle, this class of requirements can be collected using the same techniques as the functional features; in our experience, however, they are harder to collect. Typical requirements such as “the system must be user-friendly” and “the system must be fast enough for 20 parallel users” cannot be validated directly against the evaluation candidates. On the one hand, we must try to describe the required nonfunctional features so that they become clearly measurable or verifiable (e.g., “the system must provide a user interface following the standard MS Windows style guide allowing easy induction and facilitating daily operation”). On the other hand, we must plan some nonfunctional tests, without which certain nonfunctional requirements (e.g., usability and performance) cannot be verified.

Here are some examples from a useful criteria catalogue:

• “The terminology used in the test management system must comply with the ‘ISTQB Certified Tester’ glossary.”

Examples for nonfunctional requirements

• “Test management system performance must support simultaneous use by the test manager, three test designers, and five testers with typical user profiles; that is, the average system response time must not exceed 3 seconds.”
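The performance requirement above is quantified precisely enough to be checked during the evaluation. The sketch below runs nine simulated users in parallel threads, using the role mix from the example, and compares the average response time with the 3-second limit. `simulated_action` is a hypothetical placeholder that merely sleeps. In a real evaluation it would have to be replaced by a script that performs a typical operation against the candidate tool, for example through its API.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

# User mix from the example requirement: 1 test manager, 3 test designers, 5 testers.
ROLE_MIX = {"test manager": 1, "test designer": 3, "tester": 5}
MAX_AVG_RESPONSE_S = 3.0


def time_actions(action: Callable[[str], None], role: str, repetitions: int) -> List[float]:
    """Run one simulated user's typical action repeatedly and record each duration."""
    durations = []
    for _ in range(repetitions):
        start = time.perf_counter()
        action(role)
        durations.append(time.perf_counter() - start)
    return durations


def check_average_response(action: Callable[[str], None], repetitions: int = 5,
                           limit_s: float = MAX_AVG_RESPONSE_S) -> Tuple[float, bool]:
    """Run all users in parallel; return the mean response time and whether it meets the limit."""
    users = [role for role, count in ROLE_MIX.items() for _ in range(count)]
    with ThreadPoolExecutor(max_workers=len(users)) as pool:
        per_user = list(pool.map(lambda role: time_actions(action, role, repetitions), users))
    samples = [d for durations in per_user for d in durations]
    mean_s = statistics.mean(samples)
    return mean_s, mean_s <= limit_s


def simulated_action(role: str) -> None:
    """Hypothetical stand-in for a scripted operation against the candidate tool."""
    time.sleep(0.01)


if __name__ == "__main__":
    mean_s, ok = check_average_response(simulated_action)
    print(f"average response {mean_s:.3f} s, requirement met: {ok}")
```

A realistic load test would also model think times and distinct user profiles per role. The sketch only illustrates how the quantified requirement turns into a pass/fail check.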

The results of the earlier cost-benefit considerations are listed as special nonfunctional requirements. As mentioned before, some of them can be assessed only during the actual evaluation. In this way, functional, nonfunctional, and financial requirements, as well as requirements for product attendant services, can be treated uniformly and managed in the criteria catalogue (more on that later), so that the evaluation result reflects both costs and benefits.

Requirements of Product Attendant Services

Today’s software development tools have become so complex that the manufacturer’s support is often needed during their implementation and throughout their operational lifetime. A vendor providing no after-sales service may be considered less attractive than, for example, a solution vendor offering a broad spectrum of products and services. The following questions may help in assessing product attendant services:

• What kind of product support can we expect: an outsourced call center or the vendor’s own in-house competence center? This may give us important hints about staff competence; more reliable statements about competence can be made only during the evaluation period, after direct contact with support.

Examples: Product attendant services

• At what times and by which means (e-mail, telephone, on-site) is support available? Are maximum response times guaranteed? What are they?

• For international organizations: Is on-site support available in different countries? Is telephone and e-mail support available in multiple languages? Are different time zones covered?

• Does the tool manufacturer offer training, introductory packages, coaching, and consulting by competent staff during operational use?

• How reliable is the manufacturer? How long has it been on the market, and how stable is it? For example, does its portfolio consist of several products or only one?

• How good is the quality of the products? That is, how many defects are found in the product, documentation, etc. during the evaluation period?

The DIN/ISO 9126 standard provides help in identifying functional and nonfunctional requirements; see chapter 5 and [Spillner 07, section 2.1.3]. The [ISO 14102] standard, too, contains a catalogue of generic requirements for different classes of tools for the software development process.

Creating the Criteria Catalogue

Once all requirements have been collected, the criteria catalogue is created. Typically, the catalogue is set up as a so-called benefit value analysis in a spreadsheet, so that the evaluation results can be automatically condensed into partial and overall scores for the individual tools.

Create a criteria table.

The objective is to grade each tool in such a way as to show its suitability for the required operational profile.

Criteria catalogues can be set up using very diverse techniques. Here is some advice regarding structure:

1. Group Forming

Individual requirements can be sorted into groups so that strength and weakness profiles of the individual tools can later be worked out in relation to these groups. Ideally, these groups correspond to the usage objectives drafted during the target definition phase. It may make sense to give each group its own weight, which is then factored into the overall result. This weighting reflects the prioritization of the original objectives.

2. Classification of the Selection Criteria

Each criterion is then assigned to one of the groups, where it is weighted again to represent its local importance relative to the other criteria in the same group.

3. Definition of Metrics for the Criteria

During the evaluation, each criterion is rated with a number (a mark) from a value range that applies to the whole catalogue (e.g., 0 = not suitable to 5 = ideal). Each criterion must be accompanied by concrete rating notes explaining under which circumstances a tool receives which mark for that criterion.
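The structure described above can be condensed into scores with a weighted average. That structure consists of group weights, local criterion weights, and marks on a common scale. A weighted average is what the spreadsheet formulas of a benefit value analysis typically compute. The following Python sketch is one possible implementation under that assumption; the group names, criteria, weights, and marks are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

SCALE = range(0, 6)  # marks: 0 = not suitable ... 5 = ideal


@dataclass
class Criterion:
    name: str
    weight: float  # local importance relative to other criteria in the same group


@dataclass
class Group:
    name: str
    weight: float  # reflects the priority of the underlying deployment objective
    criteria: list[Criterion]


def evaluate(catalogue: list[Group], marks: dict[str, int]) -> tuple[dict[str, float], float]:
    """Return per-group scores (strength/weakness profile) and the overall score."""
    group_scores: dict[str, float] = {}
    for group in catalogue:
        for c in group.criteria:
            if marks.get(c.name) not in SCALE:
                raise ValueError(f"missing or out-of-range mark for {c.name!r}")
        weighted = sum(c.weight * marks[c.name] for c in group.criteria)
        group_scores[group.name] = weighted / sum(c.weight for c in group.criteria)
    total_weight = sum(g.weight for g in catalogue)
    overall = sum(g.weight * group_scores[g.name] for g in catalogue) / total_weight
    return group_scores, overall


# Hypothetical catalogue with two groups.
CATALOGUE = [
    Group("Test management", 3, [Criterion("Requirements import", 2),
                                 Criterion("ISTQB terminology", 1)]),
    Group("Operations", 1, [Criterion("Response time <= 3 s", 1),
                            Criterion("Installability", 1)]),
]

if __name__ == "__main__":
    tool_a = {"Requirements import": 4, "ISTQB terminology": 5,
              "Response time <= 3 s": 3, "Installability": 5}
    profile, overall = evaluate(CATALOGUE, tool_a)
    print(profile, round(overall, 2))
```

The per-group scores provide the strength and weakness profile. Dividing by the sum of the weights keeps every score on the same 0 to 5 scale as the marks. For the sample marks, the tool scores about 4.33 in the first group, 4.0 in the second, and 4.25 overall.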

12.2.3 Evaluation

The evaluation is a well-ordered procedure for assessing the candidates against the criteria worked out before, in a neutral, complete, and reproducibly documented way.

Selection of Evaluation Candidates

Depending on the available budget, an evaluation may cover one or several tools. A good starting point for finding suitable candidates is an Internet search for keywords such as “Test Automation Tool”. Tool lists already available on the Internet simplify the search further:

• [URL: TESTINGFAQ]

• [URL: SQATEST]

• [URL: APTEST]

• [URL: OSTEST]

WWW links related to test tools

Market studies and evaluation reports are further possible sources. These, too, are largely accessible via the Internet, although in most cases they are not free of charge. On the other hand, they can save you part of the evaluation effort.

Market studies

Here are some representative sites:

• The OVUM Report on Software Testing Tools [URL: OVUM]

• The Software Test Tools Evaluation Center [URL: STTEC]

Such a search will very likely produce more candidates than can be evaluated within the available scope. To draw up a final list, you can take the following steps:

Other selection criteria

• Using individual selection criteria as knockout (KO) criteria: if a tool cannot meet such a criterion, it is struck off the list of candidates. It must be possible to assess these criteria through an in-depth study of the tool documentation.

• Interviewing current reference customers.

• Interviewing users of the tool at conferences and tool exhibitions.

• Reviewing test reports from projects already using the tool.
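Applying KO criteria is a simple filter, but recording why a candidate was struck off keeps the shortlisting traceable. In this sketch, the set of criteria each tool fulfills is assumed to come from studying its documentation or from reference-customer interviews. The tool names and criteria are hypothetical.

```python
from __future__ import annotations


def shortlist(candidates: dict[str, set[str]],
              ko_criteria: set[str]) -> tuple[list[str], dict[str, set[str]]]:
    """Keep tools meeting every KO criterion; record why the others were struck off."""
    kept: list[str] = []
    struck: dict[str, set[str]] = {}
    for tool, fulfilled in candidates.items():
        missing = ko_criteria - fulfilled  # KO criteria this tool does not meet
        if missing:
            struck[tool] = missing
        else:
            kept.append(tool)
    return kept, struck


if __name__ == "__main__":
    ko = {"controls Java Swing GUIs", "imports requirements with attributes"}
    candidates = {
        "Tool A": {"controls Java Swing GUIs", "imports requirements with attributes"},
        "Tool B": {"controls Java Swing GUIs"},
        "Tool C": {"controls Java Swing GUIs", "imports requirements with attributes",
                   "web GUI support"},
    }
    kept, struck = shortlist(candidates, ko)
    print("shortlist:", kept)
    print("struck off:", struck)
```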

Planning and Setup

Prior to actual execution, typical project management activities must be performed:

Evaluation project planning

• Staffing of the evaluation and selection team. In addition to the project leader, who in a larger company may come from the IT department, the team needs members from each department and role that is going to work with the tool.

• Individualization of the criteria catalogue. Noncontroversial criteria that can be assessed objectively need not be evaluated by each team member separately; to avoid redundant effort, one person can cover them (for example, installability on different platforms). Criteria that may be rated differently for subjective or individual reasons, or depending on the department or role of the team members, must be assessed by the whole team (for example, usability issues). Keep in mind that the experience and training of the team members must be documented together with the evaluation results.

• Definition of the evaluation scenarios. The more closely team members follow a predefined procedure, the more goal-oriented the evaluation will be. Providing a scenario or sample exercises (as is also common in usability testing) has this effect but may cause team members to develop a “tunnel vision” that prevents them from noticing incidental irregularities.

• Time and resource planning.

• Documentation planning. What should the project deliver in addition to the completed criteria catalogue (sample data, sample projects in the tool, etc.)?

• Provision of resources, for instance, the hardware needed to install the tools. In principle, it must be decided whether each tool is installed on a separate workstation, with the evaluation team members changing workstations, or whether all tools are installed on every workstation. The latter allows several tools to be run in parallel during the evaluation, which makes comparisons easier. However, it carries the risk that the tools adversely affect and destabilize each other.

• Organization of the installation media and, if necessary, of evaluation licenses.

• Preparation of the workstations by installing the tools, providing the criteria catalogue for online completion, etc.

• Briefing of the team members.

Tool Evaluation Based on Criteria

To prepare for the evaluation, it may be a good idea to organize, together with the team members, a presentation of the tool by the manufacturer. This will provide a first impression of how the tool works and an opportunity to ask the manufacturer some concrete questions. It also provides the opportunity to validate some evaluation criteria without much effort.

It makes sense to ask for the presentation to be based on a small test task of your own. The manufacturer’s ready-made, “video-clip-like” demos are naturally biased toward the strengths of its tool. The manufacturer’s willingness to provide such individualized presentations will, of course, grow in proportion to the customer’s willingness to pay some sort of consultancy fee for this kind of service.

This expenditure quite often pays off by bringing to light tool usage problems and pitfalls at this early stage rather than later in a project and under time pressure.

Individual, criteria-based evaluation

Subsequently, in accordance with the resource and time planning, each team member evaluates the tool individually and independently by studying and assessing the documentation, working through given scenarios, and completing the criteria catalogue. The catalogue can be completed either in parallel with the other activities or very soon afterward. Any irregularities or critical findings observed, as well as any subjective impressions, are to be documented.

Report Creation

After completing the evaluation, the evaluation team leader consolidates the results and checks them for completeness and consistency. The results are compiled in the form of an evaluation report that typically contains the following information:

• Name, manufacturer, and version identifier of the evaluated tool, as well as a brief tool profile in the form of a “management abstract”

• Evaluation basis, i.e., the hardware and software configuration used

• Background, for example, the process and process phase in which the tool is to be deployed

• Short description of the evaluation process, for example, a reference to one of the standards mentioned above (or to this section of the book)

• Detailed evaluation results per criterion

• A list containing subjective observations, together with the observers’ profiles

Detailed evaluation report
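The completeness and consistency check that the evaluation team leader performs before compiling the report can be supported by a small script. The following Python sketch assumes that each evaluator’s catalogue for a tool is a mapping from criterion names to ratings; the evaluator names, criteria, and the spread threshold `MAX_SPREAD` are hypothetical, and averaging the ratings is only one possible way of consolidating them.

```python
from statistics import mean

MAX_SPREAD = 2  # hypothetical threshold: larger rating spreads are flagged


def consolidate(catalogues, criteria):
    """Merge the individual evaluators' ratings for one tool.

    catalogues: {evaluator: {criterion: rating}}
    Returns (mean rating per criterion, missing entries, inconsistent criteria).
    """
    # Completeness: every evaluator should have rated every criterion
    missing = [(ev, c) for ev, ratings in catalogues.items()
               for c in criteria if c not in ratings]
    means, inconsistent = {}, []
    for c in criteria:
        values = [r[c] for r in catalogues.values() if c in r]
        if not values:
            continue  # nobody rated it; already listed in `missing`
        means[c] = mean(values)
        # Consistency: flag criteria on which the evaluators disagree strongly
        if max(values) - min(values) > MAX_SPREAD:
            inconsistent.append(c)
    return means, missing, inconsistent


# Hypothetical individual catalogues for one tool
ratings = {
    "Alice": {"Installation": 4, "Usability": 5},
    "Bob": {"Installation": 4, "Usability": 2},
    "Carol": {"Installation": 3},
}
means, missing, inconsistent = consolidate(ratings, ["Installation", "Usability"])
print(missing)       # [('Carol', 'Usability')]
print(inconsistent)  # ['Usability']
```

Here, Carol’s missing rating is reported as an incompleteness, and Usability is flagged because the ratings differ by three points; both findings would be clarified with the evaluators before the report is written.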

12.2.4 Selecting the Tool to Be Procured

Consolidating Evaluations and Preparing for Decision

If possible, the evaluation results should be consolidated into a single figure, an “overall score,” for each tool. This is done by multiplying the values assigned during the evaluation by the group and criterion weightings and then summing the results. A graphical illustration may help in interpreting the results.

Consolidating the evaluation results
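The consolidation rule described above (value × criterion weighting × group weighting, summed over all criteria) can be sketched in Python as follows. The class names, groups, criteria, and numbers are hypothetical examples and are not taken from figures 12-1 to 12-3.

```python
from dataclasses import dataclass, field


@dataclass
class Criterion:
    name: str
    weight: float  # importance of the criterion within its group
    value: float   # rating assigned during the evaluation (e.g., 0 to 5)


@dataclass
class Group:
    name: str
    weight: float  # importance of the whole group
    criteria: list[Criterion] = field(default_factory=list)


def overall_score(groups: list[Group]) -> float:
    """Multiply each value by its criterion and group weightings, then sum."""
    return sum(g.weight * c.weight * c.value for g in groups for c in g.criteria)


# Hypothetical catalogue entries for a single tool
tool_a = [
    Group("Platforms", 3, [Criterion("Windows", 2, 5), Criterion("Linux", 1, 3)]),
    Group("Usability", 2, [Criterion("Learnability", 1, 4)]),
]
print(overall_score(tool_a))  # 3*2*5 + 3*1*3 + 2*1*4 = 47
```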

ISO 14102 describes further evaluation and decision techniques in appendix B.

When consolidating individual evaluation results, simple multiplication and addition can be used. However, to bring out the differences between tools more clearly, one may also, for instance, work with squared weighting factors. Moreover, a fundamental decision must be made as to whether the tools are to be evaluated in absolute terms, in relation to the ideal objectives (in which case practically all tools will score considerably less than 100%), or relative to each other (in which case one tool will achieve 100%).

The former addresses the question of whether the set objectives can be achieved at all, within a relevant scope, by one or more of the tools, whereas the latter emphasizes the differences in individual features among the tools themselves.
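The effect of squared weighting factors and the difference between absolute and relative evaluation can be illustrated with a minimal sketch. It assumes that a tool’s ratings are given as (weight, value) pairs on a 0-to-5 scale; the function names and the two tools are hypothetical.

```python
MAX_VALUE = 5  # assumed top of the rating scale


def weighted_total(ratings, exponent=1):
    """Sum of weight**exponent * value over (weight, value) pairs.

    exponent=1 is simple multiplication and addition;
    exponent=2 corresponds to squared weighting factors.
    """
    return sum(weight ** exponent * value for weight, value in ratings)


def absolute_percent(ratings, exponent=1):
    """Score as % of the ideal, i.e., of every criterion rated MAX_VALUE."""
    ideal = weighted_total([(w, MAX_VALUE) for w, _ in ratings], exponent)
    return 100 * weighted_total(ratings, exponent) / ideal


def relative_percent(tools, exponent=1):
    """Scores as % of the best tool's score; the best tool reaches 100%."""
    totals = {name: weighted_total(r, exponent) for name, r in tools.items()}
    best = max(totals.values())
    return {name: 100 * t / best for name, t in totals.items()}


# Hypothetical (weight, value) pairs for two tools
tools = {
    "Tool X": [(3, 4), (1, 2)],  # strong on the important criterion
    "Tool Y": [(3, 3), (1, 5)],  # strong on the minor criterion
}
for exponent in (1, 2):
    print(exponent,
          {n: absolute_percent(r, exponent) for n, r in tools.items()},
          relative_percent(tools, exponent))
```

With simple weighting, both tools reach 70% of the ideal and tie at 100% relative to each other. With squared weighting factors, Tool X (76%) pulls ahead of Tool Y (64%), because squaring gives the heavily weighted criterion more influence.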

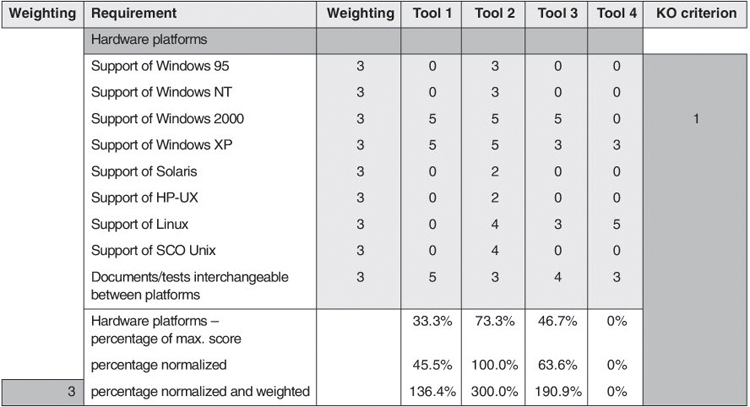

Example of a criteria catalogue and evaluation

Figure 12-1 shows an evaluation catalogue implemented as a Microsoft Excel spreadsheet. It illustrates the structure of a rating group used to evaluate the tools both in absolute terms and relative to each other. In other words, one value is calculated with respect to the maximum obtainable score, and one normalized value relative to the highest score achieved by any tool in the group. The normalized score is then weighted by the importance of the group. In addition, the KO criteria mentioned in section 12.2.3 are included so that the entire decision basis is represented consistently in one document.

Figure 12–1 Rating of a criteria catalogue

The example shows the evaluation of the tools’ usability on different platforms, with the value ranges shown in table 12-1.

Table 12–1 Classification of tool criteria

| Value | Classification of tool usability on operating system X |
|---|---|
| 0 | Not supported |
| 1 | Installable, but no manufacturer support |
| 2 | Usable but unstable |
| 3 | Usable, requires increased effort; for example, loading patches into the operating system |
| 4 | Usable with some restrictions; for example, GUI inconsistent with the rest of the operating system, no comprehensive drag and drop, or similar |
| 5 | Unconditionally usable |
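If the catalogue is evaluated by a script rather than a spreadsheet, the scale in table 12-1 can be encoded as an enumeration. The sketch below additionally assumes, purely for illustration, that a tool fails a platform KO criterion if a mandatory platform is rated 0 (“Not supported”) or not rated at all; the class name, function name, and platforms are hypothetical.

```python
from enum import IntEnum


class PlatformUsability(IntEnum):
    """Rating scale of table 12-1 for tool usability on an operating system."""
    NOT_SUPPORTED = 0
    INSTALLABLE_NO_SUPPORT = 1
    USABLE_UNSTABLE = 2
    USABLE_INCREASED_EFFORT = 3
    USABLE_WITH_RESTRICTIONS = 4
    UNCONDITIONALLY_USABLE = 5


def fails_platform_ko(ratings: dict[str, PlatformUsability],
                      mandatory: set[str]) -> bool:
    """True if any mandatory platform is rated NOT_SUPPORTED or not rated at all."""
    return any(
        ratings.get(p, PlatformUsability.NOT_SUPPORTED)
        == PlatformUsability.NOT_SUPPORTED
        for p in mandatory
    )


tool = {
    "Windows": PlatformUsability.UNCONDITIONALLY_USABLE,
    "Linux": PlatformUsability.USABLE_UNSTABLE,
}
print(fails_platform_ko(tool, {"Windows", "Linux"}))  # False
print(fails_platform_ko(tool, {"Windows", "macOS"}))  # True: macOS not rated
```

Because `IntEnum` members compare and calculate like integers, the ratings can be fed directly into weighted sums.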

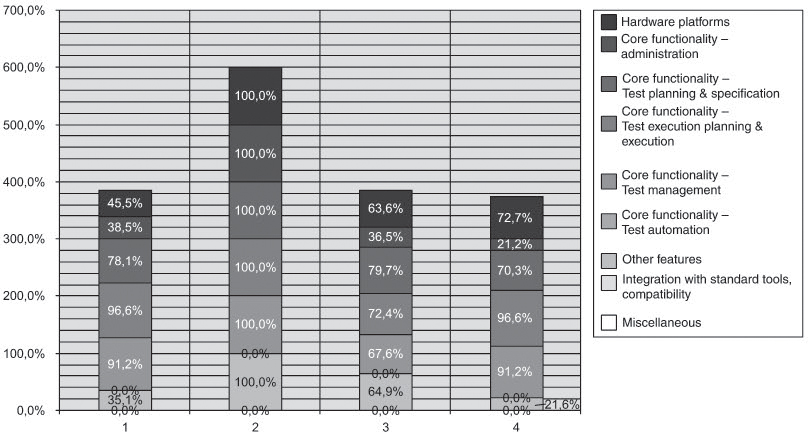

Once all criteria have been evaluated, the ratings can be shown graphically, as in figure 12-2.

Figure 12–2 Rating according to area

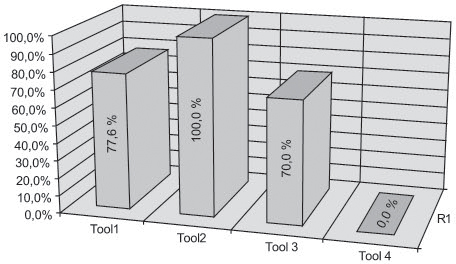

The overall evaluation ranks tool 2 first, whereas tool 4 is out of the running because it fails one KO criterion relating to platform compatibility (see figure 12-3).

Figure 12–3 Score in % of maximum points
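The rule behind this result, namely that a tool failing a KO criterion is eliminated regardless of its score, might be sketched as follows. The tool names, scores, and KO findings are hypothetical placeholders, not the values shown in figure 12-3.

```python
def rank_tools(scores, ko_failures):
    """Rank tools by score after eliminating those that fail a KO criterion.

    scores: {tool: overall score}
    ko_failures: {tool: [failed KO criteria]} (tools not listed passed all)
    """
    eliminated = {t: ko_failures[t] for t in scores if ko_failures.get(t)}
    eligible = [t for t in scores if t not in eliminated]
    ranking = sorted(eligible, key=lambda t: scores[t], reverse=True)
    return ranking, eliminated


# Hypothetical scores; Tool D has the highest score but fails a KO criterion
scores = {"Tool A": 71.0, "Tool B": 84.5, "Tool C": 66.0, "Tool D": 88.0}
ko = {"Tool D": ["Platform compatibility"]}
ranking, eliminated = rank_tools(scores, ko)
print(ranking)     # ['Tool B', 'Tool A', 'Tool C']
print(eliminated)  # {'Tool D': ['Platform compatibility']}
```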

[URL: Tooleval-imbus] offers a simple, ready-to-use criteria catalogue for download. For practical use, the names, group numbers, and descriptions of the individual criteria need to be adapted. Partial group results, overall results per tool, and a graphical evaluation are calculated automatically.

Evaluation catalogue for download

Decision Making

Typically, the final decision on which tool to use is made by management on the basis of the technical evaluation and, sometimes, additional nontechnical criteria.

Winner by points

Ideally, the criteria catalogue and the evaluation report will yield a clear “winner by points.” However, the evaluation may also produce several candidates whose overall ratings are very close, or none of the evaluated tools may meet the requirements well enough to justify its introduction.

In the case of a neck-and-neck result, further decision criteria may be needed. These can be obtained by modifying the prioritization of the criteria, by adding criteria for an ex-post evaluation, or, often the most practical option, by piloting some of the tools under consideration. Piloting in particular puts the evaluation of nonfunctional features such as usability on a broader footing.

In case of a close race: ex-post evaluation or piloting

If none of the selected tools turns out to be suitable, the conclusion may be to invest in in-house development, modify or enhance an already existing tool, or reconsider tool acquisition altogether.

Possible result: Do not buy any tool!
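The three possible outcomes described above can be expressed as a simple decision rule. This is only a sketch: the minimum acceptable score `MIN_ACCEPTABLE` and the margin `CLOSE_MARGIN` for a neck-and-neck result are hypothetical values that each organization would have to set for itself, and the rule prepares the management decision rather than replacing it.

```python
MIN_ACCEPTABLE = 60.0  # hypothetical: minimum absolute score (%) to justify introduction
CLOSE_MARGIN = 5.0     # hypothetical: gap in percentage points treated as neck-and-neck


def decision_outcome(absolute_scores):
    """Classify the evaluation result for tools that passed all KO criteria."""
    suitable = sorted(
        (s for s in absolute_scores.values() if s >= MIN_ACCEPTABLE), reverse=True
    )
    if not suitable:
        # In-house development, adapting an existing tool, or no purchase at all
        return "none suitable"
    if len(suitable) > 1 and suitable[0] - suitable[1] < CLOSE_MARGIN:
        # Re-prioritize criteria, add criteria for an ex-post evaluation, or pilot
        return "neck-and-neck"
    return "clear winner"


print(decision_outcome({"Tool A": 82.0, "Tool B": 70.0}))  # clear winner
print(decision_outcome({"Tool A": 82.0, "Tool B": 79.0}))  # neck-and-neck
print(decision_outcome({"Tool A": 50.0}))                  # none suitable
```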

12.3 Introduction of Tools

Once the decision is made, it needs to be finally validated by comparing the original objectives and requirements with the evaluation results in a formal review.

“A fool with a tool is still a fool”

The best tool is useless if it is not prudently introduced. The basis for long-term tool success is the establishment of an adequate test process framework.

The process maturity model TPI (see section 7.3.2) clearly identifies the basis for tool success: particular parts of the test process must show a certain minimum maturity before the use of a tool makes sense. For example, it makes no sense to introduce a test automation tool without first establishing formal test specification methods. TPI therefore forms a good basis for identifying test process improvement actions that might be necessary before a tool is introduced and for obtaining concrete implementation assistance. Conversely, test tools will positively affect the maturity level of particular activities in the test process, or at least facilitate the improvement of these activities.

Test process maturity and tools are closely connected.

The following are examples of important tasks to perform prior to the introduction of the tools:

• Ensure the necessary maturity of the underlying processes by identifying the required improvement actions.

• Create a basis for broadly realizing the improvement potential, or for achieving the original objectives of tool usage (see section 12.2.1).

As with the selection procedure, standards exist for the introduction of tools. [IEEE 1348] and [ISO 14471] serve as guidelines for the proper procedure and again contain brief descriptions of the goal definition and selection processes. They also add two essential concepts for introducing the tool: the pilot project and distribution.

Standards regarding tool implementation

12.3.1 Pilot Project

A pilot project serves to validate the usability of the tools. Ideally, its duration is manageable but not too short (3-6 months), and its size and criticality are representative of the organization. Management must give the pilot project sufficient support, for instance, by providing additional resources.

Keep the pilot project manageable.

The pilot project evaluates whether the (quantified) objectives for tool deployment can actually be achieved. For this reason, suitable metrics and the necessary measurement techniques must be defined in good time.

A further goal is to validate the various assumptions underlying the initial cost-benefit estimate, for example, the estimated costs of tool introduction and training. Staff training, tool installation, tool configuration, etc. must therefore be accompanied by corresponding effort measurements.

These measurements and other observations made during the pilot project provide essential data for the later creation of the distribution plan.
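Validating the cost assumptions essentially means comparing the estimated effort per activity with the effort measured during the pilot. A minimal Python sketch, assuming efforts in person-days; the function name, activity names, and figures are hypothetical:

```python
def estimate_deviation(estimated, measured):
    """Relative deviation (%) of measured from estimated effort per activity.

    Activities without a measurement or with a zero estimate are skipped.
    """
    return {
        activity: 100 * (measured[activity] - est) / est
        for activity, est in estimated.items()
        if activity in measured and est > 0
    }


estimated = {"training": 10, "installation": 2, "configuration": 5}  # person-days
measured = {"training": 14, "installation": 2, "configuration": 4}
print(estimate_deviation(estimated, measured))
# {'training': 40.0, 'installation': 0.0, 'configuration': -20.0}
```

A large positive deviation, such as the training effort here, is a signal to revise the corresponding assumption before the distribution plan is drawn up.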

Another important outcome of a pilot project is a concept of operations that should, for instance, contain the following information:

Concept of operations as a result of a pilot project

• Architectural changes to current testware (for example, if specification documents are now to be created with a test design tool, or if the design method for test automation changes)

• Role distribution and responsibilities, necessary staff training

• New or redefined procedures involving the tool

• Naming conventions and rules for configuration management

• Necessary interfaces to the existing work environment

• Changes in technical infrastructure, such as hardware or software requirements, backup concept, setup of databases and other back-office components

In the pilot project, several roles specific to the tool introduction must be assigned. They are typically taken on by members of the project team themselves, in addition to project-specific roles such as test manager or tester. The choice of the right pilot project also depends on the assignment of these roles: coach (or initiator), change manager (or integrator), tool master, and sponsor.

Important roles in the pilot project

The Coach (or Initiator)

He is convinced of the tool, and it is often he who initiates or strongly recommends its acquisition. He quickly recognizes potential problems and positive aspects of the tool’s operational use.

This insight, together with his enthusiasm, drives the introduction of the tool. He is the team’s catalyst and enjoys working with it.

The Change Manager (or Integrator)

Compared to the initiator, he works more methodically and deliberately; consequently, he is better able to plan, organize, and document the tool’s introduction. He is often the main author of the resulting concept of operations.

The Tool Master

He has cutting-edge expertise regarding tool usage and provides internal support. He often acts as the main contact for external product support, channeling internal queries and communicating support information.

The Sponsor

A management sponsor is needed to ensure that these roles are properly recognized alongside the project roles already described, and to make management aware of the additional risks that introducing the tool may pose for the pilot project. The sponsor officially supports the introduction of the tool and frees team members for the additional tasks.

After completion of the pilot project, an assessment is made of whether the introduction objectives can be achieved and whether the concept of operations allows the tool to fit seamlessly into the existing processes.

Results evaluation and decision

Piloting may, for example, reveal facts that restrict the application of the tool:

• The tool addresses only the most important objectives, not all of them.

• The tool can only be economically used for a particular type of project or corporate division.

• In order to use the tool successfully, tool modifications, further training, or consulting services by the manufacturer are necessary.

• Piloting was successful, but problems with the tool selection and/or tool introduction process were discovered that will require changes to these processes in the future.

12.3.2 Distribution

If piloting results in a positive decision, the test tool and the concept of operations will be rolled out into the organization via the process improvement scheme.

Ideally, during the rollout phase, participants of the pilot projects serve as multipliers and are used as internal consultants in the next projects to accompany the tool’s further introduction.

Here are some typical questions for rollout planning and execution:

Keep rollout flexible.

• Is distribution to take place in one step, as a “big bang” throughout the entire organization, or will there be a step-by-step changeover in individual projects or organizational units? The former carries a higher risk of far-reaching productivity setbacks if the introduction fails totally or partially; the latter requires more detailed planning and carries the risk of temporary incompatibilities or inconsistencies in internal cooperation during the conversion period.

• What are the total conversion costs based on the original cost-benefit considerations and the observations made during the pilot projects?

• How will resistance from individual team members or groups be handled, for example, from those who “have grown to love” the previous tool?

• What will be done to ensure the necessary staff training?

12.4 Summary

• Tools, by nature, cannot solve process-related problems.

• Before a test tool is introduced, the objectives for using it must be defined, and a cost-benefit analysis and an evaluation of alternative solutions must be performed. This avoids unnecessary tool acquisitions and ensures that a tool, once acquired, will actually be used.

• Objectives primarily relate either to increasing the efficiency of existing test activities or to creating completely new possibilities through new technologies provided by the tool. In both cases, the intended benefit of these improvements should be quantified in advance wherever possible.

• When finally comparing costs with benefits, it is important to consider not only the acquisition and licensing costs but also the evaluation, introduction, and operational costs.

• If a decision is made to purchase a tool, in most cases one candidate must be chosen from among several products on the market. The candidates are first identified by reviewing the market; various tool lists available on the Internet are helpful here.

• The candidates are evaluated using a criteria catalogue whose criteria are derived from the requirements. These may cover functional and nonfunctional features of the tools themselves, but also requirements relating to accompanying services and to the tool manufacturer.

• The evaluation catalogue is best structured so that a weighting factor and an evaluation measure are assigned to each criterion. A “rating” is then calculated for each tool during the evaluation.

• The evaluation itself must be well planned, carried out in the form of a project, and staffed with sufficient resources. If the first evaluation does not yield a clear result, it may be extended using additional criteria or continued in a follow-up pilot in which candidates are compared in parallel.

• The subsequent introduction of the selected tool must again be conducted in the form of a project, following an orderly, step-by-step approach:

  • Initially, the pilot project is used to draw up the concept of operations for the tool. This concept supplements or modifies existing process definitions.

  • During the pilot project, some staff members take on important roles that speed up the introduction process: the initiator, the integrator, and the tool master.

  • Once the pilot project has been successfully completed, the tool and the concept of operations are rolled out. Former members of the pilot project may now act as multipliers. Most of the decisions regarding tool distribution again require proper project planning and execution.