Maintenance

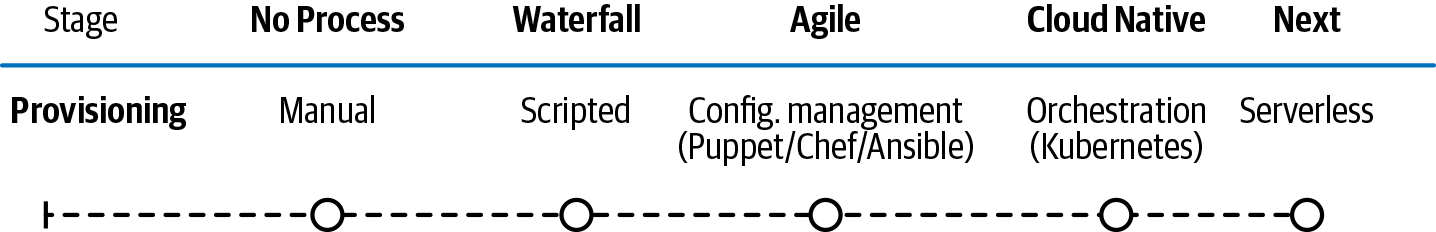

On this axis we assess how you monitor your systems and keep them running. It’s a broad spectrum, from having no process whatsoever to full automation with little or no human intervention. No Process/Ad Hoc means every now and then going in to see if the server is up and what the response time is. (And the somewhat embarrassing fact is, a lot of folks still do just that.) Alerting means having some form of automation to warn when problems arise, but it is nowhere near fast enough for this new world because once an alert fires, a human being still needs to intervene. Comprehensive monitoring and full observability, where system behavior is observed and analyzed so problems can be predicted (and prevented) in advance rather than responded to after they happen, are an absolute necessity for cloud native. Figure 5-7 shows the range of Maintenance approaches we look for in a Maturity Matrix assessment.

Figure 5-7. Maintenance axis of the Cloud Native Maturity Matrix

No process: Respond to users’ complaints

The development and operations teams are alerted to most problems only when users encounter them. There is insufficient monitoring to flag issues in advance and allow engineers to fix them before most users hit them. System downtime may be discovered only by clients, or by chance. There is no alerting.

For diagnosing issues, administrators usually need to log in to servers and view each tool/app log separately. As a result, multiple individuals need security access to production. When fixes to systems are applied, there is a manual upgrade procedure.

This is a common situation in startups or small enterprises, but it has significant security, reliability, and resilience issues, as well as single points of failure (often individual engineers).

Waterfall: Ad-hoc monitoring

This consists of partial, and mostly manual, monitoring of system infrastructure and apps. It includes constant monitoring and alerting only on basic downtime events, such as the main server becoming unresponsive.

Live problems are generally handled by the operations team and only they have access to production. Usually, there’s no central access to logs, and engineers must log in to individual servers for diagnosis, maintenance operations, and troubleshooting. Formal runbooks (documentation) and checklists exist for performing manual update procedures; this is very common in larger enterprises but still does not completely mitigate security, reliability, and resilience issues.

Agile: Alerting

Alerts are preconfigured on a variety of live system events. There is typically some log collection in a central location, but most of the logs are still in separate places.

Operations teams normally respond to these alerts and will escalate to developers if they can’t resolve the issue. Operations engineers still need to be able to log in to individual servers. Update processes, however, may be partially or fully scripted.
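To make the idea of preconfigured alerts concrete, here is a minimal sketch of threshold-based alert evaluation. It is an illustration only, not any particular alerting tool: the `AlertRule` type, the `evaluate_rules` function, and the metric names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AlertRule:
    """Hypothetical alert rule: fire when a metric rises above a threshold."""
    name: str
    metric: str
    threshold: float


def evaluate_rules(rules: List[AlertRule], metrics: Dict[str, float]) -> List[str]:
    """Return the names of the rules whose metric currently exceeds its threshold."""
    fired = []
    for rule in rules:
        value = metrics.get(rule.metric)
        # A metric that was not reported is skipped rather than treated as a breach.
        if value is not None and value > rule.threshold:
            fired.append(rule.name)
    return fired


# Example rules and readings (all values are made up for illustration).
rules = [
    AlertRule(name="HighLatency", metric="p95_latency_ms", threshold=500.0),
    AlertRule(name="DiskAlmostFull", metric="disk_used_pct", threshold=90.0),
]
current = {"p95_latency_ms": 750.0, "disk_used_pct": 40.0}
print(evaluate_rules(rules, current))
```

Note what the sketch stops short of: it only reports which rules fired. Someone on the operations team still has to read the alert and act on it, which is the limitation of this stage.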

Cloud native: Full observability and self-healing

In full observability and self-healing scenarios, the system relies upon logging, tracing, alerting, and metrics to continually collect information about all the running services in a system. In cloud native you must observe the system to see what is going on. Monitoring is how we see this information; observability describes the property we architect into a system so that we are able to discern internal states through monitoring external outputs. Many issue responses happen automatically; for example, system health checks may trigger automatic restarts if failure is detected. Alternatively, the system may gradually degrade its own service to keep itself alive if, for example, resource shortages such as low disk space are detected (Netflix is famous for this). Status dashboards are often accessible to everyone in the business so that they can check the availability of the services.
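The two automatic responses described above, restarting on failed health checks and degrading service under resource pressure, can be sketched as a single pass of a control loop. This is a simplified illustration under stated assumptions: the `Service` class, the `reconcile` function, and the default limits are hypothetical, and a real platform would typically provide this behavior through its orchestrator rather than hand-written code.

```python
from dataclasses import dataclass


@dataclass
class Service:
    """Hypothetical stand-in for a running service instance."""
    name: str
    healthy: bool = True
    restarts: int = 0
    degraded: bool = False

    def restart(self) -> None:
        # In a real system this would replace the process or container.
        self.restarts += 1
        self.healthy = True


def reconcile(service: Service, consecutive_failures: int, disk_free_pct: float,
              failure_limit: int = 3, min_disk_free_pct: float = 10.0) -> int:
    """Run one pass of the loop and return the updated failure count."""
    if service.healthy:
        consecutive_failures = 0
    else:
        consecutive_failures += 1
        # Restart only after several failed checks in a row, to avoid flapping.
        if consecutive_failures >= failure_limit:
            service.restart()
            consecutive_failures = 0
    # Shed non-essential work while disk space is short, instead of crashing.
    service.degraded = disk_free_pct < min_disk_free_pct
    return consecutive_failures


svc = Service(name="checkout", healthy=False)
failures = 0
for _ in range(3):
    failures = reconcile(svc, failures, disk_free_pct=50.0)
print(svc.restarts, svc.healthy, svc.degraded)
```

Requiring several consecutive failures before a restart is a design choice made for this sketch; the right limit depends on how noisy the health check is.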

Operations (sometimes now referred to as “platform”) engineers respond to infrastructure and platform issues that are not handled automatically. Live application issues are handled by development teams or site reliability engineers (SREs). The SRE role may be filled by individuals embedded in a DevOps team or separated into a dedicated SRE team.

Logs are all collected into a single place. This often includes distributed tracing output. Operations, developers, and SREs all have access to the logging location. They no longer have (or need) security access to production servers.

All update processes are fully automated and do not require access by individual engineers to individual servers.

Next: Machine learning (ML) and artificial intelligence (AI)

In the next generation of systems, ML and AI will handle operational and maintenance processes. Systems learn on their own how to prevent failures by, for instance, automatically scaling up capacity. Self-healing is the optimal way for systems to be operated and maintained. It is faster, more secure, and more reliable.
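As a rough illustration of scaling up capacity before a failure occurs, the sketch below extrapolates recent load with a least-squares straight line and sizes the service for the predicted value. A linear trend is used here only as a simple stand-in for a learned model, and the function names, the load samples, and the capacity figure are all hypothetical.

```python
import math
from typing import Sequence


def forecast_next(samples: Sequence[float]) -> float:
    """Predict the next value by fitting a least-squares line to the samples."""
    n = len(samples)
    if n == 0:
        raise ValueError("at least one sample is required")
    if n == 1:
        return samples[0]
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(samples))
    var = sum((x - mean_x) ** 2 for x in range(n))
    slope = cov / var
    # Evaluate the fitted line one step past the last observed sample.
    return mean_y + slope * (n - mean_x)


def replicas_needed(samples: Sequence[float], capacity_per_replica: float,
                    min_replicas: int = 1) -> int:
    """Size the service for the predicted load rather than the current load."""
    predicted = max(forecast_next(samples), 0.0)
    return max(min_replicas, math.ceil(predicted / capacity_per_replica))


# Requests per second over the last four intervals (made-up numbers).
recent_load = [100.0, 200.0, 300.0, 400.0]
print(replicas_needed(recent_load, capacity_per_replica=150.0))
```

The point of the sketch is the ordering: capacity is added for the load that is expected next, not in response to an overload that has already happened.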