Chapter 5: Regression and ANOVA

Perform a Simple Regression and Examine Results

Understand and Perform Multiple Regression

Understand and Perform Regression with Categorical Data

Introduction

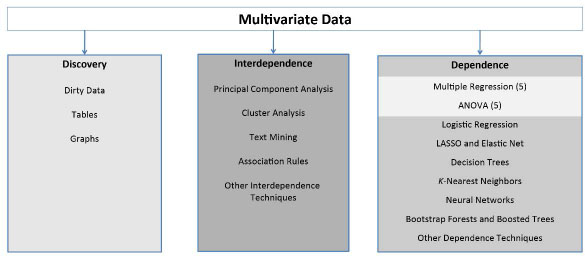

Simple and multiple regression and ANOVA, as shown in our multivariate analysis framework in Figure 5.1, are the dependence techniques that are most likely covered in an introductory statistics course. In this chapter, you will review these techniques, illustrate the process of performing these analyses in JMP, and explain how to interpret the results.

Figure 5.1: A Framework for Multivariate Analysis

Regression

One of the most popular applied statistical techniques is regression. Regression analysis typically has one or two main purposes. First, it might be used to understand the cause-and-effect relationship between one dependent variable and one or more independent variables: For example, it might answer the question, how does the amount of advertising affect sales? Or, secondly, regression might be applied for prediction—in particular, for the purpose of forecasting a dependent variable based on one or more independent variables. An example would be using trend and seasonal components to forecast sales. Regression analyses can handle linear or nonlinear relationships, although the linear models are mostly emphasized.

Perform a Simple Regression and Examine Results

Look at the data set salesperfdata.jmp. This data is from Cravens et al. (1972) and consists of the sales from 25 territories and seven corresponding variables:

● Salesperson’s experience (Time)

● Market potential (MktPoten)

● Advertising expense (Adver)

● Market Share (MktShare)

● Change in Market Share (Change)

● Number of accounts (Accts)

● Workload per account (WkLoad)

First, you will do only a simple linear regression, which is defined as one dependent variable Y and only one independent variable X. In particular, look at how advertising is related to sales:

1. Open the salesperfdata.jmp in JMP.

2. Select Analyze ▶ Fit Y by X.

3. In the Fit Y by X dialog box, click Sales and click Y, Response.

4. Click Adver and click X, Factor.

5. Click OK.

A scatterplot of sales versus advertising, similar to Figure 5.2 (without the red line), will appear. There appears to be a positive relationship, which means that as advertising increases, sales increase. To further examine and evaluate this relationship, click the red triangle and click Fit Line.

The Fit Line causes a simple linear regression to be performed and the creation of the tables that contain the regression results. The output will look like the bottom half of Figure 5.2. The regression equation is: Sales = 2106.09 + 0.2911 * Adver. The y-intercept and Adver coefficient values are identified in Figure 5.2 under Parameter Estimates.

The F test and t test, in simple linear regression, are equivalent because they both test whether the independent variable (Adver) is significantly related to the dependent variable (Sales). As indicated in Figure 5.2, both p-values are equal to 0.0017 and are significant. (To assist you in identifying significant relationships, JMP puts an asterisk next to a p-value that is significant with α = 0.05.) This model’s goodness-of-fit is measured by the coefficient of determination (R2 or RSquare) and the standard error (se or Root Mean Square Error), which in this case are equal to 35.5% and 1076.87, respectively.

Figure 5.2: Scatterplot with Corresponding Simple Linear Regression

Understand and Perform Multiple Regression

You will next examine the multiple linear regression relationship between sales and the seven predictors (Time, MktPoten, Adver, MktShare, Change, Accts, and WkLoad), which is equivalent to those used by Cravens et al. (1972).

Examine the Correlations and Scatterplot Matrix

Before performing the multiple linear regression, you should first examine the correlations and scatterplot matrix:

1. Select Analyze ▶ Multivariate Methods ▶ Multivariate.

2. In the Multivariate and Correlations dialog box, in the Select Columns box, click Sales, hold down the Shift key, and click WkLoad.

3. Click Y,Columns, and all the variables will appear in the white box to the right of Y, Columns, as in Figure 5.3.

4. Click OK.

Figure 5.3: The Multivariate and Correlations Dialog Box

As shown in Figure 5.4, the correlation matrix and corresponding scatterplot matrix will be generated. Examine the first row of the Scatterplot Matrix. There appear to be several strong correlations, especially with the dependent variable Sales. All the variables, except for Wkload, have a strong positive relationship (or positive correlation) with Sales. The oval shape in each scatterplot is the corresponding bivariate normal density ellipse of the two variables. If the two variables are bivariate normally distributed, then about 95% of the points would be within the ellipse. If the ellipse is rather wide (round) and does not follow either of the diagonals, then the two variables do not have a strong correlation. For example, observe the ellipse in the scatterplot for Sales and Wkload, and notice that the correlation is −.1172. The more significant the correlation, the narrower the ellipse, and the more it follows along one of the diagonals (for example Sales and Accts).

Figure 5.4: Correlations and Scatterplot Matrix for the salesperfdata.jmp Data

Perform a Multiple Regression

To perform the multiple regression, complete the following steps:

1. Select Analyze ▶ Fit Model.

2. In the Fit Model dialog box, as shown in Figure 5.5, click Sales and click Y.

3. Next, click Time, hold down the Shift key, and click WkLoad. Now all the independent variables from Time to WkLoad are highlighted.

4. Click Add, and now these independent variables are listed.

5. Notice the box to the right of Personality. It should say Standard Least Squares. Click the drop-down menu to the right and observe the different options, which include stepwise regression (which is discussed later in this chapter). Keep it at Standard Least Squares and click Run.

6. In the Fit Least Squares output, click the red triangle, and click Regression reports ▶ Summary of Fit.

7. Again click the red triangle, and click Regression reports ▶ Analysis of Variance.

8. Also, further down the output, click the display arrow to the left of Parameter estimates.

Figure 5.5: The Fit Model Dialog Box

Examine the Effect Summary Report and Adjust the Model

The Effect Summary report, as shown in Figure 5.6, lists in ascending p-value order the LogWorth or False Discovery Rate (FDR) LogWorth values. These statistical values measure the effects of the independent variables in the model. A LogWorth value greater than 2 corresponds to a p-value of less than .01, The FDR LogWorth is a better statistic for assessing significance since it adjusts the p-values to account for the false discovery rate from multiple tests (similar to the multiple comparison test used in ANOVA).

Figure 5.6: Multiple Linear Regression Output (Top Half)

Complete the following steps:

1. Click the FDR box to view the FDR LogWorth values.

2. This report can be interactive by clicking Remove, Add or Edit. For example, in the Effect Summary report, click Change, hold the Shift key down, and click Workload. The four variables are highlighted.

3. Now, click Remove. Observe that the regression output changed to reflect the removal of those variables. If you want those variables back in the regression model, click Undo.

As shown in Figure 5.7 listed under the Parameter estimates, the multiple linear regression equation is as follows:

Sales = −1485.88 + 1.97 * Time + 0.04 * MktPoten + 0.15 * Adver + 198.31*

MktShare + 295.87 * Change + 5.61 * Accts + 19.90 * WkLoad

Each independent variable regression coefficient represents an estimate of the change in the dependent variable to a unit increase in that independent variable while all the other independent variables are held constant. For example, the coefficient for WkLoad is 19.9. If WkLoad is increased by 1 and the other independent variables do not change, then Sales would correspondingly increase by 19.9.

Figure 5.7: Multiple Linear Regression Output (Bottom Half)

The relative importance of each independent variable is measured by its standardized beta coefficient (which is a unitless value). The larger the absolute value of the standardized beta coefficient, the more important the variable.

To obtain the standardized beta values, move the cursor somewhere over the Parameter Estimates Table. Right-click to open a list of options. Select Columns ▶ Std Beta. Subsequently, the Std Beta column is added to the Parameter Estimates Table, as shown in Figure 5.8. With your sales performance data set, you can see that the variables MktPoten, Adver, and MktShare are the most important variables.

Figure 5.8: Parameter Estimates Table with Standardized Betas and Variance Inflation Factors

The Prediction Profiler displays a graph for each independent variable X against the dependent Y variable as shown in Figure 5.7. Each plot is basically a transformed XY plot of the X-residuals versus the Y-residuals. Added to each X-residual is the mean of the X and correspondingly added to each Y-residual is the Y mean. These transformed residuals are called X leverage residuals and Y leverage residuals. The black line represents the predicted values for individual X values, and the blue dotted line is the corresponding 95% confidence interval. If the confidence region between the upper and lower confidence interval crosses the horizontal line, then the effect of X is significant. The horizontal red dotted line shows the current predicted value of the Y variable as the vertical red dotted lines shows the current X value. You can observe changes in the current X or Y value by clicking in the graph and dragging the dotted line.

Evaluate the Statistical Significance of the Model

The process for evaluating the statistical significance of a regression model is as follows:

1. Determine whether it is good or bad:

a. Conduct an F test.

b. Conduct a t test for each independent variable.

c. Examine the residual plot (if time series data, conduct a Durbin-Watson test).

d. Assess the degree of multicollinearity (variance inflation factor, VIF).

2. Determine the goodness of fit:

a. Compute Adjusted R2.

b. Compute RMSE (or Se)

Step 1a: Conduct an F Test

First, you should perform an F test. The F test, in multiple regression, is known as an overall test.

The hypotheses for the F test are as follows, where k is the number of independent variables:

H0 : β1 = β2 = … = βk = 0

H1 : not all equal to 0

If you fail to reject the F test, then the overall model is not statistically significant. The model shows no linear significant relationships. You need to start over, and you should not look at the numbers in the Parameter Estimates table. On the other hand, if you reject H0, then you can conclude that one or more of the independent variables are linearly related to the dependent variable. So you want to reject H0 of the F test.

In Figure 5.7, for your sales performance data set, you can see that the p-value for the F test is <0.0001. So you reject the F test and can conclude that one or more of the independent variables (that is, one or more of variables Time, Mktpoten, Adver, Mktshare, Change, Accts, and Wkload) is significantly related to Sales (linearly).

Step 1b: Evaluate the T Test for Each Independent Variable

The second step is to evaluate the t-test for each independent variable. The hypotheses are as follows, where k = 1, 2, 3, … K:

H0 : βk = 0

H1 : βk ≠ 0

You are not testing whether independent variable k, xk, is significantly related to the dependent variable. What you are testing is whether xk is significantly related to the dependent variable above and beyond all the other independent variables that are currently in the model. That is, in the regression equation, you have the term βk xk. If βk cannot be determined to be significantly different from 0, then xk has no effect on Y. Again, in this situation, you want to reject H0. You can see in Figures 5.6 and 5.7 that the independent variables MktPoten, Adver, and MktShare each reject H0 and are significantly related to Sales above and beyond the other independent variables.

Step 1c: Examine the Residual Plot

One of the statistical assumptions of regression is that the residuals or errors (the difference between the actual value and the predicted value) should be random. That is, the errors do not follow any pattern. To visually examine the residuals, look at Plot Residual by Predicted in Figure 5.6 and in Figure 5.9. Examine the plot for any patterns—oscillating too often or increasing or decreasing in values or for outliers. The data appears to be random.

Figure 5.9: Residual Plot of Predicted versus Residual

If the observations were taken in some time sequence, called a time series (not applicable to the sales performance data set), the Durbin-Watson test should be performed. (Click the red triangle next to the Response Sales heading; select Row Diagnostics ▶ Durbin-Watson Test.) To display the associated p-value, click the red triangle under the Durbin-Watson test and click Significant p-value. The Durbin-Watson test examines the correlation between consecutive observations. In general, you want high p-values. High p-values of the Durbin-Watson test indicate that there is no problem with first order autocorrelation. In this case, the p-value is 0.2355.

Step 1d: Assess Multicollinearity

An important problem to address in the application of multiple regression is multicollinearity or collinearity of the independent variables (Xs). Multicollinearity occurs when the two or more independent variables explain the same variability of Y. Multicollinearity does not violate any of the statistical assumptions of regression. However, significant multicollinearity is likely to make it difficult to interpret the meaning of the regression coefficients of the independent variables.

One method of measuring multicollinearity is the variance inflation factor (VIF). Each independent variable k has its own VIFk. By definition, it must be greater than or equal to 1. The closer the VIFk is to 1, the smaller the relationship between the kth independent variable and the remaining Xs. Although, there are no definitive rules for identifying a large VIFk, the basic guidelines (Marquardt 1980; Snee 1973) for identifying whether significant multicollinearity exists are as follows:

● 1 ≤ VIFk ≤ 5 means no significant multicollinearity.

● 5 < VIFk ≤ 10 means that you should be concerned that some multicollinearity might exist.

● VIFk > 10 means significant multicollinearity.

Note that exceptions to these guidelines are when you might have transformed the variables (such as nonlinear transformation). To display the VIFk values for the independent variables (similar to what we illustrated to display the standardized beta values), move the cursor somewhere over the Parameter Estimates Table and right-click. Select Columns ▶ VIF. As shown before in Figure 5.8, a column of VIF values for each independent variable is added to the Parameter Estimate Table. The variable Accts has a VIF greater than 5, and we should be concerned about that variable.

Step 2: Determine the Goodness of Fit

There are two opposing points of views as to whether to include those nonsignificant independent variables (that is, those variables for which you failed to reject H0 for the t test) or the high VIFk variables. One perspective is to include those nonsignificant/high VIFk variables because they explain some, although not much, of the variation in the dependent variable. And it does improve your understanding of these relationships. Taking this approach to the extremes, you can continue adding independent variables to the model so that you have almost the same number of independent variables as there are observations, which is somewhat unreasonable. However, this approach is sensible with a reasonable number of independent variables and when the major objective of the regression analysis is to understand the relationships of the independent variables to the dependent variable. Nevertheless, addressing the high VIFk values (especially > 10) might be of concern, particularly if one of the objectives of performing the regression is the interpretation of the regression coefficients.

The other point of view follows the principle of parsimony, which states that the smaller the number of variables in the model, the better. This viewpoint is especially true when the regression model is used for prediction/forecasting (having such variables in the model might actually increase the se). There are numerous approaches and several statistical variable selection techniques to achieve this goal of only significant independent variables in the model. Stepwise regression is one of the simplest approaches. Although it’s not guaranteed to find the “best” model, it certainly will provide a model that is close to the ‘best.” To perform stepwise regression, you have two alternatives:

● Click the red triangle next to the Response Sales heading and select Model Dialog. The Fit Model dialog box as in Figure 5.5 will appear.

● Select Analyze ▶ Fit Model. Click the Recall icon. The dialog box is repopulated and looks like Figure 5.5.

In either case, now, in the Fit Model dialog box, click the drop-down menu to the right of Personality. Change Standard Least Squares to Stepwise. Click Run.

The Fit Stepwise dialog box will appear, similar to the top portion of Figure 5.10. In the drop-down box to the right of Stopping Rule, click the drop-down menu, and change the default from Minimum BIC to P-Value Threshold. Two rows will appear, Prob to Enter and Prob to Leave. Change Direction to Mixed. (The Mixed option alternates the forward and backward steps.) Click Go.

Figure 5.10 displays the stepwise regression results. The stepwise model did not include the variables Accts and Wkload. The Adjusted R2 values improved slightly from 0.890 in the full model to 0.893. And the se, or root mean square error (RMSE), actually improved by decreasing from 435.67 to 430.23. And nicely, all the p-values for the t tests are now significant (less than .05). You can click Run Model to get the standard least squares (Fit Model) output.

Figure 5.10: Stepwise Regression for the Sales Performance Data Set

The goodness of fit of the regression model is measured by the Adjusted R2 and the se or RMSE, as listed in Figure 5.7 and 5.10. The Adjusted R2 measures the percentage of the variability in the dependent variable that is explained by the set of independent variables and is adjusted for the number of independent variables (Xs) in the model. If the purpose of performing the regression is to understand the relationships of the independent variables with the dependent variable, the Adjusted R2 value is a major assessment of the goodness of fit. What constitutes a good Adjusted R2 value is subjective and depends on the situation. The higher the Adjusted R2, the better the fit. On the other hand, if the regression model is for prediction/forecasting, the value of the se is of more concern. A smaller se generally means a smaller forecasting error. Similar to Adjusted R2, a good se value is subjective and depends on the situation.

Two additional approaches to consider in the model selection process that do consider both the model fit and the number of parameters in the model are the information criterion (AIC) approach developed by Akaike and the Bayesian information criterion (BIC) developed by Schwarz. The two approaches have quite similar equations except that the BIC criterion imposes a greater penalty on the number of parameters than the AIC criterion. Nevertheless, in practice, the two criteria often produce identical results. To apply these criteria, in the Stepwise dialog box, when you click the drop-down menu for Stopping Rule, there were several options listed, including Minimum AICc and Minimum BIC.

Further expanding the use of regression, in Chapter 8, you will learn two generalized regression approaches, LASSO and Elastic Net.

Understand and Perform Regression with Categorical Data

There are many situations in which a regression model that uses one or more categorical variables as independent variables might be of interest. For example, suppose that your dependent variable is sales. A few possible illustrations of using a categorical variable that could affect sales would be gender, region, store, or month. The goal of using these categorical variables would be to see whether the categorical variable significantly affects the dependent variable sales. That is, does the amount of sales differ significantly by gender, by region, by store, or by month? Or do sales vary significantly in combinations of several of these categorical variables?

Measuring ratios and distances are not appropriate with categorical data. That is, distance cannot be measured between male and female. So in order to use a categorical variable in a regression model, you must transform the categorical variables into continuous variables or integer (binary) variables. The resulting variables from this transformation are called indicator or dummy variables. How many indicator, or dummy, variables are required for a particular independent categorical variable X is equal to c − 1, where c is the number of categories (or levels) of the categorical X variable. For example, gender requires one dummy variable, and month requires 11 dummy variables. In many cases, the dummy variables are coded 0 or 1. However, in JMP this is not the case. If a categorical variable has two categories (or levels) such as gender, then a single dummy variable is used with values of +1 and -1 (with +1 assigned to the alphabetically first category). If a categorical variable has more than two categories (or levels), the dummy variables are assigned values +1, 0, and −1.

Now look at a data set, in particular AgeProcessWords.jmp (Eysenck, 1974). Eysenck conducted a study to examine whether age and the amount remembered is related to how the information was initially processed. Fifty younger and fifty older people were randomly assigned to five learning process groups (Counting, Rhyming, Adjective, Imagery, and Intentional), where younger was defined as being younger than 54 years old. The first four learning process groups were instructed on ways to memorize the 27 items. For example, the rhyming group was instructed to read each word and think of a word that rhymed with it. These four groups were not told that they would later be asked to recall the words. On the other hand, the last group, the intentional group, was told that they would be asked to recall the words. The data set has three variables: Age, Process, and Words.

To run a simple regression using the categorical variable Age to see how it is related to the number of words memorized (the variable Words) complete the following steps:

1. Select Analyze ▶ Fit Model.

2. In the Fit Model dialog box, select Words ▶ Y.

3. Select Age ▶ Add.

4. Click Run.

Figure 5.11 shows the regression results. You can see from the F test and t test that Age is significantly related to Words. The regression equation is as follows: Words = 11.61 −1.55 * Age[Older].

As we mentioned earlier, with a categorical variable with two levels, JMP assigns +1 to the alphabetically first level and −1 to the other level. So in this case, Age[Older] is assigned +1 and Age[Younger] is assigned −1. So the regression equations for the Older Age group is as follows:

Words = 11.61 − 1.55 * Age[Older] = 11.61 − 1.55(1) = 10.06

And the regression equations for the Younger Age group is as follows:

Words = 11.61 − 1.55 * Age[Older] = 11.61 − 1.55(−1) = 13.16

Therefore, the Younger age group can remember more words.

To verify the coding (and if you want to create a different coding in other situations), you can create a new variable called Age1 by completing the following steps:

1. Select Cols ▶ New Columns. The new column dialog box will appear.

2. In the text box for Column name, where Column 4 appears, enter Age1.

3. Select Column Properties ▶ Formula. The Formula dialog box will appear, Figure 5.12.

4. On the left side, there is a list of Functions. Click the arrow to the left of Conditional ▶ If. The If statement will appear in the formula area. JMP has placed the cursor on expr (blue rectangle).

5. Click Age from the Columns area. Just above the Conditional Function that you clicked on the left side of the Formula dialog box, click Comparison ▶ a==b.

6. In the new blue rectangle, enter “Older” (include quotation marks), and then press the Enter key.

7. Click the then clause, type 1, and then press the Enter key again.

8. Double-click on the lower else clause and type –1, and then press the Enter key. The dialog box should look like Figure 5.12.

9. Click OK and then click OK again.

Figure 5.11: Regression of Age on Number of Words Memorized

Figure 5.12: Formula Dialog Box

A new column Age1 with +1s and −1s will be in the data table. Now, run a regression with Age1 as the independent variable. Figure 5.13 displays the results. Comparing the results in Figure 5.13 to the results in Figure 5.11, you can see that they are exactly the same.

A regression that examines the relationship between Words and the categorical variable Process would use four dummy variables. Complete the following steps:

1. Select Analyze ▶ Fit Model.

2. In the Fit Model dialog box, select Words ▶ Y.

3. Select Process ▶ Add.

4. Click Run.

Figure 5.13: Regression of Age1 on Number of Words Memorized

Table 5.1: Dummy Variable Coding for Process and the Predicted Number of Words Memorized

| Row Process | Predicted Number of Words | Process Dummy Variable | |||

| [Adjective] | [Counting] | [Imagery] | [Intentional] | ||

| Adjective | 12.9 | 1 | 0 | 0 | 0 |

| Counting | 6.75 | 0 | 1 | 0 | 0 |

| Imagery | 15.5 | 0 | 0 | 1 | 0 |

| Intentional | 15.65 | 0 | 0 | 0 | 1 |

| Rhyming | 7.25 | −1 | −1 | −1 | −1 |

Figure 5.14: Regression of Process on Number of Words Memorized

The coding used for these four Process dummy variables is shown in Table 5.1. Figure 5.14 displays the regression results. The regression equation is as follows:

Words = 11.61 + 1.29 * Adjective − 4.86 * Counting + 3.89 * Imagery + 4.04 * Intentional

5. Given the dummy variable coding used by JMP, as shown in Table 5.1, the mean of the level displayed in the brackets is equal to the difference between its coefficient value and the mean across all levels (that is, the overall mean or the y-intercept). So the t tests here are testing if the level shown is different from the mean across all levels. If you have used the dummy variable coding and substituted into the equation, the predicted number of Words that are memorized by process are as listed in Table 5.1. Intentional has the highest words memorized at 15.65, and Counting is the lowest at 6.75.

Analysis of Variance

Analysis of variance (more commonly called ANOVA), as shown in Figure 5.1, is a dependence multivariate technique. There are several variations of ANOVA, such as one-factor (or one-way) ANOVA, two-factor (or two-way) ANOVA, and so on, and also repeated measures ANOVA. (In JMP, repeated measures ANOVA is found under Analysis ▶ Consumer Research ▶ Categorical.) The factors are the independent variables, each of which must be a categorical variable. The dependent variable is one continuous variable. This book provides only a brief introduction to and review of ANOVA.

Assume that one independent categorical variable X has k levels. One of the main objectives of ANOVA is to compare the means of two or more populations:

H0 = μ1 = μ2 … = μk = 0

H1 = at least one mean is different from the other means

The average for each level is µk. If H0 is true, you would expect all the sample means to be close to each other and relatively close to the grand mean. If H1 is true, then at least one of the sample means would be significantly different. You measure the variability between means with the sum of squares between groups (SSBG). On the other hand, large variability within the sample weakens the capacity of the sample means to measure their corresponding population means. This within-sample variability is measured by the sum of squares within groups (or error) (SSE). As with regression, the decomposition of the total sum of squares (TSS) is as follows: TSS = sum of squares between groups + sum of squared error, or TSS = SSBG + SSE. (In JMP: TSS, SSBG, and SSE are identified as C.Total, Model SS, and Error SS, respectively.) To test this hypothesis, you do an F test (the same as what is done in regression).

If H0 of the F test is rejected, which implies that one or more of the population means are significantly different, you then proceed to the second part of an ANOVA study and identify which factor level means are significantly different. If you have two populations (that is, two levels of the factor), you would not need ANOVA, and you could perform a two-sample hypothesis test. On the other hand, when you have more than two populations (or groups) you could take the two-sample hypothesis test approach and compare every pair of groups. This approach has problems that are caused by multiple comparisons and is not advisable, as will be explained. If there are k levels in the X variable, the number of possible pairs of levels to compare is k(k − 1)/2. For example, if you had three populations (call them A, B, and C), there would be 3 pairs to compare: A to B, A to C, and B to C. If you use a level of significance α = 0.05, there is a 14.3% (1 − (.95)3) chance that you will detect a difference in one of these pairs, even when there really is no difference in the populations; the probability of Type I error is 14.3%. If there are 4 groups, the likelihood of this error increases to 26.5%. This inflated error rate for the group of comparisons, not the individual comparisons, is not a good situation. However, ANOVA provides you with several multiple comparison tests that maintain the Type I error at α or smaller.

An additional plus of ANOVA is, if we are examining the relationship of two or more factors, ANOVA is good at uncovering any significant interactions or relationships among these factors. We will discuss interactions briefly when we examine two-factor (two-way) ANOVA. For now, let’s look at one-factor (one-way) ANOVA.

Perform a One-Way ANOVA

One-way ANOVA has one dependent variable and one X factor. Let’s return to the AgeProcessWords.jmp data set and first perform a one-factor ANOVA with Words and Age:

1. Select Analyze ▶ Fit Y by X.

2. In the Fit Y by X dialog box, select Words ▶ Y, Response.

3. Select Age ▶ X, Factor.

4. Click OK.

5. In the Fit Y by X output, click the red triangle and select the Means/Anova/Pooled t option.

Figure 5.15 displays the one-way ANOVA results. The plot of the data at the top of Figure 5.15 is displayed by each factor level (in this case, the age group Older and Younger). The horizontal line across the entire plot represents the overall mean. Each factor level has its own mean diamond. The horizontal line in the center of the diamond is the mean for that level. The upper and lower vertices of the diamond represent the upper and lower 95% confidence limit on the mean, respectively. Also, the horizontal width of the diamond is relative to that level’s (group’s) sample size. That is, the wider the diamond, the larger the sample size for that level relative to the other levels.

In this case, because the level sample sizes are the same, the horizontal widths of all the diamonds are the same. As shown in Figure 5.15, the t test and the ANOVA F test (in this situation, since the factor Age has only two levels, a pooled t test is performed) show that there is a significant difference in the average Words memorized by Age level (p = 0.0024). In particular, examining the Means for One-Way ANOVA table in Figure 5.15, you can see that the Younger age group memorizes more words than the Older group (average of 13.16 to 10.06). Lastly, compare the values in the ANOVA table in Figure 5.15 to Figures 5.11 and 5.14. They have exactly the same values in the ANOVA tables as when you did the simple categorical regression using AGE or AGE1.

Figure 5.15: One-Way ANOVA of Age and Words

Evaluate the Model

The overall steps to evaluate an ANOVA model are as follows:

1. Conduct an F test.

a. If you do not reject H0 (the p-value is not small), then stop because there is no difference in means.

b. If you do reject H0, then go to Step 2.

2. Consider unequal variances; look at the Levine test.

a. If you reject H0, then go to Step 3 because the variances are unequal.

b. If you do not reject H0, then go to Step 4.

3. Conduct Welch’s test, which tests differences in means, assuming unequal variances.

a. If you reject H0, because the means are significantly different, then go to Step 4.

b. If you do not reject H1, stop: Results are marginal, meaning you cannot conclude that means are significantly different.

4. Perform multiple comparison test, Tukey’s HSD.

Test Statistical Assumptions

ANOVA has three statistical assumptions to check and address. The first assumption is that the residuals should be independent. In most situations, ANOVA is rather robust in terms of this assumption. Furthermore, many times in practice this assumption is violated. So unless there is strong concern about the dependence of the residuals, this assumption does not have to be checked.

The second statistical assumption is that the variances for each level are equal. Violation of this assumption is of more concern because it could lead to erroneous p-values and hence incorrect statistical conclusions. You can test this assumption by clicking the red triangle and then clicking Unequal Variances. Added to the JMP ANOVA results is the report named Tests that the Variances are Equal.

Four tests are always provided. However, if, as it is in this case, there are only two groups tested, and then an F test for unequal variance is also performed. This gives you five tests, as shown in Figure 5.16. If you fail to reject H0 (that is, you have a large p-value), you have insufficient evidence to say that the variances are not equal. So you proceed as if they are equal, go to Step 4, and perform multiple comparison tests. On the other hand, if you reject H0, the variances can be assumed to be unequal, and the ANOVA output cannot be trusted. In that case, you should go to Step 3. With your current problem in Figure 15.15 and 15.16, because for all the tests for unequal variances the p-values are small, you need to proceed to Step 3. In Step 3, you look at the Welch’s Test, located at the bottom of Figure 5.16. The p-value for the Welch ANOVA is .0025, which is also rather small. So you can assume that there is a significant difference in the population mean of Older and Younger.

Figure 5.16: Tests of Unequal Variances and Welch’s Test for Age and Words

Create a Normal Quantile Plot

The third statistical assumption is that the residuals should be normally distributed. The F test is very robust if the residuals are non-normal. That is, if slight departures from normality are detected, they will have no real effect on the F statistic. A normal quantile plot can confirm whether the residuals are normally distributed or not. To produce a normal quantile plot, complete the following steps:

1. In the Fit Y by X output, click the red triangle and select Save ▶ Save Residuals. A new variable called Words centered by Age will appear in the data table.

2. Select Analyze ▶ Distribution. In the Distribution dialog box, in the Select Columns area, select Words centered by Age ▶ Y, Response.

3. Click OK.

4. The Distribution output will appear. Click the middle red triangle (next to Words centered by Age), and click Normal Quantile Plot.

The distribution output and normal quantile plot will look like Figure 5.17. If all the residuals fall on or near the straight line or within the confidence bounds, the residuals should be considered normally distributed. All the points in Figure 5.17 are within the bounds, so you can assume that the residuals are normally distributed.

Figure 5.17: Normal Quantile Plot of the Residuals

Perform a One-Factor ANOVA

Now perform a one-factor ANOVA of Process and Words:

1. Select Analyze ▶ Fit Y by X. In the Fit Y by X dialog box, select Words ▶ Y, Response.

2. Select Process ▶ X, Factor.

3. Click OK.

4. Click the red triangle, and click Means/Anova.

Figure 5.18 displays the one-way ANOVA results. The p-value for the F test is <.0001, so one or more of the Process means differ from each other. Also, notice that the ANOVA table in Figure 5.18 is the same as in the categorical regression output of Words and Process in Figure 5.14.

Figure 5.18: One-Way ANOVA of Process and Words

Examine the Results

The results of the tests for unequal variances are displayed in Figure 5.19. In general, the Levene test is more widely used and more comprehensive, so you focus only on the Levene test. It appears that you should assume that the variances are unequal (for all the tests), so you should look at the Welch’s Test in Figure 5.19. Because the p-value for the Welch’s Test is small, you can reject the null hypothesis; the pairs of means are different from one another.

Figure 5.19: Tests of Unequal Variances and Welch’s Test for Process and Words

The second part of the ANOVA study focuses on identifying which factor level means differ from each other. With the AgeProcessWords.jmp data set, because you did not satisfy the statistical assumption that the variances for each level are equal, you needed to go to Step 3 to perform the Welch’s test. You rejected the Welch’s test, which suggests, assuming the variances are different, that the means appear to be significantly different; therefore, you can perform the second part of an ANOVA and test for differences. If you had not rejected the Welch’s test, then, it would not be recommended that you perform these second-stage tests. In such a case, it is recommended that you perform some nonparametric tests that JMP does provide.

Test for Differences

To perform the second part of an ANOVA analysis, you will use a data set that does have equal variances, Analgesics.jmp. The Analgesics data table contains 33 observations and three variables: Gender, Drug (3 analgesics drugs: A, B, and C), and Pain (ranges from 2.13 and 16.64—the higher the value, the more painful).

Figures 5.20 and 5.21 display the two one-way ANOVAs. Males appear to have significantly higher Pain, and you do not have to go further since there are only two levels for gender. Although there does seem to be significant differences in Pain by Drug, the p-value for the Levene test is .0587. So you fail to reject the null hypothesis of all equal variances and can comfortably move on to the second part of an ANOVA study. On the other hand, in Figure 5.21 the diamonds under Oneway analysis of pain by Drug appear different. Drug A distribution is tighter, and the distributions of drugs B and C are somewhat similar. Each distribution has some significantly low values, especially drug C, suggesting possible variances.

If you select the red triangle and click Unequal Variances, you can check the Welch’s Test. Since the p-value for the Welch’s Test is small, you can reject the null hypothesis; the means are significantly different from one another.

Figure 5.20: One-Way ANOVA of Gender and Pain

Figure 5.21: One-Way ANOVA of Drug and Pain

JMP provides several multiple comparison tests, which can be found by clicking the red triangle, clicking Compare Means, and then selecting the test.

Student’s t Test

The first test, the Each Pair, Student’s t test, computes individual pairwise comparisons. As discussed earlier, the likelihood of a Type I error increases with the number of pairwise comparisons. So, unless the number of pairwise comparisons is small, this test is not recommended.

Tukey Honest Significant Difference Test

The second means comparison test is the All Pairs, Tukey HSD test. If the main objective is to check for any possible pairwise difference in the mean values, and there are several factor levels, the Tukey HSD (honest significant difference) also called Tukey-Kramer HSD test is the most desired test. Figure 5.22 displays the results of the Tukey-Kramer HSD test. To identify mean differences, examine the Connecting Letters Report. Groups that do not share the same letter are significantly different from one another. The mean pain for Drug A is significantly different from the mean pain of Drug C.

Figure 5.22: The Tukey-Kramer HSD Test

Hsu’s Multiple Comparison with Best

The third means comparison test is With Best, Hsu MCB. The Hsu’s MCB (multiple comparison with best) is used to determine whether each factor level mean can be rejected as the “best” of all the other means, where “best” means either a maximum or minimum value. Figure 5.23 displays the results of Hsu’s MCB test. The p-value report and the maximum and minimum LSD (Least Squares Differences) matrices can be used to identify significant differences. The p-value report identifies whether a factor level mean is significantly different from the maximum and from the minimum of all the other means. The Drug A is significantly different from the maximum, and Drugs B and C are significantly different from the minimum. The maximum LSD matrix compares the means of the groups against the unknown maximum and, correspondingly, compares the means of the groups against the same for the minimum LSD matrix. Follow the directions below each matrix, looking at positive values for the maximum and negative values for the minimum.

Examining the matrices in Figure 5.23, you can see that the Drug groups AC and AB are significantly less than the maximum and significantly greater than the minimum.

Figure 5.23: The Hsu’s MCB Test

Differences with the Hsu’s MCB test are less conservative than those found with the Tukey-Kramer test. Also, Hsu’s MCB test should be used if there is a need to make specific inferences about the maximum or minimum values.

Dunnett’s Test

The last means comparison test provided, the With Control, Dunnett’s, is applicable when you do not wish to make all pairwise comparisons, but rather only to compare one of the levels (the “control”) with each other level. Thus, fewer pairwise comparisons are made. This is beyond the focus of this book, so this test is discussed no further here.

The JMP Fit Model Platform

In addition, you can perform one-way ANOVA by using the JMP Fit Model platform. Most of the output is identical to the Fit Y by X results, but with a few differences.

So now perform a one-way ANOVA on Pain with Drug:

1. Select Analyze ▶ Fit Model.

2. In the Fit Model dialog box, click Pain ▶ Y.

3. Select Drug ▶ Add.

4. Click Run.

5. On the right side of the output, click the red triangle next to Drug, and select LSMeans Plot and then LSMeans Tukey HSD.

The output will look similar to Figure 5.24. The LSMeans plot shows a plot of the factor means. The LSMeans Tukey HSD is similar to the All Pairs, Tukey HSD output from the Fit Y by X option. In Figure 5.24 and Figure 5.22, notice the same Connecting Letters Report. The Fit Model output does not have the LSD matrices but does provide you with a crosstab report. The Fit Model platform does not directly provide an equal variance test. But, you can visually evaluate the Residual by Predicted Plot in the lower left corner of Figure 5.24.

Figure 5.24: One-Way ANOVA Output Using Fit Model

Perform a Two-Way ANOVA

Two-way ANOVA is an extension of the one-way ANOVA in which there is one continuous dependent variable, but, now you have two categorical independent variables.

There are three basic two-way ANOVA designs: without replication, with equal replication, and with unequal replication. The two two-way designs with replication not only enable you to address the influence of each factor’s contribution to explaining the variation in the dependent variable, but also enable you to address the interaction effects due to all the factor level combinations. Only the two-way ANOVA with equal replication is discussed here.

Using the Analgesics.jmp, perform a two-way ANOVA of Pain with Gender and Drug as independent variables:

1. Select Analyze ▶ Fit Model.

2. In the Fit Model dialog box, select Pain ▶ Y.

3. Click Gender, hold down the shift key, and click Drug.

4. Click Add.

5. Again, click Gender, hold down the shift key, and click Drug.

6. This time, click Cross. (Another approach is to highlight Gender and Drug, click Macros, and click Full Factorial.)

7. Click Run.

Examine the Results

Figures 5.25 to 5.28 display the two-way ANOVA results. The p-value for the F test in the ANOVA table is 0.0006 in Figure 5.26, so there are significant differences in the means. To find where the differences are, examine the F tests in the Effect Tests table. You can see that there are significant differences in the Age, Drug (which you found out already when you did the one-way ANOVAs). But observe a moderately significant difference in the interaction means, p-value = 0.0916. To understand these differences, go back to the top of the output and click the red triangles for each factor. Then complete the following steps:

1. For Gender, select LSMeans Plot ▶ LSMeans Student’s t (since you have only two levels).

2. For Drug, select LSMeans Plot ▶ LSMeans Tukey HSD.

3. For Gender*Drug, select LSMeans Plot ▶ LSMeans Tukey HSD.

The results for Gender and Drug displayed in Figures 5.25 and Figure 5.26 are equivalent to what you observed earlier in the chapter when one-way ANOVA was discussed. Figure 5.27 also has the LSMeans Plot and the Connecting Letter Report for the interaction effect Gender*Drug. If there were no significant interaction, the lines in the LSMeans Plot would not cross and would be mostly parallel.

Figure 5.25: Two-way ANOVA Output (top half)

Figure 5.26: Two-way ANOVA Output (bottom half)

Figure 5.27: Two-way ANOVA Output for Gender and Drug

Figure 5.28: Two-Way ANOVA Output for Gender * Drug

There does appear to be some intereaction. The Connecting Letter Report identifies further the significant interactions. Overall, and clearly in the LS Means Plot, it appears that Females have lower Pain than Males. However, for Drug A, Females have higher than expected Pain.

Evaluate the Model for Equal Variances

To check for equal variances in two-way ANOVA, you need to create a new column that is the interaction, Gender*Drug, and then run a one-way ANOVA on that variable. To create a new column when both variables are in character format, complete the following steps:

1. Select Cols ▶ New Columns. The new column dialog box will appear.

2. In the text box for Column name, where Column 4 appears, type Gender*Drug.

3. Click the drop-down menu for Column Properties and click Formula. The Formula dialog box will appear.

4. Click the arrow next to Character, and click Concat. The Concat statement will appear in the formula area with two rectangles. JMP has placed the cursor on the left rectangle (blue rectangle).

5. Click Gender from the Columns; click the right Concat rectangle, and click Drug from the Columns. The dialog box should look like Figure 5.29.

6. Click OK and then Click OK again.

Figure 5.29: Formula Dialog Box

The results of running a one-way ANOVA of Gender*Drug and Pain are shown in Figure 5.30. The F test rejects the null hypothesis. So, as you have seen before, there is significant interaction.

Figure 5.30: One-Way ANOVA with Pain and Interaction Gender * Drug

Examining the Levene test for equal variances, you have a p-value of .0084, rejecting the null hypothesis of all variances being equal. However, the results show a warning that the sample size is relatively small. So, in this situation, you will disregard these results.

Exercises

1. Using the Countif.xls file, develop a regression model to predict Salary by using all the remaining variables. Use α = 0.05. Evaluate this model—perform all the tests. Run a stepwise model and evaluate it.

2. Using the hmeq.jmp file, develop the best model you can to predict loan amount. Evaluate each model and use α = 0.05.

3. Using the Promotion_new.jmp file, develop each model, evaluate it, and use α = 0.05:

a. Develop a model to predict Combine score, using the variable Position.

b. Create an indicator variable for the variable Position, and use the new indicator variable Captain in a regression model to predict Combine.

c. Create a new variable, called Position_2, which assigns 1 to Captain or −1 otherwise (leave blank as missing). Now run a regression model, using this new variable, Position_2, to predict Combine.

d. What is the differences and similarities among parts a, b, and c?

e. Develop a model using Race and Position to predict Combine.

4. Using the Countif.xls file, address the following:

a. Is there significant interaction between Major and Gender and the average salary?

b. Create a new variable, GPA_greater_3, which is equal to High if GPA ≥ 3; otherwise, it is equal to Low. See how this new variable, GPA_greater_3 has different salary means.

c. Continuing with part b, test to see whether there is significant interaction between GPA_greater_3 and Major and in average Salary.

5. Using the Promotion_new.jmp file, test to see whether there is significant interaction with Race and Position and the average Combine score.

6. Using the Titanic_Passengers_new.jmp file, test to see whether there is significant interaction between whether a passenger survived and passenger class and the passengers’ average age.