Fish nor Fowl

SUMMARY

The most realistic scenario in large enterprises is a mixture of legacy on-premises applications, cloud IaaS or PaaS applications, and SaaS applications. All these applications are likely to need some level of integration with the rest.

Architectures that mix on-premises and cloud applications are likely to be present in enterprise IT for years to come. Many enterprises have successful applications deployed on their premises that are effective and efficient. As long as the investment in these applications continues to return sound economic value, the applications are not likely to be replaced by cloud deployments. In addition, security and regulatory compliance requirements make some applications difficult to move from the premises. Security threatens to be an issue for some time to come. Aside from the hesitance to entrust third parties with critical data and processes, cloud technology is relatively new. Experience has shown that immature technologies are more susceptible to security breaches than more mature technologies. This drives some skepticism of the safety of cloud deployments for critical components.

MIXED CLOUD ARCHITECTURES

The term hybrid cloud is often used with almost the same meaning that mixed architecture has in this book. The National Institute of Standards and Technology (NIST) defines a hybrid cloud as a combination of two or more clouds, which may be private, community, or public.1 A common example is a private cloud designed to expand into a public cloud when extra capacity is needed. The definition has drifted and sometimes includes on-premises applications. In this book, a mixed architecture or environment refers to an architecture that includes traditional on-premises applications along with clouds. The clouds may be private, community, or public, and applications may be implemented on IaaS or PaaS clouds or may be SaaS. In most cases, cloud and on-premises implementations are integrated in a mixed architecture, although mixed architectures without integration are possible.

Some applications are not likely ever to be moved to the cloud. Security and regulatory issues aside, some applications will be deemed so critical to the corporate mission that they cannot be entrusted to a third-party cloud. Some of these issues are solved by private clouds, which are built exclusively for a single enterprise, but private ownership of a cloud does not convey all the business and technical benefits of a third-party cloud deployment.

Given the business and technical advantages of cloud deployment and the equally potent arguments for avoiding clouds, mixed environments are inevitable.

If the applications in a mixed architecture operated independently, without communicating with each other, the mixed environment architect’s life would be easy. Applications deployed on the cloud would not contend for resources with applications deployed in the local datacenter, which would make planning the on-premises datacenter a bit easier. If cloud applications communicated only with their end users, concerns about network latency and bandwidth between cloud and premises, and from cloud to cloud, would not exist. Connectivity issues would be greatly simplified. The architect’s only task would be to decide which applications to replace with SaaS, which to rebuild or port to IaaS or PaaS, and which to leave in the on-premises datacenter.

Mixed Integration

However, as you have seen in earlier chapters, enterprises without integration are unlikely because integrated applications deliver increased value to the enterprise. Integration is always important, but it may be made more important by the cloud. SaaS applications provide an example. As more enterprises move to a limited number of popular SaaS offerings for some types of application, the software in use tends to become uniform. Consequently, the service delivered to enterprise customers also becomes more of a commodity that yields little competitive advantage.

Consider a popular SaaS customer relationship management (CRM) application. The SaaS application is popular because it meets many business needs. The installation effort is minor, the product is well designed, and it is easy for the sales force to learn. Each organization using the application is a tenant of a single multitenant application. In other words, the features for each organization are fundamentally identical, perhaps with a few customizations. The responsiveness of the sales division and the range and content of customer services become essentially the same. This places the organizations using the multitenant application on a level playing field.

In business, level playing fields are desirable only when the field is tilted against you. As soon as the field is level, good business requires you to strive to tilt the field in your favor. Integrating a SaaS application into an organization’s superior IT environment may well be the competitive advantage the organization needs.

When the enterprise integrates with the SaaS CRM system, the business can gain advantage from the design of the entire IT system, not just the commoditized CRM system. For example, an enterprise with a comprehensive supply-chain management system could integrate with the order inquiry module of the CRM SaaS application. When the customer calls about an order, the integration would provide the CRM analyst with exact information on the status of the order in the production line. Armed with this information, the analyst is prepared to present an accurate, up-to-the-minute status to the customer. If competitors do not have this information from their own environment integrated into their CRM application, they cannot offer their customers the same level of service as a more integrated enterprise. They are at a significant disadvantage, and the level playing field has tilted toward the integrated enterprise.
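The order inquiry scenario above can be sketched in a few lines. This is only an illustration under stated assumptions: `SupplyChainSystem`, `ProductionStatus`, and `order_inquiry_view` are hypothetical names, and the in-memory lookup stands in for whatever interface a real on-premises supply-chain system would expose.

```python
from dataclasses import dataclass


@dataclass
class ProductionStatus:
    order_id: str
    stage: str             # hypothetical stage name, e.g. "assembly"
    percent_complete: int


class SupplyChainSystem:
    """Stand-in for the on-premises supply-chain system (hypothetical)."""

    def __init__(self, statuses):
        self._statuses = {s.order_id: s for s in statuses}

    def production_status(self, order_id):
        # Returns None when the order is unknown to the production line.
        return self._statuses.get(order_id)


def order_inquiry_view(crm_record, supply_chain):
    """Combine the CRM's own order record with live production data."""
    status = supply_chain.production_status(crm_record["order_id"])
    view = dict(crm_record)  # copy so the CRM record itself is untouched
    if status is None:
        view["production"] = "no production data available"
    else:
        view["production"] = f"{status.stage}, {status.percent_complete}% complete"
    return view
```

A competitor without this integration would see only the fields in `crm_record`; the integrated enterprise also sees the `production` entry.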

Skipping integration between on-premises and cloud-based applications is unlikely to be a realistic possibility. Bridging the premises and the cloud is likely to offer some of the most critical links in the entire integrated system because applications that require on-premises installation are often mission-critical applications that the entire enterprise depends on in some way. That dependency is expressed as a need to know the state of the on-premises application by many other applications in the system. Mixed integration supplies that need.

For example, a manufacturer may have a highly sophisticated process control system that is tailored exactly to an organization’s facilities and processes. It is the main driver of an efficient and profitable business. Deploying this control application on a cloud would simply not work. The controls work in real time. A network breakdown between a cloud-deployed control application and the physical process could be catastrophic, destroying facilities and threatening lives. In addition, the application represents trade secrets that management is unlikely to allow to leave the premises. But at the same time, data from this critical application also controls much of the rest of the business and must be integrated with the other applications that run the enterprise, both on and off the cloud.

For example, the supply chain system uses flow data from the control system to determine when to order raw materials and predict product availability for filling orders. Management keeps a careful eye on data from the process to plan for future expansion or contraction of the business. Customer relationship management relies on the same data to predict to customers when their orders are likely to be filled. Some of this functionality may effectively be deployed on clouds; other functionality will remain on the premises but eventually migrate when it must be replaced or substantially rebuilt. Integration between the process control system and other applications is essential if the organization is to work as a coordinated whole. If a cloud-based application cannot be integrated with the on-premises core system, the application must be kept on-premises where it can be integrated, even though a cloud implementation might be cheaper and better.

The Internet of Things (IoT) also adds to the importance of mixed environment integration. Heating, ventilation, and air conditioning (HVAC) systems have become part of the Internet of Things for many enterprises. Controllers embedded in HVAC components appear as nodes on the corporate network and may be exposed to the Internet. HVAC engineers can monitor and control the system over the corporate network or the Internet. This presents creative opportunities. A datacenter HVAC system could be integrated with the load management system in the datacenter. Since increasing or decreasing loads raise and lower cooling demands, the HVAC system could respond as loads vary, before the temperature actually changes, resulting in more precise temperature control and perhaps decreased cooling costs. Similarly, an integrated physical security system might transfer critical resources to a remote cloud site when designated barriers are physically breached, preventing physical tampering with the resources. Since the embedded controllers are located with the equipment they control, they will always be on-premises. The applications that use data from the controllers and manage the equipment may be cheaper and more efficient in a cloud implementation, which requires cloud-to-premises integration.

As long as the environment remains mixed, mixed integration will be crucial to enterprise success.

Challenges of Mixed Integration

Integration in a mixed cloud and on-premises environment has all the challenges of integrating in a traditional on-premises environment, but the addition of cloud implementations erects some barriers of its own.

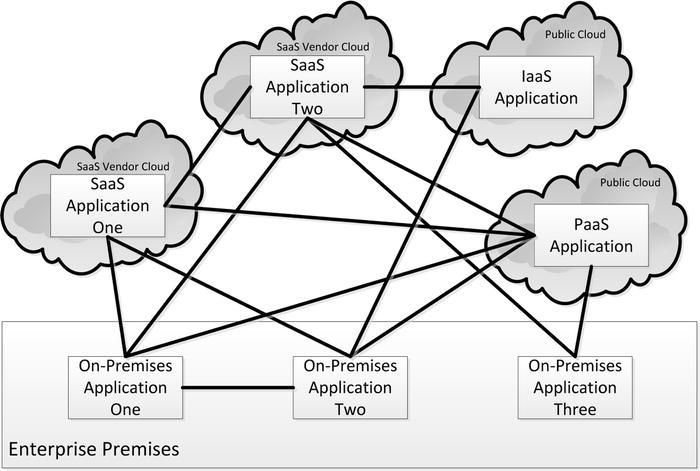

Figure 14-1 represents a hypothetical mixed architecture site. Two SaaS applications reside on their respective vendor’s clouds. They are each integrated with several on-premises applications and two applications that are hosted on public clouds. In addition, the two SaaS applications are integrated. One public cloud application is deployed on IaaS. This application could have been developed by the site and deployed on the cloud, or it could be a traditional off-the-shelf application that the site has deployed on IaaS. There is also an application deployed on PaaS using a platform supplied by the public cloud vendor. The deployment of each of these applications presents unique challenges for integration.

Figure 14-1. The point-to-point integration of SaaS, IaaS, PaaS, and on-premises applications can be complex and frustrating

Figure 14-1 shows the integration connections as solid lines. A point-to-point integration would implement each solid line as a real connection over a physical or virtual network. If the entire system were on-premises, all these connections would flow through the physical network. In a mixed system, connections between on-premises applications (On-Premises Application One and On-Premises Application Two in Figure 14-1) would still travel on the physical network. Applications in the cloud would use combinations of virtual and physical networks.

The current challenge in integration in mixed environments is to manage the network connections within clouds, between clouds, and between the premises and off-premises clouds. A virtual network is a network that is not based on physical connections between physical devices. Virtual networks can be divided between networks that are superimposed on physical networks and networks that are implemented via a hypervisor and may exist on a single physical device. Superimposed virtual networks include virtual private networks (VPNs), which identify nodes on a physical network as elements in a virtual network accessible only to members of the VPN. Network Address Translation (NAT) is another type of superimposed network established by managing IP addresses. Hypervisor-based virtual networks often include virtual network devices such as virtual switches.

Virtual networks are among the least mature technologies in cloud engineering, but the integration of cloud applications depends upon them. A single virtual machine running on a cloud needs only a connection to the Internet to perform useful work for a consumer. When an application consists of several interconnected virtual machines, some sort of network is required to connect the machines and manage the communication. This network must be part of the virtual environment. Sometimes, the virtual machines may all be running on the same physical machine. In that case, the virtual network will be supported by the memory and data bus of that machine. When the virtual machines are running on different physical machines, the virtual network must be implemented by the hypervisors and carried over the physical network of the cloud provider.

Virtual networks are often a key to securing an application. When virtual machines from several different consumers are running on a single virtual cloud, the common scenario on public clouds, each consumer has their own virtual networks. These networks are shielded from other consumers. If that shielding were to break down and consumers were to have access to each other’s networks, it would be a severe security breach. The requirement for separation may extend to virtual infrastructure within the same enterprise. A single organization may require groups of virtual machines to be isolated from other groups, say accounting and engineering groups. This can be accomplished with separate virtual networks.

Mixed environment integration also depends on virtual networks to support secure integration between virtual machines running on clouds by controlling security appliances such as firewalls and encryption of information in transit. The configuration of virtual networks can also be a critical factor in the performance and capacity of systems in a mixed environment by controlling factors such as quality of service (QoS) and bandwidth.

Software-defined networks (SDNs) are a related technology that increases the speed and efficiency with which complicated networks can be configured. Traffic in networks is controlled by switches that have control and data planes. The control plane determines how each packet is directed and expedited through the network. The data plane carries out the control plane’s instructions. Software-defined networking supplies an abstract management interface that administrators and developers access to control the network. This management interface acts as the control plane for the network and enables rapid complex reconfiguration of both virtual and physical networks. The combination of hypervisor-defined virtual networks and SDN promises to be powerful. The hypervisor and SDN switches can work together to bridge the gap between physical and virtual networks.

Software-defined networking is currently a rapidly developing area that promises to make implementation of all cloud integration, including mixed environments, easier.
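The split between control plane and data plane described above can be illustrated with a toy model. This is a minimal sketch, not a real SDN API: the `Switch` and `Controller` classes, the port names, and the addresses are all hypothetical.

```python
class Switch:
    """Data plane: forwards packets using only its installed flow table."""

    def __init__(self, name):
        self.name = name
        self.flow_table = {}  # destination address -> output port

    def forward(self, destination):
        # The switch makes no routing decisions of its own; destinations
        # with no installed rule are dropped.
        return self.flow_table.get(destination, "drop")


class Controller:
    """Control plane: one management interface programs every switch."""

    def __init__(self, switches):
        self.switches = {s.name: s for s in switches}

    def set_route(self, switch_name, destination, port):
        # Reconfiguration is a software call, not a visit to the device.
        self.switches[switch_name].flow_table[destination] = port
```

In this model, reconfiguring the network means calling `set_route` on the controller; the switches themselves only look up and apply the rules they were given.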

Bus and Hub-and-Spoke Architectures

Point-to-point integration is relatively cheap and quick to implement in simple systems, but eventually it can generate considerable redundant code and confusion. A system of the complexity of Figure 14-1 can become a nightmare to troubleshoot and maintain.

Figure 14-2 is the same system as Figure 14-1, redrawn with an integration bus. A bus architecture simplifies the integration diagram, but it requires more up-front development and may not begin to deliver value until later in the evolution of the enterprise when the number of integrated applications has increased.

Figure 14-2. The same system as Figure 14-1. A bus architecture simplifies the integration diagram

Hub-and-spoke integration designs are similar to bus designs. Figure 14-3 shows a hub-and-spoke design for the same system as Figures 14-1 and 14-2. Both designs reduce the number of connections between applications and simplify adding new applications. The primary difference between the two is that a hub-and-spoke architecture tends to place more intelligence in the hub. In a hub-and-spoke architecture, the hub usually decides which messages are sent down the spokes. In a bus architecture, each client examines the messages on the bus and chooses those of interest. There are advantages and disadvantages to either approach, and many implementations combine the two in different ways. Earlier chapters discussed these two integration architectures in more detail. Both have many strong points, and commercial versions of both are available.

Figure 14-3. Hub-and-spoke integration is similar to bus integration
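The difference in where the routing intelligence sits can be sketched as follows. The class names and the topic-based routing are illustrative assumptions, not a description of any commercial bus or hub product.

```python
class Hub:
    """Hub-and-spoke: the hub holds the routing rules and decides delivery."""

    def __init__(self):
        self._routes = {}  # topic -> list of spoke callbacks

    def connect(self, topic, spoke):
        self._routes.setdefault(topic, []).append(spoke)

    def send(self, topic, payload):
        # Only spokes registered for this topic ever see the message.
        for spoke in self._routes.get(topic, []):
            spoke(payload)


class Bus:
    """Bus: every client sees every message and keeps those of interest."""

    def __init__(self):
        self._clients = []

    def attach(self, client):
        self._clients.append(client)

    def publish(self, topic, payload):
        for client in self._clients:
            client.on_message(topic, payload)


class BusClient:
    def __init__(self, interests):
        self.interests = set(interests)
        self.received = []

    def on_message(self, topic, payload):
        # The filtering decision is made here, in the client.
        if topic in self.interests:
            self.received.append(payload)
```

With the hub, changing who receives a message means changing the hub's routes; with the bus, it means changing the client's `interests`.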

An integration architecture that avoids the unwieldiness of point-to-point integration is especially important in a complex mixed environment, because in a mixed environment, integration is likely to use a variety of interfaces that may be difficult to manage, especially when they involve proprietary third-party code.

The difficulty of the integration challenge increases with every application that is added to the system. Whether an added application is hosted on-premises or on a cloud, connections have to be added from the new application to each integrated application. When the connections are through proprietary interfaces (as is often the case for SaaS applications) and over virtual networks that may be managed differently from cloud to cloud, a clear and easily understood centralized topology is important. In addition, point-to-point integration often means that adapter code has to be written for both ends of every connection. Either a bus or a hub cuts down the amount of redundant adapter code. Adding new applications becomes much easier, and the resulting system is easier to understand and maintain. The trade-off is a substantial investment at the beginning.
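The growth in connections can be made concrete with a little arithmetic. The sketch below assumes the worst case, in which every application is integrated with every other application; real systems usually need only a subset of these links, so the point-to-point numbers are an upper bound.

```python
def point_to_point_links(n):
    """Links needed when each of n applications connects to every other."""
    return n * (n - 1) // 2


def point_to_point_adapters(n):
    """Adapter code is written for both ends of every link."""
    return 2 * point_to_point_links(n)


def hub_links(n):
    """With a bus or hub, each application connects once, to the center."""
    return n


for n in (3, 6, 12):
    print(n, point_to_point_links(n), point_to_point_adapters(n), hub_links(n))
```

For six applications, the worst case is 15 links and 30 adapters point-to-point, against six connections to a bus or hub. Adding a seventh application adds six links point-to-point but only one with a central architecture.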

This presents a dilemma. Stakeholders like immediate return on their investment, which argues for starting with a point-to-point system and migrating to a bus or hub architecture later. However, implementing a central architecture from the beginning when there are only a few applications to integrate is by far the most efficient way to implement a centralized system. Site-built applications can be designed to interoperate off the shelf with the bus or hub, and SaaS applications can be chosen for their ease in connecting to the bus. Building a bus or hub later usually entails designing around existing heterogeneous applications, which is possible but usually is more difficult and likely to involve undesirable compromises in the design.

Some thought has to go into deciding whether an integration bus or hub should be deployed on-premises or on a cloud. The bus or hub is a critical component. Its failure potentially shuts down all communications between applications in the system. This argues for placing it on-premises with failover either on a cloud or in a remote location. An on-premises bus or hub is under the direct control of enterprise staff, and it may be more convenient to configure it on-premises rather than remotely. However, the topology of the system may offer greater bandwidth and network reliability if the bus or hub is deployed on the cloud. See Figure 14-4.

Figure 14-4. Two different integration distribution schemes

Small, Medium, and Large Enterprises

The challenges of integration in a mixed environment hit the smallest organization as well as the largest. In many ways, small organizations benefit more from cloud implementations than larger organizations because they have a “green field” for development. Larger organizations usually have a legacy of applications. In many cases, larger organizations have built their business around their applications. Moving those applications to a cloud may be impossible, even though keeping the applications on the premises is no longer practical. At that point, the enterprise must buy or build a cloud replacement that will integrate both to other applications on the cloud and to applications left on-premises. Smaller organizations can avoid these hazards by considering cloud implementation in their plans immediately.

Application training in larger organizations can be an expensive issue because application changes may require retraining of hundreds of employees, and the training period may stretch into months of reduced productivity. Therefore, large organizations often place a premium on user interfaces that minimize change. This can be a problem if the switch is from a fat client (desktop-based interface) to a thin client (browser-based interface) because thin clients use a different interaction pattern than most fat clients. Although the trend has been to provide browser-based interfaces for all applications, there are still many on-premises applications with fat clients that present problems when they are moved to the cloud.

The biggest challenge to large enterprises in a mixed environment is dealing with legacy applications that do not have modern integration interfaces like web services. An example is a legacy application that offers exit routines.2 Integration of these applications is often hard to understand, especially when the original authors are not available. Enhancing or extending the integration to applications deployed on the cloud often requires sophisticated code and extensive quality assurance testing. Although exit routines may be a worst-case scenario, many site-built integrations are similarly challenging.

Although large organizations are likely to have the resources to move on-premises applications to cloud deployment and the scale of their IT investment may provide ample financial incentive for the move, they are likely to encounter more technical difficulties than smaller organizations.

Smaller organizations usually don’t have legacy problems. They can base their choices on the cost and functionality of applications. Therefore, cloud deployments often are a smaller organization’s choice. The low investment in hardware is usually attractive, and the functionality is often far beyond the minimal level of automation the organization is used to. Out-of-the-box integration facilities will often satisfy their needs.

In addition, smaller enterprises may have fewer problems with regulations and security. Private enterprises typically do not have the compliance issues of publicly held corporations. Although security is usually as important for small organizations as for large ones, smaller-scale organizations often do not need the sophisticated security management that enterprises with thousands of employees require. In addition, applications are now built with interfaces for standardized security management, which are much easier to deal with than legacy applications from the pre-Internet days when IT security meant a cipher lock on the computer room.

Choosing Which Applications to Deploy on the Cloud

Everyone knows that some applications are not likely ever to be deployed on a cloud. Security and regulatory compliance were two considerations discussed frequently in earlier chapters.

Some applications are especially well-suited to cloud deployment. Perhaps the most important technical characteristic of clouds is their flexibility. An organization can deploy one or a thousand virtual machines on a sufficiently large cloud with almost equal ease. Configuring machines for a special purpose is also easy. Instead of thumbing through catalogs or talking to sales representatives to find compatible equipment and then having to bolt and cable up the hardware, virtual machines can be configured and launched in minutes. The cloud provider takes responsibility for assembling the right physical equipment, which will probably be a small partition of much larger equipment in the provider’s datacenter.

Which applications require that kind of flexibility? Any application whose load varies widely is a good candidate. An application that needs fifty servers at midday and only two at midnight can use this flexibility well. Similarly, an application that needs fifty servers in the last week of the month and two for the other three weeks is also a candidate. It is not surprising that some of the most successful SaaS applications, such as customer relationship management or service desk, fall into this category. Retail web sites also fit the category. In fact, much of the cloud concept was developed to respond to load variations at large retail sites.3
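A rough calculation shows why a widely varying load favors the cloud. The daily profile below is an assumption made up for illustration: eight peak hours at fifty servers and sixteen off-peak hours at two servers. The text gives only the two extremes, not the shape of the curve between them.

```python
def server_hours(profile):
    """Total server-hours for a list of (hours, servers) segments."""
    return sum(hours * servers for hours, servers in profile)


# Hypothetical daily load: (hours, servers needed during those hours).
daily_profile = [(8, 50), (16, 2)]

peak_servers = max(servers for _, servers in daily_profile)
hours_per_day = sum(hours for hours, _ in daily_profile)

# On-premises capacity must be sized for the peak and runs all day.
fixed_server_hours = peak_servers * hours_per_day      # 50 * 24 = 1200

# Elastic capacity follows the load hour by hour.
elastic_server_hours = server_hours(daily_profile)     # 400 + 32 = 432

print(fixed_server_hours, elastic_server_hours)
```

Under these assumed numbers, the elastic deployment consumes 432 server-hours per day against 1,200 for hardware sized to the peak. The actual saving depends on the real load curve and on the provider's pricing, neither of which is modeled here.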

Changing Machine Configurations

Projects that require many different machine configurations are also good candidates. Consider the machine configurations depicted as radar charts in Figures 14-5 and 14-6. Each of the configurations is designed to support applications with specific needs. For example, the storage configuration may be appropriate for a database server. Sometimes these basic configurations are combined. A database server may also need a high-performance CPU for rapid processing of retrieved data. A cloud provider typically offers several VM sizes. An extra-small VM might have a single CPU and less than a gigabyte of memory; an extra-large VM might have four CPUs and 7 GB of memory. When using physical hardware on-premises, adding a bank of RAID disks or an additional CPU will require time-consuming and expensive physical hardware reconfiguration such as connecting cables and reconfiguring racks. In a cloud deployment, these configurations can be changed rapidly in software.

Figure 14-5. A standard, multipurpose machine has moderate capacity and roughly equivalent CPU, network, and storage capabilities

Figure 14-6. Specialized machines have extended capacities adapting them to special purposes

Varied machine configurations are especially useful during development. Developers often need to change hardware while coding to achieve maximum efficiency. Coding and compiling ordinarily do not require a high-end server, but unit testing of the compiled code may require much more capacity. The capacity and type of the required resources vary with the unit testing to be performed. Usually, developers are assigned to a multipurpose device with adequate resources to run the code they write. If they are developing on the cloud, their options increase, and they can tailor their configuration to the job at hand. Costs are decreased because they are not using more capacity than their work requires. The work speeds up and quality improves with proper unit testing. Sometimes, the availability of cloud hardware makes unit tests possible that would otherwise have to be deferred to the system test phase. A truism of software development is that the earlier an issue can be discovered, the better and cheaper the fix.

Cloud configuration flexibility is also beneficial during system test. Quality assurance engineers used to tear their hair trying to put together test environments that resembled the user environment. Many defects have been put into production that could have been identified and fixed during system testing if the quality assurance team had an adequate test bed. Cloud test beds reduce cost because they can be stood up and taken down quickly, and the organization is charged only for the time the test bed was deployed.

The cloud is ideal for big problems that require intensive computation and extraordinary storage capacity. A retailer may analyze years of purchasing data from millions of customers to identify buying patterns. The retailer uses the analysis to tailor sales and marketing plans. A structural design firm may use high-capacity computing to plot stresses in a machine part on a highly granular scale to design a critical structural part that is both strong and lightweight. In both cases, using cloud computing capacity lets organizations achieve results that used to be possible only using enormously expensive supercomputers.

Cloud implementations offer the opportunity for even smaller organizations to utilize large-scale computing. In the past, only the largest businesses or governmental organizations were able to construct and maintain supercomputers. The hardware costs, facilities, energy supply, and cooling capacity were prohibitively expensive for any but the deepest pockets.

Cloud implementations have brought down the cost of high-capacity computing in two ways. The large public cloud providers have built datacenters and networks of datacenters that are larger than any that preceded them. By relying on relatively low-cost modules that are replicated many times, they have driven down hardware costs. In addition, they have been innovative in situating and designing datacenters to maximize cooling and energy efficiency.

The second way cloud computing has reduced the cost of high-capacity computing is by permitting users to purchase only the capacity they need for the time that they use it. When an organization has a problem that can best be solved by using a very high-capacity system for a relatively short time, they can deploy it on a cloud, run the system for as long as it takes to solve the problem, and then stand down the system. Unlike an on-premises system, the cost of the solution is only for the computing capacity actually used, not the total cost of acquiring and setting up the hardware.

A project that uses a large quantity of cloud resources for a short time may be the lowest hanging of the cloud fruits, if an organization is in a position to use it. Such a project hangs low because it does not involve a potentially risky replacement of an existing on-premises service and will not be expected to be continued or maintained in the future. The resources used depend on the project. A project to evaluate an intricate model, such as those used for scientific predictions, probably will require extensive CPU and memory. Large IoT projects could require high-speed, high-volume input and output. Large-scale data analysis may require extensive storage. The decision to undertake the project usually assumes a cloud implementation because the project can be undertaken only on the cloud. The deciding factors are usually the potential return on the investment and the level of expertise in the organization for undertaking the project. If the expertise is adequate and the return on the investment justifies the effort, the organization will likely see its way to undertake the project.

A short-term high-capacity project is not likely to require extensive integration. These projects are usually batch jobs that start with a large and complex data set and progress to the end without additional interactive input. A project such as this often requires expertise in big data techniques such as map-reduce programming and statistical analysis. If the right expertise is available, a project like this could be a starting point for developing the technical expertise in working with cloud deployments that may lead to deploying more applications on an IaaS or PaaS cloud in a mixed environment.
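For readers unfamiliar with map-reduce, the pattern can be shown on a single machine in a few lines. This sketch only illustrates the three phases (map, shuffle, reduce); a real big data job would distribute them across many cloud nodes using a framework. The record layout of customer, category, and amount in cents is a made-up example.

```python
from collections import defaultdict
from functools import reduce


def map_phase(record):
    """Map: turn one purchase record into a (key, value) pair."""
    _customer, category, amount_cents = record
    return (category, amount_cents)


def shuffle(pairs):
    """Shuffle: group all values that share a key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(values):
    """Reduce: collapse the values for one key into a single result."""
    return reduce(lambda a, b: a + b, values)


def total_by_category(records):
    groups = shuffle(map(map_phase, records))
    return {key: reduce_phase(values) for key, values in groups.items()}
```

Because each record is mapped independently and each key is reduced independently, both phases can run in parallel on as many machines as the data requires, which is what makes the pattern a natural fit for short-lived, high-capacity cloud deployments.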

SaaS can be a great opportunity for organizations of all sizes. Ease of installation and maintenance can free up valuable personnel and improve the user experience. Installing and operating a SaaS application requires the absolute minimum of technical skill. For this reason, it is an easy first step to cloud-based IT. In many cases, SaaS licensing and decreased administration will reduce the total cost of ownership of the application. SaaS applications are usually easily accessible outside the enterprise perimeter. Successful SaaS products are well-designed with appropriate features and are easy to use.

Regardless of the reality, some decision makers may oppose moving applications out of the enterprise perimeter and relinquishing local IT control of applications. In some cases, government regulations, auditing rules, or other constraints may require hosting the applications on the premises. Other times, there may be a deep-seated prejudice against moving off-premises.

There are also reasons not to embrace SaaS for all applications. Although vendors make provision for customizing their SaaS applications, some organizations may require more customization than the SaaS application will support. This often occurs when the application has to fit into a highly technical environment, such as an industrial facility, like a pulp mill or power plant, with special requirements for record keeping or instrumentation.

SaaS also usually involves links that traverse the Internet. These links can present special problems. When the application must be available in locations where Internet access is difficult (for example, at remote power transmission stations in the Alaskan arctic), the bandwidth available may not support SaaS applications. In other cases, the volume of data transferred may be no problem over InfiniBand or Gigabit Ethernet in the datacenter, but the volume is just too much to transfer over the Internet without an unacceptable delay.
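A back-of-the-envelope calculation shows why a volume of data that is no problem in the datacenter can cause an unacceptable delay over the Internet. The following Python sketch is illustrative only: the function name, the payload size, the link speeds, and the 70 percent efficiency factor are all assumptions chosen for the example, not measurements.

```python
def transfer_seconds(size_gigabytes: float, link_megabits_per_second: float,
                     efficiency: float = 0.7) -> float:
    """Estimate the seconds needed to move a payload over a link.

    efficiency is an assumed fraction of the nominal bandwidth that the
    application actually gets (protocol overhead, contention, and so on).
    """
    if link_megabits_per_second <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link speed must be positive and efficiency in (0, 1]")
    size_megabits = size_gigabytes * 8 * 1000  # decimal units: 1 GB = 8,000 megabits
    return size_megabits / (link_megabits_per_second * efficiency)


if __name__ == "__main__":
    payload_gb = 50  # hypothetical nightly data set
    for label, mbps in [("Gigabit Ethernet in the datacenter", 1000),
                        ("20 Mbps Internet uplink", 20)]:
        minutes = transfer_seconds(payload_gb, mbps) / 60
        print(f"{label}: about {minutes:.0f} minutes")
```

Under these assumed numbers, the same 50 GB that moves in roughly ten minutes inside the datacenter takes close to eight hours over the slower Internet link. The estimate ignores latency entirely, which only makes the Internet case worse.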

An unfortunate aspect of Internet communication is unpredictable latency. Disasters such as cables severed in a construction accident and transoceanic cables attacked by sharks4 can affect bandwidth and latency. Everyday variations in latency, such as those that occur when a wireless user moves from provider to provider or when traffic increases, can present performance issues and sometimes errors from undetected race conditions.5 In Figure 14-7, the Internet intervenes three times in the SaaS implementation, so the application is exposed to this unpredictability at three separate points.

Figure 14-7. SaaS adds Internet links to the network supporting the application that may behave differently from traffic within a datacenter
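One common defense against the race conditions described above is to stop depending on arrival order. The following Python sketch assumes, hypothetically, that the sender stamps each message with a sequence number starting at zero; the class and method names are invented for illustration and do not come from any particular messaging product.

```python
from typing import Dict, List


class InOrderReceiver:
    """Releases messages in sequence order regardless of arrival order."""

    def __init__(self) -> None:
        self._next_seq = 0                   # sequence number expected next
        self._pending: Dict[int, str] = {}   # early arrivals, held back
        self.delivered: List[str] = []       # messages released to the application

    def receive(self, seq: int, payload: str) -> None:
        if seq < self._next_seq or seq in self._pending:
            return  # duplicate of a message already seen; ignore it
        self._pending[seq] = payload
        # Release every message that is now contiguous with what was delivered.
        while self._next_seq in self._pending:
            self.delivered.append(self._pending.pop(self._next_seq))
            self._next_seq += 1


if __name__ == "__main__":
    receiver = InOrderReceiver()
    # Messages arrive out of order, as they might over the Internet.
    receiver.receive(1, "update ticket")
    receiver.receive(0, "create ticket")
    receiver.receive(2, "close ticket")
    print(receiver.delivered)
```

The receiver holds the early "update ticket" message until "create ticket" arrives, so the application processes the three messages in the order the sender intended. A production version would also need a policy for messages that never arrive, which this sketch omits.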

The ease with which SaaS applications are integrated is largely dependent on the facilities provided by the SaaS vendor and the facilities that are already present in the environment. Most vendors provide some kind of a web service programming interface, usually SOAP- or REST-based. Figure 14-8 illustrates a typical SaaS integration pattern. The SaaS application is deployed on the SaaS vendor’s cloud. Users of the SaaS application interact with the SaaS application via HTTP and a browser. The SaaS application also interacts with an integrated application. The integrated application is deployed on another cloud or on the enterprise premises. Both the integrated application and the SaaS application expose a web service–based programming interface. Frequently, both SOAP and REST messages are supported by the SaaS application.

Figure 14-8. A typical SaaS application integration scenario using web services

Figure 14-8 is somewhat deceptive in its simplicity. The scheme is well-engineered, representing current best practices. However, there are circumstances where such a scheme can be difficult or impossible to work with, especially in a mixed environment. For an integration between the SaaS application and the enterprise application, the SaaS application needs to have a web service that will transmit the data or event needed by the organization’s application. For example, for a SaaS internal service desk, the enterprise could want the SaaS application to request the language preference of the user from the enterprise human resources (HR) system instead of using a preference stored in the SaaS application. This is a reasonable requirement if the HR system is the system of record for language preferences, as it often is. The SaaS application must send a message to the HR system indicating that a new interaction has started and needs a language preference. Then the SaaS application web service must accept a callback message containing the language preference from HR. This integration will work only if the SaaS application has the capacity to send the appropriate message to the HR web service and the capacity to receive the callback from HR and use the language preference delivered in the callback. The message sending and receiving capabilities are present in Figure 14-8, but that does not mean the application behind the interfaces can produce the required message when needed or act on the callback from the integrated application.
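The two capabilities the SaaS application needs in the language preference example can be sketched in a few lines of Python. This is an in-process simulation, not a real web service: the class names, method names, the ticket identifier, and the default of "en" for unknown users are all hypothetical, and a direct function call stands in for the SOAP or REST messages.

```python
from typing import Callable, Dict


class HRSystem:
    """Stand-in for the enterprise HR web service (the system of record)."""

    def __init__(self, preferences: Dict[str, str]) -> None:
        self._preferences = preferences

    def request_language(self, user_id: str, interaction_id: str,
                         callback: Callable[[str, str], None]) -> None:
        # A real service would reply asynchronously over HTTP; here the
        # callback is invoked directly to keep the sketch runnable.
        # The "en" default for unknown users is an assumption.
        callback(interaction_id, self._preferences.get(user_id, "en"))


class SaaSServiceDesk:
    """Stand-in for the SaaS application behind its web service interface."""

    def __init__(self, hr: HRSystem) -> None:
        self._hr = hr
        self.languages: Dict[str, str] = {}  # interaction_id -> language

    def start_interaction(self, interaction_id: str, user_id: str) -> None:
        # Capability 1: send the outbound message when an interaction starts.
        self._hr.request_language(user_id, interaction_id, self.on_language)

    def on_language(self, interaction_id: str, language: str) -> None:
        # Capability 2: accept the callback and act on what it delivers.
        self.languages[interaction_id] = language


if __name__ == "__main__":
    desk = SaaSServiceDesk(HRSystem({"alice": "fr"}))
    desk.start_interaction("INC-1", "alice")
    print(desk.languages["INC-1"])
```

If the vendor's application lacks either `start_interaction`'s outbound hook or a handler like `on_language` that actually uses the delivered value, the integration fails even though both web service interfaces exist.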

Organizations have widely varying integration requirements. If a SaaS vendor opens the application’s integration services too wide, the vendor risks being unable to change its own code because it has revealed too much to its customers. On the other hand, if the SaaS application’s integration interface is too narrow, the interface shuts out customers whose integration requirements are not met.

Enterprises often assume that their requirements are simple and common to all businesses; this assumption is often invalid and results in disappointment. Users must be aware that the presence of integration facilities does not guarantee that those facilities will meet their needs. Often this problem is worse when the organization attempts to integrate legacy applications with SaaS applications that replace older on-premises applications. Designers may assume that a longstanding integration between the older applications will be easily reproduced with the modern web services provided by the SaaS application. It is possible that it will. But it is also possible that it won’t.

IaaS and PaaS tempt organizations to take an easy way into cloud deployment and port their existing applications onto a cloud rather than redesign the application for cloud deployment. That strategy has worked for some organizations but not for all. The reasons for porting failures were discussed in detail in Chapter 13.

Assuming that applications have been successfully redesigned or ported to the cloud, integration in a mixed environment can have some special challenges. These challenges are approximately the same for IaaS and PaaS. A PaaS provider may supply useful components that can make building the applications much easier. Databases, HTTP servers, and message buses are good examples. Depending on what the platform vendor offers, integration may also be easier when the enterprise can rely on carefully designed and well-tested prebuilt components such as SOAP and REST stacks.

A key to understanding the problems when integrating with a virtual machine is to understand that a file on a virtual machine is not persistent in the same way that a file is persistent on a physical machine. If an application uses the old style of integration in which one application writes a file and the other application reads it, several unexpected things can happen because the files do not behave like files on physical machines.

The files are part of the image of the virtual machine, kept in the memory of the underlying physical machine; unless special measures are taken to preserve a file, it will disappear when the memory goes away. If a virtual machine is stopped and then restarted from an image, its files will be the files in the image, not the files that were written before the virtual machine was stopped. One reason for stopping a virtual machine is to update it. Often the update is accomplished by replacing the old image with one developed in the lab. Synchronizing the file that was last written with the file on the updated image adds an extra layer of complexity to shutting down and starting a virtual machine. Since most virtual machines will not need this treatment, the integrated application becomes an annoying special case.

Modern applications usually store the state of an application in a database that is separate from the application. When an application restarts, it takes its state from the database, not files in its environment. Occasionally, a C++ or Java program will persist an object by writing an image of the object to a local file. If these persisted objects are used in integration, they present the same issues.
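The pattern of taking state from a database on restart, rather than from files in the virtual machine's environment, can be sketched as follows. This is a minimal illustration with hypothetical names: `StateStore`, the `app_state` table, and the `last_ticket` key are invented, and Python's built-in `sqlite3` module stands in for a database server that would, in a real deployment, run outside the virtual machine.

```python
import sqlite3


class StateStore:
    """Keeps application state in a database rather than in local files."""

    def __init__(self, connection: sqlite3.Connection) -> None:
        self._conn = connection
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS app_state (key TEXT PRIMARY KEY, value TEXT)"
        )

    def save(self, key: str, value: str) -> None:
        with self._conn:  # commits on success, rolls back on error
            self._conn.execute(
                "INSERT OR REPLACE INTO app_state (key, value) VALUES (?, ?)",
                (key, value),
            )

    def load(self, key: str, default: str = "") -> str:
        row = self._conn.execute(
            "SELECT value FROM app_state WHERE key = ?", (key,)
        ).fetchone()
        return row[0] if row else default


if __name__ == "__main__":
    database = sqlite3.connect(":memory:")  # stand-in for an external database
    first_instance = StateStore(database)
    first_instance.save("last_ticket", "1042")

    # Simulate the application restarting from a fresh image:
    # a new instance recovers its state from the database.
    restarted_instance = StateStore(database)
    print(restarted_instance.load("last_ticket"))
```

The important design point is where the database lives, not which database is used. A SQLite file stored on the virtual machine itself would disappear with the image just as any other local file would.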

Conclusion

Computing devices do not communicate well when the communication is through a human intermediary. When devices communicate directly, the data flows faster and more accurately than any human can ever move information.

Removing humans from communication links does not eliminate the human element from enterprise management; instead, humans are freed to do what they do best: evaluate, envision, plan, identify opportunity, and a host of other activities that require uniquely human capacities. The humans in a completely integrated system have the data needed, as well as the data that they may not know they need yet, delivered nearly instantaneously in exactly the form they request. This data is a sound basis for informed decisions and planning.

Compare this integrated scenario with the enterprise of a few decades ago. Technicians copied data from terminal screens and gauges to make management decisions. The information was transferred as fast as a pen could write and a technician could walk across a shop floor. It took minutes, not milliseconds. Moreover, only exceptional individuals never make transcription mistakes. Therefore, the data was not only late but also unreliable.

Integration replaces poor-quality data with better-quality data and offers the opportunity to combine and analyze data from different sources in useful ways. Integration also supports integrated control, especially when the Internet of Things enters the system. As devices and sensors are attached to the enterprise network and the Internet, the possibilities for integrated monitoring and control multiply. When the system is distributed over the enterprise premises and cloud deployments, integration increases in both importance and complexity.

However, the transition from on-premises to the cloud, with a few exceptions, is gradual. Some applications are not likely ever to be moved to a cloud implementation. Consequently, developers will face integrating between on-premises and cloud applications for a long time to come.

EXERCISES

- Why are some applications unlikely to be moved to a cloud?

- How does regulatory compliance affect cloud applications?

- How do bus and hub-and-spoke integration architectures simplify mixed architecture implementations?

- Do web services always support required integrations? Why?

_______________

1See http://csrc.nist.gov/publications/nistpubs/800-145/SP800-145.pdf, accessed August 1, 2015.

2Exit routines are routines that are coded by the users of an application and linked into the application. They offer a tricky path to integration that tends to expose the internals of the application and result in an inflexible relationship between the integrated applications. They are more commonly used in customizing the kernels of operating systems such as Linux. Installing a new application on Linux occasionally involves relinking the kernel with new or additional code. Applications that offer exit routines frequently need to be relinked when the application is upgraded. Although they are tricky, they are also flexible and powerful, amounting to a do-it-yourself programming interface. The right exit routines can make adding a web service to a legacy application possible, even relatively easy, but some routines seem impossible to work with.

3Amazon is the retail site that inspired much cloud development. Amazon’s cloud offerings are solutions developed by Amazon to cope with its own difficulty standing up systems to respond to its growing system loads. For some background on Amazon’s development, see www.zdnet.com/article/how-amazon-exposed-its-guts-the-history-of-awss-ec2/. Accessed July 28, 2015.

4Google has reinforced the shielding on some undersea fiber-optic cables to prevent damage from shark bites. See www.theguardian.com/technology/2014/aug/14/google-undersea-fibre-optic-cables-shark-attacks, accessed August 5, 2015.

5A race condition is an error that occurs because messages arrive in an unpredicted order. Usually, this occurs when messages always come in the same order in the development lab, and the developer assumes this is stable activity. If quality assurance testing does not happen to generate an environment that brings out the race condition, the error may not be caught until the application moves to production. Undetected race conditions can appear when a stable on-premises application is moved to a cloud.