CHAPTER SIX

Developing an SEO-Friendly Website

In this chapter, we will examine ways to assess the search engine friendliness of your website. A search engine–friendly website, at the most basic level, is one that allows for search engine access to site content—and having your site content accessible to search engines is the first step toward creating prominent visibility in search results. Once your site’s content is accessed by a search engine, it can then be considered for relevant positioning within search results pages.

As we discussed in the introduction to Chapter 2, search engine crawlers are basically software programs, and like all software programs, they come with certain strengths and weaknesses. Publishers must adapt their websites to make the job of these software programs easier—in essence, leverage their strengths and make their weaknesses irrelevant. If you can do this, you will have taken a major step toward success with SEO.

Developing an SEO-friendly site architecture requires a significant amount of thought, planning, and communication due to the large number of factors that influence how a search engine sees your site and the myriad ways in which a website can be put together, as there are hundreds (if not thousands) of tools that web developers can use to build a website—many of which were not initially designed with SEO or search engine crawlers in mind.

Making Your Site Accessible to Search Engines

The first step in the SEO design process is to ensure that your site can be found and crawled by search engines. This is not as simple as it sounds, as there are many popular web design and implementation constructs that the crawlers may not understand.

Indexable Content

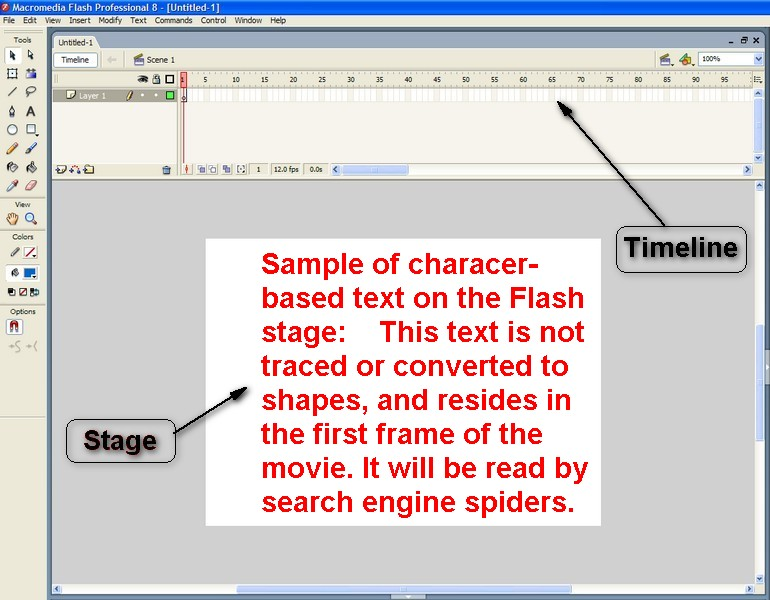

To rank well in the search engines, your site’s content—that is, the material available to visitors of your site—should be in HTML text form. Images and Flash files, for example, while crawled by the search engines, are content types that are more difficult for search engines to analyze and therefore are not ideal for communicating to search engines the topical relevance of your pages.

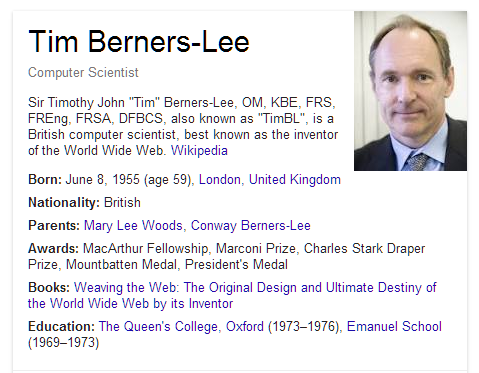

Search engines have challenges with identifying the relevance of images because there are minimum text-input fields for image files in GIF, JPEG, or PNG format (namely the filename, title, and alt attribute). While we do strongly recommend accurate labeling of images in these fields, images alone are usually not enough to earn a web page top rankings for relevant queries. While image identification technology continues to advance, processing power limitations will likely keep the search engines from broadly applying this type of analysis to web search in the near future.

Google enables users to perform a search using an image, as opposed to text, as the search query (though users can input text to augment the query). By uploading an image, dragging and dropping an image from the desktop, entering an image URL, or right-clicking on an image within a browser (Firefox and Chrome with installed extensions), users can often find other locations of that image on the Web for reference and research, as well as images that appear similar in tone and composition. While this does not immediately change the landscape of SEO for images, it does give us an indication of how Google is potentially augmenting its current relevance indicators for image content.

With Flash, while specific .swf files (the most common file extension for Flash) can be crawled and indexed—and are often found when a user conducts a .swf file search for specific words or phrases included in their filename—it is rare for a generic query to return a Flash file or a website generated entirely in Flash as a highly relevant result, due to the lack of “readable” content. This is not to say that websites developed using Flash are inherently irrelevant, or that it is impossible to successfully optimize a website that uses Flash; however, in our experience the preference must still be given to HTML-based files.

Spiderable Link Structures

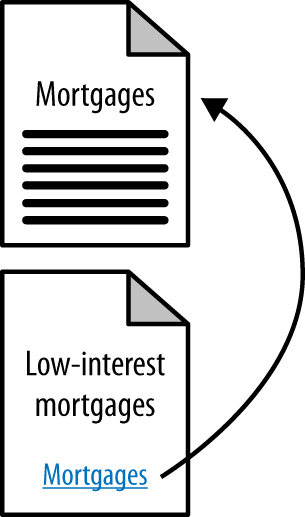

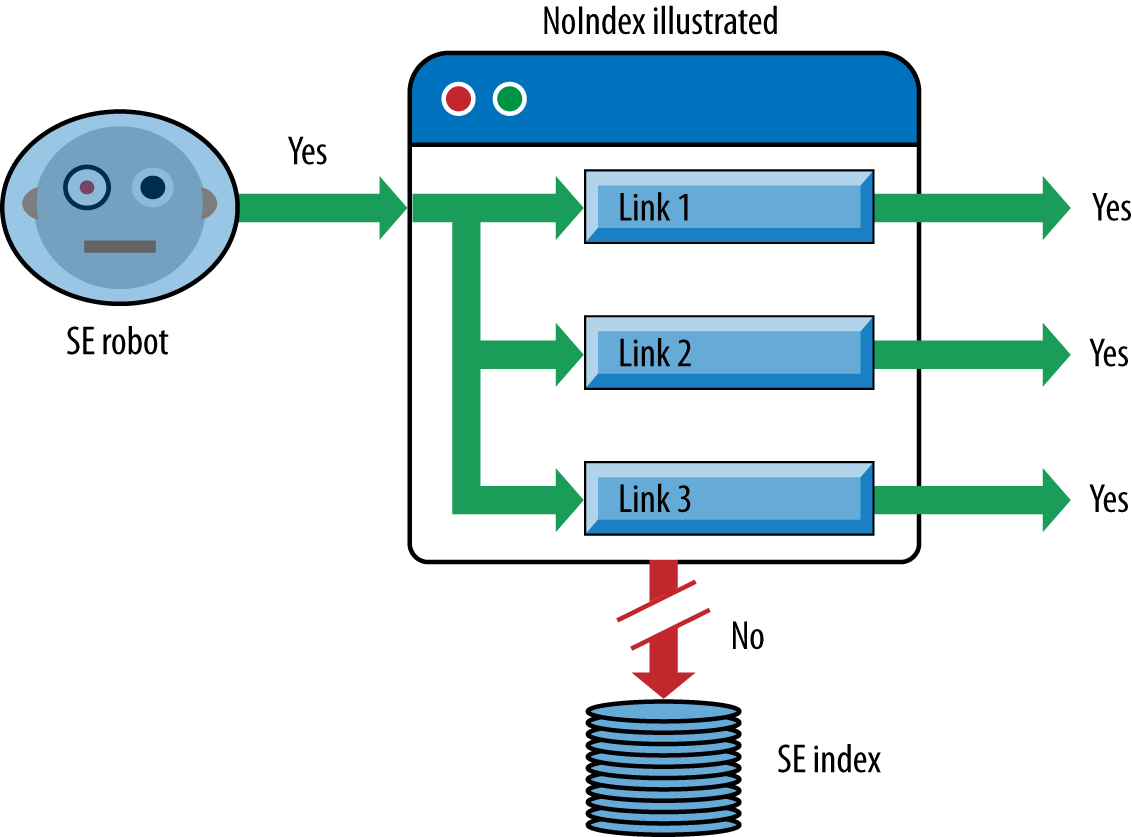

As we outlined in Chapter 2, search engines use links on web pages to help them discover other web pages and websites. For this reason, we strongly recommend taking the time to build an internal linking structure that spiders can crawl easily. Many sites make the critical mistake of hiding or obfuscating their navigation in ways that limit spider accessibility, thus impacting their ability to get pages listed in the search engines’ indexes. Consider Figure 6-1, which shows how this problem can occur.

Figure 6-1. Providing search engines with crawlable link structures

In Figure 6-1, Google’s spider has reached Page A and sees links to pages B and E. However, even though pages C and D might be important pages on the site, the spider has no way to reach them (or even to know they exist) because no direct, crawlable links point to those pages. As far as Google is concerned, they might as well not exist. Great content, good keyword targeting, and smart marketing won’t make any difference at all if the spiders can’t reach those pages in the first place.

To refresh your memory of the discussion in Chapter 2, here are some common reasons why pages may not be reachable:

- Links in submission-required forms

-

Search spiders will rarely, if ever, attempt to “submit” forms, and thus, any content or links that are accessible only via a form are invisible to the engines. This even applies to simple forms such as user logins, search boxes, or some types of pull-down lists.

- Links in hard-to-parse JavaScript

-

If you use JavaScript for links, you may find that search engines either do not crawl or give very little weight to the embedded links. In June 2014, Google announced enhanced crawling of JavaScript and CSS. Google can now render some JavaScript and follow some JavaScript links. Due to this change, Google recommends against blocking it from crawling your JavaScript and CSS files. For a preview of how your site might render according to Google, go to Search Console -> Crawl -> Fetch as Google, input the URL you would like to preview, and select “Fetch and Render.”

- Links in Java or other plug-ins

-

Traditionally, links embedded inside Java and plug-ins have been invisible to the engines.

- Links in Flash

-

In theory, search engines can detect links within Flash, but don’t rely too heavily on this capability.

- Links in PowerPoint and PDF files

-

Search engines sometimes report links seen in PowerPoint files or PDFs. These links are believed to be counted the same as links embedded in HTML documents.

- Links pointing to pages blocked by the meta

robotstag,rel="nofollow", or robots.txt -

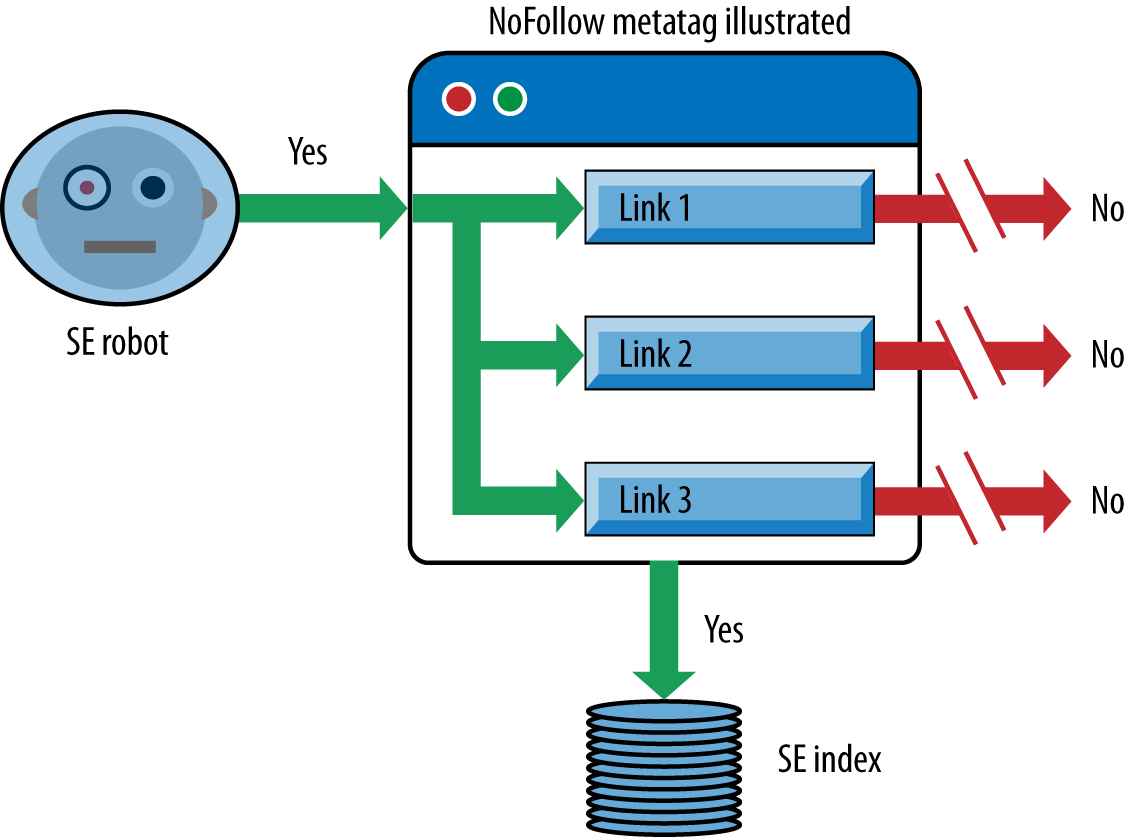

The robots.txt file provides a very simple means for preventing web spiders from crawling pages on your site. Using the

nofollowattribute on a link, or placing the metarobots nofollowtag with thecontent="nofollow"attribute on the page containing the link, instructs the search engine to not pass link authority via the link (a concept we will discuss further in “Content Delivery and Search Spider Control”). The effectiveness of thenofollowattribute on links has greatly diminished to the point of irrelevance as a result of overmanipulation by aggressive SEO practitioners. For more on this, see the blog post “PageRank Sculpting”, by Google’s Matt Cutts. - Links on pages with many hundreds or thousands of links

-

Historically, Google had suggested a maximum of 100 links per page before it may stop spidering additional links from that page, but this recommendation has softened over time. Think of it more as a strategic guideline for passing PageRank. If a page has 200 links on it, then none of the links get very much PageRank. Managing how you pass PageRank by limiting the number of links is usually a good idea. Tools such as Screaming Frog can run reports on the number of outgoing links you have per page.

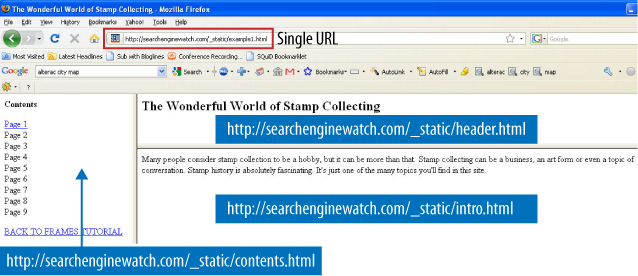

- Links in frames or iframes

-

Technically, links in both frames and iframes can be crawled, but both present structural issues for the engines in terms of organization and following. Unless you’re an advanced user with a good technical understanding of how search engines index and follow links in frames, it is best to stay away from them as a place to offer links for crawling purposes. We will discuss frames and iframes in more detail in “Creating an Optimal Information Architecture”.

XML Sitemaps

Google, Yahoo!, and Bing (formerly MSN Search, and then Live Search) all support a protocol known as XML Sitemaps. Google first announced it in 2005, and then Yahoo! and MSN Search agreed to support the protocol in 2006. Using the Sitemaps protocol, you can supply search engines with a list of all the URLs you would like them to crawl and index.

Adding a URL to a sitemap file does not guarantee that a URL will be crawled or indexed. However, it can result in the search engine discovering and indexing pages that it otherwise would not.

This program is a complement to, not a replacement for, the search engines’ normal, link-based crawl. The benefits of sitemaps include the following:

-

For the pages the search engines already know about through their regular spidering, they use the metadata you supply, such as the last date the content was modified (lastmod date) and the frequency at which the page is changed (changefreq), to improve how they crawl your site.

-

For the pages they don’t know about, they use the additional URLs you supply to increase their crawl coverage.

-

For URLs that may have duplicates, the engines can use the XML Sitemaps data to help choose a canonical version.

-

Verification/registration of XML sitemaps may indicate positive trust/authority signals.

-

The crawling/inclusion benefits of sitemaps may have second-order positive effects, such as improved rankings or greater internal link popularity.

-

Having a sitemap registered with Google Search Console can give you extra analytical insight into whether your site is suffering from indexation, crawling, or duplicate content issues.

Matt Cutts, the former head of Google’s webspam team, has explained XML sitemaps in the following way:

Imagine if you have pages A, B, and C on your site. We find pages A and B through our normal web crawl of your links. Then you build a Sitemap and list the pages B and C. Now there’s a chance (but not a promise) that we’ll crawl page C. We won’t drop page A just because you didn’t list it in your Sitemap. And just because you listed a page that we didn’t know about doesn’t guarantee that we’ll crawl it. But if for some reason we didn’t see any links to C, or maybe we knew about page C but the URL was rejected for having too many parameters or some other reason, now there’s a chance that we’ll crawl that page C.1

Sitemaps use a simple XML format that you can learn about at http://www.sitemaps.org/. XML sitemaps are a useful and in some cases essential tool for your website. In particular, if you have reason to believe that the site is not fully indexed, an XML sitemap can help you increase the number of indexed pages. As sites grow in size, the value of XML sitemap files tends to increase dramatically, as additional traffic flows to the newly included URLs.

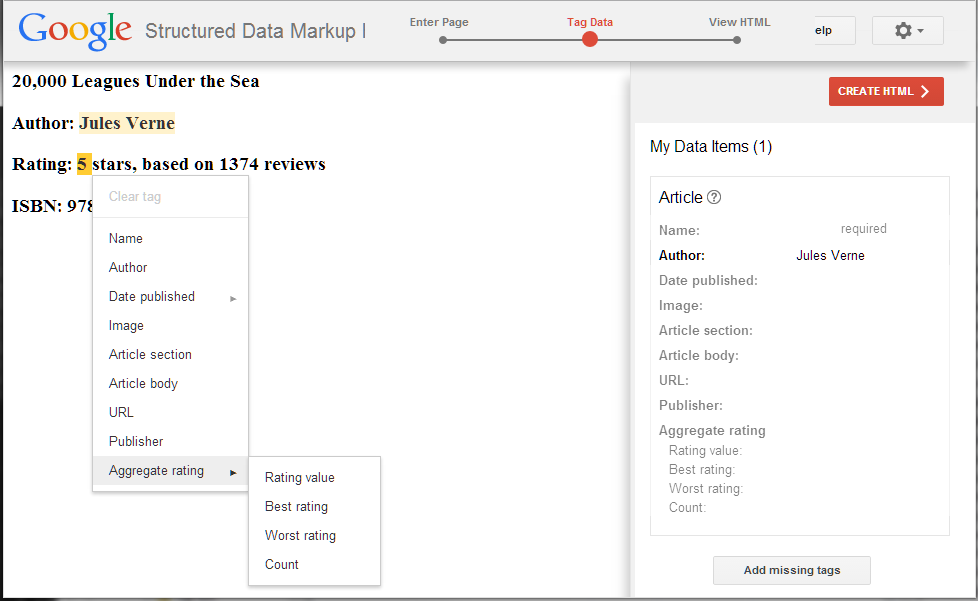

Laying out an XML sitemap

The first step in the process of creating an XML sitemap is to create an XML sitemap file in a suitable format. Because creating an XML sitemap requires a certain level of technical know-how, it would be wise to involve your development team in the XML sitemap generator process from the beginning. Figure 6-2 shows an example of some code from a sitemap.

Figure 6-2. Sample XML sitemap from Google.com

To create your XML sitemap, you can use the following:

- An XML sitemap generator

-

This is a simple script that you can configure to automatically create sitemaps, and sometimes submit them as well. Sitemap generators can create these sitemaps from a URL list, access logs, or a directory path hosting static files corresponding to URLs. Here are some examples of XML sitemap generators:

- Simple text

-

You can provide Google with a simple text file that contains one URL per line. However, Google recommends that once you have a text sitemap file for your site, you use the sitemap generator to create a sitemap from this text file using the Sitemaps protocol.

- Syndication feed

-

Google accepts Really Simple Syndication (RSS) 2.0 and Atom 1.0 feeds. Note that the feed may provide information on recent URLs only.

Deciding what to include in a sitemap file

When you create a sitemap file, you need to take care in situations where your site has multiple URLs that refer to one piece of content: include only the preferred (canonical) version of the URL, as the search engines may assume that the URL specified in a sitemap file is the preferred form of the URL for the content. You can use the sitemap file to indicate to the search engines which URL points to the preferred version of a given page.

In addition, be careful about what not to include. For example, do not include multiple URLs that point to identical content, and leave out pages that are simply pagination pages (or alternate sort orders for the same content) and/or any low-value pages on your site. Last but not least, make sure that none of the URLs listed in the sitemap file include any tracking parameters.

Mobile sitemaps

Mobile sitemaps should be used for content targeted at mobile devices. Mobile information is kept in a separate sitemap file that should not contain any information on nonmobile URLs. Google supports nonmobile markup, XHTML mobile profile, WML (WAP 1.2) and cHTML. Details on the mobile sitemap format can be found here: https://support.google.com/webmasters/answer/34648.

Video sitemaps

Including information on your videos in your sitemap file will increase their chances of being discovered by search engines. Google supports the following video formats: .mpg, .mpeg, .mp4, .m4v, .mov, .wmv, .asf, .avi, .ra, .ram, .rm, .flv, and .swf. You can see the specification on how to implement video sitemap entries here: https://support.google.com/webmasters/answer/80472.

Image sitemaps

You can increase visibility for your images by listing them in your sitemap file. For each URL you list in your sitemap file, you can also list the images that appear on those pages. You can list up to 1,000 images per page. Specialized image tags are associated with the URL. The details of the format of these tags are on this page: https://support.google.com/webmasters/answer/178636.

Listing images in the sitemap does increase the chances of those images being indexed. If you list some images and not others, it may be interpreted as a signal that the unlisted images are less important.

Uploading your sitemap file

When your sitemap file is complete, upload it to your site in the highest-level directory you want search engines to crawl (generally, the root directory), such as www.yoursite.com/sitemap.xml. You can include more than one subdomain in your sitemap provided that you verify the sitemap for each subdomain in Google Search Console, though it’s frequently easier to understand what’s happening with indexation if each subdomain has its own sitemap and its own profile in Google Search Console.

Managing and updating XML sitemaps

Once your XML sitemap has been accepted and your site has been crawled, monitor the results and update your sitemap if there are issues. With Google, you can return to your Google Search Console account to view the statistics and diagnostics related to your XML sitemaps. Just click the site you want to monitor. You’ll also find some FAQs from Google on common issues such as slow crawling and low indexation.

Update your XML sitemap with Google and Bing when you add URLs to your site. You’ll also want to keep your sitemap file up to date when you add a large volume of pages or a group of pages that are strategic.

There is no need to update the XML sitemap when you’re simply updating content on existing URLs. It is not strictly necessary to update when pages are deleted, as the search engines will simply not be able to crawl them, but do update before you have too many broken pages in your feed. Also update your sitemap file whenever you add any new content, and remove any deleted pages at that time. Google and Bing will periodically redownload the sitemap, so you don’t need to resubmit your sitemap to Google or Bing unless your sitemap location has changed.

Enable Google and Bing to autodiscover your XML sitemap locations by using the Sitemap: directive in your site’s robots.txt file.

If you are adding or deleting large numbers of new pages to your site on a regular basis, you may want to use a utility, or have your developers build the ability, for your XML sitemap to regenerate with all of your current URLs on a regular basis. Many sites regenerate their XML sitemap daily via automated scripts.

Google and the other major search engines discover and index websites by crawling links. Google XML sitemaps are a way to feed the URLs that you want crawled on your site to Google for more complete crawling and indexation, which results in improved long-tail searchability. By creating and updating this XML file, you help to ensure that Google recognizes your entire site, and this recognition will help people find your site. It also helps all of the search engines understand which version of your URLs (if you have more than one URL pointing to the same content) is the canonical version.

Creating an Optimal Information Architecture

Making your site friendly to search engine crawlers also requires that you put some thought into your site’s information architecture (IA). A well-designed site architecture can bring many benefits for both users and search engines.

The Importance of a Logical, Category-Based Flow

Search engines face myriad technical challenges in understanding your site, as crawlers are not able to perceive web pages in the way that humans do, creating significant limitations for both accessibility and indexing. A logical and properly constructed website architecture can help overcome these issues and bring great benefits in search traffic and usability.

At the core of website information architecture are two critical principles: usability (making a site easy to use) and information architecture (crafting a logical, hierarchical structure for content).

One of the very early information architecture proponents, Richard Saul Wurman, developed the following definition for information architect:2

1) the individual who organizes the patterns inherent in data, making the complex clear. 2) a person who creates the structure or map of information which allows others to find their personal paths to knowledge. 3) the emerging 21st century professional occupation addressing the needs of the age focused upon clarity, human understanding, and the science of the organization of information.

Usability and search friendliness

Search engines are trying to reproduce the human process of sorting relevant web pages by quality. If a real human were to do this job, usability and user experience would surely play a large role in determining the rankings. Given that search engines are machines and don’t have the ability to segregate by this metric quite so easily, they are forced to employ a variety of alternative, secondary metrics to assist in the process. The most well known and well publicized among these is a measurement of the inbound links to a website (see Figure 6-3), and a well-organized site is more likely to receive links.

Figure 6-3. Make your site attractive to link to

Since Google launched in the late 1990s, search engines have strived to analyze every facet of the link structure on the Web and have extraordinary abilities to infer trust, quality, reliability, and authority via links. If you push back the curtain and examine why links between websites exist and how they come to be, you can see that a human being (or several humans, if the organization suffers from bureaucracy) is almost always responsible for the creation of links.

The engines hypothesize that high-quality links will point to high-quality content, and that great content and positive user experiences will be rewarded with more links than poor user experiences. In practice, the theory holds up well. Modern search engines have done a very good job of placing good-quality, usable sites in top positions for queries.

An analogy

Look at how a standard filing cabinet is organized. You have the individual cabinet, drawers in the cabinet, folders within the drawers, files within the folders, and documents within the files (see Figure 6-4).

Figure 6-4. Similarities between filing cabinets and web pages

There is only one copy of any individual document, and it is located in a particular spot. There is a very clear navigation path to get to it.

If you want to find the January 2015 invoice for a client (Amalgamated Glove & Spat), you would go to the cabinet, open the drawer marked Client Accounts, find the Amalgamated Glove & Spat folder, look for the Invoices file, and then flip through the documents until you come to the January 2015 invoice (again, there is only one copy of this; you won’t find it anywhere else).

Figure 6-5 shows what it looks like when you apply this logic to the popular website, Craigslist.

Figure 6-5. Filing cabinet analogy applied to Craigslist

If you’re seeking an apartment in Los Angeles, you’d navigate to http://losangeles.craigslist.org/, choose apts/housing, narrow that down to two bedrooms, and pick the two-bedroom loft from the list of available postings. Craigslist’s simple, logical information architecture makes it easy for you to reach the desired post in four clicks, without having to think too hard at any step about where to go. This principle applies perfectly to the process of SEO, where good information architecture dictates:

-

As few clicks as possible to any given page

-

One hundred or fewer links per page (so as not to overwhelm either crawlers or visitors)

-

A logical, semantic flow of links from home page to categories to detail pages

Here is a brief look at how this basic filing cabinet approach can work for some more complex information architecture issues.

Subdomains

You should think of subdomains as completely separate filing cabinets within one big room. They may share similar architecture, but they shouldn’t share the same content; and more importantly, if someone points you to one cabinet to find something, he is indicating that that cabinet is the authority, not the other cabinets in the room. Why is this important? It will help you remember that links (i.e., votes or references) to subdomains may not pass all, or any, of their authority to other subdomains within the room (e.g., *.craigslist.org, wherein * is a variable subdomain name).

Those cabinets, their contents, and their authority are isolated from one another and may not be considered to be associated with one another. This is why, in most cases, it is best to have one large, well-organized filing cabinet instead of several that may prevent users and bots from finding what they want.

Redirects

If you have an organized administrative assistant, he probably uses 301 redirects (these are discussed more in the section “Redirects”) inside his literal, metal filing cabinet. If he finds himself looking for something in the wrong place, he might put a sticky note there reminding him of the correct location the next time he needs to look for that item. Anytime he looked for something in those cabinets, he could always find it because if he navigated improperly, he would inevitably find a note pointing him in the right direction.

Redirect irrelevant, outdated, or misplaced content to the proper spot in your filing cabinet, and both your users and the engines will know what qualities and keywords you think it should be associated with.

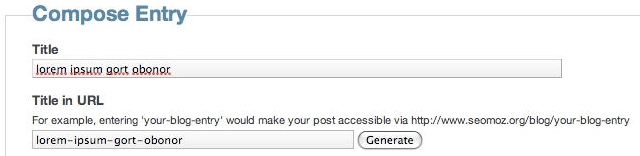

URLs

It would be tremendously difficult to find something in a filing cabinet if every time you went to look for it, it had a different name, or if that name resembled jklhj25br3g452ikbr52k—a not-so-uncommon type of character string found in dynamic website URLs. Static, keyword-targeted URLs are much better for users and bots alike. They can always be found in the same place, and they give semantic clues as to the nature of the content.

These specifics aside, thinking of your site information architecture as a virtual filing cabinet is a good way to make sense of best practices. It’ll help keep you focused on a simple, easily navigated, easily crawled, well-organized structure. It is also a great way to explain an often-complicated set of concepts to clients and coworkers.

Because search engines rely on links to crawl the Web and organize its content, the architecture of your site is critical to optimization. Many websites grow organically and, like poorly planned filing systems, become complex, illogical structures that force people (and spiders) to struggle to find what they want.

Site Architecture Design Principles

In planning your website, remember that nearly every user will initially be confused about where to go, what to do, and how to find what she wants. An architecture that recognizes this difficulty and leverages familiar standards of usability with an intuitive link structure will have the best chance of making a visit to the site a positive experience. A well-organized site architecture helps solve these problems, and provides semantic and usability benefits to both users and search engines.

As shown in Figure 6-6, a recipes website can use intelligent architecture to fulfill visitors’ expectations about content and create a positive browsing experience. This structure not only helps humans navigate a site more easily, but also helps the search engines to see that your content fits into logical concept groups. You can use this approach to help you rank for applications of your product in addition to attributes of your product.

Figure 6-6. Structured site architecture

Although site architecture accounts for a small part of the algorithms, search engines do make use of relationships between subjects and give value to content that has been organized sensibly. For example, if in Figure 6-6 you were to randomly jumble the subpages into incorrect categories, your rankings could suffer. Search engines, through their massive experience with crawling the Web, recognize patterns in subject architecture and reward sites that embrace an intuitive content flow.

Site architecture protocol

Although site architecture—the creation of structure and flow in a website’s topical hierarchy—is typically the territory of information architects (or is created without assistance from a company’s internal content team), its impact on search engine rankings, particularly in the long run, is substantial. It is, therefore, a wise endeavor to follow basic guidelines of search friendliness.

The process itself should not be overly arduous, if you follow this simple protocol:

-

List all of the requisite content pages (blog posts, articles, product detail pages, etc.).

-

Create top-level navigation that can comfortably hold all of the unique types of detailed content for the site.

-

Reverse the traditional top-down process by starting with the detailed content and working your way up to an organizational structure capable of holding each page.

-

Once you understand the bottom, fill in the middle. Build out a structure for subnavigation to sensibly connect top-level pages with detailed content. In small sites, there may be no need for this level, whereas in larger sites, two or even three levels of subnavigation may be required.

-

Include secondary pages such as copyright, contact information, and other nonessentials.

-

Build a visual hierarchy that shows (to at least the last level of subnavigation) each page on the site.

Figure 6-7 shows an example of a well-structured site architecture.

Figure 6-7. Second example of structured site architecture

Category structuring

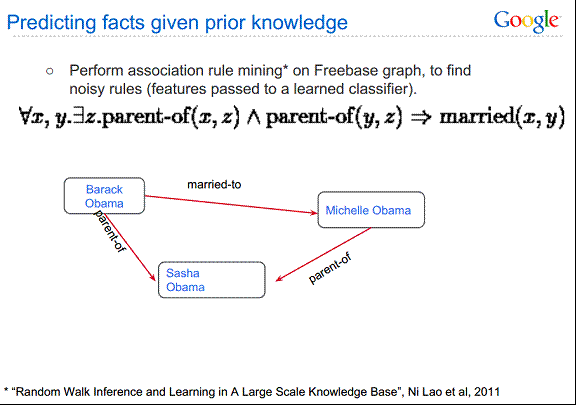

As search engines crawl the Web, they collect an incredible amount of data (millions of gigabytes) on the structure of language, subject matter, and relationships between content. Though not technically an attempt at artificial intelligence, the engines have built a repository capable of making sophisticated determinations based on common patterns. As shown in Figure 6-8, search engine spiders can learn semantic relationships as they crawl thousands of pages that cover a related topic (in this case, dogs).

Figure 6-8. Spiders learning semantic relationships

Although content need not always be structured along the most predictable patterns, particularly when a different method of sorting can provide value or interest to a visitor, organizing subjects logically assists both humans (who will find your site easier to use) and engines (which will award you with greater rankings based on increased subject relevance).

Topical relevance

Naturally, this pattern of relevance-based scoring extends from single relationships between documents to the entire category structure of a website. Site creators can take advantage of this best by building hierarchies that flow from broad, encompassing subject matter down to more detailed, specific content. Obviously, in any categorization system, there is a natural level of subjectivity; think first of your visitors, and use these guidelines to ensure that your creativity doesn’t overwhelm the project.

Taxonomy and ontology

In designing a website, you should also consider the taxonomy and ontology of the website. Taxonomy is essentially a two-dimensional hierarchical model of the architecture of the site. You can think of ontology as mapping the way the human mind thinks about a topic area. It can be much more complex than taxonomy, because a larger number of relationship types are often involved.

One effective technique for coming up with an ontology is called card sorting. This is a user-testing technique whereby users are asked to group items together so that you can organize your site as intuitively as possible. Card sorting can help identify not only the most logical paths through your site, but also ambiguous or cryptic terminology that should be reworded.

With card sorting, you write all the major concepts onto a set of cards that are large enough for participants to read, manipulate, and organize. Your test group assembles the cards in the order they believe provides the most logical flow, as well as into groups that seem to fit together.

By itself, building an ontology is not part of SEO, but when you do it properly it will impact your site architecture, and therefore it interacts with SEO. Coming up with the right site architecture should involve both disciplines.

Flat Versus Deep Architecture

One very strict rule for search friendliness is the creation of flat site architecture. Flat sites require a minimal number of clicks to access any given page, whereas deep sites create long paths of links required to access detailed content. For nearly every site with fewer than 10,000 pages, all content should be accessible through a maximum of four clicks from the home page and/or sitemap page. That said, flatness should not be forced if it does not make sense for other reasons. At 100 links per page, even sites with millions of pages can have every page accessible in five to six clicks if proper link and navigation structures are employed. If a site is not built to be flat, it can take too many clicks for a user or a search engine to reach the desired content, as shown in Figure 6-9. In contrast, a flat site (see Figure 6-10) allows users and search engines to reach most content in just a few clicks.

Figure 6-9. Deep site architecture

Figure 6-10. Flat site architecture

Flat sites aren’t just easier for search engines to crawl; they are also simpler for users, as they limit the number of page visits the user requires to reach his destination. This reduces the abandonment rate and encourages repeat visits.

When creating flat sites, be careful to not overload pages with links either. Pages that have 200 links on them are not passing much PageRank to any of those pages. While flat site architectures are desirable, you should not force an architecture to be overly flat if it is not otherwise logical to do so.

The issue of the number of links per page relates directly to another rule for site architects: avoid excessive pagination wherever possible. Pagination (see Figure 6-11), the practice of creating a list of elements on pages separated solely by numbers (e.g., some ecommerce sites use pagination for product catalogs that have more products than they wish to show on a single page), is problematic for many reasons.

First, pagination provides virtually no new topical relevance, as the pages are each largely about the same topic. Second, content that moves into different pagination can potentially create duplicate content problems or be seen as poor-quality or “thin” content. Last, pagination can create spider traps and hundreds or thousands of extraneous, low-quality pages that can be detrimental to search visibility.

Figure 6-11. Pagination structures

So, make sure you implement flat structures and stay within sensible guidelines for the number of links per page, while retaining a contextually rich link structure. This is not always as easy as it sounds, and accomplishing it may require quite a bit of thought and planning to build a contextually rich structure on some sites. Consider a site with 10,000 different men’s running shoes. Defining an optimal structure for that site could be a very large effort, but that effort will pay serious dividends in return.

Solutions to pagination problems vary based on the content of the website. Here are two example scenarios and their solutions:

- Use

rel="next"andrel="prev" -

Google supports link elements called

rel="next"andrel="prev". The benefit of using these link elements is that it lets Google know when it has encountered a sequence of paginated pages. Once Google recognizes these tags, links to any of the pages will be treated as links to the series of pages as a whole. In addition, Google will show in the index the most relevant page in the series (most of the time this will be the first page, but not always).Bing announced support for

rel="next"andrel="prev"in 2012.These tags can be used to inform Google about pagination structures, and they can be used whether or not you create a

view-allpage. The concept is simple. The following example outlines how to use the tags for content that is paginated into 12 pages:-

In the

<head>section of the first page of your paginated content, implement arel="next"tag pointing to the second page of the content. The tag should look something like this:<link rel="next" href="http://www.

yoursite.com/products?prod=qwert&p=2" /> -

In the

<head>section of the last page of your paginated content, implement arel="prev"link element pointing to the second-to-last page of the content. The tag should look something like this:<link rel="prev" href="http://www.

yoursite.com/products?prod=qwert&p=11" />In the

<head>section of pages 2 through 11, implementrel="next"andrel="prev"tags pointing to the following and preceding pages, respectively. The following example shows what it should look like on page 6 of the content:<link rel="prev" href="http://www.yoursite.com/products?prod=qwert&p=5" /> <link rel="next" href="http://www.yoursite.com/products?prod=qwert&p=7" />

-

- Create a

view-allpage and use canonical tags -

You may have lengthy articles that you choose to break into multiple pages. However, this results in links to the pages whose anchor text is something like

"1","2", and so forth. The titles of the various pages may not vary in any significant way, so they tend to compete with each other for search traffic. Finally, if someone links to the article but does not link to the first page, the link authority from that link will largely be wasted.One way to handle this problem is to retain the paginated version of the article, but also create a single-page version of the article. This is referred to as a

view-allpage. Then use therel="canonical"link element (which is discussed in more detail in the section “Content Delivery and Search Spider Control”) to point from the paginated pages to theview-allpage. This will concentrate all of the link authority and search engine attention on a single page. You should also include a link to theview-allpage from each of the individual paginated pages as well. However, if theview-allpage loads too slowly because of the page size, it may not be the best option for you.

Note that if you implement a view-all page and do not implement any of these tags, Google will attempt to discover the page and show it instead of the paginated versions in its search results. However, we recommend that you make use of one of the aforementioned two solutions, as Google cannot guarantee that it will discover your view-all pages, and it is best to provide it with as many clues as possible.

Root Domains, Subdomains, and Microsites

Among the common questions about structuring a website (or restructuring one) are whether to host content on a new domain, when to use subfolders, and when to employ microsites.

As search engines scour the Web, they identify four kinds of web structures on which to place metrics:

- Individual pages/URLs

-

These are the most basic elements of the Web—filenames, much like those that have been found on computers for decades, which indicate unique documents. Search engines assign query-independent scores—most famously, Google’s PageRank—to URLs and judge them in their ranking algorithms. A typical URL might look something like http://www.yourdomain.com/page.

- Subfolders

-

The folder structures that websites use can also inherit or be assigned metrics by search engines (though there’s very little information to suggest that they are used one way or another). Luckily, they are an easy structure to understand. In the URL http://www.yourdomain.com/blog/post17, /blog/ is the subfolder and post17 is the name of the file in that subfolder. Engines may identify common features of documents in a given subfolder and assign metrics to these (such as how frequently the content changes, how important these documents are in general, or how unique the content is that exists in these subfolders).

- Subdomains/fully qualified domains (FQDs)/third-level domains

-

In the URL http://blog.yourdomain.com/page, three kinds of domain levels are present. The top-level domain (also called the TLD or domain extension) is .com, the second-level domain is yourdomain, and the third-level domain is blog. The third-level domain is sometimes referred to as a subdomain. Common web nomenclature does not typically apply the word subdomain when referring to www, although technically, this too is a subdomain. A fully qualified domain is the combination of the elements required to identify the location of the server where the content can be found (in this example, blog.yourdomain.com/).

-

These structures can receive individual assignments of importance, trustworthiness, and value from the engines, independent of their second-level domains, particularly on hosted publishing platforms such as WordPress, Blogspot, and so on.

- Complete root domains/host domain/pay-level domains (PLDs)/second-level domains

-

The domain name you need to register and pay for, and the one you point DNS settings toward, is the second-level domain (though it is commonly improperly called the “top-level” domain). In the URL http://www.yourdomain.com/page, yourdomain.com is the second-level domain. Other naming conventions may refer to this as the “root” or “pay-level” domain.

Figure 6-16 shows some examples.

Figure 6-16. Breaking down some example URLs

When to Use a Subfolder

If a subfolder will work, it is the best choice 99.9% of the time. Keeping content on a single root domain and single subdomain (e.g., http://www.yourdomain.com) gives the maximum SEO benefits, as engines will maintain all of the positive metrics the site earns around links, authority, and trust, and will apply these to every page on the site.

Subfolders have all the flexibility of subdomains (the content can, if necessary, be hosted on a unique server or completely unique IP address through post-firewall load balancing) and none of the drawbacks. Subfolder content will contribute directly to how search engines (and users, for that matter) view the domain as a whole. Subfolders can be registered with the major search engine tools and geotargeted individually to specific countries and languages as well.

Although subdomains are a popular choice for hosting content, they are generally not recommended if SEO is a primary concern. Subdomains may inherit the ranking benefits and positive metrics of the root domain they are hosted underneath, but they do not always do so (and thus, content can underperform in these scenarios). Of course, there can be exceptions to this general guideline. Subdomains are not inherently harmful, and there are some content publishing scenarios in which they are more appropriate than subfolders; it is simply preferable for various SEO reasons to use subfolders when possible, as we will discuss next.

When to Use a Subdomain

If your marketing team decides to promote a URL that is completely unique in content or purpose and would like to use a catchy subdomain to do it, using a subdomain can be practical. Google Maps is an example that illustrates how marketing considerations make a subdomain an acceptable choice. One good reason to use a subdomain is in a situation in which, as a result of creating separation from the main domain, using one looks more authoritative to users.

Subdomains may also be a reasonable choice if keyword usage in the domain name is of critical importance. It appears that search engines do weight keyword usage in the URL somewhat, and have slightly higher benefits for exact matches in the subdomain (or third-level domain name) than subfolders. Note that exact matches in the domain and subdomain carry less weight than they once did. Google updated the weight it assigned to these factors in 2012.7

Keep in mind that subdomains may inherit very little link equity from the main domain. If you wish to split your site in the subdomains and have all of them rank well, assume that you will have to support each with its own full-fledged SEO strategy.

When to Use a Separate Root Domain

If you have a single, primary site that has earned links, built content, and attracted brand attention and awareness, it is very rarely advisable to place any new content on a completely separate domain. There are rare occasions when this can make sense, and we’ll walk through these, as well as explain how singular sites benefit from collecting all of their content in one root domain location.

Splitting similar or relevant content from your organization onto multiple domains can be likened to a store taking American Express Gold cards and rejecting American Express Corporate or American Express Blue—it is overly segmented and dangerous for the consumer mindset. If you can serve web content from a singular domain, that domain will earn branding in the minds of your visitors, references from them, links from other sites, and bookmarks from your regular customers. Switching to a new domain forces you to rebrand and to earn all of these positive metrics all over again.

Microsites

Although we generally recommend that you do not saddle yourself with the hassle of dealing with multiple sites and their SEO risks and disadvantages, it is important to understand the arguments, if only a few, in favor of doing so.

Optimized properly, a microsite may have dozens or even hundreds of pages. If your site is likely to gain more traction and interest with webmasters and bloggers by being at arm’s length from your main site, it may be worth considering—for example, if you have a very commercial main site, and you want to create some great content (perhaps as articles, podcasts, and RSS feeds) that does not fit on the main site.

When should you consider a microsite?

- When you own a specific keyword search query domain

-

For example, if you own usedtoyotatrucks.com, you might do very well to pull in search traffic for the specific term used toyota trucks with a microsite.

- When you plan to sell the domains

-

It is very hard to sell a folder or even a subdomain, so this strategy is understandable if you’re planning to churn the domains in the secondhand market.

- As discussed earlier, if you’re a major brand building a “secret” or buzzworthy microsite

-

In this case, it can be useful to use a separate domain (however, you should 301-redirect the pages of that domain back to your main site after the campaign is over so that the link authority continues to provide long-term benefit—just as the mindshare and branding do in the offline world).

You should never implement a microsite that acts as a doorway page to your main site, or that has substantially the same content as your main site. Consider a microsite only if you are willing to invest the time and effort to put rich original content on it, and to promote it as an independent site.

Such a site may gain more links by being separated from the main commercial site. A microsite may have the added benefit of bypassing some of the legal and PR department hurdles and internal political battles.

However, a microsite on a brand-new domain can take many months to build enough domain-level link authority to rank in the engines (for more about how Google treats new domains, see “Determining Searcher Intent and Delivering Relevant, Fresh Content”). So, what to do if you want to launch a microsite? Start the clock running as soon as possible on your new domain by posting at least a few pages to the URL and then getting at least a few links to it—as far in advance of the official launch as possible. It may take a considerable amount of time before a microsite is able to house enough high-quality content and to earn enough trusted and authoritative links to rank on its own. If the campaign the microsite was created for is time sensitive, consider redirecting the pages from the microsite to your main site well after the campaign concludes, or at least ensure that the microsite links back to the main site to allow some of the link authority the microsite earns to help the ranking of your main site.

Here are the reasons for not using a microsite:

- Search algorithms favor large, authoritative domains

-

Take a piece of great content about a topic and toss it onto a small, mom-and-pop website; point some external links to it, optimize the page and the site for the target terms, and get it indexed. Now, take that exact same content and place it on Wikipedia, or CNN.com, and you’re virtually guaranteed that the content on the large, authoritative domain will outrank the content on the small niche site. The engines’ current algorithms favor sites that have built trust, authority, consistency, and history.

- Multiple sites split the benefits of links

-

As suggested in Figure 6-17, a single good link pointing to a page on a domain positively influences the entire domain and every page on it. Because of this phenomenon, it is much more valuable to have any link you can possibly get pointing to the same domain to help boost the rank and value of the pages on it. Having content or keyword-targeted pages on other domains that don’t benefit from the links you earn to your primary domain only creates more work.

- 100 links to Domain A ≠ 100 links to Domain B + 1 link to Domain A (from Domain B)

-

In Figure 6-18, you can see how earning lots of links to Page G on a separate domain is far less valuable than earning those same links to a page on the primary domain. For this reason, even if you interlink all of the microsites or multiple domains that you build, the value still won’t be close to what you could get from those links if they pointed directly to the primary domain.

- A large, authoritative domain can host a huge variety of content

-

Niche websites frequently limit the variety of their discourse and content matter, whereas broader sites can target a wider range of foci. This is valuable not just for targeting the long tail of search and increasing potential branding and reach, but also for viral content, where a broader focus is much less limiting than a niche focus.

- Time and energy are better spent on a single property

-

If you’re going to pour your heart and soul into web development, design, usability, user experience, site architecture, SEO, public relations, branding, and so on, you want the biggest bang for your buck. Splitting your attention, time, and resources on multiple domains dilutes that value and doesn’t let you build on your past successes on a single domain. As shown in Figure 6-18, every page on a site benefits from inbound links to the site. The page receiving the link gets the most benefit, but other pages also benefit.

Figure 6-17. How links can benefit your whole site

Figure 6-18. How direct links to your domain are better

When to Use a TLD Other Than .com

There are only a few situations in which you should consider using a TLD other than .com:

-

When you own the .com and want to redirect to an .org, .tv, .biz, and so on, possibly for marketing/branding/geographic reasons. Do this only if you already own the .com and can redirect.

-

When you can use a .gov, .mil, or .edu domain.

-

When you are serving only a single geographic region and are willing to permanently forgo growth outside that region (e.g., .co.uk, .de, .it, etc.).

-

When you are a nonprofit and want to distance your organization from the commercial world, .org may be for you.

New gTLDs

Many website owners have questions about the new gTLDs (generic top-level domains) that ICANN started assigning in the fall of 2013. Instead of the traditional .com, .net, .org, .ca, and so on with which most people are familiar, these new gTLDs range from .christmas to .autos to .lawyer to .eat to .sydney. A full list of them can be found at http://newgtlds.icann.org/en/program-status/delegated-strings. One of the major questions that arises is “Will these help me rank organically on terms related to the TLD?” Currently the answer is no. There is no inherent SEO value in having a TLD that is related to your keywords. Having a .storage domain does not mean you have some edge over a .com for a storage-related business. In an online forum, Google’s John Mueller stated that these TLDs are treated the same as other generic-level TLDs in that they do not help your organic rankings. He also noted that even the new TLDs that sound as if they are region-specific in fact give you no specific ranking benefit in those regions, though he added that Google reserves the right to change that in the future.

Despite the fact that they do not give you a ranking benefit currently, you should still grab your domains for key variants of the new TLDs. You may wish to consider ones such as .spam. You may also wish to register those that relate directly to your business. It is unlikely that these TLDs will give a search benefit in the future, but it is likely that if your competition registers your name in conjunction with one of these new TLDs your users might be confused about which is the legitimate site. For example, if you are located in New York City, you should probably purchase your domain name with the .nyc TLD; if you happen to own a pizza restaurant, you may want to purchase .pizza; and so on.

Optimization of Domain Names/URLs

Two of the most basic parts of any website are the domain name and the URLs for the pages of the website. This section will explore guidelines for optimizing these important elements.

Optimizing Domains

When you’re conceiving or designing a new site, one of the critical items to consider is the domain name, whether it is for a new blog, a company launch, or even just a friend’s website. Here are 12 indispensable tips for selecting a great domain name:

- Brainstorm five top keywords

-

When you begin your domain name search, it helps to have five terms or phrases in mind that best describe the domain you’re seeking. Once you have this list, you can start to pair them or add prefixes and suffixes to create good domain ideas. For example, if you’re launching a mortgage-related domain, you might start with words such as mortgage, finance, home equity, interest rate, and house payment, and then play around until you can find a good match.

- Make the domain unique

-

Having your website confused with a popular site that someone else already owns is a recipe for disaster. Thus, never choose a domain that is simply the plural, hyphenated, or misspelled version of an already established domain. For example, for years Flickr did not own http://flicker.com, and the company probably lost traffic because of that. It recognized the problem and bought the domain, and as a result http://flicker.com now redirects to http://flickr.com.

- Choose only dot-com-available domains

-

If you’re not concerned with type-in traffic, branding, or name recognition, you don’t need to worry about this one. However, if you’re at all serious about building a successful website over the long term, you should be worried about all of these elements, and although directing traffic to a .net or .org (or any of the other new gTLDs) is fine, owning and 301-ing the .com, or the ccTLD for the country your website serves (e.g., .co.uk for the United Kingdom), is critical. With the exception of the very tech-savvy, most people who use the Web still make the automatic assumption that .com is all that’s out there, or that it’s more trustworthy. Don’t make the mistake of locking out or losing traffic from these folks.

- Make it easy to type

-

If a domain name requires considerable attention to type correctly due to spelling, length, or the use of unmemorable words or sounds, you’ve lost a good portion of your branding and marketing value. Usability folks even tout the value of having the words include easy-to-type letters (which we interpret as avoiding q, z, x, c, and p).

- Make it easy to remember

-

Remember that word-of-mouth marketing relies on the ease with which the domain can be called to mind. You don’t want to be the company with the terrific website that no one can ever remember to tell their friends about because they can’t remember the domain name.

- Keep the name as short as possible

-

Short names are easy to type and easy to remember (see the previous two rules). Short names also allow more of the URL to display in the SERPs and are a better fit on business cards and other offline media.

- Create and fulfill expectations

-

When someone hears about your domain name for the first time, he should be able to instantly and accurately guess the type of content he might find there. That’s why we love domain names such as NYTimes.com, CareerBuilder.com, AutoTrader.com, and WebMD.com. Domains such as Monster.com, Amazon.com, and Zillow.com required far more branding because of their nonintuitive names.

- Avoid trademark infringement

-

This is a mistake that isn’t made too often, but it can kill a great domain and a great company when it does. To be sure you’re not infringing on anyone’s registered trademark with your site’s name, visit the U.S. Patent and Trademark office site and search before you buy. Knowingly purchasing a domain with bad-faith intent that includes a trademarked term is a form of cybersquatting referred to as domain squatting.

- Set yourself apart with a brand

-

Using a unique moniker is a great way to build additional value with your domain name. A “brand” is more than just a combination of words, which is why names such as Mortgageforyourhome.com and Shoesandboots.com aren’t as compelling as branded names such as Yelp and Gilt.

- Reject hyphens and numbers

-

Both hyphens and numbers make it hard to convey your domain name verbally and fall down on being easy to remember or type. Avoid spelled-out or Roman numerals in domains, as both can be confusing and mistaken for the other.

- Don’t follow the latest trends

-

Website names that rely on odd misspellings, multiple hyphens (such as the SEO-optimized domains of the early 2000s), or uninspiring short adjectives (such as “top x,” “best x,” and “hot x”) aren’t always the best choice. This isn’t a hard-and-fast rule, but in the world of naming conventions in general, if everyone else is doing it, that doesn’t mean it is a surefire strategy. Just look at all the people who named their businesses “AAA x” over the past 50 years to be first in the phone book; how many Fortune 1000s are named “AAA Company?”

- Use a domain selection tool

-

Websites such as Nameboy make it exceptionally easy to determine the availability of a domain name. Just remember that you don’t have to buy through these services. You can find an available name that you like, and then go to your registrar of choice. You can also try BuyDomains as an option to attempt to purchase domains that have already been registered.

Picking the Right URLs

Search engines place some weight on keywords in your URLs. Be careful, however, as the search engines can interpret long URLs with numerous hyphens in them (e.g., Buy-this-awesome-product-now.html) as a spam signal. The following are some guidelines for selecting optimal URLs for the pages of your site(s):

- Describe your content

-

An obvious URL is a great URL. If a user can look at the address bar (or a pasted link) and make an accurate guess about the content of the page before ever reaching it, you’ve done your job. These URLs get pasted, shared, emailed, written down, and yes, even recognized by the engines.

- Keep it short

-

Brevity is a virtue. The shorter the URL, the easier it is to copy and paste, read over the phone, write on a business card, or use in a hundred other unorthodox fashions, all of which spell better usability and increased branding. Remember, however, that you can always create a shortened URL for marketing purposes that redirects to the destination URL of your content—just know that this short URL will have no SEO value.

- Static is the way

-

Search engines treat static URLs differently than dynamic ones. Users also are not fond of URLs in which the big players are ?, &, and =. They are just harder to read and understand.

- Descriptive text is better than numbers

-

If you’re thinking of using 114/cat223/, you should go with /brand/adidas/ instead. Even if the descriptive text isn’t a keyword or is not particularly informative to an uninitiated user, it is far better to use words when possible. If nothing else, your team members will thank you for making it that much easier to identify problems in development and testing.

- Keywords never hurt

-

If you know you’re going to be targeting a lot of competitive keyword phrases on your website for search traffic, you’ll want every advantage you can get. Keywords are certainly one element of that strategy, so take the list from marketing, map it to the proper pages, and get to work. For dynamically created pages through a CMS, create the option of including keywords in the URL.

- Subdomains aren’t always the answer

-

First off, never use multiple subdomains (e.g., product.brand.site.com); they are unnecessarily complex and lengthy. Second, consider that subdomains have the potential to be treated separately from the primary domain when it comes to passing link and trust value. In most cases where just a few subdomains are used and there’s good interlinking, it won’t hurt, but be aware of the downsides. For more on this, and for a discussion of when to use subdomains, see “Root Domains, Subdomains, and Microsites”.

- Fewer folders

-

A URL should contain no unnecessary folders (or words or characters, for that matter). They do not add to the user experience of the site and can in fact confuse users.

- Hyphens separate best

-

When creating URLs with multiple words in the format of a phrase, hyphens are best to separate the terms (e.g., /brands/dolce-and-gabbana/), but you can also use plus signs (+).

- Stick with conventions

-

If your site uses a single format throughout, don’t consider making one section unique. Stick to your URL guidelines once they are established so that your users (and future site developers) will have a clear idea of how content is organized into folders and pages. This can apply globally as well as for sites that share platforms, brands, and so on.

- Don’t be case-sensitive

-

URLs can accept both uppercase and lowercase characters, so don’t ever, ever allow any uppercase letters in your structure. Unix/Linux-based web servers are case-sensitive, so http://www.domain.com/Products/widgets/ is technically a different URL from http://www.domain.com/products/widgets/. Note that this is not true in Microsoft IIS servers, but there are a lot of Apache web servers out there. In addition, this is confusing to users, and potentially to search engine spiders as well. Google sees any URLs with even a single unique character as unique URLs. So if your site shows the same content on www.domain.com/Products/widgets/ and www.domain.com/products/widgets/, it could be seen as duplicate content. If you have such URLs now, implement a 301-redirect pointing them to all-lowercase versions, to help avoid confusion. If you have a lot of type-in traffic, you might even consider a 301 rule that sends any incorrect capitalization permutation to its rightful home.

- Don’t append extraneous data

-

There is no point in having a URL exist in which removing characters generates the same content. You can be virtually assured that people on the Web will figure it out; link to you in different fashions; confuse themselves, their readers, and the search engines (with duplicate content issues); and then complain about it.

Mobile Friendliness

On April 21, 2015, Google rolled out an update designed to treat the mobile friendliness of a site as a ranking factor. What made this update unique is that it impacted rankings only for people searching from smartphones.

The reason for this update was that the user experience on a smartphone is dramatically different than it is on a tablet or a laptop/desktop device. The main differences are:

-

Screen sizes are smaller, so the available space for providing a web page is significantly different.

-

There is no mouse available, so users generally use their fingers to tap the screen to select menu items. As a result, more space is needed between links on the screen to make them “tappable.”

-

The connection bandwidth is lower, so web pages load more slowly. While having smaller-size web pages helps them load on any device more quickly, this becomes even more important on a smartphone.

To help publishers determine the mobile friendliness of their sites, Google released a tool called the Mobile-Friendly Test. In theory, passing this test means that your page is considered mobile-friendly, and therefore would not be negatively impacted for its rankings on smartphones.

There was a lot of debate on the impact of the update. Prior to its release, the industry referred to it as “Mobilegeddon,” but in fact the scope of the update was not nearly that dramatic.

Coauthor Eric Enge led a study to measure the impact of the mobile friendliness update by comparing rankings prior to the update to those after it. This study found that nearly 50% of non-mobile-friendly URLs lost rank. You can see more details from the study at http://bit.ly/enge_mobilegeddon.

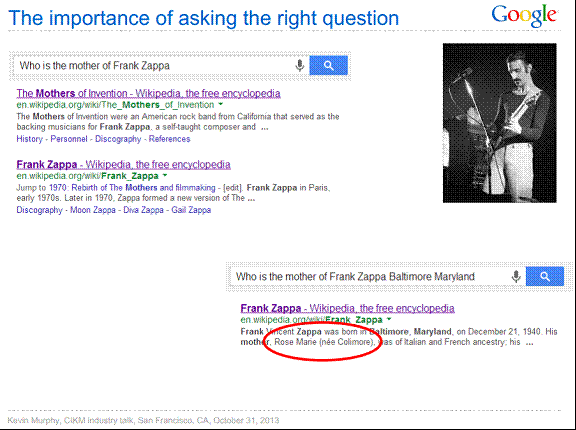

Keyword Targeting

Search engines face a tough task: based on a few words in a query (sometimes only one) they must return a list of relevant results ordered by measures of importance, and hope that the searcher finds what she is seeking. As website creators and web content publishers, you can make this process massively simpler for the search engines and, in turn, benefit from the enormous traffic they send, based on how you structure your content. The first step in this process is to research what keywords people use when searching for businesses that offer products and services like yours.

This practice has long been a critical part of search engine optimization, and although the role keywords play has evolved over time, keyword usage is still one of the first steps in targeting search traffic.

The first step in the keyword targeting process is uncovering popular terms and phrases that searchers regularly use to find the content, products, or services your site offers. There’s an art and science to this process, but it consistently begins with a list of keywords to target (see Chapter 5 for more on this topic).

Once you have that list, you’ll need to include these keywords in your pages. In the early days of SEO, the process involved stuffing keywords repetitively into every HTML tag possible. Now, keyword relevance is much more aligned with the usability of a page from a human perspective.

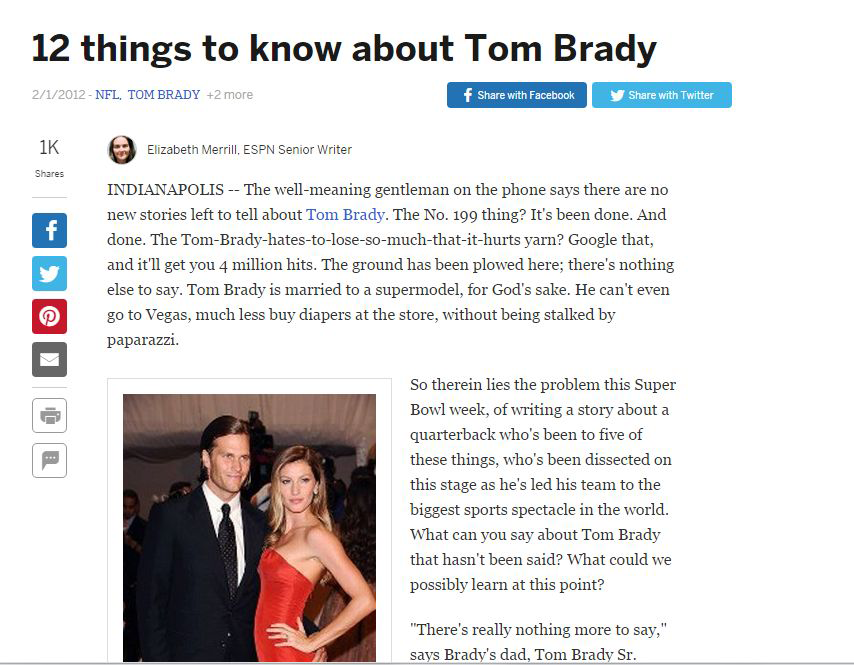

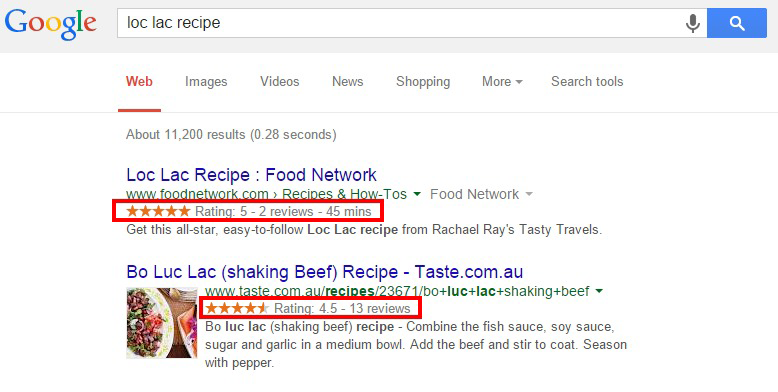

Because links and other factors make up a significant portion of the search engines’ algorithms, they no longer rank pages with 61 instances of free credit report above pages that contain only 60. In fact, keyword stuffing, as it is known in the SEO world, can actually get your pages devalued via search engine penalties. The engines don’t like to be manipulated, and they recognize keyword stuffing as a disingenuous tactic. Figure 6-19 shows an example of a page utilizing accurate keyword targeting.

Keyword usage includes creating titles, headlines, and content designed to appeal to searchers in the results (and entice clicks), as well as building relevance for search engines to improve your rankings. In today’s SEO, there are also many other factors involved in ranking, including term frequency—inverse document frequency (TF-IDF), co-occurrence, entity salience, page segmentation, and several others, which will be described in detail later in this chapter.

However, keywords remain important, and building a search-friendly site requires that you prominently employ the keywords that searchers use to find content. Here are some of the more prominent places where a publisher can place those keywords.

HTML <title> Tags

For keyword placement, <title> tags are an important element for search engine relevance. The <title> tag is in the <head> section of an HTML document, and is the only piece of meta information about a page that directly influences relevancy and ranking.

The following nine rules represent best practices for <title> tag construction. Do keep in mind, however, that a <title> tag for any given page must directly correspond to that page’s content. You may have five different keyword categories and a unique site page (or section) dedicated to each, so be sure to align a page’s <title> tag content with its actual visible content as well.

- Place your keywords at the beginning of the

<title>tag -

This positioning provides the most search engine benefit; thus, if you want to employ your brand name in the

<title>tag as well, place it at the end. There is a trade-off here, however, between SEO benefit and branding benefit that you should think about: major brands may want to place their brand at the start of the<title>tag, as it may increase click-through rates. To decide which way to go, you need to consider which need is greater for your business. - Limit length to 50 characters (including spaces)

-

Content in

<title>tags after 50 characters is probably given less weight by the search engines. In addition, the display of your<title>tag in the SERPs may get cut off as early as 49 characters.8 -

There is no hard-and-fast rule for how many characters Google will display. Google now truncates the display after a certain number of pixels, so the exact characters you use may vary in width. At the time of this writing, this width varies from 482px to 552px depending on your operating system and platform.9

-

Also be aware that Google may not use your

<title>tag in the SERPs. Google frequently chooses to modify your<title>tag based on several different factors that are beyond your control. If this is happening to you, it may be an indication that Google thinks that your<title>tag does not accurately reflect the contents of the page, and you should probably consider updating either your<title>tags or your content. - Incorporate keyword phrases

-

This one may seem obvious, but it is critical to prominently include in your

<title>tag the keywords your research shows as being the most valuable for capturing searches. - Target longer phrases if they are relevant

-

When choosing what keywords to include in a

<title>tag, use as many as are completely relevant to the page at hand while remaining accurate and descriptive. Thus, it can be much more valuable to have a<title>tag such as “SkiDudes | Downhill Skiing Equipment & Accessories” rather than simply “SkiDudes | Skiing Equipment.” Including those additional terms that are both relevant to the page and receive significant search traffic can bolster your page’s value. -

However, if you have separate landing pages for “skiing accessories” versus “skiing equipment,” don’t include one term in the other’s title. You’ll be cannibalizing your rankings by forcing the engines to choose which page on your site is more relevant for that phrase, and they might get it wrong. We will discuss the cannibalization issue in more detail shortly.

- Use a divider

-

When you’re splitting up the brand from the descriptive text, options include | (a.k.a. the pipe), >, -, and :, all of which work well. You can also combine these where appropriate—for example, “Major Brand Name: Product Category – Product.” These characters do not bring an SEO benefit, but they can enhance the readability of your title.

- Focus on click-through and conversion rates

-

The

<title>tag is exceptionally similar to the title you might write for paid search ads, only it is harder to measure and improve because the stats aren’t provided for you as easily. However, if you target a market that is relatively stable in search volume week to week, you can do some testing with your<title>tags and improve the click-through rate. -

Watch your analytics and, if it makes sense, buy search ads on the page to test click-through and conversion rates of different ad text as well, even if it is for just a week or two. You can then look at those results and incorporate them into your titles, which can make a huge difference in the long run. A word of warning, though: don’t focus entirely on click-through rates. Remember to continue measuring conversion rates.

- Target searcher intent

-

When writing titles for web pages, keep in mind the search terms your audience employed to reach your site. If the intent is browsing or research-based, a more descriptive

<title>tag is appropriate. If you’re reasonably sure the intent is a purchase, download, or other action, make it clear in your title that this function can be performed at your site. Here is an example from http://www.bestbuy.com/site/video-games/playstation-4-ps4/pcmcat295700050012.c?id=pcmcat295700050012. The<title>tag of that page is “PS4: PlayStation 4 Games & Consoles - Best Buy.” The<title>tag here makes it clear that you can buy PS4 games and consoles at Best Buy. - Communicate with human readers

-

This needs to remain a primary objective. Even as you follow the other rules here to create a

<title>tag that is useful to the search engines, remember that humans will likely see your<title>tag presented in the search results for your page. Don’t scare them away with a<title>tag that looks like it’s written for a machine. - Be consistent

-

Once you’ve determined a good formula for your pages in a given section or area of your site, stick to that regimen. You’ll find that as you become a trusted and successful “brand” in the SERPs, users will seek out your pages on a subject area and have expectations that you’ll want to fulfill.

Meta Description Tags

Meta descriptions have three primary uses:

-

To describe the content of the page accurately and succinctly

-

To serve as a short text “advertisement” to prompt searchers to click on your pages in the search results

-

To display targeted keywords, not for ranking purposes, but to indicate the content to searchers

Great meta descriptions, just like great ads, can be tough to write, but for keyword-targeted pages, particularly in competitive search results, they are a critical part of driving traffic from the engines through to your pages. Their importance is much greater for search terms where the intent of the searcher is unclear or different searchers might have different motivations.

Here are six good rules for meta descriptions:

- Tell the truth

-

Always describe your content honestly. If it is not as “sexy” as you’d like, spice up your content; don’t bait and switch on searchers, or they’ll have a poor brand association.

- Keep it succinct

-

Be wary of character limits—currently Google displays as few as 140 characters, Yahoo! up to 165, and Bing up to 200+ (it’ll go to three vertical lines in some cases). Stick with the smallest—Google—and keep those descriptions at 140 characters (including spaces) or less.

- Write ad-worthy copy

-

Write with as much sizzle as you can while staying descriptive, as the perfect meta description is like the perfect ad: compelling and informative.

- Analyze psychology

-

The motivation for an organic-search click is frequently very different from that of users clicking on paid results. Users clicking on PPC ads may be very directly focused on making a purchase, and people who click on an organic result may be more interested in research or learning about the company. Don’t assume that successful PPC ad text will make for a good meta description (or the reverse).

- Include relevant keywords

-

It is extremely important to have your keywords in the meta

descriptiontag—the boldface that the engines apply can make a big difference in visibility and click-through rate. In addition, if the user’s search term is not in the meta description, chances are reduced that the meta description will be used as the description in the SERPs. - Don’t employ descriptions universally

-

You shouldn’t always write a meta description. Conventional logic may hold that it is usually wiser to write a good meta description yourself to maximize your chances of it being used in the SERPs, rather than let the engines build one out of your page content; however, this isn’t always the case. If the page is targeting one to three heavily searched terms/phrases, go with a meta description that hits those users performing that search.

-

However, if you’re targeting longer-tail traffic with hundreds of articles or blog entries or even a huge product catalog, it can sometimes be wiser to let the engines themselves extract the relevant text. The reason is simple: when engines pull, they always display the keywords (and surrounding phrases) that the user searched for. If you try to force a meta description, you can detract from the relevance that the engines make naturally. In some cases, they’ll overrule your meta description anyway, but because you can’t consistently rely on this behavior, opting out of meta descriptions is OK (and for massive sites, it can save hundreds or thousands of man-hours). Because the meta description isn’t a ranking signal, it is a second-order activity at any rate.

Heading Tags

The heading tags in HTML (<h1>, <h2>, <h3>, etc.) are designed to indicate a headline hierarchy in a document. Thus, an <h1> tag might be considered the headline of the page as a whole, whereas <h2> tags would serve as subheadings, <h3>s as tertiary-level subheadings, and so forth. The search engines have shown a slight preference for keywords appearing in heading tags. Generally when there are multiple heading tags on a page, the engines will weight the higher-level heading tags heavier than those below them. For example, if the page contains <h1>, <h2>, and <h3> tags, the <h1> will be weighted the heaviest. If a page contains only <h2> and <h3> tags, the <h2> would be weighted the heaviest.

In some cases, you can use the <title> tag of a page, containing the important keywords, as the <h1> tag. However, if you have a longer <title> tag, you may want to use a more focused, shorter heading tag including the most important keywords from the <title> tag. When a searcher clicks a result from the engines, reinforcing the search term he just typed in with the prominent headline helps to indicate that he has arrived on the right page with the same content he sought.

Many publishers assume that they have to use an <h1> tag on every page. What matters most, though, is the highest-level heading tag you use on a page, and its placement. If you have a page that uses an <h3> heading at the very top, and any other heading tags further down on the page are <h3> or lower level, then that first <h3> tag will carry just as much weight as if it were an <h1>.

Again, what matters most is the semantic markup of the page, and the first heading tag presumably is intended to be a label for the entire page (so it plays a complementary role to the <title> tag), and you should treat it as such. Other heading tags on the page should be used to label subsections of the content.