Conclusion

In a world of economic globalization, intensifying trade, and rapid and profound transformation of science and technology, modern companies must anticipate changes in their environment in order to adapt and remain competitive. As large data sets are now available from a wealth of different sources, companies are looking to use these resources to promote innovation, build customer loyalty, and increase operational efficiency. At the same time, the end use of these data is contested, and exploiting them requires a greater capacity for processing, analyzing, and managing the growing amount of data, a phenomenon also called the “Big Data” boom.

The revolutionary element of “Big Data” is indeed linked to the empowerment of the processes of production and exchange of massive, continuous, and ever faster data. Businesses collect vast amounts of data through interconnected tools, social networks, e-mails, surveys, web forms, and other data collection mechanisms. Each time someone searches on Google, uses a mobile app, tweets, or posts a comment on Facebook, they create a data stream. Simply by browsing online, a user leaves various traces, which can be retrieved in real time. It is up to the company to determine which of these traces might represent a competitive advantage.

These digital traces must be explored through interrelations and correlations, using specialized tools, in order to offer quality products and services and to implement appropriate development strategies. We can therefore add the “value” that these data represent for a company to the other “V’s” that make up Big Data (volume, variety, velocity, etc.). The “business value” of data raises several complex, context-dependent questions, and depends on how we interpret the data (processing, analysis, visualization, etc.) and on the importance we give to them. This is one of the biggest challenges of Big Data: “value creation from data” (data development).

Companies must create value from data flows and the available content in real time. They must consider how to integrate digital technology into their strategy. This consists of transforming complex, often unstructured data from different sources into “information”. The analysis of Big Data refers to the tools and methods used to transform these massive amounts of data into strategic information. The challenge, therefore, lies in the ability to combine as much data as possible from internal and external sources in order to extract the best elements for decision-making.

To create value through the large amounts of available data, especially since the development of the “Web”, it is necessary to:

- develop a favorable internal data culture, because data analysis is part of every business decision;

- foster collaboration, or synergy, between the different stages of value creation from data;

- master the data (structured, unstructured, etc.), which involves using IT tools for analysis and processing that help collect, store, manage, analyze, predict, visualize, and model the data. This requires technical skills to help develop new algorithms for the management and processing of data, particularly given its speed, volume, and variability;

- master the analysis techniques and know how to choose the most appropriate one in order to generate a profit. This requires human skills: “Data Scientists” or “Big Data analysts” who can make data speak. It calls for mastery of several disciplines (statistics, mathematics, computer science) and an understanding of the business application of “Big Data”;

- ensure the quality of data, because technology alone is not sufficient; it is thus necessary to define a preliminary logic and a specific data management strategy. Data governance involves a set of people, processes, and technology to ensure the quality and value of a company’s information. Effective data governance is essential to preserve the quality of data as well as its adaptability and scalability, allowing for a full extraction of the value of data;

- strengthen “Open Data” initiatives or the provision of data, both publicly and privately, so that they are usable at any time;

- include in the data processing a knowledge extraction process, “data mining” or “text mining”, so that the data have meaning. The raw data itself may not be relevant, and it is therefore important to transform them so they become useful and understandable;

- make the best visual presentation of data; “Business Intelligence” tools should provide a set of features for enhancing data. To understand data and the information they provide, it is important to illustrate them with diagrams and graphs that present the information clearly and eloquently.

Big Data, the processing and analysis of very large amounts of data, should therefore revolutionize the work of companies. Among French companies, however, this approach remains weakly developed. According to a study published by the consultancy firm E&Y, French companies are notably behind in this area. For two thirds of them, Big Data is “an interesting concept, but too vague to constitute an opportunity for growth”. Some sectors are nevertheless particularly active in the collection and processing of data on a large scale: according to the firm, retail, telecommunications, media, and tech companies are the most advanced in using customer data.

In this light, France must work quickly to be at the forefront of data development. Data development represents the seventh priority of the report “one principle and seven ambitions” of the “Innovation 2030” commission chaired by Anne Lauvergeon. The report indicates that Big Data is one of the main areas to develop in the coming years. According to the same report, the use of the mass data that governments and businesses currently hold would be a source of significant competitive gains.

The report provides a series of guidelines, such as:

- making data publicly available so that it can be used. France has already embarked on this aim through the project “Etalab”. But the report wishes to accelerate the movement according to the British model, to allow start-ups to “create eco-systems in France through certain uses for commercial purposes”;

- the creation of five data use licenses, covering data from employment centers, social security, national education, and higher education, as well as support for the enhancement of national heritage. This is to strengthen the collaboration between public and private actors;

- the establishment of a right to experimentation, under the auspices of “an observatory of data”. This involves evaluating the effectiveness of certain data processing techniques before considering a possible legislative framework;

- the creation of a dedicated technology resource center, to remove the entry barriers and reduce the time-to-market of new companies;

- strengthening the export capacity of SMEs, so that they are not confined to the French market, whilst encouraging the authorities to intervene further in this sector.

Data have become a gold mine; they are a raw material, as oil was in the 20th century. For geological reasons, oil is concentrated in certain parts of the world, whereas data are generated by users worldwide, transported on the Web, and accumulated and analyzed through specialist techniques and tools. The volume of data generated and processed by companies is therefore continually increasing. This observation is not new. But in the era of the Internet and the proliferation of connected devices, the analysis of these deposits has become complex.

Due to the constantly increasing volume of electronically available data, the design and implementation of effective tools that focus only on relevant information is becoming an absolute necessity. This involves not only expert systems but also data analysis methods, which aim to discover structures and relationships between facts using basic data and appropriate mathematical techniques. The goal is to analyze these data in order to find the relevant information that helps in making decisions.

For example, when we search for or order a book on Amazon, the website’s algorithm suggests other items that have been bought by thousands of users before us. Netflix goes even further: the collected data is used to decide which films to buy the movie rights to and which new programs to develop. Google Maps offers an optimized route based on traffic conditions in real time, while Facebook suggests new friends based on existing connections. All these suggestions are made possible by the analysis of a huge amount of data.

In the USA, the use of facial recognition cameras at the entrance of nightclubs has been transformed into a service. This is “SceneTap”, a mobile app that lets you know in real time the number of men and women present. Venue managers can run their establishments in real time, and customers can decide which venues they prefer to visit.

In France, the SNCF has focused on developing its business for the Internet and smartphones in order to control the information produced and disseminated on the Internet and to prevent Google from becoming the one that manages customer relations. This is what happened with Booking.com, on which all hotels are still dependent today.

Data are now produced daily and in real time from telephones, credit cards, computers, sensors, and so on. It is not just about the quantity and speed of production of these data; the real revolution lies in what can be created by combining and analyzing these flows. Big Data processing requires an investment in computing architecture to store, manage, analyze, and visualize an enormous amount of data.

This specific technology is a means to enrich the analysis and to reveal the strength of the available data. We must have the right processing method to best analyze data. But it also involves investing in the human skills needed to analyze these quantities precisely and to develop relevant algorithms and models. In other words, the company needs data scientists to make sense of these deposits. It is indeed important to select the most relevant data, and thus to include in the analysis the concept of quality and not only quantity: this is where the “governance strategy” comes in.

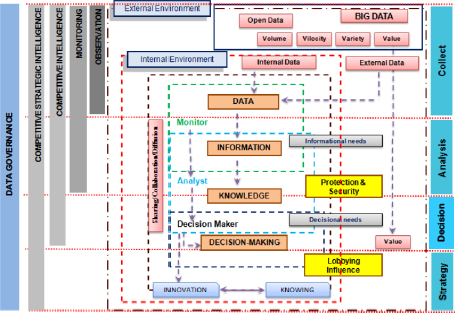

We can thus see that Big Data involves a number of components that work together to provide a rich ecosystem and to ensure even more powerful analyses. Such an ecosystem can be presented as in Figure C.1.

Figure C.1. Data governance model in the age of data revolution developed by Monino and Sedkaoui

Big Data opens up new possibilities of knowledge and provides another form of value creation for businesses. Today companies hold large amounts of data. These reservoirs of knowledge must be explored in order to understand their meaning and to identify relationships between data and the models explaining their behavior. The value of Big Data lies in taking advantage of the data produced by all stakeholders (companies, individuals, etc.): these data become a strategic asset and a value creation tool, and they give birth to a new organizational paradigm with opportunities for innovation.