Cloud User Controls

AWS China

Chaoyang District, Beijing

Institute of Information Engineering, Chinese Academy of Sciences

Haidian District, Beijing

Institute of Information Engineering, Chinese Academy of Sciences

Haidian District, Beijing

Pennsylvania State University

University Park, Pennsylvania

College of William and Mary

Williamsburg, Virginia

CONTENTS

13.2 Efficient Key-Enforced Access Control

13.2.2.1 Dual-Header Structure

13.2.3 Access Control Policy Updates

13.2.4.1 The Overhead for Privileges Grant

13.2.4.2 The Overhead for Revocation

13.3 Confidentiality and Access Control of Collaborative Editing

13.3.1 Background and Related Work

13.3.1.1 Real-Time Collaborative Editing Systems

13.3.1.2 Operational Transformation

13.3.2 Assumptions and Threat Model

13.3.4.3 Character Set and Special Character

13.3.6.2 Decryption Time of Pre-Edit Phase

13.1 INTRODUCTION

Cloud storage has certain advantages, such as paying for only what is used, being quick to deploy, offering easy adjustment of capacity, and built-in disaster recovery. Therefore, individuals and companies are resorting more to cloud providers for storing their data and sharing them with collaborators. However, cloud providers are generally considered as honest-but-curious, which means the cloud will carry out its promised operations honestly, but might pry into the sensitive data led by business interest or curiosity. To secure sensitive data and prevent illegal visitors (including cloud providers) from unauthorized access, a straightforward solution is to apply cryptographic techniques, so that data are encrypted at the user end before being outsourced to the cloud. In this case, only the data owner and authorized collaborators with knowledge of the key will be able to access the data. Therefore, access control policies in the cloud are enforced through assigning proper cryptographic keys among the owner and collaborators. Key-enforced cloud access control guarantees the cloud users will outsource their data without outsourcing the control, since the user possesses the key rather than the cloud provider.

However, when the access control policy needs to be updated (e.g., new collaborators join or some collaborators leave), it can be very costly for data owners to re-encrypt the data with a new key in order to satisfy the new policy. As the computation overhead for re-encryption (encryption/decryption) and transmission overhead for downloading are proportional to the size of data [1], policy updates may not propagate in real time, especially for large amounts of data. Therefore, it is not advisable for data owners with limited ability to take the heavy burden. An alternative solution is applying proxy re-encryption [2,3] which migrates the burden for re-encryption from data owners to the proxy. However, the adoption of public key cryptography impedes the wide usage of proxy re-encryption algorithms, because of the computation overhead. A symmetric encryption scheme called overencryption [4], where the data are encrypted again after being encrypted by the client, is a practical symmetric encryption solution for delegating update of the keys and re-encryption to cloud servers. Nevertheless, in the “pay-as-you-go” model of cloud computing, it is still costly for data owners to pay the cloud for the cipher operations. Furthermore, the delay for re-encryption cannot be ignored, especially in the presence of multiple access control policy updates of large data with replicas across multiple cloud servers. In this chapter, we propose a new key-enforced access control mechanism based on overencryption which will be elaborated in Section 13.2.

In addition, applying key-enforced access control to software as a service remains a challenge. For example, the collaborative editing service (e.g., Google Docs, Office Online, and Cloud9) has become a popular and convenient choice for online users. With such a service, a group of users can cooperatively edit documents through the Internet; in particular, they can concurrently modify the same document, even write on the same line. Meanwhile, the collaborative editing cloud service provides consistent views to all clients in a timely manner; for example, if each of two independent users concurrently inserts one character into the same line that is displayed identically on their own screens, all users will immediately see both of these characters appear in the expected positions.

The servers of collaborative editing cloud services carry out heavy processing to coordinate all online users’ operations. First, the cloud servers are responsible for receiving operation inputs from clients, transforming operations by operational transformation (OT) [5] to resolve conflicts, modifying the stored documents into a joint version based on these transformed operations, and then broadcasting modifications to all online clients. To transform operations, the server revises the position of a modification based on all the other concurrent operations. For example, when Alice and Bob, respectively, insert ‘a’ and ‘b’ in the ith and jth positions, Bob’s operation is transformed to be executed in the (j + 1)th if Alice’s operation is executed first and i < j. Second, during the editing phase, the above steps are repeated continuously in a real-time manner, to enable instant reading and writing by clients. Finally, the servers have to maintain a history of joint versions, because users’ operations may be done on different versions due to the uncertain network delays. In short, this centralized architecture takes full advantage of the cloud servers’ powerful computing, elasticity, and scalability, and brings convenience to resource-limited clients. In order to enable the cloud servers to coordinate the operations and resolve possible conflicts by OT, existing online collaborative editing systems process only plain text (or unencrypted) inputs. Therefore, the cloud service provider is always able to read all clients’ documents. This unfriendly feature might disclose users’ sensitive data, for example, to a curious internal operator in the cloud system. Although the secure sockets layer/transport layer security (SSL/TLS) protocols are adopted to protect data in transit against external attackers on the network, the input data are always decrypted before being processed by the cloud servers. In this chapter, we propose LightCore, a collaborative editing cloud service for sensitive data with key-enforced access control which will be elaborated in Section 13.3.

13.2 EFFICIENT KEY-ENFORCED ACCESS CONTROL

In this section, we propose a new key-enforced access control mechanism based on over-encryption [1,4], which implements the update of access control policy by enforcing two-layer encryption. In over-encryption, data resources are doubly encrypted at the base encryption layer (BEL) by data owners and at the surface encryption layer (SEL) by the cloud. When access control updates, the data just need to invoke the cloud to update the encryption policy at SEL. However, both granting and revoking authorizations need the cloud to encrypt over the pre-encrypted data, which brings considerable overhead for re-encryption computation and has an influence on the performance when large amounts of updating operations of access control policy happen concurrently. In order to implement an efficient update of access control policy in cryptographic cloud storage, this section presents a dual-header structure for eliminating the need of re-encrypting related data resources when new authorizations are granted and proposes batch revocation for reducing the overhead for re-encryption when revocations happen.

In our dual-header structure, data are encrypted by data owners at the BEL and then over-encrypted by cloud servers at the SEL. Each data resource is divided into the data content in the body and the cryptographic keys of data content in the header. Before being outsourced to the cloud, both the body and the header of data resources are pre-encrypted by data owners. After data are uploaded to the cloud, the cloud server will first encapsulate the header by encryption. Therefore, the header of all the resources is initialized by a two-layer encryption and is always a relatively small size. When granting new privileges, cloud servers only need to update the small header, instead of the body. Our dualheader structure has the following characteristics:

• High security. The dual-header structure prevents unauthorized visitors from accessing the sensitive data. Even if the cloud server suffers attacks, the sensitive data will not be divulged to unauthorized visitors.

• Low overhead. The dual-header structure makes the overhead for granting privileges independent of data size. With the dual-header structure, there is no re-encryption of any data content (possibly of large size), so it offers significant benefits in reducing the overhead when new privileges are granted.

In order to prevent the revoked user from accessing future versions of the data with the key they possess, the overhead for re-encryption brought by revocation operations cannot be avoided. Our batch revocation mechanism, combining lazy revocation to a certain group of revocation requests, provides a considerable improvement of over-encryption systems, by reducing the number of operations on large amounts of data.

13.2.1 Preliminaries

Cryptographic cloud storage [6] is proposed to securely outsource sensitive data resource to the honest-but-curious cloud. It can protect sensitive data against both the cloud provider and illegal visitors, by encrypting data at the client side before outsourcing. The security lies in appropriate key distribution to users (collaborators) based on the access control policy for sharing data among collaborators. Keeping cryptographic keys secret from the cloud provider is essential for those data owners with a high security requirement. However, it makes it difficult for data owners to resort to the cloud provider for updating the access control policy when the cooperative relationship changes. Additionally, data with different access control policies should be encrypted with different keys when fine-grained data access control is desired. This could upset the users, as they would be required to maintain multiple keys for different data resources.

Our work is based on the over-encryption approach [1,4], which was proposed to avoid the need for shipping resources back to the owner for re-encryption after a change in the access control policy. On the premise of implementing fine-grained access control, over-encryption also forces a user to keep one or two private keys to access all the authorized resources, by subtly constructing a key derivation structure. In overencryption, data resources are doubly encrypted at the BEL and the SEL. At BEL, data are encrypted by data owners at the client side and data owners are responsible for distributing the decryption keys to users. After data are outsourced to the cloud, the encrypted data are overencrypted by the cloud at SEL, for updating access control policies. Only those with keys of the two encryption layers can decrypt the data, so the cloud provider offers additional protection to prevent those who can obtain the keys of the BEL from accessing the data.

When the cooperative relationship or the access control requirements of data owners change, the access control policy should be updated as well. In overencryption, the data owner only needs to call the cloud servers to re-encrypt the data at the SEL. However, re-encrypting large amounts of data and transmitting requests across multiple servers with replicas are also costly for the cloud when multiple access control policy updates happen. One potential limitation of overencryption is that the cloud might need to re-encrypt the content of related data resources when new privileges are granted. Another improvable point is that immediate revocation could increase the overhead for repetitive cipher operations, when revoking privileges toward the same resources frequently happens.

13.2.1.1 Over-Encryption

In over-encryption, if a set of data resources can be accessed by the same access user set, they will be encrypted with the same key at the BEL, or else they will be encrypted with different keys. A user just needs to maintain one or two private keys to access all the resources that are authorized to him. Over-encryption is implemented by constructing a key derivation structure, where one key can be derived from another key through public tokens.

The key derivation structure of over-encryption is based on the access control list (ACL) of data resources, in which over-encryption divides all the users into different access user sets, and each access user set is associated with a key. Data resources with the same access user set are encrypted with the same key. The associated key of the access user set can be derived by the associated key of any subset of the access user set. It is implemented by publishing public tokens and labels on each derivation path. For example, there are three data resources r1, r2, and r3. the access user set of r1 is {A,B} with associated key KAB; r2 and r3 with the same access user set {A,B,C} are encrypted with the key KABC; by publishing token tAB,ABC = KABC ⊗ ha (KAB, lABC) the user who possesses KAB can derive KABC by computing KABC = tAB,ABC ⊗ ha (KAB, lABC), where lABC is a publicly available label associated with KABC, ⊗ is the bita-bit xor operator, and ha is a secure hash function.

TABLE 13.1 An Example of the Implementation of Access Control Policy

(a) Secret Keys |

(b) Public Tokens |

|||

Resources |

Access User Sets |

Encryption Keys |

Labels |

Tokens |

r1,r9,r10 |

A,B |

hd(KAB) |

lAB |

tA,AB = KAB ⊕ ha(KA,lAB) |

r3,r4,r5 |

A,B,C |

hd(KABC) |

lAB |

tB,AB = KAB ⊕ ha(KB,lAB) |

r2,r6 |

C |

hd(KC) |

lABC |

tAB,ABC = KABC ⊕ ha(KAB,lABC) |

r7,r8 |

D |

hd(KD) |

lABC |

tC,ABC = KABC ⊕ ha(KC,lABC) |

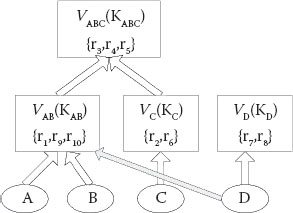

FIGURE 13.1 Key derivation structure.

We express the key derivation structure through a graph, having the vertex vU associated with a group of resources and keys to encrypt the resources. If Ui is a subset of Uj and a token ti,j is published, then there exists an edge connecting two vertices (vUi, vUj). For instance, Table 13.1 represents an example of access control policy, where hd and ha is a secure hash function. In this example, resources {r1,r9,r10} can be accessed by A and B; resources {r1, r9, r10} can be accessed by A, B, and C; resources {r2,r6} can be accessed by C; and resources {r7, r8} can only be accessed by D. In order to reduce keys for users to maintain, the key KABC can be derived by KAB and KC, then a key derivation structure shown in Figure 13.1 is constructed.

13.2.1.2 Limitations

In the key derivation structure, data resources with the same access user set are encrypted with the same key in a vertex. It reduces the number of keys and significantly simplifies key management for users. However, it might result in re-encrypting the other data resources in the same vertex of the granted data resource when new privileges are granted. In the example showed in Figure 13.1, if the data owner grants user D the privilege of accessing the data resource r1, the data owner needs to provide D with the decryption key hd(KAB) instead of the derivation key KAB, which might be used to derive the key of resources (e.g., r3,r4,r5) in other vertices. However, it cannot prevent unauthorized D from decrypting r9 and r10. Therefore, the cloud provider should over-encrypt r9 and r10 at the SEL instead of shipping them back to the data owner. In fact, reencrypting data resources in the same vertex when granting privileges should be avoided.

Another improvable point of over-encryption lies in revocation. In order to prevent the revoked users from accessing future versions of the data resource with the key they possess, the cloud should re-encrypt it at the surface layer encryption. However, the costly re-encryption operations might affect the performance of the cloud storage service when multiple revocations happen. Moreover, as a data resource might be accessed by a set of users, immediately revoking the access to a certain resource will produce repetitive re-encryption operations and may result in a long delay when revoking the privileges on large data.

13.2.2 Main Scheme

We construct a dual-header structure based on overencryption and propose batch revocation to implement an efficient update of the access control policy in cryptographic cloud storage. In order to implement fast encryption, we adopt symmetric ciphers in our proposed scheme. Data are firstly encrypted at the BEL by data owners. When the access control policy changes, data owners will not re-encrypt the encrypted data any more. All of the cipher operations for matching the new access control policy are executed by the cloud. The dual-header structure makes the overhead for granting privileges independent of data size. Therefore, the cloud just needs to update a small header of the granted resource, instead of the large content of other resources encrypted with the same key of the granted resource.

13.2.2.1 Dual-Header Structure

We divide each data resource into two parts: keys in the header and the data content in the body. At the initialization phase (before uploading), the data content in the body is encrypted with the key in the header by the data owner at the BEL. In our scheme, each resource uses a different key to encrypt its content. In order to prevent the cloud provider and unauthorized visitors from obtaining the secret key, the key in the header at the BEL is encrypted by the data owner. When data resources in header/body form are uploaded to the cloud servers, the cloud needs to over-encrypt the header at the SEL. Therefore, the two-layer encryption is imposed on the header of all the resources and we call it a dual-header structure. There are four types of keys in our dualheader structure:

• Data content key: dek. This is a symmetric key used in the BEL to encrypt the data content in the body. It is generated and encrypted by the data owner and stays invariant in the header in the cloud. Each data resource has a different data content key. This key is stored in the header in encrypted form and requires no distribution.

• Surface content key: sek. This is a symmetric key used in the SEL to encrypt the already encrypted data content in the body. At the initialization phase, it is null. When the revocation of the data resource happens, the cloud will set a new surface content key and encrypt the pre-encrypted data content with it in the body. The keys of separate data resources are also different and will be changed when revocations happen. This key is stored in the header in encrypted form and requires no distribution.

• Base head key: BKU. This symmetric key is used to encrypt the data content key in the header. The data owner also generates it before uploading the header to the cloud and it will also stay invariant in the cloud. It might be used to encrypt a set of resources with the same access control policy. This key is distributed to all the authorized users of set U, by constructing derivation paths from their private keys to BKU.

• Surface head key: SKU. This symmetric key is used in the SEL to encrypt the pre-encrypted data content key and surface content key in the header. The cloud generates it, and it will change when the access control policy updates. Data resources with the same access control policy share the same surface head key. This key is also distributed to the authorized users of set U, by constructing derivation paths from their private keys to SKU.

We use the four types of symmetric keys at the two encryption layers to protect the outsourced data. As the access control policy might update, the status of the data stored in the cloud is not immutable. After the data resource is uploaded to the cloud at the initialization phase, the data resource is in the initial status expressed in Table 13.2. When the access control policy of the data resource updates, the status of the data will change into the common status showed in Table 13.3.

At the initialization phase, the data owner first encrypts the data resource with data content key dek and generates the body Edek(data), then encrypts dek with the base head key BKUi and achieves the header EBKUi(dek), and finally uploads Id(r) (the identifier of the data resource r), Edek(data) and EBKUi(dek) to the cloud. After the cloud receives the data, the cloud first encrypts EBKUi(dek) in the header with the surface head key SKUi, and gets ESKUi(EBKUi(dek),null) (null means that the cloud has not over-encrypted Edek(data)). Then the data resource is stored in the initial status.

When the access control policy changes, the data owner should prevent the users who own dek from accessing the data. If data owners are unwilling to download the data resource and re-encrypt it by themselves, they can invoke the cloud to over-encrypt it. If the data resource is still in the initial status, the cloud needs to generate a surface content key sek and a new surface head key SKUj, then over-encrypt Edek(data) with sek and re-encrypt (EBKUi(dek),null) with the new SKUj. Then the status of the data will change into the common status. If the data resource is in the common status, the cloud will decrypt Esek(Edek(data)) with the old sek and re-encrypt it with a new sek.

TABLE 13.2 Initial Status of Data Resource

Id |

Header |

Body |

Id(r) |

ESKUi(EBKUi(dek),null) |

Edek(data) |

TABLE 13.3 Common Status of Data Resource

Id |

Header |

Body |

Id(r) |

ESKUj (EBKUi(dek),sek) |

Esek(Edek(data)) |

Our work assumes that each data resource has an ACL. In order to enforce fine-grained access control through reasonably assigning keys, we define the key derivation function KeyDerivation(U) to generate encryption keys, distribute keys to shared users, and publish tokens to derive keys for authorized users. For the detailed algorithm, code of KeyDerivation(U) refers to the key derivation function defined in the base model of over-encryption [4]. The definition for KeyDerivation (U) → (K, T, L) is as follows:

• Access User Sets U: U is the family of subsets of all the users which derives from the ACLs of all the data resources. For instance, if the ACL of data resource ri regulates that users {A,B,C} can read it, then Ui = {A,B,C} (Ui ∈ U) is the access user set of ri.

• Keys K: K can be the set of all the keys used to derive the keys of the header (base head key BKUi or surface head key SKUi). At the BEL at the initialization phase, ∀Ui ∈ U, ∃KUi is associated with the access user set Ui, where BKUi = hd (KUi). At the SEL, ∀Ui ∈ U, ∃ SKUi is associated with the access user set Ui.

• Public tokens T and labels L: T is the set of all the public tokens which are used to derive keys for the users. L is the set of all the labels which are used to mark access user sets. If ∃Uj ∈ U and Ui is the largest subset of Uj among U, then it must exist that a token tUi,Uj = KUj ⊗ ha (KUi, lUj) is the label of access user set Uj.

13.2.2.2 Batch Revocation

There are two revocation approaches in cryptographic cloud storage, depending on when the re-encryption operations are executed. In an active revocation approach, the revoked data resource is immediately re-encrypted with a new key after a revocation takes place. This is costly and might cause disruptions in the normal operation of cloud storage. In the alternative approach of lazy revocation [7], re-encryption happens only when the data resource is modified for the first time after a revocation.

We propose batch revocation combining lazy revocation to achieve better user experience and reduce the overhead for revocation. In the general scheme, when data owners need to prevent revoked users from accessing their resources, they can invoke the cloud provider to re-encrypt data after a revocation. In this case, revocation operations must involve reading data from the disk, decrypting them and re-encrypting them, so the overhead for revocation cannot be ignored, especially for the data of large size. In our scheme, the cloud can delay the revocations to the time when the predefined conditions are satisfied. The predefined conditions and the final time of revocation can be set by data owners according to their requirements. For example, the cloud can select to delay the revocations on the data of large size to the next read access, which are not frequently accessed. As the base head key is not updated when the data resource is modified, the data owner will use a new data content key to encrypt the content when the data owner modifies it, and the cloud just needs to re-encrypt the header without encrypting the content in the body (the data resource is stored in the initial status). In this case, the cloud can delay the revocations to the next write access in the scenario where multiple revocation operations frequently happen.

13.2.3 Access Control Policy Updates

There are two types of access control policy update operations in most storage systems: (1) grant new privileges to users and (2) revoke privileges. The privileges can be referred to as read privilege or write privilege. Our target is to protect the sensitive data from being disclosed to unauthorized visitors, and we restrict ourselves to the consideration of read privileges.

Policy update operations are often executed in most network applications or systems. For instance, according to the data obtained from MIT, which was given in Plutus [8] about lazy revocation, there are 29,203 individual revocations of users from 2916 different ACLs extracted from 7 months of AFS protection server logs. If the updating of access control policies requires heavy overhead, it will have a negative influence on the performance. In over-encryption, both granting and revoking involve reading data from the disk, encrypting data resource and decrypting data resource, so it results in a large transmission overhead and computation overhead. Our dual-header structure can efficiently reduce the overhead when new privileges are granted, by operating on the small header of the granted resource, instead of the data content with large size. As for revocation, our scheme applies batch revocation to reduce the overhead for repetitive re-encryption operations.

13.2.3.1 Granting Privileges

We define the function Grant(u,r) to authorize a user u to access the data resource r in cryptographic cloud storage systems. Granting privileges in our scheme is implemented by assigning the related keys to the authorized users. In the previous work of over-encryption, grant in both Full SEL and Delta SEL [4] methods involves encryption and decryption operations on the data resource content and other related resources encrypted with the same keys of r. However, we require no reencryption of the content and just require the cloud to re-encrypt the header of r.

When executing Grant(u,r), the data owner first updates the access user set r. USet of r then gets the derivation key K according to r.USet and computes the base head key r.BK of r by hashing K. As resources with the same privileges at the initialization phase are encrypted with the same base head key, which is not changed with the access control policy, r.BK may be derived from the private key Ku of u. If the base head key of r is not included in the set of keys KSet which can be derived by u, the data owner has to add a token from Ku to r.BK, in order to ensure that u can derive r.BK. Then the data owner invokes the cloud to over-encrypt the header of r to make sure only the new access user set r.USet can decrypt the header of r. When the cloud receives the request, it needs to decrypt the header of r with the old surface head key, re-encrypt the header and add tokens to ensure that all the authorized users in the access user set of U can decrypt the header at the SEL, which is implemented by calling the function ReEncryptHeader(header,U). The detailed steps can be seen in Table 13.4.

For the sake of simplicity, we assume that the function Grant(u,r) is referred to a single user u and a single resource r. The extension to sets of users and resources is easy to implement. The main overhead of Grant(u,r) lies in decrypting and re-encrypting the small header of r: DecryptHeader(r,r.SK) and ReEncryptHeader (r.Header,Unew) in Table 13.4.

TABLE 13.4 Algorithms for Granting and Revoking Authorizations

Granting New privileges |

Revoking Privileges |

Data Owner: Grant(u, r) 1. r. USet ← r.USet∪{u} 2. KUi ← GetKey(r) 3. r.BK ← Hd(KUi) 4. KSet ← FindAllKey(Ku) 5. If r.BK ∉ KSet Then AddToken(Ku,r.BK) 6. OverEncryptHeader(r,r.USet) Cloud: OverEncryptHeader(r,Unew) 1. If r.USet ≠ Unew Then r.SK ← GetKey(r) r.Header ← DecryptHeader(r,r.SK) ReEncryptHeader(r.Header, Unew) |

Data Owner: Revoke(U, r) 1. r.USet ← r.USet – U 2. OverEncryptResource(r, r.USet) Cloud: BatchRevoke(r, Unew) 1. r.USet ← Unew 2. If Tcurr ≥ RevocationTime(r) or the predefined conditions are satisfied Then r.SK ← GetKey(r) r.Header ← DecryptHeader(r.Header, r.SK) If r.Header.sek ≠ null Then sekold ← r.Header.sek r.Body ← DecryptBody(r.Body, sekold) r.Header.sek ← GenNewKey() EncryptBody(r.Body, r.Header.sek) ReEncryptHeader(r.Header, Unew) 3. Else wait… |

Cloud: ReEncryptHeader(header; U) 1. If ∃ Ui is an access user set and Ui = U Then SK ← GetSurfaceHeadKey(Ui) 2. Else SK ← GenNewKey() 3. EncryptHeader(header, SK) 4. While U ≠ null % Ensure all the users in U can decrypt the header Umax ← MaxSubUset(U) % Find the maximal access user set Umax, Umax⊆U SKUmax ← GetSurfaceHeadKey(Umax) AddToken(SKUmax, SK) U ← U – Umax |

13.2.3.2 Revoking Privileges

Revocation in our scheme is implemented by updating the keys and re-encrypting the resource at the SEL. Users whose privileges will be revoked might preserve the keys of the related resources locally, therefore the revoked resource should be re-encrypted with new keys. As the cloud could not change the base layer encryption data, we need the cloud to re-encrypt the resource at the SEL.

We define the function Revoke(r,U) at the client side to revoke a set of users U(|U| >= 1) the access to a resource r. At the cloud side, we define the function BatchRevoke(r,Unew) to revoke a set of users not in Unew on r. When executing Revoke(r,U), the data owner updates the access user set r.USet of r by deleting the revoked users U from r. USet, invokes the cloud to over-encrypt r, and requires the cloud to ensure that only users in r.USet can access the new decryption keys by executing OverEncryptResource(r,r.USet). When it receives the request, the cloud will record the freshest access user set of r and wait for the revocation time to execute the function BatchRevoke(r,U). The data owner can define a time period for resources to execute revocations, then the cloud must execute revocations when the final time arrives. The data owner can also predefine conditions to require the cloud execute re-encryption. When the cloud needs to execute re-encryption for revoked resource r, it has to decrypt the header of r and extract the surface content key sekold of r. If sekold is null, it means the body of r has not been over-encrypted by the cloud, or the cloud should decrypt the body of r with sekold. Finally, the cloud should encrypt the body of r with a new surface content key and re-encrypt the header to ensure only the authorized users in the access user set Unew can decrypt the header of r. The details are given in Table 13.4.

13.2.4 Performance Analysis

In cryptographic cloud storage systems, the keys to encrypt data resources need to be updated and re-encryption might be required in order to match the new access control policy. However, the overhead for re-encryption could not be ignored, especially for large amounts of data resources in the cloud. For example, encrypting data with the size of 1 GB will consume 7.15 s by applying OpenSSL 0.9.8 k with a block size of 8 KB (AES-256, encoding rate: 143.30 MB/s) [9]. Therefore, our scheme targets at reducing the overhead for reencryption after the access control policy changes.

13.2.4.1 The Overhead for Privileges Grant

The overhead for privileges grant in our dual-header structure always involves token retrieval and key derivation, reading data from the disk, and encryption/decryption. At the client side, the dominant computation overhead is the retrieval of tokens and key derivation to distribute keys to the new authorized users when new privileges are granted. At the cloud side, the cloud servers have to find the key of related resources by retrieving tokens and deriving keys, read the related resources from the disk, and re-encrypt them.

According to the performance evaluations of overencryption in the extension work [1], the time for retrieving tokens, independent of resource size, is much lower than that for downloading and decrypting large data resources. However, the time required to transfer and decrypt the resource in the experiment analysis of overencryption [1] dominates in the overhead for authorization on resources of a size larger than 1 MB in its local network configuration. The time also grows linearly with the increase in the resource size. Although the cloud does not transfer data resources back to the client, the cloud is required to read the resource from the disk, re-encrypt it, and sometimes might transfer a re-encryption request among different cloud servers with replicas. Therefore, reading data from the disk, decrypting and encrypting data dominate in the overhead for access control policy updates. As the time for reading data from the disk, decrypting and encrypting data is proportional to the size of data resources, our approach that operating on small (about KB level) headers rather than operating on data content (perhaps MB/GB/TB level) has significant benefits in reducing the overhead.

13.2.4.2 The Overhead for Revocation

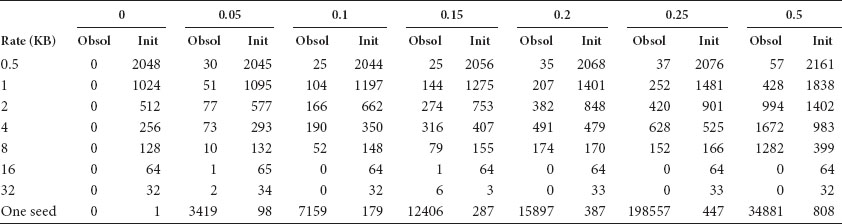

We find that the number of operations of cloud servers on data resources is different between revoking a group of users on a resource one by one and batching the revocations of the group of users on the resource. This is due to data resources with the same ACL encrypted with the same keys. We assume the header or the body of a data resource r is encrypted with a key KABCDEF at the SEL by the cloud, which means it can be read by a set of users {A,B,C,D,E,F} and now r can just be accessed by {A,E,F} after a series of revocations. We give a comparison between revoking {B,C,D} one by one and batching these revocations in Table 13.5. We can see there is a reduction in the number of repetitive operations on the data resource by applying batch revocations. It can significantly reduce overhead for transmission and cipher operations, especially for the data resources of large size when re-encrypting the content in the body.

TABLE 13.5 Comparison of the Number of Operations on Data Resource Content

Function |

Main Operations |

|

Revoking one by one |

Revoke(B,r) |

Read(r), Decrypt(r, KABCDEF), Encrypt(r, KACDEF) |

Revoke(C,r) |

Read(r), Decrypt(r, KACDEF), Encrypt(r, KADEF) |

|

Batch revocation |

Revoke(D,r) |

Read(r), Decrypt(r, KADEF), Encrypt(r, KAEF) |

Revoke({B,C,D},r) |

Read(r), Decrypt(r, KABCDEF), Encrypt(r, KAEF) |

|

Note: Example—Access policy updates: {A,B,C,D,E,F} can read r → {A,E,F} can read r Re-encrypt the header or the body of r with a new surface key: KU, U is the access user set of r.

13.2.5 Security Analysis

Access control of sensitive data in our scheme is implemented by reasonably distributing keys of the two encryption layer (BEL and SEL). In the honest-but-curious model, protecting sensitive data against both unauthorized visitors and the cloud is difficult to implement when re-encryption for the update of access control policy relies on the cloud. Therefore, the security of our scheme lies in the distribution of the cryptographic keys over the two levels, which is executed by the data owner and the cloud provider by appropriately publishing public tokens to construct derivation paths.

In order to prevent sensitive data from unauthorized access, data resources are firstly encrypted with the data content key at the BEL enforced by data owners. Adversaries must obtain the keys (data content key and base head key) of the BEL in order to obtain the plaintext of the data resource. As the data content key in the header is encrypted with the base head key, only with the base head key can the adversary decrypt the data content in the BEL. In fact, the base head key in our approach is equal to the key at the BEL of over-encryption.

We adopt the cloud to protect the base head key of the header at the SEL. In fact, unauthorized users might obtain the base head key in our scheme. For example, a revoked user might locally maintain the base head key of the revoked resource; a newly granted user might unintentionally acquire the base head key of the resource ri, when the user is authorized to access the resource rj, which is encrypted with the same base head key of ri. However, unauthorized users who have acquired the base head key cannot decrypt the data content because the cloud consolidates the defensive barrier. For those with just the base head key, the cloud encrypts the preencrypted data content key in the header with the surface head key. Adversaries cannot get the data content key without the surface head key generated by the cloud. For those who have both the base head key and the data content key generated by the data owner (revoked users), the cloud encapsulates the data content by encrypting it with the surface content key, and the surface content key is also protected by the surface head key. Adversaries cannot decrypt the data content without the surface head key. The surface head key is equal to the key to over-encrypt the pre-encrypted data content in the SEL of over-encryption.

Therefore, the security of our scheme lies in protecting the surface head key and the base head key, which equals to protecting keys at both the BEL and the SEL of over-encryption. The analysis of the related collusion attack by the cloud and the unauthorized users who have obtained the keys of the BEL can be referred to over-encryption [4].

13.2.6 Related Work

In order to protect shared sensitive data from unauthorized access in incompletely trusted servers, shared cryptographic file systems that implement access control have undergone considerable development. SiRiUS [10] and Plutus [8] are earlier file systems, which adopt cryptographic techniques to implement access control. SiRiUS encrypts each data file and divides each file into a meta data file and an encrypted data content file, but the size of meta data file is proportional to the number of authorized users. Plutus groups different files and divides each file into multiple file blocks. Each file group uses a file lock box key and each file block is encrypted with a unique key. However, as different file groups attach different file lock box keys, maintaining multiple keys for a user is inadvisable.

Attribute-based encryption (ABE), which was first proposed in fuzzy identity-based encryption [11], is another branch to share sensitive data in the cloud environment without maintaining keys for each file or each file group. ABE is now widely researched in cloud computing to protect sensitive data [12–14]. Shucheng Yu presents a fine-grained data access control scheme in cloud computing [12], which combines ABE, proxy re-encryption [15,16], and lazy encryption. It supports policy update. However, it cannot update a user’s privilege on a certain specific file, and revoking of users requires updating all the associated attributes and notifying the users who also maintain keys of the related attributes. Our approach just updates the key of the revoked resource.

Over-encryption [1,4] protects the shared sensitive data in honest-but-curious cloud and implements access control policy updates. Its architecture of access control is based on a key derivation structure [17–20] which can be described by an oriented graph, where a key of the vertex V1 can be derived by the key of another vertex V2 only when there is an edge from V1 to V2. In the key derivation structure, a user just needs to maintain private keys to derive all the keys of the authorized resources. In the previous work of over-encryption, both granting and revoking needed to encrypt the related resources. This consumes a lot of resources and time, especially for those data of GB/TB/PB size.

To reduce the overhead of revocations, lazy revocation proposed in Cepheus [21] is widely adopted by existing cryptographic file systems [22]. Lazy reencryption at the price of slightly reduced security [23] delays required re-encryptions until the next write access. Because it brings in much overhead for revocations (reading disc, decrypting data, and encrypting data), we apply batch revocation combining lazy revocation, which reduces the overhead and improves the performance of the cloud storage service.

13.3 CONFIDENTIALITY AND ACCESS CONTROL OF COLLABORATIVE EDITING

In this section, we propose LightCore, a collaborative editing cloud service for sensitive data. In LightCore, before being sent to the cloud all input characters are encrypted by a stream cipher algorithm, which encrypts the plaintext byte by byte. These characters compose the content of the document. The texts are always transmitted, processed, and stored in ciphertext. The cryptographic keys are shared by authorized users, and the encryption algorithms are assumed to be secure. The other operation parameters except the input texts are still sent and processed as plaintext, so the cloud servers can employ OT to coordinate all operations into a joint version but not necessarily understand the document.

LightCore assumes honest-but-curious cloud servers. On one hand, the honest cloud servers always follow their specification to execute the requested operations; on the other hand, a curious server tries to read or infer the sensitive texts in the users’ documents. Note that the honesty feature is assumed to ensure service availability and data integrity, but not for the confidentiality of sensitive data. A malicious cloud server that arbitrarily deviates from its protocol might break service availability or data integrity, but could not harm confidentiality, because the keys are held by clients only and every input character never appears as plaintext outside the clients.

By adopting stream cipher algorithms, LightCore keeps the lightweight load of clients, and takes advantage of the powerful resources of cloud servers as the existing collaborative editing cloud solutions. Because the stream cipher algorithm encrypts only the text byte by byte and the length of each input text is unchanged after being encrypted, the servers can conduct OT and other processing without understanding the ciphertext. On the contrary, the block cipher algorithms encrypt texts block by block (typically, 128 bits or 16 bytes), so the OT processing in ciphertext by servers is extremely difficult because users modify the text (i.e., insert or delete) in characters. That is, each character would have to be encrypted into one block with padding, to support the user operations in characters, which leads to an enormous waste in storage and transmission; otherwise, the workload of resolving edit conflicts would be transferred to the clients, which is unsuitable for resource-limited devices.

In fact, the byte-by-byte encryption feature can be implemented by stream cipher, or the CTR mode of block cipher.* In LightCore (and other collaborative editing systems), the text of a document is composed of a sequence of text segments with unfixed lengths. Because the document is a result of collaborative editing by several users, these text segments are not input and encrypted in chronological order; for example, the sequence of {‘Collaborative’, ‘Editing’, ‘Cloud’} is the result of {‘Collaborative Document Cloud’} after deleting ‘Document’ and then inserting ‘Editing’ by different users. Each text segment is associated with an attribute* called keystream_info, containing the parameters to decrypt it. For the CTR mode of block cipher, keystream_info contains a key identifier, a random string nonceIV, an initial counter, and an offset in a block; for stream cipher, it contains a key identifier and an initial position offset of the keystream. Note that all users share a static master key, and each data key to initialize cipher is derived from the master key and the key identifier.

The efficiency of LightCore varies as the keystream policy changes, that is, (a) different methods are used to generate keystreams, and (b) different key update rules of stream cipher are used in certain use scenarios (if stream cipher is used). In general, stream cipher has higher encryption speed and smaller delay than block cipher [24], but with a relative heavy initialization phase before generating keystreams. Moreover, different from the stateless CTR mode, stream cipher is stateful: given a key, to generate the jth byte of keystream, all kth bytes (k < j) must be generated first. Therefore, insertion operations in random positions (e.g., an insertion in Line 1 after another in Line 2) require the decrypters to cache bytes of keystream to use later; deletion operations cause the decrypters to generate lots of obsoleted bytes of keystream. This performance degradation is mitigated by updating the data keys of stream cipher in LightCore: the user (or encrypter) generates a new data key, re-initializes the encrypter, and then generates key-streams by bytes to encrypt texts. The key update rules are designed by balancing (a) the cost of initialization and keystream generation, and (b) the distribution and order of the deletion and insertion operations.

We implement LightCore based on Etherpad, an open-source collaborative editing cloud system. The LightCore prototype supports the RC4 stream cipher algorithm and the AES CTR mode. Two principles of stream cipher key update rules are adopted, that is, a user (or encrypter) updates the key of stream cipher, if (a) the generated bytes of the keystream come to a predetermined length or (b) the user moves to another position previous to the line of the current cursor to insert texts. Then, the evaluation and analysis on the prototype suggest the suitable keystream policy with detailed parameters for different typical use scenarios. LightCore provides collaborative editing cloud services for online users, with the following properties:

• Reasonable confidentiality against honest-but-curious cloud servers. All input characters are encrypted at the client side before being sent to the cloud servers, either these texts are kept in the document or deleted finally. The content of the document is kept secret to servers, but the format information such as length, paragraph, font and color is known, which enables the servers to coordinate users’ operations.

• Lightweight workload on clients. The cloud servers of LightCore are responsible for receiving users’ edit inputs, resolving edit conflicts, maintaining the documents, and distributing the current freshest versions to online clients. Compared with those of existing collaborative editing solutions, a LightCore user only needs to additionally generate keystreams to protect input texts as an encrypter and decrypt texts from servers as a decrypter.

• Real-time and full functionality. The byte-by-byte lightweight encryption is fully compatible with nonencrypted real-time collaborative editing services, so no editing function is impeded or disabled. Even for a new user that logins into the system to access a very long and repeatedly edited document, the keystream policy facilitates the user to decrypt it in real time.

13.3.1 Background and Related Work

13.3.1.1 Real-Time Collaborative Editing Systems

Collaborative editing is the practice of groups producing works together through individual contributions. In current collaborative editing systems, modifications (e.g., insertions, deletions, font format, or color setting) marked with their authors are propagated from one collaborator to the other collaborator in a timely manner (less than 500 milliseconds). Applying collaborative editing in textual documents, programmatic source code [25,26] or video has been a mainstream.

Distributed systems techniques for ordering [27] and storing have been applied in most real-time collaborative editing systems [28–30], including collaborative editor software and browser-based collaborative editors. Most of these have adopted decentralized settings, but some well-known systems use central cloud resources to simplify synchronization between clients (e.g., Google Docs [31] and Microsoft Office Online [32]). In a collaborative editing system with decentralized settings, the clients take more of the burden on broadcasting, ordering modifications, and resolving conflicts. However, in a cloud-based collaborative system, cloud servers help to order and merge modifications, resolve conflicts, broadcast operations, and store documents. It not only saves the deployment and maintenance costs but also reduces the burden on clients by using cloud resources.

However, the cloud may not be completely trusted by users. In order to protect sensitive data from unauthorized disclosure, data of users are encrypted before being sent to the cloud [18,20,33,34]. SPORC [35] encrypts modifications with block cipher AES at the client side, but the cloud server can only order, broadcast, and store operations, so it is a considerable burden for the clients to resolve conflicts and restore the documents from a series of operations when accessing the documents. In our scheme, data are encrypted with stream cipher, and no functionalities of cloud servers are impeded or disabled.

There are four main features in real-time collaborative editing systems: (a) highly interactive clients are responded to instantly via the network, (b) volatile participants are free to join or leave during a session, (c) modifications are not preplanned by the participants, and (d) edit conflicts on the same data are required to be well resolved to achieve the consistent views to all the clients. As the modifications are collected and sent in less than 500 milliseconds, the size of the input text is relatively small (about 2–4 characters) in spite of the copy and paste operations. In this case, edit conflicts happen very frequently.

13.3.1.2 Operational Transformation

The edit conflict due to concurrent operations is one of the main challenges in collaborative editing systems. Without an efficient solution to edit conflicts, it may result in inconsistent text to different clients when collaborators concurrently edit the same document. There are many methods to resolve conflicts such as the lock mechanism [36,37] and differ-patch [38–40]. Among these methods, operational transformation (OT) [5] adopted in our system is an efficient technology for consistency maintenance when concurrent operations frequently happen. OT was pioneered by C. Ellis and S. Gibbs [41] in the GROVE system. In more than 20 years, OT has evolved to acquire new capabilities in new applications [42–44]. In 2009, OT was adopted as a core technique behind the collaboration features in Apache Wave and Google Docs.

In OT, modifications from clients may be defined as a series of operations. OT ensures consistency by synchronizing shared state, even if concurrent operations arrive at different time points. For example, a string preotty, called S, is shared on the clients C1 and C2, C1 modifies S into pretty by deleting the character at the 3rd position and C2 modifies S into preottily by inserting “il” after the 5th position concurrently, the consistent result should be prettily. However, without appropriate solutions, it may cause inconsistency at client C1: shift from pretty as the result of deletion to prettyil as the result of insertion.

OT preserves consistency by transforming the position of an operation based on the previously applied concurrent operations. By adopting OT, for each two concurrent operations opi and opj irrelevant of the execution sequence, the OT function T(·) satisfies: opi ○ T (opj, opi) ≡ opj ○ T (opi, opj) where opi ○ opj denotes the sequence of operations containing opi followed by opj and ≡ denotes equivalence of the two sequences of operations. In the above example, the consistent result prettily can be achieved at client 1 by transforming the operation “insert ‘il’ after the 5th position” into “insert ‘il’ after the 4th position” based on the operation “delete the character at the 3rd position.”

In a collaborative editing cloud service, the cloud servers can be responsible for receiving and caching editing operations in its queue, imposing order on each editing operation, executing OT on concurrent operations based on the order iteratively, broadcasting these editing operations to other clients, and applying them in its local copy to maintain a latest version of the document. When receiving an operation oprc from the client, the cloud server executes OT as follows:

• Note that the operation oprc is generated from the client’s latest revision rc S0→ S1→… Sr → Src+1…→ SrH denotes the operation series stored in cloud. opc is relevant to Src.

• The cloud server needs to compute new relative to SrH. The cloud server first computes a new relative to Src+1 by computing T (Src+1, oprc). Similarly, the cloud server can repeat for Src+2 and so forth until represented relative to SrH is achieved.

Edit conflicts are also required to be resolved by OT at the client. Considering network delay and the requirement of nonblock editing at the client, the local editing operations may not be processed by the server quickly. Therefore, the client should cache its local operations in its queue and execute OT on the concurrent operations based on these cached operations.

13.3.2 Assumptions and Threat Model

LightCore functionally allows multiple collaborators to edit the shared documents and view changes from other collaborators using cloud resources. We assume that all the authorized collaborators mutually trust each other and strive together to complete the same task (e.g., drafting a report or programming a system). That is, all the changes committed by the client of any collaborator are well intentioned and respected by other collaborators.

The collaborative privileges are granted and managed by a special collaborator, called the initiator, who is in charge of creating the target document for future collaborative editing, generating the shared secret passcode, and distributing it among all authorized collaborators through out-of-band channels. The passcode is used for deriving the master encryption key to protect the shared contents and updates. We assume the passcode is strong enough to resist guessing attacks and brute force attacks. The master key with a random string is used to generate the data key, which initializes cryptographic algorithms to generate keystreams. We assume that the cryptographic algorithms to encrypt data are secure. Meanwhile, we assume that the random string will not be repeatedly generated by the clients. We assume that the client of each collaborator runs in a secure environment which guarantees that

• The generation and distribution of shared secret and privilege management on the client of the initiator are appropriately maintained.

• The secret passcode and keys that appear in the clients would not be stolen by any attackers.

• The communication channel between the client and the cloud is enough to transmit all necessary data in real time and protected by existing techniques such as SSL/TLS.

In LightCore, the cloud server is responsible for storing and maintaining the latest content, executing operations (delete, insert, etc.) against the content, resolving operational conflicts, and broadcasting the updates among multiple clients. The cloud server is considered to be honest-but-curious. In case of risking its reputation, the honest cloud server will timely and correctly disseminate modifications committed by all the authorized clients without maliciously attempting to add, drop, alter, or delay operation requests. However, motivated by economic benefits or curiosity, the cloud provider or its internal employees may spy or probe into the shared content, determine the document type (e.g., a letter) by observing the format and layout, and discover the pivot part of the documents by analyzing the frequency and quantity of access. Additionally, we assume that the cloud servers will protect the content from unauthorized users access and other traditional network attacks (such as DoS attacks), and keep the availability of shared documents, for example, by redundancy.

13.3.3 System Design

This section describes the system design of LightCore. We first give the basic model, including the specifications of clients and servers, and the encryption scheme. Then, the key management of LightCore is presented, and we analyze two different ways to generate keystreams.

13.3.3.1 Basic Model

Similar to existing collaborative editing systems, LightCore involves a group of collaborative users and a cloud server. Each client communicates with the server over the Internet, to send its operations and receive modifications from others in real time. For each document, the server maintains a history of versions. That is, it keeps receiving operations from users, and these modifications make the document shift from one version to another. When applying modifications on a version, the server may need OT to transform some operations. The server also keeps sending the current freshest version to users, that is, all transformed operations since the last version is sent. Because a user is still editing on the stale version when the freshest one is being sent, the OT processing may also be required to update its view at the client side. The above procedure is shown in Figure 13.2.

FIGURE 13.2 LightCore system model.

In LightCore, we design the crypto module for protecting the documents at the client side. The input characters of insertion operation (not deletion operation without inputs) are encrypted with keystreams byte by byte, but the position of each operation is sent in plaintext. When receiving the operation from one client, the cloud server may transform the operation by OT and apply it in the latest version based on the position. That is, no functionalities of the cloud server are impeded or disabled in ciphertext. After receiving the operation from other users through the cloud servers, the input characters of the operation will be firstly decrypted, so that it can be presented at the screen in plaintext.

13.3.3.1.1 Client At the client side, users are authenticated by the cloud before entering the system. The collaborative privileges are granted and managed by the initiator, who is in charge of creating the target document. Therefore, only authorized users can download or edit the document. Meanwhile, the master key to generate keystreams, which are to encrypt the text of the document, is only delivered to the authorized users by the initiator. Without the master key, both the cloud server and attackers from the network cannot read or understand the document.

There are two main phases at the client side to edit a document in LightCore: the pre-edit phase and the editing phase. In this pre-edit phase, the client requests a document to maintain a local copy, and the server will respond with the current freshest version of the document to the client. Before generating the local copy, the user is required to input a passcode, and the document is decrypted with the master key derived from the passcode. This decryption time depends on the length of the document, different from that of decrypting the small text of each operation (Op) in the editing phase. Then, the local copy is used for user’s edit operations, so that edit operations will not be interrupted by network delay or congestion. In the editing phase, the client encrypts its input characters of each operation before sending it to the cloud server. Meanwhile, the operation is cached in a queue (Ops) so that its concurrent operations can be transformed by OT, when it is not successfully received and processed by the server. In the system, every operation is associated with a revision number of the document, which denotes the version that the operation is generated from. When receiving an operation of other clients from the cloud server, the input characters of the operation are firstly decrypted. Then, the client may execute OT on the operation based on the revision number and applies the modification in its local copy.

13.3.3.1.2 Server First of all, to follow users’ requirements and the specification, access control is enforced by the cloud server. The server maintains a history of versions for each document. In the pre-edit phase, the server sends the freshest encrypted document to the client and holds an ordered list of modification records for the document (Ops). Every modification record contains an operation, its revision number, and its author information. In the editing phase, the server keeps receiving operations from the clients, transforming them by executing OT functions based on the modification records, ordering each operation by imposing a global revision number on it and broadcasting these updated operations with new revision numbers to other collaborative clients. Meanwhile, the cloud server merges these operations into a freshest version of the document in ciphertext and adds them to the modification records.

13.3.3.1.3 Encrypted Operations We preserve confidentiality for users’ data by adopting symmetric cryptographic algorithms with the byte-by-byte encryption feature at the client side. In our system, each modification at the client is called an operation. There are two types of edit operations: insertion and deletion. The other operations including copy and paste can also be represented by these two types of operations. An insertion is comprised of the position of the insertion in the document and the inserted text. And a deletion is comprised of the position of the deletion and the length of deleted text. Each inserted text segment of the operation is associated with an attribute called keystream_info, containing the parameters to encrypt and decrypt it. The other operations related to setting font or color are also supported by taking font or color value as attributes.

By applying the byte-by-byte encryption algorithms, the length of each input text is kept unchanged after being encrypted. The cloud server can conduct OT and other processing without understanding the ciphertext. Compared with block cipher, applying stream cipher (including the CTR mode of block cipher) in the system has the following advantages:

• It is difficult for the cloud server to help to resolve conflicts. To satisfy real-time view presentation, the operations are submitted every 500 milliseconds, so the input text of the operation is generally very small (about 2–4 characters). Applying block cipher to encrypt characters block by block makes it difficult for the server to conduct OT functions because users modify the text in characters. That is, the position of the operation related to OT would be extremely difficult to be determined, when modifying a character in a block with an unfixed length of padding. In this case, the OT processing overhead of the server would be transferred to the clients.

• It is feasible for the cloud server to hold a freshest well-organized document. Without understanding the content of the text encrypted by stream cipher, the server can merge operations and apply operations in the latest version of the document based on the position and unchanged length of the text. So, a freshest well-organized document in ciphertext is kept at the server. However, it is costly for the server to apply operations encrypted by block cipher in the latest version of the document. That is, each character would have to be encrypted into one block with fixed-length padding to support operations in characters, which leads to an enormous waste in storage and transmission; otherwise, a series of operations would be processed at the client side when a user requests the document in the pre-edit phase. Although clients can actively submit a well-organized document to the cloud periodically, the transmission cost may also increase the burden on clients.

13.3.3.2 Key Management

We construct a crypto module at the client to encrypt and decrypt the text of the document. In the crypto module, both stream cipher and the CTR mode of block cipher are supported. Each document is assigned a master key (denoted as mk), derived from a passcode. When users access the document, the passcode is required to be input. The passcode may be transmitted through out-of-band channels. We assume that the delivery of the passcode among users is secure.

The text segment of the document is encrypted with the data key (denoted as DK), which initializes the cryptographic algorithm to generate keystreams. The data key is generated by computing

where H is a secure keyed-hash mac function (e.g., SHA-256-HMAC), mk is the master key, userId is the identity of the collaborator, and keyId is a random key identifier. The userId with a unique value in the system is attached to each operation as the attribute author to distinguish different writers. The keyId generated by the client is a parameter contained in the attribute keystream_info. For the CTR mode of block cipher, keystream_info contains a key identifier, a random string nonceIV, an initial counter, and an offset in a block; the string nonceIV ║ counter is the input of the block cipher to generate keystreams, and the counter is increased by one after each block. For stream cipher, it contains a key identifier and an initial position offset of the keystream; the initial position offset locates the bytes of the keystream to decrypt the first character of the text segment. The keyId and nonceIV generated randomly ensure that the keystreams will not be reused. Therefore, different collaborators with different data key generate nonoverlapping keystreams, and bytes of keystreams are not reused to encrypt data.

After encrypting the input texts, the client will send the operation with the attributes author and keystream_info. Therefore, authorized readers and writers with the same master key can compute the data key and generate the same keystreams, based on the attributes when decrypting the texts.

13.3.3.3 Keystream Policies

Both stream cipher and block cipher CTR mode are applied in our system. In general, stream cipher has higher encryption speed and smaller delay than block cipher [24], but the performance of the stateful stream cipher may be degraded when decrypting a document generated from random insertions and deletions. In order to achieve an efficient cryptographic scheme, we design two key update rules for stream cipher, which take full advantage of stream cipher while matching the features of collaborative editing cloud services.

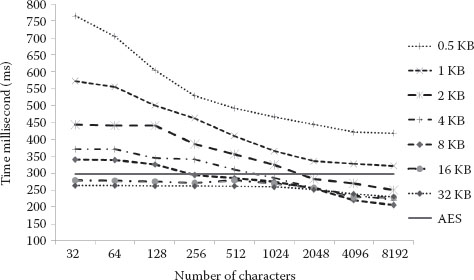

13.3.3.3.1 Comparison of Two Types of Cipher

In both stream cipher and the CTR mode of block cipher, each byte of the plaintext is encrypted one at a time with the corresponding byte of the keystream, to give a byte of the ciphertext. During the execution of the two types of cipher, it involves initialization phase and keystream generation phase. We test the initialization latency and key stream generation speed of ISSAC, Rabbit, RC4, and AES CTR by JavaScript on browsers. The results in Table 13.6 illustrate that the speed of these stream cipher algorithms is much faster than AES, but all of them are with a relatively heavy initialization phase before generating keystreams. For example, the time of executing 1000 times of initialization of RC4 is approximately equal to that of generating 0.38 MB bytes of a keystream. For the CTR mode of stateless block cipher, keystream generation is only related to the counter as the input of block cipher. Given the counter and cryptographic key, the CTR mode of block cipher outputs the corresponding bytes of the keystream.

It generally requires only one initialization (round key schedule) for the CTR mode of block cipher, for multiple block encryption or decryption. Unlike the CTR mode of block cipher, stream cipher is stateful: given a key, to generate the jth byte of keystream, all kth bytes (k < j) must be generated first. Therefore, when decrypting documents by stream cipher, insertion operations in random positions (e.g., an insertion in Line 1 after another in Line 2) require the decrypters to cache bytes of keystreams to use later; deletion operations cause the decrypters to generate lots of obsoleted bytes of keystreams. Examples of the impact from random insertions and deletions are shown in Figure 13.3.

When decrypting a document generated from random insertions, it may require repeatedly initializing the stream cipher and generating obsoleted bytes of keystreams, for the resource-limited clients without enough cache. If all collaborative clients input characters in sequential positions of the document, the position of the inserted texts in a document will be consistent with the position of the used bytes in the keystream. In this case, decrypting the document only requires one initialization and the sequentially generated keystream will be in full use. However, the text segments of the document are not input and encrypted in chronological order due to random insertions. In this case, it may cause inconsistent positions of the text segments and their used bytes of keystreams. For example: a character c1 is inserted in the position previous to the character c2 encrypted with the ith byte of the keystream; as the keystream cannot be reused for security consideration, c1 is encrypted with the jth byte where i < j; to decrypt c1, the bytes from 0th to jth should be firstly generated; if the ith byte is not cached, the client re-initializes the stream cipher to generate bytes from 0th to ith when decrypting c2; therefore, the bytes from 0th to ith called obsoleted bytes are repeatedly generated; otherwise, bytes from 0th to ith shall be preserved until they are reused. In fact, it is difficult to determine whether and when the generated bytes of the keystream will be reused. In this case, the size of cached bytes may be larger than that of the document. It is not advisable to cache such large bytes of keystreams when the document is of a large size.

TABLE 13.6 Comparison of Stream Cipher and CTR Mode of Block Cipher

Algorithms |

||||

Performance |

Stream Cipher |

Block Cipher CTR |

||

ISSAC [45] |

Rabbit [46] |

RC4 [47] |

AES CTR |

|

Initialization latency |

41.73 us |

41.31 us |

35.53 us |

56.79 us |

Keystream generation speed |

24.07 MB/s |

15.45 MB/s |

21.86 MB/s |

3.30 MB/s |

FIGURE 13.3 Examples of random insertions and deletions.

Random deletions also cause the decrypter to generate lots of obsoleted bytes with stream cipher. For example, a text segment T = <c1, c2, …, cn> is firstly inserted by a client, and characters →c2, …, cn–1> are deleted by another client; if all the characters of T are encrypted with the bytes of the keystream initialized by the same key, the bytes of the keystream related to <c2, …, cn–1> are required to be generated to decrypt cn. In this example, n–2 obsoleted bytes of the keystream are generated. However, if cn is encrypted with the bytes of another keystream, which is initialized by an updated key, the n−2 obsoleted bytes would not be generated. In this case, only one additional initialization with the new data key is required. Note that, it is efficient only when the time to generate the continuous deleted bytes of the keystream is longer than that of the additional initialization. If the size of deleted characters is small, it may be less efficient for frequently initializing the stream cipher.

13.3.3.3.2 Key Update Rules for Stream Cipher If a stable performance is expected, adopting the stateless CTR mode of block cipher is suggested. However, to take full advantage of fast stream cipher in LightCore, we design two key update rules to mitigate the performance degradation for stream cipher: the user (or encrypter) generates a new data key, re-initializes the stream cipher algorithm, and then generates keystreams by bytes to encrypt texts. The key update rules are designed by balancing (a) the cost of initialization and keystream generation, and (b) the distribution and order of the insertion and deletion operations.

One key update rule for random insertions is to keep the consistency between the positions of the used bytes in the keystream with the positions of inserted characters in the document. In LightCore, we update the data key to initialize the stream cipher when the user moves to another position previous to the line of the current cursor to insert texts. Therefore, we can ensure that the positions of the bytes in the keystream to encrypt a text T segment are smaller than those of the bytes to encrypt the text in the positions previous to T.

The second key update rule for random deletions is to limit the length of the keystream under each data key. The client updates the key when the generated or used bytes of the keystream come to a predetermined length. The value of the predetermined length should balance the cost of initialization and keystream generation. If the value is too small, it may frequently initialize the stream cipher so the time-consuming initialization may bring much overhead. If the value is too large, lots of deletions may also cause high overhead for generating obsoleted bytes of keystreams related to the deleted characters. By evaluating the performance of stream cipher with the key update rules of different predetermined length, a suitable predetermined length can be set in different use scenarios, which will be illustrated in Section 13.3.6.

13.3.4 Implementation

We built the LightCore prototype on top of Etherpad, a Google open-source real-time collaborative system. The client side code implemented by JavaScript can be executed on different browsers (IE, Chrome, Firefox, Safari, etc.). The cloud server of the system is implemented on Node.js, a platform built on Chrome’s JavaScript runtime. Based on the implementation of Etherpad, there are some issues to be addressed as follows, when we implement the prototype system.

13.3.4.1 Client Improvement

In the pre-edit phase, the decrypter decrypts the whole document from the beginning to the end. If stream cipher is used and the data keys are updated, the decrypter may find multiple data keys are used alternatively; for example, a text segment encrypted with the data key DK1 may be cut into two text segments by inserting another text segment encrypted with another data key DK2; then it results in an alternatively used data key list DK1, DK2, DK1. Therefore, in the pre-edit phase, the decrypter keeps the statuses of multiple stream ciphers initialized with different data keys; otherwise, it may need to initialize a same data key and generate a same keystream more than once. To balance the memory requirement and the efficiency, in the prototype the client maintains the status of two stream ciphers initialized with different data keys for each author of the document. One is called the current decrypter, and the other is to back up the current one called the backup decrypter. When decrypting a text segment encrypted by a new decrypter, the clients back up the current decrypter and update the current decrypter by re-initializing it with a new data key. If a text segment is required to be decrypted by the backup decrypter, the clients will exchange the current decrypter with the backup one. The generated bytes of the keystream by each decrypter are cached, until a predetermined length (1 KB in the prototype system) is reached or the decrypter is updated.

In the editing phase, the input characters of each insertion are encrypted before being sent to the cloud; so, the user (as a decrypter) receives and decrypts texts as the same order that the encrypter encrypts the texts. The client maintains the status of only one decrypter for each client to decrypt the operations from other clients. The bytes of the keystream are sequentially generated to be used, but the generated keystream is not cached since they will not be reused.

13.3.4.1.1 Attributes Update In order to decrypt the text correctly, the attribute keystream_info, including the position information of used bytes of the keystream, is attached to each insertion operation. The position information is expressed by the offset of the byte in the keystream related to the first character of the insertion. However, random insertions will change the value of keystream_info. For example: a text segment T = <c1, c2, …, cn> is encrypted by the bytes from kth to (k + n)th of one keystream, and the offset k is regarded as the value of attribute keystream_info A; then, a new text is inserted between ci and ci+1 of T; finally, T is cut into two text segments T1 = <c1, c2, …, ci> and T2 = <ci+1, ci+2, …, cn> with the same value of A. In fact, the value of A of T2 should be revised into k + i when decrypting the full document. Fortunately, this attribute value is easily revised by the client in the pre-edit phase. Instead of maintaining attributes keystream_info of all the old operations, and revising them for each random insertion in the editing phase, it is more efficient for the client to calculate the correct attribute value of the latter text segment based on the length of the previous text segments with the same keystream_info, because all the texts and the attributes are downloaded from the server during the decryption process in the pre-edit phase.

13.3.4.2 Server Improvement

In order to successfully decrypt data when the whole document is loaded, the server should also update the attributes for random deletions. The end result would be changing key positions and decryption errors that cannot be corrected at the client.