This chapter is a little different from the others. It addresses aspects of writing secure applications that are important but that don’t require an entire chapter to explain. Consider this chapter a catchall!

Cryptic error messages are the bane of normal users and can lead to expensive support calls. However, you need to balance helpfulness against the amount of information you hand to attackers. For example, if the attacker attempts to access a file, you should not return an error message such as "Unable to locate stuff.txt at c:\secretstuff\docs"; doing so reveals a little more information about the environment to the attacker. You should return a simple error message, such as "Request Failed," and log the error in the event log so that the administrator can see what’s going on. Another factor to consider is that returning user-supplied information can lead to cross-site scripting attacks if a Web browser might be used with your application. If you’re writing a server, log detailed error messages where the administrator of the system can read them.
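The split between what the caller sees and what the administrator sees can be sketched in a few lines of portable C. This is only an illustration: report_failure is a hypothetical helper, and a plain FILE stream stands in for the event log (a Windows service would more likely call ReportEvent).

```c
#include <stdio.h>

/* Hypothetical helper, not from the original text: writes the full
   detail where only the administrator can read it and returns the
   generic text that is safe to show to the caller. */
const char *report_failure(FILE *admin_log, const char *detail)
{
    if (admin_log != NULL && detail != NULL) {
        fprintf(admin_log, "ERROR: %s\n", detail);
        fflush(admin_log);
    }
    /* The caller learns nothing about paths or configuration. */
    return "Request failed.";
}
```

The detail string may contain user-supplied data, so it belongs only in the log and never in the response.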

Analogous to UNIX daemons, services are the backbone of Microsoft Windows NT and beyond. They provide critical functionality to the operating system and the user without the need for user interaction. Issues you should be aware of if you create a service are described next.

Services in Microsoft Windows are generally console applications designed to run unattended with no user interface. However, in some instances, the service may require interaction with the user. A service that presents users with dialog boxes is known as an interactive service. Services running in an elevated security context, such as SYSTEM, should not place windows directly on the desktop. In the user interface in Windows, the desktop is the security boundary; any application running on the interactive desktop can interact with any window on the interactive desktop, even if that window is invisible. This is true regardless of the security context of the application that creates the window and the security context of the application that interacts with it. The Windows message system does not allow an application to determine the source of a window message.

Because of these design features, any service that opens a window on the interactive desktop is exposing itself to applications executed by the logged-on user. If the service attempts to use window messages to control its functionality, the logged-on user can disrupt functionality by using malicious messages.

Services that run as SYSTEM and access the interactive desktop via calls to OpenWindowStation and GetThreadDesktop are also strongly discouraged.

Note

A future version of Windows might remove support for interactive services completely.

We recommend that the service writer use a client/server technology (such as RPC, sockets, named pipes or COM) to interact with the logged-on user from a service and use MessageBox with MB_SERVICE_NOTIFICATION for simple status displays. However, these methods can also expose your service interfaces over the network. If you don’t intend to make these interfaces available over the network, make sure they are ACL’d appropriately, or if you choose to use sockets, bind to the loopback address (127.0.0.1).
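For the sockets case, the following is a minimal sketch of a listener that is reachable only from the local machine. The helper names make_loopback_addr and listen_loopback_only are my own, and the small #ifdef shim is there only so that the same code compiles against Winsock or BSD sockets. On Windows the caller is assumed to have called WSAStartup and to link with Ws2_32.lib.

```c
#ifdef _WIN32
#include <winsock2.h>
typedef SOCKET sock_t;
#define BAD_SOCK INVALID_SOCKET
#define CLOSE_SOCK closesocket
#else
#include <sys/socket.h>
#include <netinet/in.h>
#include <arpa/inet.h>
#include <unistd.h>
typedef int sock_t;
#define BAD_SOCK (-1)
#define CLOSE_SOCK close
#endif
#include <string.h>

/* Fills addr with 127.0.0.1:port. */
void make_loopback_addr(unsigned short port, struct sockaddr_in *addr)
{
    memset(addr, 0, sizeof(*addr));
    addr->sin_family = AF_INET;
    addr->sin_port = htons(port);
    /* INADDR_LOOPBACK, not INADDR_ANY: remote hosts cannot connect. */
    addr->sin_addr.s_addr = htonl(INADDR_LOOPBACK);
}

/* Returns a listening TCP socket bound to the loopback address,
   or BAD_SOCK on failure. */
sock_t listen_loopback_only(unsigned short port)
{
    struct sockaddr_in addr;
    sock_t s = socket(AF_INET, SOCK_STREAM, IPPROTO_TCP);
    if (s == BAD_SOCK)
        return BAD_SOCK;
    make_loopback_addr(port, &addr);
    if (bind(s, (struct sockaddr *)&addr, sizeof(addr)) != 0 ||
        listen(s, SOMAXCONN) != 0) {
        CLOSE_SOCK(s);
        return BAD_SOCK;
    }
    return s;
}
```

Binding to the loopback address keeps the interface off the network, but any local user can still connect, so the service must still authenticate its callers.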

Be wary if your service code has these properties:

Runs as any high-level user, including LocalSystem, AND

The service is marked in the Security Configuration Manager (Log On As | Allow service to interact with desktop), or the registry value HKLM\CCS\Services\MyService\Type satisfies (Type & 0x0100) == 0x0100, OR

The code calls CreateService with (dwServiceType & SERVICE_INTERACTIVE_PROCESS) == SERVICE_INTERACTIVE_PROCESS, OR

The code calls MessageBox where (uType & (MB_DEFAULT_DESKTOP_ONLY | MB_SERVICE_NOTIFICATION | MB_SERVICE_NOTIFICATION_NT3X)) != 0, OR

The code calls OpenWindowStation("winsta0",...), SetProcessWindowStation, OpenDesktop("Default",…), and finally SetThreadDesktop, and creates UI on that desktop, OR

You call LoadLibrary and GetProcAddress on the above functions.
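If you audit code or configuration for the service-type condition in this list, the bit test is easy to get wrong in C because == binds more tightly than &. A small sketch of the check follows. The function name is hypothetical, and the constant is repeated from the Windows headers only so that the fragment compiles on its own.

```c
#include <stdbool.h>

#ifndef SERVICE_INTERACTIVE_PROCESS
#define SERVICE_INTERACTIVE_PROCESS 0x00000100UL /* value from winnt.h */
#endif

/* Returns true when the service type carries the interactive flag. */
bool is_interactive_service(unsigned long service_type)
{
    /* Without the parentheses this would parse as
       service_type & (FLAG == FLAG), which tests bit 0 instead. */
    return (service_type & SERVICE_INTERACTIVE_PROCESS)
           == SERVICE_INTERACTIVE_PROCESS;
}
```

The same expression works for the Type value read from the registry and for the dwServiceType argument passed to CreateService.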

CreateProcess is also dangerous when creating a new process in SYSTEM context, and the STARTUPINFO.lpDesktop field specifies the interactive user desktop ("Winsta0\Default"). If a new process is required in elevated context, the secure way to do this is to obtain a handle to the interactive user’s token and use CreateProcessAsUser.

Services can be configured to run using many different types of accounts, and determining which type of account to use often requires some thought. Let’s review the various types of accounts and take a look at the security implications.

LocalSystem is the most powerful account possible. It has many sensitive privileges available by default. If you’re targeting Windows 2000 and later and are part of a Windows 2000 (or later) domain, this account can also access resources across the network. It has the benefit that it will change its own password. Many services that run as LocalSystem don’t really require this high level of access, especially if the target platform is Windows 2000 and later. Several of the API calls that previously needed high levels of access (e.g., LogonUser) no longer require these rights under Windows XP and later. If you think your service requires operating as LocalSystem, review your reasoning—you may find that it is no longer required. The security implications of running as LocalSystem ought to be obvious: any flaw in your code will lead to complete compromise of the entire system. If you absolutely must run as LocalSystem, review your application design and implementation extremely carefully.

Network Service is a new account introduced in Windows XP. This account doesn’t have many privileges or high-level access but appears to resources across the network the same as the computer or LocalSystem account. Like LocalSystem, it has the benefit of changing its own password (because it is basically a stripped-down version of the LocalSystem account). One drawback to using this account is the fact that several services use this account. If your service gets breached, other services might also be breached.

LocalService is much the same as Network Service, but this account has no access to network resources. Other than this, it shares all the same benefits and drawbacks as Network Service. Both of these accounts should be considered if your service previously ran as LocalSystem.

Using a domain account to run a service can lead to very serious problems, especially if the account has high levels of access to either the local computer, or worse yet, the domain. Services running under domain user accounts create some of the worst security problems I’ve encountered.

Here’s a story. When I (David) first started working at Internet Security Systems, I bet my coworkers lunch that they couldn’t hack my system. At the time, they were all a bunch of UNIX people and I had the lone Windows NT system on our network. I figured that if they managed to hack my system, the price of lunch would be worth the lessons I’d learn. Over a year went by and due to careful administration of my system, no one had hacked me—not a small feat in a group of very sharp security programmers. One day I was scanning the network and found all the systems running a backup service under an account that had Domain Administrator credentials. I immediately went and chewed out our network administrator, who claimed that the boss required him to make the network insecure to get backups running. I told him it wouldn’t be long until the domain was being run by everyone on the network, and he didn’t believe me. The very next day, one of the people I liked least came and notified me that my system had been hacked! Just a few minutes of inspection revealed that the backup account had been used to compromise my system.

The problem with using a domain account to run a service is that anyone who either is or can become an administrator on a system where the service is installed can retrieve the password using the Lsadump2 utility by Todd Sabin of BindView. The first question people ask is whether this isn’t a security hole. In reality, it isn’t—anyone who can obtain administrator-level access could also reconfigure the service to run a different binary, or they could even inject a thread into the running service and get it to perform tasks under the context of the service’s user. In fact, this is how Lsadump2 operates—it injects a thread into the lsass process. You should be aware of this fact when considering which account type to use. If your service gets rolled out in an enterprise, and the administrator uses the same account on all the systems, you can quickly get into a situation where you’re unlikely to be able to secure all the systems at once. A compromise of any one system will result in a password reset on all the systems. Discourage your users from using the same account on all instances of your service, and if your service is meant to run on highly trusted systems like domain controllers, a different account should be used. This is especially true if your service requires high levels of access and runs as a member of the administrators group. If your service must run under a domain user account, try to ensure that it can run as an unprivileged user locally. If you provide enterprise management tools for your service, try to allow administrators to easily manage your service if there is a different user for each instance of the service. Remember that the password will need to be reset regularly.

A local account is often a good choice. Even if the account has local administrator access, an attacker needs administrator-level access to obtain the credentials, and assuming that you’ve generated unique passwords for each system at installation time, the password won’t be useful elsewhere. An even better choice is a local user account without a high level of access. If you can run your service as a low-level local user, a compromise of your service won’t compromise other services on the same system, and there’s a much lower chance that it leads to the compromise of the whole system. The biggest reasons you might not be able to run this way are that you require access to network resources or that you require high-level privileges. If you do run as a local user account, consider making provisions to change your own password. If the domain administrator pushes down a policy that nonexpiring passwords are not allowed, you’d prefer that your service keep running.

As you can see, there are several trade-offs with each choice. Consider your choices carefully, and try to run your service with the least privilege possible.

I have to admit, this is hard advice to follow—many applications, especially Internet protocol applications, announce version details through banner strings because it’s a part of the communications protocol. For example, Web servers can include a Server: header. This can be used by attackers to determine how to attack your application if they know a certain version is vulnerable to a specific attack. Provide an option for changing or removing this header. That said, many attackers would simply launch an attack regardless of the header information.

Note

You can change the version header of an Internet Information Services (IIS) 5 Web server by using URLScan from http://www.microsoft.com/windows2000/downloads/recommended/urlscan/default.asp.

This is similar to the point in the previous section: if error messages change between product versions, an attacker could raise the error condition, determine the product version from the error message, and then mount the attack. For example, in IIS 5, if an attacker wanted to attack Ism.dll, the code that handles .HTR requests, he could request a bogus file, such as Splat.htr, and if the error was Error: The requested file could not be found, he would know Ism.dll was installed and processing HTR requests, because Ism.dll processes its own 404 errors, rather than allowing the core Web server to process the 404.

Code in error paths is often not well tested and doesn’t always clean up all objects, including locks or allocated memory. I cover this in a little more detail in Chapter 19.

If a user or administrator turns off a feature, don’t turn it back on without first prompting the user. Imagine if a user disables FeatureA and installs FeatureB, only to find that FeatureA has miraculously become enabled again. I’ve seen this a couple of times in large setup applications that install multiple products or components.

The same good citizenship practices apply to drivers and kernel mode as with user-mode software. Of course, any kernel-mode failure is catastrophic. Thus, security for drivers includes the even larger issue of driver reliability. A driver that isn’t reliable isn’t secure. This section outlines some of the simple mistakes made and how they can be countered, as well as some best practices. It’s assumed you are familiar with kernel-mode software development.

But before I start in earnest, you must use both Driver Verifier and the checked versions of Ntoskrnl.exe and Hal.dll to test that your driver performs to a minimum quality standard. The Windows DDK documentation covers both extensively. You should also consider using the kernel-mode version of Strsafe.h discussed in Chapter 5 for string handling. The kernel-mode version is called NTStrsafe.h and is described in the release notes for the Windows XP Service Pack 1 DDK at http://www.microsoft.com/ddk/relnoteXPsp1.asp. Now let’s look at some specifics.

Almost all drivers that create device objects must set FILE_DEVICE_SECURE_OPEN as a characteristic when the device object is created. The only drivers that should not set this bit in their device objects are those that implement their own security checking, such as file systems. Setting this bit is a prerequisite for the I/O Manager to always enforce security on your device object.

Device object protection, set by a discretionary access control list (DACL) in a security descriptor (SD), should be specified in the driver’s INF file. This is the best place to protect device objects. An SD can be specified in an AddReg section in either [ClassInstall32] or [DDInstall.HW] section of the INF file. Note that if the INF is tampered with and the driver has been signed by Windows Hardware Quality Labs (WHQL), the installation will report the tampering.

Use IoCreateDeviceSecure (new to the Microsoft Windows .NET Server 2003 and Windows XP SP1 DDKs) to create named device objects and physical device objects (PDOs) that can be opened in "raw mode" (that is, without a function driver being loaded over the PDO). This function is usable in Windows 2000 and later; you must include Wdmsec.h in your source code and link with Wdmsec.lib.

Many IOCTLs have historically been defined with FILE_ANY_ACCESS. These can’t easily be changed in legacy code, owing to backward compatibility issues. However, for new code, to tighten up security on these IOCTLs, drivers can use IoValidateDeviceIoControlAccess to determine whether the opener has read or write access. This function is usable in Windows 2000 and later and is defined in Wdmsec.h.
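For new control codes, the required access can be stated in the code itself instead of FILE_ANY_ACCESS. The sketch below assumes the standard CTL_CODE bit layout from the DDK headers, repeated here only so that the fragment compiles standalone. The two IOCTL_MYDEV_ names and the helper function are hypothetical.

```c
#include <stdint.h>

#ifndef CTL_CODE
/* Values and layout as defined in the DDK headers. */
#define FILE_DEVICE_UNKNOWN 0x00000022u
#define METHOD_BUFFERED     0u
#define FILE_ANY_ACCESS     0u
#define FILE_READ_ACCESS    1u
#define FILE_WRITE_ACCESS   2u
#define CTL_CODE(DeviceType, Function, Method, Access) \
    (((DeviceType) << 16) | ((Access) << 14) | ((Function) << 2) | (Method))
#endif

/* Hypothetical control codes for a hypothetical device. */
#define IOCTL_MYDEV_GET_STATS \
    CTL_CODE(FILE_DEVICE_UNKNOWN, 0x800u, METHOD_BUFFERED, FILE_READ_ACCESS)
#define IOCTL_MYDEV_SET_CONFIG \
    CTL_CODE(FILE_DEVICE_UNKNOWN, 0x801u, METHOD_BUFFERED, \
             FILE_READ_ACCESS | FILE_WRITE_ACCESS)

/* Extracts the two access bits (bits 14 and 15) from a control code. */
uint32_t ioctl_required_access(uint32_t code)
{
    return (code >> 14) & 3u;
}
```

A code whose access bits are zero is a FILE_ANY_ACCESS code, which is the case where a call to IoValidateDeviceIoControlAccess in the dispatch routine is worth adding.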

Windows Management Instrumentation (WMI) is used to control devices, and its security works differently, in that it is per-interface instead of per-device. For Windows XP and earlier operating system versions, the default security descriptor for WMI GUIDs allows full access to all users. For Windows .NET Server 2003 and later versions, the default security descriptor allows access only to administrators. WMI interface security can be specified by adding a [DDInstall.WMI] section (new to the Windows .NET Server 2003 and Windows XP SP1 DDKs) containing an AddReg section with an SDDL string.

Drivers should avoid implementing their own security checks internally. Hard-coding security rules into driver dispatch routine code can result in drivers defining system policy. This tends to be inflexible and can cause system administration problems.

There are two types of handles that drivers can use: process-specific handles created by user-mode applications and global system handles created by drivers. Drivers should always specify OBJ_KERNEL_HANDLE in the object attributes structure when calling functions that return handles. This ensures that the handle can be accessed in all process contexts, and cannot be closed by a user-mode application.

Drivers must be exceedingly careful when using handles given to them by user-mode applications. First, such handles are context-specific. Second, an attacker might close and reopen the handle to change what it refers to while the driver is using it. Third, an attacker might be passing in such a handle to trick a driver into performing operations that are illegal for the application because access checks are skipped for kernel-mode callers of Zw functions. If a driver must use a user-mode handle, it should call ObReferenceObjectByHandle to immediately swap the handle for an object pointer. Additionally, callers of ObReferenceObjectByHandle should always specify the object type they expect and specify user mode for the mode of access (assuming the user is expected to have the same access that the driver has to the file object).

Many driver writers incorrectly assume their device cannot be opened without a symbolic link. This is not true—Windows NT uses a single unified namespace that is accessible by any application. As such, any "openable" device must be secured.

Drivers often allocate memory on behalf of applications. This memory should be allocated using the ExAllocatePoolWithQuotaTag function under a try/except block. This function will raise an exception if the application has already allocated too much of the system memory.

Don’t mix spin-lock types. If a spin lock is acquired with KeAcquireSpinLock, it must always be acquired using this primitive. You can’t associate this spin lock elsewhere with an in-stack-queued spin lock, for example. Also, it can’t be the external spin lock associated with an interrupt object or the spin lock used to guard an interlocked list via ExInterlockedInsertHeadList. Intermixing spin-lock types can lead to deadlocks.

Note

Build a locking hierarchy for all serialization primitives, and stick with it.

Of course, it’s a basic rule that your driver can’t wait for a nonsignaled dispatcher object at IRQL DISPATCH_LEVEL or above. Trying to do so results in a bugcheck.

A widespread mistake is not performing correct validation of pointers provided to kernel mode from user mode and assuming that the memory location is fixed. As most driver writers know, the portion of the kernel-mode address space that maps the current user process can change dynamically. Not only that, but other threads and multiple CPUs can change the protection on memory pages without notifying your thread. It’s also possible that an attacker will attempt to pass a kernel-mode address rather than a user-mode address to your driver, causing instability in the system as code blindly writes to kernel memory.

You can mitigate most of these issues by probing all user-mode addresses inside a try/except block, using functions such as ProbeForRead, ProbeForWrite, and MmProbeAndLockPages, and then wrapping all user-mode access in try/except blocks. The following sample code shows how to achieve this:

NTSTATUS AddItem(PWSTR ItemName, ULONG Length, ITEM *pItem) {
    NTSTATUS status = STATUS_NO_MORE_MATCHES;

    __try {
        ITEM *pNewItem = GetNextItem();
        if (pNewItem) {
            // ProbeXXXX raises an exception on failure.
            // Align on LARGE_INTEGER boundary.
            ProbeForWrite(pItem, sizeof(ITEM),
                          TYPE_ALIGNMENT(LARGE_INTEGER));
            // The copy stays inside the __try block because the user
            // buffer can become invalid even after a successful probe.
            RtlCopyMemory(pItem, pNewItem, sizeof(ITEM));
            status = STATUS_SUCCESS;
        }
    } __except (EXCEPTION_EXECUTE_HANDLER) {
        status = GetExceptionCode();
    }

    return status;
}

On the subject of buffers, here’s something you should know: zero-length reads and writes are legal and result in an I/O request packet (IRP) being sent to your driver with the length field (ioStack->Parameters.Read.Length) set to zero. Drivers must check for this before using other fields and assuming they are nonzero.

On a zero-length read, the following are true depending on the I/O type:

For direct I/O

Irp->MdlAddress will be NULL.

For buffered I/O

Irp->AssociatedIrp.SystemBuffer will be NULL.

For neither I/O

Irp->UserBuffer will point to a buffer, but its length will be zero.

Do not rely on ProbeForRead and ProbeForWrite to fail zero-length operations—they explicitly allow zero-length buffers!

When completing a request, the Windows I/O Manager explicitly trusts the byte count provided in Irp->IoStatus.Information if Irp->IoStatus.Status is set to any success value. The value returned in Irp->IoStatus.Information is used by the I/O Manager as the count of bytes to copy back to the user data buffer if the request uses buffered I/O. This byte count is not validated. Never set Irp->IoStatus.Information to the value passed in from the user in, for example, IoStack->Parameters.Read.Length. Doing so can result in an information disclosure problem. For example, a driver provides four bytes of valid data, but the user specified an 8K buffer, so the allocated system buffer is 8K and the I/O Manager copies four bytes of valid data and 8K-4 bytes of stale buffer contents from the system buffer. The system buffer is not initialized when it’s allocated, so the 8K-4 bytes being returned are random, old contents of the system’s nonpaged pool.
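The rule can be modeled outside the kernel. In the sketch below, READ_REQUEST is a hypothetical stand-in for the few IRP fields involved, not a real DDK structure, and complete_read is my own name. The point is only that the reported count is the number of bytes the driver actually produced, capped by what the caller asked for.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical model of the IRP fields that matter here. */
typedef struct {
    void  *system_buffer; /* models Irp->AssociatedIrp.SystemBuffer */
    size_t requested;     /* models Parameters.Read.Length */
    size_t information;   /* models Irp->IoStatus.Information */
} READ_REQUEST;

/* Copies at most 'available' bytes and reports exactly that many. */
void complete_read(READ_REQUEST *req, const void *data, size_t available)
{
    size_t n;

    req->information = 0;
    /* Zero-length reads are legal and arrive with no buffer. */
    if (req->requested == 0 || req->system_buffer == NULL)
        return;

    n = available < req->requested ? available : req->requested;
    memcpy(req->system_buffer, data, n);
    /* Bytes produced, never req->requested. */
    req->information = n;
}
```

With four bytes of data and an 8K request, information ends up as 4, so nothing beyond the valid data would be copied back to the caller.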

Also note that the I/O Manager also transfers bytes back to user mode if Irp->IoStatus.Status is a warning value (that is, 0x80000000-0xBFFFFFFF). The I/O Manager does not transfer any bytes in the case of an error status (0xC0000000-0xFFFFFFFF). The appropriate status code with which to fail an IRP might depend upon this distinction. For instance, STATUS_BUFFER_OVERFLOW is a warning (data transferred), and STATUS_BUFFER_TOO_SMALL is an error (no bytes transferred).
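The ranges above come down to the top two bits of the status value. A small sketch, with the function name being my own and the two status values repeated from the DDK headers:

```c
#include <stdbool.h>
#include <stdint.h>

/* Values as defined in ntstatus.h, renamed to avoid clashing with it. */
#define EX_STATUS_SUCCESS          0x00000000u
#define EX_STATUS_BUFFER_OVERFLOW  0x80000005u /* warning */
#define EX_STATUS_BUFFER_TOO_SMALL 0xC0000023u /* error */

/* True when the status is a success, informational, or warning value,
   that is, anything below the error range that starts at 0xC0000000. */
bool status_transfers_data(uint32_t status)
{
    return (status >> 30) != 3u;
}
```

A driver that has filled part of the buffer and wants the caller to see it would complete with the warning; one that wants nothing copied back would complete with the error.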

Direct I/O creates a Memory Descriptor List (MDL) that can be used to directly map a user’s data buffer into kernel virtual address space. This means that the buffer is mapped into kernel virtual address space and into user space simultaneously. Because the user application continues to have access while the driver does, it’s important to never assume consistency of this data between accesses. That is, don’t take "multiple bites" of data from the user data buffer and assume the data is consistent. Remember, the user could be changing the buffer contents while you’re trying to process it. Similarly, don’t use the user data buffer for temporary storage of intermediate results and assume this data won’t be changed by the user.
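One way to avoid taking multiple bites is to copy the request into private memory once and then validate and use only the copy. The following is a portable sketch of that idea. The REQUEST layout and the function name are hypothetical, and in a real driver the copy would also sit inside a try/except block.

```c
#include <stdint.h>
#include <string.h>

#define MAX_PAYLOAD 256u

/* Hypothetical request layout placed in the shared buffer. */
typedef struct {
    uint32_t length;               /* valid bytes in payload */
    uint8_t  payload[MAX_PAYLOAD];
} REQUEST;

/* Takes a single snapshot of the shared request and validates the
   snapshot. Returns 0 on success, -1 if the captured length is bad. */
int capture_request(const REQUEST *shared, REQUEST *captured)
{
    /* One bite: after this line the shared buffer is never read again. */
    memcpy(captured, shared, sizeof(*captured));

    if (captured->length > MAX_PAYLOAD)
        return -1;
    return 0;
}
```

Because the length check and every later use read the same private copy, a caller who rewrites the shared buffer after the check cannot change what the driver acts on.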

One of the most common problems with IOCTLs and FSCTLs is a lack of buffer validity checks (buffer presence assumed, sufficient length assumed, data supplied is implicitly trusted). There’s a common belief that a specified user-mode application is the only one talking to the driver—this is potentially incorrect.
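The three assumptions named above (buffer present, long enough, contents trustworthy) can each be checked explicitly. The sketch below is a portable illustration for a buffered request. SET_ITEMS_INPUT and validate_set_items are hypothetical names, and the buffer is assumed to be the kernel's own copy of the input.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical input layout: a count followed by that many items. */
typedef struct {
    uint32_t count;
    uint32_t items[1]; /* variable-length tail */
} SET_ITEMS_INPUT;

/* Returns true only if the buffer exists, holds the fixed header, and
   is long enough for the number of items the caller claims. */
bool validate_set_items(const void *buffer, size_t length)
{
    const SET_ITEMS_INPUT *in = (const SET_ITEMS_INPUT *)buffer;
    size_t header = offsetof(SET_ITEMS_INPUT, items);

    if (buffer == NULL || length < header)
        return false;

    /* Divide rather than multiply so a huge count cannot overflow. */
    if (in->count > (length - header) / sizeof(in->items[0]))
        return false;

    return true;
}
```

The length passed in should be the one the I/O Manager reports for the request, not a length field the caller wrote into the buffer.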

There’s an issue with using METHOD_NEITHER on IOCTLs and FSCTLs: the user arguments for InBuffer, InBufLen, OutBuffer, and OutBufLen are passed from the I/O Manager precisely as provided by the user and without any validation. This makes using this transfer type much more complicated than using the more generally applicable METHOD_BUFFERED, METHOD_IN_DIRECT, and METHOD_OUT_DIRECT. Don’t forget: access must be done in the context of the requesting process!

The same issue occurs when using fast I/O. Although only file systems can implement fast I/O for reads and writes, ordinary drivers can implement fast I/O for IOCTLs. This is the same as using METHOD_NEITHER.

In both of these cases, even though the buffer pointer is non-NULL and the buffer length is nonzero, the buffer pointer still might not be valid or, worse, might specify a location to which the user does not have appropriate access but the driver does.

Probably the single greatest cause of driver problems is the cancel routine because of inherent race conditions between IRP cancellation and I/O initiation, I/O completion, and IRP_MJ_CLEANUP. The best advice is to implement IRP cancel only if necessary. Drivers that can guarantee that IRPs will complete in a "short period of time"—typically, a couple of seconds—generally do not need to implement cancellation. Not implementing cancellation is a great way to reduce errors.

Avoid attempting to cancel in-progress I/O requests, because this is also a common source of problems. Unless the I/O will take an indeterminate amount of time to complete, don’t try to cancel in-progress requests. Obviously, some drivers must implement in-progress cancellation—for example, the serial port driver because a pending read IRP might sit there forever. Even in this case, perhaps use a timer to see if it will complete "on its own" in a "short time."

Never try to optimize IRP cancellation. It’s a rare event, so why optimize it? If you must implement IRP cancellation, consider using the IoCsqXxxx functions. These are defined in CSQ.H.

If using system queuing, consider using IoSetStartIoAttributes with NonCancelable set to TRUE. (This function is available in Windows XP and later.) This ensures that the driver’s StartIo entry point is never called with a cancelled IRP. This approach is helpful because it avoids nasty races, and you should always use it when using system queuing and when the driver does not allow in-progress requests to be cancelled.

At numerous security code reviews, code owners have responded with blank looks and puzzled comments when I’ve asked questions such as, "Why was that security decision made?" and "What assertions do you make about the data at this point?" Based on this, it has become obvious that you need to add comments to security-sensitive portions of code. The following is a simple example. Of course, you can use your own style, as long as you are consistent:

// SECURITY!
// The following assumes that the user input, in szParam,
// has already been parsed and verified by the calling function.
HANDLE hFile = CreateFile(szParam,
                          GENERIC_READ,
                          FILE_SHARE_READ,
                          NULL,
                          OPEN_EXISTING,
                          FILE_ATTRIBUTE_NORMAL,
                          NULL);
if (hFile != INVALID_HANDLE_VALUE) {
    // Work on file.
}

This little comment really helps people realize what security decisions and assertions were made at the time the code was written.

Don’t create your own security features unless you absolutely have no other option. In general, security technologies, including authentication, authorization, and encryption, are best handled by the operating system and by system libraries. It also means your code will be smaller.

Often I see applications that rely on the user making a serious security decision. You must understand that most users do not understand security. In fact, they don’t want to know about security; they want their data and computers to be seamlessly protected without their having to make complex decisions. Also remember that most users will choose the path of least resistance and hit the default button. This is a difficult problem to solve—sometimes you must require the user to make the final decision. If your application is one that requires such prompting, please make the wording simple and easy to understand. Don’t clutter the dialog box with too much verbiage.

One of my favorite examples of this is when a user adds a new root X.509 certificate to Microsoft Internet Explorer 5. The dialog box is full of gobbledygook, as shown in Figure 23-1.

I asked my wife what she thought this dialog box means, and she informed me she had no idea. I then asked her which button she would press; once again she had no clue! So I pressed further and told her that clicking No would probably make the task she was about to perform fail and clicking Yes would allow the task to succeed. Based on this information, she said she would click Yes because she wanted her job to complete. As I said, don’t rely on your users making the correct security decision.

This section describes how to avoid common mistakes when calling the CreateProcess, CreateProcessAsUser, CreateProcessWithLogonW, ShellExecute, and WinExec functions, mistakes that could result in security vulnerabilities. For brevity, I’ll use CreateProcess in an example to stand for all these functions.

Depending on the syntax of some parameters passed to these functions, the functions could be incorrectly parsed, potentially leading to different executables being called than the executables intended by the developer. The most dangerous scenario is a Trojan application being invoked, rather than the intended program.

CreateProcess creates a new process determined by two parameters, lpApplicationName and lpCommandLine. The first parameter, lpApplicationName, is the executable your application wants to run, and the second parameter is a pointer to a string that specifies the arguments to pass to the executable. The Platform SDK indicates that the lpApplicationName parameter can be NULL, in which case the executable name must be the first white space–delimited string in lpCommandLine. However, if the executable or pathname has a space in it, a malicious executable might be run if the spaces are not properly handled.

Consider the following example:

CreateProcess(NULL, "C:\\Program Files\\MyDir\\MyApp.exe -p -a", ...);

Note the space between Program and Files. When you use this version of CreateProcess, with NULL as the first argument, the function has to follow a series of steps to determine what you mean. If a file named C:\Program.exe exists, the function will call that and pass "Files\MyDir\MyApp.exe -p -a" as arguments.
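To see why the space matters, it helps to list the names that can be tried for an unquoted command line. The function below is a deliberately simplified model of that search, not the real CreateProcess implementation: it assumes each space ends a candidate and that ".exe" is appended when the last path component has no extension. The name nth_candidate is hypothetical.

```c
#include <stddef.h>
#include <string.h>

/* Writes the n-th candidate executable name (0-based) for an unquoted
   command line into out. Returns 1 on success, 0 if there is no such
   candidate or it does not fit. Simplified model only. */
int nth_candidate(const char *cmdline, int n, char *out, size_t out_size)
{
    size_t i, len = strlen(cmdline);
    const char *base;

    for (i = 0; i <= len; i++) {
        if (cmdline[i] != ' ' && cmdline[i] != '\0')
            continue;           /* not at a token boundary yet */
        if (n-- > 0)
            continue;           /* skip earlier candidates */
        if (i + 5 > out_size)   /* room for ".exe" and the NUL */
            return 0;
        memcpy(out, cmdline, i);
        out[i] = '\0';
        base = strrchr(out, '\\');
        base = base ? base + 1 : out;
        if (strchr(base, '.') == NULL)
            strcat(out, ".exe");
        return 1;
    }
    return 0;
}
```

For the command line in the example, candidate 0 in this model is C:\Program.exe and candidate 1 is the intended C:\Program Files\MyDir\MyApp.exe, which is exactly the ordering an attacker relies on.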

The main vulnerability occurs in the case of a shared computer or Terminal Server if a user can create new files in the drive’s root directory. In that instance, a malicious user can create a Trojan program called Program.exe and any program that incorrectly calls CreateProcess will now launch the Trojan program.

Another potential vulnerability exists. If the filename passed to CreateProcess does not contain the full directory path, the system could potentially run a different executable. For instance, consider two files named MyApp.exe on a server, with one file located in C:\Temp and the other in C:\winnt\system32. A developer writes some code intending to call MyApp.exe located in the system32 directory but passes only the program’s filename to CreateProcess. If the application calling CreateProcess is launched from the C:\Temp directory, the wrong version of MyApp.exe is executed. Because the full path to the correct executable in system32 was not passed to CreateProcess, the system first checked the directory from which the code was loaded (C:\Temp), found a program matching the executable name, and ran that file. The Platform SDK outlines the search sequence used by CreateProcess when a directory path is not specified.

A few steps should be taken to ensure executable paths are parsed correctly when using CreateProcess, as discussed in the following sections.

Passing NULL for lpApplicationName relies on the function parsing and determining the executable pathname separately from any additional command line parameters the executable should use. Instead, the actual full path and executable name should be passed in through lpApplicationName, and the additional run-time parameters should be passed in to lpCommandLine. The following example shows the preferred way of calling CreateProcess:

CreateProcess("C:\\Program Files\\MyDir\\MyApp.exe",
              "MyApp.exe -p -a",
              ...);

If lpApplicationName is NULL and you’re passing a filename that contains a space in its path, use quoted strings to indicate where the executable filename ends and the arguments begin, like so:

CreateProcess(NULL, "\"C:\\Program Files\\MyDir\\MyApp.exe\" -p -a", ...);

Of course, if you know where the quotes go, you know the full path to the executable, so why not call CreateProcess correctly in the first place?
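When the executable path is known only at run time, the quoting can be done by a small helper rather than by hand. This is a sketch under my own assumptions: build_command_line is a hypothetical name, and the helper simply refuses paths that already contain a quotation mark instead of trying to escape them.

```c
#include <stdio.h>
#include <string.h>

/* Builds "<exe_path>" <args> in out, with the path in double quotes.
   Returns 0 on success, -1 if the path contains a quote or the result
   does not fit in out_size bytes. */
int build_command_line(char *out, size_t out_size,
                       const char *exe_path, const char *args)
{
    int n;

    /* A quote inside the path would end the quoted region early. */
    if (strchr(exe_path, '"') != NULL)
        return -1;

    n = snprintf(out, out_size, "\"%s\" %s", exe_path, args);
    if (n < 0 || (size_t)n >= out_size)
        return -1; /* truncated output must not be executed */
    return 0;
}
```

Building the string in a local buffer also gives CreateProcess a writable command line, and the same exe_path can still be passed as lpApplicationName.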

The damage potential is high if your application supports shared and writable data segments, but this is not a common problem. Although these segments are supported in Microsoft Windows as a 16-bit application legacy, their use is highly discouraged. A shared/writable memory block is declared in a DLL and is shared among all applications that load the DLL. The problem is that the memory block is unprotected, and any rogue application can load the DLL and write data to the memory segment.

You can produce binaries that support these memory sections. In the examples below, .dangersec is the name of the shared memory section. Your code is insecure if you have any declarations like the following.

-SECTION:.dangersec, rws

Unfortunately, a Knowledge Base article outlines how to create such insecure memory sections: Q125677, "HOWTO: Share Data Between Different Mappings of a DLL."

You can create a more secure alternative, file mappings, by using the CreateFileMapping function and applying a reasonable access control list (ACL) to the object.

If the call to an impersonation function fails for any reason, the client is not impersonated and the client request is made in the security context of the process from which the call was made. If the process is running as a highly privileged account, such as SYSTEM, or as a member of an administrative group, the user might be able to perform actions that would otherwise be disallowed. Therefore, it’s important that you check the return value of the call. If the call fails, raise an error and do not continue execution of the client request.

This is doubly important if the code could run on Microsoft Windows .NET Server 2003, because the ability to impersonate is a privilege and the account attempting the impersonation might not have the privilege. Refer to Chapter 7 for more information about this privilege.

Make sure to check the return value of RpcImpersonateClient, ImpersonateNamedPipeClient, ImpersonateSelf, SetThreadToken, ImpersonateLoggedOnUser, CoImpersonateClient, ImpersonateAnonymousToken, ImpersonateDdeClientWindow, and ImpersonateSecurityContext. Generally, you should follow an access-denied path in your code when any impersonation function fails.
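The fail-closed pattern can be sketched in a platform-neutral way. In the sketch below, HandleAsClient and RequestResult are hypothetical names, and the impersonate and revert callables are stand-ins for a real pair such as ImpersonateNamedPipeClient and RevertToSelf. This is an outline of the control flow under those assumptions, not a drop-in implementation.

```cpp
#include <functional>

// Outcome of a client request, as seen by the caller of HandleAsClient.
enum class RequestResult { Completed, AccessDenied };

// Hypothetical wrapper: 'impersonate' and 'revert' stand in for a real
// pair such as ImpersonateNamedPipeClient and RevertToSelf.
RequestResult HandleAsClient(const std::function<bool()>& impersonate,
                             const std::function<void()>& revert,
                             const std::function<void()>& doWork) {
    if (!impersonate()) {
        // Impersonation failed: the thread still runs as the service
        // account, so refuse the request instead of continuing.
        return RequestResult::AccessDenied;
    }
    doWork();  // runs in the client's security context
    revert();  // assumption: doWork does not throw
    return RequestResult::Completed;
}
```

The important property is that doWork never runs when the impersonation call reports failure.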

I’ve already outlined this in Chapter 7, but it’s worth repeating. Writing to the Program Files directory requires the user to be an administrator because the access control entry (ACE) for a user is Read, Execute, and List Folder Contents. Requiring administrator privileges defeats the principle of least privilege. If you must store data for the user, store it in the user’s profile: %USERPROFILE%\My Documents, where the user has full control. If you want to store data for all users on a computer, write the data to Documents and Settings\All Users\Application Data\dir.

Writing to Program Files is one of the two main reasons why so many applications ported from Windows 95 to Windows NT and later require that the user be an administrator. The other reason is writing to the HKEY_LOCAL_MACHINE portion of the system registry, and that’s next.

As with writing to Program Files, writing to HKEY_LOCAL_MACHINE is also not recommended for user application information because the ACL on this registry hive allows users (actually, Everyone) only read access. This is the second reason so many applications ported from Windows 95 to Windows NT and later require the user to be an administrator. If you must store data for the user in the registry, store it in HKEY_CURRENT_USER, where the user has full control.

This advice has been around since the early days of Windows NT 3.1 in 1993 and it’s covered in detail in other parts of this book, but it’s also worth repeating: if you want to open an object, such as a file or a registry key for read access, open the object for read-only access—don’t request all access. Requiring this means the ACL on the objects in question must be very insecure indeed for the operation to succeed.

Object creation mistakes relate to how some Create functions operate. In general, such functions, including CreateNamedPipe and CreateMutex, have three possible return states: an error occurred and no object handle is returned to the caller, the code gets a handle to the object, and the code gets a handle to the object. The second and third states are the same result, but they have subtle differences. In the second state, the caller receives a handle to an object the code created. In the third state, the caller receives a handle to an already existing object! It is a subtle and potentially dangerous issue if you create named objects—such as named pipes, semaphores, and mutexes—that have predictable names.

The attacker must get code onto the server running the process that creates the objects to achieve any form of exploit, but once that’s accomplished, the potential for serious damage is great.

A security exploit in the Microsoft Telnet server relating to named objects is discussed in "Predictable Name Pipes Could Enable Privilege Elevation via Telnet" at http://www.microsoft.com/technet/security/bulletin/MS01-031.asp. The Telnet server created a named pipe with a common name, and an attacker could hijack the name before the Telnet server started. When the Telnet server "created" the pipe, it actually acquired a handle to an existing pipe, owned by a rogue process.

The moral of this story is simple: when you create a named object based on a well-known name, you must consider the ramifications of an attacker hijacking the name. You can code defensively by allowing your code only to open the initial object and to fail if the object already exists. Here’s some sample code to illustrate the process:

```cpp
#ifndef FILE_FLAG_FIRST_PIPE_INSTANCE
#   define FILE_FLAG_FIRST_PIPE_INSTANCE 0x00080000
#endif

int fCreatedOk = false;
HANDLE hPipe = CreateNamedPipe("\\\\.\\pipe\\MyCoolPipe",
    PIPE_ACCESS_INBOUND | FILE_FLAG_FIRST_PIPE_INSTANCE,
    PIPE_TYPE_BYTE,
    1,
    2048,
    2048,
    NMPWAIT_USE_DEFAULT_WAIT,
    NULL); // Default security descriptor

if (hPipe != INVALID_HANDLE_VALUE) {
    // Looks like it was created!
    CloseHandle(hPipe);
    fCreatedOk = true;
} else {
    printf("CreateNamedPipe error %d", GetLastError());
}

return fCreatedOk;
```

Note the FILE_FLAG_FIRST_PIPE_INSTANCE flag. If the code above does not create the initial named pipe, the call fails and GetLastError returns ERROR_ACCESS_DENIED. This flag is available in Windows 2000 Service Pack 1 and later.

Another option that can overcome some of these problems is creating a random name for your pipe, and once it’s created, writing the name of the pipe somewhere that client applications can read. Make sure to secure the place you write the name of the pipe so that a rogue application can’t write its own pipe name. Although this helps with some of the problem, if a denial of service condition is in the server end of the pipe, you could still be attacked.

It’s a little simpler when creating mutexes and semaphores because these approaches have always included the notion of an object existing. The following code shows how you can determine whether the object you created is the first instance:

```cpp
HANDLE hMutex = CreateMutex(
    NULL, // Default security descriptor.
    FALSE,
    "MyMutex");

if (hMutex == NULL)
    printf("CreateMutex error: %d\n", GetLastError());
else
    if (GetLastError() == ERROR_ALREADY_EXISTS)
        printf("CreateMutex opened *existing* mutex\n");
    else
        printf("CreateMutex created new mutex\n");
```

The key point is determining how your application should react if it detects that a newly created object is actually a reference to an existing object. You might determine that the application should fail and log an event in the event log so that the administrator can determine why the application failed to start.

Remember that this issue exists for named objects only. An object with no name is local to your process and is identified by a unique handle, not a common name.

The Win32 CreateFile call can open not only files but also a handle to a named pipe, a mailslot, or a communications resource. If your application gets the name of a file to open from an untrusted source—which you know is a bad thing, right!—you should ensure that the handle you get from the CreateFile call is to a file by calling GetFileType. Furthermore, you should never call CreateFile from a highly privileged account by using a filename from an untrusted source. The untrusted source could give you the name of a pipe instead of a file. By default, when you open a named pipe, you give permission to the code listening at the other end of the pipe to impersonate you. If the untrusted source gives you the name of a pipe and you open it from a privileged account, the code listening at the other end of the pipe—presumably code written by the same untrusted source that gave you the name in the first place—can impersonate your privileged account (an elevation of privilege attack).

For an extra layer of defense, you should set the dwFlagsAndAttributes argument to SECURITY_SQOS_PRESENT | SECURITY_IDENTIFICATION to prevent impersonation. The following code snippet demonstrates this:

HANDLE hFile = CreateFile(pFullPathName, 0,0,NULL, OPEN_EXISTING, SECURITY_SQOS_PRESENT | SECURITY_IDENTIFICATION, NULL);

There is a small negative side effect of this. SECURITY_SQOS_PRESENT has the same value as FILE_FLAG_OPEN_NO_RECALL, which is intended for use by remote storage systems. Therefore, your code could not fetch data from remote storage and move it to local storage when this security option is in place.

Important

Accessing a file determined by a user is obviously a dangerous practice, regardless of CreateFile semantics.

UNIX has a long history of vulnerabilities caused by poor temporary file creation. To date there have been few in Windows, but that does not mean they do not exist. The following are some example vulnerabilities, which could happen in Windows also:

Linux-Mandrake MandrakeUpdate Race Condition vulnerability

Files downloaded by the MandrakeUpdate application are stored in the poorly secured /tmp directory. An attacker might tamper with updated files before they are installed. More information is available at http://www.securityfocus.com/bid/1567.

XFree86 4.0.1 /tmp vulnerabilities

Many /tmp issues reside in this bug. Most notably, temporary files are created using a somewhat predictable name, the process identity of the installation software. Hence, an attacker might tamper with the data before it is fully installed. More information is available at http://www.securityfocus.com/bid/1430.

A secure temporary file has three properties:

A unique name

A difficult-to-guess name

Good access-control policies, which prevent malicious users from creating, changing, or viewing the contents of the file

When creating temporary files in Windows, you should use the system functions GetTempPath and GetTempFileName, rather than writing your own versions. Do not rely on the values held in either of the TMP or TEMP environment variables. Use GetTempPath to determine the temporary location.

These functions satisfy the first and third requirements because GetTempFileName can guarantee the name is unique and GetTempPath will usually create the temporary file in a directory owned by the user, with good ACLs. I say usually because services running as SYSTEM write to the system’s temporary directory (usually C:\Temp), even if the service is impersonating the user. However, on Windows XP and later, the LocalService and NetworkService service accounts write temporary files to their own private temporary storage.

However, these two functions together do not guarantee that the filename will be difficult to guess. In fact, GetTempFileName creates unique filenames by incrementing an internal counter—it’s not hard to guess the next number!

Note

GetTempFileName doesn’t create a difficult-to-guess filename; it guarantees only that the filename is unique.

The following code is an example of how to create temporary files that meet the first and third requirements:

```cpp
#include <windows.h>

HANDLE CreateTempFile(LPCTSTR szPrefix) {
    // Get temp dir.
    TCHAR szDir[MAX_PATH];
    if (GetTempPath(sizeof(szDir) / sizeof(TCHAR), szDir) == 0)
        return NULL;

    // Create unique temp file in temp dir.
    TCHAR szFileName[MAX_PATH];
    if (!GetTempFileName(szDir, szPrefix, 0, szFileName))
        return NULL;

    // Open temp file.
    HANDLE hTemp = CreateFile(szFileName,
                              GENERIC_READ | GENERIC_WRITE,
                              0,    // Don't share.
                              NULL, // Default security descriptor
                              CREATE_ALWAYS,
                              FILE_ATTRIBUTE_TEMPORARY |
                              FILE_FLAG_DELETE_ON_CLOSE,
                              NULL);

    return hTemp == INVALID_HANDLE_VALUE
        ? NULL
        : hTemp;
}

int main() {
    BOOL fRet = FALSE;
    HANDLE h = CreateTempFile(TEXT("tmp"));
    if (h) {
        //
        // Do stuff with temp file.
        //
        CloseHandle(h);
    }
    return 0;
}
```

This sample code is also available with the book’s sample files in the folder Secureco2\Chapter 23\CreatTempFile. Notice the flags during the call to CreateFile. Table 23-1 explains why they are used when creating temporary files.

Table 23-1. CreateFile Flags Used When Creating Temporary Files

| Flag | Comments |
|---|---|
| CREATE_ALWAYS | This option will always create the file. If the file already exists (for example, if an attacker has attempted to create a race condition), the attacker’s file is destroyed, thereby reducing the probability of an attack. |
| FILE_ATTRIBUTE_TEMPORARY | This option can give the file a small performance boost by attempting to keep the data in memory. |
| FILE_FLAG_DELETE_ON_CLOSE | This option forces file deletion when the last handle to the file is closed. It is not 100 percent fail-safe because a system crash might not delete the file. |

Once you have written data to the temporary file, you can call the MoveFile function to create the final file, based on the contents of the temporary data. This, of course, mandates that you do not use the FILE_FLAG_DELETE_ON_CLOSE flag.

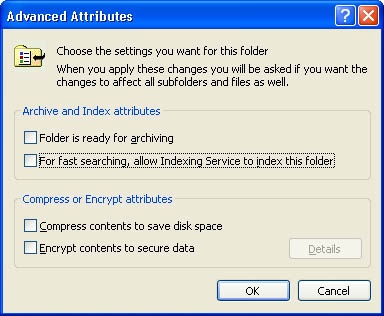

If you want to prevent Indexing Service from indexing the contents of the file, make sure the directory in which the file is created does not have the For Fast Searching, Allow Indexing Service To Index This Folder option set, as shown in Figure 23-2.

Finally, if you are truly paranoid and you want to satisfy the second requirement, you can make it more difficult for an attacker to guess the temporary filename by creating a random prefix for the filename. The following is a simple example using CryptoAPI. You can also find this code with the book’s sample files in the folder Secureco2\Chapter 23\CreateRandomPrefix.

```cpp
//CreateRandomPrefix.cpp
#include <windows.h>
#include <wincrypt.h>

#define PREFIX_SIZE (3)

// szPrefix must have room for PREFIX_SIZE + 1 characters.
DWORD GetRandomPrefix(TCHAR *szPrefix) {
    HCRYPTPROV hProv = NULL;
    DWORD dwErr = 0;
    const TCHAR *szValues =
        TEXT("abcdefghijklmnopqrstuvwxyz0123456789");

    if (CryptAcquireContext(&hProv,
                            NULL, NULL,
                            PROV_RSA_FULL,
                            CRYPT_VERIFYCONTEXT) == FALSE)
        return GetLastError();

    size_t cbValues = lstrlen(szValues);

    for (int i = 0; i < PREFIX_SIZE; i++) {
        DWORD dwTemp;
        if (!CryptGenRandom(hProv, sizeof(DWORD), (LPBYTE)&dwTemp)) {
            dwErr = GetLastError();
            break;
        }
        szPrefix[i] = szValues[dwTemp % cbValues];
    }

    // Terminate the string; on failure return an empty prefix.
    szPrefix[dwErr == 0 ? PREFIX_SIZE : 0] = TEXT('\0');

    if (hProv)
        CryptReleaseContext(hProv, 0);

    return dwErr;
}
```

If your users use the Encrypting File System (EFS), it is possible they have encrypted their temporary files directory, as recommended by Microsoft. You may have a little problem if your component creates temporary files in common locations such as the temporary directory, %TEMP%, and then moves them to the final location. Because the files are encrypted using the EFS key of the user account that set up the application, other users might be unable to use your program as they cannot decrypt the files and are denied access by the operating system. Setup programs should perform one of the following actions to ensure their component setup is not broken when used on systems encrypted with EFS:

Create your own random temporary directory

Create the files with the system attribute set (dwFlagsAndAttributes of CreateFile has FILE_ATTRIBUTE_SYSTEM set)

Detect that the %TEMP% directory is encrypted (use GetFileAttributes) and remove the encrypted bit from your files

Starting in Windows 2000, NTFS supports directory junctions. This is similar to a UNIX symbolic link that redirects a reference from one directory to another directory on the same machine. You can create and manage directory junctions using Linkd.exe, a tool available in the Windows Resource Kit.

Directory junctions present a threat to any application that does a recursive traversal of the directory structure. There are two types of applications that an attacker could target. The less dangerous is an application that merely does a recursive scan, such as findstr /s. The attacker could use Linkd.exe to create a loop in the directory hierarchy: for example, he could make c:\users\attacker refer to c:\. Any recursive search that starts from c:\users would never terminate.

A more dangerous attack is to target a process that makes destructive changes recursively through the directory hierarchy, such as rd /s. The attacker can set a trap by making c:\temp\tempdir point to c:\windows\system32. The administrator who thinks temporary files are taking too much disk space will destroy his operating system when he tries to tidy things with the rd /s c:\temp command.

It is the responsibility of any application that scans the directory hierarchy, and especially of any application that makes destructive changes recursively through it, to recognize directory junctions and avoid traversing through them. Because directory junctions are implemented using reparse points, applications should check whether a directory has the FILE_ATTRIBUTE_REPARSE_POINT attribute set before processing that directory. Your code is safe if you do not process any directory with FILE_ATTRIBUTE_REPARSE_POINT set, which you can verify with functions such as GetFileAttributes or through lpFindFileData->dwFileAttributes after a call to FindFirstFile.

Your application is insecure if you rely solely on client-side security. The reason is simple: you cannot protect the client code from compromise if the attacker has complete and unfettered access to the running system. Any client-side security system can be compromised with a debugger, time, and a motive.

A variation of this is a Web-based application that uses client-side Dynamic HTML (DHTML) code to check for valid user input and doesn’t perform similar validation checks at the server. All an attacker need do is not use your client application but rather use, say, Perl to handcraft some malicious input and bypass the use of a client browser altogether, thereby bypassing the client-side security checks.
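A minimal sketch of the server-side half of that check follows. IsValidUserName is a hypothetical function, and the rules it enforces (1 to 32 characters; letters, digits, underscore, and hyphen only) are assumptions chosen for illustration. The point is only that the server repeats the check no matter what the client-side script did.

```cpp
#include <cctype>
#include <string>

// Hypothetical server-side check: accept 1 to 32 characters drawn from
// letters, digits, '_' and '-'. The limits are assumptions for this sketch.
bool IsValidUserName(const std::string& name) {
    if (name.empty() || name.size() > 32) return false;
    for (unsigned char ch : name) {
        if (!std::isalnum(ch) && ch != '_' && ch != '-') return false;
    }
    return true;
}
```

A handcrafted request that never touched the browser is rejected by this function just as the DHTML check would have rejected it.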

Another good reason not to use client-side security is that it gets in the way of delegating tasks to people who aren’t administrators. For example, in all versions of the Windows NT family prior to Windows XP, you had to be an administrator to set the IP address. One would think that all you’d have to do would be to set the correct permissions on the TcpIp registry key, but the user interface was checking to see whether the user was an administrator. If the user isn’t an administrator, you can’t change the IP address through the user interface. If you always use access controls on the underlying system objects, you can more easily adjust who is allowed to perform various tasks.

If you produce sample applications, some of your users will cut and paste the code and use it to build their own applications. If the code is insecure, the client just created an insecure application. I once had one of those "life-changing moments" while spending time with the Microsoft Visual Studio .NET team. One of their developers told me that samples are not samples—they are templates. The comment is true.

When you write a sample application, think to yourself, "Is this code production quality? Would I use this code on my own production system?" If the answer is no, you need to change the sample. People learn by example, and that includes learning bad mistakes from bad samples.

During the Windows Security Push, we set a simple and attainable bar for all Platform SDK samples: "Would you use this code in a Microsoft product?" If the answer was no, the code had to be reworked until it was safe enough to ship.

If you create some form of secure default or have a secure mode for your application, not only should you evangelize the fact that your users should use the secure mode, but also you should talk the talk and walk the walk by using the secure settings in your own day-to-day work. Don’t expect your users to use the secure mode if you don’t use the secure mode on a daily basis and live the life of a user.

A good example, following the principle of least privilege, is to remove yourself from the local administrators group and run your application. Does any part of the application fail? If so, are you saying that all users should be administrators to run your application? I hope not!

For what it’s worth, on my primary laptop I do not log on as an administrator and have not done so for more than two years. Admittedly, when it comes to building a fresh machine, I will add myself to the local administrators group, install all the software I need, and then remove myself. I have fewer problems, and I know that I’m much more secure.

If your application runs as a highly privileged account—such as an administrator account or SYSTEM—or is a component or library used by other applications, you need to be even more vigilant. If the application requires that it be run with elevated privileges, the potential for damage is immense and you should therefore take more steps to make sure the design is solid, the code is secure from attack, and the test plans complete.

The same applies to components or libraries you create. Imagine that you produce a C++ class library or a C# component used by thousands of users and the code is seriously flawed. All of a sudden thousands of users are at risk. If you create reusable code, such as C++ classes, COM components, or .NET classes, you must be doubly assured of the code robustness.

A small number of applications I’ve reviewed contain code that allows access to a protected resource or some protected code, based on there being an Administrator Security ID (SID) in the user’s token. The following code is an example. It acquires the user’s token and searches for the Administrator SID in the token. If the SID is in the token, the user must be an administrator, right?

```cpp
PSID GetAdminSID() {
    BOOL fSIDCreated = FALSE;
    SID_IDENTIFIER_AUTHORITY NtAuthority = SECURITY_NT_AUTHORITY;
    PSID Admins;

    fSIDCreated = AllocateAndInitializeSid(
        &NtAuthority,
        2,
        SECURITY_BUILTIN_DOMAIN_RID,
        DOMAIN_ALIAS_RID_ADMINS,
        0, 0, 0, 0, 0, 0,
        &Admins);

    return fSIDCreated ? Admins : NULL;
}

BOOL fIsAnAdmin = FALSE;
PSID sidAdmin = GetAdminSID();
if (!sidAdmin) return;

if (GetTokenInformation(hToken,
                        TokenGroups,
                        ptokgrp,
                        dwInfoSize,
                        &dwInfoSize)) {
    for (int i = 0; i < ptokgrp->GroupCount; i++) {
        if (EqualSid(ptokgrp->Groups[i].Sid, sidAdmin)) {
            fIsAnAdmin = TRUE;
            break;
        }
    }
}

if (sidAdmin)
    FreeSid(sidAdmin);
```

This code is insecure on Windows 2000 and later, owing to the nature of restricted tokens. When a restricted token is in effect, any SID can be used for deny-only access, including the Administrator SID. This means that the previous code will return TRUE whether or not the user is an administrator, simply because the Administrator SID is included for deny-only access. Take a look at Chapter 7 for more information regarding restricted tokens. Just a little more checking will return accurate results:

```cpp
for (int i = 0; i < ptokgrp->GroupCount; i++) {
    if (EqualSid(ptokgrp->Groups[i].Sid, sidAdmin) &&
        (ptokgrp->Groups[i].Attributes & SE_GROUP_ENABLED)) {
        fIsAnAdmin = TRUE;
        break;
    }
}
```

Although this code is better, the only acceptable way to make such a determination is by calling CheckTokenMembership in Windows 2000 and later. That said, if the object can be secured using ACLs, allow the operating system, not your code, to perform the access check.

If your application collects passwords to use with Windows authentication, do not hard-code the password size to 14 characters. Versions of Windows prior to Windows 2000 allowed passwords of up to 14 characters. Windows 2000 and later supports passwords up to 256 characters long. You might also need to account for a trailing null character. The best solution for dealing with passwords in Windows XP is to use the Stored User Names And Passwords functionality described in Chapter 9.

The _alloca function allocates dynamic memory on the stack. The allocated space is freed automatically when the calling function exits, not when the allocation merely passes out of scope. Here’s some sample code using _alloca:

```cpp
void function(char *szData) {
    PVOID p = _alloca(lstrlen(szData));
    // use p
}
```

If an attacker provides a long szData, one longer than the stack size, _alloca will raise an exception, causing the application to halt. This is especially bad if the code is present in a server.

The correct way to cope with such error conditions is to wrap the call to _alloca in an exception handler and to reset the stack on failure:

```cpp
void function(char *szData) {
    __try {
        PVOID p = _alloca(lstrlen(szData));
        // use p
    } __except ((EXCEPTION_STACK_OVERFLOW == GetExceptionCode()) ?
                    EXCEPTION_EXECUTE_HANDLER :
                    EXCEPTION_CONTINUE_SEARCH) {
        _resetstkoflw();
    }
}
```

You should be wary also of certain Active Template Library (ATL) string conversion macros because they also call _alloca. The macros include A2W, W2A, CW2CT, and so on. If your code is server code, do not call any of these conversion functions without regard for the data length. This is another example of simply not trusting input.

The version of ATL 7.0 included with Visual Studio .NET 2003 offers support for string conversion macros that offload the data to the heap if the source data is too large. The maximum size allowed is supplied as part of the class instantiation:

```cpp
#include "atlconv.h"
// ...
LPWSTR szwString = CA2WEX<64>(szString);
```
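To make the idea concrete, here is a small portable sketch of the same stack-or-heap decision. BoundedCopy is a hypothetical class written for this illustration. It is not how ATL implements its conversion classes, and it copies a narrow string rather than converting character sets. It also assumes a nonzero StackChars and lets a failed heap allocation throw std::bad_alloc.

```cpp
#include <cstddef>
#include <cstring>
#include <memory>

// Copies a string into a fixed stack buffer when it fits and into a heap
// allocation otherwise, so a long input cannot exhaust the stack.
template <std::size_t StackChars>
class BoundedCopy {
public:
    explicit BoundedCopy(const char* src) {
        const std::size_t needed = std::strlen(src) + 1;  // with terminator
        if (needed > StackChars) {
            heap_.reset(new char[needed]);  // too large: use the heap
            data_ = heap_.get();
        } else {
            data_ = stack_;
        }
        std::memcpy(data_, src, needed);
    }
    BoundedCopy(const BoundedCopy&) = delete;
    BoundedCopy& operator=(const BoundedCopy&) = delete;

    const char* c_str() const { return data_; }
    bool on_heap() const { return heap_ != nullptr; }

private:
    char stack_[StackChars];
    std::unique_ptr<char[]> heap_;
    char* data_ = nullptr;
};
```

The stack cost of a BoundedCopy<64> is fixed at compile time, so an attacker who supplies a very long string can make the code allocate from the heap but cannot make it overflow the stack.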

Note that C# includes the stackalloc construct, which is similar to _alloca. However, stackalloc can be used only when the code is compiled with the /unsafe option and the function is marked unsafe:

```csharp
public static unsafe void Fibonacci() {
    int* fib = stackalloc int[100];
    int* p = fib;
    *p++ = *p++ = 1;
    for (int i=2; i<100; ++i, ++p)
        *p = p[-1] + p[-2];
    for (int i=0; i<10; ++i)
        Console.WriteLine (fib[i]);
}
```

I know you’ve done this; I certainly have. You’ve written a small code stub to exercise some functionality prior to adding it to the production code. And as you tested it, you needed to make sure it worked with real servers, so you hardcoded an internal server name and connected to it by using a hard-coded account name and potentially a hard-coded password. If you allow this kind of code, you should at least wrap a predefined #ifdef around the code:

```cpp
#ifdef INTERNAL_USE_ONLY
#   ifndef _DEBUG
#       error "Cannot build internal and non-debug code"
#   endif // _DEBUG
    // experimental code here
#endif // INTERNAL_USE_ONLY
```

Note

This code goes a little further. The build fails whenever INTERNAL_USE_ONLY is defined in a non-debug (release) build.

You should also consider scanning all source code for certain words that relate to your company, including the following:

Common server names (DNS [Domain Name System] and NetBIOS names)

Internally well-known e-mail names (such as the CEO)

Domain accounts, such as EXAIR\account and [email protected].

You may wonder how moving strings to a resource DLL has security implications. From experience, if a security bug must be fixed quickly (and most, if not all, should be), it makes it easier to ship one fix for multiple languages rather than shipping multiple fixes for different languages. If you offload all strings and resources such as dialog boxes, the same binary with the fix is by definition language-neutral because there are no strings in the image. They are located in a single, external resource DLL, and this DLL needs no security fixes because it contains no code. You can differentiate languages in a resource file (.RC file) by using the LANGUAGE directive.

Logs that have an appropriate amount of information can make the difference between being able to trace an attack and sitting helpless. Logs, whether they are event logs or more detailed application logs like those found in IIS and ISA, are used to determine the health, performance, and stability of applications.

One consideration for logging is that when something goes wrong you might have only your logs to help you determine what went wrong. A server application should log detailed information about the client and the data in the request. Be aware that DNS names and NetBIOS names might not have enough information to be helpful—it’s nice to have them, but you should log IP addresses as well.

While we’re on the topic of IP addresses: if your application learns a client address at the application level (for example, an address the client reports about itself), it will also know the source address of the incoming packets, so log both. Here’s a problem that was found in the logs from Terminal Services: it was recording the IP address reported by the client, not the source IP address of the packet. Now consider the case where the client is behind a NAT or other firewall; the original IP address might be a private address, such as 192.168.0.1. That’s not very helpful for finding the source of the connection! If you log the source IP address, you can at least go back to the ISP or firewall administrator to see who was making the connections.
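In code, logging both addresses amounts to very little. The sketch below is an assumed log format, with FormatConnectionLog as a hypothetical helper. The first argument is whatever address the client claimed at the application level, and the second is the address your server observed on the connection itself.

```cpp
#include <string>

// Build one log line that records both addresses. 'claimedAddress' is what
// the client reported at the application level; 'peerAddress' is the source
// address observed on the connection itself.
std::string FormatConnectionLog(const std::string& claimedAddress,
                                const std::string& peerAddress) {
    return "client-reported=" + claimedAddress +
           " packet-source=" + peerAddress;
}
```

Remember that the client-reported value is untrusted input, so a production logger should also strip or escape control characters before writing it.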

Whether to log in the Application Log provided by the operating system or in your own logs depends on the volume of the logs you create. If you’re creating a lot of logs, you should have your own files, because there are limits on how large the event logs can be. An additional consideration with the Application Log is that prior to Microsoft Windows .NET Server 2003, these logs could be read by any authenticated user across the network. Windows .NET Server 2003 addresses this issue with a stronger ACL, and network users aren’t allowed access to these logs by default. Think carefully before putting security-sensitive information in the Application Log.

Some additional guidelines are that logs should go into a directory that is user-configurable, and it’s best to create a new log file once a day. You may want to consider having more than one log file—one file could contain routine events, and another could contain detailed information about extraordinary events. You’d probably like to record very detailed information when something unusual happens. Application logs should also be writable only by the administrator and the user the service runs under. If the information could be security-sensitive, it shouldn’t allow ordinary users to read the data.

When code fails for security reasons, such as an access-denied, privilege-not-held error, or permission failure, log the data somewhere accessible to the administrator and only to the administrator. Give just enough information to aid that person, but not too much information to aid attackers by telling them exactly what security settings made their actions fail.

Something we encouraged during the various security pushes across Microsoft is to identify components written in C or C++ that could be migrated to C# or another managed language. This does not mean the code is now more secure, but it does mean that some classes of attacks—most notably buffer overruns—are much harder to exploit, as are memory and resource leaks that lead to denial of service attacks in server code. You should identify portions of your code that are appropriate to migrate to managed code.