Using a Debugger to Trace Program Execution and Change its Behavior

Using a Decompiler or Disassembler to Reverse Engineer a Program

Examining a black box component, either by observing its behavior in use or by reverse engineering to determine its inner workings, can provide information that is useful in finding security bugs. In this chapter, we begin by discussing some of the basic methods you can use to study the behavior of black box components without reverse engineering their inner workings. Then we discuss how to use debuggers (programs used to track down bugs by tracing program execution), decompilers (programs that convert a program’s binary code into a higher-level programming language), and disassemblers (programs that convert a program’s binary code into assembly language) to reverse engineer a program. Unlike most other chapters of this book, this chapter does not discuss a specific type of security issue. Instead, it discusses techniques that you can use to better understand bugs in your applications. Examining your own code is valuable for understanding how others might exploit the binaries you ship.

As discussed later in this chapter, reverse engineering a computer program commonly involves the study and analysis of the program’s binary executable without the use of the program’s source code to help you understand its inner workings. This effort requires that the program first be decompiled into a high-level programming language (such as C++) or disassembled into assembly code, and it may also require the use of a debugger to trace the program’s execution. It also requires a great deal of time because you must acquire in-depth comprehension of a program’s binary code.

Observation of a program’s operation, which we discuss in this section, can be performed in significantly less time. In fact, you can accomplish this type of study without even having a copy of the target program’s binary files. Two common methods of observation are comparing output based on changes in input and the use of monitoring tools to study the effects of program execution.

When you are searching for security flaws, it is helpful to have as much detailed knowledge of an application’s operation as possible. One way to obtain this information is by using the application and noting the details of its functionality. For example, if you are trying to bypass a filter that attempts to block cross-site scripting attacks (discussed in Chapter 10), you could try sending every possible character separately as input and watching to determine which characters are filtered.
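The character-by-character probing described above can be sketched in a few lines. This is only an illustration: `sample_filter` is a hypothetical stand-in for the application under test (here it simply HTML-encodes a handful of characters), and in practice the call would be replaced by whatever request actually sends input to the target and reads back its output.

```python
import html

def sample_filter(text: str) -> str:
    """Hypothetical stand-in for the target's filter (an assumption, not a real product)."""
    return html.escape(text, quote=True)

def probe_filter(filter_fn, candidates):
    """Send each character on its own and record the ones that come back changed."""
    changed = {}
    for ch in candidates:
        out = filter_fn(ch)
        if out != ch:
            changed[ch] = out
    return changed

# Printable ASCII only; a real probe would usually cover a wider range.
PRINTABLE = [chr(c) for c in range(0x20, 0x7F)]

for ch, out in probe_filter(sample_filter, PRINTABLE).items():
    print(repr(ch), "->", repr(out))
```

Characters missing from the result passed through untouched, which is where a bypass attempt would normally start.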

Small changes in output often reveal underlying implementation details, anomalies, and bugs. Error messages also disclose helpful information. For example, the error message “SQL error” indicates that the operation requires interaction with a database. This approach – observing the program’s behavior in use – does not require great technical skill or in-depth understanding of code.

A program’s output is sometimes later used as input by the same or a different program. Examples include network traffic and data files. Understanding how a program produces output can help you create similar data that could later be consumed as input. For example, a client application might send data (output) over the network to be read by the server (input). Making small changes in the user-controlled input to an application that produces output data can help determine the data format used by the application. For example, Microsoft Office Word documents can contain hyperlinks (URLs). By repeatedly saving the same file using a slightly different hyperlink each time, comparing the resulting data files and noting their differences, you can begin to discover the document file format.

Recently, we used this approach to save two Microsoft Word files (output), the first with the hyperlink (URL) “Test”, and the second with the URL “Test2”. Figure 17-1 shows offsets 0x146c-0x147b of both files when viewed in a binary editor (also known as a hex editor). Notice any differences? In addition to the change in the URL text, the binary data preceding the URL changed from 05 00 00 00 to 06 00 00 00. If the terminating NULL character is included, the length of the embedded URL changed from 5 characters in the first document to 6 characters in the second document. This corresponds directly with the data preceding the URL in the file. Also note that the length of the embedded URL is stored in little-endian byte order (the least significant byte comes first, so the bytes 06 00 00 00 represent the value 0x00000006).
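The comparison can also be automated. The following is a minimal sketch, assuming the field is a 4-byte little-endian length followed by the data with one byte per character; the two byte strings are hypothetical fragments modeled on the values quoted above, not data taken from real Word files, which may encode characters differently.

```python
import struct

def diff_offsets(a: bytes, b: bytes):
    """Return the offsets at which two byte strings differ (up to the shorter length)."""
    return [i for i, (x, y) in enumerate(zip(a, b)) if x != y]

def read_length_prefixed(blob: bytes, offset: int = 0) -> bytes:
    """Read a 4-byte little-endian length, then return that many bytes of data."""
    (length,) = struct.unpack_from("<I", blob, offset)
    start = offset + 4
    return blob[start:start + length]

# Hypothetical fragments: length field followed by the NULL-terminated URL text.
first = bytes.fromhex("05000000") + b"Test\x00"
second = bytes.fromhex("06000000") + b"Test2\x00"

print(diff_offsets(first, second))   # offsets where the fragments differ
print(read_length_prefixed(first))   # data selected by the first length field
print(read_length_prefixed(second))  # data selected by the second length field
```

The `"<I"` format string is what tells `struct` to treat the four bytes as an unsigned little-endian integer.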

By using the approach outlined in the preceding section, you will be better able to understand an application’s implementation and data format. Understanding the data format and data handling is helpful when you focus testing on a specific area or try to create malicious input. In the Word file example, it is probable that when the application opens this file as input, it reads the size field before reading the data field (the hyperlink’s URL). The program’s parser might assume that the length of the data matches the length specified in the file and that the data is NULL-terminated. Neither of these assumptions should be made!

Imagine that the parser allocates a buffer the size of the size field and then reads the data field until a NULL character is encountered. By creating a test case in which the size field is small and the data field contains a large amount of data, you might cause a buffer overrun. By comparing the output that becomes input, you can more easily understand the various fields contained in the input and use that knowledge to create better test cases.
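A test case of this kind is easy to generate once the layout is understood. The sketch below assumes the same hypothetical layout (a 4-byte little-endian size field followed by NULL-terminated data) and models the flawed parser only to show when the copy would run past the allocated buffer; it does not reproduce the parsing code of any real product.

```python
import struct

def build_record(declared_len: int, data: bytes) -> bytes:
    """Build a record whose size field need not match the data that follows."""
    return struct.pack("<I", declared_len) + data

def naive_parse_overruns(record: bytes) -> bool:
    """Model the flawed parser: buffer sized from the size field, copy until NULL."""
    (declared_len,) = struct.unpack_from("<I", record, 0)
    payload = record[4:]
    end = payload.find(b"\x00")
    # Number of bytes the copy loop would write, including the NULL if present.
    copied = len(payload) if end == -1 else end + 1
    return copied > declared_len

well_formed = build_record(5, b"Test\x00")
malformed = build_record(5, b"A" * 1000 + b"\x00")  # small size, large data

print(naive_parse_overruns(well_formed))
print(naive_parse_overruns(malformed))
```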

Monitoring tools can give you even greater insight into how software works without the need for a debugger or assembly knowledge. Some common monitoring tools are Logger/Log Viewer, RegMon, FileMon, Ethereal, and Microsoft SQL Server Profiler.

Monitoring tools enable you to quickly understand key pieces of information about a program’s implementation. By using RegMon and FileMon, you can determine which files and registry keys are written and read. Ethereal shows all network traffic. SQL Server Profiler shows the exact SQL statements made. Logger/Log Viewer enables you to obtain two important pieces of information: application programming interfaces (APIs) and the data used in the parameters when calling the APIs. By knowing this information, you will be better able to understand the application’s implementation and create better test cases.

Earlier chapters discuss in more detail the uses of RegMon (Chapter 3), FileMon (Chapter 3), Ethereal (Chapter 4), and SQL Server Profiler (Chapter 16). Here is some information about Logger/Log Viewer.

You might have noticed that many of the tools used for security testing are general tools not made exclusively for security purposes. The pair of tools, Logger and Log Viewer, is no different. These tools were created to help users more easily debug applications and find performance issues. They are included as part of the Microsoft Debugging Tools for Windows (http://www.microsoft.com/whdc/devtools/debugging/installx86.mspx). These free tools enable you to log for later viewing the API calls a process makes. For example, Log Viewer, in Figure 17-2, shows that Wordpad.exe calls the lstrcpyW API to copy the document filename into a buffer.

Tip

Because of the way Logger uses the stack, it is known not to work in all situations. See the product documentation for details. In cases in which Logger does not function as needed, APISpy32 (http://www.internals.com) can be used instead.

You can also use Logger/Log Viewer to see whether hyperlinks in an application are invoked with a call to WinExec. By viewing the parameter data, you can also see how attacker-supplied data is used throughout the application and can target your testing more effectively. For example, lstrcpyW was used to copy the filename Document.rtf, as shown in Figure 17-2. Because an attacker might be able to control the filename, an interesting targeted buffer overflow case is a long filename (you know the application calls an API to perform an unbounded string copy).

Important

The API functions lstrcpy and strcpy are not compiled into an application’s binary in the same way. lstrcpy is part of kernel32.dll. When lstrcpy is called, code inside kernel32.dll is executed, which enables Logger and APISpy32 to detect its use. On the other hand, the code for strcpy is built into the application’s binary and cannot be detected by the monitoring tools mentioned. Functions like strcpy can be more easily identified by using a disassembler, as discussed later in this chapter.

After you use monitoring tools to better understand the program, using a debugger takes you one step deeper. Even without access to the source code or symbols, you can still debug an application. Instead of seeing the source code in a high-level language such as C/C++, in a debugger you see the source code represented in assembly language. By using a debugger, you can modify the execution flow of a program to make it perform actions that would not normally occur. You can also read and write the process’s memory. Although the ability to modify execution flow under the debugger does not constitute a security flaw, this approach can aid in security testing.

Using a debugger can save you tremendous amounts of time and can also enable you to test cases that defy a program’s logic by forcing the application to abandon its normal pattern of flow. For instance, we recently tested a server and a client application that encrypts and signs each packet in a complex way. We wanted to test the server by sending characters that the client software prevents the user from sending. One way to accomplish this task would have been to craft the data manually, encrypt it, and sign it. However, such work is not trivial. Instead, we used the existing client by running it under a debugger and skipping the character validation that normally occurs. This approach successfully allowed our illegal characters to be encrypted and signed by the client. The server gladly accepted the data it received because the data was correctly encrypted and signed. We were able to exploit the server because the server logic assumed it was not possible for the client to send these illegal characters and did not validate the data itself. By using this approach, we were able to save several days of testing.

Tip

Debug output is sometimes used by developers to help them better understand the state the application is in without stepping through each line of code. If code that ships to the public contains debug output using an API such as OutputDebugString, attackers can use this data to more easily understand the logic of the program. Also, anyone who attaches a debugger to the running program is able to see the output. Although an attacker could step through the binary’s assembly code to gain information similar to that normally contained in debug output, using debug output takes less time and expertise.

Software vendors often impose restrictions on what users can do with a particular piece of software or how the program can be used, sometimes by introducing checks to prevent anyone from modifying execution flow. Some checks include digitally signing the binary and then verifying the signature, checking to see whether a debugger is attached, and obfuscating the binary. Although these measures slow the inspection and testing process, they do not prevent it from a technical point of view. With a little knowledge of assembly and a debugger, it is possible to bypass these restrictions. In some cases, such as software designed to protect against unauthorized copying, bypassing such restrictions may enable use of the program without purchasing a license and could result in a loss of revenue for the software vendor. It is important, therefore, that the vendor identify any such vulnerabilities to ensure the robustness of the protection scheme. The techniques used to defeat copy restrictions can also be used to modify an existing client or server so that it is able to send data not normally allowed. Sending this data might enable you to find a security bug in the software that receives the data, as discussed in Chapter 4 and Chapter 5.

One approach to modifying the execution flow is to use a debugger and manually modify instructions at run time. This approach works, but can become time-consuming if the same modifications need to be made repeatedly. Patching the program’s binary enables you to remove certain restrictions from the software permanently.

When the source code is not available, it is possible to patch binaries to fix bugs in code, to make small functionality changes to better suit the user’s needs, or to remove restrictions built into the software. A common type of restriction built into software is copy protection. It is important that a software vendor test the robustness of its copy protection schemes to make them harder for unauthorized users to bypass. Typical copy protection schemes might include a requirement that users register the product before use, time restrictions (beta and trial software often do this), and permitting the product to run only in a reduced-functionality mode until the user registers (shareware commonly does this). The following example shows one way that a copy protection check might be removed from a binary or bypassed. The same approach might be taken to effectively remove other restrictions.

Copy protection schemes often involve a process in which the software checks whether it should run and, if it should, which functionality should be exposed. One type of copy protection, common in trial software, allows a program to run only until a certain date. If you can modify the binary file on disk or in memory, you can modify that part of the program’s code or remove it completely.

To follow along with this example, you need the following:

Expiration.exe. Included on this book’s companion Web site

OllyDbg. Shareware debugger/disassembler with great patching and reverse engineering features; available at http://www.ollydbg.de/

When you attempt to run Expiration.exe, it will refuse to load because the current date is past the date on which the authorized trial expired. How can you use this program regardless of the expiration of the trial period? One approach is to set your computer’s clock back so that the program believes today’s date is within the trial period. But this isn’t a very elegant solution, and resetting your system date might cause issues for other programs you are running. However, by patching the binary, the copy protection checks that prevent the program from running after the trial period ends can be removed, and the program can be used without having to set your computer’s clock back. One common approach to finding and neutralizing the time limit code is as follows:

Run the program to understand what happens when the trial period has expired.

Once the program refuses to load normally, break into the debugger.

Trace the code backward to identify the code path(s) that led to the error indicating that the trial period has expired.

Modify the binary to force all code paths to succeed and to never hit the trial expiration code path.

Test the modifications.

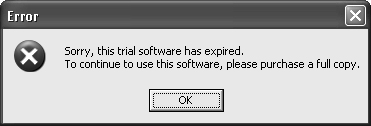

Step 1: Understanding what happens when the trial has expired. This step is simple enough. Run Expiration.exe. Upon loading Expiration.exe, you are greeted with the dialog box shown in Figure 17-3, which says, “Sorry, this trial software has expired. To continue to use this software, please purchase a full copy.”

Figure 17-3. Error message in Expiration.exe that warns the software cannot be used because the trial period has expired

Step 2: Breaking into the debugger once the error code path has been executed. The person who wrote this program has provided you with a very helpful error message indicating when the trial period has expired. You can use this error message to help identify any code that might lead to the error code path. While the error dialog box is still displayed, start debugging this process using OllyDbg.

To debug the Expiration.exe process that is already running, open OllyDbg, and on the File menu select Attach. In the dialog box that is displayed, you can select the process you wish to debug. Select Expiration.exe, and then click the Attach button.

After attaching to the process in OllyDbg, press F9 to continue execution of Expiration.exe. Now you’re ready to find the time limit code.

Step 3: Tracing backward to identify the time limit code. Next, find the code that causes the error message to be displayed. Inside OllyDbg, press F12. This pauses the process and enables you to debug it.

Finding where the error message was displayed. View the call stack by pressing Alt+K. The call stack is displayed as shown in Figure 17-4. Notice that OllyDbg shows that the trial error text is a parameter to MessageBoxA. Select USER32.MessageBoxA near the bottom of the call stack. Right-click in the call stack window, and choose Show Call. The CPU window is displayed with the call to MessageBoxA selected. This is the starting point for the backward trace to identify where the time limit check was performed.

Using OllyDbg features to help analyze the code. OllyDbg has a nice Analyze Code feature that enables you to more easily follow the assembly code called. Press Ctrl+A to have OllyDbg analyze Expiration.exe. The CPU window will look similar to the one shown in Figure 17-5. Notice that there are greater than signs (>) next to some of the lines of code. If a line of code begins with a greater than sign, another line of code jumps to that location.

Figure 17-5. CPU window showing the call to MessageBoxA as the cause of the display of the expiration error dialog box

Directly before the call to MessageBoxA, four parameters are pushed onto the stack. The PUSH 10 instruction contains a greater than sign before it, so you know it is referenced by another line of code. Select this line of code containing PUSH 10.

Tip

The line of code directly before the PUSH 10 line is a RETN instruction, which is used to return to an address. The line of code after the RETN instruction will not be executed after RETN is executed. This means that there are only two code paths to get to the MessageBoxA call that displays the error message (0x00401055 and 0x00401063).

Finding what caused the error message to be displayed. When you select the PUSH 10 instruction located at 0x004011C0, the lines of code that reference the selected line are displayed in the text area below the top pane in the CPU window. You should see Jumps For 00401055, 00401063 displayed. Right-click in this text area to open the shortcut menu shown in Figure 17-6 that includes options to go to each location that references PUSH 10.

Figure 17-6. An OllyDbg shortcut menu that allows you to navigate easily to code that refers to a selected line of code

Step 4: Modifying the binary to never hit the expiration code path. The context menu shows that both 0x00401055 and 0x00401063 contain JA instructions that jump to the PUSH 10 used for MessageBoxA. JA (jump if above) is a conditional jump instruction that is taken when the first operand of the preceding comparison is greater than the second, treating both as unsigned values. It often follows a CMP instruction (used to compare two operands). In this case, the JA is used to jump if the current date is later than (above) the date the trial expires.

Select Go To JA From 00401055. You should now be on code at location 0x00401055. You want to prevent the program from hitting the error code path. One way to do this is by changing the JA instruction to an NOP (which stands for “no operation” and is an instruction that tells the processor to do nothing). This effectively wipes out the JA code path to the expiration code path.

Right-click inside the CPU window, click Binary, and then click Fill With NOPs. You should see the code in the CPU window change from a JA to a series of NOPs. Another common approach you can use when patching binaries is to reverse the logic so the opposite decision is made. For example, a JE (jump equal) is replaced with a JNE (jump not equal), or JA is replaced with JBE (jump below or equal).
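At the byte level, both patches are small. The sketch below applies them to a hypothetical four-byte code fragment, assuming the short (2-byte) form of the jump, in which JA is encoded as 0x77, JBE as 0x76, and NOP as 0x90. The text does not show the actual bytes in Expiration.exe, so the fragment and offsets here are illustrative only; a near jump uses a longer encoding and would need more NOPs.

```python
JA_SHORT = 0x77   # JA rel8
JBE_SHORT = 0x76  # JBE rel8
NOP = 0x90

def nop_out_short_ja(code: bytearray, offset: int) -> None:
    """Replace a 2-byte short JA (opcode + displacement) with two NOPs."""
    if code[offset] != JA_SHORT:
        raise ValueError("no short JA at this offset")
    code[offset:offset + 2] = bytes([NOP, NOP])

def invert_short_ja(code: bytearray, offset: int) -> None:
    """Reverse the decision by turning JA into JBE; the displacement is unchanged."""
    if code[offset] != JA_SHORT:
        raise ValueError("no short JA at this offset")
    code[offset] = JBE_SHORT

# Hypothetical fragment: CMP EAX,ECX (3B C1) followed by JA +0x10 (77 10).
fragment = bytearray.fromhex("3bc17710")

patched = bytearray(fragment)
nop_out_short_ja(patched, 2)
print(patched.hex())

inverted = bytearray(fragment)
invert_short_ja(inverted, 2)
print(inverted.hex())
```

Filling with NOPs removes the branch entirely, while inverting it keeps the branch but swaps which condition takes it, so the right choice depends on which path should remain reachable.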

Repeat the steps to modify the other jump. Press the hyphen key (-) to go back to the PUSH 10 instruction. Using the same steps you used to replace the JA at 0x00401055 with NOPs, replace the JA at 0x00401063 with NOPs.

Save the modifications. Save the modifications by right-clicking in the CPU window, clicking Copy To Executable, and then clicking All Modifications. Select Copy All in the dialog box that is displayed. A new window will appear named Expiration.exe. Right-click in this window, and choose Save File. Save the file as Expiration-cracked.exe.

Step 5: Testing the modifications. Now load the modified program (Expiration-cracked.exe). Congratulations! You’ve successfully defeated the expiration trial period mechanism. You should see the dialog box shown in Figure 17-7.

This example demonstrates one way to challenge the strength of your copy protection measures and identify ways to make your software more secure against unauthorized use. Can you think of a way to make overcoming this protection example more difficult? One way would be to have the expiration decision made in a thread separate from the one that displays the error dialog. Tracing the code backward as we did in this example would then be more difficult.

Creating uncrackable copy protection is impossible. However, creating copy protection that is reasonably robust and prevents quick cracking is an achievable goal. By attempting to defeat the copy protection of your application, you can learn a great deal about how robust the protection scheme is. By doing this testing before the product becomes publicly available, you can change the code to make circumvention of the copy protection scheme more difficult before it is released.

Just as the execution flow can be observed and modified under a debugger, so can the contents of memory. By using a debugger, you have full access to all of the process’s memory contents. In addition to being able to enter data that would not normally be allowed through the application, you are able to see data that the programmer might not want you to see. For example, some programmers store passwords and other secrets in the program’s binary. Sometimes these passwords or other secret data are constructed in memory at run time and are not easily visible in the binary file. By accessing memory after the program is loaded, you have access to those secrets.
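The difference between a secret stored directly in the binary and one constructed at run time can be illustrated with a simple scan for printable strings. This is a sketch under stated assumptions: the byte blobs are made up for illustration, and the single-byte XOR key `0xAA` is a hypothetical stand-in for whatever a program might do to build its secret in memory.

```python
import re

def ascii_strings(data: bytes, min_len: int = 4):
    """Return runs of printable ASCII at least min_len bytes long."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    return [m.group().decode("ascii") for m in pattern.finditer(data)]

SECRET = b"s3cr3t-pass"  # made-up secret for illustration
KEY = 0xAA               # hypothetical obfuscation key

# Case 1: the secret sits in the file as a plain string literal.
plain_blob = b"\x00\x01" + SECRET + b"\x00\xff\x10ab\x00"

# Case 2: only an encoded form is on disk; the program decodes it at run time.
encoded_blob = b"\x00\x01" + bytes(b ^ KEY for b in SECRET) + b"\x00"
in_memory = bytes(b ^ KEY for b in encoded_blob[2:-1])

print(ascii_strings(plain_blob))    # secret visible in the file
print(ascii_strings(encoded_blob))  # nothing readable in the file
print(ascii_strings(in_memory))     # visible again once decoded in memory
```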

Copy protection is not limited to protecting software programs from being copied. Electronic documents, media files, and other electronic content formats sometimes use Digital Rights Management (DRM) to allow access only to certain users or to otherwise limit their reproduction or use. If you can view (or play, in the case of media files) the content on your computer, the content likely is present in memory at some point. If this is the case, it may be possible to defeat the copy protection and obtain access to the content regardless of limitations normally imposed by the software viewer or player.

By using a debugger, you have access to a process’s memory. OllyDbg enables the user to search, copy, and edit the contents of a process’s memory. This is useful in itself, but it is sometimes helpful to set breakpoints when a memory location is accessed. This technique can help you better understand how attacker-supplied data is used once it is in memory. The following example demonstrates how to set breakpoints on memory reads and writes.

Tip

WinHex (http://www.winhex.com) is a hex editor that contains many features, one of which is searching and modifying data in RAM. This tool makes accessing memory extremely easy without using a debugger.

Programs sometimes require a name/serial code combination to register the software. Failure to register the program can result in the program not running or running in a reduced-functionality mode. When users pay for a program, they are given a serial code and are asked to enter it into the program to bypass the restrictions imposed on unregistered software. If the user enters a valid serial code, the program is unlocked and restrictions associated with an unregistered copy are bypassed.

Often the serial code corresponds in some way to the name of the person registering the program. Software vendors do this as a precaution against software piracy. Paying customers might post their serial codes to the Internet in an effort to share the program illegally, but the serial code alone does not allow other users to register their copies of the software. Under this scheme, both the user’s name and the serial code are required. Software vendors gamble that users won’t post both their names and serial codes to the Internet for sharing, for fear of legal action by the vendor.

Take a look at Crackme1.exe, which is included on this book’s companion Web site. When users enter their name and serial code (see Figure 17-8), the program decides whether the serial code is correct. The program must have some algorithm to determine the correct serial code for the name entered. Think about how a program like this might be implemented. One way a programmer might verify the serial code is to generate the correct serial code for the name entered, and then compare the generated serial code to the one entered. If you can see the data in memory that is compared to the invalid serial code you entered, you could easily copy the valid serial code from the process’s memory and successfully register on your next attempt. Note that not all validation routines generate the full serial code to compare to the one entered; some instead validate pieces of the entered serial code separately.

Tip

This example shows a quick way to better understand how data you enter is used by an application. Among those who break registration schemes, obtaining the serial code from memory is considered a beginner’s technique. A more elegant approach is to disassemble the registration validation scheme, study the disassembly to learn its internal workings, and create a separate program that generates a valid key given an arbitrary name. Understanding the disassembly to this level requires more skill and is discussed later in this chapter.

The approach of patching the binary is sometimes also used to defeat registration routines. However, patching the binary can introduce bugs and result in unexpected behavior. Reserve patching for places where no other alternative is available.

Now imagine that you want to find the secret serial code in memory. In particular, imagine that you want to find the part of the program that compares the invalid serial code you entered with the valid one generated by the program. How can you do that? You could start by disassembling the program, studying the disassembly to gain a thorough understanding of the code, and then setting a breakpoint on the compare. But this is time-consuming. A faster alternative is to set breakpoints on APIs that might read your data into memory, and then set a memory access breakpoint on the memory address containing your data. This way, whenever a part of the program attempts to access your data, the debugger will pinpoint that part of the program for your examination.

Step 1: Setting breakpoints on APIs the binary uses to obtain your data. The user data comes from text boxes in the user interface (UI), like the one shown in Figure 17-8. On the Microsoft Windows platform, a few common APIs retrieve text from a text box. One of the most common is GetDlgItemText. Like many Windows APIs, two versions of this API are available: ASCII and wide (Unicode). It is helpful to set breakpoints on both versions of APIs so one isn’t missed. After you open Crackme1.exe in OllyDbg, you can set a breakpoint on these APIs by using the command line. Open the Command Line window by pressing Alt+F1, and then enter the commands bpx GetDlgItemTextA and bpx GetDlgItemTextW (appending an A or a W specifies which version of the API is targeted). Press Enter after each command, as shown in Figure 17-9, to display the Intermodular Calls window with breakpoints set for the specified API. Feel free to close this window.

Tip

Microsoft Developer Network (MSDN) at http://msdn.microsoft.com provides a great reference for Windows APIs. Use this resource to understand where to set breakpoints and what the APIs do.

Step 2: Running the program to hit the breakpoint. Now that your breakpoints are set, run the program under the debugger by pressing F9. Enter your name as MyName and serial code as serial, and then click OK. You should now be back in the debugger because you hit a breakpoint.

Step 3: Determining whether this breakpoint reads the data of interest. As discussed in Chapter 8, if a function requires parameters, those are pushed onto the stack directly before the call to that function. Scroll up in the CPU window and you’ll see the parameters used to call GetDlgItemTextA. OllyDbg is smart enough to match the names of the API parameters to the PUSH operations that happen in assembly. As shown in Figure 17-10, it is easy to determine which parameter is named buffer. In this example, buffer is the memory referenced by the EDX register.

Now you need to determine whether this buffer gets the data of interest (the serial code). Click in the dump portion of the CPU window (which is directly beneath the disassembly), press Ctrl+G, and then type EDX into the dialog box. This displays the contents of the memory location that EDX references. You can step over the call to GetDlgItemTextA by pressing F8, and then inspect this memory location.

As it turns out, the memory is populated with the name you enter. That’s not what you’re looking for, so press F9 to continue running the program. You’ll immediately jump back into the debugger. Follow the same steps (hit breakpoint, press Ctrl+G for the register pushed onto the stack for buffer, press F8 to step over the call, and inspect the memory location) to determine whether serial is copied to memory. The second time through, you should see this happen. Now you’ve found the memory of interest—time to set a breakpoint on it.

Step 4: Setting a breakpoint on memory. Strings are typically accessed by their first character. You can set a breakpoint on this memory location by selecting the s in serial displayed in the dump pane (directly below the disassembly pane), right-clicking in the pane, clicking Breakpoint, and then clicking Memory, On Access, as shown in Figure 17-11.

Figure 17-11. Setting a breakpoint on a memory location to allow easy runtime discovery of any code that accesses that memory

Step 5: Watching what happens when memory is accessed. Press F9 to continue to run Crackme1.exe. You’ll again hit a breakpoint. This time it is the breakpoint on memory you just set. The line of code that accessed the memory is the following:

CMP BYTE PTR DS:[ESI],0

This is a comparison operation that compares the memory ESI is pointing to (the serial code entered) to 0. This isn’t very interesting. This is a check to see if the serial code is null. Press F9 to continue running Crackme1.exe.

You’ll hit another memory breakpoint, but this time on the following line of code:

REPNE SCAS BYTE PTR ES:[EDI]

This instruction is used to determine the length of the string at the location pointed to by EDI. (EDI is now pointing to serial.) That’s not what you want. Press F9 again.
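To see why this instruction yields a string length, it helps to model it. The sketch below assumes the common idiom in which AL is 0 and ECX starts at 0xFFFFFFFF before the scan, followed by NOT ECX and DEC ECX afterward; the surrounding instructions in Crackme1.exe are not shown in the text, so that setup is an assumption. It also assumes the direction flag is clear, so EDI moves forward.

```python
def repne_scasb_strlen(memory: bytes, edi: int) -> int:
    """Model REPNE SCAS BYTE PTR ES:[EDI] scanning for a NULL byte."""
    al = 0
    ecx = 0xFFFFFFFF
    while ecx != 0:
        byte = memory[edi]            # compare AL with the byte at [EDI]
        edi += 1                      # EDI advances (direction flag clear)
        ecx = (ecx - 1) & 0xFFFFFFFF  # ECX counts down once per byte scanned
        if byte == al:                # REPNE stops when a match sets ZF
            break
    ecx = (~ecx) & 0xFFFFFFFF         # NOT ECX: bytes scanned, including the NULL
    return ecx - 1                    # DEC ECX: length without the NULL

print(repne_scasb_strlen(b"serial\x00", 0))
```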

Another breakpoint, this time on the following code:

REPE CMPS BYTE PTR ES:[EDI],BYTE PTR DS:[ESI]

This is a compare! The instruction compares two strings—the strings at ESI and EDI. Take a look at each of these locations as discussed in step 3, but this time use the locations of ESI and EDI instead of EDX. Notice anything interesting? Yes, serial is compared to yMaNem. Could this string be the valid serial code? Try to register again and find out. yMaNem is a pretty weak serial code, but regardless of how complex a serial code is, you can obtain it using the technique described here if the serial code you enter is compared to the correct code.
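Comparing the name entered (MyName) with the string found in memory (yMaNem) suggests a pattern: each adjacent pair of characters appears to be swapped. The sketch below implements that guess. It is only one transformation consistent with this single observation; the text does not describe the actual algorithm in Crackme1.exe, and the handling of odd-length names here is an assumption.

```python
def swap_adjacent_pairs(name: str) -> str:
    """Swap each adjacent pair of characters; a trailing odd character is left as-is."""
    chars = list(name)
    for i in range(0, len(chars) - 1, 2):
        chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)

print(swap_adjacent_pairs("MyName"))
```

A guess like this would need to be checked against several other names before being trusted.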

Reverse engineering of computer programs generally involves the study and analysis of a program’s binary code to determine its inner workings. In the case of a program for which only the binary version is available, an assembly code or high level source code version can be created for study using a decompiler or a disassembler. A decompiler is a program that converts another program’s binary code into a high level programming language such as Java, C#, or C. A disassembler performs a similar function but returns an assembly language version. Neither decompilation nor disassembly requires the user to actually run the target program, which is helpful when the target binary is potentially malicious software (a worm, Trojan horse, etc.). In this section, we discuss how decompilers and disassemblers can be used to identify security vulnerabilities that might be exposed in a program that is distributed only in binary form. We also explain how some people analyze security patches to uncover details of the original security bugs.

A program’s binary typically comes in one of two forms—native code or bytecode. Native code contains operations in machine language that run directly on a computer’s processor. Bytecode binaries do not run on the processor directly, but instead contain intermediate code. Bytecode examples include Microsoft Intermediate Language (used for the Microsoft .NET Framework binaries) and Java bytecode (used by the Java Virtual Machine). When a binary containing bytecode is executed, the intermediate code is translated into machine code by an interpreter and is executed by the processor. The Common Language Runtime is the .NET interpreter, and Java’s is the Java Virtual Machine.

Bytecode binaries contain more information than native code binaries do. This allows for more direct translation from a bytecode binary to the original source code and makes bytecode decompilers very effective. Several decompilers were created specifically for Java and .NET binaries. Understanding the decompiler’s results of a bytecode binary is much easier than understanding the results of a disassembled native binary. To more clearly illustrate this point, the following shows the differences between the original source code of a simple C# application and the decompiled code. The decompiler used in this example is .NET Reflector (http://www.aisto.com/roeder/dotnet/).

Tip

Decompilers exist for translating native binaries into C. Currently, these decompilers don’t yield very reliable results. For this reason, a disassembler should be used for native code binaries.

The following is the original source code from a simple C# application, LaunchBrowser, which is included on this book’s companion Web site:

private bool IsValidURL(string URL)
{
    return URL.StartsWith("http://") ||
           URL.StartsWith("https://");
}

A function named IsValidURL takes one parameter named URL and returns true if URL begins with the strings http:// or https://. Otherwise, false is returned.

Note

Symbols are files created when a program’s binaries are built from source code. These files contain information that can help debug the application later. Symbol files include information about global variables, function names, and the mapping from code in the binary back to lines in the source code. Symbols created by Microsoft Visual Studio have the extensions .dbg and .pdb. For more information about these symbol files, see http://support.microsoft.com/kb/121366.

Using a decompiler without access to public or private symbols produces the following code from the binary for the same function:

private bool IsValidURL(string URL)
{
    if (!URL.StartsWith("http://"))
    {
        return URL.StartsWith("https://");
    }
    return true;
}

Wow! These are pretty similar, aren’t they? The function name and variable are the same. The code is slightly different, but it does exactly the same thing. If you’re a programmer, looking at the decompiled binary reinforces what your first computer science professor likely mentioned to you: if you believe one of the or conditions in the if statement is more likely to be true, runtime is more efficient if the more likely condition is placed first.
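To make the equivalence of the two listings concrete, here is a small C analogue. It is only a sketch: starts_with is a hypothetical helper standing in for the C# StartsWith method, and the two function names are invented for this illustration.

```c
#include <stdbool.h>
#include <string.h>

/* Hypothetical helper standing in for C#'s String.StartsWith. */
static bool starts_with(const char *text, const char *prefix)
{
    return strncmp(text, prefix, strlen(prefix)) == 0;
}

/* Shape of the original source: a single short-circuit OR. */
bool is_valid_url_source(const char *url)
{
    return starts_with(url, "http://") || starts_with(url, "https://");
}

/* Shape of the decompiled output: the same logic as an explicit branch. */
bool is_valid_url_decompiled(const char *url)
{
    if (!starts_with(url, "http://")) {
        return starts_with(url, "https://");
    }
    return true;
}
```

In both forms the second prefix test runs only when the first one fails, which is why the order of the conditions affects run time.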

Because the decompiled version of the binary is extremely similar to the original source code, the decompiled version can be reviewed for security bugs in the same manner the original source can be reviewed. The rest of this chapter focuses on basic information about how disassemblers can be used on native binaries to find security bugs.

More Info

For more information about using disassemblers to find security bugs, see the book Exploiting Software: How to Break Code by Greg Hoglund and Gary McGraw.

Modern compilers often optimize and remove unnecessary code. By disassembling a binary, you can see exactly what is executed. An interesting example is the ZeroMemory function. For security reasons, programmers often remove sensitive information from memory after the data is no longer needed. A common way to do this is to call ZeroMemory, which fills the memory with zeros. Although this sounds like a good solution, many compilers notice that the memory is never read again, treat the ZeroMemory call as unnecessary, and do not include it in the binary. This means that in the actual program the memory is not filled with zeros and still contains the sensitive information. This problem isn’t obvious from the original source code, but it is obvious in the disassembled binary. To address this particular concern, Microsoft created the SecureZeroMemory function, which is not optimized out of the binary.
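The difference can be sketched in portable C. This is an illustration of the general idea under stated assumptions, not the implementation of ZeroMemory or SecureZeroMemory: the function names wipe_plain and wipe_volatile are hypothetical, and whether a given compiler actually drops the first call depends on the compiler and its optimization settings.

```c
#include <stddef.h>
#include <string.h>

/* Plain wipe. If the buffer is never read again, an optimizer may
   treat this store as dead and leave it out of the binary. */
void wipe_plain(char *password, size_t length)
{
    memset(password, 0, length);
}

/* Wipe through a volatile pointer. Compilers generally treat each
   volatile write as an observable effect and keep it, which is a
   common way to write a wipe that survives optimization. */
void wipe_volatile(void *buffer, size_t length)
{
    volatile unsigned char *cursor = (volatile unsigned char *)buffer;
    while (length--) {
        *cursor++ = 0;
    }
}
```

Disassembling a release build and looking for the stores after the last use of the buffer is the reliable way to confirm which version the compiler kept.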

More Info

For more detailed information about ZeroMemory vs. SecureZeroMemory, see pages 322–326 in Writing Secure Code, 2nd edition, by Michael Howard and David LeBlanc (Microsoft Press).

Important

Obfuscators and packers/protectors are two types of tools that attempt to hinder reverse engineering by making it difficult to disassemble/decompile a binary or by making the results difficult to understand. Obfuscators scramble binaries (both native code and bytecode). Packers and protectors compress the code and at run time uncompress and execute the code. If a binary is packed or protected, the results of decompiling/disassembling it will contain only the unpacking code and the compressed data. Although these tools can slow down the reverse engineering process, they cannot prevent it completely. In both situations, all of the code in the binary is still available, but might be harder to read. For example, the compression routine inside a packed/protected binary can be reverse engineered, which enables you to uncompress the binary back to its unpacked/unprotected state.

Entire books have been written on how various functions can lead to security vulnerabilities. You’ve seen many examples of such vulnerabilities referenced throughout this book. If you have access to source code, a common way to find security bugs is to search for commonly misused functions. Many people erroneously believe that keeping source code private makes a product more secure. However, even without source code, you can perform code reviews using an approach similar to the one used when source is available.

If you don’t have access to the source code, how do you know whether any of these problematic functions are used? You could use the monitoring tools discussed earlier in this chapter to discover the functions, but some other functions are compiled into the program’s binary and won’t be picked up by monitoring tools. Because some disassemblers recognize the assembly instructions that make up these functions and flag them as the original function name, you can disassemble the binary to better understand whether and how these functions are used.

Black box testers are only able to test code that they know how to exercise. A white box tester can search through the product’s code looking for certain insecure coding practices. For example, find all instances of the strcpy function. Once a suspicious piece of code is identified, the code can be traced backward to identify how an attacker could call into that code. By using a disassembler, you can take this approach against a binary without access to the original source code. The following example shows how to identify format string vulnerabilities without access to the original source code.

Tip

One of the more widely used commercial disassemblers is the Interactive Disassembler (IDA) (http://www.datarescue.com). IDA Pro is the professional version of this product. In this text, IDA Pro is referred to simply as IDA.

IDA allows easy navigation of the disassembled code and commenting the code, and includes a basic debugger. Another strength of IDA is that it is able to identify common library routines compiled into the binary and can automatically comment these. For example, IDA can recognize the assembly code for strcpy in a binary created by common compilers and can call this out. Features like this are great time-savers. Because of its advanced functionality and ease of use, IDA is used to reverse engineer native binaries in this chapter.

Load the sample program Formatstring.exe (which you can find on the companion Web site). The program takes the path and name of a text file as a command-line parameter. Run the program, and you will quickly see that the program echoes the contents of the specified file to the console. Based on the name of this program and its simplicity, you likely have a good idea of how to use the black box approach to test for security bugs. Working through the following example will help you understand an approach that, in more complex programs, will uncover bugs not obvious to the black box tester.

Step 1: Disassembling the binary. Disassemble Formatstring.exe by opening it in IDA. Part of the IDA autoanalysis feature is the capability of identifying the assembly instructions used from such common functions as strcpy and printf. The analysis happens automatically after the binary is disassembled.

Step 2: Understanding which functions are called. After IDA has finished its autoanalysis, you can see whether Formatstring.exe makes any calls to potentially dangerous functions. One of the potentially dangerous functions is printf. Look in the IDA Functions Window, as shown in Figure 17-12, to see whether the target (Formatstring.exe) calls printf. The printf function is used by this program. Time to investigate how printf is used.

Tip

Please read Chapter 9 now if you haven’t already, because this section won’t make a lot of sense unless you understand the dangers of format strings.

Step 3: Finding calls to unsafe functions. To investigate whether printf is called in an unsafe manner, double-click _printf in the Functions Window. This places the Disassembly window on line 0x00401149. You want to know all of the places this program calls printf, which can be determined by scrolling up a few lines. The beginning of the code listing for the printf function is _printf proc near. Click the word _printf to select it, and then press X to display a list of all the places the target program calls printf. There are five calls to printf, as shown in Figure 17-13.

Step 4: Determining whether a function call is made in a potentially unsafe manner. Remember that calls to printf can be done like either printf(“%s”, szVariable); or printf(szVariable);. If an attacker can control szVariable, only the second example is a format string bug. Time to check whether there are calls like this to printf in Formatstring.exe.
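The two call shapes can be compared safely in a few lines of C. This is a minimal sketch: the function names echo_unsafe and echo_safe are hypothetical, and snprintf is used instead of printf only so that the result can be inspected in a buffer. The input used in the usage note contains %%, which consumes no arguments, so the unsafe call stays well defined here. Specifiers such as %x or %n in attacker data would not be.

```c
#include <stdio.h>
#include <stddef.h>

/* Unsafe shape: the caller's data is used as the format string,
   so any % sequences in it are interpreted. */
int echo_unsafe(char *out, size_t size, const char *input)
{
    return snprintf(out, size, input);
}

/* Safe shape: a constant format string; the data is only an argument
   and is copied through unchanged. */
int echo_safe(char *out, size_t size, const char *input)
{
    return snprintf(out, size, "%s", input);
}
```

Given the input 100%%, echo_safe copies the text unchanged, while echo_unsafe interprets %% and produces 100%. Many compilers can warn about the unsafe shape (for example, GCC with -Wformat-security), but that help is not available when only the binary is at hand, which is why the number of PUSH operations before the call matters.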

To investigate whether printf is called in an unsafe manner, select the first item in the Xrefs To _Printf window as shown in Figure 17-13, and click OK. IDA shows the first place printf is called; you should see the following code:

Only one PUSH operation comes before making this call, meaning that printf is called with only one parameter. If this parameter is controlled by the attacker, it’s a bug. In this case, the constant string beginning with “Could not process the entire file” is being pushed onto the stack. (You can verify that the string is from a read-only part of memory by pressing Shift+F7 to look at the IDA segment’s table and checking the columns to ensure it is read-only.) An attacker can’t control a constant string, so this isn’t a bug.

Repeat steps 2 and 3 for each call to the printf function. It isn’t necessary to use the Functions Window to select the original printf function. To save time, you can select any appearance of printf and then press X to bring up the Xrefs To _Printf window.

The second call to printf is very similar to the first and uses a constant string. The third and fourth calls are a little different and look like the following:

There are two pushes onto the stack before the call to printf. The second PUSH is used as the first parameter to printf. This pushes a constant string containing a format string onto the stack. In this example, the string is “Error opening the file ‘%s’.” The second parameter (referenced by the EDX register) is formatted with the %s in the first parameter. This means the printf call looks like printf(“Error opening the file ‘%s’.”, szVariable);. This isn’t a format string bug.

The fifth and final printf call contains only one parameter, and it doesn’t appear to be a constant string. Here is the disassembled fifth call to printf:

This call takes the form printf(variable);. If the ECX register references data the attacker controls, this is a format string bug.

Step 5: Determining whether an attacker can control the data. You’ve found a printf call that needs investigation. How can you determine whether the ECX register is pointing to attacker-controlled data? A few ways include tracing through the code wherever attacker data could enter the application and seeing whether it ever hits the printf call, tracing backward through the code starting at the suspicious printf call and figuring out how the value in ECX is determined, and setting a breakpoint on the suspicious printf call and checking the contents ECX references.

Tracing code from the entry points could be very time-consuming. Tracing code backward is faster, but still somewhat time-consuming. Setting a breakpoint and checking the contents of the parameter is fast, but doesn’t guarantee you’ll hit all code paths. For example, the parameter could contain data that isn’t controlled by an attacker (or you in your tests), but under different conditions it could be. Using the breakpoint approach alone will not ensure you hit all code paths for making this printf call.

There is never enough time to completely test an application; good testers are constantly doing cost/benefit analyses. In this situation, it would be most efficient to use the breakpoint approach. If this doesn’t yield a bug, you can follow up with the more thorough backward-tracing approach, which in this case shows that ECX is used as lpBuffer in a call to the ReadFile API and therefore receives the contents of the file.

One way to set the breakpoint is to compute the offset address of the assembly instruction when the binary is loaded in memory at run time and set a breakpoint using a debugger, but there’s an even easier way. IDA Pro version 4.5 and later include an integrated debugger. Select the suspicious printf call, and set a breakpoint on it by pressing F2. Because Formatstring.exe takes a filename on the command line, you need to specify that IDA uses command-line parameters when debugging. Select Debugger, and then click Process Options. For the parameters, enter c:\temp\input.txt. Now create a file with that name containing the text “Test Input.”

Start the debugger by pressing F9. You should hit the breakpoint on the suspicious printf call. Remember, the register storing the value pushed onto the stack is ECX. You want to see the value referenced by the ECX register. Click in the IDA View-ESP window, and press G (to go to a memory address). You are prompted to enter an address; type ECX, and then click OK.

You should see the memory contents referenced by ECX. This data isn’t currently displayed as a string. To display it as a string click the line where ECX is noted, and press A, which displays bytes as a string. The data is shown as a string (see Figure 17-14). Does this data look familiar? It’s the contents of the input file. If attackers can control the contents of this input file, they can exploit this bug.

As you can see, it isn’t necessary to have the source code or symbols to perform a review of suspicious functions. Don’t be fooled into thinking that not shipping the source code provides security protection. As you’ll see in the next section, a program’s algorithm can be uncovered using an approach similar to this one.

Auditing commonly misused functions is a good way to find bugs quickly, but is an approach that can miss any design or implementation flaw unrelated to those functions. Going a step deeper than the preceding example, you can use a disassembler to ascertain the entire algorithm and the implementation details of the disassembled binary. This section discusses how a security flaw can be identified and exploited by understanding the disassembled code.

Tip

The Open Reverse Code Engineering Web site (http://www.openrce.org/), started by Pedram Amini, is dedicated to sharing knowledge about reverse engineering and is worth checking out.

The RemoteAuth.exe program included on the companion Web site allows you to create accounts and authenticate these accounts by using the correct password.

To test the authentication scheme, you need a test account. Create a user named user1 with the password 2_ManySec3ts with the following command line:

RemoteAuth.exe NEW user1 2_ManySec3ts

You can successfully authenticate with the following command line:

RemoteAuth.exe AUTH user1 2_ManySec3ts

Modify the password in the preceding command line and attempt to authenticate again to see what happens when authentication fails. When the correct user name and password are supplied, the text “Access Granted” is displayed. When authentication fails, “Access Denied” is displayed.

There’s a security hole that allows an attacker to authenticate as a user without knowing the user’s password. You’ll have a hard time finding this flaw quickly (if at all) using the black box approach. However, the disassembly makes it clear that there is a major problem.

For brevity we don’t include detailed step-by-step instructions, but instead focus on the important areas. Feel free to use the information you learned from the previous examples to follow along.

Important

For readability we’ve added comments to the following disassembly examples. If you are following along in IDA, you won’t see many of these comments. If you know a little assembly, you will likely be able to determine what the code is doing without reading these comments.

Step 1: Hashing together the entered user name and password. Lines 0x00401291–0x0040135A of the disassembly include fairly lengthy but easy-to-read code that calls the following:

CryptAcquireContextW to get a cryptographic service provider

CryptCreateHash to specify that a SHA1 hash be created

CryptHashData to add the user name to the hash

CryptHashData to add the password to the hash

CryptGetHashParam to retrieve the newly created hash

This hash, along with the user name, is stored in a file named Secrets.txt.

Step 2: Retrieving the password from Secrets.txt. The following code retrieves the requested user name from Secrets.txt:

By understanding the preceding assembly, you have a good understanding of how the user names and hashes are retrieved. The user name and hash are both retrieved as part of the fread call. Because the user name is NULL-terminated in Secrets.txt, a string compare compares only up to the NULL and does not include the hash. A simplified pseudocode listing for the preceding disassembly follows. We include the corresponding line numbers from the disassembly in the comments on the right.

ReadAndCompareUsrName:
    bytesRead = fread(buffer, 1, 384, file);              //Lines 0x401400-401410
    if (bytesRead != 384) goto AccessDenied;              //Lines 0x401418-40141D
    bReturn = strcmp(UserNameEntered,UserNameFromFile);   //Lines 0x401431-401437
    if (bReturn == 0) goto ComparePasswdHashes;           //Lines 0x40143A-0x40143C
    else goto ReadAndCompareUsrName;                      //Line 0x401444

Step 3: Comparing the password hashes. The following disassembly makes the decision whether authentication will succeed or fail. Can you spot the flaw? Hint: The added comments are very telling.

The flaw is on line 0x0040144D. Only the first byte of each hash is compared. If an attacker can enter a password that, when hashed, has the same first character as the hash stored in Secrets.txt, the attacker will be able to log on without using the correct password. For example, the password aaaaalh can be used to successfully authenticate the user1 account.

You can determine that by reading the assembly code to understand how RemoteAuth.exe creates hashes (discussed in step 1 of this example). You can use the same algorithm to brute force passwords until the hash has the same first character as the target hash’s first character.

Even if the attacker cannot determine the first character of the target hash (because he or she might not have Read access to Secrets.txt), the attacker can use the hash generation algorithm to reduce the number of logon attempts required to gain access to an account by never attempting two passwords that result in a hash with the same first character.
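The flaw and its fix can be sketched in C. The listing is an illustration modeled on the behavior described for line 0x0040144D, not the disassembled code itself. The function names are hypothetical, and the sketch assumes the hash is handled as 20 raw bytes, the length of a SHA1 hash.

```c
#include <string.h>

#define HASH_LENGTH 20  /* a SHA1 hash is 20 bytes long */

/* Flawed check: only the first byte of each hash is compared. */
int hashes_match_flawed(const unsigned char *entered,
                        const unsigned char *stored)
{
    return entered[0] == stored[0];
}

/* Intended check: every byte of the two hashes must match. */
int hashes_match_full(const unsigned char *entered,
                      const unsigned char *stored)
{
    return memcmp(entered, stored, HASH_LENGTH) == 0;
}
```

Under that assumption the first byte has only 256 possible values, so a randomly chosen password passes the flawed check about once in 256 tries, and an attacker who never repeats a first byte needs at most 256 logon attempts.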

By examining the disassembled binary and using assembly knowledge, it takes only a few minutes to find this bug. Without examining the disassembly or source code, you would likely miss this bug, or it could take you a long time to find.

Tip

Some binary analysis can be automated by using existing or creating new IDA plug-ins or scripts. The IDA Palace (http://www.backtrace.de/) contains several plug-ins and scripts that are useful for this purpose.

Software vendors often issue security patches to fix security bugs. These patches replace or modify certain binaries on the machine being patched. Many times, the details of the bugs fixed are disclosed on security mailing lists. Other times, no details or very vague details are made public. Dedicated attackers can disassemble the updated binaries and use their analytical skills to find out which code was changed by the update. By knowing which code changed, an attacker can more easily understand how to exploit the flaw on unupdated systems.

A clever security researcher named Halvar Flake has given several presentations on how he does this at the Black Hat Security conference. Details of his technique can be found at http://www.blackhat.com/presentations/bh-usa-04/bh-us-04-flake.pdf. During the presentation he states that he can often disassemble and analyze an update and build an exploit for the bug in less than one day! This is yet another compelling reason why it is important to test and fix security bugs before software is made available to the public.

Halvar Flake also published a paper titled “Graph-Based Comparison of Executable Objects” (http://www.sabre-security.com/files/dimva_paper2.pdf) that describes how the prepatch and postpatch versions of H323ASN1.DLL were compared in the hope of uncovering details of a security update for Microsoft Internet Security and Acceleration Server (ISA) 2000. What he found was very interesting. He discovered that the update performed range checks on one of the parameters before calling the ASN1PERDecZeroTableCharStringNoAlloc function (part of the Microsoft ASN.1 library) to ensure that the parameter was smaller than 129. This meant that the real bug was in the ASN1PERDecZeroTableCharStringNoAlloc function, but calling it in an exploitable way was prevented by ISA Server. Halvar then searched for other binaries that called this function. If these didn’t perform the range check, they might allow an attacker to hit the same bug that occurred in ISA Server. One place he found that didn’t contain the range check was in Microsoft NetMeeting. So, by adding the range check in the ISA Server update, Microsoft inadvertently revealed an exploitable condition in NetMeeting! Microsoft was contacted and the ASN1PERDecZeroTableCharStringNoAlloc function was fixed.
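The pattern behind this story can be sketched in C. Everything in the listing is hypothetical: the function names, the buffer size, and the copy itself are stand-ins chosen to echo the "smaller than 129" range check, and none of it is the ASN.1 library code.

```c
#include <stddef.h>
#include <string.h>

#define TABLE_CAPACITY 128  /* hypothetical size, echoing the range check */

/* Shared routine with the bug: it trusts count. */
int decode_unchecked(unsigned char *table,
                     const unsigned char *input, size_t count)
{
    memcpy(table, input, count);
    return 0;
}

/* Caller-side patch: protects only this caller. Other callers of
   decode_unchecked remain exposed, and the new check hints at the bug. */
int patched_caller(unsigned char *table,
                   const unsigned char *input, size_t count)
{
    if (count > TABLE_CAPACITY)
        return -1;
    return decode_unchecked(table, input, count);
}

/* Root-cause fix: the check lives in the shared routine itself,
   so every caller is covered. */
int decode_checked(unsigned char *table,
                   const unsigned char *input, size_t count)
{
    if (count > TABLE_CAPACITY)
        return -1;
    memcpy(table, input, count);
    return 0;
}
```

Comparing a prepatch and postpatch binary would show the new comparison in patched_caller, which points an attacker at decode_unchecked and at every other caller that lacks the check.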

Following are some tips on how you can use analytical observation or reverse engineering to aid your testing:

Compare small changes in input (files, network traffic, etc.) to better understand the input format. As discussed in the Word file example, by including slightly different data in input and comparing the input format, the format can be more easily understood.

Modify a program’s binary or memory to allow for easier testing of malicious input scenarios. It can be difficult to quickly craft malicious input that is formatted correctly enough to pass sanity checks by the target application; for example, if the data is encoded or digitally signed by the client and sent to the server. By modifying the program’s binary or memory, you can include data in your input that isn’t normally allowed by the application, but still format it correctly.

Unless necessary, don’t make debug symbols available. In many situations, it is useful for customers to have access to symbols. For example, the symbols for the Windows operating system are publicly available so that developers can more easily debug software that is built on top of it. Unless there is a legitimate need, don’t blindly give access to your product’s symbols. Information included in symbols helps attackers analyze the software.

Copy protection routines require testing without using source code. To assess how easy it is to bypass a program’s copy protection scheme without access to the original source code, attempt to bypass it using debuggers, decompilers, and disassemblers.

Realize that if you are trying to hide information, you’re fighting a losing battle. Although information included in binaries and memory that an attacker can control can be obfuscated or hidden, a dedicated attacker can uncover this information.

Fix the root cause. If an API contains a security problem, fix the API instead of just adding a validation routine before calling the API. Validation routines might solve the cases that you are aware of but may also alert attackers to the root cause. Attackers will eagerly seek out all callers of the API in the hope that some don’t perform validation. Some of these callers might be in other applications you ship.

Use decompilers and disassemblers for components missing source code. If you don’t have the source code to a component you ship, decompilers and disassemblers can help you better understand the component. The knowledge you gain can aid your security testing.

If your analysis and testing are directed solely at code that you yourself created, you are almost certainly within your rights to do all of the types of work discussed in this chapter. However, if the code you wish to study contains components developed or distributed by others, you should be aware that there may be laws or license agreements that could be violated by doing some of these things. For example, the U.S. Digital Millennium Copyright Act prohibits the circumvention of technological measures to protect copyrighted material except under limited circumstances, and many End User License Agreements contain provisions that purport to restrict or prohibit decompilation, disassembly, or other reverse engineering of licensed software. Therefore, you should talk with an attorney and obtain permission before doing work in this area.

There are many levels of examination and analysis that can help you understand and fix security bugs in your applications. Simple approaches involve the observation of programs in use to study their behavior and do not require you to understand the inner workings of the code. Reverse engineering requires a higher level of understanding of the code and its implementation details and, if the source code is not available, the use of a debugger and/or decompiler/disassembler to reveal its inner workings.

Remember also that reverse engineering enables attackers with access to the binary to obtain all of the implementation details of the code. In addition to understanding the binary’s implementation to find security flaws, reverse engineering can be used to modify a program’s original behavior to fix bugs or to bypass software-imposed restrictions. Obfuscation and other techniques that attempt to prevent reverse engineering might make the process more difficult, but they cannot completely prevent it. Examination of your code by the methods discussed in this chapter is a valuable tool for understanding how others might exploit the binaries you ship.