Chapter 3. Electronic Discovery

James O. Holley, Paul H. Luehr, Jessica Reust Smith and Joseph J. Schwerha IV

Contents

Introduction to Electronic Discovery

Legal Context

Case Management

Identification of Electronic Data

Forensic Preservation of Data

Data Processing

Production of Electronic Data

Conclusion

Cases

References

Introduction to Electronic Discovery

Electronic discovery or “e-discovery” is the exchange of data between parties in civil or criminal litigation. The process is largely controlled by attorneys who determine what data should be produced based on relevance or withheld based on claims of privilege. Forensic examiners, however, play crucial roles as technical advisors, hands-on collectors, and analysts.

Some examiners view electronic discovery as a second-class endeavor, devoid of the investigative excitement of a trade secret case, an employment dispute, or a criminal “whodunit.” These examiners, however, overlook the enormous opportunities and challenges presented by electronic discovery. In sheer economic terms, e-discovery dwarfs traditional digital forensics and will account for $10.67 billion in estimated revenues by 2010 (Socha & Gelbman, 2008a).

This financial projection reflects the high stakes in e-discovery, where the outcome can put a company out of business or a person in jail. Given the stakes, there is little room for error at any stage of the e-discovery process—from initial identification and preservation of evidence sources to the final production and presentation of results. Failing to preserve or produce relevant evidence can be deemed spoliation, leading to fines and other sanctions.

In technical terms, electronic discovery also poses a variety of daunting questions: Where are all the potentially relevant data stored? What should a company do to recover data from antiquated, legacy systems or to extract data from more modern systems like enterprise portals and cloud storage? Does old data need to be converted? If so, will the conversion process result in errors or changes to important metadata? Is deleted information relevant to the case? What types of false positives are being generated by keyword hits? Did the tools

In Coleman v. Morgan Stanley, after submitting a certificate to the court stating that all relevant e-mail had been produced, Morgan Stanley found relevant e-mail on 1600 additional backup tapes. The judge decided not to admit the new e-mail messages, and based on the company's failure to comply with e-discovery requirements, the judge issued an “adverse inference” to the jury, namely that they could assume Morgan Stanley had engaged in fraud in the underlying investment case. As a result, Morgan Stanley was ordered to pay $1.5 billion in compensatory and punitive damages. An appeals court later overturned this award, but the e-discovery findings were left standing, and the company still suffered embarrassing press like The Wall Street Journal article, “How Morgan Stanley botched a big case by fumbling e-mails” (Craig, 2005).

Confusion over terminology among lawyers, forensic examiners, and lay people adds to the complexity of e-discovery. For instance, a forensic examiner may use the term “image” to describe a forensic duplicate of a hard drive, whereas an IT manager may call routine backups an “image” of the system, and a lawyer may refer to a graphical rendering of a document (e.g., in TIFF format) as an “image.” These differing interpretations can lead to misunderstandings and major problems in the e-discovery process, adding frustration to an already pressured situation.

Fortunately, the industry is slowly maturing and establishing a common lexicon. Thanks to recent definitions within the 2006 amendments to the U.S. Federal Rules of Civil Procedure (F.R.C.P.), attorneys and examiners now typically refer to e-discovery data as ESI—short for Electronically Stored Information. This term is interpreted broadly and includes information stored on magnetic tapes, optical disks, or any other digital media, even if it is not technically stored in electronic form. In addition, George Socha and Thomas Gelbman have created a widely accepted framework for e-discovery consulting known as the Electronic Discovery Reference Model (EDRM). Shown in Figure 3.1, the EDRM breaks down the electronic discovery process into six different stages.

Figure 3.1 Diagram of the Electronic Discovery Reference Model showing stages from left to right (Socha & Gelbman, 2008a).

The first EDRM stage involves information management and the process of “getting your electronic house in order to mitigate risk & expenses should electronic discovery become an issue” (Socha & Gelbman, 2008a). The next stage, identification, marks the true beginning of a specific e-discovery case and describes the process of determining where ESI resides, its date range and format, and its potential relevance to a case. Preservation and collection cover the harvesting of data using forensic or nonforensic tools. The processing stage then covers the filtering of information by document type, date range, keywords, and so on, and the conversion of the resulting data into more user-friendly formats for review by attorneys. At this stage, forensic examiners may be asked to apply their analysis to documents of particular interest to counsel. During production, data are turned over to an opposing party in the form of native documents, TIFF images, or specially tagged and encoded load files compatible with litigation support applications like Summation or Concordance. Finally, during the presentation stage, data are displayed for legal purposes in depositions or at trial. The data are often presented in their native or near-native format for evidentiary purposes, but specific content or properties may be highlighted for purposes of legal argument and persuasion.

The Electronic Discovery Reference Model outlines objectives of the processing stage, which include: “1) Capture and preserve the body of electronic documents; 2) Associate document collections with particular users (custodians); 3) Capture and preserve the metadata associated with the electronic files within the collections; 4) Establish the parent-child relationship between the various source data files; 5) Automate the identification and elimination of redundant, duplicate data within the given dataset; 6) Provide a means to programmatically suppress material that is not relevant to the review based on criteria such as keywords, date ranges or other available metadata; 7) Unprotect and reveal information within files; and 8) Accomplish all of these goals in a manner that is both defensible with respect to clients’ legal obligations and appropriately cost-effective and expedient in the context of the matter.”
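Objectives 5 and 6 in the list above (eliminating duplicates and programmatically suppressing nonresponsive material) lend themselves to a short illustration. The following is a minimal sketch in Python, not a description of how any commercial processing tool works. The `Doc` record, the `dedupe` and `cull` functions, and the sample documents are all hypothetical, and the sketch assumes plain-text content, a single metadata date per file, and one global deduplication pass across custodians.

```python
import hashlib
from dataclasses import dataclass
from datetime import date


@dataclass
class Doc:
    """Hypothetical record for one collected file."""
    custodian: str   # person the collection is associated with
    name: str        # file name, used here only for its extension
    modified: date   # a single metadata date, for simplicity
    content: bytes   # raw file content


def dedupe(docs):
    """Keep the first document seen for each distinct SHA-256 content hash."""
    seen = set()
    unique = []
    for doc in docs:
        digest = hashlib.sha256(doc.content).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


def cull(docs, extensions, start, end, keywords):
    """Keep only documents matching the file types, date range, and keywords."""
    kept = []
    for doc in docs:
        ext = doc.name.rsplit(".", 1)[-1].lower() if "." in doc.name else ""
        text = doc.content.decode("utf-8", errors="ignore").lower()
        if (ext in extensions
                and start <= doc.modified <= end
                and any(k.lower() in text for k in keywords)):
            kept.append(doc)
    return kept


# Made-up sample data: two identical memos, one off-topic file, one old file.
docs = [
    Doc("alice", "memo.doc", date(2007, 3, 1), b"Pricing plan for Project Falcon"),
    Doc("bob", "memo_copy.doc", date(2007, 3, 2), b"Pricing plan for Project Falcon"),
    Doc("bob", "lunch.txt", date(2007, 3, 5), b"Lunch on Friday?"),
    Doc("alice", "old.doc", date(2001, 1, 1), b"Project Falcon kickoff"),
]

unique = dedupe(docs)
responsive = cull(unique, {"doc", "xls", "pdf"},
                  date(2006, 1, 1), date(2008, 12, 31), ["falcon"])
print(len(docs), len(unique), [d.name for d in responsive])
```

Whether duplicates should be removed across all custodians or only within each custodian's collection is a decision for counsel, because global deduplication discards the fact that a second custodian also held the document. Recording the counts at each step, as the final line does, also supports the defensibility requirement in objective 8.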

This chapter explores the role of digital forensic examiners throughout these phases of e-discovery, particularly in large-scale cases involving disputes between organizations. It addresses the legal framework for e-discovery as well as unique forensic questions that arise around case management, identification and collection of ESI, and culling and production of data. Finally, this chapter describes common pitfalls in the complex, high-stakes field of e-discovery, with the goal of helping both new and experienced forensic examiners safely navigate this potential minefield.

Legal Context

In the past few years, the complexity of ESI and electronic discovery has increased significantly. The set of governing regulations has become so intricate that even professionals confess that they do not understand all the rules. A 2008 survey of in-house counsel found that 79% of the 203 respondents in the United States and 84% of the 200 respondents in the United Kingdom were not up to date with ESI regulations (Kroll Ontrack, 2008). Although it is beyond the scope of this chapter to cover all aspects of the legal context of discovery of ESI, the points that are most relevant to digital investigators are presented in this section.

This chapter focuses on the requirements of the United States, but digital examiners should be aware that even more stringent requirements may be present when evidence is in foreign countries. Most of Europe, for example, affords greater privacy protections to individuals in the workplace. Therefore, in countries such as France, it is often necessary to obtain the consent of an employee before conducting a search on his or her work computer. The very acts of imaging and reviewing a hard drive also may be subject to different country-specific regulations. Spanish rules, for instance, may require examiners to image a hard drive in the presence of a public notary, and analysis may be limited to information derived from specific keyword searches, not general roaming through an EnCase file. Thus, a civil examination in that country may look more like a computer search, which is subject to a criminal search warrant in the United States. For more information on conducting internal investigations in European Union countries, see Howell & Wertheimer (2008).

Legal Basis for Electronic Discovery

In civil litigation throughout the United States, courts are governed by their respective rules of civil procedure. Each jurisdiction has its own set of rules, but the rules of different courts are very similar as a whole. 1 As part of any piece of civil litigation, the parties engage in a process called discovery. In general, discovery allows each party to request and acquire relevant, nonprivileged information in possession of the other parties to the litigation, as well as third parties (F.R.C.P. 26(b)). When that discoverable information is found in some sort of electronic or digital format (e.g., on a hard disk drive or compact disc), the process is called electronic discovery or e-discovery for short.

1 For the purposes of this chapter, we are concentrating on the Federal Rules of Civil Procedure; however, each state has its own set of civil procedures.

The right to discover ESI is now well established. On December 1, 2006, the amended F.R.C.P. went into effect and directly addressed the discovery of ESI. Although states have not directly adopted the principles of these amendments en masse, many states have changed their rules to follow the 2006 F.R.C.P. amendments.

ESI Preservation: Obligations and Penalties

Recent amendments to various rules of civil procedure require attorneys—and therefore digital examiners—to work much earlier, harder, and faster to identify and preserve potential evidence in a lawsuit. Unlike paper documents that can sit undisturbed in a filing cabinet for several years before being collected for litigation, many types of ESI are more fleeting. Drafts of smoking-gun memos can be intentionally or unwittingly deleted or overwritten by individual users, server-based e-mail can disappear automatically following a system purge of data in a mailbox that has grown too large, and archived e-mail can disappear from backup tapes that are being overwritten pursuant to a scheduled monthly tape rotation.

Just how early attorneys and digital examiners need to act will vary from case to case, but generally they must take affirmative steps to preserve relevant information once litigation or the need for certain data is foreseeable. In some cases like employment actions, an organization may need to act months before a lawsuit is even filed. For example, in Broccoli v. Echostar Communications, the court determined that the defendant had a duty to act when the plaintiff communicated grievances to senior managers one year before the formal accusation. Failure to preserve data once this duty arises can result in severe fines and other penalties, such as those described next.

The seminal case of Zubulake v. UBS Warburg outlined many ESI preservation duties in its decision. Laura Zubulake was hired as a senior salesperson at UBS Warburg. She eventually brought a lawsuit against the company for gender discrimination, and she requested “all documents concerning any communication by or between UBS employees concerning Plaintiff.” UBS produced about 100 e-mails and claimed that its production was complete, but Ms. Zubulake's counsel learned that UBS had not searched its backup tapes. What began as a fairly mundane employment action turned into a grand e-discovery battle, generating seven different opinions from the bench and resulting in one of the largest jury awards to a single employee in history.

The court stated that “a party or anticipated party must retain all relevant documents (but not multiple identical copies) in existence at the time the duty to preserve attaches, and any relevant documents created thereafter,” and outlined three groups of interested parties who should maintain ESI:

▪ Primary players: Those who are “likely to have discoverable information that the disclosing party may use to support its claims or defenses” (F.R.C.P. 26(a)(1)(A)).

▪ Assistants to primary players: Those who prepared documents for those individuals that can be readily identified.

▪ Witnesses: “The duty also extends to information that is relevant to the claims or defenses of any party, or which is ‘relevant to the subject matter involved in the action’” (F.R.C.P. 26(b)(1)).

The Zubulake court recognized the particular difficulties associated with retrieving data from backup tapes and noted that they generally do not need to be saved or searched, but it carved out one exception:

[I]t does make sense to create one exception to this general rule. If a company can identify where particular employee documents are stored on backup tapes, then the tapes storing the documents of “key players” to the existing or threatened litigation should be preserved if the information contained on those tapes is not otherwise available. This exception applies to all backup tapes.

In addition to clarifying the preservation obligations in e-discovery, the Zubulake case revealed some of the penalties that can befall those who fail to meet these obligations. The court sanctioned UBS Warburg for failing to preserve and produce e-mail backup tapes and important messages, or for producing some evidence late. The court required the company to pay for additional depositions that explored how data had gone missing in the first place. The jury heard testimony about the missing evidence and returned a verdict for $29.3 million, including $20.2 million in punitive damages.

The Zubulake court held the attorneys partially responsible for the lost e-mail in the case and noted, “[I]t is not sufficient to notify all employees of a litigation hold and expect that the party will then retain and produce all relevant information. Counsel must take affirmative steps to monitor compliance so that all sources of discoverable information are identified and searched” (Zubulake v. UBS Warburg, 2004). Increasingly, attorneys have taken this charge to heart and frequently turn to their digital examiners to help assure that their discovery obligations are being met.

Rather than grappling with these challenges every time new litigation erupts, some organizations are taking a more strategic approach to prepare for e-discovery and engage in data-mapping before a case even begins. The two most fundamental aspects of being prepared for e-discovery are knowing the location of key data sources and ensuring that they meet regulatory requirements while containing the minimum data necessary to support business needs. The data-mapping process involves identifying pieces of data that are key to specific and recurring types of litigation (e.g., personnel files that are relevant to employment disputes). In turn, organizations attempt to map important pieces of data to functional categories that are assigned clear backup and retention policies. Organizations can then clean house and expunge unnecessary data, not to eliminate incriminating digital evidence, but to add greater efficiency to business operations and to reduce the amount of time and resources needed to extract and review the data for litigation.

In the best of all worlds, the data-mapping process cleanses a company of redundant data and rogue systems and trains employees to store their data in consistent forms at predictable locations. In a less perfect world, the data-mapping process still allows a company to think more carefully about its data and align an organization's long-term business interests with its recurring litigation concerns. For example, the data-mapping process may prompt an organization to create a forensic image of a departing employee's hard drive, especially when the employee is a high-ranking officer or is leaving under a cloud of suspicion.
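The result of a data-mapping exercise can be pictured as a small inventory that ties each data source to a functional category, a location, and a retention period. The sketch below is one hypothetical way to represent such a map in Python. The `DataSource` fields, the system names, and the retention periods are illustrative assumptions, not values drawn from any real policy or from the cases discussed in this chapter.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class DataSource:
    """Hypothetical entry in an organization's data map."""
    name: str              # system name (made up for this example)
    category: str          # functional category assigned during data-mapping
    location: str          # where the data resides
    retention_days: int    # how long routine policy keeps the data
    on_hold: bool = False  # True once a litigation hold covers this source


def sources_for(data_map, category):
    """Return every mapped source in a functional category."""
    return [s for s in data_map if s.category == category]


def place_hold(data_map, category):
    """Mark every source in a category so that routine purging is suspended."""
    for source in sources_for(data_map, category):
        source.on_hold = True


def may_purge(source, created, today):
    """Allow a routine purge only if retention has lapsed and no hold applies."""
    expired = today > created + timedelta(days=source.retention_days)
    return expired and not source.on_hold


data_map = [
    DataSource("HR file share", "personnel files", "fileserver01", 365),
    DataSource("Mail server", "e-mail", "mailserver01", 90),
]

hr = sources_for(data_map, "personnel files")[0]
created, today = date(2007, 1, 1), date(2008, 6, 1)

print(may_purge(hr, created, today))   # checked before any hold is placed
place_hold(data_map, "personnel files")
print(may_purge(hr, created, today))   # checked again after the hold
```

The point of the design is that a litigation hold overrides the retention schedule by category, so an examiner who knows which categories matter to a dispute can suspend purging everywhere those categories are stored.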

Determining Violations of the Electronic Discovery Paradigm

As pointed out by the Zubulake decision, the consequences of failing to preserve data early in a case can be severe. Under F.R.C.P. Rule 37, a court has broad latitude to sanction a party in a variety of ways. Of course, courts are most concerned about attorneys or litigation parties that intentionally misrepresent the evidence in their possession, as seen in the Qualcomm case.

In Qualcomm Inc. v. Broadcom Corp., the underlying dispute centered on whether Qualcomm could claim a patent to video compression technology after it allegedly had participated in an industry standards-setting body known as the Joint Video Team (JVT). Qualcomm brought a lawsuit against Broadcom claiming patent infringement, but the jury ultimately returned a unanimous verdict in favor of Broadcom.

During all phases of the case, Qualcomm claimed that it had not participated in the JVT. Qualcomm responded to numerous interrogatories and demands for e-mails regarding its involvement in the JVT. When a Qualcomm witness eventually admitted that the company had participated in the JVT, over 200,000 e-mails and other ESI were produced linking Qualcomm to the JVT! The court determined that Qualcomm had intentionally and maliciously hidden this information from Broadcom and the court. As a result, Qualcomm had to pay sanctions (including attorney fees) of over $8 million, and several attorneys for Qualcomm were referred to the State Bar for possible disciplinary action.

The following 10 recommendations are provided for investigators and in-house counsel to avoid the same fate as Qualcomm (Roberts, 2008):

1. Use checklists and develop a standard discovery protocol;

2. Understand how and where your client maintains paper files and electronic information, as well as your client's business structures and practices;

3. Go to the location where information is actually maintained—do not rely entirely on the client to provide responsive materials to you;

4. Ensure you know what steps your client, colleagues, and staff have actually taken and confirm that their work has been done right;

5. Ask all witnesses about other potential witnesses and where and how evidence was maintained;

6. Use the right search terms to discover electronic information;

7. Bring your own IT staff to the client's location and have them work with the client's IT staff, employ e-discovery vendors, or both;

8. Consider entering into an agreement with opposing counsel to stipulate the locations to be searched, the individuals whose computers and hard copy records are at issue, and the search terms to be used;

9. Err on the side of production;

10. Document all steps taken to comply with your discovery protocol.

This is a useful and thorough set of guidelines for investigators to use when addressing data preservation issues, and it can also serve as a quick factsheet in preparing for depositions or testimony.

Initial Meeting, Disclosures, and Discovery Agreements

In an effort to make e-discovery more efficient, F.R.C.P. Rule 26(f) mandates that parties meet and discuss how they want to handle ESI early in a case.

Lawyers often depend on digital examiners to help them prepare for and navigate a Rule 26(f) conference. The meeting usually requires both technical and strategic thinking because full discovery can run counter to cost concerns, confidentiality or privacy issues, and claims of privilege. For example, an organization that wants to avoid costly and unnecessary restoration of backup tapes should come to the table with an idea of what those tapes contain and how much it would cost to restore them. At the same time, if a party might be embarrassed by personal information within deleted files or a computer's old Internet history, counsel for that party might be wise to suggest limiting discovery to specific types of active, user documents (.DOC, .XLS, .PDF, etc.). Finally, privilege concerns can often be mitigated if the parties can agree on the list of attorneys that might show up in privileged documents, if they can schedule sufficient time to perform a privilege review, and if they allow each other to “claw back” privileged documents that are mistakenly produced to the other side.
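Once the parties have agreed on a list of attorneys, an examiner can use that list to route messages into a privilege review queue. The following minimal sketch assumes each message is a dictionary with hypothetical `from` and `to` fields; the function name and the addresses are invented for illustration. A flagged message is only a candidate for attorney review, since privilege itself is a legal determination that no address match can make.

```python
def flag_potentially_privileged(messages, attorney_addresses):
    """Split messages into those involving a listed attorney and the rest."""
    attorneys = {a.lower() for a in attorney_addresses}
    flagged, cleared = [], []
    for msg in messages:
        # Compare sender and recipients case-insensitively against the list.
        participants = {msg["from"].lower()} | {r.lower() for r in msg["to"]}
        if participants & attorneys:
            flagged.append(msg)
        else:
            cleared.append(msg)
    return flagged, cleared


# Made-up messages and an agreed attorney list with one address.
messages = [
    {"id": 1, "from": "ceo@example.com", "to": ["counsel@lawfirm.example"]},
    {"id": 2, "from": "sales@example.com", "to": ["ceo@example.com"]},
]

flagged, cleared = flag_potentially_privileged(
    messages, ["Counsel@LawFirm.example"])
print([m["id"] for m in flagged], [m["id"] for m in cleared])
```

A screen like this catches only the addresses it is given, which is one reason a claw-back provision remains useful even when an attorney list has been agreed.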

The initial meetings between the parties generally address what ESI should be exchanged, in what format (e.g., native format versus TIFF images; electronic version versus a printout; CD/DVD versus hard drive delivery media), what will constitute privileged information, and preservation considerations. Lawyers must make ESI disclosures to each other and certify that they are correct. This process is especially constructive when knowledgeable and friendly digital investigators can help lawyers understand their needs, capabilities, and costs associated with various ESI choices. The initial meeting may result in an agreement that helps all the parties understand their obligations. This same agreement can help guide the parties if a dispute should arise.

Consider the case of Integrated Service Solutions, Inc. v. Rodman. Integrated Service Solutions (ISS) brought a claim against Rodman, which in turn required information from a nonparty, VWR. VWR was subpoenaed to produce ESI in connection with either ISS or Rodman. VWR expressed its willingness to provide data but voiced several objections, namely that the subpoena was too broad, compliance costs were too great, and that ISS might obtain unfettered access to its systems (all common concerns).

VWR and ISS were able to reach a compromise in which ISS identified particular keywords, PricewaterhouseCoopers (PwC) conducted a search for $10,000, and VWR reviewed the resulting materials presented by PwC. However, the relationship between VWR and ISS deteriorated, and when VWR stated that it did not possess information pertinent to the litigation, ISS responded that it was entitled to a copy of each file identified by the search as well as a report analyzing the information.

The case went before the court, which looked at the agreement between the parties and held that ISS should receive a report from PwC describing its methods, the extent of VWR's cooperation, and some general conclusions. The court also held that VWR should pay for any costs associated with generating the report.

This case underscores several key principles of e-discovery. First, even amicable relationships between parties involved in e-discovery can deteriorate and require judicial intervention. Second, digital investigators should be sensitive to the cost and disclosure concerns of their clients. Third, digital examiners may be called upon to play a neutral or objective role in the dispute. Finally, the agreement or contract between the parties is crucial in establishing the rights of each party.

Assessing What Data Is Reasonably Accessible

Electronic discovery involves more than the identification and collection of data because attorneys must also decide whether the data meets three criteria for production, namely whether the information is (1) relevant, (2) nonprivileged, and (3) reasonably accessible (F.R.C.P. 26(b)(2)(B)). The first two criteria make sense intuitively. Nonrelevant information is not allowed at trial because it simply bogs down the proceedings, and withholding privileged information makes sense in order to protect communications within special relationships in our society, for example, between attorneys and clients or doctors and patients. Whether information is “reasonably accessible” is harder to determine, yet this is an important threshold question in any case.

In the Zubulake case described earlier, the employee asked for “all documents concerning any communications by or between UBS employees concerning Plaintiff,” which included “without limitation, electronic or computerized data compilations.” UBS argued that the request was overly broad. In that case Judge Shira A. Scheindlin, United States District Court, Southern District of New York, identified three categories of reasonably accessible data: (1) active, online data such as hard drive information, (2) near-line data to include robotic tape libraries, and (3) offline storage such as CDs or DVDs. The judge also identified two categories of data generally not considered to be reasonably accessible: (1) backup tapes and (2) erased, fragmented, and damaged data. Although there remains some debate about the reasonable accessibility of backup tapes used for archival purposes versus disaster recovery, many of Judge Scheindlin's distinctions were repeated in a 2005 Congressional report from the Honorable Lee H. Rosenthal, Chair of the Advisory Committee on the Federal Rules of Civil Procedure (Rosenthal, 2005), and Zubulake's categories of information still remain important guideposts (Mazza, 2007).

The courts use two general factors, burden and cost, to determine the accessibility of different types of data. Using these general factors allows the courts to take into account challenges of new technologies and any disparity in resources among parties (Moore, 2005). If ESI is not readily accessible due to burden or cost, then the party possessing that ESI may not have to produce it (see F.R.C.P. 26(b)). Some parties, however, make the mistake of assessing the burden and cost on their own and unilaterally decide not to preserve or disclose data that is hard to reach or costly to produce. In fact, the rules require that a party provide “a description by category and locations, of all documents” with potentially relevant data, both reasonably and not reasonably accessible (F.R.C.P. 26(a)(1)(B)). This allows the opposing side a chance to make a good cause showing to the court why that information should be produced (F.R.C.P. 26(b)(2)(B)).

These rules mean that digital examiners may have to work with IT departments to change their data retention procedures and schedules, even if only temporarily, until the parties can negotiate an ESI agreement or a court can decide what must be produced. The rules also mean that digital examiners may eventually leave behind data that they would ordinarily collect in many forensic examinations, like e-mail backups, deleted files, and fragments of data in unallocated space. These types of data may be relatively easy to acquire in a small forensic examination but may be too difficult and too costly to gather for all custodians over time in a large e-discovery case.
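The five Zubulake categories described above can serve as labels in an examiner's inventory of data sources. The sketch below is a hypothetical illustration in Python. The `Tier` names, the `disclosure_list` function, and the sample inventory are assumptions made for this example, and the accessibility flag records only the starting presumption, since the actual determination turns on burden and cost and may be decided by the court.

```python
from enum import Enum


class Tier(Enum):
    """Storage categories taken from the Zubulake discussion above."""
    ACTIVE_ONLINE = "active, online data"
    NEAR_LINE = "near-line data"
    OFFLINE = "offline storage"
    BACKUP_TAPE = "backup tapes"
    ERASED_FRAGMENTED = "erased, fragmented, or damaged data"


# The three categories treated as reasonably accessible in the text.
PRESUMED_ACCESSIBLE = {Tier.ACTIVE_ONLINE, Tier.NEAR_LINE, Tier.OFFLINE}


def disclosure_list(inventory):
    """List every source, accessible or not, with its presumed status."""
    return [(name, tier.value, tier in PRESUMED_ACCESSIBLE)
            for name, tier in inventory]


# Made-up inventory for a single custodian.
inventory = [
    ("Laptop hard drive", Tier.ACTIVE_ONLINE),
    ("Archived project DVDs", Tier.OFFLINE),
    ("Monthly e-mail backup tapes", Tier.BACKUP_TAPE),
    ("Unallocated space on laptop", Tier.ERASED_FRAGMENTED),
]

for name, tier, accessible in disclosure_list(inventory):
    status = "presumed accessible" if accessible else "presumed not reasonably accessible"
    print(f"{name}: {tier} ({status})")
```

Note that the function never drops a source. Sources presumed not reasonably accessible still appear in the output, which mirrors the requirement to describe them so that the opposing side can argue for their production.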

Utilizing Criminal Procedure to Accentuate E-Discovery

In some cases, such as lawsuits involving fraud allegations or theft of trade secrets, digital examiners may find that the normal e-discovery process has been altered by the existence of a parallel criminal investigation. In those cases, digital examiners may be required to work with the office of a local US Attorney, State Attorney General, or District Attorney, since only these types of public officials, and not private citizens, can bring criminal suits.

There are several advantages to working with a criminal agency. The first is that the agency might be able to obtain the evidence more quickly than a private citizen could. For example, in United States v. Fierros-Alaverez, the police officer was permitted to search the contents of a cellular phone during a traffic stop. Second, the agency has greater authority to obtain information from third parties. Third, there are favorable cost considerations since a public agency will not charge you for its services. Finally, in several instances, information discovered in a criminal proceeding can be used in a subsequent civil suit.

Apart from basic surveillance and interviews, criminal agencies often use four legal tools to obtain evidence in digital investigations—a hold letter, a subpoena, a ‘d’ order, and a search warrant. 2

2 Beyond the scope of this chapter are pen register orders, trap and trace orders, or wire taps that criminal authorities can obtain to collect real-time information on digital connections and communications. These tools seldom come into play in a case that has overlapping e-discovery issues in civil court.

A criminal agency can preserve data early in an investigation by issuing a letter under 18 U.S.C. § 2703(f) to a person or an entity like an Internet Service Provider (ISP). Based on the statute granting this authority, the notices are often called “f letters” for short. The letter does not actually force someone to produce evidence but does require that they preserve the information for 90 days (with the chance of an additional 90-day extension). This puts the party with potential evidence on notice and buys the agency some time to access that information or negotiate with the party to surrender it.
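Because the preservation window is finite, it helps to track when it lapses. The following sketch computes the end of the window described above. It assumes, purely for illustration, that the 90 days are calendar days counted from a start date chosen by the caller (for example, the date the provider received the letter); how the period is actually counted in a given matter is a question for counsel. The function and constant names are hypothetical.

```python
from datetime import date, timedelta

PRESERVATION_DAYS = 90  # period described in the text for an "f letter"


def preservation_deadline(start, extended=False):
    """Return the last day of the preservation window.

    `start` is the date from which the period is counted (an assumption
    of this sketch); `extended` adds the one additional 90-day period.
    """
    days = PRESERVATION_DAYS * (2 if extended else 1)
    return start + timedelta(days=days)


start = date(2008, 1, 1)
print(preservation_deadline(start))
print(preservation_deadline(start, extended=True))
```

An examiner supporting a parallel civil matter might record these dates alongside the data map so that a request for an extension, or for formal legal process, is made before the window closes.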

Many criminal agencies also use administrative or grand jury subpoenas to obtain digital information as detailed in Federal Rules of Criminal Procedure Rule 17. The subpoenas may be limited by privacy rights set forth in the Electronic Communications Privacy Act (18 U.S.C. § 2510). Nevertheless, criminal agencies can often receive data such as a customer's online account information and method of payment, a customer's record of assigned IP addresses and account logins or session times, and in some instances the contents of historic e-mails.

Another, less common method of obtaining evidence is a court “d” order under 18 U.S.C. § 2703(d). This method is not used as often because an official must offer “specific and articulable” facts showing reasonable grounds to believe that the targeted information is pertinent to the case. However, it is still helpful for obtaining more than just subscriber information, such as Internet transactional information or a copy of a suspect's private homepage.

Search warrants are among the most powerful tools available to law enforcement agencies (see Federal Rules of Criminal Procedure Rule 41). Agents must receive court approval for search warrants and must show there is probable cause to believe that evidence of a specified crime can be found on a person or at a specific place and time. Search warrants are typically used to seize digital media such as computer hard drives, thumb drives, DVDs, and such, as well as the stored content of private communications from e-mail messages, voicemail messages, or chat logs.

Despite the advantages of working a case with criminal authorities, there are some potent disadvantages that need to be weighed. First, the cooperating private party loses substantial control over its case. This means that the investigation, legal decisions (e.g., venue, charges, and remedies sought), and the trial itself will all be controlled by the government. Second, and on a corollary note, the private party surrenders all control over the evidence. When government agents conduct their criminal investigation, they receive the information and interpret the findings, not the private party. If private parties wish to proceed with a civil suit using the same evidence, they will typically have to wait until the criminal case has been resolved.

It is imperative for digital examiners to understand the legal concepts behind electronic discovery, as described earlier. You likely will never know more than a lawyer who is familiar with all the relevant statutes and important e-discovery court decisions; however, your understanding of the basics will help you apply your art and skills and determine where you can add the most value.

Case Management

The total volume of potentially relevant data often presents the greatest challenge to examiners in an e-discovery case. A pure forensic matter may focus on a few documents on a single 80 GB hard drive, but an e-discovery case often encompasses a terabyte or more of data across dozens of media sources. For this reason, e-discovery requires examiners to become effective case managers and places a premium on their efficiency and organizational skills. These traits are doubly important considering the tight deadlines that courts can impose in e-discovery cases and the high costs that clients can incur if delays or mistakes occur.

Effective case management requires that examiners establish a strategic plan at the outset of an e-discovery project, and implement effective and documented quality assurance measures throughout each step of the process. Problems can arise from both technical and human errors, and the quality assurance measures should be sufficiently comprehensive to identify both. Testing and verification of tools’ strengths and weaknesses before using them in case work is critical; however, it should not lull examiners into performing limited quality assurance of the results each time the tool is used (Lesemann & Reust, 2006).

Effective case management requires that examiners plan ahead. This means that examiners must quickly determine where potentially relevant data reside, both at the workstation and enterprise levels. As explained in more detail later in this chapter (see the section, “Identification of Electronic Data”), a sit-down meeting with a client's IT staff, in-house counsel, and outside counsel can help focus attention on the most important data sources and determine whether crucial information might be systematically discarded or overwritten by normal business processes. Joining the attorneys in the interviews of individual custodians can also help determine if data are on expected media like local hard drives and file servers or on far-flung media like individual thumb drives and home computers. This information gathering process is more straightforward and efficient when an organization has previously gone through a formal, proactive data-mapping process, and knows where specific data types reside in its network.

Whether examiners are dealing with a well-organized or disorganized client, they should consider drafting a protocol that describes how they intend to handle different types of data associated with their case. The protocol can address issues such as what media should be searched for specific file types (e.g., the Exchange server for current e-mail, or hard drives and home directories for archived PST, OST, MSG, and EML files), what tools can be used during collection, whether deleted data should be recovered by default, what keywords and date ranges should be used to filter the data, and what type of deduplication should be applied (e.g., eliminating duplicates within a specific custodian's data set, or eliminating duplicates across all custodians’ data). Designing a protocol at the start of the e-discovery process increases an examiner's efficiency and also helps manage the expectations of the parties involved.
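The difference between the two deduplication scopes mentioned above can be illustrated with a minimal sketch. It assumes that duplicates are identified by a SHA-256 hash of file content alone; production e-discovery tools often hash normalized e-mail fields and attachments instead, so this is an illustration rather than a description of any particular product. The function names and sample data are hypothetical.

```python
import hashlib


def sha256_of(content: bytes) -> str:
    """Return the SHA-256 hex digest of a file's content."""
    return hashlib.sha256(content).hexdigest()


def deduplicate(items, scope="custodian"):
    """Keep the first copy of each unique document.

    items: iterable of (custodian, file_name, content_bytes) tuples.
    scope: "custodian" removes duplicates only within each custodian's
           data set; "global" removes duplicates across all custodians.
    """
    if scope not in ("custodian", "global"):
        raise ValueError("scope must be 'custodian' or 'global'")
    seen = set()
    kept = []
    for custodian, file_name, content in items:
        digest = sha256_of(content)
        # The dedup key decides the scope: per-custodian keys keep one
        # copy for every custodian who held the document.
        key = (custodian, digest) if scope == "custodian" else digest
        if key in seen:
            continue
        seen.add(key)
        kept.append((custodian, file_name, digest))
    return kept


# Hypothetical sample data: the same budget file held three times.
SAMPLE = [
    ("alice", "budget.xls", b"Q3 budget"),
    ("alice", "budget_copy.xls", b"Q3 budget"),
    ("bob", "budget.xls", b"Q3 budget"),
    ("bob", "memo.doc", b"Memo to file"),
]

if __name__ == "__main__":
    print(len(deduplicate(SAMPLE, "custodian")), "items kept per custodian")
    print(len(deduplicate(SAMPLE, "global")), "items kept globally")
```

Custodian-level deduplication preserves the fact that each custodian possessed a document, which can matter to counsel, whereas global deduplication reduces review volume further. The choice belongs in the protocol because it changes what reviewers will see.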

A protocol can also help attorneys and clients come to terms with the overall volume and potential costs of e-discovery. Often it will be the digital examiner's job to run the numbers and show how the addition of even a few more data custodians can quickly increase costs. Though attorneys may think of a new custodian as a single low-cost addition to a case, that custodian probably has numerous sources of data and redundant copies of documents across multiple platforms. The following scenario shows how this multiplicative effect can quickly inflate e-discovery costs.

One Custodian's Data:

Individual hard drive = 6GB of user data

Server e-mail = 0.50GB

Server home directory data = 1GB

Removable media (thumb drives) = 0.50GB

Blackberries, PDAs = 0GB (if synchronized with e-mail)

Scanned paper documents = 1GB

Backup tapes – e-mail for 12 mo × 0.50GB = 6GB

Backup tapes – e-docs for 12 mo × 1.0GB = 12GB

Potential data for one additional custodian = 27GB

Est. processing cost (at $1,500/GB) = $40,500
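For examiners who need to rerun this kind of estimate as custodians are added, the arithmetic can be captured in a short sketch. The volumes and the per-gigabyte rate are taken from the scenario above; the function name and the assumption that every additional custodian holds the same volume of data are illustrative only.

```python
# Per-custodian data volumes in GB, as listed in the scenario above.
SOURCES_GB = {
    "Individual hard drive": 6.0,
    "Server e-mail": 0.5,
    "Server home directory data": 1.0,
    "Removable media (thumb drives)": 0.5,
    "Blackberries, PDAs (synchronized with e-mail)": 0.0,
    "Scanned paper documents": 1.0,
    "Backup tapes, e-mail (12 months x 0.5 GB)": 12 * 0.5,
    "Backup tapes, e-docs (12 months x 1.0 GB)": 12 * 1.0,
}

RATE_PER_GB = 1500  # processing cost in dollars per GB, from the scenario


def estimate(sources_gb, rate_per_gb, custodians=1):
    """Return (total_gb, total_cost) for the given number of custodians.

    Assumes, for illustration, that every custodian holds the same
    volume of data in each source.
    """
    total_gb = sum(sources_gb.values()) * custodians
    return total_gb, total_gb * rate_per_gb


if __name__ == "__main__":
    for n in (1, 5, 10):
        gb, cost = estimate(SOURCES_GB, RATE_PER_GB, custodians=n)
        print(f"{n:>2} custodian(s): {gb:.1f} GB, ${cost:,.0f}")
```

Keeping the line items in one table makes it easy to show attorneys how a change in scope, such as excluding backup tapes, alters the total.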

Digital examiners may also be asked how costly and burdensome specific types of information will be to preserve, collect, and process. This assessment may be used to decide whether certain data are “reasonably accessible,” and may help determine if and how preservation, collection, processing, review, and production costs should be shared between the parties. Under Zubulake, a court will consider seven factors to determine if cost-shifting is appropriate (Zubulake v. UBS Warburg):

1. The extent to which the request is specifically tailored to discover relevant information.

2. The availability of such information from other sources.

3. The total cost of production, compared to the amount in controversy.

4. The total cost of production, compared to the resources available to each party.

5. The relative ability of each party to control costs and its incentive to do so.

6. The importance of the issues at stake in the litigation.

7. The relative benefits to the parties of obtaining the information.

In an attempt to cut or limit e-discovery costs, a client will often volunteer to have individual employees or the company's own IT staff preserve and collect documents needed for litigation. This can be acceptable in many e-discovery cases. As described in more detail later, however, examiners should warn their clients and counsel of the need for more robust and verifiable preservation if the case hinges on embedded or file system metadata, important dates, sequencing of events, alleged deletions, contested user actions, or other forensic issues.

If an examiner is tasked with preserving and collecting the data in question, the examiner should verify that his or her proposed tools are adequate for the job. A dry run on test data is often advisable because there will always be bugs in some software programs, and these bugs will vary in complexity and importance. Thus it is important to verify, test, and document the strengths and weaknesses of a tool before using it, and apply approved patches or alternative approaches before collection begins.
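One simple form of dry run is to collect a set of test data with the proposed tool and then confirm that every file in the copy matches its source. The sketch below compares SHA-256 hashes of two directory trees. It is a minimal illustration under stated assumptions: the function names are hypothetical, it checks file content only, and it does not verify file system metadata such as timestamps, which would need a separate check.

```python
import hashlib
from pathlib import Path


def hash_file(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def hash_tree(root):
    """Map each file's path (relative to root) to its SHA-256 digest."""
    root = Path(root)
    return {
        path.relative_to(root).as_posix(): hash_file(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def compare_trees(source, copy):
    """Report files that are missing, extra, or altered in the copy."""
    src, dst = hash_tree(source), hash_tree(copy)
    return {
        "missing": sorted(set(src) - set(dst)),
        "extra": sorted(set(dst) - set(src)),
        "mismatched": sorted(
            name for name in src.keys() & dst.keys() if src[name] != dst[name]
        ),
    }


if __name__ == "__main__":
    import sys

    # Usage: python verify_copy.py <source_dir> <copy_dir>
    report = compare_trees(sys.argv[1], sys.argv[2])
    for category, names in report.items():
        print(f"{category}: {len(names)}")
        for name in names:
            print(f"  {name}")
```

Saving the report alongside the test data gives the examiner a record of what was tested and when, which supports the documentation practices discussed in this section.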

Effective case management also requires that examiners document their actions, not only at the beginning, but also throughout the e-discovery process. Attorneys and the courts appreciate the attention to detail applied by most forensic examiners, and if an examiner maintains an audit trail of his or her activities, it often mitigates the impact of a problem, if one does arise.

In a recent antitrust case, numerous employees with data relevant to the suit had left the client company by the time a lawsuit was filed. E-mail for former employees was located on Exchange backups, but no home directories or hard drives were located for these individuals. Later in the litigation, when the opposing party protested the lack of data available on former employees, the client's IT department disclosed that data for old employees could be found under shared folders for different departments. The client expressed outrage that this information had not been produced, but digital examiners who had kept thorough records of their collections and deliveries were able to show that data for 32 of 34 former employees had indeed been produced, just under the headings of the shared drives, not under individual custodian names. Thus, despite miscommunications about the location of data for former employees, careful record-keeping showed that there was little missing data, and former employee files had been produced properly in the form they were ordinarily maintained, under Federal Rules of Civil Procedure Rule 34.

Documenting one's actions also helps outside counsel and the client track the progress of e-discovery. In this vein, forensic examiners may be accustomed to tracking their evidence by media source (e.g., laptop hard drive, desktop hard drive, DVD), but in an e-discovery case, they will probably be asked to track data by custodian, as shown in Table 3.1. This allows attorneys to sequence and prepare for litigation events such as a document production or the deposition of key witnesses. A custodian tracking sheet also allows paralegals to determine where an evidentiary gap may exist and helps them predict how much data will arrive for review and when.
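The shift from tracking by media source to tracking by custodian can be sketched as a simple roll-up. The record fields and status values below are assumptions made for illustration and are not taken from Table 3.1.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class EvidenceItem:
    custodian: str
    source: str      # e.g., "laptop hard drive", "server e-mail"
    size_gb: float
    status: str      # hypothetical values: "identified", "collected",
                     # "processed", "delivered"


def summarize_by_custodian(items):
    """Roll evidence items up into one summary row per custodian."""
    summary = defaultdict(lambda: {"sources": 0, "total_gb": 0.0, "pending": []})
    for item in items:
        row = summary[item.custodian]
        row["sources"] += 1
        row["total_gb"] += item.size_gb
        # Anything not yet delivered for review is a potential gap.
        if item.status != "delivered":
            row["pending"].append(item.source)
    return dict(summary)


if __name__ == "__main__":
    items = [
        EvidenceItem("Smith", "laptop hard drive", 6.0, "delivered"),
        EvidenceItem("Smith", "server e-mail", 0.5, "processed"),
        EvidenceItem("Jones", "desktop hard drive", 4.0, "identified"),
    ]
    for custodian, row in summarize_by_custodian(items).items():
        pending = ", ".join(row["pending"]) or "none"
        print(f"{custodian}: {row['sources']} sources, "
              f"{row['total_gb']:.1f} GB, pending: {pending}")
```

A per-custodian view of outstanding sources is what lets paralegals spot an evidentiary gap before a deposition rather than after it.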

Case management is most effective when it almost goes unnoticed, allowing attorneys and the client to focus their attention on the substance and merits of their case, not the harrowing logistical and technical hurdles posed by the e-discovery process in the background. As described earlier, this means that examiners should have a thorough understanding of the matter before identification and preservation have begun, as well as a documented quality assurance program for collecting, processing, and producing data once e-discovery has commenced.

Identification of Electronic Data

Before the ESI can be collected and preserved, the sources of potentially relevant and discoverable ESI must be identified. Although the scope of the preservation duty is typically determined by counsel, the digital investigator should develop a sufficient understanding of the organization's computer network and how the specific custodians store their data to determine what data exists and in what locations. Oftentimes this requires a more diligent and iterative investigation than counsel expects; however, it is a vital step in this initial phase of e-discovery.

A comprehensive and thorough investigation to identify the potentially relevant ESI is an essential component of a successful strategic plan for e-discovery projects. This investigation determines whether the data available for review is complete, and whether questions and issues not apparent at the outset of the matter can be examined later (Howell, 2005). The belated discovery of a stockpile of media containing relevant data could call into question any prior findings or conclusions, and could lead to penalties and sanctions from the court.

There are five digital storage locations that are the typical focus of e-discovery projects (Friedberg & McGowan, 2006):

▪ Workstation environment, including old, current, and home desktops and laptops

▪ Personal Digital Assistants (PDAs), such as the BlackBerry® and Treo®

▪ Removable media, such as CDs, DVDs, removable USB hard drives, and USB “thumb” drives

▪ Server environment, including file, e-mail, instant messaging, database, application and VOIP servers

▪ Backup environment, including archival and disaster recovery backups

Although these storage locations are the typical focus of e-discovery projects, especially those where the data are being collected in a corporate environment, examiners should be aware of other types of storage locations that may be relevant such as digital media players and data stored by third parties (for example, Google Docs, Xdrive, Microsoft SkyDrive, blogs, and social networking sites such as MySpace and Facebook).

Informational interviews and documentation requests are the core components of a comprehensive and thorough investigation to identify the potentially relevant ESI in these five locations, followed by review and analysis of the information obtained to identify inconsistencies and gaps in the data collected. In some instances a physical search of the company premises and off-site storage is also necessary.

Informational Interviews

The first step in determining what data exist and in what location is to conduct informational interviews of both the company IT personnel and the custodians. It is helpful to have some understanding of the case particulars, including relevant data types, time period, and scope of preservation duty before conducting the interviews. In addition, although policy and procedure documentation can be requested in the IT personnel interviews, it may be helpful to request them beforehand so they can be reviewed and any questions incorporated into the interview. Documenting the information obtained in these interviews is critical for many reasons, not least of which is the possibility that the investigator may later be required to testify in a Rule 30(b)(6) deposition.

For assistance in structuring and documenting the interviews, readers might develop their own interview guide. Alternatively, readers might consult various published sources for assistance. For example, Kidwell et al. (2005) provide detailed guides both for developing Rule 26 document requests and for conducting Rule 30(b)(6) depositions of IT professionals. Another source for consideration is a more recent publication of the Sedona Conference (Sedona Conference, 2008).

IT Personnel Interviews

The goal of the IT personnel interviews is to gain familiarity with the company network infrastructure and an understanding of how and where relevant ESI is stored.

When conducting informational interviews of company IT personnel, keep in mind that IT management such as the CIO or Director of IT will typically be unfamiliar with the necessary infrastructure details, but should be able to identify and assemble the staff that have responsibility for the relevant environments. Oftentimes it is the staff “on the ground” who are able to provide the most accurate information regarding both the theoretical policies and the practical reality. Another point to keep in mind is that in larger companies where custodians span the nation if not the world, there may be critical differences in the computer and network infrastructure between regions and companies, and this process is complicated further if a company has undergone recent mergers and acquisitions. Suggested questions to ask IT personnel are:

▪ Is there a centralized asset inventory system? If so, please provide an asset inventory for the relevant custodians. If not, what information is available to determine the history of assets used by the relevant custodians?

▪ Regarding workstations, what is the operating system environment? Are both desktops and laptops issued? Is disk or file level encryption used? Are the workstations owned or leased? What is the refresh cycle and what steps are taken prior to the workstations being redeployed? Are users permitted to download software onto their workstations? Are software audits performed on the workstations to determine compliance?

▪ Regarding PDAs and cell phones, how are the devices configured and synchronized? Is it possible that data, such as messages sent from a PDA, exist only on the PDA and not on the e-mail server? Is the BlackBerry® server located and managed in-house?

▪ What are the policies regarding provision and use of removable media?

▪ Regarding general network questions, are users able to access their workstations/e-mail/file shares remotely and if so what logs are enabled? What are the Internet browsing and computer usage policies? What network shares are typically mapped to workstations? Are any enterprise storage and retention applications implemented such as Symantec Enterprise Vault®? Is an updated general network topology or data map available? Are outdated topologies or maps available for the duration of the relevant time period?

▪ Regarding e-mail servers, what are their numbers, types, versions, length of time deployed and locations? What mailbox size or date restrictions are in place? Is there an automatic deletion policy in place? What logging is enabled? Are employees able to replicate or archive e-mail locally to their workstations or to mapped network shares?

▪ Regarding file servers, what are the numbers, types, versions, locations, length of time deployed, data type stored, and departments served? Do users have home directories? Are they restricted by size? What servers provide for collaborative access, such as group shares or SharePoint®? To which shares and/or projects do the custodians have access?

▪ Regarding the backup environment, what are the backup systems used for the different server environments? What are the backup schedules and retention policies? What is the date of the oldest backup? Have there been any “irregular” backups created for migration purposes or “test” servers deployed? What steps are in place to verify the success of the backup jobs?

▪ Please provide information on any other data repositories such as database servers, application servers, digital voicemail storage, legacy systems, document management systems, and SANs.

▪ Have there been any other prior or on-going investigations or litigation where data was preserved or original media collected by internal staff or outside vendors? If so, where does this data reside now?

Obtaining explicit answers to these questions can be challenging and complicated due to staff turnover, changes in company structure, and lack of documentation. On the flip side, when answers are provided (especially if provided only orally), care must be taken to corroborate the accuracy of the answers with technical data or other reliable information.

Custodian Interviews

The goal of the custodian interviews is to determine how and where the custodians store their data. Interviews of executive assistants may be necessary if they have access to the executive's electronic data. Suggested questions to ask are:

▪ How many laptops and desktops do they currently use? For how long have they used them? Do they remember what happened to the computers they used before, if any? Do they use a home computer for company-related activities? Have they ever purchased a computer from the company?

▪ To what network shares do they have access? What network shares are typically mapped to a drive letter on their workstation(s)?

▪ Do they have any removable media containing company-related data?

▪ Do they have a PDA and/or cell phone provided by the company?

▪ Do they use encryption?

▪ Do they use any instant messaging programs? Have they installed any unapproved software programs on their workstation(s)?

▪ Do they archive their mail locally or maintain a copy on a company server or removable media?

▪ Do they access their e-mail and/or files remotely? Do they maintain an online storage account containing company data? Do they use a personal e-mail address for company related activities, including transfer of company files?

The information and documentation obtained through requests and the informational interviews can assist in creating a graphical representation of the company network for the relevant time period. Although likely to be modified as new information is learned, it will serve as an important reference throughout the e-discovery project. As mentioned earlier, some larger corporations may have proactively generated a data map that will serve as the starting point for the identification of ESI.

Analysis and Next Steps

Review and analysis of the information obtained is essential in identifying inconsistencies and gaps in the data identification and collection. In addition, comparison of answers in informational interviews with each other and against the documentation provided can identify consistent, corroborative information between sources, which is just as important to document as inconsistencies. This review and analysis is not typically short and sweet, and is often an iterative process that must be undertaken as many times as new information is obtained, including after initial review of the data collected and from forensic analysis of the preserved data.

E-discovery consultants had been brought in by outside counsel to a national publicly-held company facing a regulatory investigation into its financial dealings, and were initially tasked with identifying the data sources for custodians in executive management. Counsel had determined that any company-issued computer used by the custodians in the relevant date range needed to be collected, thereby necessitating investigation into old and home computers. Without an updated, centralized asset tracking system, company IT staff had cobbled together an asset inventory from their own memory and from lists created by previous employees and interns. The inventory showed that two Macintosh laptops had been issued to the Chief Operations Officer (COO); however, only one had been provided for preservation by the COO, and he maintained that he had not been issued any other Macintosh laptop. The e-discovery consultants searched through the COO's and his assistant's e-mail that had already been collected, identifying e-mail between the COO and the IT department regarding two different Macintosh laptops, and then found corresponding tickets in the company helpdesk system showing requests for technical assistance from the COO. When confronted with this evidence, the COO “found” the laptop in a box in his attic and provided it to the digital investigator. Subsequent analysis of the laptop showed extensive deletion activity the day before the COO had handed over the laptop.

There are many challenges involved in identifying and collecting ESI, including the sheer number and variety of digital storage devices that exist in many companies, lack of documentation and knowledge of assets and IT infrastructure, and deliberate obfuscation by company employees. Only through a comprehensive, diligent investigation and analysis are you likely to identify all relevant ESI in preparation for collection and preservation.

In a standard informational interview, investigators were told by the IT department in the Eastern European division of an international company that IT followed a strict process of wiping the “old” computer whenever a new computer was provided to an employee. The investigators attempted to independently verify this claim through careful comparison of serial numbers and identification of “old” computers that had been transferred to new users. This review and analysis showed intact user accounts for Custodian A on the computers being used by Custodian B. The investigators ultimately uncovered rampant “trading” and “sharing” of assets, together with “gifting” of assets by high level executives to subordinate employees, thereby prompting a much larger investigation and preservation effort.
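The kind of cross-check described in this example, comparing the asset inventory against what is actually found on each machine, can be sketched as follows. The data structures, the serial numbers, and the list of ignored built-in accounts are hypothetical; in practice the observed accounts would come from forensic examination of each computer.

```python
def find_unexpected_accounts(inventory, observed_accounts,
                             ignore=frozenset({"administrator", "guest"})):
    """Flag user accounts found on a computer that do not belong to the
    custodian the inventory says the computer is assigned to.

    inventory:         {serial_number: assigned_custodian}
    observed_accounts: {serial_number: set of account names found}
    ignore:            built-in accounts to skip (an assumption; adjust
                       to the environment)
    Returns a list of (serial_number, assigned_custodian, account).
    """
    findings = []
    for serial, accounts in sorted(observed_accounts.items()):
        assigned = inventory.get(serial)  # None if not in the inventory
        for account in sorted(accounts):
            if account.lower() in ignore:
                continue
            if account != assigned:
                findings.append((serial, assigned, account))
    return findings


if __name__ == "__main__":
    inventory = {"SN-1001": "custodian_b", "SN-1002": "custodian_c"}
    observed = {
        "SN-1001": {"custodian_a", "custodian_b", "Administrator"},
        "SN-1002": {"custodian_c"},
        "SN-1003": {"custodian_d"},  # computer missing from the inventory
    }
    for serial, assigned, account in find_unexpected_accounts(inventory, observed):
        print(f"{serial}: assigned to {assigned}, but found account {account}")
```

Each finding is a lead rather than a conclusion: an intact account for a prior user may indicate that a computer was not wiped before reassignment, and that its earlier user's data may still need to be preserved.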

Forensic Preservation of Data

Having conducted various informational interviews and having received and reviewed documents, lists and inventories from various sources to create an initial company data map, the next step for counsel is to select which of the available sources of ESI should be preserved and collected. The specific facts of the matter will guide counsel's decision regarding preservation. Federal Rules of Civil Procedure Rule 26(b)(1) allows that parties “may obtain discovery regarding any non-privileged matter that is relevant to any party's claim or defense – including the existence, description, nature, custody, condition, and location of any documents or other tangible things and the identity and location of persons who know of any discoverable matter.”

Once counsel selects which sources of ESI are likely to contain relevant data and should be preserved in the matter, the next two phases of the electronic discovery process as depicted in Figure 3.1 include preservation and collection. Preservation includes steps taken to “ensure that ESI is protected against inappropriate alteration or destruction” and collection is the process of “gathering ESI for future use in the electronic discovery process…”

Preservation for electronic discovery has become a complicated, multi-faceted, steadily-changing concept in recent years. Starting with the nebulous determination of when the duty to preserve arises, then continuing into the litigation hold process (often equated to the herding of cats) and the staggering volumes of material which may need to be preserved in multiple global locations, platforms and formats, the task of preservation is an enormous challenge for the modern litigator. Seeking a foundation in reasonableness, wrestling with the scope of preservation is often an exercise in finding an acceptable balance between offsetting the risks of spoliation and sanctions related to destruction of evidence, against allowing the business client to continue to operate its business in a somewhat normal fashion. (Socha & Gelbman, 2008b)

Although the EDRM defines “preservation” and “collection” as different stages in electronic discovery for civil litigation, it has been our experience that preservation and collection must be done at the same time when conducting investigations, whether the underlying investigation is related to a financial statement restatement, allegations of stock option backdating, alleged violations of the Foreign Corrupt Practices Act, or other fraud, bribery, or corruption investigation. Given the volatile nature of electronic evidence and the ability of a bad actor to quickly destroy that evidence, a digital investigator's perspective must be different.

Electronic evidence that is not yet in the hands of someone who recognizes its volatility (i.e., the evidence has not been collected) and who is also absolutely committed to its protection has not really been preserved, regardless of the content of any preservation notice corporate counsel may have sent to custodians.

Besides the case cited, we have conducted numerous investigations where custodians, prior to turning over data sources under their control, have actively taken steps to destroy relevant evidence in contravention of counsels’ notice to them to “preserve” data. These steps have included actions like:

▪ Using a data destruction tool on their desktop hard drive to destroy selected files

▪ Completely wiping their entire hard drive

▪ Reinstalling the operating system onto their laptop hard drive

▪ Removing the original hard drive from their laptop and replacing it with a new, blank drive

▪ Copying relevant files from their laptop to a network drive or USB drive and deleting the relevant files from their laptop

▪ Printing relevant files, deleting them from the computer, and attempting to wipe the hard drive using a data destruction tool

▪ Setting the system clock on their computer to an earlier date and attempting to fabricate electronic evidence dated and timed to corroborate a story

▪ Sending themselves e-mail to attempt to fabricate electronic evidence

▪ Physically destroying their laptop hard drive with a hammer and reporting that the drive “crashed”

▪ Taking boxes of relevant paper files from their office to the restroom and flushing documents down the toilet

▪ Hiding relevant backup tapes in their vehicle

▪ Surreptitiously removing labels from relevant backup tapes, inserting them into a tape robot, and scheduling an immediate out-of-cycle backup to overwrite the relevant tapes

▪ Purchasing their corporate owned computer from the company the day before a scheduled forensic collection and declaring it “personal” property not subject to production

We were retained by outside counsel as part of the investigation team examining the facts and circumstances surrounding a financial statement restatement by an overseas bank with US offices. The principal accounting issue focused on the financial statement treatment of certain loans the bank made and then sold. It was alleged that certain bank executives routinely made undisclosed side agreements with the purchasers to buy back loans that eventually defaulted after the sale. Commitments to buy back defaulted loans would have an effect on the accounting treatment of the transactions. Faced with pending regulatory inquiries, in-house counsel sent litigation hold notices to custodians and directed IT staff to preserve relevant backup tapes. The preservation process performed by IT staff simply included temporarily halting tape rotations, but no one actually took the relevant tapes from IT to lock them away. In the course of time, IT ran low on tape inventory for daily, weekly and monthly backups. Eventually, IT put the relevant tapes back into rotation. By the time IT disclosed to in-house counsel that they were rotating backup tapes again, more than 600 tapes potentially holding relevant data from the time period under review had been overwritten. The data, which had been temporarily preserved at the direction of counsel, was never collected and was eventually lost.

Forensic examiners might use a wide variety of tools, technologies, and methodologies to preserve and collect the data selected by counsel, depending on the underlying data source. 3 Regardless of the specific tool, technology, or methodology, the forensic preservation process must meet certain standards, including technical standards for accuracy and completeness, and legal standards for authenticity and admissibility.

3Research performed by James Holley identified 59 hardware and software tools commercially or publicly available for preserving forensic images of electronic media (Holley, 2008).

Historically, forensic examiners have relied heavily on creating forensic images of static media to preserve and collect electronic evidence. 4 But more and more often, relevant ESI resides on data sources that cannot be shut down for traditional forensic preservation and collection, including running, revenue-generating servers or multi-terabyte Storage Area Networks attached to corporate servers.

4“Static Media” refers to media that are not subject to routine changes in content. Historically, forensic duplication procedures included shutting down the computer, removing the internal hard drive, attaching the drive to a forensic write blocker, and preserving a forensic image of the media. This process necessarily ignores potentially important and relevant volatile data contained on the memory of a running computer. Once the computer is powered down, the volatile memory data are lost.

Recognizing the evolving nature of digital evidence, the Association of Chief Police Officers has published its fourth edition of The Good Practice Guide for Computer-Based Electronic Evidence (ACPO, 2008). This guide was updated to take into account that the “traditional ‘pull-the-plug’ approach overlooks the vast amounts of volatile (memory-resident and ephemeral) data that will be lost. Today, digital investigators are routinely faced with the reality of sophisticated data encryption, as well as hacking tools and malicious software that may exist solely within memory. Capturing and working with volatile data may therefore provide the only route towards finding important evidence.” Additionally, with the advent of full-disk encryption technologies, the traditional approach to forensic preservation is becoming less and less relevant. However, the strict requirement to preserve and collect data using a sound approach that is well documented, has been tested, and does not change the content of or metadata about electronic evidence if at all possible, has not changed.

Preserving and Collecting E-mail from Live Servers

Laptop, desktop, and server computers once played a supporting role in the corporate environment: shutting them down for traditional forensic imaging tended to have only a minor impact on the company. However, in today's business environment, shutting down servers can have tremendously negative impacts on the company. In many instances, the company's servers are not just supporting the business—they are the business. The availability of software tools and methodologies capable of preserving data from live, running servers means that it is no longer absolutely necessary to shut down a production e-mail or file server to preserve data from it. Available tools and methodologies allow investigators to strike a balance between the requirements for a forensically sound preservation process and the business imperative of minimizing impact on normal operations during the preservation process (e.g., lost productivity as employees sit waiting for key servers to come back online or lost revenue as the company's customers wait for servers to come back online).

Perhaps the most requested and most produced source of ESI is e-mail communication. Counsel typically wants to begin reviewing e-mail as soon as practicable after forensic preservation. Because the content of e-mail communications might tend to show that a custodian knew or should have known certain facts, or took, should have taken, or failed to take certain action, proper forensic preservation of e-mail data sources is a central part of the electronic discovery process. In our experience over the last 10 years conducting investigations, the two most common e-mail infrastructures we've encountered are Microsoft Exchange Server (combined with the Microsoft Outlook e-mail client) and Lotus Domino server (combined with the Lotus Notes e-mail client). There are, of course, other e-mail servers and e-mail clients in use in the business environment today, but those tend to be less common. In the course of our investigations, we've seen a wide variety of e-mail infrastructures, including e-mail servers (Novell GroupWise, UNIX Sendmail, Eudora Internet Mail Server, and Postfix) and e-mail clients (GroupWise, Outlook Express, Mozilla, and Eudora). In a few cases, the company completely outsourced its e-mail infrastructure by using web-based e-mail (such as Gmail or Hotmail) or AOL mail for its e-mail communications.

Preserving and Collecting E-mail from Live Microsoft Exchange Servers

To preserve custodian e-mail from a live Microsoft Exchange Server, forensic examiners typically take one of several different approaches, depending on the specific facts of the matter. Those approaches might include:

▪ Exporting a copy of the custodian's mailbox from the server using a Microsoft Outlook e-mail client

▪ In older versions of Exchange, exporting a copy of the custodian's mailbox from the server using Microsoft's Mailbox Merge utility (Exmerge)

▪ In Exchange 2007, exporting a copy of the custodian's mailbox using the Exchange Management Shell

▪ Exporting a copy of the custodian's mailbox from the server using a specialized third-party tool (e.g., GFI PST-Exchange Email Export wizard)

▪ Obtaining a backup copy of the entire Exchange Server “Information Store” from a properly created full backup of the server

▪ Temporarily shutting down Exchange Server services and making a copy of the Exchange database files that comprise the Information Store

▪ Using a software utility such as F-Response™ or EnCase Enterprise to access a live Exchange Server over the network and copying either individual mailboxes or an entire Exchange database file

Microsoft Exchange stores mailboxes in a database comprised of two files: priv1.edb and priv1.stm. The priv1.edb file contains all e-mail messages, headers, and text attachments. The priv1.stm file contains multimedia data that are MIME encoded. Similarly, public folders are stored in files pub1.edb and pub1.stm. An organization may maintain multiple Exchange Storage Groups, each with their own set of databases. Collectively, all databases associated with a given Exchange implementation are referred to as an Information Store, and for every .EDB file there will be an associated .STM file (Buike, 2005).
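Because every .EDB file is expected to have an associated .STM file, an examiner may want a quick completeness check on a folder of collected database files. The following Python sketch is one way to do that. It assumes the collected files sit together in a single directory and that the pair shares a base name (as with priv1.edb and priv1.stm); the function name is hypothetical, and the check applies only to Exchange versions that use the paired layout described above.

```python
from pathlib import Path


def find_unpaired_edb(store_dir):
    """Return the names of .edb files in store_dir that lack a matching .stm file."""
    # Compare lowercase names so PRIV1.EDB and priv1.stm are treated as a pair.
    names = {p.name.lower() for p in Path(store_dir).iterdir() if p.is_file()}
    missing = []
    for name in sorted(names):
        if name.endswith(".edb"):
            stm_name = name[:-4] + ".stm"
            if stm_name not in names:
                missing.append(name)
    return missing
```

An empty result means every database file in the folder has its companion streaming file. Any name returned should be followed up before the collection is treated as complete.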

Each approach has its advantages and disadvantages. When exporting a custodian's mailbox using Microsoft Outlook, the person doing the exporting typically logs into the server as the custodian. This can, under some circumstances, be problematic. One advantage of this approach, though, is that the newer versions of the Outlook client can create very large (>1.7GB) Outlook e-mail archives. For custodians who have a large volume of mail in their accounts, this might be a viable approach if logging in as the custodian to collect the mail does not present an unacceptable risk. One potential downside to this approach is that the Outlook client might not collect deleted e-mail messages retained in the Microsoft Exchange special retention area called “the dumpster,” which is a special location in the Exchange database file where deleted messages are retained by the server for a configurable period of time. Additionally, Outlook will not collect any part of any “double-deleted” message. Double-deleted is a term sometimes used to refer to messages that have been soft-deleted from an Outlook folder (e.g., the Inbox) into the local Deleted Items folder and then deleted from the Deleted Items folder. These messages reside essentially in the unallocated space of the Exchange database file, and are different from hard-deleted messages, which bypass the Deleted Items folder altogether during deletion. Using Outlook to export a custodian's mailbox would not copy out any recoverable double-deleted messages or fragments of partially overwritten messages.

One advantage of using the Exmerge utility to collect custodian e-mail from a live Exchange server is that Exmerge can be configured to collect deleted messages retained in the dumpster and create detailed logs of the collection process. However, there are at least two main disadvantages to using Exmerge. First, even the latest version of Exmerge cannot create Outlook e-mail containers larger than 1.7GB. For custodians who have a large volume of e-mail in their account, the e-mail must be segregated into multiple Outlook containers, each less than about 1.7GB. Exmerge provides a facility for this, but configuring and executing Exmerge multiple times for the task and in a manner that does not miss messages can be problematic. Second, Exmerge will not collect any part of a double-deleted message that is not still in the dumpster. So there could be recoverable deleted messages or fragments of partially overwritten messages that Exmerge will not copy out.
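One way to reduce the risk of missed messages when a large mailbox has to be split is to plan the date ranges before running any export. The following Python sketch illustrates that planning step only; it does not call Exmerge. It assumes the examiner already has an estimate of mail volume per day (a hypothetical input), and the 90 percent safety margin is likewise an assumption rather than a documented Exmerge setting.

```python
# 1.7GB ceiling taken from the text; the safety margin below is an assumption.
LIMIT_BYTES = int(1.7 * 1024**3)


def plan_batches(daily_sizes, limit=LIMIT_BYTES, margin=0.9):
    """Group consecutive days into (start, end, total_bytes) batches.

    daily_sizes: iterable of (date, bytes_of_mail_that_day) pairs.
    Each batch stays under limit * margin where possible. A single day
    larger than that budget gets a batch of its own and would need
    finer splitting (for example, by folder).
    """
    budget = int(limit * margin)
    batches = []
    start = end = None
    total = 0
    for day, size in sorted(daily_sizes):
        # Close the current batch if adding this day would exceed the budget.
        if start is not None and total + size > budget:
            batches.append((start, end, total))
            start, total = None, 0
        if start is None:
            start = day
        end = day
        total += size
    if start is not None:
        batches.append((start, end, total))
    return batches
```

Because the batches are built from a sorted list and each day lands in exactly one batch, the resulting ranges do not overlap. The examiner would still reconcile message counts against the server after the exports complete.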

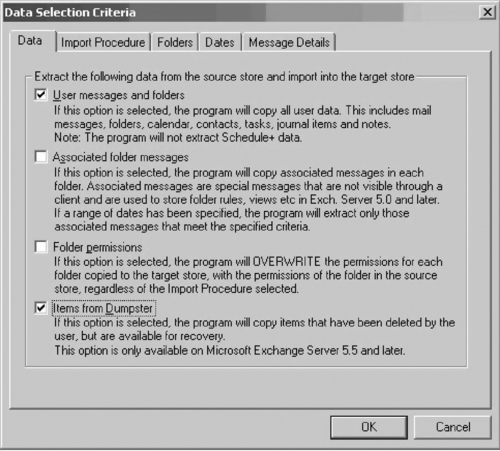

Exmerge can be run with the Exmerge GUI or in batch mode from the command line. The screenshots in Figure 3.2, Figure 3.3 and Figure 3.4 show the steps to follow to extract a mailbox, including the items in the Dumpster, using the Exmerge GUI.

Figure 3.2

Figure 3.4

To enable the maximum logging level for Exmerge, it is necessary to edit the Exmerge.ini configuration file, setting LoggingLevel to the value 3.
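When the same setting has to be applied on several collection machines, the edit can be scripted and logged. The following Python sketch rewrites the LoggingLevel line in the text of an Exmerge.ini file. It assumes the file already contains an active (uncommented) LoggingLevel entry in key = value form; the function name is hypothetical, and the sketch raises an error rather than guessing where a missing key should go.

```python
import re

# Matches an active "LoggingLevel = <value>" line; lines starting with ';' are skipped.
_LOGGING_LINE = re.compile(
    r"^([ \t]*LoggingLevel[ \t]*=[ \t]*)[^\r\n]*(?=\r?$)",
    re.IGNORECASE | re.MULTILINE,
)


def set_logging_level(ini_text, level=3):
    """Return ini_text with the first active LoggingLevel line set to level."""
    new_text, count = _LOGGING_LINE.subn(
        lambda m: f"{m.group(1)}{level}", ini_text, count=1
    )
    if count == 0:
        raise ValueError("no active LoggingLevel line found")
    return new_text
```

The function works on text rather than on the file itself, so the examiner can keep the original file untouched, write the modified copy alongside it, and record both in the case notes.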

With the release of Exchange Server 2007, Microsoft did not update the Exmerge utility. Instead, the latest version of Exchange Server includes new command-line functionality integrated into the Exchange Management Shell essentially to replace Exmerge.

The Exchange Management Shell provides a command-line interface and associated command-line plug-ins for Exchange Server that enable automation of administrative tasks. With the Exchange Management Shell, administrators can manage every aspect of Microsoft Exchange 2007, including mailbox moves and exports. The Exchange Management Shell can perform every task that can be performed by Exchange Management Console in addition to tasks that cannot be performed in Exchange Management Console. (Microsoft, 2007a)

The Export-Mailbox command-let (cmdlet) in the PowerShell-based Exchange Management Shell can be used either to export specific mailboxes or to cycle through the message store, allowing the investigator to select the mailboxes to be extracted. By default, the Export-Mailbox cmdlet copies out all folders, including empty folders and subfolders, and all message types, including messages from the Dumpster. For a comprehensive discussion of the Export-Mailbox cmdlet and the permissions required to run the cmdlet see Microsoft (2007b).

The following is the command to export a specific mailbox, “[email protected],” to a PST file named jsmith.pst:

Export-Mailbox -Identity [email protected] -PSTFolderpath c:\jsmith.pst

The following is the command to cycle through message store “MailStore01” on server named “EXMAIL01,” allowing the investigator to select the mailboxes to be extracted:

Get-Mailbox -Database EXMAIL01\MailStore01 | Export-Mailbox -PSTFolderpath c:\pst
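When the custodian list is long, it can help to generate one export command per custodian ahead of time so that the exact commands run can be reviewed and kept with the collection documentation. The following Python sketch builds command strings in the form shown above, using only the -Identity and -PSTFolderPath parameters. The function names, the example.com addresses used to exercise it, and the convention of naming each PST after the part of the address before the @ sign are all assumptions made for illustration.

```python
def ps_quote(value):
    """Quote a value as a PowerShell single-quoted string literal."""
    return "'" + value.replace("'", "''") + "'"


def export_commands(custodians, pst_dir):
    """Build one Export-Mailbox command line per custodian identity."""
    commands = []
    for identity in custodians:
        # Name each PST after the text before '@' (an assumed convention).
        stem = identity.split("@", 1)[0]
        pst_path = pst_dir.rstrip("\\") + "\\" + stem + ".pst"
        commands.append(
            "Export-Mailbox -Identity " + ps_quote(identity)
            + " -PSTFolderPath " + ps_quote(pst_path)
        )
    return commands
```

The sketch only produces text. The commands would still be run in the Exchange Management Shell by an account holding the permissions described in Microsoft (2007b).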

The screenshots in Figure 3.5, Figure 3.6 and Figure 3.7 show the steps to follow to cycle through message store “EMT” on the Exchange server named “MAIL2,” allowing the investigator to select the mailboxes to be extracted.

Figure 3.5

Figure 3.6

Figure 3.7

(Note: The following screen captures were taken during a live investigation; however, the names have been changed to protect custodian identities.)

The most complete collection from a Microsoft Exchange Server is to collect a copy of the Information Store (i.e., the priv1.edb file and its associated .STM file for the private mailbox store as well as the pub1.edb and associated .STM file for the Public Folder store). The primary advantage of collecting the entire information store is that the process preserves and collects all e-mail in the store for all users with accounts on the server. If during the course of review it becomes apparent that new custodians should be added to the initial custodian list, then the e-mail for those new custodians has already been preserved and collected.

Traditionally, the collection of these files from the live server would necessitate shutting down e-mail server services for a period of time because files that are open for access by an application (i.e., the running Exchange Server services) cannot typically be copied from the server. E-mail server services must be shut down so the files themselves are closed by the exiting Exchange application and they are no longer open for access. This temporary shutdown can have a negative impact on the company and the productivity of its employees. However, the impact of shutting down e-mail server services is rarely as significant as shutting down a revenue-producing server for traditional forensic imaging. In some cases, perhaps a process like this can be scheduled to be done off hours or over a weekend to further minimize impact on the company.

More recently, software utilities such as F-Response™ can be used to access the live Exchange Server over the network and to preserve copies of the files comprising the Information Store. F-Response (to enable access to the live server) coupled with EnCase® Forensic or AccessData's FTK Imager® could be used to preserve the .EDB and .STM files that comprise the Information Store. Alternatively, F-Response coupled with Paraben's Network E-mail Examiner™ could be used to preserve individual mailboxes from the live server.

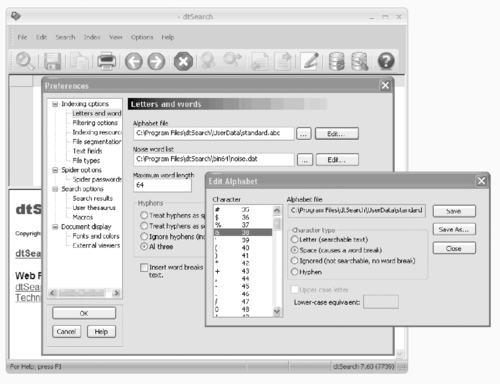

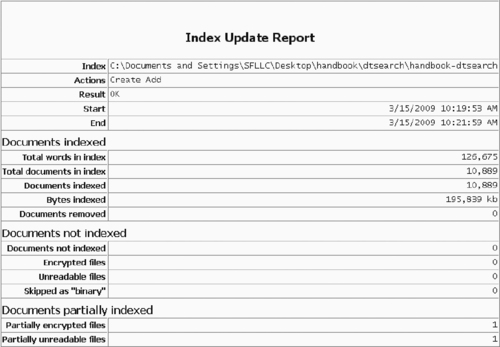

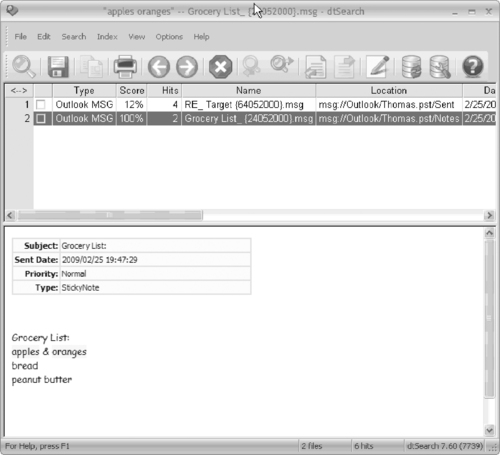

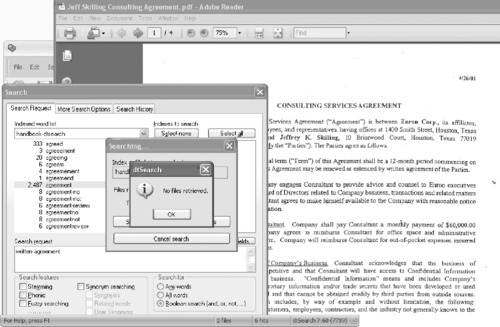

F-Response (www.f-response.com/) is a software utility based on the iSCSI standard that allows read-only access to a computer or computers over an IP network. The examiner can then use his or her tool of choice to analyze or collect data from the computer. Different types of licenses are available, and the example shown in Figure 3.8, Figure 3.9, Figure 3.10, Figure 3.11 and Figure 3.12 (provided by Thomas Harris-Warrick) uses the Consultant Edition, which allows multiple computers to be accessed from one examiner machine.

Figure 3.10

Figure 3.12

The examiner's computer must have the iSCSI initiator, F-Response, and the necessary forensic collection or analysis tools installed, and the F-Response USB dongle inserted in the machine. The “target” computer must be running the “F-Response Target code,” which is an executable that can be run from a thumb drive.

1. Start F-Response NetUniKey Server

The first step is to initiate the connection from the examiner's computer to the target computer, by starting the F-Response NetUniKey server. The IP address and port listed are the IP and port listening for validation requests from the target computer(s).

2. Start F-Response Target Code on Target Computer

Upon execution of the F-Response Target code, a window will appear requesting the IP address and port of the examiner's machine that is listening for a validation request. After entering in this information, the window in Figure 3.9 will appear. The host IP address, TCP address, username and password must be identified.

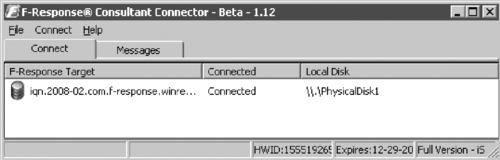

3. Consultant Connector

The next step involves opening and configuring the iSCSI Initiator, which used to be completed manually. F-Response has released a beta version of Consultant Connector, however, which completes this process for you, resulting in read-only access to the hard drive of the target computer.

4. Preservation of EDB File

The Microsoft Exchange Server was live when accessed with F-Response, and it was not necessary to shut down the server during the collection of the EDB file using FTK Imager (additional detail on FTK Imager appears further on).
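Once a copy of a database file has been collected, one common way to document that the copy does not change afterward is to record cryptographic hash values for it. The following Python sketch computes hashes for a collected file by reading it in chunks, which matters for multi-gigabyte .EDB files. The function name and the choice of SHA-1 and SHA-256 are assumptions, not part of the F-Response or FTK Imager workflow described above. Because the server was live during collection, the values describe the collected copy and would not be expected to match the database as it continues to change on the server.

```python
import hashlib


def hash_file(path, algorithms=("sha1", "sha256"), chunk_size=1024 * 1024):
    """Return {algorithm: hex digest} for the file at path, read in chunks."""
    digests = {name: hashlib.new(name) for name in algorithms}
    with open(path, "rb") as handle:
        # Read fixed-size chunks so large files are never loaded whole.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            for digest in digests.values():
                digest.update(chunk)
    return {name: digest.hexdigest() for name, digest in digests.items()}
```

Recording the values at collection time and recomputing them before processing gives a simple, repeatable check that the working copy is the same file that was preserved.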

Another approach to collecting the .EDB and .STM files might be to collect a very recent full backup of the Exchange Server Information Store if the company uses a backup utility that includes an Exchange Agent. The Exchange Agent software will enable the backup software to make a full backup of the Information Store, including the priv1.edb file, the pub1.edb file, and their associated .STM files.

Once the Information Store itself or the collective .EDB and .STM files that comprise the Information Store are preserved and collected, there are a number of third-party utilities on the market today that can extract a custodian's mailbox from them. Additionally, if the circumstances warrant, an in-depth forensic analysis of the .EDB and .STM files can be conducted to attempt to identify fragments of partially overwritten e-mail that might remain in the unallocated space of the .EDB or .STM files.

This was the case in an arbitration. The central issue in the dispute was whether the seller had communicated certain important information to the buyer prior to the close of the transaction. The seller had received this information from a third party prior to close. The buyer claimed to have found out about the information after the close and also found out the seller possessed the information prior to close. The buyer claimed the seller intentionally withheld the information. An executive at the seller company had the information in an attachment to an e-mail in their inbox. Metadata in the Microsoft Outlook e-mail client indicated the e-mail had been forwarded. However, the Sent Items copy of the e-mail was no longer available. The employee claimed the addressee was an executive at the buyer company. The buyer claimed the recipients must have been internal to the seller company and that the buyer did not receive the forwarded e-mail.