Chapter 9. Network Investigations

Eoghan Casey, Christopher Daywalt, Andy Johnston and Terrance Maguire

Contents

Introduction437

Overview of Enterprise Networks439

Overview of Protocols442

Evidence Preservation on Networks457

Collecting and Interpreting Network Device Configuration458

Forensic Examination of Network Traffic479

Network Log Correlation— A Technical Perspective505

Conclusion516

References516

Introduction

Tracking down computer criminals generally requires digital investigators to follow the cybertrail between the crime scene and the offender's computer. The cybertrail can cross multiple networks and geographical boundaries, and can be comprised of many different kinds of digital evidence including proxy and firewall logs, intrusion detection systems, and captured network traffic. Dialup server logs at the suspect's Internet Service Provider (ISP) may show that a specific IP address was assigned to the suspect's user account at the time. The ISP may also have Automatic Number Identification (ANI) logs—effectively Caller-ID—connecting the suspect's home telephone number to the dialup activity. Routers on the ISP network that connect the suspect's computer to the Internet may have associated NetFlow logs containing additional information about the network activities under investigation. Each of these logs would represent steps on the trail.

Ideally, each step in the cybertrail can be reconstructed from one or more records from this evidence, enabling digital investigators to connect the dots between the crime scene and the offender's computer and establish the continuity of offense. If there is more than one type of evidence for a particular step, so much the better for correlation and corroboration purposes. Your reconstruction of events is like a scientific hypothesis. The more evidence you collect that is consistent with the hypothesis, the stronger the case for that hypothesis becomes.

Networks present investigators with a number of challenges. When the networks are involved in a crime, evidence is often distributed on many computers making collection of all hardware or even the entire contents of a network unfeasible. Also, evidence is often present on a network for only a split second—the windows of opportunity for collecting such volatile evidence are very small. Additionally, encryption software is becoming more commonplace, allowing criminals to scramble incriminating evidence using very secure encoding schemes. Furthermore, unlike crime in the physical world, a criminal can be several places on a network at any given time. A solid comprehension of computer networks and the application of forensic science principles to this technology is a prerequisite for anyone who is responsible for identifying, securing, and interpreting evidence on a network. To that end, this chapter provides an overview of network protocols, references to more in-depth materials, and discusses how forensic science is applied to networks. Furthermore, to help investigators interpret and utilize this information in a network-related investigation, this chapter focuses on the most common kinds of digital evidence found on networks, and provides information that can be generalized to other situations. This chapter assumes a basic understanding of network topology and associated technologies, as covered in Casey (2004).

It is not uncommon for intruders to maintain a trophy list of the systems they have compromised. In some cases, intruders inadvertently record their unauthorized actions with their own network capture programs. For instance, in one large-scale network intrusion the intruder placed a rootkit on over 40 servers, which included a sniffer that recorded network traffic.

Forensic examination of the compromised servers found sniffer logs created by the intruder's rootkit, showing the intruder gaining unauthorized access via a backdoor. These sniffer logs showed the IP address from which the intruder was connecting, enabling us to track the attacker back to the UUnet ISP. We promptly contacted the ISP and instructed them to preserve logs associated with the intrusion in anticipation of a search warrant for these records. In addition, we started collecting network traffic originating from the network block used by the intruder to gather evidence of ongoing intrusion activities.

Further investigation revealed that the intruder was using a stolen UUnet dialup account. Fortunately, the ISP maintained ANI records and was able to provide the phone number used to dial into the Internet. The FBI determined which house was assigned the phone number, obtained a search warrant, and seized the intruder's computers.

A forensic examination of the intruder's computer revealed substantial linkage with the victim systems. Information about stolen dialup accounts and victim systems were neatly organized in folders and files on the intruder's computer:

Sniffer logs from the compromised systems containing captured usernames and passwords were found on one of the intruder's hard drives. These sniffer files were accompanied by a file created by the intruder that listed the servers and associated usernames and passwords to which he had gained administrative access on various networks around the world. In addition, a tar file on the intruder's hard drive containing the rootkit found on the compromised systems had metadata in the header of the tar files that showed it was created on one of the compromised systems. A keyword search of unallocated space found partial home directory listings from compromised servers, further demonstrating that the intruder's computer was used to gain unauthorized access to those systems. Furthermore, chat logs recovered from the computers showed the intruder exchanging information about compromised servers with his cohorts on Internet Relay Chat (IRC).

Records provided by UUnet, as a result of an FBI subpoena, indicated several dates and times, as well as ranges of times, that the stolen dialup account was used by the intruder to connect to the Internet from the intruder's home when gaining unauthorized access to victim systems. These time ranges correlated with unauthorized activities on the victim systems as well as with IRC chat logs recovered from the intruder's computer.

Although this chapter concentrates on servers, network devices, and network traffic, keep in mind that personal computers often have traces of network activities that can be preserved and examined using the techniques for examining hosts covered in previous chapters. Locard's Exchange Principle states that, when an offender comes in contact with a location or another person, an exchange of evidence occurs (Saferstein, 1998). As a result of this exchange, offenders leave something of themselves behind and take something of that person or place away with them. Locard was talking about the physical world, but his maxim holds for the human-engineered world of information technology as well. Sometimes the evidence transfer is intentionally designed into a system (as with logs). Sometimes, the transfer is an incidental (and perhaps temporary) by-product of the system design. To understand more clearly the application of this principle to forensic investigation of computer networks, suppose an individual uses his home computer to gain unauthorized access to a remote server via a network. Some transfer of digital data occurs. Something as simple as a listing of a directory on the server may remain on the intruder's hard drive for some time, providing a connection between the suspect and the crime scene. Examples of evidence transfer exist for almost every service provided over the Internet.

To provide practical examples of how logs are interpreted and used in digital investigations, data associated with the intrusion investigation scenario introduced in Chapter 4 are examined in further detail.

Overview of Enterprise Networks

Digital investigators must be sufficiently familiar with network components found in a typical organization to identify, preserve, and interpret the key sources of digital evidence in an Enterprise. This chapter concentrates on digital evidence associated with routers, firewalls, authentication servers, network sniffers, Virtual Private Networks (VPNs), and Intrusion Detection Systems (IDS). This section provides an overview of how logs from these various components of an Enterprise network can be useful in an investigation. Consider the simplified scenario in Figure 9.1 involving a secure server that is being misused in some way.

Logs generated by network security devices like firewalls and IDSs can be a valuable source of data in a network investigation. Access attempts blocked by a firewall or malicious activities detected by an IDS may be the first indication of a problem, alarming system administrators enough to report the activity to digital investigators. As discussed in Chapter 4, “Intrusion Investigation,” configuring firewalls to record successful access as well as denied connection attempts gives digital investigators more information about how the system was accessed and possibly misused. By design, IDS devices only record events of interest, including known attack signatures like buffer overflows and potentially malicious activities like shell code execution. However, some IDSs can be configured to capture the full contents of network traffic associated with a particular event, enabling digital forensic analysts to recover valuable details like the commands that were executed, files that were taken, and the malicious payload that was uploaded as demonstrated later in this chapter.

Routers form the core of any large network, directing packets to their destinations. As discussed in the NetFlow section later in this chapter, routers can be configured to log summary information about every network connection that passes through them, providing a bird's eye view of activities on a network. For example, suppose you find a keylogger on a Windows server and you can determine when the program was installed. Examining the NetFlow logs relating to the compromised server for the time of interest can reveal the remote IP address used to download the keylogger. Furthermore, NetFlow logs could be searched for that remote IP address to determine which other systems in the Enterprise were accessed and may also contain the keylogger. As more organizations and ISPs collect NetFlow records from internal routers as well as those at their Internet borders, digital investigators will find it easier to reconstruct what occurred in a particular case.

Digital investigators may be able to obtain full network traffic captures, which are sometimes referred to as logging or packet capture, but are less like a log of activities than like a complete videotape of them—recorded network traffic is live, complete, and compelling. Replaying an individual's online activities as recorded in a full packet capture can give an otherwise intangible sequence of events a very tangible feel.

Authentication servers form the heart of most enterprise environments, associating activities with particular virtual identities. Logs from RADIUS and TACACS servers, as well as Windows Security Event logs on Domain Controllers, can help digital investigators attribute activities to a particular user account, which may lead us to the person responsible.

Because user accounts may be shared or stolen, it is not safe to assume that the owner of the user account is the culprit. Therefore, you are never going to identify a physical, flesh-and-blood individual from information logs. The universe of digital forensics deals with virtual identities only. You can never truly say that John Smith logged in at 9:00 am, only that John Smith's account was authenticated at 9:00 am. It is common, when pursuing an investigation, to conflate the physical people with the virtual identities in your mind and in casual speech with colleagues. Be careful. When you are presenting your findings or even when evaluating them for your own purposes, remember that your evidence trail will stop and start at the keyboard, not at the fingers on the keys. Even if you have digital images from a camera, the image may be consistent with the appearance of a particular individual, but as a digital investigator you cannot take your conclusions any farther.

As discussed later in this chapter, VPNs are often configured to authenticate via RADIUS or Active Directory, enabling digital investigators to determine which account was used to connect. In addition, VPNs generally record the remote IP address of the computer being used to connect into the network, as well as the internal IP address assigned by the VPN to create a virtual presence on the enterprise network. These VPN logs are often critical for attributing events of concern within an organization to a particular user account and remote computer.

When a computer is connected to a network it needs to know several things before it can communicate with a remote server: its own IP address, the IP address of its default router, the MAC address of its default router, and the IP address of the remote server. Many networks use the Dynamic Host Configuration Protocol (DHCP) to assign IP addresses to computers. When a networked system that uses DHCP is booted, it sends its MAC address to the DHCP server as a part of its request for an IP address. Depending on its configuration, the server will either assign a random IP address or a specific address that has been set aside for the MAC address in question. In any event, DHCP servers maintain a table of the IP addresses currently assigned.

DHCP servers can retain logs to enable digital investigators to determine which computer was assigned an IP address during a time of interest, and potentially the associated user account. For instance, the DHCP lease in Table 9.1 shows that the computer with hardware address 00:e0:98:82:4c:6b was assigned IP address 192.168.43.12 starting at 20:44 on April 1, 2001 (the date format is weekday yyy/mm/dd hh:mm:ss where 0 is Sunday).

lease 192.168.43.12 { starts 0 2001/04/01 20:44:03; ends 1 2001/04/02 00:44:03; hardware ethernet 00:e0:98:82:4c:6b; uid 01:00:e0:98:82:4c:6b; client-hostname "oisin"; } |

Some DHCP servers can be configured to keep an archive of IP address assignments, but this practice is far from universal. Unless you are certain that archives are maintained, assume that the DHCP history is volatile and collect it as quickly as possible.

A DHCP lease does not guarantee that a particular computer was using an IP address at a given time. An individual could configure another computer with this same IP address at the same time, accidentally conflicting with the DHCP assignment or purposefully masquerading as the computer that originally was assigned this IP address via DHCP. The bright side is that such a conflict is often detected and leaves log records on the systems involved.

The same general process occurs when an individual connects to an Internet Service Provider (ISP) via a modem. Some ISPs record the originating phone number in addition to the IP address assigned, thus enabling investigators to track connections back to a particular phone line in a house or other building.

Obtaining additional information about systems on the Internet is beyond the scope of this chapter. See Nikkel (2006) for a detailed methodology on documenting Internet name registry entries, Domain name records, and other information relating to remote systems.

Overview of Protocols

To communicate on a network, computers must use the same protocol. For example TCP/IP is the standard for computers to communicate across the Internet. The principle is fairly straightforward. Information is transmitted from a networked system in chunks called packets or datagrams. The chunks contain the data to be transferred along with the information needed to deliver them to their destination and to reconstruct the chunks into the original data. The extra information is added in layers when transmitted and stripped off in layers at the destination. This layering effectively wraps or encapsulates control details around the data before they are sent to the next layer, providing modular functionality at each layer. One layer, for instance, is used to specify the IP address of the destination system, and another layer is used to specify the destination application on that system by specifying the port being used by that application (there may be several ports on a server willing to receive data, and you don't want your request for a web page to end up in an SSH server). Both of these layers will contain instructions for reconstructing the separated chunks, how to deal with delayed or out-of-order deliveries, and so forth. The TCP/IP model consists of four layers: data link, Internet, transport, and application layers, summarized in Table 9.2 and discussed further later.

At the data link layer, many computers run standard Ethernet (IEEE 802.3) to communicate with other computers on the same local area network. Ethernet provides a method for conveying bits of data over network cables, using the unique hardware identifiers associated with network cards (MAC addresses or physical addresses) to direct data to their destination. The format of a standard Ethernet frame is shown in Figure 9.2.

The preamble and start-of-frame fields are functional components of the protocol, and are of little interest from an investigative or evidentiary standpoint. The source and destination Ethernet addresses are six bytes that are associated with the network cards on each computer. The length field contains the number of bytes in the data field—each frame must be at least 64 bytes long to allow network cards to detect collisions accurately (Held, 1994). The padding in the Ethernet frame ensures that each datagram is at least 64 bytes long and the cyclic redundancy check (CRC) is used to verify the integrity of the datagram at the time it is received.

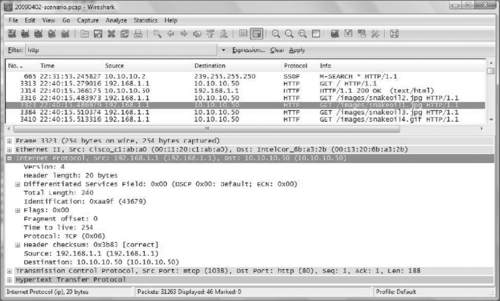

In Figure 9.3, there is an example of an Ethernet frame from the intrusion investigation scenario; it shows the source and destination Ethernet (MAC) address and the next layer protocol, IP. The Wireshark application displays the chunks of information that have been transmitted and captured over a network. The display parses the information into the various layers dictated by the protocols used for transmission and into any further protocol layers required by the type of service being used at the destination. In the second frame of the display, Wireshark has parsed four layers of delivery information (e.g., the third layer specifies the addresses of the source and destination systems and the fourth layer defines the source and destination ports) and then further parsed the protocol being used for the particular service being used in the transaction.

To communicate with machines on different networks, computers must run higher-level protocols such as Internet Protocol (IP) at the network layer and Transport Control Protocol (TCP) at the transport layer. TCP/IP provides a method for conveying packets of data over many physically distant and dissimilar networks, using Internet Protocol (IP) addresses to direct traffic to their destination. The format of a standard TCP/IP datagram is shown in Figure 9.4.

In Figure 9.5, there is an example of an IP packet in Wireshark from the intrusion investigation scenario; it shows the source and destination IP address and the next layer protocol, TCP.

The Transmission Control Protocol (TCP) is a connection-mode service, often called a virtual-circuit service that enables transmission in a reliable, sequenced manner that is analogous to a telephone call. TCP differs from the User Datagram Protocol (UDP), which is connectionless, meaning that each datagram is treated as a self-contained unit rather than part of a continuous transmission, and delivery of each unit is not guaranteed—analogous to a postal letter. Both TCP and UDP use ports to keep track of the communication session. By accepted convention, most ports used by most server applications are standardized. World Wide Web servers listen on port 80, FTP servers on port 21, DNS servers on port 53, SMB on port 445, and so forth. Clients are not normally so restricted since they can tell the server what client port they are using when they make their initial request to the server. A complete list of all TCP and UDP ports and services are defined in RFC 1700, which can be found at the following resource: www.ietf.org/rfc/rfc1700.txt. From an investigative standpoint, it is important to know that services can run on alternative ports; this reference is just a guideline for administrators to follow when configuring the server.

A TCP virtual-circuit is initiated using a process known as the three-way hand shake, illustrated in Figure 9.6.

The client informs the server that it wants to initiate a connection by sending a packet that is known commonly as a SYN packet—a packet containing the special SYN bit. This SYN packet also contains a sequence number that will be incremented in each subsequent packet that is sent, which enables the server to maintain the order of packets even if they are not received in their proper order. 1 When the server is ready to communicate, it responds with a packet that contains a SYN bit and an additional acknowledgement (ACK) bit. This packet also contains a sequence number that enables the client to maintain the order of packets as they are received from the server, and an ACK sequence number that informs the client of the next expected packet.

1Initial sequence numbers were originally obtained in a predictable manner but this allowed for a specific form of attack known as IP spoofing. Therefore, initial sequence numbers in more recent implementations of TCP are randomized so that an attacker cannot predict them.

Once this acknowledgement packet is received, the client can begin sending data to the server in what is called a “flow”, and will send as many packets as are necessary to convey its message. When the client has finished sending data, it closes the virtual-circuit by sending a packet containing a FIN bit. Significantly, whereas a flow is unidirectional, a TCP session is bidirectional, allowing data to be sent in both directions. Thus, a TCP connection is comprised of two flows, one from the client to the server, and another from the server to the client. 2

2Both client and server use their own sequence numbers to enable full-duplex communication (Stevens, 1994).

In Figure 9.7, there is an example of a TCP segment in Wireshark from the intrusion investigation scenario; it shows the source and destination ports, the flags that were set and the sequence and acknowledgement numbers. For more details regarding the TCP/IP specification refer to Comer and RFC 768, RFC 791, RFC 792, and RFC 793.

In the displayed packet, the information inside the delivery layers is often formatted according to other protocols that are specific to the server being addressed on the destination system or to the type of transaction taking place between the source and the destination systems. Next we examine some of these higher-level protocols.

HyperText Transfer Protocol (HTTP)

HTTP is an application layer protocol used for transferring information between computers on the World Wide Web. HTTP is based on a request/response standard between a client; usually the host and a server, a web site. The client initiates a request for a particular resource via a user agent and establishes a TCP connection usually on port 80 with a server. The server responds to the request with a status line and additional information that should include the resource requested. Resources to be accessed by HTTP are identified by Universal Resource Identifier (URI), which functions as a pathname to the resource. A resource can include all forms of data such as text, images, or multimedia shared on the Internet.

HTTP is defined in RFC 1945 (HTTP/1.0) and RFC 2068 (HTTP/1.1). The RFC related to a protocol provides information that is helpful for understanding related log files. Another protocol, called the File Transfer Protocol or FTP (defined in RFC 0959), enables individuals to transfer files from one computer to another over the Internet.

As an investigator, it is important to understand the basic structure of HTTP because web browsing can be used for any online communication. Although HTTP is usually configured on TCP port 80, the administrator can configure the web server on any port. Furthermore, HTTP traffic can be encrypted with HTTP over TLS (Transport Layer Security), also called Secure HTTP (HTTPS). HTTPS typically uses TCP port 443, and though HTTPS still follows the HTTP standards, all the contents of the messages are encrypted, making it difficult to analyze the network traffic.

When conducting log analysis, it is important to understand the request method used by the client and the corresponding status code sent by the server. HTTP defines eight methods indicating the desired action to be performed on the requested resource. Table 9.3 summarizes the different actions that can be performed on the resource requested.

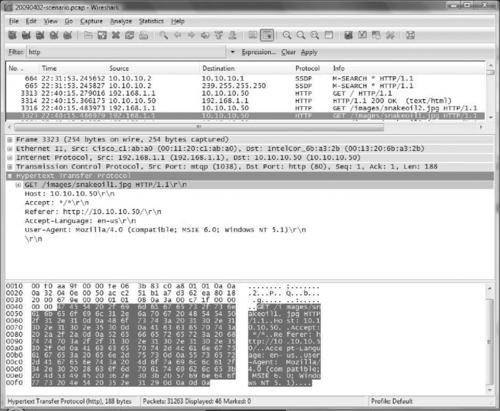

As an example of an HTTP request, Figure 9.8 shows a GET request for the snakeoil1.jpg image on the intranet server (10.10.10.50) from the intrusion investigation scenario.

The server status line is the initial line of the server response and it includes the HTTP version, the status code, and the status message. It is important to understand that the status code and status message indicates how the server is responding to the specific request. Table 9.4(a) and Table 9.4(b) summarizes the different categories of responses that the server might send.

| Success | Redirection | Client Errors | Server Error |

|---|---|---|---|

| 200Success | 300Data requested has moved | 404File not found | 500Internal error |

| 201Okay post | 301Found data, has a temp URL | 400Bad request | 501Method not |

| 202Okay processing | 302Try another location | 401Unauthorized access | Implemented |

| 203Partial information | 303Not modified | 402External redirect error | 5028Server overloaded |

| 204Okay no response | 304Success/not modified | 403Forbidden | 503Gateway timeout |

For instance, Figure 9.9 shows the HTTP success code (200) returned in response to the GET request for the snakeoil1.jpg image in Figure 9.8.

Each time a resource on a web server is accessed over the Internet, an entry is made in an access log on the server detailing which computer on the Internet was used to access which files at a certain time, as well as the HTTP return status code. Although the format of access log files depends on the web server and its configuration, they all resemble the Common Log Format (CLF) or extended CLF:

CLF: remote host, userID, date, time, request, status code, # bytes returned

Extended: remote host, userID, date, time, request, status code, # bytes returned, referring URL, browser

On Microsoft web services, these logs are generally located in %systemroot%system32logfiles in a subdirectory associated with the server in question (e.g., W3SVC, FTPSVC) and have a slightly different format from the CLF. The following IIS web server log associated with the request for the snakeoil1.jpg image in Figure 9.9 is shown here:

2009-04-03 22:38:10 W3SVC1 10.10.10.50 GET /images/snakeoil1.jpg - 80 - 192.168.1.1 Mozilla/4.0+(compatible;+MSIE+6.0;+Windows+N T+5.1) 200 0 0

We were called in to investigate suspected theft of credit cards from an e-commerce site. Forensic examination of the MSSQL database server used to store the credit cards showed no signs of compromise. However, the primary web server used to fulfill e-commerce functions did contain evidence of intrusion. Specifically, the web server access logs showed repeated SQL injection attacks that enabled the intruder to bypass the e-commerce application on the web server and directly access records in the backend SQL database. The log entries resembled the following, showing the IP address used by the intruder (changed to 192.168.14.24 here for sanitization purposes) as well as the customer record and associated credit card number that was obtained by the intruder.

2009-03-07 04:22:51 W3SVC WWWSRV1 10.1.0.12 GET/ecommerce/purchase.aspItemID=35745’%20=convert(int,(select%20top%201%20convert(varchar,isnull(convert(varchar,CreditCardExpires),’NULL’))%2b’/’%2bconvert(varchar,isnull(convert(varchar,CreditCardName),’NULL’))%2b’/’%2bconvert(varchar,isnull(convert(varchar,CreditCardNumber),’NULL’))%2b’/’%2bconvert(varchar,isnull(convert(varchar,CreditCardType),’NULL’))%2b’/’%2bconvert(varchar,isnull(convert(varchar,CustomerID),’NULL’))%2b’/’%2bconvert(varchar,isnull(convert(varchar,payID),’NULL’))%20from%20EPayment%20where%20right(CreditCardExpires,2)%20not%20in%20(‘01’,’05’,’04’,’03’,’02’)%20and%20CreditCardExpires%20not%20in%20(‘01/2006’,’02/2006’,’03/2006’,’04/2006’,’05/2006’,’06/2006’,’07/2006’,’08/2006’)%20and%20CustomerID%3E’0000000’%20order%20by%20CustomerID))--sp_password½230½80040e07½Syntax_error_converting_the_varchar_value_’04/2010/Joe_Blow/1234567891011121314/1/20/27981’_to_a_column_of_data_type_int.80 - 192.168.14.24 HTTP/1.1Mozilla/5.0+(Windows;+U;+Windows+NT+5.1;+en-US;+rv:1.8.0.6)+Gecko/20060728+Firefox/1.5.0.6 -www.ecommerce1.com5000 0 2613 1341 312

Searching the web access logs further revealed the initial vulnerability scan the intruder launched against the system from a different IP address, and all subsequent exploration and unauthorized access events. The log entries showed that, over a period of days, the intruder was able to extract every credit card from the customer database.

Server Message Block (SMB)

Server Message Block (SMB) is an application layer protocol originally developed by Microsoft that runs on top of other protocols to provide remote access to files, printers, and other network resources. SMB uses a client/server approach where the client initiates a request for resources and the server responds accordingly. Also, the server side service port will depend on the underlying transport protocol; SMB over TCP/IP commonly uses port 445 but the older NetBIOS API uses TCP port 139. While SMB is primarily a Windows file sharing protocol, it allows non-Windows machines to share resources in a fashion similar to that of native NetBIOS (see www.samba.org). As the protocol has evolved there are other implementations called Common Internet File System (CIFS).

The SMB protocol has many different commands, and a complete list is documented in SNIA (2002). The command sequence outlined in Table 9.5 is a typical message exchange for a client connecting to a server communicating with the SMB protocol.

| Client Command | Server Response |

|---|---|

| SMB_COM_NEGOTIATE | Must be the first message sent by a client to the server. Includes a list of SMB dialects supported by the client. Server response indicates which SMB dialect should be used. |

| SMB_COM_SESSION_ SETUP_ANDX | Transmits the user's name and credentials to the server for verification. Successful server response has UID field set in SMB header used for subsequent SMBs on behalf of this user. |

| SMB_COM_TREE_ CONNECT_ANDX | Transmits the name of the disk share (exported disk resource) the client wants to access. Successful server response has TID field set in SMB header used for subsequent SMBs referring to this resource. |

| SMB_COM_OPEN_ANDX | Transmits the name of the file, relative to TID, the client wants to open. Successful server response includes a file id (FID) the client should supply for subsequent operations on this file. |

| SMB_COM_READ | Client supplies TID, FID, file offset, and number of bytes to read. Successful server response includes the requested file data. |

| SMB_COM_CLOSE | Client closes the file represented by TID and FID. Server responds with success code. |

| SMB_COM_TREE_DISCONNECT | Client disconnects from resource represented by TID. |

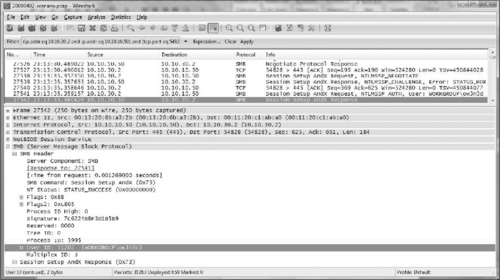

The SMB packet header contains significant information about the protocol in various header fields. Figure 9.10 shows the header and command code for an SMB_COM_NEGOTIATE packet that initiates an SMB session. Notice the protocol identifier xffx53x4dx42 in hexadecimal at the beginning of the packet that is common to all SMB packets. This hexadecimal value could be useful when an investigator needs to search, filter, or create a custom signature for SMB packets.

The next byte in an SMB packet contains the command code, indicating the type of SMB traffic such as in Table 9.5. Table 9.6 provides the hexadecimal values for some common SMB command codes.

Digital investigators can extract various details about a specific SMB session, like the username involved and resources accessed, by understanding the relationship of the process id (PID), multiplex id (MID), user id (UID), and tree id (TID) fields in the protocol header. The PID is set by the client to identify the specific request made to the server, and the MID field is used to keep track of multiple requests made by the same process. The UID field is set by the server once the user has authenticated, and the TID field identifies connections to shares once the connection has been established.

Figure 9.11 shows the PID, MID and UID fields for an SMB_COM_SESSION_SETUP_ANDX packet from the intrusion investigation scenario. All communications associated with a particular SMB session will have the same UID, providing digital forensic examiners with a useful value for searching and filtering as discussed in “Forensic Examination of Network Traffic,” later in this chapter.

Figure 9.12 shows an SMB_COM_TREE_CONNECT_ANDX packet from the intrusion investigation scenario containing the associated TID field and name of the disk share (SECRETFORMULAS) being accessed. Searching the network traffic for a specific TID field will produce all packets associated with access to the specific resource on the server.

Finally, Figure 9.13 shows another packet with the TID 61447, revealing the ow3n3d user transferring data from the Secret Formulas network share.

An intruder had gained unauthorized access to the core network of a major retailer and had obtained the password to a domain-level administrator account. The intruder was using this account to access NetBIOS shares on many Windows systems on the victim organization's internal network. Digital investigators captured network traffic of the intruder's activities and, by interpreting information in the SMB protocol, were able to determine which files were taken from particular systems and provided the resulting list of stolen assets to the client.

Security Event Logs on the server may contain log entries associated with SMB connections like account names used to authenticate, and the name and IP address of the client computer. For instance, a log entry associated with the preceding SMB connections from the intrusion investigation scenario is shown in Figure 4.5 of Chapter 4, “Intrusion Investigation.”

An organization discovered that an intruder had gained unauthorized access to its internal network and was looking for open network shares on Windows systems. We were asked to determine whether the intruder could have taken files from any of the systems that were targeted via SMB. An examination of Security Event logs on the systems of concern revealed that the intruder only had anonymous access to many of the systems. Anonymous access is the default connection type that SMB creates when a username is not provided, and Windows systems can be configured to prevent anonymous access to resources. Further examination of the systems of concern confirmed that the intruder would not have had access to files on the disk with just anonymous access.

As this section begins to demonstrate, investigating criminal activity that involves computer networks requires a familiarity with a variety of different protocols. For the investigator to understand the network traffic and the resulting network log entries, it will require some research on the part of the analyst to learn the different aspects of each protocol. Practical applications of interpreting network traffic using Wireshark and other utilities are covered in “Forensic Examination of Network Traffic,” later in this chapter.

Evidence Preservation on Networks

There are some unique forensic challenges associated with preserving digital evidence on networks. Although some network-related data are stored on hard drives, more information is stored in volatile memory of network devices for a short time or in network cables for an instant. Even when collecting relatively static information such as firewall log files, it may not be feasible to shut down the system that contains these logs and then make a bitstream copy of the hard drive. The system may be a part of an organization's critical infrastructure and removing it from the network may cause more disruption or loss than the crime. Alternately, the storage capacity of the system may be prohibitively large to copy. So, how can evidence on a network be collected and documented in a way that demonstrates its authenticity, preserves its integrity, and maintains chain of custody?

In the case of log files, it is relatively straightforward to make copies of the files, calculate their message digest values (or digitally sign them), and document their characteristics (e.g., name, location, size, MAC times). All this information can be useful for establishing the integrity of the data at a later date and digitally signing files is a good method of establishing chain of custody, provided only a few people have access to the signing key. A failure to take these basic precautions can compromise an investigation. In 2000, for example, an individual known as Maxus stole credit card numbers from the Internet retailer CD Universe and demanded a $100,000 ransom. When denied the money, he posted 25,000 numbers on a web site. Apparently, employees from one or more of the computer security companies that handled the break-in inadvertently altered log files from the day of the attack—this failure to preserve the digital evidence eliminated the possibility of a prosecution (Bunker 2000 and Villano, 2001).

Networked systems can also contain crucial evidence in volatile memory, evidence that can be lost if the network cable is disconnected or the computer is turned off. For instance, active network connections can be used to determine the IP address of an attacker. Methods and tools for preserving volatile data on Windows and UNIX systems are covered in Malware Forensics (Malin, Casey & Aquilina, 2008).

In addition to preserving the integrity of digital evidence, it is advisable to seek and collect corroborating information from multiple, independent sources. Last but not least, when collecting evidence from a network, it is important to keep an inventory of all the evidence with as much information describing the evidence as possible (e.g., filenames, origin, creation times/dates, modification times/dates, summary of contents). Although time-consuming, this process facilitates the pin-pointing of important items in the large volume of data common to investigations involving networks.

For detailed discussions about preserving various forms of data on a network, see Casey (2004a).

Collecting and Interpreting Network Device Configuration

Network devices are generally configured with minimal internal logging to conserve storage space and for optimal performance. Some network device functions are so thoroughly engineered to optimize performance that they are not normally logged at all. Although these devices can be configured to generate records of various kinds, the logs must be sent to a remote server for safekeeping because these devices do not contain permanent storage. Central syslog servers are commonly used to collect the log data.

In addition to generating useful logs, network devices can contain crucial evidence in volatile memory, evidence that can be lost if the network cable is disconnected or the device is shut down or rebooted. Routers are a prime example of this. Most routers are specialized devices with a CPU; ROM containing power on self-test and bootstrap code; flash memory containing the operating system; nonvolatile RAM containing configuration information; and volatile RAM containing the routing tables, ARP cache, limited log information, and buffered packets when traffic is heavy (Held & Hundley, 1999).

Routers are responsible for directing packets through a network to their destination and can be configured using Access Control Lists (ACLs) to make basic security-related decisions, blocking or allowing packets based on simple criteria. For instance, some organizations implement simple egress and ingress filtering in their border routers (blocking outgoing packets that have source addresses other than their own, and blocking incoming packets that contain source addresses belonging to them). This simple concept—only data addressed from the organization should be allowed out—greatly limits a malicious individual's ability to conceal his location. In some cases, digital investigators must document how a router is configured and other data stored in memory.

When detailed information contained in RAM is required, it may be necessary to connect to the network device and query it via its command interpreter. The most feasible way to examine the contents of RAM is to connect to the Cisco device via a console or over the network and query the router for the desired information. From a forensic standpoint, it is better to connect to the device locally using a console cable, but due to time and location the investigator may have to connect to the device across the network. When connecting across the network, the investigator should always use an SSH connection (secure) if available, in preference to a telnet session. Usually on a Cisco device, there are passwords protecting the console-line mode and the privilege exec mode in the CLI of the Cisco IOS. For additional information on how to connect to a Cisco device via a console cable check the following reference at www.cisco.com/en/US/products/hw/switches/ps700/products_tech_note09186a008010ff7a.shtml.

Cisco routers have different command line modes for executing different types of commands. User Exec Mode is the basic level of access presented when connecting to a router, and provides limited viewing of configuration settings as shown here.

cmdLabs> show users

LineUserHost(s)IdleLocation

*2 vty 0 idle00:00 pool-70-22-11-200.balt.verizon.net

Privileged Exec Mode generally requires a password and is accessed by typing enable at the User Exec Mode. This level of access provides full configuration information but cannot change settings on the device as shown here.

| cmdLabs# show ip interface brief | |||||

| Interface | IP-Address | OK? | Method | Status | Protocol |

| FastEthernet0/0 | 10.10.10.1 | YES | NVRAM | up | up |

| FastEthernet0/1 | 192.168.1.2 | YES | NVRAM | up | up |

| Loopback0 | 10.1.1.1 | YES | NVRAM | up | |

Global Configuration Mode is accessed by typing configure terminal (config t) at the Privileged Exec Mode. This level of access allows the user to change the settings on the device, for example, with the following command:

cmdLabs(config)# exception core-file DFI2/cmdLab_router

Much of the information collected from Cisco network devices can be obtained by running the show commands at the privileged exec mode on the command line interface of the Cisco IOS. The collection process can be documented by saving the HyperTerminal session and hashing the resulting file so that its integrity can be verified later. For example, the following output from a Cisco router shows portions of the results of the show clock details and show running-config commands.

cmdLabs_router#show clock detail

15:50:15.869 EST Wed May 13 2009

Time source is user configuration

cmdLabs_router#show running-config

Building configuration…

Current configuration : 2593 bytes

!

! Last configuration change at 15:45:38 EST Wed May 13 2009

! NVRAM config last updated at 15:45:46 EST Wed May 13 2009

!

version 12.3

service timestamps debug datetime msec

service timestamps log datetime msec

service password-encryption

!

hostname cmdLabs_router

!

boot-start-marker

boot-end-marker

!

logging buffered 51200 warnings

enable secret 5 $1$FU94$vZKrjHD75AkECB4IrMTdW1

!

username cmdlabs privilege 15 secret 5 $1$.g2n$7JBa2JiOWDb4ZppSYT40G/

clock timezone EST -5

!

ip ftp username anonymous

ip ftp password 7 151305030A33262B3D20

no ip domain lookup

ip domain name yourdomain.com

<cut for brevity>

ip http server

ip http authentication local

ip flow-export source Loopback0

ip flow-export version 5

ip flow-export destination 10.10.10.10 9990

ip flow-aggregation cache as

export destination 10.10.10.10 9991

enabled

!

ip classless

ip route 0.0.0.0 0.0.0.0 192.168.1.1

!

!

banner motd ^C This is the cmdLab Router Authorized Access Only ^C

!

exception core-file cmdLabs_router

exception protocol ftp

exception region-size 65536

exception dump 10.10.10.100

!

!

end

Table 9.7 shows other Cisco commands that can be run to collect configuration information from the device.

As of the Cisco IOS release 11.2 the command show tech-support will allow for the collection of multiple sources of configuration information from the Cisco device. From a forensic standpoint, this is done by limiting the number of commands issued, and simplifying the collection process. This single command will contain the same output as:

show version

show running –config

show stacks

show interface

show controller

show process cpu

show process memory

show buffers

Cisco Core Dumps

A core dump is a full copy of your router's memory. A router can be configured to write a core dump when the device crashes, and an investigator can manually create a core dump without rebooting the device by running the write core command in Privileged Exec Mode. The Cisco IOS can store or transfer the core dump file using various methods, but Cisco recommends using File Transfer Protocol (FTP) to a server attached to the router (Cisco, 2009). The following commands configure the FTP server authentication for the location to save the core dump:

cmdLabs# conf t

cmdLabs(conf)# exception core-file ROUTERNAME

cmdLabs(conf)# exception dump FTPSERVER

cmdLabs(conf)# exception protocol ftp

cmdLabs(conf)# exception region-size 65536

If the FTP server requires authentication, the correct username and password must be specified as follows:

cmdLabs(conf)# ip ftp username USERNAME

cmdLabs(conf)# ip ftp password PASSWORD

Then, to dump the contents of memory and send it to the FTP server, type write core in Privileged Exec Mode and you should see something like the following:

cmdLabs_router#write core

Remote host [10.10.10.100]?

Base name of core files to write [temp/cmdLab_router]?

Writing temp/cmdLab_routeriomem!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

Writing temp/cmdLab_router!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

cmdLabs_router#

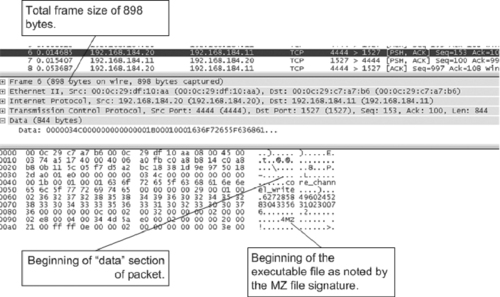

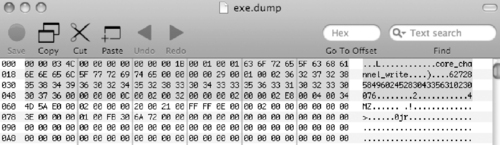

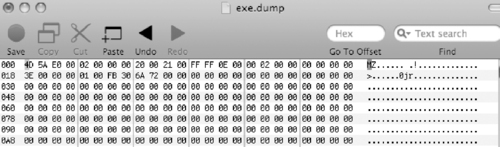

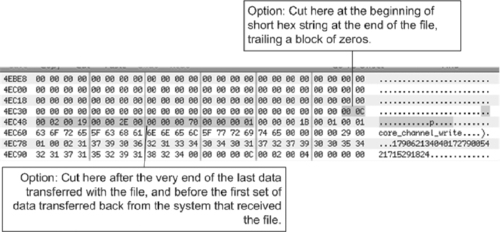

Analysis of the core dump file can be difficult, although the core dump can be viewed in a hex editor to extract some information as shown in Figure 9.14.

Core dump files can be submitted to Security Labs for basic analysis through its web site (http://cir.recurity.com/cir/), and it sells a tool with additional functionality, including extraction of packet headers into file in packet capture, or pcap, format that can be viewed using network traffic analysis tools.

Firewalls

A firewall is a device that filters network traffic, restricting access to protected computer systems. Like a router, a firewall usually sends its logs to another computer for easy management and long-term storage but can also keep a list of recent log entries in its memory. Firewall logs generally show attempts to contact secured systems that were not permitted by the firewall configuration, and are not always specific about the reason the attempts were blocked. Typically, the computer attempting to access a machine behind the firewall without authorization will generate firewall activities as illustrated by the following Cisco Private Internet eXchange (PIX) firewall log segment.

Jun 14 10:00:07 firewall.secure.net %PIX-2-106001: Inbound TCP connection denied from 10.14.21.57/41371 to 10.14.42.6/113 flags SYN

Jun 14 10:00:07 firewall.secure.net %PIX-2-106001: Inbound TCP connection denied from 10.14.43.23/2525 to 10.14.40.26/139 flags SYN

The format of these log entries is similar to those of a router, starting with the date and time, followed by the name of the firewall, the PIX alert information (facility, severity, and message ID), the action, source, and destination. Additional information about PIX alerts is available at Cisco PIX (2000).

Firewall logs can be even more useful in an investigation when logging is enabled to record successful connections as shown here with a Cisco ASA device.

Apr 02 2009 23:12:23: %ASA-6-302013: Built inbound TCP connection 18 for dmz:10.10.30.2/54828 (10.10.30.2/54828) to inside:10.10.10.50/445 (10.10.10.50/445) (10.10.10.50/445)

Apr 02 2009 23:27:17: %ASA-6-302014: Teardown TCP connection 18 for dmz:10.10.30.2/54828 to inside:10.10.10.50/445 duration 0:14:54 bytes 33114 TCP FINs FINs

These two log entries are from the intrusion investigation scenario and show the SSH server (10.10.30.2 in the DMZ) connected to port 445 on the intranet server (10.10.10.50 on the secure network). The first log entry shows a TCP connection being established at 23:12 and the second log entry shows the session being ended at 23:27, which corresponds to the theft of trade secrets from a network file share.

Virtual Private Networks

Many organizations use Virtual Private Networks (VPN) to allow authorized individuals to connect securely to restricted network resources from a remote location using the public Internet infrastructure. For instance, an organization might use a VPN to enable traveling sales representatives to connect to financial systems that are not generally available from the Internet. Using a VPN, sales representatives could dial into the Internet as usual (using low cost, commodity Internet service providers) and then establish a secure, encrypted connection the organization's network. A VPN essentially provides an encrypted tunnel through the public Internet, protecting all data that travels between the organization's network and the sales representative's computer.

Newer operating systems, including Windows 2000/XP/Vista have integrated VPN capabilities, implementing protocols like Point to Point Tunneling Protocol (PPTP) and IPsec to establish VPN. Newer network security devices like the Cisco ASA and Juniper SA Series also support VPN services via SSL, enabling users to establish a virtual connection simply using a web browser.

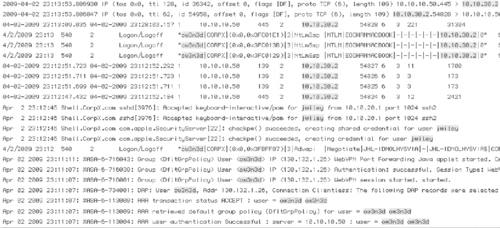

Digital investigators most commonly encounter VPN logs as a source of evidence associated with remote users accessing secured resources within the network from the Internet. The following logs from the Intrusion Investigation scenario for this chapter shows the ow3n3d user account authenticating with the VPN from a computer on the Internet with IP address 130.132.1.26.

Apr 02 2009 23:11:07: %ASA-6-113004: AAA user authentication Successful : server = 10.10.10.50 : user = ow3n3d ow3n3d

Apr 02 2009 23:11:07: %ASA-6-113009: AAA retrieved default group policy (DfltGrpPolicy) for user = ow3n3d ow3n3d

Apr 02 2009 23:11:07: %ASA-6-113008: AAA transaction status ACCEPT : user = ow3n3d ow3n3d

Apr 02 2009 23:11:07: %ASA-6-734001: DAP: User ow3n3d, Addr 130.132.1.26, Connection Clientless: The following DAP records were selected for this connection: DfltAccessPolicy DfltAccessPolicy

Apr 02 2009 23:11:07: %ASA-6-716001: Group <DfltGrpPolicy> User <ow3n3d> IP <130.132.1.26> WebVPN session started. started.

Apr 02 2009 23:11:07: %ASA-6-716038: Group <DfltGrpPolicy> User <ow3n3d> IP <130.132.1.26> Authentication: successful, Session Type: WebVPN. WebVPN.

Apr 02 2009 23:11:07: %ASA-6-302013: Built inbound TCP connection 4 for outside:130.132.1.26/1484 (130.132.1.26/1484) to NP Identity Ifc:130.132.1.25/443 (130.132.1.25/443) (130.132.1.25/443)

Apr 02 2009 23:11:07: %ASA-6-725001: Starting SSL handshake with client outside:130.132.1.26/1484 for TLSv1 session.

<cut for brevity>

Apr 02 2009 23:29:20: %ASA-6-302014: Teardown TCP connection 19 for outside:130.132.1.26/1495 to NP Identity Ifc:130.132.1.25/443 duration 0:02:12 bytes 2591 TCP Reset-O Reset-O

Dedicated VPN network devices are available that implement protocols such as Layer 2 Tunneling Protocol (L2TP) and IPsec. One such device is used in the following case example to demonstrate how information from a VPN server can be useful in an investigation. The following case example demonstrates how to investigate an attack against a firewall coming from the Internet via a VPN.

This case example demonstrates how information gathered from running systems may be useful in an investigation, provided the information is documented thoroughly. However, we rarely catch intruders in the act, which emphasizes the importance of establishing reliable logging on all critical networked systems to support investigations after the fact as discussed in Chapter 4, “Intrusion Investigation.”

A system administrator notices that an intruder is actively connected to the organization's main PIX firewall (Figure 9.15) and immediately contacts the Computer Incident Response Team. Knowing that a direct connection to the PIX using SSH does not show up in the list of connected users in PIX software version 5.2(3), investigators connect without fear of alerting the intruder of their presence. 3 The who command shows that the intruder is connected through the organization's VPN.

pix# who

1: 192.168.120.4 (pc4.vpn.corpX.com)

The investigators then examine the active connections through the firewall to determine which protected servers the intruder is accessing. Using the show conn command to list all connections from 192.168.120.4 indicates that the intruder is connected to two servers using SSH (port 22). 4

4The UIO flags indicate that the connection is Up and that data is being transmitted In, through and Out of the PIX.

pix# show conn foreign 192.168.120.4 255.255.255.255

5947 in use, 31940 most used

TCP out 192.168.120.4:2189 in 192.168.50.5:22 idle 0:14:06 Bytes 6649925 flags UIO

TCP out 192.168.120.4:2382 in 192.168.50.22:22 idle 0:00:01 Bytes 5061 flags UIO

Whenever an examination must be performed on an active computer, the investigators perform analysis and collection tasks simultaneously. For instance, while listing the active connections through a firewall, investigators determine which connections are of interest and narrow their search accordingly. Similarly, to determine if the intruder changed the configuration and is connecting through the firewall via a newly opened hole, the investigators list the current configuration and compare it with a backup copy of the original configuration. This comparison indicates that a new rule was inserted to permit access from the VPN to server that contained highly sensitive, proprietary information. 5 Note that printing the command history can be used to document actions—the following listing shows that more commands were executed than were just presented:

5This reconfiguration suggests that the intruder is highly skilled and knows which systems have information of interest.

pix# show history

enable

show version

show clock

who

show config

show logging

show arp

show conn

show conn foreign

show conn foreign 192.168.120.4 255.255.255.255

show conn lport 10-100

show conn lport 22

show conn state

show history

In this case, to determine the user account used to connect through the VPN server, investigators connect to the server via its web interface and obtain a list of active sessions. This list indicates that user4 is assigned 192.168.120.4 by the VPN server.

VPN Concentrator Type: 3030

Bootcode Rev: Cisco Systems, Inc./VPN 3000 Concentrator Series Version 2.5.Rel Jun 21 2000 18:57:52

Software Rev: Cisco Systems, Inc./VPN 3000 Concentrator Series Version 2.5.2 (D) Oct 26 2000 15:18:42

Up Since: 12/18/2000 07:45:27

RAM Size: 128MB

| User Name | Public IP Address | Assigned IP Address | Protocol | Encryption | Login Time | Duration | Bytes Tx | Bytes Rx |

|---|---|---|---|---|---|---|---|---|

| user1 | 64.252.34.247 | 192.168.120.1 | PPTP | RC4-40 Stateless | Feb 19 07:16:11 | 9:27:59 | 173066 | 81634 |

| user2 | 205.167.18.179 | 192.168.120.2 | PPTP | RC4-128 Stateless | Feb 19 08:42:08 | 8:02:02 | 2651367 | 409564 |

| user3 | 64.252.65.193 | 192.168.120.3 | PPTP | RC4-40 Stateless | Feb 19 08:46:16 | 7:57:54 | 307537 | 90636 |

| user4 | 64.252.7.79 | 192.168.120.4 | PPTP | RC4-40 Stateless | Feb 19 13:58:35 | 2:45:35 | 1146346 | 258365 |

| user5 | 65.2.201.230 | 192.168.120.5 | PPTP | RC4-40 Stateless | Feb 17 08:03:33 | 56:40:37 | 88055544 | 37564000 |

| user6 | 63.22.219.90 | 192.168.120.6 | PPTP | RC4-128 Stateless | Feb 19 10:21:18 | 6:22:52 | 88556 | 9861816 |

| user7 | 64.252.36.212 | 192.168.120.7 | PPTP | RC4-40 Stateless | Feb 19 15:35:47 | 1:08:23 | 13430 | 14978 |

| user8 | 24.50.21.175 | 192.168.120.8 | PPTP | RC4-128 Stateless | Feb 19 11:02:00 | 5:42:10 | 2323577 | 469812 |

| user9 | 64.252.97.103 | 192.168.120.9 | PPTP | RC4-40 Stateless | Feb 18 20:51:41 | 19:52:29 | 9858730 | 4715345 |

The individual responsible for this account is connected from her home computer. A search of her home computer shows that she gained unauthorized access to many of the organization's most important systems and had stolen a significant amount of proprietary information. The individual had been recently hired and had used her position within the organization to steal valuable information and sell it to competitors.

NetFlow

A growing number of routers (e.g., Cisco, Juniper, Extreme Networks) have a logging feature called NetFlow (Juniper calls it J-Flow data) that is invaluable in network investigations. NetFlow logs provide detailed information about network activities without capturing network content, thus providing high fidelity network visibility while avoiding the cost and privacy concerns of capturing full packet contents. Security-conscious organizations take full advantage of the power and lightweight nature of NetFlow logs (to say nothing of the price), collecting NetFlow logs from both internal and border routers to obtain greater visibility and situational awareness of their network activities.

When the NetFlow feature is enabled, routers record information about each flow, including the start and end times for the flow, source and destination IP addresses and port numbers, and the number of packets and octets in the flow. In the case of Internet Control Message Protocol (ICMP) traffic, the ICMP type and subtype are recorded in the destination port field of the NetFlow records. Routers export flow information in a datagram called a Protocol Data Unit (PDU), which records one direction of the connection. The exact content of a PDU depends on the version of NetFlow being used but they all contain the current time according to the router, start and end times of the flow, source and destination IP addresses and ports of the flow, and the number of packets and bytes in the flow (NetFlow Export, 1998). NetFlow datagrams are sent to a system with a specialized NetFlow collector program listening on the specified port (8880). The flow-tools package is a free set of utilities for collecting and analyzing NetFlow logs as demonstrated further in this section.

Seeing all of the flows to and from a machine can be very useful in an investigation (Plonka, 2000). For instance, if a computer is compromised, the related NetFlow logs will show the source of the attack, the protocols used, ports accessed, amount of data transferred, and more. Once the source of the attack is known, the NetFlow logs can be searched for other machines on the network that were targeted by the attacker. As mentioned at the beginning of this chapter, looking at connections to compromised hosts at the time of attack can help pinpoint the attacker, as well as other hosts that were targeted by the same IP address. Of course, a detailed analysis of the compromised host is required to determine the results of each action observed using NetFlow (e.g., which files were downloaded via FTP). Also, the contents of each packet can be important (e.g., identifying a buffer overflow exploit), in which case you would need to analyze the contents of network traffic as discussed in detail later in this chapter.

System administrators received an alert from their host-based IDS that a server had been compromised in the middle of the previous night. A preliminary forensic examination of the system revealed that system logs had been deleted, and recovered logs were incomplete. Intrusion detection system logs showed some activities relating to the attack, but not enough to gain a full understanding of the intruder's activities. NetFlow logs not only showed the connection associated with the remote exploitation of the compromised system, they also showed earlier reconnaissance activities (the intruder methodically scanning hosts on the network for vulnerable hosts), but also subsequent connections the intruder initiated from the compromised system, including obtaining rootkit files from a remote storage location on the Internet, and installing patches to prevent other malicious individuals from gaining unauthorized access to the system.

The maximum duration of a NetFlow record is 30 minutes, so longer TCP sessions will have multiple flow records spread over time. In addition, when no traffic for the flow has been seen in 15 seconds, the NetFlow record will be exported, requiring a new record to be initiated if additional traffic is transferred for that flow. Other events can cause a new NetFlow record to be started, like the flow table on the router reaching its maximum capacity and needing to export and flush older logs to make space for new ones. Therefore, it is often necessary to combine multiple flow records to get all information about a particular TCP session. In these cases, the TCP flags field can be used to determine whether a flow represents data from the start, middle, or end of the TCP session. Flows from the start of a session will have the SYN (but not FIN or RST) bit set, flows from the middle of the session will typically have no flag bits set, and flows from the end of the session will have the FIN or RST bits set (but not SYN).

The flow-tools package is a free, open source collection of utilities for processing and analyzing NetFlow logs (www.splintered.net). When responding to an incident it can be informative to obtain a high-level overview of activities before drilling down into specifics. When the source or target of an attack is not known, the utility named flow-stat can be employed to extract useful patterns from NetFlow logs. For instance, to determine which hosts on the network are sending the most data out of the network, use flow-stat as shown below to generate a report of source IP addresses (–f9), with a descending sort of the third field; octets (–S3). This command output shows that IP address 10.10.10.50 is sending the most data out of the network. The flow-cat program simply reads one or more flow logs and concatenates their contents and send their results to standard out.

$ flow-cat -p /var/flow/insiderouter/2009/ 2009-04/2009-04-02/ ∣ flow-stat -f9 -p -S3 -T “High Exfiltration”

# --- ---- ---- Report Information --- --- ---

#

# Title:High Exfiltration

# Fields:Total

# Symbols:Disabled

# Sorting:Descending Field 3

# Name:Source IP

#

# Args:flow-stat -f9 -p -S3 -T High Exfiltration

#

#

# mode:streaming

# capture start:Thu Apr 2 21:30:24 2009

# capture end:Sun Apr 5 16:45:28 2009

# capture period:242104 seconds

# compress:off

# byte order:little

# stream version:3

# export version:5

# lost flows:0

# corrupt packets:0

# capture flows:789

#

#

# IPaddrflowsoctetspackets

#

10.10.10.504333286296858

10.10.10.10621858281111

10.10.10.212545048554

The -p option in the preceding command instructs flow-stat to include a summary of NetFlow metadata in the report, including the time period covered by the logs, the total number of flows, and any lost flows or corrupt packets.

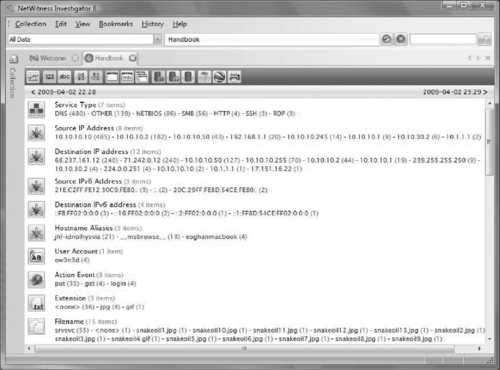

NetFlow logs are most valuable to a network investigator when they are used to obtain an overview of transactions between attacker and victim hosts. As shown in Figure 9.16, the flow-stat source-destination IP address report format (–f10) reveals which source and destination hosts account for the bulk of traffic on the network, with results shown as a percentage of the total.

$ flow-cat /var/flow/insiderouter/2009/ 2009-04/2009-04-02/ ∣ flow-stat -f10 -p -P -S3

<cut for brevity>

# src IPaddrdst IPaddrflowsoctetspackets

#

10.10.10.50192.168.1.13.54995.08677.344

10.10.10.5010.10.30.20.7601.0633.004

10.10.10.1068.237.161.1230.4180.9955.632

This NetFlow output shows that most traffic is being sent to the Internet via the border gateway (192.168.1.1), and the next highest exchange of data was with a host on the DMZ (10.10.30.2). Further review of the detailed NetFlow records for these high exfiltration systems is warranted.

The flow-print utility simply takes the binary NetFlow files and converts them to plain text, displaying different fields depending on the report format specified. One of the more useful report formats for network investigations (−f5) is shown here, with NetFlow logs sorted by end time:

$ flow-cat /var/flow/insiderouter/2009/2009-04/2009-04-02/ ½ flow-filter -f test -Dattacker -Svictim ½ flow-print -f5

StartEndSifSrcIPaddressSrcPDIfDstIPaddressDstPPFlPktsOctets

0402.21:56:03.6660402.21:56:09.772110.10.10.50445210.10.30.2 54823 63 6987

0402.22:12:51.6990402.22:12:51.711110.10.10.50139210.10.30.2 54825 63 3173

0402.22:12:51.7110402.22:12:51.723110.10.10.50139210.10.30.2 54826 63 3173

0402.22:12:47.4320402.22:12:52.184110.10.10.50445210.10.30.2 54824 63 12 2421

0402.22:12:51.7230402.22:12:52.292110.10.10.50139210.10.30.2 54827 63 11 1700

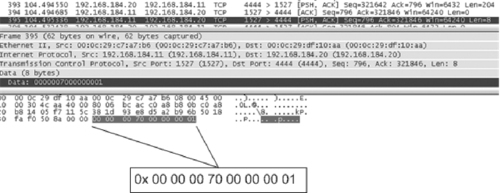

0402.22:13:09.0350402.22:28:03.157110.10.10.50445210.10.30.2 54828 63 22131304

This output includes the start and end time of the flow, source, and destination IP address and TCP or UDP ports, IP protocol type, the input and output interface numbers for the device where the NetFlow record was created, TCP flags, and a count of the number of octets and packets for each flow. In the preceding example we have removed several of the output fields to make it more readable. The column labeled “P” is the IP protocol type (6 is TCP, 17 is UDP). The column labeled “Fl” is the logical OR of all the TCP header flags seen (except for the ACK flag). The last two columns, labeled “Pkts” and “Octets” show the total number of packets and octets for each flow. The date-time stamps in the preceding NetFlow logs are printed as MMDD.HH:MM:SS.SSS, so a timestamp of 0402.22:13:09.035 represents the time 22:13:09.035 on April 2. Observe that the year is not present in the date-time stamp. Therefore, NetFlow logs could be incorrect by a factor of years if the router clock is not set correctly, which can be troublesome from an investigative standpoint unless the clock of the router was checked for accuracy.

In some investigations it can be convenient to import this information into a spreadsheet for examination as shown in Figure 9.16. The spreadsheet filter can be used just to display certain NetFlow records, like those containing IP address 10.10.10.50, or just traffic to or from port 80.

Alternately, the flow-filter utility can be used to extract NetFlow records meeting specific criteria. The following command lists all NetFlow records with a source port 80, revealing that all the connections to port 80 on 10.10.10.50 came via the VPN (192.168.1.1).

$ flow-cat /var/flow/insiderouter/2009/2009-04/2009-04-02/ ½ flow-filter -p80 ½ flow-print -f5

StartEndSifSrcIPaddressSrcPDIfDstIPaddressDstPPFlPktsOctets

0402.21:39:54.0010402.22:39:56.044110.10.10.50802192.168.1.11040 6394127167

0402.21:39:53.7680402.22:39:54.177110.10.10.50802192.168.1.11037 62139193506

0402.21:39:53.9770402.22:39:54.201110.10.10.50802192.168.1.11038 62114156792

0402.21:39:53.9970402.22:39:54.201110.10.10.50802192.168.1.11039 62171237835

0402.21:40:56.2510402.22:41:00.526110.10.10.50802192.168.1.11037 613156

0402.21:40:56.2550402.22:41:00.526110.10.10.50802192.168.1.11038 613156

0402.21:40:56.2550402.22:40:56.255110.10.10.50802192.168.1.11039 601 52

0402.21:41:34.8540402.22:41:34.854110.10.10.50802192.168.1.11039 612 104

To list just the flows between 10.10.10.50 and 10.10.30.2, use flow-filter with a configuration file (flow.acl) containing the following access lists:

ip access-list standard attacker permit host 10.10.30.2

ip access-list standard victim permit host 10.10.10.50

The flow-filter utility uses Cisco standard Access Control Lists (ACLs) to determine which records to extract from NetFlow logs. In this scenario, the preceding ACLs set the attacker IP address to 10.10.30.2 and the victim IP address to 10.10.10.50. The following command reads these ACLs from the flow.acl file and extracts NetFlow records with a destination IP address matching the attacker and source IP address matching the victim IP address. In more complex investigations, multiple IP addresses could be specified in the attacker and victim ACLs, providing powerful and comprehensive log extraction capabilities.

$ flow-cat /var/flow/insiderouter/2009/2009-04/2009-04-02/ ½ flow-filter -f flow.acl -Dattacker -Svictim ½ flow-print

srcIPdstIPprotsrcPortdstPortoctetspackets

10.10.10.5010.10.30.26445548239876

10.10.10.5010.10.30.26139548251733

10.10.10.5010.10.30.26139548261733

10.10.10.5010.10.30.2644554824242112

10.10.10.5010.10.30.2613954827170011

10.10.10.5010.10.30.264455482831304221

The power of flow-filter becomes more apparent when there are multiple attacker or victim systems. By simply adding an ACL line to the attacker group in the flow.acl file for each attacking IP address as shown here, you can instruct flow-filter to provide a single, comprehensive list of malicious flows:

ip access-list standard attacker permit host 10.10.30.2

ip access-list standard attacker permit host 192.168.1.1

ip access-list standard victim permit host 10.10.10.50

In addition to the flow-filter utility, flow-tools includes the flow-nfilter and flow-report utilities, which permit the use of more detailed specifications for data selection and output.

Other available tools for processing NetFlow data include SiLK (http://tools.netsa.cert.org/silk/), NfSen (http://nfsen.sourceforge.net), and Orion NetFlow Traffic Analyzer (NTA) from Solarwinds (www.solarwinds.com/products/orion/nta).

There are several ways that error can be introduced when dealing with NetFlow logs. First, NetFlow PDUs are exported when a flow ends, resulting in a log file with entries sorted by flow end times. This unusual ordering of events can be very confusing and can cause examiners to reach incorrect conclusions. Therefore, it is advisable to sort NetFlow logs using the start time of each flow before attempting to interpret them. Tools such as flow-sort are designed specifically for this purpose, and many NetFlow utilities include sort options specified using –s and –S arguments. Second, a NetFlow record does not indicate which host initiated the connection, only that one host sent data to another host. Therefore, it is necessary to infer which host initiated the connection, for example, by sorting the relevant flows using their start times to determine which flow was initiated first.

When dealing with NetFlow as a source of evidence, digital investigators need to be cognizant of the fact that flow records exported from a router are encapsulated in a UDP datagram and may not reach the intended logging server. Therefore, like syslog, NetFlow logs may not be complete. Fortunately, newer versions of NetFlow records contain a sequence number that can be used to determine if any records are missing or if forged records have been inserted.

A server that contained PII was compromised and used to store and disseminate pirated movies. Digital investigators were asked to ascertain whether the intruders had taken the PII. A forensic examination of the server itself was inconclusive, but the organization provided NetFlow data that had the potential to show whether or not the intruders had accessed the SQL database that contained the data of concern. Unfortunately, a preliminary inspection of the NetFlow logs revealed that a substantial number of records were missing, most likely due to NetFlow UDP packets never reaching the collection server. The incomplete NetFlow logs contained very limited information relating to the compromised server during the time of interest. As a result, it was not possible to determine whether the PII had been stolen.

Authentication Servers

Networks with large numbers of users must maintain a central repository of usernames and passwords, although the mechanism used to authenticate users may vary. For instance, when a home user connects to the Internet using a dial-up or DSL modem, the Internet Service Provider requires a username and password. These credentials are then passed on to an authentication server for validation. After users are authenticated successfully, they are assigned an IP address and a connection is established. A similar process occurs when an individual establishes a VPN connection into an organization's network.

The most common authentication protocols in this context are RADIUS (Remote Authentication Dial In User Service) and TACACS, and both routinely log information that can be useful in an investigation, including the IP address that was assigned to a given user account during a particular time period.

RADIUS

RADIUS logs are generally difficult to read because a single event generates multiple log entries, and the multirecord entry format varies somewhat with the type of event recorded, as shown in Table 9.8.

In order to simplify correlation of RADIUS logs with other formats (or just to read them at all), it's very helpful to select the fields you consider important and then write those fields from each logical record into a corresponding, one line, physical record.

When correlating logs from different sources, recorded in different formats, the first thing to do is to determine what information in each type of log record is worth extracting. In the case of RADIUS logs, there is a lot of information of interest to the network and RADIUS administrators that may not be particularly useful for event reconstruction. The key to correlating logs is to identify what you will need every log record to have in common (a timestamp, at least) and then what extra information specific to each type of log you wish to preserve as well.

What you are really doing is establishing your own log format for that investigation and then converting records from those different sources to that format. Correlating the records is then a very straightforward process.

The following Perl script will convert the multiline RADIUS records in Table 9.8 into short, one-line, summary records:

#!/usr/bin/perl

$/ = ''; # set paragraph mode on input record separator

my @fields = qw/User-Name Acct-Status-Type Framed-IP-Address/;

while ( <> )

{

chomp;

my @records = split /s*[=

]s*/;

$timestamp = shift @records;

s/^s+// for @records;

s/"//g for @records;

my %hash = @records;

print join(',',$timestamp,"RADIUS",@hash{@fields}),"

";

}

The script begins by defining the end-of-record character as a blank line, instead of the default newline character. It then reads each logical record one at a time, parses it, stores it in a hash, then prints out the fields of interest. The timestamp is represented somewhat differently than the other fields, so that is simply pulled out and printed. The other fields selected represent the authenticated userid, the type of RADIUS event logged (e.g., Start, Stop, Interim-Update, etc.), and the IP address from which the session originated. The resulting record is well-defined, compact, and in comma-separated format ready to be correlated with records from other sources.

$ radiuslogparser.pl < samplelog

Fri May 1 00:00:03 2009,RADIUS,jack,Interim-Update,10.20.172.67

Fri May 1 00:00:05 2009,RADIUS,jill,Stop,10.20.31.148

Inserting the field RADIUS in each record to define the type of log file can be very helpful when correlating with other log types, which often contain a server name or can be labeled to distinguish them (e.g., WEBACCESS, SYSLOG).

TACACS

When an individual dials into the Internet, there are usually two forms of evidence at the ISP—the contents of the terminal server's memory and the logs from the associated authentication server. For instance, the TACACS log file in Table 9.9 shows two users (John and Mary) dialing into a dialup terminal server named ppp.corpX.com, authenticating against a TACACS server named tacacs-server, and being assigned IP addresses. For the sake of clarity, these IP addresses have been resolved to their associated canonical names (e.g., static2.corpX.com).