Chapter 4. Intrusion Investigation

Eoghan Casey, Christopher Daywalt and Andy Johnston

Contents

Introduction

Methodologies

Preparation

Case Management and Reporting

Common Initial Observations

Scope Assessment

Collection

Analyzing Digital Evidence

Combination/Correlation

Feeding Analysis Back into the Detection Phase

Conclusion

References

Introduction

Intrusion investigation is a specialized subset of digital forensic investigation that is focused on determining the nature and full extent of unauthorized access and usage of one or more computer systems. We treat this subject with its own chapter due to the specialized nature of investigating this type of activity, and because of the high prevalence of computer network intrusions.

If you are reading this book, then you probably already know why you need to conduct an investigation into computer network intrusions. If not, it is important to understand that the frequency and severity of security breaches have increased steadily over the past several years, impacting individuals and organizations alike. Table 4.1 shows that the number of publicly known incidents has risen dramatically since 2002.

| Year | # of Incidents Known | Change |
|---|---|---|
| 2002 | 3 | NA |
| 2003 | 11 | +266% |
| 2004 | 22 | +100% |
| 2005 | 138 | +527% |
| 2006 | 432 | +213% |
| 2007 | 365 | –15.5% |
| 2008 | 493 | +35% |
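The Change column in the table above is a simple year-over-year percentage. As a minimal sketch (the function name `yearly_change` is ours, not part of the source), it can be recomputed from the incident counts as follows:

```python
# Known-incident counts per year, copied from Table 4.1.
incidents = {2002: 3, 2003: 11, 2004: 22, 2005: 138, 2006: 432, 2007: 365, 2008: 493}


def yearly_change(counts):
    """Return {year: percent change from the previous year}, skipping the first year."""
    years = sorted(counts)
    return {
        year: (counts[year] - counts[prev]) / counts[prev] * 100.0
        for prev, year in zip(years, years[1:])
    }


changes = yearly_change(incidents)
for year, pct in changes.items():
    print(f"{year}: {pct:+.1f}%")
```

The recomputed values agree with the table once rounding is taken into account; the 2003 figure appears to be truncated rather than rounded.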

These security breaches include automated malware that collects usernames and passwords, organized theft of payment card information and personally identifiable information (PII), among others. Once intruders gain unauthorized access to a network, they can utilize system components such as Remote Desktop or customized tools to blend into normal activities on the network, making detection and investigation more difficult. Malware is using automated propagation techniques to gain access to additional systems without direct involvement of the intruder except for subsequent remote command and control. There is also a trend toward exploiting nontechnological components of an organization. Some of this exploitation takes the form of social engineering, often combined with more sophisticated attacks targeting security weaknesses in policy implementation and business processes. Even accidental security exposure can require an organization to perform a thorough digital investigation.

A service company that utilized a wide variety of outside vendors maintained a database of vendors that had presented difficulties in the past. The database was intended for internal reference only, and only by a small group of people within the company. The database was accessible only from a web server with a simple front end used to submit queries. The web server, in turn, could be accessed only through a VPN to which only authorized employees had accounts. Exposure of the database contents could lead to legal complications for the company.

During a routine upgrade to the web server software, the access controls were replaced with the default (open) access settings. This was only discovered a week later when an employee, looking up a vendor in a search engine, found a link to data culled from the supposedly secure web server.

Since the web server had been left accessible to the Internet, it was open to crawlers from any search engine provider, as well as to anyone who directed a web browser to the server's URL. In order to determine the extent of the exposure, the server's access logs had to be examined to identify the specific queries to the database and distinguish among those from the authorized employees, those from web crawlers, and any that may have been directed queries from some other source.
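A first pass over the access logs in a case like this can be sketched as a simple classifier. The sketch below assumes the server writes the common "combined" access-log format. The VPN address pool, the crawler markers, and the sample lines are all hypothetical and would be replaced with values from the actual environment. User-Agent strings can be forged, so the "crawler" label is only a starting point for closer review.

```python
import ipaddress
import re

# Hypothetical values for illustration only; substitute the real VPN address
# pool and whatever crawler markers appear in the logs under examination.
VPN_POOL = ipaddress.ip_network("172.16.5.0/24")
CRAWLER_MARKERS = ("googlebot", "bingbot", "slurp")

# Combined log format: host ident user [time] "request" status bytes "referer" "agent"
LINE_RE = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) \S+ "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"$'
)


def classify(line):
    """Label one access-log line as 'employee', 'crawler', 'other', or 'unparsed'."""
    match = LINE_RE.match(line.strip())
    if match is None:
        return "unparsed"
    try:
        source = ipaddress.ip_address(match["host"])
    except ValueError:
        return "other"  # logged as a host name rather than an address
    if source in VPN_POOL:
        return "employee"
    if any(marker in match["agent"].lower() for marker in CRAWLER_MARKERS):
        return "crawler"
    return "other"


# Made-up sample lines using documentation-reserved addresses.
sample = [
    '172.16.5.20 - jdoe [01/Jan/2009:10:15:32 -0500] "GET /vendors?q=acme HTTP/1.1" 200 512 "-" "Mozilla/4.0"',
    '203.0.113.9 - - [02/Jan/2009:01:02:03 -0500] "GET /vendors?q=acme HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    '198.51.100.23 - - [02/Jan/2009:04:05:06 -0500] "GET /vendors?q=all HTTP/1.1" 200 2048 "-" "Mozilla/4.0"',
]
labels = [classify(line) for line in sample]
print(labels)
```

Lines labeled "other" are the ones that deserve individual attention, since they are neither authorized employees nor self-identified crawlers.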

Adverse impacts on organizations that experienced the highest-impact incidents, such as TJX and Heartland, include millions of dollars spent responding to the incident, loss of revenue due to business disruption, ongoing monitoring by government and credit card companies, damage to reputation, and lawsuits from customers and shareholders. The 2008 Ponemon Institute study of 43 organizations in the United States that experienced a data breach indicates that the average cost associated with an incident of this kind has grown to $6.6 million (Ponemon, 2009a). A similar survey of 30 organizations in the United Kingdom calculated the average cost of data breaches at £1.73 million (Ponemon, 2009b).

Furthermore, these statistics include only those incidents that are publicly known. Various news sources have reported on computer network intrusions into government-related organizations sourced from foreign entities (Grow, Epstein & Tschang, 2008). The purported purpose of these intrusions tends to be reported as espionage and/or sabotage, but due to the sensitivity of these types of incidents, the full extent of them has not been revealed. However, it is safe to say that computer network intrusions are becoming an integral component to both espionage and electronic warfare.

In addition to the losses directly associated with a security breach, victim organizations may face fines for failure to comply with regulations or industry security standards. These include the Health Insurance Portability and Accountability Act of 1996 (HIPAA), the Sarbanes-Oxley Act of 2002 (SOX), the Gramm-Leach-Bliley Act (GLBA), and the Payment Card Industry Data Security Standard (PCI DSS).

In light of these potential consequences, it is important for digital forensic investigators to develop the ability to investigate a computer network intrusion. Investigating a security breach may require a combination of file system forensics, collecting evidence from various network devices, scanning hosts on a network for signs of compromise, searching and correlating network logs, combing through packet captures, and analyzing malware. The investigation may even include nondigital evidence such as sign-in books and analog security images. The complexity of incident handling is both exciting and daunting, requiring digital investigators to have strong technical, case management, and organizational skills. These capabilities are doubly important when an intruder is still active on the victim systems, and the organization needs to return to normal operations as quickly as feasible. Balancing thoroughness with haste is a demanding challenge, requiring investigators who are conversant with the technology involved and are equipped with powerful methodologies and techniques from forensic science.

This chapter introduces basic methodologies for conducting an intrusion investigation, and describes how an organization can better prepare to facilitate future investigations. This chapter is not intended to instruct you in technical analysis procedures. For technical forensic techniques, see the later chapters in this text that are dedicated to specific types of technology. This chapter also assumes that you are familiar with basic network intrusion techniques.

A theoretical intrusion investigation scenario is used throughout this chapter to demonstrate key points. The scenario background is as follows:

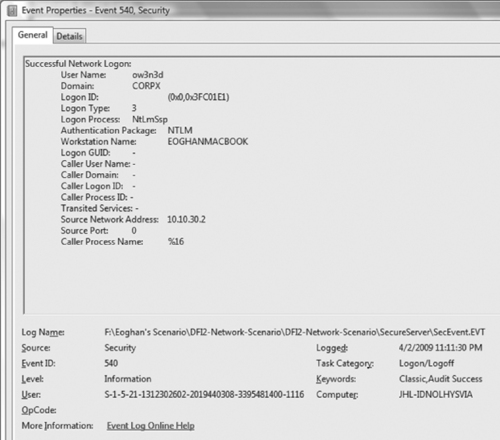

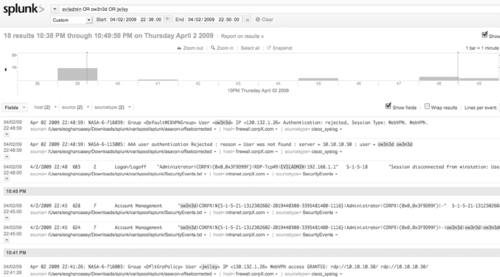

Part 1: Evidence Sources

A company located in Baltimore named CorpX has learned that at least some of their corporate trade secrets have been leaked to their competitors. These secrets were stored in files on the corporate intranet server on a firewalled internal network. A former system administrator named Joe Wiley, who recently left under a cloud, is suspected of stealing the files and the theft is believed to have taken place on April 2, 2009, the day after Joe Wiley was terminated. Using the following logs, we will try to determine how the files were taken and identify any evidence indicating who may have taken them:

▪ Asavpn.log: Logs from the corporate VPN (192.168.1.1)

▪ SecEvent.evt: Authentication logs from the domain controller

▪ Netflow logs: Netflow data from the internal network router (10.10.10.1)

▪ Server logs from the SSH server on the DMZ (10.10.10.50)

Although the organization routinely captures network traffic on the internal network (10.10.10.0), the data from the time of interest is archived and is not immediately available for analysis. Once this packet capture data has been restored from backup, we can use it to augment our analysis of the immediately available logs.

In this intrusion investigation scenario, the organization had segmented high-value assets onto a secured network that was not accessible from the Internet and only accessible from certain systems within their network as shown in Figure 4.1. Specifically, their network is divided into a DMZ (10.10.30.0/24) containing employee desktops, printers, and other devices on which sensitive information isn't (or at least shouldn't be) stored. In order to simplify remote access for the sales force, a server on the DMZ can be accessed from the Internet using Secure Shell (SSH) via a VPN requiring authentication for individual user accounts. The internal network (10.10.10.0/24) is apparently accessible only remotely using Remote Desktop via an authenticated VPN connection. The intranet server (10.10.10.50) provides both web (intranet) and limited drive share (SMB) services. In addition to isolating their secure systems, they monitored network activities on the secured network using both NetFlow and full packet capture as shown in Figure 4.1. However, they did not have all of their system clocks synchronized, which created added work when correlating logs, as discussed in the section on combination/correlation later in this chapter.
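The extra work caused by unsynchronized clocks amounts to shifting each source's timestamps onto a common reference before merging them. The following is a minimal sketch of that idea, not the procedure used later in the chapter. The offsets, source names, times of day, and event descriptions are hypothetical; in practice each offset has to be measured for the device in question.

```python
from datetime import datetime, timedelta

# Hypothetical offsets: how far each source's clock runs ahead (+) or behind (-)
# of the chosen reference clock. Real values must be measured per device.
CLOCK_OFFSETS = {
    "vpn": timedelta(seconds=0),
    "domain_controller": timedelta(minutes=3, seconds=12),
    "netflow_router": timedelta(seconds=-45),
}


def to_reference_time(source, logged):
    """Convert a timestamp as logged by `source` into reference-clock time."""
    return logged - CLOCK_OFFSETS[source]


def merged_timeline(events):
    """Sort (source, logged_time, description) tuples by corrected time."""
    corrected = [(to_reference_time(src, ts), src, text) for src, ts, text in events]
    return sorted(corrected)


# Made-up events on the scenario date; the times of day are illustrative.
events = [
    ("netflow_router", datetime(2009, 4, 2, 8, 59, 30), "flow to intranet server"),
    ("vpn", datetime(2009, 4, 2, 9, 0, 5), "VPN session established"),
    ("domain_controller", datetime(2009, 4, 2, 9, 3, 12), "account logon"),
]
timeline = merged_timeline(events)
for when, source, text in timeline:
    print(when.isoformat(), source, text)
```

With these offsets the corrected order differs from the order of the raw timestamps, which is exactly the kind of discrepancy that can mislead a reconstruction if clock drift is ignored.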

Methodologies

The Incident Response Lifecycle

There are several different prevalent methodologies for responding to and remediating computer security incidents. One of the more common is the Incident Response Lifecycle, as defined in the NIST Special Publication 800-61, “Computer Security Incident Handling Guide.” This document, along with other NIST computer security resources, can be found at http://csrc.nist.gov. The lifecycle provided by this document is shown in Figure 4.2.

Figure 4.2 NIST Incident Response Lifecycle (Scarfone, Grance & Masone, 2008).

The purpose of each phase is briefly described here:

▪ Preparation: Preparing to handle incidents from an organizational, technical, and individual perspective.

▪ Detection and Analysis: This phase involves the initial discovery of the incident, analysis of related data, and the usage of that data to determine the full scope of the event.

▪ Containment, Eradication and Recovery: This phase involves the remediation of the incident, and the return of the affected organization to a more trusted state.

▪ Post-Incident Activity: After remediating an incident, the organization will take steps to identify and implement any lessons learned from the event, and to pursue or fulfill any legal action or requirements.

The NIST overview of incident handling is useful and deserves recognition for its comprehensiveness and clarity. However, this chapter is not intended to provide a method for the full handling and remediating of security incidents. The lifecycle in Figure 4.2 is provided here mainly because it is sometimes confused with an intrusion investigation. The purpose of an intrusion investigation is not to contain or otherwise remediate an incident; it is only to determine what happened. As such, intrusion investigation is actually a subcomponent of incident handling, and predominantly serves as the second phase (Detection and Analysis) in the NIST lifecycle described earlier. Aspects of intrusion investigation are also a component of the Post-Incident Activity phase, including presentation of findings to decision makers (e.g., writing a report, testifying in court). This chapter will primarily cover the process of investigating an intrusion and important aspects of reporting results, as well as some of the preparatory tasks that can make an investigation easier and more effective.

Forensic investigators are generally called to deal with security breaches after the fact and must deal with the situation as it is, not as an IT security professional would have it. There is no time during an intrusion investigation to bemoan inadequate evidence sources and missed opportunities. There may be room in the final report for recommendations on improving security, but only if explicitly requested.

Intrusion Investigation Processes: Applying the Scientific Method

So if the Incident Response Lifecycle is not an intrusion investigation methodology, what should you use? It is tempting to use a specialized, technical process for intrusion investigations. This is because doing something as complex as tracking an insider or unknown number of attackers through an enterprise computer network would seem to necessitate a complex methodology. However, you will be better served by using simpler methodologies that will guide you in the right direction, but allow you to maintain the flexibility to handle diverse situations, including insider attacks and sophisticated external intrusions.

In any investigation, including an intrusion case, you can use one or more derivatives of the scientific method when trying to identify a way to proceed. This simple, logical process summarized here can guide you through almost any investigative situation, whether it involves a single compromised host, a single network link, or an entire enterprise.

1. Observation: One or more events will occur that will initiate your investigation. These events will include several observations that will represent the initial facts of the incident. You will proceed from these facts to form your investigation. For example, a user might have observed that their web browser crashed when they surfed to a specific web site, and that an antivirus alert was triggered shortly afterward.

2. Hypothesis: Based upon the current facts of the incident, you will form a theory of what may have occurred. For example, in the initial observation described earlier, you may hypothesize that the web site that crashed the user's web browser used a browser exploit to load a malicious executable onto the system.

3. Prediction: Based upon your hypothesis, you will then predict where you believe the artifacts of that event will be located. Using the previous example hypothesis, you may predict that there will be evidence of an executable download in the history of the web browser.

4. Experimentation/Testing: You will then analyze the available evidence to test your hypothesis, looking for the presence of the predicted artifacts. In the previous example, you might create a forensic duplicate of the target system, and from that image extract the web browser history to check for executable downloads in the known timeframe. Part of the scientific method is to also test possible alternative explanations—if your original hypothesis is correct you will be able to eliminate alternative explanations based on available evidence (this process is called falsification).

5. Conclusion: You will then form a conclusion based upon the results of your findings. You may have found that the evidence supports your hypothesis, falsifies your hypothesis, or that there were not enough findings to generate a conclusion.

This basic investigative process can be repeated as many times as necessary to come to a conclusion about what may have happened during an incident. Its simplicity makes it useful as a grounding methodology for more complex operations, to prevent you from going down the rabbit hole of inefficient searches through the endless volumes of data that you will be presented with as a digital forensic examiner.
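The prediction and testing steps in the browser example can be made concrete with a small sketch. It assumes the browser history has already been extracted from a forensic duplicate into (timestamp, URL) pairs. The record layout, the suffix list, and the sample URLs are assumptions made for illustration, not the output format of any particular tool.

```python
from datetime import datetime
from urllib.parse import urlparse

EXECUTABLE_SUFFIXES = (".exe", ".dll", ".scr", ".msi")  # illustrative, not exhaustive


def executable_downloads(history, start, end):
    """Return history entries within [start, end] whose URL path looks executable."""
    hits = []
    for visited, url in history:
        path = urlparse(url).path.lower()
        if start <= visited <= end and path.endswith(EXECUTABLE_SUFFIXES):
            hits.append((visited, url))
    return hits


# Hypothetical extracted history records.
history = [
    (datetime(2009, 4, 2, 14, 1, 10), "http://example.com/news/index.html"),
    (datetime(2009, 4, 2, 14, 3, 55), "http://example.net/files/update.EXE?id=7"),
    (datetime(2009, 4, 3, 9, 30, 0), "http://example.org/tools/setup.exe"),
]
window_start = datetime(2009, 4, 2, 14, 0, 0)
window_end = datetime(2009, 4, 2, 15, 0, 0)
hits = executable_downloads(history, window_start, window_end)
print(hits)
```

An empty result does not by itself falsify the hypothesis, since history can be cleared and payloads can arrive under other names, so alternative explanations still need to be tested.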

The two overarching tasks in an intrusion investigation to which the scientific method can be applied are scope assessment and crime reconstruction. The process of determining the scope of an intrusion is introduced here and revisited in the section, “Scope Assessment,” later in this chapter. The analysis techniques associated with reconstructing the events relating to an intrusion, and potentially leading to the source of the attack, are covered in the section, “Analyzing Digital Evidence,” later in this chapter.

Determining Scope

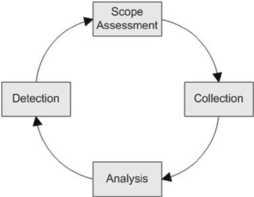

When you begin an intrusion investigation, you will not know how many hosts and network segments have been compromised. In order to determine this, you will need to implement the process depicted in Figure 4.3. This overall process feeds scope assessment, which specifies the number of actually and potentially compromised systems, network segments, and credentials at a given point in time during the investigation with the ultimate goal of determining the full extent of the damage.

The phases in the process are described here:

1. Scope Assessment: Specify the number of actually and potentially compromised systems, network segments, and credentials at a given point in time during the investigation.

2. Collection: Obtain data from the affected systems, and surrounding network devices and transmission media.

3. Analyze: Consolidate information from disparate sources to enable searching, correlation, and event reconstruction. Analyze available data to find any observable artifacts of the security event. Correlate information from various sources to obtain a more complete view of the overall intrusion.

4. Detection: Use any of the artifacts uncovered during the analysis process to sweep the target network for any additional compromised systems (feeding back into the Scope Assessment phase).

The main purpose of the scope assessment cycle is to guide the investigator through the collection and analysis of data, and then push the results into the search for additional affected devices. You will repeat this cycle until no new compromised systems are discovered. Note that depending upon your role, you may be forced to halt the cycle when the target organization decides that it is time to begin containment and/or any other remediation tasks.
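The cycle described above is essentially a loop that runs until a sweep turns up nothing new. The sketch below shows only that control flow. The `collect`, `analyze`, and `detect` callables are hypothetical placeholders for whatever procedures and tools an investigation actually uses, and `max_rounds` stands in for an externally imposed stopping point such as the start of containment.

```python
def assess_scope(initial_hosts, collect, analyze, detect, max_rounds=10):
    """Repeat collect -> analyze -> detect until a sweep finds no new hosts.

    collect(hosts)     -> evidence gathered from the given hosts
    analyze(evidence)  -> indicators (artifacts) derived from that evidence
    detect(indicators) -> hosts on the network that match those indicators
    """
    scope = set(initial_hosts)
    pending = set(initial_hosts)  # hosts not yet collected from
    rounds = 0
    while pending and rounds < max_rounds:
        evidence = collect(pending)
        indicators = analyze(evidence)
        found = set(detect(indicators))
        pending = found - scope  # only newly discovered hosts need another pass
        scope |= pending
        rounds += 1
    return scope


# Toy stand-ins: each host's "evidence" is the list of hosts it connected to.
LATERAL = {"ws-14": ["fs-02"], "fs-02": ["db-01", "ws-14"], "db-01": []}
scope = assess_scope(
    ["ws-14"],
    collect=lambda hosts: {h: LATERAL.get(h, []) for h in hosts},
    analyze=lambda evidence: {peer for peers in evidence.values() for peer in peers},
    detect=lambda indicators: indicators,
)
print(sorted(scope))
```

Stopping early, as with a small `max_rounds`, returns a scope that may be incomplete, which is the situation the case example that follows illustrates.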

The initial investigation into a data breach of a major commercial company focused on its core credit card processing systems, and found evidence of extensive data theft. Digital investigators were dedicated to investigating the breach of the credit card systems, and were not permitted to look for signs of intrusion in other areas of the company. One year later, it was discovered that the same attackers had gained unauthorized access to the primary servers in one of the company's largest divisions one year before they targeted the core credit card systems. The implications of this discovery were far-reaching. Not only did the intruders have access to sensitive data during the year prior to the discovery of the intrusion, they had continuous access during the year that digital investigators were focusing their efforts on the core credit card processing systems. If the earlier intrusion had been discovered during the initial investigation, there would have been a better chance of finding related digital evidence on the company's IT systems. However, two years after the initial intrusion, very limited information was available, making it difficult for digital investigators to determine where the intrusion originated and what other systems on the company's network were accessed. Until digital investigators learned about the earlier activities, they had no way to determine how the intruders originally gained unauthorized access to the company's network. As a result, remediation efforts were not addressing the root of the problem.

In certain cases, the evidence gathered from the victim network may lead to intermediate systems used by the attacker, thus expanding the scope of the investigation. Ultimately, the process depicted in Figure 4.3 can expand to help investigators track down the attacker.

As with any digital investigation, make sure you are authorized to perform any actions deemed necessary in response to a security breach. We have dealt with several cases in which practitioners have been accused of exceeding their authorization. Even if someone commands you to perform a task in an investigation, ask for it in writing. If it isn't written down, it didn't happen. Ideally the written authorization should provide specifics like who or what will be investigated, whether covert imaging of computers is permitted, what types of monitoring should be performed (e.g., computer activities, eavesdropping on communications via network or telephones or bugs, surveillance cameras), and who is permitted to examine the data. Also be sure that the person giving you written authorization is authorized to do so! When in doubt, get additional authorization.

Preparation

Sooner or later, almost every network will experience an information security breach. These breaches will range from simple spyware infections to a massive, intentional compromise from an unknown source. Given the increasing challenges of detecting and responding to security breaches, preparation is one of the keys to executing a timely and thorough response to any incident. Any experienced practitioner will tell you that it is more straightforward to investigate an incident when the problem is detected soon after the breach, when evidence sources are preserved properly, and when there are logs they can use to reconstruct events relating to the crime. The latest Ponemon Institute study indicates that the cost of data breaches was lower for organizations that were prepared for this type of incident (Ponemon, 2009a).

Therefore, it makes sense to design networks and equip personnel in such a way that the organization can detect and handle security events as quickly and efficiently as possible, to minimize the losses associated with downtime and data theft. Preparation for intrusion investigation involves developing policies and procedures, building a logging infrastructure, configuring computer systems as sources of evidence, equipping and training a response team, and performing periodic incident response drills to ensure overall readiness.

Digital investigators should use their knowledge and control of the information systems to facilitate intrusion investigation as much as feasible. It is important for digital investigators to know where the most valuable information assets (a.k.a., crown jewels) are located. Since this is the information of greatest concern, it deserves the greatest attention. Furthermore, even if the full scope of a networkwide security breach cannot be fully ascertained, at least the assurance that the crown jewels have not been stolen may be enough to avoid notification obligations.

Developing an understanding of legitimate access to the crown jewels in an organization can help digital investigators discern suspicious events. Methodically eliminating legitimate events from logs leaves a smaller dataset to examine for malicious activity. For instance, connections to a secure customer database may normally originate from the subnet used by the Accounting Department, so connections from any other subnet within the organization deserve closer attention. In short, do not just instrument the Internet borders of an organization with intrusion detection systems and other monitoring systems. Some internal monitoring is also needed, especially focused monitoring of systems that contain the most valuable and sensitive data.
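The idea of eliminating legitimate events to leave a smaller dataset can be sketched as a simple filter. The Accounting subnet, the record layout, and the sample connections below are hypothetical; a real implementation would read from whatever connection logs or NetFlow exports are available.

```python
import ipaddress

# Hypothetical subnet from which database connections are expected to originate.
ACCOUNTING_SUBNET = ipaddress.ip_network("10.20.5.0/24")


def unexpected_sources(connections, allowed=(ACCOUNTING_SUBNET,)):
    """Return the connection records whose source is outside every allowed subnet."""
    return [
        conn
        for conn in connections
        if not any(ipaddress.ip_address(conn["src"]) in net for net in allowed)
    ]


# Made-up connection records to the customer database.
connections = [
    {"src": "10.20.5.17", "dst_port": 1433},
    {"src": "10.20.5.101", "dst_port": 1433},
    {"src": "10.20.9.44", "dst_port": 1433},
]
suspicious = unexpected_sources(connections)
print(suspicious)
```

A connection from an expected subnet is not proof of legitimacy, since an intruder may be operating from a compromised Accounting workstation, so this kind of filter prioritizes review rather than clearing events.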

Beyond being able to monitor activities on hosts and networks, it is useful to establish baselines for host configuration and network activities in order to detect deviations from the norm. Added advantage can be gained through strategic network segmentation prior to an incident, placing valuable assets in more secure segments, which can make containment easier. Under the worst circumstances, secure segments can be completely isolated from the rest of the network until remediation is complete.
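One simple form of host baseline is a manifest of file hashes recorded while the system is in a known-good state and compared against a later snapshot. The following is a minimal sketch of that comparison; the function names and sample paths are ours, and a production baseline would normally also cover services, accounts, registry settings, and similar configuration.

```python
import hashlib
from pathlib import Path


def sha256_of(path):
    """Hash a file in chunks so that large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(root):
    """Map each file under `root` (by relative path) to its SHA-256 hash."""
    root = Path(root)
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def deviations(baseline, current):
    """Compare two snapshots and report added, removed, and changed paths."""
    return {
        "added": sorted(set(current) - set(baseline)),
        "removed": sorted(set(baseline) - set(current)),
        "changed": sorted(
            path for path in baseline.keys() & current.keys()
            if baseline[path] != current[path]
        ),
    }


# Hypothetical manifests; real ones would come from snapshot() at two points in time.
baseline = {"bin/login": "aa11", "etc/hosts": "bb22", "bin/ls": "cc33"}
current = {"bin/login": "ff99", "etc/hosts": "bb22", "tmp/x.bin": "dd44"}
report = deviations(baseline, current)
print(report)
```

The baseline manifest itself needs to be stored somewhere an intruder cannot alter it, otherwise the comparison proves little.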

One of the most crucial aspects of preparation for a security breach is identifying and documenting the information assets in an organization, including the location of sensitive or valuable data, and being able to detect and investigate access to these key resources. An organization that does not understand its information assets and the associated risks cannot determine which security breaches should take the highest priority. Effective intrusion investigation is, therefore, highly dependent on good Risk Management practices. Without a Risk Management plan, and the accompanying assessment and asset inventory, incident handling can become exceedingly difficult and expensive. An intelligent approach to forensic preparedness is to identify the path these information assets take within the business processes, as well as in the supporting information systems. Data about employees, for example, will likely be maintained in offices responsible for Personnel and Payroll. Customer information may be gathered by a sales force and eventually end up in an Accounting office for billing. Such information may be stored and transmitted digitally around the clock in a large business. Security measures need to be put in place to safeguard and track this information and to ensure that only appropriate parties have access to it.

Being able to gauge the relative severity of an incident, and knowing when to launch an investigation is the first step in effective handling of security breaches. The inability to prioritize among different incidents can be more harmful to a business than the incidents themselves. Imagine a bank that implemented an emergency response plan (designed for bank robberies) each time an irate customer argued with a manager over unexplained bank charges. Under these conditions, most banks would be in constant turmoil and would lose most of their customers. In information security, incidents must undergo a triage process in order to prioritize the most significant problems and not waste resources on relatively minor issues.

This section of the text enumerates some of the more important steps that can be taken to ensure that future intrusion investigations can be conducted more effectively. Note that this section is not intended to be comprehensive with respect to security recommendations; only recommendations that will assist in an investigation are listed.

When preparing an organization from a forensic perspective, it is important to look beyond expected avenues of attack, since intrusions often involve anomalous and wily activities that bypass security. Therefore, effective forensic preparation must account for the possibility that security mechanisms may not be properly maintained or that they may be partially disabled through improper maintenance. Moreover, all mechanisms may work exactly as intended, yet still leave avenues of potential exploitation. A prime example of this arises in security breach investigations when firewall logs record only denied access attempts and not the successful authentication that may be an intrusion. In such cases, it is more important to have details about successful malicious connections than about unsuccessful connection attempts. Therefore, logs of seemingly legitimate activity, as well as exceptional events, should be maintained.

As another example, social engineering exploits often target legitimate users and manipulate them into installing malware or providing data that can be used for later attacks. Intrusion Prevention/Detection Systems will record suspicious or clearly hostile activity, but investigation of sophisticated attacks requires examination of normal system activity as well. Social engineering attacks do not exploit technological vulnerabilities and normally do not carry any signatures to suggest hostile activity. It is important to realize that, in social engineering attacks, all technological components are behaving exactly as they were designed to. It is the design of the technology, and often the design of the business process supported by the technology, that is being exploited. Since an exploit may not involve any unusual transactions within the information systems, the forensic investigator must be able to investigate normal activity, as well as exceptional activity, within an IT infrastructure. For this reason, preparing for an incident also supports the ongoing information assurance efforts of an organization, helping practitioners maintain the confidentiality, integrity and availability of information.

Asset/Data Inventory

The rationale for maintaining an asset and data inventory is straightforward. To protect an organization's assets, and investigate any security event that involves them, we must know where they are, and in what condition they are supposed to be. To benefit an intrusion analysis, several key types of asset documentation should be kept, including:

▪ Network topology. Documents should be kept that detail how a network is constructed, and identify the logical and physical boundaries. A precisely detailed single topology document is not feasible for the enterprise, but important facets can be recorded, such as major points of ingress/egress, major internal segmentation points, and so on.

▪ Physical location of all devices. Forensic investigators will inevitably need to know this information. It is never a good situation when digital investigators trace attacker activity to a specific host name, and nobody at the victimized organization has any idea where the device actually is.

▪ Operating system versions and patch status. It is helpful for the intrusion investigator to understand what operating systems are in use across the network, and to what level they are patched. This will help to determine the potential scope of any intrusion.

▪ Location of key data. This could be an organization's crown jewels, or any data protected by law or industry regulations. If an organization maintains data that is sufficiently important that it would be harmed by the theft of that data, then its location should be documented. This includes the storage location, as well as transit paths. For example, credit data may be sent from a POS system, through a WAN link to credit relay servers where it is temporarily stored, and then through a VPN connection to a bank to be verified. All of these locations and transit paths should be documented thoroughly for valuable or sensitive data.

Fortunately, this is a standard IT practice. It is easier to manage an enterprise environment when organizations know where all of their systems are, how the systems are connected, and their current patch status. So an organization may already be collecting this information regularly. All that a digital investigator would need to know is how to obtain the information.
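To show how such an inventory supports an investigation, here is a minimal sketch of an asset record and two lookups an investigator might need: finding a device by host name, and listing hosts that share the operating system and patch level of a known-compromised machine. The field names and all sample values are hypothetical and do not reflect any particular inventory product.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    hostname: str
    location: str          # physical location of the device
    os_version: str
    patch_level: str
    segment: str           # network segment the device sits on
    holds_key_data: bool = False


def index_by_hostname(assets):
    """Build a case-insensitive host name lookup."""
    return {asset.hostname.lower(): asset for asset in assets}


def similarly_patched(assets, compromised):
    """Hosts with the same OS version and patch level as a compromised host."""
    return [
        asset for asset in assets
        if asset.hostname != compromised.hostname
        and (asset.os_version, asset.patch_level)
        == (compromised.os_version, compromised.patch_level)
    ]


# Hypothetical inventory entries.
inventory = [
    Asset("WS-014", "Building A, room 210", "Windows XP", "SP2", "desktops"),
    Asset("WS-027", "Building B, room 105", "Windows XP", "SP2", "desktops"),
    Asset("FS-002", "Data center, rack 7", "Windows Server 2003", "SP2", "secure", True),
]
by_name = index_by_hostname(inventory)
peers = similarly_patched(inventory, by_name["ws-014"])
print(by_name["fs-002"].location, [asset.hostname for asset in peers])
```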

Policies and Procedures

Although they might not be entertaining to read and write, policies and procedures are necessary for spelling out exactly how an organization will deal with a security event when it occurs. An organization with procedures will experience more efficient escalations from initial discovery to the initiation of a response. An organization without procedures will inevitably get bogged down in conference calls and arguments about how to handle the situation, during which the incident could continue unabated and losses could continue to mount. Examples of useful policies and procedures include:

▪ Key staff: This will define all individuals who will take part in an intrusion investigation, and what their roles will be. (See the section, “Organizational Structure” of this chapter for a description of common roles.)

▪ Incident definition and declaration thresholds: These will define what types of activity can be considered a security incident and when an organization will declare that an incident has occurred and initiate a response (and by extension an investigation).

▪ Escalation procedures: These will define the conditions under which an organization will escalate security issues, and to whom.

▪ Closure guidelines: These will define the conditions under which an incident is considered closed.

▪ Evidence handling procedures: These will define how items of evidence will be handled, transferred, stored, and otherwise safeguarded. This will include chain of custody and evidence documentation requirements, and other associated items.

▪ Containment and remediation guidelines: These will define the conditions under which an organization will attempt to contain and remediate an incident. Even if this is not your job as an investigator, it is important to understand when this will be done so that you can properly schedule your investigation to complete in time for this event.

▪ Disclosure guidelines: These will specify who can and should be notified during an investigation, and who should not.

▪ Communication procedures: These will define how the investigative team will communicate without alerting the attacker to your activity.

▪ Encryption/decryption procedures: These will define what is encrypted across an organization's systems, and how data can be decrypted if necessary. Investigative staff will need access to the victim organization's decryption procedures and key escrow in order to complete their work if the enterprise makes heavy use of on-disk encryption.

In terms of forensic preparation, an organization must identify the mechanisms for transmission and storage of important data. Beyond the data flows, policies should be established to govern not just what data is stored, but how long it is stored and how it should be disposed of. These policies will not be determined in terms of forensic issues alone, but forensic value should be considered as a factor when valuing data for retention. As with many issues involving forensic investigation and information security in general, policies must balance trade-offs among different organizational needs.

Host Preparation

Computer systems are not necessarily set up in such a way that their configuration will be friendly to forensic analysis. Take, for example, the NTFS Standard Information Attribute Last Accessed date-time stamp on modern Windows systems. Vista is configured by default not to update this date-time stamp, whereas previous versions of Windows did. This configuration is not advantageous for forensic investigators, since date-time stamps are used heavily in generating event timelines and correlating events on a system.

It will benefit an organization to ensure that its systems are configured to facilitate the job of the forensic investigator. Setting up systems to leave a more thorough audit trail will enable the investigator to determine the nature and scope of an intrusion more quickly, thereby bringing the event to closure more rapidly. Some suggestions for preparing systems for forensic analysis include:

▪ Activate OS system and security logging. This should include auditing of:

▪ Account logon events and account management events

▪ Process events

▪ File/directory access for sensitive areas (both key OS directories/files as well as directories containing data important to the organization)

▪ Registry key access for sensitive areas, most especially those that involve drivers and any keys that can be used to automatically start an executable at boot (note that this is available only in newer versions of Windows, not in Windows XP)

Aren't sure which locations to audit? Use the tool autoruns from Microsoft (http://technet.microsoft.com/en-us/sysinternals/bb963902.aspx) on a baseline system. It will give you a list of locations on the subject system that can be used to automatically run a program. Any location fed to you by autoruns should be set up for object access auditing. Logs of access to these locations can be extremely valuable, and setting them up would be a great start. This same program is useful when performing a forensic examination of a potentially compromised host. For instance, instructing this utility to list all unsigned executables configured to run automatically can narrow the focus on potential malware on the system.

▪ Turn on file system journals, and increase their default size. The larger they are, the longer they can retain data. For example, you can instruct the Windows operating system to record the NTFS journal to a file of a specific size. This journal will contain date-time stamps that can be used by a forensic analyst to investigate file system events even when the primary date-time stamps for file records have been manipulated.

▪ Activate all file system date-time stamps, such as the Last Accessed time previously mentioned in Vista systems. This can be done by setting the following Registry DWORD value to 0: HKLM\SYSTEM\CurrentControlSet\Control\FileSystem\NtfsDisableLastAccessUpdate

▪ Ensure that authorized applications are configured to keep a history file or log. This includes web browsers and authorized chat clients.

▪ Ensure that the operating system is not clearing the swap/page file when the system is shut down, so that it will still be available to the investigator who images after a graceful shutdown. In Windows this is done by disabling the Clear Virtual Memory Pagefile security policy setting.

▪ Ensure that the operating system has not been configured to encrypt the swap/page file. In newer versions of Windows, this setting can be found in the Registry at HKLM\SYSTEM\CurrentControlSet\Control\FileSystem\NtfsEncryptPagingFile.

▪ Ensure that the log file for the system's host-based firewall is turned on, and that the log file size has been increased from the default.
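The Registry-based suggestions in this list lend themselves to a mechanical check. The following is a minimal sketch in Python, assuming the relevant values have already been exported from a host into a dictionary. The function name audit_forensic_settings and the dictionary layout are illustrative assumptions, and the ClearPageFileAtShutdown value under Session Manager\Memory Management is assumed here to be the Registry value behind the Clear Virtual Memory Pagefile policy.

```python
# Forensic-friendly values for the settings discussed in the list above.
# The recommended data of 0 follows the text; the dictionary layout and
# the function name are assumptions made for this sketch.
FS = r"HKLM\SYSTEM\CurrentControlSet\Control\FileSystem"
MM = r"HKLM\SYSTEM\CurrentControlSet\Control\Session Manager\Memory Management"

RECOMMENDED = {
    FS + r"\NtfsDisableLastAccessUpdate": 0,  # keep Last Accessed updates on
    FS + r"\NtfsEncryptPagingFile": 0,        # do not encrypt the page file
    MM + r"\ClearPageFileAtShutdown": 0,      # do not wipe the page file
}


def audit_forensic_settings(observed):
    """Return a list of (value_path, observed, recommended) deviations.

    `observed` maps full Registry value paths to their DWORD data, for
    example as exported from a baseline host. A missing value is reported
    as None so that a reviewer can check the operating system default.
    """
    findings = []
    for path, wanted in RECOMMENDED.items():
        actual = observed.get(path)
        if actual != wanted:
            findings.append((path, actual, wanted))
    return findings


if __name__ == "__main__":
    sample = {
        FS + r"\NtfsDisableLastAccessUpdate": 1,
        FS + r"\NtfsEncryptPagingFile": 0,
    }
    for path, actual, wanted in audit_forensic_settings(sample):
        print(f"{path}: found {actual!r}, recommended {wanted}")
```

A check of this kind only reports deviations. Whether to change a setting remains a policy decision, since some of these values trade investigative value against other security goals.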

Right about now, you might be saying something along the lines of “But attackers can delete the log files” or “The bad guys can modify file system date-time stamps.” Of course there are many ways to hide one's actions from a forensic analyst. However, being completely stealthy across an enterprise is not an easy task. Even if attackers try to hide their traces, they may succeed in one area but not in another. That is why maximizing the auditing increases your chances of catching an attacker. To gain more information at the host level, some organizations deploy host-based malware detection systems. For instance, one organization used McAfee ePolicy Orchestrator to gather logs about suspicious activities on their hosts, enabling investigators to quickly identify all systems that were targeted by a particular attacker based on the malware. Let us continue the list with some helpful hints in this area.

▪ Configure antivirus software to quarantine samples, not to delete them. That way if antivirus identifies any malicious code, digital investigators will have a sample to analyze rather than just a log entry to wonder about.

▪ Keep log entries for as long as possible given the hardware constraints of the system, or offload them to a remote log server.

▪ If possible, configure user rights assignments to prevent users from changing the settings identified earlier.

▪ If possible, employ user rights assignments to prevent users from activating native operating system encryption functions—unless they are purposefully utilized by the organization. If a user can encrypt data, they can hide it from you, and this includes user accounts used by an attacker.

Note that some settings represent a tradeoff between assisting the investigator and securing the system. For example, leaving the swap/page file on disk when the system is shut down, which some consider a security risk, is not recommended if the device is at risk for an attack involving physical access to the disk. The decision in this tradeoff will depend upon the sensitivity of the data on the device, and the risk of such an attack occurring. Such decisions will be easier if the organization in question has conducted a formal risk assessment of its enterprise.

Logging Infrastructure

The most valuable resources for investigating a security breach in an enterprise are logs. Gathering logs with accurate timestamps in central locations, and reviewing them regularly for problems will provide a foundation for security monitoring and forensic incident response.

Most operating systems and many network devices are capable of logging their own activities to some extent. As discussed in Chapter 9, “Network Investigations,” these logs include system logs (e.g., Windows Event logs, Unix syslogs), application logs (e.g., web server access logs, host-based antivirus and intrusion detection logs), authentication logs (e.g., dial-up or VPN authentication logs), and network device logs (e.g., firewall logs, NetFlow logs).

The National Institute of Standards and Technology publication SP800-92, “Guide to Computer Security Log Management” provides a good overview of the policy and implementation issues involved in managing security logs. The paper presents log management from a broad security viewpoint with attention to compliance with legally mandated standards. The publication defines a log as “a record of the events occurring within an organization's systems and networks.” A forensic definition would be broader: “any record of the events relevant to the operation of an organization's systems and networks.” In digital investigation, any record with a timestamp or other feature that allows correlation with other events is a log record. This includes file timestamps on storage media, physical sign-in logs, frames from security cameras, and even file transaction information in volatile memory.

Having each system keep detailed logs is a good start, but it isn't enough. Imagine that you have discovered that a particular rootkit is deleting the Security Event Logs on Windows systems that it infects, and that action leaves Event ID 517 in the log after the deletion. Now you know to look for that event, but how do you do that across 100,000 systems that each store their logs locally? Uh-oh. Now you've got a big problem. You know what to look for, but you don't have an efficient way to get to the data.

When a centralized source of Windows Event logs is not available, we have been able to determine the scope of an incident to some degree by gathering the local log files from every host on the network. However, this approach is less effective than using a centralized log server because individual hosts may have little or no log retention, and the intruder may have deleted logs from certain compromised hosts. In situations in which the hosts do not have synchronized clocks, the correlation of such logs can become a very daunting task.

The preceding problem is foreseeable and can be solved by setting up a remote logging infrastructure. An organization's systems and network devices can and should be configured to send their logs to central storage systems, where they will be preserved and eventually archived. Although this may not be feasible for every system in the enterprise, it should be done for devices at ingress/egress points as well as servers that contain key data that is vital to the organization, or otherwise protected by law or regulations. Now imagine that you want to look for a specific log event, and all the logs for all critical servers in the organization are kept at a single location. As a digital investigator, you can quickly and easily grab those logs, and search them for your target event. Collecting disparate logs on a central server also provides a single, correlated picture of all the organization's activity in one place that can be used for report generation without impacting the performance of the originating systems. Maintaining centralized logs will reduce the time necessary to conduct your investigation, and by extension it will bring your organization to remediation in a more timely manner.
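To illustrate how little work such a search requires once logs are centralized, the following minimal Python sketch looks for a target event ID (517 in the earlier rootkit example) across all hosts. It assumes a hypothetical CSV export from the central log server with the columns host, timestamp, and event_id. The column names, host names, and sample records are invented for illustration and do not reflect the export format of any particular product.

```python
import csv
import io
from collections import defaultdict


def hosts_with_event(csv_text, target_event_id):
    """Map each host to the timestamps at which the target event was logged.

    Assumes a hypothetical CSV export from a central log server with the
    columns host, timestamp, and event_id.
    """
    hits = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["event_id"].strip() == str(target_event_id):
            hits[row["host"]].append(row["timestamp"])
    return dict(hits)


if __name__ == "__main__":
    # Invented sample records, for illustration only.
    export = (
        "host,timestamp,event_id\n"
        "ws-0141,2008-03-02T01:14:09,528\n"
        "ws-0141,2008-03-02T01:17:45,517\n"
        "srv-db2,2008-03-02T02:03:10,517\n"
        "ws-0977,2008-03-02T02:05:00,540\n"
    )
    for host, times in sorted(hosts_with_event(export, 517).items()):
        print(host, times)
```

The resulting list of hosts gives the investigator an initial scope to verify. It is not proof of compromise, since the same event can also result from legitimate administrative activity.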

There are various types of solutions for this, ranging from free log aggregation software (syslog) to commercial log applications such as Splunk, which provide enhanced indexing, searching, and reporting functions. There are also log aggregation and analysis platforms dedicated to security. These are sometimes called Security Event Managers, or SEMs, and a common example is Cisco MARS. Rolling out any of these solutions in an enterprise is not a trivial task, but will significantly ease the burden on intrusion analysts as well as on a variety of other roles, from compliance management to general troubleshooting. Due to this broad appeal, an organization may be able to leverage multiple IT budgets to fund a log management project.

To gain better oversight of network-level activities, it is useful to gather three kinds of information. First, statistical information is useful for monitoring general trends. For instance, a protocol pie chart can show increases in unusual traffic whereas a graph of bytes/minute can show suspicious spikes in activity. Second, session data (e.g., NetFlow or Argus logs) provide an overview of network activities, including the timing of events, end-point addresses, and amount of data. Third, traffic content enables digital investigators to drill into packet payloads for deeper analysis.
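As a rough illustration of the first two kinds of information, the following Python sketch aggregates session records into bytes per minute and flags minutes that stand far above the typical level. The record shape (an ISO 8601 timestamp paired with a byte count) and the rule of "ten times the median minute" are assumptions chosen for this sketch, not values taken from NetFlow, Argus, or any monitoring product.

```python
from collections import Counter
from datetime import datetime
from statistics import median


def bytes_per_minute(flows):
    """Sum bytes per minute from (iso_timestamp, byte_count) session records."""
    totals = Counter()
    for stamp, byte_count in flows:
        minute = datetime.fromisoformat(stamp).replace(second=0, microsecond=0)
        totals[minute] += byte_count
    return totals


def find_spikes(totals, factor=10):
    """Return the minutes whose volume exceeds `factor` times the median."""
    if not totals:
        return []
    baseline = median(totals.values())
    return sorted(m for m, total in totals.items() if total > factor * baseline)


if __name__ == "__main__":
    # Invented sample records, for illustration only.
    flows = [
        ("2008-03-02T01:00:05", 1000),
        ("2008-03-02T01:00:40", 500),
        ("2008-03-02T01:01:10", 1200),
        ("2008-03-02T01:02:30", 900000),
        ("2008-03-02T01:03:00", 1100),
    ]
    for minute in find_spikes(bytes_per_minute(flows)):
        print("suspicious spike at", minute.isoformat())
```

A flagged minute is only a starting point. The session data for that interval shows which end-points were involved, and captured traffic content, if available, shows what was transferred.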

Because central log repositories can contain key information about a security breach, they are very tempting targets for malicious individuals. Furthermore, by their nature, security systems often have access to sensitive information as well as being the means through which information access is monitored. Therefore, it is important to safeguard these valuable sources of evidence against misuse by implementing strong security, access controls, and auditing. Consider using network-based storage systems (network shares, NFS/AFS servers, NAS, etc.) with strong authentication protection to store and provide access to sensitive data. Minimizing the number of copies of sensitive data and allowing access only using logged authentication will facilitate the investigation of a security breach.

Having granular access controls and auditing on central log servers has the added benefit of providing a kind of automatically generated chain of custody, showing who accessed the logs at particular times. These protective measures can also help prevent accidental deletion of original logs while they are being examined during an investigation.

Technical controls can be undermined if a robust governance process is not in place to enforce them. An oversight process must be followed to grant individuals access to logging and monitoring systems. In addition, periodic audits are needed to ensure that the oversight process is not being undermined.

A common mistake that organizations make is to configure logging and only examine the logs after a breach occurs. To make the most use of log repositories, it is necessary to review them routinely for signs of malicious activity and logging failures. The more familiar digital investigators are with available logs, the more likely they are to notice deviations from normal activity and the better prepared they will be to make use of the logs in an investigation. Logs that are not monitored routinely often contain obvious signs of malicious activities that were missed. The delay in detection allows the intruder more time to misuse the network, and investigative opportunities are lost. Furthermore, logs that are not monitored on a routine basis often present problems for digital investigators, such as incomplete records, incorrect timestamps, or unfamiliar formats that require specialized tools and knowledge to interpret.

We have encountered a variety of circumstances that have resulted in incomplete logs. In several cases, storage quotas on the log file or partition had been exceeded and new logs could not be saved. This logging failure resulted in no logs being available for the relevant time period. In another case, a Packeteer device that rate-limited network protocols was discarding the majority of NetFlow records from a primary router, preventing them from reaching the central collector. As a result, the available flow information was misleading because it did not include large portions of the network activities during the time of interest. As detailed in Chapter 9 (Network Investigations), NetFlow logs provide a condensed summary of the traffic through routers. They are designed primarily for network management, but they can be invaluable in an investigation of network activity.

Incomplete or inaccurate logs can be more harmful than helpful, diverting the attention of digital investigators without holding any relevant information. Such diversions increase the duration and raise the cost of such investigations, which is why it is important to prepare a network as a source of evidence.
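One simple way to detect incomplete logs before relying on them is to look for unexpectedly long periods of silence. The Python sketch below is a minimal example under stated assumptions: it takes the timestamps of records from a single log source as ISO 8601 strings and reports any interval between consecutive records that exceeds a chosen threshold. The one-hour default is arbitrary and would need to be tuned to how chatty a given source normally is.

```python
from datetime import datetime, timedelta


def find_gaps(timestamps, max_silence=timedelta(hours=1)):
    """Return (start, end) pairs where consecutive records are too far apart."""
    ordered = sorted(datetime.fromisoformat(t) for t in timestamps)
    gaps = []
    for earlier, later in zip(ordered, ordered[1:]):
        if later - earlier > max_silence:
            gaps.append((earlier, later))
    return gaps


if __name__ == "__main__":
    # Invented sample timestamps, for illustration only.
    stamps = [
        "2008-03-01T00:00:00",
        "2008-03-01T00:30:00",
        "2008-03-01T05:00:00",
        "2008-03-01T05:10:00",
    ]
    for start, end in find_gaps(stamps):
        print(f"no records between {start} and {end}")
```

A gap does not by itself show that records were lost or deleted, but it tells the investigator which time periods the log cannot speak for.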

A simple and effective approach to routine monitoring of logs is to generate daily, weekly, or monthly reports from the logs that relate to security metrics in the organization. For instance, automatically generating summaries of authentication (failed and successful) access activity to secure systems on a daily basis can reveal misuse and other problems. In this way, these sources of information can be used to support other information assurance functions, including security auditing. It is also advisable to maintain and preserve digital records of physical access, such as swipe cards, electronic locks, and security cameras using the same procedures as those used for system and network logs.
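A daily authentication summary of the kind described above can be sketched in a few lines of Python. The example assumes that authentication events have already been parsed into (timestamp, account, outcome) tuples, where outcome is either "success" or "failure". That record shape, the account names, and the review threshold of five failures are assumptions made for illustration.

```python
from collections import Counter


def daily_auth_summary(events):
    """Count authentication outcomes per (date, account).

    `events` is an iterable of (iso_timestamp, account, outcome) tuples where
    outcome is "success" or "failure"; this record shape is an assumption.
    """
    summary = {}
    for stamp, account, outcome in events:
        day = stamp[:10]  # YYYY-MM-DD prefix of an ISO 8601 timestamp
        summary.setdefault((day, account), Counter())[outcome] += 1
    return summary


def accounts_to_review(summary, max_failures=5):
    """Return the (day, account) keys with more failures than the threshold."""
    return sorted(k for k, c in summary.items() if c["failure"] > max_failures)


if __name__ == "__main__":
    # Invented sample events, for illustration only.
    events = [("2008-03-02T01:00:00", "jdoe", "failure")] * 6
    events.append(("2008-03-02T09:00:00", "jdoe", "success"))
    events.append(("2008-03-02T09:05:00", "asmith", "failure"))
    summary = daily_auth_summary(events)
    for day, account in accounts_to_review(summary):
        print(day, account, dict(summary[(day, account)]))
```

Running such a report every day also serves as a check on the logging itself: a day with no authentication events at all is as noteworthy as a day with too many failures.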

Log Retention

In digital investigative heaven, all events are logged, all logs are retained forever, and unlimited time and resources are available to examine all the logged information. Down on earth, however, it does not work that way. Despite the ever-decreasing cost of data storage and the existence of powerful log management systems, two cost factors are leading many organizations to review (or create) data retention policies that mandate disposal of information based on age and information type. The first factor is simply the cost of storing data indefinitely. Although data storage costs are dropping, the amount of digital information generated is increasing. Lower storage costs per unit of data do not stop the total costs from becoming a significant drain on an IT budget if all data is maintained in perpetuity (Gartner, 2008). The second factor is the cost of accessing that data once it has been stored. The task of sifting through vast amounts of stored data, such as e-mail, looking for relevant information can be overwhelming. Therefore, it is necessary to have a strategy for maintaining logs in an enterprise.

Recording more data is not necessarily better; generally, organizations should only require logging and analyzing the data that is of greatest importance, and also have non-mandatory recommendations for which other types and sources of data should be logged and analyzed if time and resources permit. (NIST SP800-92)

This strategy involves establishing log retention policies that identify what logs will be archived, how long they will be kept, and how they will be disposed of. Although the requirements within certain sectors and organizations vary, we generally recommend having one month of logs immediately accessible and then two years on backup that can be restored within 48 hours. Centralized logs should be backed up regularly and backups stored securely (off-site, if feasible).

Clearly documented and understood retention policies provide two advantages. First, they provide digital investigators with information about the logs themselves. This information is very useful in planning, scheduling, and managing an effective investigation from the start. Second, if the organization is required by a court to produce log records, the policies define the existing business practice to support the type and amount of logs that can be produced.

It is important to make a distinction between routine log retention practices and forensic preservation of logs in response to a security breach. When investigating an intrusion, there is nothing more frustrating than getting to a source of evidence just days or hours after it has been overwritten by a routine process. This means that, at the outset of an investigation, you should take steps to prevent any existing data from being overwritten. Routine log rotation and tape recycling should be halted until the necessary data has been obtained, and any auto deletion of e-mail or other data should be stopped temporarily to give investigators an opportunity to assess the relevance and importance of the various sources of information in the enterprise. An effective strategy to balance the investigative need to maximize retention with the business need for periodic destruction is to snapshot/freeze all available log sources when an incident occurs. This log freeze is one of the most critical parts of an effective incident response plan, promptly preserving available digital evidence.
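The interaction between routine retention and a log freeze can be expressed as a small decision rule. The Python sketch below is one possible reading of the recommendation given earlier in this section, assuming that logs up to 30 days old stay online, logs up to two years old stay on restorable backup, and older logs become eligible for disposal unless a freeze is in effect. The exact day counts, the tier names, and the function name are assumptions for illustration.

```python
from datetime import date, timedelta

ONLINE_WINDOW = timedelta(days=30)       # "one month" immediately accessible
BACKUP_WINDOW = timedelta(days=2 * 365)  # "two years" on restorable backup


def retention_tier(log_date, today, frozen=False):
    """Classify a log by age as "online", "backup", "dispose", or "hold".

    `frozen` models a log freeze declared at the outset of an investigation:
    a log that would otherwise be disposed of is held instead.
    """
    age = today - log_date
    if age <= ONLINE_WINDOW:
        return "online"
    if age <= BACKUP_WINDOW:
        return "backup"
    return "hold" if frozen else "dispose"


if __name__ == "__main__":
    today = date(2009, 1, 1)
    for log_date in (date(2008, 12, 15), date(2008, 6, 1), date(2006, 1, 1)):
        print(log_date, retention_tier(log_date, today),
              retention_tier(log_date, today, frozen=True))
```

In practice the freeze would also have to suspend log rotation and tape recycling on the originating systems, which a classification rule like this one cannot do on its own.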

Network Architecture

The difficulty in scoping a network intrusion increases dramatically with the complexity of the network architecture. The more points of ingress/egress added to an enterprise network, the more difficult it becomes to monitor traffic entering and leaving the network, follow intruder activities, and aggregate logs. If networks are built with reactive security in mind, they can be set up to facilitate an intrusion investigation.

▪ Reduce the number of Internet gateways, and egress/ingress points to business partners. This will reduce the number of points digital investigators may need to monitor.

▪ Ensure that every point of ingress/egress is instrumented with a monitoring device.

▪ Do not allow encrypted traffic in and out of the organization's network that is not sent through a proxy that decrypts the traffic. For example, all HTTPS traffic initiated by workstations should be decrypted at a web proxy before being sent to its destination. When organizations allow encrypted traffic out of their network, they cannot monitor the contents.

▪ Remote logging. Did we mention logging yet? All network devices in an organization need to be sending their log files to a log server for storage and safekeeping.

Note that the approach to network architecture issues may need to vary with the nature of the organization. In the case of a university or a hospital, for instance, there may be considerable resistance to decrypting HTTPS traffic at a proxy. As with all other aspects of preparedness, the final design must be a compromise that best serves all of the organization's goals.

Domain/Directory Preparation

Directory services, typically Microsoft's Active Directory, play a key role in how users and programs are authenticated into a network, and authorized to perform specific tasks. Active Directory will therefore be not only a key source of information for the intrusion investigator, but also a frequent direct target of advanced attackers. It must be prepared in such a way that intrusion investigators will be able to obtain the information they need. Methods for this include:

▪ Remote logging. Yes, we have mentioned this before, but keeping logs is important. This is especially true for servers that are hosting part of a directory service, such as Active Directory Domain Controllers. These devices not only need to be keeping records of directory-related events, but logging them to a remote system for centralized access, backup, and general safekeeping.

▪ Domain and enterprise administrative accounts should be specific to individual staff. In other words, each staff member that is responsible for some type of administrative work should have a unique account, rather than multiple staff members using the same administrative account. This will make it easier for digital investigators to trace unauthorized behavior associated with an administrative account to the responsible individual or to his or her computer.

▪ Rename administrator accounts across all systems in the domain. Then create a dummy account named “administrator” that does not actually have administrative level privilege. This account will then become a honey token, where any attempt to access the account is not legitimate. Auditing access attempts to such dummy accounts will help digital investigators in tracing unauthorized activity across a domain or forest.

▪ Ensure that it is known which servers contain the Global Catalog, and which servers fill the FSMO (Flexible Single Master Operation) roles. The compromise of these systems will have an effect on the scope of the potential damage.

▪ Ensure that all trust relationships between all domains and external entities are fully documented. This will have an impact on the scope of the intrusion.

▪ Use domain controllers to push policies down that will enforce the recommendations listed under “Host Preparation” earlier in this chapter.
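The honey token account suggested in this list is useful only if attempts to access it are actually reviewed. As a minimal Python sketch, assuming logon events have already been parsed into dictionaries with the keys account, source, and timestamp (a hypothetical record shape, with invented sample values), every attempt against the dummy account can be extracted as follows.

```python
HONEY_ACCOUNTS = {"administrator"}  # dummy accounts with no real privileges


def honey_token_hits(logon_events, honey_accounts=HONEY_ACCOUNTS):
    """Return every logon attempt made against a honey token account.

    `logon_events` is an iterable of dicts with the keys account, source,
    and timestamp; this record shape is an assumption for the sketch.
    Account names are compared without regard to case.
    """
    return [e for e in logon_events if e["account"].lower() in honey_accounts]


if __name__ == "__main__":
    # Invented sample events, for illustration only.
    events = [
        {"account": "jdoe", "source": "ws-0141", "timestamp": "2008-03-02T01:14:09"},
        {"account": "Administrator", "source": "ws-0977", "timestamp": "2008-03-02T02:05:00"},
    ]
    for hit in honey_token_hits(events):
        print(hit["timestamp"], hit["source"], "attempted", hit["account"])
```

Because no legitimate process should use the dummy account, each hit identifies a source system that deserves examination, whether or not the attempt succeeded.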

At the core of all authentication systems, there is a fundamental weakness. The system authenticates an entity as an account and logs activity accordingly. Even with biometrics, smart cards and all the other authentication methods, the digital investigator is reconstructing activity that takes place under an account, not the activity of an individual person. The goal of good authentication is to minimize this weakness and try to support the accountability of each action to an individual person. Group accounts, like the group administrative account mentioned earlier, seriously undermine this accountability. Accountability is not (or shouldn't be) about blame. It's about constructing an accurate account of activities.

Time Synchronization

Date and time stamps are critical pieces of information for the intrusion investigator. Because an intrusion investigation may involve hundreds or thousands of devices, the time settings for these devices should be synchronized. Incorrect timestamps can create confusion, lead to false accusations, and make it more difficult to correlate events using multiple log types. Event correlation and reconstruction can proceed much more efficiently if the investigators can be sure that multiple sources of event reports are all using the same clock. This is very difficult to establish if the event records are not recorded using synchronized clocks. If one device is five minutes behind the correct time for its configured time zone, and another device is 20 minutes ahead, it will be difficult for an investigator to construct an event timeline from these two systems. This problem is magnified when dealing with much larger numbers of systems. Therefore, it is important to ensure that the time on all systems in an organization is synchronized with a single source. The following resources describe how to configure time synchronization on common platforms:

▪ Windows: How Windows Time Service Works (http://technet.microsoft.com/en-us/library/cc773013.aspx)

▪ UNIX/Linux: Unix Unleashed 4th edition, Chapter 6

▪ Cisco: Network Time Protocol www.cisco.com/en/US/tech/tk648/tk362/tk461/tsd_technology_support_sub-protocol_home.html
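When clocks were not synchronized before an incident, the investigator has to correct for each device's clock error before building a timeline. The Python sketch below illustrates the arithmetic using the example of one device that is five minutes behind and another that is 20 minutes ahead. The device names, the sample events, and the sign convention (a positive error means the device clock runs ahead) are assumptions made for illustration.

```python
from datetime import datetime, timedelta

# Measured clock error per device: positive means the device clock runs ahead.
CLOCK_ERROR = {
    "fw-edge": timedelta(minutes=-5),  # five minutes behind
    "srv-web": timedelta(minutes=20),  # twenty minutes ahead
}


def build_timeline(events, clock_error):
    """Return (corrected_time, device, description) tuples in time order.

    `events` is an iterable of (device, iso_timestamp, description) tuples.
    Devices without a measured error are assumed to be accurate.
    """
    corrected = []
    for device, stamp, description in events:
        recorded = datetime.fromisoformat(stamp)
        actual = recorded - clock_error.get(device, timedelta(0))
        corrected.append((actual, device, description))
    return sorted(corrected)


if __name__ == "__main__":
    # Invented sample events, for illustration only.
    events = [
        ("fw-edge", "2008-03-02T00:50:00", "outbound connection logged"),
        ("srv-web", "2008-03-02T01:10:00", "new file created"),
    ]
    for when, device, description in build_timeline(events, CLOCK_ERROR):
        print(when.isoformat(), device, description)
```

In this example the recorded timestamps suggest that the firewall event came first, but after correction the server event precedes it by five minutes. The correction is only as good as the measured clock errors, which is why synchronizing clocks in advance is preferable.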

Operational Preparedness, Training and Drills

Following the proverb, “For want of a nail the shoe was lost,” a well-developed response plan and well-designed logging infrastructure can be weakened without some basic logistical preparation. As with any complex procedure, staff will be more effective at conducting an intrusion investigation if they have been properly trained and equipped to preserve and make use of the available evidence, and have recent experience performing an investigation.

Responding to an incident without adequate equipment, tools, and training generally reduces the effectiveness of the response while increasing the overall cost. For instance, rushing out to buy additional hard drives at a local store is costly and wastes time. This situation can be avoided by having a modest stock of hard drives set aside for storing evidence. Other items that are useful to have in advance include a camera, screwdrivers, needle-nose pliers, flashlight, hardware write blockers, external USB hard drive cases, indelible ink pens, wax pencils, plastic bags of various sizes, packing tape, network switch, network cables, network tap, and a fire-resistant safe. Consider having some redundant hardware set aside (e.g., workstations, laptops, network hardware, disk drives) in order to simplify securing equipment and media as evidence without unduly impacting the routine work. If a hard drive needs to be secured, for instance, it can be replaced with a spare drive so that work isn't interrupted. Some organizations also employ tools to perform remote forensics and monitoring of desktop activities when investigating a security breach, enabling digital investigators to gather evidence from remote systems in a consistent manner without having to travel to a distant location or rely on untrained system administrators to acquire evidence (Casey, 2006).

Every effort should be made to send full-time investigators and other security staff to specialized training every year, and there is a wide variety of incident handling training available. However, it will be more difficult to train nonsecurity technical staff in the organization to assist during an incident. If the organization is a large enterprise, it may not be cost- and time-effective to constantly fly its incident handlers around the country or around the world to collect data during incidents. Instead the organization may require the help of system and network administrators at various sites to perform operations to serve the collection phase of an intrusion investigation (e.g., running a volatile data script, collecting logs, or even imaging a system). In this situation it will also not be cost-effective to send every nonsecurity administrator to specialized incident handling training, at the cost of thousands of dollars per staff member. A more effective solution is to build an internal training program, either with recorded briefings by incident handling staff or even computer-based training (CBT) modules if the organization has the capability to produce or purchase these. Coupled with explicit data gathering procedures, such a program can give nonsecurity technical staff the resources they need to help in the event of an intrusion.

In addition to training, it is advisable to host regular response drills in your organization. This is especially the case if the organization does not experience security incidents frequently, and the security staff also performs other duties that do not include intrusion investigation. As with any complex skill, one can get out of practice.

Case Management and Reporting

Effective case management is critical when investigating an intrusion, and is essentially an exercise in managing fear and frustration. Managing an intrusion investigation can be a difficult task, requiring you to manage large, dispersed teams and to maintain large amounts of data. Case management involves planning and coordinating the investigation, assessing risks, maintaining communication between those involved, and documenting each piece of media, the resulting evidence, and the overall investigative process. Continuous communication is critical to keep everyone updated on developments such as new intruder activities or recent security actions (e.g., router reconfiguration). Ideally, an incident response team should function as a unit, thinking as one. Daily debriefings and an encrypted mailing list can help with the necessary exchange of information among team members. Valuable time can be wasted when one uninformed person believes that an intruder is responsible for a planned reconfiguration of a computer or network device, and reacts incorrectly. Documenting all actions and findings related to an incident in a centralized location or on an e-mail list not only helps keep everyone on the same page, but also helps avoid confusion and misunderstanding that can waste invaluable time.

The various facets of case management with respect to computer network intrusions are described in the following sections.

Organizational Structure

There are specific roles that are typically involved in an intrusion investigation or incident response. Figure 4.4 is an example organizational chart of such individuals, followed by a description of their roles.

Who are these people?

▪ Investigative Lead: This is the individual who “owns” the investigation. This individual determines what investigative steps need to be taken, delegates them to staff as needed, and reports all results to management. The “buck” stops with this person.

▪ Designated Approval Authority: This is the nontechnical manager or executive responsible for the incident response and/or investigation. Depending on the size of the organization, it could be the CSO, or perhaps the CIO or CTO. It is also the person who you have to ask when you want to do something like take down a production server, monitor communications, or leave compromised systems online while you continue your investigation. This is the person who is going to tell you “No” the most often.

▪ Legal Counsel: This is the person who tells the DAA what he or she must or should do to comply with the law and industry regulation. This is one of the people who will tell the DAA to tell you “No” when you want to leave compromised devices online so that you can continue to investigate. This person may also dictate what types of data need to be retained, and for how long.

▪ Financial Counsel: This is the person who tells the DAA how much money the organization is losing. This is one of the people you'll have to convince that trying to contain an incident before you have finished your investigation could potentially cost the organization more money in the long run.

▪ Forensic Investigators: These are the digital forensics specialists. As the Investigative Lead, these are the people you will task with collection and analysis work. If the team is small, each person may be responsible for all digital forensic analysis tasks. If the organization or team is large, these individuals are sometimes split between operating system, malware, and log analysis specialists. These last two skills are absolutely required for intrusion investigations, but may not be present in other forms of digital investigation.

▪ IT Staff: These are the contacts who you will require to facilitate your investigation through an organization.

▪ The Desktop Engineers will help you to locate specific desktop systems both physically and logically, and to facilitate scans of desktop systems across the network.

▪ Server Engineers will help you to locate specific servers both physically and logically, and to facilitate scans of all systems across the network.

▪ Network Engineers will help you to understand the construction of the network, and any physical or logical borders in place that could either interfere with attacker operation, or could serve as sources of log data.

▪ Directory Service (Usually Active Directory) Administrators will help you to understand the access and authorization infrastructure that overlays the enterprise. They may help you to deploy scanning tools using AD, and to access log files that cover user logins and other activities across large numbers of systems.

▪ Security Staff: These individuals will assist you in gathering log files from security devices across the network, and in deploying new logging rules so that you can gather additional data that was not previously being recorded. These are also the people who may be trying to lock down the network and “fix” the problem. You will need to keep track of their activities, whether or not you are authorized to directly manage them, because their actions will affect your investigation.

It is not uncommon for some technical staff to take initiative during an incident, and to try to “help” by doing things like port scanning the IP address associated with an attack, running additional antivirus and spyware scans against in-scope systems, or even taking potentially compromised boxes offline and deleting accounts created by the intruders. Unfortunately, despite the best intentions behind these actions, they can damage your investigation by alerting the intruder to your activities. You will need to ensure that all technical staff understand that they are to take no actions during an incident without the explicit permission of the lead investigator or incident manager.

Any organization concerned about public image or publicly available information should also designate an Outside Liaison, such as a Public Relations Officer, to communicate with media. This function should report directly to the Designated Approval Authority or higher and probably should not have much direct contact with the investigators.

Task Tracking

It can be very tempting just to plunge in and start collecting and analyzing data. After all, that is the fun part. But intrusion investigations can quickly grow large and out of hand. Imagine the following scenario. You have completed analysis on a single system. You have found a backdoor program and two separate rootkits, and determined that the backdoor was beaconing to a specific DNS host name. After checking the DNS logs for the network, you find that five other hosts are beaconing to the same host name. Now all of a sudden you have five new systems from which you will need to collect and analyze data. Your workload has just increased significantly. What do you do first, and in what order do you perform those tasks?

To handle a situation like this, you will need to plan your investigation, set priorities, establish a task list, and schedule those tasks. If you are running a group of analysts, you will need to split those tasks among your staff in an efficient delegation of workload. Perhaps you will assign one person the task of collecting volatile data/memory dumps from the affected systems to begin triage analysis, while another person follows the first individual and images the hard drives from those same devices. You could then assign a third person to collect all available DNS and web proxy logs for each affected system, and analyze those logs to identify a timeline of activity between those hosts and known attacker systems. Table 4.2 provides an example of a simple task tracking chart.
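As a rough illustration of such a task list, the following Python sketch keeps each task with an assignee, a priority, and a due date, and lists the unfinished work in the order it should be tackled. The field names, the priority scale (1 is most urgent), the analyst names, and the dates are all assumptions made for this example; they are not taken from Table 4.2.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Task:
    description: str
    assignee: str
    priority: int          # hypothetical scale: 1 = most urgent
    due: date
    status: str = "open"   # "open", "in progress", or "done"


def pending(tasks):
    """Return unfinished tasks, most urgent first, then by due date."""
    return sorted(
        (t for t in tasks if t.status != "done"),
        key=lambda t: (t.priority, t.due),
    )


# Hypothetical tasks modeled on the delegation described in the text.
tasks = [
    Task("Image hard drives of affected hosts", "analyst-2", 2, date(2009, 3, 3)),
    Task("Collect volatile data and memory dumps", "analyst-1", 1, date(2009, 3, 2)),
    Task("Collect DNS and web proxy logs", "analyst-3", 2, date(2009, 3, 2)),
]
tasks[1].status = "in progress"

for t in pending(tasks):
    print(f"P{t.priority}  {t.due}  {t.assignee:<10} {t.status:<12} {t.description}")
```

Even a structure this small makes it obvious who is doing what and which task comes next. A shared spreadsheet serves the same purpose as long as it is stored off the compromised network.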

Task tracking is also important in the analysis phase of an intrusion investigation. Keeping track of which systems have been examined for specific items will help reduce missed evidence and duplicate effort. For instance, as new intrusion artifacts such as IP addresses and malware emerge during an investigation, performing ad hoc searches will inevitably miss items and waste time. It is important to search all media for all artifacts; this can be tracked using a chart of relevant media that records the date each piece of media was searched for each artifact list, as shown in Table 4.3. In this way, no source of evidence will be overlooked.
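The same kind of media-by-artifact chart can be kept programmatically. The sketch below is one possible approach, not a prescribed tool: it records the date each piece of media was searched for each artifact list and reports the combinations still outstanding. The class name, the media labels, and the artifact list names are hypothetical.

```python
from datetime import date


class SearchMatrix:
    """Tracks which media have been searched for which artifact lists."""

    def __init__(self, media, artifact_lists):
        self.media = list(media)
        self.artifact_lists = list(artifact_lists)
        self.searched = {}  # (medium, artifact_list) -> date of the search

    def add_artifact_list(self, name):
        # A new list (e.g., newly found IP addresses) makes every
        # piece of media outstanding again for that list.
        if name not in self.artifact_lists:
            self.artifact_lists.append(name)

    def record(self, medium, artifact_list, when):
        if medium not in self.media or artifact_list not in self.artifact_lists:
            raise KeyError(f"unknown medium or artifact list: {medium!r}, {artifact_list!r}")
        self.searched[(medium, artifact_list)] = when

    def outstanding(self):
        """Return every (medium, artifact_list) pair not yet searched."""
        return [
            (m, a)
            for m in self.media
            for a in self.artifact_lists
            if (m, a) not in self.searched
        ]


# Hypothetical media and artifact lists.
matrix = SearchMatrix(["web01 disk image", "DNS logs"], ["IP list v1"])
matrix.record("web01 disk image", "IP list v1", date(2009, 3, 2))
matrix.add_artifact_list("malware hashes v1")

for medium, artifact_list in matrix.outstanding():
    print(f"Still to search: {medium} for {artifact_list}")
```

Versioning the artifact lists (v1, v2, and so on) rather than editing them in place keeps the chart honest: media searched against an older list remain visibly unsearched against the newer one.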

If case management sounds like project management, that's because it is. Common project management processes and tools may benefit your team. But that doesn't mean that you can use just any project manager for running an investigation, or run out immediately to buy MS Project to track your investigative milestones. A network intrusion is too complicated to be managed by someone who does not have detailed technical knowledge of computer security. It would be more effective to take an experienced investigator, who understands the technical necessities of the trade, and further train that individual in project management. If you take this approach, your integration of project management tools and techniques into incident management will go more smoothly, and your organization may see drastic improvements in efficiency, and reductions in cost.

Communication Channels

By definition, you will be working with an untrusted, compromised network during an intrusion investigation. Sending e-mails through the e-mail server on this network is not a good idea. Documenting your investigative priorities and schedules on this network is also not a good idea. You should establish out-of-band communication and off-network storage for the purposes of your investigation. Recommendations include the following:

▪ Cell network or WiMAX adapters for your investigative laptops. This way you can access the Internet for research or communication without passing your traffic through, or connecting to, the compromised network.

▪ Do not send broadcast e-mail announcements to all staff at an organization about security issues that have arisen from a specific incident until after the incident is believed to have been resolved.

▪ Communicate using cell phones rather than desk phones, most especially if those desk phones are attached to the computing environment that could be monitored easily by intruders (i.e., IP phones).

When investigating a security breach, we always assume that the network we are on is untrustworthy. Therefore, to reduce the risk that intruders can monitor our response activities, we always establish a secure method for exchanging data and sharing updated information with those who are involved in the investigation. This can be in the form of encrypted e-mail or a secure server where data can be exchanged.

Containment/Remediation versus Investigative Success

There is a critical decision that needs to be made during any security event, and that decision involves choosing when to take defensive action. Defensive action in this sense could be anything from attempting to contain an incident with traffic filtering around an affected segment to removing malware from infected systems, or rebuilding them from trusted media. The affected organization will want to take these actions immediately, where “immediately” means “yesterday.” You may hear the phrase, “Stop the bleeding,” because no one in charge of a computer network wants to allow an attacker to retain access to their network. This is an understandable and intuitive response. Continued unauthorized access means continued exposure, increased risk and liability, and an increase in total damages. Unfortunately, this response can severely damage your investigation.

When an intrusion is first discovered, you will have no idea of the full extent of the unauthorized activity. It could be a single system, 100 systems, or the entire network with the Active Directory Enterprise Administrator accounts compromised. Your ability to discover the scope of the intrusion will often depend upon your ability to proceed without alerting the attacker(s) that there is an active investigation. If they discover what you are doing, and what you have discovered so far about their activity, they can take specific evasive action. Imagine that an attacker has installed two different backdoors into a network. You discover one of them, and immediately shut it down. The attacker realizes that one of the backdoors has been shut off, and decides to go quiet for a while, and not take any actions on or against the compromised network. Your ability to then observe the attacker in action, and possibly locate the second backdoor, now has been severely reduced.

Attribute Tracking

In a single case, you may collect thousands of attributes or artifacts of the intrusion. So where do you put them, and how do you check that list when you need information? If you are just starting out as a digital investigator, the easiest way to track attributes is in a spreadsheet. You can keep several worksheets in a spreadsheet that are each dedicated to a type of artifact. Table 4.4 provides an example attribute tracking chart used to keep a list of files associated with an incident.

You could also keep an even more detailed spreadsheet, by adding columns that include more granular detail. For example, you could add data such as file system date-time stamps, hash value, and important file metadata to Table 4.4 to provide a more complete picture of the files of interest in the table. However, as you gradually add more data, your tables will become unwieldy and difficult to view in a spreadsheet. You will also find that you will be recording duplicate information in different tables, and this can easily become a time sink. A more elegant solution would be a relational database that maintains the same data in a series of tables that you can query using SQL statements from a web front-end rather than scrolling and sorting in Excel. However, developing such a database will require that you be familiar with the types of data that you would like to keep recorded, and how you typically choose to view and report on it.
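To give a sense of what such a database might look like, here is a minimal sketch using Python's built-in sqlite3 module. The schema, the table and column names, the host names, the file paths, and the placeholder hash values are all assumptions made for illustration; a real design would follow the attributes you actually track and how you report on them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # use a file on off-network storage in practice
conn.executescript("""
CREATE TABLE hosts (
    host_id   INTEGER PRIMARY KEY,
    host_name TEXT NOT NULL UNIQUE
);
CREATE TABLE files (
    file_id     INTEGER PRIMARY KEY,
    host_id     INTEGER NOT NULL REFERENCES hosts(host_id),
    path        TEXT NOT NULL,
    md5         TEXT,
    created_utc TEXT,
    note        TEXT
);
""")


def add_file(conn, host_name, path, md5=None, created_utc=None, note=None):
    """Record a file of interest, creating the host row on first use."""
    conn.execute("INSERT OR IGNORE INTO hosts (host_name) VALUES (?)", (host_name,))
    (host_id,) = conn.execute(
        "SELECT host_id FROM hosts WHERE host_name = ?", (host_name,)
    ).fetchone()
    conn.execute(
        "INSERT INTO files (host_id, path, md5, created_utc, note) VALUES (?, ?, ?, ?, ?)",
        (host_id, path, md5, created_utc, note),
    )


def hosts_with_hash(conn, md5):
    """Return the names of all hosts on which a file with this hash was found."""
    rows = conn.execute(
        "SELECT DISTINCT h.host_name FROM files f "
        "JOIN hosts h ON h.host_id = f.host_id "
        "WHERE f.md5 = ? ORDER BY h.host_name",
        (md5,),
    )
    return [row[0] for row in rows]


# Placeholder values, not real hashes or real systems.
BACKDOOR_MD5 = "a" * 32
add_file(conn, "app-server-42.widgets.com", r"C:\WINDOWS\system32\svch0st.exe",
         BACKDOOR_MD5, "2009-03-01T02:14:00Z", "backdoor")
add_file(conn, "desktop-17.widgets.com", r"C:\WINDOWS\system32\svch0st.exe",
         BACKDOOR_MD5, "2009-03-02T11:40:00Z", "backdoor")
add_file(conn, "desktop-17.widgets.com", r"C:\temp\tools.rar", "b" * 32)

print(hosts_with_hash(conn, BACKDOOR_MD5))
```

Because each host is stored once and referenced by its identifier, the host name is not retyped for every file, which removes much of the duplication that makes a large spreadsheet hard to maintain.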

There are third-party solutions for case management. Vendors such as i2 provide case management software that allows you to input notes and lists of data that can be viewed through their applications. The i2 Analyst's Notebook application can also aid in the analysis phase of intrusion investigation by automating link and timeline analysis across multiple data sets, helping digital investigators discern relationships that may not have been immediately obvious and visualize those relationships for more human-friendly viewing. This type of tool can be valuable, but it is important to obtain an evaluation copy first to ensure that it will record all the data that you would like to track. A third-party solution will be far easier to maintain, but not as flexible as a custom database. Custom databases can be configured to more easily track attributes such as file metadata values than could a third-party solution. You may find that a combination of third-party tools and custom databases suits your needs most completely.

Attribute Types

An intrusion investigation can potentially take months to complete. During this time, you may be collecting very large amounts of data that are associated with the incident. Examples of common items pertinent to an intrusion investigation are described in this section, but there is a wide variety of attributes that can be recorded. As a general rule, you should record an artifact if it meets any of the following criteria:

▪ It can be used to prove that an event of interest actually occurred.

▪ It can be used to search other computers.

▪ It will help to explain to other people how an event transpired.

Specific examples of attributes include the following.

Host Identity

You will need to identify specific hosts that are either compromised or that are sources of unauthorized activity. These are often identified by:

▪ Internal host name: For example, a compromised server might be known as “app-server-42.widgets.com.” These names are also helpful to remember as internal technical staff will often refer to their systems by these names.

▪ IP address: IP addresses can be used to identify internal and external systems. However, be aware that internal systems may use DHCP, and therefore their IP may change. Record the host name as well.