Chapter 10. Testing Microservices and Microservice-Based Systems

The division of a system into microservices has an impact on testing. Section 10.1 explains the motivation behind software tests. Section 10.2 discusses fundamental approaches to testing in general, not just with regard to microservices. Section 10.3 explains why testing microservices poses particular challenges that are not present in other architectural patterns. For example, in a microservice-based system the entire system consisting of all microservices has to be tested (section 10.4), which can be difficult when there are a large number of microservices. Section 10.5 describes the special case of a legacy application that is being replaced by microservices. In that situation the integration of the microservices and the legacy application has to be tested; testing just the microservices is not sufficient. Another way to safeguard the interfaces between microservices is consumer-driven contract tests (section 10.7). They reduce the effort required to test the system as a whole, although the individual microservices of course still have to be tested as well. In this context the question arises of how individual microservices can be run in isolation without other microservices (section 10.6). Microservices provide technology freedom; nevertheless, there have to be certain standards, and tests can help to enforce the technical standards (section 10.8) that have been defined in the architecture.

10.1 Why Tests?

Even though testing is an essential part of every software development project, questions about the goals of testing are rarely asked. Ultimately, tests are about risk management. They are supposed to minimize the risk that errors will appear in production systems and be noticed by users or do other damage.

With this in mind, there are a number of things to consider:

• Each test has to be evaluated based on the risk it minimizes. In the end, a test is only meaningful when it helps to avoid concrete error scenarios that could otherwise occur in production.

• Tests are not the only way to deal with risk. The impact of errors that occur in production can also be minimized in other ways. An important consideration is how long it takes for a certain error to be corrected in production. Usually, the longer an error persists, the more severe the consequences. How long it takes to put a corrected version of the services into production depends on the Deployment approach. This is one place where testing and Deployment strategies influence each other.

• Another important consideration is the time taken before an error in production is noticed. This depends on the quality of monitoring and logging.

There are many measures that can address errors in production. Just focusing on tests is not enough to ensure that high-quality software is delivered to customers.

Tests Minimize Expenditure

Tests can do more than just minimize risk. They can also help to minimize or avoid expenditure. An error in production can incur significant expense. The error may also affect customer service, something that can result in extra costs. Identifying and correcting errors in production is almost always more difficult and time-consuming than during tests. Access to the production systems is normally restricted, and developers will have moved on to work on other features and will have to reacquaint themselves with the code that is causing errors.

In addition, the right testing approach can help to avoid or reduce expenditure. Automating tests may appear time-consuming at first glance. However, when tests are defined so precisely that their results are reproducible, the remaining steps to complete formalization and automation are small. When the cost of executing the tests is negligible, it becomes possible to test more frequently, which leads to improved quality.

Tests = Documentation

A test defines what a section of code is supposed to do and therefore represents a form of documentation. Unit tests define how the production code is supposed to be used and also how it is intended to behave in exceptional and borderline cases. Acceptance tests reflect the requirements of the customers. The advantage of tests over documentation is that they are executed. This ensures that the tests actually reflect the current behavior of the system and not an outdated state or a state that will only be reached in the future.

Test-Driven Development

Test-driven development is an approach to development that makes use of the fact that tests represent requirements: developers first write the tests and then the implementation. This ensures that the entire codebase is safeguarded by tests. It also means that the tests are not influenced by knowledge of the code, because the code does not exist when the test is written. When tests are implemented after the code has been written, developers might not test for certain potential problems because of what they know about the implementation. This is unlikely when using test-driven development. Tests thus become the foundation of the development process. They drive development: before each change there has to be a test that fails, and the code is then changed until that test passes. This is true not only at the level of individual classes, which are safeguarded by previously written unit tests, but also at the level of requirements, which are ensured by previously written acceptance tests.
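The test-first order can be sketched with Python's standard `unittest` module. The requirement (a 10% discount on orders above 100) and the names `DiscountTest` and `discounted_total` are hypothetical and only illustrate the sequence: the test exists and fails before the implementation is written.

```python
import unittest


# Step 1: the test is written first; it fails until the requirement is implemented.
# Hypothetical requirement: orders above 100 get a 10% discount.
class DiscountTest(unittest.TestCase):
    def test_no_discount_up_to_threshold(self):
        self.assertEqual(discounted_total(100.0), 100.0)

    def test_discount_above_threshold(self):
        self.assertAlmostEqual(discounted_total(200.0), 180.0)


# Step 2: the implementation is written afterwards, just enough to make the tests pass.
def discounted_total(total: float) -> float:
    """Apply a 10% discount to order totals above 100."""
    return total * 0.9 if total > 100.0 else total
```

Running `python -m unittest` on this module before `discounted_total` exists produces errors; once the function is added, both tests pass.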

10.2 How to Test?

There are different types of tests that handle different types of risks. The next sections will look into each type of test and which risk it addresses.

Unit Tests

Unit tests examine the individual units that compose a system, just as their name suggests. They minimize the risk that an individual unit contains errors. Unit tests are intended to check small units, such as individual methods or functions. To achieve this, any dependencies of the unit have to be replaced so that only the unit under test is exercised and not all its dependencies. There are two ways to replace the dependencies:

• Mocks simulate a certain call with a certain result. After the call the test can verify whether the expected calls have actually taken place. A test can, for instance, define a mock that will return a defined customer for a certain customer number. After the test it can evaluate whether the correct customer has actually been fetched by the code. In another test scenario the mock can simulate an error if asked for a customer. This enables unit tests to simulate error situations that might otherwise be hard to reproduce.

• Stubs, on the other hand, simulate an entire microservice, but with limited functionality. For example, the stub may return a constant value. This means that a test can be performed without the actual dependent microservice. For example, a stub can be implemented that returns test customers for certain customer numbers—each with certain properties.
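The difference between the two can be sketched with Python's standard `unittest.mock` module. The function `greeting`, the class `CustomerStub`, and the customer data are hypothetical; the stub here is a simple in-process object, whereas the stubs described above can stand in for an entire microservice.

```python
from unittest.mock import Mock


def greeting(customer_client, customer_id: int) -> str:
    """Unit under test: depends on a client for the customer microservice."""
    customer = customer_client.get_customer(customer_id)
    return f"Hello, {customer['name']}!"


class CustomerStub:
    """Stub: simplified stand-in that returns canned test customers."""

    _CUSTOMERS = {42: {"id": 42, "name": "Test Customer"}}

    def get_customer(self, customer_id: int) -> dict:
        return self._CUSTOMERS[customer_id]  # KeyError for unknown numbers


# Mock: define the result of one specific call ...
mock_client = Mock()
mock_client.get_customer.return_value = {"id": 7, "name": "Alice"}
assert greeting(mock_client, 7) == "Hello, Alice!"
# ... and afterwards verify that the expected call actually took place.
mock_client.get_customer.assert_called_once_with(7)

# A mock can also simulate an error situation that is hard to reproduce otherwise.
failing_client = Mock()
failing_client.get_customer.side_effect = ConnectionError("customer service down")
try:
    greeting(failing_client, 7)
    raise AssertionError("expected the simulated error to propagate")
except ConnectionError:
    pass

# Stub: no call verification, just consistent behavior for known test data.
assert greeting(CustomerStub(), 42) == "Hello, Test Customer!"
```

The mock is configured per test and checked afterwards; the stub is written once and reused by every test that needs plausible customer data.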

Unit tests are the responsibility of developers. Unit testing frameworks that support them exist for all popular programming languages. The tests use knowledge about the internal structure of the units; for example, they replace dependencies by mocks or stubs. This knowledge can also be used to make the tests run through every branch of the code. The tests are white box tests because they exploit knowledge about the structure of the units. Logically, one should really call it a transparent box; however, white box is the commonly used term.

One advantage of unit tests is their speed: even for a complex project the unit tests can be completed within a few minutes. This makes it possible to safeguard literally every code change with unit tests.

Integration Tests

Integration tests check the interplay of the components. This is to minimize the risk that the integration of the components contains errors. They do not use stubs or mocks. The components can be tested as applications via the UI or via special test frameworks. At a minimum, integration tests should evaluate whether the individual parts are able to communicate with each other. They should go further, however, and, for example, test the logic based on business processes.

In situations where they test business processes, the integration tests are similar to acceptance tests that check the requirements of the customers. This area is covered by tools for BDD (behavior-driven development) and ATDD (acceptance test-driven development). These tools make a test-driven approach possible, in which the tests are written first and then the implementation, even for integration and acceptance tests.

Integration tests do not use information about the internal structure of the system under test. They are therefore called black box tests.

UI Tests

UI tests check the application via the user interface. In principle, they only have to test whether the user interface works correctly. There are numerous frameworks and tools for testing the user interface, including tools for web UIs as well as for desktop and mobile applications. The tests are black box tests. Since they test the user interface, the tests tend to be fragile: changes to the user interface can cause problems even if the logic remains unchanged. Also, this type of testing often requires a complete system setup and can be slow to run.

Manual Tests

Finally, there are manual tests. They can either minimize the risk of errors in new features or check certain aspects like security, performance, or features that have previously caused quality problems. They should be explorative: They look at problems in certain areas of the applications. Tests that are aimed at detecting whether a certain error shows up again (regression tests) should never be done manually since automated tests find such errors more easily and in a more cost-efficient and reproducible manner. Manual testing should be limited to explorative tests.

Load Tests

Load tests analyze the behavior of the application under load. Performance tests check the speed of a system, and capacity tests examine how many users or requests the system is able to handle. All of these tests evaluate the efficiency of the application. For this purpose, they use similar tools that measure response times and generate load. Such tests can also monitor the use of resources or check whether errors occur under a certain load. Tests that investigate whether a system is able to cope with high load over an extended period of time are called endurance tests.
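The core mechanics shared by these tests (generating load, measuring response times, counting errors) can be sketched in a few lines of Python. This is a minimal illustration under the assumption that the system is reached through some callable; `run_load_test` is a hypothetical helper, and real projects would use a dedicated load testing tool.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_load_test(call: Callable[[], None], requests: int, concurrency: int) -> dict:
    """Issue `requests` calls (at least one) from `concurrency` threads; report errors and latencies."""

    def timed_call(_: int) -> tuple:
        start = time.perf_counter()
        try:
            call()
            ok = True
        except Exception:
            ok = False  # errors under load are counted, not raised
        return time.perf_counter() - start, ok

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, range(requests)))

    latencies = sorted(duration for duration, _ in results)
    rank = (95 * len(latencies) + 99) // 100  # nearest-rank 95th percentile
    return {
        "requests": requests,
        "errors": sum(1 for _, ok in results if not ok),
        "median_s": statistics.median(latencies),
        "p95_s": latencies[max(rank - 1, 0)],
    }
```

Raising `concurrency` until the error count or the 95th percentile becomes unacceptable approximates a capacity test; running the same loop for hours instead of seconds turns it into a rudimentary endurance test.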

Test Pyramid

The distribution of tests is illustrated by the test pyramid (Figure 10.1): The broad base of the pyramid represents the large number of unit tests. They can be rapidly performed, and most errors can be detected at this level. There are fewer integration tests since they are more difficult to create and take longer to run. There are also fewer potential problems related to the integration of the different parts of the system. The logic itself is also safeguarded by the unit tests. UI tests only have to verify the correctness of the graphical user interface. They are even more difficult to create since automating UI is complicated, and a complete environment is necessary. Manual tests are only required now and then.

Test-driven development usually results in a test pyramid: an integration test is written for each requirement, and a unit test is written for each change to a class. This leads to many integration tests and even more unit tests being created as part of the process.

The test pyramid achieves high quality with low expenditure. The tests are automated as much as possible. Each risk is addressed with a test that is as simple as possible: logic is tested by simple and rapid unit tests. More difficult tests are restricted to areas that cannot be tested with less effort.

Many projects are far from the ideal of the test pyramid. Unfortunately, in reality tests are often better represented by the ice-cream cone shown in Figure 10.2. This leads to the following challenges:

• There are comprehensive manual tests since such tests are very easy to implement, and many testers do not have sufficient experience with test automation. If the testers are not able to write maintainable test code, it is very difficult to automate tests.

• Tests via the user interface are the easiest type of automation because they are very similar to the manual tests. When there are automated tests, they are usually mostly UI tests. Unfortunately, automated UI tests are fragile: Changes to the graphical user interface often lead to problems. Since the tests are testing the entire system, they are slow. If the tests are parallelized, there are often failures resulting from excessive load on the system rather than actual failures of the test.

• There are few integration tests. Such tests require a comprehensive knowledge about the system and about automation techniques, which testers often lack.

• There can actually be more unit tests than presented in the diagram. However, their quality is often poor since developers often lack experience in writing unit tests.

Other common problems are that unnecessarily complex tests are used for certain sources of errors and that UI tests or manual tests are used to test logic, although unit tests would normally be sufficient for this purpose and much faster. When testing, developers should try to avoid these problems and the ice-cream cone. Instead, the goal should be to implement a test pyramid.

The test approach should be adjusted according to the risks of the respective software and should provide tests for the right properties. For example, a project where performance is key should have automated load or capacity tests. Functional tests might not be so important in this scenario.

Continuous Delivery Pipeline

The continuous delivery pipeline (Figure 4.2, section 4.1) illustrates the different test phases. The unit tests from the test pyramid are executed in the commit phase. UI tests can be part of the acceptance tests or could also be run in the commit phase. The capacity tests use the complete system and can therefore be regarded as integration tests from the test pyramid. The explorative tests are the manual tests from the test pyramid.

Automating tests is even more important for microservices than in other software architectures. The main objective of microservice-based architectures is independent and frequent software Deployment. This can only be achieved when the quality of microservices is safeguarded by tests. Without these, Deployment into production will be too risky.

10.3 Mitigate Risks at Deployment

An important benefit of microservices is that they can be deployed quickly because of the small size of the deployable units. Resilience helps to ensure that the failure of an individual microservice doesn’t result in other microservices or the entire system failing. This results in lower risks should an error occur in production despite the microservice passing the tests.

However, there are additional reasons why microservices minimize the risk of a Deployment:

• Errors can be corrected much faster because only one microservice has to be redeployed, which is far quicker and easier than deploying a Deployment monolith.

• Approaches like Blue/Green Deployment or Canary Releasing (section 11.4) further reduce the risk associated with Deployments. Using these techniques, a microservice that contains a bug can be removed from production with little cost or time lost. These approaches are easier to implement with microservices since it requires less effort to provide the required environments for a microservice than for an entire Deployment monolith.

• A service can participate in production without doing actual work. Although it will get the same requests as the version in production, the changes to data that the new service would trigger are not actually performed but are compared to the changes made by the service in production. This can, for example, be achieved by modifications to the database driver or the database itself. The service could also use a copy of the database. The main point is that in this phase the microservice will not change the data in production. In addition, messages that the microservice sends to the outside can be compared with the messages of the version in production instead of being sent on to the recipients. With this approach the microservice runs in production against all the special cases of real-life data, something that even the best test cannot cover completely. Such a procedure can also provide more reliable information regarding performance, although performance is not entirely comparable because data is not actually written. These approaches are very difficult to implement with a Deployment monolith because it is hard to run the entire Deployment monolith as another instance in production. This would require a lot of resources and a very complex configuration because the Deployment monolith could introduce changes to data in numerous locations. Even with microservices this approach is still complex, and comprehensive support is necessary in both the software and the Deployment. Extra code has to be written to call the old and the new version and to compare the data changes and outgoing messages of both versions. However, this approach is at least feasible.

• Finally, the service can be closely examined via monitoring in order to rapidly recognize and solve problems. This shortens the time before a problem is noticed and addressed. The criteria used for monitoring can also act as acceptance criteria for load tests: code that fails in a load test should also trigger an alarm in production monitoring. Therefore, close coordination between monitoring and tests is sensible.
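The comparison step of running a new version alongside production without letting it change any data can be sketched as follows. This is a minimal illustration, not a production mechanism: the `RecordingSink` class stands in for the modified database driver and message sender mentioned above, and all names (`shadow_compare`, the handlers, tables, and topics) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class RecordingSink:
    """Captures intended data changes and outgoing messages instead of applying them."""
    writes: list = field(default_factory=list)
    messages: list = field(default_factory=list)

    def write(self, table: str, row: dict) -> None:
        self.writes.append((table, row))

    def send(self, topic: str, payload: dict) -> None:
        self.messages.append((topic, payload))


Handler = Callable[[dict, RecordingSink], None]


def shadow_compare(request: dict, production: Handler, candidate: Handler) -> list:
    """Run both versions on the same request and report any differences.

    In a real setup only the production version's changes would be applied to
    the database and message broker; the candidate's are recorded and discarded.
    """
    prod_sink, cand_sink = RecordingSink(), RecordingSink()
    production(request, prod_sink)
    candidate(request, cand_sink)
    diffs = []
    if prod_sink.writes != cand_sink.writes:
        diffs.append(("writes", prod_sink.writes, cand_sink.writes))
    if prod_sink.messages != cand_sink.messages:
        diffs.append(("messages", prod_sink.messages, cand_sink.messages))
    return diffs
```

An empty result for every production request is evidence that the new version behaves like the old one on real-life data; any difference points at the exact request that triggered it.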

In the end the idea behind these approaches is to reduce the risk associated with bringing a microservice into production instead of addressing the risk with tests. When the new version of a microservice cannot change any data, its Deployment is practically free of risk. This is difficult to achieve with Deployment monoliths since the Deployment process is much more laborious and time consuming and requires more resources. This means that the Deployment cannot be performed quickly and therefore cannot be easily rolled back when errors occur.

The approach is also interesting because some risks are difficult to eliminate with tests. For example, load and performance tests can be an indicator of the behavior of the application in production. However, these tests cannot be completely reliable since the volume of data will be different in production, user behavior is different, and hardware is often differently sized. It is not feasible to cover all these aspects in one test environment. In addition, there can be errors that only occur with data sets from production—these are hard to simulate with tests. Monitoring and rapid Deployment can be a realistic alternative to tests in a microservices environment. It is important to think about which risk can be reduced with which type of measure—tests or optimizations of the Deployment pipeline.

10.4 Testing the Overall System

In addition to the tests for each of the individual microservices, the system as a whole also has to be tested. This means that there are multiple test pyramids: one for each individual microservice and one for the system in its entirety (see Figure 10.3). For the complete system there will also be integration tests of the microservices, UI tests of the entire application and manual tests. Unit tests at this level are the tests of the microservices since they are the units of the overall system. The tests of each microservice in turn form a complete test pyramid.

The tests for the overall system are responsible for identifying problems that occur in the interplay of the different microservices. Microservices are distributed systems. Calls can require the interplay of multiple microservices to return a result to the user. This is a challenge for testing: distributed systems have many more sources of errors, and tests of the overall system have to address these risks. However, when testing microservices another approach is chosen: with resilience the individual microservices should still work even if there are problems with other microservices. So a failure of parts of the system is expected and should not have severe consequences. Functional tests can be performed with stubs or mocks of the other microservices. In this way microservices can be tested without the need to build up a complex distributed system and examine it for all possible error scenarios.
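How resilience can be verified without a distributed test setup can be sketched with two stubs: one that answers normally and one that simulates an unavailable microservice. The order-confirmation logic and its fallback greeting are hypothetical examples of a resilient reaction, not a prescribed design.

```python
class WorkingCustomerStub:
    """Stub that simulates a healthy customer microservice."""

    def get_customer(self, customer_id: int) -> dict:
        return {"id": customer_id, "name": "Test Customer"}


class FailingCustomerStub:
    """Stub that simulates an unavailable customer microservice."""

    def get_customer(self, customer_id: int) -> dict:
        raise ConnectionError("customer service unavailable")


def order_confirmation(customer_client, customer_id: int, order_id: int) -> str:
    """Hypothetical logic of an order microservice with a fallback.

    If the customer microservice fails, the order is still confirmed with a
    generic greeting instead of failing the whole request.
    """
    try:
        name = customer_client.get_customer(customer_id)["name"]
    except ConnectionError:
        name = "customer"  # degraded but still functional
    return f"Dear {name}, order {order_id} is confirmed."
```

The same test runs once with each stub; the failure case needs no network fault injection, just a stub that raises.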

Shared Integration Tests

Before being deployed into production, each microservice should have its integration with other microservices tested. This requires changes to the continuous delivery pipeline as it was described in section 5.1: At the end of the Deployment pipeline each microservice should be tested together with the other microservices. Each microservice should run through this step on its own. When new versions of multiple microservices are tested together at this step and a failure occurs it will not be clear which microservice has caused the failure. There may be situations where new versions of multiple microservices can be tested together and the source of failures will always be clear. However, in practice such optimizations are rarely worth the effort.

This reasoning leads to the process illustrated in Figure 10.4: The continuous delivery pipelines of the microservices end in a common integration test into which each microservice has to enter separately. When a microservice is in the integration test phase, the other microservices have to wait until the integration test is completed. To ensure that only one microservice at a time runs through the integration tests, the tests can be performed in a separate environment. Only one new version of a microservice may be delivered into this environment at a given point in time, and the environment enforces the serialized processing of the integration tests of the microservices.
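The serialization enforced by the shared environment can be sketched with a lock: each pipeline submits its new version and blocks until the environment is free. `SerializedIntegrationStage` is a hypothetical class for illustration; in practice this gate would be implemented by the CI server or by reserving the environment, not by threads in one process.

```python
import threading
from typing import Callable, List


class SerializedIntegrationStage:
    """Shared integration-test stage that admits one new microservice version at a time.

    The lock stands in for the separate integration environment: while one
    pipeline holds it, the pipelines of all other microservices have to wait.
    """

    def __init__(self, run_tests: Callable[[str, str], bool]) -> None:
        self._lock = threading.Lock()
        self._run_tests = run_tests
        self.log: List[str] = []

    def submit(self, service: str, version: str) -> bool:
        """Block until the environment is free, then run the shared integration tests."""
        with self._lock:
            passed = self._run_tests(service, version)
            self.log.append(f"{service}:{version}:{'ok' if passed else 'failed'}")
            return passed
```

Because only one version is in the environment at any time, a failed run can always be attributed to exactly one microservice.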

Such a synchronization point slows down the Deployment and therefore the entire process. If the integration test lasts for an hour, for example, it will only be possible to put eight microservices through the integration test and into production per eight-hour work day. If there are eight teams in the project, each team will only be able to deploy a microservice once a day. This is not sufficient to achieve rapid error correction in production. Besides, this weakens an essential advantage of microservices: it should be possible to deploy each microservice independently. Even though this is in principle still possible, the Deployment takes too long. Also, the microservices now depend on each other because of the integration tests, not at the code level but in the Deployment pipelines. In addition, the process is out of balance: even if the rest of the continuous delivery pipeline, without the final integration test, takes only one hour, it is still not possible to get more than one release into production per day.
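The arithmetic behind this bottleneck can be written out as a small function. It assumes the serialized stage is busy all day and that slots are shared evenly among teams; the function name is hypothetical.

```python
def deployments_per_team_per_day(test_hours: float, workday_hours: float, teams: int) -> float:
    """Upper bound on production deployments per team when a shared integration
    test admits only one microservice version at a time (stage never idle)."""
    slots_per_day = workday_hours // test_hours  # one version per serialized slot
    return slots_per_day / teams


# The scenario from the text: one-hour test, eight-hour work day, eight teams.
print(deployments_per_team_per_day(test_hours=1, workday_hours=8, teams=8))  # 1.0
```

Halving the integration test to 30 minutes only doubles this to 2.0, which is why the focus has to shift to reducing the shared integration tests rather than merely speeding them up.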

Avoiding Integration Tests of the Overall System

This problem can be solved with the test pyramid. It moves the focus from integration tests of the overall system to integration tests of the individual microservices and unit tests. When there are fewer integration tests of the overall system, they will not take as much time to run. In addition, less synchronization is necessary, and the Deployment into production is faster. The integration tests are only meant to test the interplay between microservices. It is sufficient to check that each microservice can reach all the microservices it depends on. All other risks can be taken care of before this last test. With consumer-driven contract tests (section 10.7) it is even possible to exclude errors in the communication between the microservices without having to test the microservices together. All these measures help to reduce the number of integration tests and therefore their total duration. In the end there is no reduction in overall testing; the testing is just moved to other phases, namely to the tests of the individual microservices and to the unit tests.
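Such a minimal system-level check, verifying only that each microservice can reach its dependencies, can be sketched with Python's standard `urllib.request`. The idea that each dependency exposes a URL answering with a success status (for example a health endpoint) is an assumption, and the function names are hypothetical. The probe is injectable so the check itself can be tested without a network.

```python
import http.client
import urllib.request
from typing import Callable, Iterable, List


def http_probe(url: str, timeout: float = 2.0) -> bool:
    """Return True if the URL answers with a 2xx or 3xx HTTP status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (OSError, ValueError, http.client.HTTPException):
        # URLError, HTTPError and timeouts are OSError subclasses;
        # ValueError covers malformed URLs.
        return False


def unreachable_dependencies(
    dependencies: Iterable[str], probe: Callable[[str], bool] = http_probe
) -> List[str]:
    """Minimal system-level check: which dependencies can this service not reach?"""
    return [url for url in dependencies if not probe(url)]
```

Business logic is deliberately absent here; that is covered by the tests of the individual microservices and, for the interfaces, by consumer-driven contract tests.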

The tests for the overall system should be developed by all teams working together. They form part of the macro architecture because they concern the system as a whole and cannot therefore be the responsibility of an individual team (see section 12.3).

The complete system can also be tested manually. However, it is not feasible for each new version of a microservice to only go into production after a manual test with the other microservices. The delays will just be too long. Manual tests of the system can, for example, address features that are not yet activated in production. Alternatively, certain aspects like security can be tested in this manner if problems have occurred in these areas previously.

10.5 Testing Legacy Applications and Microservices

Microservices are often used to replace legacy applications. The legacy applications are usually Deployment monoliths. Therefore, the continuous delivery pipeline of the legacy application tests many functionalities that have to be split into microservices. Because of the many functionalities, the test steps of the continuous delivery pipeline for Deployment monoliths take a very long time. Accordingly, the Deployment into production is very complex and takes a long time. Under such conditions it is unrealistic for each small code change to the legacy application to go into production. Often there are Deployments only at the end of a 14-day sprint, or even only one release per quarter. Nightly tests inspect the current state of the system. Tests can be moved from the continuous delivery pipeline into the nightly tests. In that case the continuous delivery pipeline will be faster, but certain errors are only recognized during the nightly tests. Then the question arises of which of the changes of the past day is responsible for the error.

Relocating Tests of the Legacy Application

When migrating from a legacy application to microservices, tests are especially important. If just the tests of the legacy application are used, they will test a number of functionalities that have since been moved to microservices. In that case these tests have to be run at each release of a microservice, which takes much too long. The tests have to be relocated. They can turn into integration tests for the microservices (see Figure 10.5). However, the integration tests of the microservices should run rapidly. In this phase it is not necessary to test functionalities that reside in a single microservice. Tests of such functionalities have to be turned into integration tests of the individual microservice or even into unit tests. In that case they are much faster. Additionally, they run as tests for a single microservice so that they do not slow down the shared tests of the microservices.

Not only the legacy application has to be migrated, but also the tests. Otherwise fast Deployments will not be possible in spite of the migration of the legacy application.

The tests for the functionalities that have been transferred to microservices can be removed from the tests of the legacy application. Step by step this will speed up the Deployment of the legacy application. Consequently, changes to the legacy application will also get increasingly easier.

Integration Test: Legacy Application and Microservices

The legacy application also has to be tested together with the microservices. The microservices have to be tested together with the version of the legacy application that is in production. This ensures that the microservices will also work in production together with the legacy application. For this purpose, the version of the legacy application running in production can be integrated into the integration tests of the microservices. It is the responsibility of each microservice to pass the tests without any errors with this version (see Figure 10.6).

When the Deployment cycles of the legacy application last days or weeks, a new version of the legacy application will be in development in parallel. The microservices also have to be tested with this version. This ensures that there will not suddenly be errors occurring upon the release of the new legacy application. The version of the legacy application that is currently in development runs an integration test with the current microservices as part of its own Deployment pipeline (Figure 10.7). For this the versions of the microservices that are in production have to be used.
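The rule behind both kinds of pipelines (the component being changed contributes its new version, every other component contributes the version currently in production) can be sketched as a small function. The component names, the version strings, and the key `"legacy"` are hypothetical.

```python
def integration_test_versions(pipeline: str, candidate: str, production: dict) -> dict:
    """Return the versions to deploy into the integration environment.

    `production` maps every component (the microservices and "legacy") to the
    version currently in production. The component whose pipeline is running
    contributes its new candidate version; all others use their production version.
    """
    if pipeline not in production:
        raise ValueError(f"unknown component: {pipeline}")
    versions = dict(production)  # copy, so the production map stays unchanged
    versions[pipeline] = candidate
    return versions


production = {"legacy": "5.2", "orders": "1.4", "billing": "3.0"}
# A microservice pipeline tests its new version against the legacy version in production.
print(integration_test_versions("orders", "1.5", production))
# The legacy pipeline tests the version in development against the microservices in production.
print(integration_test_versions("legacy", "5.3-dev", production))
```

The function also makes the weakness described next visible: a microservice release changes `production` and can therefore break the next run of the legacy pipeline, even though the microservice's own pipeline succeeded.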

The versions of the microservices change much more frequently than the version of the legacy application. A new version of a microservice can break the continuous delivery pipeline of the legacy application. The team of the legacy application cannot solve these problems since it does not know the code of the microservices. This version of the microservice is possibly already in production though. In that case a new version of the microservice has to be delivered to eliminate the error—although the continuous delivery pipeline of the microservice ran through successfully.

An alternative would be to also send the microservices through an integration test with the version of the legacy application that is currently in development. However, this prolongs the overarching integration test of the microservices and therefore renders the development of the microservices more complex.

The problem can be addressed by consumer-driven contract tests (section 10.7). The expectations of the legacy application regarding the microservices, and of the microservices regarding the legacy application, can be defined by consumer-driven contract tests so that the integration tests can be reduced to a minimum.

In addition, the legacy application can be tested together with a stub of the microservices. These tests are not integration tests since they only test the legacy application. This makes it possible to reduce the number of overarching integration tests. This concept is illustrated in section 10.6 using tests of microservices as an example. However, this means that the tests of the legacy application have to be adjusted.

10.6 Testing Individual Microservices

Tests of the individual microservices are the duty of the team that is responsible for the respective microservice. The team has to implement the different tests such as unit tests, load tests, and acceptance tests as part of their own continuous delivery pipeline—as would also be the case for systems that are not microservices.

However, for some functionalities microservices require access to other microservices. This poses a challenge for the tests: It is not sensible to provide a complete environment with all microservices for each test of each microservice. On the one hand, this would use up too many resources. On the other hand, it is difficult to supply all these environments with the up-to-date software. Technically, lightweight virtualization approaches like Docker can at least reduce the expenditure in terms of resources. However, for 50 or 100 microservices this approach will not be sufficient anymore.

Reference Environment

A reference environment in which the microservices are available in their current version is one possible solution. The tests of the different microservices can use the microservices from this environment. However, errors can occur when multiple teams test different microservices in parallel against the microservices in the reference environment. The tests can influence each other and thereby create errors. Besides, the reference environment has to be available at all times. When a part of the reference environment breaks down due to a test, in extreme cases testing might be impossible for all teams. The microservices also have to be kept available in the reference environment in their current version, which generates additional expenditure. Therefore, a reference environment is not a good solution for the isolated testing of microservices.

Stubs

Another possibility is to simulate the microservices being used. As section 10.2 showed, there are two options for simulating parts of a system in tests: stubs and mocks. Stubs are the better choice for replacing microservices because they can support different test scenarios, and a single stub implementation can support the development of all dependent microservices.

If stubs are used, each team should deliver the stubs for its own microservices. This helps to ensure that the microservices and their stubs really behave largely identically. When consumer-driven contract tests also validate the stubs (see section 10.7), it is ensured that the stubs simulate the microservices correctly.

The stubs should be implemented with a uniform technology. All teams that use a microservice also have to use its stub for testing, and a uniform technology makes handling the stubs easier. Otherwise, a team that uses several microservices has to master a plethora of technologies just for its tests.

Stubs could be implemented with the same technology as the associated microservices. However, stubs should consume fewer resources than the microservices, so a simpler technology stack is preferable. The example in section 13.13 implements the stubs with the same technology as the associated microservices. However, these stubs deliver only constant values and run in the same process as the microservices that use them, so they consume fewer resources.
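To make the idea of a lightweight, in-process stub more concrete, here is a minimal sketch in Python. It is not the example from section 13.13; the customer microservice, the path /customer/42, and the canned data are hypothetical. The stub runs in a background thread of the test process, answers with constant JSON values, and listens on a free port chosen by the operating system, so parallel test runs do not collide.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import urlopen

# Hypothetical canned data: the stub always answers with these constant values.
CANNED_RESPONSES = {
    "/customer/42": {"id": 42, "name": "Jane Doe"},
}


class CustomerStubHandler(BaseHTTPRequestHandler):
    """Stands in for a (hypothetical) customer microservice during tests."""

    def do_GET(self):
        body = CANNED_RESPONSES.get(self.path)
        status = 200 if body is not None else 404
        payload = json.dumps(body if body is not None else {"error": "not found"}).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):
        pass  # suppress per-request logging in test output


def start_stub() -> HTTPServer:
    """Start the stub on a free port in a background thread of the current process."""
    server = HTTPServer(("127.0.0.1", 0), CustomerStubHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def stop_stub(server: HTTPServer) -> None:
    server.shutdown()       # stop the serve_forever loop
    server.server_close()   # release the listening socket


if __name__ == "__main__":
    stub = start_stub()
    port = stub.server_address[1]
    with urlopen(f"http://127.0.0.1:{port}/customer/42") as response:
        print(json.loads(response.read()))
    stop_stub(stub)
```

A test of the dependent microservice would point its client at the stub's address instead of the real customer microservice. Because the stub only returns constants, it needs hardly any resources.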

There are technologies that specialize in implementing stubs. Tools for consumer-driven contract tests can often also generate stubs (see section 10.7).

• mountebank1 is written in JavaScript with Node.js. It can provide stubs for TCP, HTTP, HTTPS, and SMTP, and new stubs can be created at run time. The stubs are defined in a JSON file, which specifies which responses the stub returns under which conditions. The behavior can also be extended with JavaScript. In addition, mountebank can serve as a proxy. In that case it forwards requests to a service; alternatively, it forwards only the first request and records the response, and mountebank then answers all subsequent requests with the recorded response. Besides stubs, mountebank also supports mocks.

• WireMock2 is written in Java and is available under the Apache 2 license. This framework makes it very easy to return certain data for certain requests. The behavior is determined by Java code. WireMock supports HTTP and HTTPS. The stub can run in a separate process, in a servlet container or directly in a JUnit test.

• Moco3 is likewise written in Java and is available under the MIT license. The behavior of the stubs can be expressed with Java code or with a JSON file. It supports HTTP, HTTPS, and simple socket protocols. The stubs can be started from within a Java program or as a standalone server.

3. https://github.com/dreamhead/moco

• stubby4j4 is written in Java and is available under the MIT license. The behavior of the stubs is defined in a YAML or JSON file. In addition to HTTP, it supports HTTPS. It is also possible to start an interaction with a server or to program the behavior of the stubs in Java. Parts of the request data can be copied into the response.

4. https://github.com/azagniotov/stubby4j
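The record-and-replay mode described for mountebank can be illustrated with a few lines of Python. This is a conceptual sketch only, not mountebank's implementation or API; the class name RecordingProxyStub and the request and response tuples are assumptions made for illustration. The first request for a given method and path is forwarded to the real service, and later identical requests are answered from the recording.

```python
from typing import Callable, Dict, Tuple

Request = Tuple[str, str]   # (HTTP method, path)
Response = Tuple[int, str]  # (status code, body)


class RecordingProxyStub:
    """Record-and-replay stub: the first request for each (method, path) is
    forwarded to the real service and its response recorded; every later
    identical request is answered from the recording."""

    def __init__(self, forward: Callable[[Request], Response]):
        self._forward = forward                      # calls the real service
        self._recorded: Dict[Request, Response] = {}

    def handle(self, request: Request) -> Response:
        if request not in self._recorded:
            self._recorded[request] = self._forward(request)
        return self._recorded[request]


if __name__ == "__main__":
    calls = []

    def real_service(request: Request) -> Response:
        calls.append(request)                        # count calls to the real service
        return 200, f"response for {request[1]}"

    stub = RecordingProxyStub(real_service)
    stub.handle(("GET", "/customer/42"))
    stub.handle(("GET", "/customer/42"))             # answered from the recording
    print(len(calls))                                # prints 1
```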

10.7 Consumer-Driven Contract Tests

Each interface of a component is ultimately a contract: the caller expects that certain side effects are triggered or that values are returned when it uses the interface. The contract is usually not formally defined. When a microservice violates the expectations, this manifests itself as an error that is noticed either in production or in integration tests. When the contract can be made explicit and tested independently, the integration tests no longer have to test the contract, and this does not increase the risk of errors in production. In addition, modifying the microservices becomes easier because it is easier to anticipate which changes will cause problems for the other microservices that use them.

Often, changes to system components are not made because it is unclear which other components use that specific component and how they use it. There is a risk of errors in the interplay with other microservices, and there are fears that such errors will be noticed too late. When it is clear how a microservice is used, changes are much easier to make and to safeguard.

Components of the Contract

These aspects belong to the contract5 of a microservice:

5. http://martinfowler.com/articles/consumerDrivenContracts.html

• The data formats define the format in which the other microservices expect information and in which information is passed to a microservice.

• The interface determines which operations are available.

• Procedures or protocols define which operations can be performed in which sequence with which results.

• In addition, there is meta information associated with the calls, which can include, for example, user authentication.

• Finally, there can be nonfunctional requirements such as latency or throughput.
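One way to make these five aspects tangible is to write them down as data that a test can check. The following Python sketch uses a hypothetical Contract type and hypothetical call records (operation name, headers, response fields, measured latency); none of these names come from a real tool. It checks recorded calls against the data formats, the available operations, the order required by the protocol, the required metadata, and a latency limit. The protocol check is deliberately simplified and only verifies the relative order of operations.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Contract:
    """Hypothetical, simplified representation of the five contract aspects."""
    data_formats: Dict[str, Set[str]]   # operation -> fields required in its response
    operations: Set[str]                # operations the interface offers
    protocol: List[str]                 # required order of operations
    required_metadata: Set[str] = field(default_factory=set)  # e.g. an auth header
    max_latency_ms: float = float("inf")                      # nonfunctional limit


def violations(contract: Contract, calls: List[dict]) -> List[str]:
    """Return all contract violations found in a recorded sequence of calls."""
    problems = []
    for call in calls:
        op = call["operation"]
        if op not in contract.operations:
            problems.append(f"unknown operation {op}")
        missing_meta = contract.required_metadata - set(call.get("headers", {}))
        if missing_meta:
            problems.append(f"{op}: missing metadata {sorted(missing_meta)}")
        missing_fields = contract.data_formats.get(op, set()) - set(call.get("response", {}))
        if missing_fields:
            problems.append(f"{op}: missing fields {sorted(missing_fields)}")
        if call.get("latency_ms", 0) > contract.max_latency_ms:
            problems.append(f"{op}: too slow")
    # Protocol: operations listed in the protocol must occur in that relative order.
    ordered = [c["operation"] for c in calls if c["operation"] in contract.protocol]
    if ordered != sorted(ordered, key=contract.protocol.index):
        problems.append("operations called out of order")
    return problems
```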

Contracts

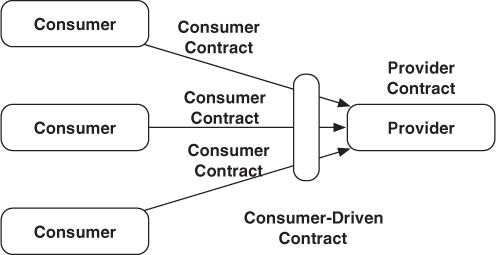

There are different contracts between the consumers and the provider of a service:

• The Provider Contract comprises everything the service provider provides. There is one such contract per service provider, and it completely defines the entire service. It can change, for instance, with the version of the service (see section 8.6).

• The Consumer Contract comprises only those functionalities of the service that a particular service consumer actually uses. There are several such contracts per service, at least one for each consumer. It can change when the service consumer is modified.

• The Consumer-Driven Contract (CDC) comprises all Consumer Contracts. Therefore, it contains all functionalities that any service consumer uses. There is only one such contract per service. Since it depends on the Consumer Contracts, it can change when the service consumers add new calls to the service provider or when there are new requirements for the calls.

Figure 10.8 summarizes the differences.

The Consumer-Driven Contract makes clear which parts of the Provider Contract are actually used. This also shows where the microservice can still change its interface and which parts of the microservice are not used at all.
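The relationship between the three kinds of contracts can be expressed with simple set operations. In this Python sketch, which uses a hypothetical customer service and two hypothetical consumers (order and billing), each contract maps an operation to the response fields involved. The Consumer-Driven Contract is the union of all Consumer Contracts, and subtracting it from the Provider Contract shows which operations and fields no consumer uses.

```python
from typing import Dict, Set, Tuple

Contract = Dict[str, Set[str]]  # operation -> response fields involved

# Hypothetical Provider Contract: everything the customer service offers.
PROVIDER_CONTRACT: Contract = {
    "GET /customer/{id}": {"id", "name", "address", "rating"},
    "POST /customer": {"id"},
    "DELETE /customer/{id}": set(),
}

# Hypothetical Consumer Contracts: only what each consumer actually uses.
CONSUMER_CONTRACTS: Dict[str, Contract] = {
    "order": {"GET /customer/{id}": {"id", "address"}},
    "billing": {"GET /customer/{id}": {"id", "name"}, "POST /customer": {"id"}},
}


def consumer_driven_contract(consumers: Dict[str, Contract]) -> Contract:
    """Union of all Consumer Contracts: everything that any consumer uses."""
    cdc: Contract = {}
    for contract in consumers.values():
        for operation, fields in contract.items():
            cdc.setdefault(operation, set()).update(fields)  # copies, never aliases
    return cdc


def unused_parts(provider: Contract, cdc: Contract) -> Tuple[Set[str], Contract]:
    """Operations and fields of the Provider Contract that no consumer relies on."""
    unused_operations = set(provider) - set(cdc)
    unused_fields = {
        op: fields - cdc[op]
        for op, fields in provider.items()
        if op in cdc and fields - cdc[op]
    }
    return unused_operations, unused_fields
```

In this example, DELETE /customer/{id} and the rating field are not used by any consumer, so the provider could change or remove them with little risk.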

Implementation

Ideally, a Consumer-Driven Contract is turned into consumer-driven contract tests that the service provider can run. The service consumers must be able to change these tests. The tests can be stored in version control together with the microservice of the service provider; in that case, the service consumers need access to the version control of the service provider. Alternatively, the tests can be stored in the version control of the service consumers. Then the service provider has to fetch the tests from there and run them against each version of its software. However, the tests then cannot be versioned together with the tested software, since tests and tested software live in two separate projects in the version control system.

Taken together, all the tests represent the Consumer-Driven Contract; the tests written by each team correspond to that team's Consumer Contract. The consumer-driven contract tests can be run as part of the tests of the microservice. If they pass, all service consumers should be able to work together with the microservice successfully. This prevents errors from being noticed only in the integration tests. In addition, modifying the microservices becomes easier because the requirements for the interfaces are known and can be tested without extra effort. Therefore, changes that affect the interface carry much less risk, since problems are noticed before the integration tests and before production.
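The following Python sketch shows the basic idea of running consumer-owned tests in the provider's pipeline. For brevity, it assumes that the provider is called in-process; in practice the tests would call the microservice through its real interface, for example at a defined port. The service function, the two consumer tests, and run_contract_tests are hypothetical.

```python
from typing import Callable, Dict, List

Service = Callable[[str], dict]


def customer_service(path: str) -> dict:
    """Hypothetical provider implementation under test."""
    customer_id = int(path.rsplit("/", 1)[-1])
    return {"id": customer_id, "name": "Jane Doe", "address": "Main St 1"}


# Each consumer team owns its test; together they form the Consumer-Driven Contract.
def order_team_contract(service: Service) -> None:
    response = service("/customer/42")
    assert response["id"] == 42
    assert isinstance(response["address"], str)


def billing_team_contract(service: Service) -> None:
    response = service("/customer/7")
    assert isinstance(response["name"], str)


CONSUMER_TESTS: Dict[str, Callable[[Service], None]] = {
    "order": order_team_contract,
    "billing": billing_team_contract,
}


def run_contract_tests(service: Service, tests: Dict[str, Callable[[Service], None]]) -> List[str]:
    """Run every consumer's test against the provider; return the failing consumers."""
    failures = []
    for consumer, test in tests.items():
        try:
            test(service)
        except (AssertionError, KeyError):  # wrong value or missing field
            failures.append(consumer)
    return failures
```

If the provider renamed the name field, only the billing team's test would fail, which tells the provider exactly which consumer is affected before any integration test runs.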

Tools

Writing consumer-driven contract tests requires choosing a technology. It should be uniform across all projects, because a microservice can use several other microservices, and its team then has to write tests for each of them. This is easier with a uniform technology; otherwise, the teams have to know numerous different technologies. The technology used for the tests can differ from the one used for the implementation.

• Any test framework can be used to implement the consumer-driven contract tests. Additional tools can be defined for load tests, since in addition to the functional requirements there can also be requirements regarding load behavior. However, it has to be clearly defined how the microservice is provided for the test. For example, it can be available at a certain port on the test machine. In this way, the test accesses the microservice through the same interface that other microservices use.

• In the example application (section 13.13), simple JUnit tests are used for testing the microservice and for verifying whether the required functionalities are supported. When incompatible changes to data formats are performed or the interface is modified in an incompatible manner, the tests fail.

• There are tools designed specifically for implementing consumer-driven contract tests. One example is Pacto.6 It is written in Ruby and is available under the MIT license. Pacto supports REST/HTTP and supplements such interfaces with a contract. Pacto can be integrated into a test setup, where it compares the headers with expected values and the JSON data structures in the body with JSON schemas. This information represents the contract. The contract can also be generated from a recorded interaction between a client and a server. Based on the contract, Pacto can validate the calls and responses of a system. In addition, Pacto can use this information to create simple stubs. Moreover, Pacto can be used in conjunction with RSpec to write tests in Ruby. Systems written in languages other than Ruby can also be tested this way. Without RSpec, Pacto can run as a server, so it can also be used outside of Ruby systems.

6. http://thoughtworks.github.io/pacto/

• Pact7 is likewise written in Ruby and is available under the MIT license. The service consumer can use Pact to write a stub for the service and to record the interaction with the stub. This results in a Pact file that represents the contract. The file can also be used to test whether the actual service correctly implements the contract. Pact is especially useful for Ruby; however, pact-jvm8 supports a similar approach for JVM languages such as Scala, Java, Groovy, or Clojure.

7. https://github.com/realestate-com-au/pact

8. https://github.com/DiUS/pact-jvm
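The consumer-side recording and provider-side verification that Pact builds on can be sketched in a few lines of Python. This is not Pact's API or its file format; RecordingMockProvider, verify_provider, and the JSON layout are assumptions chosen to show the flow: the consumer's test defines expected interactions against a mock, the mock exports them as a contract, and the provider replays the recorded requests and checks that its responses contain the expected values.

```python
import json
from typing import Callable, Dict, List


class RecordingMockProvider:
    """Consumer side: registers expected interactions, answers the consumer's
    requests with them, and exports them as a contract document."""

    def __init__(self):
        self.interactions: List[Dict] = []

    def given(self, request: Dict, response: Dict) -> None:
        self.interactions.append({"request": request, "response": response})

    def call(self, request: Dict) -> Dict:
        for interaction in self.interactions:
            if interaction["request"] == request:
                return interaction["response"]
        raise LookupError(f"no expected interaction for {request}")

    def contract_json(self, consumer: str, provider: str) -> str:
        return json.dumps({"consumer": consumer, "provider": provider,
                           "interactions": self.interactions}, indent=2)


def verify_provider(contract_json: str, provider: Callable[[Dict], Dict]) -> List[str]:
    """Provider side: replay every recorded request and compare the responses."""
    contract = json.loads(contract_json)
    failures = []
    for interaction in contract["interactions"]:
        actual = provider(interaction["request"])
        expected = interaction["response"]
        # Only the values the consumer expects must match; extra fields are allowed.
        if any(actual.get(key) != value for key, value in expected.items()):
            failures.append(interaction["request"]["path"])
    return failures
```

Allowing extra fields in the provider's response reflects the idea that the contract covers only what consumers actually use.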

10.8 Testing Technical Standards

Microservices have to fulfill certain technical requirements. For example, microservices should register themselves in Service Discovery and keep functioning even if other microservices break down. Tests can verify these properties. This entails a number of advantages:

• The guidelines are unambiguously defined by the test. Therefore, there is no need to discuss how exactly the guidelines are meant.

• The guidelines can be tested automatically, so it is clear at any time whether a microservice complies with them.

• New teams can test whether their new components comply with the rules.

• When microservices do not employ the usual technology stack, it can still be ensured that they behave correctly from a technical point of view.

Among the possible tests are:

• The microservices have to register in the Service Discovery (section 7.11). The test can verify whether the component registers with the service registry on startup.

• In addition, the shared mechanisms for configuration and coordination have to be used (section 7.10). The test can check whether certain values are read from the central configuration; a dedicated test interface can be implemented for this purpose.

• A shared security infrastructure can be checked by calling the microservice with a specific token (section 7.14).

• For documentation and metadata (section 7.15), a test can check whether the documentation is accessible via the defined path.

• For monitoring (section 11.3) and logging (section 11.2), a test can examine whether the microservice supplies data to the monitoring interfaces after starting and delivers metric values and log entries, respectively.

• For Deployment (section 11.4), it is sufficient to deploy the microservice on a server and start it. If the defined standard is used for this, this aspect is implemented correctly as well.

• To test control (section 11.6), the microservice can simply be restarted.

• To test resilience (section 9.5), the simplest scenario checks whether the microservice at least boots in the absence of the microservices it depends on and reports errors in monitoring. The functional tests ensure that the microservice works correctly when the other microservices are available. However, normal tests do not cover the scenario in which the microservice cannot reach any other service.

In the simplest case, the technical test just deploys and starts the microservice, which already tests Deployment and control. The dependent microservices do not have to be present for this; because of resilience, the microservice should be able to start without them. Next, the test can examine logging and monitoring, which should also work in this situation and should report the errors. Finally, the integration with the shared technical services such as Service Discovery, configuration and coordination, or security can be checked.

Such a test is not hard to write and can make many discussions about the precise interpretation of the guidelines superfluous, which makes it very useful. In addition, it tests scenarios that automated tests usually do not cover, for instance, the breakdown of dependent systems.

This test does not necessarily provide complete certainty that the microservice complies with all rules. However, it can at least examine whether the fundamental mechanisms work.
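A technical test of this kind might be structured roughly as in the following Python sketch. In a real project the checks would talk to the deployed microservice over the network; here, in-memory stand-ins keep the example runnable. FakeServiceRegistry, ExampleService, the /docs path, and the list of checks are all hypothetical and would have to be replaced by the project's own standards.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class FakeServiceRegistry:
    """Stand-in for Service Discovery: records which services registered."""
    registered: Set[str] = field(default_factory=set)

    def register(self, name: str) -> None:
        self.registered.add(name)


class ExampleService:
    """Hypothetical microservice that follows the technical standards."""

    def __init__(self, name: str, registry: FakeServiceRegistry, dependencies: Dict[str, bool]):
        self.name = name
        self.registry = registry
        self.dependencies = dependencies  # dependency name -> reachable?
        self.running = False
        self.errors: List[str] = []       # what monitoring would show

    def start(self) -> None:
        # Resilience: start even if dependencies are missing, but report them.
        for dep, reachable in self.dependencies.items():
            if not reachable:
                self.errors.append(f"{dep} unreachable")
        self.registry.register(self.name)
        self.running = True

    def stop(self) -> None:
        self.running = False

    def documentation(self, path: str) -> str:
        return "API docs" if path == "/docs" else ""


def check_technical_standards(service, registry) -> List[str]:
    """Return the names of all violated standards (empty list means compliant)."""
    failed = []
    service.start()                   # Deployment and start-up
    if not service.running:
        failed.append("startup")
    if service.name not in registry.registered:
        failed.append("service discovery")
    unreachable = [d for d, ok in service.dependencies.items() if not ok]
    if unreachable and not service.errors:
        failed.append("monitoring of missing dependencies")
    if not service.documentation("/docs"):
        failed.append("documentation path")
    service.stop()                    # control: restart the microservice
    service.start()
    if not service.running:
        failed.append("restart")
    return failed
```

Running the check with the dependencies deliberately unreachable exercises exactly the scenario that normal functional tests leave out.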

Technical standards can easily be tested with scripts. The scripts should install the microservice on a virtual machine in the defined manner and start it. Afterwards, its behavior, for instance with regard to logging and monitoring, can be tested. Since technical standards are specific to each project, a uniform approach is hardly possible. In some cases, a tool like Serverspec9 can be useful. It examines the state of a server, so it can easily determine whether a certain port is in use or whether a certain service is running.
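As an illustration of the kind of check such a script or Serverspec performs, the following Python sketch tests whether the ports a microservice is expected to open actually accept TCP connections. It does not use Serverspec; the port numbers in EXPECTED_PORTS are hypothetical project conventions.

```python
import socket
from typing import Dict, List


def port_is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


# Hypothetical project standard: every microservice exposes these ports.
EXPECTED_PORTS: Dict[str, int] = {"http": 8080, "metrics": 9090}


def missing_ports(host: str, expected: Dict[str, int]) -> List[str]:
    """Return the names of all expected ports that are not listening."""
    return [name for name, port in expected.items() if not port_is_listening(host, port)]


if __name__ == "__main__":
    # Run after the microservice has been installed and started in the defined way.
    print(missing_ports("127.0.0.1", EXPECTED_PORTS))
```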

10.9 Conclusion

The reasons for testing include, on the one hand, reducing the risk that problems are noticed only in production and, on the other hand, the fact that tests serve as an exact specification of the system (section 10.1).

Section 10.2 used the concept of the test pyramid to show how tests should be structured: the focus should be on fast, easily automated unit tests, which address the risk of errors in the logic. Integration tests and UI tests then only have to ensure that the microservices integrate correctly with each other and with the UI.

As section 10.3 showed, microservices offer an additional way to deal with the risk of errors in production: microservice Deployments are faster, they affect only a small part of the system, and microservices can even run blindly in production. Therefore, the risk of Deployment decreases. Thus, instead of relying on comprehensive tests, it can be sensible to optimize Deployment in production to the point where it is, for all practical purposes, free of risk. In addition, the section discussed that there are two types of test pyramids for microservice-based systems: one per microservice and one for the overall system.

Testing the overall system entails the problem that each change to a microservice requires a run of this test. Therefore, this test can turn into a bottleneck and should be very fast. Thus, when testing microservices, one objective is to reduce the number of integration tests across all microservices (section 10.4).

When a legacy application is replaced, not only its functionality has to be transferred into microservices; the tests for that functionality also have to be moved into the tests of the microservices (section 10.5). In addition, each modification to a microservice has to be tested in integration with the version of the legacy application used in production. The legacy application normally has a much slower release cycle than the microservices. Therefore, the version of the legacy application that is currently in development also has to be tested together with the microservices.

To test individual microservices, the other microservices have to be replaced by stubs. This decouples the tests of the individual microservices from each other. Section 10.6 introduced a number of concrete technologies for creating stubs.

Section 10.7 presented consumer-driven contract tests. With this approach, the contracts between the microservices become explicit. This enables a microservice to check whether it fulfills the requirements of the other microservices without the need for an integration test. A number of tools are available for this area as well.

Finally, section 10.8 demonstrated that technical requirements for the microservices can likewise be tested in an automated manner. This makes it possible to establish unambiguously whether a microservice fulfills all technical standards.

Essential Points

• Established best practices like the test pyramid are also sensible for microservices.

• Common tests across all microservices can turn into a bottleneck and therefore should be reduced, for example, by performing more consumer-driven contract tests.

• With suitable tools, stubs can easily be created for microservices.