Chapter 6. Tuning, Delivering, and Protecting Video Content

Now that you’ve learned how to design and develop for the 10-foot UI experience, we’ll turn to the most popular content consumed on TV: video. When it comes to video content, developers and publishers on the web have many factors to consider in order to ensure a good experience for users. This is especially important on Google TV, because viewers expect video on big display devices like Google TV to emulate, if not rival, the generally accepted viewing experience of high definition TV (HDTV).

To really achieve the best video experience possible, you’ll need to develop a good understanding of video delivery and make intelligent trade-offs among many variables. For example, you’ll need to learn about digitizing and editing your videos, choosing encoding formats, evaluating license and support issues, and selecting delivery options and protocols. You’ll also need to learn about how to optimize video based on factors such as compression quality, frames per second, pixel resolution, bit rate, and bandwidth.

Before we discuss these key factors and how they play out on Google TV, however, we’ll briefly recap some video basics.

Video Basics

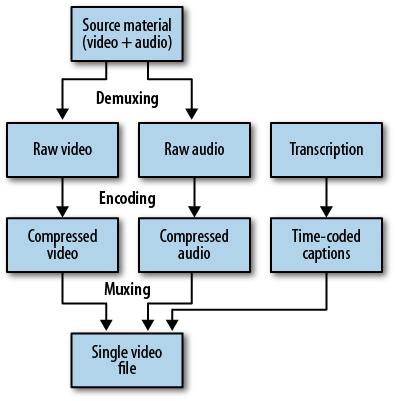

Raw video files are ideal as far as video quality is concerned, but their sheer size usually hinders the speed and performance needed for online video, and also results in high storage and bandwidth costs. Consequently, web video files are served in compressed, or encoded, formats. The process of video encoding is illustrated in Figure 6-1.
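To put the size of raw video in perspective, the arithmetic below computes the uncompressed bit rate of 1080p video and compares it with the 2 Mbps combined bit rate recommended for Google TV in this chapter. It is a back-of-the-envelope sketch that assumes 24 bits per pixel (8-bit RGB) and 30 frames per second; real capture formats vary.

```typescript
// Back-of-the-envelope estimate of uncompressed video bit rate.
// Assumption: 24 bits per pixel (8 bits each for R, G, B), no chroma subsampling.
function rawBitRate(width: number, height: number, bitsPerPixel: number, fps: number): number {
  return width * height * bitsPerPixel * fps; // bits per second
}

const raw1080p30 = rawBitRate(1920, 1080, 24, 30);
const targetBitRate = 2e6; // 2 Mbps combined bit rate

console.log(`Raw 1080p at 30 FPS: ${(raw1080p30 / 1e9).toFixed(2)} Gbps`);
// Raw 1080p at 30 FPS: 1.49 Gbps
console.log(`Compression ratio needed: about ${Math.round(raw1080p30 / targetBitRate)}:1`);
// Compression ratio needed: about 746:1
```

Squeezing roughly 1.5 Gbps of raw pixels into 2 Mbps is the job of the video and audio codecs.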

Turning source video and audio material into a single video file involves three main steps:

Demuxing: separates out video and audio streams

Encoding: compresses video and audio streams

Muxing: combines video and audio streams plus captions (if any)

Note that a text transcript can optionally be converted into time-coded captions and added to the mix during muxing, yielding a single final video file.

There are four main concepts in video encoding:

Video codec format: defines how pixels are compressed

Audio codec format: defines how waveforms are compressed

Caption format: defines if and when captions are displayed

Video container format: holds it all together (think of a zip archive)

Video container formats define how video and audio are packaged together. Because the container format is usually reflected in the file suffix, containers are often referred to simply as video formats. Popular container formats include AVI, MP4, Ogg, and FLV. Each has its own set of compatible audio and video codecs and caption formats, as detailed in the comparison at http://goo.gl/G0q01.[14]
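The four concepts above can be modeled as a simple record, and the suffix convention can be sketched as a lookup. This TypeScript sketch is illustrative only: the type and function names are hypothetical, and a file suffix is merely a naming convention, so real tools inspect the file's contents to identify the container.

```typescript
// Illustrative model of the four concepts in video encoding.
type Container = "AVI" | "MP4" | "Ogg" | "FLV";

interface EncodedVideo {
  container: Container;   // holds it all together
  videoCodec: string;     // how pixels are compressed, e.g. "H.264"
  audioCodec: string;     // how waveforms are compressed, e.g. "AAC"
  captionFormat?: string; // if and when captions are displayed (optional)
}

// Suffix-to-container lookup based only on the naming convention.
const SUFFIX_TO_CONTAINER = new Map<string, Container>([
  ["avi", "AVI"],
  ["mp4", "MP4"],
  ["ogg", "Ogg"],
  ["ogv", "Ogg"],
  ["flv", "FLV"],
]);

function containerFromFilename(filename: string): Container | undefined {
  const dot = filename.lastIndexOf(".");
  if (dot < 0) return undefined; // no suffix at all
  return SUFFIX_TO_CONTAINER.get(filename.slice(dot + 1).toLowerCase());
}

const example: EncodedVideo = { container: "MP4", videoCodec: "H.264", audioCodec: "AAC" };
console.log(containerFromFilename("movie.mp4") === example.container); // true
```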

On Google TV, there are two options for serving video content with a web app:

Embed video using HTML5 in a web page in Google Chrome

Embed video using the Flash Player plug-in in Google Chrome

Next, let’s go over each one of these options and discuss specific recommendations in terms of encoding codecs and container formats, as well as optimization and tuning techniques.

Embedding Video with HTML5 in Google Chrome

Prior to the advent of HTML5, there was no standards-based way to deliver video in a web page. Instead, video “on the web” was typically funneled through a third-party plug-in, such as QuickTime or Flash Player.

HTML5 introduced the <video> element, which allows developers to include video directly in web pages without using any plug-ins. Moreover, since the <video> tag is part of the HTML5 specification, it enables deep and natural integration with the other layers of the web development stack, such as CSS, JavaScript, and other HTML tags (e.g., <canvas>). This potentially opens up opportunities for rich interactive applications that don’t rely on any plug-ins.

The Markup

In the Google Chrome browser and other modern browsers, you can embed the HTML5 <video> tag as follows:

<video>
  <source src="movie.mp4" type='video/mp4; codecs="avc1.42E01E, mp4a.40.2"' />
  <source src="movie.webm" type='video/webm; codecs="vp8, vorbis"' />
</video>

Alternatively, if you serve a single video format, you can put the src attribute directly on the <video> tag:

<video src="movie.webm"></video>

There are various ways to integrate the <video> tag into a page, and we recommend that you check out the examples provided at HTML5rocks.com to get a better feel for how video can be embedded this way.
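When several <source> elements are listed, the browser tries them in document order and skips any whose type attribute it reports it cannot play. The sketch below models that selection in simplified form (the full HTML algorithm also moves on to the next source if loading fails). The canPlayType callback stands in for HTMLMediaElement.canPlayType(), which returns "", "maybe", or "probably"; the two browser profiles are hypothetical.

```typescript
// canPlayType() on a media element returns "", "maybe", or "probably".
type CanPlay = "" | "maybe" | "probably";

interface SourceCandidate {
  src: string;
  type: string; // value of the <source type="..."> attribute
}

// Returns the first source whose type the browser does not reject outright.
function pickSource(
  sources: SourceCandidate[],
  canPlayType: (type: string) => CanPlay,
): SourceCandidate | undefined {
  return sources.find((s) => canPlayType(s.type) !== "");
}

const sources: SourceCandidate[] = [
  { src: "movie.mp4", type: 'video/mp4; codecs="avc1.42E01E, mp4a.40.2"' },
  { src: "movie.webm", type: 'video/webm; codecs="vp8, vorbis"' },
];

// Hypothetical browser that only decodes MP4 (H.264 + AAC).
const mp4Only = (type: string): CanPlay => (type.startsWith("video/mp4") ? "probably" : "");
// Hypothetical browser that only decodes WebM.
const webmOnly = (type: string): CanPlay => (type.startsWith("video/webm") ? "maybe" : "");

console.log(pickSource(sources, mp4Only)?.src);  // movie.mp4
console.log(pickSource(sources, webmOnly)?.src); // movie.webm
```

In a real page you would pass `(type) => videoElement.canPlayType(type)` instead of a hypothetical profile.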

Container Formats and Codecs

As you can see, multiple <source> tags can generally be used to provide several container formats as fallbacks for different browsers. On Google TV, however, to minimize hardware requirements and software complexity, we recommend using the MP4 container format with the H.264 video codec and the AAC audio codec, since this is the most widely adopted combination for encoding video content. In addition, hardware acceleration of H.264 decoding is built into Google TV to ensure good performance on big-screen devices.
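The type attribute in the earlier markup spells out this codec choice. Under RFC 6381, avc1.PPCCLL names an H.264 stream by its profile_idc, constraint flags, and level_idc as hex bytes, and mp4a.40.N names MPEG-4 audio with audio object type N (2 is AAC-LC). The following sketch decodes those strings; it recognizes only the three common H.264 profiles and is not a complete RFC 6381 parser.

```typescript
// Extracts the comma-separated list inside codecs="..." from a MIME type string.
function codecsFromType(type: string): string[] {
  const m = /codecs="([^"]*)"/.exec(type);
  return m ? m[1].split(",").map((c) => c.trim()) : [];
}

// Only the three most common H.264 profiles are listed here.
const AVC_PROFILES = new Map<number, string>([
  [66, "Baseline"],
  [77, "Main"],
  [100, "High"],
]);

// avc1.PPCCLL: profile_idc, constraint flags, level_idc, each as two hex digits.
function describeAvc(codec: string): string | undefined {
  const m = /^avc1\.([0-9a-f]{2})([0-9a-f]{2})([0-9a-f]{2})$/i.exec(codec);
  if (!m) return undefined;
  const profileIdc = parseInt(m[1], 16);
  const levelIdc = parseInt(m[3], 16);
  const profile = AVC_PROFILES.get(profileIdc) ?? `profile_idc ${profileIdc}`;
  return `H.264 ${profile}, level ${(levelIdc / 10).toFixed(1)}`;
}

// mp4a.40.N: 40 (hex) is MPEG-4 Audio; N is the audio object type (2 = AAC-LC).
function describeMp4a(codec: string): string | undefined {
  const m = /^mp4a\.40\.(\d+)$/.exec(codec);
  if (!m) return undefined;
  return m[1] === "2" ? "AAC-LC" : `MPEG-4 audio object type ${m[1]}`;
}

const [videoCodec, audioCodec] = codecsFromType('video/mp4; codecs="avc1.42E01E, mp4a.40.2"');
console.log(describeAvc(videoCodec));  // H.264 Baseline, level 3.0
console.log(describeMp4a(audioCodec)); // AAC-LC
```

Likewise, describeAvc("avc1.4D401F") yields "H.264 Main, level 3.1", the kind of string you would advertise for a Main Profile encode.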

Encoding, Tuning, and All That

Aside from choosing your container format and your video and audio codecs, there are other variables to take into account when encoding and tuning your video. The following recommendations apply specifically to Google TV:

| Setting | Recommendation |
| --- | --- |
| Video codec | H.264 |
| Audio codec | AAC |
| Resolution | 720p or 1080p |
| Frame rate | 24 to 30 frames per second (FPS) |
| Combined bit rate | Up to 2 Mbps |
| Pixel aspect ratio | 1 × 1 |
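These recommendations are easy to check mechanically before you publish. The following TypeScript sketch compares a hypothetical EncodingSettings record against them; the field names are assumptions, and the thresholds are those listed for Google TV (720p or 1080p, 24 to 30 FPS, up to 2 Mbps combined, square pixels).

```typescript
// Hypothetical description of an encoded file's key parameters.
interface EncodingSettings {
  videoCodec: string;
  audioCodec: string;
  height: number;                // vertical resolution: 720 for 720p, 1080 for 1080p
  fps: number;                   // frames per second
  combinedKbps: number;          // video + audio bit rate in kilobits per second
  pixelAspect: [number, number]; // [width, height] of one pixel; [1, 1] means square
}

// Returns the deviations from the Google TV recommendations (empty if none).
function checkForGoogleTv(s: EncodingSettings): string[] {
  const issues: string[] = [];
  if (s.videoCodec !== "H.264") issues.push(`video codec ${s.videoCodec} (H.264 recommended)`);
  if (s.audioCodec !== "AAC") issues.push(`audio codec ${s.audioCodec} (AAC recommended)`);
  if (s.height !== 720 && s.height !== 1080) issues.push(`${s.height}p (720p or 1080p recommended)`);
  if (s.fps < 24 || s.fps > 30) issues.push(`${s.fps} FPS (24 to 30 recommended)`);
  if (s.combinedKbps > 2000) issues.push(`${s.combinedKbps} kbps (up to 2000 kbps recommended)`);
  if (s.pixelAspect[0] !== s.pixelAspect[1]) issues.push("non-square pixels (1 × 1 recommended)");
  return issues;
}

const issues = checkForGoogleTv({
  videoCodec: "H.264", audioCodec: "AAC", height: 720,
  fps: 60, combinedKbps: 4000, pixelAspect: [1, 1],
});
console.log(issues.join("\n")); // reports the frame rate and the bit rate
```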

While the HTML5 delivery option offers a quick and simple way to embed video with a native tag in Google Chrome, it lacks some features, such as access to the microphone and camera, support for streaming, and content protection and rights management. If any of these features are critical to your application, you’ll want to consider Flash as an alternative.

Embedding Video Using the Flash Player Plug-in in Google Chrome

Flash is another option that you can use to deliver video content. The video is embedded in a SWF video player and rendered through the Flash Player plug-in, which is bundled with Google Chrome by default. Here are the typical steps you’ll have to perform:

Encode raw video files into appropriate video formats (e.g., FLV or F4V)

Write a video player in ActionScript, load the encoded video files into it as video assets, and generate a SWF file

Embed the SWF file in a web page and render it using the Flash Player plug-in in Google Chrome

The Markup

The following code snippet shows a typical way of embedding a SWF file inside a web page:

<object classid="clsid:D27CDB6E-AE6D-11cf-96B8-444553540000"
        codebase="http://download.macromedia.com/.../swflash.cab#version=6,0,29,0"
        width="400" height="400">
  <param name="movie" value="videoplayer.swf">
  <param name="quality" value="high">
  <param name="loop" value="false">
  <embed src="videoplayer.swf" width="400" height="400" loop="false" quality="high"
         pluginspage="http://www.macromedia.com/go/getflashplayer"
         type="application/x-shockwave-flash">
  </embed>
</object>

The <object> tag in this code is for general compatibility with the Internet Explorer browser. On Google TV, however, Chrome is the only available browser, so the <embed> tag alone is sufficient.
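Because only the <embed> tag is needed on Google TV, a page that builds its player markup dynamically can generate just that element. The sketch below assembles an <embed> string from a hypothetical EmbedOptions record, using the attributes from the snippet above minus pluginspage (Chrome already bundles Flash Player). It escapes attribute values but is otherwise deliberately minimal. <embed> is a void element in HTML5, so the sketch emits no end tag.

```typescript
// Hypothetical options for a Flash video player embed.
interface EmbedOptions {
  src: string;
  width: number;
  height: number;
}

// Escapes characters that would break out of a double-quoted attribute value.
function escapeAttr(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;").replace(/</g, "&lt;");
}

function flashEmbedTag(o: EmbedOptions): string {
  const attrs: Array<[string, string]> = [
    ["src", o.src],
    ["width", String(o.width)],
    ["height", String(o.height)],
    ["loop", "false"],
    ["quality", "high"],
    ["type", "application/x-shockwave-flash"],
  ];
  const rendered = attrs.map(([name, value]) => `${name}="${escapeAttr(value)}"`).join(" ");
  return `<embed ${rendered}>`;
}

console.log(flashEmbedTag({ src: "videoplayer.swf", width: 400, height: 400 }));
// <embed src="videoplayer.swf" width="400" height="400" loop="false" quality="high" type="application/x-shockwave-flash">
```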

Container Formats and Codecs

The supported container formats for Flash Player include MP4, FLV, F4V, AVI, and ASF. For Google TV, we recommend the following combinations of container formats, video codec, audio codec, and caption format:

| Container format | Video codec | Audio codec | Caption format |
| --- | --- | --- | --- |
| MP4 | H.264 | AAC | MPEG-4 Timed Text |
| FLV | H.264 | AAC | DFXP |
| F4V | H.264 | AAC | (N/A) |
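If your publishing pipeline handles several container formats, these recommended combinations can be encoded as data and checked programmatically. The sketch below does exactly that; the FlashCombo type and the function names are hypothetical, and the rows mirror the recommendations for Google TV.

```typescript
// Hypothetical representation of one recommended combination.
interface FlashCombo {
  container: string;
  videoCodec: string;
  audioCodec: string;
  caption: string | null; // null where no caption format is listed
}

const RECOMMENDED_FLASH_COMBOS: FlashCombo[] = [
  { container: "MP4", videoCodec: "H.264", audioCodec: "AAC", caption: "MPEG-4 Timed Text" },
  { container: "FLV", videoCodec: "H.264", audioCodec: "AAC", caption: "DFXP" },
  { container: "F4V", videoCodec: "H.264", audioCodec: "AAC", caption: null },
];

// Looks up the recommended codecs and caption format for a container (case-insensitive).
function recommendationFor(container: string): FlashCombo | undefined {
  return RECOMMENDED_FLASH_COMBOS.find((c) => c.container === container.toUpperCase());
}

// True if the container/codec triple matches one of the recommended rows.
function isRecommended(container: string, videoCodec: string, audioCodec: string): boolean {
  const combo = recommendationFor(container);
  return combo !== undefined && combo.videoCodec === videoCodec && combo.audioCodec === audioCodec;
}

console.log(recommendationFor("flv")?.caption);     // DFXP
console.log(isRecommended("AVI", "H.264", "AAC")); // false
```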

Encoding and Tuning Guidelines

The following guidelines for Google TV are worth keeping in mind when encoding and tuning your video for Flash:

| Setting | Recommendation |
| --- | --- |
| Video codec | H.264, Main or High Profile, progressive encoding |
| Audio codec | AAC-LC or AC3, 44.1 kHz, stereo |
| Resolution | 720p or 1080p |
| Frame rate | 24 to 30 frames per second (FPS) |
| Combined bit rate | Up to 2 Mbps (or higher, depending on available bandwidth) |
| Pixel aspect ratio | 1 × 1 |
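The combined bit rate translates directly into file size and bandwidth cost: bytes = bit rate × duration ÷ 8. The sketch below applies that formula; the 128 kbps AAC audio figure used to split the budget is an illustrative assumption, not a Google TV requirement.

```typescript
// Size in decimal megabytes of a stream at a given combined bit rate.
function megabytesFor(combinedKbps: number, durationSeconds: number): number {
  const bits = combinedKbps * 1000 * durationSeconds;
  return bits / 8 / 1e6;
}

// Bit rate left for the video track once the audio track's share is set aside.
function videoBudgetKbps(combinedKbps: number, audioKbps: number): number {
  return combinedKbps - audioKbps;
}

console.log(megabytesFor(2000, 60));     // 15   (one minute at 2 Mbps)
console.log(megabytesFor(2000, 3600));   // 900  (one hour at 2 Mbps)
console.log(videoBudgetKbps(2000, 128)); // 1872 (assuming 128 kbps AAC audio)
```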

Video Player and Rendering Optimization

The standard way of playing back Flash video (i.e., FLV video files) in a video player via ActionScript is to instantiate a Video object:

// AS3
import flash.media.Video;
import flash.net.NetConnection;
import flash.net.NetStream;

var myVideo:Video = new Video();
addChild(myVideo);

// Passing null to connect() plays files over HTTP without a media server.
var nc:NetConnection = new NetConnection();
nc.connect(null);

var ns:NetStream = new NetStream(nc);
// A client object with onMetaData avoids an unhandled AsyncErrorEvent when metadata arrives.
ns.client = { onMetaData: function(info:Object):void {} };
myVideo.attachNetStream(ns);

ns.play("http://yourserver.com/flash-files/MyVideo.flv");

However, this generic Video object comes with many features, such as rotation, blendMode, alpha channel, filters, and masks, that may be overkill for a high-resolution display. These features also tend to result in less than ideal performance, especially on devices with less powerful CPUs. Because of this, Adobe introduced the StageVideo API to improve video rendering on high-resolution devices like Google TV.

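You can use a StageVideo object as follows:

// AS3
import flash.events.StageVideoEvent;
import flash.geom.Rectangle;
import flash.media.StageVideo;
import flash.net.NetConnection;
import flash.net.NetStream;

var netConnection:NetConnection = new NetConnection();
netConnection.connect(null);
var netStream:NetStream = new NetStream(netConnection);
netStream.client = { onMetaData: function(info:Object):void {} };

// stage.stageVideos is empty when StageVideo is not available.
var v:Vector.<StageVideo> = stage.stageVideos;
if (v.length >= 1) {
    var stageVideo:StageVideo = v[0];
    // Position and size of the video in stage coordinates (x, y, width, height).
    stageVideo.viewPort = new Rectangle(100, 100, 320, 240);
    stageVideo.addEventListener(StageVideoEvent.RENDER_STATE, onRenderState);
    stageVideo.attachNetStream(netStream);
}

netStream.play("MyVideo.f4v");

// Hypothetical handler name (the callback is yours to define); status reports how the video is rendered.
function onRenderState(event:StageVideoEvent):void {
    trace("StageVideo render state: " + event.status);
}

By introducing the StageVideo object, Adobe has made a trade-off in favor of performance at the expense of certain features. For example, on Google TV a StageVideo object has no alpha property for transparency, and you cannot play multiple StageVideo objects simultaneously. You can read more about the StageVideo object on Adobe’s website at http://goo.gl/qXWoV.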
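These restrictions suggest a simple decision rule for choosing between StageVideo and the generic Video object: use StageVideo when it is available and the player needs neither transparency nor simultaneous videos, and otherwise fall back to Video, trading performance for features. The TypeScript sketch below expresses that rule; stageVideoCount stands in for stage.stageVideos.length in ActionScript, and the PlaybackNeeds fields are hypothetical. In a real player the same logic would live in ActionScript.

```typescript
// Hypothetical description of what the player needs from the video surface.
interface PlaybackNeeds {
  needsTransparency: boolean; // e.g. fading the video in and out with alpha
  simultaneousVideos: number; // how many videos must play at the same time
}

type Renderer = "StageVideo" | "Video";

// stageVideoCount stands in for stage.stageVideos.length in ActionScript.
function chooseRenderer(stageVideoCount: number, needs: PlaybackNeeds): Renderer {
  if (stageVideoCount < 1) return "Video";          // StageVideo not available
  if (needs.needsTransparency) return "Video";      // no alpha property on StageVideo (Google TV)
  if (needs.simultaneousVideos > 1) return "Video"; // no simultaneous StageVideo playback (Google TV)
  return "StageVideo";                              // otherwise prefer the faster path
}

console.log(chooseRenderer(1, { needsTransparency: false, simultaneousVideos: 1 })); // StageVideo
console.log(chooseRenderer(1, { needsTransparency: true, simultaneousVideos: 1 }));  // Video
```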

Video Delivery Guidelines

The Adobe Flash Platform offers a variety of protocols to support various applications that serve video over a network. These protocols include:

HTTP Dynamic Streaming (F4F format)

RTMP/e Streaming

HTTP Progressive Download

RTMFP Peer-to-Peer

RTMFP Multicast

Depending on the nature of your application and your existing delivery infrastructure, choose the protocol that fits. For example, you might use streaming for real-time applications, progressive download for simple playback that starts quickly, and RTMFP Multicast or RTMFP Peer-to-Peer for broadcast or peer-to-peer video experiences, respectively. These options offer you a lot of flexibility and make it possible to develop applications that go beyond simple video playback.

Another aspect of delivering video over the Internet is that the available bandwidth changes continuously while a video is playing. For this reason, your delivery system needs to be smart enough to adjust the bit rate dynamically.
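A common way to adjust the bit rate dynamically is to keep a smoothed estimate of recent throughput and switch to the highest-quality rendition that fits comfortably within it. The sketch below shows that idea in TypeScript. It is a generic illustration, not how Flash Media Server implements adaptive bitrate, and the smoothing weight (0.3), the 80% safety margin, and the rendition ladder are all assumptions.

```typescript
interface Rendition {
  label: string;
  kbps: number; // combined bit rate of this encoding
}

// Exponentially weighted moving average: damps short spikes in measured throughput.
function updateEstimate(previousKbps: number, sampleKbps: number, weight = 0.3): number {
  return previousKbps + weight * (sampleKbps - previousKbps);
}

// Picks the highest-bit-rate rendition that fits within a safety margin of the estimate,
// falling back to the lowest rendition when none fits.
function selectRendition(renditions: Rendition[], estimateKbps: number, margin = 0.8): Rendition {
  if (renditions.length === 0) throw new Error("no renditions available");
  const sorted = renditions.slice().sort((a, b) => a.kbps - b.kbps);
  const budget = estimateKbps * margin;
  let choice = sorted[0];
  for (const r of sorted) {
    if (r.kbps <= budget) choice = r;
  }
  return choice;
}

const ladder: Rendition[] = [
  { label: "480p", kbps: 800 },
  { label: "720p", kbps: 1500 },
  { label: "1080p", kbps: 2000 },
];

let estimate = 3000;
console.log(selectRendition(ladder, estimate).label); // 1080p (budget of about 2400 kbps)
estimate = updateEstimate(estimate, 1000);            // throughput drops; estimate moves to 2400
console.log(selectRendition(ladder, estimate).label); // 720p (budget of about 1920 kbps)
```

Smoothing keeps the player from switching renditions on every momentary dip, while the margin leaves headroom so the buffer does not drain when throughput fluctuates.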

On the server side, you could consider Adobe Flash Media Server technology, which offers adaptive bitrate capabilities as well as recovery during network outages.

Video Player and Rendering Optimizations

It is important to optimize your video player. If your video player hogs too many CPU cycles, it may lower the frame rate of video playback or make the UI feel sluggish and unresponsive. Excessive script execution and excessive rendering are common culprits behind poor user experiences.

Here are some considerations to keep in mind when developing your video player:

Simplify rendering complexity: reduce the number of objects on Stage as much as possible

Limit use of timer functions and check for duplicates: minimize ActionScript processing intervals

Avoid rendering items that are not currently visible

Close the NetStream when the video is finished

Do not set wmode to “transparent” and avoid transparency in general

Avoid executing large amounts of script while video is playing
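The advice to limit timers and check for duplicates is easiest to follow if every repeating timer is registered under a name, so that starting a timer twice replaces it instead of stacking a second one. The sketch below shows the pattern in TypeScript with setInterval and clearInterval; in ActionScript the same idea applies to flash.utils.setInterval or Timer objects. The TimerRegistry class and the key names are hypothetical.

```typescript
// Keeps at most one repeating timer per key, so re-registering replaces instead of duplicating.
class TimerRegistry {
  private timers = new Map<string, ReturnType<typeof setInterval>>();

  start(key: string, callback: () => void, intervalMs: number): void {
    this.stop(key); // clear any existing timer with the same key first
    this.timers.set(key, setInterval(callback, intervalMs));
  }

  stop(key: string): void {
    const handle = this.timers.get(key);
    if (handle !== undefined) {
      clearInterval(handle);
      this.timers.delete(key);
    }
  }

  stopAll(): void {
    for (const key of Array.from(this.timers.keys())) this.stop(key);
  }

  get activeCount(): number {
    return this.timers.size;
  }
}

const timers = new TimerRegistry();
timers.start("progress-bar", () => { /* update the scrubber */ }, 250);
timers.start("progress-bar", () => { /* update the scrubber */ }, 250); // replaces, does not stack
console.log(timers.activeCount); // 1
timers.stopAll(); // e.g. when playback finishes
```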

Content Protection and DRM

Google TV supports Flash Platform content protection for premium video content. Many of the leading premium video providers use the Flash Platform to provide a seamless viewing experience.

Streaming video securely from Flash Media Server (FMS) is possible using technologies such as RTMPE (Real-Time Messaging Protocol Encrypted) and SWF Verification. The content protection features in FMS are supported by the vast majority of content delivery networks (CDNs), enabling an easy content workflow and broad geographical reach. For an overview of typical content protection workflows using RTMPE, SWF Verification, and related features, see Adobe’s white paper Protecting Online Video Distribution with Adobe Flash Media Technology at http://goo.gl/93zHm.

We also expect additional content protection mechanisms to be supported in future versions of Google TV.

Tip

Remember to only present video and other content on your site that you have the legal right to use, and to get legal advice if you have any doubts about your ability to use specific content.

[14] Note that some codecs may be protected by patents or other intellectual property rights; you should get appropriate legal advice and information to help you determine if you need to seek a license for any particular codec.