Diving deeper into our VPC, we are now going to look at ways to enhance the security around our EC2 instances.

IAM EC2 Roles are the recommended way to grant your application access to AWS services.

As an example, let us assume we had a web app running on our web server EC2 instance and it needs to be able to upload assets to S3.

A quick way of satisfying that requirement would be to create a set of IAM access keys and hardcode those into the application or its configuration. This means, however, that from then on the keys cannot easily be updated without an app or config deployment. Furthermore, we might, for one reason or another, end up reusing the same set of keys with other applications.

The security implications are evident: reusing keys increases our exposure if they get compromised, and having them hardcoded slows our reaction considerably, since rotating such keys takes more effort.

An alternative to the preceding method would be to use Roles. We would create an EC2 Role, grant it write access to the S3 bucket and assign it to the web server EC2 instance. Once the instance has booted, it is given temporary credentials which can be found in its metadata and which get changed at regular intervals. We can now instruct our web app to retrieve the current set of credentials from the instance metadata and use those to carry out the S3 operations. If we were to use the AWS CLI on that instance, we would notice that it fetches the said metadata credentials by default.
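To make the metadata lookup concrete, here is a minimal sketch of how an application might read the role credentials itself. It assumes the token-based metadata flow (IMDSv2), which the text above does not mention, and the helper names `http_fetch` and `get_role_credentials` are illustrative only. In practice the AWS SDKs and the CLI perform this lookup for you.

```python
import json
import urllib.request

# Link-local address of the EC2 instance metadata service.
METADATA_BASE = "http://169.254.169.254/latest"
CREDENTIALS_PATH = "/meta-data/iam/security-credentials/"


def http_fetch(url, method="GET", headers=None, timeout=2):
    """Perform a small HTTP request and return the response body as text."""
    request = urllib.request.Request(url, method=method, headers=headers or {})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


def get_role_credentials(fetch=http_fetch):
    """Return the temporary credentials of the role attached to this instance.

    `fetch` is injectable so the logic can be exercised away from EC2.
    """
    # Assumption: IMDSv2 is in use, so a short-lived session token comes first.
    token = fetch(
        METADATA_BASE + "/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": "60"},
    )
    auth = {"X-aws-ec2-metadata-token": token}

    # Listing the path returns the name of the attached role.
    listing = fetch(METADATA_BASE + CREDENTIALS_PATH, headers=auth)
    role_name = listing.strip().splitlines()[0]

    # The role-specific document holds the rotating credentials.
    document = json.loads(
        fetch(METADATA_BASE + CREDENTIALS_PATH + role_name, headers=auth)
    )
    return {
        "access_key": document["AccessKeyId"],
        "secret_key": document["SecretAccessKey"],
        "token": document["Token"],
        "expiration": document["Expiration"],
    }
```

Because the credentials rotate, an application following this approach should re-read them before the `expiration` time rather than cache them indefinitely.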

Roles can be used to assume other roles, making it possible for your instances to temporarily escalate their privileges by assuming a different role within your account or even across AWS accounts (ref: http://docs.aws.amazon.com/STS/latest/APIReference/API_AssumeRole.html).
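As a rough illustration of role assumption, the sketch below wraps the STS `AssumeRole` call. It assumes the caller passes in an STS client such as the one returned by `boto3.client("sts")`; the function name `assume_role` and the example role ARN in the usage note are hypothetical.

```python
def assume_role(sts_client, role_arn, session_name, duration_seconds=900):
    """Assume `role_arn` and return keyword arguments for a new SDK client.

    `sts_client` is expected to expose `assume_role`, as a boto3 STS client
    does. 900 seconds is the shortest session duration STS accepts.
    """
    response = sts_client.assume_role(
        RoleArn=role_arn,
        RoleSessionName=session_name,
        DurationSeconds=duration_seconds,
    )
    credentials = response["Credentials"]
    # Key names match the credential arguments accepted by boto3.client().
    return {
        "aws_access_key_id": credentials["AccessKeyId"],
        "aws_secret_access_key": credentials["SecretAccessKey"],
        "aws_session_token": credentials["SessionToken"],
    }
```

On an instance with a role attached, this could be used along the lines of `boto3.client("s3", **assume_role(boto3.client("sts"), "arn:aws:iam::111122223333:role/example-role", "web-app"))`, where the account ID and role name are placeholders.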

The most common way to interact with an EC2 instance would be over SSH. Here are a couple of ideas to make our SSH sessions even more secure.

When a vanilla EC2 instance is launched, it usually has a key pair (the PEM key) associated with it to allow initial SSH access. If you work within a team, my recommendation is not to share that key pair with your colleagues.

Instead, as soon as you, or ideally your configuration management tool, gain access to the instance, individual user accounts should be created and public keys uploaded for the team members (plus sudo access where needed). Then the default ec2-user account (on Amazon Linux) and PEM key can be removed.

Regardless of the purpose that an EC2 instance serves, it is rarely the case that you must have direct external SSH access to it.

Assigning public IP addresses and opening ports on EC2 instances is often an unnecessary exposure in the name of convenience and somewhat contradicts the idea of using a VPC in the first place.

SSH is undeniably useful, however. So, to strike a balance between security and convenience, one could set up an SSH gateway host with a public address. You would then restrict access to it to your home and/or office network and permit SSH connections from that host towards the rest of the VPC estate.

The chosen node becomes the administrative entry point of the VPC.
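The gateway arrangement boils down to two security group rules, sketched below in the `IpPermissions` structure that the EC2 API expects. The function names and the example CIDR and group ID are hypothetical, and refusing `0.0.0.0/0` is a design choice of this sketch rather than something AWS enforces.

```python
import ipaddress

SSH_PORT = 22


def bastion_ingress(allowed_cidrs):
    """Ingress rule for the gateway host: SSH from trusted networks only."""
    ranges = []
    for cidr in allowed_cidrs:
        network = ipaddress.ip_network(cidr)  # raises ValueError if malformed
        if network.prefixlen == 0:
            # A /0 range would expose the gateway to the whole internet.
            raise ValueError("refusing to open SSH to %s" % cidr)
        ranges.append({"CidrIp": str(network)})
    return [{
        "IpProtocol": "tcp",
        "FromPort": SSH_PORT,
        "ToPort": SSH_PORT,
        "IpRanges": ranges,
    }]


def internal_ingress(bastion_group_id):
    """Ingress rule for private hosts: SSH only from the gateway's group."""
    return [{
        "IpProtocol": "tcp",
        "FromPort": SSH_PORT,
        "ToPort": SSH_PORT,
        "UserIdGroupPairs": [{"GroupId": bastion_group_id}],
    }]
```

With boto3, each result could be passed as the `IpPermissions` argument of `authorize_security_group_ingress`, for example `bastion_ingress(["203.0.113.0/24"])` for the gateway's group and `internal_ingress("sg-0123456789abcdef0")` for the private hosts' group.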

Latency is of importance. You will find brilliant engineering articles online from expert AWS users who have put time and effort into benchmarking ELB performance and side-effects.

Perhaps not surprisingly, their findings show that there is a latency penalty to using an ELB, as opposed to serving requests directly from a backend web server farm. The other side to this, however, is that such an additional layer, be it an ELB or a cluster of custom HAProxy instances, acts as a shield in front of those web servers.

With a balancer at the edge of the VPC, web server nodes can remain within the private subnet, which is no small advantage if you can afford the latency trade-off.

Services like AWS Certificate Manager make using SSL/TLS encryption even easier and more affordable. You get the certificates plus automatic renewals for free (within AWS).

Whether traffic between an ELB and the backend instances within a VPC should be encrypted is another good question, but for now please do add a certificate to your ELBs and enforce HTTPS where possible.

Logically, since we are concerned with encrypting our HTTP traffic, we should not ignore our data at rest.

The most common type of storage on AWS must be the EBS volume with S3 right behind it. Each of the two services supports a strong and effortless implementation of encryption.

First, it should be noted that not all EC2 instance types support encrypted volumes. Before going any further, please consult this table: http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/EBSEncryption.html#EBSEncryption_supported_instances

Also, let us see what does get encrypted and how:

When you create an encrypted EBS volume and attach it to a supported instance type, the following types of data are encrypted:

- Data at rest inside the volume

- All data moving between the volume and the instance

- All snapshots created from the volume

The encryption occurs on the servers that host EC2 instances, providing encryption of data-in-transit from EC2 instances to EBS storage.

ref: http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/EBSEncryption.html

Note that the data gets encrypted on the servers that host EC2 instances, that is to say the hypervisors.

Naturally, if you wanted to go the extra mile you could manage your own encryption on the instance itself. Otherwise, you can be reasonably at peace knowing that each volume gets encrypted with an individual key which is in turn encrypted by a master key associated with the given AWS account.

In terms of key management, AWS recommends that you create a custom key to replace the one which gets generated for you by default. Let us create a key and put it to use.

On the IAM dashboard, select Encryption Keys on the left:

Choose to Create Key and fill in the details:

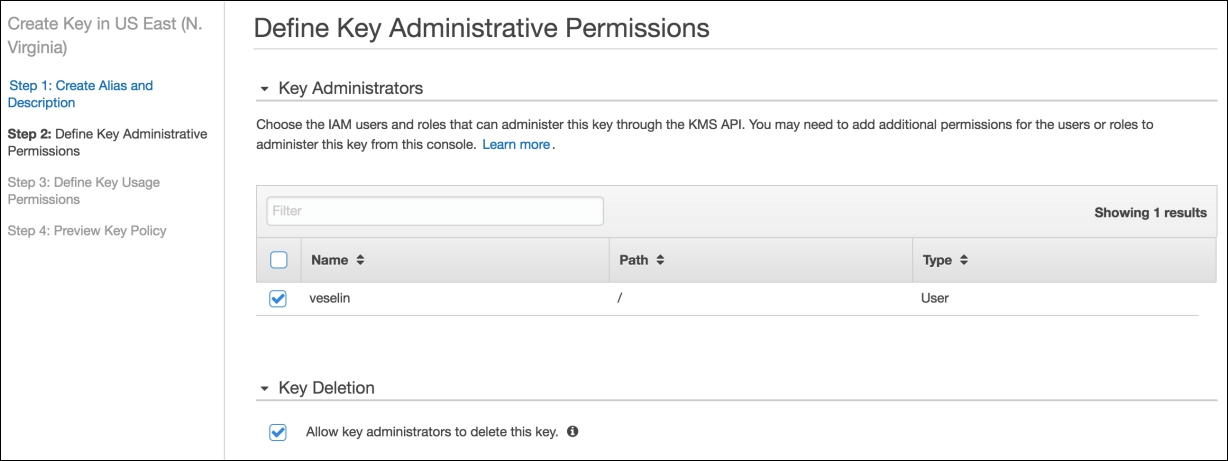

Then you can define who can manage the key:

As well as who can use it:

And the result should be visible back on that dashboard among the list of keys:

Now if you were to switch to the EC2 Console and choose to create a new EBS volume, the custom encryption key should be available as an option:

You can now proceed to attach the new encrypted volume to an EC2 instance as per the usual process.
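The same volume could also be created programmatically. The sketch below only assembles the request parameters, using the names the EC2 `CreateVolume` API accepts; the helper name, the default volume type, and the example values in the usage note are assumptions.

```python
def encrypted_volume_params(availability_zone, size_gib,
                            kms_key_id=None, volume_type="gp2"):
    """Build CreateVolume parameters for an encrypted EBS volume.

    When `kms_key_id` is omitted, the account's default EBS key is used;
    passing the ID or ARN of a custom key selects that key instead.
    """
    params = {
        "AvailabilityZone": availability_zone,
        "Size": size_gib,
        "VolumeType": volume_type,
        "Encrypted": True,
    }
    if kms_key_id:
        params["KmsKeyId"] = kms_key_id
    return params
```

With boto3 this might be used as `boto3.client("ec2").create_volume(**encrypted_volume_params("us-east-1a", 20, kms_key_id="alias/my-custom-key"))`, where the availability zone and key alias are placeholders.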

S3 allows the encryption of all, or a selection of, objects within a bucket using the same AES-256 algorithm as EBS.

A few methods of key management are available (ref: http://docs.aws.amazon.com/AmazonS3/latest/dev/serv-side-encryption.html):

- You can import your own, external set of keys

- You can use the KMS service to generate custom keys within AWS

- You can use the S3 service default (unique) key

Encrypting existing data can be done on the folder level:

or by selecting individual files:

New data is encrypted on demand by either specifying a header (x-amz-server-side-encryption) in the PUT request or by passing any of the --sse options if using the AWS S3 CLI.
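When uploading through an SDK rather than the CLI, the header is set via request parameters. The following sketch builds the arguments for an S3 `PutObject` call; the helper name is hypothetical, while `ServerSideEncryption` and `SSEKMSKeyId` are the parameter names the API uses.

```python
def sse_put_params(bucket, key, body, kms_key_id=None):
    """Build PutObject parameters that request server-side encryption.

    Without a KMS key the S3-managed key is used (AES256); with one,
    the object is encrypted under that KMS key instead.
    """
    params = {"Bucket": bucket, "Key": key, "Body": body}
    if kms_key_id:
        params["ServerSideEncryption"] = "aws:kms"
        params["SSEKMSKeyId"] = kms_key_id
    else:
        params["ServerSideEncryption"] = "AES256"
    return params
```

With boto3, the result could be passed as `boto3.client("s3").put_object(**sse_put_params("my-bucket", "assets/logo.png", data))`, where the bucket and key names are placeholders.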

It is also possible to deny any upload attempts which do not specify encryption by using a bucket policy (ref: http://docs.aws.amazon.com/AmazonS3/latest/dev/UsingServerSideEncryption.html).
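Such a bucket policy might look like the sketch below, which follows the two-statement pattern shown in the AWS documentation: one statement denies uploads with the wrong encryption value, the other denies uploads that omit the header altogether. The function name and the bucket name in the usage note are placeholders.

```python
import json

SSE_CONDITION_KEY = "s3:x-amz-server-side-encryption"


def require_sse_policy(bucket_name, algorithm="AES256"):
    """Return a bucket policy that rejects uploads lacking encryption."""
    resource = "arn:aws:s3:::%s/*" % bucket_name
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Reject uploads that ask for a different encryption type.
                "Sid": "DenyIncorrectEncryptionHeader",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": resource,
                "Condition": {
                    "StringNotEquals": {SSE_CONDITION_KEY: algorithm}
                },
            },
            {
                # Reject uploads that do not send the header at all.
                "Sid": "DenyUnencryptedObjectUploads",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": resource,
                "Condition": {"Null": {SSE_CONDITION_KEY: "true"}},
            },
        ],
    }


def policy_json(bucket_name, algorithm="AES256"):
    """Serialize the policy in the string form the S3 API expects."""
    return json.dumps(require_sse_policy(bucket_name, algorithm), indent=2)
```

With boto3, the policy could be applied via `boto3.client("s3").put_bucket_policy(Bucket="my-bucket", Policy=policy_json("my-bucket"))`.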

If you follow any security bulletins, you will have noticed how frequently new security flaws are published. So, it is probably not much of an exaggeration to state that OS packages become obsolete days, if not hours, after a fully up-to-date EC2 instance has been provisioned. And unless the latest vulnerability affects Bash or OpenSSL, we tend to take comfort in the fact that most of our hosts reside within an isolated environment (such as a VPC), postponing updates over and over again.

I believe we all agree this is a scary practice, which likely exists due to the anxiety that accompanies the thought of updating live, production systems. There is also a legitimate degree of complication brought about by services such as Auto Scaling, but this can be turned to an advantage. Let us see how.

We'll separate a typical EC2 deployment into two groups of instances: static (non-autoscaled) and autoscaled. Our task is to deploy the latest OS updates to both.

In the case of static instances, where scaling is not an option due to some application-specific or other type of limitation, we will have to resort to the well-known approach of first testing the updates in a completely separate environment, then updating our static production hosts (usually one at a time).

With Auto Scaling however, OS patching can be a much more pleasant experience. You will recall Packer and Serverspec from previous chapters, where we used these tools to produce and test AMIs. A similar Jenkins pipeline can also be used for performing OS updates:

- Launch the source AMI.

- Perform a package update.

- Run tests.

- Package a new AMI.

- Proceed with a phased deployment in production.
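The essential property of the steps above is that each stage gates the next: a failed test run must prevent a new AMI from being packaged and deployed. The sketch below captures only that control flow; the stage names and the `run_pipeline` helper are hypothetical stand-ins for what Jenkins, Packer and Serverspec would actually do.

```python
# Stage names mirror the steps listed above; the callables supplied by the
# caller are placeholders for the real tooling.
STAGES = (
    "launch source AMI",
    "update packages",
    "run tests",
    "package new AMI",
    "phased deployment",
)


def run_pipeline(steps):
    """Run (name, callable) pairs in order and stop at the first failure.

    Each callable returns True on success. Returns the list of completed
    stage names and the name of the failed stage (None if all succeeded).
    """
    completed = []
    for name, step in steps:
        if not step():
            return completed, name
        completed.append(name)
    return completed, None
```

For instance, `run_pipeline([(name, lambda: True) for name in STAGES])` returns all five stage names and `None`, whereas a step that returns `False` halts the run before any later stage is attempted.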

To be comfortable with this process, we certainly need to put a decent amount of effort into ensuring that tests, deployment and rollback procedures are as reliable as practically possible, but then the end justifies the means.