In the effort of achieving effortless scalability, we must put emphasis on building stateless applications where possible. Not keeping state on our application nodes would mean storing any valuable data away from them. A classic example is WordPress, where user uploads are usually kept locally, making it difficult to scale such a setup horizontally.

While it is possible to have a shared file system across your EC2 instances using Elastic File System (EFS), for reliability and scalability we are much better off using an object storage solution such as AWS S3.

It is fair to say that accessing S3 objects is not as trivial as working with an EFS volume, however the AWS tools and SDKs lower the barrier considerably. For easy experimenting, you could start with the S3 CLI. Eventually you would want to build S3 capabilities into your application using one of the following:

- Java/.NET/PHP/Python/Ruby or other SDKs (ref: https://aws.amazon.com/tools/)

- REST API (ref: http://docs.aws.amazon.com/AmazonS3/latest/dev/RESTAPI.html)

In previous chapters we examined IAM Roles as a convenient way of granting S3 bucket access to EC2 instances. We can also enhance the connectivity between those instances and S3 using VPC Endpoints:

A VPC endpoint enables you to create a private connection between your VPC and another AWS service without requiring access over the Internet, through a NAT device, a VPN connection, or AWS Direct Connect. Endpoints are virtual devices. They are horizontally scaled, redundant, and highly available VPC components that allow communication between instances in your VPC and AWS services without imposing availability risks or bandwidth constraints on your network traffic. | ||

| --http://docs.aws.amazon.com/AmazonVPC/latest/UserGuide/vpc-endpoints.html | ||

If you have clients in a different geographic location uploading content to your bucket, then S3 transfer acceleration (ref: http://docs.aws.amazon.com/AmazonS3/latest/dev/transfer-acceleration.html) can be used to improve their experience. It is simply a matter of clicking Enable on the bucket's settings page:

We have now covered speed improvements; scalability comes built into the S3 service itself and for cost optimization we have the different storage classes.

S3 currently supports four types (classes) of storage. The most expensive and most durable being the Standard class, which is also the default. This is followed by the Infrequent Access class (Standard_IA) which is cheaper, however keep in mind that it is indeed intended for rarely accessed objects otherwise the associated retrieval cost would be prohibitive. Next is the Reduced Redundancy class which, despite the scary name, is still pretty durable at 99.99%. And lastly, comes the Glacier storage class which is akin to a tape backup in that objects are archived and there is a 3-5 hour retrieval time (with 1-5 minute urgent retrievals available at a higher cost).

You can specify the storage class (except for Glacier) of an object at time of upload or change it retrospectively using the AWS console, CLI or SDK. Archiving to Glacier is done using a bucket lifecycle policy (bucket's settings page):

We need to add a new rule, describing the conditions under which an object gets archived:

Incidentally, Lifecycle rules can also help you clean up old files.

The days of the Wild Wild West when one used to setup web servers with public IPs and DNS round-robin have faded away and the load balancer has taken over.

We are going to look at the AWS ELB service, but this is certainly not the only available option. As a matter of fact, if your use case is highly sensitive to latency or you observe frequent, short lived traffic surges then you might want to consider rolling your own EC2 fleet of load balancing nodes using NGINX or HAProxy.

The ELB service is priced at a flat per-hour fee plus bandwidth charges, so perhaps not much we can do to reduce costs, but we can explore ways of boosting performance.

Under normal conditions, a Classic ELB would deploy its nodes within the zones which our backend (application) instances occupy and forward traffic according to those zones. That is to say, the ELB node in zone A will talk to the backend instance in the same zone, and the same principle applies for zone B:

This is sensible as it clearly ensures lowest latency, but there are a couple of things to note:

- An equal number of backend nodes should be maintained in each zone for best load spread

- Clients caching the IP address for an ELB node would stick to the respective backend instance

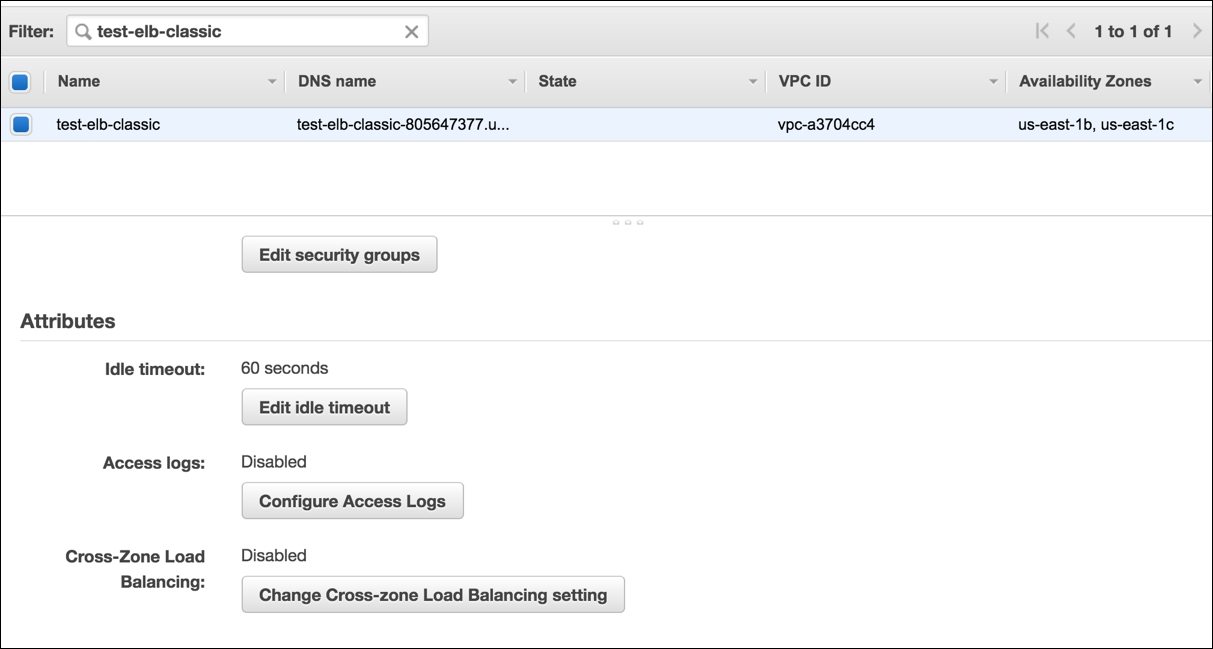

To improve the situation at the expense of some (minimal) added latency, we can enable Cross-Zone Load Balancing in the Classic ELB's properties:

This will change the traffic distribution policy, so that requests to a given ELB node will be evenly spread across all registered (status: InService) backend instances, changing our earlier diagram to this:

An unequal number of backend nodes per zone would no longer have an impact on load balancing, nor would external parties targeting a single ELB instance.

An important aspect of the ELB service is that it runs across a cluster of EC2 instances of a given type, very much like our backend nodes. With that in mind, it should not come as a surprise that ELB scales based on demand, again much like our Auto Scaling Group does.

This is all very well when incoming traffic fluctuates within certain boundaries, so that it can be absorbed by the ELB or increases gradually, allowing enough time for the ELB to scale and accommodate. However, sharp surges can result in ELB dropping connections if large enough.

This can be prevented with a technique called pre-warming or essentially scaling up an ELB ahead of anticipated traffic spikes. Currently this is not something that can be done at the user end, meaning you would need to contact AWS Support with an ELB pre-warming request.

CloudFront or AWS's CDN solution is yet another method of improving the performance of the ELB and S3 services. If you are not familiar with CDN networks, those, generally speaking, provide faster access to any clients you might have in a different geographic location from your deployment location. In addition, a CDN would also cache data so that subsequent requests won't even reach your server (also called origin) greatly reducing load.

So, given our VPC deployment in the US, if we were to setup a CloudFront distribution in front of our ELB and/or S3 bucket, then requests from clients originating in say Europe would be routed to the nearest European CloudFront Point-of-Presence which in turn would either serve a cached response or fetch the requested data from the ELB/S3 over a high-speed, internal AWS network.

To setup a basic web distribution we can use the CloudFront dashboard:

Once we Get Started then the second page presents the distribution properties:

Conveniently, resources within the same AWS account are suggested. The origin is the source of data that CloudFront needs to talk to, for example the ELB sitting in front of our application. In the Alternate Domain Names field we would enter our website address (say www.example.org), the rest of the settings can remain with their defaults for now.

After the distribution becomes active all that is left to do is to update the DNS record for www.example.org currently pointing at the ELB to point to the distribution address instead.

Our last point is on making further EC2 cost savings using Spot instances. These represent unused resources across the EC2 platform, which users can bid on at any given time. Once a user has placed a winning bid and has been allocated the EC2 instance, it remains theirs for as long as the current Spot price stays below their bid, else it gets terminated (a notice is served via the instance meta-data, ref: http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/spot-interruptions.html).

These conditions make Spot instances ideal for workflows, where the job start time is flexible and any tasks can be safely resumed in case of instance termination. For example, one can run short-lived Jenkins jobs on Spot instances (there is even a plugin for this) or use it to run a workflow which performs a series of small tasks that save state regularly to S3/RDS.

Lastly, a simple yet helpful tool to give you an idea of how much your planned deployment would cost: http://calculator.s3.amazonaws.com/index.html (remember to untick the FREE USAGE TIER near the top of the page)

And if you were trying to compare the cost of on-premise to cloud, then this might be of interest: https://aws.amazon.com/tco-calculator/.