2

MAS-aware Approach for QoS-based IoT Workflow Scheduling in Fog-Cloud Computing

Marwa MOKNI (1, 2) and Sonia YASSA (2)

(1) MARS Laboratory LR17ES05, University of Sousse, Tunisia

(2) ETIS Laboratory CNRS UMR8051, CY Cergy Paris University, France

Scheduling latency-sensitive Internet of Things (IoT) applications that generate considerable amounts of data is challenging. Despite its substantial computing and storage capacity, Cloud computing suffers from high latency because of the distance between end-users and Cloud servers. This limitation led to the Fog computing paradigm and, in turn, to the combined Fog-Cloud computing architecture. In this chapter, we exploit the collaboration between Fog and Cloud computing to schedule IoT applications, modeled as workflows, while taking into account the relationships and communications between IoT objects. The proposed scheduling approach is supported by a multi-agent system (MAS) that exploits the independent functionalities of each agent. The main objective of our work is to build the most appropriate scheduling solution, one that optimizes several QoS metrics simultaneously; to this end, we adopt the widely used genetic algorithm metaheuristic as the optimization method. The proposed approach is tested by simulating a healthcare IoT application modeled as a workflow and several scientific workflow benchmarks. The results demonstrate the effectiveness of the proposed approach: it generates a scheduling plan that better optimizes the QoS metrics considered.

2.1. Introduction

The Internet of Things (IoT) comprises a vast number of interconnected objects (Davami et al. 2021). In some cases, IoT objects implement automation routines (Smart Home, Healthcare, etc.) that must execute within strict response times and require advanced programming skills to set up. Notably, in human health monitoring, patients need tools to easily monitor and control the IoT devices around them and to model simple processes that automate daily tasks. To address this issue, we propose a self-managing workflow model, structured as an acyclic graph, that monitors, controls and synchronizes the relationships between the applications generated by IoT objects. Workflow technology orchestrates sequences of activities so that IoT applications execute without errors or disruptions (Hoang and Dang 2017; Mokni et al. 2021). Consequently, an IoT-based workflow requires computing resources that meet its needs and optimize its execution. However, although many existing studies propose workflow models for IoT processes, IoT workflow scheduling has not yet been widely studied. In this chapter, we focus on IoT processes coordinated by a workflow model. Given the nature of IoT applications (Matrouk and Alatoun 2021), their stringent latency requirements make processing IoT workflows at the Cloud computing level a performance bottleneck. Fog computing, a paradigm proposed by Cisco (2015), forms a cooperative architecture with Cloud computing in which the two complement each other. The collaboration between Fog and Cloud services is a suitable solution for producing an IoT-based workflow scheduling plan that may require both reduced latency and significant computing capacity (Saeed et al. 2021). From Cisco's perspective, Fog computing (Chen et al. 2017) is a local extension of Cloud computing from the network core to the network edge.
Fog computing nodes are deployed below the Cloud computing layer, close to end-users. This highly virtualized platform provides computing, storage and networking services between endpoints and traditional Cloud servers and is accessible from users' mobile devices. However, with the increasing complexity of workflows, networks and security requirements, scheduling is becoming more and more challenging, because scheduling algorithms must manage several conflicting parameters that concern distinct actors with different interests. For example, reducing the workflow execution cost typically increases the overall execution time and can lead to the use of less reliable machines. In general, workflow scheduling in the Fog-Cloud computing environment is an NP-hard problem (Helali and Omri 2021) that requires more than simple rules to solve. Therefore, in this chapter, we propose an IoT workflow scheduling approach based on a genetic algorithm (GA) (Bouzid et al. 2020), which builds a multi-objective solution that optimizes several QoS metrics. A critical issue for distributed infrastructures such as Cloud computing is implementing techniques and methods that adapt to changes in state and behavior while following high-level user directives. Self-management, self-detection and autonomy techniques that meet these requirements can be based on multi-agent systems (MAS). Indeed, an MAS (Mokni et al. 2018) is a well-suited paradigm for simulating phenomena in which the interactions between entities are complex, such as negotiating user access, developing intelligent services and efficiently exploiting Cloud and Fog computing resources. In this context, we model an MAS around three layers: the IoT layer, the Fog computing layer and the Cloud computing layer. The key contributions of this work are:

- – proposing a workflow model for IoT processes;

- – developing a multi-objective scheduling solution based on the GA;

- – modeling an MAS to enhance the entities of the scheduling approach.

The remainder of the chapter is organized as follows: section 2.2 reviews existing scheduling approaches. The problem formulation is provided in section 2.3. The proposed model and its design are described in section 2.4, and the GA used to build the scheduling plan is detailed in section 2.5. Section 2.6 describes the experimental setup and presents the results of the performance evaluation. Section 2.7 presents the conclusion and future work.

2.2. Related works

Workflow scheduling problems attract considerable research interest; they arise in various computing environments (e.g. Cloud computing, Fog computing and Cloud-Fog computing) and serve several domains, such as science, bioinformatics and the IoT. Saeedi et al. (2020) propose a workflow scheduling approach for Cloud computing based on the Particle Swarm Optimization (PSO) algorithm. The authors aim to satisfy the conflicting requirements of users and Cloud providers by reducing the makespan, cost and energy consumption and maximizing the reliability of virtual machines. Their results show that the algorithm outperforms the LEAF, MaOPSO and EMS-C algorithms in terms of makespan, cost and energy consumption. Sun et al. (2020) propose a workflow scheduling approach based on an immune-based PSO algorithm, which aims to generate an optimal scheduling plan in terms of cost and makespan; it yields lower cost and makespan than standard PSO. Shirvani (2020) proposes a scientific workflow scheduling approach based on discrete PSO combined with a Hill Climbing local search algorithm, which balances exploration and exploitation to minimize the makespan and the total execution time. Energy consumption in Cloud computing is addressed by Tarafdar et al. (2021), who propose an energy-efficient scheduling algorithm for deadline-sensitive workflows with budget constraints. Their approach minimizes energy consumption and deadline violations by using Dynamic Voltage and Frequency Scaling (DVFS) to adjust the voltage and frequency of the virtual machines executing the workflow tasks. Nikoui et al. (2020) develop a scientific workflow scheduling approach for Cloud computing that minimizes the total execution time and the makespan using a hybrid discrete particle swarm optimization (HDPSO) algorithm; the authors show that scheduling parallelizable tasks on parallel computing machines reduces execution time. Gu and Budati (2020) propose a scheduling approach based on the bat algorithm to reduce the energy consumption caused by the large scale of workflow applications and their heavy resource usage. Aburukba et al. (2020) develop a GA-based IoT request scheduling approach for Fog-Cloud computing that aims to minimize latency; it achieves lower latency than the various algorithms it is compared with. Kaur et al. (2020) propose a prediction-based dynamic multi-objective evolutionary algorithm, called NN-DNSGA-II, which incorporates an artificial neural network into the NSGA-II algorithm to schedule scientific workflows in Cloud computing, taking into account both resource failures and a number of objectives that may change over time. Their detailed results show that the method significantly outperforms non-prediction-based algorithms in most test instances. The experimental results also demonstrate that the proposed approach provides a lower response time and makespan than CPOP, HEFT and QL-HEFT. Setlur et al. (2020) combine the HEFT algorithm with several machine learning techniques, ranging from supervised classification such as logistic regression to maximum entropy models, to determine the replication count of each task. Their approach improves resource wastage and resource usage compared with the Replicate-All algorithm.
Hoang and Dang (2017) propose a region-based task scheduling concept that satisfies resource and latency-sensitivity requirements while using appropriate Cloud resources for computation-heavy tasks. Abdel-Basset et al. (2020) address the IoT task scheduling problem in Fog computing with an approach based on the marine predators algorithm (MPA) that aims to improve several QoS metrics. Aburukba et al. (2021) propose an IoT scheduling approach for Fog computing based on the weighted round-robin algorithm; it targets smart city applications and aims to ensure effective task execution given the available processing capacity.

As summarized in Table 2.1, existing scientific workflow scheduling approaches were developed for the Cloud environment and optimize one or two QoS metrics without considering latency. Conversely, scheduling approaches for IoT tasks in hybrid Fog-Cloud computing favor Fog resources over Cloud resources to minimize cost and latency. However, these works consider neither heterogeneity, nor the relationships between IoT tasks, nor the high dynamicity of computing infrastructures.

This chapter presents the MAS-GA-based approach for IoT workflow scheduling in Fog-Cloud computing, which aims to optimize makespan, cost and latency. The proposed approach relies on an MAS and takes the different QoS metrics into account. The IoT workflow scheduling solution is built with a multi-objective optimization method based on the GA.

2.3. Problem formulation

The workflow scheduling problem on distributed infrastructures revolves around three main entities:

- – workflow tasks to be executed;

- – resources to be allocated;

- – quality of service metrics to be optimized.

Formally, the workflow scheduling problem involves two sides: a provider of m virtual machines (VMs) and a user workflow of n tasks. The tasks must be mapped onto the virtual machines while respecting the precedence constraints between tasks and optimizing the QoS metrics.

Table 2.1. Comparison of existing scheduling approaches

|

Work |

Fog-Cloud |

Cloud Fog Metaheuristic IoT Workflow Dynamicity Makespan Cost Latency | ||||||||

|

Saeedi et al. (2020) |

V |

V |

V |

V |

V | |||||

|

Sun et al. (2020) |

V |

V |

V |

V |

V | |||||

|

Shirvani (2020) |

V |

V |

V |

V | ||||||

|

Tarafdar et al. (2021) |

V |

V |

V | |||||||

|

Nikoui et al. (2020) |

V |

V |

V | |||||||

|

Gu and Budati (2020) |

V |

V |

V | |||||||

|

Aburukba et al. (2020) |

V |

V |

V |

V |

V | |||||

|

Kaur et al. (2020) |

V |

V |

V | |||||||

|

Setlur et al. (2020) |

V |

V |

V |

V |

V | |||||

|

Hoang and Dang (2017) |

V |

V |

V |

V | ||||||

|

Abdel-Basset et al. (2020) |

V |

V |

V |

V | ||||||

|

Aburukba et al. (2021) |

V |

V |

V |

V | ||||||

|

MAS-GA-based approach |

V |

V |

V |

V |

V |

V |

V |

V |

V | |

2.3.1. IoT-workflow modeling

In this chapter, we model a workflow application as a directed acyclic graph (DAG) G = (T, A), where T = {T1, ..., Tn} is the set of workflow tasks and A is the set of arcs between them. Each task Ti ∈ T consists of the set of instructions needed to execute an IoT application. Every task of the IoT workflow G is characterized by an Id (an identifier that distinguishes it), a Computing Size CSi representing its number of instruction lines, a Geographic Position GPi (a longitude and latitude that specify its position) and a set of predecessor tasks. A task Ti in G can only be executed after all of its predecessor tasks have been executed. Tasks are linked by precedence constraints: a task Ti is connected to its successor Tj by an arc Aij characterized by a Communication Weight CWij, which represents the amount of data that must be transferred from Ti to Tj.
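As a minimal sketch, assuming Python 3.9+ and illustrative names (`Task`, `Workflow` and `topological_order` are hypothetical, not the authors' code), the DAG G = (T, A) can be held as tasks with predecessor sets plus a map from each arc to its CWij. Kahn's algorithm, a standard technique, then yields an order in which every task follows all of its predecessors:

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Task:
    """One IoT-workflow task T_i (illustrative field names)."""
    task_id: str
    cs: float                                   # Computing Size CS_i
    gp: tuple[float, float]                     # Geographic Position GP_i: (latitude, longitude)
    predecessors: set[str] = field(default_factory=set)


@dataclass
class Workflow:
    """DAG G = (T, A); each arc (T_i, T_j) maps to its Communication Weight CW_ij."""
    tasks: dict[str, Task]
    cw: dict[tuple[str, str], float]

    def topological_order(self) -> list[str]:
        """Kahn's algorithm: emit a task only once all of its predecessors are emitted."""
        indegree = {tid: len(t.predecessors) for tid, t in self.tasks.items()}
        successors: dict[str, list[str]] = {tid: [] for tid in self.tasks}
        for tid, t in self.tasks.items():
            for p in t.predecessors:
                successors[p].append(tid)
        ready = deque(tid for tid, d in indegree.items() if d == 0)
        order = []
        while ready:
            tid = ready.popleft()
            order.append(tid)
            for s in successors[tid]:
                indegree[s] -= 1
                if indegree[s] == 0:
                    ready.append(s)
        if len(order) != len(self.tasks):
            raise ValueError("precedence constraints contain a cycle")
        return order


# Hypothetical three-task workflow: T1 -> T2, T1 -> T3, T2 -> T3.
wf = Workflow(
    tasks={
        "T1": Task("T1", 400.0, (48.85, 2.35)),
        "T2": Task("T2", 250.0, (48.86, 2.34), {"T1"}),
        "T3": Task("T3", 300.0, (48.84, 2.36), {"T1", "T2"}),
    },
    cw={("T1", "T2"): 10.0, ("T1", "T3"): 5.0, ("T2", "T3"): 8.0},
)
print(wf.topological_order())  # ['T1', 'T2', 'T3']
```

Raising on a cycle is a deliberate design choice here: a graph that fails this check is not a valid DAG workflow.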

2.3.2. Resources modeling

A computing infrastructure (Cloud computing and Fog computing) holds a set of resource nodes N = (N1, ..., Nk). Each node Nj is characterized by a Utilization Threshold UTj, a Geographical Area GAj and a set of virtual machines VM = (VM1, ..., VMh). Each VMi ∈ VM is characterized by a computing capacity expressed in millions of instructions per second (MIPS), a bandwidth (the amount of data that can be transmitted per second) and a price per unit of time.
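The resource model can be sketched the same way. The units (task size in millions of instructions, bandwidth in MB/s), the representation of GAj as a single (latitude, longitude) point, and the helpers `execution_time` and `execution_cost` are assumptions for illustration; the chapter only states that a VM has a MIPS capacity, a bandwidth and a price per unit of time.

```python
from dataclasses import dataclass, field


@dataclass
class VM:
    """A virtual machine VM_i (units are illustrative assumptions)."""
    vm_id: str
    mips: float       # computing capacity, millions of instructions per second
    bandwidth: float  # data transferable per second, e.g. MB/s
    price: float      # price per unit of time


@dataclass
class ResourceNode:
    """A Fog or Cloud resource node N_j."""
    node_id: str
    ut: float                   # Utilization Threshold UT_j
    area: tuple[float, float]   # Geographical Area GA_j, here simplified to a (lat, lon) point
    vms: list[VM] = field(default_factory=list)


def execution_time(cs_mi: float, vm: VM) -> float:
    """Time to run a task of cs_mi million instructions on vm (size / MIPS)."""
    return cs_mi / vm.mips


def execution_cost(cs_mi: float, vm: VM) -> float:
    """Execution time multiplied by the VM's price per unit of time."""
    return execution_time(cs_mi, vm) * vm.price


fog = ResourceNode("N1", ut=1000.0, area=(48.85, 2.35),
                   vms=[VM("VM1", mips=500.0, bandwidth=20.0, price=0.02)])
print(execution_time(2000.0, fog.vms[0]))  # 4.0
```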

2.3.3. QoS-based workflow scheduling modeling

Multi-objective optimization is a critical issue in many real-world problems, such as workflow scheduling in the Cloud-Fog computing environment, which is generally characterized by a set of complex and conflicting objectives. These objectives are expressed as a set of QoS metrics to be optimized: (1) makespan, which is commonly used to evaluate workflow scheduling algorithms; (2) cost, the total cost of executing a workflow; and (3) response time, which covers the data transfer time over the network and the elapsed time in the Fog layer. These metrics are defined by equations [2.1], [2.2] (Yassa et al. 2013) and [2.3], respectively:

- – The makespan metric:

$$\text{Makespan} = \max_{T_i \in T} DF(T_i) \qquad [2.1]$$

where,

- - Ti is a task of the workflow;

- - DF(Ti) is the finish date (end of execution) of task Ti.
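The finish dates DF(Ti) behind the makespan of equation [2.1] depend on the schedule. The sketch below computes them under stated assumptions: each VM runs one task at a time, tasks are dispatched in a given topological order, and data exchanged between two tasks on the same VM needs no transfer time. The function names and the three-task example are hypothetical.

```python
def finish_dates(order, preds, exec_time, assignment, comm_time):
    """Return DF(T_i) for every task (hypothetical helper).

    order      -- task ids in a topological order, also used as dispatch order
    preds      -- task id -> list of predecessor ids
    exec_time  -- task id -> execution time on its assigned VM
    assignment -- task id -> VM id (the encoded scheduling solution)
    comm_time  -- (pred, task) -> transfer time when the two run on different VMs
    """
    vm_free = {}  # VM id -> time at which the VM becomes idle
    df = {}
    for t in order:
        vm = assignment[t]
        # Data from a predecessor on the same VM is assumed to be available at once.
        data_ready = max(
            (df[p] + (0.0 if assignment[p] == vm else comm_time[(p, t)]) for p in preds[t]),
            default=0.0,
        )
        start = max(vm_free.get(vm, 0.0), data_ready)
        df[t] = start + exec_time[t]
        vm_free[vm] = df[t]
    return df


def makespan(df):
    """Makespan = latest finish date over all tasks."""
    return max(df.values())


df = finish_dates(
    order=["A", "B", "C"],
    preds={"A": [], "B": [], "C": ["A", "B"]},
    exec_time={"A": 2.0, "B": 3.0, "C": 1.0},
    assignment={"A": "VM1", "B": "VM2", "C": "VM1"},
    comm_time={("A", "C"): 1.0, ("B", "C"): 2.0},
)
print(df, makespan(df))  # {'A': 2.0, 'B': 3.0, 'C': 6.0} 6.0
```

Task C waits for B's data, which arrives at 3.0 + 2.0 = 5.0 because B ran on another VM, so C finishes at 6.0.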

- – The cost metric:

This metric represents the total cost of execution of a workflow. It is given by the following equation:

where:

- - DF(Ti) is the finish date (end of execution) of task Ti;

- - Uj is the unit price of the virtual machine VMj that processes task Ti;

- - CWij is the communication weight between Ti and Tj;

- - TRCij is the communication cost between the machine to which Ti is mapped and the machine to which Tj is assigned.

- – The response time metric:

The response time represents the data transfer time over the network plus the elapsed time in the Fog layer. In this work, we compute it as the sum of the Transfer Times TT of the request from the Contractual Agent (CTA) to the Fog Agent, between Fog Agents, between a Fog Agent and a Cloud Agent, and from the Fog Agent back to the CTA. The Propagation Time PT represents the time a signal takes to propagate from one point to another. In our case, Wi-Fi is used as the network medium between agents.

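$$RT = \sum_{h} \left( TT_h + PT_h \right) \qquad [2.3]$$

where $h$ ranges over the transfers of the request listed above,

$$TT_h = \frac{\text{RequestSize}}{\text{VMBandwidth}} \qquad [2.4]$$

and

$$PT_h = \text{Distance} \times \text{PropagationSpeed} \qquad [2.5]$$

with: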

- - RequestSize is the size of the data to be transferred;

- - VMBandwidth (MB/s) is the amount of data that can be transferred per second;

- - Distance is the distance, in meters, between two virtual machines;

- - PropagationSpeed (s/m) is the time the request takes to travel one meter.
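Under one reading of the response-time definition, in which every transfer of a request contributes its transfer time (RequestSize / VMBandwidth) and its propagation time (Distance × PropagationSpeed), the metric can be computed as below. The hop list, the MB and meter units, and the per-meter delay of about 1/c for radio propagation are assumptions for illustration.

```python
PROPAGATION_DELAY_S_PER_M = 1 / 3e8  # assumed: radio signal travelling at about the speed of light


def transfer_time(request_size_mb: float, vm_bandwidth_mb_s: float) -> float:
    """TT = RequestSize / VMBandwidth, in seconds."""
    return request_size_mb / vm_bandwidth_mb_s


def propagation_time(distance_m: float, propagation_speed_s_per_m: float) -> float:
    """PT = Distance x PropagationSpeed, PropagationSpeed being a delay per meter."""
    return distance_m * propagation_speed_s_per_m


def response_time(hops: list[tuple[float, float, float, float]]) -> float:
    """Sum TT + PT over every transfer of a request.

    Each hop is (request_size_mb, vm_bandwidth_mb_s, distance_m, propagation_speed_s_per_m),
    for example CTA -> Fog Agent, Fog Agent -> Cloud Agent or Fog Agent -> CTA.
    """
    return sum(transfer_time(s, b) + propagation_time(d, p) for s, b, d, p in hops)


# Hypothetical request: 10 MB from a CTA to a Fog Agent 2 km away, 1 MB reply back.
hops = [
    (10.0, 100.0, 2_000.0, PROPAGATION_DELAY_S_PER_M),
    (1.0, 100.0, 2_000.0, PROPAGATION_DELAY_S_PER_M),
]
print(response_time(hops))
```

With these figures, the transfer time (0.11 s) dwarfs the propagation time (about 13 microseconds), which is why distance mostly matters when requests are forwarded to remote Cloud data centers.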

The average latency therefore depends on the distances between the scheduling actors (users, Fog nodes and Cloud data centers), so we define the Earth position of each user, Fog node and Cloud data center. We then use the Haversine formula, which gives the orthodromic distance (the shortest distance between two points on the surface of a sphere) between two points on the Earth's surface from their latitudes and longitudes. The Haversine formula is given by the following equation:

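$$d = 2R \arcsin\left(\sqrt{\sin^2\left(\frac{\Phi_2 - \Phi_1}{2}\right) + \cos\Phi_1 \cos\Phi_2 \sin^2\left(\frac{\lambda_2 - \lambda_1}{2}\right)}\right) \qquad [2.6]$$

where d is the distance between points 1 and 2, and: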

- - R is the radius of the sphere (here, the Earth);

- - Φ1, Φ2 are the latitudes of points 1 and 2, respectively;

- - λ1, λ2 are the longitudes of points 1 and 2, respectively.
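The Haversine formula translates directly into code. The sketch below uses Python's standard `math` module and assumes a mean Earth radius of 6,371 km for R; coordinates are given in degrees and converted to radians first. The function name is illustrative.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius used for R


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float,
                 radius_km: float = EARTH_RADIUS_KM) -> float:
    """Great-circle distance between two (latitude, longitude) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(a))


# One degree of longitude along the equator.
print(round(haversine_km(0.0, 0.0, 0.0, 1.0), 2))  # 111.19
```

Multiplying the result by 1,000 gives the Distance in meters used for the propagation time.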

2.4. MAS-GA-based approach for IoT workflow scheduling

In this section, we present the main concepts, definitions and notations related to the proposed approach.

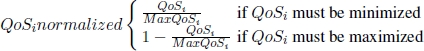

2.4.1. Architecture model

The proposed approach is modeled around three layers. The user layer consists of an IoT system with three levels that communicate with one another to connect the tangible world of objects to the virtual world. As illustrated in Figure 2.1, the IoT level holds a set of IoT devices connected to a workflow management level, which communicates with the hardware and software levels over the network and extracts services from IoT objects to form a workflow. This level is managed by a Manager Agent that communicates with the scheduler level, driven by a set of Contractual Agents. The Fog layer represents the Fog computing infrastructure, consisting of interconnected resource nodes N = (N1, ..., Nk). Each Fog node is supervised by a Fog Agent that communicates with other Fog Agents to maximize the use of Fog resources. The Fog environment adopted in this work consists of sets of nodes that can reside on a factory floor, along a railroad track or in a vehicle. Any device with computing, storage and network connectivity can be a Fog node, such as Wi-Fi hot spots, switches, routers and embedded servers. Fog nodes can be deployed anywhere with a network connection (Cisco 2015). The Fog computing layer collaborates with the Cloud computing layer, which is also equipped with a set of interconnected resource nodes supervised by Cloud Agents.

Figure 2.1. Solution architecture. For a color version of this figure, see www.iste.co.uk/chelouah/optimization.zip

2.4.2. Multi-agent system model

An MAS comprises agents located in a specific environment that interact, collaborate, negotiate and cooperate according to certain relationships. Each agent has explicit information and plans that allow it to accomplish its goals. In this chapter, we model an MAS composed of several agent types, each with a specific mission. Each agent type corresponds to a component of the proposed approach, as described below:

2.4.2.1. Agent types

- – Manager Agent

The global workflow is managed by the first type of agent, the Manager Agent (MA), which supervises the workflow execution while respecting the imposed deadline and budget. First, the MA applies the Mixed Min-Cut Graph (MMCG) algorithm, which partitions the global workflow into p sub-workflows to reduce the overall workflow execution time by minimizing data movement and communication costs between tasks. Second, each generated sub-workflow is assigned to a Contractual Agent through a contract, in which the MA specifies the sub-workflow characteristics and the budget and deadline to be respected.
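The MMCG algorithm itself is not reproduced here. As a hedged illustration of the quantity that a min-cut partitioning tries to keep small, the sketch below computes the total communication weight CWij carried by arcs whose endpoints fall in different sub-workflows, and brute-forces the best two-way split of a tiny workflow. The function names, the dict-based partition format and the example weights are hypothetical.

```python
from itertools import product


def cut_weight(cw: dict[tuple[str, str], float], part: dict[str, int]) -> float:
    """Total CW_ij over arcs (T_i, T_j) whose tasks lie in different sub-workflows."""
    return sum(w for (i, j), w in cw.items() if part[i] != part[j])


def best_bipartition(tasks: list[str], cw: dict[tuple[str, str], float]):
    """Exhaustive search over two-way splits; only practical for a handful of tasks."""
    best = None
    for labels in product((0, 1), repeat=len(tasks)):
        if len(set(labels)) < 2:
            continue  # both sub-workflows must be non-empty
        part = dict(zip(tasks, labels))
        w = cut_weight(cw, part)
        if best is None or w < best[0]:
            best = (w, part)
    return best


cw = {("T1", "T2"): 5.0, ("T2", "T3"): 2.0, ("T1", "T3"): 1.0}
print(cut_weight(cw, {"T1": 0, "T2": 0, "T3": 1}))  # 3.0
print(best_bipartition(["T1", "T2", "T3"], cw))     # (3.0, {'T1': 0, 'T2': 0, 'T3': 1})
```

Without a balance constraint, a pure minimum cut tends to isolate a single lightly connected task, as in this example; practical partitioning usually also controls the size of each sub-workflow.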

- – Contractual Agents

The MAS adopted in our work includes a set of Contractual Agents CTA = (CTA1, ..., CTAp), one per sub-workflow. They collaborate to execute the global workflow in minimum execution time. Each of them assigns all the tasks of its sub-workflow to appropriate resources by communicating with the closest Fog Agent. Each CTAi is constrained by a deadline and a budget that must be respected.

- – Fog Agent

The MAS also includes a set of Fog Agents FA = (FA1, ..., FAf), which represent the Fog resource providers in the Fog computing environment. Each FAi ∈ FA is responsible for creating a scheduling plan for a received sub-workflow that optimizes the QoS metrics.

- – Cloud Agent

Each Cloud Agent CAi ∈ CA = (CA1, ..., CAc) manages a Cloud data center and collaborates with the Fog Agents to create the most suitable scheduling solution.

2.4.3. MAS-based workflow scheduling process

The MA launches the MAS-based workflow scheduling process, since it supervises the global workflow execution. After applying the MMCG partitioning algorithm (Italiano et al. 2011) to the workflow, the MA creates p Contractual Agents (CTAs) and assigns to each one the characteristics of a sub-workflow and its deadline and budget constraints. Each CTA analyzes the sub-workflow it receives and builds a resource request RRi, as given in formula [2.7], which it sends to the closest Fog Agent FAi.

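$$RR_i = \langle \text{Id Tasks}, \text{Precedence Constraints}, \text{QoSs}, CS_i, D_i, B_i \rangle \qquad [2.7]$$

where: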

- – Id Tasks: the set of unique identifiers of the tasks in the sub-workflow;

- – Precedence Constraints: the relationships between tasks, which determine the scheduling order that must be respected;

- – QoSs: the quality of service metrics that must be satisfied;

- – CSi: the Computing Size of the sub-workflow;

- – Di: the deadline for the sub-workflow execution, imposed by the Manager Agent;

- – Bi: the budget for the sub-workflow execution, imposed by the Manager Agent.
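A resource request can be sketched as an immutable record. The field names, the fixed list of three QoS metrics and, in particular, the choice to compute CSi as the sum of the computing sizes of the sub-workflow's tasks are assumptions made for illustration; `build_request` is a hypothetical helper that a CTA might use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResourceRequest:
    """RR_i sent by a Contractual Agent (illustrative field names)."""
    id_tasks: tuple[str, ...]
    precedence: tuple[tuple[str, str], ...]  # (T_i, T_j): T_j waits for T_i
    qos: tuple[str, ...]                     # metrics to satisfy
    cs: float                                # Computing Size CS_i
    deadline: float                          # D_i, imposed by the Manager Agent
    budget: float                            # B_i, imposed by the Manager Agent


def build_request(task_cs: dict[str, float], arcs, deadline: float, budget: float) -> ResourceRequest:
    """Assumes CS_i is the total computing size of the sub-workflow's tasks."""
    return ResourceRequest(
        id_tasks=tuple(task_cs),
        precedence=tuple(arcs),
        qos=("makespan", "cost", "latency"),
        cs=sum(task_cs.values()),
        deadline=deadline,
        budget=budget,
    )


rr = build_request({"T1": 400.0, "T2": 250.0}, [("T1", "T2")], deadline=60.0, budget=5.0)
print(rr.cs)  # 650.0
```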

The CTA maintains a Knowledge Directory KD, which contains information on all Fog Agents and which the CTA uses to select the closest Fog Agent. The KD is similar to the Directory Facilitator of the JADE framework (Bellifemine et al. 2000), whose entries are described by DFAgentDescription objects and which provides a directory system that allows agents to find service provider agents by ID. After receiving the request RRi from the CTA, the FA compares the computing size CSi of RRi with its Utilization Threshold UTi. If RRi respects UTi, the Fog Agent proposes to the CTA a scheduling solution on its own resources, created by the GA. Otherwise, to maximize Fog resource utilization, FAi generates a scheduling solution on its own resources for the sub-workflow tasks that respect its utilization threshold and creates a new request RRj for the remaining tasks. RRj is then sent to all the Fog Agents in the KD so that they can collaborate to find an appropriate scheduling plan on Fog resources. FAi also defines a Waiting Time (WT) for receiving a scheduling solution from the other Fog Agents: if WT expires without any scheduling offer being accepted, FAi sends RRj to the Cloud Agent. The MA ends the process after receiving the global workflow scheduling plan from all CTAs. The steps of the proposed MAS-GA workflow scheduling approach are illustrated in Algorithm 2.1.
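The Fog Agent's decision can be sketched as a simplified placement function. This is not the authors' Algorithm 2.1: the GA that builds the local plan is left out, the greedy choice of which tasks stay local is an assumption, and the offers received before WT expires are modeled as a plain dictionary of spare capacities, with the first sufficient offer accepted. `place_tasks` and its parameters are hypothetical.

```python
def place_tasks(tasks, ut, fog_offers):
    """Sketch of a Fog Agent's reaction to a resource request (hypothetical helper).

    tasks      -- list of (task_id, cs) pairs in precedence order
    ut         -- this Fog node's Utilization Threshold, in the same unit as cs
    fog_offers -- fog_id -> spare capacity offered by another Fog Agent before the
                  Waiting Time expires; agents that did not answer are absent
    Returns task_id -> "local", the id of another Fog Agent, or "cloud".
    """
    if sum(cs for _, cs in tasks) <= ut:
        return {tid: "local" for tid, _ in tasks}  # the whole request respects UT
    placement, used, remaining = {}, 0.0, []
    for tid, cs in tasks:  # greedily keep the tasks that still fit under UT
        if used + cs <= ut:
            placement[tid] = "local"
            used += cs
        else:
            remaining.append((tid, cs))
    rest = sum(cs for _, cs in remaining)  # size of the new request RR_j
    # First Fog Agent able to host RR_j; otherwise fall back to the Cloud Agent.
    target = next((fid for fid, cap in fog_offers.items() if cap >= rest), "cloud")
    for tid, _ in remaining:
        placement[tid] = target
    return placement


tasks = [("T1", 30.0), ("T2", 50.0), ("T3", 40.0)]
print(place_tasks(tasks, ut=80.0, fog_offers={"FA2": 30.0, "FA3": 50.0}))
# {'T1': 'local', 'T2': 'local', 'T3': 'FA3'}
```

An empty `fog_offers` dictionary plays the role of a Waiting Time that expires without any offer, which sends the remaining tasks to the Cloud.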

Figure 2.2. GA flowchart

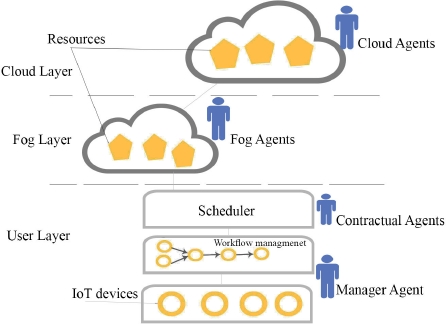

2.5. GA-based workflow scheduling plan

The GA is one of the most widely used metaheuristics for solving multi-objective optimization problems; here, it optimizes the QoS metrics of the workflow scheduling solution. Figure 2.2 depicts the global GA flowchart. The GA starts by randomly creating the first population, which consists of a set of scheduling solutions. Based on the QoS metrics, the GA evaluates each individual of the population (a scheduling solution) by computing the fitness function F. The GA then applies the roulette wheel technique to select the most appropriate individuals for reproducing a new generation and applies the crossover operator to each pair of individuals, called “parents”, to produce two new individuals, called “children”. The mutation operator is then applied to the children. These steps are repeated until the GA converges to the most suitable scheduling solution or the maximum number of generations is reached. Figure 2.3 illustrates an example of the workflow scheduling process, which is the essential part of generating a population. The scheduling process selects a ready task (a task whose predecessor tasks have all been scheduled) and schedules it on the virtual machine that minimizes the fitness function. The process is repeated until all workflow tasks are scheduled.
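The roulette wheel step can be sketched as follows. Because the fitness F is minimized here, this sketch assumes that each individual's slice of the wheel is proportional to 1/F; other inversions are possible, and the chapter does not state which one is used. The function name and sample population are illustrative.

```python
import random


def roulette_select(population, fitness, rng):
    """Fitness-proportionate (roulette wheel) selection for a minimized fitness F.

    Each individual's slice of the wheel is proportional to 1 / F, so lower
    (better) fitness values are drawn more often. Assumes every F > 0.
    """
    weights = [1.0 / f for f in fitness]
    spin = rng.uniform(0.0, sum(weights))
    cumulative = 0.0
    for individual, w in zip(population, weights):
        cumulative += w
        if spin <= cumulative:
            return individual
    return population[-1]  # guards against floating-point round-off


rng = random.Random(42)
population = [["VM1", "VM1", "VM2"], ["VM2", "VM3", "VM3"]]
fitness_values = [0.2, 0.8]  # the first schedule has the better (lower) fitness
parents = [roulette_select(population, fitness_values, rng) for _ in range(2)]
```

With fitness values 0.2 and 0.8, the weights are 5 and 1.25, so the first schedule is selected about 80% of the time.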

Figure 2.3. Workflow scheduling process

2.5.1. Solution encoding

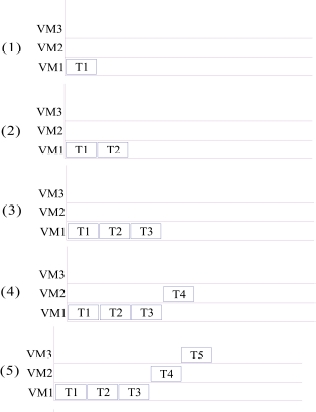

A workflow schedule assigns every workflow task to an appropriate resource without violating the precedence constraints between tasks. Figure 2.4 illustrates an example of a workflow composed of five tasks, where T1, T2 and T3 are the input tasks and can be executed in parallel, since no precedence constraint connects them. Tasks T4 and T5 then follow in sequence and must respect the precedence constraint between them. For example, T4 can only start when all of its predecessors have been executed and have transmitted their data.

Figure 2.4. Workflow example. For a color version of this figure, see www.iste.co.uk/chelouah/optimization.zip

Figure 2.5 shows an example of three VMs interconnected by links of given bandwidth and modeled as an undirected graph.

Figure 2.5. Example of resources. For a color version of this figure, see www.iste.co.uk/chelouah/optimization.zip

A scheduling solution must be created from the VM graph and the workflow while considering all the imposed objectives and constraints. Table 2.2 presents an example of solution encoding, in which each workflow task is assigned to a resource.

Table 2.2. Solution encoding

| Task | T1 | T2 | T3 | T4 | T5 |
|---|---|---|---|---|---|
| Resource | VM1 | VM1 | VM1 | VM2 | VM3 |
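The encoding of Table 2.2 is simply a vector indexed by task. The sketch below decodes it into per-VM task lists and checks an ordering against precedence constraints. The edges T1, T2, T3 → T4 → T5 are an assumed reading of the Figure 2.4 example, and `decode` and `respects_precedence` are hypothetical helpers.

```python
# Table 2.2: position k holds the VM assigned to the k-th task.
tasks = ["T1", "T2", "T3", "T4", "T5"]
encoding = ["VM1", "VM1", "VM1", "VM2", "VM3"]

# Assumed precedence for the Figure 2.4 example: T1, T2, T3 -> T4 -> T5.
preds = {"T1": [], "T2": [], "T3": [], "T4": ["T1", "T2", "T3"], "T5": ["T4"]}


def decode(encoding, tasks):
    """Group tasks by VM, keeping the task order of the encoding."""
    per_vm = {}
    for task, vm in zip(tasks, encoding):
        per_vm.setdefault(vm, []).append(task)
    return per_vm


def respects_precedence(order, preds):
    """True if every task appears after all of its predecessors."""
    pos = {t: i for i, t in enumerate(order)}
    return all(pos[p] < pos[t] for t in order for p in preds[t])


print(decode(encoding, tasks))  # {'VM1': ['T1', 'T2', 'T3'], 'VM2': ['T4'], 'VM3': ['T5']}
print(respects_precedence(tasks, preds))  # True
```

In this encoding, T1, T2 and T3 could run in parallel but share VM1, so they execute one after another on that VM.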

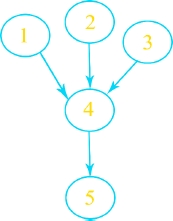

2.5.2. Fitness function

The fitness function F optimizes several QoS metrics simultaneously by assigning a weight Wi to each of them; each weight expresses the importance that the user gives to the corresponding metric. The fitness function is given in equation [2.8].

As shown in equation [2.9], we normalize the metrics to determine the fitness value of a solution, because the QoS metrics have different values and scales; moreover, some metrics must be maximized and others minimized.

where:

MaxQoSi is the maximal value of QoSi found during the previous iteration.
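A minimal sketch of such a weighted, normalized fitness follows. It assumes that all three metrics (makespan, cost, latency) are minimized and normalized by dividing each value by MaxQoSi; the handling of maximized metrics is left out, and the function name, weights and values are hypothetical.

```python
def fitness(qos, max_qos, weights):
    """Weighted sum of normalized metrics, all minimized here (lower is better).

    qos     -- metric -> value of the candidate schedule
    max_qos -- metric -> MaxQoS_i found during the previous iteration
    weights -- metric -> user weight W_i
    """
    return sum(w * qos[m] / max_qos[m] for m, w in weights.items())


weights = {"makespan": 0.4, "cost": 0.3, "latency": 0.3}
max_qos = {"makespan": 120.0, "cost": 8.0, "latency": 0.5}
candidate = {"makespan": 60.0, "cost": 4.0, "latency": 0.25}
print(fitness(candidate, max_qos, weights))
```

Normalizing by MaxQoSi puts every metric on a comparable scale, so the weights Wi, rather than the raw units, decide how much each metric counts.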

2.5.2.1. Crossover operator

The crossover operator combines parts of selected individuals to produce new individuals. First, it chooses two random points in the scheduling order of the first selected parent (individual 1). Then, the positions of the tasks located between these two points are permuted. This step produces two new individuals, called children. An illustration of the crossover operator is shown in Figure 2.6.
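The description above leaves some details open, so the sketch below substitutes the standard two-point crossover on the task-to-VM assignment vector; it may differ from the authors' exact operator. Genes between two random cut points are exchanged between the parents, so each child still assigns exactly one VM to every task. The function name and the second parent are hypothetical.

```python
import random


def two_point_crossover(parent1, parent2, rng):
    """Exchange the genes (VM assignments) lying between two random cut points.

    Parents are encodings like Table 2.2: position k holds the VM of task k.
    """
    n = len(parent1)
    i, j = sorted(rng.sample(range(n + 1), 2))  # 0 <= i < j <= n, non-empty segment
    child1 = parent1[:i] + parent2[i:j] + parent1[j:]
    child2 = parent2[:i] + parent1[i:j] + parent2[j:]
    return child1, child2


rng = random.Random(1)
parent1 = ["VM1", "VM1", "VM1", "VM2", "VM3"]  # Table 2.2 encoding
parent2 = ["VM3", "VM2", "VM2", "VM1", "VM1"]  # hypothetical second parent
child1, child2 = two_point_crossover(parent1, parent2, rng)
```

Working on the assignment vector keeps every child a complete schedule; precedence is then enforced when tasks are dispatched in a topological order.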

2.5.3. Mutation operator

The mutation method used is swap mutation: for a given individual, two randomly selected tasks exchange their assigned resources. Figure 2.7 illustrates the swap mutation operation, in which the resources allocated to task 1 and task 4 of the chosen solution are exchanged.
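Swap mutation on the assignment vector can be sketched as below. The optional `positions` argument is a hypothetical addition that makes the swap deterministic; here it reproduces the task 1 / task 4 exchange on the Table 2.2 encoding.

```python
import random


def swap_mutation(individual, rng, positions=None):
    """Return a copy in which two tasks exchange their assigned VMs.

    positions -- optional (a, b) pair of task indices; chosen at random if omitted.
    """
    child = list(individual)
    a, b = positions if positions is not None else rng.sample(range(len(child)), 2)
    child[a], child[b] = child[b], child[a]
    return child


rng = random.Random(7)
table_2_2 = ["VM1", "VM1", "VM1", "VM2", "VM3"]
print(swap_mutation(table_2_2, rng, positions=(0, 3)))  # ['VM2', 'VM1', 'VM1', 'VM1', 'VM3']
print(swap_mutation(table_2_2, rng))                    # random pair of tasks swapped
```

Because the operator only swaps existing genes, each VM's number of assigned tasks stays the same; only which tasks it runs changes.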

Figure 2.6. Illustration of crossover operator

Figure 2.7. Illustration of mutation operation

2.6. Experimental study and analysis of the results

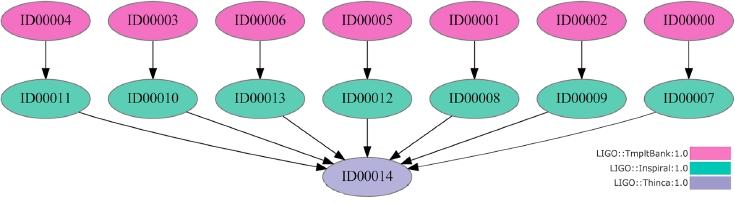

To evaluate the proposed MAS-GA-based approach for IoT workflow scheduling, we defined the Fog-Cloud compute resources used in our experiments based on the characteristics of Amazon EC2 instances. We tested the proposed scheduling approach on a real-case IoT workflow, the health monitoring process illustrated in Figure 2.8, and on the Inspiral-15 scientific workflow benchmark shown in Figure 2.9. We set the number of resources in the Cloud environment to four VMs and varied the number of VMs in the Fog environment from 1 to 3. The health monitoring process of Figure 2.8 remotely monitors the health of patients in a hospital. It is managed by a workflow containing three main tasks. The first task, the “coordination node”, receives data from sensors, stores it and generates diagnostic information. The second task, the “medical assistant node”, receives the diagnostic information from the previous node and determines whether or not to signal an emergency alert. The last task, the “hospital node”, acts on this decision according to the feedback received from the previous node. We also executed the Inspiral-15 workflow benchmark, a scientific workflow that requires substantial computing capacity to complete. As illustrated in Figure 2.9, Inspiral-15 contains 15 tasks structured both in parallel and in sequence.

Figure 2.8. Health monitoring process

Figure 2.9. Inspiral-15 workflow benchmark. For a color version of this figure, see www.iste.co.uk/chelouah/optimization.zip

2.6.1. Experimental results

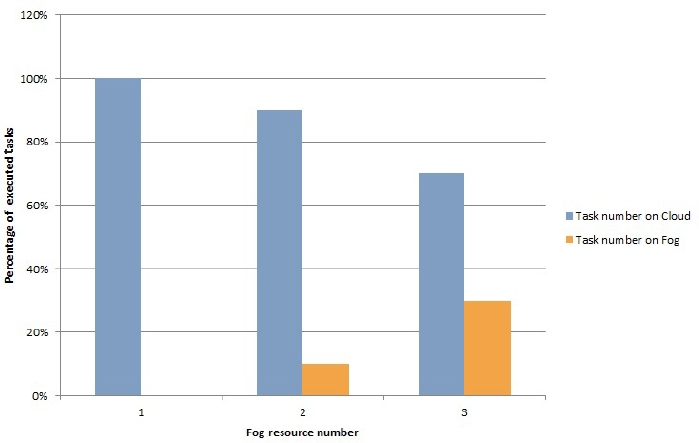

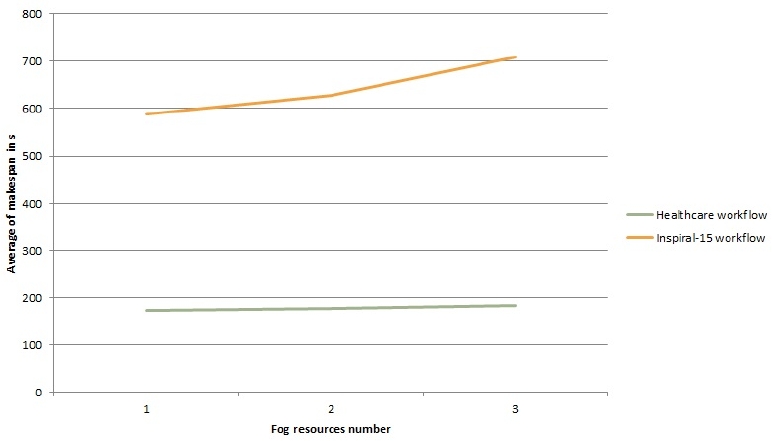

The experimental results show that increasing the number of resources in the Fog layer improves the collaboration between Cloud and Fog computing. As shown in Figure 2.10, the third configuration, with 3 VMs in the Fog layer, maximizes the number of tasks executed on the Fog layer rather than the Cloud layer while still relying on the collaboration between them, which minimizes both cost and latency. However, Cloud-Fog collaboration affects the makespan values, since Fog resources are less efficient than Cloud resources and slow down workflow execution. As shown in Figure 2.11, more Inspiral-15 tasks are executed on the Cloud layer than on the Fog layer, even when the number of Fog resources is increased, because scientific workflows such as Inspiral-15 require high computational capacity. Furthermore, executing all the Inspiral-15 tasks in the Cloud layer yields a lower makespan than Fog-Cloud collaboration, but higher cost and latency values: the Inspiral-15 scheduling solution produced with Fog-Cloud collaboration is the cheapest and has the lowest latency. Makespan, cost and latency are averaged over 10 executions. Figure 2.13 shows the average makespan when executing the healthcare workflow and the Inspiral-15 workflow. For the healthcare workflow, the average makespan increases moderately as the number of Fog VMs grows, whereas for the Inspiral-15 workflow it increases exponentially. Fog-Cloud collaboration thus affects the makespan when executing a scientific workflow, because Fog resources have low efficiency.
Figure 2.14 shows the cost of scheduling the healthcare workflow and the Inspiral-15 workflow: the cost decreases as the number of Fog VMs increases, because Fog resources are cheaper than Cloud resources.

Figure 2.12 depicts the latency results. As with Figure 2.10, it shows that enhancing the collaboration between Fog and Cloud resources is a suitable way to reduce the average latency when executing the healthcare workflow and the Inspiral-15 workflow, because the proposed scheduling approach maximizes the utilization of Fog resources rather than Cloud resources. Finally, these results show that Fog-Cloud computing is suitable for executing IoT-based workflows, since it optimizes cost and latency simultaneously.

Figure 2.10. Percentage of IoT workflow tasks executed on Fog computing and Cloud computing. For a color version of this figure, see www.iste.co.uk/chelouah/optimization.zip

Figure 2.11. Percentage of Inspiral-15 workflow tasks executed on Fog computing and Cloud computing. For a color version of this figure, see www.iste.co.uk/chelouah/optimization.zip

Figure 2.12. Average latency when executing the healthcare workflow and the Inspiral-15 workflow. For a color version of this figure, see www.iste.co.uk/chelouah/optimization.zip

Figure 2.13. Average makespan when executing the healthcare workflow and the Inspiral-15 workflow. For a color version of this figure, see www.iste.co.uk/chelouah/optimization.zip

Figure 2.14. Average cost when executing the healthcare workflow and the Inspiral-15 workflow. For a color version of this figure, see www.iste.co.uk/chelouah/optimization.zip

However, Fog-Cloud collaboration increases the makespan when running both the scientific workflow and the IoT workflow.

2.7. Conclusion

In this chapter, we have presented a MAS-GA-based approach for IoT workflow scheduling in Fog-Cloud computing environments. The approach builds a multi-objective scheduling solution using the widely used GA metaheuristic. We have tested and validated its ability to produce an efficient IoT workflow scheduling solution, in terms of makespan, cost and latency, on a real healthcare IoT workflow use case under various experimental scenarios in the WorkflowSim simulator. Experimental results demonstrate that the MAS-GA-based approach generates a scheduling solution that optimizes cost and latency by maximizing the number of workflow tasks executed on Fog resources. Future work will extend the proposed model with an agent negotiation mechanism, which may help achieve a scheduling solution that satisfies both the consumer and the resource provider.
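Because the scheduling problem is multi-objective (makespan, cost and latency are all minimized), one standard way to compare candidate schedules without collapsing them into a single score is Pareto dominance. The sketch below is a generic illustration of that technique; the fitness and selection scheme actually used in the MAS-GA approach may differ, and the schedule names and objective values are hypothetical.

```python
def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives minimized).

    a is no worse than b on every objective and strictly better on at least one.
    """
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated(solutions):
    """Keep the schedules whose (makespan, cost, latency) vector no other schedule dominates."""
    return [
        s for s in solutions
        if not any(dominates(o["objectives"], s["objectives"]) for o in solutions if o is not s)
    ]


# Hypothetical candidate schedules: (makespan, cost, latency).
candidates = [
    {"name": "A", "objectives": (10.0, 5.0, 3.0)},
    {"name": "B", "objectives": (12.0, 4.0, 2.0)},
    {"name": "C", "objectives": (12.0, 6.0, 4.0)},
]
front = non_dominated(candidates)
print([s["name"] for s in front])
```

Here schedule C is dominated by A (worse on all three objectives), while A and B trade makespan against cost and latency, so both remain on the front.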

2.8. References

Abdel-Basset, M., Mohamed, R., Elhoseny, M., Bashir, A.K., Jolfaei, A., Kumar, N. (2020). Energy-aware marine predators algorithm for task scheduling in IoT-based fog computing applications. IEEE Transactions on Industrial Informatics, 17(7), 5068–5076.

Aburukba, R.O., AliKarrar, M., Landolsi, T., El-Fakih, K. (2020). Scheduling internet of things requests to minimize latency in hybrid fog–cloud computing. Future Generation Computer Systems, 111, 539–551.

Aburukba, R.O., Landolsi, T., Omer, D. (2021). A heuristic scheduling approach for fog-cloud computing environment with stationary IoT devices. Journal of Network and Computer Applications, 102994.

Bellifemine, F., Poggi, A., Rimassa, G. (2000). Developing multi-agent systems with JADE. International Workshop on Agent Theories, Architectures, and Languages, 89–103.

Bouzid, S., Serrestou, Y., Raoof, K., Omri, M., Mbarki, M., Dridi, C. (2020). MOONGA: Multi-objective optimization of wireless network approach based on genetic algorithm. IEEE Access, 8, 105793–105814.

Chen, S., Zhang, T., Shi, W. (2017). Fog computing. IEEE Internet Computing, 21(2), 4–6.

Cisco (2015). Fog computing and the Internet of Things: Extend the cloud to where the things are. White Paper [Online]. Available at: https://www.cisco.com/c/dam/en_us/solutions/trends/iot/docs/computing-overview.pdf [Accessed 10 March 2019].

Davami, F., Adabi, S., Rezaee, A., Rahmani, A.M. (2021). Fog-based architecture for scheduling multiple workflows with high availability requirement. Computing, 15, 1–40.

Gu, Y. and Budati, C. (2020). Energy-aware workflow scheduling and optimization in clouds using bat algorithm. Future Generation Computer Systems, 113, 106–112.

Helali, L. and Omri, M.N. (2021). A survey of data center consolidation in cloud computing systems. Computer Science Review, 39, 100366.

Hoang, D. and Dang, T.D. (2017). FBRC: Optimization of task scheduling in fog-based region and cloud. IEEE Trustcom/BigDataSE/ICESS, 1109–1114.

Italiano, G.F., Nussbaum, Y., Sankowski, P., Wulff-Nilsen, C. (2011). Improved algorithms for min cut and max flow in undirected planar graphs. Proceedings of the Forty-Third Annual ACM Symposium on Theory of Computing, 313–322.

Kaur, A., Singh, P., Batth, R.S., Lim, C.P. (2020). Deep-Q learning-based heterogeneous earliest finish time scheduling algorithm for scientific workflows in cloud. Software Practice and Experience, February.

Matrouk, K. and Alatoun, K. (2021). Scheduling algorithms in fog computing: A survey. International Journal of Networked and Distributed Computing, 9, 59–74.

Mokni, M., Hajlaoui, J.E., Brahmi, Z. (2018). MAS-based approach for scheduling intensive workflows in cloud computing. IEEE 27th International Conference on Enabling Technologies: Infrastructure for Collaborative Enterprises (WETICE), 27, 15–20.

Mokni, M., Yassa, S., Hajlaoui, J.E., Chelouah, R., Omri, M.N. (2021). Cooperative agents-based approach for workflow scheduling on fog-cloud computing. Journal of Ambient Intelligence and Humanized Computing, 1–20.

Nikoui, T.S., Balador, A., Rahmani, A.M., Bakhshi, Z. (2020). Cost-aware task scheduling in fog-cloud environment. CSI/CPSSI International Symposium on Real-Time and Embedded Systems and Technologies (RTEST), 1–8.

Saeed, W., Ahmad, Z., Jehangiri, A.I., Mohamed, N., Umar, A.I., Ahmad, J. (2021). A fault tolerant data management scheme for healthcare Internet of Things in fog computing. KSII Transactions on Internet and Information Systems (TIIS), 15, 35–57.

Saeedi, S., Khorsand, R., Bidgoli, S.G., Ramezanpour, M. (2020). Improved many-objective particle swarm optimization algorithm for scientific workflow scheduling in cloud computing. Computers & Industrial Engineering, 147, 106649.

Setlur, A.R., Nirmala, S.J., Singh, H.S., Khoriya, S. (2020). An efficient fault tolerant workflow scheduling approach using replication heuristics and checkpointing in the cloud. Journal of Parallel and Distributed Computing, 136, 14–28.

Shirvani, M.H. (2020). A hybrid meta-heuristic algorithm for scientific workflow scheduling in heterogeneous distributed computing systems. Engineering Applications of Artificial Intelligence, 90, 103501.

Sun, J., Yin, L., Zou, M., Zhang, Y., Zhang, T., Zhou, J. (2020). Makespan-minimization workflow scheduling for complex networks with social groups in edge computing. Journal of Systems Architecture, 108, 101799.

Tarafdar, A., Karmakar, K., Khatua, S., Das, R.K. (2021). Energy-efficient scheduling of deadline-sensitive and budget-constrained workflows in the cloud. International Conference on Distributed Computing and Internet Technology, 65–80.

Yassa, S., Sublime, J., Chelouah, R., Kadima, H., Jo, G.-S., Granado, B. (2013). A genetic algorithm for multi-objective optimisation in workflow scheduling with hard constraints. International Journal of Metaheuristics, 2, 415–433.