Introduction

Today, data comes from everywhere: GPS tracking, smartphones, social networks where we share files, videos and photos, and online customer transactions made by credit card. Of the 65 million people in France, 83% are Internet users and 42% (or 28 million) are on Facebook. More than 72 million telephones are active, and French people spend a daily average of 4 hours online. Mobile phone users spend over 58 minutes online, and 86% of the population is on a social network. French people spend over 1.5 hours per day on social networks.

Developing this massive amount of data and making it accessible is known as “Big Data”. This intangible data arrives in a constant stream, and processing it to extract knowledge is especially challenging. This is why new automatic information extraction methods, such as “data mining” and “text mining”, are being put into place. Processes of this kind are behind radical transformations in the economy, marketing and even politics. The amount of available data will increase greatly as new connected objects reach the market and come into ever wider use.

Some objects we use in our daily lives are already connected: cars, television sets and even some household appliances. These objects have (or will one day have) a chip designed to collect data and transfer it to their users through a computer, a tablet or a smartphone. More importantly, these objects will also be able to communicate with one another! We will be able to control equipment in our homes and cars simply by logging on from a smartphone or another device. This phenomenon is known as the “Internet of Things”.

This phenomenon has attracted the interest of operational decision-makers (marketing managers, finance chiefs, etc.) seeking to benefit from the immense potential of analyzing, in real time, the data that companies hold. To meet the Big Data challenge, measures must be taken, including bringing in tools capable of more demanding data processing, as well as actors capable of analyzing that data. This will only be possible if people become more aware of the benefits of “data development”. When databases are organized, reorganized and processed with statistical methods or econometric modeling, they become knowledge.

For a company, it is essential to have access to ever more data about the environment in which it operates. This makes it possible to scrutinize not classes of behavior, but individual cases. It also explains why this revolution has brought with it the emergence of “start-up” companies whose objective is to process Big Data automatically. We are undoubtedly witnessing one of the elements of what some people call the “new industrial revolution”. The Internet and digital, connected objects have opened up new horizons in a wide array of fields.

To get the full potential out of data, it must be available to all interested parties without additional barriers and at reasonable cost. If data is open to users [MAT 14], new specialized data processing companies can be created. Their activity will meet users’ needs without the users having to develop models and equations themselves.

Open Data, beyond its economic and innovative potential, involves a philosophical or ethical choice4. Data describes collective human behavior and, therefore, belongs to those whose behavior it measures. The development of these phenomena depends on the availability of data that can be communicated easily.

The Internet Age has triggered a boom in information searching. Companies are flooded with data resulting from simple Internet browsing. Yet they are forced to purchase pertinent information in order to develop high value-added strategies that allow them to succeed in the face of incessant changes in their business environment. Industrial strategies now rely strongly on companies’ capacity to access strategic information to better navigate their environment. This information can, thus, become the source of new knowledge (the knowledge pyramid).

The process of gathering, processing and interpreting information is not limited to defining ideas, but also consists of materializing them in order to ensure improved knowledge production that leads to innovation. Competitive intelligence allows each company to optimize its service offerings in qualitative and quantitative terms, as well as to optimize its production technology.

Example I.2. Information processing C2i certificate security and massive processing5 by QRCode6

Beyond the advent of ICT and of faster data production, dissemination and processing, another element has recently become critically important: time. Time here is bound up with the speed at which information circulates. This prompts companies to rethink their strategies beyond the challenges of processing large volumes of data. The value of a given piece of data increases over time and depends on the variety of uses it is put to.

In this sense, companies must have the capacity to absorb all of the available data, which allows them to assimilate and reproduce knowledge. This capacity requires specific skills that make it possible to use that knowledge. Training “data scientists” is, therefore, indispensable in order to identify useful approaches for new opportunities or for exploiting internal data, and to quantify their benefits in terms of innovation and competitiveness. Big Data, however, is only one element in the new set of technical tools known as “data science”.

Data scientists are tasked with extracting knowledge from company data. They hold a strategic function within the firm and, to that end, must master the necessary tools. They must also be able to learn on the go and continually deepen their understanding of data mining, as growing data volumes demand ever more skills and techniques.

When confronted with this multiplicity of data, companies are driven to apply sophisticated processing techniques. In fact, technical competence in data processing is today a genuine strategic lever for companies’ competitive differentiation [BUG 11]. Processing this mass of data plays a key role for tomorrow’s society because it can be applied in fields as varied as science, marketing, customer service, sustainable development, transportation, health and even education.

Big Data encompasses the collection, storage, processing and even visualization of these large volumes of data. This data, thus, becomes the fuel of the digital economy. It is the indispensable raw material of one of the new century’s most important activities: data intelligence. This book shows that the main challenges of Big Data revolve around data integration and development within companies. It explores data development processes in a context of strong competition.

More specifically, this book’s research brings together several different fields (Big Data, Open Data, data processing, innovation, competitive intelligence, etc.). Its interdisciplinary nature allows it to contribute considerable value to research on the development of data pools in general.

I.1. The power of data

Companies are very conscious of the importance of knowledge and even more so of the way it is “managed”, enriched and capitalized. Beyond financial, technical and other factors, the knowledge a company has access to, whether market knowledge or legal, technological and regulatory information, is an important survival tool.

Knowledge is an extension of information to which value has been added because it is underpinned by an intention. It is a specifically human phenomenon that concerns thought in rational, active and contextual frameworks. It represents an acquisition of information interpreted by a human being, which requires analysis and synthesis in order to assimilate, integrate, criticize and accept new knowledge.

Information corresponds to data that has been registered, classified, organized, connected and interpreted within the framework of a specific study. Exploiting collected data requires sorting, verification, processing, analysis and synthesis. It is through this process that raw data collected during research is transformed into information. Data processing provides information that can be drawn on when decisions must be made.

Lesca [LES 08] explores the problems behind interpreting data to transform it into strategic information or knowledge. Interpretation systems, which are at the heart of competitive intelligence, are defined as “meaning attribution systems”, since they assign meaning to information that companies receive, manipulate and store [BAU 98].

According to Taylor [TAY 80], the value of information begins with data, which gains value as it evolves until it achieves its objective and specifies an action to take during a decision. Information is a message with a higher level of meaning. It is raw data that a subject, in turn, transforms into knowledge through a cognitive or intellectual operation.

This implies that, in the information cycle, collected data must be optimized in order to identify needs immediately and address them as soon as possible. This will, in turn, enhance interactions between a diversity of actors (decision-makers, analysts, consultants, technicians, etc.) within a group dynamic that favors complementary knowledge and aims to improve the understanding, situational analyses and information production necessary for action. Indeed, “operational knowledge production quality depends on the human element’s interpretation and analysis skills when it is located in a collective problem solving environment” [BUI 06, BUI 07].

Everyone produces data, sometimes consciously, sometimes unconsciously: humans, machines, connected objects and companies. The data we produce, along with other data we accumulate, constitutes a constant source of knowledge. Data is, therefore, a form of wealth, and exploiting it provides an essential competitive advantage in an ever-tougher market.

The need to exploit, analyze and visualize a vast amount of data confirms the well-known hierarchical model: “data, information and knowledge”. This model is often cited in the literature on information and knowledge management. Several studies show that the first mention of the knowledge hierarchy dates back to T.S. Eliot’s 1934 poem “The Rock”, which contains the following verses:

- Where is the wisdom we have lost in knowledge?

- Where is the knowledge we have lost in information?

In a more recent context, several authors have cited “From Data to Wisdom” [ACK 89] as the source of the knowledge hierarchy. The hierarchical model highlights three terms: “data”, “information” and “knowledge”7. The relationship between them can be represented in the following figure, where knowledge sits at the top to highlight the fact that large amounts of data are needed to achieve knowledge.

Figure I.1. Relationship between data, information and knowledge [MON 06]

Accumulated wisdom (intelligence) is not truth. Wisdom emerges when the fundamental elements giving rise to a body of knowledge are understood. For Eliot, wisdom is, hence, the last stage of his poem. In our presentation of the concept of competitive intelligence, we have made decision-making the equivalent of wisdom (see Figure I.2).

Figure I.2. The hierarchic model: data, information, and knowledge [MON 06]8

The boom in the number of available data sources, mostly coming from the Internet, coupled with the amount of data managed within these sources, has made it indispensable to develop systems capable of extracting knowledge that would otherwise remain hidden by the data’s complexity. This complexity is mostly due to the diversity, dispersion and sheer volume of data.

Moreover, the volume will continue to increase at an exponential rate in the years to come. Companies will, therefore, need to address these challenges in order to position themselves on the market, retain market share and maintain a competitive advantage. The greatest challenge lies in the capacity to compare the largest possible amount of data in order to extract the best decision-making elements.

Example I.4. An example from France’s Bouches-du-Rhône Administrative Department and from the city of Montpellier

According to various reports, exploited data generates billions of dollars. For example, a report by the McKinsey Global Institute recently showed that making “Open Data” available to the public would allow the United States to save 230 billion dollars by 2020 by enabling startups to develop innovative services aimed at reducing wasted energy spending.

The challenge with Big Data lies in studying the large volume of available data and constructing models capable of producing analyses that meet business requirements. This data can, in turn, be used as explanatory variables in the “models” on which a variety of users rely to make predictions or, more precisely, to draw relations based on past events that may serve to make future projections.

If data is collected and stored, it is because it holds great commercial value for those who possess it. It makes it possible to target services and products at increasingly precise sets of customers, as determined by the data. It is indeed at the heart of “intelligence” in the most general sense of the word.

I.2. The rise of buzzwords related to “data” (Big, Open, Viz)

In recent years, economic articles about companies that do not mention some term related to “data” (such as “Big Data”, “Open Data” or “data mining”) have become few and far between. This trend has led some scholars to believe that these concepts are emerging as new challenges that companies can profit from. We will chart the emergence of the data boom, especially of Big Data and Open Data, by studying search trends on Google (Google Trends).

Figure I.3. Web searches on “Big Data” and “Open Data” 2010–13 according to Google Trends. For a color version of the figure, see www.iste.co.uk/monino/data.zip

But what exactly is “data”? Data is a collection of facts, observations or even raw elements that are not organized or processed in any particular manner, and that contain no discernible meaning. Data is the basic unit recording a thing, an event, an activity or a transaction. A single piece of data is insignificant on its own. Data does not become significant until it is interpreted and thus comes to bear meaning.

The way in which we “see” and analyze data has progressively changed since the dawn of the digital age. This change is due to the latest technologies (smart devices, 4G, the cloud, etc.), the advent of the Internet and a variety of data processing and exploration software; the potential to exploit and analyze this large amount of data has never been as promising and lucrative as it is today.

To process ever more data and advance towards real-time analysis, it is necessary to raise awareness of this new universe made up of data, whose processing is a genuine asset. More generally, the technological evolution of information processing capacities, across the entire transformation chain, leads us to explore the current interest in terms related to “data”9.

One of the most important terms in the data family is, of course, “Big Data”, a phenomenon that interests companies as much as scholars. It imposes constraints to which companies must adapt: a continuous flow of data, much faster data circulation and ever more complex methods. Big Data represents a challenge for companies that want to develop strategies and decision-making processes by analyzing that data and transforming it into strategic information. At these new orders of magnitude, data gathering, storage, research, sharing, analysis and visualization must be redefined.

The growing production of data, generated by the development of information and communication technologies (ICTs), requires increased data openness, as well as useful sharing that would transform it into a powerful force capable of changing the social and economic world. “Open Data”, another term assigned to the data craze, became popular despite the novelty of the practice, thanks to its capacity to generate both social and economic value. This phenomenon has attracted much attention in the last few years, and one of the Open Data initiative’s main objectives is to make public data accessible in order to promote innovation.

Moreover, a number of professional contexts require reading and understanding very diverse sets of data: market studies, dashboards, financial statistics, projections, statistical tests, indicators, etc. To this end, a variety of techniques is used in the professional world to solve a variety of problems, from customer service management to maintenance and prevention, fraud detection and even website optimization. These techniques are known today as “data mining”.

The concept of data mining has gained traction as a marketing management tool since it is expected to bring to light information that can prove useful for decision-making in conditions of uncertainty. Data mining is often considered a “mix of statistics, artificial intelligence, and database exploration” [PRE 97]. Due to its growing use, this field is considered an ever more important domain (even in statistics), where important theoretical progress has taken place.

Exploring this data requires human intelligence combined with a mastery of statistical methods and analysis tools, which is becoming increasingly important in the business world: the term “data scientist” has become more and more common. To understand data and take the first steps towards more efficient processing, however, data scientists must embrace Big Data.

The term “data science” was invented by William Cleveland in a programmatic article [CLE 14]. Data science constitutes a new discipline that combines elements of mathematics, statistics, computer science and data visualization.

“Data visualization” refers to the art of representing data visually. This may take the form of graphs, diagrams, maps, photos, etc. Presenting data visually makes it easier to understand. Beyond illustration, data can even be presented in the form of animations or videos.

These terms surrounding the data craze are ultimately not just buzzwords; they also confer more value on data. This is why we can now combine enormous volumes of data, leading to deeper and more creative insight.

“Big Data”, however, is not just big because of the volume of data it deals with. The word “big” also refers to the importance and impact involved in the processing of this data, since, with Open Data, the amount will progressively increase, which means that “Big Data” helps us “think big”. Therefore, the universe surrounding data is a movement in which France, with all its assets, must participate from now on. But, in order to meet the challenge, it is important for all actors to come together around this great movement and transform the risks associated with Big Data into opportunities.

I.3. Developing a culture of openness and data sharing

The growing production of data, generated by the development of information and communication technologies (ICTs), requires increased data openness as well as useful sharing that transforms it into a powerful force capable of changing the social and economic world. Big Data has become an important element in the decision-making processes of companies’ top management. Companies must learn to work together to innovate with their customers, taking their behavior into account and remaining attentive to their needs, so as to create the products and services of tomorrow, which will allow them to generate the benefits needed to achieve their objectives.

This forces us to rethink the value of data in a world advancing towards constant digital interoperability, sharing and collaboration. Open Data has spread throughout the world due to its capacity to generate both social and economic value. In this regard, several countries are actively contributing to the evolution of data by allowing access to, and reuse of, government data.

The term “Open Data”, which means that all data should be freely available, was first used at the end of the 2000s, originally in the US and then in the UK, the first two countries to commit themselves to the process. In 2007, the city of Washington DC was the first to make its data openly available on an online platform. In January 2009, President Obama signed a transparency initiative that led to the establishment of the data.gov Internet portal later that year. It was, thus, at the beginning of 2010 that data marketplaces such as DataMarket, Factual, Infochimps, Kasabi and Windows Azure Data Marketplace emerged in the US.

We can say that the principle of Open Data dates back to the Declaration of the Rights of Man and of the Citizen, which states: “society has the right to call for an account of the administration of every public agent”. This obviously requires complete transparency on the part of the government. This imperative, whose realization has unfortunately always been imperfect, was formalized in French history through the Right of Access to Public Records Law in 1794, through the creation of a large-scale government statistics and information body [INS 46] and through the establishment of a public body to promote that same right.

The French government’s Open Data initiative took shape with the launch of the data.gouv.fr website in 2011. Furthermore, on 27 January 2011, the city of Paris launched its “Paris Data” platform.

Open Data and data sharing are the best ways for both the government and companies to organize, communicate and bring about the world of collective intelligence11. Open Data’s culture is founded on the availability of data that can be communicated easily. This makes it possible to generate knowledge through transformation effects where data is supplied or made available for innovative applications.

It is also a means of building working relationships with actors who care about the public good, in order to extend public action by designing new services that benefit all parties involved. The objective is to encourage or facilitate the reuse and reinterpretation of data in the most automated way possible. This holds great potential for activities that create added value through data processing.

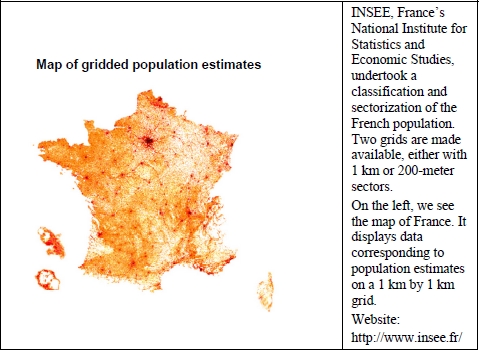

In France, INSEE supplies a massive amount of economic, demographic and sociological data (complemented by data from the OECD, Eurostat, the IMF and INED). This data is not entirely raw, since it has undergone some processing. It can, in turn, be used as explanatory variables in the “models” that a variety of users rely on to make predictions, draw relations or make comparisons.

To be truly reusable, and for Open Data to have its full effect, this data must be processed further. The entities that may benefit from it do not possess the statistical capacity to link some datasets with others and draw operative conclusions. Because of the costs involved, not all companies have access to the statistical means required to identify correlations or causal links within the data, or to “locate” a piece of data with respect to the rest through “data analysis”.

Mastering these econometric techniques constitutes a specific kind of knowledge that governments and large public entities may possess, but that is out of reach for most companies. And yet, without econometrics, there is little competitive intelligence to be had. Once “processed”, data should be freely accessible to all (which is not always the case) so that it can become “information”.

Today, to process data, companies must often hire specialized companies, such as consulting firms, to gather, process and draw operative conclusions from it. Subcontracting inevitably has a cost, and it does not always lead to satisfactory results: the subcontractor does not always treat the data with the same care that the company itself would apply if it were transforming the data into information on its own. Administrators sometimes resort to external companies either because they lack the technical resources to process data internally, or because the specialized companies ultimately charge less for processing than it would cost to do it internally.

Algorithms are available that users can access and that process data according to their requirements. Data processing chains currently form a sort of “ecosystem” around “data”:

- producers and transmitters of data: entities that produce data and, at best, make it openly available by making it accessible to all;

- data users who possess the necessary processing tools, as well as the human elements required to deliver and comment on the information resulting from open data processing;

- lastly, the end users who benefit from this information and, thus, develop intelligence about their economic, political, social or even cultural “environment”.

Big Data, in the present context, represents an all-new opportunity for creating “online” companies that do not sell data processing services but instead develop algorithms and rent them out to the end users, the third actor in the abovementioned ecosystem. There is enormous potential for developing data-processing algorithms that benefit all users.

When an internal statistical research service in charge of processing data is created, a license is purchased for a fixed or undetermined duration, and someone must be assigned to the job of processing. If companies hire a consulting service to gather and process data and draw information and knowledge from it, they are forced to keep purchasing their information, even if only to a limited degree. Ultimately, it is always the experts who choose which data to process (or not), selecting from the available data those needed to arrive, ideally, at operative conclusions.

French startups like Qunb and Data Publica have adopted the same strategy as American startups like Infochimps and DataMarket, which rely on data drawn from Open Data for processing and sharing. For its part, Data Publica12 offers the possibility of preparing datasets according to clients’ requirements. Public data can be a genuine source of energy for startups in any domain.

With these algorithm startups, as in many other sectors, there is a transition from purchasing software to purchasing a license to use it. This is comparable to the move towards car- or bike-sharing systems in transportation.

To give a more precise example in the field of economics, a startup could offer a sales forecast model. Imagine a company that sells a given product or category of products and seeks to predict its future sales. The startup builds up a stock of explanatory variables (growth rate, revenue growth, price index, etc.) and performs one or several linear regressions to relate the quantity of product sold by the company to those explanatory variables.

The end user only needs to enter its own sales series and specify the explanatory variables, or let the algorithm select them. The user thus directly obtains a relationship between those explanatory variables and its sales, which allows it to predict future performance with precision. There is no need to look for external data or to update the “model”, which the startup has already developed and made available to users willing to pay. There can be as many startups as there are models or groups of models.
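The regression step of such a sales forecast service can be sketched as follows. This is a minimal illustration under stated assumptions, not any startup’s actual model: the function names `fit_ols` and `predict` are hypothetical, the two explanatory variables (revenue growth and a price index) and all figures are invented, and ordinary least squares is solved here through the normal equations in pure Python so that the example has no dependencies. In practice, a library such as NumPy or statsmodels would be the usual choice.

```python
from typing import List, Sequence


def fit_ols(rows: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Ordinary least squares: solve (X'X) b = X'y, with an intercept column prepended.

    Returns [intercept, coefficient_1, ..., coefficient_k].
    """
    X = [[1.0, *map(float, r)] for r in rows]  # add the intercept column
    k = len(X[0])
    # Augmented matrix [X'X | X'y]
    a = [
        [sum(x[i] * x[j] for x in X) for j in range(k)]
        + [sum(x[i] * yi for x, yi in zip(X, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("explanatory variables are collinear")
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [v / p for v in a[col]]
        for r in range(k):
            if r != col:
                factor = a[r][col]
                a[r] = [vr - factor * vc for vr, vc in zip(a[r], a[col])]
    return [a[i][k] for i in range(k)]


def predict(coef: Sequence[float], x: Sequence[float]) -> float:
    """Forecast sales for one period from its explanatory variables."""
    return coef[0] + sum(c * v for c, v in zip(coef[1:], x))


# Hypothetical data: (revenue growth %, price index) per period, and units sold
explanatory = [(1.0, 100.0), (2.0, 102.0), (1.5, 101.0),
               (3.0, 105.0), (2.5, 103.0), (3.5, 104.0)]
sales = [80.0, 89.0, 84.5, 97.5, 93.5, 103.0]

coef = fit_ols(explanatory, sales)
print("intercept and coefficients:", [round(c, 3) for c in coef])
print("forecast for (4.0, 106.0):", round(predict(coef, (4.0, 106.0)), 1))
```

Because the invented sales series was built as an exact linear function of the two variables, the fitted coefficients should come out at about 120, 10 and -0.5. With real data the fit would leave residuals, and the quality of the forecast would depend on how well the chosen variables actually explain sales.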

It is also possible to imagine, based on the coloration model [MON 10], a client that seeks to know how to “color” its PR communications. The startup could have a search engine covering all texts of interest to the firm and establish a global coloration analysis system for all of those texts, possibly based on words provided by the client itself.

It is easy to see why there is so much potential for new companies that process Big Data automatically. We are undoubtedly witnessing one of the components of the industrial revolution inaugurated by the digital age.