Chapter 11 – SQL-MapReduce

“A bird does not sing because it has the answers, it sings because it has a song.”

-Anonymous

MapReduce History

MapReduce is a programming framework which is used to process enormous data sets. It began getting taken seriously in 2004 when Google released a paper "MapReduce: Simplified Data Processing on Large Clusters by Jeffrey Dean and Sanjay Ghemawat".

Since then, its use has grown exponentially, and today many of the largest companies in the world are using MapReduce technology in the form of Hadoop or Aster Data to solve some very complex problems traditional databases would find difficult. While MapReduce works elegantly in some situations, it is not a replacement for a traditional database. However, if implemented correctly, it can complement one beautifully.

MapReduce was designed to process extremely large data sets such as clickstream data from a website, large text files (html, logs etc.), or perhaps a digital version of every book housed in the Library of Congress! Google uses MapReduce to perform analytics on data entered into its search engine. If you have ever used the search engine of a tiny company named Google, your data has most likely been run through MapReduce!

What is MapReduce?

MapReduce does what it says it is going to do. It maps data and then reduces data by utilizing a Master node and one or more Worker nodes.

The Map Step

The Master node will take input data and it will slice and dice it into smaller sub-problems and then distributes these to Worker nodes. The Master node is also know as the Queen node in Aster Data.

The Worker nodes will then take these sub-problems and may decide to break these down into smaller sub-problems, but eventually they finish their processing and send their individual answers back to the Master node.

The Reduce Step

The Master node then takes all of the answers from the Worker nodes and combines them to form the answer to the problem which needed to be solved.

What is SQL-MapReduce?

SQL-MapReduce (SQL-MR) was created by Aster Data and it is referred to as an "In-Database MapReduce framework".

It is implemented using two basic steps.

![]() Programmers write SQL-MR functions and then load them inside Aster Data using the Aster Command Line Tools (ACT).

Programmers write SQL-MR functions and then load them inside Aster Data using the Aster Command Line Tools (ACT).

Programming languages which are supported are:

• Java

• C#

• C++

• Python

• R

![]() Analysts then call the SQL-MR functions using familiar SELECT query syntax, and data is returned as a set of rows.

Analysts then call the SQL-MR functions using familiar SELECT query syntax, and data is returned as a set of rows.

SQL-MapReduce Input

SQL-MapReduce will always take a set of rows as input. This could be one or more tables in a database, the results of a SELECT statement, or the output from another SQL-MR function. SQL-MR can also take input parameters from the calling query.

Aster Data 5.0 SQL-MR can accept multiple inputs gaining these benefits:

• Allows rows from different data sets to be analyzed in more complex ways inside the SQL-MR function.

• Avoid the issues of the past which required users to have to JOIN and UNION answer sets together to get only one input.

• Avoiding this additional processing reduces memory consumption and increases performance.

There are two types of input into SQL-MR:

1. Partitioned inputs which are split among the workers based on the PARTITION BY ANY or PARTITION BY clause.

2. Dimensional inputs using the DIMENSION keywords.

The function will combine the partitions and dimensions from multiple data sets and then create a single nested data set against which the SQL-MR function will run.

SQL-MapReduce Output

Here is the easy part. The output from a SQL-MR function will always be a single answer set.

Using five tables as input into an Aster Data function may result in an Employee of the Year award.

Subtle SQL-MapReduce Processing

There are a few subtle events which can occur in SQL-MapReduce on Aster Data.

1. The data is partitioned by the partitioning attribute.

2. **Data is distributed to the worker nodes.

3. The Worker nodes return their result sets to the Queen node.

4. The Queen then processes the answer set which may include some additional calculations. For example, if the Workers have produced a sum for their records, the Queen will then need to perform a sum on the Worker’s sum to product a final answer set.

**If the data on your workers is already distributed on the workers the same as in your SQL-MR function, then no data distribution is needed, and you get a performance boost!

Aster Data Provides an Analytic Foundation

Over 50+ out-of-the-box analytic modules

Aster Data comes with over 50 MapReduce functions that have already been prepared for users. Above are just some of the categories that have MapReduce functions that come automatically with Aster Data.

Path Analysis

Path Analysis

Designed to discover patterns in rows of data that are sequential

nPath: complex sequential analysis for time series and behavioral pattern analysis.

nPath Extensions: count entrants, track exit paths, count children, and generate subsequences.

Sessionization: identifies sessions from time series data in a single pass.

Attribution: operator to help ad networks and websites to distribute "credit“.

Text Analysis

Text Analysis

Designed to derive patterns and extract features in textual data

Text Parser: Counts occurrences of words, identifies their roots, tracks relative positions of words, and even tracks multi-word phrases.

nGram: Generates ngrams based on textual input.

Levenshtein Distance: Computes the distance between two words.

Text Classifier: Classifies text content into categories (e.g. complaints, spam).

Sentiment Analysis: Classifies content to be either positive or negative (for product review, customer feedback).

Entity Recognition: identifies addresses, phone numbers, and names from textual data.

Statistical Analysis

Statistical Analysis

Designed for high-performance processing of statistical calculations

Histogram: Function to provide capability of generating based on history.

Approximate percentiles and distinct counts: Calculates percentiles and counts within a specific variance.

Correlation: Calculation that characterizes the strength of the relation between different columns.

Regression: Performs linear or logistic regression between an output variable and a set of input variables.

Averages: Calculate moving, weighted, exponential or volume weighted averages over a window of data.

GLM: Generalized linear model function that supports logistic, linear, log-linear and regression models.

PCA: (Principal Component Analysis) transforms a set of observations into a set of uncorrelated variables.

Segmentation (Data Mining)

Segmentation

Designed to discover natural

groupings of data points

k-Means: Designed to cluster data into a specified number of groupings.

Canopy: Partitions data into overlapping subsets within which k-means is performed.

Minhash: Buckets highly-dimensional items for further cluster analysis.

Basket analysis: Creates configurable groupings of related items from transaction records in single pass.

Collaborative Filter: Predicts the interests of a user by collecting interest information from many users.

Decision Trees: Native implementation of parallel random forests.

K-Nearest Neighbor: Principal Component Analysis that transforms a set of observations into a set of uncorrelated variables.

Naïve Bayes Classifier: Simple probabilistic classifier that applies the Bayes Theorem to data sets.

Graph Analysis

Graph Analysis

Analyze connected data

nTree: Provides new function for performing operations on tree hierarchies.

Single shortest path: Will find the shortest paths from a given vertex to all the other vertices in the graph.

Beta: Page rank, eigenvector centrality, local clustering coefficient, shape finding.

Transformation of Data

Transformation of Data

Designed for high-performance processing of statistical calculations

Unpack: Extracts nested data for further analysis.

Pack: Compresses multi-column data into a single column.

Antiselect: Returns all columns except for specified columns.

Multicase: Case statement that supports row match for multiple cases.

Pivot: Convert columns to rows or rows to columns.

Apache log parser: Generalized tool for parsing Apache logs.

Sessionize

The SQL-MR function Sessionize shows a different session if a particular user took more than 60 seconds between clicks. If more than 60 seconds passed, it is assumed that the user left and a new session opened.

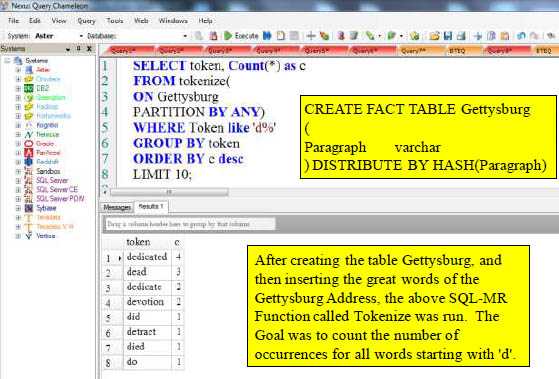

Tokenize

The SQL-MR function Tokenize works with text fields. Tokenize can take an input stream of words, optionally group them, and then deliver the individual words and counts for the each word appearance.

SQL-MapReduce Function . . . nPath

Aster Data nPath is a SQL-MapReduce function which is a close cousin of the programming function regular expressions with one major difference.

Regular Expressions are used to match a sequence of text in a string.

nPath is used to match a sequence of rows in a database!

Aster Data uses regular expressions for matching for three basics reasons:

1. The are very flexible and can be used to match a very simple pattern or a very complex pattern.

2. They are understood by a wide variety of people and used in probably every programming language, so the learning curve is small.

nPath SELECT Clause

SELECT *

FROM npath

(

ON SQL_CLASS.Emp_Job_Table

PARTITION BY Job_No

ORDER BY Emp_No

MODE( NONOVERLAPPING )

PATTERN( 'Emp.Job' )

SYMBOLS(Emp_no > 0 as Emp,

Job_No > 0 Job)

RESULT( First(Job_No of Emp) as Job_Number,

First(Emp_No of Job) as Employee_Number)

) ORDER BY Job_Number;

The SELECT statement in usually used to SELECT columns from a table or view. In this case, the SELECT statement is used to SELECT columns from a SQL-MapReduce function.

nPath is the name of the SQL-MR function which is being selected FROM.

This is the beauty of SQL-MapReduce! It uses familiar query syntax to access powerful analytic functions.

nPath ON Clause

SELECT *

FROM npath (

ON SQL_CLASS.Emp_Job_Table

PARTITION BY Job_No

ORDER BY Emp_No

MODE( NONOVERLAPPING )

PATTERN( 'Emp.Job' )

SYMBOLS(Emp_no > 0 as Emp,

Job_No > 0 Job)

RESULT( First(Job_No of Emp) as Job_Number,

First(Emp_No of Job) as Employee_Number)

) ORDER BY Job_Number;

The ON clause defines the number of input streams into the nPath function. The on clause can reference a table, a view, and even a query. If you use a query as input, make sure you enclose the query in single quotes.

nPath PARTITION BY Expression

SELECT *

FROM npath

(

ON SQL_CLASS.Emp_Job_Table

PARTITION BY Job_No

ORDER BY Emp_No

MODE( NONOVERLAPPING )

PATTERN( 'Emp.Job' )

SYMBOLS(Emp_no > 0 as Emp,

Job_No > 0 Job)

RESULT( First(Job_No of Emp) as Job_Number,

First(Emp_No of Job) as Employee_Number)

) ORDER BY Job_Number;

The PARTITION BY expression defines how your data is partitioned before it is searched. SQL-MapReduce will only look at pattern matches for rows which are in the same partition.

If you have multiple inputs into the function, each input must be partitioned the same way.

You can have one or more PARTITION BY expressions in your functions.

nPath DIMENSION Expression

SELECT *

FROM nPath

(

ON SQL_CLASS.Web_Ads_Table PARTITION BY user_id ORDER BY dt

ON SQL_CLASS.Clicks_Table PARTITION BY user_id ORDER BY dt

ON SQL_CLASS.Radio_Ads_Table DIMENSION ORDER BY dt

MODE(NONOVERLAPPING)

SYMBOLS(true as ad, true as click, true as radio_ad)

PATTERN('(ad|radio_ad)*.click')

RESULT(COUNT(* of ad) as ads_before_click, COUNT(* of click) as num_click)

);

Using the DIMENSION expression will make input available on every partition.

It requires that you have at least two inputs. You can have zero or more DIMENSION expressions while you have to have at least one PARTITION BY expression.

Using the DIMENSION expression also requires your function call to have at least two ON clauses: One ON clause for the PARITITION BY expression and one ON clause for the DIMENSION expression.

nPath ORDER BY Expression

SELECT *

FROM npath

(

ON SQL_CLASS.Emp_Job_Table

PARTITION BY Job_No

ORDER BY Emp_No ASC

MODE( NONOVERLAPPING )

PATTERN( 'Emp.Job' )

SYMBOLS(Emp_no > 0 as Emp,

Job_No > 0 Job)

RESULT( First(Job_No of Emp) as Job_Number,

First(Emp_No of Job) as Employee_Number)

) ORDER BY Job_Number;

The ORDER BY expression specifies how the rows are ordered on the Workers. Descending order is the default. Use ASC after the ORDER BY expression to sort in ascending order.

nPath MODE Clause has Overlapping or NonOverlapping

SELECT *

FROM npath

(

ON SQL_CLASS.Emp_Job_Table

PARTITION BY Job_No

ORDER BY Emp_No ASC

MODE( NONOVERLAPPING )

PATTERN( 'Emp.Job' )

SYMBOLS(Emp_no > 0 as Emp,

Job_No > 0 Job)

RESULT( First(Job_No of Emp) as Job_Number,

First(Emp_No of Job) as Employee_Number)

) ORDER BY Job_Number;

• Overlapping mode will return every possible instance of a pattern match. If rows 1 and 2 match the pattern, then nPath will continue its search on rows 2 and 3.

• NonOverlapping mode says once a match is found, nPath will begin searching for the next pattern match at the next row. If row 1 and 2 match, then nPath will continue its pattern search on rows 3 and 4.

nPath PATTERN Clause

SELECT *

FROM npath

(

ON SQL_CLASS.Emp_Job_Table

PARTITION BY Job_No

ORDER BY Emp_No ASC

MODE( NONOVERLAPPING )

PATTERN( 'Emp.Job' )

SYMBOLS(Emp_no > 0 as Emp,

Job_No > 0 Job)

RESULT( First(Job_No of Emp) as Job_Number,

First(Emp_No of Job) as Employee_Number)

) ORDER BY Job_Number;

The Pattern clause defines the pattern which nPath searches on using these elements:

• Symbols -The symbols used are Emp and Job and are defined in the SYMBOLS clause.

• Operators - The operator used is the dot between Emp and Job. The dot can be translated as "is followed by" and looks for a pattern match on Emp followed by match on Job.

• Parentheses - These are used to nest patterns and are not pictured. (Pattern1 (Pattern2) )

• Anchors — "^" and "$" define the start and the end of a sequence respectively. "^" is always used at the start of a sequence and "$" is always used at the end. Also, not pictured.

Pattern Operators

You can use the following operators in a pattern:

· The dot is known as the cascade operator. For A.B it means we have a pattern match when symbol A is followed by symbol B.

| The pipe is the or alternative operator. A|B would mean when either symbol A or B are true, then we have a match.

? Is a frequency operator and means the symbol occurs, at most, once.

* Is a frequency operator and means the symbol occurs zero or more times.

+ Is a frequency operator and means the symbol must occur at least once.

Pattern operators follow an order of precedence.

Pattern Operators Order of Precedence

Any patterns in parenthesis are calculated first.

Then, pattern operators follow this order.

![]() The frequency operators ?, * and + are figured first.

The frequency operators ?, * and + are figured first.

![]() The cascade or "dot" operator.

The cascade or "dot" operator.

![]() The or / alternative operator if determined.

The or / alternative operator if determined.

Here are some examples.

A.B? - B occurs, at most, once preceded by A. Equivalent to A.(B?).

A|B+ - B occurs at least once or A. Equivalent to A|(B+).

C|A.B - A is followed by B or C. Equivalent to C|(A.B).

A+.B*.C|D - A occurs at least once followed by B which occurs zero or more times followed by C or D. Equivalent to (A+B*.C)|D

A+.(B*.C)|D - B occurs zero or more times followed by C preceded by A occurring at least once or D. Equivalent to (A+(B*.C))|D.

Matching Patterns Which Repeat

If you match any section of a pattern which occur multiple times, you can use the following syntax:

pattern-section{n} or pattern-section{n,} are equivalent and it means the pattern should repeat n number of times.

pattern-section{n,m} means that a patterns should repeat n number of time, but no more than m number of times.

A.B|(C.D){2}

The pattern C.D must occur two times in order for a match to happen. You can also write this pattern section as (C.D){2,}

(A.B){3,6}|C.D

The pattern A.D must occur at least three times, but no more than six.

nPath SYMBOLS Clause

SELECT *

FROM npath

(

ON SQL_CLASS.Emp_Job_Table

PARTITION BY Job_No

ORDER BY Emp_No ASC

MODE( NONOVERLAPPING )

PATTERN( 'Emp.Job' )

SYMBOLS(Emp_no > 0 as Emp,

Job_No > 0 Job)

RESULT( First(Job_No of Emp) as Job_Number,

First(Emp_No of Job) as Employee_Number)

) ORDER BY Job_Number;

Symbols are the rows of a pattern. Multiple symbols are separated by commas. In the example above, we have two symbols Emp which is any employee number greater than zero, and Job which is any job number greater than zero.

Symbols have different context depending on where they are used. If the symbol is used in a pattern, then it represents the row of a type which you are performing a pattern search on.

If the symbol is used in the RESULTS clause, it represents all the rows which match the definition in the SYMBOLS clause as found in the pattern you are searching.

nPath RESULTS Clause

SELECT *

FROM npath

(

ON SQL_CLASS.Emp_Job_Table

PARTITION BY Job_No

ORDER BY Emp_No ASC

MODE( NONOVERLAPPING )

PATTERN( 'Emp.Job' )

SYMBOLS(Emp_no > 0 as Emp,

Job_No > 0 Job)

RESULT( First(Job_No of Emp) as Job_Number,

First(Emp_No of Job) as Employee_Number)

) ORDER BY Job_Number;

The RESULTS clause defines the columns used in the output of the function. Each column is separated by a comma.

The RESULTS clause is evaluated one-time for each matched pattern value per partition.

The alias is the name of the columns which will be returned, and you can refer to them in your SELECT clause.

Adding an Aggregate to nPath Results

The RESULTS clause of the nPath function will support the COUNT, SUM, MIN, MAX, and AVG SQL aggregates.

In addition, the following nPath aggregates are allowed:

• FIRST is the FIRST ( Column Of A ): Gets the first column in the pattern match for the symbol A.

• LAST is the LAST ( Column Of A): The last row which maps to symbol A in the pattern match.

• FIRST_NOTNULL as an example FIRST_NOTNULL (Column Of A): Gets the first non-NULL column in the pattern match for symbol A.

• LAST_NOTNULL as an example LAST_NOTNULL (Column A): Gets the last non-NULL column in the pattern match for symbol A.

• ACCUMULATE as an example ACCUMULATE (Column of A): This will concatenate every column which matches pattern A.

Adding an Aggregate to nPath Results (Continued)

• MAX_CHOOSE

MAX_CHOOSE(ColumnA, ColumnB of A): After determining the max value for ColumnA, the corresponding ColumnB value will be displayed.

MAX_CHOOSE(Max_Students, Course_Name of A) would return the Course Name for the course which has the maximum amount of student per partition, matching the pattern for symbol A.

• MIN_CHOOSE

MIN_CHOOSE(ColumnA, ColumnB of A): Does the same thing as MAX_CHOOSE, but uses the minimum value for ColumnA instead of the maximum value.

• DUPCOUNT

DUPCOUNT(<expression> OF ANY <list of symbols>): For each row in the <list of symbols> sequence, this aggregate will count the number of times the value has appeared preceding the row.

• DUPCOUNTCUM

DUPCOUNTCUM(<expression> OF ANY <list of symbols>): This will count the number of duplicate values of the <expression> which appear immediately preceding the row. When the <expression> used also matches the ORDER BY <expression>, it is the same as performing ROW_NUMBER() - DENSE_RANK() OLAP.

SQL-MapReduce Examples - Use Regular SQL

SELECT Cast(Job_Number AS VARCHAR(10))

FROM npath

(

ON SQL_CLASS.Emp_Job_Table

PARTITION BY Job_No

ORDER BY Emp_No ASC

MODE( NONOVERLAPPING )

PATTERN( 'Emp.Job' )

SYMBOLS(Emp_no > 0 as Emp, Job_No > 0 Job)

RESULT( First(Job_No of Emp) as Job_Number, First(Emp_No of Job) as

Employee_Number)

) ORDER BY Job_Number

WHERE Job_Number = '10030';

Everything outside of the SQ-MR function behaves like normal SQL. You can have clauses and expressions such as WHERE, GROUP BY, HAVING, ORDER BY, LIMIT, and OFFSET.

SELECT * will select everything from the RESULTS clause, but you can also select individual columns such as Job_Number in this example and perform functions on them like TRIM, SUBSTRING, OR CAST.

SQL-MapReduce Examples - Create Objects

CREATE VIEW SQL_VIEWS.nPath_v AS

(

SELECT Job_Number, Employee_Number FROM npath

(

ON SQL_CLASS.Emp_Job_Table

PARTITION BY Job_No

ORDER BY Emp_No ASC

MODE( NONOVERLAPPING )

PATTERN( 'Emp.Job' )

SYMBOLS(Emp_no > 0 as Emp, Job_No > 0 Job)

RESULT( First(Job_No of Emp) as Job_Number, First(Emp_No of Job) as

Employee_Number)

) ORDER BY Job_Number

);

You can create objects such as tables and views from SQL-MR functions you create. Just make sure you enclose the entire function in parenthesis.

SQL-MapReduce Examples - Subquery

SELECT Cast(Job_Number AS VARCHAR(10))

FROM npath

(

ON SQL_CLASS.Emp_Job_Table

PARTITION BY Job_No

ORDER BY Emp_No ASC

MODE( NONOVERLAPPING )

PATTERN( 'Emp.Job' )

SYMBOLS(Emp_no > 0 as Emp, Job_No > 0 Job)

RESULT( First(Job_No of Emp) as Job_Number, First(Emp_No of Job) as

Employee_Number)

) ORDER BY Job_Number

WHERE Job_Number = '10030';

Everything outside of the SQ-MR function behaves like normal SQL. You can have clauses and expressions such as WHERE, GROUP BY, HAVING, ORDER BY, LIMIT, and OFFSET.

SELECT * will select everything from the RESULTS clause, but you can also select individual columns such as Job_Number in this example and perform functions on them like TRIM, SUBSTRING, OR CAST.

SQL-MapReduce Examples - Query as Input

SELECT *

FROM ANTISELECT

(ON (SELECT * FROM SQL_VIEWS.Employee_V)

EXCLUDE('lname', 'fname'));

You don’t have to use a table or view as input. You can use a query!

Here, we are using the ANTISELECT function which takes the following parameters.

ON is the input. This can be a table a view or in the case SQL. EXCLUDE is used to simply not return the columns you specify in the result set.

Had we used EXCLUDE('Lname', 'Fname'), the function would have returned an error saying the columns are not present in the input table. Keep in mind these functions columns are case sensitive.

SQL-MapReduce Examples - Nesting Functions

SELECT *

FROM ANTISELECT

(ON (SELECT *

FROM ANTISELECT

(ON (SELECT * FROM SQL_VIEWS.Employee_v)

EXCLUDE ('fname'))

)

EXCLUDE('lname')

);

It is possible to nest two functions together. Remember, the ON clause is used to define inputs into the functions. In this case, the input is another function!

SQL-MapReduce Examples - Functions in Derived Tables

SELECT *

FROM

( SELECT Product_Id, Sale_Date, Daily_Sales

FROM SMAVG

(ON SQL_CLASS.Sales_Table

PARTITION BY Product_Id

ORDER BY Daily_Sales

WINDOW_SIZE(3) ) ) derived_table;

This query uses the Simple Moving Average function which allows us to see an average of our daily sales for each product id, resetting every three rows which, in this case, correspond to days.

Running this query by itself would be too easy, so we’ve decided to put it in its own derived table.

SQL-MapReduce Examples - SMAVG

SELECT Product_Id, Sale_Date, Daily_Sales

FROM SMAVG

(ON SQL_CLASS.Sales_Table

PARTITION BY Product_Id

ORDER BY Daily_Sales

WINDOW_SIZE(3)

RETURN_ALL('true'));

PARTITION BY is the columns your data is partitioned by.

ORDER BY is how the data is sorted inside the partition.

WINDOW_SIZE tells the function after how many rows to reset and start new calculations.

RETURN_ALL if set to true will return all columns including NULL for the very first window size.

COLUMN_NAMES (not pictured) are the column names you can defined when exponential moving average is required. If this is omitted, all input rows are returned "as is".

SQL-MapReduce Examples - Pack Function

SELECT *

FROM PACK

(

ON SQL_CLASS.Employee_Table

COLUMN_DELIMITER('|')

PACKED_COLUMN_NAME('my_packed_column')

INCLUDE_COLUMN_NAME('false')

COLUMN_NAMES('employee_no', 'dept_no', 'last_name', 'first_name', 'salary')

);

This will returns packed data in the form of column values separated by whatever delimiter you choose. Instead of writing SELECT statements, which cast numbers as text and use concatenation to delimit columns, a user can use this function.

This helps avoid SQL such as this where Column2 is an INTEGER column.

SELECT Column1 || '|' || TRIM(CAST (column2 As char10)) || '|' || Column2 FROM TableA;

Use the UNPACK function to unpack a column.

SQL-MapReduce Examples - Pack Function (Continued)

SELECT *

FROM PACK

(

ON SQL_CLASS.Employee_Table

COLUMN_DELIMITER('|')

PACKED_COLUMN_NAME('my_packed_column')

INCLUDE_COLUMN_NAME('false')

COLUMN_NAMES('employee_no', 'dept_no', 'last_name', 'first_name', 'salary')

);

COLUMN_DELIMITER is the character you've chosen to delimit your columns.

PACKED_COLUMN_NAME is the name you're giving to your final column.

INCLUDE_COLUMN_NAME if this is set to true, it will prepend your column name before your column value.

COLUMN_NAMES are the columns from the table specified in your ON clause.

Only the ON clause and the PACKED_COLUMN_NAME clause are required.

SQL-MapReduce Examples - Pivot Columns

SELECT * FROM PIVOT

(ON SQL_CLASS.Sales_Table

PARTITION BY product_id

PARTITIONS('product_id')

ROWS(7)

METRICS('daily_sales'));

The Pivot function will take table data and convert it into a new schema based on the arguments which are given. It handles Nulls.

PARTITION BY will define your rows.

PARTITIONS should have the same columns as your PARTITION BY statement, but they can be in a different order.

ROWS is the maximum number of rows which can be in each partition. In the example above, if we have more rows than 7 in a partition, then they are not shown. If we have less, then NULL values will be added to our answer. PIVOT_KEYS & PIVOT_COLUMNS (not shown) You will either use PIVOT_KEY and PIVOT_COLUMNS together or you will use the ROWS clause. They cannot be combined. Any row that has a pivot_column value which is not in the pivot key will be omitted. NULL will be added if a partition does not contain a pivot key value.

METRICS contain the columns you want to pivot.