CHAPTER TWO

Gather Information, Plan, and Conceptualize the Evaluation

This chapter focuses on developing a deep understanding of the initiative’s purpose and design as well as the perspectives of key stakeholders. The ideal time to plan an evaluation is when the development initiative is being designed. Asking evaluation questions can help stakeholders understand the desired impact of the development initiative in concrete terms. That information can be used to design the initiative in a way that will likely promote the desired results. However, it is not always possible to plan an evaluation during the design of the initiative. Often those funding or conducting leadership development don’t realize the need for an evaluation until after the initiative has been conducted. Even if you are in that situation, it’s not too late for an evaluation. Although far from ideal, some evaluation is better than none. Evaluations can be conducted retrospectively, and the ideas shared in this chapter can be applied in those situations as well.

Whether the evaluation is designed in unison with the creation of the development initiative or designed after participants complete the process, it’s essential to define the key elements of the evaluation design. The following actions can help you successfully gather the information you will need to design an effective evaluation.

Identify and engage stakeholders. Who will be affected by the development initiative and its evaluation? Who is most interested in the evaluation findings?

Surface expectations and define the purpose of the initiative. What are the reasons behind the development initiative? What results do stakeholders expect? When do they expect those results?

Understand the initiative design. Who is the target audience? What will its experience entail? How is the initiative being delivered (face-to-face, blended, virtual, asynchronous, or synchronous)? What are the expected outcomes of the initiative?

Create a results framework. Is the initiative designed in a way that is likely to achieve the desired results? If not, what aspects of the design should be modified?

Determine the evaluation questions. How can stakeholder expectations be reflected in the evaluation questions?

Create an evaluation plan. How will the evaluation gather data, from whom, and when? What resources will be used? How will results be communicated to stakeholders and when?

Identify and Engage Stakeholders

Stakeholders are people who are, or will be, affected by the initiative being evaluated or by the outcomes of the evaluation itself. If they do not get their questions answered or do not feel their perspective is represented, it’s unlikely that your evaluation will serve its purpose, no matter how rigorous your design. To avoid this disappointment and to get the highest-quality, most-relevant data possible, identify and include stakeholders early in the evaluation-design process and understand the different perspectives and power dynamics at play. To identify the key stakeholders, ask the following questions:

Who has an interest in the development initiative?

Who has an interest in the evaluation’s process and results?

Are there additional people whose support is required for the success of the initiative or the evaluation?

Who has decision-making authority with respect to both the initiative and the evaluation?

Once the key stakeholders have been identified, clarify their interests and expectations about why and how the evaluation is being conducted so that any differences in expectations can be addressed. Later we will examine stakeholder perspectives on the initiative itself, but for now the focus is on the evaluation.

Identifying stakeholders and understanding their perspectives must be part of an ongoing process. Although it is critical to do so early in evaluation planning, reexamining who key stakeholders are and what they are interested in or expect is good practice, particularly in a complex, multiyear evaluation. Engaging stakeholders throughout the evaluation will likely result in an evaluation that is more relevant and useful.

Surface Expectations and Define the Purpose of the Initiative

The purpose of a development initiative may seem to be clear, but often, when you check your understanding against that of different stakeholder groups, you will find a lack of alignment. Working with stakeholders to surface their expectations for the initiative and helping them reach a shared understanding of its purpose helps bring clarity to the type of evaluation that will be most useful. Designing a development initiative and its evaluation at the same time is an effective way to ensure that stakeholders have that alignment and understanding. Defining how your evaluation will measure the impact of the development initiative puts abstract goals (such as “We will develop better leaders”) into practical terms (such as “Our senior managers will understand how to give feedback to their direct reports”).

Understanding stakeholder assumptions will help you better define the purpose of the initiative so you can design a more effective evaluation. Although not all stakeholders will, or should, participate in determining the scope or focus of the evaluation, it will be helpful to you to understand all of the assumptions the stakeholder groups hold about the purposes of the development initiative. A thorough understanding of how elements of the development initiative fit together and of the context in which the initiative takes place is needed to design the evaluation. Integrating the design of the evaluation with the design of the initiative prompts clear conversations about stakeholder expectations at a time when adjustments can be made to make sure that both the initiative and the evaluation are on track to deliver on those expectations. With guidance, stakeholders should be able to articulate the type and amount of impact they expect, as well as the expected timing of that impact.

In reviewing the leadership-development initiative, evaluators can gain a full picture of the process by discussing expectations with stakeholders. The following list of questions can be helpful in guiding these discussions with key stakeholders.

Exhibit 2.1 – Questions for Surfacing Expectations and Defining Purpose

Overall Purpose (or Development Strategy)

What specific challenge does the initiative hope to address?

How does the initiative support the organization’s business or leadership strategy?

What specific leadership needs does the initiative address?

Are there any other external and internal pressures or demands for creating the initiative?

What is the overall purpose of the initiative?

Expected Impact

What type of outcomes is the initiative intended to promote? Knowledge acquisition? Awareness change? Behavioral change? Skill development? Performance improvement? Network enhancement? Culture change?

What type of impact is the initiative expected to have? Will it affect only individuals, or will it also affect teams or groups? Will it have a broad organizational impact?

What conditions are needed for the initiative to be successful?

When is it realistic to expect to see results? How will we know when these results have occurred?

Defining and Categorizing Impact

The Kirkpatrick Four Levels is a widely used model for training evaluation (Kirkpatrick & Kirkpatrick, 2014). For many years the model has provided a common language for understanding among stakeholders about the kinds of outcomes that are expected from a training initiative, including leadership-development training. An increasing number of models are being used to evaluate training and development initiatives, such as the one offered by Bersin (2006), but Kirkpatrick remains prominent. We have found that stakeholders often expect us to frame our approach to an evaluation in terms of the levels in the Kirkpatrick model. As a reminder (or perhaps as a brief introduction), the model organizes the types of outcomes from training and development initiatives into four levels: Level 1 (Reactions, which includes Satisfaction, Engagement, and Relevance); Level 2 (Learning, which includes Knowledge, Skill, Attitude, Confidence, and Commitment); Level 3 (Behaviors, which includes Performance as well as the Processes and Systems that reinforce, encourage, and reward performance); and Level 4 (Results, including leading indicators). Commonly, measuring Level 1 is expected and routine, while measuring Levels 2, 3, and 4 is considered more challenging.

Although widely used and simple to understand, the Kirkpatrick model may not fully meet your needs for designing an evaluation of a leadership-development initiative because (1) not all leadership-development initiatives are limited to training (for example, they can include components such as consulting with executive teams, developing networks, and engaging in action-learning projects) and (2) the model as it is commonly referenced does not take into account the more complex factors that contribute to the impact of leadership development, such as how participants are selected and prepared for the initiative, how closely the design is aligned with the organizational needs it is meant to address, and the supports and barriers to learning transfer. That said, the Kirkpatrick model can be helpful when thinking about the depth of impact. It focuses on individual and organizational change, but change can be expected at other levels as well. Understanding where and what kinds of change are expected and linking that back to the initiative helps create a deeper understanding of impact and provides a framework for thinking about areas of potential disconnection between what is expected and what the initiative is designed to create.

Over the next few sections we’ll briefly discuss various ways that leadership development can lead to impact at the individual, group, organizational, and societal levels. Every leadership-development initiative is intended to have some sort of an impact on the individuals who experience it, but some may also be designed to have an impact at collective levels.

Individuals. Although an impact on individuals is expected in leadership-development work, what that impact is can vary. For example, individuals may learn new leadership models and practices, or they may learn how to be effective with different types of people or in different contexts. Participants may develop an increased awareness of their personal leadership style and how it affects others. They might also change work-related behaviors or increase their effectiveness at using newly acquired skills. Impact can vary depending on the content and design of the initiative and the development needs of individual participants. For example, three- to five-day assessment-for-development initiatives are likely to result in participants acquiring critical knowledge, building awareness, and gaining ability to apply lessons to certain behaviors and situations. For changes to become ingrained in an individual’s performance, additional developmental experiences, such as one-on-one coaching and challenging assignments, are necessary.

Groups. Development initiatives created for individual leaders can also have an impact at the group level. For example, a leader’s group might perform more effectively after the leader has enhanced his or her leadership capability. The group may be able to get products to market more quickly because its manager has improved his or her skill in focusing group effort. After participating in a development initiative and learning skills that encourage better communication among team members, a leader may be able to guide the team toward more effectively supporting community goals. When development initiatives are targeted at intact teams, outcomes are more pronounced and more quickly observable because the team as a whole is able to put the members’ new awareness, knowledge, and skills into practice immediately.

Organizations. When organizations invest substantial resources in developing leadership capabilities, they look for results that will help them achieve strategic objectives, sustain their effectiveness, and maintain their competitive position. The quality of an organization’s leadership is only one contributing factor to reaching these goals. Even so, a leadership-development initiative can, among other things, facilitate culture change, enhance the organizational climate, improve the company’s bottom line, and build a stronger, more influential organizational profile. An organization may also use development initiatives to augment the internal branding of the company, as is the case when a company offers development opportunities in order to present itself as an appealing place to work. With regard to evaluation, stakeholders will want to gather information that provides evidence of the link between leadership development and measures of organizational success. Further, organizations may take on initiatives that are focused on achieving results for the organization as a whole, rather than primarily for individual leaders. For instance, an organization could be working on changing its leadership culture. (See the sidebar “Evaluating Organizational-Leadership Initiatives.”)

Societal. Leadership-development initiatives can also aim to have an impact on societal or system-level change (Hannum, Martineau, & Reinelt, 2007). Because these types of outcomes typically take longer to occur, it may be difficult to see them in the time frame of most evaluations. For these types of evaluations, evaluators look for changes in social norms, social networks, policies, allocation of resources, and quality-of-life indicators. In a corporate setting, for example, an organization could be motivated to provide leadership development designed to move an entire industry toward improving working conditions and safety for all employees. In a community context, where building networks is a core focus of the leadership-development effort, evaluators may look for changes in the diversity and composition of networks, levels of trust and connectedness, and capacity for collective action (Hoppe & Reinelt, 2010).

Evaluating Organizational-Leadership Initiatives

In contrast to leadership initiatives that focus on enhancing individual leadership skills, organizational-leadership solutions focus on enhancing leadership capabilities for an entire organization. There are many approaches to developing organizational leadership, and many of them are not programmatic. For instance, a leadership team may choose to define and implement a new leadership strategy for its organization. This may entail making changes to talent systems, promoting a more collaborative culture, or even restructuring the organization to better support the strategy. It could also involve coaching of an executive team, using employee forums to conduct culture-change work, or launching action-learning teams, among many other possibilities.

Organizational-leadership initiatives are often multifaceted and complex. It may be more difficult to articulate clear outcomes and define a clear path to achieving the outcomes than is the case with individual leadership development. However, assessing the effectiveness of organizational-leadership initiatives is still important, perhaps more so than with individual initiatives. Many of the steps and approaches that would be recommended when evaluating leadership-development initiatives for individuals would still be useful for evaluating organizational-leadership initiatives. For instance, engaging stakeholders in conversations early on about expectations and what success would look like can help everyone be aligned about the initiative and can inform what to measure to assess the initiative’s progress and success.

Evaluation of complex and adaptive initiatives requires continuous data gathering and collective sensemaking. Developmental Evaluation (Patton, 2011; see also Gamble, 2008) is one approach that offers promise for evaluation of organizational-leadership initiatives. Unlike traditional evaluation approaches, a developmental approach is particularly suited to adaptive work in complex and dynamic situations. In these situations, systematically gathering and sharing information helps to frame concepts, surface issues, and track development. Doing so can close the gap between guessing and knowing what is happening from different perspectives.

Impact Over Time

If the impact of an initiative is expected to occur over a period of time, you can design your measurements to account for short-term, midrange, and long-term impact. The short-term impact of a development initiative can include what participants think about the initiative and their experience with it immediately after completion. Short-term impact also includes the development of new ideas or new self-awareness based on what participants have recently learned from their developmental experience.

To measure midrange impact on individuals, we recommend that the evaluation occur three to six months after the development initiative ends or after key components of the initiative are delivered or experienced. Measurements at this time usually relate to individual skill improvement, behavioral change, or team development. Assessing a development initiative’s long-term impact often occurs nine months to a year or more after the initiative ends, especially if the expected outcomes include promotions and enhanced leadership roles for participants. Areas that benefit most from this type of evaluation include the attainment of complex skills and organizational-level change. Changes in organizations take longer to achieve. Assessing change over time allows you to see trends and to determine when change occurs and if change is enduring.
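As a rough illustration of how these time frames might be turned into a data-collection calendar, the following Python sketch computes a window of dates for each type of impact from the date an initiative ends. The day offsets are assumptions made for the example (in particular, the 30-day short-term window and the 365-day cap on the long-term window are not prescribed anywhere in this chapter), and the function name and end date are hypothetical.

```python
from datetime import date, timedelta

# Assumed offsets in days after the initiative ends. They loosely follow the
# time frames discussed above: short-term right after completion, midrange
# three to six months later, long-term nine months to a year later.
WINDOWS = {
    "short-term": (0, 30),
    "midrange": (90, 180),
    "long-term": (270, 365),
}


def measurement_windows(end_date):
    """Return {label: (earliest, latest)} data-collection dates."""
    return {
        label: (end_date + timedelta(days=first), end_date + timedelta(days=last))
        for label, (first, last) in WINDOWS.items()
    }


# Hypothetical initiative that ends on March 1, 2024.
schedule = measurement_windows(date(2024, 3, 1))
for label, (start, stop) in schedule.items():
    print(f"{label}: {start.isoformat()} to {stop.isoformat()}")
```

In practice the offsets would be adjusted to the initiative's design, for example by counting from the delivery of a key component rather than from the end of the whole initiative.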

Understand the Initiative Design

Once the overall purpose of the initiative is understood, how it fits into the broader strategy for the organization has been established, and outcomes have been defined, the next step is to understand the proposed design (although in practice these steps often occur simultaneously). This section outlines some of the key elements of the design that are important to understand.

Target population and participant selection. The outcomes of a leadership-development initiative depend heavily on the individuals participating. The skills and perspectives they bring to the initiative and the context in which they work affect what they are able to learn and the results they are able to achieve. Therefore, it’s critical that you fully understand the target population for the development initiative you are evaluating, including how participants were selected. Understanding the needs, concerns, and expectations that participants bring to the development process can help you measure the results of that process. Related to this, understanding how the participants are selected and whether they will participate as one group or as multiple cohorts is also key to designing the evaluation. We make a distinction between the target population and the participants in the initiative because differences can be created in the selection process. For example, if the target population is all middle managers but only managers from a specific geographic region are selected, that can be a cue to ask questions to understand the selection process and whether it might be biased in a manner that could undercut the desired impact. It may make sense to focus on a certain region initially, but in that case it may also make sense to examine organizational impact for only that region as well.
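One simple way to spot the kind of gap described above is to compare how each group is represented among selected participants with how it is represented in the target population. The sketch below is only an illustration: the records, the region names, and the function names are made up, and a large gap is a cue to ask about the selection process, not proof of bias.

```python
from collections import Counter


def share_by_group(people, key):
    """Fraction of people falling into each value of `key`."""
    counts = Counter(person[key] for person in people)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def representation_gaps(target, selected, key):
    """Selected share minus target share per group (positive = overrepresented)."""
    target_share = share_by_group(target, key)
    selected_share = share_by_group(selected, key)
    groups = set(target_share) | set(selected_share)
    return {
        g: selected_share.get(g, 0.0) - target_share.get(g, 0.0) for g in groups
    }


# Made-up data: 100 middle managers in the target population, 20 selected.
target = (
    [{"region": "North"}] * 40
    + [{"region": "South"}] * 40
    + [{"region": "West"}] * 20
)
selected = [{"region": "North"}] * 18 + [{"region": "South"}] * 2

gaps = representation_gaps(target, selected, "region")
for region, gap in sorted(gaps.items()):
    print(f"{region}: {gap:+.2f}")
```

Here one region supplies most of the participants and another supplies none, which would prompt the questions about selection raised in the paragraph above.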

Organizational context. Because the success of a development initiative is affected by its context, evaluators may need to become familiar with the profile of the group of managers to whom participants report and the types of colleagues with whom participants work. The support and involvement of others have an impact on participants’ ability to effectively integrate what they’ve learned and apply the skills they’ve acquired. Understanding what, if any, role these stakeholders have had in the process can provide a missing link when it comes to understanding impact. For example, if an organization is seeking organizational change through a leadership-development initiative for high-potential leaders, but managers of high-potentials are resistant to these types of changes, then understanding that dynamic is important to the evaluation. It’s also important to understand any practices or policies that are relevant to the initiative.

Duration of the initiative. Initiatives can vary widely in their duration. Understanding the duration of the initiative can help you identify when to look for different types of impact and also help calibrate the depth of impact sought. As you examine the duration of the initiative relative to the desired impact, ask yourself whether the length of the initiative seems adequate for fostering the desired level and types of results. For example, a two-hour workshop on leading across cultures may raise awareness but it is unlikely to lead to significant behavior change.

Delivery mode. Development designs usually have a road map that demonstrates the major elements of the initiative, how they are to be delivered (for example, face-to-face, virtually, or a blend of both), and the timing within and between each. For instance, if it is a traditional face-to-face initiative, what are the major components and outcomes expected? If there is a virtual-learning component, how many modules will be covered and over what period of time? Will these be short engagements for accelerated development? Will these engagements build on one another so that they must be done in chronological order, or will the initiative have more distributed control, being self-paced and user-controlled?

Evaluating Virtual Initiatives

The substantial growth of digital- or virtual-learning offerings—including online modules, mobile learning, Massive Open Online Courses (MOOCs), and other virtual-learning opportunities—by leadership-development providers points to the need for increased understanding of evaluation beyond a traditional face-to-face context. CCL has also been offering and evaluating initiatives within the virtual space. Every evaluation will have similarities and differences regardless of the mode of delivery, but you will probably want to pay attention to the following tips if the initiative is situated in a digital space (Mehta & Downs, 2016):

Include data-collection measures that focus not only on the content and experience of participants but also on their previous experiences with and attitudes toward the training-delivery mode. If a participant had to learn the platform and content, the cognitive load will be higher, and this might negatively impact his or her experience.

Check all evaluation elements for appropriateness in the nontraditional digital or virtual space. Some characteristics that make a traditional face-to-face initiative successful may not directly translate without adjustment in the digital space; the same is true for the evaluation instruments. For instance, a standard survey that asks about something that can only occur in the face-to-face space needs to be tweaked or removed.

Collect other information that might not normally be necessary or available in the face-to-face space. For instance, the amount of time participants spend in the online learning-management system might be useful in talking about how much time is spent in the virtual classroom.

Think carefully about data-collection timing and triggers. Participants who have experienced a self-paced online program might not have completed the program at the same time or in the same way. For instance, if some participants take a class and provide feedback before a company restructures and others are still taking the class online when the restructure is announced, the participant experiences and scores might be different before and after the event.

Make sure the initiative objectives are clear and measurable; this is especially true in the digital environment. Research in higher education suggests that some instructors in the face-to-face environment are able to overcome weaker content alignment, in part because they are charismatic and possibly also because they are better able to set the tone and clarify expectations in the moment. The digital environment often does not present as many opportunities to clarify expectations between the instructor and the students, so clear, measurable objectives are especially vital.

Design content. The content of the design includes the topics the initiative focuses on. Is the initiative based on a specific framework or theory of leadership? What leadership competencies are addressed? What mindset shifts are fostered? Are collaborative approaches addressed? What types of modules will be completed? How does the design ensure learning transfer? For example, does it engage participants in applying what they learn in the initiative to leadership challenges relevant to their work? Does it include strategies for participants to receive ongoing support for applying new skills from coaching sessions or from their supervisors or colleagues?

Existing sources of data. Data that may be useful in an evaluation are often collected during the initiative. It is helpful to identify what data are already being gathered as part of the initiative or by other groups or departments. The risk here is using what’s available even though it may not be that useful, so think carefully about whether the available data are a good fit for the evaluation. If there’s a match, then by all means use what’s available. Conversely, when you design your data-collection efforts you may find ways to weave collection of evaluation data into the initiative experience. For example, having participants reflect on and document what they learned and how they have changed can be a source of evaluation data as well as part of the development experience.

The following questions can be used to gain an understanding of the initiative design and round out your overall picture of the initiative.

Exhibit 2.2 – Understanding the Initiative Design

Use this worksheet to get a better understanding of the initiative and how it is being designed or has been designed.

Target Population, Participant Selection, and Other Stakeholders

Who is the target population for this initiative (for example, high-potential early managers, organizational executives, or human resource managers)?

Why do these individuals need a development initiative? Why does this initiative focus on them in particular?

What is the selection process to participate?

Does the selection process make sense in light of the overall target population and initiative goals?

![]() What are relevant practices and policies related to this initiative?

What are relevant practices and policies related to this initiative?

![]() How do the participants view this particular initiative? What positive and negative associations does it have from their perspective?

How do the participants view this particular initiative? What positive and negative associations does it have from their perspective?

![]() Who are other stakeholders involved in the development process?

Who are other stakeholders involved in the development process?

![]() How do the stakeholders view this particular initiative? What positive and negative associations does it have from their perspective?

How do the stakeholders view this particular initiative? What positive and negative associations does it have from their perspective?

Design Elements

Is the initiative based on a particular leadership framework or competency model?

What content will be delivered and how will it be delivered?

How will participants engage in the initiative, and over what span of time? Will it include virtual components?

Are there plans to develop or change the initiative over time?

Barriers and Supports

How will learning transfer be supported? What role will managers play in supporting participants in their development? What type of organizational supports may be needed to enhance learning transfer?

What barriers might get in the way of participants applying what they learn?

To what extent and how will participants and their managers be held accountable for their development as a result of the initiative?

Existing Data Sources

What data will be collected during the initiative that may be useful in an evaluation? What data are being collected by other groups or departments that may be useful in an evaluation? What evaluation techniques, such as end-of-initiative surveys, are already designed for or in use by the initiative?

What assessment instruments will the initiative use that could also be used to measure change during the evaluation?

Create a Results Framework

Once the desired results of the initiative have been clearly articulated and the initiative has been designed (or at least an initial design has been proposed), different tools and processes can be applied to assess whether the design is likely to achieve the desired results. We recommend mapping the initiative to expected outcomes in a results framework. Similar approaches and tools to show these connections are referred to as a results model, logic model, theory of change, or pathway map (see, for example, Chapter Two in Hannum, Martineau, & Reinelt, 2007, or Martineau & Patterson, 2010).

Asking key stakeholders to help develop or review the results framework for your initiative can create valuable dialogue and alignment around the purpose of the initiative. It can help ensure that designs are grounded in logic and theory about what will actually work rather than in whatever development activity is the most exciting or innovative at the time. For example, a designer who has just had a great experience with a newly developed simulation may have a bias toward incorporating that simulation into every initiative, even when it isn’t aligned with initiative goals. A results framework can also help designers identify gaps in an initiative and make adjustments as necessary. You can learn more about logic modeling on the W. K. Kellogg Foundation website: http://www.wkkf.org/resource-directory/resource/2006/02/wk-kellogg-foundation-logic-model-development-guide.

Whatever approach you choose to use, it is important to make sure the purpose of the initiative and the evaluation are clear from the perspective of key stakeholders. One way to do that is to create a leadership-development results framework like the one in Table 2.2 below. This type of results framework can be used to check your understanding of the intended results and to engage stakeholders in discussions that lead to common understanding and agreement about the intended outcomes of the initiative.

The framework outlines common areas to examine as part of an evaluation and is organized by the type of information that would be gathered. The areas listed are intended to spark ideas for what you might include in a similar framework customized for your situation; they reflect what we tend to examine in our own practice as well as what we’ve seen frequently in the literature. That said, this is not a comprehensive list. The following descriptions explain what to include in each section of our sample results framework.

Needs assessment. The needs-assessment column focuses on information that is gathered as part of or prior to the design of a leadership-development initiative or process. The focus of the evaluation at this stage is to gather data about the context of and need for leadership development. This assessment should lead to a clear understanding of the business challenges and needs from the perspective of different stakeholders, the purpose of and need for leadership development, and appropriate contextual considerations including supports and barriers to an effective initiative.

Initiative design. The design column should list the key components of the initiative, including elements that ensure the design is responsive to the culture and needs of the organization and the target population. It should also indicate how the design will lead to the desired results.

Initial response to the initiative. This column includes data gathered shortly after or during the leadership-development experience to document the response to the initiative and to identify areas that may require further attention (such as participants not seeing how to apply what they learned on the job).

Expected results. Results are organized into four subcolumns: individual, group, organizational, and societal. Depending on the intended outcomes of the development, an initiative may not need all four subcolumns. Completing these subcolumns with stakeholder input and buy-in can be the most challenging and, at the same time, the most rewarding part of this process.

Contextual factors. These factors reflect conditions within or outside the organization that may not be directly related to the leadership-development initiative but are important to consider when designing the evaluation and interpreting data, as they may influence outcomes and results. Internal factors can include a recent merger, major organizational change, or a turnover of the organization’s key leadership. External factors can include changes in the economy, market trends and changes, or a natural disaster.

Determine the Evaluation Questions

Once you understand the expectations of key stakeholders and the leadership-development initiative, you can define the questions the evaluation should answer. It is important to understand the difference between evaluation questions and survey or interview questions. Evaluation questions focus on the big picture. They include critical questions about the initiative and its impact. Survey or interview questions are more detailed and are created specifically to generate data for analysis. Evaluation questions should be well defined and linked to stakeholder expectations so that they appropriately address stakeholders’ specific interests and concerns. Clarifying the key overarching questions linked to stakeholder expectations is a critical step in ensuring that the evaluation leads to relevant and useful data, analysis, and findings.

We recommend that your evaluation address only a few key questions to keep the evaluation goals clear and to maintain a focused effort during the implementation phase.

Several specific questions related to a particular development initiative can be investigated. Those questions might include the following:

To what extent does the leadership-development initiative meet its stated objectives?

Are there any unintended benefits or challenges raised by the initiative?

To what degree have participants applied what they have learned to their work?

To what extent have participants made significant behavioral changes?

What is the impact of participants’ behavioral changes (or other changes) on those around them?

Has the organization experienced the intended changes (benefits) as a result of the initiative?

To what extent did the various components of the initiative contribute to impact?

How can the initiative be strengthened to improve impact?

Prioritizing evaluation questions serves two functions. The first is to reduce the overall number of questions. If a question is not important, should resources be expended to address it? The second function is to determine the merit of the different questions. It is important to distinguish what is critical to know from what is merely interesting. There are multiple ways to prioritize evaluation questions, from having stakeholder groups vote on or rate the importance of each question, to asking funders to decide what they are willing to provide resources for. As you focus on a narrow group of evaluation questions, consider the following: What kind of information about the impact of the initiative do various stakeholders need? What will they do with the information? Why do they need it?
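For readers who track stakeholder ratings in a spreadsheet or script, the rating approach described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 1-to-5 importance scale, the function name `prioritize_questions`, and the example ratings are all hypothetical and are not drawn from any real initiative.

```python
from statistics import mean


def prioritize_questions(ratings, keep=3):
    """Rank evaluation questions by mean stakeholder rating, highest first.

    ratings: dict mapping each question to a list of importance ratings
             (assumed 1-5 scale, where 5 means "critical to know").
    keep:    number of top-ranked questions to retain.
    """
    ranked = sorted(ratings, key=lambda q: mean(ratings[q]), reverse=True)
    return ranked[:keep]


# Illustrative ratings only (hypothetical, three stakeholders per question).
example_ratings = {
    "To what extent does the initiative meet its stated objectives?": [5, 5, 5],
    "To what degree have participants applied what they learned?": [4, 5, 5],
    "Are there any unintended benefits or challenges?": [3, 2, 4],
    "How can the initiative be strengthened to improve impact?": [4, 4, 3],
}

top_questions = prioritize_questions(example_ratings, keep=2)
```

A ranking like this is a starting point for discussion rather than a decision rule; stakeholders may still choose to keep a lower-rated question that a funder considers essential.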

This is also a good time to work with stakeholders to create an evaluative rubric. Evaluative rubrics are high-level interpretation guides for data that help evaluators provide overall assessments about the merit and worth of a program or initiative. They can be used to combine information from different sources to arrive at an evaluative judgment such as “Is the program good enough to warrant continued investment?” For example, if a primary evaluation question is “Has the organization experienced the intended changes (benefits) as a result of the initiative?” then a rubric would define or provide guidance about what level and types of change would be needed to decide if the result was excellent, good, adequate, or inadequate.

Creating a rubric is one way to help stakeholders identify what is most important to know and what evidence to consider when addressing an evaluation question. It can also help stakeholders agree on and document what “adequate performance” looks like. Having this conversation before data are collected can reveal what information, and in what form, would be most useful and important for key stakeholders. Rubrics can range from general to very specific. The sample rubric provided in Table 2.3 is general. It can be helpful to scope out a general rubric during the planning stage of the evaluation and then add more detail once more is known about data collection. You can see from the sample rubric that even a general rubric provides cues about how stakeholders define success and what kinds of data would be helpful.
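To make the idea of agreed performance levels concrete, a very general rubric could be written down as a small lookup in Python. The sketch below is an assumption-laden simplification: it reduces the evidence to a single number (the share of intended organizational changes for which evidence was found), and the cut-off values are placeholders that stakeholders would need to set themselves. Rubrics in practice usually rest on richer, qualitative descriptions.

```python
# Hypothetical cut-offs: minimum share of intended changes observed for each rating.
# These values are placeholders, not recommendations.
RUBRIC = [
    (0.75, "excellent"),
    (0.50, "good"),
    (0.25, "adequate"),
    (0.00, "inadequate"),
]


def rate_result(share_of_changes_observed):
    """Map a share between 0 and 1 to the rubric label whose cut-off it meets."""
    if not 0.0 <= share_of_changes_observed <= 1.0:
        raise ValueError("share must be between 0 and 1")
    for cutoff, label in RUBRIC:
        if share_of_changes_observed >= cutoff:
            return label


rating = rate_result(0.6)
```

Writing the cut-offs down before data are collected serves the same purpose as the conversation described above: it documents what stakeholders agreed would count as adequate performance.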

“Has the organization experienced the intended changes (benefits) as a result of the initiative?”

Create an Evaluation Plan

An evaluation plan (1) ensures that the results of a development initiative are measured so that they meet the expectations of participants and other stakeholder groups and (2) helps stakeholders understand exactly what will be evaluated so they can design the development initiative to produce desired results. Organizations benefit from an evaluation plan because it clarifies what is happening, when, and why. As you plan your evaluation, be sure to indicate the relationship between specific evaluation questions, components of the leadership-development initiative, timelines, and selected evaluation methods. The evaluation plan examples that follow can serve as templates for a design that serves your particular circumstances.

Your plan provides an overview of data-collection activities and helps ensure that you are collecting the data needed to answer the evaluation questions. A good evaluation plan will also include other activities, such as the communication of results (for example, what results are communicated, to whom, and by what media).
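If the plan is kept in a structured form, checking that every evaluation question is addressed by at least one data-collection activity can be automated. The following Python sketch is one possible way to do that; the field names (`method`, `timing`, `questions`), the question labels, and the sample activities are all hypothetical.

```python
def unaddressed_questions(evaluation_questions, plan):
    """Return the evaluation questions that no planned activity addresses."""
    covered = set()
    for activity in plan:
        covered.update(activity["questions"])
    return [q for q in evaluation_questions if q not in covered]


# Hypothetical question labels and plan entries, for illustration only.
questions = ["Q1 objectives met", "Q2 learning applied", "Q3 organizational change"]

plan = [
    {
        "method": "End-of-initiative survey",
        "timing": "Final session",
        "questions": ["Q1 objectives met"],
    },
    {
        "method": "Participant interviews",
        "timing": "Three months after the initiative",
        "questions": ["Q1 objectives met", "Q2 learning applied"],
    },
]

gaps = unaddressed_questions(questions, plan)
```

In this example the third question has no method assigned, which signals that the plan needs another data-collection activity or that the question should be dropped.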

When you create your evaluation plan, you don’t yet have to know the specific content for each method (for example, the questions for your survey). Your intent at this point is to choose methods that are:

likely to produce the type of data valued by key stakeholders;

capable of addressing each specific evaluation question;

logistically feasible and set for an appropriate time frame; and

appropriate for the people who will be asked to provide the data.

In developing your evaluation plan, it’s important to understand the context in which the leadership development and evaluation are taking place. In an organizational setting, an evaluator needs to consider the ebb and flow of work and to take critical times in an organization’s calendar into account. Many organizations have periods that are particularly busy and stressful; avoid conducting evaluation activities during these periods if you can. For example, if the people you wish to collect survey data from are enmeshed in annual budget planning, they may not have time to complete your survey or may rush through it without providing useful data. You may be able to get more people to respond to your survey if you wait a month. Making the evaluation process as simple and convenient as possible can reduce scheduling problems and help you gather the information you need from the people you need to hear from.

Creating a budget is part of designing an evaluation; you’ll want to have some idea about the amount and type of resources (money, time, and staff) available. As a general rule, evaluations take up 5 to 20 percent of the cost of a development initiative, depending on the complexity of the initiative and the evaluation. The following are important questions to ask when considering the resources needed for your evaluation:

When are stakeholders expecting to see results?

Will you have enough support to hire professional evaluators to design and either implement or oversee the evaluation?

Will you have access to survey software that has built-in analysis and reporting or will you need to source that yourself (if needed)?

How much data will be collected during the evaluation? How will the organization use the evaluation results?

You will need to make resources available to collect the necessary data, analyze them, and communicate the results. If stakeholders want to measure results at multiple stages, you will need to allocate your resources appropriately to meet that need. Skill sets you may want to tap for conducting an evaluation include database experience, statistical knowledge, survey development, interviewing experience, focus-group facilitation, and project management. If the organization has available staff with these skills, that can reduce costs. Many evaluation approaches encourage stakeholders to be part of the entire evaluation process, not only as advisers but as gatherers and analyzers of information. This approach is often referred to as participatory evaluation, and it can reduce costs and serve as a development opportunity.
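The rule of thumb that an evaluation takes up 5 to 20 percent of the cost of a development initiative translates into simple arithmetic. The Python sketch below shows that calculation; the function name and the initiative cost of 200,000 are hypothetical, and where a given evaluation falls within the range depends on the complexity of the initiative and the evaluation.

```python
def evaluation_budget_range(initiative_cost, low_percent=5, high_percent=20):
    """Return the (low, high) evaluation budget implied by a percentage range."""
    if initiative_cost < 0:
        raise ValueError("initiative_cost must not be negative")
    low = initiative_cost * low_percent / 100
    high = initiative_cost * high_percent / 100
    return low, high


# Hypothetical initiative cost, in whatever currency the budget uses.
low, high = evaluation_budget_range(200_000)
```

For an initiative costing 200,000, the range works out to between 10,000 and 40,000, which gives stakeholders a starting figure to test against the questions listed above.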

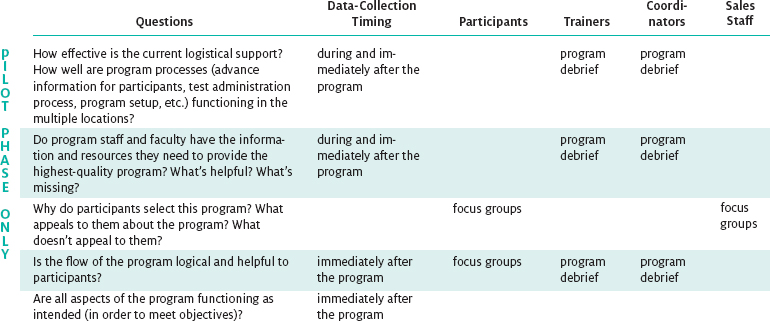

Evaluation plans can be as simple or as complex as you need them to be. We’ve included two examples in Tables 2.4 and 2.5 to illustrate the different ways information can be displayed.

Once you have taken these initial steps, you have laid the foundation for planning the evaluation. You are now ready to get more specific about the evaluation plan and flesh out the methods that will be used. The next chapter provides an overview of commonly used methods and approaches to evaluating leadership-development initiatives.

Table 2.4 – Evaluation Plan Example 1

Longitudinal Outcome Evaluation for the ABC Leadership Development Program

Table 2.5 – Evaluation Plan Example 2

Pilot and Ongoing Implementation and Outcome Evaluation of the MNQ Leadership Development Program

Checklist – Chapter Two

Gather Information, Plan, and Conceptualize the Evaluation

Plan your evaluation when the development initiative is being designed.

Identify and engage stakeholders with an interest in the initiative and its evaluation.

Surface expectations and define purpose of the initiative.

Understand the initiative design, including the types of impact the initiative is expected to have, its target audience, and the period of time over which the impact is expected to occur.

Create a results framework.

Determine and prioritize evaluation questions.

Create an evaluative rubric.

Create an evaluation plan that includes data-collection methods, resources for the evaluation, and how the evaluation results will be used.