Chapter 1: Introduction

Two Questions Organizations Need to Ask

Business Intelligence and Business Analytics

Introductory Statistics Courses

Added Complexities in Multivariate Analysis

Obtaining and Cleaning the Data

Understanding the Statistical Study as a Story

The Plan-Perform-Analyze-Reflect Cycle

Framework and Chapter Sequence

Historical Perspective

In 1981, Bill Gates made his infamous statement that “640KB ought to be enough for anybody” (Lai, 2008).

Looking back even further, about 10 to 15 years before Bill Gates’s statement, we were in the middle of the Vietnam War era. State-of-the-art computer technology for both commercial and scientific areas at that time was the mainframe computer. A typical mainframe computer weighed tons, took an entire floor of a building, had to be air-conditioned, and cost about $3 million. Mainframe memory was approximately 512 KB with disk space of about 352 MB and speed up to 1 MIPS (million instructions per second).

In 2016, only 45 years later, an iPhone 6 with 32-GB memory has about 9300% more memory than the mainframe and can fit in a hand. A laptop with the Intel Core i7 processor has speeds up to 238,310 MIPS, about 240,000 times faster than the old mainframe, and weighs less than 4 pounds. Further, an iPhone or a laptop costs significantly less than $3 million. As Ray Kurzweil, an author, inventor, and futurist has stated (Lomas, 2008): “The computer in your cell phone today is a million times cheaper and a thousand times more powerful and about a hundred thousand times smaller (than the one computer at MIT in 1965) and so that's a billion-fold increase in capability per dollar or per euro that we've actually seen in the last 40 years.”

Technology has certainly changed!

Two Questions Organizations Need to Ask

Many organizations have realized or are just now starting to realize the importance of using analytics. One of the first strides an organization should take toward becoming an analytical competitor is to ask themselves the following two questions.

Return on Investment

With this new and ever-improving technology, most organizations (and even small organizations) are collecting an enormous amount of data. Each department has one or more computer systems. Many organizations are now integrating these department-level systems with organization systems, such as an enterprise resource planning (ERP) system. Newer systems are being deployed that store all these historical enterprise data in what is called a data warehouse. The IT budget for most organizations is a significant percentage of the organization’s overall budget and is growing. The question is as follows:

With the huge investment in collecting this data, do organizations get a decent return on investment (ROI)?

The answer: mixed. No matter if the organization is large or small, only a limited number of organizations (yet growing in number) are using their data extensively. Meanwhile, most organizations are drowning in their data and struggling to gain some knowledge from it.

Cultural Change

How would managers respond to this question: What are your organization’s two most important assets?

Most managers would answer with their employees and the product or service that the organization provides (they might alternate which is first or second).

The follow-up question is more challenging: Given the first two most important assets of most organizations, what is the third most important asset of most organizations?

The actual answer is “the organization’s data!” But to most managers, regardless of the size of their organizations, this answer would be a surprise. However, consider the vast amount of knowledge that’s contained in customer or internal data. For many organizations, realizing and accepting that their data is the third most important asset would require a significant cultural change.

Rushing to the rescue in many organizations is the development of business intelligence (BI) and business analytics (BA) departments and initiatives. What is BI? What is BA? The answers seem to vary greatly depending on your background.

Business Intelligence and Business Analytics

Business intelligence (BI) and business analytics (BA) are considered by most people as providing information technology systems, such as dashboards and online analytical processing (OLAP) reports, to improve business decision-making. An expanded definition of BI is that it is a “broad category of applications and technologies for gathering, storing, analyzing, and providing access to data to help enterprise users make better business decisions. BI applications include the activities of decision support systems, query and reporting, online analytical processing (OLAP), statistical analysis, forecasting, and data mining” (Rahman, 2009).

Figure 1.1: A Framework of Business Analytics

The scope of BI and its growing applications have revitalized an old term: business analytics (BA). Davenport (Davenport and Harris, 2007) views BA as “the extensive use of data, statistical and quantitative analysis, explanatory and predictive models, and fact-based management to drive decisions and actions.” Davenport further elaborates that organizations should develop an analytics competency as a “distinctive business capability” that would provide the organization with a competitive advantage.

In 2007, BA was viewed as a subset of BI. However, in recent years, this view has changed. Today, BA is viewed as including BI’s core functions of reporting, OLAP and descriptive statistics, as well as the advanced analytics of data mining, forecasting, simulation, and optimization. Figure 1.1 presents a framework (adapted from Klimberg and Miori, 2010) that embraces this expanded definition of BA (or simply analytics) and shows the relationship of its three disciplines (Information Systems/Business Intelligence, Statistics, and Operations Research) (Gorman and Klimberg, 2014). The Institute of Operations Research and Management Science (INFORMS), one of the largest professional and academic organizations in the field of analytics, breaks analytics into three categories:

● Descriptive analytics: provides insights into the past by using tools such as queries, reports, and descriptive statistics,

● Predictive analytics: understand the future by using predictive modeling, forecasting, and simulation,

● Prescriptive analytics: provide advice on future decisions using optimization.

The buzzword in this area of analytics for about the last 25 years has been data mining. Data mining is the process of finding patterns in data, usually using some advanced statistical techniques. The current buzzwords are predictive analytics and predictive modeling. What is the difference in these three terms? As discussed, with the many and evolving definitions of business intelligence, these terms seem to have many different yet quite similar definitions. Chapter 17 briefly discusses their different definitions. This text, however, generally will not distinguish between data mining, predictive analytics, and predictive modeling and will use them interchangeably to mean or imply the same thing.

Most of the terms mentioned here include the adjective business (as in business intelligence and business analytics). Even so, the application of the techniques and tools can be applied outside the business world and are used in the public and social sectors. In general, wherever data is collected, these tools and techniques can be applied.

Introductory Statistics Courses

Most introductory statistics courses (outside the mathematics department) cover the following topics:

● descriptive statistics

● probability

● probability distributions (discrete and continuous)

● sampling distribution of the mean

● confidence intervals

● one-sample hypothesis testing

They might also cover the following:

● two-sample hypothesis testing

● simple linear regression

● multiple linear regression

● analysis of variance (ANOVA)

Yes, multiple linear regression and ANOVA are multivariate techniques. But the complexity of the multivariate nature is for the most part not addressed in the introduction to statistics course. One main reason—not enough time!

Nearly all the topics, problems, and examples in the course are directed toward univariate (one variable) or bivariate (two variables) analysis. Univariate analysis includes techniques to summarize the variable and make statistical inferences from the data to a population parameter. Bivariate analysis examines the relationship between two variables (for example, the relationship between age and weight).

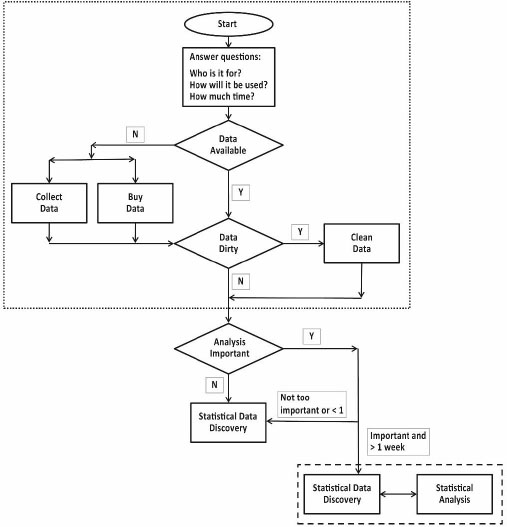

A typical student’s understanding of the components of a statistical study is shown in Figure 1.2. If the data are not available, a survey is performed or the data are purchased. Once the data are obtained, all at one time, the statistical analyses are done—using Excel or a statistical package, drawing the appropriate graphs and tables, performing all the necessary statistical tests, and writing up or otherwise presenting the results. And then you are done. With such a perspective, many students simply look at this statistics course as another math course and might not realize the importance and consequences of the material.

Figure 1.2: A Student’s View of a Statistical Study from a Basic Statistics Course

The Problem of Dirty Data

Although these first statistics courses provide a good foundation in introductory statistics, they provide a rather weak foundation for performing practical statistical studies. First, most real-world data are “dirty.” Dirty data are erroneous data, missing values, incomplete records, and the like. For example, suppose a data field or variable that represents gender is supposed to be coded as either M or F. If you find the letter N in the field or even a blank instead, then you have dirty data. Learning to identify dirty data and to determine corrective action are fundamental skills needed to analyze real-world data. Chapter 3 will discuss dirty data in detail.

Added Complexities in Multivariate Analysis

Second, most practical statistical studies have data sets that include more than two variables, called multivariate data. Multivariate analysis uses some of the same techniques and tools used in univariate and bivariate analysis as covered in the introductory statistics courses, but in an expanded and much more complex manner. Also, when performing multivariate analysis, you are exploring the relationships among several variables. There are several multivariate statistical techniques and tools to consider that are not covered in a basic applied statistics course.

Before jumping into multivariate techniques and tools, students need to learn the univariate and bivariate techniques and tools that are taught in the basic first statistics course. However, in some programs this basic introductory statistics class might be the last data analysis course required or offered. In many other programs that do offer or require a second statistics course, these courses are just a continuation of the first course, which might or might not cover ANOVA and multiple linear regression. (Although ANOVA and multiple linear regression are multivariate, this reference is to a second statistics course beyond these topics.) In either case, the students are ill-prepared to apply statistics tools to real-world multivariate data. Perhaps, with some minor adjustments, real-world statistical analysis can be introduced into these programs.

On the other hand, with the growing interest in BI, BA, and predictive analytics, more programs are offering and sometimes even requiring a subsequent statistics course in predictive analytics. So, most students jump from univariate/bivariate statistical analysis to statistical predictive analytics techniques, which include numerous variables and records. These statistical predictive analytics techniques require the student to understand the fundamental principles of multivariate statistical analysis and, more so, to understand the process of a statistical study. In this situation, many students are lost, which simply reinforces the students’ view that the course is just another math course.

Practical Statistical Study

Even with these ill-prepared multivariate shortcomings, there is still a more significant concern to address: the idea that most students view statistical analysis as a straightforward exercise in which you sit down once in front of your computer and just perform the necessary statistical techniques and tools, as in Figure 1.2. How boring! With such a viewpoint, this would be like telling someone that reading a book can simply be done by reading the book cover. The practical statistical study process of uncovering the story behind the data is what makes the work exciting.

Obtaining and Cleaning the Data

The prologue to a practical statistical study is determining the proper data needed, obtaining the data, and if necessary cleaning the data (the dotted area in Figure 1.3). Answering the questions “Who is it for?” and “How will it be used?” will identify the suitable variables required and the appropriate level of detail. Who will use the results and how they will use them determine which variables are necessary and the level of granularity. If there is enough time and the essential data is not available, then the data might have to be obtained by a survey, purchasing it, through an experiment, compiled from different systems or databases, or other possible sources. Once the data is available, most likely the data will first have to be cleaned—in essence, eliminating erroneous data as much as possible. Various manipulations of data will be taken to prepare the data for analysis, such as creating new derived variables, data transformations, and changing the units of measuring. Also, the data might need to be aggregated or compiled various ways. These preliminary steps can account for about 75% of time of a statistical study and are discussed further in Chapter 17.

As shown in Figure 1.3, the importance placed on the statistical study by the decision-makers/users and the amount of time allotted for the study will determine whether the study will be only a statistical data discovery or a more complete statistical analysis. Statistical data discovery is the discovery of significant and insignificant relationships among the variables and the observations in the data set.

Figure 1.3: The Flow of a Real-World Statistical Study

Understanding the Statistical Study as a Story

The statistical analysis (the enclosed dashed-line area in Figure 1.3) should be read like a book—the data should tell a story. The first part of the story and continuing throughout the study is the statistical data discovery.

The story develops further as many different statistical techniques and tools are tried. Some will be helpful, some will not. With each iteration of applying the statistical techniques and tools, the story develops and is substantially further advanced when you relate the statistical results to the actual problem situation. As a result, your understanding of the problem and how it relates to the organization is improved. By doing the statistical analysis, you will make better decisions (most of the time). Furthermore, these decisions will be more informed so that you will be more confident in your decision. Finally, uncovering and telling this statistical story is fun!

The Plan-Perform-Analyze-Reflect Cycle

The development of the statistical story follows a process that is called here the plan-perform-analyze-reflect (PPAR) cycle, as shown in Figure 1.4. The PPAR cycle is an iterative progression.

Figure 1.4: The PPAR Cycle

The first step is to plan which statistical techniques or tools are to be applied. You are combining your statistical knowledge and your understanding of the business problem being addressed. You are asking pointed, directed questions to answer the business question by identifying a particular statistical tool or technique to use.

The second step is to perform the statistical analysis, using statistical software such as JMP.

The third step is to analyze the results using appropriate statistical tests and other relevant criteria to evaluate the results. The fourth step is to reflect on the statistical results. Ask questions, like what do the statistical results mean in terms of the problem situation? What insights have I gained? Can you draw any conclusions? Sometimes, the results are extremely useful, sometimes meaningless, and sometimes in the middle—a potential significant relationship.

Then, it is back to the first step to plan what to do next. Each progressive iteration provides a little more to the story of the problem situation. This cycle continues until you feel you have exhausted all possible statistical techniques or tools (visualization, univariate, bivariate, and multivariate statistical techniques) to apply, or you have results sufficient to consider the story completed.

Using Powerful Software

The software used in many initial statistics courses is Microsoft Excel, which is easily accessible and provides some basic statistical capabilities. However, as you advance through the course, because of Excel’s statistical limitations, you might also use some nonprofessional, textbook-specific statistical software or perhaps some professional statistical software. Excel is not a professional statistics software application; it is a spreadsheet.

The statistical software application used in this book is the SAS JMP statistical software application. JMP has the advanced statistical techniques and the associated, professionally proven, high-quality algorithms of the topics/techniques covered in this book. Nonetheless, some of the early examples in the textbook use Excel. The main reasons for using Excel are twofold: (1) to give you a good foundation before you move on to more advanced statistical topics, and (2) JMP can be easily accessed through Excel as an Excel add-in, which is an approach many will take.

Framework and Chapter Sequence

In this book, you first review basic statistics in Chapter 2 and expand on some of these concepts to statistical data discovery techniques in Chapter 4. Because most data sets in the real world are dirty, in Chapter 3, you discuss ways of cleaning data. Subsequently, you examine several multivariate techniques:

● regression and ANOVA (Chapter 5)

● logistic regression (Chapter 6)

● principal components (Chapter 7)

● cluster analysis Chapter 9)

The framework that of statistical and visual methods in this book is shown in Figure 1.5. Each technique is introduce with a basic statistical foundation to help you understand when to use the technique and how to evaluate and interpret the results. Also, step-by-step directions are provided to guide you through an analysis using the technique.

Figure 1.5: A Framework for Multivariate Analysis

The second half of the book introduces several more multivariate/predictive techniques and provides an introduction to the predictive analytics process:

● LASSO and Elastic Net (Chapter 8),

● decision trees (Chapter 10),

● k-nearest neighbor (Chapter 11),

● neural networks (Chapter 12)

● bootstrap forests and boosted trees (Chapter 13)

● model comparison (Chapter 14)

● text mining (Chapter 15)

● association rules (Chapter 16), and

● data mining process (Chapter 17).

The discussion of these predictive analytics techniques uses the same approach as with the multivariate techniques—understand when to use it, evaluate and interpret the results, and follow step-by-step instructions.

When you are performing predictive analytics, you will most likely find that more than one model will be applicable. Chapter 14 examines procedures to compare these different models.

The overall objectives of the book are to not only introduce you to multivariate techniques and predictive analytics, but also provide a bridge from univariate statistics to practical statistical analysis by instilling the PPAR cycle.