So far, we've seen multiple activation functions, but they all share one limitation: the output can only distinguish two classes, 0 or 1. Consider the heart disease example:

The neural network predicted a 15% probability of not having heart disease and an 85% probability of having heart disease, and we set the threshold to 50%. As soon as one of these percentages exceeds the threshold, we output its index, which is 0 or 1. In this example, 85% is greater than 50%, so we output 1, meaning that this person is predicted to have heart disease in the future.
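The thresholding rule can be sketched in a few lines of Python. This is only an illustration: the function name `classify_binary` is hypothetical, and the sketch assumes that index 1 stands for "has heart disease" and index 0 for "does not".

```python
def classify_binary(p_positive, threshold=0.5):
    """Return 1 if the positive-class probability exceeds the threshold, else 0."""
    return 1 if p_positive > threshold else 0

# 85% probability of heart disease, as in the example above
label = classify_binary(0.85)
print(label)
```

With a 50% threshold and two classes whose probabilities sum to 100%, at most one class can exceed the threshold, so checking the positive class alone is enough.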

These days, neural networks can predict thousands of classes. In the case of ImageNet, for example, a network chooses among thousands of image categories. We do this by labeling our images with more than just 0 and 1. Look at the following photos:

Here, we label the photos from 0 to 6 and let the neural network assign each label a percentage. We then take the maximum, which in this case is 38%, so the neural network gives us image 4 as the output. Notice that these percentages sum to 100%.

Let us now move on to implementing multiclass classification. Here is what we have seen so far:

This is a neural network containing an activation function in the output layer. Let us replace this activation function with three nodes, assuming we need to predict three classes instead of two:

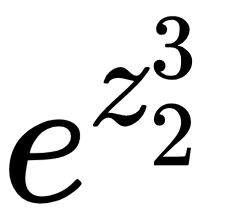

Each of these nodes will have a different function, which will be $e^{Z}$. Thus, we will have $e^{Z_1}$, $e^{Z_2}$, and $e^{Z_3}$, sum up these three values, and conclude by dividing each $e^{Z_i}$ by the sum of the three values:

$$p_i = \frac{e^{Z_i}}{e^{Z_1} + e^{Z_2} + e^{Z_3}}$$

This step of division is just to make sure that the sum of these percentages will be 100%.
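The exponentiate-then-divide procedure described here is commonly called softmax. A minimal sketch in plain Python follows; the function name `softmax` and the list-based interface are choices made for this illustration, not something fixed by the text.

```python
import math

def softmax(z_values):
    """Exponentiate each score, then divide by the sum of the exponentials."""
    exps = [math.exp(z) for z in z_values]
    total = sum(exps)
    return [e / total for e in exps]

# Three output nodes produce three probabilities that sum to 1 (100%)
probs = softmax([1.0, 2.0, 3.0])
print(probs, sum(probs))
```

Library implementations typically subtract the largest score from every score before exponentiating to avoid overflow; that leaves the result unchanged and is omitted here to keep the sketch close to the description.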

Consider an example where $Z_1$ is 5, making $e^{Z_1}$ equal to 148.4. $Z_2$ is 2, which makes $e^{Z_2}$ equal to 7.4. Similarly, $Z_3$ can be set to -1 and $e^{Z_3}$ will be equal to 0.4. The sum of these values is 156.2. The next step, as discussed, is dividing each of these values by the sum to attain the final percentages.

For class 1, we get 95%, class 2 gives us 4.7%, and class 3 gives us 0.3%.

As logic dictates, the neural network will choose class 1 as the outcome, because it has the highest percentage. At 95%, it is also far above the 50% threshold we used in the two-class case.
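The arithmetic of this example can be checked with a short script. It is a sketch that uses the three scores from the text (5, 2, and -1); the variable names are illustrative, and classes are numbered from 1 to match the text.

```python
import math

z = [5.0, 2.0, -1.0]                 # scores for classes 1, 2, and 3
exps = [math.exp(v) for v in z]      # roughly 148.4, 7.4, and 0.4
total = sum(exps)                    # roughly 156.2
probs = [e / total for e in exps]

# Pick the class with the highest probability (numbered from 1)
predicted = probs.index(max(probs)) + 1

for i, (e, p) in enumerate(zip(exps, probs), start=1):
    print(f"class {i}: e^Z = {e:.1f}, probability = {p:.1%}")
print("predicted class:", predicted)
```

Because the script divides the unrounded exponentials, the probability for class 3 comes out slightly lower than the figure obtained from the rounded values 0.4 and 156.2.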

Here is what our final neural network looks like:

The weights with the subscript 1 feed into hidden layer 1, and the weights with the subscript 2 feed into hidden layer 2.

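The Z values are weighted sums: each input to a node is multiplied by its weight, and the products are added up. For the layers after the first, the inputs are the outputs of the previous layer's activation function, so Z is the sum of those activation outputs multiplied by the weights.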

In reality, we have another weight called the bias, b, which is added to the weighted sum Z. The following diagram should help you understand this better:

The bias weight is updated in the same way as the v weight.
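To make the role of Z and the bias concrete, here is a minimal sketch of the computation for one node in each of two layers. All numeric values are hypothetical, the helper names `weighted_sum` and `sigmoid` are invented for this illustration, and the sigmoid is only one possible choice of activation function.

```python
import math

def weighted_sum(inputs, weights, bias):
    """Z = (sum of input * weight) + b for one node."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias

def sigmoid(z):
    """One possible activation function (an assumption for this sketch)."""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical inputs, weights, and biases, chosen only for illustration
x = [0.5, -1.0]
w1, b1 = [0.8, 0.2], 0.1   # weights and bias feeding a node in hidden layer 1
w2, b2 = [1.5], -0.3       # weight and bias feeding a node in hidden layer 2

z1 = weighted_sum(x, w1, b1)     # 0.5*0.8 + (-1.0)*0.2 + 0.1
a1 = sigmoid(z1)                 # activation output of hidden layer 1
z2 = weighted_sum([a1], w2, b2)  # layer 2 sums activation outputs, not raw inputs
print(z1, a1, z2)
```

The second call shows the point made above: beyond the first layer, the values being multiplied by the weights are activation outputs, and the bias is added to each weighted sum in the same way.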