CHAPTER 12

A Call to Action: How to Roll Out Cybersecurity Risk Management

There are three general themes to this book:

- What measurement is;

- How to apply measurement;

- How to improve measurement.

What distinguishes this tome from its predecessors, How to Measure Anything: Finding the Value of “Intangibles” in Business and The Failure of Risk Management, is that this book is domain focused. More than that, it's designed to be a road map for establishing cybersecurity risk management as the C‐level strategic technology risk practice. To this point, we believe cybersecurity as an operational function should be redefined around quantitative risk management. This book has provided more evangelism and proof to that quantitative end. If you are of the mind that cybersecurity risk management (CSRM) should be a program as opposed to a grab bag of quantitative tricks, then this chapter is a high‐level proposition to that end. We will lay out what such an organization might look like and how it could work alongside other technology risk functions.

Establishing the CSRM Strategic Charter

This section answers the question “What should the overarching corporate role be for CSRM?” What we are framing as the CSRM function should become the first gate for executive, or board‐level, large‐investment consideration. Leaders still make the decisions, but they are using their quantitative napkins to add, multiply, and divide dollars and probabilities. Ordinal scales and other faux risk stuff should not be accepted.

The CSRM function is a C‐level function. It could be the CISO's function, but we actually put this role as senior to the CISO and reporting directly to the CEO or board. Of course, if the CISO reports to those functions, then this may work, but it requires an identity shift for the CISO. “Information and security” are subsumed by “risk,” and that risk is exclusively understood as likelihood and impact in the manner an actuary would understand it. In terms of identity or role changes we have seen titles such as “chief technology risk officer” (CTRO) and “chief risk officer” (CRO). Unfortunately, the latter is typically purely financial and/or legal in function. Whatever the job title, it should not be placed under a CIO/CTO. That becomes the fox watching the henhouse. A CSRM function serves at the pleasure of the CEO and board and is there to protect the business from bad technology investments.

The charter, simply put, is as such:

- The CSRM function will report to the CEO and/or board of directors. The executive title could be CTRO, CRO, or perhaps CISO, as long as the role is quantitatively redefined.

- The CSRM function reviews all major initiatives for technology risk inclusive of corporate acquisitions, major investments in new enterprise technology, venture capital investments, and the like. “Review” means to quantitatively assess and forecast losses, gains, and strategic mitigations and related optimizations.

- The CSRM function will also be responsible for monitoring and analysis for existing controls investments. The purpose is optimization of technology investments in relation to probable future loss. The dimensional modeling practices and associated technology covered in Chapter 11 are key. Operationally the goal of this function is answering the question, “Are my investments working well together in addressing key risks?”

- The CSRM function will use proven quantitative methods inclusive of probabilities for likelihoods and dollars as impact to understand and communicate risk. Loss exceedance curves will be the medium for discussing and visualizing risks and associated mitigation investments in relationship to tolerance. This includes risks associated with one application and/or a roll‐up of one or more portfolios of risk.

- The CSRM is responsible for maintaining corporate risk tolerances in conjunction with the office of the CFO, general counsel, and the board. Specifically, risk tolerance exceedance will be the KPI used to manage risk.

- The CSRM function will be responsible for managing and monitoring technology exception–management programs that violate risk tolerances.

- The CSRM function will maintain cyberinsurance policies in conjunction with other corporate functions such as legal and finance. CSRM provides the main parameters that inform the insurance models.
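To make the charter's language of probabilities, dollars, loss exceedance, and tolerance concrete, here is a minimal sketch in Python. Everything specific in it is an assumption made for illustration: the risk names, the probabilities, the 90% confidence intervals, the tolerance thresholds, and the choice of a lognormal loss distribution fitted to each interval. It is not a prescribed implementation, only one way the KPI of "risk tolerance exceedance" could be computed from a simple Monte Carlo simulation.

```python
import math
import random

# Hypothetical risk register: (name, annual probability, 90% CI low, 90% CI high), in dollars.
RISKS = [
    ("Customer data breach", 0.05, 1_000_000, 20_000_000),
    ("Ransomware outage", 0.10, 250_000, 5_000_000),
    ("Vendor compromise", 0.03, 500_000, 10_000_000),
]

# Hypothetical tolerance: maximum acceptable chance per year of losing more than each amount.
TOLERANCE = {1_000_000: 0.10, 10_000_000: 0.01}


def simulate_annual_losses(risks, trials=20_000, seed=1):
    """Return one simulated total annual loss per trial."""
    rng = random.Random(seed)
    totals = []
    for _ in range(trials):
        total = 0.0
        for _name, prob, low, high in risks:
            if rng.random() < prob:
                # Assumption: losses are lognormal, with the 90% CI spanning
                # 3.29 standard deviations (2 x 1.645) in log space.
                mu = (math.log(low) + math.log(high)) / 2
                sigma = (math.log(high) - math.log(low)) / 3.29
                total += rng.lognormvariate(mu, sigma)
        totals.append(total)
    return totals


def exceedance_probability(losses, threshold):
    """Share of simulated years in which the total loss exceeds the threshold."""
    return sum(1 for x in losses if x > threshold) / len(losses)


def tolerance_exceedances(losses, tolerance):
    """Map each threshold to (simulated probability, tolerated probability, breached?)."""
    report = {}
    for threshold, max_prob in tolerance.items():
        p = exceedance_probability(losses, threshold)
        report[threshold] = (p, max_prob, p > max_prob)
    return report


if __name__ == "__main__":
    losses = simulate_annual_losses(RISKS)
    for threshold, (p, limit, breached) in sorted(tolerance_exceedances(losses, TOLERANCE).items()):
        status = "EXCEEDS tolerance" if breached else "within tolerance"
        print(f"P(loss > ${threshold:,}) = {p:.3f} (tolerance {limit:.2f}): {status}")
```

Evaluating `exceedance_probability` over a range of thresholds traces out the loss exceedance curve; comparing it with the tolerance values at the same thresholds gives the exceedance KPI for a single application or for a rolled-up portfolio.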

Organizational Roles and Responsibilities for CSRM

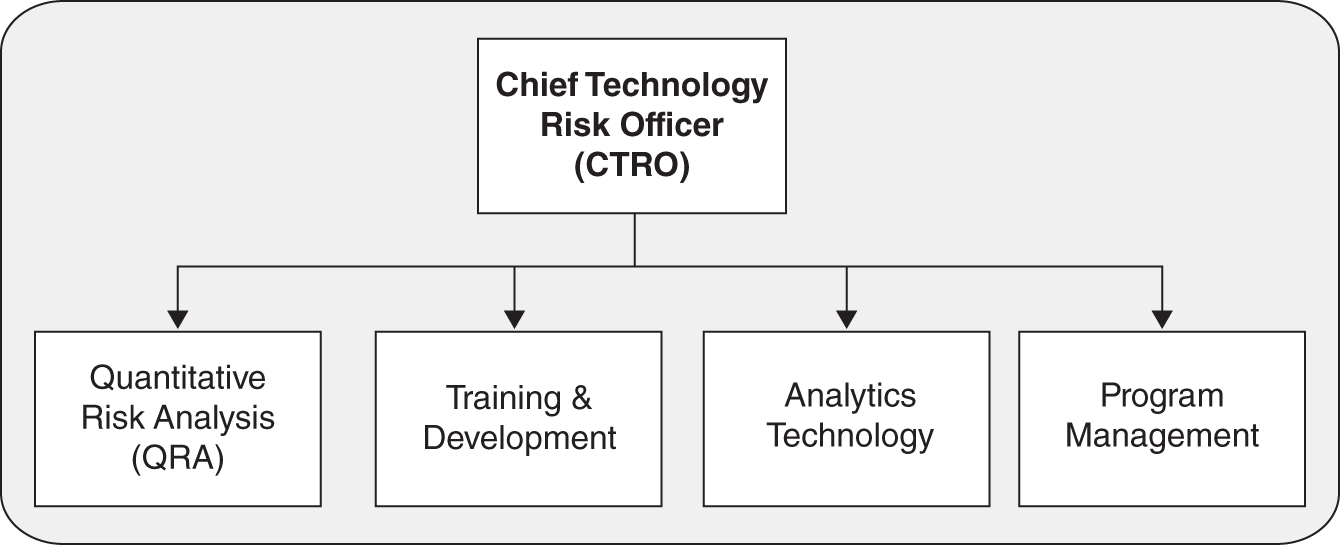

Figure 12.1 shows an example of an organizational structure for cybersecurity risk. The structure is more appropriate for a large (Fortune 1000) organization that is managing hundreds of millions of dollars, if not many billions of dollars, at risk. It presupposes that technology investments are key strategic initiatives, which is easy enough in this day and age. It also presupposes that cybersecurity risks are considered a top‐five, if not top‐three, board‐level/CEO risk.

Quantitative Risk Analysis

The quantitative risk analysis (QRA) team consists of trained analysts with a great bedside manner. You could call them consultants or advisors, but the distinction is that they have quantitative skills. They essentially are decision scientists that can program and communicate clearly with subject‐matter experts and leaders. This is a highly compensated function, typically with graduate work in statistics and/or a quantitative MBA. While degrees are nice, quantitative skill and business acumen are key. The more readily available statistics and quantitative business experts will need to work side‐by‐side with security SMEs and leadership. As the reality of cybersecurity as a measurement discipline (as most sciences are) sets in, this role and skill set will be more plentiful.

FIGURE 12.1 Cybersecurity Risk Management Function

You will want the right ratio of QRAs to risk assessment engagements. Practically speaking, there are only so many models that can be run and maintained at any given time. Ratios like 10:1 in terms of new models to each QRA may be appropriate. It depends on the complexity of the models. Also, ongoing risk tracking against tolerances is required for completed models. Enterprise technology of course will help in terms of scaling this out. But even with advanced prescriptive analytics there will be ongoing advisory work based on exceedance of risk tolerances. That is, if a particular portfolio of risk needs optimization, perhaps through acquisition of new technology and/or decommissioning of failing investments, that all takes time. A QRA will be involved in ongoing risk‐framing discussions, development of models using statistics and statistics tools (R, Python, etc.), and coordinating with technologists in the organization to design and build real‐time risk monitoring systems.

Training and Development

You can certainly use your QRA team to provide quantitative training material and even actual training delivery. But the goal here is to build quantitative DNA at various layers of the broader organization. This is similar to building general security DNA in engineering and IT teams (we assume you do this already). You can only hire so many QRA folks; they are rare, expensive, and prone to flight due to demand. You need to build tools and skills broadly if you are to fight the good fight with the bad guys. You will need a leader for this function and a content and delivery team. Using technology to deliver is key for large, distributed organizations.

Analytics Technology

This will be the most expensive and operationally intensive function. It presupposes big data, stream analytics, and cloud‐deployed solutions to support myriad analytics outcomes. The analytics team will manage the movement of large swaths of data and the appropriate deployment of telemetry into systems. If you have any hope of implementing the practices from Chapter 11 in an agile manner, this is the group to do it. This group consists of systems engineers, big data database administrators, programmers, and the like. It is an optimized IT organization focused on analytic outcomes.

Program Management

When an activity involves multiple functions across multiple organizations, it's a program. The CSRM function typically will be deployed to a variety of organizations. The QRA team will be the technical face, but tying everything together—from engagement to training and development to technology—is a program management function. No need to go overboard on this one, but don't skimp on program management; you will fail if you do.

We could have gone deep on every single role and function, building out various matrices, job descriptions, Gantt charts, and the like. That is not necessary. All the practices revealed throughout this book provide a glimpse into the core content of a larger cybersecurity risk management function. The roles and responsibilities have some flexibility. You can start lean, targeting one or two projects just using the spreadsheets provided. But if you are serious about fighting the bad guys with analytics, then you need a plan. Start by identifying where you are on the maturity model provided in the beginning of Part III. Then chart a course to build both the skills and organization that lead to prescriptive analytics capability. Having a plan removes a key roadblock to success; as Benjamin Franklin said, “Those who fail to plan, plan to fail!”

While there are many potential barriers to success such as failed planning, there is one institutional barrier that can obstruct quantitative analysis: compliance audits. In theory, audits should help ensure the right thing is occurring at the right time and in the right way. To that extent, we think audits are fantastic. What makes compliance audits a challenge is when risk management functions focus on managing to an audit as opposed to managing to actual risks. Perhaps this is why the CEO of Target was shocked when they had their breach; he went on record claiming they were compliant with the PCI (payment card industry) standard. That is a compliance mindset rather than a risk‐management mindset, and it is a deadly error in the face of our foes.

What if there was an audit function that assessed—actually measured—the effectiveness of risk management approaches? That is, would it determine the actual impact on risk reduction of soft methods versus quantitative methods? And what if scoring algorithms were put to the test? Of course, advanced methods such as Monte Carlo simulations, beta distributions, and the like would also need to be tested. Good! Again, we think one of the reasons we lose is because of untested soft methods.

There is, however, a risk that this will backfire. For example, what if methods that were predicated on measuring uncertainty got audited because they were viewed as novel, but methods using risk matrices, ordinal scales, and scoring systems got a pass? That would become a negative incentive to adopting quantitative methods. Unfortunately, this is a reality in at least one industry, as described hereafter. We bring it up here as an example of compliance audit gone wild in a nefarious manner that could have a profound negative impact on cybersecurity risk management.

Getting Audit to Audit

Audit plays a key role in ensuring quality of risk models, especially in heavily regulated industries such as banking and insurance. If new quantitative models are developed that have any influence at all on financial transactions, then audit is a necessary checkpoint to make sure that models don't have unintended consequences—possibly as a result of simple errors buried in complex formulae. Such scrutiny should be applied to any model proposed for financial transactions and certainly for decisions regarding the exposure of the organization to risks such as market uncertainty and cyberattacks.

Auditors might get excited when they see a model that involves more advanced math. The auditors have been there at one point in their careers. It's interesting to finally get to use something for which so much study and time was spent mastering. So they will eagerly dive into a model that has some statistics and maybe a Monte Carlo simulation—as they should. If the method claims to be based on some scientific research they haven't heard of, they should demand to see reference to that research. If the model is complex enough, perhaps the audit ought to be done twice on each calculation by different people. If an error is found, then any modeler whose primary interest is quality should happily fix it.

But even well‐intentioned and qualified auditors inadvertently discourage the adoption of better models in decision making. In some cases the more sophisticated model was replacing a very soft, unscientific model. In fact, this is the case in every model the authors have developed and introduced. The models we have developed replaced models that were based on the methods we have already made a case against: doing arithmetic with ordinal scales, using words such as “medium” as a risk assessment, heat maps, and so on. Yet these methods get no such scrutiny by auditors. If another method were introduced for cybersecurity that merely said we should subjectively assess likelihood and impact and then represent those assessments only on ambiguous scales, the auditors would not demand research showing the measured performance of uncalibrated subjective estimates, the mathematical foundations of the method, or the issues with using verbal scales as representations of risk.

So what happens when they audit only those methods that involve somewhat more advanced calculations? The manager who sticks with a simple scoring method they just made up that day may escape the scrutiny applied to one who uses a more advanced method, simply because the scoring method isn't advanced. This creates a disincentive for seeking improvement with more quantitative and scientifically sound methods in risk management and decision making.

For audit to avoid this (surely unintended) disincentive for improving management decision making, they must start auditing the softer methods with the same diligence they would for the methods that allow them to flex some of their old stats education.

How Auditors Can Avoid Killing Better Methods

- Audit all models. All decision making is based on a model, whether that model is a manager's intuition, a subjective point system, a deterministic cost/benefit analysis, stochastic models, or a coin flip. Don't make the mistake of auditing only things that are explicitly called models in the organization. If a class of decisions is made by gut feel, then consider the vast research on intuition errors and overconfidence and demand to see the evidence that these challenges somehow don't apply to this particular problem.

- Don't make the mistake of auditing the model strictly within its context. For example, if a manager used a deterministic cost/benefit model in a spreadsheet to evaluate cybersecurity controls, don't just check that the basic financial calculations are correct or ask where the inputs came from. Instead, start with asking whether that modeling approach really applies at all. Ask why they would use deterministic methods on decisions that clearly rely on uncertain inputs.

- Just because the output of a model is ambiguous, don't assume that you can't measure performance. If the model says a risk is “medium,” you should ask whether medium‐risk events actually occur more often than low‐risk and less often than high‐risk events. Attention is often focused on quantitative models because their output is unambiguous and can be tracked against outcomes. The very thing that draws auditors to investigate statistical models rather than softer models is actually an advantage of the former.

- Ask for research backing up the relative performance of that method versus alternatives. If the method promotes a colored heat map, look up the work of Tony Cox. If it relies on verbal scales, look up the work of David Budescu and Richard Heuer. If they claim that the softer method proposed somehow avoids the problems identified by these researchers, the auditors should demand evidence of that.

- Even claims about what levels of complexity are feasible in the organization should not be taken at face value. If a simpler scoring method is proposed based on the belief that managers won't understand anything more complex, then demand the research behind this claim (the authors’ combined experience seems to contradict this).
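The check suggested above for ambiguous outputs, asking whether “medium” risks actually occur more often than “low” risks and less often than “high” ones, can be sketched in a few lines of Python. The history below is invented purely for illustration, and the function names are hypothetical; a real audit would draw on the organization's own record of past assessments and outcomes, and would also need enough observations per label for the comparison to mean anything.

```python
from collections import defaultdict


def observed_rates(assessments):
    """Observed event frequency for each verbal label.

    `assessments` is an iterable of (label, occurred) pairs, where `occurred`
    is True if the assessed event actually happened in the tracking period.
    """
    counts = defaultdict(lambda: [0, 0])  # label -> [events, total assessments]
    for label, occurred in assessments:
        counts[label][0] += int(occurred)
        counts[label][1] += 1
    return {label: events / total for label, (events, total) in counts.items()}


def labels_are_ordered(rates, order=("low", "medium", "high")):
    """True if observed frequencies strictly increase from the lowest label to the highest."""
    present = [rates[label] for label in order if label in rates]
    return all(a < b for a, b in zip(present, present[1:]))


if __name__ == "__main__":
    # Hypothetical history: 10 past assessments per label.
    history = (
        [("low", True)] * 1 + [("low", False)] * 9
        + [("medium", True)] * 3 + [("medium", False)] * 7
        + [("high", True)] * 2 + [("high", False)] * 8
    )
    rates = observed_rates(history)
    print(rates)
    print("Labels track outcomes:", labels_are_ordered(rates))
```

In this made-up history the “medium” risks materialized more often than the “high” ones, which is exactly the kind of finding an auditor could ask the owner of a scoring method to explain.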

One legitimate concern is that this might require too much of audit. We certainly understand they have a lot of important work to do and are often understaffed. But audit could at least investigate a few key methods, especially when they have some pseudo‐math. If at least some of the softer models get audited as much as the models with a little more math, organizations would remove the incentive to stick with less scientifically valid methods just to avoid the probes of audit.

What the Cybersecurity Ecosystem Must Do to Support You

Earlier in this book we took a hard look at popular risk analysis methods and found them wanting. They do not add value and, in fact, apparently add error. Some will say that at least such methods help “start the conversation” about risk management, but since lots of methods can do that, why not choose one based on techniques that have shown a measurable improvement in estimates? Nor can anybody continue to support the claim that quantitative methods are impractical, since we've actually applied them in real environments, including Monte Carlo simulations, Bayesian empirical methods, and more advanced combinations of them as discussed in Chapters 8 and 9. And, finally, nothing in the evidence of recent major breaches indicates that the existing methods were actually helping risk management at all.

But we don't actually fault most cybersecurity professionals for adopting ineffectual methods. They were following the recommendations of standards organizations and following methods they've been trained in as part of recognized certification requirements. They are following the needs of regulatory compliance and audit in their organizations. They are using tools developed by any of the myriad vendors that use the softer methods. Thus, if we want the professionals to change, then the following must change in the ecosystem of cybersecurity.

- Standards organizations must end the promotion of risk matrices as a best practice and promote evidence‐based (scientific) methods in cybersecurity risk management unless and until there is evidence to the contrary. We have shown that the evidence against non–evidence‐based methods is overwhelming.

- To supplement the first point, standards organizations must adopt evidence‐based as opposed to testimonial‐based and committee‐based methods of identifying best practices. Only if standards organizations could show empirical evidence of the effectiveness of their current methods sufficient to overturn the empirical evidence already against them (which seems unlikely given such evidence) should they reinstate them.

- An organization could be formed to track and measure the performance of risk assessment methods themselves. This could be something modeled after the NIST National Vulnerability Database—perhaps even part of that organization. (We would argue, after all, that the poor state of risk assessment methods certainly counts as a national vulnerability.) Then standards organizations could adopt methods based on informed, evidence‐based analysis of alternative methods.

- Certification programs that have been teaching risk matrices and ordinal scales must pivot to teaching both proper evidence‐based methods and new methods as evidence is gathered (from published research and the efforts just mentioned).

- Auditors in organizations must begin applying the standards of model validity equally to all methods in order to avoid discouraging some of the best methods. When both softer methods and better quantitative methods are given equal scrutiny (instead of only assessing the latter and defaulting to the former if any problem is found), then we are confident that better methods will eventually be adopted.

- Regulators must help lead the way. We understand that conservative regulators are necessarily slower to move, but they should at least start the process of recognizing the inadequacies of methods they currently consider “compliant” and encouraging better methods.

- Vendors, consultants, and insurance companies should seize the business opportunities related to methods identified in this book. The survey mentioned in Chapter 5 indicated a high level of acceptance of quantitative methods among cybersecurity practitioners. The evidence against some of the most popular methods and for more rigorous evidence‐based methods grows. Early promoters of methods that can show a measurable improvement will have an advantage. Insurance companies are already beginning to discover that the evidence‐based methods are a good bet. Whether and how well an insurance customer uses such methods should eventually be part of the underwriting process.

Integrating CSRM with the Rest of the Enterprise

CSRM is not an island in the organization. It is only one part of risk management and decision making. Where sound, quantitative methods are already established, CSRM needs to be integrated with those solutions. Where management may rely on the unscientific methods such as what we've discredited in this book, CSRM has the opportunity to lead by example.

Enterprise Risk Management

Doug Hubbard's second book, The Failure of Risk Management: Why It's Broken and How to Fix It, mentions cybersecurity risk management but also covers risk management from engineering projects, financial portfolios, emergency response, and so on. That book covered problems shared by many areas of risk management including unjustified high confidence in expert intuition and unscientific methods. The risk assessment methods Hubbard has seen in fields outside of cybersecurity, such as oil & gas, aerospace, health care, and electrical utilities, are virtually indistinguishable from what is seen in cybersecurity. Only actuarial science and some (not all) areas of quantitative portfolio management seemed to be on the right track for adopting sound methods.

Sometimes, Hubbard Decision Research is asked to completely replace enterprise risk management (ERM) where part of that effort includes cybersecurity. There are other cases where the initial objective was only to develop quantitative methods for cybersecurity, but after seeing the improved methods, management becomes interested in applying that approach to all risks. This has happened more than once among the clients of Hubbard Decision Research. In one such example, described in the previously mentioned book, Hubbard's team developed a cybersecurity risk management solution for a group health insurer and then the board of directors asked that ERM also be based on quantitatively sound methods. The comprehensive solution included project risks, market risks, and more.

At the summary level, the ERM model looked a lot like the one‐for‐one substitution model described in Chapter 3. There were lists of events with assigned probabilities and ranges for potential losses. But at the next level of detail behind those risks, the different risk models varied in structure and inputs. Project risk models included factors such as schedule risk, cancelation, and low adoption rate. Market risks included detailed historical data and simulated the outcomes of various portfolio positions.

But even though the underlying details revealed very different structures and inputs, the outputs could all be expressed in terms of a loss exceedance curve, individually or all rolled up into one enterprise‐wide curve.
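The roll‐up idea can be illustrated with a small Python sketch. The three models below are deliberately crude stand‐ins with invented parameters, not the models built for any client; the only point is that models with very different internals can each return a simulated annual loss in dollars, so their results can be summed trial by trial into one enterprise‐wide curve. Drawing each model independently within a trial is also an assumption made here for brevity.

```python
import random


def cyber_model(rng):
    """Event-style model: an annual probability and a loss range (all values hypothetical)."""
    return rng.uniform(500_000, 8_000_000) if rng.random() < 0.08 else 0.0


def project_model(rng):
    """Project model: schedule overrun cost plus a chance of cancelation (hypothetical)."""
    overrun_months = max(0.0, rng.gauss(1.0, 2.0))
    cancelled = rng.random() < 0.05
    return overrun_months * 200_000 + (3_000_000 if cancelled else 0.0)


def market_model(rng):
    """Market model: only a negative return on a $50M position counts as a loss (hypothetical)."""
    annual_return = rng.gauss(0.05, 0.12)
    return max(0.0, -annual_return) * 50_000_000


MODELS = {"cyber": cyber_model, "project": project_model, "market": market_model}


def roll_up(models, trials=10_000, seed=7):
    """Simulate every model in each trial and sum the losses into an enterprise total."""
    rng = random.Random(seed)
    per_model = {name: [] for name in models}
    enterprise = []
    for _ in range(trials):
        total = 0.0
        for name, model in models.items():
            loss = model(rng)
            per_model[name].append(loss)
            total += loss
        enterprise.append(total)
    return per_model, enterprise


def exceedance_curve(losses, thresholds):
    """List of (threshold, probability that the loss exceeds it)."""
    n = len(losses)
    return [(t, sum(1 for x in losses if x > t) / n) for t in thresholds]


if __name__ == "__main__":
    per_model, enterprise = roll_up(MODELS)
    thresholds = [1_000_000, 5_000_000, 10_000_000]
    for name, losses in per_model.items():
        print(name, exceedance_curve(losses, thresholds))
    print("enterprise", exceedance_curve(enterprise, thresholds))
```

Because every model reports in the same unit, the same `exceedance_curve` function serves an individual risk area or the whole enterprise.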

Most firms large enough to justify someone specializing in quantitative risk analysis of cybersecurity may have someone in charge of ERM. If your ERM is not already adopting the quantitative language you are implementing for cybersecurity, you should show them what ERM could look like. Issues with adopting quantitative methods in ERM are essentially the same as what we find in cybersecurity. Use the points in Chapter 4 to explain the problems with the methods they may be currently using and to address their doubts about adopting quantitative methods.

Integrated Decision Management

ERM is much broader than cybersecurity, but even ERM is only part of the picture. Risk is one part of decision making. In one sense, a risk management department makes about as much sense as a left‐foot shoe department.

Decisions under uncertainty have to include uncertainty about rewards as well as losses. There are methods needed for the broader goal of decisions under uncertainty that are not directly addressed in risk management. For example, the loss exceedance curve doesn't, by itself, indicate how much more risk a firm would be willing to take if the potential rewards were higher. If ERM, including cybersecurity risk management, is supposed to support real decision making, then they should all be considered aspects of the decision analysis process in the organization.

We have mentioned the field of decision analysis multiple times in this book. In fact, what we referred to as “prescriptive analytics” in the previous chapter is just another name for the analysis we need to make better decisions under uncertainty. And while prescriptive analytics may be a relatively new term, decision analysis is not. There is a lot we don't have to reinvent. As it should do with ERM, cybersecurity will need to integrate with existing quantitative methods in decision making or lead by example where such methods are lacking.

Operations research, quantitative portfolio management, management science, actuarial science, decision psychology, and decision theory are a few of the disparate, partly overlapping fields that all attempt to improve decisions. An ambitious mission, beyond the scope of this book, would be to develop a type of “integrated decision management” (IDM) for the enterprise. The methods would have some differences as required by the specifics of the problems they solve, but they would all be based on procedures that can prove a measurable improvement over existing methods. They would help reduce uncertainty in key decisions and make better decisions under whatever uncertainty must remain.

You don't have to boil the ocean, and we aren't suggesting you can do this alone. Starting on this path is beyond the responsibility and authority of cybersecurity and probably beyond ERM or any other single department in the organization. Developing IDM would be piecemeal, incrementally improving one area and then another after securing buy‐in from other stakeholders. But if you build your part of this solution based on a vision of where the entire organization should be and even help promote the larger vision, then you will be an insider instead of an outsider for important decisions of the organization.

Can We Avoid the Big One?

In Chapter 1 of both the current edition and the first edition of this book, we referred to the risk of the “Big One.” This is an extensive cyberattack that affects multiple large organizations, potentially across many countries. It could involve, in concert, interruption of basic services, such as utilities and communications. This, combined with a significant reduction in trust in online and credit card transactions, could actually have an impact on the economy well beyond the costs to a single large company—even the biggest firms hit so far. We think this risk is at least as significant as it was in the first edition, if not more.

As stated earlier in this book, the nature of the security breaches such as those at Target, Merck, and SolarWinds indicates a combination of threats that, if they happened in a more coordinated manner, could produce much larger losses than we have seen so far. Recall that Target was attacked in a way that exposed a threat common to many firms: that companies and their vendors are connected to each other, and many of those companies and vendors are connected through networks to many more. Even government agencies are connected to these organizations in various ways. All of these organizations may only be one or two degrees of separation away from each other. If it is possible for a vendor to expose a company and if that vendor has many clients and those clients have many vendors, then we have a kind of total network risk that, while it has not yet been exploited, could well be exploited in the future. SolarWinds showed how one widely used software vendor creates a shared risk among all of its customers. Merck showed how the attack distributed by SolarWinds could have been much more disruptive. The attacks on the Ukrainian electrical grid and the Colonial Pipeline showed how basic infrastructure is threatened.

We said this in the first edition, and we'll keep repeating it as long as necessary. Analyzing the risks of this kind of situation is far too complex to evaluate with existing popular methods or in the heads of any of the leading experts. Proper analysis will require real, quantitative models to compute the impacts of these connections. Organizations with limited resources (which are, of course, all of them) will have to use evidence‐based, rational methods to decide how to mitigate such risks. If we begin to accept that, then we may improve our chances of avoiding—or at least recovering more gracefully from—the Big One.