Memory

Abstract

Memory is the human brain’s recollection, maintenance, reappearance, or recognition of experience.

Keywords

Memory; memory system; short-term memory; long-term memory; semantic memory; episodic memory; working memory; complementary learning; hierarchical temporal memory

Memory is the human brain’s recollection, maintenance, reappearance, or recognition of experience. It is the basis of thinking, imagination, and other advanced psychological activities. Because memory exists, people can retain past reflections, build current reflections on earlier ones, and make those reflections more comprehensive and deeper. With memory, people can accumulate experience and expand it. Memory is mental continuity in time: it connects successive experiences, turns psychological activity into a developing and unified process, and shapes a person’s psychological characteristics. Memory is an important aspect of mental ability.

8.1 Overview

Memory is the psychological process of accumulating, preserving, and extracting individual experience in the brain. In information processing terms, it is the process by which the human brain encodes, stores, and retrieves information from the outside world. The things people perceive, the questions they think about, and the emotions they experience during activities all leave impressions in the mind to varying degrees. This is the process of memorizing. Under certain conditions, according to an individual’s needs, these impressions can be aroused and take part once again in ongoing activities. This is the process of recollection. The integrated process, from storage in the brain to extraction and reuse, is referred to as memory.

Memory consists of three basic processes: entering information into the memory system (encoding), keeping information in memory (storage), and extracting information from memory (retrieval). Encoding is the first fundamental process, by which information from the sensory systems is converted into a form that memory can receive and use. In general, we obtain information through a variety of external sensory systems; it must then be converted into a memory code, that is, a mental representation of the objective physical stimulus. Encoding requires attention: depending on which aspect of the stimulus is attended to, different codes are formed. For example, when looking at characters, you can attend to their shape and structure, their pronunciation, or their meaning, forming a visual code, a sound code, or a semantic code, respectively. Encoding has a direct impact on how long a memory lasts. A strong emotional experience also enhances memory. In short, how information is encoded directly affects both its storage and its subsequent retrieval. Under normal circumstances, information is encoded in more than one way.

Information that has been encoded must be preserved in the mind so that it can be extracted after a certain period. In most cases, however, information is not preserved automatically for future use; we must make an effort to find ways to preserve it. Information that has been stored may also be disrupted and then forgotten. Psychologists who study memory are mainly concerned with the factors that affect memory storage and that counteract forgetting.

Information saved in memory is meaningless unless it can be extracted for use. There are two ways to extract information: recall and recognition. In everyday usage, “remembering” refers to recall. Recognition is easier because the original stimulus is in front of us, and we have a variety of cues with which to judge how familiar it is. Some learned material can be neither recalled nor recognized. Has it completely disappeared from our minds? No. Memory traces do not completely disappear, and relearning provides good evidence of this. Let subjects learn the same material twice, reaching the same level of proficiency each time. The number of trials or the time needed for the second study is less than that needed for the first. The difference between the two indicates the amount preserved.
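The relearning comparison described above can be turned into a simple score. The following is a minimal sketch, assuming the amount preserved is expressed as a percentage of the first-study cost; that normalization and the function name `savings_score` are assumptions made here for illustration, since the text only speaks of the difference between the two studies.

```python
def savings_score(first_cost: float, relearn_cost: float) -> float:
    """Percentage of the first-study cost (trials or time) saved on relearning.

    Assumption: savings are normalized by the first-study cost.
    """
    if first_cost <= 0:
        raise ValueError("first_cost must be positive")
    return 100.0 * (first_cost - relearn_cost) / first_cost


# Hypothetical data: 20 trials to learn a list, 12 trials to relearn it later.
print(savings_score(20, 12))  # 40.0
```

A score of 0 would mean that relearning took as long as the first study, that is, nothing measurable was preserved.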

According to its content, memory can be divided into the following four types:

- 1. Image memory: Memory with the content of the perceived image of things is called image memory. These specific images can be visual, auditory, olfactory, tactile, or taste images. For example, the memory of a picture that people have seen and a piece of music that they have heard is image memory. The remarkable feature of this kind of memory is that it preserves the perceptual feature of things, which is typically intuitive.

- 2. Emotional memory: This is the memory of emotions or feelings experienced in the past, such as a student’s memory of the happy mood felt on receiving an admission notice. In the process of knowing things or communicating with people, people always experience a certain emotional color or emotional content, which is also stored in the brain as the content of memory and becomes a part of human psychological content. Emotional memory is often formed in a single experience and never forgotten, and it has a great influence on people’s behavior. The impression left by emotional memory is sometimes more lasting than that of other forms of memory. Even when people have forgotten the fact that caused the emotional experience, the emotional experience remains.

- 3. Logical memory: This is memory in the form of ideas, concepts, or propositions, such as the memory of a mathematical theorem, formula, philosophical proposition, etc. This kind of memory is based on abstract logical thinking and has the characteristics of generality, understanding, and logicality.

- 4. Action memory: This is memory based on people’s past operational behavior. All the actions and patterns that people keep in their minds belong to action memory. This kind of memory is of great significance to the coherence and accuracy of people’s movements, and it is the basis of the formation of movement skills.

These four forms of memory are different but closely linked. For example, action memory carries a distinct image component, and logical memory that has no emotional content is difficult to retain for long.

American neuroscientist E. R. Kandel devoted his whole life to exploring the mystery of memory. His outstanding contribution to memory research won him the 2000 Nobel Prize in physiology or medicine [1]. There are a lot of deep questions about memory. Although we have a good foundation now, we are only beginning to fully understand the complexity of memory, persistence, and recall. In 2009 E. R. Kandel proposed 11 unsolved problems in memory research [2].

- 1. How does synaptic growth occur, and how is signaling across the synapse coordinated to induce and maintain growth?

- 2. What trans-synaptic signals coordinate the conversion of short- to intermediate- to long-term plasticity?

- 3. What can computational models contribute to understanding synaptic plasticity?

- 4. Will characterization of the molecular components of the presynaptic and postsynaptic cell compartments revolutionize our understanding of synaptic plasticity and growth?

- 5. What firing patterns do neurons actually use to initiate LTP at various synapses?

- 6. What is the function of neurogenesis in the hippocampus?

- 7. How does memory become stabilized outside the hippocampus?

- 8. How is memory recalled?

- 9. What is the role of small RNAs in synaptic plasticity and memory storage?

- 10. What is the molecular nature of the cognitive deficits in depression, schizophrenia, and non-Alzheimer’s age-related memory loss?

- 11. Does working memory in the prefrontal cortex involve reverberatory self-reexcitatory circuits or intrinsically sustained firing patterns?

If a breakthrough can be made in these important open problems, it will be of great value to the development of intelligence science, especially the study of memory.

8.2 Memory system

According to how long information is retained, there are three types of human memory: sensory memory, short-term memory, and long-term memory (LTM). The relationship among these three is illustrated in Fig. 8.1. First, information from the environment reaches sensory memory. If the information is attended to, it enters short-term memory. In short-term memory, the individual can restructure the information, use it, and respond to it. The information in short-term memory may be analyzed and the resulting knowledge stored in LTM. At the same time, information held in short-term memory can, if necessary, be rehearsed and then forwarded to LTM. In Fig. 8.1, the arrows indicate the direction in which information flows among the three stores of the model.

R. Atkinson and R. M. Shiffrin proposed their expanded memory system model in 1968 [3]. It likewise consists of three parts: sensory memory (register), short-term memory, and LTM. The difference is that they added control processes, which govern how information is handled in the three stores. Another notable point of the model concerns information in LTM: in their model, information in LTM does not disappear; it is held in a self-addressable store from which it does not dissipate.

8.2.1 Sensory memory

Information impinging on the senses initially goes into what is called sensory storage. Sensory storage is the direct impression of the sensory information coming from the sense organs. The sensory register can hold information from each sense organ for only some tens to several hundreds of milliseconds. In the sensory register, information can be noticed and its meaning encoded, after which it enters the next step of processing. Information that is not noticed or encoded disappears automatically.

All kinds of sensory information are held for some time in the sensory register in their own characteristic form and continue to have an effect there. These forms are called the iconic store and the echoic store. Such a representation can be said to be the most direct, most primitive memory. A mental image can exist only for a short time; even the most distinct image lasts only dozens of seconds. Sensory memory possesses the following characteristics:

George Sperling’s research verified the concept of sensory memory [4]. In this research, Sperling flashed an array of letters and numbers on a screen for a mere 50 ms. Participants were asked to report the identity and location of as many of the symbols as they could recall. Sperling could be sure that participants got only one glance because previous research had shown that 0.05 s is long enough for only a single glance at the presented stimulus.

Sperling found that when participants were asked to report on what they saw, they remembered only about four symbols. The number of symbols recalled was pretty much the same, without regard to how many symbols had been in the visual display. Some of Sperling’s participants mentioned that they had seen all the stimuli clearly, but while reporting what they saw, they forgot the other stimuli. The procedure used initially by Sperling is a whole-report procedure. Sperling then introduced a partial-report procedure, in which participants needed to report only part of what they saw.

Sperling found a way to obtain a sample of his participants’ knowledge and then extrapolated from this sample to estimate their total knowledge. He presented symbols in three rows of four symbols each and informed participants that they would have to recall only a single row of the display. The row to be recalled was signaled by a tone of high, medium, or low pitch, corresponding to the top, middle, or bottom row, respectively. Participants thus had to recall one-third of the information presented but did not know beforehand which of the three rows they would be asked to report.

Using this partial-report procedure, Sperling found that participants had available roughly 9 of the 12 symbols if they were cued immediately before or immediately after the appearance of the display. However, when they were cued 1 s later, their recall was down to four or five of the 12 items, about the same as was obtained through the whole-report procedure. These data suggest that the iconic store can hold about nine items and that it decays very rapidly. Indeed, the advantage of the partial-report procedure is drastically reduced by 0.3 s of delay and is essentially obliterated by 1 s of delay from onset of the tone.
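The extrapolation behind the partial-report estimate can be sketched in a few lines. This is an illustrative sketch only: the function name and the per-row score of 3 are assumptions, chosen so that the result matches the "roughly 9 of 12" figure reported above.

```python
def estimate_available(mean_recalled_in_cued_row: float, n_rows: int) -> float:
    """Extrapolate from the cued row to the whole display.

    Because the cued row is unknown in advance, recall for that row is
    treated as a sample of what was available for every row.
    """
    return mean_recalled_in_cued_row * n_rows


rows, per_row = 3, 4        # display layout from the text: 3 rows of 4 symbols
cued_row_score = 3.0        # hypothetical mean number recalled from the cued row
available = estimate_available(cued_row_score, rows)
print(available, "of", rows * per_row)  # 9.0 of 12
```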

Sperling’s results suggest that information fades rapidly from iconic storage. Why are we subjectively unaware of such a fading phenomenon? First, we are rarely subjected to stimuli that appear for only 50 ms and then disappear, for which we need to report. Second and more important, however, we are unable to distinguish what we see in iconic memory from what we actually see in the environment. What we see in iconic memory is what we take to be in the environment. Participants in Sperling’s experiment generally reported that they could still see the display up to 150 ms after it had been terminated.

In 1986 Professor Xinbi Fan et al. proposed a visual associative device, auditory associative device, and tactile associative device [5]. Furthermore, an advanced intelligent system with reasoning and decision can be constructed by using various associative devices. The parallel search of associative devices provides the possibility of high-speed data processing. In short, associative devices can be used as components of new computers.

8.2.2 Short-term memory

Information encoded in sensory memory enters short-term memory and, through further processing, enters LTM, where it can be kept for a long time. Information is generally kept in short-term memory for only 20–30 s, but if it is repeated, it can remain there longer. Repetition delays the disappearance of the information stored in short-term memory.

Short-term memory serves several important functions in psychological activity. First, it acts as part of consciousness, enabling us to know what we are receiving and what we are doing. Second, it can combine separate sensory messages into an intact picture. Third, it functions as a temporary register while the individual is thinking and solving a problem. For example, before taking the next step in a calculation, people temporarily hold the latest intermediate result for later use. Finally, short-term memory holds current strategies and intentions. All of these enable us to carry out various complicated behaviors until the final goal is reached. Because of these functions, most current research refers to short-term memory as working memory.

Compared with sensory memory, which holds a large amount of information available for use, the capacity of short-term memory is quite limited. Given a string of digits such as 6-8-3-5-9, participants can recite it immediately. If the string is longer than about seven digits, people cannot recite it completely. In 1956 the American psychologist George A. Miller proposed that our immediate memory capacity for a wide range of items is about seven items, plus or minus two. To chunk means to combine several small units into a familiar, larger unit of information processing; the word also denotes the unit formed in this way. A chunk is thus both a process and a unit.

Chunks depend on knowledge and experience. The function of chunking is to reduce the number of units in short-term memory and to increase the information in each unit. The more knowledge people have, the more information each chunk can hold. A chunk need not be defined by meaning; that is, there may be no meaningful connection between its elements. So, to remember a long number, we can divide it into several groups. This is an effective way to reduce the number of independent elements in the number. This kind of organization, called chunking, also plays a great role in LTM.
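Grouping a long number into chunks can be illustrated with a short sketch. The group size of three and the example number are assumptions chosen for illustration; the text does not prescribe how the groups are formed.

```python
def chunk_digits(digits: str, size: int = 3) -> list[str]:
    """Split a digit string into consecutive groups of `size` digits."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return [digits[i:i + size] for i in range(0, len(digits), size)]


number = "743218597034"     # 12 digits, more than the 7 +/- 2 item span
chunks = chunk_digits(number)
print(chunks)                           # ['743', '218', '597', '034']
print(len(number), "->", len(chunks))   # 12 -> 4
```

Twelve independent digits become four units, which falls within the limit of about seven items.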

It has been pointed out that information is stored in short-term memory according to its acoustic characteristics. In other words, even if a message is received visually, it is encoded acoustically. For example, when you see the group of letters B-C-D, you encode them by their pronunciation [bi:]-[si:]-[di:] rather than by their shape.

Short-term memory encoding in humans may have a strong acoustic attribute, but codes with other properties cannot be ruled out. Many people who cannot speak can still perform short-term memory tasks; for example, after seeing a figure, they can select one of two colored geometric figures. Fig. 8.2 shows the repeat buffer of short-term memory, which consists of several slots. Every slot is equivalent to an information channel. The informational units coming from sensory memory enter different slots separately. The repeat process of the buffer selectively repeats the information in the slots. Information that is repeated in the slots enters LTM. Information that is not repeated is cleared from short-term memory and disappears.

The time that information stays in each slot differs. The longer information is kept in a slot, the greater the chance that it will enter LTM, but also the greater the chance that it will be squeezed out by a new message coming from sensory memory. By comparison, LTM is the real storehouse of information, but information there can still be forgotten through decay, interference, and loss of strength.
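The slot buffer described above can be sketched as a small simulation. This is a deliberately simplified sketch under stated assumptions: the number of slots, the oldest-first displacement rule, and the rule that a repeated item is counted as stored in LTM at the moment it leaves the buffer are all hypothetical choices made here, not details given in the text.

```python
from collections import deque


def run_buffer(stream, n_slots=4, rehearsed=frozenset()):
    """Pass items through a fixed-size buffer.

    Returns (items still in the buffer, items copied to LTM, items lost).
    Assumption: a new item displaces the oldest slot; a displaced item
    reaches LTM only if it was marked as rehearsed.
    """
    buffer = deque()
    ltm, lost = [], []
    for item in stream:
        if len(buffer) == n_slots:
            displaced = buffer.popleft()      # oldest slot is overwritten
            (ltm if displaced in rehearsed else lost).append(displaced)
        buffer.append(item)
    return list(buffer), ltm, lost


in_buffer, ltm, lost = run_buffer("ABCDEF", n_slots=4, rehearsed={"A", "C"})
print(in_buffer)  # ['C', 'D', 'E', 'F']
print(ltm)        # ['A']
print(lost)       # ['B']
```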

The retrieval process of short-term memory is quite complicated. It involves many questions and has given rise to different hypotheses, with no consensus so far.

8.2.2.1 Classic research of Sternberg

Saul Sternberg’s research indicates that information retrieval in short-term memory proceeds by serial scanning that is exhaustive. We can interpret it as a scanning model [6].

Sternberg’s experiment is a classic research paradigm. The supposition of the experiment is that participants scan the items held in short-term memory before judging a test item as “yes” or “no.” If all items were scanned in parallel, the reaction time for correct judgments should not change with the size of the memory set. The experimental results show that the reaction time lengthens as the memory set grows. This means that short-term memory is scanned serially rather than in parallel.

Sternberg’s theory must solve another problem: if information retrieval in short-term memory is serial rather than parallel, where does the scan begin, and how does it end? According to Sternberg, the serial scan runs through all the items regardless of whether a match has already been found. The judgment comprises a comparison process and a decision process, and the decision is made only after the comparisons are complete. The scan is therefore exhaustive rather than self-terminating.
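The difference between an exhaustive and a self-terminating serial scan can be made concrete with a small sketch. It assumes that reaction time is a base time plus a fixed time per comparison; the values of 400 ms and 40 ms are hypothetical parameters chosen only for illustration, and the function names are introduced here.

```python
def mean_comparisons(set_size: int, target_present: bool,
                     exhaustive: bool = True) -> float:
    """Expected number of comparisons for one probe.

    An exhaustive scan always checks every item. A self-terminating scan
    stops at the match, so on "yes" trials it checks (n + 1) / 2 items on
    average, assuming the target is equally likely at each position.
    """
    if exhaustive or not target_present:
        return float(set_size)
    return (set_size + 1) / 2


def predicted_rt_ms(set_size: int, target_present: bool, exhaustive: bool = True,
                    base_ms: float = 400.0, per_item_ms: float = 40.0) -> float:
    """Reaction time under a linear model (base_ms, per_item_ms are hypothetical)."""
    return base_ms + per_item_ms * mean_comparisons(set_size, target_present, exhaustive)


for n in (1, 3, 5):
    print(n,
          predicted_rt_ms(n, True, exhaustive=True),
          predicted_rt_ms(n, True, exhaustive=False))
```

Under the exhaustive scan, "yes" and "no" trials grow at the same rate with set size; under the self-terminating scan, "yes" trials grow at about half the rate of "no" trials. Comparing the two slopes is what distinguishes the hypotheses.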

8.2.2.2 Direct access model

W. A. Wickelgren does not think that items in short-term memory are retrieved through comparison. Instead, people can go directly to the positions of items in short-term memory and retrieve them.

The direct access model asserts that information is not retrieved from short-term memory by scanning [7]. The brain can directly access the positions where items are stored and draw on them. According to this model, each item in short-term memory has a certain familiarity value, or trace intensity, on which people base their judgment. There is a judgment standard within the brain: if the familiarity is higher than this standard, the response is “yes”; if it is lower, the response is “no.” The more the familiarity deviates from the standard, the faster people can give a “yes” or “no” response.

The direct access model can explain serial position effect (primacy and recency effect). But how does short-term memory know the positions of items? If the retrieval of information is by direct access, why does the reaction time increase linearly when the number of items increases?

8.2.2.3 Double model

R. Atkinson and J. Juola hold that information retrieval in short-term memory includes both scanning and direct access. In brief, both ends are direct access, and the middle is scanning, as shown in Fig. 8.3.

The search model and the direct access model both have their merits, but each also has deficiencies. The double model attempts to combine the two. Atkinson and Juola put forward the double model of information retrieval in short-term memory [8]. They suppose that each input word can be encoded according to its sensory dimensions, giving its sensory code; each word also has a meaning, giving its concept code. The sensory code and the concept code together form a concept node. Each concept node has its own level of activation, or familiarity value.

There are two judgment standards within the brain. One is the high standard (C1): if the familiarity value of a word is equal to or higher than this standard, people can make a rapid “yes” response. The other is the low standard (C0): if the familiarity value of a word is equal to or lower than this standard, people can make a rapid “no” response. Atkinson and Juola regard this as the direct access process. However, if a probe word has a familiarity value lower than the high standard but higher than the low standard, a serial search is carried out before the response is made, so the reaction time is longer.
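The two-standard decision rule can be written as a short function. This is a minimal sketch: the numeric familiarity scale, the function name `judge`, and the use of a flag `in_memory_set` to stand in for the outcome of the serial search are assumptions made here for illustration.

```python
def judge(familiarity: float, c0: float, c1: float, in_memory_set: bool):
    """Return (response, route) for a probe word under the double model.

    c0 is the low standard and c1 the high standard; values are hypothetical.
    """
    if c0 >= c1:
        raise ValueError("the low standard c0 must be below the high standard c1")
    if familiarity >= c1:
        return "yes", "direct access"        # rapid "yes"
    if familiarity <= c0:
        return "no", "direct access"         # rapid "no"
    # Intermediate familiarity: fall back on a slower serial search.
    return ("yes" if in_memory_set else "no"), "serial search"


print(judge(0.9, c0=0.3, c1=0.7, in_memory_set=True))    # ('yes', 'direct access')
print(judge(0.1, c0=0.3, c1=0.7, in_memory_set=False))   # ('no', 'direct access')
print(judge(0.5, c0=0.3, c1=0.7, in_memory_set=True))    # ('yes', 'serial search')
```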

Experimental results indicate that processing speed in short-term memory is related to the properties of the material, that is, to the type of information. In 1972 Cavanaugh averaged the experimental results obtained with different materials in different studies, obtained the average time taken to scan one item of each material, and compared it with the corresponding short-term memory capacity. An interesting phenomenon was found: processing speed rises as memory capacity increases. The greater the capacity for a material, the faster that material is scanned. This phenomenon is difficult to explain clearly. One conjecture is that information in short-term memory is represented by features. Because the storage space of short-term memory is limited, the more features each item has on average, the fewer items short-term memory can hold. Cavanaugh further supposed that the processing time of each stimulus is directly proportional to its average number of features: if the average number of features is large, more processing time is needed; otherwise, less time is needed. This explanation still leaves many points in doubt, although it connects information retrieval in short-term memory with memory capacity and information representation. This is an important problem. Processing speed reflects the characteristics of the processing itself. Behind the differences in processing speed among materials, the cause may be that differences in memory capacity, information representation, and so on lead to different retrieval processes.
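The feature-based conjecture can be written as a short derivation. The symbols are assumptions introduced here for illustration and do not appear in the original: f is the average number of features per item, M is the fixed feature storage of short-term memory, C is the capacity in items, t is the scanning time per item, and k is a proportionality constant.

```latex
C = \frac{M}{f}, \qquad t = k f
\quad\Longrightarrow\quad
t \, C = k M, \qquad \frac{1}{t} = \frac{C}{k M}
```

Under these assumptions the product of scanning time and capacity is constant, so the scanning rate 1/t grows in proportion to capacity, which is the direction of the observed relationship.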

Using the Peterson-Peterson method, the forgetting of information in short-term memory was demonstrated [9].

E. R. Kandel found through his research that cAMP plays a very important role in repairing brain cells, activating brain cells, and regulating the function of brain cells, turning short-term memory into LTM [1].

8.2.3 Long-term memory

Information maintained for more than a minute is referred to as LTM. The capacity of LTM is the greatest of all memory systems. Experiments are needed to determine the capacity, storage, retrieval, and duration of LTM. Measurements of how long something can be remembered give no definite result. A memory does not last long if attention is unstable; if accompanied by repetition, however, it can be retained for a long time. The capacity of LTM is effectively unlimited. About 8 s are needed to store one chunk. Before being retrieved and applied, information stored in LTM must be transferred into short-term memory. During retrieval from LTM, the first digit needs about 2 s, and every following digit needs 200–300 ms. Numbers of different lengths, such as 34, 597, and 743218, can be used to measure how long each takes to retrieve. The results indicate that a two-digit number needs 2200 ms, a three-digit number needs 2400 ms, and a six-digit number needs 3000 ms.
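The three reported timings (2200 ms, 2400 ms, and 3000 ms) are consistent with a simple linear rule: about 2000 ms for the first digit plus a fixed increment for each further digit. The sketch below assumes an increment of 200 ms, chosen because it reproduces all three reported values; the function name and the constants are introduced here for illustration.

```python
FIRST_DIGIT_MS = 2000   # about 2 s for the first digit (from the text)
NEXT_DIGIT_MS = 200     # assumed increment that fits the reported timings


def retrieval_time_ms(number: int) -> int:
    """Estimated time to retrieve a number from LTM, digit by digit."""
    n_digits = len(str(abs(number)))
    return FIRST_DIGIT_MS + NEXT_DIGIT_MS * (n_digits - 1)


for n in (34, 597, 743218):
    print(n, retrieval_time_ms(n))
# 34 2200
# 597 2400
# 743218 3000
```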

The Zeigarnik effect, named after Bluma Zeigarnik, is obtained by instructing subjects to complete some tasks while leaving other tasks unfinished [10]. Afterward, when asked to recall what they did, subjects remember the unfinished tasks better than the finished ones. This suggests that if a task is stopped before it is finished, it remains active elsewhere in memory. Some things are easier to retrieve from LTM than others because their threshold is lower; things with high thresholds need more cues to be retrieved. An unfinished task has a low threshold, so it is easily activated and its activation spreads easily. Activation spreads along the network until it reaches the location where the information is stored, and the information is then retrieved.

Many psychologists agree that information in sensory memory and short-term memory fades quickly and passively, but few agree that the same simple decay mechanism operates in LTM, because decay cannot easily explain why some materials are forgotten faster than others. Is forgetting related to how strongly the material was originally learned? Is forgetting affected by what happens between learning and remembering? Many psychologists in this area believe that information in LTM is lost because of interference. This is a passive view. Other views treat forgetting as an active process, as a supplement to or substitute for interference. Freud held that forgetting results from repression: material that is extremely painful or threatening to the mind is difficult to remember. Another standpoint is the “productive forgetting” proposed by Bartlett: if you cannot retrieve an accurate memory, you construct something resembling it and use that to approach the real memory. The characteristics of the three kinds of memory systems are provided in Table 8.1.

Table 8.1

The human memory system is very much like that of a computer. A computer’s memory hierarchy consists of cache, main memory, and auxiliary memory, whose speed runs from fast to slow and whose capacity runs from small to large. Together with a good control and scheduling algorithm and a reasonable cost, these layers compose an acceptable memory system.

8.3 Long-term memory

Information maintained for more than a minute is referred to as LTM. The capacity of LTM is the greatest among all memory systems. Experiments are needed to determine the capacity, storage, retrieval, and duration of LTM. Measurements of how long something can be remembered give no definite result. A memory does not last long if attention is unstable; if accompanied by repetition, however, it can be retained for a long time. The capacity of LTM is effectively unlimited. About 8 s are needed to store one chunk. Before being retrieved and applied, information stored in LTM must be transferred into short-term memory.

LTM can be divided into procedural memory and declarative memory. Procedural memory holds skills for operating on things and mainly consists of perceptual-motor skills and cognitive skills. Declarative memory stores knowledge represented by symbols that reflect the essence of things. Procedural memory and declarative memory are alike in that both reflect a person’s experience and both show how current action is influenced by previous experience and action.

The differences between procedural memory and declarative memory are as follows:

- 1. Procedural memory has only one form of representation, which has to be studied through skilled performance. Declarative information can be represented in various ways, all completely different from action.

- 2. With respect to truth, a skilled procedure is neither true nor false. Only knowledge about the world and about our relationship to it can be true or false.

- 3. The two kinds of information are learned differently. Procedural information must be acquired through a certain amount of practice, whereas declarative information may be acquired from a single exposure.

- 4. Skilled action runs automatically, but the reproduction of declarative information requires attention.

Declarative memory can be further divided into episodic memory and semantic memory. Episodic memory is a person’s personal, autobiographical memory. Semantic memory stores the essential knowledge of the things that an individual understands, in other words, knowledge of the world. Table 8.2 shows the differences between these two kinds of memory.

Table 8.2

LTM is divided into two systems in terms of information encoding: the image system and the verbal system. The image system stores information about specific objects and events in image code. The verbal system stores verbal information in verbal code. The theory is called dual coding because the two systems are independent of, yet related to, each other.

8.3.1 Semantic memory

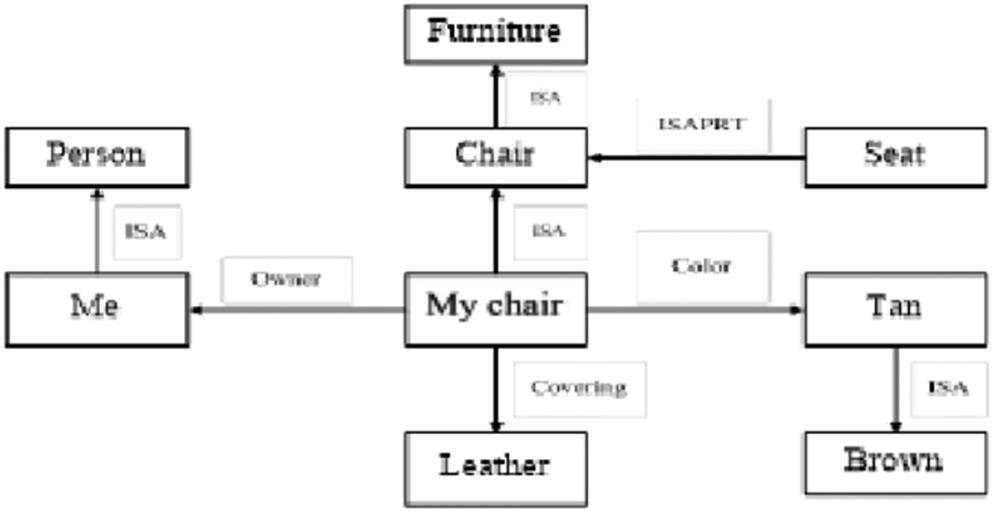

The semantic memory model was proposed by M. Ross Quillian in 1968 [11]. It was the first model of semantic memory in cognitive psychology. Anderson and Bower, Rumelhart, and Norman also proposed various memory models based on the semantic network. In this model, the basic unit of semantic memory is the concept. Each concept has certain characteristics, which are themselves concepts but are used to explain other concepts. In a semantic network, information is represented as a set of nodes connected by labeled arcs, where each label names the relationship between the nodes it joins. Fig. 8.4 is a typical semantic network. The is-a link represents the hierarchical relationship between concept nodes and links a node representing a specific object to the relevant concept. The is-part link connects the concepts of whole and part. For example, in Fig. 8.4, Chair is a part of Seat.

8.3.1.1 Hierarchical network model

The hierarchical network model of semantic memory was proposed by Quillian et al. In this model, the primary unit of LTM is the concept. Concepts are related to one another and form a hierarchical structure. As shown in Fig. 8.5, each block is a node representing a concept, and each line with an arrowhead expresses the subordination of one concept to another. For instance, the higher-level concept of bird is animal, while one of its lower-level concepts is ostrich. Other lines connect a concept to its attributes and so designate the attributes at each level; for example, has wings, can fly, and has feathers are features of bird. Nodes represent the concepts at each level, and concepts and features are connected by lines to form a complicated hierarchical network in which each line is an association with a definite meaning. The model stores with each concept only the features specific to that level, while the attributes common to the concepts of one level are stored at the next higher level.

Fig. 8.5 is a fragment of a concept structure from the literature [12]. Concepts such as “canary” and “shark,” which are located at the bottom, are called level-0 concepts. Concepts such as “bird” and “fish” are called level-1 concepts. The concept “animal” is called a level-2 concept. The higher the level is, the more abstract the concepts are, and correspondingly the longer the processing time is. Each level stores only the characteristics unique to the concepts at that level. Thus the meaning of a concept is determined by its own characteristics together with those of the concepts it is associated with.
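The storage principle of the hierarchical network model can be sketched in a few lines of Python. The fragment below is only an illustration: the dictionary NETWORK and the function levels_to_property are hypothetical names, and apart from the three bird features mentioned in the text the property lists are assumed for the example. The number of is-a links that must be climbed serves as a rough stand-in for processing time.

```python
# Hypothetical fragment of a hierarchical network. Each concept stores only
# its own properties; shared properties live at a higher level.
NETWORK = {
    "animal": {"isa": None, "props": {"has skin", "can move", "eats", "breathes"}},
    "bird": {"isa": "animal", "props": {"has wings", "can fly", "has feathers"}},
    "fish": {"isa": "animal", "props": {"has fins", "can swim", "has gills"}},
    "canary": {"isa": "bird", "props": {"can sing", "is yellow"}},
    "shark": {"isa": "fish", "props": {"can bite", "is dangerous"}},
}


def levels_to_property(concept, prop):
    """Return how many is-a links are climbed before prop is found, or None."""
    steps = 0
    node = concept
    while node is not None:
        if prop in NETWORK[node]["props"]:
            return steps
        node = NETWORK[node]["isa"]  # move up to the superordinate concept
        steps += 1
    return None  # the property is not stored anywhere on the path


if __name__ == "__main__":
    for prop in ("can sing", "can fly", "has skin"):
        print(prop, levels_to_property("canary", prop))
```

In this sketch, verifying that a canary can sing needs no climbing, whereas verifying that a canary has skin requires two steps up the hierarchy, which mirrors the claim that more abstract levels take longer to process.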

8.3.1.2 Spreading activation model

The spreading activation model was proposed by Collins et al. [13]. It is also a network model. Unlike the hierarchical network model, it organizes concepts by semantic connection or semantic similarity rather than by conceptual hierarchy. Fig. 8.6 shows a fragment of the spreading activation model. The squares are network nodes representing concepts. The length of a line indicates how close the relation is: a shorter line means that the two concepts are closely related and share more features. Likewise, the more lines that connect two nodes through common features, the closer their relationship is.

When a concept is stimulated or processed, the network node for that concept is activated, and the activation spreads outward along the lines. The amount of activation is finite, so if a concept is processed for a long time, the duration of spreading activation increases and a familiarity effect may arise; on the other hand, activation weakens progressively as it spreads. This model is a modification of the hierarchical network model in that it allows the attributes of a concept to lie at the same or at different levels. The length of the lines between concepts accounts for the category size effect and other effects and reflects how activation spreads. The model may be regarded as a more humanized hierarchical network model.
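The spreading process can be illustrated with a minimal sketch. It assumes that the closeness of two concepts (line length in Fig. 8.6) is encoded as a link strength between 0 and 1, and that activation is multiplied by this strength each time it crosses a link, so it weakens with distance. The network LINKS, the function spread, and the threshold value are all hypothetical choices for illustration, not part of the original model description.

```python
from collections import deque

# Hypothetical network: a larger strength stands for a shorter line,
# that is, a closer semantic relation.
LINKS = {
    "fire engine": {"red": 0.8, "truck": 0.7},
    "red": {"fire engine": 0.8, "apple": 0.6, "rose": 0.5},
    "truck": {"fire engine": 0.7, "car": 0.8},
    "apple": {"red": 0.6},
    "rose": {"red": 0.5},
    "car": {"truck": 0.8},
}


def spread(source, initial=1.0, threshold=0.1):
    """Spread activation from source; it decays at every link it crosses."""
    activation = {source: initial}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor, strength in LINKS[node].items():
            passed = activation[node] * strength
            # Keep only the strongest activation reaching a node and stop
            # spreading once the activation falls below the threshold.
            if passed >= threshold and passed > activation.get(neighbor, 0.0):
                activation[neighbor] = passed
                queue.append(neighbor)
    return activation


if __name__ == "__main__":
    result = spread("fire engine")
    for concept in sorted(result, key=result.get, reverse=True):
        print(f"{concept}: {result[concept]:.2f}")
```

Concepts directly linked to the source end up more active than concepts two links away, which is the sense in which closely related concepts prime one another.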

8.3.1.3 Human associative memory

The greatest advantage of the human associative memory (HAM) model is that it can represent semantic memory as well as episodic memory and can process both semantic and nonverbal information. Moreover, the model offers a proper explanation of the practice effect and can simulate it well on a computer. However, it cannot explain the familiarity effect. In HAM, the comparison process is composed of several stages, and the basic unit of semantic memory is the proposition rather than the concept.

A proposition is made up of a small set of associations, and each association combines two concepts. There are four kinds of association. (1) Context–fact association: The context and the fact are combined into an association, in which the fact refers to an event that happened in the past, and the context means the location and exact time of the event. (2) Subject–predicate association: The subject is the principal part of a sentence, and the predicate describes the properties of the subject. (3) Relation–object association: This construction serves as the predicate. The relation is the connection between some particular action of the subject and other things, while the object is the target of the action. (4) Concept–example association: For instance, furniture–desk is a concept–example construction.

A proper combination of these constructions makes up a proposition. The structures and processes of HAM can be described by a propositional tree. When a sentence is received, for example, “The professor asked Bill in the classroom,” it can be described by a propositional tree (see Fig. 8.7). The figure is composed of nodes and labeled arrows. The nodes are represented by lower-case letters and the labels on the arrows by upper-case letters, while the arrows represent the various kinds of association. Node A represents the idea of the proposition, which is composed of an association between context and fact. Node B represents the idea of the context, which can be further subdivided into a location node D and a time node E (past time, for the professor had asked). Node C represents the idea of the fact, and it leads by arrows to a subject node F and a predicate node G, which can in turn be subdivided into a relation node H and an object node I. At the bottom of the propositional tree are the general nodes that represent our ideas of each concept in LTM, such as classroom, in the past, professor, ask, and Bill. They are terminal nodes because they are indivisible. The propositional tree is meaningless without these terminal nodes. The concepts are organized in a propositional tree according to the propositional structure rather than their own properties or semantic distance.

Such an organization has a network quality. LTM can be regarded as a network of propositional trees, which also demonstrates the advantage of the HAM model: it can represent both semantic memory and episodic memory and combine them. A propositional tree can hold all kinds of personal events as long as the episodic information is represented as a proposition, a capability that is absent from the memory models mentioned previously. The HAM propositional structure also allows one proposition to be embedded in another and combined into a more complicated proposition. For example, the two propositions “The professor asked Bill in the classroom” and “it made the examination finish on time” can be combined into a new proposition. In this situation, the two original propositions become the subject and predicate of the new proposition, respectively. This complicated proposition can also be represented by a propositional tree.
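The propositional tree described above can be written down as a small data structure. The sketch below is one possible encoding, not the representation used in HAM itself: the class Node and the function terminals are hypothetical, while the node labels A to I and the terminal concepts follow the description of the example sentence.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    label: str                      # node name used in the text (A to I)
    role: str                       # kind of idea the node stands for
    concept: Optional[str] = None   # filled in only for terminal nodes
    children: List["Node"] = field(default_factory=list)


def terminals(node):
    """Collect the concepts at the terminal nodes, from left to right."""
    if not node.children:
        return [node.concept]
    found = []
    for child in node.children:
        found.extend(terminals(child))
    return found


# "The professor asked Bill in the classroom."
tree = Node("A", "proposition", children=[
    Node("B", "context", children=[
        Node("D", "location", "classroom"),
        Node("E", "time", "past"),
    ]),
    Node("C", "fact", children=[
        Node("F", "subject", "professor"),
        Node("G", "predicate", children=[
            Node("H", "relation", "ask"),
            Node("I", "object", "Bill"),
        ]),
    ]),
])

if __name__ == "__main__":
    print(terminals(tree))
```

Because a child of any node may itself be a whole proposition, the same structure also accommodates the embedding of one proposition in another.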

According to the HAM model, four stages are needed to accomplish the process of information retrieval to answer a question or understand a sentence:

8.3.2 Episodic memory

Episodic memory was proposed by Canadian psychologist Tulving. In his book Elements of Episodic Memory published in 1983, Tulving discussed the principle of episodic memory [14].

The basic unit of episodic memory is the personal act of recall. An act of recall begins with an event or with the subjective reproduction (the remembering experience) of an experience produced by a scene, or with its conversion into another form that retains the information, or with a combination of these. Recall involves many component elements and relations among them. The component elements of episodic memory can be divided into two kinds: observable events and hypothetical constructs. The elements of episodic memory are grouped into encoding and retrieval. Encoding concerns the information about an event experienced in a certain situation at a given time and refers to the process of transforming it into a memory trace. Retrieval mainly concerns the form and technique of retrieval. The elements of episodic memory and their relations are shown in Fig. 8.8.

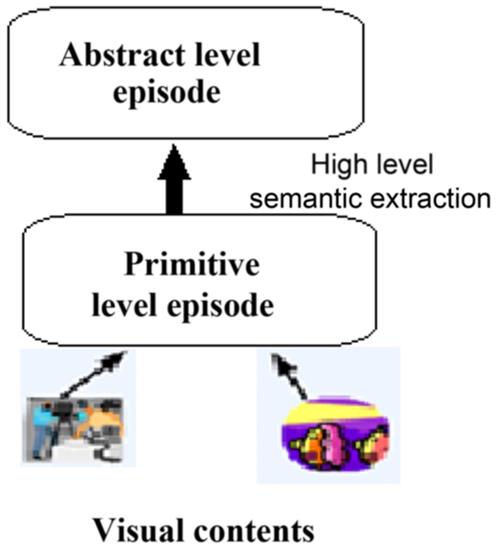

Nuxoll and Laird first introduced episodic memory into Soar so that it can support a variety of cognitive functions of agents [15]. In CAM, plot fragments (episodes) are stored in the basic episodic memory module. A plot fragment is divided into two levels, the logical abstraction level and the original level, as shown in Fig. 8.9. The abstraction level is described by logic symbols. The original level contains the perceptual information describing the objects referred to at the abstraction level. To represent and organize plot fragments effectively, we use dynamic description logic (DDL) for representation at the abstraction level and ontology at the original level. Furthermore, we use an object data graph to describe plot fragments.

Fig. 8.10 depicts the structure of the object data graph for the film Waterloo Bridge, in which the plot is associated with other objects by URI fragments. Fig. 8.10 shows three objects: M2, W2, and the movie Waterloo Bridge. In addition, object W2 is wearing a blue skirt. The film is also associated with the two main characters, M1 and W1, and W1 is wearing a white coat.

In Soar, plot retrieval is modeled on case-based reasoning, which finds solutions to problems on the basis of past experience [16]. Following this idea, we build a case-based system that retrieves past plots according to clues (cues). To simplify the system, we restrict a plot to be a sequence of changes. The plot retrieval model then aims to find the event most relevant to the clue. Because the abstraction level of a plot represents the content of the event accurately, matching between clues and plots is carried out at the abstraction level. In CAM, the change sequence is formally defined as a possible world sequence, and whether a plot implies a clue is decided by the DDL tableau inference algorithm [17,18]. A possible world sequence is a directed acyclic graph Seq = (Wp, Ep), where each wi ∈ Wp represents a possible world, and each edge ei = (wi, wj) indicates that the action αi is executed in wi and consequently causes the transition from world wi to world wj.

With DDL, a plot ep implies a clue c if the formula ep→c is valid. Therefore, checking the implication relation between a plot and a clue reduces to checking whether ep→c is valid. The inference procedure is described in Algorithm 8.1.

Algorithm 8.1 CueMatch(e,c)

Input: episode e, cue c

Output: whether cue c matches episode e, that is, whether e → c holds

(1) if length(e) < length(c) then

(2) return false;

(3) end

(4) ne := first_node(e);

(5) nc := first_node(c);

(6) if MatchPossibleWorld(ne, nc) then

(7) αe := Null;

(8) αc := action(nc);

(9) if ¬(Pre(αe) → Pre(αc)) is unsatisfiable according to the DDL tableau algorithm then

(10) ntmp := ne;

(11) while next_node(ntmp) ≠ Null do

(12) αe := (αe; action(ntmp));

(13) if MatchAction(αe, αc) then

(14) Let sube be the subsequence obtained by removing αe from e;

(15) Let subc be the subsequence obtained by removing αc from c;

(16) if CueMatch(sube, subc) then

(17) return true;

(18) end

(19) end

(20) ntmp := next_node(ntmp);

(21) end

(22) end

(23) end

(24) Remove ne from e;

(25) return CueMatch(e, c);

Function: MatchPossibleWorld(wi,wj)

Input: possible worlds wi, wj

Output: whether wi matches wj, that is, whether Conj(wi) → Conj(wj) holds

(1) fw:=Conj(wi)→ Conj(wj);

(2) if ¬fw is unsatisfiable according to DDL tableau algorithm then

(3) return true;

(4) else

(5) return false;

(6) end

Function: MatchAction(αi,αj)

Input: actions αi, αj

Output: whether αi matches αj, that is, whether the preconditions and effects of αi imply those of αj

(1) if αi==null or αj==null then

(2) return false

(3) end

(4) fpre:=Conj(Pre(αi)) → Conj(Pre(αj));

(5) feff:=Conj(Eff(αi)) → Conj(Eff(αj));

(6) if ¬fpre and ¬feff are unsatisfiable according to the DDL tableau algorithm then

(7) return true;

(8) else

(9) return false;

(10) end

In Algorithm 8.1, the function length returns the length of a possible world sequence, which is the number of nodes contained in the sequence. The function action(n) returns the action executed at node n of a possible world sequence. The function next_node(n) returns the node n′ that follows n in the sequence, that is, the node reached from n by executing action(n). In step 12 of Algorithm 8.1, the sequence constructor “;” produces a composite action by connecting the actions αe and action(ntmp). To simplify the algorithm, we assume that (Null; α) == α. MatchPossibleWorld() and MatchAction() are auxiliary functions of Algorithm 8.1. We use the DDL tableau algorithm to decide whether the negation of a formula is satisfiable.
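The two auxiliary functions can be illustrated with a much simpler stand-in for the DDL tableau algorithm. The sketch below assumes that a possible world, and the preconditions and effects of an action, are consistent sets of literals written as strings, so that Conj(A) → Conj(B) is valid exactly when B is a subset of A. The class Action and the function implies are hypothetical names introduced here; a real implementation would call a DDL reasoner instead of comparing sets.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    pre: frozenset   # literals that must hold before the action
    eff: frozenset   # literals that hold after the action


def implies(premises, conclusions):
    """Assumed stand-in for the tableau check of Conj(premises) -> Conj(conclusions).

    For consistent sets of literals the implication is valid exactly when
    every conclusion already appears among the premises.
    """
    return set(conclusions) <= set(premises)


def match_possible_world(wi, wj):
    """Counterpart of MatchPossibleWorld: does world wi imply world wj?"""
    return implies(wi, wj)


def match_action(ai, aj):
    """Counterpart of MatchAction: compare preconditions and effects."""
    if ai is None or aj is None:
        return False
    return implies(ai.pre, aj.pre) and implies(ai.eff, aj.eff)


if __name__ == "__main__":
    episode_world = {"at(W1, bridge)", "wears(W1, white_coat)"}
    cue_world = {"at(W1, bridge)"}
    print(match_possible_world(episode_world, cue_world))  # episode is more specific
    print(match_possible_world(cue_world, episode_world))  # cue lacks a literal
```

The example worlds are invented for illustration. They show the asymmetry of the match: an episode may contain more detail than the cue, but not less.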

8.3.3 Procedural memory

Procedural memory refers to the memory of how to do things, including perceptual skills, cognitive skills, and motor skills. Procedural memory is a habitual memory, also known as skill memory; it is often difficult to describe such memories in language, and several attempts are often needed to gradually get the skill right.

Procedural memory is the memory of procedural knowledge, that is, “know-how”: knowledge and understanding of skills and of how to do things. Through extensive practice, individuals reach a degree of automation and refinement at which they can do two things at the same time without effort. For example, a skilled driver can take a phone call while the driving routine remains natural and smooth, that is, the call does not affect driving.

Procedural knowledge is knowledge whose existence can be inferred only indirectly, through some form of task performance. It includes heuristics, methods, plans, practices, procedures, routines, strategies, techniques, and tricks that explain “what” and “how.” It is knowledge about how to do something, or about the link between stimulus and response, as well as about behavioral routines and the learning of basic skills. For example, people know how to drive a car, how to use an ATM, and how to search the Internet for target information.

Procedural knowledge is represented in ACT-R, Soar, and other systems by production systems, that is, by “condition–action” rules. An action is carried out when certain conditions are met. A production couples a condition with an action, so that the action is produced whenever the condition holds; it consists of a condition part (“if”) and an action part (“then”).
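A condition–action rule system of this kind can be sketched very compactly. The code below is a toy illustration under strong simplifying assumptions: working memory is a set of strings, a condition matches when all of its elements are present, and the first applicable rule fires in each cycle. The rule set RULES and the function run are hypothetical; ACT-R and Soar use much richer pattern matching and conflict resolution.

```python
def run(rules, memory, max_cycles=10):
    """Fire condition-action rules until no rule adds anything new."""
    memory = set(memory)
    fired = []
    for _ in range(max_cycles):
        for name, condition, action in rules:
            # A rule applies if its condition holds and its action is new.
            if condition <= memory and not action <= memory:
                memory |= action
                fired.append(name)
                break
        else:
            break  # no rule fired in this cycle, so the system halts
    return memory, fired


# Hypothetical productions for withdrawing cash at an ATM.
RULES = [
    ("insert-card", frozenset({"at ATM", "has card"}), frozenset({"card inserted"})),
    ("enter-pin", frozenset({"card inserted"}), frozenset({"pin entered"})),
    ("withdraw", frozenset({"pin entered"}), frozenset({"cash dispensed"})),
]

if __name__ == "__main__":
    final_memory, trace = run(RULES, {"at ATM", "has card"})
    print(trace)
    print(sorted(final_memory))
```

Each rule fires only once its “if” part is satisfied by the contents of memory, so the order of the actions emerges from the conditions rather than from the order in which the rules are written.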

We adopt the DDL for describing the procedural knowledge of CAM. To be compatible with the action formalism proposed by Baader et al. [19], we extend the atomic action definition of DDL to support occlusion and conditional postconditions. With respect to a TBox T, the extended atomic action of DDL is a triple α≡ (P, O, E) [20], where:

- α ∈ NA.

- P is a finite set of ABox assertions for describing preconditions.

- O is a finite set of occlusions, where each occlusion is of the form A(p) or R(p, q), with A a primitive concept name, R a role name, and p, q ∈ NI.

- E is a finite set of conditional postconditions, where each postcondition is of the form φ/ψ, with φ an ABox assertion and ψ a primitive literal.

In this definition, the set of preconditions describes the conditions under which the action is executable. For each postcondition φ/ψ, if φ holds before the execution of the action, then the formula ψ will hold after the execution. Occlusions describe the formulas that cannot be determined during the execution of the action.

As an example, an action named BuyBookNotified(Tom, KingLear) is specified as follows:

BuyBookNotified(Tom, KingLear) ≡

({customer(Tom), book(KingLear)}, { },

{instore(KingLear)/bought(Tom, KingLear),

instore(KingLear)/¬instore(KingLear),

instore(KingLear)/notify(Tom, NotifyOrderSucceed),

¬instore(KingLear)/notify(Tom, NotifyBookOutOfStock)})

According to this description, if the book KingLear is at the store before the execution of the action, then after the execution of the action, the formulas bought(Tom, KingLear), ¬instore(KingLear) and notify(Tom, NotifyOrderSucceed) will hold; otherwise the formula notify(Tom, NotifyBookOutOfStock) will be true, which indicates that Tom is notified that the book is out of stock.
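The effect of executing such an action can be traced with a small sketch. It makes several simplifying assumptions that are not part of DDL: a state is a set of ground atoms under a closed-world reading (an atom that is absent is treated as false), a literal is a pair of an atom and a polarity, and occlusions are ignored because the example has none. The functions holds and execute and the constant BUY_BOOK are hypothetical names.

```python
def holds(literal, state):
    """A literal is (atom, polarity); closed-world reading is an assumption."""
    atom, positive = literal
    return (atom in state) == positive


def execute(action, state):
    """Apply an action (P, O, E) to a state; return None if P does not hold."""
    preconditions, _occlusions, postconditions = action
    if not all(holds(p, state) for p in preconditions):
        return None
    new_state = set(state)
    for condition, (atom, positive) in postconditions:
        # Conditions are evaluated in the state before execution.
        if holds(condition, state):
            if positive:
                new_state.add(atom)
            else:
                new_state.discard(atom)
    return new_state


IN_STORE = ("instore(KingLear)", True)
NOT_IN_STORE = ("instore(KingLear)", False)

BUY_BOOK = (
    [("customer(Tom)", True), ("book(KingLear)", True)],   # P
    [],                                                     # O
    [                                                       # E
        (IN_STORE, ("bought(Tom, KingLear)", True)),
        (IN_STORE, ("instore(KingLear)", False)),
        (IN_STORE, ("notify(Tom, NotifyOrderSucceed)", True)),
        (NOT_IN_STORE, ("notify(Tom, NotifyBookOutOfStock)", True)),
    ],
)

if __name__ == "__main__":
    before = {"customer(Tom)", "book(KingLear)", "instore(KingLear)"}
    print(sorted(execute(BUY_BOOK, before)))
```

Running the action a second time on the resulting state triggers only the last postcondition, which corresponds to the out-of-stock notification.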

The difference between declarative memory and procedural memory is that declarative memory works for the description of things, such as a “self-introduction,” and is an explicit memory, while procedural memory works for technical actions, such as “cycling,” and is an implicit memory. The brain structures involved in procedural memory are the cerebellum and the striatum, and the most essential part is the deep cerebellar nuclei.

8.3.4 Information retrieval from long-term memory

There are two ways of information retrieval from long-term memory (LTM): recognition and recall.

8.3.4.1 Recognition

Recognition is the feeling of knowing that someone or something has been perceived, thought about, or experienced before. There is no essential difference between the recognition and recall processes, though recognition is easier than recall. In individual development, recognition appears before recall. Infants are able to recognize within the first half year after birth, whereas they do not acquire the ability to recall until a later stage. Shimizu, a Japanese scholar, used pictures to investigate the development of recognition and recall among children. The results indicated that recognition performance was better than recall performance for children in nursery or primary school. This recognition advantage diminished with age, however, and children in the fifth grade and above showed similar recognition and recall performance. There are two kinds of recognition, perceptual and cognitive, which take a compact and an open form, respectively. Recognition at the perceptual level always happens directly, in the compact form. For instance, you can recognize a familiar band from just a few melodies. In contrast, recognition at the cognitive level depends on special hints and involves other cognitive activities, such as recall, comparison, and deduction. The recognition process can produce mistakes, such as failing to recognize a familiar item or recognizing an item wrongly. Various causes can be responsible for such mistakes, such as incorrectly received information, failure to distinguish similar objects, or nervousness and sickness.

Several aspects determine whether the recognition process is fast and correct. The most important factors are as follows:

- 1. Quantity and property of the material: Similar materials or items can be confused with one another and make recognition difficult. The quantity of items may also affect recognition. It has been found that 38% additional time is needed if a new English word is introduced during the recognition process.

- 2. Retention interval: Recognition depends on the retention interval. The longer the interval is, the worse the recognition will be.

- 3. Initiative of the cognitive process: Active cognition helps in comparison, deduction, and memory enhancement when recognizing unfamiliar material. For instance, it might be difficult to immediately recognize an old friend whom you have not met for a long time. Recalling scenes from your shared past then makes the recognition easier.

- 4. Personal expectation: Besides retrieval stimuli information, the subject’s experience, mental set, and expectations also affect recognition.

- 5. Personality: Witkin and his colleagues divided people into two kinds: field-independent and field-dependent. It was demonstrated that field-independent people are less affected by surrounding circumstances than field-dependent people. The two kinds of people showed significant differences in recognizing embedded figures, namely, recognizing a simple figure within a complex picture. Generally speaking, field-independent people have better recognition than field-dependent people.

8.3.4.2 Recall

Recall refers to the process by which past things or definitions reappear in the human brain. For instance, people recall what they have learned according to the content of an examination, and the mood of a festival leads people to recall relatives or close friends who are far away.

Different approaches used in the recall process lead to different memory output.

- 1. Based on associations: Things in the outside world are not isolated but depend on one another. Likewise, experiences and memories kept in the human brain are not independent; they are connected with one another. The appearance of one thing in the mind can be a cue for recalling other related things. A cloudy day might remind us of rain, and a familiar name might remind us of the voice and appearance of a friend. Such mental activity is called association, which means that recalling one thing induces the recall of another. Association has certain characteristics. Proximity is one such characteristic: Elements that are temporally or spatially close to one another tend to be associated. For instance, the phrase “Summer Palace” might remind people of “Kunming Lake,” “Wanshou Mountain,” or “The 17-Arch Bridge.” A word can be associated with its pronunciation and meaning, and the coming of New Year’s Day might remind us of the Spring Festival. Similarity is another: Items that are similar in form or property might be associated. For example, the mention of “Spring” leads to recalling the revival and burgeoning of life. Contrast is a third: Opposite characteristics of items can form associations. People might think of black from white, or short from tall. Cause and effect is a fourth: A cloudy day might be associated with rain, and snow and ice might be associated with cold.

- 2. Mental set and interest: These can influence the recall process directly. Because of differences in the prepared state, mental set may have a great effect on recall. Given the same stimuli, different people have different memories and associations. Additionally, interest and emotional state might favor some specific memories.

- 3. Double retrieval: Finding useful information is an important strategy. During the recall process, retrieving both imagery and semantics improves completeness and accuracy. For instance, when answering the question, “How many windows are there in your home?” forming a mental image of the windows and retrieving their number together help recall. Recalling the key points of the material also facilitates retrieval. When asked what letter comes after “B” in the alphabet, most people know it is “C.” But it is more difficult if the question is, “What letter comes after ‘J’ in the alphabet?” Some people recite the alphabet from “A” and then find that “K” comes after “J,” while most people start from “G” or “H,” because “G” has a distinctive image in the alphabet and thus can serve as a key point for memory.

- 4. Hint and recognition: When recalling unfamiliar and complex material, the presentation of a context hint is helpful. Another useful approach is the presentation of hints related to the memory content.

- 5. Disturbance: A common problem during the recall process is difficulty of retrieval resulting from interference. For instance, in an examination you may fail to recall the answer to some item because of tension, although you in fact know the answer. Such a phenomenon is called the “tip of the tongue” effect: you cannot say the answer even though you know it. One way to overcome this effect is to give up the attempt and try again later, when the memory may come to mind.

8.4 Working memory

In 1974 A. D. Baddeley and Hitch put forward the concept of working memory based on experiments that simulated short-term memory deficits [21]. In the traditional Baddeley model, working memory is composed of a central executive system and two subsidiary systems, the phonological loop and the visuospatial sketchpad [21]. The phonological loop is responsible for storing and controlling sound-based information. It consists of a phonological store and an articulatory control process; the latter can hold information through subvocal articulation to prevent the decay of spoken representations and can also convert written language into a spoken code. The visuospatial sketchpad is mainly responsible for storing and processing information in visual or spatial form and possibly comprises two subsystems, one for vision and one for space. The central executive system is the core of working memory and is responsible for the subsystems and their connection with LTM, the coordination of attention resources, the choice of strategies, planning, and so on. A large body of behavioral research and much neuropsychological evidence have shown the existence of the three components, and the understanding of the structure and function of working memory is constantly being enriched and refined.

8.4.1 Working memory model

Working memory models can be roughly divided into two broad classes. One is the European tradition, whose representative is the multicomponent model put forward by Baddeley, which divides working memory into several subsidiary systems with independent resources and stresses modality-specific processing and storage. The other is the North American tradition, represented by the ACT-R model, which emphasizes the unity of working memory, general resource allocation, and activation. Research in the former tradition mainly focuses on the storage components of working memory, that is, the phonological loop and the visuospatial sketchpad. Baddeley pointed out that short-term storage, being easier to operationalize, should be clarified first, before more complicated questions of processing are answered, whereas the North American tradition emphasizes the role of working memory in complicated cognitive tasks, such as reading and speech comprehension. The North American working memory model thus resembles the general central executive system of the European model. The two lines of research are now producing more and more findings and are influencing each other in theory construction. For example, the concept of the episodic buffer resembles the propositional representation in Barnard’s interacting cognitive model. The two classes have thus already shown a trend toward integration and unification.

In recent years Baddeley developed his working memory theory further by adding a new subsystem, the episodic buffer, to the traditional model [21]. Baddeley suggested that the traditional model does not address how different kinds of information are combined and how the combined results are maintained, so it cannot explain why subjects can recall only about 5 words from random word lists but about 16 words from prose. The episodic buffer is a separate storage system that adopts a multimodal code. It offers a platform on which information from the phonological loop, the visuospatial sketchpad, and LTM is combined temporarily, and it integrates information from multiple sources into an intact and consistent form through the central executive system. The episodic buffer, phonological loop, and visuospatial sketchpad are all controlled by the central executive system, which, through the integration of different kinds of information, maintains and supports the subsequent integration by the episodic buffer. The episodic buffer is independent of LTM, but it is a necessary stage in long-term episodic learning. The episodic buffer can explain phenomena such as the interference effect in serial position recall, the mutual influence between speech and visuospatial processing, memory chunking, and unified conscious experience. The four-component model of working memory, including the newly added episodic buffer, is shown in Fig. 8.11 [21].

The ACT-R model of Lovett and colleagues can explain a large amount of data on individual differences [22]. This model regards working memory resources as a kind of attentional activation, named source activation. Source activation spreads from the current focus of attention to the memory nodes related to the current task and keeps those nodes accessible. ACT-R is a production system that processes information by firing production rules according to activation. It emphasizes that processing depends on goal information: The stronger the current goal is, the higher the activation level of relevant information will be, and the more rapid and accurate information processing is. This model suggests that individual differences in working memory capacity reflect the total amount of source activation, expressed by the parameter W. Source activation is domain-general and unitary: The source activation for phonological and for visuospatial information is based on the same mechanism.

The obvious deficiency of this model is that it explains individual differences in complicated cognitive tasks with only one parameter and neglects that individual differences in working memory might also be related to processing speed, cognitive strategy, and prior knowledge and skill. However, because the ACT-R model emphasizes the unity of working memory in order to elucidate its common structure in detail, it can remedy the deficiency of models that emphasize the diversity of working memory.
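The role of the parameter W can be made concrete with the activation equation commonly given in the ACT-R literature, A_i = B_i + Σ_j W_j S_ji, where B_i is the base-level activation of memory node i, S_ji is the strength of association from goal element j to node i, and each of the n goal elements receives an equal share W_j = W/n of the source activation. The equation is not stated in the text above, and the function name and numerical values in the sketch are assumptions chosen only for illustration.

```python
def activation(base_level, strengths, W=1.0):
    """Activation of one memory node: A_i = B_i + sum_j (W / n) * S_ji.

    base_level -- B_i, the base-level activation of the node
    strengths  -- S_ji for each of the n elements in the current goal
    W          -- total source activation (the individual-difference parameter)
    """
    n = len(strengths)
    if n == 0:
        return base_level  # nothing in the focus of attention
    return base_level + sum((W / n) * s for s in strengths)


if __name__ == "__main__":
    strengths = [2.0, 1.0]  # assumed associative strengths
    for W in (0.5, 1.0, 1.5):
        print(W, activation(0.5, strengths, W))
```

With the other quantities held fixed, a larger W raises the activation of every task-relevant node, which is how the model expresses a larger working memory capacity. Dividing W among the goal elements also means that a more complex goal leaves less activation for each element.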

8.4.2 Working memory and reasoning

Working memory is closely related to reasoning and has two functions: maintaining information and forming preliminary mental representations. The representations of the central executive system are more abstract than those of the two subsystems. Working memory is the core of reasoning, and reasoning ability is the sum of working memory abilities.

Based on the concept of the working memory system, the dual-task paradigm is adopted to study the relationship between the components of working memory and reasoning. In the dual-task paradigm, two tasks are carried out at the same time: One is the reasoning task; the other is a secondary task used to interfere with a component of working memory. Tasks that interfere with the central executive system require subjects to generate letters or digits at random, or use sounds to attract subjects’ attention and ask them to make corresponding responses. The task that interferes with the phonological loop asks subjects to articulate continuously, for example “the, the …,” or to count numbers in a certain order, such as 1, 3, 6, 8. The task that interferes with the visuospatial sketchpad is a continuous spatial activity, for example typing blind in a certain order. Each secondary task must be performed at a guaranteed speed and accuracy while the reasoning task is carried out. The principle of the dual-task paradigm is that two tasks compete for the same limited resources at the same time. For example, interference with the phonological loop makes the reasoning task and the secondary task draw on the limited resources of the phonological loop simultaneously. If the accuracy of reasoning decreases and the response time increases under this condition, we can conclude that the phonological loop is involved in the reasoning process. A series of studies indicates that secondary tasks can effectively interfere with the components of working memory.

K. J. Gilhooly studied the relationship between reasoning and working memory. In the first experiment, it was found that the way the sentences were presented influenced the accuracy of reasoning: Accuracy was higher with visual presentation than with auditory presentation, because the memory load was lower with visual presentation. In the second experiment, it was found that a visually presented deductive reasoning task was most easily impaired by a secondary task (memory load) that interfered with the central executive system, next by one that interfered with the phonological loop, and least by one that interfered with the visuospatial processing system. This indicates that representations in deductive reasoning take a more abstract form, which accords with the mental model theory of reasoning and implies that the central executive system is involved in reasoning activities. The phonological loop probably played a role too, because the concurrent phonological activity slowed down during reasoning, indicating that the two tasks may compete for limited resources. In this experiment, Gilhooly and his colleagues found that subjects may adopt a range of strategies in deductive reasoning and that the strategy adopted can be inferred from the reasoning results. Different secondary tasks force subjects to adopt different strategies, so their memory load differs too. The converse also holds: Increasing the memory load changes the strategy, because changing the strategy reduces the memory load.

In 1998, Gilhooly and his colleagues used the dual-task paradigm to explore the relationship between the components of working memory and deductive reasoning when sentences were presented visually and serially. Sentences presented serially require more storage space than those presented simultaneously. The results showed that the visuospatial processing system and the phonological loop both participated in deductive reasoning and that the central executive still played an important role. The conclusion can be drawn that the central executive participates in deductive reasoning whether presentation is serial or simultaneous. When memory load increases, the visuospatial processing system and the phonological loop may also participate in the reasoning process.

8.4.3 Neural mechanism of working memory

Brain science research over roughly the past decade has found that two kinds of working memory are involved in the thinking process: One stores speech material (concepts) in a speech code; the other stores visual or spatial material (imagery) in a figural code. Further research indicates that not only do concepts and imagery have their own working memory, but imagery itself has two kinds of working memory. There are two kinds of imagery of things: One represents the basic attributes of things and is used for recognizing them; it is generally called the attribute image or object image. The other reflects the spatial and structural relationships of things (related to visual localization); it is generally called the spatial image or relation image. The spatial image does not include content information about the object, only the characteristic information needed to define the spatial position and structural relations of objects. In this way, there are three kinds of working memory:

- 1. working memory for storing speech material (abbreviated as speech working memory): suited to temporal, logical thinking

- 2. working memory for storing the object image (attribute image) (abbreviated as object working memory): suited to spatial structural thinking that takes the object image (attribute image) as its processing target, usually called idiographic thinking

- 3. working memory for storing the spatial image (relation image) (abbreviated as spatial working memory): suited to spatial structural thinking that takes the spatial image (relation image) as its processing target, usually called intuitive thinking

Contemporary neuroscientific research shows that these three kinds of working memory and the corresponding thinking mechanisms each have corresponding areas of the cerebral cortex, though the localization of some kinds of working memory is not yet precise. Drawing on recent developments in brain science, S. E. Blumstein at Brown University has pointed out [23] that the speech function is not localized in a narrow area (according to the traditional view, the speech function involves only Broca’s area and Wernicke’s area of the left hemisphere) but is widely distributed in the areas around the lateral fissure of the left hemisphere and extends toward the anterior and posterior regions of the frontal lobe, including Broca’s area, the inferior frontal lobe close to the face motor cortex, and the left precentral gyrus (excluding the frontal and occipital poles). Damage to Broca’s area impairs speech expression, and damage to Wernicke’s area impairs speech comprehension. But the brain mechanisms related to speech expression and comprehension are not limited to these two areas. The working memory used for maintaining speech material temporarily is generally regarded as related to the left prefrontal lobe, but its specific position has not yet been determined precisely.

Compared with speech working memory, the localization of object working memory and spatial working memory is much more precise. In 1993, J. Jonides and his colleagues at the University of Michigan investigated the object image and the spatial image with positron emission tomography (PET) and clarified some aspects of their localization and mechanism [24]. PET detects pairs of gamma rays emitted indirectly by a positron-emitting radionuclide (tracer), which is introduced into the body on a biologically active molecule. Images of tracer concentration in three-dimensional space within the body are then reconstructed by computer analysis. Because of its accurate localization and noninvasiveness, this technique is suitable for studies with human subjects.

8.5 Implicit memory

The psychological study of memory has proceeded along two lines: One is traditional research, which concentrates on explicit, conscious memory; the other is research on implicit memory, which is the focus and latest trend of present memory studies.

In the 1960s Warrington and Weiskrantz found that some amnesia patients could not consciously recall a task they had learned but showed facilitated memory performance on an implicit memory test. This phenomenon was called the priming effect by Cofer. Many later studies found that the priming effect is ubiquitous in normal subjects. It is a kind of automatic, unconscious memory phenomenon. The priming effect was called implicit memory by P. Graf and D. L. Schacter [25], whereas the traditional, conscious memory phenomenon was called explicit memory.

In 1987 Schacter pointed out that implicit memory is a type of memory in which previous experiences benefit the performance of a task without conscious awareness of those experiences. In 2000 K. B. McDermott defined implicit memory as “manifestations of memory that occurred in the absence of intentions to recollect” [26]. In recent years, the explanation and modeling of implicit memory have improved greatly. Most notably, earlier models of implicit memory were descriptive and qualitative, and their value depended on how well their qualitative predictions agreed with experimental results, whereas recent models are computational, so the fit between model and experimental data can be quantified. Here we introduce two quantitative models of implicit memory: REMI and ROUSE.

The full name of REMI is Retrieving Effectively from Memory, Implicit. The model supposes that people represent studied items as vectors of characteristic values and that two kinds of characteristics are represented: content information and environmental information. In a perceptual recognition task without a priming item, the environmental information of the goal item is represented in the same way as that of the interference item. People’s responses therefore depend on the content characteristics, and their decision is optimized according to Bayesian inference. REMI further supposes that this optimal inference is based on the diagnostic characteristics, that is, the characteristics on which the alternative items differ. Each alternative is first evaluated separately to determine which of its diagnostic characteristics agree with the perceived content; the alternative with more matching characteristics wins and becomes the perceptual recognition response.

When a priming item is added, however, the primed item carries a representation of environmental information in addition to its representation of content information (provided the primed item is one of the alternatives in the recognition task). This additional environmental information can match the environmental information of the test condition, so the number of matching diagnostic characteristics for the primed item increases, and the recognition response is biased toward the primed item.

REMI can predict how long-term priming changes with the degree of similarity between the goal item and the interference item. The model predicts that the less similar the items are, the more diagnostic characteristics there are. As a result, the difference between the two alternatives in the number of successfully matched diagnostic characteristics has a larger possible range. If the abscissa is the difference in matched diagnostic characteristics and the ordinate is its frequency, then high-similarity items produce a tall, narrow distribution, and low-similarity items produce a flat distribution. REMI therefore predicts that similar items are more strongly influenced by priming, and this prediction has been confirmed by experimental results.
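The matching step and the similarity prediction can be illustrated with a deliberately simplified counting sketch in Python. It is not the published REMI model: the Bayesian decision is replaced by a plain comparison of match counts, and the names `diagnostic_positions`, `choose`, and `prime_bonus` (the extra matches contributed by environmental information) are assumptions introduced only for this example.

```python
def diagnostic_positions(goal, interference):
    """Positions where the two alternatives differ (diagnostic characteristics)."""
    return [i for i, (g, f) in enumerate(zip(goal, interference)) if g != f]


def choose(percept, goal, interference, primed=None, prime_bonus=0):
    """Pick the alternative with more matching diagnostic characteristics.

    primed is None, "goal", or "interference"; prime_bonus is a hypothetical
    count of extra matches supplied by the primed item's environmental
    information.
    """
    diag = diagnostic_positions(goal, interference)
    goal_matches = sum(percept[i] == goal[i] for i in diag)
    interference_matches = sum(percept[i] == interference[i] for i in diag)
    if primed == "goal":
        goal_matches += prime_bonus
    elif primed == "interference":
        interference_matches += prime_bonus
    if goal_matches == interference_matches:
        return "tie"
    return "goal" if goal_matches > interference_matches else "interference"


# Illustrative vectors (made up for the sketch): the percept equals the goal item.
goal_item = [1, 0, 1, 1, 0, 1]
similar_interference = [1, 0, 1, 1, 0, 0]      # differs in one position
dissimilar_interference = [0, 1, 0, 0, 1, 0]   # differs in every position
percept = list(goal_item)

print(choose(percept, goal_item, similar_interference,
             primed="interference", prime_bonus=2))
print(choose(percept, goal_item, dissimilar_interference,
             primed="interference", prime_bonus=2))
```

With similar alternatives there is only one diagnostic characteristic, so the same priming bonus is enough to flip the response toward the primed interference item; with dissimilar alternatives the six diagnostic matches for the goal item outweigh it.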

ROUSE stands for Responding Optimally with Unknown Sources of Evidence; it explains the mechanism of short-term priming. The model includes three parameters: The priming stimulus activates each related diagnostic characteristic of the goal item and the interference item with the α-probability (each characteristic value is 0 or 1, and the initial value is 0); perceptual discrimination of the stimulus activates each characteristic of the goal item with the β-probability; and noise from the neural system and the environment activates the characteristics of the goal item and the interference item with the γ-probability. ROUSE supposes that:

- 1. The activation produced by the priming stimulus is confused with the activation produced by the flashed goal stimulus; this is the so-called evidence of unknown source;

- 2. Given this confusion, the subject subjectively estimates the size of the α-probability in order to respond optimally and, on the basis of this estimate, removes the influence of the priming stimulus from the comparison of diagnostic characteristics.

It follows that if the α-probability is overestimated, subjects tend to choose the nonprimed item; if it is underestimated, they tend to choose the primed item.

What happens, then, when the goal item and the interference item are both primed or both unprimed? According to ROUSE, because the goal item and the interference item are then in equal positions, the subject no longer estimates the α-probability to optimize the judgment but judges directly. In the both-primed condition, the activation that the priming stimulus adds with the α-probability to the goal item and the interference item simultaneously is no different from the noise of the γ-probability, and this strengthened noise lowers the accuracy of goal recognition.
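The qualitative predictions above can be explored with a small Monte Carlo sketch in Python. It follows the three activation probabilities described in the text, but everything else is an assumption made for illustration: the parameter values, the number of characteristics per word, the log-likelihood weighting in `feature_weight` (used here as one way to "remove the influence of the priming stimulus"), and all function names. It should not be read as the published implementation of ROUSE.

```python
import math
import random


def p_active(primed, is_goal, alpha, beta, gamma):
    """Probability that one characteristic ends up active (value 1)."""
    p_off = 1.0 - gamma                 # noise reaches every characteristic
    if primed:
        p_off *= 1.0 - alpha            # activation by the priming stimulus
    if is_goal:
        p_off *= 1.0 - beta             # activation by the flashed goal item
    return 1.0 - p_off


def feature_weight(primed, alpha_est, beta, gamma):
    """Evidence carried by one active characteristic, given the subject's
    estimate alpha_est of the priming probability (assumed weighting)."""
    on = math.log(p_active(primed, True, alpha_est, beta, gamma)
                  / p_active(primed, False, alpha_est, beta, gamma))
    off = math.log(1.0 - beta)
    return on - off


def accuracy(goal_primed, interference_primed, alpha_est, alpha=0.3,
             beta=0.05, gamma=0.02, n_features=20, n_trials=4000, seed=0):
    """Proportion of simulated trials on which the goal item is chosen."""
    rng = random.Random(seed)

    def count_active(primed, is_goal):
        p = p_active(primed, is_goal, alpha, beta, gamma)
        return sum(rng.random() < p for _ in range(n_features))

    w_goal = feature_weight(goal_primed, alpha_est, beta, gamma)
    w_int = feature_weight(interference_primed, alpha_est, beta, gamma)
    correct = 0.0
    for _ in range(n_trials):
        goal_score = count_active(goal_primed, True) * w_goal
        int_score = count_active(interference_primed, False) * w_int
        if goal_score > int_score:
            correct += 1.0
        elif goal_score == int_score:
            correct += 0.5              # a tie counts as a guess
    return correct / n_trials
```

Comparing `accuracy(True, False, a)` with `accuracy(False, True, a)` for an estimate `a` below or above the true `alpha` shows the preference for or against the primed item. When both items are primed they receive the same weight, so the comparison reduces to a direct count of active characteristics, and `accuracy(True, True, a)` can be compared with `accuracy(False, False, a)` to see the cost of the added activation.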

In an experiment by D. E. Huber and his colleagues to test the model, two priming words were presented for 500 ms in the passive priming group; a goal word was then flashed briefly, and subjects completed a forced-choice discrimination between the alternative words. In the active priming group, the priming stimulus remained until subjects had judged whether the priming word referred to a living thing; they then completed the perceptual discrimination procedure. The results were very close to ROUSE’s prediction: More processing of the priming word leads to overestimation of its influence, so subjects prefer the primed item in the passive condition and the nonprimed word in the active condition. In both conditions, accuracy decreased, as predicted, when the goal item and the interference item were both primed.

In the overall direction of implicit memory studies, constructing quantitative models is an important way to investigate implicit memory and, more generally, perception, learning, and memory.

8.6 Forgetting curve

Hermann Ebbinghaus broke through Wundt’s assumption that memory and other advanced mental processes cannot be studied with experimental methods. To control conditions strictly, observe results, and analyze the course of memory quantitatively, he devised the nonsense syllable and the savings method for the study of memory. His work Memory was published in 1885. His experimental results can be plotted as a curve, which is called the forgetting curve [27].

Ebbinghaus’s results show clearly that the course of forgetting is uneven: Within the first hour, information kept in long-term memory is lost rapidly; thereafter, forgetting gradually slows [27]. In Ebbinghaus’s research, even after 31 days there was still a certain amount of savings; the information was still retained to some degree. Ebbinghaus’s original work led to two important discoveries. The first is the description of the forgetting process as the forgetting curve. Later psychologists replaced the nonsense syllables with various materials such as words, sentences, and even stories and found that, whatever the material to be remembered, the trend of the forgetting curve was the same as in his results. The second important discovery concerns how long information can be kept in long-term memory (LTM). Research found that information can be kept in LTM for decades. Therefore, even things learned in childhood that have not been used for many years will return to their original level very quickly when there is an opportunity to learn them again. Things that are no longer used, and that might be considered totally forgotten, are in fact not completely gone.
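The savings measure behind these results is usually computed as the proportion of the original learning effort that is spared at relearning. The short Python sketch below shows that calculation; the function name is an assumption made for illustration, and the effort can be counted in trials or in seconds as long as both arguments use the same unit.

```python
def savings_percent(original_effort, relearning_effort):
    """Savings score: share of the original learning effort spared at relearning.

    Both arguments use the same unit (for example, number of trials or seconds).
    """
    if original_effort <= 0:
        raise ValueError("original_effort must be positive")
    return 100.0 * (original_effort - relearning_effort) / original_effort
```

For example, with made-up numbers, a list that took 20 trials to learn and 13 trials to relearn gives `savings_percent(20, 13)`, a savings of 35 percent. A score of 0 means nothing was retained; a score above 0, even after a long interval, indicates that some trace of the original learning remains.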

Amnesia, in the sense of forgetting, and retention are two opposing aspects of memory. Amnesia means that memory content cannot be retained or is difficult to retrieve. Things that were once remembered, for example, cannot be recognized or recalled in certain circumstances, or errors occur when they are recognized or recalled. There are various types of amnesia: In incomplete amnesia, you can recognize things but cannot retrieve them; in complete amnesia, you can neither recognize nor retrieve them. Being temporarily unable to recognize or recollect things is called temporary amnesia; otherwise the amnesia is considered permanent.

There are many views on the causes of amnesia, which are summarized here: