This chapter deals with all aspects of digital imaging. Digital images are, at their most fundamental, sets of numbers rather than physical entities. One of the key differences between traditional silver halide photography and working digitally is the vast range of options at your fingertips before, during and after image capture. The drawback is that things can quickly become complicated, so you need to understand every part of the digital imaging chain to get the results you require. Digital image manipulation involves working with numbers and, what is more, it can be done non-destructively. Image manipulation is not, however, confined to image processing applications such as Photoshop. The image is changed at every stage in the imaging chain, which can lead to unexpected results and makes the control of colour a complicated process. The path through the imaging chain requires decisions to be made about, for example, image resolution, colour space and file format, each of which will affect the quality of the final product. To explain these processes, this chapter covers five main areas: digital image workflow, the digital image file, file formats and compression, image processing and colour management.

What is workflow?

The term workflow refers to the way that you work with your images, the order in which you perform certain operations and therefore the path that the image takes through the imaging chain. Your workflow will be partly determined by the devices you use, but the software used at each stage is equally important. The choices you make will also depend on a number of other factors, such as the type of output, the image quality required and image storage requirements.

General considerations in determining workflow

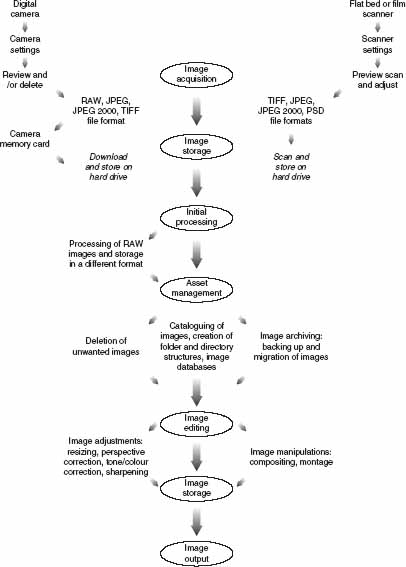

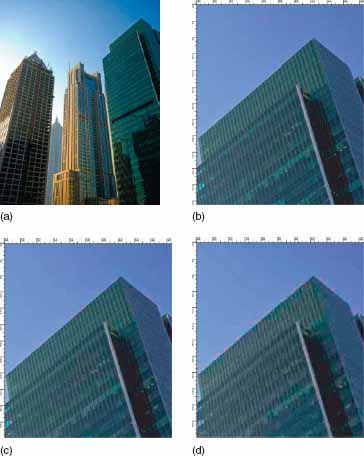

Because of the huge range of digital imaging applications, there is no single optimal workflow. Some workflows suit particular types of imaging better than others, and most people will establish their own preferred methods of working. When developing a workflow, however, bear in mind two aims: it should make dealing with digital images faster and more efficient, and it should work towards optimum image quality for the required output, if that is known. Figure 11.1 illustrates a general digital imaging workflow from capture to output. At each stage there will be many options, and some stages are really workflows in themselves.

Figure 11.1 Digital imaging workflow. This diagram illustrates some of the different stages that may be included in a workflow. The options available will depend on software, hardware and imaging application.

Optimum capture versus capture for output

When digital imaging first began to be adopted by the photographic industry, the imaging chain itself tended to be quite restricted in terms of hardware available. At a professional level the hardware chain would consist of a few high-end devices, usually with a single input and output method. These were called closed loop imaging systems and made the workflow relatively simple. The phrase was originally coined to refer to methods of colour management (covered later in this chapter), but may equally be applied to the overall imaging chain, of which colour management is one aspect. Usually a closed loop system would be run by a single operator who, having gained knowledge of how the devices worked together over time, would be able to control the imaging workflow, in a similar way to the quality control processes implemented in a photographic film processing lab, to get required results and correct for any drift in colour or tone from any of the devices.

In a closed loop system, where the output image type and the output device are known, it is possible to use an ‘input for output’ approach to workflow: all decisions made at the earlier stages in the imaging chain are optimized for the requirements of that output. If you are trying to save space and time and know the purpose for which the image will be used, you can shoot for that output. When shooting for web output, for example, this may mean capturing at quite a low resolution and saving the image as a JPEG (Joint Photographic Experts Group) file. Similarly, if an image is to be printed at a known size on a particular printer, it can be captured with exactly the number of pixels required for that output and converted into the printer colour space immediately; in this way the image quality should be as good as it can be for that particular system. The image will need little or no post-processing and will be ready to use straight away, correct for the required output. This method of working is simple, fast and efficient. Additionally, if the same method is to be used for many images, steps in the workflow can be automated, reducing the possibility of operator error and speeding up the process further.
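In an ‘input for output’ workflow, the number of pixels to capture follows directly from the print size and the output resolution of the printer. The sketch below is a minimal illustration, assuming a target of 300 pixels per inch. That figure is an illustrative assumption and is not given in this chapter, and the function name `pixels_for_print` is hypothetical.

```python
import math

def pixels_for_print(width_in: float, height_in: float, ppi: float = 300) -> tuple[int, int]:
    """Pixel dimensions needed to print at width_in x height_in inches.

    ppi is the output resolution in pixels per inch. The default of 300
    is an assumed target, not a universal rule; use whatever your printer
    and viewing distance require."""
    # Round up so the image is never slightly too small for the print.
    return math.ceil(width_in * ppi), math.ceil(height_in * ppi)

w, h = pixels_for_print(10, 8)
print(w, h, w * h / 1e6)  # 3000 2400 7.2  (width, height, megapixels)
```

A 10 × 8 inch print at this assumed resolution calls for a 7.2 megapixel capture. Capturing many more pixels than this would only cost storage and processing time in a closed loop system.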

However, it is important to remember that this workflow is designed for one particular imaging chain. As soon as other devices are added to the imaging chain, or the image is required for a different type of output, for example a web page, the system starts to lose efficiency and may no longer produce such high-quality results. This situation is much more typical of the way we work in digital imaging today, particularly as the Internet is now a primary method of transmitting images. The use of multiple input and output devices is commonplace among amateurs and professionals alike, and these types of imaging chain are known as open loop systems. Often an image may take a number of different forms. For example, a single image may need to be output as a low-quality version with a small file size to send as an email attachment, a high-quality version for print and a separate version to be archived in a database.

Open loop systems are characterized by the ability to adapt to change. In such systems it is no longer possible to optimize input for output. Instead, the approach has to be to optimize quality at capture and then ensure that the choices made further down the chain do not restrict possible output or compromise image quality. Often multiple copies of an image will exist, for the different types of output, but also multiple working copies at different stages in the editing process. This ‘non-linear’ approach has become an important part of the image processing stage in the imaging chain, allowing the photographer to go back easily and correct mistakes or try out many different ideas. Many photographers therefore find it simpler to capture at the highest quality available from the input device and to make decisions about reducing quality or file size later, where appropriate. At least by doing this they know that they have a high-quality version archived should they need it. This is optimum capture, but it requires a good deal of storage space, and of time in both capture and subsequent processing.

Standards

Alongside the development of open loop methods of working, imaging standards have become more important. Bodies such as the International Organization for Standardization (ISO), the International Color Consortium (ICC) and the Joint Photographic Experts Group (JPEG) work towards defining standard practice and imaging standards in terms of technique, file format, compression and colour management systems. Their work aims to simplify workflow and ensure interoperability between users and systems. It is such standards that define, for example, the file formats commonly available in the settings of a digital camera, or the standard working colour spaces most commonly used in an application such as Adobe Photoshop.

Standards are therefore an important issue in designing a workflow. They allow you to match your processes to common practice within the industry and help to ensure that your images will ‘work’ on other people’s systems. For example, by using a standard file format, you can be confident that the person to whom you are sending your image will be able to open and view it in most imaging applications. By attaching a profile to your image, you can help to make sure that the colour reproduction will be correct when viewed on someone else’s display (assuming, of course, that they are also working to ICC standards and have profiled their system).

Software

There is no standardization in terms of software, but the range of software used by most photographers has gradually become more streamlined, as a result of industry working practices and therefore a few well-known software products dominate at the professional level at least. For the amateur, the choice is much wider and often devices will be sold with ‘lite’ versions of the professional applications. However, software is not just important at the image editing stage, but at every stage in the imaging chain. Some of the software that you might encounter through the imaging chain includes:

• Digital camera software to allow the user to change settings.

• Scanner software, which may be proprietary or independent software such as VueScan or SilverFast.

• Organizational software, to import, view, name and file images in a coherent manner. In applications such as Adobe Bridge, images and documents can also be managed across different software applications, such as illustration or desktop publishing packages. Adobe Photoshop Lightroom is a new breed of software that combines the workflow management capabilities of such packages with a range of image adjustments.

• RAW processing software, both proprietary and as plug-ins for image processing applications.

• Image processing software. A huge variety of both professional and lite versions exists. Within the imaging industry, the choice of software depends on the type and purpose of the image being produced. Adobe Photoshop may dominate in the creative industries, but forensic, medical and scientific imaging have their own software, such as NIH Image (Mac)/Scion Image (Windows) and ImageJ, which are public-domain applications optimized for their particular workflows.

• System software, which controls how both devices and other software applications are set up.

• Database software, to organize, name, archive and backup image files.

• Colour management software, for measuring and profiling the devices used in the system.

It is clearly not possible to document the pros and cons, or detailed operation, of all software available to the user, especially as new versions are continually being brought out, each more sophisticated than the last. The last three types of software in the list, in particular, are unlikely to be used by any but the most advanced user and require a fair degree of knowledge and time to implement successfully. It is possible, however, to define a few of the standard image processes that are common from application to application and that it is helpful to understand. In terms of image processing, these may be classed as image adjustments and are a generic set of operations that will usually be performed by the user to enhance and optimize the image. The main image adjustments involve resizing, rotating and cropping of the image, correction of distortion, correction of tone and colour, removal of noise and sharpening. These are discussed in detail later in the chapter. The same set of operations is often available at multiple points in the imaging chain, although they may be implemented in different forms.

In the context of workflow and image quality, it is extremely useful to understand the implications of applying a particular adjustment at a particular point. Some adjustments may introduce artefacts into the image if applied incorrectly or at the wrong point. They may also limit the image in terms of output, something to be avoided in an open loop system. Fundamentally, the order of processing matters. Another consideration is that certain operations may be better performed in device software, because the processes have been optimized for a particular device. Others may be better left to a dedicated image processing package, because the range of options and degree of control are much greater. In the end, it is up to users to decide how and when to do things, depending on their system, a bit of trial and error and their preferred methods of working.

Capture workflow

Whichever approach is used in determining workflow, a number of steps are always performed at image capture. Scanning workflow was covered in Chapter 10, so the following concentrates on workflow using a digital camera. When shooting digitally, there are many more options than with a film-based capture system. Eventually these settings will become second nature, but it is useful to know what your options are and how they will influence the final image. Remember, the capture stage is the most important in the digital imaging chain. Some settings are made in advance, by selecting settings or image parameters through the camera’s menu system. Others are used on a shot-by-shot basis at the point of capture.

Formatting the card

A variety of memory card types is available, depending on the camera system being used. They can be bought reasonably cheaply and it is useful to keep a few spare, especially if using several different cameras. Before beginning a shoot, make sure that any images on the card have been downloaded, then perform a full erase or format of the card in the camera. It is always advisable to erase using the camera itself. Erasing from the computer while the camera is attached to it may leave data such as directory structures on the card, which take up space and are useless if the card is then used in another camera.

Setting image resolution

Image resolution is determined by the number of pixels at image capture, which is obviously limited by the number of pixels on the sensor. In some cameras however it is possible to choose a variety of different resolutions at capture, which has implications for file size and image quality. Unless you are capturing for a particular output size and are short of time or storage space, it is better to capture at the native resolution of the sensor (which can be found out from the camera’s technical specifications), as this will ensure optimum quality and minimize interpolation.

Setting capture colour space

The colour space setting defines the colour space into which the image will be captured and is important when working with a profiled (colour-managed) workflow. Usually at least two spaces are available: sRGB and Adobe RGB (1998). sRGB was originally developed for images to be displayed on screen, such as those used on the web and in multimedia applications. As it is optimized for only one device, the monitor, it has a relatively small colour gamut. Printer gamuts do not match monitor gamuts well, so images captured in sRGB can sometimes appear dull and desaturated when printed (see page 226). Adobe RGB (1998) is a later colour space whose gamut was enlarged to cover the range of colours reproduced by printers as well. Unless you know that the images you are capturing are only for on-screen display, Adobe RGB (1998) is usually the optimum choice.

When using the RAW format, setting the colour space will not have an influence on the results, as colour space is set afterwards during RAW processing.

Setting white balance

As discussed in Chapter 4, the spectral quality of white light sources varies widely. Typical light sources range from low colour temperatures of around 2000–3000 K, which tend to be yellowish, up to 6000–7000 K for bluish sources such as daylight or electronic flash. Daylight can reach about 12 000 K, indicating a heavy blue cast. Our eyes adapt to these differences, so that we see the light sources as white unless they are viewed together. Image sensors, however, do not adapt automatically. Colour film is balanced for a particular light source. With digital image sensors, the colour response is altered for different sources using the white balance setting, which changes the relative responses of the red-, green- and blue-sensitive pixels.

White balance may be set in a number of different ways.

White balance presets

These are preset colour temperatures for a variety of typical photographic light sources and lighting conditions. Presets work well only if they closely match the actual lighting conditions. It is important to note that colour temperature may vary for a particular type of light source. Daylight may have a colour temperature from approximately 3000 K up to 12 000 K, depending on the time of day, the time of year and the distance from the equator. Tungsten lamps also vary, especially as they age.

Auto white balance

The camera takes a measurement of the colour temperature of the scene and sets the white balance automatically. This can work very well, is reset at each shot, and so adapts as lighting conditions fluctuate.
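Camera manufacturers do not publish their auto white balance algorithms. One of the simplest published approaches is the ‘grey world’ assumption: the average colour of the scene is taken to be neutral, so each channel is scaled until the channel averages are equal. The sketch below uses this technique for illustration only. It is not the method used by any particular camera. It assumes that pixels are linear RGB tuples in the range 0–255, and the function names are hypothetical.

```python
def grey_world_gains(pixels):
    """Per-channel gains that make the mean R, G and B equal (grey world).

    Green is used as the reference channel, so its gain is 1.0.
    A channel with a zero mean is left unchanged (gain 1.0)."""
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    return tuple(means[1] / m if m else 1.0 for m in means)

def apply_gains(pixels, gains, max_value=255):
    # Scale each channel, round to an integer level and clip to the valid range.
    return [tuple(min(max_value, round(v * g)) for v, g in zip(p, gains))
            for p in pixels]

# A scene with a warm (yellowish) cast: red high, blue low.
scene = [(200, 150, 100), (120, 90, 60), (240, 180, 120)]
gains = grey_world_gains(scene)
print(apply_gains(scene, gains))  # [(150, 150, 150), (90, 90, 90), (180, 180, 180)]
```

The assumption fails for scenes that really are dominated by one colour, such as a close-up of foliage. This is one reason why automatic settings do not always match the lighting.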

Custom white balance

Most digital single-lens reflex (SLR) cameras have a custom white balance setting. The camera is zoomed in on a white object in the scene, a reference image is taken and this is then used to set the white balance. This can be one of the most accurate ways of setting the white balance.
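With custom white balance, the channel gains come from a reference shot of a white object rather than from the scene as a whole. The sketch below is minimal and rests on three assumptions. The white patch has already been averaged to a single linear (R, G, B) triple. Green is chosen as the reference channel for convenience. The names `custom_wb_gains` and `balance` are hypothetical.

```python
def custom_wb_gains(white_ref):
    """Gains that map a measured white reference to neutral.

    white_ref is the average (R, G, B) of the white object in the
    reference shot. Green is kept as the reference channel (a convenient
    choice, assumed here), so only red and blue are rescaled."""
    r, g, b = white_ref
    return (g / r, 1.0, g / b)

def balance(pixel, gains, max_value=255):
    # Apply the gains, round to an integer level and clip at the maximum value.
    return tuple(min(max_value, round(v * k)) for v, k in zip(pixel, gains))

# White card photographed under tungsten light: strong red, weak blue.
gains = custom_wb_gains((220, 180, 120))
print(balance((220, 180, 120), gains))  # (180, 180, 180)
print(balance((110, 90, 60), gains))    # (90, 90, 90)
```

Every pixel in later shots is corrected with the same gains. For this reason the method works best when the lighting stays constant after the reference image is taken.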

White balance through RAW processing

When shooting RAW, it is not necessary to set the white balance, as this is one of the processes performed with sliders in the RAW editor after capture. It is still useful to have an idea of the colour temperature before capture, and the temperature will be displayed in the RAW editor.

Setting ISO speed

The ISO setting determines the sensitivity of the sensor to light, allowing correct exposure across a variety of different lighting levels. The sensor has a native sensitivity, usually corresponding to the lowest ISO setting. Other ISO speeds are achieved by amplifying the signal. Unfortunately, this also amplifies the noise. Some of the noise is present in the signal itself and is more noticeable at low light levels. Digital sensors are also susceptible to other forms of noise caused by the electronic processing within the camera. Some of this is processed out on the chip, but noise is best minimized by using low ISO settings. Above ISO 400, noise is often problematic. It can be reduced with noise filters during post-processing, but this causes some blurring of the image.
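Noise filtering also blurs the image, and this trade-off can be seen with the simplest noise filter: a moving-average (box) filter applied to a single row of pixels that contains an edge. This is a minimal sketch. The three-pixel window and the sample values are illustrative assumptions, and practical noise-reduction filters are considerably more sophisticated.

```python
def box_filter(row, radius=1):
    """Replace each value with the mean of its neighbourhood.

    The neighbourhood spans radius pixels either side. At the ends of
    the row, indices are clamped so the edge pixel is reused."""
    n = len(row)
    out = []
    for i in range(n):
        window = [row[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out

# A noisy dark region followed by a sharp step to a bright region.
row = [50, 54, 46, 52, 48, 200, 204, 196, 202, 198]
smoothed = [round(v) for v in box_filter(row)]
print(smoothed)  # [51, 50, 51, 49, 100, 151, 200, 201, 199, 199]
```

The fluctuations in the dark region shrink from a range of 8 levels to 2. However, the edge that previously jumped from 48 to 200 in a single pixel is now spread over several pixels, and this is the blurring described above.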

ISO speed settings equate approximately to film speeds, but not exactly, as sensor responses vary. For this reason, the camera’s exposure meter is usually better than an external light meter for establishing correct exposure.

Setting file format

The file format is the way in which the image will be ‘packaged’ and has implications for file size and image quality. The format is set in the image parameters menu before capture and determines the number of images that can be stored on the memory card. The formats commonly available at image capture are JPEG, TIFF (Tagged Image File Format) (some cameras), RAW (not yet standardized, newer cameras) and RAW + JPEG (some cameras).

RAW capture

RAW files are a recent development in imaging and are slowly altering workflow. Capturing to most other image file formats involves a fair degree of image processing in the camera, over which the photographer has limited control. Settings made at capture, such as exposure, colour space, white balance and sharpening, are applied to the image before it is saved, and the image is therefore to some extent ‘locked’ in that state. RAW files bypass some of this processing. The data they produce is much closer to what was actually ‘seen’ by the image sensor and is significantly less processed.

The RAW file consists of the pixel data and a header containing information about the camera and capture conditions. Most digital sensors are based on a Bayer array (see page 114), in which each pixel on the sensor is filtered so that it records an intensity value representing only the amount of red, green or blue light falling at that point. When capturing to other file formats, the two missing colour values at each pixel are interpolated in the camera, a process known as demosaicing. All that is actually captured at the sensor, however, is a single intensity value per pixel, recording the amount of filtered light falling on it. RAW files store only this single value in the pixel data, and demosaicing is performed afterwards. The upshot is that for an RGB image, even though no compression is applied, only one value per pixel is stored rather than three. A RAW file will therefore be significantly smaller than the equivalent TIFF.
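The idea of storing one value per pixel can be made concrete by sampling a tiny RGB image through a Bayer pattern, keeping only the filtered value at each photosite. This minimal sketch assumes an RGGB layout, which is one common arrangement; manufacturers use different layouts. It ignores the header and any differences in bit depth. The function names are hypothetical.

```python
def bayer_channel(row, col):
    """Colour filter at (row, col) for an assumed RGGB Bayer pattern:
    even rows alternate R, G and odd rows alternate G, B."""
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1   # R or G
    return 1 if col % 2 == 0 else 2       # G or B

def mosaic(rgb_image):
    """Keep one intensity value per pixel, as the sensor records it."""
    return [[pixel[bayer_channel(r, c)] for c, pixel in enumerate(line)]
            for r, line in enumerate(rgb_image)]

# A 2 x 4 RGB image (each pixel is an (R, G, B) tuple).
image = [[(10, 20, 30), (11, 21, 31), (12, 22, 32), (13, 23, 33)],
         [(14, 24, 34), (15, 25, 35), (16, 26, 36), (17, 27, 37)]]
raw = mosaic(image)
print(raw)  # [[10, 21, 12, 23], [24, 35, 26, 37]]

values_rgb = sum(len(line) * 3 for line in image)  # 24 values
values_raw = sum(len(line) for line in raw)        # 8 values
print(values_rgb, values_raw)
```

At equal bit depth, the mosaic holds a third as many values as the demosaiced RGB image. This is the reason a RAW file is smaller than the equivalent TIFF.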

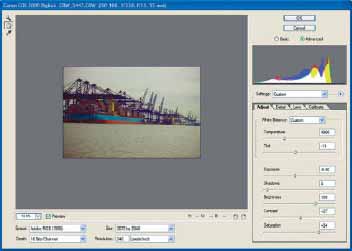

The RAW file is opened after downloading in RAW conversion software, which may be proprietary or a more generic plug-in such as the Camera RAW plug-in in Adobe Photoshop. At this point the image is demosaiced and displayed in a preview window (see Figure 11.2), where the user then applies the adjustments and processing that would have been applied automatically by the camera.
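Demosaicing reverses the sampling by estimating the missing values at each pixel from its neighbours. RAW converters use their own algorithms, which are often proprietary and edge-aware. The sketch below shows only the simplest textbook method, bilinear interpolation, and applies it only to the green channel of a mosaic that is assumed to use an RGGB layout. The function names are hypothetical.

```python
def is_green(row, col):
    # In an RGGB pattern, green sites are where row + col is odd.
    return (row + col) % 2 == 1

def interpolate_green(raw):
    """Bilinear estimate of green at every pixel of an RGGB mosaic.

    Green sites keep their measured value. Red and blue sites get the
    mean of their available up/down/left/right neighbours, which are
    all green sites in this pattern."""
    h, w = len(raw), len(raw[0])
    green = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if is_green(r, c):
                green[r][c] = float(raw[r][c])
            else:
                neighbours = [raw[rr][cc]
                              for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                              if 0 <= rr < h and 0 <= cc < w]
                green[r][c] = sum(neighbours) / len(neighbours)
    return green

# 4 x 4 mosaic: green sites hold 100, red and blue sites hold other values.
raw = [[40, 100, 40, 100],
       [100, 60, 100, 60],
       [40, 100, 40, 100],
       [100, 60, 100, 60]]
print(interpolate_green(raw))  # every entry is 100.0
```

Red and blue are estimated in the same way from their own, sparser sites. Simple averaging like this tends to soften fine detail and can create colour fringes at edges, which is one reason converters use more elaborate methods.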

The great advantage of this is that it allows you to control the processing applied to the image after capture. You have a choice over aspects such as colour space, white point, exposure and sharpening, and you can also alter tone, contrast and colour. The image is optimized by the user, so fewer decisions need to be made at capture and mistakes can be corrected afterwards. You also view the image on a large screen while processing, rather than making judgements from the viewing screen on the back of the camera.

It is clear that this offers photographers versatility and control over the image capture stage. The images, being uncompressed, may be archived in the RAW format, and can be opened and reprocessed at any time. After processing, the image is saved in another format, so the original RAW data remains unchanged, meaning that several different versions of the image with different processing may be saved, without affecting the archived data.

The downside of capturing in RAW is that it requires skill and knowledge to carry out the processing. Additionally, RAW is not yet standardized. Each camera manufacturer has its own version of RAW, optimized for its particular cameras and processed with its own software, which complicates things if you use more than one camera. Adobe has developed Camera RAW software, which provides a common interface for the majority of modern RAW formats (but not yet all). The problem is that it is a ‘one size fits all’ solution and is therefore not optimized for any of them. It makes the process simpler, but you may achieve better results using the manufacturer’s dedicated software. Despite this, RAW capture is becoming part of the workflow of choice for many photographers.

Figure 11.2 Camera RAW plug-in interface.

Adobe digital negative

In addition to the Camera RAW plug-in, Adobe are also developing the Digital Negative (.dng) file format, their own version of the RAW file format. Currently it can be used to store RAW data from different cameras in a common RAW format. The eventual aim is for it to become a standard RAW format, but we are not at that stage yet, as most cameras do not yet output .dng files. A few of the high-end professional camera manufacturers do, however, such as Hasselblad, Leica and Ricoh.

Exposure: using the histogram

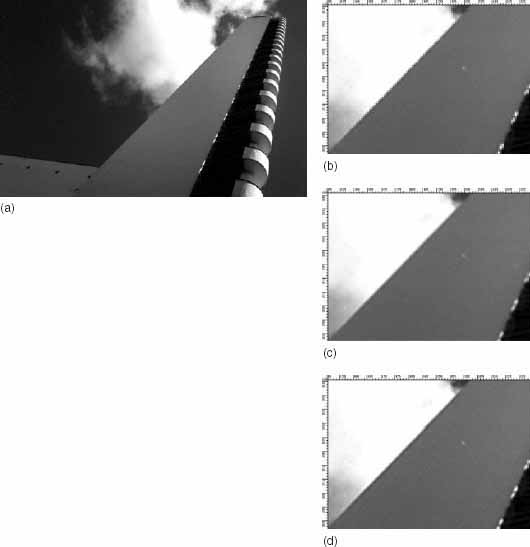

The histogram plots the number of pixels at each tonal level, from shadows at the left to highlights at the right. A correctly exposed image will have all its pixel values within the limits of the histogram and will stretch across most of its extent, as shown in Figure 11.3(a). An underexposed image will have its levels concentrated at the left-hand side of the histogram, indicating many dark pixels, and an overexposed image will have them concentrated at the other end. If the image is heavily under- or overexposed, the histogram will have a peak at the far end, indicating that shadow or highlight detail has been clipped (Figure 11.3(b) and (c)). A narrow spread of values across the histogram indicates a lack of contrast. If the histogram is narrow or concentrated at either end, the image should be re-shot to obtain a better exposure and histogram. It is particularly important not to clip highlights in a digital image.

Figure 11.3 (a) Image histogram showing correct exposure, producing good contrast and a full tonal range. (b) Histogram indicating underexposure. (c) Histogram indicating overexposure.
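The histogram checks described above can be automated. The approach is to count how many pixels fall at each level and how many sit at the two extremes, where clipping piles values up. The sketch below is minimal and works on an 8 bit greyscale image stored as a flat list of integers. The 1% clipping threshold and the function names are illustrative assumptions and do not come from this chapter.

```python
def histogram(values, levels=256):
    """Count how many pixels fall at each integer level 0..levels-1."""
    counts = [0] * levels
    for v in values:
        counts[v] += 1
    return counts

def exposure_report(values, clip_fraction=0.01):
    """Flag likely shadow or highlight clipping and report the tonal spread."""
    counts = histogram(values)
    n = len(values)
    return {
        "shadows_clipped": counts[0] / n > clip_fraction,
        "highlights_clipped": counts[-1] / n > clip_fraction,
        "spread": max(values) - min(values),  # a narrow spread suggests low contrast
    }

# An overexposed example: a large share of pixels piled up at 255.
pixels = [180, 200, 220, 240, 255, 255, 255, 250, 230, 255]
print(exposure_report(pixels))
# {'shadows_clipped': False, 'highlights_clipped': True, 'spread': 75}
```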

Digital image files

The types of images used in digital photography are raster images, or bitmaps, where image data is stored as an array of discrete values (pixels). A digital image requires that values for position and colour of each pixel are stored, meaning that image files tend to be quite large compared to other types of data.

Any type of data within a computer, whether text or pixel values, is represented in binary digits. Binary is a numbering system like the decimal system. Decimal digits can take 10 discrete values, from 0 to 9, and all other numbers are combinations of these. Binary digits can take only 2 values, 0 or 1, and all other numbers are combinations of these.

An individual binary digit is known as a bit. 8 bits make up 1 byte, 1024 bytes equal 1 KB, 1024 KB equal 1 MB and so on. The file size of a digital image is equal to the total number of bits required to store the image. The way in which image data is stored is defined by the file format. The raw image data within a file format is a string of binary representing pixel values. The process of turning the pixel values into binary code is known as encoding and is performed when an image file is saved.
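The encoding step can be illustrated by writing a few 8 bit pixel values as fixed-width binary strings and then decoding them again. This minimal sketch uses Python’s built-in `format` and `int` functions. Real file formats also add a header and may compress the data. The names `encode` and `decode` are hypothetical.

```python
def encode(values, bits=8):
    """Encode each pixel value as a fixed-width string of binary digits."""
    return "".join(format(v, f"0{bits}b") for v in values)

def decode(bitstring, bits=8):
    """Split the string back into fixed-width codes and convert each one."""
    return [int(bitstring[i:i + bits], 2) for i in range(0, len(bitstring), bits)]

pixels = [0, 5, 128, 255]
code = encode(pixels)
print(code)                                      # 00000000000001011000000011111111
print(len(code), "bits =", len(code) // 8, "bytes")  # 32 bits = 4 bytes
print(decode(code))                              # [0, 5, 128, 255]
```

Because every code has the same fixed width, the decoder knows where each pixel value starts and ends.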

File size

The file size of the raw image data in terms of bits can be worked out using the following formula:

$$\text{File size (bits)} = (\text{No. of pixels}) \times (\text{No. of colour channels}) \times (\text{No. of bits per colour channel})$$

This can then be converted into megabytes by dividing by $8 \times 1024 \times 1024$.
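The formula can be turned into a small function and worked through for a 3000 × 2000 pixel RGB image. These dimensions are illustrative and are not taken from any particular camera. The result is the size of the raw image data only, without any header or compression. The function names are hypothetical.

```python
def file_size_bits(width, height, channels, bits_per_channel):
    """Size of the raw image data (no header, no compression) in bits."""
    return width * height * channels * bits_per_channel

def bits_to_megabytes(bits):
    # 8 bits per byte, 1024 bytes per KB, 1024 KB per MB.
    return bits / (8 * 1024 * 1024)

bits = file_size_bits(3000, 2000, channels=3, bits_per_channel=8)
print(bits)                               # 144000000
print(round(bits_to_megabytes(bits), 2))  # 17.17

# Doubling the bit depth to 16 bits per channel doubles the size.
bits16 = file_size_bits(3000, 2000, channels=3, bits_per_channel=16)
print(round(bits_to_megabytes(bits16), 2))  # 34.33
```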

Image file size is therefore dependent on two factors: resolution of the image (number of pixels) and bit depth of the image, i.e. the number of binary digits used to represent each pixel, which then defines how many levels of tone and colour can be represented. Increasing either will increase the file size.

Bit depth

The bit depth of image files varies, but currently two bit depths are commonly used to represent photographic quality: 8 bits and 16 bits. Why? Binary code must represent the range of pixel values in an image as a string of binary digits. Each pixel value must be encoded uniquely, i.e. each code must relate to one pixel value. No two pixel values should produce the same code, so that the file is not decoded incorrectly when it is opened. The number of codes, and hence the number of tonal values possible with $k$ binary digits, is $2^k$.

So the number of distinct colour values represented by 4 bits is $2^4 = 16$ and the number represented by 7 bits is $2^7 = 128$ (Figure 11.4).
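The relationship $2^k$ can be tabulated directly for a few bit depths. The helper name `levels` used here is hypothetical.

```python
def levels(bits):
    """Number of distinct values (unique binary codes) available with `bits` binary digits."""
    return 2 ** bits

for k in (1, 2, 4, 7, 8, 16):
    print(f"{k:2d} bits -> {levels(k):6d} levels")

# Three 8 bit channels give 24 bits per pixel.
print(f"24 bit RGB -> {levels(3 * 8):,} colours")  # 24 bit RGB -> 16,777,216 colours
```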

Why 8 bits? The answer lies in the response of the human visual system. We are used to viewing photographic images and seeing a continuous range of tones and colours. This is because silver halide materials create changes in tone and colour through tiny silver grains or dye clouds, which are too small to be distinguished. Their random arrangement in the emulsion adds to the illusion of continuous tone. In digital images, the image is made up of discrete, non-overlapping pixels that can take only certain values. To give the impression of continuous tone, the pixels have to be small enough not to be distinguished at a normal viewing distance. There must also be enough discrete steps in the range of values to fool the eye into seeing smooth changes in tone or colour.

Figure 11.4 Bit depth and numbers of levels. The number of bits per pixel defines the number of unique binary codes and therefore how many discrete grey levels or pixel values may be represented.

Our ability to discriminate between individual tones and colours is complicated, because it is affected by factors such as the ambient lighting level. Under normal daylight conditions, however, the human visual system needs the tonal range from shadow to highlight to be divided into between 120 and 190 levels to see continuous tone. With fewer levels, the image will appear posterized. Posterization is an artefact of insufficient sampling of the tonal range, which produces large jumps between pixel values. It is particularly problematic in areas of smoothly changing tone, where this quantization artefact appears as contour lines (see Figure 11.16). 8 bits produce 256 discrete levels, which prevents this and leaves some levels to spare. For an RGB image, 8 bits per channel gives a total of 24 bits per pixel and over 16 million different colours.
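Posterization from too few levels can be simulated by quantizing a smooth ramp to $k$ bits. The fewer the bits, the larger the jump between adjacent output values, and the more visible the contour lines become. This is a minimal sketch. The ramp of 1000 tones between 0.0 and 1.0 and its mapping onto evenly spaced integer codes are assumptions made for illustration. The function name `quantize` is hypothetical.

```python
def quantize(value, bits):
    """Map a value in 0.0-1.0 to the nearest of 2**bits evenly spaced codes."""
    top = 2 ** bits - 1
    return round(value * top)

# A smooth ramp of 1000 tones from black to white.
ramp = [i / 999 for i in range(1000)]

for bits in (3, 6, 8):
    codes = [quantize(v, bits) for v in ramp]
    distinct = len(set(codes))
    step = 100 / (2 ** bits - 1)  # size of each jump, as % of the tonal range
    print(f"{bits} bits: {distinct} levels, each step {step:.1f}% of the range")
```

At 6 bits the ramp uses only 64 levels, which is below the 120–190 levels quoted above, so contouring would be expected in smooth gradients. At 8 bits all 256 levels are used.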

8 bit versus 16 bit workflow

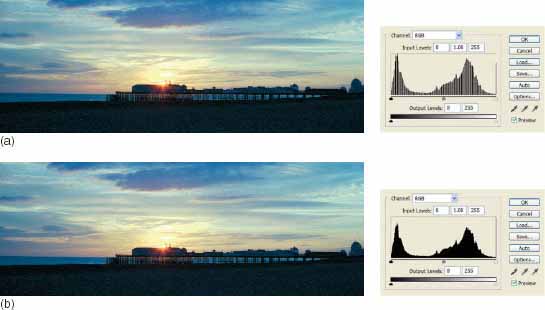

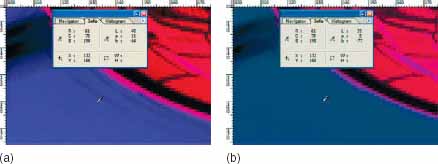

Image capture devices often capture more than 8 bits. It is the process of quantization by the analogue-to-digital converter that allocates the tonal range to 8 bits or 16 bits. The move towards 16 bit imaging has been made possible by better storage and processing in computers. Working with 16 bits per channel doubles the file size. 8 bits is adequate to represent smoothly changing tone. As the image is processed through the imaging chain, however, pixel values are reallocated, particularly during tone and colour correction. Some pixel values may then disappear completely, causing posterization. Starting with 16 bits produces a more finely sampled tonal range, which helps to avoid this problem. Figure 11.5 illustrates the difference between an 8 bit and a 16 bit image after some image processing. The histograms show this particularly well: the 8 bit histogram appears jagged, as if beginning to break down, while the 16 bit histogram remains smooth.

Figure 11.5 (a) Processing operations such as levels adjustments can result in the image histogram of an 8 bit image having a jagged appearance and missing tonal levels (posterization). (b) 16 bit images have more tones available, therefore the resulting histogram is smoother and more complete, indicating fewer image artefacts.
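The effect in Figure 11.5 can be reproduced numerically. The sketch below applies the same levels-style contrast stretch to every possible 8 bit value. It also applies the stretch to a dense 16 bit ramp, which is reduced to 8 bits only at the end. It then counts how many of the 256 output levels are actually used. The black and white points (64 and 192) are assumed for illustration, and `levels_stretch` is a hypothetical name.

```python
def levels_stretch(value, black, white, max_value):
    """Linear levels adjustment: black -> 0, white -> max_value, clipped."""
    scaled = (value - black) / (white - black) * max_value
    return min(max_value, max(0, round(scaled)))

# 8 bit workflow: stretch the 8 bit values directly.
out_8 = {levels_stretch(v, 64, 192, 255) for v in range(256)}

# 16 bit workflow: the same stretch on 16 bit values (black and white
# points scaled by 257, so 64 -> 16448 and 192 -> 49344), reduced to
# 8 bits only for output. A dense ramp stands in for a smooth capture.
out_16 = {round(levels_stretch(v, 64 * 257, 192 * 257, 65535) / 257)
          for v in range(0, 65536, 16)}

print("8 bit levels used: ", len(out_8), "of 256")   # 129 of 256
print("16 bit levels used:", len(out_16), "of 256")  # 256 of 256
```

In the 8 bit case about half the output levels are never used, and these missing levels appear as gaps in the histogram. In the 16 bit case every 8 bit output level is populated.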

Using a 16 bit workflow can help to improve and maintain image quality, but 16 bits may not be available throughout the imaging chain. Certain operations, particularly filtering, are available only for 8 bit images. Additionally, 16 bit images are not supported by all file formats.

Image modes

Digital image data consists of sets of numbers representing pixel values. The image mode provides a global description of what the pixel values represent and the range of values that they can take. The mode is based on a colour model, which defines colours numerically. Some of the modes are only available in image processing software, whereas others, such as RGB, can be used throughout the imaging chain. The common image modes are as follows:

• RGB – Three colour channels representing red, green and blue values. Each channel contains 8 or 16 bits per pixel.

• CMYK – A four-channel mode. Values represent cyan, magenta, yellow and black, again with 8 or 16 bits per pixel per channel.

• LAB – Three channels, L representing tonal (luminance) information and A and B colour (chrominance) information. LAB is actually the CIELAB standard colour space (see page 79, Chapter 4).

• HSL (and variations) – These three-channel models describe colours by separating hue from saturation and lightness. These are the kinds of attributes we often use to describe colours verbally, so they can be more intuitive to understand and visualize than colours represented as red, green and blue values. However, they are poorly standardized and do not relate to the way any digital device produces colour, so they tend not to be widely used in photography.

• Greyscale – This is a single-channel mode, where pixel values represent neutral tones, again with a bit depth of 8 or 16 bits per pixel.

• Indexed – These are also called paletted images, and contain a much reduced range of colours to save on storage space and are therefore only used for images on the web, or saturated graphics containing few colours. The palette is simply a look-up table (LUT) with a limited number of entries (usually 256); at each position in the table three values are saved representing RGB values. At each pixel, rather than saving three values, a single value is stored, which provides an index into the table, and outputs the three RGB values at that index position to produce the particular colour. Converting from RGB to indexed mode can be performed in image processing software, or by saving in the graphics interchange format (GIF) file format.
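The look-up mechanism of an indexed image can be sketched in a few lines of Python. The four-entry palette and the pixel values are hypothetical, and the conversion simply picks the nearest palette colour for each pixel. Real converters also choose an optimized palette for the image and often apply dithering, both of which this sketch omits.

```python
# Hypothetical four-entry palette; palettes usually hold up to 256 entries,
# so each index fits in a single byte.
palette = [
    (0, 0, 0),        # index 0: black
    (255, 255, 255),  # index 1: white
    (200, 30, 30),    # index 2: red
    (30, 60, 200),    # index 3: blue
]


def nearest_index(rgb, palette):
    """Index of the palette entry closest to rgb (squared RGB distance)."""
    return min(range(len(palette)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(rgb, palette[i])))


def to_indexed(pixels, palette):
    """Store one index per pixel instead of three RGB values."""
    return [nearest_index(p, palette) for p in pixels]


def from_indexed(indices, palette):
    """Decode: look each index up in the table to recover three RGB values."""
    return [palette[i] for i in indices]


pixels = [(10, 5, 0), (250, 240, 245), (190, 40, 20), (40, 70, 180)]
indices = to_indexed(pixels, palette)
print(indices)                         # [0, 1, 2, 3]
print(from_indexed(indices, palette))  # colours snapped to the palette entries
```

Each pixel now needs one value instead of three, but any colour that is not in the palette is replaced by its nearest entry, which is where the loss in quality comes from.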

Layers and alpha channels

Layers are used in image processing applications to make image adjustments easier to fine tune and to allow the compositing of elements from different images in digital montage. Layers can be thought of as ‘acetates’ overlying each other, on top of the image, each representing different operations or parts of the image. Layers can be an important part of the editing process, but they vastly increase file size and therefore are not usually saved after the image has been edited to its final state for output; instead, the layers are merged down. If the image is in an unfinished state, the file format must be carefully selected to ensure that layers will be preserved.

An alpha channel is an extra greyscale channel within the image. Alpha channels are designed for compositing and can perform a similar function to layers, but they are stored separately and can be saved and even imported into other applications, so they are much more permanent and versatile. They can be used in various different ways, for example to store a selection which can then be edited later. They can also be used as masks when combining images, the value at each pixel position defining how much of the pixel in the masked image is blended or retained. They are sometimes viewed as transparencies, with the value representing how transparent or opaque a particular pixel is. Like layers, alpha channels increase file size. Again, support depends on the file format selected, although more formats support alpha channels than layers.
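Using an alpha channel as a mask amounts to a weighted mix of two pixels at each position. The sketch below assumes 8 bit alpha values and straight (non-premultiplied) alpha. The pixel and mask values are hypothetical, and applications may offer other blending rules.

```python
def blend_pixel(fg, bg, alpha, max_alpha=255):
    """Mix one foreground and one background RGB pixel using an 8 bit alpha value.

    alpha = max_alpha keeps the foreground, alpha = 0 keeps the background,
    and values in between mix the two proportionally."""
    a = alpha / max_alpha
    return tuple(round(a * f + (1 - a) * b) for f, b in zip(fg, bg))


def composite(fg_row, bg_row, alpha_row):
    """Composite a row of pixels through a greyscale alpha (mask) row."""
    return [blend_pixel(f, b, a) for f, b, a in zip(fg_row, bg_row, alpha_row)]


fg = [(200, 0, 0)] * 3        # red foreground
bg = [(0, 0, 200)] * 3        # blue background
mask = [255, 128, 0]          # opaque, roughly half, transparent
print(composite(fg, bg, mask))
```

The middle pixel comes out as an even mix of the two colours, which is how a soft-edged mask blends one image gradually into another.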

Choosing file format

There are many different formats available for the storage of images. As previously discussed, file format standards allow images to be used across multiple platforms, providing interoperability between systems. Many standards are free for software developers and manufacturers to use, or may be used under licence. Importantly, the specification on which each format is based is standardized, meaning that a JPEG file from one camera is similar or identical in structure to a JPEG file from another and will be decoded in the same way.

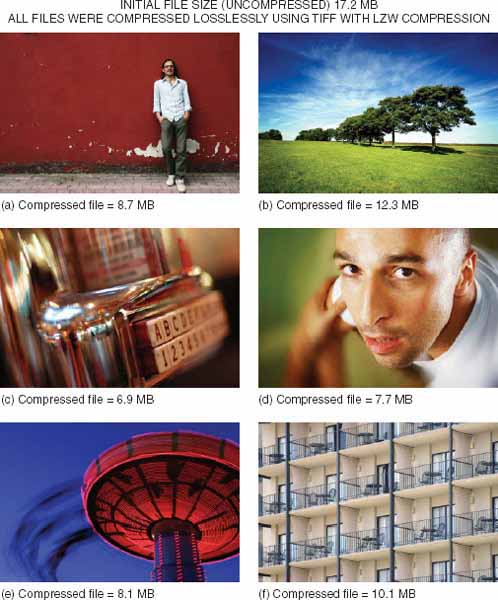

There are other considerations when selecting a file format. The format may determine, for example, the maximum bit depth of the image, whether lossless or lossy compression has been applied, whether layers are retained separately or flattened, whether alpha channels are supported and a multitude of other information that may be important for the next stage. These factors will affect the final image quality (whether the image is identical to the original or additional image artefacts have been introduced), whether it is in an intermediate editing state or in its final form and, of course, the image file size. Whichever format is selected, the file will contain more than just the image data, and therefore file sizes vary significantly from the file size calculated from the raw data, depending on the way that the data is ‘packaged’, whether the file is compressed or not and also the content of the original scene (Figure 11.6).
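The file size calculated from the raw data is simply the number of pixels multiplied by the number of channels and the number of bytes per channel. A minimal sketch for a hypothetical 3000 × 2000 pixel image follows. MB is taken here as 2^20 bytes. Any real file adds headers and metadata to this figure, or is smaller if compressed.

```python
def raw_size_bytes(width, height, channels, bits_per_channel):
    """Uncompressed pixel data size: pixels x channels x bytes per channel."""
    return width * height * channels * bits_per_channel // 8


# Hypothetical 3000 x 2000 pixel image in several modes.
for label, channels, bits in [("RGB, 8 bit", 3, 8), ("RGB, 16 bit", 3, 16),
                              ("CMYK, 8 bit", 4, 8), ("Greyscale, 8 bit", 1, 8)]:
    size = raw_size_bytes(3000, 2000, channels, bits)
    print(f"{label}: {size:,} bytes ({size / 2**20:.1f} MB)")
```

Moving from 8 to 16 bits per channel doubles the raw size, as noted earlier for 16 bit workflows.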

Figure 11.6 File sizes using different formats. The table shows file sizes for the same image saved as different file formats.

Image compression

The large size of image files has led to the development of a range of methods for compressing images, and this has important implications in terms of workflow. There are two main classes of compression. The first is lossless compression, an example being the LZW compression option incorporated in TIFF, which organizes image data more efficiently without removing any information, therefore allowing perfect reconstruction of the image. The second is lossy compression, such as that used in the JPEG compression algorithm, which discards information that is less important visually, achieving much greater compression ratios with some compromise to the quality of the reconstructed image. There is a trade-off between resulting file size and image quality in selecting a compression method. The method used is usually determined by the image file format. Selecting a compression method and file format therefore depends upon the purpose of the image at that stage in the imaging chain.
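The principle behind LZW can be sketched as follows. This is the textbook form of the algorithm operating on a row of 8 bit values. It builds a dictionary of byte sequences already seen and outputs dictionary codes in place of repeated sequences. It is not TIFF's exact bitstream, which additionally packs the codes into variable-width bit fields and uses special clear and end-of-information codes.

```python
def lzw_encode(data):
    """Encode bytes as a list of integer codes (textbook LZW, unbounded dictionary)."""
    table = {bytes([i]): i for i in range(256)}   # start with every single byte value
    current = b""
    codes = []
    for value in data:
        candidate = current + bytes([value])
        if candidate in table:
            current = candidate                    # keep extending a known sequence
        else:
            codes.append(table[current])           # emit the longest known sequence
            table[candidate] = len(table)          # remember the new, longer sequence
            current = bytes([value])
    if current:
        codes.append(table[current])
    return codes


def lzw_decode(codes):
    """Rebuild the original bytes exactly from the codes."""
    if not codes:
        return b""
    table = {i: bytes([i]) for i in range(256)}
    previous = table[codes[0]]
    output = [previous]
    for code in codes[1:]:
        if code in table:
            entry = table[code]
        elif code == len(table):
            entry = previous + previous[:1]        # sequence defined by this very step
        else:
            raise ValueError(f"invalid LZW code {code}")
        output.append(entry)
        table[len(table)] = previous + entry[:1]
        previous = entry
    return b"".join(output)


row = bytes([120] * 50 + [121] * 50) * 4   # hypothetical row of flat tones
codes = lzw_encode(row)
assert lzw_decode(codes) == row            # lossless: perfect reconstruction
print(len(row), "pixel values ->", len(codes), "codes")
```

Each code needs more than 8 bits when stored (TIFF uses 9 to 12 bits per code), so the saving in bytes is smaller than the drop in the number of values suggests. On data with little repetition there may be no saving at all.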

Lossless compression

Lossless compression is used wherever it is important that the image quality is maintained at a maximum. The degree of compression will be limited, as lossless methods tend not to achieve compression ratios of much above 2:1 (that is, the compressed file size is half that of the original) and the amount of compression will also depend upon image content. Generally, the more fine detail that there is in an image, the lower the amount of lossless compression that will be possible (Figure 11.7). Images containing different scene content will compress to different file sizes.
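The dependence on image content can be demonstrated with Python's standard `zlib` module. Note that zlib implements DEFLATE, the lossless method used inside PNG, rather than the LZW method of TIFF. The two synthetic 256 × 256 greyscale 'images' are hypothetical extremes: a smooth gradient and pure random detail.

```python
import random
import zlib

WIDTH = HEIGHT = 256

# Smooth content: every row is the same gradient 0, 1, ..., 255.
smooth = bytes(x for _ in range(HEIGHT) for x in range(WIDTH))

# Fine "detail": every pixel value independent and random (fixed seed for repeatability).
rng = random.Random(0)
detailed = bytes(rng.randrange(256) for _ in range(WIDTH * HEIGHT))

for name, data in [("smooth", smooth), ("detailed", detailed)]:
    packed = zlib.compress(data, 9)
    assert zlib.decompress(packed) == data  # lossless: exact reconstruction
    print(f"{name}: {len(data)} -> {len(packed)} bytes, "
          f"ratio {len(data) / len(packed):.2f}:1")
```

The gradient compresses enormously because every row repeats, while the random image cannot be compressed at all and comes out slightly larger than the original. Real photographs fall between these extremes, which is why lossless ratios for photographs rarely go much beyond 2:1.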

Figure 11.7 Compressed file size and image content. The contents of the image will affect the amount of compression that can be achieved with both lossless and lossy compression methods.

If it is known that the image is to be output to print, then it is usually best saved as a lossless file. The only exception to this is some images for newspapers, where image quality is sacrificed for speed and convenience of output. As a rule, if an image is being edited, then it should be saved in a lossless format (as it is in an intermediate stage). Some lossless compression methods can actually expand file size, depending upon the image. Therefore, if image integrity is paramount and other factors must be considered, such as the need to include active layers with the file, it is often easier to use a lossless file format with no compression applied. Because of this, the formats commonly used at the editing stage will either incorporate a lossless option or no compression at all. File formats that include lossless compression as an option include TIFF, PNG (portable network graphics) and a lossless version of JPEG 2000.

If an image is to be archived, then it is vital that image quality is maintained and TIFF or RAW files will usually be used. In this case, compression is not usually a key consideration. Image archiving is dealt with in Chapter 14.

Lossy compression

There are certain situations, however, where it is possible to get away with some loss of quality to achieve greater compression, which is why the JPEG format is almost always available as an option in digital cameras. Lossy compression methods work on the principle that some of the information in an image is less visually important, or even beyond the limits of the human visual system and use clever techniques to remove this information, still allowing reasonable reconstruction of the images. These methods are sometimes known as perceptually lossless, which means that up to a certain point, the reconstructed image will be virtually indistinguishable from the original, because the differences are so subtle (Figure 11.8).

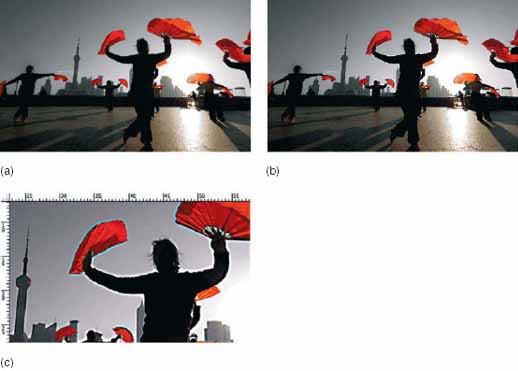

Figure 11.8 Perceptually lossless compression: (a) original uncompressed image and (b) image compressed to a compression ratio of 10:1.

The most commonly used lossy image compression method, JPEG, allows the user to select a quality setting based on the visual quality of the image. Compression ratios of up to 100:1 are possible, with a loss in image quality which increases with decreasing file size (Figure 11.9).
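The quality setting is typically turned into compression by scaling a quantization table: larger table values discard more information. As an illustration, the sketch below follows the convention of the freely available IJG libjpeg library, which uses a 1–100 quality scale and scales the example luminance table given in Annex K of the JPEG standard. Applications such as Photoshop use their own quality scales and mappings, which this sketch does not attempt to reproduce.

```python
# Example luminance quantization table (JPEG standard, ITU-T T.81, Annex K),
# listed row by row for an 8 x 8 block of DCT coefficients.
BASE_LUMA = [
    16, 11, 10, 16, 24, 40, 51, 61,
    12, 12, 14, 19, 26, 58, 60, 55,
    14, 13, 16, 24, 40, 57, 69, 56,
    14, 17, 22, 29, 51, 87, 80, 62,
    18, 22, 37, 56, 68, 109, 103, 77,
    24, 35, 55, 64, 81, 104, 113, 92,
    49, 64, 78, 87, 103, 121, 120, 101,
    72, 92, 95, 98, 112, 100, 103, 99,
]


def scaled_table(quality):
    """Scale BASE_LUMA for a 1-100 quality setting (libjpeg-style, baseline limits)."""
    quality = min(100, max(1, quality))
    scale = 5000 // quality if quality < 50 else 200 - 2 * quality
    return [min(255, max(1, (q * scale + 50) // 100)) for q in BASE_LUMA]


for q in (95, 75, 50, 10):
    print(q, scaled_table(q)[:8])   # first row: low to high horizontal frequency
```

Lower quality settings give larger divisors, so more of the fine-detail coefficients round to zero and the file shrinks, at the cost of the errors seen in Figure 11.9.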

More recently, JPEG 2000 has been developed; its lossy version allows higher compression ratios than those achieved by JPEG, again with the introduction of errors into the image and a loss in image quality. JPEG 2000 seems to offer a slight improvement in image quality, but more importantly it is more flexible and versatile than JPEG. JPEG 2000 has yet to be widely adopted, but is likely to become more popular over the next few years as the demands of modern digital imaging evolve.

Compression artefacts and workflow considerations

Lossy compression introduces error into the image, and each lossy method has its own characteristic artefacts. How bothersome these artefacts are depends very much on the scene itself, as certain types of scene content will mask or accentuate particular artefacts. The only way to avoid compression artefacts is to compress less heavily.

Figure 11.9 Loss in image quality compared to file size. When compressed using a lossy method such as JPEG, the image begins to show distortions which are visible when the image is examined close-up. The loss in quality increases as file size decreases. (a) Original image, 3.9 MB, (b) JPEG Q6, 187 KB, (c) JPEG Q3, 119 KB and (d) JPEG Q0, 63.3 KB.

JPEG divides the image into 8 × 8 pixel blocks before compressing each one separately. On decompression of heavily compressed images, this can lead to a blocking artefact: the edges of neighbouring blocks no longer match exactly, because information has been discarded from each block independently. This artefact is particularly visible in areas of smoothly changing tone. The other artefact common in JPEG images is a ringing artefact, which tends to show up around high-contrast edges as a slight ‘ripple’. This is similar in appearance to the halo artefact that appears as a result of oversharpening and therefore does not always detract from the quality of the image in the same way as the blocking artefact.
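The core of JPEG's lossy step can be sketched for a single 8 × 8 block. The block is transformed with a discrete cosine transform (DCT), each frequency coefficient is divided by a quantization step and rounded, and the process is then reversed. The rounding is where information is discarded. This is a simplified sketch. The quantization steps are hypothetical (coarser for higher frequencies, in the spirit of JPEG's tables), and the entropy coding that actually shrinks the file is omitted. Because each block is quantized independently, neighbouring blocks can disagree at their shared edges.

```python
import math

N = 8  # JPEG block size


def c(k):
    """Normalization factor of the orthonormal DCT-II."""
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)


def basis(k, n):
    return math.cos((2 * n + 1) * k * math.pi / (2 * N))


def dct2(block):
    """2-D DCT of an 8 x 8 block: coeffs[u][v], u vertical and v horizontal frequency."""
    return [[c(u) * c(v) * sum(block[x][y] * basis(u, x) * basis(v, y)
                               for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]


def idct2(coeffs):
    """Inverse of dct2."""
    return [[sum(c(u) * c(v) * coeffs[u][v] * basis(u, x) * basis(v, y)
                 for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]


def step(u, v):
    """Hypothetical quantization step: coarser for higher frequencies."""
    return 8 + 4 * (u + v)


def compress_block(block):
    """Quantize one block; return reconstructed pixels and the count of zeroed coefficients."""
    shifted = [[p - 128 for p in row] for row in block]   # centre values on zero
    coeffs = dct2(shifted)
    q = [[round(coeffs[u][v] / step(u, v)) for v in range(N)] for u in range(N)]
    restored = idct2([[q[u][v] * step(u, v) for v in range(N)] for u in range(N)])
    pixels = [[min(255, max(0, round(p + 128))) for p in row] for row in restored]
    zeros = sum(value == 0 for row in q for value in row)
    return pixels, zeros


# A smooth, hypothetical block: brightness increasing to the right and downwards.
block = [[100 + 4 * y + 2 * x for y in range(N)] for x in range(N)]
pixels, zeros = compress_block(block)
max_error = max(abs(a - b) for ra, rb in zip(block, pixels) for a, b in zip(ra, rb))
print(f"{zeros} of 64 coefficients quantized to zero, max pixel error {max_error}")
```

For this smooth block almost every coefficient rounds to zero and the pixel errors stay small. Coarser steps, or blocks with fine detail, produce larger errors that differ from block to block.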

One of the methods by which both JPEG and JPEG 2000 achieve compression is by separating colour from tonal information. Because the human visual system is more tolerant of distortion in colour than in tone, the colour channels are then compressed more heavily than the luminance channel. However, this means that both formats can suffer from colour artefacts, which are often visible in neutral areas of the image.
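The separation of tone from colour, and the heavier treatment of colour, can be sketched with the full-range YCbCr conversion used by JFIF files and a simple 2 × 2 averaging of the colour channels (often called 4:2:0 subsampling). Subsampling is one common option, not the only one, and the four pixel values are hypothetical.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range YCbCr as used by JFIF: Y carries tone, Cb and Cr carry colour."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def subsample_2x2(channel):
    """Keep one averaged value per 2 x 2 block (dimensions assumed even)."""
    return [[(channel[i][j] + channel[i][j + 1] + channel[i + 1][j] + channel[i + 1][j + 1]) / 4
             for j in range(0, len(channel[0]), 2)]
            for i in range(0, len(channel), 2)]


# Hypothetical 2 x 2 RGB image.
image = [[(250, 250, 250), (240, 240, 240)],
         [(200, 30, 30), (30, 30, 200)]]
ycc = [[rgb_to_ycbcr(*p) for p in row] for row in image]
Y = [[p[0] for p in row] for row in ycc]                  # full resolution
Cb = subsample_2x2([[p[1] for p in row] for row in ycc])  # quarter resolution
Cr = subsample_2x2([[p[2] for p in row] for row in ycc])
stored = sum(len(r) for r in Y) + sum(len(r) for r in Cb) + sum(len(r) for r in Cr)
print(stored, "values stored instead of", 3 * 4)  # 6 values stored instead of 12
```

A neutral pixel has Cb and Cr of exactly 128, so any error introduced into the colour channels shows up directly as a tint. This is why colour artefacts are most noticeable in neutral areas.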

Because JPEG 2000 does not divide the image into blocks before compression, it does not produce the blocking artefact of JPEG. It still suffers to some extent from ringing, however, and its lossy version produces its own ‘smudging’ artefacts (Figure 11.10).

Figure 11.10 (a) Uncompressed image. Lossy compression artefacts produced by (b) JPEG and (c) JPEG 2000.

Because of these artefacts, lossy compression methods should be used with caution. In particular, it is important to remember that every time an image is opened, edited and resaved using a lossy format, further errors are incurred. Lossy methods are useful for images where file size is more important than quality, such as for images to be displayed on web pages or sent by email. The lower resolution of displayed images compared to printed images means that there is more tolerance for error.

Properties of common image file formats

TIFF (tagged image file format)

TIFF is the most commonly used lossless standard for imaging and one of the earliest image file formats to be developed and standardized. Until a few years ago, TIFF was the format of choice for images used for high-quality and professional output and of course for image archiving, either by photographers or by picture libraries. TIFF is also used in applications in medical imaging and forensics, where image integrity must be maintained. TIFF files are usually larger than the raw data itself unless some form of compression has been applied. A point to note is that in the later versions of Photoshop, TIFF files allow an option to compress using JPEG. If this option is selected the file will no longer be lossless. TIFF files support 16 bit colour images and in Photoshop also allow layers to be saved without being flattened down, making it a reasonable option as an editing format (although other formats are more suitable). The common colour modes are all supported, some of which can include alpha channels. The downside is the large file size and TIFF is not offered as an option in many digital cameras as a result of this, especially since capture using RAW files has now begun to dominate.

PSD (photoshop document)

PSD is Photoshop’s proprietary format and is the default option when editing images in Photoshop. It is lossless and allows multiple layers to be saved. A pixel layer covering the whole image can contain as much data as the original image, so adding one such layer can roughly double the file size. For this reason, file sizes can become very large, which makes PSD an unsuitable format for permanent storage. It is therefore better used as an intermediate format, with one of the other formats being used once the image is finished and ready for output.

EPS (encapsulated postscript)

This is a standard format which can contain text, vector graphics and bitmap (raster) images. Vector graphics are used in illustration and desktop publishing packages. EPS is used to transfer images and illustrations in postscript language between applications, for example images embedded in page layouts which can be opened in Photoshop. EPS is lossless and provides support for multiple colour spaces, but not alpha channels. The inclusion of all the extra information required to support both types of graphics means that file sizes can be very large.

PDF (portable document format)

PDF files, like EPS, provide support for vector and bitmap graphics and allow page layouts to be transferred between applications and across platforms. PDF also preserves fonts and supports 16 bit images. Again, the extra information results in large file sizes.

GIF (graphics interchange format)

GIF images are indexed, resulting in a huge reduction in file size compared with a standard 24 bit RGB image. GIF, whose LZW compression method was patented, was developed as a format for images to be displayed on the Internet, where the reduction in colours and the associated loss in quality are tolerated in exchange for smaller files and faster transmission. GIF is therefore only really suitable for this purpose.

PNG (portable network graphics)

PNG was developed as a patent-free alternative to GIF for the lossless compression of images on the Web. It supports full 8 and 16 bit RGB images and greyscale as well as indexed images. As a lossless format, compression rates are limited and PNG images are not recognized by all imaging applications.

JPEG (joint photographic experts group)

JPEG is the most commonly used lossy standard. It supports 8 bit RGB, CMYK and greyscale images. When saving from a program such as Photoshop, the user chooses a quality setting, on a scale such as 0–10 or 0–12, to control file size. In digital cameras there is less control, so the user will normally only be able to select low-, medium- or high-quality settings. JPEG files can be anything from 1/10 to 1/100 of the size of the uncompressed file, meaning that a much larger number of images can be stored on a camera memory card. However, the loss in quality, which becomes severe at high compression, makes it an unsuitable format for high-quality output.

JPEG 2000

JPEG 2000 is a more recent standard, also developed by the JPEG committee, with the aim of being more flexible. It allows for 8 bit and 16 bit colour and greyscale images, supports RGB, CMYK, greyscale and LAB colour spaces and also preserves alpha channels. It allows both lossless and lossy compression; the lossless mode makes it suitable as an archiving format. The lossy version uses different compression methods from JPEG and aims to provide a slight improvement in quality for the same amount of compression. JPEG 2000 images have begun to be supported by some digital SLRs and there are plug-ins for most of the relevant image processing applications. However, JPEG 2000 images can only be viewed on the Web if the browser has the relevant plug-in.

Image processing

There is a huge range of processes that may be applied to a digital image to optimize, enhance or manipulate the results. Equally, there are many types of software to achieve this, from relatively simple easy-to-use applications bundled with devices for the consumer, to high-end professional applications such as Adobe Photoshop. It is beyond the scope of this book to cover the full range of image processing, or to delve into the complexities of the applications. The Adobe Photoshop workspace and various tools are dealt with in more detail in Langford’s Basic Photography. There are, however, a number of processes that are common to most image processing software packages and will be used time and again in adjusting images; it is useful to understand these, in particular the more professional tools. The following sections concentrate on these key processes and how they fit into an image processing workflow.

Image processing techniques are fundamentally numerical manipulations of the image data and many operations are relatively simple. There are often multiple methods to achieve the same effect. Image processing is applied in some form at all stages in the imaging chain (Figure 11.11).

The operations may be applied automatically at device level, for example by the firmware in a digital camera, with no control by the user. They may also be applied by scanner, camera or printer software in response to user settings, and some functions are applied ‘behind the scenes’, such as the gamma correction applied to image values by the computer’s graphics card so that image tones are displayed correctly on the monitor. The highest level of user control is achieved in a dedicated application such as Adobe Photoshop or ImageJ.

Figure 11.11 Image processing through the imaging chain.

Because the image is being processed throughout the image chain, image values may be repeatedly changed, redistributed, rounded up or down and it is easy for image artefacts to appear as a result of this. Indeed, some image processing operations are applied automatically to correct for the artefacts introduced by other operations, for example image sharpening in the camera, which is applied to counteract the softening of the image caused by noise removal algorithms and interpolation. When the aim is to produce professional quality photographic images, the approach to image editing has to be ‘less is more’, avoiding overapplication of any method, applying by trial and error, using layers and history to allow correction of mistakes, with care taken in the order of application and an understanding of why a particular process is being carried out.

Using layers

Making image adjustments using layers is an option in the more recent versions of applications such as Photoshop and allows image processing operations to be applied and the results seen, without being finalized. A background layer contains the unchanged image information, and instead of changing this original information, for example, a tonal adjustment can be applied to all or part of the image in an adjustment layer. While the layer is on top of the background and switched ‘on’ the adjustment can be seen on the image. If an adjustment is no longer required, the layer can be discarded. Selections can also be made from the image and copied into layers, meaning that adjustments may be performed to just that part of the image, or that copied part can be imported into another image. Layers can be grouped, their order can be changed to produce different effects, their opacity changed to alter the degree by which they affect the image and they can be blended into each other using a huge range of blending modes. Layers can also be made partially transparent, using gradients, meaning that in some areas the lower layers or the original image state will show through. Working with layers allows image editing to become an extremely fluid process, with the opportunity to go back easily and correct, change or cancel operations already performed. When the editing process is finished, the layers are flattened down and the effects are then permanent.
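The non-destructive principle can be modelled in a few lines: the background values are never altered, and each adjustment layer is only evaluated when the image is displayed. The sketch is a simplified, hypothetical model of normal-mode adjustment layers with opacity, working on a single row of 8 bit values. Real applications add many blending modes, masks and layer types.

```python
def render(background, layers):
    """Composite adjustment layers over the background for display.

    background: list of 8 bit values (left untouched).
    layers: list of (adjust, opacity) pairs, bottom layer first, where adjust is a
    function applied to each value and opacity runs from 0 (no effect) to 1."""
    out = [float(v) for v in background]
    for adjust, opacity in layers:
        out = [(1 - opacity) * v + opacity * adjust(v) for v in out]
    return [min(255, max(0, round(v))) for v in out]


background = [10, 100, 200]
brighten = (lambda v: v + 40, 1.0)      # hypothetical tonal adjustment layer
print(render(background, [brighten]))   # adjustment visible
print(render(background, []))           # layer discarded: original values return
flattened = render(background, [brighten])  # merging down: results become the new pixels
```

Lowering a layer's opacity scales its effect, and deleting it restores the original exactly, because the background list is never overwritten until the image is flattened.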

Image adjustment and restoration techniques

Image adjustments are a basic set of operations applied to images post-capture to optimize quality and image characteristics, possibly for a specific output. These operations can also be applied in the capture software after previewing the image (if scanning), or in RAW processing software such as Camera RAW and Lightroom. Image adjustments include cropping, rotation, resizing and tone and colour adjustments. Note that an image may be resized without resampling, simply to set the image dimensions at a particular output resolution; this does not change the number of pixels in the image and does not count as an image adjustment.
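Resizing without resampling only changes the relationship between pixels and output dimensions: the printed size is the pixel count divided by the output resolution. A minimal sketch for a hypothetical 3000 × 2000 pixel image:

```python
def print_size_inches(width_px, height_px, ppi):
    """Output dimensions when only the resolution setting changes (no resampling)."""
    return width_px / ppi, height_px / ppi


w, h = 3000, 2000  # hypothetical pixel dimensions; these never change below
for ppi in (300, 240, 72):
    wi, hi = print_size_inches(w, h, ppi)
    print(f"{ppi} ppi: {wi:.2f} x {hi:.2f} in ({wi * 2.54:.1f} x {hi * 2.54:.1f} cm)")
```

Every line describes the same 6 million pixels; only a resampling operation would change that number.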

Image correction or restoration techniques correct for problems at capture. These can be globally applied or may be more local adjustments, i.e. applied only to certain selected pixels, or applied to different pixels by different amounts. Image corrections include correction of geometric distortion, sharpening, noise removal, localized colour and tonal correction and restoration of damaged image areas. It is not possible to cover all the detailed methods available in all the different software applications to implement these operations; however some general principles are covered in the next few sections, along with the effects of overapplication.

Image processing workflow

The details of how adjustments are achieved vary between applications, but they have the same purpose, therefore a basic workflow can be defined. You may find alternative workflows work better for you, depending upon the tools that you use; what is important is that you think about why you are performing a particular operation at a particular stage and what the implications are of doing things in that order. It will probably require a good degree of trial and error. A typical workflow might be as follows:

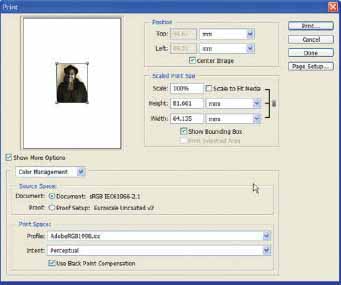

1. Image opened, colour profile identified/assigned: In a colour profiled workflow, the profile associated with the image will be applied when the image is opened (see later section on ICC colour management). This ensures that the image colours are accurately displayed on screen.

2. Image resized: This is the first of a number of resampling operations. If the image is to be resized down, then it makes sense to perform it early in the workflow, to reduce the amount of time that other processes may take.

3. Cropping, rotation, correction of distortion: These are all spatial operations also involving resampling and interpolation and should be performed early in the workflow allowing you to frame the image and decide which parts of the image content are important or less relevant.

4. Setting colour temperature: This applies to Camera RAW and Lightroom, as both are applications dealing with captured images, and involves an adjustment of the white point of the image, to achieve correct neutrals. This should be performed before other tone or colour correction, as it will alter the overall colour balance of the image.

5. Global tone correction: This should be applied as early as possible, as it will balance the overall brightness and contrast of the image and may alter the colour balance of the image. There is a range of exposure, contrast and brightness tools available in applications such as Photoshop, Lightroom and Camera RAW. The professional tools, allowing the highest level of user control, especially over clipping of highlights or shadows, are based upon levels or curves adjustments, which are covered later in this chapter.

6. Global colour correction: After tone correction, colours may be corrected in a number of ways. Global corrections are usually applied to remove colour casts from the image. If the cast extends across the whole range from shadows to highlights, correction is easier: one or several colour channels are changed, and the simpler tools, such as ‘photo filter’ in Photoshop, will be successful. More commonly, only part of the brightness range, such as the highlights, will need correcting, in which case altering the curves of the three channels affords more control. There are other simple tools, such as colour balance in Photoshop, and the saturation tool. Care should be taken with these, as they can produce rather crude results and may increase the number of colours that are out-of-gamut (i.e. outside the colour gamuts of some or all of the output devices and therefore impossible to reproduce accurately).

7. Noise removal: There is a range of filters created specifically for the removal of various types of noise. Applying them globally may remove unwanted dust and scratches, but care should be taken as they often soften edges. Because of this, it is often better to apply them in Photoshop if possible, as you have a greater range of filters at your disposal and you can use layers to fine-tune the result.

8. Localized image restoration: These are the corrections to areas that the noise removal filters did not work on. They usually involve careful use of some of the specially designed restoration tools in Photoshop, such as the healing brush or the patch tool. In other packages this may involve using some form of paint tool.

9. Local tone and colour corrections: These are better carried out after correction for dust and scratches. They involve the selection of specific areas of the image, followed by correction as before.

10. Sharpening: This is also a filtering process. Again, there is a larger range of sharpening tools available in Photoshop and a greater degree of control afforded by using layers.

Image resizing, cropping, rotation and correction of distortion

These are resampling operations that involve the movement or deletion of pixels or the introduction of new pixels. They also involve interpolation, which is the calculation of new pixel values based on the values of their neighbours. Cropping alone involves dropping pixels without interpolation, but is often combined with resizing or rotation. Depending upon where in the imaging chain these operations are performed, the interpolation method may be predefined and optimized for a particular device, or may be something that the user selects. The main methods are as follows:

• Nearest neighbour interpolation is the simplest method, in which the new pixel value is allocated based on the value of the pixel closest to it.

• Bilinear interpolation calculates the new pixel value as a distance-weighted average of its four closest neighbours and therefore produces significantly better results than nearest neighbour sampling.

• Bicubic interpolation involves a more complicated calculation using 16 of the pixel’s neighbouring values. It is the slowest of the three methods, but it also produces the best results, with fewer visible artefacts, and is therefore the best technique for maintaining image quality.
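The first two methods can be sketched directly. The functions below return the value at a fractional position (x, y) in an image stored as a list of rows. Bicubic interpolation, which fits curves through a 4 × 4 neighbourhood, is omitted for brevity. The border handling (clamping to the nearest edge pixel) is an assumption, and implementations differ in how they treat edges.

```python
import math


def clamp(value, low, high):
    return min(high, max(low, value))


def nearest(img, x, y):
    """Value of the pixel whose centre is closest to (x, y); img is a list of rows."""
    col = clamp(math.floor(x + 0.5), 0, len(img[0]) - 1)
    row = clamp(math.floor(y + 0.5), 0, len(img) - 1)
    return img[row][col]


def bilinear(img, x, y):
    """Distance-weighted average of the four pixels around (x, y); needs at least 2 x 2 pixels."""
    x = clamp(x, 0.0, len(img[0]) - 1)
    y = clamp(y, 0.0, len(img) - 1)
    x0 = min(math.floor(x), len(img[0]) - 2)
    y0 = min(math.floor(y), len(img) - 2)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * img[y0][x0] + fx * img[y0][x0 + 1]
    bottom = (1 - fx) * img[y0 + 1][x0] + fx * img[y0 + 1][x0 + 1]
    return (1 - fy) * top + fy * bottom


# A hypothetical 2 x 2 image containing a vertical dark/light edge.
edge = [[0, 255],
        [0, 255]]
print(nearest(edge, 0.4, 0.5))   # 0: copies the closer (darker) pixel
print(bilinear(edge, 0.4, 0.5))  # about 102: a new in-between value that softens the edge
```

Nearest neighbour only ever copies existing values, which is why it produces blocky magnified pixels, while bilinear creates intermediate values, which is the source of its smoothing.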

Interpolation artefacts and workflow considerations

In terms of workflow, these operations are usually the first to be applied to the image, as it makes sense to decide on image size and content before applying colour or tonal corrections to pixels that might not exist after resampling has been applied. Because interpolation involves the calculation of missing pixel values it inevitably introduces a loss in quality.

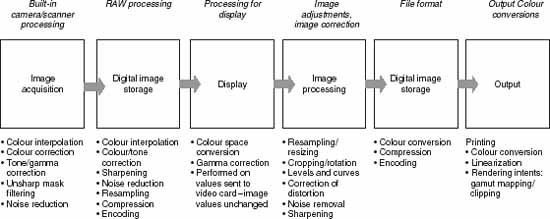

Nearest neighbour interpolation is a rather crude method of allocating pixel values and produces an effective magnification of pixels as well as a jagged effect on diagonals (see Figure 11.12(b)). The jagged effect is actually an aliasing artefact (see Chapter 6) resulting from undersampling of the edge. Both effects are so visually severe that it is hard to see when this method would be selected, especially as the other methods produce much more pleasing results.

Bilinear and bicubic interpolation methods, however, involve a process of averaging surrounding values. Averaging processes produce another type of artefact, the blurring of edges and fine detail (see Figure 11.12(c) and (d)). This again is more severe the more that the interpolation is applied. Because bicubic interpolation uses more values in the calculation of the average, the smoothing effect is not as pronounced as it is with bilinear.

Figure 11.12 Interpolation artefacts. (a) Original image. A small version of this image is resampled to four times its original size using various interpolation methods. (b) Nearest neighbour interpolation shows staircasing on diagonals. (c) Bilinear interpolation produces significant blurring on edges. (d) There is some blurring with bicubic interpolation, but it clearly produces the best results of the three.

Repeated application of any of these operations simply compounds the loss in image quality, as interpolated values are calculated from interpolated values, becoming less and less accurate. For this reason, these operations are best applied in one go wherever possible. Therefore, if an image requires rotation by an arbitrary amount, find the exact amount by trial and error and then apply it in one application rather than repeating small amounts of rotation incrementally. Equally, if an image requires both cropping and perspective correction, perform both in a combined single operation to maintain maximum image quality.
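The compounding effect can be shown in one dimension. The sketch below is a simplified model using linear interpolation on a hypothetical row of pixels containing a sharp edge. It moves the row one pixel to the right, once in a single operation and once as two half-pixel steps. The total movement is the same, but the two-step version interpolates from already interpolated values and smears the edge across two pixels.

```python
def shift_right(row, d):
    """Shift a row of pixels right by d pixels (0 <= d <= 1) with linear interpolation.

    Each new value lies between the old pixels i - 1 and i; the first pixel is
    repeated at the left border."""
    padded = [row[0]] + row
    return [(1 - d) * padded[i + 1] + d * padded[i] for i in range(len(row))]


edge = [0, 0, 0, 255, 255, 255]
once = shift_right(edge, 1.0)                     # one operation
twice = shift_right(shift_right(edge, 0.5), 0.5)  # same movement in two steps
print(once)   # [0.0, 0.0, 0.0, 0.0, 255.0, 255.0]: edge still sharp
print(twice)  # [0.0, 0.0, 0.0, 63.75, 191.25, 255.0]: edge blurred
```

A rotation or perspective correction applied in several small steps behaves in the same way, which is why the exact amount should be found first and applied once.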

Tone and colour corrections

These are methods for redistributing values across the tonal range in one or more channels to improve the apparent brightness or contrast of the image, to bring out detail in the shadows or the highlights or to correct a colour cast. These corrections are applied extensively throughout the imaging chain, to correct for the effects of the tone or gamut limitations of devices, or for creative effect, to change the mood or lighting in the image.

Brightness and contrast controls

Figure 11.13 Auto brightness control applied to the image.

Figure 11.14 Levels adjustment to improve tone and contrast: (a) original image and its histogram, (b) shadow control levels adjustment, (c) highlight control levels adjustment, (d) mid-tone adjustment and (e) final image and histogram.

The simplest methods of tonal correction are the basic brightness and contrast settings found in most image processing interfaces, which involve moving a slider or entering a number (Figure 11.13). These are not really professional tools: they often simply add the same amount to, or subtract it from, all pixel values, or multiply or divide all values by the same amount, so there is relatively limited control over the process. With even less control are the ‘auto’ brightness and contrast tools, which are simply applied to the image and allow the user no control whatsoever, often resulting in posterization through lost pixel values.
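The simple arithmetic behind these controls, and the tones it loses, can be sketched as follows. The pivot at mid-grey and the amounts used are assumptions for illustration; applications implement their sliders in different ways. The sketch applies each control to one pixel of every possible 8 bit tone and counts how many distinct tones survive.

```python
def clip(v):
    """Keep a value inside the 8 bit range."""
    return min(255, max(0, v))


def brightness(values, amount):
    """Add the same amount to every value (a negative amount darkens)."""
    return [clip(v + amount) for v in values]


def contrast(values, factor, pivot=128):
    """Multiply every value's distance from mid-grey by the same factor."""
    return [clip(round(pivot + factor * (v - pivot))) for v in values]


tones = list(range(256))               # one pixel of every possible 8 bit tone
brighter = brightness(tones, 60)
punchier = contrast(tones, 1.5)
print(len(set(brighter)), "distinct tones after brightness +60")
print(len(set(punchier)), "distinct tones after contrast x1.5")
```

Adding 60 piles every tone above 195 onto pure white and leaves nothing below 60, while the contrast boost clips both ends and leaves gaps between the surviving values. Both are forms of the lost pixel values described above.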

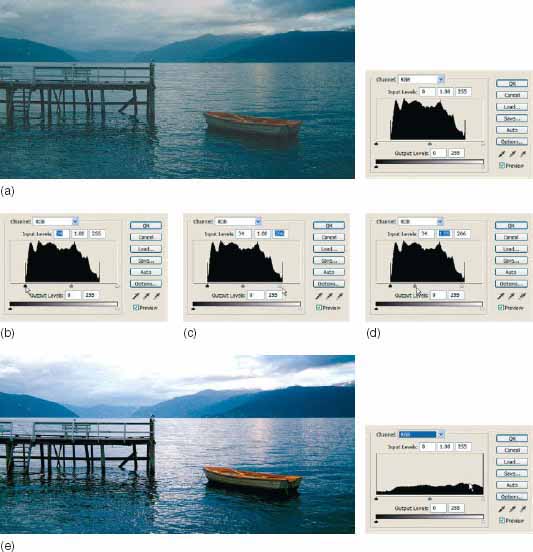

Adjustments using levels

Levels adjustments use the image histogram and allow the user interactive control over the distribution of tones in shadows, midtones and highlights. A simple technique for improving the tonal range is illustrated in Figure 11.14, where the histogram of a low-contrast image is improved by (1) sliding the shadow control to the edge of the left-hand side of the range of tonal values, (2) sliding the highlight slider to the right-hand side of the range and (3) sliding the mid-tone slider to adjust overall image brightness.

The same process can be applied separately to the channels in a colour image. Altering the shadow and highlight controls of all three channels will improve the overall image contrast. Altering the mid-tone sliders by different amounts will alter the overall colour balance of the image.
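A levels adjustment can be expressed as a look-up table built from the slider positions. The sketch below uses a common formulation in which the black and white input points stretch the range linearly and the mid-tone slider acts as a gamma (power) adjustment. It is an assumption that any particular application computes its levels exactly this way, and the slider values are hypothetical.

```python
def levels(value, black=0, white=255, gamma=1.0):
    """Map one 8 bit value: clip to [black, white], stretch to 0-255, apply mid-tone gamma."""
    t = (value - black) / (white - black)
    t = min(1.0, max(0.0, t))
    return round(255 * t ** (1 / gamma))


def levels_lut(**sliders):
    """Pre-compute the adjustment for all 256 input values."""
    return [levels(v, **sliders) for v in range(256)]


# Hypothetical low-contrast image whose tones lie between 60 and 190.
lut = levels_lut(black=60, white=190, gamma=1.2)   # gamma > 1 lightens the mid-tones

# Applied per channel, slightly different mid-tone settings shift the colour balance.
red_lut = levels_lut(black=60, white=190, gamma=1.1)
blue_lut = levels_lut(black=60, white=190, gamma=1.3)
pixel = (120, 125, 130)
print(red_lut[pixel[0]], lut[pixel[1]], blue_lut[pixel[2]])
```

Setting the black and white points to the ends of the occupied range stretches it across 0–255, as in Figure 11.14; using different mid-tone values per channel is what alters the overall colour balance.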

Curves

These are manipulations of the tonal range using the transfer curve of the image, which is a simple mapping function allowing very precise control over specific parts of the tonal range. The curve shows output (vertical axis) plotted against input (horizontal axis). Shadows are usually at the bottom left and highlights at the top right. Before any editing it is displayed as a straight line at 45° (Figure 11.15(a)). As with levels it is possible to display the combined curve, in this case RGB, or the curves of individual colour channels.

The curve can be manipulated by selecting a point on it and moving it. If the curve is steeper than 45° and this is applied globally to the full range of the curve, as shown in Figure 11.15(b), then the contrast of the output image will be higher than that of the input. If the curve is shallower than 45°, contrast will be lowered.
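A curve is in effect a lookup table giving one output value for each input value. The sketch below (the helper `curve_lut` is hypothetical) builds such a table from control points by joining them with straight lines, a simplification of the smooth curves drawn by real editors. The slope between points is what determines whether contrast rises or falls.

```python
def curve_lut(points):
    """Build a 256-entry lookup table from (input, output) control points.

    Points are joined with straight lines, a simplification: editors such as
    Photoshop draw a smooth curve through the points instead. Input values
    must be distinct.
    """
    pts = sorted(points)
    lut = []
    for x in range(256):
        for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
            if x0 <= x <= x1:
                y = y0 + (y1 - y0) * (x - x0) / (x1 - x0)
                break
        else:  # x lies outside the control points: hold the end value
            y = pts[0][1] if x < pts[0][0] else pts[-1][1]
        lut.append(max(0, min(255, round(y))))
    return lut

identity = curve_lut([(0, 0), (255, 255)])   # the unedited 45-degree line
s_curve = curve_lut([(0, 0), (64, 32), (192, 224), (255, 255)])

# Between 64 and 192 the S-curve has slope 1.5 (steeper than 45 degrees),
# so two mid-tones end up further apart than they started.
print(s_curve[150] - s_curve[100], "vs", 150 - 100)
```

Outside that middle section the same curve has a slope of 0.5, so the extra mid-tone contrast is paid for with lower contrast in the deep shadows and bright highlights.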

Figure 11.15 (a) Initial curve, (b) global contrast enhancement, (c) localized correction and (d) overcorrection as a result of too many selection points.

Multiple points can be selected to ‘peg down’ areas of the curve, allowing the effect to be localized to a specific range of values. The more points that are added around the point in question, the more localized the control will be. Again, a steeper curve indicates an increase in contrast and a shallower one a decrease (Figure 11.15(c) and (d)). Using a larger number of selected points allows a high degree of local control; however, it is important to keep checking the effect on the image, as too many ‘wiggles’ are not necessarily a good thing: in the top part of the curve in Figure 11.15(d), the distinctive bump actually indicates a reversal of tones.
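The same lookup-table view gives a simple way to check a heavily edited curve. In this sketch the curve and both helper functions are hypothetical; a negative slope anywhere means that brighter inputs produce darker outputs, which is the tone reversal described above.

```python
def average_slope(lut, x0, x1):
    """Mean gradient of the curve between inputs x0 and x1: above 1 means
    contrast is increased there, below 1 reduced, below 0 tones are reversed."""
    return (lut[x1] - lut[x0]) / (x1 - x0)

def reversals(lut):
    """Input values at which the output falls as the input rises."""
    return [x for x in range(1, len(lut)) if lut[x] < lut[x - 1]]

# A hypothetical overworked curve: the 45-degree line plus a bump centred on 210.
lut = [min(255, x + 2 * max(0, 20 - abs(x - 210))) for x in range(256)]

print(average_slope(lut, 195, 205))   # rising side of the bump: steep
print(average_slope(lut, 215, 225))   # falling side of the bump: negative
print(reversals(lut)[0], "to", reversals(lut)[-1])
```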

Using curves to correct a colour cast

This is where an understanding of basic colour theory is useful. Because the image is made up of only three (or four) colour channels, most colour casts can be corrected using one of these. Look at the image and identify the main hue of the colour cast; from this, you can work out which colour channel to correct. Both a primary and its complementary colour are corrected by the same colour channel: in an RGB image, a red or cyan cast is corrected using the red channel, a green or magenta cast using the green channel, and a blue or yellow cast using the blue channel.
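One way to decide which channel to adjust is to sample an area that ought to be neutral grey and see which channel is out of line with the others. The helper below is a hypothetical sketch of that reasoning, assuming an RGB image and a reliable neutral sample; in practice the judgement is often made by eye or with an eyedropper tool.

```python
PRIMARIES = ("red", "green", "blue")
COMPLEMENTS = ("cyan", "magenta", "yellow")   # complement of each primary above

def diagnose_cast(neutral_rgb):
    """Given the RGB values of an area that should be neutral grey, name the
    cast, the channel that corrects it, and a curve point that would help.

    The channel furthest from the mean of the three is taken as the cause:
    too high means a cast of that primary, too low a cast of its complement.
    """
    mean = sum(neutral_rgb) / 3
    i = max(range(3), key=lambda k: abs(neutral_rgb[k] - mean))
    cast = PRIMARIES[i] if neutral_rgb[i] > mean else COMPLEMENTS[i]
    # Dragging that channel's curve point at the sample value to the mean brings
    # the worst channel into line; the others may still need a smaller tweak.
    return cast, PRIMARIES[i], (neutral_rgb[i], round(mean))

print(diagnose_cast((140, 120, 118)))   # too much red
print(diagnose_cast((120, 120, 100)))   # too little blue, i.e. a yellow cast
```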

Figure 11.16 (a) Original image. Posterized image (b) and its histogram (c).

Artefacts as a result of tone or colour corrections

As with all image processes, overzealous application of any of these methods can result in unwanted effects in the image. Obvious casts may be introduced by overcorrecting one colour channel compared with the others. Overexpansion of the tonal range in any part can result in missing values and a posterized image (see Figure 11.16). Lost levels cannot be retrieved without undoing the operation, so this should be avoided by applying corrections more moderately and by using 16-bit images wherever possible. Another possible effect is the clipping of values at either end of the range, which results in loss of shadow detail and burnt-out highlights and shows as a peak at either end of the histogram.
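The sketch below, using a hypothetical `stretch` helper and synthetic ramps rather than real image data, shows why 16-bit data helps: the same low-contrast tonal range stretched to full contrast leaves gaps in 8-bit data, but fills every level when the stretch is done in 16 bits and the result converted to 8 bits. It also measures clipping as the fraction of pixels pushed to either end of the range.

```python
def stretch(values, lo, hi, max_out):
    """Linearly map input range lo..hi onto 0..max_out, clipping values outside it."""
    return [max(0, min(max_out, round((v - lo) * max_out / (hi - lo)))) for v in values]

def clipped_fraction(values, max_out):
    """Fraction of pixels piled up at pure black or pure white."""
    return sum(v in (0, max_out) for v in values) / len(values)

# 8-bit: a low-contrast image using only levels 100-155 (56 levels).
img8 = list(range(100, 156))
out8 = stretch(img8, 100, 155, 255)
print(len(set(out8)), "of 256 output levels used")   # the rest are gaps

# 16-bit: the same tonal range holds about 257 times as many levels.
img16 = list(range(100 * 257, 155 * 257 + 1))
out16 = stretch(img16, 100 * 257, 155 * 257, 65535)
print(len({round(v / 257) for v in out16}), "of 256 levels used after converting to 8-bit")

# Stretching too far clips: the ends of the range pile up at 0 and 255.
print(clipped_fraction(stretch(img8, 110, 145, 255), 255))
```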

Filtering operations

Both noise removal and image sharpening are generally applied using filtering. Spatial filtering techniques are neighbourhood operations, where the output pixel value is defined as some combination of, or selection from, the neighbourhood of values around the corresponding input pixel. The methods discussed here are limited to the filters used for correcting images, not the large range of creative special-effects filters in the filter menu of image editing software such as Adobe Photoshop.

The filter (or mask) is simply an array of values which is placed over the neighbourhood around the input pixel. In linear filtering, each value in the mask is multiplied by the image value beneath it, and the products are added together (and sometimes averaged) to give the output value. Blurring and sharpening filters are generally of this type. Non-linear filters simply use the mask to select the neighbourhood. Instead of multiplying the neighbourhood by the mask values, the selected pixels are sorted and a single value from the neighbourhood is output, depending on the operation being applied. The median filter is an example: the median value is output, eliminating very high or low values in the neighbourhood, which makes it very successful for noise removal.
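Both kinds of neighbourhood operation can be sketched on a small greyscale image stored as a list of lists. This is an illustrative implementation, not how image editors compute filters (theirs are heavily optimized); the border handling, which repeats the edge pixels, is one assumption among several in common use.

```python
from statistics import median

def neighbourhood(img, r, c, size=3):
    """Values in a size x size window centred on (r, c).
    Pixels beyond the border repeat the nearest edge pixel (one common choice)."""
    h, w, k = len(img), len(img[0]), size // 2
    return [img[min(h - 1, max(0, r + dr))][min(w - 1, max(0, c + dc))]
            for dr in range(-k, k + 1) for dc in range(-k, k + 1)]

def linear_filter(img, mask):
    """Multiply each neighbourhood by the mask values and sum the products.
    (Strictly a correlation; identical to convolution for symmetric masks.)"""
    weights = [m for row in mask for m in row]
    return [[sum(m * v for m, v in zip(weights, neighbourhood(img, r, c, len(mask))))
             for c in range(len(img[0]))] for r in range(len(img))]

def median_filter(img, size=3):
    """Non-linear: sort each neighbourhood and output its middle value."""
    return [[median(neighbourhood(img, r, c, size)) for c in range(len(img[0]))]
            for r in range(len(img))]

MEAN_3X3 = [[1 / 9] * 3 for _ in range(3)]   # averaging (blurring) mask

noisy = [[50] * 5 for _ in range(5)]
noisy[2][2] = 255                            # one isolated noise pixel

blurred = linear_filter(noisy, MEAN_3X3)
cleaned = median_filter(noisy)
print(round(blurred[2][2], 1), round(blurred[1][1], 1))  # spike smeared out, not removed
print(cleaned[2][2])                                      # spike replaced by 50
```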

Figure 11.17 Filtering artefacts: (a) Original image (b) Noise removal filters can cause blurring and posterization, and oversharpening can cause a halo effect at the edges. This is clearly shown in (c) which illustrates a magnified section of the sharpened image.

Noise removal

There is a range of both linear and non-linear filters available for removing different types of noise, and specially adapted versions of these may also be built into the software of capture devices. Functions such as digital ICE™ for suppression of dust and scratches in some scanner software are based on adaptive filtering methods. The linear versions of noise removal filters tend to be blurring filters and soften edges, so care must be taken when applying them (Figure 11.17). Non-linear filters such as the median filter, or the ‘dust and speckles’ filter in Photoshop, are better at preserving edges, but can result in posterization if applied too heavily.
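The difference in edge behaviour can be seen on a single row of pixels containing a step edge. In this sketch (the helper name `filter_1d` is hypothetical), a 3-sample mean stands in for a linear noise-removal filter and a 3-sample median for a non-linear one.

```python
from statistics import mean, median

def filter_1d(signal, func, radius=1):
    """Replace each sample with func() of its window of 2 * radius + 1 samples
    (samples beyond the ends repeat the end value)."""
    n = len(signal)
    return [func([signal[min(n - 1, max(0, i + d))] for d in range(-radius, radius + 1)])
            for i in range(n)]

edge = [20, 20, 20, 20, 200, 200, 200, 200]   # a clean step edge (one row of pixels)

print(filter_1d(edge, mean))     # linear: intermediate values appear either side of the step
print(filter_1d(edge, median))   # non-linear: the step stays sharp
```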

Sharpening

Sharpening tends to be applied using linear filters. Sharpening filters emphasize edges, but may also emphasize noise, which is why sharpening is better performed after noise removal. The unsharp mask is a filter based upon a method used in the darkroom in traditional photographic imaging, where a blurred version of the image is subtracted from a boosted version of the original, producing enhanced edges. This can be successful, but again care must be taken not to oversharpen. As well as boosting noise, oversharpening produces a characteristic ‘overshoot’ at edges, similar to adjacency effects, known as a halo artefact (Figure 11.17 (c)). For this reason sharpening is better performed using layers, where the effect can be carefully controlled.
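The unsharp mask described above can be written as `(1 + amount) * original - amount * blurred`, that is, the boosted original minus a weighted blurred copy. A minimal one-dimensional sketch follows, assuming a 3-sample box blur for simplicity; real software typically lets the user set the blur radius and strength.

```python
def box_blur(signal):
    """3-sample average with the end samples repeated at the borders."""
    n = len(signal)
    return [(signal[max(0, i - 1)] + signal[i] + signal[min(n - 1, i + 1)]) / 3
            for i in range(n)]

def unsharp_mask(signal, amount=1.0):
    """Subtract a blurred copy from a boosted copy of the original:
    (1 + amount) * original - amount * blurred."""
    return [(1 + amount) * s - amount * b for s, b in zip(signal, box_blur(signal))]

edge = [50, 50, 50, 50, 150, 150, 150, 150]
sharp = unsharp_mask(edge)
print([round(v) for v in sharp])
# The dark side of the edge dips below 50 and the light side rises above 150:
# the overshoot that shows up as a halo.
```

Flat areas come through unchanged, because there the blurred copy equals the original. Increasing `amount` deepens both the dip and the peak, which is why oversharpening makes the halo more visible.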

Digital colour

Although some early colour systems used additive mixes of red, green and blue, colour in film-based photography is predominantly produced using subtractive mixes of cyan, magenta and yellow (Chapter 4). Both systems are based on trichromatic matching, i.e. colours are created by a combination of different amounts of three pure colour primaries.

Digital input devices and computer displays operate using additive RGB colour. At the print stage, cyan, magenta and yellow dyes are used, usually with black (the key) added to compensate for deficiencies in the dyes and improve the tonal range. In modern printers, more than three colours may be used (six- or even eight-ink printers are now available, and the very latest models by Canon and Hewlett-Packard use 10 or 12 inks to increase the colour gamut), although they are still based on a CMY(K) system (see Chapter 10).
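The relationship between RGB and CMY(K) can be illustrated with the textbook naive conversion, in which cyan, magenta and yellow are the complements of red, green and blue and the grey component common to all three is replaced by black. This is a deliberately simplified sketch: it ignores the behaviour of real inks and papers, which is exactly why printers are characterized with profiles rather than converted this way.

```python
def rgb_to_cmyk(r, g, b):
    """Naive conversion of 8-bit RGB to CMYK fractions (0-1).

    Cyan, magenta and yellow are the complements of red, green and blue;
    the grey component they share is replaced by black (K).
    """
    c, m, y = 1 - r / 255, 1 - g / 255, 1 - b / 255
    k = min(c, m, y)
    if k == 1:                      # pure black: avoid dividing by zero
        return 0.0, 0.0, 0.0, 1.0
    return tuple((x - k) / (1 - k) for x in (c, m, y)) + (k,)

print(rgb_to_cmyk(255, 0, 0))       # red: magenta plus yellow, no cyan or black
print(rgb_to_cmyk(128, 128, 128))   # mid-grey: black ink only
```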

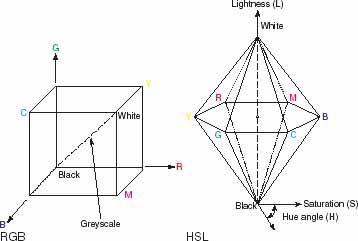

An individual pixel will therefore usually be defined by three (or four, in the case of CMYK) numbers specifying the amount of each primary. These numbers are coordinates in a colour space. A colour space provides a (usually) three-dimensional model into which all possible colours may be mapped (see Figure 11.18). Colour spaces allow us to visualize colours and their relationships to each other spatially (see Chapter 4). RGB and CMYK are two broad classes of colour space, but there is a range of others, as already encountered, some of which are much more precisely defined than others (see next section). The colour space defines the axes of the coordinate system, and within it the colour gamuts of devices and materials may then be mapped; these gamuts are the limits of the range of colours that each can reproduce.

The reproduction of colour in digital imaging is therefore more complex than that in traditional silver halide imaging, because both additive and subtractive systems are used at different stages in the imaging chain. Each device or material in the imaging chain will have a different set of primaries. This is one of the major sources of variability in colour reproduction. Additionally, colour appearance is influenced by how devices are set up and by the viewing conditions. All these factors must be taken into account to ensure satisfactory colour. As an image moves through the digital imaging chain, it is transformed between colour spaces and between devices with gamuts of different sizes and shapes: this is the main problem with colour in digital imaging. The process of ensuring that colours are matched to achieve adequately accurate colour and tone reproduction requires colour management.

Colour spaces

Colour spaces may be divided broadly into two categories: device-dependent and device-independent spaces. Device-dependent spaces are native to input or output devices. Colours specified in a device-dependent space are specific to that device; they are not absolute. Device-independent colour spaces specify colour in absolute terms: a pixel specified in a device-independent colour space should appear the same regardless of the device on which it is reproduced.

Device-dependent spaces are defined predominantly by the primaries of a particular device, but also by the characteristics of the device, based upon how it has been calibrated. This means, for example, that a pixel with RGB values of 100, 25 and 255, when displayed on two monitors from different manufacturers, will probably be displayed as two different colours, because in general the RGB primaries of the two devices will be different. Additionally, as seen in Chapter 4, the colours in output images are also affected by the viewing conditions. Even two devices of the same model from the same manufacturer will produce two different colours if set up differently (see Figure 11.19). RGB and CMYK are generally device dependent, although they can be standardized to become device independent under certain conditions (sRGB is an example).
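The effect shown in Figure 11.19 can be sketched numerically by interpreting the same RGB triplet in two different RGB spaces. Here sRGB and Adobe RGB (1998) stand in for two devices with different primaries (an assumption for illustration; a real monitor's primaries come from its measured profile), using their published RGB-to-XYZ matrices rounded to four decimal places.

```python
# Published RGB-to-XYZ matrices (D65 white), rounded to four decimal places.
SRGB_TO_XYZ = [[0.4124, 0.3576, 0.1805],
               [0.2126, 0.7152, 0.0722],
               [0.0193, 0.1192, 0.9505]]
ADOBE_RGB_TO_XYZ = [[0.5767, 0.1856, 0.1882],
                    [0.2974, 0.6273, 0.0753],
                    [0.0270, 0.0707, 0.9911]]

def srgb_decode(v):
    """sRGB transfer function: encoded 0-1 value to linear light."""
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4

def adobe_rgb_decode(v):
    """Adobe RGB (1998) transfer function: a pure power law, gamma 563/256."""
    return v ** (563 / 256)

def to_xyz(rgb8, matrix, decode):
    """Interpret an 8-bit RGB triplet in a given space and return CIE XYZ."""
    linear = [decode(c / 255) for c in rgb8]
    return tuple(sum(m * c for m, c in zip(row, linear)) for row in matrix)

pixel = (100, 25, 255)
on_device_a = to_xyz(pixel, SRGB_TO_XYZ, srgb_decode)
on_device_b = to_xyz(pixel, ADOBE_RGB_TO_XYZ, adobe_rgb_decode)
print([round(v, 3) for v in on_device_a])
print([round(v, 3) for v in on_device_b])   # same numbers, different colour
```

Both spaces map (255, 255, 255) to essentially the same white, but the saturated blue-violet pixel lands at noticeably different XYZ coordinates.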

Device-independent colour spaces are derived from CIEXYZ colourimetry, i.e. they are based on the response of the human visual system. CIELAB and CIELUV are examples. sRGB is actually a device-calibrated colour space, specified for images displayed on a cathode-ray tube (CRT) monitor: if the monitor and viewing environment are correctly set up, the colours will be absolute and sRGB acts as a device-independent colour space.
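The conversion from CIEXYZ to CIELAB uses the standard CIE 1976 formulas, sketched below. The reference white must always be stated: D65 is assumed here, whereas the ICC profile connection space, for example, uses D50.

```python
D65_WHITE = (0.95047, 1.0, 1.08883)   # assumed reference white (X, Y, Z)

def _f(t):
    """CIELAB companding: cube root, with a linear segment near black."""
    delta = 6 / 29
    if t > delta ** 3:
        return t ** (1 / 3)
    return t / (3 * delta ** 2) + 4 / 29

def xyz_to_lab(xyz, white=D65_WHITE):
    """Convert CIE XYZ to CIELAB (L*, a*, b*) relative to a reference white."""
    fx, fy, fz = (_f(v / w) for v, w in zip(xyz, white))
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)

print(xyz_to_lab(D65_WHITE))                                   # the white itself
print(xyz_to_lab((0.18 * 0.95047, 0.18, 0.18 * 1.08883)))      # an 18% grey
```

A neutral of any luminance has a* and b* of zero, so its lightness is carried entirely by L*; the 18% grey comes out at an L* of just under 50.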

Figure 11.18 RGB and HSL: colour spaces are multi-dimensional coordinate systems in which colours may be mapped. R (red), G (green), B (blue), M (magenta), C (cyan) and Y (yellow).

Figure 11.19 Pixel values, when specified in device-dependent colour spaces will appear as different colours on different devices.

A number of common colour spaces separate colour information from tonal information, having a single coordinate representing tone, which can be useful for various reasons. Examples include hue, saturation and lightness (HSL) (see Figure 11.18) and CIELAB (see page 78); in both cases the Lightness channel (L) represents tone. In such cases, often only a slice of the colour space may be displayed for clarity; the colour coordinates will be mapped in two dimensions at a single lightness value, as if looking down the lightness axis, or at a maximum chroma regardless of lightness. Two-dimensional CIELAB and CIE xy diagrams are examples commonly used in colour management.
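Python's standard `colorsys` module provides an RGB-to-HLS conversion (note its hue, lightness, saturation ordering), which is enough to show tone being separated from colour. The wrapper `rgb8_to_hsl` and its rounding to degrees and percentages are illustrative choices.

```python
import colorsys

def rgb8_to_hsl(r, g, b):
    """8-bit RGB to (hue in degrees, saturation %, lightness %)."""
    h, l, s = colorsys.rgb_to_hls(r / 255, g / 255, b / 255)   # colorsys returns H, L, S
    return round(h * 360), round(s * 100), round(l * 100)

print(rgb8_to_hsl(200, 50, 50))   # a mid red
print(rgb8_to_hsl(100, 25, 25))   # a darker version of the same red
```

The two reds differ only in lightness: their hue and saturation coordinates are identical.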

Figure 11.20 Gamut mismatch: The gamuts of different devices are often different shapes and sizes, leading to colours that are out-of-gamut for one device or the other. In this example, the gamut of an image captured in the Adobe RGB (1998) colour space is wider than the gamut of a CRT display.

Colour gamuts

The colour gamut of a particular device defines the possible range of colours that the device can produce under particular conditions. Colour gamuts are usually displayed for comparison in a device-independent colour space such as CIELAB or CIE Yxy. Because of the different technologies used in producing colour, it is highly unlikely that the gamuts of two devices will exactly coincide. This is known as gamut mismatch (see Figure 11.20). In this case, some colours are within the gamut of one device but lie outside that of the other; these tend to be the more saturated colours. A decision needs to be made about how to deal with these out-of-gamut colours; in an ICC colour management system this is achieved using rendering intents. Out-of-gamut colours may be clipped to the boundary of the smaller gamut, for example, leaving all other colours unchanged; however, this alters the relationships between colours, and many different pixel values may become the same colour, which can result in posterization. Alternatively, gamut compression may be used, where all colours are shifted inwards and become less saturated, but the relative differences between them are maintained, so achieving a more natural result.
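Clipping and compression can be contrasted in one dimension by looking only at chroma (saturation) for colours of a single hue and lightness. This is a deliberate simplification with hypothetical values; real rendering intents operate on the full three-dimensional gamut, and linear scaling is only one possible compression strategy.

```python
def clip_chroma(chromas, dest_max):
    """Clip: out-of-gamut chroma is moved to the boundary; in-gamut values unchanged."""
    return [min(c, dest_max) for c in chromas]

def compress_chroma(chromas, dest_max, source_max):
    """Compress: scale every chroma so the whole source range fits the destination."""
    return [c * dest_max / source_max for c in chromas]

source = [20, 40, 60, 80, 100]   # chroma of five colours of one hue (hypothetical)
print(clip_chroma(source, 60))
print(compress_chroma(source, 60, 100))
```

Clipping leaves the first three colours untouched but merges the last three into one, while compression keeps all five distinct and in order at the cost of reducing every colour's saturation.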

Colour management systems

A colour management system is a software module which works with imaging applications and the computer operating system to communicate and match colours through the imaging chain. Colour management reconciles differences between the colour spaces of each device and allows us to produce consistent colours. The aim of the colour management system is to convert colour values successfully between the different colour spaces so that colours appear the same, or acceptably similar, at each stage in the imaging chain. To do this, the colours first have to be specified.

The conversion from the colour space of one device to the colour space of another is complicated. As already discussed, input and output devices have their own colour spaces; therefore colours are not specified in absolute terms. An analogy is that the two devices speak different languages: a word in one language will not have the same meaning in another language unless it is translated; additionally, words in one language may not have a direct translation in the other language. The colour management system acts as the translator.
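The ‘translator’ idea can be sketched with two RGB spaces and CIEXYZ as the common language: values are converted from device A into XYZ, and from XYZ into device B. As in the earlier example, sRGB and Adobe RGB (1998) stand in for two devices, and to keep the sketch short the values are assumed to be linear (the transfer curves are omitted). A real colour management system does this through device profiles and rendering intents.

```python
# Published RGB-to-XYZ matrices (D65 white), rounded to four decimal places.
SRGB_TO_XYZ = [[0.4124, 0.3576, 0.1805],
               [0.2126, 0.7152, 0.0722],
               [0.0193, 0.1192, 0.9505]]
ADOBE_RGB_TO_XYZ = [[0.5767, 0.1856, 0.1882],
                    [0.2974, 0.6273, 0.0753],
                    [0.0270, 0.0707, 0.9911]]

def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate (transposed cofactors)."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[x / det for x in row] for row in adj]

def translate(rgb_linear, src_to_xyz, dst_to_xyz):
    """Device A -> XYZ (the common 'language') -> device B, in linear light."""
    xyz = mat_vec(src_to_xyz, rgb_linear)
    return mat_vec(inverse3(dst_to_xyz), xyz)

print(translate([1.0, 0.0, 0.0], SRGB_TO_XYZ, ADOBE_RGB_TO_XYZ))  # sRGB red fits inside Adobe RGB
print(translate([0.0, 1.0, 0.0], ADOBE_RGB_TO_XYZ, SRGB_TO_XYZ))  # Adobe RGB green: red < 0
```

A negative result, as for the Adobe RGB green here, means the colour has no direct translation in the destination space: it is out of gamut, which is the problem of gamut mismatch.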

ICC colour management

In recent years, colour management has been somewhat simplified with the development of standard colour management systems by the International Color Consortium (ICC). ICC colour management systems have four main components:

1. Profile connection space (PCS)