CHAPTER 10

Risk and Control Self-Assessments

This chapter explores the role of risk and control self-assessment (RCSA) in the operational risk framework. Various RCSA methods are described and compared, and several scoring methodologies are discussed. RCSA challenges and best practices are explained, and the practical considerations that can help ensure the success of an RCSA program are outlined.

THE ROLE OF ASSESSMENTS

Risk and control self-assessments play a vital role in the operational risk framework.

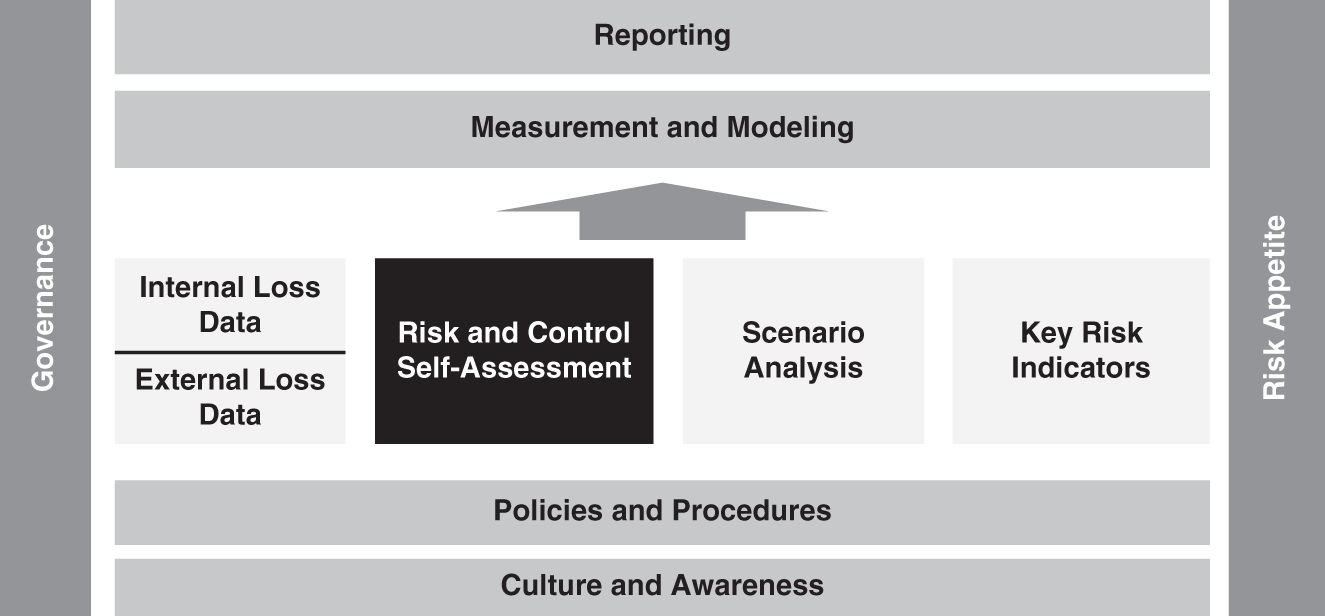

While operational risk event databases are effective in responding to past events, additional elements are needed in order to identify, assess, monitor, control, and mitigate events that have not yet occurred. A well-designed RCSA program provides insight into risks that exist in the firm, regardless of whether they have occurred before. The RCSA program fits into the operational risk framework as illustrated in Figure 10.1. While loss data allows us to look back at what has already happened, RCSA provides a tool for looking forward at what might happen in the future. RCSA results often provide the best leading indicators of where risk needs to be mitigated.

Even if these risks are well understood by their owners, there is rarely a tool outside the operational risk framework that provides consistency and transparency in reporting, mitigating, and escalating these risks. For this reason, risk and control assessments are often the most enthusiastically adopted elements of the program, as they can quickly add value by providing a way for a department to articulate its risks.

However, they are also often the most troublesome elements, as finding the right way to manage the assessments that fits the culture of the firm, meets regulatory requirements, and meets the goals of identifying, assessing, and controlling operational risk can be very difficult.

FIGURE 10.1 Risk and Control Self-Assessment in the Operational Risk Framework

Many firms have put tremendous effort into rolling out RCSA programs only to find that the programs do not meet their needs and have to be redesigned and rolled out again. In fact, many firms have been through RCSA redesigns a few times already and may now be looking yet again at how to get this right.

The challenge is that the effort needed to populate the RCSA with valuable and accurate information can sometimes exceed the business benefit garnered from that information. The business benefit is being able to see your risks with transparency and make informed decisions about them.

The business benefits of an RCSA program are clear, but there may also be regulatory requirements that can be met through RCSAs. For example, Basel II firms that are taking an advanced approach to capital calculation have to show that they are including business environment and internal control factors in their calculation. These factors should reflect an understanding of the underlying business risk factors that are relevant to the firm and the effectiveness of the internal control environment in managing and mitigating those risks. Key risk indicators (KRIs) can be used to track those factors, as discussed in Chapter 9. However, RCSA is best suited to identify which indicators are relevant and worthy of monitoring.

In the section on business environment internal control factors (BEICF), Basel II provides a good definition of RCSAs that can be applied to assessments undertaken in any operational risk framework:

… a bank's firm-wide risk assessment methodology must capture key business environment and internal control factors that can change its operational risk profile. These factors will make a bank's risk assessments more forward-looking, more directly reflect the quality of the bank's control and operating environments, help align capital assessments with risk management objectives, and recognize both improvements and deterioration in operational risk profiles in a more immediate fashion.1

RCSAs are used by Basel II Advanced Measurement Approach (AMA) firms to gather these factors, and there is further discussion in Chapter 12 on how these are then incorporated into the capital calculation. Newer and more simplified capital calculations are slated for implementation soon, but the value of a good RCSA program remains. The same methodologies are applicable to all firms as, regardless of regulatory requirements, the firm needs tools to allow it to meet the operational risk management goal of “recognizing both improvements and deterioration in operational risk profiles” to inform its decision making.

Risk and control self-assessment is a term that can refer to many different types of assessment. It should be clearly differentiated from control assessments and from risk and control assessments, neither of which has the “self” assessment characteristic.

Control Assessments

A simple control assessment is one that tests a control's effectiveness against set criteria and issues a pass/fail or level of effectiveness score. A control assessment is often done to the department by a third party, perhaps audit, compliance, or the Sarbanes-Oxley team.

Control assessment can produce output that is very useful to the RCSA program. For example, it may provide effectiveness scores for controls that can be leveraged in the RCSA program. Indeed, where a control has been assigned a score in a control assessment it is preferable to avoid reassessing that control. However, while this seems sensible, in practical terms it can prove difficult to leverage scores from other assessment programs unless the firm has adopted a standard taxonomy for controls, processes, and organizational structure. Without such taxonomies, mapping results from one assessment to another can be difficult.

Risk and Control Assessments

A risk and control assessment is similar to a control assessment, in that it is applied to an area by a third party. However, these do include a risk assessment in addition to a control assessment and so will incorporate several of the elements of the RCSA that will be further described below. As with control assessment, the results of these might be leveraged for the RCSA.

RCSAs

A risk and control self-assessment (RCSA) is distinguished from a control assessment and from a risk and control assessment by its subjective nature. While often facilitated by an operational risk manager, an RCSA is conducted by the department or business unit, and the scoring of risks and controls reflects not the view of a third party, but the view of the department or business itself.

It is the subjective perspective of the RCSA that presents both its biggest advantages and its strongest challenges.

The advantage of such an approach is that it further embeds the culture of operational risk management. Each department takes ownership of its own risks and controls and assesses the risks that may exist in its area. Empowered with this assessment the department can then prioritize mitigating actions and escalate risks that require higher authority for remediation.

The challenge of such an approach is that a subjective view can be considered as less accurate than an objective view, and there may be some skepticism over the scoring in the assessment. In practice, a well-designed RCSA program can produce accurate and transparent operational risk reporting that can be used effectively in the firm. It is important to never lose sight of the subjective nature of this element, however, and to be diligent in applying standards and strong facilitation throughout.

RCSAs should be included in the audit cycle, with each department audited as to its participation in the RCSA program and the reasonableness of its scoring. For example, loss data should be compared to RCSA scores as a check. If losses are high in an area that has been scored as low risk in the RCSA, that would raise a serious question as to the quality of the self-assessment and might result in an audit point. This has been raised by the regulators in recent years under their validation and verification requirements that were discussed in Chapter 4. There are now regulatory requirements that demand that RCSA and loss data be routinely compared to ensure that the RCSAs are reflecting the loss experience of the firm.

RCSA METHODS

There are several RCSA methods, and each has its own advantages and disadvantages. The main methods to consider are the questionnaire approach, the workshop approach, and the hybrid approach.

Questionnaire Approach

The questionnaire-based approach uses a template to present standard risk and control questions to participants. The content of the questionnaire is designed by the operational risk team, usually after intensive discussions across the firm. Each risk category or business process is analyzed and a list of related risks is prepared. For each risk, expected controls are identified.

The questionnaire is usually distributed to a nominated party in each department, who completes the questionnaire, providing self-assessed scores for each expected control, and risk levels (for example, high, medium, or low) and probabilities for each risk.

The level of complexity of questionnaire-based RCSAs' content and workflow varies enormously. Some questionnaire RCSAs ask participants to score just the controls (and in this case might be better named a control self-assessment or CSA). Others have several rounds of completion, the first being risk and control identification, the second being control effectiveness scoring by the control owners, and the last being residual risk scoring by the risk owners.

There are several advantages of a questionnaire-based RCSA method. The use of standard risks and controls makes it easier to consolidate reporting and identify cross-firm themes and trends. Also, the use of standard risks and controls ensures that a consistent approach is being taken across the firm and ensures that risks and controls that have been identified by the operational risk department are considered by every department.

These characteristics make a questionnaire-based RCSA particularly well suited to a firm that has multiple similar activities. For example, a bank that has many branches that offer the same products and services would be well served by a questionnaire-based RCSA. A fintech that has back office processes that are completed in multiple locations would benefit from this type of approach. The results can be collected electronically and the responses compared to identify themes, trends, and areas of potential control weakness or elevated risk.

Another advantage is that this method can take advantage of technology to distribute and collect questionnaires. Many software providers have entered this space with tools that provide good workflow functionality. Where firms have found that these off-the-shelf solutions do not meet their needs, they have developed their own RCSA workflow tools, with varying degrees of success.

There are also disadvantages to the questionnaire-based RCSA. If a firm does not have standard branches or repeated processes, then a standard RCSA might be more frustrating than it is helpful. The assessed business areas may push back on a questionnaire that contains many risk and control areas that are not relevant to them.

Another disadvantage of the questionnaire-based approach is that it is usually sent to specific nominated parties for completion. For this reason, careful facilitation is required to ensure that a departmental view, not just one person's opinion, is being expressed in the assessment.

An additional potential weakness in the questionnaire-based approach is that the original design might be missing a key risk or control, and participants might not have an opportunity to, or may be reluctant to, raise new items.

In fact, a general challenge in any questionnaire-based task is that it can result in a “check all” mentality, where the participants simply check the boxes that are likely to result in the least follow-up work or that express an average score or the middle ground.

A questionnaire-based method is efficient and is highly effective in the right environment, but the training and facilitation needed to overcome its disadvantages should not be underestimated.

Workshop Approach

A workshop method RCSA is discussed in a group setting, with facilitation from the operational risk department. Each risk is discussed, and related controls are scored for effectiveness. Once the controls have been scored, the residual risk is scored, often on a high-medium-low scale, along with related probabilities. Alternatively, the exposure might be expressed in financial terms. Some workshops also collect other impact data, such as possible client impact, legal or regulatory impact, reputational impact, and life safety impact.

Workshops often run for two to three hours, and perhaps more than one session is needed for each RCSA. As such, they are time consuming for all involved and require a strong commitment from both the participants and the facilitators.

Preparation for the workshop is usually extensive, involving the review of past losses; audit, compliance, and Sarbanes-Oxley reports; and interviews with business managers and support areas.

There are several advantages to a workshop-based RCSA. Perhaps most importantly, it provides a forum for an in-depth discussion of the operational risks in the firm. For this reason, it can be effective in embedding operational risk management.

The group approach to scoring ensures that there has been full participation in the scoring, rather than a single view. However, reaching a true consensus can be challenging and requires strong facilitation skills.

The workshop session often results in new risks and controls being identified and so contributes to the richness of the operational risk framework.

Workshop-based approaches are generally more appropriate for firms that do not have consistent branches or processes, and that need more flexibility than can be offered in a questionnaire-based approach. For example, a financial services firm that does not have retail branches, but has fixed income, equity, and asset management divisions, might be better suited to a workshop-based approach so that the unique risks and controls in each area can be appropriately assessed.

However, as with the questionnaire approach, there are several disadvantages to the workshop approach. The flexibility can also result in inconsistency as risks and controls might be newly raised in several areas, perhaps with different terminology. Also, consolidating the results can be challenging as each workshop output might look very different to the others.

Another disadvantage is that the rollout of a workshop-based approach is extremely burdensome on the operational risk department and on the firm. Many people will be involved in the sessions and the preparation and facilitation can use up a large proportion of an operational risk department's resources.

Hybrid RCSA Methods

As the operational risk framework matures and evolves, RCSA design will also mature and evolve. In the meantime, some firms use both the questionnaire and workshop approaches in order to get the most out of their RCSA program. For example, a firm that used the workshop approach in its first year might then use the output from that workshop to design a questionnaire approach for the subsequent years.

Alternatively, a firm might alternate questionnaire and workshop approaches in order to ensure that new risks and controls are identified and that a full discussion of operational risk is undertaken on a regular basis.

A firm might implement a sophisticated RCSA technology system that supports a flexible and collaborative approach and so decide not to hold workshop RCSAs anymore.

A firm might adopt a questionnaire approach but set certain triggers that will result in a workshop being held for a particular risk category. For example, a trigger might arise if losses escalate in a particular risk category or process, or if a major external event occurs that suggests that a reassessment of that risk would be prudent.
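A trigger of this kind can be written down as a simple rule. The following is a minimal sketch only: the function name `needs_workshop`, the 50 percent escalation threshold, and the period-over-period comparison are illustrative assumptions, not values or methods prescribed in this chapter.

```python
def needs_workshop(prior_losses: float,
                   current_losses: float,
                   major_external_event: bool = False,
                   escalation_threshold: float = 0.5) -> bool:
    """Return True if a workshop RCSA should be triggered for a risk category.

    escalation_threshold is a hypothetical policy setting: the fractional
    increase in losses, period over period, that counts as "escalating".
    """
    if major_external_event:
        # A major external event prompts a reassessment on its own.
        return True
    if prior_losses <= 0:
        # No loss history: any new loss is treated as an escalation.
        return current_losses > 0
    increase = (current_losses - prior_losses) / prior_losses
    return increase >= escalation_threshold
```

For instance, `needs_workshop(100_000, 160_000)` would trigger a workshop under these assumed settings, while `needs_workshop(100_000, 120_000)` would not. Each firm would set its own threshold and comparison period.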

RCSA SCORING METHODS

There are many different ways to produce scores from RCSAs. Most RCSAs require some score of the likely impact and probability of an event occurring. Some also require control effectiveness scores that might be entered directly or calculated from control design and performance scores. Some RCSAs might require scores for nonfinancial impacts such as reputational damages, client loss, legal or regulatory exposures, or even life safety impacts.

TABLE 10.1 Scoring Control Design and Performance

| | Low | Medium | High |
|---|---|---|---|
| Design | The design provides only limited protection when used correctly. | The design provides some protection when used correctly. | The design provides excellent protection when used correctly. |
| Performance | The control is rarely performed. | The control is sometimes performed. | The control is always performed. |

Scoring Control Effectiveness

A firm that has a Sarbanes-Oxley program in place might well have a control effectiveness scoring methodology in place. This might be leveraged for control scoring requirements in an RCSA. If there is no control scoring method in place, then one can be developed that assesses both the design and the performance of the control. One example of such a scoring method could be as shown in Table 10.1.

The design and performance scores for each control might then be combined to produce an overall effectiveness score as in Figure 10.2.

FIGURE 10.2 Control Effectiveness Scoring Matrix

In this example scale, a control that is well designed (H) but poorly performing (L) would have an overall control effectiveness score of low. Often, a red-amber-green, or RAG, rating will be used in assessments. In this example, controls that had an overall low effectiveness would produce a red result. The use of RAG ratings to visually highlight areas of concern can be very effective, but can also produce a strong reaction and so needs to be used with caution.
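One way to combine design and performance scores is sketched below. The full scoring matrix is not reproduced here, so the "weakest link" rule (overall effectiveness equals the lower of the two scores) is an assumption; it is chosen only because it agrees with the stated example of high design and low performance giving low effectiveness. The amber and green mappings are likewise assumed; only low-equals-red comes from the text.

```python
# Ordinal ranks for the three-point scale.
LEVELS = {"L": 1, "M": 2, "H": 3}

# Low effectiveness is red per the text; amber and green are assumed.
RAG_BY_EFFECTIVENESS = {"L": "red", "M": "amber", "H": "green"}


def control_effectiveness(design: str, performance: str) -> str:
    """Combine design and performance scores (assumed rule: take the lower)."""
    return min(design, performance, key=LEVELS.__getitem__)


def rag_rating(effectiveness: str) -> str:
    """Map an overall effectiveness score to a RAG colour."""
    return RAG_BY_EFFECTIVENESS[effectiveness]
```

Under this assumed rule, `control_effectiveness("H", "L")` returns `"L"`, and `rag_rating("L")` returns `"red"`. A firm using a different matrix would replace the rule with a lookup table.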

An alternative control scoring method would be to have a list of control attributes for control design and have the overall design calculated or subjectively summarized based on those criteria. For example, a preventive control might be considered to be a stronger safeguard than a detective control and might help raise the score of that control. Similarly, an automated control would be considered stronger than a manual control.

It may also be possible to score control performance using key performance or key control indicators. As the RCSA matures, more and more key performance indicators (KPIs) and key control indicators (KCIs) will be identified, and these can be incorporated into the RCSA to provide more objective scoring for the controls where possible.

Each firm will determine its own appropriate control scoring method. Controls might be scored individually or as a group for each risk.

Risk Impact Scores

Some RCSAs simply require a financial impact score, for example, the maximum loss, the maximum plausible loss, or the likely loss amount.

Other RCSAs also require a score for other impact types on a scale that is provided. For example, a sample scale that provides high, medium, and low scores for several impact types is provided in Table 10.2.

The impact is usually scored on a residual basis, that is, the likely impact after all the controls are in place, or after the control effectiveness scores have been determined. Some RCSAs also score the inherent impact, that is, the likely impact before controls are considered. This inherent impact score is sometimes used to prioritize the assessment of risks that have a high inherent impact. The inherent impact can be helpful in understanding the relative value of controls. However, some firms do not collect inherent values and focus only on risks that have a high residual impact.
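As a rough illustration, the financial row of the sample scale in Table 10.2 can be turned into a scoring function. The band edges come from that table; treating exactly $100,000 and exactly $1 million as medium is an assumption, as is the "worst case wins" rule used in the hypothetical `overall_impact` helper to summarize several impact types.

```python
from typing import Mapping

ORDER = ["Low", "Medium", "High"]


def financial_impact_score(loss_usd: float) -> str:
    """Band a loss estimate using the financial row of Table 10.2."""
    if loss_usd < 100_000:
        return "Low"
    if loss_usd <= 1_000_000:  # boundary handling is an assumption
        return "Medium"
    return "High"


def overall_impact(scores_by_type: Mapping[str, str]) -> str:
    """Summarize several impact types by taking the worst (assumed rule)."""
    return max(scores_by_type.values(), key=ORDER.index)
```

A risk scored low financially but high reputationally would be summarized as high under this assumed rule, which keeps nonfinancial impacts from being averaged away.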

Probability or Frequency

An RCSA might require a probability or frequency score that expresses how often the risk event is expected to occur in the next 12 months. For example, if the event is likely to happen five times in the next 12 months, the score would be 5. If it is likely to happen only once in the next 10 years, then the score would be 0.1.
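The arithmetic behind these two examples is an annualized frequency: expected events divided by the number of years over which they are expected. The sketch below reproduces both figures. The second function is an addition not found in this chapter: it converts a frequency into the chance of at least one event in 12 months under an assumed Poisson arrival model, which shows why a score of 5 is a frequency rather than a true probability.

```python
import math


def annual_frequency(expected_events: float, horizon_years: float) -> float:
    """Expected number of events per 12-month period."""
    if horizon_years <= 0:
        raise ValueError("horizon_years must be positive")
    return expected_events / horizon_years


def probability_of_at_least_one(frequency: float) -> float:
    """Chance of one or more events in 12 months, ASSUMING Poisson arrivals."""
    return 1.0 - math.exp(-frequency)
```

Here `annual_frequency(5, 1)` gives 5.0 and `annual_frequency(1, 10)` gives 0.1, matching the examples in the text. Under the Poisson assumption, a frequency of 0.1 corresponds to a little under a 10 percent chance of at least one event in the year.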

TABLE 10.2 A Risk Impact Scoring Scale That Includes Nonfinancial Impact Categories

| Impact Type | Low | Medium | High |
|---|---|---|---|
| Financial | Less than $100,000. | Between $100,000 and $1 million. | Over $1 million. |
| Reputational | Negative reputational impact is local. | Negative reputational impact is regional. | Negative reputational impact is global. |
| Legal or Regulatory | Breach of contractual or regulatory obligations, with no costs. | Breach of contractual or regulatory obligations with some costs or censure. | Breach of contractual or regulatory obligations leading to major litigation, fines, or severe censure. |
| Clients | Minor service failure to noncritical clients. | Minor service failure to critical client(s) or moderate service failure to noncritical clients. | Moderate service failure to critical clients or major service failure to noncritical clients. |
| Life Safety | An employee is slightly injured or ill. | More than one employee is injured or ill. | Serious injury or loss of life. |

Alternatively, the probability or frequency might simply be scored as high, medium, or low as shown in Table 10.3.

Risk Severity

Once the impact and frequency have been scored, some RCSAs combine these to give an overall risk severity score. This might be calculated using a combination of the scores as in Figure 10.3.

TABLE 10.3 Sample Scoring Method for Frequency or Probability

| | Low | Medium | High |
|---|---|---|---|
| Length of time between events | >5 years | Between 1 and 5 years | <1 year |
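The bands in Table 10.3 translate directly into a small scoring function. This is a sketch only: the function name is hypothetical, and assigning the exact boundary values (1 year and 5 years) to medium is an assumption, since the table does not say which band they fall in.

```python
def frequency_score(years_between_events: float) -> str:
    """Band the expected time between events using Table 10.3."""
    if years_between_events <= 0:
        raise ValueError("years_between_events must be positive")
    if years_between_events > 5:
        return "Low"
    if years_between_events >= 1:  # boundaries treated as Medium (assumed)
        return "Medium"
    return "High"
```

An event expected five times a year has roughly 0.2 years between occurrences and scores high, while an event expected once in 10 years scores low.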

FIGURE 10.3 Risk Severity Scoring Matrix

Using this methodology, a score of low (L) for impact and high (H) for frequency would give an overall risk severity of medium (M). Once again, a RAG rating that indicates high scores as red, medium scores as amber, and low scores as green can be a powerful tool but should be used with caution.
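A severity matrix of this kind can be held as a lookup table. The matrix below is hypothetical: the chapter's own matrix is not reproduced here, so the cells are assumptions chosen to be symmetric and to agree with the one stated example (low impact with high frequency gives medium). The RAG mapping follows the text.

```python
# Keys are (impact, frequency). Cell values are assumed, except that
# ("L", "H") -> "M" matches the example given in the text.
SEVERITY_MATRIX = {
    ("L", "L"): "L", ("L", "M"): "L", ("L", "H"): "M",
    ("M", "L"): "L", ("M", "M"): "M", ("M", "H"): "H",
    ("H", "L"): "M", ("H", "M"): "H", ("H", "H"): "H",
}

RAG_BY_SEVERITY = {"H": "red", "M": "amber", "L": "green"}


def risk_severity(impact: str, frequency: str) -> str:
    """Look up overall risk severity from impact and frequency scores."""
    return SEVERITY_MATRIX[(impact, frequency)]


def severity_rag(severity: str) -> str:
    """Map a severity score to its RAG colour."""
    return RAG_BY_SEVERITY[severity]
```

Keeping the matrix as data rather than logic makes it easy for a firm to adjust individual cells to reflect its own risk appetite.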

Scoring scales need to be adapted to meet the risk appetites of the firm. One scoring method might be effective in one firm, but inappropriate in another. For this reason, scoring methods vary greatly from firm to firm.

RCSA BEST PRACTICES

There are several key elements to a successful RCSA program, regardless of approach taken. When designing and implementing an RCSA, it is prudent to consider the following elements.

Interview Participants Beforehand

To ensure that the RCSA is well designed and reflects the business processes and associated risks and controls in each department, it is important to spend time interviewing participants, stakeholders, and support functions prior to launching the RCSA.

Review Available Background Data from Other Functions

There will be valuable information available for preparation purposes in recent audit reports, compliance reviews, independent testing results, KPIs and KCIs, and Sarbanes-Oxley assessments. A review of these documents can provide insight into existing and recently remediated operational risks.

Review Past RCSAs and Related RCSAs

Once the RCSA program has been running for more than a year, past RCSAs should be reviewed when a department is conducting its next RCSA. There should also be a review of related RCSAs from departments that either provide support services to the department or rely on support from the department. These related RCSAs may have raised risks where the controls are owned by this department, and may have raised risks that the department needs to be aware of.

Review Internal Loss Data

Events that have been captured in the firm's operational risk event database provide a valuable backdrop, and help to identify the risks and control weaknesses that need to be addressed in the RCSA. They also demonstrate the possible impact and frequency of risk events and so can be used to validate assessments made during the RCSA.

Review of External Events

External events are also helpful in informing the discussions around potential risks. The RCSA is designed to consider all possible risks, not just those that have already occurred in the firm, but this can be a difficult task and examples of events in the industry are useful for this purpose.

Carefully Select and Train Participants

The RCSA participant(s) should be selected with care and trained in the RCSA method beforehand. It is helpful to include representatives from areas that support the department that is completing its RCSA, as they will have a (sometimes surprising) view on the effectiveness of the controls that they own. Ensure that control owners participate in scoring their own controls.

It can be helpful to have the head of the department included if it is a workshop-style RCSA, but only if their presence will not intimidate the other participants and so skew the results to just one view.

Document Results

The RCSA output should be consistently and carefully documented with an emphasis on providing evidential support for conclusions and scores whenever possible. Every detail of the discussions need not, and indeed probably should not, be recorded. However, the output must be captured in a way that can be reviewed, analyzed, and acted upon. This might mean that the results are put into a system or simply recorded in a spreadsheet or document, depending on the RCSA method used.

Regulatory expectations regarding the documentation of RCSA results have risen over the past few years and a subjective score is often not considered to be sufficient by the regulator. For this reason, many firms have been looking to adopt more objective control scoring methods and have been applying taxonomies for processes, risks, and controls. This is discussed further in this chapter under “Ensure Completeness Using Taxonomies.”

Score Appropriately

The RCSA scoring methodology should be appropriate for the firm and each firm should consider whether it might be beneficial to its operational risk management goals to include nonfinancial impacts such as reputational, legal, regulatory, client, and life safety, where appropriate.

Identify Mitigating Actions

An RCSA is incomplete without the identification of any actions that have been agreed upon during the assessment. These actions will be undertaken to lower any unacceptable risk levels, either by improving, changing, or adding a control. Generally, a high risk will need to be mitigated, unless the risk is accepted without mitigation. If the risk is accepted, then this should be clearly stated in the assessment. Action items need to be tracked in the operational risk framework, through to their completion. If a high risk is not going to be mitigated then there needs to be a transparent risk acceptance process to log that decision.
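The rule that every high risk must end up either mitigated or formally accepted lends itself to a simple completeness check. The sketch below is illustrative; the `AssessedRisk` record and its field names are hypothetical and do not describe any particular RCSA system.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class AssessedRisk:
    name: str
    severity: str                                      # "L", "M", or "H"
    actions: List[str] = field(default_factory=list)   # agreed mitigating actions
    accepted_by: Optional[str] = None                  # who signed off on acceptance


def unresolved_high_risks(risks: List[AssessedRisk]) -> List[str]:
    """Names of high risks with neither an action nor a logged acceptance."""
    return [
        r.name
        for r in risks
        if r.severity == "H" and not r.actions and r.accepted_by is None
    ]
```

An assessment would be treated as incomplete while this list is non-empty, since each remaining entry is a high risk with no recorded decision.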

Implement Appropriate Technology

RCSA technology should be used appropriately to manage the process and to report on the outcome. An RCSA tool should support the methodology and provide access to reporting and analysis of the assessments.

Ensure Completeness Using Taxonomies

RCSAs should cover the entire firm and be complete and comprehensive; indeed, national regulatory standards often require this. In recent years, firms have taken this to heart and have been using several methods to demonstrate this.

First, it is important to show that all material areas of the firm have been covered. This can be done by using the organizational hierarchy and checking that all aspects of the hierarchy have participated in an RCSA.

Second, firms are now moving toward developing standard process taxonomies. These process taxonomies can be used by every area in the firm to identify processes that they undertake and to ensure that all of those processes are included in their RCSA program.

Third, firms are also moving to developing risk taxonomies. These taxonomies are often built out of the Basel II seven operational risk categories of Internal Fraud; External Fraud; Employment Practices and Workplace Safety; Clients, Products, and Business Practices; Damage to Physical Assets; Business Disruption and System Failures; and Execution, Delivery, and Process Management. It has proven helpful for many firms to develop their own risk taxonomy down to a Level 3 categorization.

Fourth, firms have moved toward developing control or control-type taxonomies.

Finally, all of these elements can be brought together to ensure completeness in the following way. The corporate operational risk function can work with the businesses and support functions to determine which of the risks in the risk taxonomy could arise in each process. They can also determine which of the control types in the control taxonomy could mitigate the risks in the risk taxonomy.

Armed with this information, an RCSA can be designed that captures all the departments, all the processes in each department, all the risks associated with those processes, and all the expected control types that can mitigate those risks.

This is, not surprisingly, a huge undertaking. Developing the taxonomies alone can require heroic efforts and collaboration across the firm. Even once the taxonomies have been agreed on, the size of RCSA that might result could be burdensome, and this will mean that a triaging or prioritization procedure will likely be needed. This procedure will need to be well documented and defensible if it is not going to undermine the goal of demonstrating completeness.

Finally, the maintenance of such taxonomies is a large and constant undertaking and needs to be owned by a function that has the capacity and authority to maintain it.

If such taxonomies and their mapping relationships are adopted across the firm by audit, compliance, technology risk, and other assessment functions, then the benefits may well outweigh the burden as they will all then be able to leverage each other's work.
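The completeness check described in this section can be sketched as a comparison between what the taxonomies say should be assessed and what the RCSAs actually cover. The data structures, function names, and sample entries below are hypothetical; a real implementation would draw the mappings from the firm's own taxonomy system.

```python
from typing import Dict, List, Set, Tuple

Item = Tuple[str, str, str, str]  # (department, process, risk, control type)


def expected_items(dept_processes: Dict[str, Set[str]],
                   process_risks: Dict[str, Set[str]],
                   risk_control_types: Dict[str, Set[str]]) -> Set[Item]:
    """Expand the taxonomy mappings into every item an RCSA should cover."""
    return {
        (dept, proc, risk, ctrl)
        for dept, procs in dept_processes.items()
        for proc in procs
        for risk in process_risks.get(proc, set())
        for ctrl in risk_control_types.get(risk, set())
    }


def coverage_gaps(expected: Set[Item], assessed: Set[Item]) -> List[Item]:
    """Items the taxonomies call for that no RCSA has assessed."""
    return sorted(expected - assessed)
```

Any triaging or prioritization procedure would then operate on the output of `coverage_gaps`, with each excluded item documented so that the exclusion remains defensible.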

Themes Identified

The whole RCSA program should be reviewed for the identification of firm-wide themes that may require escalation. One of the important roles of the operational risk function is to take a step back from the details of the individual RCSAs and deduce where there are firm-wide themes that might need to be addressed. Several local solutions might be less effective than a firm-wide strategy to mitigate a particular risk.

For example, if several areas identified that they had difficulty training their staff in a timely way, and that this was impacting several risk scores, then the appropriate solution might be for the firm to improve its corporate training and development programs rather than addressing the training differently in each location.

Leverage Existing Assessments

Risks and controls may have been assessed as part of other programs in the firm, such as business continuity planning or Sarbanes-Oxley. If so, these assessments should be used in the operational risk RCSA, and every effort should be made to avoid repeating an assessment of a risk or of a control. This is important in order to protect the integrity of both the original assessment and the operational risk RCSA. Conflicting scores can cause serious problems, and it is frustrating for all involved if the work is merely repetitive. This is discussed in more depth in Chapter 16, under “Governance, Risk, and Compliance.”

Schedule Appropriately

Many firms conduct RCSAs on an annual basis. However, each firm should select an appropriate scheduling interval, and this might be monthly, quarterly, annually, or ad hoc in response to a certain trigger event.

The schedule should ensure that the information is not stale and that the burden of collecting the assessment does not outweigh the benefit in responding to the assessments with timely mitigation. Reporting on the remediation efforts generated by RCSA activity should occur more frequently, probably monthly, in order to ensure that risks are being mitigated as expected.

Risk and control self-assessments have a unique and powerful role to play in an effective operational risk program. The risk and control scores that are gathered during the RCSA are vital to meeting the goals of identifying, assessing, monitoring, controlling, and mitigating operational risk. RCSAs ensure that there is proactive risk management across the firm, to supplement the reactive risk management that occurs in response to loss events. The challenges with RCSAs are keeping them current, designing them to be relevant and valuable to participants and to senior management, and ensuring that they produce tracked actions.

It is worth spending time planning and piloting RCSA methods before use, and it is important to allow these methods to evolve as experience develops and as the operational risk management function matures.

Backtest or Validate Results

Regulatory expectations now require the validation of RCSA results. The simplest validation method is to compare loss data results with RCSA scores. If loss data suggest that an area produces significant losses in a particular risk category, but the RCSA is indicating low risk severity in that same area, then this should raise concerns. Such contradictions should lead to a review of the RCSA and the justification for the scoring in the RCSA. Backtesting and validation can (and should) be independently undertaken by the second line of defense: the corporate-level operational risk function.
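The simplest comparison of loss data with RCSA scores can be sketched as follows. This is an illustration under stated assumptions: the function name, the keying by (area, risk category), and the $1 million threshold for "significant" losses (borrowed from the high financial band in Table 10.2) are all hypothetical choices that a firm would replace with its own.

```python
from typing import Dict, List, Tuple

Key = Tuple[str, str]  # (area, risk category)


def validation_flags(loss_totals: Dict[Key, float],
                     rcsa_severity: Dict[Key, str],
                     significant_loss: float = 1_000_000) -> List[Key]:
    """Flag areas with significant losses but a low RCSA severity score."""
    flags = []
    for key, total in loss_totals.items():
        # A contradiction: material loss experience, yet self-assessed as low.
        if total > significant_loss and rcsa_severity.get(key) == "L":
            flags.append(key)
    return sorted(flags)
```

Each flagged pair would prompt a review of the RCSA and of the justification for its scoring. A fuller check might also flag areas that show losses but have no RCSA score at all.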

KEY POINTS

- RCSAs provide an opportunity to look forward and consider what could occur in the future, whereas loss data focus on what has already occurred in the past.

- RCSAs come in many different forms and an appropriate method needs to be developed at each firm to meet its particular regulatory and business needs.

- RCSAs can be used to collect scores for the effectiveness of controls, the potential size and probability of a risk event's occurring, and the overall risk severity associated with a potential event.

- Workshop method RCSAs focus on group scoring and discussion while questionnaire method RCSAs often use standard templates and automated delivery methods.

- The qualitative nature of many RCSA methods raises challenges in interpreting and applying the results to ensure that appropriate risk management and mitigation activities can be implemented.

- Best practices for RCSA have matured in the past few years and can be leveraged to ensure a successful program is implemented.

REVIEW QUESTION

1. Which of the following best describes how risk and control self-assessments (RCSA) can be used to manage fraud risk for Basel II?

a. An RCSA can be used to gather business environment and internal control factors that relate to fraud risks.

b. An RCSA should be used to collect fraud-related loss events.

c. RCSAs are designed primarily to provide estimates of capital for fraud risk exposures.

d. RCSAs are generally not designed to consider fraud risk.

e. RCSAs only consider internal fraud risks and not external fraud risks.

NOTE

- 1 Bank for International Settlements, “International Convergence of Capital Measurement and Capital Standards: A Revised Framework,” 2004, section 676.