Chapter 9 Monitoring and Testing

Solutions in this chapter:

Monitor and Test

Monitor and Track (Audit) Network and Data Access

Periodically Test Systems (and Processes)

Summary

Solutions Fast Track

Frequently Asked Questions

Introduction

Generally speaking, the best approach to any industry or government regulatory requirement has been to find a middle ground in terms of effort and cost that meets the spirit of the requirement, and then to work with the auditor ahead of audit time to see how you’ve done. That approach usually pays off in less “patching” of the effort later. Obviously, meeting with the auditor before you start makes a lot of sense, but making certain the results meet with the auditor’s approval is where your Return on Investment (ROI) will show up. If the auditor is happy, then the card issuer will be happy.

This is certainly true where Requirements 10 and 11 of the Payment Card Industry (PCI) requirements come into play. Requirement 10, Monitoring, and Requirement 11, Testing, can easily inflate PCI compliance costs to the point of consuming the small margins of card transactions. No one wants to lose money to be PCI compliant, so above all, the approach to meeting these requirements must make business sense. Nowhere else in PCI compliance does the middle-ground design philosophy come into play more than in the discipline of monitoring, but this is also where cutting corners to minimize cost can hurt most.

Monitoring Your PCI DSS Environment

PCI Data Security Standard (DSS) Requirement 10 states: “Track and monitor all access to network resources and cardholder data.” The monitoring requirement is potentially broad and far-reaching, but it has boundaries, and determining those boundaries is the first, best step an Information Technology (IT) architect or engineer can take.

A PCI compliant operating environment is where the cardholder data exists. That data must never be allowed out of that environment without your knowledge, and auditable logging is how you gain that knowledge. Assuming you’ve designed your PCI environment to have appropriate physical and logical boundaries (through use of segregated networks and dedicated application space), you should be able to identify the boundaries of your monitoring scope. If you haven’t done this part, go back to Requirement 1 and start over!

Once your boundaries are determined, it’s time to start digging into the details.

Establishing Your Monitoring Infrastructure

When an architect or engineer goes about designing a computer environment, he or she will be aware of basic components in the form of capabilities that enable functionality at the various layers of the network. These are the things that make internetworking of computer platforms possible across hubs, switches, and routers. They are also the things that make monitoring of these networked components reliable. It is reliability that makes for a well-constructed monitoring solution that will stand the test of an audit and survive scrutiny in a courtroom (should that necessity arise).

Any successful operating environment is designed from the ground up, or, in the case of a networking infrastructure and applications space, from the wires on up. It’s important, therefore, to plan your monitoring of your PCI compliant operating environment the same way you designed it. But to play in this environment you need basic components you cannot do without.

Time

During early development of computer networks, scientists discovered quickly that all systems had to have a common point of reference to develop context for the data they were handling. The context was obviously a reliable source of time, since computer systems have no human capacity for cognitive reconstruction or memory. The same holds true today for monitoring systems. We would all look a bit foolish troubleshooting three-week-old hardware failures, so hardware monitoring had it right from day one. It seems fairly straightforward that time and security event monitoring would go hand in hand.

PCI DSS Requirement 10.4 calls for synchronizing all critical system clocks and times, which in practice means configuring your environment to acquire time from designated, reliable sources. Good monitoring systems (event management, network intrusion prevention) and forensic investigation tools rely on time. System time is frequently found to be arbitrary in a home or small office network: it’s whatever time your server was set to. If you designed your network for some level of reliability, however, your systems are configured to obtain time synchronization from a reliable source, like the U.S. Naval Observatory Network Time Protocol (NTP) servers (see http://tycho.usno.navy.mil/ntp.html).

Other network services on which a PCI compliant environment relies include Domain Name System (DNS), directory services (such as Sun’s or Microsoft’s), and Simple Mail Transfer Protocol (SMTP) (e-mail). Each of these in turn relies on what are referred to as “time sources,” which NTP arranges in levels called strata. Stratum 1 time sources are those devices acquiring time data directly from reference clocks such as the atomic clocks run by various government entities or Global Positioning System (GPS) satellites. Stratum 2 servers get their time from Stratum 1 servers, and so on. Note that a host’s own hardware (CMOS) clock is not a reference source: it drifts, and a server that simply trusts it cannot claim Stratum 1 accuracy.

For purposes of PCI compliance, Stratum 2 is typically sufficient to “prove” time, as long as all systems in the PCI environment synchronize their clocks with the Stratum 2 source. Of course, PCI DSS says very little about how time synchronization should be achieved. So what’s the big deal?

Event management. That’s the big deal. Oh, and PCI Requirement 10.4, too.

Here’s the rub: What is your source for accurate time? How do you ensure that all your platforms have that same reference point so the event that occurred at 12:13 P.M. GMT is read by your event management systems as having occurred at 12:13 P.M. GMT instead of 12:13 A.M.?

There are two facets to the approach. The first is to make sure you have a certified source of time coming into your environment (see http://tf.nist.gov/service/time-servers.html for a list of Stratum 1 sources of time). The second is to make sure you have the means to reliably replicate time data across your network.

Stratum 2, as mentioned, is an acceptable source for your monitoring environment: a server that synchronizes over the Internet with the National Institute of Standards & Technology (NIST) Stratum 1 servers listed above becomes a Stratum 2 source itself. By using a durable directory service, that time data can be advertised to all systems, assuring no worse than a 20-second skew. In an Active Directory forest, for example, the Primary Domain Controller (PDC) emulator in the forest root domain serves as the Stratum 2 source. Servers in the forest running the W32Time service (based on Simple Network Time Protocol [SNTP], as defined in RFC 2030) can therefore keep their clocks synchronized to within 20 seconds of all other servers in the AD forest.
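The offset arithmetic behind SNTP (RFC 2030, later revised as RFC 4330) is simple enough to sketch. The following minimal Python illustration is not how W32Time itself is implemented: it sends one client request, reads the server’s receive and transmit timestamps, computes the clock offset and round-trip delay, and compares the offset against the 20-second tolerance mentioned above. The function names and the MAX_SKEW_SECONDS constant are illustrative assumptions, and some servers may expect the client to fill in its own transmit timestamp.

```python
import socket
import struct
import time

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01).
NTP_UNIX_DELTA = 2208988800
MAX_SKEW_SECONDS = 20.0  # illustrative tolerance taken from the text


def ntp_to_unix(seconds: int, fraction: int) -> float:
    """Convert a 64-bit NTP timestamp (32-bit seconds, 32-bit fraction) to Unix time."""
    return seconds - NTP_UNIX_DELTA + fraction / 2**32


def offset_and_delay(t1: float, t2: float, t3: float, t4: float) -> tuple[float, float]:
    """SNTP clock offset and round-trip delay.

    t1 = client transmit, t2 = server receive,
    t3 = server transmit, t4 = client receive.
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2
    delay = (t4 - t1) - (t3 - t2)
    return offset, delay


def query_sntp(server: str, timeout: float = 2.0) -> tuple[float, float]:
    """Send one SNTP client request over UDP port 123; return (offset, delay) in seconds."""
    # First byte 0x1B: leap indicator 0, version 3, mode 3 (client).
    request = b"\x1b" + bytes(47)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        t1 = time.time()
        sock.sendto(request, (server, 123))
        data, _ = sock.recvfrom(512)
        t4 = time.time()
    if len(data) < 48:
        raise ValueError("short NTP reply")
    # Receive timestamp is bytes 32-39, transmit timestamp is bytes 40-47.
    rx_sec, rx_frac, tx_sec, tx_frac = struct.unpack("!4I", data[32:48])
    t2 = ntp_to_unix(rx_sec, rx_frac)
    t3 = ntp_to_unix(tx_sec, tx_frac)
    return offset_and_delay(t1, t2, t3, t4)


def within_tolerance(offset: float, limit: float = MAX_SKEW_SECONDS) -> bool:
    """True if the clock offset is inside the allowed skew."""
    return abs(offset) <= limit
```

For example, calling query_sntp("time.nist.gov") from a host returns that host’s offset, which you can pass to within_tolerance. In practice the directory’s time service does the synchronizing; a script like this is only useful for spot-checking hosts.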


TIP

Using a Stratum 1 source for time in your environment is not necessary for most businesses. Large enterprises do operate their own Stratum 1 sources (fed by GPS satellites, for example), but spend a lot of money to do it. Stratum 1 time acquisition is accomplished using technology from companies like Symmetricom (http://www.ntp-systems.com/), which build NTP solutions around an appliance. The appliance has to be wired to a satellite antenna mounted on the roof of your data center or other facility. It’s an expensive solution.

Obviously, having reliable power and network is critical to this sort of approach, but it’s fairly easy to overlook. When you’re planning for PCI compliance, who looks at the clocks?

Active Directory servers can be configured as authoritative time servers. Read this technical article from Microsoft (http://support.microsoft.com/kb/816042) to find out how.

Identity Management

One might suppose that the basics of any infrastructure mandate a good identity management solution, perhaps based on Microsoft Active Directory or Novell eDirectory. Just as with time, PCI DSS does not say much about how you implement identity management, only that you have a solution.

Identity management solutions can be configured to have multiple roles per identity and multiple identities per user. It’s important, therefore, to sort out how you need your solution to behave in the context of your card transaction environment. Role-based identity has never been more important, and many industry and government regulations and standards call for it. Separation of duties is a concept that pervades every business.

Choosing the directory solution has everything to do with which platforms will operate in your environment. Either train or hire strong engineering and architectural staff to make sure this solution is deployed without a hitch. This text is not a discourse on identity solutions, but this is a worthwhile point.

To bring a robust identity structure to your card transaction environment, first, make sure the identity solution has its own instance within the card transaction environment. This might constitute a dedicated Lightweight Directory Access Protocol (LDAP) organization (or Active Directory domain), such as pos.acme.com, which would be a subdomain of acme.com. Second, apply appropriate security settings to your directory.

Security settings should be configured to track all access to systems in your directory domain. That is a good reason for having a dedicated domain in the first place: here, there are no safe systems and no assumptions of innocence. All access is tracked for audit.

The system logs are sent to the event management solution immediately for correlation and archival. There must be no opportunity for alteration!

Establish the roles within the monitoring environment. There are really only two: system administrators and security log administrators. That’s it. No one else should set foot (or network interface card [NIC]) in that environment. Make sure the system administrators are not the same lot that manage your PCI network if you can help it.

Each ID must be audited in much the same manner as within your PCI environment. Log each access. Each identity associated with your log monitoring environment must have a person attached to it. No guest IDs, no test IDs.
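Some of these rules (a named person behind every ID, no guest or test IDs, and monitoring administrators kept separate from the PCI network team) are easy to check mechanically once you can export identities and role assignments from your directory. A minimal Python sketch, assuming hypothetical role names and a simple in-memory export rather than any particular directory API:

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical role names; map these to the groups in your own directory.
MONITORING_ROLES = {"monitoring_sysadmin", "security_log_admin"}
PCI_NETWORK_ROLE = "pci_network_admin"
# Crude name heuristic for shared IDs; substring matching can over-report.
SHARED_ID_MARKERS = ("guest", "test")


@dataclass
class Identity:
    user_id: str
    owner: Optional[str]  # the person accountable for this ID
    roles: set[str] = field(default_factory=set)


def audit_identities(identities: list[Identity]) -> list[str]:
    """Return human-readable findings for IDs that break the monitoring rules."""
    findings = []
    for ident in identities:
        if not ident.owner:
            findings.append(f"{ident.user_id}: no named person attached")
        if any(marker in ident.user_id.lower() for marker in SHARED_ID_MARKERS):
            findings.append(f"{ident.user_id}: guest/test ID present")
        # Separation of duties: monitoring admins should not also run the PCI network.
        if ident.roles & MONITORING_ROLES and PCI_NETWORK_ROLE in ident.roles:
            findings.append(f"{ident.user_id}: holds monitoring and PCI network admin roles")
    return findings
```

Run against a periodic directory export, any non-empty result is something to resolve before the auditor finds it.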

Event Management Storage

Keeping the facility that captures and stores your logs happy is paramount to your ability to maintain PCI compliance. Logs add up quickly when you consider your sources:

Firewalls

Switches

Servers

Applications

Databases

IDS

Other Security Software such as Antivirus

The amount of data a business deals with in this space can easily reach into the terabytes. Good thing disk space is so cheap! (Relatively speaking, of course, tools that handle and correlate this data might not be so cheap.)

Using Storage Area Network (SAN) technology is the best way to go in terms of storage. You might consider Direct Access Storage Device (DASD) connected to the various servers that handle log transfer, but what if you’re dealing with appliance-based solutions? What if you’re dealing with a combination?

SAN is really the best way to go for a hybrid (appliance- and server-based) solution. You need this data in a reliable, separate architecture where it can exist for a long time (up to a year) and can be recalled on short notice (such as after a security incident).
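The arithmetic behind “terabytes” is simple: events per second, times average event size, times seconds per day, times retention days. A minimal Python sketch of that estimate follows. The per-source rates are hypothetical placeholders, the result is raw uncompressed storage, and you would add headroom for indexes, replication, and growth.

```python
SECONDS_PER_DAY = 86_400


def raw_log_bytes(events_per_second: float, avg_event_bytes: float, retention_days: int) -> float:
    """Uncompressed bytes needed to keep one source's logs for the retention window."""
    return events_per_second * avg_event_bytes * SECONDS_PER_DAY * retention_days


def total_terabytes(sources: dict[str, tuple[float, float]], retention_days: int = 365) -> float:
    """Sum raw storage across sources, given (events/sec, bytes/event) per source."""
    total = sum(raw_log_bytes(eps, size, retention_days) for eps, size in sources.values())
    return total / 1e12  # decimal terabytes


# Hypothetical rates for illustration only; measure your own sources.
example_sources = {
    "firewalls": (1500, 300),
    "servers": (500, 200),
}
```

With these placeholder figures, total_terabytes(example_sources) comes to roughly 17.3 TB for a year of retention before compression, which is why the capacity planning discussed here matters.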

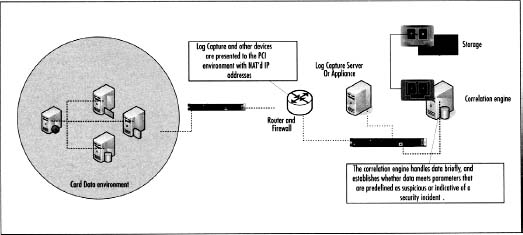

Where the live data resides can differ from where the archive is stored. Storage can be connected to the appliances and servers either directly through a Fibre Channel (FC) card or over the Internet Protocol (IP) network (see Figure 9.1), so the way the data is accessed can differ as well. Old data is not relevant to dashboards and alerting systems, but it is relevant to audits. The live or current data, therefore, may be stored closer to the correlation systems than the archive data.

The enormous amount of data you will deal with in the course of logging and monitoring your environment means you must keep abreast of your available storage space and fabric capacity. As mentioned earlier, storage disk may be cheap, but acquiring EMC or other brands of SAN devices can be costly. Forecast your needs and have good capacity planning processes in hand!

For handling and alerting on this data, you will need to select from a small population of security event and incident management vendors whose technologies automate this daunting task. Expect the list of requirements you use during the selection process to be long.

Does the vendor support all your operating systems? Applications? Security tools?

Develop your list of requirements, then start shopping. Deploying a security event management solution is critical to your success and will help you meet PCI DSS 10.6.


TIP

Don’t be fooled by every magazine you pick up from the shelf at the bookstore. Selecting a good storage solution is a huge undertaking, because the costs can be high if you choose poorly. Refer to reports by groups such as Gartner (www.gartner.com) or Forrester (www.forrester.com) to understand where the market for such technologies is headed, which company has the best management tools, and which company is most viable.

Nothing hurts an IT investment more than hanging your hat on a company that goes bankrupt six months after you signed the purchase order.

Determining What You Need to Monitor

Knowing what you are monitoring is half the battle in planning your storage needs as well as in successfully deploying an auditable PCI DSS solution. Any well-intentioned system administrator can tell you the basic equations for estimating the amount of storage needed for a firewall or a Network Intrusion Detection System (NIDS) device. It cannot be stressed enough, however, that trapping everything that can be logged is not the point; doing so would be counterproductive. The protection of cardholder data is the purpose of PCI DSS, and it should therefore be the focus of all logging activity.

With that in mind, it is time to examine exactly what we are required to monitor.

Applications Services

It’s best to break the task of monitoring and logging into two less daunting components: application services and infrastructure. Whatever you do, the monitoring activity must not interfere with the primary job of the components you’re monitoring, which is providing services to merchants (i.e., serving up cardholder information to a point of sale and to a financial institution).

Monitoring tools are meant to be unobtrusive, exhibiting a small resource footprint on their hosts and networks; if you overwhelm either, card transactions can be impacted. This would obviously be an undesirable situation that can create significant financial burdens and sudden career changes.

In this respect, we have grouped data storage and data access under “Application Services.” These systems are the honey of the hive, and they are the reason for pursuing PCI compliance. Protecting them is our primary goal, just as reaching them is the goal of any hacker looking for data worth selling.

Data Storage Points

Storage of cardholder data is a necessary evil. During the course of business, a point-of-sale solution must make fast transactions possible to approve or deny sales to a cardholder.

The storage points must be protected by a number of solutions and are typically hosted on servers of some sort. Host-based intrusion detection (such as Tripwire), intrusion prevention, antivirus, and system logs are all sources of auditable data that must be captured and transmitted to your security event management solution.

Data Access Points

So, you have cardholder data inbound and outbound via an e-commerce system. Some is being stored, some is being sent on to a financial institution, and some is being sent back to your point-of-sale system in the form of acknowledgements, approvals, denials, take-the-card notices, and so on. How do you know that only your systems are able to see that data? How do you know that no other entity is intercepting or otherwise recording these data streams?

Of course, the users of the systems between the POS and the bank are all accounted for, and their access is logged and monitored. To make certain no hackers have gained access to these “supply chain” systems, access to the systems themselves is logged, which is best done through system logging (e.g., event logging on Windows-family servers). Microsoft has published a paper regarding logging of privileged access, which can be found at http://support.microsoft.com/kb/814595. Active Directory is an infrastructure component and is frequently leveraged to grant access to applications resident on Windows Server hosts. As such, access to the host is logged as well as access to the application.

Application logs can also be acquired using technology tailored to the task. Tools such as CA’s Unicenter WSDM (for Windows platforms) and Oracle’s WS-Security can be configured to acquire logs, then transfer them to your security event management solution.

It bears repeating: the logs are moved to the event management solution for archival, so no opportunity for alteration exists!

Infrastructure Components

The infrastructure is the carrier and handler of the cardholder data. Operating systems and the management tools that support them neither care about nor understand the financial transactions occurring around them. Therefore, the code that runs on these components is necessarily low-level. We don’t expect a Microsoft MOM agent to understand a Simple Object Access Protocol (SOAP) transaction, only its impact on central processing unit (CPU), memory, and input/output (I/O).

Because of this, it is safe to assume that this area is at least as important, if not more so, to watch with strong monitoring and alerting systems. It’s also easier to overdo the solution here and impact the mission-critical services above. However mission-critical services and infrastructure components are viewed, the care and feeding of one must not overwhelm the functionality of the other.

Infrastructure, by not having a good sense of what’s happening at the applications layer, is an ideal place for a hacker to set up camp and search for good data of the sellable sort. A hijacked operating system or worse, a sniffer planted on the network by an insider, is a sure means of gathering such data.

Host Operating Systems (aka Servers)

The host operating system is probably the trickiest piece of the puzzle. Too many people have access to it, no matter how far you lock it down: system administrators, security administrators, and of course backup/restore staff. Each of these roles needs a level of access; however, at no point should any of these people be able to alter the system logs.

This is where Security Information Management (SIM) becomes quite handy for system lockdown. When configuring the host, install a SNARE agent on it and configure the agent to send the host system logs to your log collector. Also, configure the host not to retain logs locally for longer than 48 hours.

By configuring the host in this fashion, you keep some local log data that is valuable to your administrators for technical reasons, but you’ve also moved the log data to a remote location, which makes it far more difficult for a hacker to cover his tracks.

A very important point: don’t simply move the logs and then delete them locally. Your system administrators and other support staff need these logs as well.
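The 48-hour local window and the warning against deleting logs prematurely can be combined into one housekeeping rule: delete a local log file only when it is older than the window and the collector has confirmed receipt. A minimal Python sketch, assuming a flat log directory and a hypothetical set of file names the collector has acknowledged (how that acknowledgment is obtained depends on your SIM and agent):

```python
import time
from pathlib import Path

LOCAL_RETENTION_SECONDS = 48 * 3600  # 48-hour local window from the text


def plan_pruning(mtimes: dict[str, float], forwarded: set[str], now: float) -> tuple[list[str], list[str]]:
    """Split expired files into (safe to delete, held because not yet forwarded)."""
    delete, hold = [], []
    for name in sorted(mtimes):
        if now - mtimes[name] <= LOCAL_RETENTION_SECONDS:
            continue  # still inside the local window; keep for administrators
        if name in forwarded:
            delete.append(name)
        else:
            hold.append(name)  # never delete what the collector has not confirmed
    return delete, hold


def prune_local_logs(log_dir: str, forwarded: set[str]) -> list[str]:
    """Apply the plan to a directory; return names of expired files held back."""
    files = {p.name: p.stat().st_mtime for p in Path(log_dir).iterdir() if p.is_file()}
    delete, hold = plan_pruning(files, forwarded, time.time())
    for name in delete:
        (Path(log_dir) / name).unlink()
    return hold
```

Held files are worth alerting on: a log that never reached the collector is itself a sign of trouble.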

Network Objects

Wired and wireless. Routers, switches, hubs. Firewalls. Each of these components provides for intercommunication between and within networks, and each generates logs of varying detail and size. Configure these platforms to send logs to your SIM solution.

Usually, you’re not going to be able to load software on a supervisor card to route traffic logs; that’s not how it works. You need skilled networking people to configure your infrastructure to send its log data (typically syslog messages and SNMP v2-compliant traps) to your SIM solution. The SIM will handle what it can in terms of load, which is where significant planning of your SIM solution comes into play.

The SIM itself must be very scalable if you’re dealing with a large network with many subnets or bridged environments. The traffic is valuable to the correlation activities, so you want to capture as much of it as you can. That means you need a log collector close to your heaviest log-generating locations, typically at a nexus of your network activity.


WARNING

Wireless networks are really a bad way to conduct business securely; nevertheless, they are accepted as a reality. PCI DSS 1.1 requires that a wireless operating environment be physically segregated from a wired environment and appropriately firewalled. It’s a good thought, but let’s face it: if someone can get into your wireless network, it’s not much of a leap to get into the wired one. Therefore, using a strong wireless access monitor (see the “Solutions” section below) is critical to controlling your environment.

One would be surprised at the number of vending machines that accept credit cards and use wireless connectivity to transmit the transaction!

Wireless security is still very immature, so bulletproof security measures are not achievable. You can take every precaution to give your environment a modicum of security from wireless hacks, but you will still be vulnerable. Proof of this can be seen in what happened to TJX Companies (owner of TJMaxx). Hackers leveraged its wireless network to gain entry to cardholder data storage platforms. This led to an $8 million gift card fraud scheme and to major lawsuits brought against TJX by the banks that had to foot the bill for the fraud.

Determining How You Need to Monitor

What Gets Monitored

The simple statement “monitor everything” might be wishful thinking for security professionals. After all, monitoring it all can cost much more than the business brings in the door. Cash margins still take precedence, but the cost of security must be weighed against the loss of prestige and associated business that follows a compromise. Within that balance, establish what budget can be assigned to monitoring, then ask, “What will hurt my business if it is compromised?”

Security Information Management

A SIM solution at its heart is nothing more or less than a log collector and its correlation engine. The log collector’s role is to acquire the log, normalize it (that is, translate the log data into the schema used by the vendor), then pass it on to the correlation engine.
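Normalization is easiest to see with syslog, the format most network devices and many hosts emit. The sketch below parses a BSD-style (RFC 3164) line and maps it into a simple common schema; the field names in the output dictionary are hypothetical, standing in for whatever schema your SIM vendor uses. The priority value decodes as facility = PRI // 8 and severity = PRI % 8.

```python
import re
from typing import Optional

# <PRI>TIMESTAMP HOSTNAME MESSAGE, e.g. "<34>Oct 11 22:14:15 fw01 ..."
RFC3164 = re.compile(
    r"^<(?P<pri>\d{1,3})>"
    r"(?P<ts>[A-Z][a-z]{2} [ \d]\d \d{2}:\d{2}:\d{2}) "
    r"(?P<host>\S+) (?P<msg>.*)$"
)

SEVERITIES = ["emergency", "alert", "critical", "error",
              "warning", "notice", "informational", "debug"]


def normalize(line: str, source_type: str) -> Optional[dict]:
    """Translate one RFC 3164 syslog line into a common event dictionary."""
    match = RFC3164.match(line)
    if match is None:
        return None  # hand unparseable lines to a fallback parser or quarantine
    pri = int(match.group("pri"))
    if pri > 191:  # highest valid value: facility 23, severity 7
        return None
    return {
        "facility": pri // 8,
        "severity": SEVERITIES[pri % 8],
        "timestamp": match.group("ts"),
        "host": match.group("host"),
        "source_type": source_type,
        "message": match.group("msg"),
    }
```

Once every source is mapped into the same fields, the correlation engine can compare a firewall event with a server event without caring which device produced it.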

The correlation engine uses rules, signatures (though not always), and sophisticated logic to deduce patterns and intent from the traffic it sees originating at the host operating system (OS) and network layers. Well-designed SIM technologies try to distribute much of the “heavy lifting” in terms of moving data, but the actual analysis of that data must be centralized in some form.

A SIM solution must be scalable! In other words, wherever your business has data, you should have some central point where a log collector is going to have a reasonable chance of receiving your logs, then passing them on. If you have an important subsidiary that handles significant volumes of cardholder data, you don’t want your log collector at a remote office. You need to have it near where the data flows and where it is stored.

Security Event Alerting

When a correlation engine has determined that something is amiss (see “Are you Owned?”), it will attempt to alert your security team in whatever fashion you configured. Typically, this alert is via e-mail or pager, though some folks use Windows popup messenger, a Web-based broadcast message, a ticket sent to a response center, or even a direct phone call from the alerting system.

Whichever means you decide on, make sure you do not use just one part of your infrastructure to deliver that message. If your business uses Voice-over-IP (VoIP) for phone services, a well-crafted network attack could disable your phone services. If an e-mail solution is disrupted by a spam attack or a highly virulent e-mail worm, you might not be able to receive the data from your SIM solution.

Make certain you use two separate forms of communication to send alerts from your SIM to your security team.
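One way to honor that rule in code is to refuse to run with fewer than two configured channels and to attempt every channel independently, so a failure in one path (a down mail server, a disrupted VoIP system) cannot block the others. A minimal Python sketch; each channel is a hypothetical callable you would back with your own pager, SMTP, or ticketing integration:

```python
from typing import Callable

# A channel delivers one message and raises an exception if delivery fails.
Channel = Callable[[str], None]


def dispatch_alert(message: str, channels: dict[str, Channel],
                   minimum: int = 2) -> tuple[list[str], list[str]]:
    """Send message over every channel; return (delivered, failed) reports."""
    if len(channels) < minimum:
        raise ValueError(f"need at least {minimum} independent alert channels")
    delivered, failed = [], []
    for name, send in channels.items():
        try:
            send(message)
            delivered.append(name)
        except Exception as exc:  # one broken path must not stop the others
            failed.append(f"{name}: {exc}")
    return delivered, failed
```

If delivered comes back empty, that is itself an incident worth escalating by whatever out-of-band means remain, such as a phone call.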

Are You Owned?

Getting Tipped Off That You Have a BOT on the Loose

When intrusion detection systems (IDSes) are configured correctly, your security team has spent time understanding which systems are expected to send data and initiate communications, and in what fashion. For instance, a Web server is not expected to initiate port 80 communications if it is configured to only support Secure Sockets Layer (SSL) communications for the purpose of completing online purchase transactions.

Similarly, the Web server is not expected to initiate communications to a foreign IP address using a port that supports I Seek You (ICQ) traffic.

When IDS is configured correctly, your solution will detect such anomalies and alert you to their existence.

Deciding Which Tools Will Help You Best

Log Correlation

SIM tools provide incredible capabilities, and the best (and sometimes most questionable) include strong correlation capabilities. This means the system is able to acquire logs and events from disparate sources, normalize and compare the data presented, and make a logical deduction as to their meaning.

For instance, a series of calls outbound from a file server to an IP address over ICQ channels would tip off the SIM tools that a famous worm is running amok within the network. This in turn would generate an alert received by the Security Administrator, who then would have words with a certain System Administrator or two.
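A correlation rule of that kind can be sketched as a sliding time window per source host. The Python below assumes connection events have already been normalized and time-synchronized, uses TCP port 5190 (ICQ’s customary default) as the watched port, and picks an arbitrary threshold and window. Real SIM rules are written in the vendor’s own rule language and combine many more signals.

```python
from collections import defaultdict, deque
from dataclasses import dataclass

ICQ_PORT = 5190  # customary ICQ port; an assumption for your environment


@dataclass(frozen=True)
class ConnectionEvent:
    timestamp: float  # seconds since epoch, from synchronized clocks
    src_host: str
    dst_ip: str
    dst_port: int


class OutboundBurstRule:
    """Flag a host that opens >= threshold connections to a watched port within a window."""

    def __init__(self, port: int = ICQ_PORT, threshold: int = 5, window: float = 60.0):
        self.port = port
        self.threshold = threshold
        self.window = window
        self._recent = defaultdict(deque)  # src_host -> timestamps inside the window

    def observe(self, event: ConnectionEvent) -> bool:
        """Feed events in time order; returns True while the host meets the threshold."""
        if event.dst_port != self.port:
            return False
        times = self._recent[event.src_host]
        times.append(event.timestamp)
        # Drop timestamps that have slid out of the window.
        while times and event.timestamp - times[0] > self.window:
            times.popleft()
        return len(times) >= self.threshold
```

A True result is what would be handed to the alerting path; the threshold and window trade false positives against detection speed and should be tuned against your own baseline traffic.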

Log Searching

We’ll cover the log searching tools in greater detail in the next section of this chapter, but a note about selection of this tool is worthwhile here.

Generally, a database should be searchable in the same manner by a variety of tools, but the fact is that many vendors spend less effort on their retrieval tools than on their correlation and storage components, a situation driven by market forces. PCI DSS mandates an ability to retrieve data in much the same way that Sarbanes-Oxley (SOX) does, so SIM vendors have put more effort into this space.

If possible, using the same vendor for data retrieval is the best possible approach. However, if the retrieval capabilities show significant delay in reacquiring data (more than 24 hours), then consider another vendor. PCI DSS 10.6 gives some guidance in this area, but from a security perspective, a day is an eternity—the perpetrator is already gone.

Alerting Tools

Each vendor of SIM tools provides integration points to hook into sophisticated alerting systems. These take the form of management consoles that in turn provide SMTP, SNMP, and other means to transmit or broadcast information to whatever mode of communication is in play (e.g., pager, Smartphone, and so on).

Auditing Network and Data Access

The audit activities are proof of the work you’ve put into your PCI DSS solution. They help you understand where you are and how far you need to go to achieve nirvana. Or something similar. Fortunately, in this instance, the card issuers have helped us out in the form of the PCI DSS Security Audit Procedures (version 1.1, found here: www.pcisecuritystandards.org/pdfs/pci_audit_procedures_v1-1.pdf).

Searching Your Logs

Finding the best tool to mine log data after it has been archived (that is, after it has been correlated and subjected to logic that detects attacks and such) is a very important bit of work. After you’ve committed your terabytes of data to disk, you need a way to look for cookie crumbs if you discover an incident after the fact.

Data mining is a term normally associated with marketing activities. In this case, however, it’s a valuable security discipline that allows the security professional to find clues and behaviors around intrusions of various sorts.

Some options for data mining include the correlation tool you selected for security information management. In fact, most SIM tools now carry strong data mining tools that can be used to reconstruct events between specific IP and Media Access Control (MAC) addresses.
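Stripped to its essentials, that kind of reconstruction is a filter over normalized, time-stamped events. The Python sketch below assumes a hypothetical event record with source and destination IP addresses and a source MAC address, and scans linearly; a production archive would rely on the SIM’s indexed query tools instead.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional


@dataclass
class Event:
    timestamp: float
    src_ip: str
    src_mac: str
    dst_ip: str
    action: str


def _norm_mac(mac: str) -> str:
    """Accept 00-1A-2B-... or 00:1a:2b:... forms."""
    return mac.lower().replace("-", ":")


def search_events(events: Iterable[Event], start: float, end: float,
                  ip: Optional[str] = None, mac: Optional[str] = None) -> Iterator[Event]:
    """Yield events in [start, end) that involve the given IP and/or source MAC."""
    wanted_mac = _norm_mac(mac) if mac else None
    for ev in events:
        if not (start <= ev.timestamp < end):
            continue
        if ip is not None and ip not in (ev.src_ip, ev.dst_ip):
            continue
        if wanted_mac is not None and _norm_mac(ev.src_mac) != wanted_mac:
            continue
        yield ev
```

Searching by both IP and MAC helps when an attacker has changed one but not the other, which is exactly the kind of crumb an after-the-fact investigation depends on.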

The point is that the solution must be able to integrate at the schema layer. You don’t want to invest in additional code just to make a data miner that typically looks for apples suddenly able to look for oranges. If your current SIM vendor doesn’t provide mining tools, insist on them, or take your business elsewhere.

Testing Your Monitoring Systems and Processes

Throughout this chapter, we have been preparing you to implement PCI DSS solutions covering each of the requirements. With diligence, skill, and just a little bit of luck, you have deployed a strong security solution that would meet the rigors of PCI DSS certification. Also, if done correctly, you will be ready to far exceed PCI DSS. Do not forget that the goal of PCI DSS is to create a framework for good security practice around the handling of cardholder data. It is not prescriptive security for the entirety of your IT infrastructure!

The activity of testing the PCI DSS environment, you might find, is actually quite straightforward. First you must find a good testing service. PCI DSS does not differentiate between what you do in-house and what is done for you by a third-party vendor, but the PCI group does provide a list of Approved Scanning Vendors (ASVs) that have been prescreened for their thorough processes and reporting. If you would rather not invest the significant dollars in creating your own penetration testing team, you would be well advised to scan the list of ASVs here: www.pcisecuritystandards.org/resources/approved_scanning_vendors.htm.

You also must engage a Qualified Security Assessor (QSA). The QSA is your auditor. This is the person who will actually walk through your test results and validate that your business is PCI DSS certifiable. The QSA is covered in Chapter 3.

Network Access Testing

The ASV will need full access to your network to perform the testing here. The idea is to expose the solutions you have deployed to appropriate testing. Every point of ingress and egress must be examined for issues, even where doing so is difficult.

Penetration Testing

Every penetration test begins with one concept: communication. A penetration test is normally viewed as a hostile act; after all, the point is to break through active and passive defenses erected around an information system. Communication is important because you’re about to break your security. Companies that have not approved a penetration test typically come down hard on the tester.

During the time of the penetration test, alarm bells will ring, processes will be put into motion, and, if communication has not occurred, and appropriate permissions to perform these tests have not been obtained, law enforcement authorities may be contacted to investigate. Now wouldn’t that be an embarrassment if your penetration test, planned for months, had not been approved by your Chief Information Officer (CIO)?

Intrusion Detection and Prevention

Detection and prevention technologies have collided in recent months and are certain to converge further over time. In the context of network and host activities, look for solutions where best-of-breed technology is represented. Intrusion detection and prevention are increasingly housed on the same platform, an area where the business can see a better return on investment than with standalone solutions.

Intrusion Detection

Intrusion detection is a funny thing. Some products focus on the network layer, others on the application layer. In a PCI DSS environment, you are typically dealing with application-layer traffic: Web services behaviors and transactions using Extensible Markup Language (XML), SOAP, and so on. An IDS can therefore be classified in two ways: by the layer of traffic it inspects (network or application) and by where it is deployed (on the network or on a host).

A network IDS works in a similar vein to an Intrusion Prevention System (IPS), except that its purpose is to detect situations such as distributed Denial of Service (DDoS) attacks, whereas an IPS simply permits or denies certain traffic.
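The difference in purpose can be illustrated with one simple heuristic a network IDS might apply to flood-style DoS traffic: count events in a sliding time window and raise an alert when a threshold is crossed, rather than permitting or denying any individual packet. This is a minimal sketch; the `RateAlarm` class and its thresholds are hypothetical, and real products combine many such signals.

```python
from collections import deque


class RateAlarm:
    """Alert when the number of events inside a sliding time window exceeds a threshold."""

    def __init__(self, window_seconds, max_events):
        self.window = window_seconds
        self.max_events = max_events
        self.times = deque()  # timestamps of events still inside the window

    def observe(self, timestamp):
        """Record one event (e.g. an inbound connection); return True if over threshold."""
        self.times.append(timestamp)
        # Drop events that have slid out of the window.
        while self.times and self.times[0] <= timestamp - self.window:
            self.times.popleft()
        return len(self.times) > self.max_events
```

Note that the alarm only reports; deciding whether to drop traffic is the IPS's job.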

Intrusion Prevention

When configuring an IPS, the most important step is to catalog the traffic your network normally carries. Port 80 outbound from a given server, port 443 inbound and outbound, Network Basic Input/Output System (NetBIOS) and other protocols required by Active Directory, File Transfer Protocol (FTP), and so on each have legitimate purposes in most networks. The important point is to catalog the port, the expected origination, and the expected destination. Once that is documented, you can use that information to configure your IPS appropriately.
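The catalog described above can be kept as structured data so that observed traffic is checked against it before you write IPS rules. The host names, zone labels, and the `unexpected_flows` helper below are hypothetical; this is a sketch of the bookkeeping, not any IPS product's configuration format.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    port: int
    source: str       # expected origination (host name or zone label)
    destination: str  # expected destination


# Hypothetical baseline catalog: one entry per legitimate (port, origin, destination).
BASELINE = {
    Flow(80, "web01", "internet"),      # port 80 outbound from a given server
    Flow(443, "internet", "web01"),     # 443 inbound
    Flow(443, "web01", "internet"),     # 443 outbound
    Flow(21, "batch01", "partner-ftp"), # FTP with a known partner
}


def unexpected_flows(observed, baseline=BASELINE):
    """Return observed flows that are not in the documented baseline."""
    return [flow for flow in observed if flow not in baseline]
```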

If you’re configuring the network IPS, you’ll need all the data relevant to that area of the network. If you’re working on host IPS, you’ll need expected transaction information for that host.

Integrity Monitoring

Tools like Tripwire (www.tripwire.com) monitor the Message Digest 5 (MD5) hash, or checksum, of the system files on your applications and hosts. If these files are altered, a good file integrity solution will detect the change and alert you to it.
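The underlying technique is straightforward: record a cryptographic hash of each monitored file as a baseline, then recompute and compare later. This sketch defaults to SHA-256, because MD5 is no longer considered collision-resistant, but accepts `algorithm="md5"` to mirror the approach described above. The helper names are hypothetical, and this is not how Tripwire itself is implemented.

```python
import hashlib
from pathlib import Path


def file_digest(path, algorithm="sha256"):
    """Hash a file in chunks so large binaries do not have to fit in memory."""
    h = hashlib.new(algorithm)
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def build_baseline(paths, algorithm="sha256"):
    """Map each monitored path to its current digest."""
    return {str(p): file_digest(p, algorithm) for p in paths}


def compare_to_baseline(baseline, algorithm="sha256"):
    """Return (modified, missing) path lists relative to a stored baseline."""
    modified, missing = [], []
    for path, expected in baseline.items():
        if not Path(path).exists():
            missing.append(path)
        elif file_digest(path, algorithm) != expected:
            modified.append(path)
    return modified, missing
```

The baseline itself must be stored where an intruder cannot rewrite it, or the comparison proves nothing.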

To make the best use of a configuration assurance tool, however, you will need to implement (if you have not already) a decent change management process or configuration management database (CMDB), along with solid processes around its use. An organization that is ISO/IEC 17799 compliant will typically have this sort of solution in hand already. Compliance can be a good thing! Solutions from NetIQ (www.netiq.com) can help in this area.
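The value of the change management database shows up when an integrity alert fires: a change that matches an approved change window is expected, and one that does not deserves investigation. A minimal sketch of that reconciliation follows, assuming a hypothetical `ChangeRecord` export from your CMDB listing the affected paths and the approved window.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class ChangeRecord:
    """Hypothetical approved change ticket exported from a CMDB."""
    ticket: str
    paths: set       # files the change is allowed to touch
    start: datetime  # approved change window
    end: datetime


def unauthorized_changes(detected, records):
    """detected: iterable of (path, time) pairs reported by the integrity monitor.
    Returns the pairs not covered by any approved change window."""
    flagged = []
    for path, when in detected:
        covered = any(path in r.paths and r.start <= when <= r.end for r in records)
        if not covered:
            flagged.append((path, when))
    return flagged
```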

What are You Monitoring?

Focus your monitoring on the files that perform transactions or serve as libraries for your SOAP, XML, or ActiveX transaction applications. Alterations to these files can serve as ingress points for further misbehavior. The obvious point here is that if one of these files has been altered without authorization, someone with ill intent already has access to your network.

In addition, monitor your operating system's critical files and alert on unusual behavior.
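Deciding what to monitor usually ends up as a list of path patterns. The patterns below are hypothetical examples for a Linux payment host (an assumed `/opt/payments` install location plus well-known OS files); adjust them to your own applications and operating systems. The sketch simply expands the patterns into the concrete file list a file integrity tool would baseline.

```python
import glob
import os

# Hypothetical watch list for a Linux payment host. Paths are assumptions;
# substitute the locations of your own transaction code and OS files.
WATCH_PATTERNS = [
    "/opt/payments/bin/*",        # transaction application executables
    "/opt/payments/lib/**/*.so",  # shared libraries the application loads
    "/etc/passwd",
    "/etc/shadow",
    "/etc/ssh/sshd_config",
]


def expand_watch_list(patterns):
    """Expand glob patterns into a sorted list of existing regular files."""
    files = set()
    for pattern in patterns:
        # recursive=True lets "**" match any number of subdirectories.
        for path in glob.glob(pattern, recursive=True):
            if os.path.isfile(path):
                files.add(path)
    return sorted(files)
```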

All the industry-leading solutions in this space offer preconfigured packages specific to various software and operating systems, and you should use these packages to protect your systems. However, applications developed by small coding houses, or those you've written yourself, will need customization in order to be monitored. In that case, your vendor must be willing to work with you to extend their technology to cover the gaps.

Again, if the vendor will not or cannot support your needs, take your business elsewhere!

Solutions Fast Track

Identity Management

Develop and deploy a robust directory that is LDAP compatible.

Design and deploy access control to each component of your PCI DSS environment.

Certify that each component of your identity management solution can interface with those systems that provide you with identity data.

Make certain each role for each user in your environment has a unique identifier that can be mapped back to a single identity (user).

Security Information Management

Select a SIM vendor that can monitor all of the systems in your PCI DSS environment.

Design a log aggregation solution that will scale to the eventual size of your environment.

Deploy a storage solution that will handle the mass of data created by all the devices that generate logs.

Identify each component of the PCI DSS environment. Where a component handles cardholder data or can facilitate access to cardholder data, you should be collecting logs from it.

Network and Application Penetration Testing

Refer to PCI’s list of ASVs and select one for ongoing penetration testing.

Use a QSA to perform the actual assessment of your tools and processes.

Integrity Monitoring and Assurance

Integrity monitoring solutions are necessary to make certain your core application and host operating system files are not altered. Tools from www.tripwire.com can help in this area.

Configuration management solutions help you understand your current operating environment, thereby making it easier to configure the various solutions covered in this chapter. Tools from www.netiq.com can help in this regard.

Frequently Asked Questions

The following Frequently Asked Questions, answered by the authors of this book, are designed to both measure your understanding of the concepts presented in this chapter and to assist you with real-life implementation of these concepts. To have your questions about this chapter answered by the author, browse to www.syngress.com/solutions and click on the “Ask the Author” form.

Q: What is the difference between host-based intrusion detection (HIDS) and host integrity monitoring (HIM)?

A: Many different types of applications are labeled as host-based intrusion detection. In general, the distinction is that with a HIDS the end goal is the detection of malicious activity in a host environment, whereas a HIM system aims to provide visibility into all kinds of change. Detecting malicious change or activity is a big part of a HIM system, but that is not the entire motivation behind its deployment.

Q: My network IDS boasts real-time processing of events. Should my HIM system be real time as well?

A: Not necessarily. There is a belief that real-time processing of host-based events is good because it is natural at the network level. This is simply not the case. Host-based integrity monitoring usually involves a great many more nodes compared with network monitoring. If you receive host-based alerts in real time, are you going to respond to them in real time? Usually the answer is no. The best way to stop attacks in their tracks is to prevent them from happening in the first place. The intrusion prevention product made by Immunix is a good example of this.

Q: I have a firewall. Do I need an IDS?

A: Yes. Firewalls perform limited packet inspection to determine access to and from your network. IDSes inspect the entire packet for malicious content and alert you to its presence.
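The difference in inspection depth can be sketched in a few lines. In this simplified model, the firewall decides on a header field (destination port) while the IDS matches signatures against the payload. The port policy and both signatures are hypothetical, and real IDS signature languages are far richer.

```python
from typing import NamedTuple


class Packet(NamedTuple):
    dst_port: int    # header field
    payload: bytes   # application data


# Hypothetical policy and signatures, for illustration only.
ALLOWED_PORTS = {80, 443}
SIGNATURES = {
    "sql-injection": b"' OR '1'='1",
    "path-traversal": b"../../",
}


def firewall_allows(pkt):
    """Simplified firewall: decides on header fields only."""
    return pkt.dst_port in ALLOWED_PORTS


def ids_alerts(pkt):
    """Simplified IDS: names of signatures found anywhere in the payload."""
    return [name for name, sig in SIGNATURES.items() if sig in pkt.payload]
```

A request carrying an injection string on port 80 passes the firewall yet triggers the IDS. On port 443 the payload is encrypted, so an IDS can inspect it only where TLS is terminated or decrypted.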

Q: How many IDSes do I need?

A: The number of IDSes in an organization is determined by policy and budget. Network topologies differ greatly; security requirements vary accordingly. Public networks might require minimal security investment, whereas highly classified or sensitive networks might need more stringent controls.

Q: Do I need both HIDS and NIDS to be safe?

A: Although the use of both NIDS and HIDS can produce a comprehensive design, network topologies vary. Some networks require only a minimum investment in security, and others demand specialized security designs.

Q: Many of the statutes have overlapping control statements. Can I leverage output from a previous audit—say, PCI—to support SOX compliance?

A: To some extent, absolutely, but your auditor will determine to what extent. Auditors can't rely entirely on another auditor's work, but they can leverage some of it.

Q: I’ve gone through the PCI standard and it appears that the credit card companies want us to encrypt everything everywhere—for example, credit card numbers in repositories and even administrative connections to infrastructure devices. How can I achieve this, given my legacy environment?

A: The credit card companies realize that this is a challenge for many organizations. Because of this, they are relaxing PCI’s encryption requirements. For specifics, contact your PCI auditor.

Q: When you are performing an external penetration test, should you worry about performing port scans too often?

A: In the last seven years or so, the Internet has become a very dangerous place for unprotected systems. Because of the proliferation of automated attack tools, port scans can blend into the background noise of a typical Internet-facing system. That said, constantly launching multiple scan threads from a single source raises the chance that someone watching the perimeter will notice. An occasional scan may go undetected, but repeated ones might not.
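The detection side of this answer can be sketched as a simple perimeter heuristic: flag any source that touches more than a threshold number of distinct ports within a time window. The window and threshold below are hypothetical; the point is that a fast, repeated scan from one source crosses the threshold while an occasional, slow one may not.

```python
from collections import defaultdict


def likely_scanners(events, window_seconds, port_threshold):
    """events: iterable of (timestamp, source_ip, dst_port), sorted by timestamp.
    Flags sources that touch more than `port_threshold` distinct ports within any window."""
    recent = defaultdict(list)  # source -> [(timestamp, port), ...] still inside the window
    flagged = set()
    for ts, src, port in events:
        # Keep only this source's hits that are still inside the window, then add the new one.
        hits = [(t, p) for t, p in recent[src] if t > ts - window_seconds]
        hits.append((ts, port))
        recent[src] = hits
        if len({p for _, p in hits}) > port_threshold:
            flagged.add(src)
    return flagged
```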