14

Business Intelligence

WHAT'S IN THIS CHAPTER?

Business intelligence (BI) is an umbrella term that refers to technologies, applications, and a number of exercises an organization may use to deploy shared business processes across multiple business units. Business intelligence empowers users with the right insights and enables them to make better, faster, and more relevant decisions when they collaborate.

The focus of this chapter is on bringing SharePoint developers up to speed with the BI features of SharePoint Server 2010 through a series of step-by-step examples. This chapter also includes demonstrations of some of the new features shipped in the current wave of products with SharePoint Server 2010, Office Product 2010, and SQL Server 2008 R2.

CHALLENGES WITH TRADITIONAL BUSINESS INTELLIGENCE

Statistics in the BI industry state that the majority of people in organizations do not have proper access to data or to the tools that they require to make effective business decisions. Think about that for a moment. With all the advancements happening so fast in the business intelligence sector, isn't this horrible?

When you look at the problem from different angles, it's obvious that part the problem lies in how people have been implementing business intelligence over the past decade. This issue can be divided into three categories:

- Complexity in adoption

- Lack of must-have functionalities

- Hardware and software specifications

Until recently, the greatest challenge in many organizations was the fact that accessing data for the purpose of analysis was restricted to certain groups of people using very specialized tools. With only a handful of staff members able to make use of the BI solutions, business users would come with ad hoc inquiries for information resulting in highly qualified BI experts becoming a bunch of report writers, rather than people who look after the BI solutions and fulfill the ongoing corporate BI needs.

Furthermore, it was difficult to give the company leaders the ability to gauge the state of their business at a glance, so they could make agile decisions to keep the business moving forward. In many cases, delivering timely and accurate reports to key decision makers that summarized strategic and operational data has been done in unbelievably inefficient ways, such as through emails and file shares that could easily provide out-of-date data.

This left the door open for developers and third-party vendors to build custom applications that delivered reports to key decision makers efficiently, which in turn translated into more costs and more hardwired dependencies. Let's not forget that the most compelling reason to do BI is to support decision making. So, the question is: Why must customers pay extra for something that should have been included in the technology to begin with?

From the hardware perspective, building a decent BI solution required assembling the right hardware, compression algorithms, and networking components that constitute the solution. The challenge for many organizations extending the reach of their BI solutions to broader sets of users was the storage and the computing power that was required to host decent BI solutions and make them available to the masses.

Business intelligence is not only for answering the questions that users may have in mind. The more important part of BI is to help users ask the right questions, and also to guide them through an often resource-intensive process to get the insights they need. The types of questions may not necessarily be anticipated or preaggregated into the BI solutions, so the hardware, software, and bandwidth specifications for hosting those solutions must be powerful enough to respond to such on-demand queries in a reasonably fast manner.

All these issues have created quite a number of hurdles for the IT industry over the past decade. In the next section, you will look at the history of Microsoft BI, the integration of BI into SharePoint products and technologies, and how Microsoft has managed to address a major portion, if not all, of these issues.

INTEGRATION WITH SHAREPOINT: THE HISTORY

Like many other BI vendors at the time, Microsoft started its significant BI investment with the same limitations in adoption, lacking must-have functionalities and requirements for strong computing power. The problem was that most Microsoft BI solutions were strongly tied to SQL Server technology and SQL Enterprise Manager was the primary interface to interact with those solutions. Again, unless you knew how to work with SQL Server and to do BI, the chances that you were just a bystander in the whole BI world were very high!

Soon, Microsoft realized that the value of its BI platform would not become apparent until a paradigm shift occurred in its approach to doing traditional BI. Looking for a way to excel, Microsoft developed a new vision, which looked at things very differently than had been done before.

The new vision was based on taking BI to the masses, using it to connect people to each other and to connect people to data. The key area of focus was to take the BI out of the realm of specialty and niche tools and turn it into something mainstream. There were two primary justifications for the new vision. First, it would hide the difficulties of the underlying platform from the general public. Second, it would make the adoption of the platform much easier. Obviously, the more people who use a platform, the more valuable it becomes and the faster it grows.

Following the overall vision of “BI for everyone” and starting with SharePoint Portal Server 2003, Microsoft fostered this notion of integrating some aspects of their BI offering into their Information Portal technology. Theoretically, because SharePoint brings people together to work and make decisions collaboratively, it could have been the right starting point. However, this integration never extended beyond a couple of web parts natively rendering BI artifacts that are stored outside SharePoint content databases, in products such as Microsoft SQL Server Reporting Services 2000 and Microsoft Business Scorecard Manager 2005.

Okay, so what is wrong with storing BI artifacts outside SharePoint content databases? There are three obvious issues with this separation. First, you need to deal with a minimum of two separate products and repository frameworks to implement a single BI solution, which means more administrative effort. Second, users have to go through more than a hop to get to the backend datasource. For the environments without Kerberos delegation in place, this model can cause authentication issues — also known as double hops. The double hop (one hop from the client browser to the SharePoint server and another hop to the BI server) problem is not a bug. It's an intentional security design to restrict identities from acting on behalf of other identities. Third, since the SQL Server based BI products and SharePoint Portal Server 2003 were using different security models, it was difficult to map SharePoint roles and permission-levels directly to the roles and permissions understandable by the BI product. In other words, it was difficult to apply a unified authorization model across the products.

In the spring of 2006, Microsoft acquired analytics vendor ProClarity, and soon Business Scorecard Manager 2005 and ProClarityAnalytics products were merged and formed a new product named Microsoft PerformancePoint Server 2007.

Later on, with the release of Microsoft Office SharePoint Server 2007, Microsoft's BI offering turned into something that was way more than just a couple of integration hooks, as is the case with SharePoint Portal Server 2003. In Microsoft Office SharePoint Server 2007 (MOSS 2007), Microsoft made major improvements in four different areas: the Report Center template, full integration with SQL Server Reporting Services (SQL Server 2005 SP2), new Excel Services, and a Business Data Catalog for integration with line-of-business (LOB) applications.

Fortunately, Microsoft didn't stop there; they released more features that could change the way people build dashboard-style applications. Customers could use PerformancePoint Scorecard Builder 2007 and put together their own dashboards and publish them to the PerformancePoint monitoring server. Once the dashboards are published, customers could then use the Dashboard Viewer web part to integrate the dashboard into SharePoint pages. Again, the integration is just a web part that calls into PerformancePoint Server 2007 functioning as a standalone server. Both products were sold separately and they had different management environments and operations.

Even though the attempts Microsoft made to bring the best of both the SharePoint and BI worlds together in MOSS 2007 was great, it was still not enough to call it a full-fledged integration. In other words, the journey was not over yet! The next section is a sneak peek at some of the highlights of new BI features Microsoft added to SharePoint Server 2010.

HIGHLIGHTS OF BUSINESS INTELLIGENCE IN SHAREPOINT SERVER 2010

The team that was building SharePoint Server 2010 made significant changes based on the customer feedback and the lessons learned in MOSS 2007. Starting with SharePoint Server 2010 Server, PerformancePoint is designed as a service application on top of the SharePoint 2010 Server platform. What is important about the new design is that PerformancePoint and SharePoint are no longer two separate products. Instead, both are finally offered as an integrated product on the Enterprise CAL. The biggest advantage of this move is that PerformancePoint contents are all stored and secured within SharePoint libraries, and they can benefit from the new features and enhancements made to the core SharePoint platform. PerformancePoint itself got many new features and enhancements.

There are many improvements in Excel Services 2010. Microsoft started with limited PivotTable and Excel Services integration in SharePoint 2007 and then expanded beyond that very dramatically in the newest version of SharePoint products and technologies. SharePoint Server 2010 can integrate with a specialized version of the SQL Server Analysis Services (SSAS) engine, which allows business analysts to benefit from more sophisticated and high-performing interactive queries using PowerPivot.

In a nutshell, PowerPivot is a collaborative effort across several teams in Microsoft to make Excel, SharePoint, and SQL Server R2 work together to allow all users to discover and manage the right information, all done through the familiar environments such as an Excel client or a web browser.

On the API side, the enhanced Excel Services programmability model allows developers to interact with published Excel workbooks in several ways, such as through Enhanced Web Service APIs, the JavaScript object model, or the REST API. Last, but certainly not least, Excel workbooks work in the enterprise and in the cloud across many popular browsers such as Internet Explorer, Firefox, and Safari on Mac.

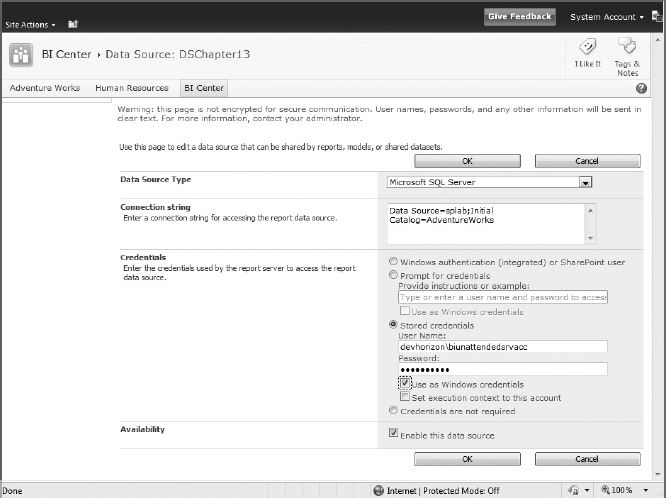

Reporting Services integration with SharePoint Server 2010 is much tighter and cleaner than before. Reporting Services 2008 R2 not only supports native and connected mode (previously known as SharePoint integrated mode), but it also supports a new lightweight integration mode named local mode. In this mode, customers need to install SharePoint Server 2010 and the SQL Server 2008 R2 Reporting Services add-in, but no Reporting Services server is required. Local mode is a valuable out of-the-box feature that allows the viewing of SSRS reports with no SSRS server. Reporting Services has seen a number of improvements as well, which are discussed later in this chapter.

Access Services 2010 is probably one of the biggest service areas for customers, because now they can model their databases in the Access client application, publish everything to SharePoint, and keep the client and server models in sync. In Access 2007, customers could move only the tables to SharePoint; the rest of application still lived in the Access client application. In Access 2010, you can move the entire application to SharePoint and map it to a SharePoint team site. All the tables and data become standard SharePoint lists, and the forms are converted to standard ASPX pages stored in SharePoint document libraries. Finally, data macros become workflows, and reports inside the application are turned into .rdl files and are associated with the Reporting Services report execution engine.

Recall from the previous section that one of the barriers to extending the reach of BI to everyone was the required computer power. With the current wave of Microsoft products, many of the desktop and large-server scalability issues are addressed. On the sever side, 64-bit-only products allow customers to take advantage of greater addressable memory range, modern CPU architectures, and multicore technologies, which in turn translates into tremendous computer power and faster BI solutions. On the client side, in-memory cache and compression algorithms allow BI analysts to load millions of rows of data into a PowerPivot workbook and do BI faster than ever.

The rest of this chapter discusses some of these new features in more detail.

IMPORTANT BI TERMS AND CONCEPTS

As much as a BI developer may get confused when he or she first hears commonly used terms in SharePoint, such as “site” and “site collection,” there are some BI terms that may sound a bit vague to a SharePoint developer with no BI background. Many BI techniques share terminology, and some terms are used interchangeably. In the interest of clarity, some of these terms are defined in this section and then referenced later on.

If you are a SharePoint developer, you are most likely familiar with flat, table-style data structures, because lists in SharePoint mimic the same data storage format. Relational database management systems (RDBMSs), such as the SQL Server database engine, also use tables for storing data. Although storing data in tables has its own advantages, browsing through rows and columns rarely leads to useful analysis, especially when someone is looking for patterns and relationships that lie hidden in huge piles of data and information.

For instance, if you were analyzing Internet sales information of AdventureWorks over the past few years, you would be more interested in the sums of sales per product, per country, and per quarter than in an analysis of the individual sales. Aggregating data at this level, although possible with most RDBMS engines, isn't the most optimized process.

Online Analytical Processing (OLAP) is a technology that tends to remove any granularity in the underlying data and focuses on enhanced data storage, faster data retrieval, and more intuitive navigational capabilities in large databases. Typically, OLAP's information comes from a database, referred to as a data warehouse. Compared to a relational database, a data warehouse requires much tighter design work up front for supporting analysis and data aggregation, such as summed totals and counts.

Because the storage unit used in OLAP is multidimensional, it's called a cube instead of a table. The interesting aspect of OLAP is its ability to store aggregated data hierarchically, and give users the ability to drill down or up aggregates by dimensional traits. Dimensions are a set of attributes representing an area of interest. For example, if you are looking at sales figures generally, you would be interested in geography, time, and product sales, as shown in Figure 14-1.

FIGURE 14-1

Dimensions give contextual information to the numerical figures, or measures, that you are aggregating on; for example, Internet sales amount, Internet gross profit, and Internet gross profit margin. OLAP calls each of these a measure. Because the measures are always preaggregated and anticipated by the cube, OLAP makes navigation through the data almost instantaneous.

If you want to look at a particular region that had a good quarter of sales, OLAP's navigational feature allows you to expand the quarterly view to see each month or day of the quarter. At the same time, you can also drill down into the region itself to find the cities with major increases in sales.

There are two more terms that need to be called out here:

- Multidimensional Expressions (MDX): MDX is the query language that lets you query cubes and return data.

- Datasource: A datasource is a stored set of information, such as a tabular database, OLAP cube, Excel spreadsheet, SharePoint list, or any other data object that contains the actual data.

USING THE ADVENTUREWORKS SAMPLE DATABASES

Your database source for examples provided in this chapter is the AdventureWorks database for SQL Server 2008 R2. You can download this sample database from CodePlex at http://msftdbprodsamples.codeplex.com. It's worth mentioning that the installation instructions are also available on CodePlex.

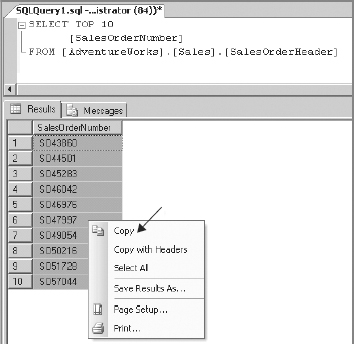

If the installation goes smoothly, you should be able to start SQL Server Management Studio, connect to the Database Engine, and see the new AdventureWorks databases in your SQL Server 2008 R2 instance.

Unfortunately, the installation package does not automatically deploy the Analysis Services database, so you need to deploy it manually.

![]() Before you can start the instructions that follow, ensure that the SSAS service account has permission to the SQL Server instance where the AdventureWorks DW2008R2 sample database exists. Additionally, ensure that the SSAS service account has permission to access the databases and is at least a member of the db_datareader role for the AdventureWorksDW2008R2 database.

Before you can start the instructions that follow, ensure that the SSAS service account has permission to the SQL Server instance where the AdventureWorks DW2008R2 sample database exists. Additionally, ensure that the SSAS service account has permission to access the databases and is at least a member of the db_datareader role for the AdventureWorksDW2008R2 database.

To deploy this database, you need to perform the following steps:

- Start Business Intelligence Development Studio.

- Choose File

Open

Open  Project

Project  Solution, and navigate to Drive:Program FilesMicrosoft SQL Server100ToolsSamplesAdventureWorks 2008R2 Analysis Services Project. According to the version of your SQL Server, click either the Standard or the Enterprise folder, and select Adventure Works.sln. As you can tell, SSAS databases are not supported in other editions of SQL Server.

Solution, and navigate to Drive:Program FilesMicrosoft SQL Server100ToolsSamplesAdventureWorks 2008R2 Analysis Services Project. According to the version of your SQL Server, click either the Standard or the Enterprise folder, and select Adventure Works.sln. As you can tell, SSAS databases are not supported in other editions of SQL Server. - Next, in the Solution Explorer, double-click the Adventure Works.ds datasource. This opens the Data Source Designer dialog box, as shown in Figure 14-2.

FIGURE 14-2

- Click the Edit button, and in the Connection Manager, supply your SQL Server Database Engine connection information. Click the Test Connection button. If the test succeeds, click OK and click OK again to save the changes.

- Right-click the solution in Solution Explorer, and then choose Properties. On the AdventureWorks DW 2008 Property Pages dialog box, change the Server property to your SSAS server name and database name.

- In the Solution Explorer, right-click the solution, and click Deploy Solution.

At this point, you should be able to start SQL Server Management Studio, if it's not already open, connect to the Analysis Services, and see the new AdventureWorks databases.

THE STARTING POINT: BUSINESS INTELLIGENCE CENTER

In SharePoint Server 2010, there are many ways to manage and display BI assets. One of them is to use a site template called Business Intelligence Center, which is the enhanced version of the Report Center in MOSS 2007. This site template encompasses many of the BI capabilities that Microsoft has introduced in SharePoint Server 2010.

Although using Business Intelligence Center is not the only way to access SharePoint's BI features, this site template can provide a central location for teams and departments within your organization to store, retrieve, and modify shared reports.

To begin creating and using the Business Intelligence Center to its full capacity, you must first enable a few site collection scoped features. To enable these features, perform the following steps:

- Click Site Actions menu

Site Settings.

Site Settings. - In the Site Collection Administration list, click the Site collection features link.

- Activate the SharePoint Server Publishing Infrastructure feature. PerformancePoint Services uses this feature to perform dashboard publishing.

- Activate the SharePoint Server Enterprise Site Collection Features feature. This feature enables Excel Services, Visio Services, and Access Services, included in the SharePoint Server Enterprise License.

- Activate the PerformancePoint Services Site Collection Features feature. This feature adds PerformancePoint content types and a Business Intelligence Center site template.

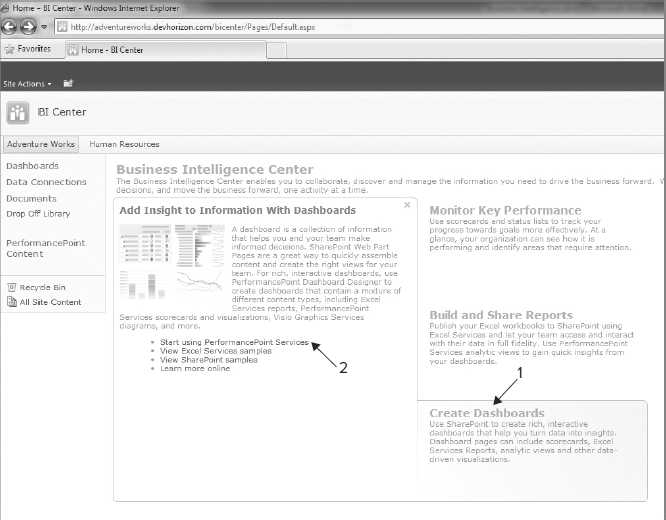

To properly examine the capabilities of the Business Intelligence Center in SharePoint Server 2010, create a new site with this template by clicking Site Actions ![]() New Site, and then choosing the Business Intelligence template, as shown in Figure 14-3.

New Site, and then choosing the Business Intelligence template, as shown in Figure 14-3.

Next, fill out the title and the URL and click the Create button. Your new site should look like Figure 14-4.

Just like any other template, the Business Intelligence Center includes several features that can help you organize dashboards, reports, and the connections to external datasources in one centralized and standardized place.

FIGURE 14-3

FIGURE 14-4

One obvious difference between Business Intelligence Center in this release and Report Center in the previous version of SharePoint is that lots of guidance and samples have been produced and placed into various pages of the site to assist users to start their BI implementation inside SharePoint as quickly as possible. And all samples just work!

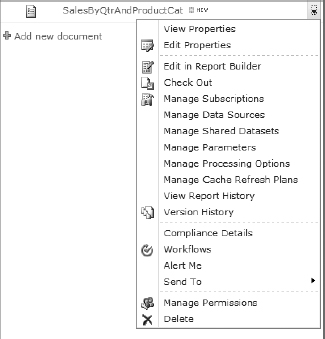

Most of the BI functionalities available in this template are contained in two document libraries and one list as follows:

- Dashboards Document Library: A library that contains exported PerformancePoint dashboards organized in folders

- Data Connections Document Library (DCL): A library that contains ODC Office Data Connection (ODC) files, UDC Universal Data Connection (UDC) files, and PerformancePoint data connections

- PerformancePoint Content List: A list that contains PerformancePoint content and OOB views for organizing content

There are two things about the new site that warrant more attention.

First, the BI Center automatically activates a site (not site collection) scoped feature named PerformancePoint Services Site Features. This feature adds the list and document library templates that are used in the Business Intelligence Center.

Second, PerformancePoint content storage has completely changed compared to the previous version. In SharePoint Server 2010, all PerformancePoint elements are stored, secured, and managed in SharePoint lists and document libraries, not on the PerformancePoint server. This one, right here, is a huge game changer!

![]() Like other templates in SharePoint, the BI Center template can be further customized to meet your business requirement needs. The BI Center template already has all the content types, and list and document library definitions for your BI solutions, and is a great starting point.

Like other templates in SharePoint, the BI Center template can be further customized to meet your business requirement needs. The BI Center template already has all the content types, and list and document library definitions for your BI solutions, and is a great starting point.

EXCEL SERVICES

Excel has always been one of the most widely used data analysis tools, with which users take corporate data and bring it into workbooks and, for the purposes of analysis, combine it with other datasources that users can't track back to learn where they came from, such as XML data coming from a web service or data feed. Microsoft introduced a new server technology in MOSS 2007, named Excel Services, which has become an increasingly popular choice for sharing and collaborating on the data kept inside the Excel workbooks.

The primary driving force behind this technology was to make Excel and Excel Services the analysis tools of choice for users doing BI. This was done by changing Excel from being just a client-side application into an application that works in the client and on the server. The server-side application model allows users to reuse the logic and content of their Excel workbooks in the browser, while easily protecting the IP behind them. Additionally, maintaining a single server-side version of the workbook gives everyone the right numbers and one version of the truth!

Excel 2010 picks up where Excel 2007 left off. There are two forms of Excel in SharePoint Server 2010: Excel Web App and Excel Services. Excel Web App is an extension of the Excel rich client that allows users to view and edit workbooks in the browser. Excel Services 2010 is the enhanced version of the same service that was offered back in MOSS 2007. Figure 14-5 illustrates what Microsoft has shipped in Excel Services 2010.

FIGURE 14-5

In the new architecture, an information worker uses the Office Excel 2010 rich client to author the Excel workbook and publish it to Excel Services 2010. Alternatively, he or she can save the workbook to a document library in Excel Services right on the server, where it is managed and secured by the site collections administrator. Once the workbook is published, it can be consumed and edited in three ways:

- Directly through the browser

- By downloading the workbook into the Excel rich client for further analysis as either a snapshot or an Excel workbook

- In custom applications through User Defined Functions (UDFs), the Web Services API, the Client object model, or the REST API

What is important about Figure 14-5 is that the user experience in Excel Services 2010 is a major subset of the full Excel 2010 client on the desktop. For instance, with Excel Services 2010, users can:

- Collaborate on the whole or just parts of a workbook while performing distinct operations such as in-cell editing, sorting, filtering, pivoting, and entering parameters

- Work with connected or standalone external datasources in the workbook

- Perform what-if analysis — a use of underlying datasources in real time to model different data scenarios

- Build dashboard-style applications by using the web part connection framework between web parts on the same page or across pages

Excel Services Architecture

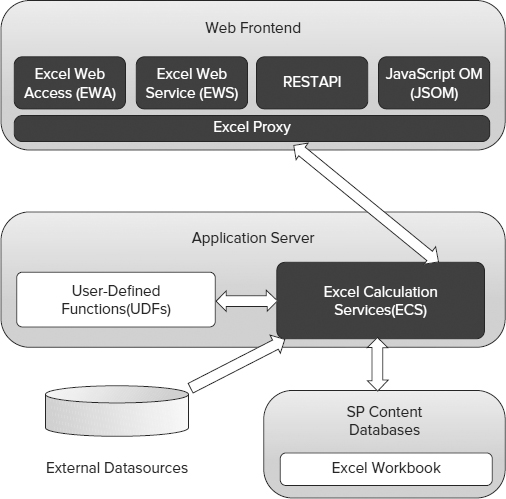

The functionalities that Excel Services 2010 offers are handled in three tiers of a SharePoint Server farm topology: Database Server, Application Server, and Web frontend, as shown in Figure 14-6.

Because the Excel Services architecture is built on the SharePoint farm topology, it can be scaled up or out using configurable load-balancing scenarios and several other options to support large numbers of workbooks and concurrent requests. All these settings can be configured from Central Administration Site ![]() Manage Service Applications

Manage Service Applications ![]() Excel Services

Excel Services ![]() Global Settings.

Global Settings.

Essentially, Excel Services is nothing without a workbook. An Excel workbook is a self-contained unit of an application that contains data, logic on the top of the data (a model), visualization, and external assets such as those that connect up to the external datasources. A workbook is typically authored in the Excel client application and deployed to Excel Services, where it is stored and secured inside the SharePoint content database.

FIGURE 14-6

Next is the Application Server layer, which stands in the middle. A key component of Excel Services installed on the Application Server is Excel Calculation Services (ECS). This service application is responsible for loading the workbook, managing the cache for concurrent access, and bringing data from external datasources. If a workbook contains custom logic implemented in UDFs, Excel Calculation Services combines that logic with the logic that was already placed in the workbook when it was authored.

The overall architecture of Excel Services limits interactions with the Application Server layer to being performed only through the Web Frontend layer, where three data access methods are exposed: the Excel Web Access (EWA) web part, the Web Services API, and the REST API.

EWA renders an Excel workbook in the browser with a high degree of fidelity with the Excel client. EWA is not new; it has been around since MOSS 2007, but what is new in Excel Services 2010 is a real JavaScript object model (JSOM) used to automate EWA. JSOM is typically JavaScript code that a developer inserts on a web part page that contains the EWA using a Content Editor web part or directly referenced in a custom ASPX page itself. JSOM is used to drive EWA and manipulate the rendered workbook inside. For example, you can use JSOM to capture the click event on a cell.

On the other hand, the Web Services API provides an interface to enable applications to access the workbooks through SOAP calls, while the REST API provides access to all aspects of a workbook, such as the visuals, the model, and the data, through simple URLs. All these middle-tier data access methods are covered in more detail later in this chapter.

Office Data Connection

As with any other BI solution, when you start off with an Excel application, the first thing you may want to do is to go after data. In this section, you create an Office Data Connection (.odc) file and store it in the Data Connections Library of the Business Intelligence Center you created earlier in this chapter.

To connect to SQL Server Analysis Services and pull in some data, follow these steps:

- Open Office Excel 2010.

- From the Data tab in the Ribbon, click the From Other Sources button.

- For this particular example, select From Analysis Services.

- Specify the Analysis Services instance you want to connect to, and click Next.

- Select the database and the cube you want to connect to. For this example, you want to connect to the AdventureWorksDW2008R2 database and the AdventureWorks cube.

- Change the filename and friendly name to AdventureWorksDW_ADCube.odc and AdventureWorksDW_ADCube, respectively. Also, make sure that you check the Always Attempt to Use This File to Refresh Data box, as shown in Figure 14-7.

FIGURE 14-7

- Click Authentication Settings, and select None as the method of authentication, as shown in Figure 14-8. This selection forces Excel Services to use the unattended service account to authenticate to SSAS. The unattended service account is covered in detail in the next section.

FIGURE 14-8

- Click Finish. When Excel 2010 displays the Import Data dialog box, select Only Create Connection, and then click OK.

- Browse to C:Users[Current User]DocumentsMy Data Sources and upload the AdventureWorksDW_ADCube.odc file to the Data Connections Library in the Business Intelligence Center site you created earlier in this chapter.

- Edit the property of the file and change the Content Type to Office Data Connection file.

- Next, create a new web part page called ExcelDemo and insert the Data Connections Library in the Footer web part zone, as shown in Figure 14-9.

FIGURE 14-9

Creating the .odc files this way is a best practice, for two reasons. First, users don't need to know how to create the .odc files. Instead, they are created by IT and are made available to users. Second, this approach enables users to access the data that they need quickly by clicking the Office Data Connection file, which opens the Excel 2010 client, automatically sends their credentials to the server, and authenticates them to the Analysis Services server. As a person who manages this connection string, if you ever want to adjust things or change them around, there is only one place you need to go. After you apply your new settings, they are propagated into the workbooks in future connection requests.

The Unattended Service Account

As you saw in the previous section, Excel Services uses three authentication options to authenticate to SSAS: Windows authentication, SSS, and None.

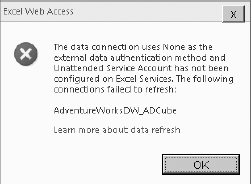

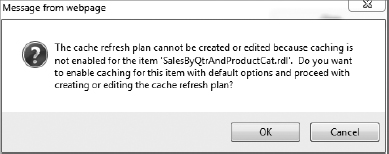

The only case in which you would use Windows authentication is when SSAS accepts Windows authentication, and you want to let the identity of the workbook viewer delegate to the Analysis Services instance. This authentication method is known as peruser identity and only Kerberos enables it. If you select Windows Authentication without implementing Kerberos, after the workbook is published to SharePoint, users get the error shown in Figure 14-10 when viewing the workbook in the browser.

FIGURE 14-10

In some scenarios you want to have a single account act as a proxy for all your users when accessing the backend datasource. This account is referred to as an unattended service account, and it is widely used in the new service application infrastructure in SharePoint 2010.

![]() Note that “unattended service account” and “application pool identity” are not the same type of account. The unattended service account is a service application setting such as in Excel Services, Visio Services, Business Connectivity Services, and PerformancePoint Services. The unattended service account is stored in the service application's database and has nothing to do with IIS.

Note that “unattended service account” and “application pool identity” are not the same type of account. The unattended service account is a service application setting such as in Excel Services, Visio Services, Business Connectivity Services, and PerformancePoint Services. The unattended service account is stored in the service application's database and has nothing to do with IIS.

In MOSS 2007, the single sign-on (SSO) feature somehow implements the concept of the unattended service account, but not in a practical way. Unfortunately, the SSO feature introduced some serious limitations with non-Windows identity providers and anonymous users, so it was replaced with the Secure Store Service (SSS) in SharePoint Server 2010. The SSS works with all types of authentication providers, and it's not limited to Windows identities. It provides access to external datasources under the security context of a predefined set of credentials stored in the Service Application's database.

In Excel Services, each workbook can have its own unattended service account or they all can share a global unattended service account. If the workbook connection's authentication type is set to SSS, you need to reference a target application ID that stores the unattended service account credentials required for authenticating to SQL Server Analysis Services. This account might not be used by other BI applications that need to talk to the same backend datasource. Whether to use a single or different unattended service accounts really boils down to your business requirements, but keep one thing in mind: the more accounts you create, the more administrative effort is required to manage them.

If the workbook connection's authentication type is set to None, the global unattended service account is used. This account, along with many other Excel Services settings, can be configured in the Excel Services service application. Failure to define this account will cause the error in Figure 14-11 to be thrown.

The process of creating the unattended service account is fairly straightforward. Before you start creating this account, you need to ensure that you are either the farm administrator or the service application administrator for the instance of the Secure Store Service.

To create this account, perform the following steps:

- Browse to the Central Administration site.

- From the Application Management category, click Manage Service Applications.

- From the list of existing service applications, click Secure Store Service application.

- From the Ribbon, click the New button.

- Figure 14-12 shows the settings for the new target application. In the Target Application ID box, type a name to identify this target application. In the Display Name box, type a friendly name that's shown in the user interface. In the Contact Email box, type the email address of the primary contact for this target application. Change the Target Application Type to Group for mapping all the members of one or more groups to a single set of credentials that can be authenticated to the SQL Server Analysis Services instance, and then click Next.

FIGURE 14-11

FIGURE 14-12

- Since the target application type is Group, you can leave the default values in this page untouched and move on to the next step, by clicking Next.

- In the Specify the Membership Settings page, in the Target Application Administrators field, specify all users who have access to manage the target application settings. Again, since the target application type is Group, in the Members field, specify the groups or users that are mapped to the credentials for this target application.

- Click OK.

At this point, you should be able to see the new target application along with other target applications in the Manage Target Applications page, as shown in Figure 14-13.

FIGURE 14-13

After creating the target application, you should set credentials for it. To set credentials for the target application you've just created, follow these steps:

- Select the target application you have just created, and then, in the menu, click Set Credentials.

- Fill out the fields for setting credentials (see Figure 14-14), and click OK. This is the account that is used to authenticate to SSAS, so you need to ensure that this account can authenticate to SSAS and is at least a member of the db_datareader role for the AdventureWorksDW2008R2 database.

FIGURE 14-14

You are almost there! The last step is to introduce the new target application to Excel Services. To do so, follow these steps:

- Browse to the Central Administration site.

- From the Application Management category, choose Manage Service Applications.

- From the list of existing service applications, click Excel Services.

- From the Managed Excel Services page, click Global Settings.

- Browse all the way down to the External Data section, and specify the new target application ID (string text) in the Application ID textbox, as shown in Figure 14-15.

FIGURE 14-15

- Click OK when you are done.

Authoring Workbooks in Excel

AdventureWorks is a company that knows Business Intelligence gives them an edge over their competitors because when they make business decisions, those decisions are based on the latest and most up-to-date analysis of relevant business data. Therefore, the BI team at AdventureWorks created an OLAP cube to keep track of the Internet sales and made it available to the business analysts to use for analysis.

The goal of this section is to perform a quick analysis of the data kept in that cube. The section starts out with a step-by-step procedure to create a simple PivotTable report and moves into some of the newest Excel 2010 features used for easier visualization and better insights into the key data values.

This section assumes that you already completed the following two tasks:

- Created a site from the Business Intelligence Center template and named it BI Center (see the “The Starting Point: Business Intelligence Center” section).

- Properly set up an Office Data Connection to access the AdventureWorksDW2008R2 and the AdventureWorks cube and uploaded the .odc file to the Data Connections Library (see the “Office Data Connection” section).

PivotTable and PivotCharts

Almost every spreadsheet application currently on the market ships with a feature that allows sorting and summarizing large tables of data independent of the original data layout kept in the spreadsheet itself. This feature has different names in products such as Microsoft Excel, OpenOffice.org Calc, Quantrix, and Google Docs, but the concept remains the same in all these products.

In Microsoft Excel, this capability is called a PivotTable. Essentially, a PivotTable is a powerful data summarization and cross-tabulation object that allows you to do free-form layout of your business data. For instance, when you use Microsoft Excel for cube browsing, you can import the cube's data into Excel and represent it as PivotChart or PivotTable report, connected to the same cube.

The following steps help you create a new Excel workbook containing an OLAP PivotTable report based on data in the Analysis Services cube.

- Browse to the ExcelDemo web part page in the BI Center, and click the AdventureWorksDW_ADCube.odc Office Data Connection file to open Excel.

- At this point, you should see the PivotTable field list, and an empty PivotTable report is placed in the current worksheet at =$A$1.

- To add some measures to the PivotTable, change Show Fields Related to Internet Sales, and select the following three fields as measures:

- Internet Gross Profit

- Internet Gross Profit Margin

- Internet Sales Amount

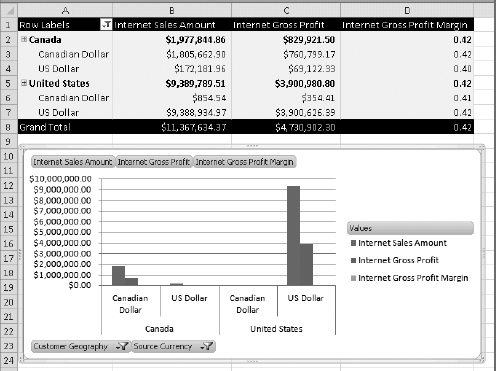

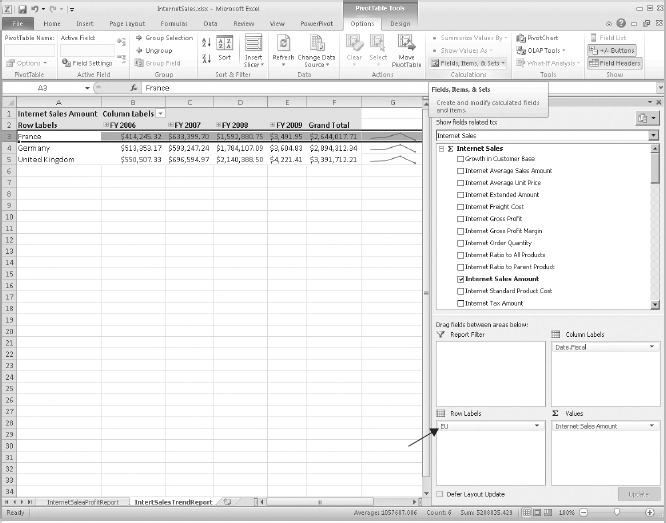

- Now you need to add two dimensions to the PivotTable. Select Customer Geography and Source Currency Name from the PivotTable Tools tab, select the Design tab, and under PivotTable Styles, choose an appropriate style. Your workbook should look like Figure 14-16.

With the Internet Sales PivotTable inserted, make the report complete by adding a PivotChart to the worksheet. This chart will give focus to the sales data and make it easy to understand.

FIGURE 14-16

To add a PivotChart based on the data in the Internet Sales PivotTable, you need to perform two easy steps as follows:

- From the PivotTable Tools contextual menu, click Options, and then the PivotChart button to insert a PivotChart.

- Select Clustered Column chart type, and click OK.

PivotCharts are new in Excel 2010, and they are like normal Excel charts with one major difference. In PivotCharts, you can drill down into the hierarchies to identify the items you want to view, and this affects both the PivotChart and the associated PivotTable. In Excel 2007, you could only link charts to PivotTables and only navigate them through the PivotTable.

PivotCharts are new in Excel 2010, and they are like normal Excel charts with one major difference. In PivotCharts, you can drill down into the hierarchies to identify the items you want to view, and this affects both the PivotChart and the associated PivotTable. In Excel 2007, you could only link charts to PivotTables and only navigate them through the PivotTable. - In the PivotChart you just inserted, click the Source Currency drop-down list and find US Dollar and Canadian Dollar. Then click OK.

- Click the Customer Geography drop-down list and select Canada and United States. Your PivotTable should look like Figure 14-17.

FIGURE 14-17

Two things need to be highlighted here. First, notice that when you apply filters to the PivotChart, a search box appears that allows you to look for a specific item in the hierarchy and find it. That means you don't have to browse all the way down or up in the hierarchy to find it. This is particularly important, because if a field contains lots of items, it's fairly difficult to find what you are looking for. Second, when you change the filters on the PivotTable, this change affects the PivotTable and vice versa!

Label Filtering

In an Excel PivotTable, you can filter a column or row label by using the Label Filter or Value Filter options. In the Internet Sales PivotTable example, you can select the PivotTable to report on backend data only when Source Currency is equal to US Dollar, as shown in Figure 14-18.

FIGURE 14-18

To filter Source Category on US Dollar only, follow these steps:

- In the PivotTable, click the arrow on the right of the Row Labels heading.

- In the Select Field drop-down list, select Source Currency.

- In the search box, type US Dollar.

- In the list of currencies, make sure that US Dollar is selected, and then click OK.

- The PivotTable now shows results for work done on the selected currency, and you can focus your analysis on that data.

Visual Slicers

In Excel 2007, if you wanted to filter a PivotTable or PivotChart, you had to add it to the Report Filter section. Additionally, if you wanted to select multiple items in a filter, Excel would have shown you the Multiple Items tag without telling you which items you had selected. In Excel 2010, Visual Slicers address issues of this kind. In nutshell, Slicers allow you to filter the data on PivotTables and PivotCharts with just a single click, which is much easier than before.

There are three characteristics about Slicers that make them a great navigation paradigm in Excel 2010. First, Slicers are rendered as buttons, making the filtering operations relatively easy. Second, you can select more than one item by holding the Shift key and clicking each item or by dragging your mouse over a range. Third, the filters that produce no result from the backend datasource are grayed out, which is yet another good indicator when interacting with PivotTables and PivotCharts.

The following steps will help you add Category and Subcategory Slicers to the Internet Sales PivotTable.

- In the PivotTable tools, select the Options tab.

- In the Sort & Filter category, choose Insert Slicer

Insert Slicers.

Insert Slicers. - Select Internet Sales from the drop-down list, and then select Product

Product Categories. Select both Category and Subcategory.

Product Categories. Select both Category and Subcategory. - Resize and position the stacked version of both Slicers on the right side of the PivotTable. Also, using the Options menu, increase the columns in the Subcategory Slicer to 4.

- Now, click Bikes on the Category Slicer and notice how the related fields and the actual data are affected in the Subcategory Slicer PivotChart, and the PivotTable itself, as shown in Figure 14-19.

FIGURE 14-19

Sparklines

Another addition to Excel 2010 gives you the capability to add Sparklines to a set of data being reported on. A Sparkline is a powerful graphic that brings meaning and context to what it describes. Simply put, think of a Sparkline as a mini-chart without any fluff, such as tick marks, labels, axis lines, or a legend.

Excel 2010 ships with three distinct types of Sparklines:

- Line

- Columns

- Win/Loss

If you have worked with and loved the conditional formatting data bars introduced in Excel 2007, you will find Sparklines even easier to use.

The following steps help you add Sparklines to the Internet Sales report to highlight trends in the Internet sales report and identify Line values with special formatting.

- Create a new sheet and name it InternetSalesTrendReport.

- From the Insert tab, click PivotTable and add a new PivotTable to the existing sheet at =$A$1.

- In the Create PivotTable dialog box, select Use an External Datasource and select AdventureWorksDW_ADCube from the Connections in this workbook section.

- In the PivotTable Field List task pane, choose Internet Sales in the Show Fields Related To drop-down list, and add a measure of Internet Sales Amount.

- Now, select the CustomerGeography and Date.Fiscal as the attributes on which the Internet Sales Amount measure should be analyzed.

- Place the cursor at cell =$G$3.

- From the Insert tab, under the Sparklines group, click on Line Sparkline.

- With the Create Sparkline dialog box open, select the cells from B3:E3, as shown in Figure 14-20.

FIGURE 14-20

- Click OK to create the Sparklines.

- From the Design tab, update the formatting of the inserted Sparkline to highlight the min and max points.

- The Sparkline object is like any other object placed in a cell, such as a formula, so it can be selected and dragged from cell G3 to G8 to insert the same line chart bound to other rows of the PivotTable, as shown in Figure 14-21.

FIGURE 14-21

Show Value As

In Excel 2007, if you wanted to perform certain types of calculations against a PivotTable for rows and columns, you would have to do this either outside of the PivotTable in your own function or turn them into a cube formula.

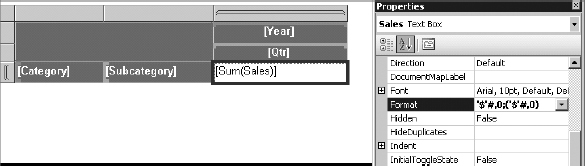

In Excel 2010, Microsoft has introduced a new feature named Show Value, which allows you change the way you view values, by selecting from a list of predefined calculations, as shown in Figure 14-22.

FIGURE 14-22

The following steps help you calculate and display regional Internet sales as a percentage of the country sales.

- Expand the Canada node to show all the available regions.

- Right-click the value in any of the fiscal years, and from the context menu, select % of Parent Total.

- From the Base Field drop-down list, select Country.

- Click OK.

By looking at the PivotTable, you can quickly find out that from 2002 to 2005, British Columbia had the biggest percentage of sales among all Canadian provinces, as shown in Figure 14-23.

FIGURE 14-23

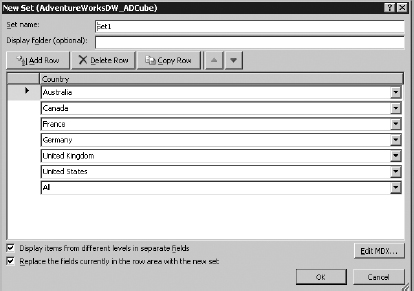

Named Sets

When working with OLAP PivotTables, there are scenarios where you want to work with the same logical group of items from the underlying data across multiple reports. For instance, most of the report layouts needed by AdventureWorks need to show information about European countries. The problem is that this grouping doesn't exist in the cube, so you always end up applying the same filter to get reports for such countries over and over again.

A new feature in Excel 2010 that helps resolve issues of this kind is the ability to define Named Sets. This new feature allows you put common sets of items together and reuse them. This grouping can be done based on row items, column items, or your own MDX queries.

The following steps help you add a Named Set to the Internet Sales PivotTable.

- From the PivotTable tools, click Options in the Ribbon.

- Select Fields, Items, & Sets, and then select Create Set Based on Row Items.

- The New Set creation UI pops up, as shown in Figure 14-24. Note that Subtotals and Grand totals contain an All member. The UI contains all the tuple that currently define the row labels of the PivotTable.

FIGURE 14-24

- Delete the countries that are not European.

- Change the new Set name to something easier to remember in the future, such as EU.

- Click OK. Notice that the grouping has been created (without applying any filter), and the new Named Set is placed on the corresponding axis (Row Label), as shown in Figure 14-25.

FIGURE 14-25

At this point, you have a reusable Named Set containing three European countries. The new Named Set is attached to the workbook and moves with it, so if anyone opens the workbook, they can reuse this set in their own PivotTable and focus on the rest of their analysis. Of course, the example used in this section was relatively simple, but the idea of reusability is pretty much the same, no matter how complex your Named Set becomes.

Although Named Sets may sound just like simple groupings of items, in reality they offer way more than that. You can do things with Named Sets that otherwise wouldn't be possible, such as combining items from multiple hierarchies.

In previous versions of Excel, you could create Named Sets with some limited functionalities, but there was no graphical user interface, and this could be done only through the Excel object model and by writing your own MDX queries. The Named Sets defined programmatically consisted only of items from a single hierarchy, and they could never be dynamic in nature.

In Excel 2010, you can make Named Sets based on your own custom MDX and use them in PivotTables to dynamically change their dimensionality. These Named Sets — called Dynamic Sets — were first introduced in Analysis Services 2008, but unfortunately Excel 2007 couldn't fully benefit from them. For example, the Top 50 Selling Countries is one of the great examples in which using Dynamic Sets can help a lot. Another example is when you want to see European countries when you're filtering on Source Currency by Euro and show North American countries when you're filtering by American Dollar.

What-If Analysis

Like Dynamic Sets, Analysis Services has included writeback capability for a while, but it was not implemented in Excel out of the box. In previous versions of Excel, if you ever clicked a cell in a PivotTable to edit its content, you would get an error message saying that PivotTables cannot be edited.

In Excel 2010, the ability to write back against a cube and change the underlying data is referred to as what-if analysis. This particular feature becomes extremely helpful when you use Excel and your existing data to plan something in future. In a nutshell, what-if analysis is all about answering what-if questions you might have in your mind when looking at a PivotTable.

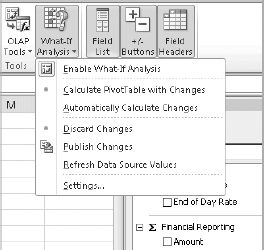

To use what-if analysis, you need to enable it from the PivotTable tools ![]() Options tab.

Options tab.

Once the what-if analysis is enabled, you can edit any values and enter the value of your choice. At the end, you can automatically calculate the selected change or have the cube calculate it for you and commit the information back to the cube, as shown in Figure 14-26.

FIGURE 14-26

Publishing the Workbook

Now that you have created a workbook in Excel 2010, it's time to make this workbook available in SharePoint and examine the capabilities of Excel Services 2010. This process is known as publishing a workbook to Excel Services.

There are many configuration settings that can be applied to the Excel Services application and the site that hosts your published workbook. Although diving into all these configuration settings is out of the scope of this chapter, the rest of this section discusses some of these settings that can get you up and running.

Trusted Locations

A major consideration in deploying to Excel Services, and quite frankly the one you will want to plan carefully, is to determine the trusted Excel file locations and trusted connection document libraries in your SharePoint farm. The following steps walk you through defining these locations:

- Browse to the Central Administration site.

- From the Application Management category, choose Manage Service Applications.

- From the list of existing service applications, click Excel Services.

- From the Manage Excel Services page, select Trusted File Locations.

- If the location where you plan to publish your workbook is not in the list of trusted locations, click Add Trusted File Location and define that location.

- Make sure you enable the workbook to make external connections by setting the Allow External Data setting to either “Trusted Data Connection Libraries Only” or “Trusted Data Connection Libraries and Embedded.”

Click OK to go back to the Excel Services Trusted File Location page. At this point, the list of trusted file locations should look like Figure 14-27.

FIGURE 14-27

- Browse back to the Manage Excel Services page, and this time around, select Trusted Data Connection Libraries.

- From the Excel Services Trusted Data Connection Libraries page, specify the Data Connection Library from which Excel workbooks opened in Excel Services are allowed to access the Office Data Connection files.

![]() Note that because service applications can be associated with multiple web applications, you should define multiple trusted locations for each web application that hosts your Excel workbooks. The same thing is true if you have assigned multiple Excel Services Service applications to one web application. You need to define the trusted locations for each service application.

Note that because service applications can be associated with multiple web applications, you should define multiple trusted locations for each web application that hosts your Excel workbooks. The same thing is true if you have assigned multiple Excel Services Service applications to one web application. You need to define the trusted locations for each service application.

Publishing to Excel Services

With the trusted locations properly configured, the next step is to publish the Internet Sales workbook to SharePoint and view it using Excel Services.

The following steps help you publish the workbook:

- From the Excel 2010 Ribbon, click File to open the Backstage.

- Switch to the Share tab.

- From the Share billboard, click the Publish to Excel Services option, and then click Publish to Excel Services.

- Click the Excel Services Options button that appears below the Open in Excel Services option.

- The Internet Sales workbook contains two worksheets, so you can decide if you want to publish the entire workbook or just portions of the workbook based on named cells, or parameters, defined within workbook. In this particular example, you will choose Entire Workbook, as shown in Figure 14-28.

FIGURE 14-28

- Enter the path of the BI Center site you created earlier in this chapter and click Save.

- In Internet Explorer, navigate to the URL you specified in Step 6.

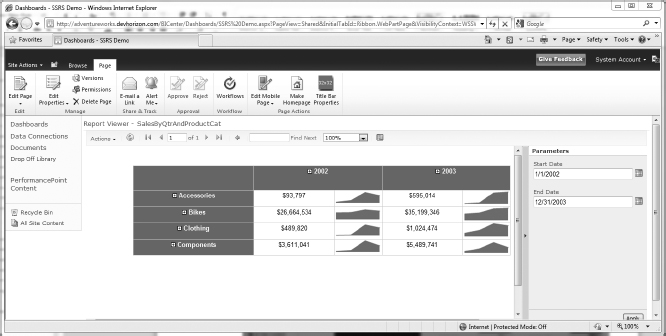

- Click the link to InternetSales.xlsx to view it in the browser, as shown in Figure 14-29.

FIGURE 14-29

After a workbook is published to SharePoint, Excel Services performs loading and calculations included within the workbook on the server, which means that whatever logic is behind the workbook is no longer directly accessible to the end users.

Next, the Excel Web App tries to open the workbook in view mode. Unlike the previous version, in Excel Services 2010 if the workbook contains unsupported features, it is still rendered, but some of the functionalities may not work properly.

As a final remark, the Excel web app works in IE, Firefox, and Safari. Figure 14-30 shows InternetSales.xlsx opened on an iPhone 3G using the Safari browser.

FIGURE 14-30

Switching to Edit Mode

One of the drawbacks of publishing an Excel workbook to Excel Services 2007 is that the published Excel workbook is not editable. This means that users cannot mess around with the data contained in the workbook and then save the changes back to the Excel workbook. Although the Open In Excel and Open Snapshot In Excel options allow users to take the workbook offline and take further actions, most users prefer to be able to do online editing.

In Excel Services 2010, you can edit a workbook using the Excel Web App. Edit mode offers only a subset of the Excel 2010 functionalities, but there is a fairly impressive set of operations that you can perform when editing the workbook online.

Edit mode also supports joint sessions, where more than one user can edit the document at the same time. This is done through a separate session for each user in the Excel Web App. The Excel Web App keeps alerting everyone about the changes that have been made to the workbook by others.

Figure 14-31 shows an editable version of the Internet Sales workbook and a simple calculation at =$G$3.

FIGURE 14-31

Excel Web Access Web Part

Another way to render and manipulate a published Excel workbook is through the Excel Web Access web part, also known as EWA. Figure 14-32 shows the entire Excel workbook displayed in a single EWA.

FIGURE 14-32

Unlike its predecessor, EWA has no dependency on client-side scripts or any ActiveX control to function properly. You can navigate through the sheets within the published workbook just as you do when using the Excel client. Not only can the entire workbook be hosted and rendered in a EWA web part, a section of the workbook can be as well. This is done based on named parameters within the workbook, which should be created when authoring it.

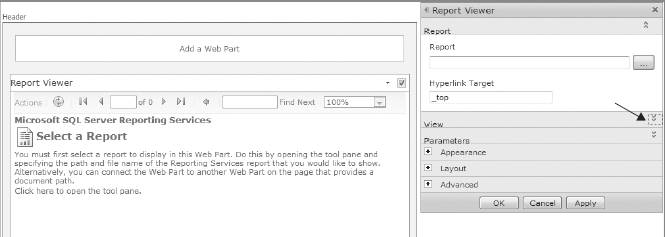

In Excel Services 2007, if you wanted to allow interaction with the workbook through the EWA, you had to list parameters on the left side in the Parameter pane. In the EWA web part's properties, you can control how much users can interact with the hosted workbook and for what features, as shown in Figure 14-33.

FIGURE 14-33

One thing needs to be highlighted here. Interactivity in EWA web parts is not the same as editing in the Excel Web App. In the Excel Web App, the changes users make to the workbook are written back to the original workbook. When interacting with EWA, users can see changes in calculations and visualization objects, but the original workbook remains intact.

You can expose your Excel model in the Web Access web part and turn on the interactivity feature so that users can interact with the workbook without a need for listing parameters on the left side in the Parameter pane. Also, you have the option to specify whether changes made to the workbook need to be committed back or, in the case of a model, not committed back to the workbook.

Last, but certainly not least, EWA supports the Web Part Connection framework and can send data to or receive data from other web parts to create really powerful dashboards and mash-up scenarios.

PERFORMANCEPOINT SERVICES

Especially in today's tough economic times, dashboard-style applications that can present historical and real-time data to the decision makers in the form of metrics, reports, and data visualizations are becoming more and more popular. PerformancePoint Services is Microsoft's dashboard delivery tool, which now is part of the SharePoint Server 2010 Enterprise platform. But, why do you need PerformancePoint? Can't you build dashboards using SharePoint?

Yes, you certainly can! There are a lot of components in the SharePoint ecosystem that you can use to build out dashboard-style applications. SharePoint, right out of the box, offers some lightweight tools for hosting and displaying data, such as web part pages, status lists, and the Chart web part. You can combine these types of content with Visio diagrams, InfoPath forms, Reporting Services reports, or Excel workbooks to build dashboards. However, such dashboards may not present a level of sophistication people would like to see on their computers' screens every morning when they come to work!

The good news is that PerformancePoint Services works with all these types of technologies to help you aggregate content and data to assemble richer and more interactive dashboards that suit any business requirements. The following sections discuss the out-of-the-box features in PerformancePoint Services and the techniques that you can use to create a PerformancePoint dashboard.

Dashboard versus Scorecard

When it comes to business decision-making processes, sometimes there are terms with blurry lines between them, which makes things a bit difficult to understand. One example is the title of this section, “Dashboard versus Scorecard,” which is a very common point of confusion! This section looks into clarifying this confusion from different angles.

Conceptually, a dashboard is a collection of real-time information that is used for evaluating performance and making sure that operational goals are met. However, a scorecard stays at a higher level than a dashboard and is more focused on monitoring the performance associated with organizational strategic objectives. So, the key difference here is short-term goals versus long-term success. In reality, making a distinction between a scorecard and a dashboard is absolutely unnecessary, as both are used to accomplish one thing: making sure that the business is on track to reach established goals.

In the context of PerformancePoint Services, things are much simpler and less formal. A PerformancePoint dashboard is simply an .ASPX page that renders a bunch of heads-up displays, including a scorecard. Let's think about it this way for now!

PerformancePoint Services Architecture

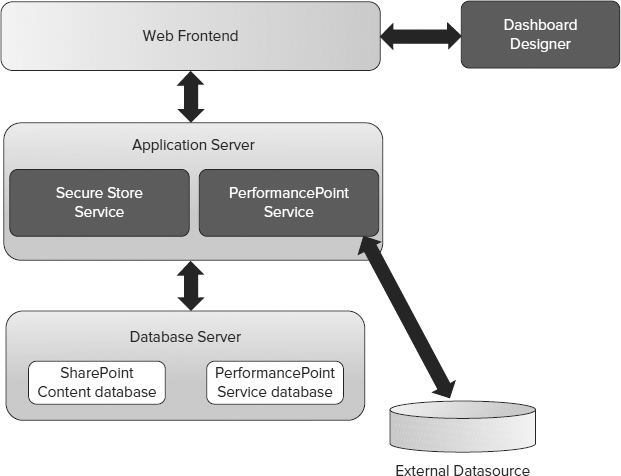

The functionalities that PerformancePoint Services offer are handled in three tiers of a SharePoint Server farm topology: Database Server, Application Server, and Web Frontend, as shown in Figure 14-34.

The Web Frontend server hosts the Dashboard Designer application, PerformancePoint web parts, PerformancePoint web services, and the service application proxy that is required to communicate with the PerformancePoint Services application installed on the application server. Like all other service application proxies, the PerformancePoint proxy talks to the PerformancePoint Services application using claims, so the environments with no Kerberos implementation are not affected by the double-hop security issue.

In the middle tier, two service applications make the integration happen:

- Secure Store Service: This service application stores the password for the PerformancePoint Services unattended account. The unattended service account is covered in the next section.

FIGURE 14-34

- PerformancePoint Services: This service application stores the settings needed for the instance. If you have worked with PerformancePoint 2007 and MOSS 2007 before, you probably recall that you had to go through many configuration settings to get the PerformancePoint dashboards to work. Thankfully, configuring PerformancePoint Services 2010 is much simpler than before, and it requires no changes in the SharePoint web application's web.config file anymore.

In the database layer, most of the configurations required for PerformancePoint service applications are stored in the PerformancePoint service database.

![]() At the time of writing this book, PerformancePoint still doesn't support web applications with claims-based authentication, and that's because of way the click-once Dashboard Designer is structured. This limitation may be changed with the release of SharePoint Server 2010 SP1.

At the time of writing this book, PerformancePoint still doesn't support web applications with claims-based authentication, and that's because of way the click-once Dashboard Designer is structured. This limitation may be changed with the release of SharePoint Server 2010 SP1.

The Unattended Service Account

One of the challenging configuration steps in the previous version was to set up the authentication to the backend datasource. The problem was that the authentication option couldn't be defined per datasource; instead, PerformancePoint would use the application pool identity of the SharePoint web application. From the security practice perspective, this was not recommended because users could potentially use PerformancePoint to access data that they shouldn't have access to, such as SharePoint content databases.

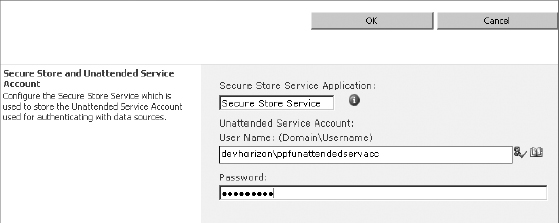

PerformancePoint Services 2010 implements per datasource authentication and has some new features that control how authentication to the datasource itself is configured. One of these new features is the unattended service account for PerformancePoint.

The unattended service account concept in PerformancePoint services 2010 is very similar to Excel Services' unattended account, with two differences. As explained earlier in this chapter, in Excel Services you create the target application in the Secure Store Service application and then reference its Application ID in the Excel Services service application's settings. Thus, both the username and password are stored in the Secure Store Services application.

In PerformancePoint Services, however, you create the unattended account directly in the PerformancePoint Services application settings. In this case, the password is stored in Secure Store Service and the actual username is stored in the PerformancePoint Services database. If you look at a PerformancePoint target application, you will find that it only contains the password field and not the username field.

An unattended account can be created using the following steps:

- Browse to the Central Administration site.

- From the Application Management category, choose Manage Service Applications.

- From the list of existing service applications, click PerformancePoint Service Application.

- Click the PerformancePoint Service Application Settings link.

- Specify the unattended service account for PerformancePoint (see Figure 14-35), and click OK.

FIGURE 14-35

- Browse to the Secure Store Service application's settings page and verify that the unattended account has been created.

Introducing Dashboard Designer

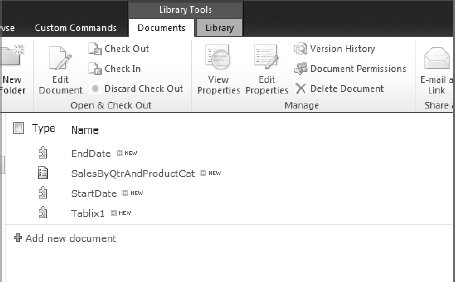

In this section, you will kick off PerformancePoint dashboard designer by following these steps:

- In Internet Explorer, navigate to the Business Intelligence Center site you created at the beginning of this chapter.

- Click the Create Dashboards link, and then click the Start Using PerformancePoint Services link, as shown in Figure 14-36.

FIGURE 14-36

- From the PerformancePoint Services page, click the big button that says Run Dashboard Designer. This downloads and installs the PerformancePoint Dashboard Designer to your workstation.

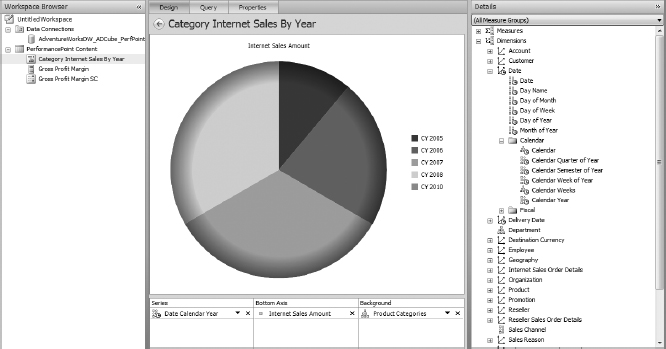

After the executable file is downloaded and installed on your computer, the PerformancePoint Dashboard Designer appears. In the Data Connections folder, the Excel data connection file that you created earlier in this chapter is a good indication that the dashboard designer is live and connected to the BI Center site.

One thing that you may notice is that the Dashboard Designer is installed on the client machine using a web-based deployment technology called ClickOnce. A major advantage of this deployment model is that it frees the IT department from managing and distributing the application. All that's required is for the Dashboard Designer team to provide proper access permission to the BI Center site.

After the Dashboard Designer is installed, you have an empty workspace. A workspace is a primary container for all of the elements that you can use to build your dashboard, and it keeps its content synched with the site from which it was launched.

Essentially, the workspace becomes an XML file (.ddwx) that encapsulates all the metadata required to describe a PerformancePoint dashboard. In the workspace, you can build new elements or you can import existing elements from a published dashboard such as scorecards, KPIs, reports, filters, indicators, and dashboards.

Creating Your First Dashboard

Now that you have created a PerformancePoint workspace, you are ready to create your first dashboard, which displays historical and real-time information as an asymmetrical report and compares it to an established goal. And, with that, it's time to build the actual dashboard from the ground up.

Dashboard Datasource

As with any other BI solution, the first thing that you will want to do is to go after data. To create the datasource used for this dashboard, follow these steps:

- Right-click the Data Connections folder in the Workspace Browser, and then select New

Data Source.

Data Source. - From the Select a Data Source Template menu, choose the Analysis Services template to create a datasource that connects to Microsoft SQL Server Analysis Services, and click OK.

- In the Connection Settings, specify the Analysis Services instance you want to connect to. In the next field, select the database and the cube you want to connect to. For this example, you want to connect to the AdventureWorks DW 2008R2 database and the AdventureWorks cube, as shown in Figure 14-37.

In the Data Source Settings, note the Cache Lifetime setting. The value of this textbox (in minutes) indicates the interval of refreshing the dashboard information from the backend datasource.

In the Data Source Settings, note the Cache Lifetime setting. The value of this textbox (in minutes) indicates the interval of refreshing the dashboard information from the backend datasource.FIGURE 14-37

- Click Test Data Source to make sure that your connection settings are correct.

- Switch to the Properties tab and change the Name to AdventureWorksDW_ADCube_PerfPoint.

- Save the new datasource by right-clicking it in the Workspace Browser, and then selecting Save.

At this point, you have successfully created the dashboard's main datasource and it's been uploaded to the Data Connections Document Library by the Dashboard Designer.

Datasource Authentication Types

As you saw in Figure 14-37, there are three authentication types available for the datasource you are building.

The unattended service account option has been discussed already, and by now you should know what it does, but the other two options deserve more attention:

- Unattended Service Account and Add Authenticated User Name in Connection String: If you select this option, supply the SharePoint authenticated provider and username (Forms, SAML, windows, and so on) as a string in the CustomData field in Analysis Services. You can then create a role (or set of roles) and write MDX queries using the CustomData string to dynamically restrict access to the cube data. The main challenge of this solution is that you need to modify the cube data to include the users of the system and their relationships to the data; this can be somewhat difficult to maintain.

- Per-User Identity: There are cases that may not require you to create the unattended service account at all. Picture this: Your backend datasource supports Windows authentication, and user identities must be delegated all the way down to the backend datasource when they access the PerformancePoint dashboards. In PerformancePoint, this authentication type is known as per-user identity, and only Kerberos enables it.

![]() No matter what authentication type you choose for PerformancePoint Services, always make sure that it has proper access to the backend datasource that will be required. For more information, see my blog post at www.devhorizon.com/go/16.

No matter what authentication type you choose for PerformancePoint Services, always make sure that it has proper access to the backend datasource that will be required. For more information, see my blog post at www.devhorizon.com/go/16.

Tracking Performance Using KPIs

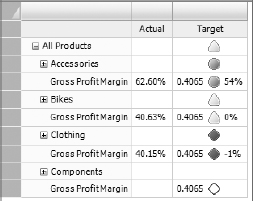

Recall from the previous section that your goal for building the Internet Sales dashboard is to compare Internet sales information with an established goal, and then measure and monitor the success of the online business per country.

But what is success anyway? How is it implemented in a dashboard? Success (or the goal) in a certain area of the business is defined by someone in your organization who knows the business inside and out. In PerformancePoint, a primary metric used to implement and measure this success is something referred to as a key performance indicator (KPI). After a KPI is defined and implemented, it can be used to monitor the organization's progress in a specific area, such as gross profit margin per product category earned from Internet sales.

To create a new KPI to track gross profit margin for Internet sales, you need to follow these steps:

- Right-click the PerformancePoint Content folder and select New

KPI, as shown in Figure 14-38.

KPI, as shown in Figure 14-38. - In the Select a KPI Template dialog box, select Blank KPI, and then click OK.

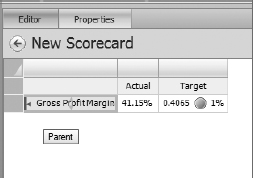

- Figure 14-39 shows the new KPI. Here, you can define your actual and target values. You can also continue adding new actuals or targets to the current KPI. For example, if your organization has defined a minimum goal and stretched goal, you may want to bring them into the KPI by defining two target values.

FIGURE 14-38

FIGURE 14-39

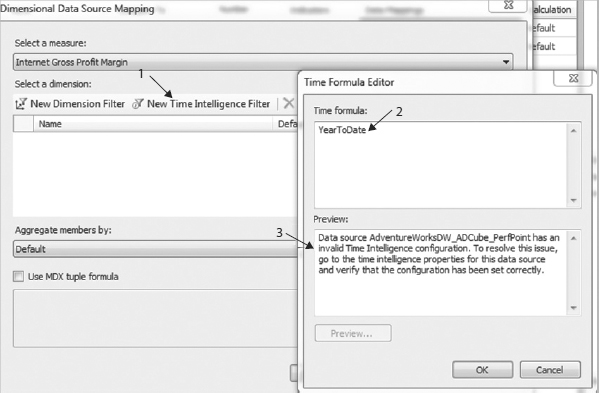

- The current value for Actual is set to 1, which doesn't represent anything. Click the 1 (Fixed Values) link in the Data Mappings column for Actual, and then in the Fixed Values Data Source Mapping dialog box, click the Change Source button.

In Analysis Services, you can build KPIs that have four values: Actual, Target, Status, and Trend. In PerformancePoint KPIs, you only have two values: Actual and Target. One interesting aspect about Actual and Target values in PerformancePoint is that they do not need to come from the same datasource. For example, you can define a KPI that gets the Actual Value from the cube and then have the Target value loaded from a SharePoint list. This makes PerformancePoint KPIs very flexible.

In Analysis Services, you can build KPIs that have four values: Actual, Target, Status, and Trend. In PerformancePoint KPIs, you only have two values: Actual and Target. One interesting aspect about Actual and Target values in PerformancePoint is that they do not need to come from the same datasource. For example, you can define a KPI that gets the Actual Value from the cube and then have the Target value loaded from a SharePoint list. This makes PerformancePoint KPIs very flexible. - Select the AdventureWorksDW_ADCube_PerfPoint data connection, and click OK.

- From the Select a Measure drop-down list, select Internet Gross Profit Margin.

- Click OK to close the dialog box.

- Select the Target row, and click the Set Scoring Pattern and Indicator button in the Thresholds area, as shown in Figure 14-40.

FIGURE 14-40

- In the first step of the Edit Banding Settings dialog box (see Figure 14-41), you need to identify how the actual value compares to a target. From the Scoring Pattern list, select the Increasing Is Better option. Most of the time, you would use a normalized value where you take the actual value and divide it by the target value, so select the first option (Band by Normalized Value of Actual/Target) from the Banding Method drop-down list, and then click Next.

FIGURE 14-41

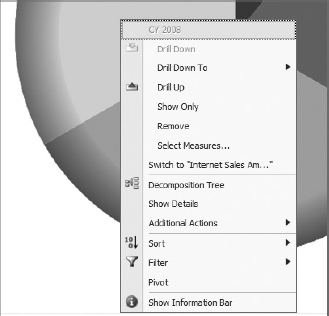

- In the Select an Indicator step, select an indicator to use for the target that clearly shows whether the goal is met. You can choose from a collection of indicator templates available in PerformancePoint Dashboard Designer. When you are done, click Next.