Auditing Resilience in Risk Control and Safety Management Systems

Discussion of the need for resilience in organisations is only of academic value if it is not possible to assess, in advance of accidents and disasters, whether an organisation has those qualities, or how to change the organisation in order to acquire or improve them if it does not already have them. Proactive assessment of organisations relies on one form or another of management audit or organisational culture assessment. In this chapter we look critically at the ARAMIS risk assessment approach designed for evaluating high-hazard chemical plants, which contains a management audit (Duijm et al., 2004; Hale et al., 2005). We ask whether such a tool is potentially capable of measuring the characteristics that have been identified in this book as relevant to resilience. In an earlier paper (Hale, 2000) a similar question was asked in a more specific context about the IRMA audit (Bellamy et al., 1999), a predecessor of the tool assessed here. That paper asked whether the audit tool, if used before the accident happened, could have picked up the management system and cultural shortcomings which ultimately led to the Tokai-mura criticality incident in Japan (Furuta et al., 2000). That analysis concluded that there were at least eight indicators in the management system that the hazards of criticality had become poorly controlled, which such an audit, if carried out thoroughly, should have picked up. It is, of course, then a valid question whether these signals would have been taken seriously enough to prompt remedial action, and whether that action would have brought about enough change to prevent the accident. Responding effectively to signals from audits is also a characteristic of a resilient organisation; but this in turn can be assessed by an audit if it looks, as the IRMA audit did, at the record of action taken on previous audit recommendations.

The IRMA audit and its close relation, the ARAMIS risk assessment method, have been developed during studies in which the group in Delft has participated, in collaboration with a number of others.1 These studies have tried to develop better methods of modelling and auditing safety management systems, particularly in major hazard companies falling under the Seveso Directives. Although the term ‘resilience engineering’ has never been used in these projects, the thinking developed in them overlaps in many ways with the concepts expressed in the chapters of this book and in the High Reliability Organisation and Normal Accident literatures, which have fed the discussions about resilience.

It seems valuable to ask the same question again here, but now in a more general context rather than in relation to one particular accident sequence. We therefore present a critical assessment of our audit tool in relation to the generic weak signals of a lack or loss of resilience that are discussed in this book. We confine ourselves in this chapter to an audit tool as a potential measuring instrument for resilience, and do not consider tools for measuring organisational culture. This reflects partly the field of competence of the principal author and partly the fact that other authors in this book are more competent to pose and discuss that question. It also reflects, however, a more fundamental proposition: if resilience is not anchored in the structure of management systems, which audits measure, it will not have a long life. Organisational culture measuring instruments tend to tap into group/shared attitudes and beliefs, which may rest largely in the sphere of the informal operation of the management system. Culture will, in this formulation, always be a powerful shaper of practice and risk control, but without anchoring in structure, culture can change unremarked and good performance can be lost. We believe that measuring instruments for resilience will need to be derived from both traditions. This chapter assesses the contribution of only one of them, the audit.

In the next section a brief summary of the ARAMIS risk assessment and audit tool is given, followed by a discussion of the way in which such a tool could provide some proactive assessment of the factors identified as providing resilience in the organisation.

Structure of the ARAMIS Audit Model

The model used in the ARAMIS project (Duijm et al., 2004) can be summarised as follows.

Technical Model of Accident Barriers: Hardware and Behaviour

The technical modelling of the plant identifies all critical equipment and accident scenarios for the plant concerned and analyses what barriers the company claims to use to control these scenarios. It is essential that this identification process is exhaustive for all potential accidents with major consequences; otherwise scenarios may be missed which later turn out to be crucial. All relevant accident scenarios need to be defined not only for the operational phase, but also for non-nominal operation, maintenance and emergency situations. In the ARAMIS project only some of these scenarios were worked out in detail, but the principles of the model could be applied to all of them. In the audits of chemical companies under the Seveso Directive, the major consequences covered are those which arise from loss of containment of toxic and flammable chemicals, and which result in the possibility of off-site deaths. If we were to lower the threshold of what we consider to be major consequences to single on-site deaths, or even serious injuries, the task of identifying the relevant scenarios would rapidly become larger, but the principles would not alter. A major project funded by the Dutch Ministry of Social Affairs & Employment is currently extending this approach to develop generic scenarios for all types of accident leading to serious injury in the Netherlands (Hale et al., 2004).
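The demand that scenario identification be exhaustive across operating phases, above a chosen consequence threshold, can be made checkable. The following is a minimal sketch, not part of the ARAMIS tooling: it assumes a hypothetical scenario register in which each scenario carries a consequence category and the set of phases for which it has been worked out. The four phase names come from the text; the severity scale and its ordering are our assumptions.

```python
from dataclasses import dataclass, field

# Operating phases named in the text; each major-consequence scenario
# should be defined for every one of them.
PHASES = ("operation", "non-nominal operation", "maintenance", "emergency")

# Hypothetical consequence scale, ordered from least to most severe.
SEVERITY = {"serious injury": 1, "on-site death": 2, "off-site deaths": 3}


@dataclass
class Scenario:
    name: str
    consequence: str                      # a key of SEVERITY
    phases_defined: set = field(default_factory=set)


def coverage_gaps(scenarios, threshold="off-site deaths"):
    """Return {scenario name: missing phases (in PHASES order)} at or above threshold."""
    cutoff = SEVERITY[threshold]
    gaps = {}
    for s in scenarios:
        if SEVERITY[s.consequence] < cutoff:
            continue  # below the threshold: outside the scope of this audit
        missing = [p for p in PHASES if p not in s.phases_defined]
        if missing:
            gaps[s.name] = missing
    return gaps


# Hypothetical register entries.
register = [
    Scenario("toxic release from storage tank", "off-site deaths",
             {"operation", "maintenance"}),
    Scenario("fall from platform", "on-site death", {"operation"}),
]
print(coverage_gaps(register))
# {'toxic release from storage tank': ['non-nominal operation', 'emergency']}
print(coverage_gaps(register, threshold="serious injury"))
```

Lowering the threshold in the second call brings the on-site scenario into scope and enlarges the list of gaps, which mirrors the growth in workload the text describes.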

The scenarios are represented in the so-called bowtie model (Figure 3.2) as developments over time. Inherent hazards such as toxic and flammable chemicals, which are essential to the organisation and cannot be designed out, are kept under control by barriers, such as the containment walls of vessels, pressure relief valves, or the procedure operators use to mix chemicals to avoid a potential runaway reaction.

If these barriers are absent, or are not kept in a good operating state, the hazard will not be effectively controlled and the scenario will move towards the centre event, the loss of control. For chemicals this is usually defined as a loss of containment. To the right of this centre event there are still a number of possible barriers, such as bunds around tanks to contain liquid spills, sprinkler systems to prevent or control fire, and evacuation procedures to get people out of the danger area. These mitigate the consequences. In this modelling technique barriers consist of a combination of hardware, software and behavioural/procedural elements. Hardware elements must be designed, installed, adjusted, used, maintained and monitored; behavioural/procedural elements must be defined, trained, tested, used and monitored. These management tasks to keep the barriers functioning effectively throughout their life cycle are represented in the three blocks at the base of Figure 18.1.
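The bowtie just described can be held as a simple data structure. The sketch below is illustrative only: the class names, the pairing of each threat with each consequence as a path, and the single boolean `healthy` flag are our assumptions, not the ARAMIS data model. It reports the threat-to-consequence paths on which every barrier, preventive or mitigating, is absent or degraded.

```python
from dataclasses import dataclass


@dataclass
class Barrier:
    name: str
    healthy: bool = True  # present and kept in a good operating state


@dataclass
class Bowtie:
    hazard: str
    centre_event: str
    prevention: dict   # threat -> preventive barriers (left of centre event)
    mitigation: dict   # consequence -> mitigating barriers (right of centre event)

    def unprotected_paths(self):
        """List (threat, consequence) pairs on which no healthy barrier remains."""
        paths = []
        for threat, prev in self.prevention.items():
            for consequence, mit in self.mitigation.items():
                if not any(b.healthy for b in prev + mit):
                    paths.append((threat, consequence))
        return paths


# Hypothetical barriers for one hazard.
relief_valve = Barrier("pressure relief valve")
mixing_procedure = Barrier("mixing procedure", healthy=False)
bund = Barrier("bund around tank", healthy=False)
evacuation = Barrier("evacuation procedure")

bt = Bowtie(
    hazard="toxic chemical",
    centre_event="loss of containment",
    prevention={"overpressure": [relief_valve],
                "runaway reaction": [mixing_procedure]},
    mitigation={"liquid spill spreads": [bund],
                "people exposed": [evacuation]},
)
print(bt.unprotected_paths())  # [('runaway reaction', 'liquid spill spreads')]
```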

It is already common practice in chemical plant design to assign a SIL (Safety Integrity Level) value to safety hardware. These SIL values give nominal maximum effectiveness values for the barriers, which can only be achieved if the barriers are well managed and kept up to the level of effectiveness assumed as the basis of their risk reduction potential. In the ARAMIS project equivalent SIL values were assigned to behavioural barriers in order to use the same approach. The audit is designed to assess whether the specific barriers chosen by the company are indeed well managed. The modelling of the scenarios and barriers themselves also gives insight into how good the company's choice of barriers is, and whether another choice could provide more intrinsic safety, e.g. eliminating the hazard entirely by using non-toxic substitute chemicals. In other words, it assesses the inherent safety of the whole system.
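As a worked illustration of how SIL values translate into risk reduction, the sketch below uses the low-demand-mode bands of IEC 61508, in which SIL n corresponds to an average probability of failure on demand (PFD) between 10^-(n+1) and 10^-n. It credits each barrier conservatively with the upper end of its band and multiplies along a scenario, which assumes that the barriers fail independently. The initiating frequency and SIL assignments, including the equivalent SIL for the behavioural barrier, are hypothetical; the way ARAMIS adjusts SIL values from audit ratings is described elsewhere (Duijm et al., 2004) and is not reproduced here.

```python
import math


def pfd_band(sil):
    """IEC 61508 low-demand band: SIL n <-> average PFD in [10**-(n+1), 10**-n)."""
    if sil not in (1, 2, 3, 4):
        raise ValueError("SIL must be 1-4")
    return 10.0 ** -(sil + 1), 10.0 ** -sil


def centre_event_frequency(initiating_per_year, sils):
    """Frequency of the centre event when every barrier fails on demand.

    Credits each barrier with the upper bound of its PFD band and ASSUMES
    the barriers fail independently of one another.
    """
    pfds = [pfd_band(s)[1] for s in sils]
    return initiating_per_year * math.prod(pfds)


# Hypothetical scenario: one demand per year, guarded by a SIL 2 trip
# and a behavioural barrier given an equivalent SIL 1.
f = centre_event_frequency(1.0, [2, 1])
print(f"{f:.0e} per year")  # 1e-03 per year
```

If the audit showed the behavioural barrier to be poorly managed, its credited PFD would have to rise and the scenario frequency would rise in proportion; this is the sense in which SIL values are only nominal maxima.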

Modelling the Management of the Barriers

The management model on which the audit is based is structured around the life cycle of these barriers or barrier elements (Figure 18.1).

Figure 18.1: ARAMIS outline model of the barrier selection and management system

The life cycle begins in block 1 with the risk analysis process, which leads to the choice of what the company believes to be the most effective set of barriers for all its significant scenarios. Auditing the management process here assesses how well the company’s own choice of barriers is argued, and so provides a check on the technical modellers’ assessment of the barrier choice. To manage the life cycle optimally, the company needs to carry out a range of tasks, which differ slightly depending on whether the barriers consist of hardware and software, of behaviour, or of a combination of both. These are detailed below the central block at the top of Figure 18.1, and we return to them below. Box 2 represents the vital process of monitoring the state and effectiveness of the barriers and taking corrective or improvement action if that performance declines, or if new information about hazards or about the state of the art in control measures emerges from inside or outside the company.

The life cycle tasks for hardware and software are shown on the left of Figure 18.1 (boxes 3 and 4) and those for behaviour and procedures on the right (boxes 5 to 9). These two sets of tasks are, however, in essence fulfilling the same system functions. The barrier (technical or behavioural) must:

• be specified (design specification, or procedural or goal-based task description);

• be installed and adjusted (construction, layout, installation and adjustment, or selection, training, task allocation and task instruction);

• operate, a relatively automatic process for pure hardware/software barriers, but a complex one for behavioural barriers, since motivation/commitment and communication and coordination play a role, alongside competence, in determining whether the people concerned will show the desired behaviour; and

• be maintained in an effective state (inspection & maintenance, or supervision, monitoring and retraining).

Each step in ensuring the effectiveness of the hardware barriers (installation, maintenance, etc.) is itself a task carried out by somebody. If we want to go deeper into the effectiveness of this task, we can ask whether the task has been properly defined (a procedure or set of goals), and whether the person assigned to it has been selected and trained for it, is motivated to do it and communicates with all other relevant people. In fact we are then applying boxes 5 to 9 at a deeper level of iteration to these hardware life cycle tasks. Figure 18.1 shows this occurring for a deeper audit of the tasks represented by boxes 3 and 4, the two halves of the life cycle for hardware/software barriers and barrier elements. If this iteration is carried out, boxes 5 to 9 are applied to tasks such as managing the ordering, storage and issuing of spares for maintenance or modifications, or managing the competence and manpower planning for the planned inspection and maintenance of safety-critical hardware barriers.

In theory we could also take the same step for the tasks of selecting and training people, or motivating them to do the tasks. We then end up with a set of iterations that can go on almost for ever, like a set of identical Russian dolls nested one inside another. This would make auditing impossibly cumbersome. Again we could ask how far such iterations should go in a safe (or resilient) company, and at what point the company loses its way in the iteration loops and becomes confused or rigid. We return to some aspects of this in the discussion of the risk picture below.
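The growth that makes unlimited iteration impractical is easy to quantify. In the hypothetical sketch below, every task is examined through the five behavioural delivery functions (boxes 5 to 9), and the task of delivering each function is examined again at the next level, down to a chosen depth. The function labels paraphrase Table 18.1; no mapping of labels to particular box numbers is implied, and the assumption that each level repeats all five functions is a simplification.

```python
# The five behavioural delivery functions (boxes 5 to 9), labels paraphrased
# from Table 18.1; the order is not meant to match the box numbering.
BOXES = ("procedures", "availability", "competence", "commitment", "communication")


def audit_items(task, depth):
    """Yield audit questions for `task`, iterating the five functions `depth` levels deep."""
    if depth == 0:
        return
    for box in BOXES:
        item = f"{box} for [{task}]"
        yield item
        # Delivering this function is itself a task, audited at the next level.
        yield from audit_items(item, depth - 1)


for d in range(1, 4):
    print(d, sum(1 for _ in audit_items("inspect relief valve", d)))
# 1 5
# 2 30
# 3 155
```

The count is 5 + 5² + ... + 5^d, so three levels of nesting already produce 155 questions for a single hardware task.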

Dissecting Management Tasks: Control Loops

We call the sets of tasks described in the preceding section ‘delivery systems’, because they deliver to the primary barriers the hardware, software and behaviour needed to keep the system safe. Each delivery system can be seen as a series of steps, which can be summarised as a closed-loop process such as that in Figure 18.2 (the delivery process for procedures and rules). These processes deliver the resources required for the barrier life cycle functions, together with the criteria against which their success can be assessed, and they ensure that the use of the resources is monitored and that correction and improvement are made as necessary. Such protocols were developed for each of the nine numbered boxes in Figure 18.1 (Guldenmund & Hale, 2004). Table 18.1 gives more detail of the topics covered by the protocols for the nine boxes. The monitoring and improvement loops described in these protocols must be linked to those represented in box 2 of Figure 18.1, but we do not show the lines and arrows there, in order to avoid confusing the picture.
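One closed-loop delivery process can be sketched as follows. This is a hypothetical structure, not the ARAMIS protocol itself: it assumes that barrier performance can be expressed as a fraction between 0 and 1 (for instance, the share of observed tasks carried out according to procedure) and that each corrective action is also reported to a shared log standing in for the monitoring and learning loop of box 2.

```python
from dataclasses import dataclass, field


@dataclass
class DeliveryLoop:
    """Hypothetical closed-loop delivery process for one resource.

    Holds the success criterion, compares monitored performance against it,
    records corrective actions and passes them on to box 2.
    """
    resource: str
    criterion: float                          # minimum acceptable performance, 0-1
    actions: list = field(default_factory=list)

    def review(self, observed, box2_log):
        """Compare observed performance with the criterion and correct if needed."""
        if observed >= self.criterion:
            return "no action"
        action = f"correct {self.resource}: observed {observed:.2f} < {self.criterion:.2f}"
        self.actions.append(action)
        box2_log.append(action)   # link to the system-level monitoring & learning loop
        return action


log = []
loop = DeliveryLoop("emergency shutdown procedure", criterion=0.95)
for observed in (0.97, 0.90):
    print(loop.review(observed, log))
# no action
# correct emergency shutdown procedure: observed 0.90 < 0.95
```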

The processes and the protocols of steps within each process can be formulated generically, as in Figure 18.1. However, in the audit they are applied specifically to a representative sample of the actual barriers identified in the technical model. Hence questions are not asked about maintenance in general, but about the specific maintenance schedule for a defined piece of hardware, such as a pressure indicator, identified specifically as a barrier element in a particular scenario. The audit then verifies performance against that specific schedule. Similarly, training and competence of specific operators in carrying out specified procedures related to, for example, emergency plant shutdown is audited, not just safety training and quality of procedures in general. When the audit approach is used by the company itself, the idea is that, over time, all scenarios and barriers would be audited on a rotating basis, to spread the assessment load.
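Auditing all scenarios and barriers on a rotating basis can be planned very simply. The round-robin sketch below is our assumption about one way to spread the load; the scenario/barrier pairs and the sample size are hypothetical.

```python
from itertools import cycle, islice


def rotation_plan(items, per_audit, n_audits):
    """Split `items` into successive audit samples, wrapping round when exhausted."""
    if per_audit <= 0 or not items:
        raise ValueError("need at least one item and a positive sample size")
    stream = cycle(items)
    return [list(islice(stream, per_audit)) for _ in range(n_audits)]


# Hypothetical scenario / barrier-element pairs taken from a technical model.
elements = [
    ("overfill of tank", "high-level trip"),
    ("overfill of tank", "operator response to level alarm"),
    ("runaway reaction", "mixing procedure"),
    ("runaway reaction", "pressure relief valve"),
    ("pipe rupture", "emergency shutdown procedure"),
]
for i, sample in enumerate(rotation_plan(elements, per_audit=2, n_audits=3), start=1):
    print(f"audit {i}: {[barrier for _scenario, barrier in sample]}")
```

After three audits every element has been examined at least once, and the rotation simply continues into the next cycle.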

Figure 18.2: Example of management delivery process: procedures and rules

The audit tool provides checklist protocols based on the models outlined above. It has not been developed to the level of giving detailed guidance or extensive questions and checkpoints for the auditors. The assumption is made that it will be used by experienced auditors familiar with the safety management literature and able to locate the lessons and issues from that within the framework offered.

Further details of how the audit was used to rate management performance and to modify the SIL values of the chosen barriers can be found elsewhere (see Duijm et al., 2004; a description of all the steps in the ARAMIS project will be published in 2006 in a special issue of the Journal of Hazardous Materials).

Table 18.1: Coverage of audit protocols

| Protocol | Contents |
|---|---|
| Risk identification & barrier selection | Modelling of processes within system boundary, development of scenarios, (semi-)quantification & prioritisation, selection of type and form of barrier, identification of barrier management needs, assessment of barrier functioning & effectiveness |
| Monitoring, feedback, learning & change management | Performance monitoring & measurement, on-line correction, feedback & improvement locally and across the system, design & operation of the learning system inside & outside the company, technical & organisational change management |
| Procedures, rules, goals | Definition & translation of high-level safety goals to detailed targets, design & production of rules relevant to safety, rule dissemination & training, monitoring, modification & enforcement |
| Availability, manpower planning | Defining manpower needs & manning levels, recruitment, retention, planning for normal & exceptional (emergency) situations, coverage for holidays, sickness, emergency call-out, project planning & manning (incl. major maintenance) |
| Competence, suitability | Task & job safety analysis, selection & training, competence testing & appraisal, job instruction & practice, simulators, refresher training, physical & health assessment & monitoring |
| Commitment, conflict resolution | Assessment of required attitudes & beliefs, selection, motivation, incentives, appraisal, social control of behaviour, safety culture, definition of safety goals & targets, identification of conflicts between safety & other goals, decision-making fora and rules for conflict flagging and resolution |
| Communication, coordination | On-line communication for group/collaborative tasks, shift changeovers, handover of plant & equipment (production to maintenance), planning meetings, project coordination |
| Design to installation | Barrier design specification (incl. boundaries to working), purchase specification, supplier management, in-house construction management, type testing, purchase, storage & issues (incl. spares), installation, sign-off, adjustment |
| Inspection to repair | Maintenance concept, performance monitoring, inspection, testing, preventive & breakdown maintenance & repair, spares issue & checking, equipment modification |

Does the Model Encompass Resilience?

As in our chapter about the resilience of the railway system (Chapter 9), we have defined eight criteria of resilience which, as a minimum, a management audit would need to measure. These criteria are given below. After each criterion we indicate where in the ARAMIS audit system we believe it could or should be addressed.

Table 18.2: Eight resilience criteria

| Resilience criterion | Where addressed in the ARAMIS audit |
|---|---|
| 1. Defences erode under production pressure. | Commitment & conflict resolution |
| 2. Past good performance is taken as a reason for future confidence (complacency) about risk control. | Performance monitoring & learning |
| 3. Fragmented problem-solving clouds the big picture: mindfulness is not based on a shared risk picture. | Risk analysis & barrier selection; whole ARAMIS model |
| 4. There is a failure to revise risk assessments appropriately as new evidence accumulates. | Learning |
| 5. Breakdown at boundaries impedes communication and coordination, which do not have sufficient richness and redundancy. | Communication & coordination |
| 6. The organisation cannot respond flexibly to (rapidly) changing demands and is not able to cope with unexpected situations. | Risk analysis; competence |
| 7. There is not a high enough ‘devotion’ to safety above or alongside other system goals. | Commitment & conflict resolution |
| 8. Safety is not built as inherently as possible into the system and the way it operates by default. | Barrier selection |
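Reading Table 18.2 in the other direction shows which parts of the audit carry most of the weight. The short sketch below simply transcribes the table into a dictionary and inverts it; splitting the combined entries into separate labels is our own choice.

```python
from collections import defaultdict

# Criterion number -> ARAMIS audit elements, transcribed from Table 18.2.
CRITERIA = {
    1: ["Commitment & conflict resolution"],
    2: ["Performance monitoring & learning"],
    3: ["Risk analysis & barrier selection", "Whole ARAMIS model"],
    4: ["Learning"],
    5: ["Communication & coordination"],
    6: ["Risk analysis", "Competence"],
    7: ["Commitment & conflict resolution"],
    8: ["Barrier selection"],
}


def by_audit_element(criteria):
    """Invert the table: which criteria does each audit element help to address?"""
    index = defaultdict(list)
    for number, elements in criteria.items():
        for element in elements:
            index[element].append(number)
    return dict(index)


for element, numbers in sorted(by_audit_element(CRITERIA).items()):
    print(f"{element}: {numbers}")
```

Only commitment and conflict resolution serves two criteria (1 and 7); every other element is tied to a single criterion.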

For the purposes of this discussion we group these points in a way that links better with the ARAMIS model and audit. We use the following headings (the numbers in brackets indicate which of the above criteria are discussed under each heading):

A. Clear picture of the risks and how they are controlled (3, 8, elements of 5 and 6)

B. Monitoring of performance, learning & change management (elements of 1, 2 and 4)

C. Continuing commitment to high safety performance (1, 7)

D. Communication & coordination (elements of 5)

Of these, A and B are the most important and are discussed most extensively.

We shall conclude that the audit can cover many of the eight criteria in Table 18.2, but is weakest in addressing criteria 2, 4 and 6.

In the sub-sections that follow we discuss the degree to which the audit does indeed assess these aspects, and how the case studies used to test and evaluate the audit illustrated that assessment (see also Hale et al., 2005). We also discuss some of the problems of using the model and audit. These often raise the question whether the approach implicit in this assessment model is compatible with the notion of resilience. As will be clear to readers who have ploughed their way through the rather theoretical exposé of the ARAMIS audit model above, the model we use is complex, with many iterations. Resilience, on the other hand, often conjures up images of flexibility, lightness and agility, not of ponderous complexity. Our only response is that the management of major hazards is inescapably a complex process. Our models imply that we can only be sure that this is being done well if we make that complexity explicit and transparent.

Clear Picture of Risks and How they are Controlled

The essence of the ARAMIS method is that it demands a very explicit and detailed model of the risks present and the way in which they are controlled. It also provides the tools to create this. We believe that this modelling process is an essential step in creating a clear picture of how the safety management system works, which can then be communicated to all those within the system. Only then can they see clearly what part they each play in making the whole system work effectively. Trials with the approach (e.g. Hale et al., 2005) have shown that making the scenarios and barriers explicit, if done by the personnel involved in their design, use and maintenance, helps create a much clearer understanding of what is relevant and crucial to the control of which hazards. The management of the companies audited in the ARAMIS case studies made the same point: they said they had learned more from undergoing this audit than from other, more generic system audits based on ISO standards.

We devote considerable attention to this issue here, because we believe it is the most fundamental requirement for safe operation and for resilience. Without a clear picture of what is to be controlled, the other elements of resilience have no basis on which to operate.

Understanding How Barriers Work and Fail: How Complete is the Model? The ARAMIS technique pays explicit attention to the step from the barriers themselves to the management of their effectiveness through their life cycle, and to the resources and processes needed to keep barriers of different types functioning optimally. This direct linking of barriers to specific parts of the management system is unique in risk assessment and system modelling. We believe it provides a fundamental increase in the clarity of the risk control picture.

Of course the model is only as useful as it is complete. We know that a risk model can never be complete in every detail. We can hope that logical analysis of the organisation’s processes, coupled with experience of the technology, can provide an exhaustive set of generic ways in which harm can result from loss of control of the technology. When we look at the major disasters often used to illustrate the discussion of high reliability or resilience, we do not often see that the scenarios which eventually led to the accident were unknown. Loss of tiles on Columbia, the by-passing of the O-rings on Challenger, the starting of pumps on Piper Alpha with a pressure safety valve missing, the mix of chemicals and water resulting in the gas cloud at Bhopal, etc. were all known routes to disaster, which had barriers defined and put in place to control them. What was lacking was enough clarity about the functioning of the barriers, and enough commitment to their management, to ensure that their effectiveness could be, and was, monitored effectively. At the level of detail of how control is lost there will always be gaps in our model of the risk control system, as some of these disasters show. Sometimes these gaps are in the technical understanding of failure, such as of steel embrittlement at Flixborough, but more often they are in our understanding of how people and organisations can fail in the conduct of their tasks. These failures sit in the delivery systems feeding the hardware and behavioural barriers. Vaughan’s analysis (Vaughan, 1996) of the normalisation of deviance fits into this category: through subtle processes, people become convinced that signals initially thought of as warnings are in fact false alarms. Normalisation of deviance is a failure in the monitoring and learning system (box 2 in Figure 18.1), which had not previously been clearly identified.

Another example is the analysis of the classic accident at the Three Mile Island nuclear reactor, in which operators misdiagnosed the leak in the primary cooling circuit because of a combination of misleading or unavailable instrument readings and an inappropriate set of expectations about which failures were likely and serious. Until this accident, the modelling of human error had paid insufficient attention to such errors of commission, whose management falls under the design of instrumentation (box 3 in Figure 18.1) and the competence and support of operators (boxes 5 and 7).

The only recourse for gaps in the model at this detailed level is then to rely on efficient learning (see ‘Monitoring of performance, learning and change management’ below): as new detailed ways of losing control of hazards are discovered within the organisation, or in comparable ones elsewhere, they need to be added to the model, which must be treated as a living entity reflecting the actual state of the system it models. This builds in resilience.

Functional Thinking. The ARAMIS models and the auditing techniques developed have a strong emphasis on functional process analysis. Functions are generic features of systems. How those functions are fulfilled in one specific company may differ greatly from how they are fulfilled in another. Barriers are also defined functionally in the models, with the choice of how a barrier is implemented in a specific case left to the individual company. The quality of that choice is part of the audit process. To make the audit technique complete it will be necessary to build up and make available better databases of experience, indicating which choices of barrier are most likely to be effective in which circumstances (Schupp et al., 2005). Thinking in terms of functions provides a clearer picture of the essence of the risk control system, one that does not get bogged down in the details of implementation. However, the audit must descend into these details to check that the function is well implemented in a particular company. It checks whether the managers and operators involved can see clearly not only what they specifically have to do (inspect a valve, monitor an instrument, follow up a change request, etc.) but also how that fits functionally into the whole risk control system.

Control Loops & Dynamic Analysis. The idea of the barrier life cycle, and of managing its effectiveness through the delivery of resources that are subject to their own control loops, introduces a dynamic element into the model, because these are processes with feedback. Resilience is essentially linked to a dynamic view of risk control. In this respect the ARAMIS model and audit match the approach developed in Leveson’s accident model (Leveson, 2004) and in the control-loop model of system levels put forward by Rasmussen & Svedung (2000). The emphasis of the dynamic modelling in ARAMIS has, however, been at the management level, a system level above the human-technology interactions which Leveson mostly describes. Dynamic feedback loops at the management level are of course not new. The Deming circle has been advocated for several decades and has been incorporated into the ISO management standards and their national forerunners. However, these standards and their auditors can be criticised for having usually paid too little attention to the detailed content of the feedback loops and the way they articulate with the primary processes to control risk. They have been too easily satisfied with bureaucratic, paper proof that planning and feedback loops exist, without checking whether these loops result in actual dynamic risk control and positive adaptation to hazard-specific new knowledge, experience and learning opportunities.

The use of dynamic models for the management processes creates problems in interfacing with the static fault and event tree models used by the technical modellers to quantify risk. A challenge for the future is to make technical risk modelling more dynamic, so that it can accept input from the control loops envisaged in tools such as ARAMIS. Such dynamic models are needed to convey to risk assessors and managers that risk varies constantly with changes in the effectiveness of the management and learning processes. A static picture of risk levels, established once and for all by an initial safety study, is incorrect, and it encourages inflexible thinking about risk control and hence a lack of resilience.
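The point that risk varies continuously, rather than being fixed by an initial safety study, shows up even for a single hardware barrier element. The sketch below uses the standard textbook simplification for a periodically proof-tested element with a constant dangerous-undetected failure rate; the failure rate and test interval are hypothetical, and the model ignores repair time, imperfect testing and common-cause failure. The instantaneous probability of failure on demand follows a sawtooth between tests, and the familiar static figure λT/2 is only its average.

```python
import math


def instantaneous_pfd(t_hours, failure_rate, test_interval):
    """PFD of one proof-tested barrier element at time t (hours).

    ASSUMES a constant dangerous-undetected failure rate (per hour) and
    perfect proof tests that restore the element to as-good-as-new.
    """
    since_test = t_hours % test_interval
    return 1.0 - math.exp(-failure_rate * since_test)


LAMBDA = 1e-6   # hypothetical failure rate, per hour
T = 8760.0      # hypothetical proof-test interval: one year

# Sample once a day over three years of operation.
samples = [instantaneous_pfd(t, LAMBDA, T) for t in range(0, 3 * 8760, 24)]
average = sum(samples) / len(samples)
print(f"min {min(samples):.1e}, max {max(samples):.1e}, mean {average:.1e}")
print(f"static approximation lambda*T/2 = {LAMBDA * T / 2:.1e}")
```

A management failure such as a skipped or poorly performed proof test would lengthen the teeth of the sawtooth and raise the risk, a change that a single static figure cannot show.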

Conceiving of Barriers as Dynamic. In ARAMIS and the more recent Dutch project looking at risk control in ‘common or garden’ accidents in industry2, the control loop is linked with the functioning, and hence the effectiveness, of the barriers introduced to control the development of scenarios represented in the bowties. The link with barriers appears, according to the feedback from the case studies, to be one which can easily be communicated to people in the companies who are actually operating the processes and keeping the scenarios under control.

The original concept of barriers comes from the classic work of Haddon (1966) and has been used primarily in the old static, deviation models, typically codified in such approaches as MORT (Johnson, 1980). Barriers were originally conceived of largely as physical things in the path of energy flows. However, the concept has now been extended to procedural, immaterial and symbolic elements (Hollnagel, 2004), in other words everything that keeps energy (or more recently information) flows and processes from deviating from their desired pathways. In the work of the ARAMIS project we have extended this definition and given it a control-loop aspect. Barriers are defined as hardware, software or behaviour, which keeps the critical process within its safe limits. In other words, barriers are seen more as the frontier posts guarding the safe operating envelope, than as preventing deviation from the ‘straight and narrow’. They are also defined as control devices, which must have three functions in order to be called effective barriers:

• Detect the need to operate,

• Diagnose what control action is needed, and

• Carry out the control action.

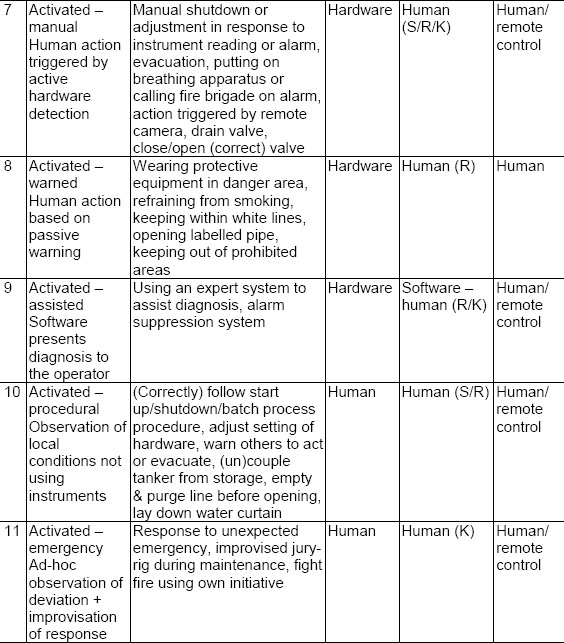

We therefore do not see a warning sign or instrument reading as a barrier in its own right, only as a barrier element, since it is only effective if somebody responds to it and carries out the correct control action. In this sense we differ from Hollnagel (2004) by calling his symbolic and immaterial barriers only barrier elements. We therefore place behavioural responses more centrally in the definition of barriers, which we believe contributes to clearer thinking about them and to more resilience of the barriers themselves. A preliminary classification of 11 barrier types then indicates how each of these barrier functions is operationalised by an element of the barrier, either in the form of hardware/software, or of human perception, diagnosis or action (Hale et al., 2005), see Table 18.3.
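The three-function definition gives a simple completeness test. In the sketch below, which is hypothetical and does not reproduce the 11 barrier types of Table 18.3, a barrier is a set of elements, each fulfilling one function and performed by hardware, software or a person. An alarm on its own fails the test, which is why it is classed as a barrier element rather than a barrier.

```python
from dataclasses import dataclass

FUNCTIONS = ("detect", "diagnose", "act")


@dataclass
class Element:
    name: str
    function: str    # one of FUNCTIONS
    performer: str   # "hardware", "software" or "human"


def is_complete_barrier(elements):
    """A barrier counts as effective only if detect, diagnose and act are all covered."""
    covered = {e.function for e in elements}
    return all(f in covered for f in FUNCTIONS)


# Hypothetical elements of an overfill barrier.
alarm = Element("high-level alarm", "detect", "hardware")
operator_diagnosis = Element("operator identifies overfill", "diagnose", "human")
operator_action = Element("operator closes inlet valve", "act", "human")

print(is_complete_barrier([alarm]))  # False: an alarm alone is only a barrier element
print(is_complete_barrier([alarm, operator_diagnosis, operator_action]))  # True
```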

This classification allows a direct link to be made between the choice of barrier type, the hardware, software and behavioural elements essential to its effective operation, and the management functions needed to keep it functioning effectively (see Figure 18.1). A different choice of barrier type to achieve a given function can then be seen in the light of the management effort it brings with it, and the degree to which it can be thought of as inherently safe (criterion 8 of the resilience criteria in Table 18.2). By making the relative roles of hardware, software and behaviour very explicit, the technique forces the system controllers to think clearly about the prerequisites for effective functioning and to realise that all barriers depend for that effectiveness on human actions, either directly in use or indirectly in installation, inspection, maintenance, etc. The resilience of the different barriers is therefore brought centre stage in the decision process of barrier selection and in auditing. At this point a judgement can be made as to how inherently safe the process is with its given barriers, or how narrow and fragile the margins of the safe operating envelope are.
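The link between barrier type and management effort can be illustrated by listing which delivery protocols from Table 18.1 each design calls on. The sketch below is a deliberate simplification under our own assumptions: hardware and software elements are tied to the two hardware life cycle protocols, human elements to the five behavioural ones, and the risk identification and monitoring protocols, which apply to every barrier, are left out. The two barrier designs are hypothetical.

```python
# Delivery protocols from Table 18.1 that keep each kind of element working.
HARDWARE_PROTOCOLS = {"Design to installation", "Inspection to repair"}
HUMAN_PROTOCOLS = {
    "Procedures, rules, goals",
    "Availability, manpower planning",
    "Competence, suitability",
    "Commitment, conflict resolution",
    "Communication, coordination",
}


def protocols_needed(elements):
    """Return the delivery protocols a barrier design depends on directly.

    `elements` is a list of (performer, function) pairs. Hardware tasks also
    depend indirectly on human delivery tasks (the deeper iteration of boxes
    5 to 9), which this simplified sketch ignores.
    """
    needed = set()
    for performer, _function in elements:
        if performer in ("hardware", "software"):
            needed |= HARDWARE_PROTOCOLS
        else:
            needed |= HUMAN_PROTOCOLS
    return needed


automatic_trip = [("hardware", "detect"), ("software", "diagnose"), ("hardware", "act")]
operator_response = [("hardware", "detect"), ("human", "diagnose"), ("human", "act")]
for name, design in (("automatic trip", automatic_trip), ("operator response", operator_response)):
    print(name, len(protocols_needed(design)))
# automatic trip 2
# operator response 7
```

The smaller count for the automatic trip understates its true dependence on people, since its design, inspection and repair are themselves human tasks; the comparison shows only where the direct management effort falls.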

Table 18.3: Classification of different barrier types.

The coding S, R, K against human actions means the following:

S = skill-based, routine, well-learned responses which people carry out without needing to reflect or decide. They are triggered by well-known situations or signals.

R = rule-based, known and practised actions which have to be chosen more or less consciously from a repertoire of skills that have been learned in advance.

K = knowledge-based improvisation when faced with situations not met before, or ones where there is no prescribed or learned response available to the person concerned and they have to make up a response as they go along.

Central or Distributed Control. All of these modelling efforts are designed to answer the need of the controllers in any system to have a model of the system they are trying to control, which shows them the levers they need to operate to influence the risks. In making these attempts at defining a central (umbrella) system model, we are of course in danger of falling into the trap of implying or even encouraging the view that control should also be situated centrally. Building such an overall model does not make that conclusion inevitable. It is also possible that the functions in the total models are, in practice, carried out by many local controllers working in response to local signals and environments, but within one overall set of objectives. If control is distributed or local, there is, however, a need to ensure that the different actors communicate, either directly in person, or through observation of, and response to, the results of others’ actions. A central overview is essential for monitoring and learning, but not necessarily for exercising control.

We need to resist the tendency to believe that central control is essential, since many studies have shown that decentralised control can be better and safer. An example of this was a recent study by Hoekstra (2001) of the possible implementation of the ‘free flight’ approach to ATM, in which control in certain parts of the air space is delegated from a central air traffic control centre to individual aircraft with relatively simple built-in avoidance algorithms. He showed that these could cope easily with traffic densities two orders of magnitude higher than the current maximum densities (which are already worrying en-route controllers) and could resolve even extreme artificial situations, such as a single aircraft approaching a solid wall of aircraft at the same altitude (with vertical resolution algorithms disabled), or ‘super-conflicts’ involving 16 aircraft approaching the same point in airspace from mutually opposing directions. (See also Chapter 4 by Pariès.) We need to distinguish between two needs of resilient systems: a central overview and clarity for the purpose of system auditing, and local control for the purpose of rapid response.

The explicit risk control model may also help in developing the ability to respond to unexpected situations and rapid changes, where distributed response is an essential element. However, this will only be so if the inventory of scenarios strives for completeness and extends to emergency situations and non-nominal system states. We have discussed above the impossibility of being fully comprehensive in detailed scenarios and the need for learning loops to be built in. The ARAMIS assessment methods are currently weak in dealing with the area of non-nominal situations, since there is nothing that forces the analyst to include these in the total model.

Monitoring of Performance, Learning and Change Management

In all of the management processes audited as ‘delivery systems’ in the ARAMIS audit, an explicit step is included for monitoring the effectiveness of the resource or control delivered by that system. These monitoring steps are brought together in the learning and change management system in the ARAMIS model (Figure 18.3).

Figure 18.3: Management of learning and change

The ARAMIS audit emphasises the importance throughout the management system of having performance indicators attached to each step of all of the processes, both as indicators of state and performance (boxes 2 and 4) and of failure (box 3). Performance indicators are essential as the basis for deciding if defences are being eroded under pressure of production, routine violation or loss of commitment. The audit will indicate whether those performance indicators are in place, and also whether they are used, or whether a blind eye is being turned and performance is being allowed to slip.

Closely related to this are the systems for responding to changes in the external world (box 1: risk criteria, state of the art risk control knowledge, good practice) and for assessing proposed technical and organisational changes inside the organisation (boxes 6/7 and 8/9).

The audit also considers how all of the signals are used in the central boxes (5, 7, 9, 10), where performance and proposed changes are assessed. At that point it is necessary that there is no complacency in interpreting good performance in the past as a guarantee of good performance in the future. The sensitivity of the learning system will also determine whether risk assessments are revised appropriately to take account of new evidence and incoming signals about performance. These are both resilience criteria in the original list of eight given in Table 18.2. It is critical here that the feedback loops contain enough data about relevant precursors to failure for shifts in performance to be picked up. A crucial balance needs to be found between so much data that the significant signals cannot be sorted from the noise, and too little data, leading to blindness to trends. These elements have to be fed into the audit in order for it to pick up these clues to resilience.
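The balance between sensitivity and noise can be illustrated with a simple control-chart style check on a single performance indicator. This is only a sketch under our own assumptions: the indicator (for instance a weekly count of overdue inspections), the function name `detect_shift` and the threshold `k` are hypothetical, and the ARAMIS audit does not prescribe any particular statistical test.

```python
from statistics import mean, stdev


def detect_shift(history, recent, k=3.0):
    """Flag a shift if the mean of the recent readings departs from the
    baseline mean by more than k standard errors.

    history: baseline readings of a (hypothetical) performance indicator
    recent:  the latest readings to be compared with that baseline
    """
    if len(history) < 2 or not recent:
        raise ValueError("need at least two baseline readings and one recent reading")
    base_mean = mean(history)
    base_sd = stdev(history)
    if base_sd == 0:
        # No variation in the baseline: any change in the mean is a shift.
        return mean(recent) != base_mean
    # Averaging n recent readings reduces the noise by a factor sqrt(n).
    standard_error = base_sd / len(recent) ** 0.5
    z = (mean(recent) - base_mean) / standard_error
    return abs(z) > k


baseline = [4, 5, 6, 5, 4, 6, 5, 5]  # hypothetical weekly counts of overdue inspections
```

With this baseline a single reading of 6.5 is treated as noise, whereas four consecutive readings at 6.5 are flagged as a shift. Pooling more readings makes the check sensitive to smaller drifts, at the price of a later warning; choosing that window is one concrete form of the balance described above.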

The Two Faces of Change. Many studies and many of the discussions during the workshop have identified that these feedback loops are essential for learning and adaptation in a ‘good’ sense, but also that the mechanisms that drive the processes of normalisation of deviance are also learning processes. These have led to some of the highly publicised disasters, such as Challenger and Columbia (Vaughan, 1996; Gehman, 2003). The dilemma is how to distinguish these two applications of the same basic principles. The key to resilience is to be able to change flexibly to cope with unexpected or changed circumstances, but change itself can destroy tried and tested risk control systems.

The occurrence of violations of procedures is an example of a learning process gone wrong. Either the violations are unjustified and should have been corrected, or they are improvements in ways of coping, which should be officially accepted. In the specific protocol for assessing procedure management (Figure 18.2) there is a place for asking how this process of monitoring and changing rules is controlled. The only mechanism we have come across that explicitly addresses this issue takes a leaf out of the book of technical change management and applies it to the management of procedural and organisational change. In one chemical company with an excellent safety record we found a mechanism in place whereby experienced specialists were available to modify standard procedures to cope with each application that deviated at all from the standard, and in which the boundaries of that ‘safe operation’ of the procedures were very clearly defined. Within those boundaries operators and maintenance staff had freedom to adapt their controlling/operating behaviour. As soon as the boundary was reached the experts were called in to make the change, with an approval process involving more senior management at further defined boundaries of change. This process is reminiscent of the method of rule modification found by Bourrier in her study of a US nuclear plant (Bourrier, 1998). It operationalises a conclusion which we have reached from a number of our own studies: the crucial core of a rule management system is the system for rule monitoring and modification, not the steps of initial rule making. Such a view is proving to be a major culture shock when proposed in our railway studies, where the actors in the system are used to seeing rules as fixed truths to be carved in stone. Introducing an explicit rule change system is designed to make change more explicit and considered, so turning a smooth slide of rule erosion into a bumpy ride of explicit rule change.
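The tiered boundaries described for this chemical company can be expressed as a simple decision rule. The sketch below rests on our own simplifying assumption that a departure from a standard procedure can be measured as a single number (for instance a percentage change in an operating parameter); the names `Approver`, `required_approval`, `local_limit` and `specialist_limit` are hypothetical and do not describe the company's actual system.

```python
from enum import Enum


class Approver(Enum):
    OPERATOR = "operators/maintenance staff may adapt locally"
    SPECIALIST = "procedure specialist must modify the procedure"
    SENIOR_MANAGEMENT = "specialist change plus senior management approval"


def required_approval(deviation, local_limit, specialist_limit):
    """Return who must decide on a deviation from the standard procedure.

    deviation:        size of the departure from the standard (hypothetical scale)
    local_limit:      boundary of the 'safe operation' zone left to operators
    specialist_limit: further boundary beyond which senior management must approve
    """
    if not 0 <= local_limit <= specialist_limit:
        raise ValueError("limits must satisfy 0 <= local_limit <= specialist_limit")
    size = abs(deviation)  # departures in either direction count
    if size <= local_limit:
        return Approver.OPERATOR
    if size <= specialist_limit:
        return Approver.SPECIALIST
    return Approver.SENIOR_MANAGEMENT
```

For example, with `local_limit=5` and `specialist_limit=15`, a deviation of 2 stays with the operators, a deviation of 10 in either direction goes to the specialists, and a deviation of 20 also needs senior management. The value of such a scheme lies less in the thresholds themselves than in the fact that they are written down, so that crossing them becomes a visible, recorded decision rather than a gradual drift.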

Controlling Organisational Change. The chemical company mentioned above was also in the process of implementing a similar procedure for all organisational changes, such as manning changes, reorganisations, shifts of staff in career moves, etc. The idea of imposing an explicit control of organisational change also comes as a major culture shock in most organisations, even those operating in high hazard environments. They are used to seeing such reorganisations as unstoppable processes, which roll over them and cannot be questioned. The British Nuclear Installations Inspectorate has now imposed a rule requiring plants to present a detailed assessment of such organisational changes before they are approved (Williams, 2000). In order to implement such a rule, the company has to have a clear organisational picture of its risk control and management system. Then it can assess whether the reorganisation will leave any crucial tasks in that system unallocated. Reorganisation can also result in tasks being allocated to people not competent or empowered to fulfil them. It can also cut, distort or overload communication or information channels essential for local or overall control. The control of organisational change presents a challenge to industries and activities that have traditionally had an implicit risk control system and management (railways, ATM, etc.). Their picture of how their control system works may not be explicit enough to act as a basis for evaluating change (see also Chapter 3). This issue returns to the discussion raised in the section dealing with the ‘clear picture of risks and how they are controlled’.
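Two of the checks mentioned above, whether a reorganisation leaves crucial tasks unallocated and whether it allocates them to people lacking the required competence, can be sketched once the risk control tasks are written down explicitly. This is a minimal illustration under our own assumptions: the data layout and the function name `assess_reorganisation` are hypothetical, and the sketch does not model empowerment or the communication and information channels, which would need a richer representation.

```python
def assess_reorganisation(crucial_tasks, allocation, competences):
    """Check a proposed organisation against an explicit list of crucial tasks.

    crucial_tasks: dict mapping each crucial risk control task to the competence it requires
    allocation:    dict mapping tasks to the person who will hold them after the change
    competences:   dict mapping each person to the set of competences they hold
    """
    # Crucial tasks that nobody holds after the reorganisation.
    unallocated = sorted(task for task in crucial_tasks if task not in allocation)
    # Crucial tasks given to someone without the required competence.
    not_competent = sorted(
        task
        for task, person in allocation.items()
        if task in crucial_tasks
        and crucial_tasks[task] not in competences.get(person, set())
    )
    return {"unallocated": unallocated, "not_competent": not_competent}


# Hypothetical example of a proposed reorganisation.
tasks = {
    "isolation permit signing": "permit signatory",
    "shift handover": "control room operator",
    "relief valve testing": "mechanical technician",
}
proposal = {"isolation permit signing": "Ann", "shift handover": "Bob"}
skills = {"Ann": {"permit signatory"}, "Bob": {"mechanical technician"}}
report = assess_reorganisation(tasks, proposal, skills)
```

In this example the report shows that relief valve testing would be left without an owner and that the shift handover would pass to someone without control room competence. Neither finding is possible unless the company first has the explicit picture of its risk control tasks that the text calls for.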

If we wish to evaluate organisational change in advance, this immediately raises the same questions as those which technical change management processes raise, namely: when is a procedural or organisational change large enough to subject it to explicit scrutiny and demand a safety case to justify it? The chemical company mentioned above had begun to tackle this by making explicit such things as the (methods of calculating) minimum levels of manning3 and competence for a range of activities, with an explicit approval process involving higher management if these were to be breached. This move was appreciated by the operational and supervisory staff as a means to strengthen their hand in resisting subtle pressure from above to bend the rules informally. However, this is still largely unexplored territory for such companies, and the lessons from accidents such as Challenger and Columbia show that similar boundary posts and formal waiver procedures in place in those cases failed to stop the deviance normalisation processes there.

Continuing Commitment to High Safety Performance

The ARAMIS audit and its predecessor, the IRMA audit of I-Risk (Bellamy et al., 1999), have an explicit delivery system devoted to the delivery of commitment. This is combined in ARAMIS with the delivery of conflict resolution, a process separated out in the IRMA audit. The difference between the two processes is as follows. Commitment applies particularly at the level of individuals and groups in the organisation, and failures in it are concerned with violation of procedures, short cuts, neglect of routine checks, etc. This is the focus of the ARAMIS audit, which zooms in on on-line barrier performance. Conflict resolution deals much more with the conflicts emerging and controllable at the level of first-line, middle and senior management. These are concerned with such things as the decisions to curtail maintenance shutdowns for reasons of production, to reward managers on their quality or production figures despite poor safety figures, or to transfer resources for training from safety to security as a result of terrorist threats. They condition the choices at individual and group level, as does the individual commitment of the senior and top managers. Since these are the drivers behind the erosion of defences and the subordination of safety to other organisational goals, the protocols covering them are crucial in assessing resilience. Unlike the management processes for managing the life cycle of hardware, or delivering manpower, competence or procedures, these delivery systems are much more diffuse in companies and therefore more difficult to audit. They approach most closely the traditional areas of safety culture, which can only be unravelled and understood by a combination of observation, interview and discussion.
In the ARAMIS audit they are assessed by looking for explicit analysis of potential conflicts, by examining how safety is incorporated into formal and informal appraisal and reward systems, by looking for explicit instruments to monitor and discuss good and poor safety behaviour (violations), and by assessing senior management visibility and involvement in workplace safety. However, we accept that this is an area of the audit tool which is weak and which needs support from assessment methods drawn from research on culture and safety climate.

Communication and Coordination

What emerges from the studies of high reliability and resilience is a central emphasis on intensive communication and feedback, either for the individual or for and within the group which is steering the system within the boundaries of its safe envelope. It seems necessary to audit, or study in detail, what is taking place within this communication and feedback, in order to understand whether it contains the requisite information and models to cope with the range of situations it will meet. What we are looking for is a better understanding of how this commonly described or demanded aspect of a culture of safety works in detail. What is observed? How is it interpreted? What is communicated, how and with whom? What are the signals indicating a movement towards the boundaries of control? How are they distinguished from other signals? In the ARAMIS audit this aspect receives specific attention in the delivery system relating to communication and coordination. This concentrates on the communication within groups, particularly when their tasks are distributed in time or space. It therefore assesses the operation of barriers where combined action by control room and field staff is necessary, the transfer of control at shift changeovers, and the transfer between operations and maintenance when isolating, making safe and opening plant for maintenance, and when reassembling, testing and returning it to service.

Conclusions and General Issues

In the previous sections we have indicated that a number of the major criteria for assessing or recognising resilience, which were listed in Table 18.2, are potentially auditable by a system such as the ARAMIS audit. The audit and its underlying modelling techniques pay a lot of attention to establishing a clear picture of the risks to be controlled and how they are eliminated, minimised or managed. The full range of possible scenarios, the well-chosen barriers, the well-managed barrier life cycles, the functioning feedback loops and the critically reviewed learning and adaptation processes are explicitly covered in the auditing system. So is attention to commitment, conflict resolution and communication.

Dependence on Auditor Quality

However, the assessment of all of these factors, as in all audit systems, depends very much on the quality and knowledge of the auditor and whether the relevant aspects are picked up and assessed. This depends in turn on the auditor’s willingness and ability to judge the quality of arguments offered by the companies in justifying their approach to risk control and its management. Only highly experienced auditors, knowledgeable about the industry being audited, can make such judgements. This issue was highlighted in the five case studies carried out to test the ARAMIS audit. The structure of the audit and its support is not yet mature enough to provide acceptable reliability in the assessments. The issue of the quality and experience of auditors presents problems for the regulators, whether these are government inspectorates or commercial certification bodies. Where can they attract such knowledgeable and valuable people from, and how can they afford to pay them? The companies themselves, if they use such scenario-based auditing techniques, also need to commit themselves to putting high quality staff into these functions. These need to be people who can step back far enough from the familiar surroundings of the plant and its risk controls to assess them independently and critically. The central audit teams employed by a number of international chemical companies, who travel to company sites for audits lasting several days at regular intervals, seem to come close to fulfilling this essential role as critical testers of the resilience of the organisations they visit.

Marrying Auditing and Culture Assessment

The ARAMIS audit, and undoubtedly other audit tools, can provide the hooks on which to hang the questions that can attempt to assess the resilience of companies according to the criteria we have listed in Table 18.2. However, we have to admit that the questions to probe those issues are not always made explicit in the audits. If we wish to ensure that the questions do get asked in ways which will produce revealing answers, we will need to adapt the audit protocols to provide a much more explicit focus. In the ARAMIS project an attempt was made to start on this process, which would require a closer coupling between the traditionally separate areas of safety management structure and safety culture. Discussions were held to try to link specific dimensions of safety culture to specific aspects of safety management structure, but these failed to lead to any consensus. Further attempts are necessary to marry these two areas of work, which have arisen from separate traditions, one rooted in psychology and attitude measurement and the other in management consultancy, so that we can devise effective audit tools to assess both in an acceptable period of time.

What if Resilience is Measured as Poor?

An audit tool should, according to our arguments, be able to recognise at least a number of the weak signals indicating that an organisation is not resilient. The question then is how to improve matters. What the audit tells the organisation is which of the performance indicators are off specification. If these performance indicators are widely spread throughout the risk control system, they should tell the company where the improvements must take place. Is that in the monitoring and review of performance? Is it in tackling significant amounts of violation of procedures? Is it in convincing staff that better performance is possible? Is it in the design and layout of user and maintenance friendly plant and equipment? Or is it in a move to risk-based maintenance? What an audit cannot say is how to achieve that change. The processes of organisational change, of achieving commitment and of modifying organisational culture require a very different set of expertise from that of the auditor. We leave the analysis of those change processes to other experts.

1 Relatively constant in these consortia have been the Dutch Ministry of Social Affairs and Employment, the Dutch Institute for Public Health, Environment and Nature, the British Health & Safety Executive, the Greek Institute Demokritos and Linda Bellamy, working for a number of different consultancies. Other institutes which have taken part include INERIS in France and Risø in Denmark. The consortia have worked under a succession of European projects, including PRIMA, I-Risk and ARAMIS.

2 This project is called Workplace Occupational Risk Model or WORM (Hale et al., 2004) and involves the core participants mentioned in note 1.

3 Earlier experience in posing this question to the association of chemical companies in the Rotterdam area of the Netherlands had led to a firm refusal to make such methods explicit and transparent or to develop common approaches to establishing them, with the argument that this was a matter for each company to decide in its own way and with professional secrecy, since it was linked to relative profitability.