Chapter 1

Simulation: History, Concepts, and Examples 1

1.1. Issues: simulation, a tool for complexity

1.1.1. What is a complex system?

The world in which we live is advancing rapidly toward complexity. Indeed, the time when the component parts of a system could be dealt with individually has passed: we no longer consider the turret of a tank in isolation, but the entire weapon system, with its chassis, ammunition, fuel, communications, operating crew (who must be trained), maintenance crew, supply chain, and so on. We can also consider the tank at a higher level, taking into account its interactions with other units in the course of a mission.

We thus pass from a system that carries out a specific task to a system of systems that accomplishes a wide range of functions. Our tank, for example, is now just one element in a vast mechanism (or “force system”) whose aim is to control the air-land sphere of the battlefield.

Therefore, we can no longer rely on a purely technical, system-oriented logic; we must adopt a capability-driven, system-of-systems-oriented logic1 [LUZ 10, MAI 98].

This is also true of a simple personal car, a subtle mixture of mechanics, electronics, and information technology (IT). Its design takes into account manufacturing, marketing, and maintenance (including the development of an adequate logistics system), and even recycling at the end of the vehicle’s useful life. This last consideration is becoming increasingly important with growing awareness of sustainable development and ecodesign. Thus, the Toyota Prius, a hybrid vehicle whose components are more polluting than average, has a high end-of-life recycling potential. This recycling is also organized by the manufacturer, which, for example, offers a bonus for the return of the NiMH traction battery, the most environmentally damaging of the car’s components. In this way, the manufacturer ensures that the battery does not end up in an ordinary landfill. Instead, it follows a recycling process developed at the same time as the vehicle. In spite of these constraints, the manufacturer remains competitive.

These constraints set the bar very high in engineering terms. Twenty years ago, systems were complicated but could be simplified by successive decompositions. These separated the system into components that were easy to deal with, for example, a gearbox, a steering system, and an ignition system. Once these components had been developed and validated, they could simply be integrated following the classic V model. Nowadays, engineers are increasingly confronted with complex systems. This invalidates a large part of the development methods used in previous decades and calls for a new approach.

So what is a complex system? Complex systems are nothing new, even if they have gained importance in the 21st century. The Semi-Automatic Ground Environment (SAGE) air defense system, developed by the United States in the 1950s, and Concorde, in the 1960s, are examples of complex systems, even though they were not labeled as such. SAGE can even be considered a system of systems. However, the methods involved were barely formalized, leading to errors and omissions in the system development processes. In 1969, Simon [SIM 69] defined a complex system as “a system made of a large number of elements which interact in a complex manner”. Jean-Louis Le Moigne gave a clearer definition [LEM 90]: “The complexity of a system is characterized by two factors: on the one hand, the number of constituent parts, and on the other, the number of interrelations”.

Overall, then, we shall judge the complexity of a system not only by the number of its components but also by the relationships and dependencies between them. A product in which software plays a large part therefore becomes complex very rapidly. Other factors of complexity exist:

- human involvement (i.e. multiple operators) in system components;
- the presence of random or chaotic phenomena, which make the behavior of the system non-deterministic;
- the use of very different time scales or disciplines in sub-systems;
- the rapid evolution of specifications (a changing operating environment).

An important property of complex systems is that, when the sub-systems are integrated, we are often faced with unforeseen emergent behaviors. These can prove beneficial (the acquisition of new capabilities) or disastrous (a program crash). A complex system is therefore much more than the sum of its parts and associated processes. It can thus be characterized as non-Cartesian: it cannot be analyzed by a series of decompositions. This is the major (but not the only) challenge of complex system engineering: mastering these emergent properties.
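To give an order of magnitude for Le Moigne’s second factor, the short Python sketch below counts the maximum number of direct pairwise interrelations among n components, that is, n(n − 1)/2. It rests on a deliberately pessimistic, purely illustrative assumption: that any component may interact directly with any other. Real architectures constrain these links. The count also ignores indirect and higher-order interactions, which only make matters worse.

```python
def max_pairwise_interactions(n_components: int) -> int:
    """Upper bound on direct pairwise interrelations among n components.

    Illustrative assumption: every pair of components may interact directly,
    giving n * (n - 1) / 2 undirected links.
    """
    if n_components < 0:
        raise ValueError("the number of components cannot be negative")
    return n_components * (n_components - 1) // 2


if __name__ == "__main__":
    # Components grow tenfold at each step; potential interrelations grow about a hundredfold.
    for n in (5, 50, 500):
        print(f"{n:>4} components -> {max_pairwise_interactions(n):>7} potential interrelations")
```

Going from 5 to 50 to 500 components takes the bound from 10 to 1,225 to 124,750 potential interrelations. This quadratic growth is one reason why the number of interrelations, rather than the number of parts alone, dominates the complexity of a system.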

On top of the intrinsic complexity of systems, we find increasingly strong external constraints that make the situation even more difficult:

– increasing number of specifications to manage;

– increasingly short cycles of technological obsolescence; system design is increasingly driven by new technologies;

– cost and schedule pressure;

– increasing necessity for interoperability between systems;

– larger diversity in product ranges;

– more diverse human involvement in the engineering process, but with less individual independence, with wide (including international) geographic distribution;

– less acceptance of faults: strict reliability constraints, security of individuals and goods, environmental considerations, sustainable development, and so on.

To manage the growing issues attached to complexity, we must develop and adopt new methods and tools. Modern global land-air defense systems could not be developed in the same way as SAGE was in the 1950s (not without problems, and with major cost and schedule overruns). Observant readers may point out that a complex system loses its complexity if we manage to model and master it. This is indeed a valid way of seeing things, and it is why the notion of complexity evolves with time and technological advances. Certain systems considered complex today may not be considered complex in the future. This work aims to contribute to this process.

1.1.2. Systems of systems

In systems engineering, numerous documents, such as the ISO/IEC 15288 standard, define processes that aim to master system complexity. These processes often reach their limits when we are dealing with systems of systems. As a first step, we can decompose a system of systems hierarchically into a group of systems that cooperate to achieve a common goal. The aforementioned processes may then be applied to each system individually. However, stopping at this approach means running considerable risks: by its very nature, a system of systems is often more than the sum of its parts.

It would, of course, be naïve to restrict the characterization of systems of systems to this property, but it is the principal source both of their appeal and of their risks. A system of systems is a higher-level system that is not necessarily a simple “federation” of other systems.

Numerous definitions of systems of systems can be found in the current literature: [JAM 05] gives no fewer than six, and Chapter 1 of [LUZ 10] gives more than 40. Here, we shall use the most widespread definition, based on the so-called Maier criteria [MAI 98]:

– operational independence of constituent systems (which cooperate to fulfill a common operational mission at a higher level, i.e. at the capability level);

– functional autonomy of constituent systems (which operate autonomously to fulfill their own operational missions);

– managerial independence of constituent systems (acquired, integrated, and maintained independently);

– changeable design and configuration of the system (specifications and architectures are not fixed);

– emergence of new behaviors, exploited to improve the capabilities of each constituent system or to provide new capabilities (new capabilities emerge via the cooperation of several systems not initially developed for this purpose);

– geographical distribution of the constituent systems (hence the particular and systematic importance of information systems and communication infrastructures in systems of systems).

As a general rule, the main sources of difficulties in mastering a system of systems are as follows:

– intrinsic complexity (by definition);

– the multidisciplinary, multi-stakeholder character of the system, which poses problems of communication and coordination between the various individuals involved, who often come from different cultural backgrounds;

– uncertainty concerning specifications or even the basic need, as mastering a system of systems presents major challenges for even the most experienced professionals. This difficulty exists at all levels and for everyone involved in the acquisition process. This includes the end user and the project owner, who may have difficulty expressing and stabilizing their needs. These needs may legitimately evolve due to changes in context, e.g. following a dramatic attack on a nation or a major economic crisis. It is therefore necessary to remain agile and responsive regarding specifications;

– uncertainty concerning the environment, given the number of people concerned and the length of the acquisition cycle. This may, for example, lead to a component system or technology becoming obsolete even before being used. This is increasingly true due to the growing and inevitable use of commercial technologies and off-the-shelf products, particularly in information and communications technology, where products become obsolete ever more quickly.

To deal with these problems, a suitable approach, culture, and set of tools must be put in place. Simulation is an essential element of this process but is not sufficient on its own. Currently, there is no fully tested process or “magic program” able to deal with all these problems. However, the battle lab approach does seem particularly promising, as it has been developed specifically to respond to the needs of system-of-systems engineering. This approach is described in detail in Chapter 8.

1.1.3. Why simulate?

As we shall see, a simulation can be extremely expensive: a system-of-systems simulation can cost several million euros, if not more. The acquisition of the French Rafale simulation centers, now operational, cost around 180 million euros. It nevertheless generates savings, because it removes the need to buy a number of additional Rafale aircraft for training, each costing more than 40 million euros before even considering the maintenance costs involved in keeping them operational. The American JSIMS joint (inter-service) program was another example of this kind, but it was stopped after more than one billion dollars of investment. These cases are, admittedly, extreme, but they are not unique. Some of these exceedingly costly simulation systems are themselves complex systems and have been known to fail. If, then, a simulation is so difficult to develop, why simulate?

First, a word of reassurance: not all simulation programs are so expensive, and not all are failures. By following rigorous engineering procedures, notably a number of the processes described in the present work, it is possible to ensure the quality of simulations, as with all industrial products. Second, simulation is not obligatory, but it is often a necessary means to an end. We shall review the principal constraints that can lead to working with simulations to achieve all or part of a goal.

1.1.3.1. Simulating complexity

Those who have already been faced with systems engineering, even briefly, will be aware of just how delicate the specification and development of current systems are. The problem is made even trickier by the fact that current products (military or civilian) have become complex systems, or even systems of systems. We do not need to look as far as large-scale air traffic control systems or global logistics to find such systems; we encounter them in everyday life. Mobile telephone networks, for example, may be considered systems of systems, and a simple modern vehicle is a complex system. For these systems, a rigorous engineering approach is needed to avoid failing to meet objectives, or even complete failure.

In this case, simulation can be used at several levels:

– simulation can assist in expressing a need, allowing the client to visualize their demands using a virtual model of the system. This provides a valuable tool for dialog between the parties involved in the project, who often do not have the same background, and helps ensure sound mutual understanding of the desired system;

– this virtual model can then be enriched and refined throughout the system development process to obtain a virtual prototype of the final system;

– during the definition of the system, simulation can be used to validate technological choices and avoid falling into a technological impasse;

– during testing of the system, simulations developed during the previous stages can be used to reduce the number of tests needed and/or to extend the field of system validation;

– when the system is finally put into operation, simulations can be used to train system operators;

– finally, if changes to the system are planned, existing simulations facilitate analysis of their impact on the performance of the modified system.

These uses show that simulation is a major tool for system engineering, to the point that nowadays it is difficult to imagine complex system engineering without simulation input. We shall not go any further into this subject at present as we shall discuss it later, but two key ideas are essential for the exploitation of simulation in system engineering:

– Simulation should be integrated into the full system acquisition process to achieve its full potential. This engineering concept is known as simulation-based acquisition (SBA, see [KON 01]) in the United States and as synthetic environment-based acquisition (see [BOS 02]) in the United Kingdom; the national concepts differ in their nuances, but the general philosophy is similar.

– The earlier simulation is used in a program, the more effective it will be in reducing later risks.

To understand the issue of risk reduction, consider a few statistics. According to the Standish Group [STA 95], approximately one-third of projects (in the field of IT) do not succeed. Almost half of all projects overrun their cost by a factor of two or more. Only 16% of projects are completed within the time and cost limits established at the outset, and the more complex the project, the lower this figure. For large companies, it falls to 9%, and these companies, moreover, end up deploying systems that lack, on average, more than half of the functionality initially expected. We have, of course, chosen particularly disturbing figures, and recent updates to the report have shown definite improvements. Even so, the 2003 version [STA 95] still shows that only one-third of projects are completed within the desired cost and time limits. This represents an improvement of roughly 100% (from 16% to about one-third), but there is still room for a great deal of progress.

1.1.3.2. Simulation for cost reduction

Virtual production is admittedly costly, but it is often cheaper than real production. Building a prototype aircraft such as the Rafale costs hundreds of millions of euros. Even a scale model can be very expensive to produce. Once the prototype or model is available, tests must be carried out, which in turn carry their own costs in terms of fuel and the mobilization of the corresponding resources. Finally, if the test is destructive (e.g. missile launches), further investment is required for each new test.

For this reason, in the context of ever-shrinking budgets, the current tendency is toward fewer tests and their partial replacement by simulation, with the establishment of a “virtuous simulation-test circle” [LUZ 10]. The evolution of the number of tests during the development of ballistic missiles by the French strategic forces, a particularly complex system, is instructive in this respect:

– The first missile, the M1, was developed in only 8 years, but at great cost. Modeling was used very little, and a certain level of empiricism was involved in the tests, of which there were 32 (including nine failures).

– For the M4, slightly more use was made of simulation, but the major progress came from the implementation of a quality control process. Fourteen tests were carried out, including one failure.

– During the development of the M51, which represented a major technological leap forward, simulation was brought to the fore to reduce flight and ground tests, with the aim of carrying out fewer than 10 tests.

Nevertheless, it should be stressed that, contrary to an all too widespread belief, simulation is not in principle intended to replace testing: the two techniques are complementary. Simulation allows tests to be optimized, providing better coverage of the domain in which the system will be used with, potentially, fewer real tests. Simulation is not, however, possible without tests, as it requires real-world data to set model parameters and to validate the models. Without this input, simulation results could rapidly lose all credibility.

Figure 1.1 illustrates this complementarity: it shows a simulation and a test of armor-piercing munitions, carried out with the OURANOS program at the Gramat research center of the Defense Procurement Directorate (Direction générale de l’armement, DGA). The simulation allows a large number of hypotheses to be explored at minimum cost and delay, but the results still need to be validated by tests, even if simulation reduces their number. Chapter 3 gives more detail on the principles of validating models and simulations.

Pilot training is one area in which simulation has been used for several decades. The price of a flight simulator certified by the aviation authorities (Federal Aviation Administration (FAA), USA; Joint Aviation Authorities (JAA), Europe; and so on) is sometimes close to that of a real airplane. An advanced full flight simulator costs from 10 to 20 million euros, and a simplified training-type simulator approximately 5 million euros2. The Rafale simulation center in Saint-Dizier, France, cost a trifling 180 million euros for four training simulators. Naturally, this raises the question of whether this level of investment is justified.

To judge, we need a few more figures concerning real systems. To keep to the Rafale example, the catalog price of one airplane is over 40 million euros, which means it is not possible to have a large number of these aircraft. Moreover, a certain number must be reserved for training. Using simulation for a large part of the training reduces the number of aircraft unavailable for military operations. Indeed, approximately 50 aircraft would be needed to provide the flight equivalent of the training delivered by the Rafale simulation center (Centre de simulation Rafale, CSR).

The real cost of an airplane is also considerably more than its catalog price. Frequent maintenance and updates are required, alongside fuel, all of which effectively doubles or triples the total cost of the system over its lifespan. An hour’s flight in a Mirage 2000 aircraft costs approximately 10,000 euros, and an hour in a Rafale costs twice that figure3. The military must also consider munitions: a simple modern bomb costs approximately 15,000 euros, or more depending on the sophistication of the guidance system, and a tactical air-to-ground missile costs approximately 250,000 euros.

An hour in a simulator costs a few hundred euros, during which the user has unlimited access to munitions. We need not go on. Moreover, the simulator presents other advantages:

- there is no risk to the student’s life in case of accident;
- the environmental impact, and the disturbance to residents near airfields, is reduced;
- the simulator offers unparalleled levels of control; for example, the instructor can choose the weather and the time of flight, and can simulate component failures.
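A few lines of arithmetic can check the order of magnitude behind this reasoning. The Python sketch below mostly uses figures quoted above:

- a catalog price of about 40 million euros per Rafale;
- about 50 aircraft to match the simulation center’s training;
- 180 million euros for the center;
- 10,000 euros per Mirage 2000 flight hour, and twice that for the Rafale.

The simulator hourly cost of 300 euros is a hypothetical stand-in for “a few hundred euros”. Because catalog prices ignore maintenance and fuel, the resulting ratios should be read as lower bounds.

```python
# Figures quoted in the text (rounded); SIMULATOR_HOUR_EUR is a hypothetical value.
RAFALE_CATALOG_PRICE_EUR = 40_000_000     # "over 40 million euros" per aircraft
AIRCRAFT_FOR_EQUIVALENT_TRAINING = 50     # aircraft needed to match the center's training
SIMULATION_CENTER_COST_EUR = 180_000_000  # Rafale simulation center, Saint-Dizier
MIRAGE_HOUR_EUR = 10_000                  # one flight hour in a Mirage 2000
RAFALE_HOUR_EUR = 2 * MIRAGE_HOUR_EUR     # "twice that figure"
SIMULATOR_HOUR_EUR = 300                  # hypothetical: "a few hundred euros"


def fleet_to_center_ratio() -> float:
    """Catalog price of the equivalent fleet divided by the cost of the simulation center."""
    fleet_cost = RAFALE_CATALOG_PRICE_EUR * AIRCRAFT_FOR_EQUIVALENT_TRAINING
    return fleet_cost / SIMULATION_CENTER_COST_EUR


def flight_to_simulator_hour_ratio() -> float:
    """Cost of one real Rafale flight hour relative to one simulator hour."""
    return RAFALE_HOUR_EUR / SIMULATOR_HOUR_EUR


if __name__ == "__main__":
    print(f"Equivalent fleet vs. center: {fleet_to_center_ratio():.1f}x")
    print(f"Flight hour vs. simulator hour: {flight_to_simulator_hour_ratio():.0f}x")
```

Under these assumptions, the equivalent fleet costs about eleven times the center at catalog price alone. A real flight hour costs roughly 67 times a simulator hour, before munitions are even counted.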

Why, then, continue training on real aircraft? However sophisticated simulation may be (and flight simulators can go a long way), it cannot replace the sensations of real flight. As in the case of tests, simulation can reduce the number of hours of real flight while providing roughly equivalent training results. For the Rafale, a difficult aircraft to master, pilots need between 230 and 250 h of flight per year to operate efficiently, but the budget only covers 180 h. Without simulation, Rafale pilots could not fully master their weapons systems. We are therefore dealing with a question of filling a gap in the budget rather than an attempt to reduce flight hours. Note, though, that civil aviation has so-called transition courses. These allow pilots already experienced on one aircraft to become qualified on another model of the same range through simulation alone, although the pilot must then complete a period as co-pilot on the new aircraft.

1.1.3.3. Simulation of dangerous situations

Financial constraints are not the only reason for wishing to limit or avoid the use of real systems. In numerous situations, tests or training involve significant human, material, or environmental risks.

To return to the example of flight simulation, international standards for pilot qualification (such as the Joint Aviation Requirements) demand that trainees be prepared to react to various component failures in flight. It is hard to see how this kind of training could be carried out in real flight, as it would put the aircraft and crew in grave danger. These failures are therefore reproduced in the simulator, so that trainees can acquire the necessary reflexes and the instructor can test their reactions.

On a different level, consider inter-army and inter-allied training: given the number of platforms involved, the environmental impact would be particularly significant. The famous NATO exercise Return of Forces to Germany (REFORGER) is a case in point. It tested NATO’s capacity to send forces into Europe in the event of a conflict with the Soviet Union and its allies. Its 1988 edition involved approximately 97,000 personnel, 7,000 vehicles, and 1,080 tanks. It cost 54 million dollars (at the time), almost half of which went to compensating Germany for environmental damage. In 1992, the use of computer simulations allowed the deployment to be limited to 16,500 personnel, 150 armored vehicles, and no tanks, at a cost of a mere 21 million dollars (everything is relative …). Most remarkably, the environmental damage in 1992 cost no more than 250,000 dollars.

1.1.3.4. Simulation of unpredictable or non-reproducible situations

Some readers may have seen the film Twister. Setting aside the fictional storyline and Hollywood special effects, the film concerns nothing more or less than an attempt by American researchers to create a digital simulation of a tornado. Tornadoes are destructive natural phenomena that are, alas, frequent in the United States. Impressive as they are, they are also unpredictable and thus difficult to study, hence the existence of “tornado chaser” scientists, as seen in the film. In this movie, the heroes try to gather the data required to build and validate a digital model. This proves very challenging, as tornadoes are unpredictable and highly dangerous, and the heroes’ lives are threatened more than once. However, the stakes are high: simulation allows a better understanding of the mechanisms that lead to the formation of a tornado, increasing the warning time that can be given.

Simulation thus allows us to study activities or phenomena that cannot be observed in nature because of their uniqueness or unpredictability. The dramatic tsunami of December 2004 in the Indian Ocean, for example, has been the subject of a number of simulation-based studies. The document [CEA 05] is a summary of work published by the French Alternative Energies and Atomic Energy Commission (Commissariat à l’énergie atomique et aux énergies alternatives, CEA). It describes how measurements were carried out and digital models were then built, enabling the events to be better understood. The tsunami left around 200,000 dead, and whether a repetition of such terrible consequences can be avoided remains an open question.

1.1.3.5. Simulation of the impossible or prohibited

In some cases, a phenomenon or activity cannot be reproduced experimentally. A tsunami or a tornado can be reproduced on a small scale, but not, for example, a nuclear explosion.

On September 24, 1996, 44 states, including France, signed the Comprehensive Test Ban Treaty (CTBT). By August 2008, almost 200 nations had signed. By signing the treaty and then ratifying it 2 years later, France committed itself to carrying out no further nuclear weapons tests. Nevertheless, the treaty ruled out neither nuclear deterrence nor changes to the French arsenal. How, though, can progress be made without tests?

For this reason, in 1994, the CEA, and more specifically its directorate of military applications, launched the simulation program. Planned to last 15 years, at a total cost of approximately 2 billion euros, the program consists of three main components:

– An X-ray radiography device, Airix, operational since 2000, produces images of the implosion of a small quantity of fissionable material. Implosion is the event that allows a nuclear charge to reach critical mass and trigger a chain reaction and detonation. Of course, as these experiments are carried out on very small quantities, there is no explosion. To measure these phenomena over a duration of 60 ns (i.e. 60 billionths of a second), the most powerful X-ray generator in the world (20 MeV) was developed.

– The megajoule laser (LMJ) allows thermonuclear micro-reactions to be triggered. It was under construction near Bordeaux at the time of writing. The LMJ reproduces the conditions of a miniature thermonuclear explosion by focusing 240 laser beams, with a total energy of 2 million joules, on a deuterium-tritium target. The project is due for completion in 2012.

– The TERA supercomputer has been operational since 2001 and is regularly updated. It is one of the most powerful computing centers in Europe. TERA-10, the 2006 version, included more than 10,000 processors, giving a total power of 50 teraflops (50 trillion floating-point operations per second). It was ranked number 7 on the global list of supercomputers (according to the “Top 500 list”, www.top500.org).

Moreover, to provide the necessary data for the simulation program (among other things), President Jacques Chirac took the decision in 1995 to re-launch a program of nuclear tests in the Pacific, a decision which had major consequences for the image of France with other countries.

This gives an idea of the scale of the largest French simulation program of all time, which aims to virtualize a now prohibited activity. In this case, simulation is essential, but it is neither simple to put into operation nor cheap.

1.1.4. Can we do without simulation?

Simulation is a tool of fundamental importance in systems engineering. We should not, however, see it as a “magic solution”, a miraculous program that answers all our problems; far from it.

Simulation, while presenting numerous advantages, is not without its problems. What follows is a selection of the principal issues.

– Simulation requires building a model, which can be difficult, and defining its parameters. If the system being modeled does not yet exist, selecting parameters can be tricky. They may need to be based on extrapolations from existing systems, with a certain risk of error.

– The risk of error is considerably reduced by an adequate verification and validation process. However, processes of this kind are costly, although, as with any formalized quality control process, they do provide considerable benefits.

– In cases where a system is complex, the model is generally complex too, and its construction, as well as the development of the simulation architecture, requires the intervention of highly specialized experts. Without this expertise, there is a serious risk of failure, for example, in choosing an unstable, invalid, or low-performing model.

– The construction of a model and its implementation in a simulation are expensive and time consuming, factors which may make simulation inappropriate if a rapid response is required, unless a pre-existing library of valid and ready-to-use models is available in the simulation environment.

– The implementation of a complex model can require major IT infrastructures: note that the world’s most powerful supercomputers are mainly used for simulations.

– Correctly using simulation results is often not an easy task. Stochastic systems are a case in point, and they occur fairly frequently when studying a system of systems. When such a system is simulated, results vary from one run to another, so data from several dozen runs of the simulation are sometimes required before reliable results can be obtained.
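A small Monte Carlo sketch in Python can illustrate why many runs are needed. The model is hypothetical: each run simulates 100 independent missions, each of which succeeds with probability 0.7. What matters is the procedure. We repeat the run with independent random draws, then report the mean together with an approximate 95% confidence interval. The half-width here uses the normal approximation (factor 1.96). For only a handful of runs, a Student t quantile would be more appropriate and would give a wider interval.

```python
import random
import statistics


def run_once(rng: random.Random) -> float:
    """One run of a hypothetical stochastic model: success rate over 100 missions (p = 0.7)."""
    return sum(rng.random() < 0.7 for _ in range(100)) / 100


def replicate(n_runs: int, seed: int = 1) -> tuple[float, float]:
    """Mean over n independent runs and approximate 95% CI half-width (normal approximation)."""
    if n_runs < 2:
        raise ValueError("at least two runs are needed to estimate the spread")
    rng = random.Random(seed)  # fixed seed so the experiment is reproducible
    results = [run_once(rng) for _ in range(n_runs)]
    mean = statistics.mean(results)
    half_width = 1.96 * statistics.stdev(results) / n_runs ** 0.5
    return mean, half_width


if __name__ == "__main__":
    for n in (3, 10, 50):
        m, h = replicate(n)
        print(f"{n:>3} runs: mean = {m:.3f} +/- {h:.3f}")
```

The half-width shrinks roughly in proportion to 1/√n, so quadrupling the number of runs only halves the uncertainty. This is why several dozen runs are often required before the results can be trusted.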

Other methods exist that can help resolve problems without resorting to simulation. These methods are generally specific to certain types of problem but can provide satisfactory results.

After all, 100 years ago, we got along without simulation. Admittedly, systems were simple (“non-complex”) and, for the most part, designed empirically, with large safety margins in their specifications. Reliability was considerably lower than it is today. Nevertheless, one technique used then is still frequently encountered: real tests on models or prototypes, that is, the construction of a physical model of the system. For example, we could build a scale model of an airplane and put it in a wind tunnel to study the way the air moves around it. From this, we can deduce the behavior of the full-scale aircraft in the same circumstances. This scaling down is not without its problems, as physical phenomena do not always evolve linearly with the scale of the model, but the theoretical process is relatively well understood.

On the other hand, the uses of this kind of model are limited. Such models cannot accomplish missions, and a wind tunnel cannot reproduce all possible flight situations without prohibitively expensive investment in the installation. Flying models can be built, for example, NASA’s X-43A, a 3.65 m long unpiloted aircraft equipped with a scramjet engine and able to fly at Mach 10. Of course, numerous simulations were used to reach this level of technological achievement. Nevertheless, the time came when it was necessary to move to a real model, because the results of the simulations needed to be validated in a little-understood domain: air-breathing hypersonic flight. Moreover, from a marketing point of view, a model accomplishing real feats (the model in question has a number of entries in the Guinness Book of Records) is much easier to “sell” to the public and to backers. A computer simulation is much less credible to lay people, and even to some experts.

Models are therefore useful, even essential, but can also be expensive and cannot reproduce all the capabilities of the system. A prototype would go further toward reproducing the abilities of the real system, but it is even more expensive: each of the four Rafale prototypes cost much more than a production aircraft, built in larger numbers.

Using models, we are able to study certain aspects of the physical behavior of the system in question. The situation is different if the system under study is an organization, for example, the logistics of the Airbus A380 or the projection of armed forces on a distant, hostile territory. There are still techniques and tools other than simulation which can be used to optimize organizations, for example, constraint programming or expert systems, but their use is limited to certain specific problems.

For the qualification of on-board computer systems and communication protocols, mathematical formal systems (formal methods), associated with ad hoc languages (Esterel, B, LOTOS, VHDL, and so on), are used to obtain “proof” of the validity of system specifications (completeness, coherence, and so on). These methods are effective, but somewhat cumbersome, and using them requires a certain level of expertise. Nor can they claim to be universal.

Finally, simulation does not remove the value of collaborative working and of drawing on the expertise of those involved with the system throughout its life. This is the approach of the technico-operational laboratory (laboratoire technico-opérationnel, LTO) of the French Ministry of Defense, a major actor in the development of systems of systems for defense, which we shall discuss in Chapter 8. When dealing with a requirement, the LTO begins with a period of reflection on the subject by a multi-disciplinary group of experts in a workgroup laboratory (laboratoire de travail en groupe, LTG). Simulation is used as a basis for reflection and to evaluate different architectural hypotheses. The LTO is a major user of defense simulation, but it has also shown that by channeling the creativity and analytical skills of a group of experts with rigorous scientific methods, high-quality results can be obtained more quickly and at lower cost than by using simulation.

Simulation, then, is an essential tool for engineering complex systems and systems of systems, but it is not a “magic wand”: it comes with costs and constraints, but, used intelligently and appropriately, it can produce high-quality results.

1.2. History of simulation

Simulation is often presented as a technique which appeared at the same time as computer science. This is not the case; its history does not begin with the appearance of computers. Although the formalization of simulation as a separate discipline is recent and, indeed, unfinished, it has existed for considerably longer, and the establishment of a technical and theoretical corpus took place progressively over several centuries.

The chronology presented here does not claim to be exhaustive; we have had to be selective, but we have tried to illustrate certain important steps in the technical evolution and uses of simulation. It is in the past that we find the keys to the present: in the words of William Shakespeare, “what’s past is prologue”.

1.2.1. Antiquity: strategy games

The first models can be considered to date from prehistoric times. Cave paintings, for example, were effectively idealized representations of reality. Their aim, however, was seemingly not simulation, so we shall concentrate on the first simulations, developed in a context which was far from peaceful.

Even before the golden age of Pericles in Greece and the conquests of Alexander the Great, a sophisticated art of war developed in the East. Primitive battle simulations were developed as tools for teaching military strategy and to stimulate the imagination of officers. Thus, in China, in the 6th Century BC, the general and war philosopher Sun Tzu, author of a work on the art of war which remains a work of reference today, supposedly created the first strategy game, Wei Hei. The game consisted of capturing territories, as in the game of go, which appeared around 1200 BC. The game Chaturanga, which appeared in India around 500 BC, evolved in Persia to become the game of chess, in which pieces represent different military categories4 (infantry, cavalry, elephants, and so on). According to the Mahâbhârata, a traditional Indian text written around 2,000 years ago, a large-scale battle simulation took place during this period with the aim of evaluating different tactics.

Around 30 AD, the first “sand tables” appeared for use in teaching and developing tactics. Using a model of the terrain and rules of varying complexity, these tables allowed combat to be simulated. Intervisibility (whether two points can see each other over the terrain) was determined using a string. This technique remained in use for two millennia and is still used in a number of military academies and in ever-popular figurine-based war games.
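Stretching a string between two figurines is, in effect, a test of whether the straight sight line between observer and target stays above the terrain along its whole length. The Python sketch below applies the same test to a digital terrain. It assumes the terrain is stored as a grid of heights (one value per cell) and that observer and target stand a hypothetical 2 m above the ground; the function name and parameters are illustrative.

```python
import math


def line_of_sight(heights, observer, target,
                  eye_height=2.0, target_height=2.0, samples_per_cell=4):
    """Return True if `target` is visible from `observer` over a height grid.

    heights: list of rows, heights[row][col] = ground height in metres.
    observer, target: (row, col) cell indices.
    Like a taut string, the sight line is straight; it is sampled at
    regular intervals and compared with the ground height of the cell
    under each sample (nearest-cell lookup, a deliberate simplification).
    """
    (r0, c0), (r1, c1) = observer, target
    z0 = heights[r0][c0] + eye_height
    z1 = heights[r1][c1] + target_height
    n = max(1, math.ceil(math.hypot(r1 - r0, c1 - c0) * samples_per_cell))
    for i in range(1, n):
        t = i / n
        row = round(r0 + t * (r1 - r0))
        col = round(c0 + t * (c1 - c0))
        sight_z = z0 + t * (z1 - z0)      # height of the "string" here
        if heights[row][col] > sight_z:   # terrain rises above the string
            return False
    return True


if __name__ == "__main__":
    ridge = [[0.0, 0.0, 10.0, 0.0, 0.0] for _ in range(5)]
    print(line_of_sight(ridge, (2, 0), (2, 4)))   # the ridge blocks the view
```

Sampling with a nearest-cell lookup keeps the sketch short; a production terrain model would typically interpolate heights between grid points.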

1.2.2. The modern era: theoretical bases

The Renaissance in Europe was a time of great scientific advances and a new understanding of the real world. Scholars became aware that the world is governed by physical laws; little by little, philosophy and theology gave way to mathematics and physics in the quest to understand the workings of the universe. Knowledge finally advanced beyond the discoveries of the Greek philosophers of the 5th Century BC. Mathematics, in particular, developed rapidly over the course of three centuries. Integral calculus, matrices, algebra, and so on provided the mathematical basis for the construction of a theoretical model of the universe. Copernicus, Galileo, Descartes, Kepler, Newton, Euler, Poincaré, and others provided the essential elements for the development of equations to express physical phenomena, such as the movement of planets, the trajectory of a projectile, the flow of fluids, and chaotic phenomena. The universe thus became predictable and could be modeled. In 1855, the French astronomer Urbain Le Verrier, already famous for having discovered the existence of the planet Neptune by calculation, demonstrated that, with access to the corresponding meteorological data, the storm that caused the Franco-British naval debacle in the Black Sea on November 14, 1854 (during the Crimean War) could have been predicted. Following this, Le Verrier created the first network of weather stations and thus founded modern meteorology. Today, weather forecasting is one of the most publicly visible uses of simulation.

In parallel, the military began to look beyond chess and to envisage more concrete operational applications of simulation. As early as 1664, the German Christopher Weikhmann modified the game of chess to include around 30 pieces more representative of contemporary troops, thus inventing the Koenigsspiel. In 1780, another German, Helvig, invented a game in which each player had 120 pieces (infantry, cavalry, and artillery), played on a board of 1,666 squares of various colors, each representing a different type of terrain. The game enjoyed a certain success in Germany, Austria, France, and Italy, where it was used to teach basic tactical principles to young noblemen. In 1811, Baron von Reisswitz, advisor at the Prussian Court, developed a battle simulation game known as Kriegsspiel. Prince Friedrich Wilhelm III was so impressed that he had the game adopted by the Prussian army. Kriegsspiel was played on a table covered with sand, using wooden figurines to represent different units. There were rules governing movements and the effects of terrain, and the results of engagements were calculated using resolution tables. Two centuries later, table-based war games using figurines operate on the same principles. The computer-based war games that dominate the field nowadays have made the sand table virtual, but, once again, the basic principles are the same. These games allowed different tactical hypotheses to be explored with ease and permitted greater innovation in matters of doctrine. The German Emperor Wilhelm I believed that Kriegsspiel played a significant role in the Prussian victory against France in 1870. During the same period, the game was also used in Italy, Russia, the United States, and Japan, mainly in an attempt to predict the outcome of battles. The results, however, were not always reliable.
Thus, before World War I, the Germans used a variant of Kriegsspiel to predict the outcome of a war in Europe, but they did not consider several decisive actions by the allied forces which took place in the “real” war. The game was also used by the Third Reich and the Japanese in World War II. Note, however, that the results of simulations were not always considered by the general staff. Kriegsspiel predicted that the battle of Midway would cost the Japanese two aircraft carriers. The Japanese continued and actually lost four, after which their defeat became inevitable. It is said that, during the simulation, the Japanese player refused an aircraft carrier maneuver from the player representing America, canceling their movements; the real battle was, in fact, played out around the use of aircraft carriers and on-board aircraft. This anecdote illustrates a problem that may be encountered in the development of scenarios: the human factor, where operators may refuse to accept certain hypotheses. In the 1950s and 1960s, the US Navy “played” a number of simulations of conflicts with the Soviet forces and their allies. In these simulations, it was virtually forbidden to sink an American aircraft carrier; this would show their vulnerability and provide arguments for the numerous members of Congress who considered these mastodons of the sea to be too expensive and were reluctant to finance their development as the spearhead of US maritime strategy. It is, however, clear that in case of conflict, the NATO aircraft carriers would have been a principal target for the aero-naval forces of the Warsaw Pact.

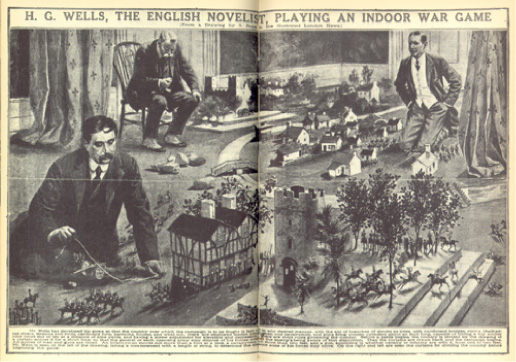

In 1916, the fields of war games and mathematics met when F.W. Lanchester published a dynamic theory of combat which, notably, allowed losses during an engagement (attrition) to be calculated. Almost a century later, Lanchester’s laws are still widely used in war games (Figure 1.2).
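Lanchester's best-known result, the square law for aimed fire, states that each side loses units at a rate proportional to the size of the opposing force: dA/dt = -βB and dB/dt = -αA, where α and β are the per-unit effectiveness of sides A and B. The quantity αA² - βB² then remains constant throughout the engagement, which gives the number of survivors in closed form. The Python sketch below integrates the equations step by step and checks the result against that closed form. The force sizes and effectiveness values are illustrative assumptions.

```python
import math


def lanchester_square(a0, b0, alpha, beta, dt=0.001, t_max=1000.0):
    """Integrate Lanchester's square law with explicit Euler steps.

    dA/dt = -beta * B    (A's losses grow with B's strength)
    dB/dt = -alpha * A   (B's losses grow with A's strength)
    Stops when one side is annihilated or t_max is reached.
    Returns (elapsed time, survivors of A, survivors of B).
    """
    a, b, t = float(a0), float(b0), 0.0
    while a > 0 and b > 0 and t < t_max:
        # Both updates use the strengths at the start of the step.
        a, b = a - beta * b * dt, b - alpha * a * dt
        t += dt
    return t, max(a, 0.0), max(b, 0.0)


def square_law_survivors(a0, b0, alpha, beta):
    """Closed form from the invariant alpha*A**2 - beta*B**2."""
    k = alpha * a0 ** 2 - beta * b0 ** 2
    if k > 0:
        return "A", math.sqrt(k / alpha)
    if k < 0:
        return "B", math.sqrt(-k / beta)
    return "draw", 0.0


if __name__ == "__main__":
    # Hypothetical engagement: 1,000 units against 500 of equal quality.
    print(lanchester_square(1000, 500, 0.01, 0.01))
    print(square_law_survivors(1000, 500, 0.01, 0.01))
```

With equal unit effectiveness, the larger force wins with about 866 survivors (the square root of 1,000² - 500²), not the 500 that a one-for-one exchange would suggest. Under the square law, fighting strength grows with the square of numbers.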

Figure 1.2. Image from Making and Collecting Military Miniatures by Bob Bard (1957), with an original illustration published in the Illustrated London News [DOR 08]

Before the movement toward computerization, combat simulation became increasingly popular. In 1952, Charles S. Roberts invented a war game based on a terrain divided using a rectangular grid. Cardboard tokens carried the symbols of various units (infantry, artillery, armor, and so on), and movements and combats were controlled by rules. This game, called Tactics, was a great success, and Roberts founded the Avalon Hill games company. From its first appearance, the commercial war board game enjoyed growing success, and large-scale expansion took place in the 1970s. Over 200,000 copies of Panzerblitz, an Avalon Hill game, were sold.

1.2.3. Contemporary era: the IT revolution

1.2.3.1. Computers

Scientific advances allowed considerable progress in the modeling of natural phenomena in the 17th and 18th Centuries, but a major obstacle remained: the difficulty of calculation, particularly in producing the logarithm and trigonometric tables necessary for scientific work. At the time, the method of finite differences was used (approximating each function by a polynomial expansion and building up the table by successive additions), which involved large amounts of addition of decimal numbers. Entire departments, each with dozens of workers, were assigned to this task in numerous organizations. Furthermore, each calculation was usually carried out twice to detect the inevitable errors. Automating calculation would therefore be of considerable scientific and economic interest. The work of Pascal and Leibniz, among others, led to the construction of calculating machines, but these required tedious manual intervention and remained prone to error. A means of carrying out a complete algorithm automatically was needed.
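The method rests on a simple property: for a polynomial of degree n, the n-th finite differences between successive table entries are constant. Once the first value and its first n differences are known, every further entry follows from additions alone, which made the work both tedious for human calculators and well suited to mechanization. The Python sketch below illustrates the principle; the test polynomial x² + x + 41 is only an example.

```python
def tabulate_by_differences(initial_differences, count):
    """Tabulate a polynomial at equally spaced points using only additions.

    initial_differences: [f(x0), Δf(x0), Δ²f(x0), ..., Δⁿf(x0)]
    for a polynomial of degree n (so the last difference is constant).
    Returns [f(x0), f(x0 + h), ..., f(x0 + (count - 1) h)].
    """
    diffs = list(initial_differences)
    table = []
    for _ in range(count):
        table.append(diffs[0])
        # Each column adds in the (not yet updated) column to its right.
        for i in range(len(diffs) - 1):
            diffs[i] += diffs[i + 1]
    return table


if __name__ == "__main__":
    # f(x) = x**2 + x + 41: f(0) = 41, Δf(0) = 2, Δ²f = 2 (constant).
    print(tabulate_by_differences([41, 2, 2], 6))   # [41, 43, 47, 53, 61, 71]
```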

In 1833, Charles Babbage invented an “analytical engine”, which contained most of the elements present in a modern computer: a calculating unit, memory, registers, a control unit, and mass memory (in the form of a punched card reader). Although attempts to build it failed, it was proved a century later that the invention would have worked, despite some questionable design choices. Babbage’s collaborator, Ada Lovelace, invented the first programming language. A few years later, George Boole invented the algebra that bears his name, the basis of modern computer logic. The seeds had been sown, but the technique took around a hundred years to bear fruit.

The first calculators were not built until the 1940s, for example, John Atanasoff’s electronic calculator (Iowa State College). Although it contained memory and logic circuits, it could not be programmed. There was also Colossus, built in the United Kingdom and used by cryptologists working under the direction of Alan Turing, whose theoretical work on the foundations of computer programs predated the Harvard Mark II by 10 years.

The first true modern computer, however, was the ENIAC (Figure 1.3). The ENIAC, with some 18,000 vacuum tubes, could carry out an addition in 200 µs and a multiplication in 2,800 µs (i.e. approximately 1,000 operations per second). When it was launched in 1946, it broke down every 6 h, but despite these problems, the ENIAC represented a major advance, not only in calculating speed, incomparably higher than that of electromechanical machines, but also because it was programmable. This power was initially used to calculate firing tables for the US Army, then rapidly made available for simulation, notably in the development of nuclear warheads, a very demanding activity in terms of repetitive complex calculations. This work drew on mathematicians such as Ulam, von Neumann, and Metropolis who, in the course of the Manhattan Project, formalized statistical techniques for studying random phenomena by simulation (the Monte Carlo method). Note that the Society for Computer Simulation, an international not-for-profit association dedicated to the promotion of simulation techniques, was founded in 1952.
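The Monte Carlo method estimates a quantity by repeating a random experiment many times and counting the outcomes. The Python sketch below uses a deliberately simple model chosen so that the estimate can be checked against an exact answer: shots are aimed at the center of a circular target, with independent normal aiming errors of equal standard deviation on each axis. The model and function names are illustrative assumptions.

```python
import math
import random


def hit_probability_mc(radius, sigma, trials=100_000, seed=1):
    """Monte Carlo estimate of the probability of landing within `radius`
    of the aim point, assuming independent normal aiming errors with
    standard deviation `sigma` on each axis (an illustrative model)."""
    rng = random.Random(seed)          # seeded for reproducibility
    hits = 0
    for _ in range(trials):
        x = rng.gauss(0.0, sigma)
        y = rng.gauss(0.0, sigma)
        if x * x + y * y <= radius * radius:
            hits += 1
    return hits / trials


def hit_probability_exact(radius, sigma):
    """Exact value for the same model: the miss distance follows a
    Rayleigh distribution, so P = 1 - exp(-radius**2 / (2 * sigma**2))."""
    return 1.0 - math.exp(-radius ** 2 / (2.0 * sigma ** 2))


if __name__ == "__main__":
    print(hit_probability_mc(1.0, 1.0), hit_probability_exact(1.0, 1.0))
```

The statistical error of such an estimate shrinks only as 1/√N with the number of trials N, which is one reason Monte Carlo studies were so demanding in computing power.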

In the first few years, the future of the computer was unclear. Some doubted its potential for commercial success. For simulation, notably the integration of systems of differential equations, another path was opening up: that of the analog computer. Analog computers were used to model physical phenomena by analogy with electric circuits, physical quantities being represented by voltages. Groups of components could carry out operations such as multiplication by a constant, integration with respect to time, or multiplication or division of one variable by another. Analog computers reached the height of their success in the 1950s and 1960s, but were rendered completely obsolete by digital computers in the 1970s. In France, in the 1950s, the Ordnance Aerodynamics and Ballistics Simulator (Simulateur Aérodynamique et Balistique de l’Armement, SABA) analog computer was used at the Ballistics and Aerodynamics Research Laboratory (Laboratoire de Recherches Balistiques et Aérodynamiques, LRBA) in Vernon to develop the first French surface-to-air missile, the PARCA (projectile autopropulsé radioguidé contre avions, self-propelled radio-guided anti-aircraft projectile).
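A typical analog set-up solves a differential equation by feedback: two integrators in series produce velocity and then position from acceleration, and coefficient units (multiplication by a constant) feed these back into a summing amplifier that forms the acceleration. The Python sketch below emulates such a set-up for a damped oscillator, x'' = -2ζωx' - ω²x. It is a digital imitation, not how the hardware worked: an analog machine integrated continuously, whereas here a small fixed time step dt stands in for continuous time. The equation and parameter names are illustrative assumptions.

```python
import math


def analog_patch(omega, zeta, x0, v0, t_end, dt=1e-4):
    """Emulate an analog-computer set-up for x'' = -2*zeta*omega*x' - omega**2*x.

    summing amplifier: forms the acceleration from the fed-back signals
    integrator 1:      acceleration -> velocity
    integrator 2:      velocity -> position
    Returns (x, v) at time t_end (to within one step dt).
    """
    x, v, t = x0, v0, 0.0
    while t < t_end:
        a = -2.0 * zeta * omega * v - omega ** 2 * x   # summing amplifier
        v += a * dt                                     # integrator 1
        x += v * dt                                     # integrator 2
        t += dt
    return x, v


if __name__ == "__main__":
    # Undamped case: after one full period the "voltage" x returns close to 1.
    print(analog_patch(1.0, 0.0, 1.0, 0.0, 2 * math.pi))
```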

Digital computers, as we know, emerged victorious, but their beginnings were difficult. On top of their cost and large size, programming them was a delicate matter: at best, a (not particularly user-friendly) teleprinter could be used, and at worst, punched cards or even, for the oldest computers, switches or selector switches to enter information coded in binary or decimal notation. In the mid-1950s, major progress was made with the appearance of the first high-level languages, including FORTRAN in 1957. Oriented toward scientific calculation, FORTRAN was extremely popular in simulation and remains, 50 years later, widely used for scientific simulations. One year later, in 1958, the first algorithmic language, ALGOL, appeared. This language and its later versions (Algol 60, Algol 68) are important because, although never as popular as COBOL or FORTRAN, they inspired very popular languages such as Pascal and Ada. The latter was widely used by the military and the aerospace industry from the mid-1980s. In the 1990s, object-oriented languages (Java, C#, and so on) took over from the descendants of Algol, while retaining a number of their characteristics. Smalltalk is often cited as the first object-oriented language, but this is not the case: the very first was Simula, in 1967, an extension of Algol 60 designed for discrete event simulation.
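At the core of a discrete event simulation, the kind Simula was designed for, is an event list ordered by time. The simulation clock jumps from one event to the next rather than advancing in fixed steps, and processing an event may schedule new ones. The sketch below, written in Python rather than Simula, models a single-server, first-come-first-served queue with arrival and service times supplied by the caller. The model and function name are illustrative assumptions.

```python
import heapq
import itertools
from collections import deque


def simulate_single_server(arrivals, service_times):
    """Event-driven simulation of a single-server FIFO queue.

    arrivals: arrival time of each customer; service_times: service
    duration of each customer. Returns each customer's waiting time
    (time spent queuing before service starts).
    """
    seq = itertools.count()   # tie-breaker for events at the same time
    events = [(t, next(seq), "arrive", i) for i, t in enumerate(arrivals)]
    heapq.heapify(events)     # the event list, ordered by time
    waiting = deque()
    busy = False
    waits = [0.0] * len(arrivals)
    while events:
        clock, _, kind, cust = heapq.heappop(events)   # jump to next event
        if kind == "arrive":
            waiting.append(cust)
        else:                                          # "depart"
            busy = False
        if not busy and waiting:                       # start next service
            nxt = waiting.popleft()
            waits[nxt] = clock - arrivals[nxt]
            busy = True
            heapq.heappush(events, (clock + service_times[nxt],
                                    next(seq), "depart", nxt))
    return waits


if __name__ == "__main__":
    print(simulate_single_server([0, 1, 2], [3, 3, 3]))   # [0, 2, 4]
```

Because nothing happens between events, the clock never wastes effort on idle intervals, which is the main advantage of this approach over fixed time steps for systems such as queues, logistics chains, or networks.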

The 1960s saw a veritable explosion in digital simulation, which continues to this day. 1965 was an important year, with the commercialization of the PDP-8 minicomputer by the Digital Equipment Corporation. Because of its “reasonable” size and cost, thousands of machines were sold, marking a step toward the democratization of computing and of means of scientific calculation, which spread into universities and businesses. This trend was reinforced by the spread of workstations across research departments in the 1980s, followed by personal computers (PCs). This democratization not only increased access to means of calculation but also allowed greater numbers of researchers and engineers to work on and advance simulation techniques.

These developments also took place at the application level. In 1979, the first spreadsheet program, VisiCalc, was largely responsible for the success of the first microcomputers in businesses. This electronic worksheet carried out simple simulations and data analysis (by iteration), notably in the world of finance. VisiCalc has long since disappeared from the market, but its successors, such as Microsoft Excel, have become basic office tools and, seemingly, the most widespread simulation tool of our time.

1.2.3.2. Flight simulators and image generation

During the 1960s–1970s, although computers became more widespread and more accessible, simulations retained the form of strings of data churned out by programs, lacking concrete physical and visual expression.

Two domains were the main drivers behind the visual revolution in computing: piloted simulation, initially, followed by video games (which, incidentally, are essentially simulations in many cases). Learning to fly an airplane has always been difficult and dangerous, especially in the case of single-seat aircraft. How can the pupil have sufficient mastery of piloting before their first flight without risk of crashing?

The first flight “simulators” were invented in response to this problem. The “Tonneau” was invented by the Antoinette company in 1910 (Figure 1.4): a cradle on a platform with a few cables allowed students to learn basic principles. In the United States, the Sanders Teacher used an airplane on the ground. In 1928, in the course of his work on blind flying, Lucien Rougerie developed a “ground training bench” for learning to fly on instruments. This system is one of two candidates for the title of first flight simulator. The other was developed by the American Edward Link in the late 1920s. Link, an organ manufacturer with a passion for aviation, patented and then commercialized the Link Trainer, a simulator based on electro-pneumatic technology. Its main innovation was a moving cabin, driven by four bellows for pitch and roll and an electric motor for yaw, under control logic that followed the reactions of the aircraft. The instructor could follow the student’s progress using a replica of the control panel and a plotter, and could also set parameters for the influence of wind. The first functional units were delivered to the US Army Air Corps in 1934. The Link Trainer is considered to be the first true piloted simulator. The company still exists and continues to produce training systems.

These simulation-based training techniques became more important during WWII, a period of constant pressure to train large numbers of pilots. From 1940 to 1945, US Navy pilots trained on Link ANT-18 Blue Box simulators, of which no fewer than 10,000 were produced.

Simulators then became more realistic thanks to electronics. In the 1940s, analog computers were used to solve the equations of the airplane’s flight. In 1948, a B377 Stratocruiser simulator built by Curtiss-Wright was delivered to Pan Am. The first of its kind to be used by an airline, it allowed a whole crew to be trained in a cockpit with fully functional instruments, but two fundamental elements were absent: reproduction of the airplane’s movement, and exterior vision, cockpits of the time still being blind. In the 1950s, mechanical elements were added to certain simulators to make the cabin mobile and thus reproduce, at least to some degree, the sensations of flight. The visual problem was first addressed by using a video camera moving over a model of the terrain and transmitting the image to a video screen in the cockpit. In France, the company Le Matériel Téléphonique (LMT) commercialized systems derived from the Link Trainer from the 1940s. The first French-designed electronic simulator was produced by the company in 1961 (a Mirage III simulator). LMT has continued its activities in the field and now operates under the name Thales Training & Simulation.

1.2.3.3. From simulation to synthetic environments

The bases of simulation were defined during the period 1940–1960. These fundamental principles remain the same today. Nevertheless, the technical resources used have evolved, and not just in terms of brute processing power: new technologies have emerged, creating new possibilities in the field of simulation. Particular examples of this include networks and image generators.

The computers of the 1950s had nothing like the multimedia capabilities of modern computers. At the time, operators communicated with the machine using teleprinters. Video screens and keyboards eventually replaced teleprinters, but until the end of the 1970s, most man-machine interfaces were purely textual, and data were output as lists of figures.

Some, however, perceived the visual potential of computers very early on. In 1963, William Fetter, a Boeing employee, created a three-dimensional (3D) wireframe model of an airplane to study take-offs and landings. He also created First Man, a virtual pilot for use in ergonomic studies. Fetter coined the term “computer graphics” to describe his work. In 1967, General Electric produced a color airplane simulator; its graphics and animation were relatively crude, and it required considerable resources to run. Full industrial use of computer-generated images did not really begin until the early 1970s, with David Evans and Ivan Sutherland’s Novaview simulator, which made use of one of the first image generators. The graphics were, admittedly, basic monochrome wireframes, but a visual aspect had finally been added to a simulator. Evans & Sutherland, the company they had founded, was to become a global leader in the field of image generation and visual simulation. In 1974, one of their collaborators, Ed Catmull, invented Z-buffering, a technique that improves the management of 3D visualization, notably the handling of hidden parts. Two years later, this technique was combined with another new technique, texture mapping, invented by Jim Blinn. This enabled considerable progress in the realism of computer-generated images by pinning a bitmap image (e.g. a photograph) onto the surface of a 3D object, giving the impression that the object is much more detailed than it really is. For example, by pinning a photograph of a real building onto a simple parallelepiped, all the windows and architectural features of the building can be seen, while only a simple 3D volume needs to be managed. Although processing power remained insufficient and software was very limited, the foundations of computer-generated imagery were already in place. At the beginning of the 1980s, NASA developed the first experimental virtual reality systems, and interactive visual simulation began to spread.
Although virtual reality did not achieve the commercial success anticipated, it had a significant impact on research into the man-machine interface.
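The Z-buffering technique mentioned above fits in a few lines. Alongside the image, a depth buffer stores, for every pixel, the distance of the closest surface drawn so far, and a new sample overwrites the pixel only if it is closer. Surfaces can therefore be drawn in any order, and hidden parts are discarded without sorting. The Python sketch below assumes surfaces have already been broken down into (x, y, depth, colour) samples. For brevity, the hypothetical `rectangle` helper produces flat rectangles at constant depth, whereas a real renderer interpolates depth across each triangle.

```python
import math


def zbuffer_render(width, height, fragments, background="."):
    """Resolve visibility with a depth buffer (Z-buffer).

    fragments: iterable of (x, y, z, colour) samples produced by
    rasterizing the scene's surfaces, in any order; a smaller z means
    closer to the viewer. Each pixel keeps the closest sample seen so far.
    """
    depth = [[math.inf] * width for _ in range(height)]
    frame = [[background] * width for _ in range(height)]
    for x, y, z, colour in fragments:
        if 0 <= x < width and 0 <= y < height and z < depth[y][x]:
            depth[y][x] = z          # remember the new closest depth
            frame[y][x] = colour     # and show that surface's colour
    return frame


def rectangle(x0, y0, x1, y1, z, colour):
    """Hypothetical helper: samples of a flat rectangle at constant depth."""
    return [(x, y, z, colour) for y in range(y0, y1) for x in range(x0, x1)]


if __name__ == "__main__":
    near = rectangle(0, 0, 4, 2, 1.0, "A")    # closer surface
    far = rectangle(2, 0, 6, 2, 5.0, "B")     # partly hidden behind it
    for row in zbuffer_render(6, 2, far + near):
        print("".join(row))
```

Drawing `far + near` or `near + far` produces the same image: the overlapping pixels always show the closer surface.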

During the 1980s and 1990s, another technology emerged, so revolutionary that some spoke of a “new industrial revolution”: networks. Arpanet, the ancestor of the Internet, had been launched at the end of the 1960s, but it was confined to a limited number of sites. Local area networks and the Internet brought networks into widespread use, linking humans and simulation systems.

In 1987, a distributed simulation network (SIMNET) was created to meet the US Army’s need for collective training (tanks and helicopters). This experimental system rapidly aroused interest and led, in 1989, to the first drafts of the DIS (Distributed Interactive Simulation) standard protocol for simulation interoperability, which became the IEEE 1278 standard in 1995 (see Chapter 7). Real-time and low-level, DIS was far from universal, but it was extremely successful and is still used today. DIS allowed cooperation between simulations. The community working on aggregate-level constructive simulations, such as war games, created its own non-real-time standard, ALSP (Aggregate Level Simulation Protocol), developed by the Mitre Corporation. Later, the US Department of Defense revised its strategy and imposed a new standard, HLA (High Level Architecture), in 1995, which became the IEEE 1516 standard in 2000. Distributed simulation rapidly found applications. In 1991, simulation (including distributed simulation through SIMNET) was used for the first time on a massive scale in preparing a military operation: the first Gulf War. France was not far behind: in 1994, two Mirage 2000 flight simulators, one in Orange and the other in Cambrai, were able to work together via DIS and a simple Numeris link (the French ISDN service, réseau numérique à intégration de services, RNIS). The following year, several constructive Janus simulations were connected via the Internet between France and the United States. 1996 saw the first large multinational simulation federation, with the Pathfinder experiment, repeated several times since. This ability to make simulation resources, real equipment, and information systems interoperate via networks opens the way to new capabilities, used, for example, in battle labs such as the technico-operational laboratory of the French Ministry of Defense.

An entire book could be written on the history of simulation, a fascinating meeting point of multiple technologies and innovations, but that is not our aim here. The essential points to remember are that simulation is the result of this convergence, that the subject is still in its youth, and that it will certainly evolve a great deal over the coming years and decades. In fact, we are only just beginning to pass from the era of “artisan” simulations to that of industrial simulation. Simulation, as described in this book, is therefore far from having achieved its full potential.

1.3. Real-world examples of simulation

1.3.1. Airbus

Airbus was created in 1970 as a consortium. Something of a wild bet at the outset, made on the back of the (at least technical) success of Concorde, it became a commercial success story, even overtaking Boeing in 2003, something no one would have dared imagine at the time. This success is linked to strong political will combined with solid technical competence, but these elements do not explain everything. Despite the inherent handicaps of a complex multinational structure, the consortium’s first airplane, the Airbus A300, entered service in 1974; its performance and low operating costs attracted large numbers of customers. The A300 incorporated numerous technological innovations, including the following:

– just-in-time manufacturing;

– a revolutionary wing design, with a supercritical profile and flight control surfaces of particularly refined aerodynamic design, one of the factors behind significant reductions in fuel consumption (the A300 consumed 30% less than the Lockheed TriStar, produced during the same period);

– autopilot usable from initial climb to final descent;

– wind shear protection;

– electronically controlled braking.

These innovations, to which others were added in later models (including the much-publicized two-pilot cockpit, in which the flight engineer’s tasks were automated), led to the commercial success of the aircraft, but also made the system more complex.

Figure 1.5. Continuous atmospheric wind tunnel from Mach 0.05 to Mach 1, the largest wind tunnel of its type in the world — A380 tests — (ONERA)

The engineers who created the Airbus consortium thus made extensive use of simulation: digital simulation, which was becoming more widespread despite the still very high cost of computers, and tests of models in wind tunnels (see Figure 1.5). This use of simulation, on a scale never before seen in commercial aviation, enabled Airbus to develop and build an innovative, competitive, and reliable product, and thus to gain a decisive advantage over the competition. Without simulation, Airbus would probably not have achieved this level of commercial success.

It would be difficult to provide an exhaustive list of all uses of simulation by Airbus and its sub-contractors. Nevertheless, the following list provides some examples:

– global performance simulation: aerodynamics, in-flight behavior of a design;

– technical simulation of functions: hydraulics, electricity, integrated modular avionics, flight controls;

– simulation of mechanical behavior of components: landing gear, fuselage, wings, pylon rigging structure;

– simulation of total cost of ownership;

– production chain simulation;

– interior layout simulation (for engineering, but also for marketing purposes);

– maintenance training simulator;

– training simulator for pilots.

The use of simulation by Airbus is unlikely to be reduced in the near future, especially in the current context of ever-increasing design constraints. The Advisory Council for Aeronautics Research in Europe (ACARE) defines major strategic objectives for 2020 in [EUR 01], with the aim of providing “cheaper, cleaner, and safer air transport”:

– more pleasant and better value journeys for passengers;

– reduction in atmospheric emissions;

– noise reduction;

– improvements in flight safety and security;

– increased capacity and efficiency of the air transport system.

These objectives clearly go beyond the engineering of a “simple” aircraft: they require the combined and integrated engineering of a system of systems (here, the air transport system [LUZ 10]). Achieving them will require large-scale investment, over 100 billion euros over 20 years according to [EUR 01], and modeling and simulation, like systems engineering as a whole, have an important role to play.

1.3.2. French defense procurement directorate

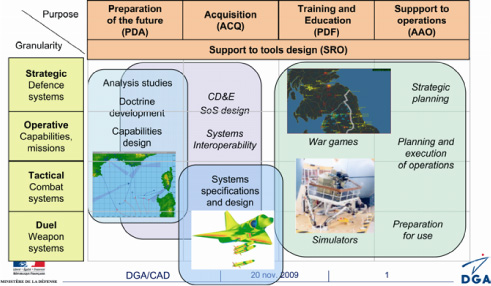

The DGA (Direction générale de l’armement, the French defense procurement directorate) provides an interesting example of simulation use. This agency, part of the French Ministry of Defense, exists principally to acquire systems for the armed forces. The DGA’s engineers therefore deal with technical and technico-operational issues throughout the lifecycle of a system. We find examples of simulation use at every step of system acquisition (which corresponds to the lifecycle of the system from the point of view of the contracting authority). Furthermore, the DGA uses a very wide variety of simulations (constructive, virtual, hybrid, and so on).

Figure 1.6 illustrates the different applications of simulation in the system acquisition cycle:

– Determine operational concepts: simulation allows “customers” and final users (the armed forces) to better express their high-level needs, for example, in terms of capability, by illustrating and testing concepts virtually (see Chapter 8).

– Provide levels of performance: it would be difficult to issue a contract for a system on the basis of a concept alone; an initial sizing is required to transform an operational need, for example, “resist the impact of a modern military charge”, into a requirement such as “resist an explosion on the surface of the system of a 50 kg charge of Semtex-type explosive”. Simulation can give an idea of the levels of performance necessary to achieve the desired effect.

– Measure operational efficiency: the next step is to re-insert these performance levels into the framework of an operational mission by “playing out” operational scenarios in which the new system can be evaluated virtually.

– Mitigate risk and specify: the system specifications must be refined, for example, by deciding what material to use for armoring the system to obtain the required resistance. The potential risks involved with these choices can then be evaluated: What will the mass of the system be? Will it be possible to motorize it? Will the system remain within the limits of maneuverability? Note that this activity usually falls under the authority of the system project manager.

– Mitigate risk regarding human factors: evaluate the impact of the operators on the performance of the system: Is there a risk of overtaxing the operator in terms of tasks or information? Might the operator reduce the performance of the system? For example, it would be useless to develop an airplane capable of accelerating at 20 g if the pilot can only withstand half of that.

– Evaluate the feasibility of technological solutions: this is an essential step in risk mitigation. It ensures that a technology can be integrated and performs well in the system framework. At this stage, we might notice, for example, that a network architecture based on a civil technology, 3G mobile telephony, poses problems: zones without coverage, highly variable bandwidth, possible disconnections, and so on. Conversely, we might confirm that the technology in question meets the expressed need in the intended framework of use.

– Optimize an architecture or function: once the general principle of an architecture has been validated, we can study means of optimizing it. In the case of “intelligent” munitions, that is, munitions capable of autonomous or assisted direction toward a target, we might ask at what stage in flight target detection should occur. If the sensor is used too early, there may be problems identifying or locking onto the correct target; if it is too late, the necessary margin for correcting the trajectory to hit the target will be absent.

– Facilitate the sharing and understanding of calculation results: the results of studies and simulations can be very difficult to interpret, for example, lists of several million numerical values recording dozens of different variables. Presenting these results through a more visually representative simulation, for example, a virtual prototype of the system, helps the analyst better understand the dynamic evolution of the system.

– Study system production and maintenance conditions: implemented by the project manager, manufacturing process simulations are used to specify, optimize, and validate manufacturing conditions (organization of the production line, work station design, incident management, and so on). Simulation can be applied to the whole production line, the entire logistics system (including supply flows for components and materials and delivery of the finished product), or a single work station (ergonomic and safety improvements). This activity also includes studies of maintenance operations, where virtual reality is used to check the accessibility of certain components.

– Prepare tests: tests of complex systems are themselves complicated, or even complex, to design and costly to implement. Simulation provides valuable help in optimizing tests, improving, for example, the determination of the qualification domain to cover, the finalization and validation of corresponding test scenarios, the test architecture, and the evaluation of potential risks. Simulation can also be used to interpret test results, providing a dynamic 3D representation of the behavior of the system based on the data captured during the test, which are voluminous and difficult to analyze.

– Supplement tests: the execution of tests allows us to acquire knowledge of a system for a given scenario, for example, a flight envelope. Simulation allows the system to be tested (virtually) beyond what can be tested in reality, whether because of budget or environmental constraints (e.g. pollution from a nuclear explosion), safety (risk to human life), or even confidentiality (emissions from an electronic warfare system or a radar can be captured from a distance and analyzed; moreover, they constitute a source of electromagnetic pollution). By carrying out multiple tests in simulation, the domain in which the system has been tested can be extended, or the same scenario can be repeated several times to evaluate reproducibility and make test results more statistically representative, thus increasing confidence in the results of qualification.

– Specify user-system interface: the use of piloted study simulators representing the system allows the ergonomics of the user-system interface for the initial system to be analyzed and improvements to be suggested to increase efficiency or adapt it to a system evolution (addition of functionalities).
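The idea of repeating the same scenario to make test results more statistically representative can be illustrated with a toy example. In the sketch below, `hypothetical_scenario` is an invented stand-in for one simulated test run (a nominal value of 2.0 plus Gaussian noise) and does not model any real system. The sketch replicates that run and reports the mean together with an approximate 95% confidence interval based on the normal approximation.

```python
import math
import random
import statistics


def hypothetical_scenario(rng: random.Random) -> float:
    """Invented stand-in for one simulated test run: returns a performance
    measure (e.g. a miss distance in meters) perturbed by random effects."""
    return 2.0 + rng.gauss(0.0, 0.5)


def replicate(n_runs: int, seed: int = 42) -> tuple[float, float]:
    """Repeat the same scenario n_runs times; return the mean and the
    half-width of an approximate 95% confidence interval."""
    rng = random.Random(seed)  # fixed seed so the experiment is reproducible
    results = [hypothetical_scenario(rng) for _ in range(n_runs)]
    mean = statistics.mean(results)
    half_width = 1.96 * statistics.stdev(results) / math.sqrt(n_runs)
    return mean, half_width


for n in (10, 100, 1000):
    m, h = replicate(n)
    print(f"{n:>5} runs: {m:.3f} +/- {h:.3f}")
```

The half-width shrinks roughly as $1/\sqrt{n}$, which is why multiplying simulated runs, something that is often impractical with real tests, increases confidence in the estimate.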

Beyond its role as direct support during the various phases of the program acquisition cycle, simulation is also used by the DGA to develop technical expertise, particularly in the context of project management, where technical mastery of the operation of a system is indispensable for working on its specifications and qualification. This mastery, however, is difficult to obtain without being the project manager or the final user. Simulation is also a tool for dialog between experts in collaborative engineering processes using a “board” of multidisciplinary teams, the eventual aim being to maintain and share virtual system prototypes between the various participants in the acquisition process (the simulation-based acquisition, or SBA, principle). Finally, modeling and simulation are seen as the main tools for mastering system complexity; at the DGA, simulation is attached to the “systems of systems” technical department.

1.4. Basic principles

In any scientific discipline, a precise taxonomy of the field of work is essential, and precision is critical in the choice of vocabulary. Unfortunately, simulation is spread across several scientific “cultures” and is generally considered a tool rather than a separate domain, so the same term will not always mean the same thing to different people. Furthermore, a large number of typologies of simulation exist. Although simulation is becoming increasingly organized, a certain amount of effort is still required to guarantee coherence between different areas and between different nationalities. In France, the absence of a national standard for simulation does not help, as English terms are not always translated the same way from one person to another.

There are, however, a number of documents that can be considered references in the field, and upon which the terminology used in this work will be based. Most are in English, as the United States is both the largest market for simulation and the principal source of innovation in the field. This driving role gives the United States a certain predominance in the orientation of the domain.

Before going any further, we shall explain what exactly is meant by system simulation.

1.4.1. Definitions

1.4.1.1. System

A system is a group of resources and elements, whether hardware or software, natural or artificial, arranged so as to achieve a given objective. Examples of systems include communication networks, cars, weapons, production lines, databases, and so on.

A system is characterized by

– its component parts;

– the relationships and interactions between these components;

– its environment;

– the constraints to which it is subjected;

– its evolution over time.

As an example, we shall consider the system of a billiard ball falling in the Earth’s gravitational field. This system is composed of a ball, and its environment is Earth’s atmosphere and gravity. The ball is subjected to a force that pulls it downwards and an opposing force in the form of air resistance.

1.4.1.2. Model

First and foremost, simulating, as we shall repeat throughout this work, consists of building a model, that is, an abstract construction that represents reality. This process is known as modeling. Note that, in English, the phrase “modeling and simulation” is encountered more often than the word “simulation” on its own.

A model can be defined as “any physical, mathematical, or other logical representation (abstraction) of a system, an entity, a process or a physical phenomenon [constructed for a given objective]” [DOD 95].

Note that this definition includes numerous types of models, not only computer ones. A model can take many forms, for example, a system of equations describing the trajectory of a planet, a set of rules governing the processing of an information flow, or a plastic model of an airplane. An interesting element of the definition is the fact that a model is “constructed for a given objective”: there is no single model for a system. A system model that is entirely appropriate for one purpose may be completely useless for another.

To illustrate this notion of relevance of the model, to which we will return later, we shall use the example of the billiard ball falling in the Earth’s gravitational field. Suppose that we want to know how long the fall will last with moderate precision (to the nearest second), that the release altitude $z_0$ is low (not more than a few hundred meters), and that the ball is subject to no influence other than gravity, $g$, which we can treat as a constant because of the small variation in altitude and the low level of precision required.

The kinematic equations of the center of gravity of the ball, released from rest and with acceleration and velocity counted positive downward, give $a(t) = g$ for the acceleration and $v(t) = gt$ for the velocity, from which we deduce that

$$z(t) = z_0 - \frac{1}{2} g t^2.$$
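As a minimal sketch of this constant-gravity model, the Python snippet below evaluates $a(t) = g$, $v(t) = gt$ and $z(t) = z_0 - \frac{1}{2} g t^2$ for a ball released from rest, and solves $z(t_f) = 0$, which gives $t_f = \sqrt{2 z_0 / g}$. The value $g = 9.81$ m/s² and the 100 m release altitude are illustrative assumptions; velocity is counted positive downward.

```python
import math

G = 9.81  # m/s^2, assumed constant (low release altitude)


def simple_model(t: float, z0: float, g: float = G) -> tuple[float, float, float]:
    """Return (a, v, z) at time t for a ball released from rest at altitude z0.

    Acceleration and velocity are counted positive downward; z is the altitude.
    """
    a = g                     # a(t) = g
    v = g * t                 # v(t) = g t
    z = z0 - 0.5 * g * t**2   # z(t) = z0 - g t^2 / 2
    return a, v, z


def fall_time_simple(z0: float, g: float = G) -> float:
    """Time at which z(t) = 0, i.e. t_f = sqrt(2 z0 / g)."""
    return math.sqrt(2.0 * z0 / g)


print(f"fall time from 100 m: {fall_time_simple(100.0):.2f} s")  # fall time from 100 m: 4.52 s
```

At the precision required here (the nearest second), this closed-form expression is all the model needs.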

The equations giving $a(t)$, $v(t)$, and $z(t)$ constitute a mathematical abstraction of the “billiard ball (falling in the Earth’s gravitational field)” system; this is therefore a model of the system. But are there other possible models? Without going too far, let us consider our aims and our hypotheses. Imagine now that we want to obtain the fall time correct to within 50 ms. To arrive at this level of precision, we must consider other factors, notably air resistance, in the form of a term representing fluid friction as a force opposing the fall, $f(v) = -av$. Writing $m$ for the mass of the ball, the equation of motion becomes $m \frac{dv}{dt} = mg - av$, and we obtain a new expression for the altitude as a function of time:

$$z(t) = z_0 - \frac{mg}{a}\,t + \frac{m^2 g}{a^2}\left(1 - e^{-at/m}\right).$$
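The model with fluid friction can also be sketched in code. For illustration only, the snippet below assumes a mass $m = 0.17$ kg and a hypothetical linear friction coefficient $a = 0.01$ kg/s; neither value comes from the text. For a ball released from rest under $m \frac{dv}{dt} = mg - av$, it evaluates the closed-form velocity $v(t) = \frac{mg}{a}(1 - e^{-at/m})$ and the altitude $z(t) = z_0 - \frac{mg}{a}t + \frac{m^2 g}{a^2}(1 - e^{-at/m})$. It then finds the fall time numerically by bisection rather than solving $z(t) = 0$ analytically.

```python
import math

G = 9.81      # m/s^2
M = 0.17      # kg, hypothetical mass of the ball
A_LIN = 0.01  # kg/s, hypothetical coefficient a in f(v) = -a v


def friction_model(t: float, z0: float, m: float = M, a: float = A_LIN,
                   g: float = G) -> tuple[float, float]:
    """Closed-form (v, z) for m dv/dt = m g - a v, released from rest at z0.

    v(t) = (m g / a) (1 - exp(-a t / m))
    z(t) = z0 - (m g / a) t + (m^2 g / a^2) (1 - exp(-a t / m))
    """
    tau = m / a                     # time constant of the approach to the limit
    v_lim = m * g / a               # limit velocity mg/a
    decay = -math.expm1(-t / tau)   # 1 - exp(-t/tau), accurate for small t
    v = v_lim * decay
    z = z0 - v_lim * t + v_lim * tau * decay
    return v, z


def fall_time(z0: float, tol: float = 1e-9) -> float:
    """Find t with z(t) = 0 by bisection (z decreases monotonically)."""
    lo, hi = 0.0, 1.0
    while friction_model(hi, z0)[1] > 0.0:  # widen until the ball has landed
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if friction_model(mid, z0)[1] > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


print(f"fall time with friction from 100 m: {fall_time(100.0):.3f} s")
```

With these assumed values, the fall time from 100 m comes out roughly 0.2 s longer than with the constant-gravity model. That gap is well under a second, yet larger than 50 ms, which is precisely why the finer objective calls for the richer model.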

This expression is clearly more complicated and more demanding in terms of computing power than the former. Moreover, our hypothesis was relatively simple: the forces of friction are closer to $f(v) = -av^2$, especially at high velocities. Nevertheless, our second expression reproduces the movement of the ball more realistically over long falls, as the velocity no longer increases linearly but tends to a limit.

If we represent the velocity of the ball as a function of time, following both hypotheses (simple and with fluid friction), we notice that both curves are close at the beginning and then diverge. For short durations starting when the ball is released and assuming that the model with friction is close enough to reality for our purposes, we notice that the simple model is also valid for our needs in this zone, known as the domain of validity of the model.
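The domain of validity can be estimated numerically. The sketch below reuses the same illustrative, hypothetical parameters ($m = 0.17$ kg, $a = 0.01$ kg/s) and treats the friction model as the reference. It returns the first time at which the simple model’s velocity $gt$ departs from the reference by more than a chosen relative tolerance. The tolerance values are arbitrary examples.

```python
import math

G, M, A_LIN = 9.81, 0.17, 0.01   # m/s^2, kg, kg/s (hypothetical values)


def v_simple(t: float) -> float:
    """Velocity of the constant-gravity model, v(t) = g t."""
    return G * t


def v_friction(t: float) -> float:
    """Velocity of the linear-friction model, v(t) = (mg/a)(1 - exp(-a t / m))."""
    return (M * G / A_LIN) * -math.expm1(-A_LIN * t / M)


def validity_limit(tolerance: float, dt: float = 1e-3, t_max: float = 100.0) -> float:
    """First time at which the simple model's velocity deviates from the
    reference (friction) model by more than `tolerance`, as a relative error."""
    step = 1
    while step * dt < t_max:
        t = step * dt
        rel_err = (v_simple(t) - v_friction(t)) / v_friction(t)
        if rel_err > tolerance:
            return t
        step += 1
    return t_max


for tol in (0.01, 0.05):
    print(f"relative error exceeds {tol:.0%} after {validity_limit(tol):.2f} s")
```

With these values, the 5% limit falls at roughly 1.7 s. The stricter the tolerance, the shorter the domain of validity of the simple model.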

If our needs are such that even the model with friction is not representative enough of reality, other influences can be considered, such as wind. If our ball is released from a high altitude, we must consider the variation of gravitational attraction with altitude, introducing a new term $g(z)$ into the model equations and making matters considerably more complex. We could go even further, considering the Coriolis effect, the non-homogeneity of the ball, and so on. We therefore see that models of the same system can vary greatly depending on our aims. Of the two models we have constructed, we cannot choose the better one without knowing what it is to be used for. Some might say that, in case of doubt, the most precise model should be used. In our simple example, this is certainly feasible, but the case would be different for a more complicated system governed by hundreds of equations, as is often the case with aerodynamic modeling in aeronautics: solving these equations is costly in terms of time and technical resources, and using a model too “heavy” for the required objective consumes resources beyond what is strictly necessary, leading to cost overruns, a sign of poor quality.
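To see why $g$ could be treated as a constant for a release altitude of a few hundred meters but not for a high-altitude release, the sketch below assumes Newton’s inverse-square law for a spherical Earth, $g(z) = g_0 \left(\frac{R}{R+z}\right)^2$. It takes $g_0 = 9.81$ m/s² and a mean Earth radius $R = 6371$ km. This is one common form of $g(z)$, not necessarily the one a given study would use.

```python
G0 = 9.81          # m/s^2, gravitational acceleration at the surface
R_EARTH = 6.371e6  # m, mean Earth radius


def g_of_z(z: float) -> float:
    """Inverse-square gravity above a spherical Earth: g(z) = g0 (R / (R + z))^2."""
    return G0 * (R_EARTH / (R_EARTH + z)) ** 2


for z in (300.0, 10_000.0, 100_000.0):
    drop = 1.0 - g_of_z(z) / G0
    print(f"z = {z:>8.0f} m: g = {g_of_z(z):.4f} m/s^2 (relative decrease {drop:.3%})")
```

Over a few hundred meters, the relative decrease is about 0.01%, negligible at the precision considered above. At 100 km, it reaches about 3%, which a model with the same objective could no longer ignore.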

1.4.1.3. Simulation

The definition provided by the US Department of Defense, seen above [DOD 95], is interesting as it introduces the notion of subjectivity of a model. However, it concerns models in general; in simulation, we use more specific models.

The IEEE, a civilian standards body, gives two other definitions in the document IEEE 610.3-1989:

– an approximation, representation, or idealization of certain aspects of the structure, behavior, operation, or other characteristics of a real-world process, concept, or system;

– a mathematical or logical representation of a system or the behavior of a system over time.

If we add “for a given objective” to the first definition, it corresponds closely to that given by the US DoD. The second definition is interesting, as it introduces an essential element: time. The model under consideration is not fixed: it evolves over time, as in the case of our falling billiard ball model. It is this temporal aspect that gives life to a model in a simulation, as simulation is the implementation of a model in time.

To simulate, therefore, is to make a model “live” in time. The action of simulation can also refer to the complete process which, from a need for modeling, allows the desired results to be obtained. In general, this process can be split into three major steps, which we will cover in more detail later:

– design and development of the model;

– execution of the model;

– analysis of the execution.
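These three steps can be sketched on the falling billiard ball, here with the quadratic friction $f(v) = -av^2$ mentioned earlier. The coefficient is written `K` in the code to avoid confusion with the acceleration. This is a minimal sketch, not the authors’ method: the parameter values ($m = 0.17$ kg, $K = 0.0007$ kg/m) are hypothetical. The model is made to “live” in time with a fixed-step explicit Euler scheme, one of the simplest time-advance methods.

```python
import math
from dataclasses import dataclass

# Step 1: design and development of the model (hypothetical parameter values)
G = 9.81     # m/s^2, gravity treated as constant
M = 0.17     # kg, mass of the ball
K = 0.0007   # kg/m, coefficient of the quadratic friction f(v) = -K v^2


@dataclass(frozen=True)
class BallState:
    t: float  # s, simulated time
    z: float  # m, altitude
    v: float  # m/s, velocity counted positive downward


def acceleration(v: float) -> float:
    """Downward acceleration: gravity minus quadratic air friction."""
    return G - (K / M) * v * v


# Step 2: execution, advancing the model in time with fixed-step explicit Euler
def run(z0: float, dt: float = 1e-3) -> list[BallState]:
    state = BallState(t=0.0, z=z0, v=0.0)
    trajectory = [state]
    while state.z > 0.0:  # stop at the first step at or below the ground
        state = BallState(t=state.t + dt,
                          z=state.z - state.v * dt,
                          v=state.v + acceleration(state.v) * dt)
        trajectory.append(state)
    return trajectory


# Step 3: analysis of the execution
def analyse(trajectory: list[BallState]) -> dict[str, float]:
    last = trajectory[-1]
    return {"fall_time_s": last.t,
            "impact_velocity_m_s": last.v,
            "limit_velocity_m_s": math.sqrt(M * G / K)}  # sqrt(mg/K), for comparison


print(analyse(run(100.0)))
```

A smaller time step `dt` improves accuracy at the cost of more steps. For this particular model, the simulated velocity can be checked against the exact solution $v(t) = v_\ell \tanh(gt / v_\ell)$ with $v_\ell = \sqrt{mg/K}$, which is one way of validating the execution step.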