Chapter 7

The Future of Broadband Access

Peter Vetter

The essential vision

For the past two decades, broadband access has been provided by traditional telecommunication and cable TV operators. These operators have leveraged their existing twisted pair and hybrid fiber-coaxial (HFC) wireline networks to offer triple play voice, data and video services. Typically, they have operated with a national market focus while serving the needs of their original domestic markets. With the increasing importance of mobile communications and networking in the last decade, many of these operators purchased mobile networks in other markets. Then, with the move toward quadruple-play services, including wireless services with triple play offers, a convergence of fixed and mobile operators in each domestic market began to occur. However, given the hyper-competitive nature of the marketplace, this convergence often came at the expense of further global expansion. As a result, a relatively localized set of converged operators emerged.

In the coming years the future of broadband will be affected by three dramatic changes in business models and enabling technologies:

- A new global digital democracy: In the future, users will have access to broadband services anywhere in the world. Service will be offered by global providers independent of region or local access networks. These global service providers will offer the same service package and level of service to any user and enable access from any location (at home, at work, or on the move) by leveraging any affiliated local access provider.

- A new global-local service provider paradigm: In the next access era, global service providers will offer multiple tiers of services by partnering with regional access providers to create a new global-local service provider paradigm. Specific service packages will be built depending on the technological capabilities of the local access provider and related commercial agreements with the global service provider. Local access providers in the same region will compete to offer global service providers the most compelling hosting and digital delivery infrastructure, which will comprise a seamless mix of wireline and wireless connectivity.

- A new universal access experience: As the new paradigm unfolds, users will become access agnostic. They will expect to be able to move seamlessly between fiber, copper and wireless connections. This will require operators to shift resources dynamically between different access technologies. Therefore, the virtualization of network functions in the edge cloud will be an important enabler of this flexible, converged infrastructure. Additional flexibility will come from the installation of universal remote nodes that can be reconfigured to support any combination of access technologies at any time.

This chapter outlines the evolution of broadband access and highlights key indicators for the paradigm shift that will happen. It elaborates our vision of a future in which the technology, architecture and business models that enable broadband access will differ significantly from what they are today.

The past and present

The beginning of the digital democracy

The first digital democracy, in which affordable broadband access was available in nearly all markets, emerged over the past two decades. It was driven by aggressive commercial competition among network service providers and a remarkable series of innovations in technology that reduced the cost per bit dramatically.

In the early 1990s, the deregulation of the telecommunication industry in most markets and the advent of the Internet marked the start of broadband access services. Then, the World Wide Web provided a platform for sharing content at scale efficiently. Before that time, service providers offered a limited portfolio of content and services, built on proprietary platforms and limited to the walled garden of their network realm. Fiber to the home (FTTH) was seen as the ultimate solution for broadband access and PayTV video services were considered to be the killer application that would fund the cost of deploying a new optical access infrastructure.

However, this utopian ideal was hampered by the enormous cost associated with deploying new wired infrastructure to every home, the amount of time required to build out the new networks (decades) and the realization that video services offered limited additional revenue potential. Combined, these factors made the return on investment (ROI) period for FTTH deployments a decade or more. This was deemed unacceptable to investors and shareholders of publicly traded access providers. Consequently, access network providers started looking for alternative technologies that would reuse their existing infrastructure to enable deployment of broadband services faster and with an acceptable ROI.

In 1997, incumbent telecom operators in North America started using new digital subscriber line (DSL) technology over their twisted pair copper wires. This technology used 1 MHz of bandwidth above the voice channel (4 kHz) to carry a modulated digital data signal to homes, where it was demodulated by a DSL modem. At the same time, the community antenna television (CATV) providers became multi-service operators (MSOs) by deploying cable modem technology over their coaxial cable plant. This so-called hybrid fiber-coaxial (HFC) technology used a related digital modulation and demodulation scheme to transmit data to homes in a small amount of spectrum above the video spectrum (above 550 MHz). These technologies were relatively economical to deploy and offered speeds up to 10 Mb/s (peak rate).

As a result, FTTH was restricted to greenfield deployments for which new infrastructure had to be deployed anyway and the relative economics were comparable to those of copper-based technologies. The most notable exceptions to this evolution were Japan and Korea, where fiber deployments in metropolitan areas and buildings were considered a long-term economic imperative and the relative density of apartment buildings made the economics more attractive. Chinese operators were also deploying FTTH, due to the lack of adequate twisted pair infrastructure and a desire to create a future-proof solution. On the east coast of North America, the low cost associated with the aerial installation of fiber, combined with higher demand for video services, created a positive business case for FTTH. To minimize the investment risk, Google Fiber changed the business model by signing subscribers before deploying FTTH in select North American cities.

Historically, because they started as local telephone or video service operators, telecom service providers and MSOs were national businesses. Telecom networks were interconnected through exchanges that created a global service, but geographic restrictions on video content rights limited connections. This changed in the early 2000s. Increasing competition from web-scale service providers who offered global services on top of any access network infrastructure, combined with the associated value shift from connectivity services to content and contextual services discussed elsewhere in this book, led network service providers to develop different strategies to become more global players. Many looked at successful global-local expansion strategies in other industries, which showed that it was possible to build very profitable businesses by expanding beyond a national base.

As shown in figure 1, global companies in different industry segments have leveraged their brand and the assets of local partners to be successful worldwide. These companies derive more than 50% of their revenues from foreign markets. For example, Coca-Cola pursues a global marketing and product strategy, but relies on local companies for the bottling and distribution of its drinks. Similarly, airlines like Lufthansa have evolved into global carriers with worldwide coverage, but achieve this by sharing codes and facilities with regional airlines and relying on partners for air traffic control and ground operations in local airports.

Examples of global companies in different industries that collaborate successfully with local partners (Bell Labs Consulting based on references from annual report data)1

Taking a cue from these and other successful global-local companies, some service providers made initial forays into international markets where they formed joint ventures. For example, BT and AT&T joined forces to create Concert Communications Services and Vodafone and Verizon created Verizon Wireless. Others, such as Orange and Vodafone, acquired local operators. However, these ventures had limited success because they could not achieve economies of scale by combining geographically dispersed and architecturally dissimilar networks (Manta 2015 and Wikipedia).

The era of local access providers

The current market dominance enjoyed by national and regional broadband access providers is the result of historical incumbency based on owned physical infrastructure. But with the emergence of network functions virtualization (NFV) and software-defined networking (SDN), providers have an opportunity to extend their reach beyond historical geo-physical boundaries and achieve greater scale and a larger market share.

Figure 2 shows how a larger market share creates a better free cash flow (FCF) to revenue ratio for converged wireline and wireless service providers in their domestic markets. It also emphasizes that providers can extend their reach beyond national borders and leverage global branding and marketing to attract more subscribers than would be possible with a local brand and its attendant smaller marketing budget. These operators have the advantage of more financial clout in the procurement of content, equipment from system vendors, connectivity with transport network providers and, as we will see below, connectivity to local access providers.

Correlation between market share (*average for 2000 to 2014) and free cash flow (FCF) for different operators2

The advantages of going global have continued to drive mergers and acquisitions in the service provider space between wireline and wireless providers (Table 1). In an era where end users expect seamless access to broadband services, regardless of where they are and whether they are connected via a fixed or mobile network, converged global service providers have the advantage in terms of service delivery and the superior economics associated with sharing network costs across wireline and wireless networks. So far, however, the ability to achieve the scale and profitability advantages seen by truly global brands has been very limited. Nevertheless, Bell Labs believes the industry is on the verge of a new era of global-local expansion, as described later in this chapter.

Wireless and wireline access provider acquisitions in 2014 to 2015

| ACQUIRER | TARGET |
|---|---|
| VF (Germany) – mobile | KDG – fixed |
| VF (Spain) – mobile | Ono – fixed |
| Orange (Spain) – converged | Jazztel – fixed |
| Numericable (France) – fixed | SFR – converged |

The evolution of broadband access speed

As shown in figure 3, competition and user demand have driven access providers to deliver higher-speed broadband services to users at affordable prices and, with it, they have enabled the evolution of the digital democracy.

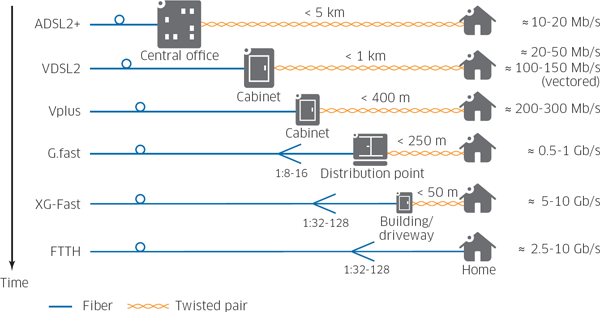

Evolution of the speed achievable over twisted pair copper using DSL technology

(Maes 2015)

The first broadband access service in the 1990s delivered rates ranging from a few hundred kb/s to a couple of Mb/s, which was a huge improvement compared to the tens of kb/s possible with a dial-up modem. Access capacity for DSL services delivered over twisted pair grew exponentially over the years, as shown in figure 3 (Maes 2015). Every new technological step enabled providers to deliver new services: asymmetric digital subscriber line (ADSL) was well suited for early web browsing on the Internet, while very-high-bit-rate DSL (VDSL) was ideal for the delivery of video. While many believed that this would be the endpoint for DSL, the introduction of vectoring (Oksman, Schenck et al 2010), which cancels crosstalk between twisted pairs in the same bundle and was inspired by interference cancellation techniques used in wireless communication, resulted in a significant improvement of the signal quality over VDSL. This gave providers the ability to support up to 100 Mb/s and multiple simultaneous streams of high-definition content per household. Where multiple pairs to the home were available, bonding made it possible to combine their capacity into a single, logical, high-capacity transmission link. This remarkable technological feat of delivering near-fiber-like speeds over copper loops that were designed for 56 kb/s and, in some cases, were approaching 100 years of age continues today, thereby turning copper into commercial gold.

The latest DSL standards, Vplus and G.fast, are about to be deployed. Vplus achieves up to 300 Mb/s over a single twisted pair and features a vectoring scheme that is compatible with existing VDSL2. G.fast further boosts ultra-broadband transmission speeds up to 1 Gb/s (figure 3). As we will see below, evolution to even higher rates can be expected and has already been demonstrated in research (Coomans 2014).

Likewise, the demand for higher bandwidth and higher video resolution has driven innovation in cable networks. Figure 4 shows the evolution of the downstream capacity on a cable network for different generations of the data over cable service interface specification (DOCSIS) standard. Unlike the point-to-point rate per subscriber shown for DSL in figure 3, DOCSIS capacity is shared among hundreds of homes attached to the same cable tree (figure 7). Therefore, the capacity shown is the instantaneous peak rate available to subscribers. This means that a single active subscriber can use all of the data bandwidth, but the achievable sustained rate per subscriber is significantly lower when several are active at once (for example, only about 10% of this rate when 10 subscribers are simultaneously active).
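
The sharing arithmetic in this paragraph can be made concrete with a minimal sketch. It assumes, purely for illustration, that the shared downstream capacity is divided equally among the subscribers that are active at the same moment; the function name and the 1000 Mb/s capacity are hypothetical and are not read from figure 4.

```python
def sustained_rate_mbps(shared_capacity_mbps: float, active_subscribers: int) -> float:
    """Equal-share estimate of the rate each simultaneously active subscriber gets."""
    if active_subscribers < 1:
        raise ValueError("at least one subscriber must be active")
    return shared_capacity_mbps / active_subscribers


# Hypothetical shared downstream capacity on one cable tree (assumption).
SHARED_CAPACITY_MBPS = 1000.0

peak = sustained_rate_mbps(SHARED_CAPACITY_MBPS, 1)         # lone active subscriber
ten_active = sustained_rate_mbps(SHARED_CAPACITY_MBPS, 10)  # ten active subscribers

print(f"peak rate: {peak} Mb/s")
print(f"rate with 10 active subscribers: {ten_active} Mb/s")
print(f"fraction of peak: {ten_active / peak:.0%}")
```

Real cable schedulers are more elaborate than an equal split, but the sketch reproduces the 10% figure quoted for ten simultaneously active subscribers.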

Evolution of total downstream bit rate shared on a cable access network (Bell Labs Consulting 2015)2

In 1997, DOCSIS 1.0 specified the first non-proprietary, high-speed data service infrastructure capable of providing Internet web browsing services. The ability to differentiate traffic flows for the improvement of service quality appeared with DOCSIS 1.1. Enhancement of the upstream bandwidth was introduced with DOCSIS 2.0, so that new services like IP telephony could be supported. DOCSIS 3.0, which is used worldwide today, significantly increased capacity by bonding channels (for example, combining 24 or 32 channels for downstream data and 4 or 8 for upstream data), effectively using a wider spectrum for interactive services. Under the US spectral plan, each additional channel provides 6 MHz of bandwidth. The latest DOCSIS 3.1 standard enables up to 10 Gb/s downstream and 1 Gb/s upstream thanks to the use of a wider spectrum for downstream (1 GHz or higher) and upstream (up to 200 MHz), as well as the introduction of orthogonal frequency division multiplexing (OFDM), a signal format with improved spectral efficiency that is already widely used in DSL and wireless.
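
As a small worked example of the channel bonding described above, the sketch below computes the downstream spectrum occupied when 24 or 32 channels of 6 MHz each are bonded. Only the channel counts and the 6 MHz width come from the text; the function name is illustrative, and no data rate per channel is assumed because the text does not give one.

```python
DOWNSTREAM_CHANNEL_WIDTH_MHZ = 6  # per-channel width under the US spectral plan


def bonded_downstream_spectrum_mhz(channels: int) -> int:
    """Total downstream spectrum occupied by a bonded group of channels."""
    if channels < 1:
        raise ValueError("a bonded group needs at least one channel")
    return channels * DOWNSTREAM_CHANNEL_WIDTH_MHZ


for channels in (24, 32):
    spectrum = bonded_downstream_spectrum_mhz(channels)
    print(f"{channels} bonded channels occupy {spectrum} MHz")
```

Bonding 24 or 32 channels therefore occupies 144 MHz or 192 MHz of downstream spectrum.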

There has been a similar evolution in the data rates supported by optical access (figure 5). A passive optical network (PON) has proven to be the most economical choice because it allows multiple subscribers (typically 32) to share the expensive downstream laser and it supports the use of a single distribution fiber, which is then passively split to each home with individual drop fibers (FTTH in figure 6). The bandwidth is shared through time division multiplexing (TDM) on one wavelength channel for downstream and another wavelength channel for upstream. The early, pre-standard PON systems based on asynchronous transfer mode (ATM), known as APONs, supported symmetrical rates of 155/155 Mb/s and asymmetrical rates of 622/155 Mb/s (downstream/upstream). They were the baseline for a broadband PON (BPON) that was standardized in 1998 and which included an additional radio frequency (RF) overlay wavelength for the distribution of video channels. The subsequent standards, Ethernet PON (EPON) and gigabit PON (GPON), provide a shared bandwidth of 1 Gb/s and 2.5 Gb/s respectively and are both still widely deployed today.

Telecom access architecture evolution to deep fiber solutions and remote nodes that reuse existing copper (with the exception of the FTTH case)

A 10 Gb/s capable PON and 40 Gb/s PON have recently been defined in standards. They will be deployed over the next five years to allow multiplexing of more subscribers over a PON (for example, 128) or allow simultaneous support for backhaul and enterprise applications, without compromising the bandwidth available for residential services. The latest next-generation PON (NG-PON2) standard achieves 40 Gb/s by stacking four wavelength pairs of 10 Gb/s in a time and wavelength division multiplexing (TWDM) scheme (Poehlmann, Deppisch et al 2013). A 40 Gb/s-capable PON (XLG-PON) with pure TDM serial bit rates can be expected, if cost-effective approaches can be found (van Veen, Houtsma et al 2015).
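
The relationship between wavelength stacking, split ratio and bandwidth per subscriber can be sketched as follows. The split ratios (32 and 128) and line rates are those quoted in the text; the equal-share calculation is a simplifying assumption for the worst case in which every subscriber is active at once, and the function names are illustrative.

```python
def twdm_capacity_gbps(wavelength_pairs: int, rate_per_pair_gbps: float) -> float:
    """Aggregate capacity of a TWDM PON that stacks several wavelength pairs."""
    return wavelength_pairs * rate_per_pair_gbps


def equal_share_mbps(capacity_gbps: float, subscribers: int) -> float:
    """Per-subscriber share if all subscribers on the PON are active at once."""
    if subscribers < 1:
        raise ValueError("a PON needs at least one subscriber")
    return capacity_gbps * 1000 / subscribers


ng_pon2 = twdm_capacity_gbps(wavelength_pairs=4, rate_per_pair_gbps=10.0)

print(f"GPON, 32-way split:     {equal_share_mbps(2.5, 32)} Mb/s each")
print(f"10G PON, 128-way split: {equal_share_mbps(10.0, 128)} Mb/s each")
print(f"NG-PON2 capacity:       {ng_pon2} Gb/s")
print(f"NG-PON2, 128-way split: {equal_share_mbps(ng_pon2, 128)} Mb/s each")
```

Under these assumptions a 10 Gb/s PON with 128 subscribers gives the same worst-case share as GPON with 32, which is why the higher line rate can be spent either on more subscribers per PON or on more bandwidth per subscriber.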

The evolution of the broadband access architecture

The continuous increase in data rates has had a significant impact on the access network architecture. The higher DSL speeds require transmission over a wider frequency band (for example, 1 MHz for ADSL, 8 or 17 MHz for VDSL, 34 MHz for Vplus, and 106 MHz for G.fast), which, due to propagation physics, is only possible over shorter distances of twisted pair copper (figure 6). Therefore, ADSL can run from a central office over a distance of 5 km because of the low frequency band used, whereas VDSL, which uses higher frequencies, can only be delivered from a street cabinet close to the end user (typically 1 km or less). Vplus is a recent addition to the DSL family that offers higher rates for homes closer to the cabinet by doubling the frequency band. The even higher frequency bands used by G.fast necessitate deployment from a distribution point at the street curb or basement of a building. As a result, fiber is increasingly being installed to G.fast remote nodes that are moving ever closer to the end users.
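
The trade-off between frequency band and copper reach can be captured in a small lookup, sketched below. The bands, node locations and the ADSL and VDSL distances come from the paragraph above. The reach values for Vplus (500 m) and G.fast (250 m) are assumptions added only so that the example runs, because the text gives no figures for them, and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DslTechnology:
    name: str
    band_mhz: float
    node_location: str
    max_reach_m: float


TECHNOLOGIES = (
    DslTechnology("ADSL", 1, "central office", 5000),
    DslTechnology("VDSL", 17, "street cabinet", 1000),
    DslTechnology("Vplus", 34, "street cabinet", 500),         # reach is an ASSUMPTION
    DslTechnology("G.fast", 106, "distribution point", 250),   # reach is an ASSUMPTION
)


def widest_band_option(loop_length_m: float) -> Optional[DslTechnology]:
    """Return the widest-band technology whose reach covers the copper loop."""
    candidates = [t for t in TECHNOLOGIES if loop_length_m <= t.max_reach_m]
    return max(candidates, key=lambda t: t.band_mhz, default=None)


for loop_length in (3000, 800, 100):
    tech = widest_band_option(loop_length)
    print(f"{loop_length} m loop: {tech.name} from the {tech.node_location}")
```

The shorter the remaining copper loop, the wider the usable band, which is the reason fiber-fed nodes keep moving closer to the subscriber.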

Likewise, the bandwidth per subscriber in a cable network can be improved by reducing the node size and using deeper fiber penetration, ultimately bypassing the last amplifier, as shown in figure 7.

As discussed in chapter 6, The future of wireless access, the same evolution is occurring in wireless networks with the move from macro cells (~1 km cell radius) to small cells (<500 m cell radius). Consequently, ultra-broadband copper (whether DSL or cable-based) access and ultra-broadband wireless access are evolving to very similar architectures and distances from subscribers, a trend which is pointing the way to a new converged future, as described in the next section.

The future

Changing the game in broadband access

As outlined earlier, we believe that a new global-local paradigm will emerge in which global service providers (GSPs) will seek partnerships with regional or local service providers (LSPs) to become tenants of the access infrastructure and provide local delivery of digital access to end users. Through these partnerships, GSPs will expect to have the flexibility and ability to instantiate and configure required capabilities in the access infrastructure as needed. This includes everything from basic subscriber management and configuration of bandwidth and quality of service (QoS), to more advanced functions like mobility, security, storage of data, or even virtualization of a user’s customer premises equipment (CPE). To the greatest extent possible, GSPs will want to offer the same set of service packages anywhere in the world, regardless of the location or the type of access network to which the subscriber is connected at any given time. Of course, this will depend on the technological capabilities of the local access infrastructure. But one of the goals of the future access network will be to create the illusion of seemingly infinite capacity using the optimum set of access technologies in each location, with seamless interworking between the technologies.

To support these requirements, LSPs will transform their infrastructure to host different GSPs, as well as their own domestic subscribers, with a simple interface that provides an abstraction of underlying network capabilities. The local access infrastructure will support multi-tenancy to enable sharing of the access network by multiple GSPs, on demand and with the required profitability to sustain access network growth. Given that competition between LSPs in the same region will continue to exist, each will try to attract GSPs by offering the most compelling hosting infrastructure. To meet these requirements, today’s dedicated fixed and mobile access architecture will evolve to a new, fully converged, cloud-integrated broadband access network, as shown in figure 8 (a) and (b).

The new architecture will contain three key enabling technology building blocks:

- Small, localized data centers serving as an edge cloud infrastructure, which will host virtualized access network functions and support flexible programming of services by GSP tenants

- Universal remote access nodes, which will extend access programmability to the physical layer, so that operators can configure any combination of wireless and wireline access technology, as needed, based on demand patterns

- A network operating system (Network OS), which will control the creation, modification and removal of functions, and schedule the usage of available resources across different global service agreements

In addition to the introduction of these key technologies, the evolution to higher capacity in the access infrastructure will continue to fiber-like speeds, but not just over fiber. Beyond G.fast, 10 Gb/s capable DSL (called XG-Fast) over tens of meters of twisted pair has already been demonstrated in research labs, with products likely to emerge by 2020 (figure 3) (Coomans 2014). At the same time, cable will move beyond DOCSIS 3.1 with the expected synergistic use of technology similar to XG-Fast that will enable XG-Cable solutions capable of symmetrical 10 Gb/s rates. In addition, wireless data rates will evolve to multiple Gb/s with the evolution to 5G, as described in chapter 6, The future of wireless access. In each case, these ultra-high access rates will be possible by reducing distances between the access nodes/cells and end users to less than a hundred meters.

This vision of the future raises the obvious challenge of backhauling traffic to and from remote nodes. To support the high capacity of these nodes (up to tens of Gb/s), high-speed optical access technologies will be required in the feeder network (Pfeiffer 2015). The feeder network will be based on next-generation passive optical networks (NG-PON2) or, possibly, XLG-PON. Pure wavelength-division multiplexing (WDM) could also be used if very high bit rates (>10 Gb/s sustained per remote node) and minimal latency are required.

The central office in the edge cloud

The global-local cloud introduced in chapter 5, The future of the cloud, which will have compute and storage resources distributed to the edge of the network, is well suited to host key enabling functionality for the future access network. In essence, the edge cloud will move the data center to the central office (CO). For access network operators, the edge cloud will be created by consolidating multiple current COs into a more central location at the metro edge, as shown in figure 8, which will create an access network with an extended reach of approximately 20 to 40 km. The maximum distance between the edge cloud and end users will be dictated by latency requirements, if latency-sensitive physical layer processes are implemented in the edge cloud, and by the preference for a fully passive optical feeder network without locally powered repeaters or amplifiers.

The edge cloud will consist of commodity server blades with general purpose compute and storage resources connected via top-of-the-rack (TOR) switches. The optical line terminal (OLT) function that drives the PON and has, until now, been implemented in dedicated hardware platforms (figure 8 (a)), will be virtualized and decomposed into line termination (LT) server blades. These blades will contain the physical layer and medium access control (MAC) layer functions of the PON and the control plane of the OLT will be implemented as a virtual access network controller (vANC) (figure 8(b)). This will enable separate scaling of the functions that depend more on the (fixed) physical interface (the Layer 1 and basic MAC functions) from the functions that depend on the number of subscribers and flows (the Layer 2 (Ethernet) and Layer 3 (IP) forwarding functions).

This new architecture will integrate access network resources in a data center with an SDN controller. This will enable rapid configuration of new broadband services as service chains between constituent functions, at lower operational cost than a conventional access network (Kozicki, Oberle et al 2014).

Using this new architecture, LSPs will create separate vANC instances for each hosted GSP and the domestic service, while SDN will be used to connect these instances to the underlying (common) physical layer functions. This can be achieved in any edge cloud of any LSP, depending on needs and subscriber location. In addition, GSPs will also be able to instantiate virtual CPE (vCPE) and virtual broadband network gateway (vBNG) subscriber management functions to provide nomadic subscribers with the same set of capabilities they have at home. In fact, the virtual BNG or CPE functions will be further decomposed into their constituent functions, which can be service-chained together, as needed, to meet the service requirements for each subscriber and with the optimum economics. The edge cloud will also host the virtualized radio access network (vRAN) function described in chapter 6, The future of wireless access. Finally, it will be possible to leverage available local storage resources for virtualized content distribution network (vCDN) functions to enable providers to optimize video content delivery for each local subscriber.
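
The idea of decomposing gateway functions and chaining them per tenant can be illustrated with a minimal sketch. Everything in it is hypothetical: the three constituent functions, the tenant names and the packet fields are invented for illustration and do not correspond to any real vBNG or vCPE implementation.

```python
from typing import Callable, Dict, List

Packet = Dict[str, object]
NetworkFunction = Callable[[Packet], Packet]


# Hypothetical constituent functions of a decomposed virtual gateway.
def authenticate(packet: Packet) -> Packet:
    return {**packet, "authenticated": True}


def apply_qos(packet: Packet) -> Packet:
    qos_class = "premium" if packet.get("tier") == "gold" else "best-effort"
    return {**packet, "qos_class": qos_class}


def translate_address(packet: Packet) -> Packet:
    return {**packet, "address": "public"}


def build_chain(functions: List[NetworkFunction]) -> NetworkFunction:
    """Compose functions into a service chain applied in the listed order."""
    def chain(packet: Packet) -> Packet:
        for function in functions:
            packet = function(packet)
        return packet
    return chain


# One chain per tenant, each using only the functions that tenant needs.
chains = {
    "gsp-a": build_chain([authenticate, apply_qos]),
    "domestic": build_chain([authenticate, translate_address]),
}

print(chains["gsp-a"]({"subscriber": "alice", "tier": "gold"}))
print(chains["domestic"]({"subscriber": "bob"}))
```

Because each tenant's chain is only a list of functions, adding or removing a capability for one GSP does not touch the chains of the others.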

Toward the universal remote

As noted earlier, to deliver the desired bandwidth in the future, high-speed wireless and wireline technologies will be located within 100 m of end users. Furthermore, they will both be backhauled by PON-based solutions, wherever possible. Therefore, it will be beneficial to collocate these technologies at the same site, so they can share power and backhaul connectivity. However, the Bell Labs vision for broadband access goes one step further: there will be convergence at the component level as well.

The analysis in figure 9 shows that the total capacity per wireless node has grown faster than that of wireline nodes, so that the two are now converging. This has been driven by the desire of users to have everything available on mobile devices that is available over wireline networks. This coincidence of capacity becomes true convergence if we recognize that wireline and wireless access are now based on the same digital signal modulation format (orthogonal frequency-division multiplexing (OFDM)), similar signal processing technologies and comparable spectral bandwidth (approximately 100 MHz to 1 GHz). Combined with the same deployment location (distance), this convergence suggests that a common digital signal processing platform could be used for all access technologies in the future. It creates a clear opportunity for a truly converged universal remote that can be configured for any wireless or wireline access network deployment scenario and adapted to dynamic changes in demand on each interface.

The total capacity of access nodes for different wireline and wireless access technologies is converging (Bell Labs Consulting)

To illustrate this potential, compare the OFDM signal flow for G.fast and LTE in figure 10. It is clear that there is substantial functional similarity between the two at the digital signal processing layer (Layer 1 or L1). In general, the functions of this layer (forward error correction (FEC), processing for crosstalk cancellation, and Fast Fourier Transform (FFT)) are not performed optimally on a general purpose processor, even an advanced multi-core one. In addition, such an approach would consume too much power to be acceptable for a remote node deployment. Therefore, the best approach is to make use of specialized hardware for the digital signal processing and to virtualize the L2 and L3 functions (vANC functions) to run in the edge data center on general purpose servers.
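
The blocks that the two signal flows have in common (symbol mapping, inverse FFT and cyclic prefix on transmit, and the reverse on receive) can be shown in a toy OFDM round trip. This is a deliberately simplified sketch and not a G.fast or LTE implementation: it assumes QPSK on every subcarrier, uses a naive O(N²) discrete Fourier transform instead of an FFT, and omits FEC, crosstalk cancellation, MIMO and the channel itself. All function names are illustrative.

```python
import cmath
from typing import List


def idft(freq: List[complex]) -> List[complex]:
    """Naive inverse DFT: subcarrier symbols to time-domain samples."""
    n = len(freq)
    return [sum(freq[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def dft(time: List[complex]) -> List[complex]:
    """Naive forward DFT: time-domain samples back to subcarrier symbols."""
    n = len(time)
    return [sum(time[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def qpsk_map(bits: List[int]) -> List[complex]:
    """Map each pair of bits to one QPSK symbol (expects an even number of bits)."""
    return [complex(1 - 2 * bits[i], 1 - 2 * bits[i + 1]) for i in range(0, len(bits), 2)]


def qpsk_demap(symbols: List[complex]) -> List[int]:
    bits: List[int] = []
    for symbol in symbols:
        bits += [int(symbol.real < 0), int(symbol.imag < 0)]
    return bits


def ofdm_modulate(bits: List[int], cp_len: int) -> List[complex]:
    samples = idft(qpsk_map(bits))
    prefix = samples[len(samples) - cp_len:]  # cyclic prefix: copy of the symbol tail
    return prefix + samples


def ofdm_demodulate(samples: List[complex], cp_len: int) -> List[int]:
    return qpsk_demap(dft(samples[cp_len:]))  # drop the prefix, then transform back


bits = [0, 1, 1, 0, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 0, 1]  # 16 bits on 8 subcarriers
tx = ofdm_modulate(bits, cp_len=2)
print(len(tx), ofdm_demodulate(tx, cp_len=2) == bits)
```

The transforms dominate the cost even in this toy version, which is consistent with the case for specialized hardware for Layer 1 made in the text.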

Comparison of digital signal processing flow of G.fast DSL and LTE wireless with indication of common blocks (data transfer unit (DTU), medium access control (MAC), forward error correction (FEC), multiple input multiple output (MIMO), FFT, inverse FFT (iFFT) and cyclic prefix (CP))

We believe that the future ultra-broadband access network will leverage this split architecture (Doetsch et al. 2013). It will have vANC functionality running in the edge cloud and connected to a universal remote node that will contain programmable, reconfigurable hardware accelerators that have the ability to continuously adapt to changing technologies and user demands over time. Once again, as with the PON example, vANC instances will be created for each GSP. They will be connected to the universal remote, which will be programmed according to the requirements of the set of hosted GSPs and the LSPs’ own local subscribers. And they will use SDN and a Network OS to efficiently and dynamically share physical layer resources across multiple physical access technologies, as described in the following section.

Collocating wireless and wireline physical layer functions in the same remote unit offers significant operational and capital cost benefits because of the shared site acquisition, fiber backhaul, power supply, and maintenance opportunities. In addition, the programmability of the new universal remote offers much greater flexibility for the deployment of a converged access network that can adapt to:

- Geographical variations: Because the universal remotes are placed so close to end users, the specific needs for wireless/wireline capacity, the number, type and reach of copper connections required, or the use of line-of-sight versus non-line-of-sight transmission for wireless will vary at every location. A programmable remote can be configured to accommodate each specific location.

- Long timescale variations: As mobile traffic grows more rapidly than fixed, or subscribers churn between different access technologies, the LSP can reprogram node resources to better reflect these changes and achieve higher throughput (by more extensive signal processing) on the desired interfaces.

- Short timescale variations: Traffic patterns vary over short timescales. For example, mobile usage typically increases during the day when users are moving outside the home, while the reverse is true in the evening. The universal remote can adapt to these diurnal patterns to provide optimal throughput at all times.

- Rapid introduction of new capabilities: A programmable remote node can adapt to support new technology standards or features that can differentiate an LSP from competitors by enabling the LSP to offer a superior level of service delivery.
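
The short-timescale adaptation described in the list above can be sketched as a reallocation of a fixed pool of signal processing resources between interfaces. The pool size, the demand figures and the proportional allocation rule are all assumptions chosen for illustration; the text does not specify how a universal remote would divide its resources.

```python
from typing import Dict


def allocate_dsp_units(total_units: int, demand: Dict[str, float]) -> Dict[str, int]:
    """Split a fixed pool of processing units in proportion to demand per interface."""
    total_demand = sum(demand.values())
    if total_demand == 0:
        return {interface: 0 for interface in demand}
    exact = {name: total_units * value / total_demand for name, value in demand.items()}
    allocation = {name: int(share) for name, share in exact.items()}
    # Hand out units lost to rounding, largest fractional remainder first.
    leftover = total_units - sum(allocation.values())
    by_remainder = sorted(exact, key=lambda name: exact[name] - allocation[name], reverse=True)
    for name in by_remainder[:leftover]:
        allocation[name] += 1
    return allocation


# Hypothetical demand in Mb/s on one remote node with 16 processing units.
daytime = allocate_dsp_units(16, {"wireless": 600, "wireline": 200})
evening = allocate_dsp_units(16, {"wireless": 200, "wireline": 600})

print("daytime:", daytime)
print("evening:", evening)
```

With these numbers the node shifts from 12 wireless and 4 wireline units during the day to the reverse in the evening, without any change to the installed hardware.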

In summary, a universal remote extends the programmability of the future broadband access infrastructure to the physical layer and will enable a truly end-to-end SDN to be created. The high capacity and flexibility of the new architecture will enable deployment of multiple types of services that required separate networks in the past (residential or business, fixed or mobile) on a single flexible infrastructure, which will support multiple GSPs, as well as the LSP’s local subscriber base.

The role of a Network OS

As discussed in chapter 4, The future of wide area networks, a Network OS will also be needed to enable dynamic scheduling of network and processing resources among different functions and services in the broadband access infrastructure (figure 8). Similar to the role of an OS in a computing or mobile device, a Network OS will allow for rapid installation or removal of functions without interrupting ongoing services. The multi-tenancy requirement for multiple GSPs will be supported by the creation of network slices by the Network OS. These slices are similar to the virtual machines on computing hardware. In addition to slicing computing resources, however, they partition programmable physical resources, such as capacity and wavelengths on the fiber network or the digital signal processing resources in the universal remote, to instantiate the desired wireline or wireless access capacity.
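The slicing idea can be made concrete with a small sketch. It models a slice as a fixed share of three resource types named in the text (wavelengths, DSP units and compute) and rejects any request that would exceed what is still free, so that existing tenants are never touched. The class names `SliceResources` and `NetworkOS`, the method names, and all quantities are assumptions made for illustration; they do not describe an actual Network OS interface.

```python
from dataclasses import dataclass
from typing import Dict

RESOURCE_TYPES = ("wavelengths", "dsp_units", "compute_cores")


@dataclass(frozen=True)
class SliceResources:
    wavelengths: int    # wavelengths on the feeder fiber
    dsp_units: int      # DSP units in the universal remote
    compute_cores: int  # cores in the edge cloud


class NetworkOS:
    """Toy resource partitioner: one slice per tenant GSP."""

    def __init__(self, total: SliceResources) -> None:
        self.total = total
        self.slices: Dict[str, SliceResources] = {}

    def free(self, resource: str) -> int:
        """Amount of a resource not yet assigned to any slice."""
        used = sum(getattr(s, resource) for s in self.slices.values())
        return getattr(self.total, resource) - used

    def create_slice(self, tenant: str, request: SliceResources) -> None:
        """Admit a slice only if every requested resource is still free."""
        if tenant in self.slices:
            raise ValueError(f"{tenant} already has a slice")
        for resource in RESOURCE_TYPES:
            if getattr(request, resource) > self.free(resource):
                raise ValueError(f"not enough free {resource} for {tenant}")
        self.slices[tenant] = request

    def remove_slice(self, tenant: str) -> None:
        """Release a tenant's resources; other slices are unaffected."""
        del self.slices[tenant]


nos = NetworkOS(SliceResources(wavelengths=4, dsp_units=16, compute_cores=32))
nos.create_slice("gsp_a", SliceResources(2, 8, 16))
nos.create_slice("gsp_b", SliceResources(1, 4, 8))
print(nos.free("wavelengths"))
```

Admission control by simple subtraction is the most basic form of isolation. It says nothing about how slices are enforced in the data plane, which is outside the scope of this sketch.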

In the future ultra-broadband access network, the Network OS will leverage SDN control functions to:

- Provide GSPs with an end-to-end service control by hiding the details of the access network through technology-agnostic abstractions and integrating network control with other network domains (for example, the metro aggregation network or the macro wireless network)

- Act as a network hypervisor that allows independent GSPs to program network functions dynamically within their network slice, without modifying resources allocated to other tenants

- Resolve the contention between multiple network services sharing the same infrastructure, while satisfying different service level agreements (SLAs)

- Optimize the use of resources automatically, using sophisticated monitoring and analytics to maintain an up-to-date view of network conditions and of demand from each tenant network, so that network slices can be modified before any degradation of quality of service occurs
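As a rough illustration of contention resolution under SLAs, the sketch below first grants each tenant its guaranteed capacity (or its demand, if lower) and then shares any spare capacity in proportion to unmet demand. This is one simple policy chosen for illustration, not a method described in this chapter. The function name `resolve_contention`, the tenant names, and the figures in Gb/s are hypothetical.

```python
from typing import Dict, Tuple


def resolve_contention(
    capacity: float, tenants: Dict[str, Tuple[float, float]]
) -> Dict[str, float]:
    """Allocate shared capacity among tenants.

    `tenants` maps a tenant name to (guaranteed, demand), both in Gb/s.
    Guarantees are served first; spare capacity is then divided in
    proportion to each tenant's remaining unmet demand.
    """
    # Step 1: serve the SLA guarantee, but never more than is demanded.
    alloc = {name: min(g, d) for name, (g, d) in tenants.items()}
    committed = sum(alloc.values())
    if committed > capacity:
        raise ValueError("guarantees exceed capacity; SLAs cannot all be met")

    # Step 2: share what is left in proportion to unmet demand.
    spare = capacity - committed
    unmet = {name: d - alloc[name] for name, (_, d) in tenants.items()}
    total_unmet = sum(unmet.values())
    if total_unmet > 0:
        share = min(1.0, spare / total_unmet)
        for name in alloc:
            alloc[name] += unmet[name] * share
    return alloc


tenants = {"gsp_a": (2.0, 8.0), "gsp_b": (3.0, 4.0)}
alloc = resolve_contention(10.0, tenants)
for name, gbps in alloc.items():
    print(f"{name}: {gbps:.2f} Gb/s")
```

Raising an error when guarantees alone exceed capacity reflects an assumption that admission control should have prevented that situation when the slices were created.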

In addition to the functions it will enable for GSPs, the Network OS will provide the host LSP with a unified mechanism for optimizing its access infrastructure cost, revenue, performance, and reliability. The network abstraction provided by SDN will also enable the LSP to install new capabilities rapidly, without affecting existing user services offered by the tenant GSPs.

The future begins now

The broadband access industry is already moving toward a new era of global convergence. Service providers are merging to become fully converged operators that can increase their market share (figure 2 and table 1). Also, web-scale players are offering broadband access services by leveraging Wi-Fi and FTTH deployments in some cities. In particular, in addition to the gigabit services offered by Google Fiber in selected cities, Google has started large-scale trials of a free Wi-Fi access service (supported by ads) using 10,000 Wi-Fi pylons in New York City (Payton 2015). And Google’s Project Fi, announced in March of 2015, is perhaps the clearest evidence to date of the upcoming global-local revolution, with subscribers obtaining wireless service from Google, based on local access provided through an ecosystem of local access providers (Google 2015).

From a technology perspective, SDN and NFV are being increasingly embraced by the broadband access community as enabling technologies for multi-tenancy and rapid configuration of new services. For example, in North America, an operator is promoting a new architecture and a prototype with central office functions in an edge cloud (Le Maistre 2015), using the open network operating system (ONOS) (ONOS 2015). The architecture instantiates and controls virtualized subscriber management functions for the provisioning of access services via G.fast. Virtual CPE services are also being pioneered and trialed on a large scale, with some early products already announced (Morris 2015, Lightreading 2015).

Meanwhile, the European Telecommunications Standards Institute (ETSI) industry specification group (ISG) on NFV has created dedicated technical committees for broadband wireless and broadband cable access (ETSI 2015). The latter is in collaboration with CableLabs, which also runs an open networking project that is exploring how to virtualize cable access headend systems (Donley 2015, CableLabs 2015). And the Broadband Forum (BBF) is expected to release technical reports on fixed access network sharing (FANS), architectures for access network virtualization that are consistent with ETSI NFV by the end of 2016, and access programmability through SDN by the end of 2017 (Broadband Forum 2015).

Clearly, we are also at the beginning of Gb/s services for residential access and the requisite accelerated roll-out of fiber deeper in the network, either to the home or, in many cases, to remote nodes closer to the home. Driven by governments in Asia (for example, South Korea and Japan), or by competition from Google Fiber in the US, gigabit-to-the-home initiatives are important catalysts for this evolution.

Looking at the evolution toward remote nodes, there are also clear early moves in the market:

- Several equipment vendors have announced their first G.fast products that feature near-gigabit speeds via twisted pair copper from a distribution point unit (DPU) at 100 m from the home (Alcatel-Lucent 2015).

- Researchers have recently demonstrated a prototype of 10 Gb/s DSL transmission over tens of meters of twisted pair copper using XG-FAST (Coomans 2014).

- Cable system vendors have announced the availability of products compatible with DOCSIS 3.1, which supports downstream bit rates up to 10 Gb/s (CableLabs 2014, Arris 2014).

- Some operators have recognized the opportunity of collocating wireless access points with DSL access nodes (Iannone 2013), which is an important precursor to a fully converged universal access node. System and component vendors have also announced agreements to jointly develop a system on a chip for a programmable universal remote node (Joosting 2015).

Summary

In 2020 and beyond, the future broadband access network will see a shift from local and regional service providers toward a GSP model. To achieve global coverage and optimize local services delivery, GSPs will form partnerships with LSPs to offer ubiquitous access and multiple tiers of services to end users. This model has been successful in other industries, driven by the branding and scale advantages of global companies. We predict that this model will also be applied to the global telecommunications market. Early signs of this trend are apparent with large service providers merging or acquiring other players and achieving superior financial performance as a result. Furthermore, service providers with a converged fixed and mobile portfolio show stronger business performance than providers that only focus on either fixed or mobile.

Consequently, the role of the LSP is changing and this change will have a profound impact on the technologies deployed in the future broadband access network. Local access providers will compete for business partnerships with GSPs, with a more limited focus on their own local service offers.

Due to the virtualization of central office functions in the edge cloud, multiple tenant GSPs will be able to obtain a slice of the LSP-owned access network. This will allow them to configure multiple tiers of service packages for their subscribers. In this market, LSPs with a combination of wireline and wireless access capabilities will have a clear advantage in terms of the range of service offers they can provide relative to those limited to either wireless or fixed access networks. Driven by user demand and competition, local operators will continue to increase broadband access speeds to gigabit rates over FTTH in an increasing number of areas. In a significant portion of the network where FTTH deployments are expected to remain economically unattractive, they will offer fiber-like services over existing copper or high-speed air interfaces.

Since the ultra-high-speed copper and wireless networks will require short transmission distances of less than 100 m, they will become collocated to benefit from the economics of a shared optical feeder network, site acquisition and installation, power supply, and maintenance. Furthermore, Bell Labs believes that there will be convergence at the chipset level as well, where there will be an opportunity to make a universal remote node that is fully programmable for any combination of wireless and wireline technologies to enhance the deployment flexibility of a truly converged access network.

What will emerge from the above changes is a new digital democracy in which anyone will have access to broadband services at fiber speeds and competitive prices from anywhere in the world by leveraging the optimum local access provider. Users will be able to seamlessly shift between fixed and mobile networks and between wireline and wireless technology, experiencing a fully converged broadband access service that delivers an optimal digital experience anywhere, anytime.

References

Alcatel-Lucent 2015. “G.fast: Clear a New Path to Ultra-Broadband,” Alcatel-Lucent web site (https://www.alcatel-lucent.com/solutions/G.fast).

Arris 2014. “SCTE 2014 CCAP interoperability demo highlights DOCSIS 3.1 leadership,” Arris web site, September (http://www.arriseverywhere.com/?s=Docsis+3.1).

Broadband Forum 2015. “Technical Working Groups Mission Statements,” Broadband Forum web site (https://www.broadband-forum.org/technical/technicalworkinggroups.php).

CableLabs 2014. “New Generation of DOCSIS Technology,” CableLabs press release (http://www.cablelabs.com/news/new-generation-of-docsis-technology).

CableLabs 2015. “SDN Architecture for Cable Access Network Technical Report,” CableLabs web site, June 25 (http://www.cablelabs.com/specification/sdn-architecture-technical-report).

Coca-Cola Company 2014. “Annual Report 2014 Form 10-K 2014; Net Operating Revenues by Operating Segment; North America vs. Total,” Coca-Cola web site (http://assets.coca-colacompany.com/c0/ba/0bdf18014074a1eca3cae0a8ea39/2014-annual-report-on-form-10-k.pdf).

Coomans W., Moraes, R. B., Hooghe, K., Duque, A., Galaro, J., Timmers, M., van Wijngaarden, A. J., Guenach, M., and Maes, J., 2014. “XG-FAST: Toward 10 Gb/s Copper Access,” proceedings of IEEE Globecom, December.

Deutsche Post AG 2014. “Annual Report,” “DHL Express Revenues: Europe vs. Total,” (from key figures by operating division table) Deutsche Post Annual Report web site (http://annualreport2014.dpdhl.com).

Doetsch, U., et al. 2013. “Quantitative Analysis of Split Base Station Processing and Determination of Advantageous Architectures for LTE,” Bell Labs Technical Journal, June.

Donley, C., 2015. “SDN and NFV: Moving the Network into the Cloud,” CableLabs web site (http://www.cablelabs.com/sdn-nfv).

ETSI 2015. “Network Functions Virtualization,” ETSI web site (http://www.etsi.org/technologies-clusters/technologies/nfv).

Google 2015. “Project Fi uses new technology to give you fast speed in more places and better connections to Wi-Fi,” Project Fi web site (https://fi.google.com/about/network).

GSMA 2015. “GSMA Intelligence: Wireless Market Data by Country,” GSMA Intelligence web site, July. (https://gsmaintelligence.com).

Kozicki, B., Oberle, K., et al. 2014. “Software-Defined Networks and Network Functions Virtualization in Wireline Access Networks,” From Research to Standards Workshop, Globecom 2014, pp. 680–685, December.

Iannone, P. et al, 2013. “A Small Cell Augmentation to a Wireless Network Leveraging Fiber-to-the-Node Access Infrastructure for Backhaul and Power,” Optical Fiber Communication Conference and Exposition and the National Fiber Optic Engineers Conference (OFC/NFOEC), March.

Joosting, J-P., 2015. “Freescale and Bell Labs explore wireline and wireless virtualization on the road to 5G,” Microwave Engineering Europe, February 19 (http://microwave-eetimes.com/en/freescale-and-bell-labs-explore-wireline-and-wireless-virtualization-on-the-road-to-5g.html?cmp_id=7&news_id=222905842).

Le Maistre R. 2015. “AT&T to Show Off Next-Gen Central Office,” Lightreading, June 15 (http://www.lightreading.com/gigabit/fttx/atandt-to-show-off-next-gen-central-office/d/d-id/716307).

Lightreading 2015. “Telefónica, NEC Prep Virtual CPE Trial,” Lightreading Oct 10 (http://www.lightreading.com/carrier-sdn/nfv-%28network-functions-virtualization%29/telefonica-nec-prep-virtual-cpe-trial/d/d-id/706032).

Lufthansa 2014. “First Choice Annual Report 2014: Passenger Airline Group 2014,” “Net traffic revenue in €m external revenue; Europe vs. Total,” Lufthansa web site (http://investor-relations.lufthansagroup.com/fileadmin/downloads/en/financial-reports/annual-reports/LH-AR-2014-e.pdf).

Maes, J., and Nuzman, C., 2015. “The Past, Present and Future of Copper Access,” Bell Labs Technical Journal, vol. 20, pp. 1-10.

Manta 2015. “Verizon Communications Inc (Verizon).” April (http://www.manta.com/c/mmlg0rt/verizon-wireless).

McDonald’s 2014. “Annual Report 2014 Revenue by Segment and Geographical Information; US vs. Total,” McDonald’s web site (http://www.aboutmcdonalds.com/content/dam/AboutMcDonalds/Investors/McDonald’s2014AnnualReport.PDF).

Morris, I., 2015. “Orange Unveils NFV-based Offering for SMBs,” Lightreading, March 18 (http://www.lightreading.com/nfv/orange-unveils-nfv-based-offering-for-smbs/d/d-id/714503).

Oksman, V., Schenk, H., Clausen, A., Cioffi, J. M., Mohseni, M., Ginis, G., Nuzman, C., Maes, J., Peeters, M., Fisher, K., and Eriksson, P.-E., 2010. “The ITU-T’s New G.Vector Standard Proliferates 100 Mb/s DSL,” IEEE Communications Magazine, vol. 48, no. 10, pp. 140–148, October.

ONOS 2015. ONOS web site (http://onosproject.org).

Payton M. 2015. “Google wants to bring free Wi-Fi to the world... and it’s starting NOW,” Metro UK (http://metro.co.uk/2015/06/25/google-wants-to-bring-free-wifi-to-the-world-and-its-starting-now-5265352).

Pfeiffer T. 2015. “Next Generation Mobile Fronthaul Architectures,” Invited paper, M2J.7, OFC.

Poehlmann W., Deppisch B., Pfeiffer T., Ferrari C., Earnshaw M., Duque A., Farah B., Galaro J., Kotch J., van Veen D., Vetter P. 2013. “Low-cost TWDM by Wavelength-Set Division Multiplexing,” Bell Labs Technical Journal, Vol. 18, No. 3, pp. 173-193, November.

van Veen, D., Houtsma, V., Gnauck, A., and Iannone, P. 2015. “Demonstration of 40-Gb/s TDM-PON over 42-km with 31 dB Optical Power Budget using an APD-based Receiver,” Journal of Lightwave Technology, vol. PP, Issue: 99, DOI: 10.1109/JLT.2015.2399271.

Vetter, P. 2012. “Next Generation Optical Access Technologies,” Tutorial at ECOC, Amsterdam, p. Tu.3.G.1, September.

Wikipedia 2015. “Concert Communications Services,” (https://en.wikipedia.org/wiki/Concert_Communications_Services).

Wikipedia 2015. “Orange SA,” (https://en.wikipedia.org/wiki/Orange_S.A.).

Wikipedia 2015. “Vodafone,” (https://en.wikipedia.org/wiki/Vodafone).

1 - Analysis and modeling by Bell Labs Consulting based on data from various sources (Coca-Cola 2014, Deutsche Post 2014, Lufthansa 2014, Passenger 2014, McDonald’s 2014).

2 - Bell Labs Consulting based on data from GSMA 2015.

3 - Based on Bell Labs modeling and the following standards: “DOCSIS 1 - ITU-T Recommendation J.112 Annex B (1998);” “DOCSIS 1.1 - ITU-T Recommendation J.112 Annex B (2001);” “DOCSIS 2 - ITU-T Recommendation J.122, December 2007;” “DOCSIS 3 - ITU-T Recommendation J.222, December 2007;” “DOCSIS 3.1 Physical Layer Specification (CableLabs), CM-SP-PHYv3.1,” June.