Chapter 9: Photo, Video, and Audio Forensics

In the first few chapters of the book, we focused on the identification and analysis of mobile evidence stored in system-generated artifacts such as databases, PLISTs, and log files. However, most investigations will also involve analyzing user-generated content found on a device, such as media files.

A modern iOS device can contain tens of thousands of media files, and each of these files is associated with unique metadata that can be critical to an investigation. In this chapter, we will learn how to identify and analyze multimedia content such as photos, videos, and audio files.

We will start the chapter with an introduction to media forensics and discuss where photos, videos, and audio recordings are stored on an iOS device. Then, we'll learn all about analyzing media metadata to gain meaningful insights, both from the file itself and from the corresponding databases. In the last part of the chapter, we will learn how to analyze user behavior to find out what media the user has accessed or watched through Apple apps such as Music and Safari, and third-party apps such as Spotify or Netflix.

In this chapter, we will cover the following topics:

- Introducing media forensics

- Analyzing photos and videos

- Introducing EXIF metadata

- Analyzing user viewing activity

Introducing media forensics

Media forensics can be defined as the process of locating, analyzing, and extracting meaningful metadata from any kind of multimedia object, such as an image or a video. Modern iOS devices such as the iPhone 13 have huge storage capacities (the base model has 128 GB of storage), which allows them to store tens of thousands of media files. Each of these files is linked to metadata that may tell an investigator far more than the file's content alone.

Although it may be tempting to think of multimedia assets merely as photos or videos, iOS devices handle a much wider range of content. The following is a list of common media assets that can be found on an iOS device:

- Camera roll photos, videos, and live photos

- Saved photos and videos

- Screenshots

- Audio recordings and music

- Media files received from third-party apps (such as WhatsApp and Telegram)

- Streamed content

It's important to understand that when an examiner is tasked with analyzing any kind of media file from a mobile device, the focus shouldn't be solely on where the file was located but rather on how the media object got there.

By analyzing media files, their metadata, and iOS-related artifacts, the investigator will be able to answer questions such as the following:

- Was a particular photo taken from the device's camera?

- What was the location where the photo was taken?

- Was a video file received from a messaging app?

- When was a media file last modified?

- What does a media file say about the user?

We're going to start by highlighting some of the most common locations where media-related artifacts are located:

- /private/var/mobile/Media/DCIM/1**APPLE/: This folder stores photos and videos that were created by the user or saved to the device. Typically, images will be stored in JPG, PNG, or HEIC format, and videos will be stored as MP4 or MOV files.

- /private/var/mobile/Media/PhotoData/: The PhotoData folder stores several artifacts related to the media metadata, such as the Photos.sqlite database, thumbnails (which are stored in /private/var/mobile/Media/PhotoData/Thumbnails/V2/DCIM/1**APPLE/IMG_****.JPG/****.JPG), and photo album data. Generally, this folder will be the primary source of evidence for any kind of media file stored on the device. We'll take an in-depth look at this folder in the next section.

- /private/var/mobile/Media/PhotoData/PhotoCloudSharingData/: The PhotoCloudSharingData folder contains artifacts pertaining to Shared Albums, an iOS feature that allows users to easily share photo collections. Each shared album will have a subfolder that contains an Info.plist file, which contains album metadata (the name, the owner, and more), and DCIM_CLOUD.plist, which stores the number of photos in the album.

- /private/var/mobile/Media/Recordings/*: This folder contains user-recorded voice memos.
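As a first triage step, it can help to know how many files of each type sit in the camera roll folders. The following Python sketch assumes the extraction has been copied to a local folder that mirrors the device's directory layout; the `inventory_dcim` function and its `extraction_root` argument are hypothetical names used only for illustration:

```python
from collections import Counter
from pathlib import Path


def inventory_dcim(extraction_root: str) -> Counter:
    """Count files per extension in the DCIM/1**APPLE folders of a local copy of an extraction."""
    dcim = Path(extraction_root) / "private" / "var" / "mobile" / "Media" / "DCIM"
    counts = Counter()
    # Camera roll folders follow the 100APPLE, 101APPLE, ... naming pattern
    for folder in sorted(dcim.glob("1*APPLE")):
        if not folder.is_dir():
            continue
        for item in folder.iterdir():
            if item.is_file():
                # Normalize ".heic" and ".HEIC" to the same key
                counts[item.suffix.upper().lstrip(".")] += 1
    return counts
```

For example, `inventory_dcim("/cases/extraction").most_common()` would list extensions such as HEIC, JPG, and MOV with their counts. Files outside the 1**APPLE folders are deliberately ignored, since other Media subfolders hold thumbnails and databases rather than original assets.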

The following screenshot shows which files and folders can be found in the PhotoData folder:

Figure 9.1 – The PhotoData folder contains media metadata

Each media asset has two kinds of metadata associated with it: EXIF metadata, which is stored within the file itself and contains details such as the device that generated the image or the location where it was taken, and iOS-generated metadata, which is stored in the Photos.sqlite database.

In the following section, we'll analyze the database to learn how to extract meaningful information for each image or video that is stored on the device.

Analyzing photos and videos

Every time a photo or video is taken through a device's camera or saved onto a device, iOS analyzes it and generates a multitude of metadata, which is stored in the Photos.sqlite database.

The following is a list of events that occur during the process:

- First, the newly created photo or video is saved in /private/var/mobile/Media/DCIM/1**APPLE/ as a JPG, HEIC, MP4, or MOV file. The folder name will iterate upward (100APPLE, 101APPLE, 102APPLE, and so on) as more photos or videos are stored on the device.

- Additionally, the PreviewWellImage.tiff image is created and stored in /private/var/mobile/Media/PhotoData/MISC/. This is the thumbnail that the Photos app displays for the most recent image.

- As the new media is stored, a new entry is created in the ZGENERICASSET table of the Photos.sqlite database; since iOS 14, this table has been called ZASSET. The new record contains metadata such as the date and time when the content was saved, the date and time when it was last modified, the filename, and the color space.

- In the background, iOS starts processing the newly created media by running several algorithms, such as facial and object recognition. If a name has been associated with a face that iOS recognizes in the media file, the photo or video will also be displayed in the People album, in the Photos app. The results of the analysis are then stored in the Photos.sqlite database and in mediaanalysis.db, which can be found in /private/var/mobile/Media/MediaAnalysis/.
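Because the asset table was renamed in iOS 14, a script that works across acquisitions from different iOS versions should check which name is present before querying. A minimal Python sketch, assuming the examiner works on a copy of Photos.sqlite (the function name is illustrative):

```python
import sqlite3


def asset_table_name(conn: sqlite3.Connection) -> str:
    """Return the main asset table of Photos.sqlite: ZASSET (iOS 14+) or ZGENERICASSET (earlier)."""
    names = {row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table'")}
    for candidate in ("ZASSET", "ZGENERICASSET"):
        if candidate in names:
            return candidate
    raise ValueError("No ZASSET or ZGENERICASSET table found; is this Photos.sqlite?")
```

Opening the copy read-only, for example with `sqlite3.connect("file:Photos.sqlite?mode=ro", uri=True)`, is a sensible precaution so that the analysis itself never writes to the evidence file.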

As you can see, there's a lot going on! Now that we know where the artifacts are located, we will focus on analyzing photos and videos.

Understanding Photos.sqlite

With over 60 tables packed full of data, the Photos.sqlite database is one of the largest datasets that can be found in an iOS acquisition. A detailed analysis of this database is beyond the scope of this book, so we'll focus on extracting the most important pieces of metadata from the ZGENERICASSET (ZASSET in iOS 14 and 15) table.

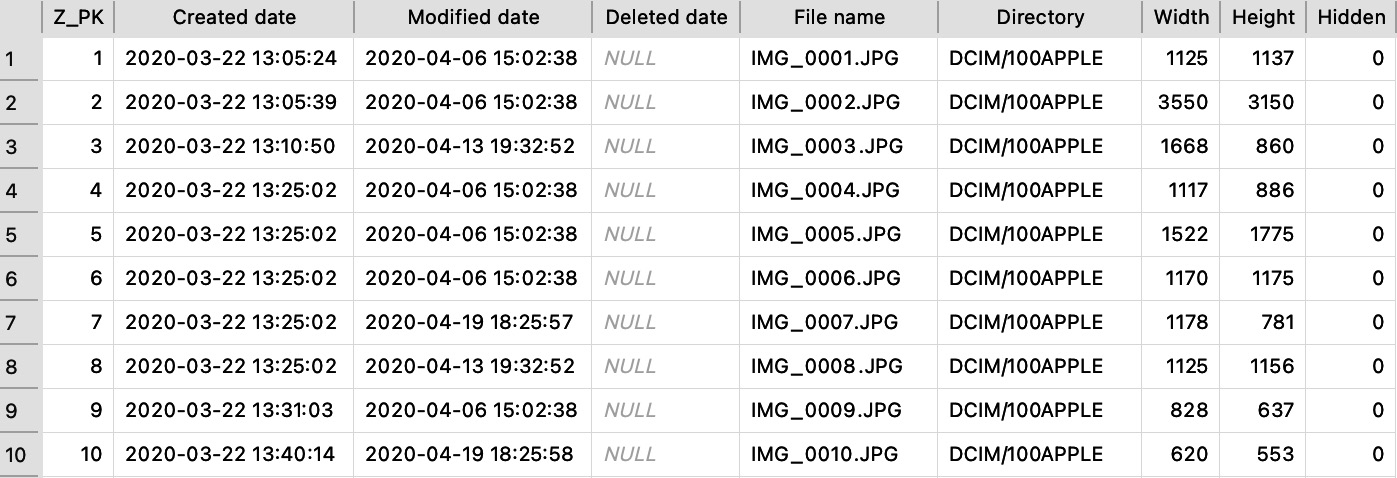

Once you've opened the file with your tool of choice, such as DB Browser for SQLite, run the following query:

-- On iOS 14 and later, replace ZGENERICASSET with ZASSET
SELECT
  Z_PK,
  DATETIME(ZDATECREATED + 978307200, 'UNIXEPOCH') AS "Created date",
  DATETIME(ZMODIFICATIONDATE + 978307200, 'UNIXEPOCH') AS "Modified date",
  DATETIME(ZTRASHEDDATE + 978307200, 'UNIXEPOCH') AS "Deleted date",
  ZFILENAME AS "File name",
  ZDIRECTORY AS "Directory",
  ZWIDTH AS "Width",
  ZHEIGHT AS "Height",
  ZHIDDEN AS "Hidden"
FROM ZGENERICASSET
ORDER BY Z_PK ASC;
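The constant 978307200 in the query is the number of seconds between the Unix epoch (1 January 1970) and 1 January 2001 00:00:00 UTC, the reference date of the Cocoa (Mac absolute time) timestamps stored in columns such as ZDATECREATED. The same conversion is sketched below in Python, which can be handy when post-processing values exported from DB Browser; the helper names are illustrative:

```python
from datetime import datetime, timedelta, timezone

# Seconds between 1970-01-01 and 2001-01-01, both in UTC
COCOA_EPOCH_OFFSET = 978307200
COCOA_EPOCH = datetime(2001, 1, 1, tzinfo=timezone.utc)


def cocoa_to_utc(seconds):
    """Convert a Cocoa timestamp to an aware UTC datetime; NULL (None) stays None."""
    if seconds is None:
        return None
    # Fractional seconds are preserved, unlike SQLite's DATETIME()
    return COCOA_EPOCH + timedelta(seconds=seconds)


def cocoa_to_unix(seconds):
    """Convert a Cocoa timestamp to Unix time, mirroring the + 978307200 in the SQL."""
    return seconds + COCOA_EPOCH_OFFSET
```

For instance, `cocoa_to_utc(0)` returns midnight UTC on 1 January 2001, and an asset that was never deleted has a NULL ZTRASHEDDATE, which maps to `None`.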

The following screenshot shows the result of the query by running it on our example dataset:

Figure 9.2 – The results of the query from the Photos.sqlite database

As you can see, the query extracts the list of all media stored on the device, including metadata such as when the media object was created, when it was last modified, if and when it was deleted, if the user chose to hide it, the width and the height, and of course, the directory and the filename.
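When only hidden or deleted items matter, the same columns can be filtered directly. The following Python sketch assumes, as the query above does, that ZTRASHEDDATE is populated for assets the user deleted and that ZHIDDEN is 1 for hidden assets; the function name is hypothetical:

```python
import sqlite3
from datetime import datetime, timedelta, timezone

COCOA_EPOCH = datetime(2001, 1, 1, tzinfo=timezone.utc)


def hidden_or_trashed(conn: sqlite3.Connection):
    """Return (filename, directory, hidden, trashed_utc) for hidden or deleted assets."""
    tables = {r[0] for r in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")}
    # ZASSET on iOS 14+, ZGENERICASSET before; table names cannot be bound parameters,
    # so the name is chosen from this fixed pair rather than from user input
    table = "ZASSET" if "ZASSET" in tables else "ZGENERICASSET"
    rows = conn.execute(
        f"SELECT ZFILENAME, ZDIRECTORY, ZHIDDEN, ZTRASHEDDATE FROM {table} "
        "WHERE ZHIDDEN = 1 OR ZTRASHEDDATE IS NOT NULL ORDER BY Z_PK")
    results = []
    for filename, directory, hidden, trashed in rows:
        trashed_utc = COCOA_EPOCH + timedelta(seconds=trashed) if trashed is not None else None
        results.append((filename, directory, bool(hidden), trashed_utc))
    return results
```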

So far, we've only scratched the surface of Photos.sqlite, as this database has a lot more to offer. By going deeper into the evidence and correlating data between different tables, the examiner can leverage the metadata provided by iOS to gain insights such as the following:

- Was the media asset shared? If so, when?

- Was a particular photo adjusted/corrected on the device?

- Was the photo taken on the device or did the user receive it?

- Which application received and stored the photo?

- How many times did the user view a media asset?

- Was the media asset added to a specific album?

To extract all this data from the database, we're going to use a more complex SQL query that was written by Scott Koenig (@Scott_Kjr). The query can be found on his blog: theforensicscooter.com.

Tip

To gain a full understanding of how Photos.sqlite works and what data can be found by delving into the database, you can refer to this article: Using Photos.sqlite to Show the Relationships Between Photos and the Application they were Created with?, DFIR Review, Koenig, S., 2021 (retrieved from https://dfir.pubpub.org/pub/v19rksyf).

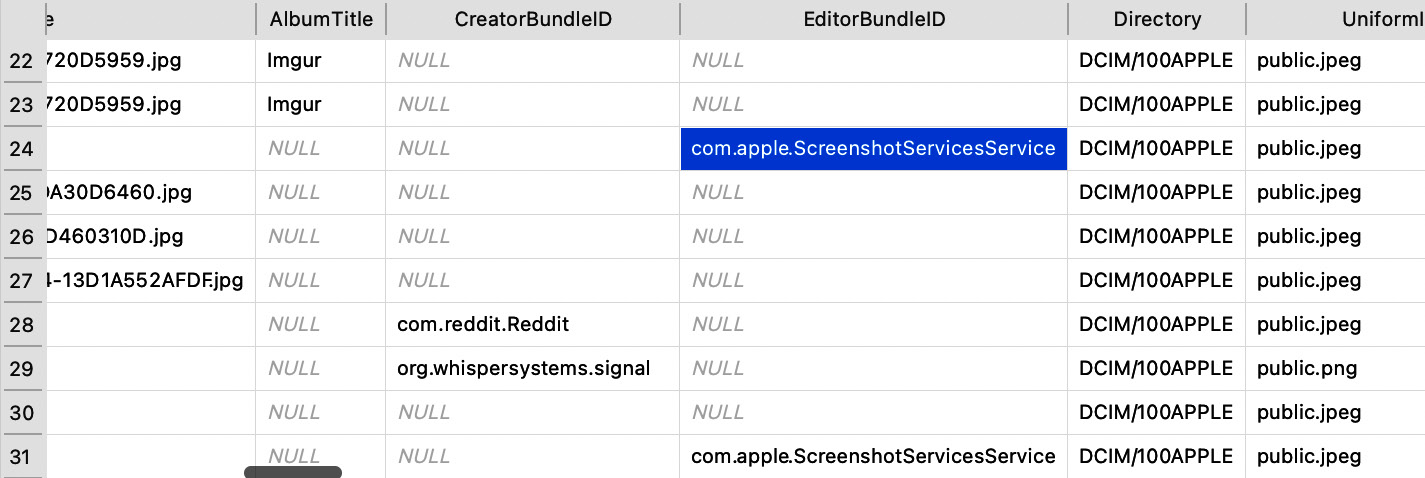

The following screenshot shows the query executed through DB Browser:

Figure 9.3 – The results of the query from the Photos.sqlite database

As you can see, this query provides a lot more data. The following is a list of the most relevant columns and their descriptions:

- The query provides us with a Kind column, which indicates whether the media asset is a photo or a video.

- The AlbumTitle column shows whether the asset belongs to a specific album, such as the WhatsApp album.

- By looking at CreatorBundleID, the investigator can learn which application on the device created the media asset; for instance, the com.facebook.Messenger value is a strong indication that the media was received through the Facebook Messenger app.

- EditorBundleID indicates which application last edited the asset. For example, in the following screenshot, the com.apple.ScreenshotServicesService value shows that a screenshot was taken on the device and that the screenshot utility was used to edit the image:

Figure 9.4 – CreatorBundleID and EditorBundleID indicate where the asset originates from

- The SavedAssetType column indicates whether the asset was created on the device or whether it was synced from another source.

- Favorite, Hidden_File, and TrashState indicate whether the media asset was included in the Favorites album, whether the user opted to hide it, or whether it was marked for deletion.

- The ShareCount and LastSharedDate columns indicate how many times a particular media asset was shared and the timestamp of the last time it occurred:

Figure 9.5 – The ShareCount column indicates whether a media asset was shared with other devices

- By checking the Has_Adjustments column, the investigator can understand whether the media asset was adjusted, edited, or cropped on the device.

- If location services were enabled, the Latitude and Longitude columns store the coordinates of the location where a photo or video was taken.

- Finally, the UUID column indicates the asset's unique identifier.
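On a device with thousands of assets, a per-application summary of CreatorBundleID quickly shows where the media came from. The sketch below assumes the query results were exported from DB Browser to a CSV file with a CreatorBundleID header; the file path and function name are illustrative:

```python
import csv
from collections import Counter


def count_by_creator(csv_path, column="CreatorBundleID"):
    """Count assets per creator bundle ID in a CSV export; empty values go under '(none)'."""
    counts = Counter()
    # utf-8-sig tolerates a byte-order mark if the exporting tool wrote one
    with open(csv_path, newline="", encoding="utf-8-sig") as handle:
        for row in csv.DictReader(handle):
            counts[(row.get(column) or "").strip() or "(none)"] += 1
    return counts
```

Calling `count_by_creator("photos_query.csv").most_common(10)` would then list the ten applications that created the most assets, such as com.facebook.Messenger.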

As we can see from the result of this query, there is a significant amount of metadata stored within the database; however, this is not the only source of media metadata. In the following section, we'll focus on extracting information from the media file itself.

Introducing EXIF metadata

The Photos.sqlite database can be a huge source of metadata, but on some occasions the database may not be available and the investigator only has access to the media file. Thankfully, images and videos often contain embedded metadata. For images, this is the Exchangeable Image File Format (EXIF) standard; videos such as MOV files store comparable metadata within their container, and this chapter refers to both simply as EXIF metadata.

EXIF metadata can provide a wealth of information to investigators, such as the following:

- The device model

- Camera settings

- Exposure and lens specifications

- Timestamps

- Location data

- Altitude and bearing

- Speed reference

When a photo or video is captured through an iOS device's built-in camera, EXIF metadata is automatically added to the media asset. The following screenshot shows the amount of metadata that can be extracted from a photo that was taken on an iPhone SE:

Figure 9.6 – EXIF metadata extracted from a photo that was captured on an iPhone

As you can see, by analyzing the EXIF metadata, we can learn that the photo was taken on an iPhone SE using the back camera. The camera's flash was set to Auto but did not fire, the shutter speed was automatically set to 1/1788, and the exposure time was 1/1789. The timestamp indicates when the photo was taken, in the device's time zone, which is also recorded.

Metadata related to GPS is possibly even more useful: the iPhone automatically recorded the device's coordinates, altitude, bearing, and speed when the photo was captured. Of course, this depends on Location Services being enabled for the Camera app.
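EXIF does not store coordinates as the signed decimal degrees that mapping tools expect. The GPSLatitude and GPSLongitude tags hold degrees, minutes, and seconds as three rational values, while the hemisphere is kept separately in GPSLatitudeRef and GPSLongitudeRef. Most viewers convert this automatically; where one does not, a minimal Python sketch of the conversion looks like this (the function name is illustrative):

```python
def dms_to_decimal(dms, ref):
    """Convert EXIF GPS (degrees, minutes, seconds) plus a hemisphere reference to decimal degrees.

    dms: three numbers (ints, floats, or fractions.Fraction values) from GPSLatitude/GPSLongitude.
    ref: the matching GPSLatitudeRef/GPSLongitudeRef value: 'N', 'S', 'E', or 'W'.
    """
    degrees, minutes, seconds = (float(v) for v in dms)
    value = degrees + minutes / 60 + seconds / 3600
    # Southern latitudes and western longitudes are negative in decimal notation
    return -value if ref.upper() in ("S", "W") else value
```

Forgetting the reference tag is a common mistake: it silently moves a western-hemisphere location to the other side of the globe.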

Tip

The investigator should always attempt to validate EXIF metadata: with iOS 15, Apple introduced the ability to edit a photo's location and capture timestamp directly from within the Photos app. However, research carried out so far suggests that editing the metadata from the Photos app only affects the data stored in the Photos.sqlite database, while the EXIF metadata in the file remains unchanged.
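One way to act on this tip is to compare the creation date stored in Photos.sqlite with the capture time embedded in the file. The Python sketch below assumes that ZDATECREATED reflects the date shown in Photos (including any edit) and that the file carries the standard DateTimeOriginal and OffsetTimeOriginal EXIF tags; older files may lack the offset tag. The function names and the two-second tolerance are arbitrary choices for illustration:

```python
from datetime import datetime, timedelta, timezone

COCOA_EPOCH = datetime(2001, 1, 1, tzinfo=timezone.utc)


def exif_capture_utc(date_time_original, offset_time_original):
    """Parse EXIF DateTimeOriginal ('YYYY:MM:DD HH:MM:SS') and OffsetTimeOriginal ('+HH:MM') into UTC."""
    local = datetime.strptime(date_time_original + offset_time_original, "%Y:%m:%d %H:%M:%S%z")
    return local.astimezone(timezone.utc)


def capture_time_mismatch(zdatecreated, date_time_original, offset_time_original, tolerance_s=2.0):
    """Return seconds by which Photos.sqlite differs from EXIF, or None if within tolerance."""
    db_time = COCOA_EPOCH + timedelta(seconds=zdatecreated)
    delta = (db_time - exif_capture_utc(date_time_original, offset_time_original)).total_seconds()
    return delta if abs(delta) > tolerance_s else None
```

A non-None result does not prove manipulation on its own, but it flags assets whose database timestamp no longer matches the file and therefore deserve a closer look.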

Although EXIF metadata can be extremely valuable for media assets created on a device, it won't be as useful when dealing with photos or videos received through a third-party application; most instant messaging apps, such as WhatsApp or Telegram, compress images before transferring them, resulting in the EXIF data being stripped from the file.

Now that we know what EXIF metadata is, we'll learn which tools can be used to view it.

Viewing EXIF metadata

There are a number of free tools available to view a media asset's EXIF metadata, although most operating systems can display it without installing any third-party tool.

On Windows, you can view a file's metadata by right-clicking on the media file and selecting Properties. Then, click on the Details tab and scroll down to view the EXIF data. The free exiftool utility can also be used for the same purpose; it can be downloaded from http://exiftool.org and runs on Windows, macOS, and Linux.

On macOS, you can view EXIF simply by opening the file using Preview. Once open, click on Tools and select Show Inspector. Then, click on the Exif tab.

On most Linux distributions, the exif command-line tool can be used for this purpose.
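When many files need to be reviewed, exiftool can write its results as JSON, for example with `exiftool -json -r DCIM > exif.json`, which produces an array with one object per file, each including a SourceFile key. The following Python sketch reduces that output to a few fields of interest; the chosen tag list and function name are illustrative assumptions, and any tag name exiftool reports can be added:

```python
import json

FIELDS = ("SourceFile", "Make", "Model", "DateTimeOriginal", "OffsetTimeOriginal")


def summarize_exiftool_json(text, fields=FIELDS):
    """Reduce exiftool -json output to one dict per file with the selected tags.

    Tags absent from a file (for example, stripped by a messaging app) map to None.
    """
    records = json.loads(text)
    return [{field: record.get(field) for field in fields} for record in records]
```

A run of None values in the Make and Model fields is a quick hint that the file did not come straight from a camera, in line with the stripping behavior of messaging apps described earlier.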

By now, you should have a general understanding of what EXIF metadata is, how it can be beneficial to an investigation, and how to analyze it.

Analyzing user viewing activity

So far, we have focused on analyzing photo and video media assets; however, the investigator may want to understand not only what media was stored on a device but also what media the user viewed.

This may include any of the following:

- Audio/video streamed through Safari or other browsers

- Music played through Apple Music or third-party apps such as Spotify

- Videos played through third-party apps such as YouTube and Netflix

The KnowledgeC.db database, which we discussed in Chapter 5, Pattern-of-Life Forensics, tracks most of the user's day-to-day activity, including events related to audio or video playback.

The table of interest is the ZOBJECT table, which stores device events, organizing them by stream name. Every time iOS detects that the user has initiated media playback, a /media/nowPlaying event is triggered.

The following screenshot shows some example data from the KnowledgeC.db database, analyzed using DB Browser:

Figure 9.7 – Media events extracted from the KnowledgeC.db database

By correlating the data from the ZOBJECT table with the metadata stored in ZSTRUCTUREDMETADATA, the examiner can gain many useful insights, such as what song the user was listening to, what video was streaming through Safari, or what show they were binge-watching on Netflix.

The following query will extract all the relevant data from KnowledgeC.db:

SELECT
  -- 'LOCALTIME' converts to the time zone of the machine running the query, not the device's
  DATETIME(ZOBJECT.ZSTARTDATE + 978307200, 'UNIXEPOCH', 'LOCALTIME') AS "START",
  DATETIME(ZOBJECT.ZENDDATE + 978307200, 'UNIXEPOCH', 'LOCALTIME') AS "END",
  (ZOBJECT.ZENDDATE - ZOBJECT.ZSTARTDATE) AS "USAGE IN SECONDS",
  ZOBJECT.ZVALUESTRING,
  ZSTRUCTUREDMETADATA.Z_DKNOWPLAYINGMETADATAKEY__ALBUM AS "NOW PLAYING ALBUM",
  ZSTRUCTUREDMETADATA.Z_DKNOWPLAYINGMETADATAKEY__ARTIST AS "NOW PLAYING ARTIST",
  ZSTRUCTUREDMETADATA.Z_DKNOWPLAYINGMETADATAKEY__GENRE AS "NOW PLAYING GENRE",
  ZSTRUCTUREDMETADATA.Z_DKNOWPLAYINGMETADATAKEY__TITLE AS "NOW PLAYING TITLE",
  ZSTRUCTUREDMETADATA.Z_DKNOWPLAYINGMETADATAKEY__DURATION AS "NOW PLAYING DURATION"
FROM ZOBJECT
LEFT JOIN ZSTRUCTUREDMETADATA ON ZOBJECT.ZSTRUCTUREDMETADATA = ZSTRUCTUREDMETADATA.Z_PK
LEFT JOIN ZSOURCE ON ZOBJECT.ZSOURCE = ZSOURCE.Z_PK
WHERE ZOBJECT.ZSTREAMNAME LIKE '/media%'
ORDER BY ZOBJECT.ZSTARTDATE;
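The query above uses SQLite's 'LOCALTIME' modifier, which converts timestamps to the time zone of the examiner's workstation rather than the device's. The Python sketch below runs a reduced version of the query and keeps every timestamp in UTC, leaving the time zone decision to the report. It restricts the filter to the exact /media/nowPlaying stream and assumes, as the query above does, that ZVALUESTRING identifies the app that was playing; the function name is hypothetical:

```python
import sqlite3
from datetime import datetime, timedelta, timezone

COCOA_EPOCH = datetime(2001, 1, 1, tzinfo=timezone.utc)

QUERY = """
SELECT ZOBJECT.ZSTARTDATE, ZOBJECT.ZENDDATE, ZOBJECT.ZVALUESTRING,
       ZSTRUCTUREDMETADATA.Z_DKNOWPLAYINGMETADATAKEY__ARTIST,
       ZSTRUCTUREDMETADATA.Z_DKNOWPLAYINGMETADATAKEY__TITLE
FROM ZOBJECT
LEFT JOIN ZSTRUCTUREDMETADATA ON ZOBJECT.ZSTRUCTUREDMETADATA = ZSTRUCTUREDMETADATA.Z_PK
WHERE ZOBJECT.ZSTREAMNAME = '/media/nowPlaying'
ORDER BY ZOBJECT.ZSTARTDATE
"""


def now_playing_events(conn: sqlite3.Connection):
    """Yield one dict per /media/nowPlaying event, with UTC times and duration in seconds."""
    for start, end, app, artist, title in conn.execute(QUERY):
        yield {
            "start_utc": COCOA_EPOCH + timedelta(seconds=start),
            "end_utc": COCOA_EPOCH + timedelta(seconds=end),
            "seconds": end - start,
            "app": app,
            "artist": artist,
            "title": title,
        }
```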

As you can see from the following screenshot, the query provides us with a wealth of information, such as which app the user was using to view the media, the duration, and the name of the song or video:

Figure 9.8 – Extracting media viewing data from KnowledgeC.db

It is worth noting here that KnowledgeC.db doesn't just store playback events pertaining to media applications such as Apple Music or Spotify; it also logs any viewing activity that occurs through the Safari browser, including content that was viewed in private browsing mode.

Finally, if more context is required for an investigation, the CurrentPowerlog.PLSQL database can be queried to extract insights on any app that is utilizing the device's audio functions. In particular, the PLAUDIOAGENT_EVENTPOINT_AUDIOAPP table will provide the app, service name, or bundle ID for the process that is using the audio function.
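PowerLog schemas can differ between iOS versions, so rather than assuming column names, the examiner can first list the columns of PLAUDIOAGENT_EVENTPOINT_AUDIOAPP in the acquired copy of CurrentPowerlog.PLSQL and then write a query against what is actually there. A small Python helper for that step (the function name is illustrative):

```python
import sqlite3


def table_columns(conn: sqlite3.Connection, table: str):
    """Return the column names of a table, raising ValueError if the table does not exist."""
    known = {r[0] for r in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")}
    if table not in known:
        raise ValueError(f"table {table!r} not found")
    # PRAGMA statements do not accept bound parameters; the name was validated above
    return [row[1] for row in conn.execute(f'PRAGMA table_info("{table}")')]
```

For example, `table_columns(conn, "PLAUDIOAGENT_EVENTPOINT_AUDIOAPP")` returns the column list in schema order, which can then be used to select the timestamp and app or bundle ID fields present in that particular acquisition.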

By now, you should have a general understanding of what kind of media assets can be found on a typical iOS acquisition, what metadata can be extracted from iOS and EXIF artifacts, and how to analyze user viewing activity.

Summary

In this chapter, we learned all about photo, video, and audio forensics. First, we introduced the concept of media forensics to learn which artifacts an investigator should expect to find on an iOS device and where they are located.

Later on in the chapter, we focused on analyzing metadata from photos and videos. We introduced the Photos.sqlite database and discussed different options to extract data pertaining to the media files stored on the device. Then, we learned all about EXIF metadata, how an investigation can benefit from such data, and how to extract it using Windows, macOS, and Linux.

Finally, in the last section of this chapter, we discussed how to detect user viewing activity by using the events logged in the KnowledgeC.db database.

In the next chapter, we will learn how to analyze third-party applications.