Chapter 14

Stateless Application Patterns

THE AWS CERTIFIED DEVELOPER – ASSOCIATE EXAM TOPICS COVERED IN THIS CHAPTER INCLUDE, BUT ARE NOT LIMITED TO, THE FOLLOWING:

- Domain 1: Deployment

1.4 Deploy serverless applications.

1.4 Deploy serverless applications.- Domain 2: Security

2.1 Make authenticated calls to AWS services.

2.1 Make authenticated calls to AWS services. 2.2 Implement encryption using AWS services.

2.2 Implement encryption using AWS services. 2.3 Implement application authentication and authorization.

2.3 Implement application authentication and authorization.- Domain 3: Development with AWS Services

3.2 Translate functional requirements into application design.

3.2 Translate functional requirements into application design. 3.3 Implement application design into application code.

3.3 Implement application design into application code. 3.4 Write code that interacts with AWS services by using APIs, SDKs, and AWS CLI.

3.4 Write code that interacts with AWS services by using APIs, SDKs, and AWS CLI.

Introduction to the Stateless Application Pattern

In previous chapters, you were introduced to compute, networking, databases, and storage on the AWS Cloud. This chapter covers the fully managed services that you use to build stateless applications on AWS. Scalability is an important consideration when you create and deploy applications that are highly available, and stateless applications are easier to scale.

When users or services interact with an application, they often perform a sequence of interactions that form a session. A stateless application is one that requires no knowledge of previous interactions and stores no session information. Given the same input, an application can provide the same response to any user.

A stateless application can scale horizontally because requests can be serviced by any of the available compute resources, such as Amazon Elastic Compute Cloud (Amazon EC2) instances or AWS Lambda functions. With no session data sharing, you can add more compute resources as necessary. When that compute capacity is no longer needed, you can safely terminate any individual resource. Those resources do not need to be aware of the presence of their peers, and they only need a way to share the workload among them.

This chapter discusses the AWS services that provide a mechanism for persisting state outside of the application: Amazon DynamoDB, Amazon Simple Storage Service (Amazon S3), Amazon ElastiCache, and Amazon Elastic File System (Amazon EFS).

Amazon DynamoDB

Amazon DynamoDB is a fast and flexible NoSQL database service that applications use that require consistent, single-digit millisecond latency at any scale. A fully managed NoSQL database supports both document and key-value store models. DynamoDB is ideal for mobile, web, gaming, ad tech, and Internet of Things (IoT) applications. DynamoDB provides an effective solution for sharing session states across web servers, Amazon EC2 instances, or computing nodes.

Using Amazon DynamoDB to Store State

DynamoDB provides fast and predictable performance with seamless scalability. It enables you to offload the administrative burdens of operating and scaling a distributed database, including hardware provisioning, setup and configuration, replication, software patching, or cluster scaling. Also, DynamoDB offers encryption at rest, which reduces the operational tasks and complexity involved in protecting sensitive data.

With DynamoDB, you can create database tables that can store and retrieve any amount of data (collection) and serve any level of request traffic. You can scale up or scale down the throughput capacity of your tables without downtime or performance degradation and use the AWS Management Console to monitor resource utilization and performance metrics. DynamoDB provides on-demand backup capability to create full backups of tables for long-term retention and archives for regulatory compliance. Use DynamoDB to delete expired items from tables automatically to reduce both storage usage and the cost to store irrelevant data.

DynamoDB automatically spreads data and traffic for tables over a sufficient number of servers to handle throughput and storage requirements while maintaining consistent and fast performance. All of your data is stored on solid-state drive (SSDs) and automatically replicates across multiple Availability Zones in an AWS Region, providing built-in high availability and data durability. You can use global tables to keep DynamoDB tables synchronized across AWS Regions, and you can access this service using the DynamoDB console, the AWS Command Line Interface (AWS CLI), a generic web services Application Programming Interface (API), or any programming language that the AWS software development kit (AWS SDK) supports.

Tables, items, and attributes are core components of DynamoDB. A table is a collection of items, and each item is a collection of attributes. For example, you could have a table called Cars, which stores information about vehicles. DynamoDB uses primary keys to identify each item in a table (e.g., Ford) uniquely and secondary indexes to provide more querying flexibility (e.g., Mustang). You can use Amazon DynamoDB Streams to capture data modification events in DynamoDB tables.

Primary Key, Partition Key, and Sort Key

When you create a table, you must configure both the table name and the primary key of the table. The primary key uniquely identifies each item in the table so that no two items have the same key. DynamoDB supports two different kinds of primary keys: a partition key and sort key.

A partition key is a simple primary key, composed of only a partition key attribute. The partition key of an item is also known as its hash attribute. The term hash attribute derives from the use of an internal hash function in DynamoDB that evenly distributes data items across partitions based on their partition key values. DynamoDB uses the partition key’s value as input to an internal hash function. The output from the hash function determines the partition (physical storage internal to DynamoDB) in which the item will be stored.

![]() In a table that has only a partition key, no two items can have the same partition key value.

In a table that has only a partition key, no two items can have the same partition key value.

You can also create a primary key as a composite primary key, consisting of a partition key (first attribute) and a sort key (second attribute).

The sort key of an item is also known as its range attribute. The term range attribute derives from the way that DynamoDB stores items with the same partition key physically close together, in sorted order, by the sort key value.

Each primary key attribute must be a scalar, meaning that it can hold only a single value. The only data types allowed for primary key attributes are string, number, or binary. There are no such restrictions for other, nonkey attributes.

Best Practices for Designing and Using Partition Keys

When DynamoDB stores data, it divides table items into multiple physical partitions primarily based on the partition key values, and it distributes the table data accordingly. The primary key that uniquely identifies each item in a DynamoDB table can be either simple (partition key only) or composite (partition key combined with a sort key). Partition key values determine the logical partitions in which a table’s data is stored, which affects the table’s underlying physical partitions. Efficient partition key design keeps your workload spread evenly across these partitions.

A single physical DynamoDB partition supports a maximum of 3,000 read-capacity units (RCUs) or 1,000 write-capacity units (WCUs). Provisioned I/O capacity for the table is divided evenly among all physical partitions. Therefore, design your partition keys to spread I/O requests as evenly as possible across the table’s partitions to prevent “hot spots” that use provisioned I/O capacity inefficiently.

Example 1: Hotspot

If a table has a small number of heavily accessed partition key values (possibly even one heavily used partition key value), request traffic is concentrated on a small number of partitions, or only one partition. If the workload is heavily unbalanced, meaning that it is disproportionately focused on one or a few partitions, the requests do not achieve the overall provisioned throughput level.

![]() To achieve the maximum DynamoDB throughput, create tables where the partition key has a large number of distinct values, and values are requested fairly uniformly, as randomly as possible.

To achieve the maximum DynamoDB throughput, create tables where the partition key has a large number of distinct values, and values are requested fairly uniformly, as randomly as possible.

Designing Partition Keys to Distribute Even Workloads

The partition key portion of a table’s primary key determines the logical partitions in which a table’s data is stored, and it affects the underlying physical partitions. Provisioned I/O capacity for the table is divided evenly among these physical partitions, but a partition key design that does not distribute I/O requests evenly can create “hot” partitions that use your provisioned I/O capacity inefficiently and result in throttling.

The optimal usage of a table’s provisioned throughput depends on both the workload patterns of individual items and the partition key design. This does not mean that you must access all partition key values to achieve an efficient throughput level or even that the percentage of accessed partition key values must be high. It does mean that the more distinct partition key values that your workload accesses, the more those requests are spread across the partitioned space. You will use your provisioned throughput more efficiently as the ratio of partition key values accessed to the total number of partition key values increases.

Table 14.1 provides a comparison of the provisioned throughput efficiency of some common partition key schemas.

Table 14.1 Partition Key Schemas

| Partition Key Value | Uniformity |

| User Identification (ID) where the application has many users | Good |

| Status Code where there are only a few possible status codes | Bad |

| Item Creation Date rounded to the nearest period (day, hour, or minute) | Bad |

| Device ID where each device accesses data at relatively similar intervals | Good |

| Device ID where even if there are many devices being tracked, one is by far more popular than all the others | Bad |

If a single table has only a small number of partition key values, consider distributing your write operations across more distinct partition key values, and structure the primary key elements to avoid one “hot” (heavily requested) partition key value that slows overall performance.

Consider a table with a composite primary key. The partition key represents the item’s creation date, rounded to the nearest day. The sort key is an item identifier. On a given day, all new items are written to that single partition key value and corresponding physical partition.

If the table fits entirely into a single partition (considering the growth of your data over time), and if your application’s read and write throughput requirements do not exceed the read and write capabilities of a single partition, your application does not encounter any unexpected throttling because of partitioning.

However, if you anticipate your table scaling beyond a single partition, architect your application so that it can use more of the table’s full provisioned throughput.

Using Write Shards to Distribute Workloads Evenly

A shard is a uniquely identified group of stream records within a stream. To distribute writes better across a partition key space in DynamoDB, expand the space. You can add a random number to the partition key values to distribute the items among partitions, or you can use a number that is calculated based on what you want to query.

Random Suffixes in Shards

To distribute loads more evenly across a partition key space, add a random number to the end of the partition key values and then randomize the writes across the larger space. For example, if a partition key represents today’s date, choose a random number from 1 through 200, and add it as a suffix to the date. This yields partition key values such as 2018-07-09.1, 2014-07-09.2, and so on, through 2018-07-09.200. Because you are randomizing the partition key, the writes to the table on each day are spread evenly across multiple partitions. This results in better parallelism and higher overall throughput.

To read all of the items for a given day, you would have to query the items for all of the suffixes and then merge the results. For example, first issue a Query request for the partition key value 2018-07-09.1, then another Query for 2018-07-09.2, and so on, through 2018-07-09.200. When complete, your application then merges the results from all Query requests.

Calculated Suffixes in Shards

A random strategy can improve write throughput, but it is difficult to read a specific item because you do not know which suffix value was written to the item. To make it easier to read individual items, instead of using a random number to distribute the items among partitions, use a number that you can calculate based on what you want to query.

Consider the previous example, where a table uses today’s date in the partition key. Now suppose that each item has an accessible OrderId attribute and that you most often need to find items by OrderId in addition to date. Before your application writes the item to the table, it can calculate a hash suffix based on the OrderId, append it to the partition key date, and generate numbers from 1 through 200 that evenly distribute, similar to what the random strategy produces. You can use a simple calculation, such as the product of the UTF-8 code point values, for the characters in the OrderId, modulo 200, + 1. The partition key value would then be the date concatenated with the calculation result.

With this strategy, the writes are spread evenly across the partition key values and across the physical partitions. You can easily perform a GetItem operation for a particular item and date because you can calculate the partition key value for a specific OrderId value.

To read all of the items for a given day, you must query each of the 2018-07-09.N keys (where N is 1 through 200), and your application then merges the results. With this strategy, you avoid a single “hot” partition key value taking the entire workload.

Items

Each table contains zero or more items. An item is a group of attributes that is uniquely identifiable among all other entities in the table. For example, in a People table, each item represents a person, and in a Cars table, each item represents one vehicle. Items in DynamoDB are similar to rows, records, or tables in other database systems. However, in DynamoDB, there is no limit to the number of items that you can store in a table.

Attributes

Each item in a table is composed of one or more attributes. An attribute is a fundamental data element, something that does not need to be broken down any further. For example, an item in a People table contains attributes called PersonID, LastName, FirstName, and so on. For a Department table, an item may have attributes such as DepartmentID, Name, Manager, and so on. Attributes in DynamoDB are similar in many ways to fields or columns in other database systems.

The naming rules for DynamoDB tables are as follows:

- All names must be encoded using UTF-8 and be case-sensitive.

- Table names must be between 3 and 255 characters long and can contain only the following characters:

- a–z

- A–Z

- 0–9

- _ (underscore)

- – (dash)

- . (period)

- Attribute names must be between 1 and 255 characters long.

- Each item in the table has a unique identifier, or primary key, which distinguishes the item from all others in the table. In a People table, the primary key consists of one attribute, PersonID.

- Other than the primary key, the People table is schema-less, meaning that you are not required to define the attributes or their data types beforehand. Each item can have its own distinct attributes.

- Most of the attributes are scalar, meaning that they can have only one value. Strings and numbers are common scalars.

- Some of the items have a nested attribute. For example, in a People table, the Address attribute may have nested attributes such as Street, City, and PostalCode. DynamoDB supports nested attributes up to 32 levels deep.

Data Types

DynamoDB supports several data types for attributes within a table.

Scalar

A scalar type can represent exactly one value. The scalar types are number, string, binary, Boolean, and null.

Number Numbers can be positive, negative, or zero and can have up to 38 digits of precision. Exceeding this limit results in an exception. Numbers are presented as variable length. Leading and trailing zeros are trimmed. All numbers are sent as strings to maximize compatibility across languages and libraries. DynamoDB treats them as number-type attributes for mathematical operations. You can use the number data type to represent a date or a timestamp. One way to do this is with the epoch time, the number of seconds since 00:00:00 Coordinated Universal Time (UTC) on January 1, 1970.

String Strings are Unicode with UTF-8 binary encoding. The length of a string must be greater than zero, and it is constrained by the maximum DynamoDB item size limit of 400 KB. If a primary key attribute is a string type, the following additional constraints apply:

- For a simple primary key, the maximum length of the first attribute value (partition key) is 2,048 bytes.

- For a composite primary key, the maximum length of the second attribute value (sort key) is 1,024 bytes.

- DynamoDB collates and compares strings using the bytes of the underlying UTF-8 string encoding. For instance, “a” (0x61) is greater than “A” (0x41).

You can use the string data type to represent a date or a timestamp. One way to do this is to use ISO 8601 strings as follows:

- 2018-04-19T12:34:56Z

- 2018-02-31T10:22:18Z

- 2017-05-08T12:22:46Z

Binary Binary type attributes can store any binary data, such as compressed text, encrypted data, or images. Whenever DynamoDB compares binary values, it treats each byte of the binary data as unsigned. The length of a binary attribute must be greater than zero, and it is constrained by the maximum DynamoDB item size limit of 400 KB. If a primary key attribute is a binary type, the following additional constraints apply:

- For a simple primary key, the maximum length of the first attribute value (partition key) is 2,048 bytes.

- For a composite primary key, the maximum length of the second attribute value (sort key) is 1,024 bytes.

Applications must encode binary values in base64-encoded format before sending them to DynamoDB. Upon receipt, DynamoDB decodes the data into an unsigned byte array and uses it as the length of the binary attribute.

Boolean A Boolean type attribute can store one of two values: true or false.

Null A null attribute is one with an unknown or undefined state.

Document

There are two document types, list and map, which you can nest within each other to represent complex data structures up to 32 levels deep. There is no limit on the number of values in a list or a map, as long as the item containing the values fits within the DynamoDB item size limit of 400 KB.

![]() An attribute value cannot be an empty string or an empty set; however, empty lists and maps are allowed.

An attribute value cannot be an empty string or an empty set; however, empty lists and maps are allowed.

List A list type attribute can store an ordered collection of values. Lists are enclosed in square brackets [ … ] and are similar to a JavaScript Object Notation (JSON) array. There are no restrictions on the data types that can be stored in a list element, and the elements in a list element can be of different types. Here is an example of a list with strings and numbers:

MyFavoriteThings: ["Thriller", "Purple Rain", 1983, 2]Map A map type attribute can store an unordered collection of name/value pairs. Maps are enclosed in curly braces { … } and are similar to a JSON object. There are no restrictions on the data types that you can store in a map element, and elements in a map do not have to be the same type. Maps are ideal for storing JSON documents in DynamoDB. The following example shows a map that contains a string, a number, and a nested list that contains another map:

{Location: "Labrynth",MagicStaff: 1,MagicRings: ["The One Ring",{"ElevenKings: { Quantity : 3},"DwarfLords: { Quantity : 7},"MortalMen: { Quantity : 9}}]}

![]() DynamoDB enables you to work with individual elements within maps—even if those elements are deeply nested.

DynamoDB enables you to work with individual elements within maps—even if those elements are deeply nested.

Set DynamoDB supports types that represent sets of number, string, or binary values. There is no limit on the number of values in a set, as long as the item containing the value fits within the DynamoDB 400 KB item size limit. Each value within a set must be unique. The order of the values within a set is not preserved. Applications must not rely on the order of elements within the set. DynamoDB does not support empty sets.

![]() All of the elements within a set must be of the same type.

All of the elements within a set must be of the same type.

Amazon DynamoDB Tables

DynamoDB global tables provide a fully managed solution for deploying a multiregion, multi-master database, without having to build and maintain your own replication solution. When you create a global table, you configure the AWS Regions where you want the table to be available. DynamoDB performs all of the necessary tasks to create identical tables in these regions and propagate ongoing data changes to all of the regions.

DynamoDB global tables are ideal for massively scaled applications, with globally dispersed users. In such an environment, you can expect fast application performance. Global tables provide automatic multi-master replication to AWS Regions worldwide, so you can deliver low-latency data access to your users no matter where they are located.

There is no practical limit on a table’s size. Tables are unconstrained in terms of the number of items or the number of bytes. For any AWS account, there is an initial limit of 256 tables per region.

Provisioned Throughput

With DynamoDB, you can create database tables that store and retrieve any amount of data and serve any level of request traffic. You can scale your table’s throughput capacity up or down without downtime or performance degradation, and you can use the AWS Management Console to monitor resource utilization and performance metrics.

![]() For any table or global secondary index, the minimum settings for provisioned throughput are one read capacity unit and one write capacity unit.

For any table or global secondary index, the minimum settings for provisioned throughput are one read capacity unit and one write capacity unit.

AWS places some default limits on the throughput that you can provision. These are the limits unless you request a higher amount.

![]() You can apply all of the available throughput of an account to a single table or across multiple tables.

You can apply all of the available throughput of an account to a single table or across multiple tables.

Throughput Capacity for Reads and Writes in Tables and Indexes

When you create a table or index in DynamoDB, you must configure your capacity requirements for read and write activity. If you define the throughput capacity in advance, DynamoDB can reserve the necessary resources to meet the read and write activity that your application requires, while it ensures consistent, low-latency performance.

Throughput capacity is specified in terms of read capacity units or write capacity units:

- One read capacity unit represents one strongly consistent read per second, or two eventually consistent reads per second, for an item up to 4 KB in size. If you need to read an item larger than 4 KB, DynamoDB must consume additional read capacity units. The total number of read capacity units required depends on both the item size and whether you want an eventually consistent read or strongly consistent read.

- One write capacity unit represents one write per second for an item up to 1 KB in size. If you need to write an item larger than 1 KB, DynamoDB must consume additional write capacity units. The total number of write capacity units required depends on the item size.

- For example, if you create a table with five read capacity units and five write capacity units, your application could do the following:

- Perform strongly consistent reads of up to 20 KB per second (4 KB × 5 read capacity units)

- Perform eventually consistent reads of up to 40 KB per second (twice as much read throughput)

- Write up to 5 KB per second (1 KB × 5 write capacity units)

If your application reads or writes larger items (up to the DynamoDB maximum item size of 400 KB), it will consume more capacity units.

If your read or write requests exceed the throughput settings for a table, DynamoDB can throttle that request. DynamoDB can also throttle read requests exceeds for an index. Throttling prevents your application from consuming too many capacity units. When a request is throttled, it fails with HTTP 400 code (Bad Request) and a ProvisionedThroughputExceededException. The AWS SDKs have built-in support for retrying throttled requests, so you do not need to write this logic yourself.

You can use the AWS Management Console to monitor your provisioned and actual throughput and modify your throughput settings if necessary.

DynamoDB provides the following mechanisms for managing throughput:

- DynamoDB automatic scaling

- Provisioned throughput

- Reserved capacity

- AWS Lambda triggers in DynamoDB streams

Setting Initial Throughput Settings

Every application has different requirements for reading and writing from a database. When you determine the initial throughput settings for a DynamoDB table, take the following attributes into consideration:

Item sizes Some items are small enough that they can be read or written by using a single capacity unit. Larger items require multiple capacity units. By estimating the sizes of the items that will be in your table, you can configure accurate settings for your table’s provisioned throughput.

Expected read and write request rates In addition to item size, estimate the number of reads and writes to perform per second.

Read consistency requirements Read capacity units are based on strongly consistent read operations, which consume twice as many database resources as eventually consistent reads. Determine whether your application requires strongly consistent reads, or whether it can relax this requirement and perform eventually consistent reads instead.

![]() Read operations in DynamoDB are by default eventually consistent, but you can request strongly consistent reads for these operations if necessary.

Read operations in DynamoDB are by default eventually consistent, but you can request strongly consistent reads for these operations if necessary.

Item Sizes and Capacity Unit Consumption

Before you choose read and write capacity settings for your table, understand your data and how your application will access it. These inputs help you determine your table’s overall storage and throughput needs and how much throughput capacity your application will require. Except for the primary key, DynamoDB tables are schemaless, so the items in a table can all have different attributes, sizes, and data types. The total size of an item is the sum of the lengths of its attribute names and values. You can use the following guidelines to estimate attribute sizes:

- Strings are Unicode with UTF-8 binary encoding. The size of a string is as follows:

(length of attribute name) + (number of UTF-8-encoded bytes)

- Numbers are variable length, with up to 38 significant digits. Leading and trailing zeroes are trimmed.

- The size of a number is approximately as follows:

(length of attribute name) + (1 byte per two significant digits) + (1 byte)

- A binary value must be encoded in base64 format before it can be sent to DynamoDB, but the value’s raw byte length is used for calculating size. The size of a binary attribute is as follows:

(length of attribute name) + (number of raw bytes)

- The size of a null attribute or a Boolean attribute is as follows:

(length of attribute name) + (1 byte)

- An attribute of type List or Map requires 3 bytes of overhead, regardless of its contents. The size of a List or Map is as follows:

(length of attribute name) + sum (size of nested elements) + (3 bytes).

- The size of an empty List or Map is as follows:

(length of attribute name) + (3 bytes)

![]() Choose short attribute names rather than long ones. This helps to optimize capacity unit consumption and reduce the amount of storage required for your data.

Choose short attribute names rather than long ones. This helps to optimize capacity unit consumption and reduce the amount of storage required for your data.

Capacity Unit Consumption for Reads

The following describes how read operations for DynamoDB consume read capacity units:

GetItem Reads a single item from a table. To determine the number of capacity units GetItem will consume, take the item size and round it up to the next 4 KB boundary. If you specified a strongly consistent read, this is the number of capacity units required. For an eventually consistent read (the default), take this number and divide it by 2.

- For example, if you read an item that is 3.5 KB, DynamoDB rounds the item size to 4 KB. If you read an item of 10 KB, DynamoDB rounds the item size to 12 KB.

BatchGetItem Reads up to 100 items, from one or more tables. DynamoDB processes each item in the batch as an individual GetItem request, so DynamoDB first rounds up the size of each item to the next 4-KB boundary and then calculates the total size. The result is not necessarily the same as the total size of all the items.

- For example, if BatchGetItem reads a 1.5-KB item and a 6.5-KB item, DynamoDB calculates the size as 12 KB (4 KB + 8 KB), not 8 KB (1.5 KB + 6.5 KB).

Query Reads multiple items that have the same partition key value. All of the items returned are treated as a single read operation, whereby DynamoDB computes the total size of all items and then rounds up to the next 4-KB boundary.

- For example, suppose that your query returns 10 items whose combined size is 40.8 KB. Amazon DynamoDB rounds the item size for the operation to 44 KB. If a query returns 1,500 items of 64 bytes each, the cumulative size is 96 KB.

Scan Reads all items in a table. DynamoDB considers the size of the items that are evaluated, not the size of the items returned by the scan.

If you perform a read operation on an item that does not exist, DynamoDB will still consume provisioned read throughput. A request for a strongly consistent read consumes one read capacity unit, whereas a request for an eventually consistent read consumes 0.5 of a read capacity unit.

For any operation that returns items, request a subset of attributes to retrieve. However, doing so has no impact on the item size calculations. In addition, Query and Scan can return item counts instead of attribute values. Getting the count of items uses the same quantity of read capacity units and is subject to the same item size calculations, because DynamoDB has to read each item to increment the count:

Read operations and read consistency The preceding calculations assumed requests for strongly consistent reads. For a request for eventually consistent reads, the operation consumes only half of the capacity units. For example, of an eventually consistent read, if the total item size is 80 KB, the operation consumes only 10 capacity units.

Read consistency for Scan A Scan operation performs eventually consistent reads, by default. This means that the Scan results might not reflect changes as the result of recently completed PutItem or UpdateItem operations. If you require strongly consistent reads, when the Scan begins, set the ConsistentRead parameter to true in the Scan request. This ensures that all of the write operations that completed before the Scan began are included in the Scan response. Setting ConsistentRead to true can be useful in table backup or replication scenarios. With DynamoDB streams, to obtain a consistent copy of the data in the table, first use Scan with ConsistentRead set to true. During the Scan, DynamoDB streams record any additional write activity that occurs on the table. After the Scan completes, apply the write activity from the stream to the table.

![]() A Scan operation with ConsistentRead set to true consumes twice as many read capacity units as compared to keeping ConsistentRead at the default value (false).

A Scan operation with ConsistentRead set to true consumes twice as many read capacity units as compared to keeping ConsistentRead at the default value (false).

Capacity Unit Consumption for Writes

The following describes how DynamoDB write operations consume write capacity units:

PutItem Writes a single item to a table. If an item with the same primary key exists in the table, the operation replaces the item. For calculating provisioned throughput consumption, the item size that matters is the larger of the two.

UpdateItem Modifies a single item in the table. DynamoDB considers the size of the item as it appears before and after the update. The provisioned throughput consumed reflects the larger of these item sizes. Even if you update only a subset of the item’s attributes, UpdateItem will consume the full amount of provisioned throughput (the larger of the “before” and “after” item sizes).

DeleteItem Removes a single item from a table. The provisioned throughput consumption is based on the size of the deleted item.

BatchWriteItem Writes up to 25 items to one or more tables. DynamoDB processes each item in the batch as an individual PutItem or DeleteItem request (updates are not supported). DynamoDB first rounds up the size of each item to the next 1-KB boundary and then calculates the total size. The result is not necessarily the same as the total size of all the items. For example, if BatchWriteItem writes a 500-byte item and a 3.5-KB item, DynamoDB calculates the size as 5 KB (1 KB + 4 KB), not 4 KB (500 bytes + 3.5 KB).

For PutItem, UpdateItem, and DeleteItem operations, DynamoDB rounds the item size up to the next 1 KB. If you put or delete an item of 1.6 KB, DynamoDB rounds the item size up to 2 KB.

PutItem, UpdateItem, and DeleteItem allow conditional writes, whereby you configure an expression that must evaluate to true for the operation to succeed. If the expression evaluates to false, DynamoDB consumes write capacity units from the table.

For an existing item, the number of write capacity units consumed depends on the size of the new item. For example, a failed conditional write of a 1-KB item would consume one write capacity unit. If the new item were twice that size, the failed conditional write would consume two write capacity units.

![]() For a new item, DynamoDB consumes one write capacity unit.

For a new item, DynamoDB consumes one write capacity unit.

If a ConditionExpression evaluates to false during a conditional write, DynamoDB will consume write capacity from the table based on the following conditions:

- If the item does not currently exist in the table, DynamoDB consumes one write capacity unit.

- If the item does exist, then the number of write capacity units consumed depends on the size of the item. For example, a failed conditional write of a 1-KB item would consume one write capacity unit. If the item were twice that size, the failed conditional write would consume two write capacity units.

![]() Write operations consume write capacity units only. Write operations do not consume read capacity units.

Write operations consume write capacity units only. Write operations do not consume read capacity units.

A failed conditional write returns a ConditionalCheckFailedException. When this occurs, you do not receive any information in the response about the write capacity that was consumed. However, you can view the ConsumedWriteCapacityUnits metric for the table in Amazon CloudWatch.

To return the number of write capacity units consumed during a conditional write, use the ReturnConsumedCapacity parameter with any of the following attributes:

Total Returns the total number of write capacity units consumed.

Indexes Returns the total number of write capacity units consumed with subtotals for the table and any secondary indexes that were affected by the operation.

None No write capacity details are returned (default).

![]() Unlike a global secondary index, a local secondary index shares its provisioned throughput capacity with its table. Read and write activity on a local secondary index consumes provisioned throughput capacity from the table.

Unlike a global secondary index, a local secondary index shares its provisioned throughput capacity with its table. Read and write activity on a local secondary index consumes provisioned throughput capacity from the table.

Capacity Unit Sizes

One read capacity unit = one strongly consistent read per second, or two eventually consistent reads per second, for items up to 4 KB in size.

One write capacity unit = one write per second, for items up to 1 KB in size.

Creating Tables to Store the State

Before you store state in DynamoDB, you must create a table. To work with DynamoDB, your application must use several API operations and be organized by category.

Control Plane

Control plane operations let you create and manage DynamoDB tables and work with indexes, streams, and other objects that are dependent on tables.

CreateTable Creates a new table. You can create one or more secondary indexes and enable DynamoDB Streams for the table.

DescribeTable Returns information about a table, such as its primary key schema, throughput settings, and index information.

ListTables Returns the names of all of the tables in a list.

UpdateTable Modifies the settings of a table or its indexes, creates or remove new indexes on a table, or modifies settings for a table in DynamoDB Streams.

DeleteTable Removes a table and its dependent objects from DynamoDB.

Data Plane

Data plane operations let you perform create/read/update/delete (CRUD) actions on data in a table. Some data plane operations also enable you to read data from a secondary index.

Creating Data

The following data plane operations enable you to perform create actions on data in a table:

PutItem Writes a single item to a table. You must configure the primary key attributes, but you do not have to configure other attributes.

BatchWriteItem Writes up to 25 items to a table. This is more efficient than multiple PutItem commands because your application needs only a single network round trip to write the items. You can also use BatchWriteItem to delete multiple items from one or more tables.

Performing Batch Operations

DynamoDB provides the BatchGetItem and BatchWriteItem operations for applications that need to read or write multiple items. Use these operations to reduce the number of network round trips from your application to DynamoDB. In addition, DynamoDB performs the individual read or write operations in parallel. Your applications benefit from this parallelism without having to manage concurrency or threading.

The batch operations are wrappers around multiple read or write requests. If a BatchGetItem request contains five items, DynamoDB performs five GetItem operations on your behalf. Similarly, if a BatchWriteItem request contains two put requests and four delete requests, DynamoDB performs two PutItem and four DeleteItem requests.

In general, a batch operation does not fail unless all requests in that batch fail. If you perform a BatchGetItem operation, but one of the individual GetItem requests in the batch fails, the BatchGetItem returns the keys and data from the GetItem request that failed. The other GetItem requests in the batch are not affected.

BatchGetItem A single BatchGetItem operation can contain up to 100 individual GetItem requests and can retrieve up to 16 MB of data. In addition, a BatchGetItem operation can retrieve items from multiple tables.

BatchWriteItem The BatchWriteItem operation can contain up to 25 individual PutItem and DeleteItem requests and can write up to 16 MB of data. The maximum size of an individual item is 400 KB. In addition, a BatchWriteItem operation can put or delete items in multiple tables.

![]() BatchWriteItem does not support UpdateItem requests.

BatchWriteItem does not support UpdateItem requests.

Reading Data

The following data plane operations enable you to perform read actions on data in a table:

GetItem Retrieves a single item from a table. You must configure the primary key for the item that you want. You can retrieve the entire item or only a subset of its attributes.

BatchGetItem Retrieves up to 100 items from one or more tables. This is more efficient than calling GetItem multiple times because your application needs only a single network round trip to read the items.

Query Retrieves all items that have a specific partition key. You must configure the partition key value. You can retrieve entire items or only a subset of their attributes. You can apply a condition to the sort key values so that you retrieve only a subset of the data that has the same partition key.

![]() You can use the Query operation on a table or index if the table or index has both a partition key and a sort key.

You can use the Query operation on a table or index if the table or index has both a partition key and a sort key.

Scan Retrieves all the items in the table or index. You can retrieve entire items or only a subset of their attributes. You can use a filter condition to return only the values that you want and discard the rest.

Updating Data

UpdateItem modifies one or more attributes in an item. You must configure the primary key for the item that you want to modify. You can add new attributes and modify or remove existing attributes. You can also perform conditional updates so that the update is successful only when a user-defined condition is met. You can also implement an atomic counter, which increments or decrements a numeric attribute without interfering with other write requests.

Deleting Data

The following data plane operations enable you to perform delete actions on data in a table:

DeleteItem Deletes a single item from a table. You must configure the primary key for the item that you want to delete.

BatchDeleteItem Deletes up to 25 items from one or more tables. This is more efficient than multiple DeleteItem calls, because your application needs only a single network round trip. You can also use BatchWriteItem to add multiple items to one or more tables.

Return Values

In some cases, you may want DynamoDB to return certain attribute values as they appeared before or after you modified them. The PutItem, UpdateItem, and DeleteItem operations have a ReturnValues parameter that you can use to return the attribute values before or after they are modified. The default value for ReturnValues is None, meaning that DynamoDB will not return any information about attributes that were modified.

The following are additional settings for ReturnValues, organized by DynamoDB API operation:

PutItem The PutItem action creates a new item or replaces an old item with a new item. You can return the item’s attribute values in the same operation by using the ReturnValues parameter.

ReturnValues: ALL_OLD

- If you overwrite an existing item, ALL_OLD returns the entire item as it appeared before the overwrite.

- If you write a nonexistent item, ALL_OLD has no effect.

UpdateItem The most common use for UpdateItem is to update an existing item. However, UpdateItem actually performs an upsert, meaning that it will automatically create the item if it does not already exist.

ReturnValues: ALL_OLD

- If you update an existing item, ALL_OLD returns the entire item as it appeared before the update.

- If you update a nonexistent item (upsert), ALL_OLD has no effect.

ReturnValues: ALL_NEW

- If you update an existing item, ALL_NEW returns the entire item as it appeared after the update.

- If you update a nonexistent item (upsert), ALL_NEW returns the entire item.

ReturnValues: UPDATED_OLD

- If you update an existing item, UPDATED_OLD returns only the updated attributes as they appeared before the update.

- If you update a nonexistent item (upsert), UPDATED_OLD has no effect.

ReturnValues: UPDATED_NEW

- If you update an existing item, UPDATED_NEW returns only the affected attributes as they appeared after the update.

- If you update a nonexistent item (upsert), UPDATED_NEW returns only the updated attributes as they appear after the update.

DeleteItem

The DeleteItem deletes a single item in a table by primary key. You can perform a conditional delete operation that deletes the item if it exists, or if it has an expected attribute value.

ReturnValues: ALL_OLD

- If you delete an existing item, ALL_OLD returns the entire item as it appeared before you deleted it.

- If you delete a nonexistent item, ALL_OLD does not return any data.

Requesting Throttle and Burst Capacity

If your application performs reads or writes at a higher rate than your table can support, DynamoDB begins to throttle those requests. When DynamoDB throttles a read or write, it returns a ProvisionedThroughputExceededException to the caller. The application can then take appropriate action, such as waiting for a short interval before retrying the request.

The AWS SDKs provide built-in support for retrying throttled requests; you do not need to write this logic yourself. The DynamoDB console displays CloudWatch metrics for your tables so that you can monitor throttled read requests and write requests. If you encounter excessive throttling, consider increasing your table’s provisioned throughput settings.

In some cases, DynamoDB uses burst capacity to accommodate reads or writes in excess of your table’s throughput settings. With burst capacity, unexpected read or write requests can succeed where they otherwise would be throttled. Burst capacity is available on a best-effort basis, and DynamoDB does not verify that this capacity is always available.

Amazon DynamoDB Secondary Indexes: Global and Local

A secondary index is a data structure that contains a subset of attributes from a table. The index uses an alternate key to support Query operations in addition to making queries against the primary key. You can retrieve data from the index using a Query. A table can have multiple secondary indexes, which give your applications access to many different Query patterns.

You can create one or more secondary indexes on a table. DynamoDB does not require indexes, but indexes give your applications more flexibility when you query your data. After you create a secondary index on a table, you can read or scan data from the index in much the same way as you do from the table.

DynamoDB supports the following kinds of indexes:

Global secondary index A global secondary index is one with a partition key and sort key that can be different from those on the table.

Local secondary index A local secondary index is one that has the same partition key as the table but a different sort key.

![]() You can define up to five global secondary indexes and five local secondary indexes per table. You can also scan an index as you would a table.

You can define up to five global secondary indexes and five local secondary indexes per table. You can also scan an index as you would a table.

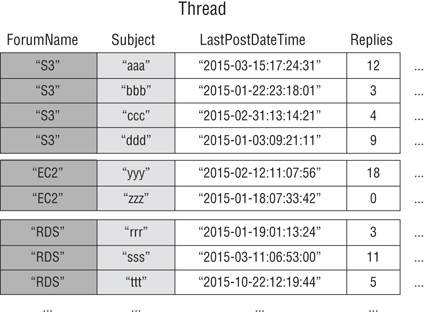

Figure 14.1 shows a local secondary index for a DynamoDB table of forum posts. The local secondary index allows you to query based on the date and time of the last post to a subject, as opposed to the subject itself.

Figure 14.1 Amazon DynamoDB indexes

Every secondary index is associated with exactly one table from which it obtains its data; it is the base table for the index. DynamoDB maintains indexes automatically. When you add, update, or delete an item in the base table, DynamoDB makes the change to the item in any indexes that belong to that table. When you create an index, you configure which attributes copy (project) from the base table to the index. At a minimum, DynamoDB projects the key attributes from the base table into the index.

When you create an index, you define an alternate key (partition key and sort key) for the index. You also define the attributes that you want to project from the base table into the index. DynamoDB copies these attributes into the index along with the primary key attributes from the base table. You can Query or Scan the index like a table.

Consider your application’s requirements when you determine which type of index to use. Table 14.2 shows the main differences between a global secondary index and a local secondary index.

Table 14.2 Global vs. Secondary Indexes

| Characteristic | Global Secondary Index | Local Secondary Index |

| Key Schema | The primary key can be simple (partition key) or composite (partition key and sort key). | The primary key must be composite (partition key and sort key). |

| Key Attributes | The index partition key and sort key (if present) can be any base table attributes of type string, number, or binary. | The partition key of the index is the same attribute as the partition key of the base table. The sort key can be any base table attribute of type string, number, or binary. |

| Size Restrictions Per Partition Key Value | No size restrictions. | No size restrictions. |

| Online Index Operations | Create at the same time that you create a table. You can also add a new global secondary index to an existing table or delete an existing global secondary index. | Create at the same time that you create a table. You cannot add a local secondary index to an existing table, nor can you delete any local secondary indexes that currently exist. |

| Queries and Partitions | Query over the entire table, across all partitions. | Query over a single partition, as specified by the partition key value in the query. |

| Read Consistency | Query on eventual consistency only. | Query eventual consistency or strong consistency. |

| Provisioned Throughput Consumption | Every global secondary index has its own provisioned throughput settings for read and write activity. Queries, scans, and updates consume capacity units from the index, not from the base table. | Query or scan consumes read capacity units from the base table. Writes and write updates consume write capacity units from the base table. |

| Projected Attributes | Queries or scans can only request the attributes that project into the index. DynamoDB will not fetch any attributes from the table. | Queries or scans can request attributes that do not project into the index. DynamoDB will automatically fetch those attributes from the table. |

If you write an item to a table, you do not have to configure the attributes for any global secondary index sort key. A table with many global secondary indexes incurs higher costs for write activity than tables with fewer indexes. For maximum query flexibility, you can create up to five global secondary indexes and up to five local secondary indexes per table.

To create more than one table with secondary indexes, you must do so sequentially. Create the first table and wait for it to become active, then create the next table and wait for it to become active, and so on. If you attempt to create more than one table with a secondary index at a time, DynamoDB responds with a LimitExceededException error.

For each secondary index, you must configure the following:

Type of index The type of index to be created can be either a global secondary index or a local secondary index.

Name of index The naming rules for indexes are the same as those for table. The name must be unique for the base table, but you can use the same name for indexes that you associate with different base tables.

Index key schema Every attribute in the index key schema must be a top-level attribute of type string, number, or binary. Other data types, including documents and sets, are not allowed. Other requirements for the key schema depend on the type of index:

Global secondary index For a global secondary index, the partition key can be any scalar attribute of the base table. A sort key is optional, and it can be any scalar attribute of the base table.

Local secondary index For a local secondary index, the partition key must be the same as the base table’s partition key, and the sort key must be a non-key base table attribute.

Additional attributes These attributes are in addition to the table’s key attributes, which automatically project into every index. You can project attributes of any data type, including scalars, documents, and sets.

Global secondary index For a global secondary index, you must configure read and write capacity unit settings. These provisioned throughput settings are independent of the base table’s settings.

Local secondary index For a local secondary index, you do not need to configure read and write capacity unit settings. Any read and write operations on a local secondary index draw from the provisioned throughput settings of its base table.

To generate a detailed list of secondary indexes on a table, use the DescribeTable operation. DescribeTable returns the name, storage size, and item counts for every secondary index on the table. These values refresh approximately every six hours.

Use the Query or Scan operation to access the data in a secondary index. You configure the base table name, index, attributes to return in the results, and any condition expressions or filters that you want to apply. DynamoDB returns the results in ascending or descending order.

![]() When you delete a table, all indexes associated with that table are deleted.

When you delete a table, all indexes associated with that table are deleted.

Global Secondary Indexes

Some applications may need to perform many kinds of queries, using a variety of different attributes as query criteria. To support these requirements, you can create one or more global secondary indexes and then issue query requests against these indexes.

To illustrate, Figure 14.2 displays the GameScores table, which tracks users and scores for a mobile gaming application. Each item in GameScores has a partition key (UserId) and a sort key (GameTitle). Figure 14.2 shows the organization of the items.

Figure 14.2 Game scores

To write a leaderboard application to display top scores for each game, you could generate a query that specifies the key attributes (UserId and GameTitle). While this would be efficient for the application to retrieve data from GameScores based on GameTitle only, it would need to use a Scan operation. As you add more items to the table, Scan operations of all the data becomes slow and inefficient, making it difficult to answer questions based on Figure 14.2, such as the following:

- What is the top score ever recorded for the game Meteor Blasters?

- Which user had the highest score for Galaxy Invaders?

- What was the highest ratio of wins versus losses?

To better implement queries on non-key attributes, create a global secondary index. A global secondary index contains a selection of attributes from the base table, but you organize them by a primary key that is different from that of the table. The index key does not require any of the key attributes from the table, nor does it require the same key schema as a table.

Every global secondary index must have a partition key and can have an optional sort key. The index key schema can be different from the base table schema. You could have a table with a simple primary key (partition key) and create a global secondary index with a composite primary key (partition key and sort key) or vice versa. The index key attributes can consist of any top-level string, number, or binary attributes from the base table but not other scalar types, document types, and set types.

![]() You can project other base table attributes into the index. When you query the index, DynamoDB can retrieve these projected attributes efficiently; however, global secondary index queries cannot fetch attributes from the base table. In a DynamoDB table, each key value must be unique. However, the key values in a global secondary index do not need to be unique. A global secondary index tracks data items only where the key attribute or attributes actually exist.

You can project other base table attributes into the index. When you query the index, DynamoDB can retrieve these projected attributes efficiently; however, global secondary index queries cannot fetch attributes from the base table. In a DynamoDB table, each key value must be unique. However, the key values in a global secondary index do not need to be unique. A global secondary index tracks data items only where the key attribute or attributes actually exist.

Attribute Projections

A projection is the set of attributes the secondary index copies from a table. While the partition key and sort key of the table project into the index, you can also project other attributes to support your application’s Query requirements. When you query an index, DynamoDB accesses any attribute in the projection as if those attributes were in a table of their own.

When you create a secondary index, configure the attributes that project into the index. DynamoDB provides the following options:

KEYS_ONLY Each item in the index consists only of the table partition key and sort key values, plus the index key values, and this results in the smallest possible secondary index.

INCLUDE Each item in the index consists only of the table partition key and sort key values plus the index key values, and it includes other non-key attributes that you configure.

ALL Includes all attributes from the source table, including other non-key attributes that you configure. Because the table data is duplicated in the index, an ALL projection results in the largest possible secondary index.

When you choose the attributes to project into a global secondary index, consider the provisioned throughput costs and the storage costs:

- Before accessing a few attributes with the lowest possible latency, consider projecting only those attributes into a global secondary index. The smaller the index, the less it costs to store it and the lower your write costs will be.

- If your application will frequently access non-key attributes, consider projecting those attributes into a global secondary index. The additional storage costs for the global secondary index offset the cost of performing frequent table scans.

- When you’re accessing most of the non-key attributes frequently, project these attributes, or even the entire base table, into a global secondary index. This provides maximum flexibility; however, your storage cost would increase or even double.

- If your application needs to query a table infrequently but must perform many writes or updates against the data in the table, consider projecting KEYS_ONLY. The global secondary index would be of minimal size but would still be available for query activity.

Querying a Global Secondary Index

Use the Query operation to access one or more items in a global secondary index. The query must specify the name of the base table, the name of the index, the attributes the query results return, and any query conditions that you want to apply. DynamoDB can return the results in ascending or descending order.

Consider the following example in which a query requests game data for a leaderboard application:

{"TableName": "GameScores","IndexName": "GameTitleIndex","KeyConditionExpression": "GameTitle = :v_title","ExpressionAttributeValues": {":v_title": {"S": "Meteor Blasters"}},"ProjectionExpression": "UserId, TopScore","ScanIndexForward": false}

In this query, the following actions occur:

- DynamoDB accesses GameTitleIndex, using the GameTitle partition key to locate the index items for Meteor Blasters. All index items with this partition key are next to each other for rapid retrieval.

- Within this game, DynamoDB uses the index to access the UserID and TopScore for this game.

- The query results return in descending order, as the ScanIndexForward parameter is set to false.

Scanning a Global Secondary Index

You can use the Scan operation to retrieve the data from a global secondary index. Provide the base table name and the index name in the request. With a Scan operation, DynamoDB reads the data in the index and returns it to the application. You can also request only some of the data and to discard the residual data. To do this, use the FilterExpression parameter of the Scan operation.

Synchronizing Data between Tables and Global Secondary Indexes

DynamoDB automatically synchronizes each global secondary index with its base table. When an application writes or deletes items in a table, any global secondary indexes on that table update asynchronously by using an eventually consistent model. Though applications seldom write directly to an index, understand the following the implications of how DynamoDB maintains these indexes:

- When you create a global secondary index, you configure one or more index key attributes and their data types.

- When you write an item to the base table, the data types for those attributes must match the index key schema’s data types.

- When you put or delete items in a table, the global secondary indexes on that table update in an eventually consistent fashion.

Long Global Index Propagations

Long Global Index Propagations

Under normal conditions, changes to the table data propagate to the global secondary indexes within a fraction of a second. However, if an unlikely failure scenario occurs, longer propagation delays may occur. Because of this, your applications need to anticipate and handle situations where a query on a global secondary index returns results that are not current.

Considerations for Provisioned Throughput of Global Secondary Indexes

When you create a global secondary index, you must configure read and write capacity units for the workload that you expect on that index. The provisioned throughput settings of a global secondary index are separate from those of its base table. A Query operation on a global secondary index consumes read capacity units from the index, not the base table.

When you put, update, or delete items in a table, the global secondary indexes on that table are updated. These index updates consume write capacity units from the index, not from the base table.

To view the provisioned throughput settings for a global secondary index, use the DescribeTable operation, and detailed information about the table’s global secondary indexes return.

![]() If you query a global secondary index and exceed its provisioned read capacity, your request throttles. If you perform heavy write activity on the table but a global secondary index on that table has insufficient write capacity, then the write activity on the table throttles.

If you query a global secondary index and exceed its provisioned read capacity, your request throttles. If you perform heavy write activity on the table but a global secondary index on that table has insufficient write capacity, then the write activity on the table throttles.

To avoid potential throttling, the provisioned write capacity for a global secondary index should be equal to or greater than the write capacity of the base table because new updates write to both the base table and global secondary index.

Read Capacity Units

Global secondary indexes support eventually consistent reads, each of which consume one-half of a read capacity unit. For example, a single global secondary index query can retrieve up to 8 KB (2 × 4 KB) per read capacity unit. For global secondary index queries, DynamoDB calculates the provisioned read activity in the same way that it does for queries against tables, except that the calculation is based on the sizes of the index entries instead of the size of the item in the base table. The number of read capacity units is the sum of all projected attribute sizes across all returned items; the result is then rounded up to the next 4-KB boundary.

The maximum size of the results returned by a Query operation is 1 MB; this includes the sizes of all of the attribute names and values across all returned items.

For example, if a global secondary index contains items with 2,000 bytes of data and a query returns 8 items, then the total size of the matching items is 2,000 bytes × 8 items = 16,000 bytes; this is then rounded up to the nearest 4-KB boundary. Because global secondary index queries are eventually consistent, the total cost is 0.5 × (16 KB/4 KB), or two read capacity units.

Write Capacity Units

When you add, update, or delete an item in a table and a global secondary index is affected by this, then the global secondary index consumes provisioned write capacity units for the operation. The total provisioned throughput cost for a write consists of the sum of the write capacity units consumed by writing to the base table and those consumed by updating the global secondary indexes. If a write to a table does not require a global secondary index update, then no write capacity is consumed from the index.

For a table write to succeed, the provisioned throughput settings for the table and all of its global secondary indexes must have enough write capacity to accommodate the write; otherwise, the write to the table will throttle.

Factors Affecting Cost of Writes

The cost of writing an item to a global secondary index depends on the following factors:

- If you write a new item to the table that defines an indexed attribute or you update an existing item to define a previously undefined indexed attribute, one write operation is required to put the item into the index.

- If an update to the table changes the value of an indexed key attribute (from A to B), two writes are required—one to delete the previous item from the index and another write to put the new item into the index.

- If an item was present in the index, but a write to the table caused the indexed attribute to be deleted, one write is required to delete the old item projection from the index.

- If an item is not present in the index before or after the item is updated, there is no additional write cost for the index.

- If an update to the table changes the value of only projected attributes in the index key schema but does not change the value of any indexed key attribute, then one write is required to update the values of the projected attributes into the index.

All of these factors assume that the size of each item in the index is less than or equal to the 1-KB item size for calculating write capacity units. Larger index entries require additional write capacity units. Minimize your write costs by considering which attributes your queries must return and projecting only those attributes into the index.

Considerations for Storing Global Secondary Indexes

When an application writes an item to a table, DynamoDB automatically copies the correct subset of attributes to any global secondary indexes in which those attributes should appear. Your account is charged for storing the item in the base table and also for storing attributes in any global secondary indexes on that table.

The amount of space used by an index item is the sum of the following:

- Size in bytes of the base table primary key (partition key and sort key)

- Size in bytes of the index key attribute

- Size in bytes of the projected attributes (if any)

- 100 bytes of overhead per index item

To estimate the storage requirements for a global secondary index, estimate the average size of an item in the index and then multiply by the number of items in the base table that have the global secondary index key attributes.

If a table contains an item for which a particular attribute is not defined but that attribute is defined as an index partition key or sort key, DynamoDB does not write any data for that item to the index.

Managing Global Secondary Indexes

Global secondary indexes require you to create, describe, modify, delete, and detect index key violations.

Creating a Table with Global Secondary Indexes

To create a table with one or more global secondary indexes, use the CreateTable operation with the GlobalSecondaryIndexes parameter. For maximum query flexibility, create up to five global secondary indexes per table. Specify one attribute to act as the index partition key. You can specify another attribute for the index sort key. It is not necessary for either of these key attributes to be the same as a key attribute in the table.

Each index key attribute must be a scalar of type string, number, or binary, and cannot be a document or a set. You can project attributes of any data type into a global secondary index, including scalars, documents, and sets. You must also provide ProvisionedThroughput settings for the index, consisting of ReadCapacityUnits and WriteCapacityUnits. These provisioned throughput settings are separate from those of the table but behave in similar ways.

Viewing the Status of Global Secondary Indexes on a Table

To view the status of all the global secondary indexes on a table, use the DescribeTable operation. The GlobalSecondaryIndexes portion of the response shows all indexes on the table, along with the current status of each (IndexStatus).

The IndexStatus for a global secondary index is as follows:

Creating Index is currently being created, and it is not yet available for use.

Active Index is ready for use, and the application can perform Query operations on the index.

Updating Provisioned throughput settings of the index are being changed.

Deleting Index is currently being deleted, and it can no longer be used.

When DynamoDB has finished building a global secondary index, the index status changes from Creating to Active.

Adding a Global Secondary Index to an Existing Table

To add a global secondary index to an existing table, use the UpdateTable operation with the GlobalSecondaryIndexUpdates parameter, and provide the following information:

- An index name, which must be unique among all of the indexes on the table.

- The key schema of the index. Configure one attribute for the index partition key. You can configure another attribute for the index sort key. It is not necessary for either of these key attributes to be the same as a key attribute in the table. The data types for each schema attribute must be scalar: string, number, or binary.

- The attributes to project from the table into the index include the following:

KEYS_ONLY Each item in the index consists of only the table partition key and sort key values, plus the index key values.

INCLUDE In addition to the attributes described in KEYS_ONLY, the secondary index includes other non-key attributes that you configure.

ALL The index includes all attributes from the source table.

- The provisioned throughput settings for the index, consisting of ReadCapacityUnits and WriteCapacityUnits. These provisioned throughput settings are separate from those of the table.

![]() You can create only one global secondary index per UpdateTable operation, and you cannot cancel a global secondary index creation process.

You can create only one global secondary index per UpdateTable operation, and you cannot cancel a global secondary index creation process.

Resource Allocation

DynamoDB allocates the compute and storage resources to build the index. During the resource allocation phase, the IndexStatus attribute is CREATING and the Backfilling attribute is false. Use the DescribeTable operation to retrieve the status of a table and all of its secondary indexes.

While the index is in the resource allocation phase, you cannot delete its parent table, nor can you modify the provisioned throughput of the index or the table. You cannot add or delete other indexes on the table; however, you can modify the provisioned throughput of these other indexes.

Backfilling

For each item in the table, DynamoDB determines which set of attributes to write to the index based on its projection (KEYS_ONLY, INCLUDE, or ALL). It then writes these attributes to the index. During the backfill phase, DynamoDB tracks items that you add, delete, or update in the table and the attributes in the index.

During the backfilling phase, the IndexStatus attribute is CREATING and the Backfilling attribute is true. Use the DescribeTable operation to retrieve the status of a table and all of its secondary indexes.

While the index is backfilling, you cannot delete its parent table. However, you can still modify the provisioned throughput of the table and any of its global secondary indexes.

When the index build is complete, its status changes to Active. You are not able to query or scan the index until it is Active.

Restrictions and Limitations of Backfilling

During the backfilling phase, some writes of violating index items may succeed while others are rejected. This can occur if the data type of an attribute value does not match the data type of an index key schema data type or if the size of an attribute exceeds the maximum length for an index key attribute.

Index key violations do not interfere with global secondary index creation; however, when the index becomes Active, the violating keys will not be present in the index. After backfilling, all writes to items that violate the new index’s key schema will be rejected. To detect and resolve any key violations that may have occurred, run the Violation Detector tool after the backfill phase completes.

While the resource allocation and backfilling phases are in progress, the index is in the CREATING state. During this time, DynamoDB performs read operations on the table; you are not charged for this read activity.

You cannot cancel an in-flight global secondary index creation.

Detecting and Correcting Index Key Violations

Throughout the backfill phase of the global secondary index creation, DynamoDB examines each item in the table to determine whether it is eligible for inclusion in the index, because noneligible items cause index key violations. In these cases, the items remain in the table, but the index will not have a corresponding entry for that item.

An index key violation occurs if:

- There is a data type mismatch between an attribute value and the index key schema data type. For example, in Figure 14.1, if one of the items in the GameScores table had a TopScore value of type “string,” and you add a global secondary index with a number-type partition key of TopScore, the item from the table would violate the index key.

- An attribute value from the table exceeds the maximum length for an index key attribute. The maximum length of a partition key is 2,048 bytes, and the maximum length of a sort key is 1,024 bytes. If any of the corresponding attribute values in the table exceed these limits, the item from the table violates the index key.

If an index key violation occurs, the backfill phase continues without interruption; however, any violating items are not included in the index. After the backfill phase completes, all writes to items that violate the new index’s key schema will be rejected.

Deleting a Global Secondary Index from a Table

You use the UpdateTable operation to delete a global secondary index. While the global secondary index is being deleted, there is no effect on any read or write activity in the parent table, and you can still modify the provisioned throughput on other indexes. You can delete only one global secondary index per UpdateTable operation.

![]() When you delete a table (DeleteTable), all of the global secondary indexes on that table are deleted.

When you delete a table (DeleteTable), all of the global secondary indexes on that table are deleted.

Local Secondary Indexes

Some applications query data by using only the base table’s primary key; however, there may be situations where an alternate sort key would be helpful. To give your application a choice of sort keys, create one or more local secondary indexes on a table and issue Query or Scan requests against these indexes.

For example, Figure 14.3 is useful for an application such as discussion forums. The figure shows how the items in the table would be organized.

Figure 14.3 Forum thread table

DynamoDB stores all items with the same partition key value contiguously. In this example, given a particular ForumName, a Query operation could immediately locate the threads for that forum. Within a group of items with the same partition key value, the items are sorted by sort key value. If the sort key (Subject) is also provided in the Query operation, DynamoDB can narrow the results that are returned, such as returning the threads in the S3 forum that have a Subject beginning with the letter a.

Requests may require more complex data-access patterns, such as the following:

- Which forum threads receive the most views and replies?