Chapter 1

A Big Data Primer for Executives

James R. Kalyvas

1.1 What Is Big Data?

The phrase Big Data is commonplace in business discussions, yet it does not have a universally understood meaning. The main objective of this chapter is to provide a simple framework for understanding Big Data.

There have been many different definitions for Big Data proposed by technology experts and a wide range of organizations. For purposes of this book, we developed the following definition:

Big Data is a process to deliver decision-making insights. The process uses people and technology to quickly analyze large amounts of data of different types (traditional table structured data and unstructured data, such as pictures, video, email, transaction data, and social media interactions) from a variety of sources to produce a stream of actionable knowledge.

Because there is no commonly accepted definition of Big Data, we offer this definition because it is both descriptive and practical. Our definition emphasizes that the term Big Data really refers to a process that results in information that supports decision making, and the definition underscores that Big Data is not simply a shorthand reference to an amount or type of data. Our definition is derived from our research and elements of a number of existing definitions.

We include several frequently referenced definitions next for context and comparison. According to the McKinsey Global Institute:

“Big Data” refers to datasets whose size is beyond the ability of typical database software tools to capture, store, manage, and analyze. This definition is intentionally subjective and incorporates a moving definition of how big a dataset needs to be in order to be considered Big Data—i.e., we don’t define Big Data in terms of being larger than a certain number of terabytes (thousands of gigabytes). We assume that, as technology advances over time, the size of datasets that qualify as Big Data will also increase. Also note that the definition can vary by sector, depending on what kinds of software tools are commonly available and what sizes of datasets are common in a particular industry. With those caveats, Big Data in many sectors today will range from a few dozen terabytes to multiple petabytes (thousands of terabytes). (McKinsey Global Institute. Big Data: The Next Frontier for Innovation, Competition, and Productivity. McKinsey & Company, June 2011.)

Gartner indicates the following:

Big Data is high-volume, high-velocity and high-variety information assets that demand cost-effective, innovative forms of information processing for enhanced insight and decision making. (Gartner. IT Glossary. 2013. http://www.gartner.com/it-glossary/big-data/.)

The term Big Data is sometimes used in this book as part of a phrase, such as “Big Data analytics,” when a particular part of the process is being emphasized. In the rest of this chapter, we continue to build on the framework for understanding Big Data and describe at a very high level and in relatively nontechnical terms how it works.

1.1.1 Characteristics of Big Data

You will rarely see a discussion of Big Data that does not include a reference to the “3 Vs”1—volume, velocity, and variety—as distinguishing characteristics of Big Data. Simply put, it is the volume (amount of data), velocity (the speed of processing and the pace of change to data), and variety (sources of data and types of data)2 that most notably distinguish Big Data from the traditional approaches used to capture, store, manage, and analyze data.

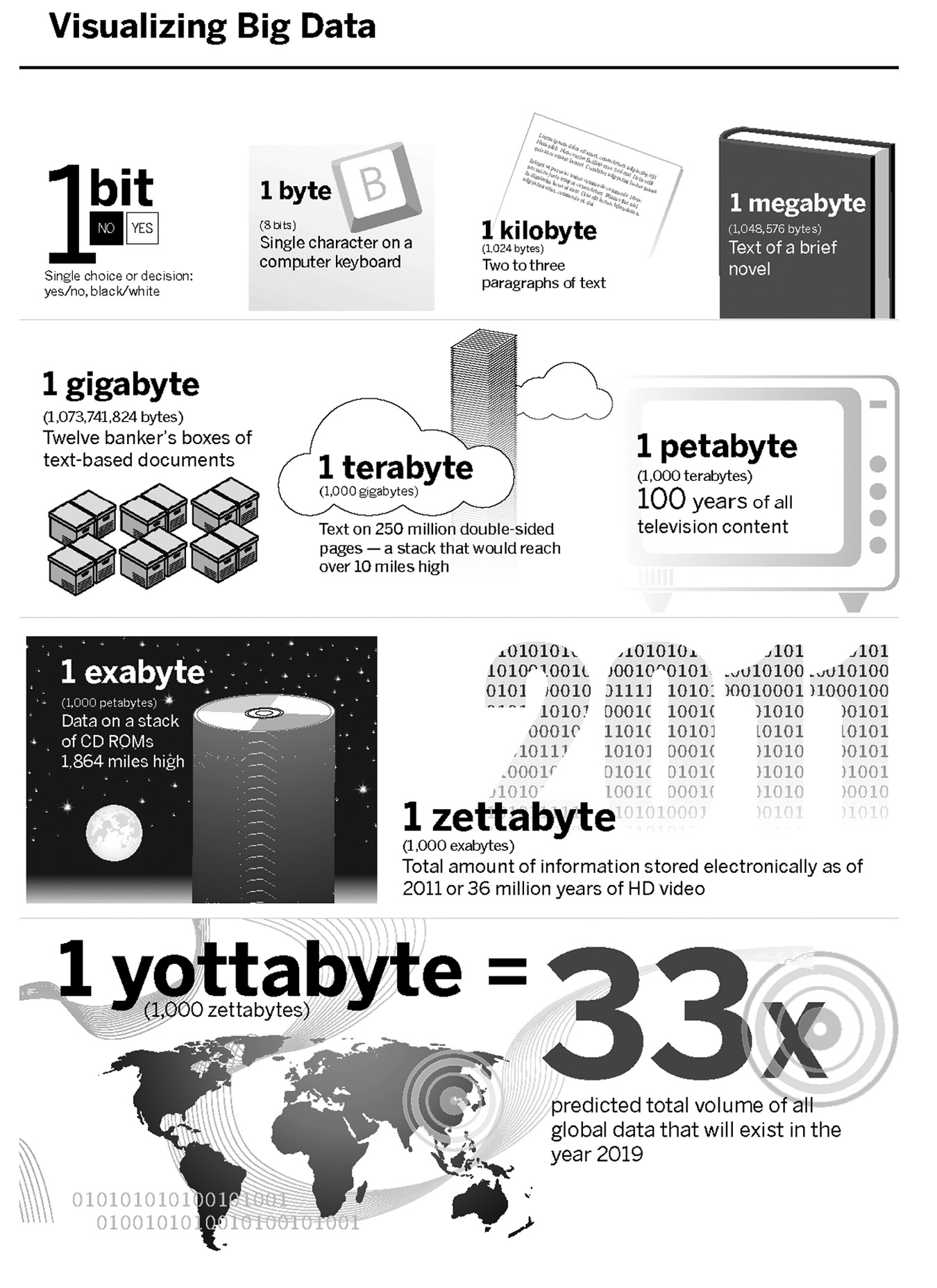

1.1.2 Volume

The volume of data available to enterprises has dramatically increased since 2004. In 2004, the total amount of data stored on the entire Internet was 1 petabyte (equivalent to 100 years of all television content). As can be seen in Figure 1.1, by 2011 the total worldwide amount of information stored electronically was 1 zettabyte (1 million petabytes or 36 million years of high-definition [HD] video). By 2015, that number is estimated to reach 7.9 zettabytes (or 7.9 million petabytes), and then by 2003 skyrocket to 35 zettabytes (or 35 million petabytes).3 The size of the datasets in use today, and continually and exponentially growing, has outpaced the capabilities of traditional data tools to capture, store, manage, and analyze the data.

1.1.3 The Internet of Things and Volume

The volume of data to be stored and analyzed will experience another dramatic upward arc as more and more objects are equipped with sensors that generate and relay data without the need for human interaction. Known as the Internet of Things (IoT), a concept hailing from the Massachusetts Institute of Technology (MIT) since 2000, it is the ability for machines and other objects, through sensors or other implanted devices, to communicate relevant data through the Internet directly to connected machines. The IoT is already in action regularly today (think exercise devices such as Fitbit® or FuelBand or connected appliances like the Nest thermostat or smoke detector), and we are still at the early stages of how ubiquitous it will become. For example, a basketball was recently produced with sensors that provide direct feedback to the user on the arc, spin, and speed of release of the player’s shots. While the player is receiving instant feedback and even “coaching” from the app on his or her iPhone, the app is also sending all of this data to the manufacturer as well as other important data relating to the frequency and duration of use, places the user frequents to play; by matching weather information, the manufacturer can even collect information on the impact of weather conditions on the performance characteristics of the ball. Regardless of how, or whether, the manufacturer uses these insights, it has unprecedented ability to interact with and obtain multiple types of feedback directly from the basketball, and all the player does is connect it and use it.

1.1.4 Variety

Big Data is also transforming data analytics by dramatically expanding the variety of useful data to analyze. Big Data combines the value of data stored in traditional structured4 databases with the value of the wealth of new data available from sources of unstructured data. Unstructured data includes the rapidly growing universe of data that is not structured. Common examples of unstructured data are user-generated content from social media (e.g., Facebook, Twitter, Instagram, and Tumblr), images, videos, surveillance data, sensor data, call center information, geolocation data, weather data, economic data, government data and reports, research, Internet search trends, and web log files. Today, more than 95% of all data that exists globally is estimated to be unstructured data. These data sources can provide extremely valuable business intelligence. Using Big Data analytics, organizations can now make correlations and uncover patterns in the data that could not have been identified through conventional methods.5 The correlations and patterns can provide a company with insight on external conditions that have a direct impact on an enterprise, such as market trends, consumer behaviors, and operational efficiencies, as well as identify interdependencies between the conditions.

1.1.5 Velocity

A rapidly ever-increasing amount of unstructured data from an exponentially growing number of sources streams continuously across the Internet. The speed with which this data must be stored and analyzed constitutes the velocity characteristic of Big Data.

1.1.6 Validation

If you are counting, you will note that “validation” is a fourth V. We have added this fourth V for your consideration because it captures one of the core teachings of this book: An organization’s Big Data strategy must include a validation step. This validation step should be used by the organization to insert appropriate pauses in their analytics efforts to assess how laws, regulations, or contractual obligations have an impact on the

- Architecture of Big Data systems

- Design of Big Data search algorithms

- Actions to be taken based on the derived insights

- Storage and distribution of the results and data

Each of the chapters addresses applicable legal considerations to illustrate the importance of validation and provides recommendations for effective validation steps.

1.2. Cross-Disciplinary Approach, New Skills, and Investment

Organizations that seek to leverage Big Data in their operations will also need to develop cross-disciplinary teams that wed deep knowledge of the business with technology. An essential component of these teams will be the data scientist. Whether the data scientist is an employee or a contractor, he or she is essential to extracting the promise of business insights Big Data holds for organizations (i.e., deriving order and knowledge from the chaos that can be Big Data). The data scientist is a multidimensional thinker who operates effectively in talking about business issues in business terms while also at the apex of technology and statistics education and experience. The role of the data scientist is captured well in the following excerpts from a job posting for the position from a leading consumer manufacturing company:6

Key Responsibilities:

- Analyze large datasets to develop custom models and algorithms to drive business solutions

- Build complex datasets from multiple data sources

- Build learning systems to analyze and filter continuous data flows and offline data analysis

- Develop custom data models to drive innovative business solutions

- Conduct advanced statistical analysis to determine trends and significant data relationships

- Research new techniques and best practices within the industry

Technology Skills:

- Having the ability to query databases and perform statistical analysis

- Being able to develop or program databases

- Being able to create examples, prototypes, demonstrations to help management better understand the work

- Having a good understanding of design and architecture principles

- Strong experience in data warehousing and reporting

- Experience with multiple RDBMS (Relational Database Management Systems) and physical database schema design

- Experience in relational and dimensional modeling

- Process and technology fluency with key analytic applications (for example, customer relationship management, supply chain management and financials)

- Familiar with development tools (e.g., MapReduce, Hadoop, Hive) and programming languages (e.g., C++, Java, Python, Perl)

- Very data driven and ability to slice and dice large volumes of data

The data scientist is not the only subject matter expert needed in designing a Big Data strategy but plays a critical role. The data scientist will work with business subject matter experts from your organization as well as the data architects and analysts, technology infrastructure team, management, and others to deliver Big Data insights. Whether your organization elects to build or buy Big Data capabilities, there is a strategic investment that must be made to acquire new analytical skill sets and develop cross-functional teams to execute on your Big Data objectives.

1.3 Acquiring Relevant Data

Organizations will need to gain access to data that will be relevant to the objectives they are trying to achieve with Big Data. This data can be available from any number of sources, including from existing databases throughout an organization or enterprise, from local or remote storage systems, directly from public sources on the Internet or from the government or trade associations, by license from a third party, or from third-party data brokers or providers that remotely aggregate and host valuable sources of data. Ultimately, organizations will need to ensure that they can legally obtain and maintain access to these data sources over time so that they will be able to continually reassess their results and make meaningful comparisons and not lose access to valuable business intelligence.

1.4 The Basics of How Big Data Technology Works

A growing number of proprietary and open-solution (i.e., publicly available without charge) Big Data analytic platforms are available to enterprises, as well as hosted solutions. For the sole purpose of simplicity in trying to describe how the technology behind Big Data works, we focus on Apache’s™ Hadoop® software in this discussion. Hadoop is an open-source application generally made available without license fees to the public.

Hadoop (reportedly named after the favorite stuffed animal of the child of one of its creators) is a popular open-source framework consisting of a number of software tools used to perform Big Data analytics. Hadoop takes the very large data distribution and analytic tasks inherent in Big Data and breaks them down into smaller and more manageable pieces. Hadoop accomplishes this by enabling an organization to connect many smaller and lower-price computers together to work in parallel as a single cost-effective computing cluster. Hadoop automatically distributes data across all of the computers on the cluster as the data is being loaded, so there is no need to first aggregate the data separately on a storage-area network (SAN) or otherwise (Figure 1.2). At the same time the data is being distributed, each block of data is replicated on several of the computers in the cluster. So, as Hadoop is breaking down the computing task into many pieces, it is also minimizing the chances that data will not be available when needed by making the data available on multiple computers. Each of these features offers efficiencies over traditional computer architectures.7 Of course, setting up this distributed computing structure with Hadoop, or similar tools, requires an initial investment that may not be warranted if your computer cluster is smaller. However, once the initial investment in a platform like Hadoop is made, it can be incrementally expanded to include more computers (scaled) at a low cost per increment.

Hadoop is a combination of advanced software and computer hardware, often referred to as a “platform,” that provides organizations with a means of executing a “client application.” These applications are the actual source of the code or scripts that are written to specifically describe the analytic functions (tasks) that Hadoop will be performing and the data on which those tasks will be performed.8 The analytic applications that use platforms like Hadoop to analyze Big Data are not typically focused on analysis that requires explicit direct relationships between already well-defined data structures, such as would be required by an accounting system, for example. Instead, by performing statistical analysis and modeling on the data, these applications are focused on uncovering patterns, unknown correlations, and other useful information in the data that may never have been identified using traditional relational data models.

When a computer on the cluster completes its assigned processing task, it returns its results and any related data back to the central computer and then requests another task. The individual results and data are reassembled by the central computer so that they can be returned to the client application or stored elsewhere on Hadoop’s file system or database.

1.5 Summary

To develop an explanation of Big Data suitable for its purpose in this book, we greatly simplified the discussion of how the complex technologies behind Big Data work. But, the purpose of this chapter was not to act as a blueprint for constructing a Big Data platform in your organization. Instead, we provided a basic and common understanding of what the phrase Big Data really means so that the frequent uses of the term throughout the remaining chapters can be read in that context.

Notes

1. Although ubiquitous now, the origin of the 3V’s is regularly attributed to Gartner Incorporated.

2. Douglas Laney. 3D Data Management: Controlling Data Volume, Velocity and Variety. Gartner, February 6, 2001. Blogs.gartner.com/doug-laney/files/2012/01/ad949-3D-Data-Management-Controlling-Data-Volume-Velocity-and-Variety.pdf

3. Eaton et al. Understanding Big Data: Analytics for Enterprise Class Hadoop and Streaming Data (IBM). New York: McGraw, 2012.

4. Michael Cooper and Peter Mell. Tackling Big Data. NIST Information Technology Laboratory Computer Security Division. http://csrc.nist.gov/groups/SMA/forum/documents/june2012presentations/fcsm_june2012_cooper_mell.pdf.

5. Michael Cooper and Peter Mell. Tackling Big Data. NIST Information Technology Laboratory Computer Security Division. http://csrc.nist.gov/groups/SMA/forum/documents/june2012presentations/fcsm_june2012_cooper_mell.pdf.

6. IT Data Scientist Job Description (The Clorox Company), http: //www.linkedin.com/jobs2/view/9495684

7. First, the cost of improving the density of processors and hard disks on a large enterprise server becomes disproportionately more expensive than building an equally capable cluster of smaller computers. Second, the rate at which modern hard drives can read and write data has not advanced as fast as has the storage capacity of hard disks or the speed of processors. Finally, in contrast to the distributed approach used in Big Data, enterprise relational database systems must first sequence and organize data before it can be loaded, and these systems are commonly subject to time-consuming processes like lengthy extract-transform-load (ETL) processes that could hinder system performance or delay data collection by hours or may even require importing old data with incremental batching and other manual processes.

8. Although the analogy of a search query is useful, a user of a search engine is actually receiving the final product of a complex Big Data analytic process by which the search engine scoured the Internet for data, indexed that data, and stored it for rapid retrieval. If you would like to learn more about the application of advanced analytics, we recommend reviewing Analytics at Work: Smarter Decisions, Better Results by Thomas H. Davenport and Jeanne G. Harris.