18

Threat Hunting

The release of Mandiant’s APT1 report gave information security professionals deep insight into one of the most experienced and prolific threat groups in operation. The insight into the Chinese PLA Unit 61398 also provided context around these sophisticated threat actors, and the term Advanced Persistent Threat (APT) became part of the information security lexicon. Information security professionals and incident responders now had insight into threats that conducted their activities undetected over a significant period.

With the threat that APTs pose, coupled with the average time even moderately sophisticated groups can spend in a target network, organizations have started to move from passive detection and response to a more active approach, to identify potential threats in the network. This practice, called threat hunting, is a proactive process, whereby digital forensics techniques are used to conduct analysis on systems and network components to identify and isolate threats that have previously gone undetected. As with incident response, threat hunting is a combination of processes, technology, and people that does not rely on preconfigured alerting or automated tools, but rather, incorporates various elements of incident response, threat intelligence, and digital forensics.

This chapter will provide an overview of the practice of threat hunting by examining several key elements:

- Threat hunting overview

- Crafting a hypothesis

- Planning a hunt

- Digital forensic techniques for threat hunting

- EDR for threat hunting

Threat hunting overview

Threat hunting is a developing discipline, driven in large part by the availability of threat intelligence along with tools, such as Endpoint Detection and Response (EDR) and SIEM platforms, that can be leveraged to hunt for threats at the scale of today’s modern enterprise architectures. What has developed out of this is specific working cycles and maturity models that can guide organizations through the process of starting and executing a threat hunting program.

Threat hunt cycle

Threat hunting, like incident response, is a process-driven exercise. No single process has been clearly defined and universally accepted, but threat hunts generally follow a sequence that can be used as one. The following diagram combines the various stages of a threat hunt into a process that guides threat hunters through the various activities to facilitate an accurate and complete hunt:

Figure 18.1 – Threat hunt cycle

Initiating event

The threat hunt begins with an initiating event. Organizations that incorporate threat hunting into their operations may have a process or policy that threat hunting be conducted at a specific cadence or time. For example, an organization may have a process where the security operations team conducts four or five threat hunts per month, starting on the Monday of every week. Each one of these separate hunts would be considered the initiating event.

A second type of initiating event is usually driven by some type of threat intelligence alert that comes from an internal or external source. For example, an organization may receive an alert such as the one shown in the following screenshot. This alert, from the United States Federal Bureau of Investigation, available at https://www.aha.org/system/files/media/file/2021/05/fbi-tlp-white-report-conti-ransomware-attacks-impact-healthcare-and-first-responder-networks-5-20-21.pdf, indicates that there are new Indicators of Compromise (IOCs) that are associated with the Conti variant of ransomware. An organization may decide to act on this intelligence and begin a hunt through the network for any indicators associated with the IOCs provided as part of the alert:

Figure 18.2 – FBI alert

After the initiating event is fully understood, the next phase is to start crafting what to look for during the threat hunt.

Creating a working hypothesis

Moving on from the initiating event, the threat hunting team then creates a working hypothesis. Threat hunting is a focused endeavor, meaning that hunt teams do not just start poking through event logs or memory images, looking for whatever they can find. A hypothesis such as An APT group has gained control of several systems on the network is too general and provides no specific threat hunting target. Threat hunters need to focus their attention on the key indicators, whether those indicators come from a persistent threat group or some existing threat intelligence.

A working hypothesis provides the focus. A better hypothesis would be: An APT-style adversary has taken control of the DMZ web servers and is using them as a C2 infrastructure. This provides a specific target to which the hunt team can then apply digital forensic techniques to determine whether this hypothesis is true.

Threat hunts that are initiated via alerts can often find key areas of focus that can be leveraged to craft a hypothesis. For example, the previous section contained an alert from the FBI. In addition to the IOCs associated with Conti, the following text was also in the alert:

The exact infection vector remains unknown, as Ryuk deletes all files related to the dropper used to deploy the malware. In some cases, Ryuk has been deployed secondary to TrickBot and/or Emotet banking Trojans, which use SMB protocols to propagate through the network and can be used to steal credentials.

From this data, the hunt team can craft a hypothesis that directly addresses these Tactics, Techniques, and Procedures (TTPs). The hypothesis may be: An adversary has infected several internal systems and is using the Microsoft SMB protocol to move laterally within the internal network, with the intent of infecting other systems. Again, this sample hypothesis is specific and provides the threat hunters with a concrete area of focus to either prove or disprove the hypothesis.
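To make the SMB hypothesis more concrete, the following is a minimal Python sketch of one way it could be tested against connection records. It assumes the records have already been reduced to simple `(src_ip, dst_ip, dst_port)` tuples, which is a hypothetical stand-in for NetFlow or firewall data. The function name `smb_fan_out` and the `min_targets` threshold are illustrative choices, not part of any specific tool.

```python
from collections import defaultdict
from ipaddress import ip_address

SMB_PORT = 445  # TCP port used by SMB over TCP


def smb_fan_out(flows, min_targets=3):
    """Return internal sources that reached many internal hosts over SMB.

    `flows` is an iterable of (src_ip, dst_ip, dst_port) tuples, a
    hypothetical, simplified stand-in for NetFlow or firewall records.
    """
    targets = defaultdict(set)
    for src, dst, port in flows:
        if port != SMB_PORT:
            continue
        # Lateral movement is internal-to-internal, so ignore anything else.
        if ip_address(src).is_private and ip_address(dst).is_private:
            targets[src].add(dst)
    # Keep only sources whose number of distinct SMB targets meets the threshold.
    return {
        src: sorted(dsts)
        for src, dsts in targets.items()
        if len(dsts) >= min_targets
    }
```

A workstation that suddenly opens SMB sessions to many peers is only a lead, not proof. File servers and management systems will legitimately show a high fan-out and would need to be excluded or baselined.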

Leveraging threat intelligence

In the previous chapter, there was an extensive overview of how cyber threat intelligence can be leveraged during an incident. As we are applying a variety of incident response and digital forensic techniques in threat hunting, cyber threat intelligence also plays an important role. Like the working hypothesis, threat intelligence allows the hunt team to further focus attention on specific indicators or TTPs that have been identified through a review of the pertinent threat intelligence available.

An example of this marriage between the hypothesis and threat intelligence can be provided by examining the relationship between the banking Trojan Emotet and the infrastructure that supports it.

First, the hypothesis that the hunt team has crafted is: Systems within the internal network have been in communication with the Emotet delivery or Command and Control infrastructure. With that hypothesis in mind, the hunt team can leverage OSINT or commercial feeds to augment their focus. For example, the following sites have been identified as delivering the Emotet binary:

- https://www.cityvisualization.com/wp-includes/88586

- https://87creationsmedia.com/wp-includes/zz90f27

- http://karencupp.com/vur1qw/s0li7q9

- http://www.magnumbd.com/wp-includes/w2vn93

- http://minmi96.xyz/wp-includes/l5vaemt6

From here, the hunt can focus on those systems that would have indications of traffic to those URLs.

Applying forensic techniques

The next stage in the threat hunt cycle is applying forensic techniques to test the hypothesis. The bulk of this book has been devoted to using forensic techniques to find indicators in a variety of locations. In threat hunting, the hunt team will apply those same techniques to various evidence sources to determine whether any indicators are present.

For example, in the previous section, five URLs were identified as indicators associated with the malware Emotet. Threat hunters could leverage several sources of evidence to determine whether those indicators were present. For example, an examination of proxy logs would reveal whether any internal systems connected to any of those URLs. DNS logs would also be useful, as they would indicate whether any system on the internal network attempted to resolve the hostnames in one or more of the URLs to establish connections. Finally, firewall logs may be useful in determining whether any connections were made to those URLs or associated IP addresses.
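As a rough illustration of the proxy and DNS log review described above, the sketch below reduces indicator URLs to hostnames and searches log lines for them. The log line layout and the function names `indicator_hosts` and `match_proxy_log` are assumptions made for the example. Real proxy or DNS logs would need a parser suited to their actual format.

```python
from urllib.parse import urlsplit


def indicator_hosts(urls):
    """Reduce full indicator URLs to lowercase hostnames.

    Hostnames are what DNS logs record, and they also appear in most
    proxy log lines, so they make a convenient common search key.
    """
    hosts = set()
    for url in urls:
        host = urlsplit(url).hostname  # already lowercased by urlsplit
        if host:
            hosts.add(host)
    return hosts


def match_proxy_log(lines, hosts):
    """Yield (line_number, line) for each log line naming an indicator host.

    This is a plain substring match, so it can over-match; treat hits as
    leads to verify rather than confirmed findings.
    """
    for number, line in enumerate(lines, start=1):
        lowered = line.lower()
        if any(host in lowered for host in hosts):
            yield number, line.rstrip("\n")
```

Matching on the hostname rather than the full URL is a deliberate trade-off: it catches requests to other paths on the same delivery site, at the cost of more results to review.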

Identifying new indicators

During the course of a threat hunt, new indicators may be discovered. For example, a search of a memory image for a specific family of malware may reveal a previously unknown and undetected IP address. These are the top 10 indicators that may be identified in a threat hunt:

- Unusual outbound network traffic

- Anomalies in privileged user accounts

- Geographical anomalies

- Excessive login failures

- Excessive database read volume

- HTML response sizes

- Excessive file requests

- Port-application mismatch

- Suspicious registry or system file changes

- DNS request anomalies
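Several of these indicators are simple counts over log data. As one hedged example, the sketch below flags accounts with excessive login failures. The event dictionaries, their `account` and `outcome` keys, and the default threshold of 10 are all hypothetical and would need to be mapped to whatever authentication logs are actually available.

```python
from collections import Counter


def excessive_login_failures(events, threshold=10):
    """Return {account: failure_count} for accounts at or above the threshold.

    `events` is an iterable of dicts with the hypothetical keys
    'account' and 'outcome' ('success' or 'failure').
    """
    failures = Counter(
        event["account"] for event in events if event["outcome"] == "failure"
    )
    return {
        account: count
        for account, count in failures.items()
        if count >= threshold
    }
```

A fixed threshold is the simplest possible approach. In practice, a hunt team may prefer to compare each account against its own historical baseline.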

Enriching the existing hypothesis

New indicators that are identified during the threat hunt may force the modification of the existing threat hunt hypothesis. For example, during a threat hunt for indicators of an Emotet infection, threat hunters uncover the use of the Windows Sysinternals tool PsExec to move laterally in the internal network. From here, the original hypothesis should be changed to reflect this new technique, and any indicators should be incorporated into the continued threat hunt.

Another option available to threat hunters regarding new indicators that are discovered is to begin a new threat hunt, utilizing the new indicators as the initiating event. This action is often leveraged when the indicator or TTP identified is well outside the original threat hunting hypothesis. This is also an option where there may be multiple teams that can be leveraged. Finally, indicators may also necessitate moving from a threat hunt to incident response. This is often a necessity in cases where data loss, credential compromise, or the infection of multiple systems has occurred. It is up to the hunt team to determine at which point the existing hypothesis is modified, a new hypothesis is created, or, in the worst-case scenario, an incident is declared.

Threat hunt reporting

Chapter 13 provided the details necessary for incident responders to properly report on their activities and findings. Reporting a threat hunt is just as critical, as it affords managers and policymakers insight into the tools, techniques, and processes utilized by the hunt team, as well as providing potential justification for additional tools or modifying the existing processes. The following are some of the key elements of a threat hunt report:

- Executive summary: This high-level overview of the actions taken, indicators discovered, and whether the hunt proved or disproved the hypothesis provides the decision-makers with a short narrative that can be acted upon.

- Threat hunt plan: The plan, including the threat hunt hypothesis, should be included as part of the threat hunt report. This provides the reader with the various details that the threat hunt team utilized during their work.

- Forensic report: As Chapter 13 explored, there is a great deal of data that is generated by forensic tools as well as by the incident responders themselves. This section of the threat hunt report is the lengthiest, as the detailed examination of each system or evidence source should be documented. Furthermore, there should be a comprehensive list of all evidence items that were examined as part of the hunt.

- Findings: This section will indicate whether the hunt team was able to either prove or disprove the hypothesis that had been set at the beginning of the hunt. In the event that the hypothesis was proved, there should be documentation as to what the follow-on actions were, such as a modification to the hypothesis, a new hypothesis, or whether the incident response capability was engaged. Finally, any IOCs, Indicators of Attacks (IOAs), or TTPs that were found as part of the threat hunt should also be documented.

Another key area of the Findings section should be an indication of how the existing process and technology were able to facilitate a detailed threat hunt. For example, if the threat hunt indicated that Windows event logs were insufficient in terms of time or quantity, this should be indicated in the report. This type of insight provides the ability to justify additional time and resources spent on creating an environment where sufficient network and system visibility is obtained to facilitate a detailed threat hunt.

One final section of the threat hunt report is a section devoted to non-security or incident-related findings. Threat hunts may often find vulnerable systems, existing configuration errors, or non-incident-related data points. These should be reported as part of the threat hunt so that they can be remediated.

- Recommendations: As there will often be findings, even on threat hunts that disprove the hypothesis and include no security findings, recommendations to improve future threat hunts, the security posture of the organization, or improvements to system configuration should be included. It would also be advisable to break these recommendations out into groups. For example, strategic recommendations may include long-term configuration or security posture improvements that may take an increased number of resources and time to implement. Tactical recommendations may include short-term or simple improvements to the threat hunt process or systems settings that would improve the fidelity of alerting. To further classify recommendations, there may be a criticality placed on the recommendations, with those recommendations needed to improve the security posture or to prevent a high-risk attack given higher priority than those recommendations that are simply focused on process improvement or configuration changes.

The threat hunt report contains a good deal of data that can be used to continually improve the overall threat hunting process. Another aspect to consider is which metrics can be reported to senior managers about threat hunts. Some key data points they may be interested in are the hours utilized, previously unknown indicators identified, infected systems identified, threats identified, and the number of systems contained. Having data that provides indicators of the threat hunt’s ability to identify previously unidentified threats will go a long way to ensuring that this is a continuing practice that becomes a part of the routine security operations of an organization.

Threat hunting maturity model

The cybersecurity expert David Bianco, the developer of the Pyramid of Pain covered in the previous chapter, developed the threat hunting maturity model while working for the cybersecurity company Sqrrl. It is important to understand this maturity model in relation to threat hunting, as it provides threat hunters and their organizations with a construct for determining the roadmap to maturing the threat hunting process. The maturity model is made up of five levels, starting with Hunt Maturity 0 (or HM0) and going up to HM4.

What follows is a review of the five levels of the model:

- HM0 – Initial: During the initial stage, organizations rely exclusively on automated tools such as network- or host-based intrusion prevention/detection systems, antivirus, or SIEM to provide alerts to the threat hunt team. These alerts are then manually investigated and remediated. Along with a heavy reliance on alerting, there is no use of threat intelligence indicators at this stage of maturity. Finally, this maturity level is characterized by a limited ability to collect telemetry from systems. Organizations at this stage are not able to threat hunt.

- HM1 – Minimal: At the minimal stage, organizations are collecting more data and, in fact, may have access to a good deal of system telemetry. In addition, these organizations manifest the intent to incorporate threat intelligence into their operations but are behind in terms of the latest data and intelligence on threat actors. Although this group will often still rely on automated alerting, the increased level of system telemetry affords this group the ability to extract threat intelligence indicators from reports and search available data for any matching indicators. This is the first level at which threat hunting can begin.

- HM2 – Procedural: At this stage, the organization is making use of threat hunting procedures that have been developed by other organizations, which are then applied to a specific use case. For example, an organization may find a presentation or use case write-up concerning lateral movement via a Windows system’s internal tools. From here, they would extract the pertinent features of this procedure and apply them to their own dataset. At this stage, the organization is not able to create its own process for threat hunting. The HM2 stage also represents the most common level of threat hunting maturity for organizations that have threat hunting programs.

- HM3 – Innovative: At this maturity level, the threat hunters are developing their own processes. There is also increased use of various methods outside manual processes, such as machine learning, statistical analysis, and link analysis. There is a great deal of data that is available at this level as well.

- HM4 – Leading: Representing the bleeding edge of threat hunting, the Leading maturity level incorporates a good deal of the features of HM3 with one significant difference, and that is the use of automation. Processes that have produced results in the past are automated, providing an opportunity for threat hunters to craft new threat hunting systems that are more adept at keeping pace with emerging threats.

The threat hunt maturity model is a useful construct for organizations to identify their current level of maturity, as well as planning for the inclusion of future technology and processes, to keep pace with the very fluid threat landscape.

Crafting a hypothesis

In a previous section, we explored the importance of crafting a specific and actionable threat hunting hypothesis. In addition, we looked at how threat intelligence can assist us with crafting a hypothesis. Another key dataset to leverage when crafting a hypothesis is the MITRE ATT&CK framework. In terms of crafting a specific hypothesis, this framework is excellent to drill down to a specific data point or points necessary to craft a solid hypothesis.

MITRE ATT&CK

In Chapter 17 there was an exploration of the MITRE ATT&CK framework, as it pertains to the incorporation of threat intelligence into incident response. The MITRE ATT&CK framework is also extremely useful in the initial planning and execution of a threat hunt. The MITRE ATT&CK framework is useful in a variety of areas in threat hunting, but for the purposes of this chapter, the focus will be on two specific use cases. First will be the use of the framework to craft a specific hypothesis. Second, the framework can be utilized to determine likely evidence sources that would produce the best indicators.

The first use case, crafting the hypothesis, can be achieved through an examination of the various tactics and techniques of the MITRE ATT&CK framework. Although descriptive, the tactics are not specific enough to be useful in threat hunt hypothesis creation. What threat hunters should be focusing attention on are the various techniques that make up a tactic. For example, the initial access tactic groups the various techniques that adversaries utilize to gain an initial foothold. The MITRE ATT&CK framework describes these techniques in detail.

Where the MITRE ATT&CK framework can be leveraged for a hypothesis is through the incorporation of one or more of these techniques across various tactics. For example, if a threat hunt team is concerned about C2 traffic, they can look under TA0011 in the MITRE ATT&CK enterprise tactics. There are 22 specific techniques that fall under that tactic. From here, the threat hunt team can select a technique, such as T1132—Data Encoding. They can then craft a hypothesis that states: An adversary has compromised a system on the internal network and is using encoding or compression to obfuscate C2 traffic.

In this instance, the MITRE ATT&CK framework provided a solid foundation for crafting a hypothesis. What the MITRE ATT&CK framework also provides is an insight into the various threat actor groups and tools that have been identified as using this type of technique. For example, examining the technique T1132—Data Encoding, located at https://attack.mitre.org/techniques/T1132/, reveals that threat actor groups such as APT19 and APT33 both use this technique to obfuscate their C2 traffic. In terms of tools, MITRE indicates that a variety of malware families, such as Linux Rabbit and njRAT, use obfuscation techniques, such as Base64 encoding or encoded URL parameters. This can further focus a threat hunt on specific threat groups or malware families if the hunt team wishes.
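To illustrate what hunting for encoded URL parameters might look like, here is a minimal Python sketch that flags query-string values that decode cleanly as Base64. The function names and the `min_length` cutoff are assumptions made for this example. The check is a coarse heuristic: ordinary alphanumeric tokens of the right length will also pass, so results are leads for review rather than detections.

```python
import base64
import binascii
import re
from urllib.parse import parse_qsl, urlsplit

# Standard Base64 alphabet with optional trailing padding.
_B64_RE = re.compile(r"^[A-Za-z0-9+/]+={0,2}$")


def looks_like_base64(value, min_length=16):
    """Heuristically decide whether a string is a Base64-encoded blob."""
    if len(value) < min_length or len(value) % 4 != 0:
        return False
    if not _B64_RE.match(value):
        return False
    try:
        base64.b64decode(value, validate=True)
    except (binascii.Error, ValueError):
        return False
    return True


def encoded_parameters(url, min_length=16):
    """Return (name, value) pairs from the query string that look encoded."""
    query = urlsplit(url).query
    return [
        (name, value)
        for name, value in parse_qsl(query, keep_blank_values=True)
        if looks_like_base64(value, min_length)
    ]
```

Note that `parse_qsl` turns an unescaped `+` into a space, so values containing `+` are only recognized when the client percent-encoded them. Run against proxy logs, a function like this would typically be combined with an allowlist of known applications that legitimately pass encoded tokens.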

The second way the MITRE ATT&CK framework can be leveraged for threat hunting is by providing guidance on evidence sources. Going back to the T1132—Data Encoding technique, MITRE indicates that the best data sources for indicators associated with this technique are packet captures, network protocol analysis, process monitoring, and identifying processes that are using network connections. From here, the threat hunter could leverage packet capture analysis with Moloch (since renamed Arkime) or Wireshark to identify any malicious indicators. These can be further augmented with an examination of key systems’ memory for network connections and their associated processes.

MITRE will often break down additional details that will assist threat hunt teams in their search for indicators. Technique T1132 contains additional details concerning this specific technique, as shown here:

Analyze network data for uncommon data flows (for example, a client sending significantly more data than it receives from a server). Processes utilizing the network that do not normally have network communication or have never been seen before are suspicious. Analyze packet contents to detect communications that do not follow the expected protocol behavior for the port that is being used.
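The first piece of that guidance, a client sending significantly more data than it receives, can be sketched in a few lines of Python. The flow dictionaries and their keys (`client`, `server`, `bytes_out`, `bytes_in`), along with the `ratio` and `min_bytes_out` defaults, are hypothetical values chosen for illustration. They are not drawn from MITRE or from any particular flow collector.

```python
def upload_heavy_flows(flows, ratio=5.0, min_bytes_out=1_000_000):
    """Return flows where the client sent far more data than it received.

    `flows` is an iterable of dicts with the hypothetical keys
    'client', 'server', 'bytes_out', and 'bytes_in'.
    """
    flagged = []
    for flow in flows:
        sent, received = flow["bytes_out"], flow["bytes_in"]
        # Ignore small transfers so routine chatter does not drown the results.
        if sent < min_bytes_out:
            continue
        # max(received, 1) avoids division by zero on one-way flows.
        if sent / max(received, 1) >= ratio:
            flagged.append(flow)
    return flagged
```

Backup jobs and cloud synchronization clients will also look upload-heavy, so the output is best read alongside a list of expected destinations.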

The details regarding the technique, the data sources, and the potential course of action are a great aid to threat hunters, as they allow them to put a laser focus on the threat hunt, the hypothesis, and, finally, a course of action. These elements go a long way in crafting a plan for the threat hunt.

Planning a hunt

Beginning a threat hunt does not require a good deal of planning, but there should be some structure as to how the threat hunt will be conducted, the sources of data, and the period on which the threat hunt will focus. A brief written plan will address all of the key points necessary and place all of the hunt team on the same focus area so that extraneous data that does not pertain to the threat hunt is minimized. The following are seven key elements that should be addressed in any plan:

- Hypothesis: A one- or two-sentence hypothesis, which was discussed earlier. This hypothesis should be clearly understood by all the hunt team members.

- MITRE ATT&CK tactic(s): In the previous chapter, there was a discussion of the MITRE ATT&CK framework and its application to threat intelligence and incident response. In this case, the threat hunt should include specific tactics that have been in use by threat actors. Select the tactics that are most applicable to the hypothesis.

- Threat intelligence: The hunt team should leverage as much internally developed and externally sourced threat intelligence as possible. External sources can either be commercial providers or OSINT. The threat intelligence should be IOCs, IOAs, and TTPs that are directly related to the hypothesis and the MITRE ATT&CK tactics that were previously identified. These are the data points that the hunt team will leverage during the hunt.

- Evidence sources: This should be a list of the various evidence sources that should be leveraged during the threat hunt. For example, if the hunt team is looking for indicators of lateral movement via SMB, they may want to leverage NetFlow or selected packet captures. Other indicators of lateral movement using Remote Desktop can be found within the Windows event logs.

- Tools: This section of the plan outlines the specific tools that are necessary to review evidence. For example, Chapter 12 addressed log file analysis with the open source tool Skadi. If the threat hunt intends to use this tool, it should be included in the plan.

- Scope: This refers to the systems that will be included in the threat hunt. The plan should indicate either a single system or systems, a subnet, or a network segment on which to focus. In the beginning, threat hunters should focus on a limited number of systems and add more as they become more familiar with the toolset and how much evidence can be examined in the time given.

- Timeframe: As threat hunting often involves a retrospective examination of evidence, it is necessary to set a timeframe upon which the threat hunt team should focus. For example, if an originating event is relatively new (say, 48 hours), the timeframe indicated in the plan may be limited to the past 72 hours, to address any previously undetected adversarial action. Other timeframes may widen the threat hunt to 14—or even 30—days, based on the hypothesis and threat intelligence available.

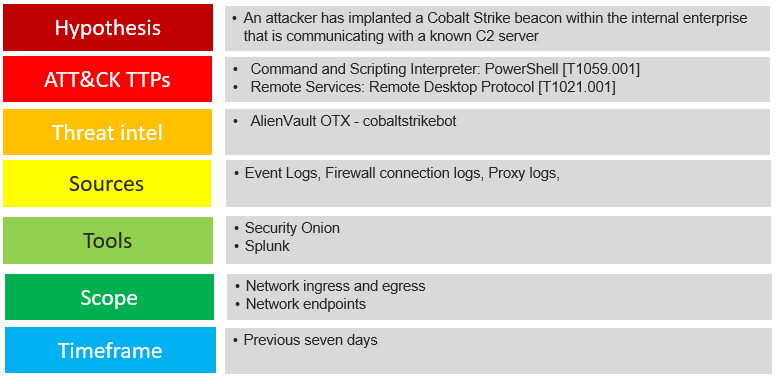

Here is an example threat hunt plan that incorporates these elements into an easy-to-view framework for a threat hunt identifying the presence of Cobalt Strike:

Figure 18.3 – Threat hunt plan

This plan outlines a threat hunt for the presence of Cobalt Strike. The hypothesis and MITRE ATT&CK techniques are clearly defined and set the scope of the overall effort. From a threat intelligence perspective, the user cobaltstrikebot is an excellent source of up-to-date IOCs. Evidence sources and the tool necessary are clearly defined along with the specific scope of telemetry that can be leveraged. Finally, the analysts have set a 7-day timeframe for this threat hunt. Due to the prevalence and associated risk of tools such as Cobalt Strike, this type of threat hunt may be conducted weekly, which is reflected in the timeframe.
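For teams that prefer to keep hunt plans in a machine-readable form, the seven plan elements map naturally onto a small data structure. The following is a minimal sketch in Python. The class name `HuntPlan`, its field names, and the helper methods are assumptions made for this example. They are not taken from the plan shown in the figure or from any tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Tuple


@dataclass
class HuntPlan:
    """The seven plan elements described in this section."""

    hypothesis: str
    attack_tactics: List[str]
    threat_intelligence: List[str]
    evidence_sources: List[str]
    tools: List[str]
    scope: List[str]
    timeframe_days: int

    def window(self, now: datetime) -> Tuple[datetime, datetime]:
        """Return the (start, end) of the look-back period ending at `now`."""
        return now - timedelta(days=self.timeframe_days), now

    def in_window(self, timestamp: datetime, now: datetime) -> bool:
        """Check whether an event timestamp falls inside the hunt timeframe."""
        start, end = self.window(now)
        return start <= timestamp <= end
```

A plan with `timeframe_days=7` would then let analysts discard any evidence older than a week before analysis begins, which keeps the hunt within the scope that was agreed on.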

Now that we have a plan, let’s briefly look at how forensic techniques fit into threat hunting and how we can hunt at scale.

Digital forensic techniques for threat hunting

We have dedicated several chapters to digital forensic techniques for both network and endpoint systems. The challenge with applying these techniques to hunting is that some do not fit with the overall approach to threat hunting. For example, a hunt organized around identifying malicious script execution cannot rely on individual systems chosen at random; rather, it requires the ability to examine all systems across the entire network for matching behaviors.

Here are some points to consider when examining how to integrate digital forensic techniques into a threat hunting program:

- Identify your data sources: If you cannot leverage firewall connection logs for more than a 24-hour period, it may be difficult to go back far enough to conduct a proper threat hunt of suspicious network connections. Instead of throwing your hands up, put together some threat research to identify other behaviors that align with your tool selection. If you can pull in PowerShell logs for the past 10 days, you have a much better chance of detecting post-exploitation frameworks by searching the Windows event logs than by searching firewall logs.

- Focus on scale: Many of the forensic techniques that we have examined in the previous chapters are executed after some other piece of data indicates that evidence may be contained in a specific system. Look at the execution of ProcDump, for example. An alert or other indication from a SIEM will usually point to a specific system to investigate. What is needed is the ability to scan for this behavior across the network. This requires the use of tools such as an IDS/IPS, a SIEM, or, as we will discuss next, an EDR tool that allows for that scale.

- Just start hunting: As we saw with the maturity model, you are not going to be at the bleeding edge of threat hunting right out of the gate. Rather, it is better to start small. For example, identify the top five threats that actors are leveraging from threat intelligence sources. Then, focus your evidence collection and analysis. As you go through this process, you will identify additional data sources and evidence collection tools or techniques that you can later incorporate into the process.

- Only one of three outcomes: A final consideration is that a hunt has one of three outcomes. First, you have proven your hypothesis correct and must now pivot into incident response. Second, you have not proved your hypothesis but have validated that your tools and techniques were sufficient to come to an accurate conclusion. Third, you were not able to prove or disprove your hypothesis due to a lack of tools, immature techniques, or a combination of both. Whatever the outcome, it feeds the continuous improvement of your hunt program.

Next, we will briefly look at how EDR platforms help with providing digital forensics at scale.

EDR for threat hunting

EDR tools are a group of tools that greatly aid in threat hunting. These tools build on the existing methodology of antivirus platforms. Many of these platforms can also search across the enterprise for specific IOCs and other data points, allowing threat hunt teams to search an extensive number of systems for any matching IOCs. These tools should be leveraged extensively during a threat hunt.

This type of functionality may be out of the budget of some organizations. In that case, we can use the previously discussed tool Velociraptor for threat hunting as well. In this case, let’s look at a threat hunt where the hypothesis is that a previously unidentified threat actor is using RDP to connect to internal systems as their initial foothold:

- To start, log into Velociraptor and click on the target symbol in the far-left column. This will open up the threat hunting page.

Figure 18.4 – Configuring a threat hunt

Figure 18.5 – Threat hunt description

- Enter a description and then click Select Artifacts. Either scroll or use a keyword search to find the Remote Desktop artifacts listed as Windows.EventLogs.RDPAuth.

Figure 18.6 – Setting artifacts to collect

- Leave the remaining parameters as default and click Launch.

- Select the hunt in the middle pane and then click the Play sign in the upper-left corner.

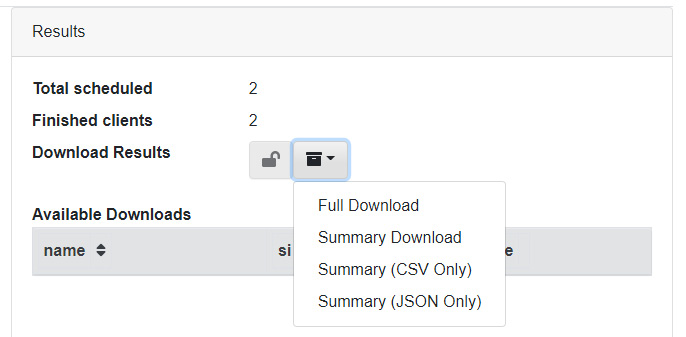

- Download the results.

Figure 18.7 – Download results

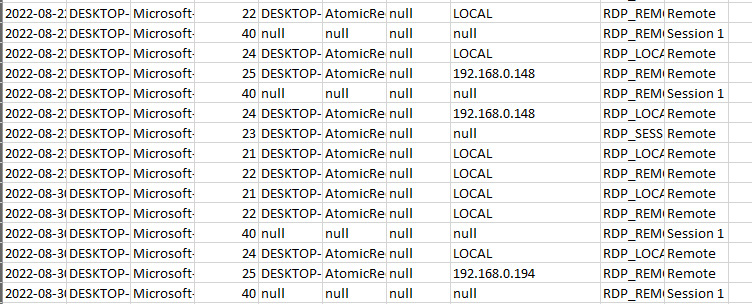

In this case, we will run through a summary of the results, which is in a CSV document. We can see in the document the actual RDP connections with associated IP addresses.

Figure 18.8 – Threat hunt results

From here, analysts can parse the data and determine what connections require further escalation and investigation.
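As one hedged example of that parsing step, the sketch below reads the exported CSV and keeps only RDP logons whose source address is publicly routable, since the hypothesis concerns RDP as an initial foothold. The column name `SourceIP` is an assumption about the export and should be checked against the actual header row of the downloaded file. The function name `external_rdp_logons` is likewise illustrative.

```python
import csv
import io
from ipaddress import ip_address


def external_rdp_logons(csv_text, ip_column="SourceIP"):
    """Return CSV rows whose source address is a public (global) IP.

    `ip_column` is an assumed column name; adjust it to match the
    header of the exported hunt results.
    """
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        raw = (row.get(ip_column) or "").strip()
        try:
            address = ip_address(raw)
        except ValueError:
            continue  # skip blank or non-IP values such as "-" or "LOCAL"
        # is_global excludes private, loopback, and other reserved ranges.
        if address.is_global:
            rows.append(row)
    return rows
```

Connections from internal addresses are not automatically benign, so a second pass over the private-address rows, for example looking for unusual workstation-to-workstation RDP, would be a reasonable follow-up.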

EDR tools allow analysts to conduct threat hunting at scale for even the largest networks. This ability is crucial when hunting threats that can impact a wide variety of systems across the network. Even without an EDR, tools such as Velociraptor can provide similar functionality that allows analysts to quickly get to the data they need to conduct a proper threat hunt.

Summary

Eric O’Neill, former FBI intelligence professional and cybersecurity expert, has said: When you don’t hunt the threat, the threat hunts you. This is exactly the sentiment behind threat hunting. As was explored, the average time from compromise to detection is plenty for adversaries to do significant damage. Organizations can shorten that window by understanding their level of maturity in terms of proactive threat hunting, applying the threat hunt cycle, planning adequately, and, finally, recording the findings. Taking a proactive stance may reduce the time an adversary has to cause damage and help the organization keep ahead of the constantly shifting threat landscape.

Questions

Answer the following questions to test your knowledge of this chapter:

- At what level of the threat hunting maturity model would technologies such as machine learning be found?

  - HM0

  - HM1

  - HM2

  - HM3

- Which of the following is a top 10 IOC?

  - IP address

  - Malware signature

  - Excessive file requests

  - URL

- A threat hunt-initiating event can be a threat intelligence report.

  - True

  - False

- A working hypothesis is a generalized statement regarding the intent of the threat hunt.

  - True

  - False

Further reading

Refer to the following for more details about the topics covered in this chapter:

- Your Practical Guide to Threat Hunting: https://www.threathunting.net/files/hunt-evil-practical-guide-threat-hunting.pdf

- Threat hunting with Velociraptor: https://docs.velociraptor.app/presentations/2022_sans_summit/