by Benjamin Lipchak

WHAT YOU'LL LEARN IN THIS CHAPTER:

How to alter colors

How to post-process images

How to light an object per-fragment

How to perform procedural texture mapping

As you may recall from Chapter 19, “Programmable Pipeline: This Isn't Your Father's OpenGL,” fragment shaders replace the texturing, color sum, and fog stages of the fixed functionality pipeline. This is the section of the pipeline where the party is happening. Instead of marching along like a mindless herd of cattle, applying each enabled texture based on its pre-ordained texture coordinate, your fragments are free to choose their own adventure. Mix and match textures and texture coordinates. Or calculate your own texture coordinates. Or don't do any texturing, and just compute your own colors. It's all good.

In their natural habitat, vertex shaders and fragment shaders are most often mated for life. Fragment shaders are the dominant partner, directly producing the eye candy you see displayed on the screen, and thus they receive the most attention. However, vertex shaders play an important supporting role. In the name of performance, as much of the grunt work as possible is pushed into vertex shaders because they tend to be executed much less frequently (except for the smallest of triangles). The results are then placed into interpolants for use as input by the fragment shader. The vertex shader is a selfless producer; the fragment shader a greedy consumer.

In this chapter, we continue the learning by example we began in the preceding chapter. We present many fragment shaders, both as further exposure to the low-level and high-level shading languages, and as a launch pad for your own future dabbling. Because you rarely see fragment shaders alone, once you get the hang of fragment shaders in isolation, we will move on to discuss several examples of vertex shaders and fragment shaders working together in peaceful harmony.

We almost have to contrive some examples illustrating where fragment shaders are used without vertex shader assistance. But we can easily separate them where we simply want to alter the existing color. For these examples, we use fixed functionality lighting to provide a starting color. Then we go to town on it.

One thing you might want to do in your own work is simulate black-and-white film. Given the incoming red, green, and blue color channel intensities, we would like to calculate a single grayscale intensity to output to all three channels. The eye is not equally sensitive to red, green, and blue, so we give each channel a different weight in the final intensity.

Listings 23.1 and 23.2 show the GLSL and ARB_fragment_program versions of the shader. Though you won't be able to distinguish between several of the shader results due to the black-and-white limitations of the figures, Figure 23.1 is provided as a reference for some of the other shader results that are distinguishable.

Listing 23.1. Grayscale Conversion High-Level Fragment Shader

// grayscale.fs
//
// convert RGB to grayscale

void main(void)
{
    // Convert to grayscale
    float gray = dot(gl_Color.rgb, vec3(0.3, 0.59, 0.11));

    // replicate grayscale to RGB components
    gl_FragColor = vec4(gray, gray, gray, 1.0);
}

Listing 23.2. Grayscale Conversion Low-Level Fragment Shader

!!ARBfp1.0
# grayscale.fp
#
# convert RGB to grayscale

ATTRIB iPrC = fragment.color.primary;     # input primary color
OUTPUT oPrC = result.color;               # output color

DP3 oPrC.rgb, iPrC, {0.3, 0.59, 0.11};    # R,G,B each contribute differently
MOV oPrC.a, 1.0;                          # init alpha to 1

END

The key to all these fragment shaders is that what you write to the color output (gl_FragColor or result.color) is what is passed along down the rest of the OpenGL pipeline, eventually to the framebuffer. The primary color inputs are gl_Color and fragment.color.primary, respectively.

Try playing with the contributions of each color channel. Notice how they add up to 1. You can simulate overexposure by making them add up to more than 1, while less than 1 will simulate underexposure.
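A quick way to see what the weights do is to run the same dot product on the CPU. The C sketch below is not part of the sample application; `to_gray` and its `exposure` factor are hypothetical names, and scaling all three weights by `exposure` is one simple way to make them sum to more or less than 1. The clamp mimics a fixed-point framebuffer, which cannot store values above 1.

```c
#include <assert.h>

typedef struct { float r, g, b; } rgb;

/* CPU-side sketch of Listings 23.1 and 23.2: 0.3 + 0.59 + 0.11 == 1.0.
   Hypothetical 'exposure' scales every weight, so the weights sum to
   'exposure' (> 1 overexposes, < 1 underexposes). */
static float to_gray(rgb c, float exposure)
{
    assert(exposure >= 0.0f);
    float gray = exposure * (0.3f * c.r + 0.59f * c.g + 0.11f * c.b);
    /* A fixed-point framebuffer clamps to [0,1]; mimic that here. */
    if (gray > 1.0f)
        gray = 1.0f;
    return gray;
}
```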

In this next example, we recolorize the grayscale picture with a sepia tone. This tone gives the picture the tint of an old Western photograph. To do this, we first convert to grayscale as before. Then we multiply the gray value by a color vector, which accentuates some color channels and reduces others. Listings 23.3 and 23.4 illustrate this sepia-tone conversion.

Listing 23.3. Sepia-Tone Conversion High-Level Fragment Shader

// sepia.fs
//
// convert RGB to sepia tone

void main(void)
{
    // Convert RGB to grayscale
    float gray = dot(gl_Color.rgb, vec3(0.3, 0.59, 0.11));

    // convert grayscale to sepia
    gl_FragColor = vec4(gray * vec3(1.2, 1.0, 0.8), 1.0);
}

Listing 23.4. Sepia-Tone Conversion Low-Level Fragment Shader

!!ARBfp1.0
# sepia.fp
#
# convert RGB to sepia tone

ATTRIB iPrC = fragment.color.primary;     # input primary color
OUTPUT oPrC = result.color;               # output color
TEMP gray;

DP3 gray, iPrC, {0.3, 0.59, 0.11};        # convert to grayscale
MUL oPrC.rgb, gray, {1.2, 1.0, 0.8};      # convert to sepia
MOV oPrC.a, 1.0;                          # init alpha to 1

END

You can choose to colorize with any tint you like. Go ahead and play with the tint factors. Here, we've hard-coded one for sepia. If you're truly ambitious, you could substitute external application-defined constants (uniforms in GLSL, program parameters in ARB_fragment_program) to make the tint color user-selectable so you don't have to write a different shader for every tint color.
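Here is a hedged C sketch of the user-selectable tint: the tint arrives as an argument instead of a literal, which is the role a GLSL uniform or an ARB_fragment_program program parameter would play in the shader. `tint_gray` and `SEPIA` are illustrative names; only the factors {1.2, 1.0, 0.8} come from Listings 23.3 and 23.4.

```c
#include <assert.h>

typedef struct { float r, g, b; } rgb;

/* Convert to grayscale, then multiply by an application-chosen tint.
   In a shader, 'tint' would be a uniform vec3 (GLSL) or a
   program.local parameter (ARB_fragment_program). Results above 1
   are clamped later by a fixed-point framebuffer. */
static rgb tint_gray(rgb c, rgb tint)
{
    float gray = 0.3f * c.r + 0.59f * c.g + 0.11f * c.b;
    rgb out = { gray * tint.r, gray * tint.g, gray * tint.b };
    return out;
}

/* The hard-coded sepia tint from Listing 23.3. */
static const rgb SEPIA = { 1.2f, 1.0f, 0.8f };
```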

For this next example, we're going for the film negative effect. These shaders are almost too simple to mention. All you have to do is take whatever color you were otherwise going to draw and subtract that color from 1. Black becomes white, and white becomes black. Red becomes cyan. Purple becomes chartreuse. You get the picture.

Figure 23.2 illustrates the color inversion performed in Listings 23.5 and 23.6. Use your imagination or consult the sample code for the grayscale inversion, which is just as straightforward.

Listing 23.5. Color Inversion High-Level Fragment Shader

// colorinvert.fs
//
// invert like a color negative

void main(void)
{
    // invert color components
    gl_FragColor.rgb = 1.0 - gl_Color.rgb;
    gl_FragColor.a = 1.0;
}
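The inversion is simple enough to check by hand. This C sketch mirrors Listing 23.5 and adds one plausible grayscale inversion (convert, then invert). Because the book's grayscale-inversion shader is not reproduced here, `invert_gray` is an assumption about how it works, not a copy of it.

```c
#include <assert.h>

typedef struct { float r, g, b, a; } rgba;

/* Mirrors Listing 23.5: invert RGB, force alpha to 1. */
static rgba invert_color(rgba c)
{
    rgba out = { 1.0f - c.r, 1.0f - c.g, 1.0f - c.b, 1.0f };
    return out;
}

/* Assumed grayscale variant: convert to gray first, then invert. */
static rgba invert_gray(rgba c)
{
    float gray = 0.3f * c.r + 0.59f * c.g + 0.11f * c.b;
    rgba out = { 1.0f - gray, 1.0f - gray, 1.0f - gray, 1.0f };
    return out;
}
```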

Now, we attempt our first texture lookup. In this sample shader, we simulate a heat signature effect like the one in the movie Predator. Heat is represented by a color spectrum ranging from black to blue to green to yellow to red.

We again use the grayscale conversion, this time as our scalar heat value. We use this value as a texture coordinate to index into a 1D texture populated with the color gradients from black to red. Figure 23.3 shows the results of the heat signature shaders in Listings 23.7 and 23.8.

Listing 23.7. Heat Signature High-Level Fragment Shader

// heatsig.fs
//
// map grayscale to heat signature

uniform sampler1D sampler0;

void main(void)
{
    // Convert to grayscale
    float gray = dot(gl_Color.rgb, vec3(0.3, 0.59, 0.11));

    // lookup heatsig value
    gl_FragColor = texture1D(sampler0, gray);
}

Figure 23.3. This fragment shader simulates a heat signature by looking up a color from a 1D texture.

Listing 23.8. Heat Signature Low-Level Fragment Shader

!!ARBfp1.0
# heatsig.fp
#
# map grayscale to heat signature

ATTRIB iPrC = fragment.color.primary;     # input primary color
OUTPUT oPrC = result.color;               # output color
TEMP gray;

DP3 gray, iPrC, {0.3, 0.59, 0.11};        # R,G,B -> gray
TEX oPrC, gray, texture[0], 1D;           # lookup heatsig value

END

In the low-level shader, notice how we reference the texture unit from which we want to look up as texture[0]. The texture unit is effectively hard-coded into the shader. This is in contrast to the GLSL shader, which uses a special sampler uniform that can be set within the application.

Fixed functionality texture mapping was very strict, requiring all texture lookups to use an interpolated per-vertex texture coordinate. One of the powerful new capabilities made possible by fragment shaders is that you can calculate your own texture coordinates per-fragment. You can even use the result of one texture lookup as the coordinate for another lookup. All these cases are considered dependent texture lookups. They're named that because the lookups are dependent on other preceding operations in the fragment shader.

You may not have noticed, but we just performed a dependent texture lookup in the heat signature shader. First, we had to compute our texture coordinate by doing the grayscale conversion. Then we used that value as a texture coordinate to perform a dependent texture lookup into the 1D heat signature texture.

The dependency chain can continue: You could, for example, take the color from the heat texture and use that as a texture coordinate to perform a lookup from a cube map texture, perhaps to gamma-correct your color. Beware, however, that some OpenGL implementations have a hardware limit as to the length of dependency chains, so keep this point in mind if you want to avoid falling into a non–hardware-accelerated driver path!

Instead of performing fog blending per-vertex, or calculating the fog factor per-vertex and using fixed functionality fog blending, we compute the fog factor and perform the blend ourselves within the fragment shader in the following example. This example emulates GL_EXP2 fog mode except that it will be more accurate than most fixed functionality implementations, which apply the exponentiation per-vertex instead of per-fragment. This is most noticeable on low-tessellation geometry that extends from the foreground to the background, such as the floor upon which all the objects in the scene rest. Compare the results of this shader with the fog shaders in the preceding chapter, and you can readily see the difference.

Figure 23.4 illustrates the output of the fog shader in Listings 23.9 and 23.10.

Listing 23.9. Per-Fragment Fog High-Level Fragment Shader

// fog.fs
//
// per-pixel fog

uniform float density;

void main(void)
{
    const vec4 fogColor = vec4(0.5, 0.8, 0.5, 1.0);

    // calculate 2nd order exponential fog factor
    // based on fragment's Z distance
    const float e = 2.71828;
    float fogFactor = (density * gl_FragCoord.z);
    fogFactor *= fogFactor;
    fogFactor = clamp(pow(e, -fogFactor), 0.0, 1.0);

    // Blend fog color with incoming color
    gl_FragColor = mix(fogColor, gl_Color, fogFactor);
}

Listing 23.10. Per-Fragment Fog Low-Level Fragment Shader

!!ARBfp1.0
# fog.fp
#
# per-pixel fog

ATTRIB iPrC = fragment.color.primary;     # input primary color
ATTRIB iFrP = fragment.position;          # input fragment position
OUTPUT oPrC = result.color;               # output color
PARAM density = program.local[0];         # fog density
PARAM fogColor = {0.5, 0.8, 0.5, 1.0};    # fog color
PARAM e = {2.71828, 0, 0, 0};
TEMP fogFactor;

# fogFactor = clamp(e^(-(d*Zw)^2))
MUL fogFactor.x, iFrP.z, density.x;
MUL fogFactor.x, fogFactor.x, fogFactor.x;
POW fogFactor.x, e.x, -fogFactor.x;
MAX fogFactor.x, fogFactor.x, 0.0;        # clamp to [0,1]
MIN fogFactor.x, fogFactor.x, 1.0;
LRP oPrC, fogFactor.x, iPrC, fogColor;    # blend lit and fog colors

END

We need to comment on a few things here. The first is the choice of blending instruction. On the one hand, we have GLSL's built-in mix function. On the other hand, we have the LRP instruction in the low-level shader, which is specific to ARB_fragment_program. LRP is not available in ARB_vertex_program, where you have to perform blending with a combination of instructions, such as SUB and MAD.
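The blend formulations compute the same value with the operands in a different order. GLSL defines mix(x, y, a) as x * (1 - a) + y * a, and ARB_fragment_program defines LRP d, a, b, c as a * b + (1 - a) * c, which is why the fog listings pass fogColor first to mix but last to LRP. Without LRP, a SUB followed by a MAD gives c + a * (b - c). A small C check of that equivalence, with hypothetical function names:

```c
#include <assert.h>

/* GLSL mix(x, y, a) = x * (1 - a) + y * a */
static float glsl_mix(float x, float y, float a)
{
    return x * (1.0f - a) + y * a;
}

/* ARB_fragment_program LRP d, a, b, c:  d = a * b + (1 - a) * c */
static float arb_lrp(float a, float b, float c)
{
    return a * b + (1.0f - a) * c;
}

/* Without LRP (for example, in ARB_vertex_program):
   SUB tmp, b, c;  MAD d, a, tmp, c;   =>  d = a * (b - c) + c */
static float sub_mad(float a, float b, float c)
{
    float tmp = b - c;      /* SUB */
    return a * tmp + c;     /* MAD */
}
```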

Another thing to notice is how we have chosen to make the density an externally set constant rather than a hard-coded one. This way, we can tie the density to keystrokes. When the user hits the left or right arrows, we update the density shader constant with a new value without having to change the shader text at all. As a general rule, constant values that you may want to change at some point should not be hard-coded, but all others should be. By hard-coding a value, you give the OpenGL implementation's optimizing compiler an early opportunity to use this information to possibly make your shader run even faster.

Now that we've created fog the hard way, we can look at a shortcut in the low-level ARB_fragment_program extension that lets us request fog with a single line:

!!ARBfp1.0
OPTION ARB_fog_exp2;
...

Also available are ARB_fog_exp and ARB_fog_linear. These shortcuts were made available to ease the transition for application developers who were accustomed to the convenience of just setting glEnable(GL_FOG).

Image processing is another application of fragment shaders that doesn't depend on vertex shader assistance. After drawing the scene without fragment shaders, we can apply convolution kernels to post-process the image in a variety of ways.

To keep the shaders concise and improve the probability of their being hardware-accelerated on a wider range of hardware, we've limited the kernel size to 3×3. Feel free to experiment with larger kernel sizes.

Within the sample application, glCopyTexImage2D is called to copy the contents of the framebuffer into a texture. The texture size is chosen to be the largest power-of-2 size smaller than the window. A fragment-shaded quad is then drawn centered within the window with the same dimensions as the texture, with a base texture coordinate ranging from (0,0) in the lower left to (1,1) in the upper right.
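A minimal C sketch of the texture sizing and placement just described. It reads "largest power-of-2 size smaller than the window" as "no larger than" each window dimension, so a 512-pixel dimension keeps a 512-texel texture; that reading, and the helper names, are assumptions rather than code from the sample application.

```c
#include <assert.h>

/* Largest power of two that does not exceed 'size' (size >= 1). */
static int largest_pow2_not_above(int size)
{
    assert(size >= 1);
    int p = 1;
    while (p <= size / 2)     /* doubling keeps p <= size; no overflow */
        p *= 2;
    return p;
}

/* Texture size and lower-left corner (window pixels) of a
   texture-sized quad centered in the window. */
static void centered_quad(int winW, int winH, int *x0, int *y0,
                          int *texW, int *texH)
{
    *texW = largest_pow2_not_above(winW);
    *texH = largest_pow2_not_above(winH);
    *x0 = (winW - *texW) / 2;
    *y0 = (winH - *texH) / 2;
}
```

For an 800×600 window this gives a 512×512 texture whose quad starts at pixel (144, 44).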

The fragment shader takes its base texture coordinate and performs a texture lookup to obtain the center sample of the 3×3 kernel neighborhood. It then applies eight different offsets to look up samples for the other eight spots in the neighborhood. Finally, the shader applies a filter to the neighborhood to yield a new color for the center of the neighborhood. Each sample shader provides a different filter commonly used for image-processing tasks.

Blurring may be the most commonly applied filter in everyday use. It smooths out high-frequency features, such as the jaggies along object edges. It is also called a low-pass filter because it lets low-frequency features pass through while filtering out high-frequency features.

Because we're using only a 3×3 kernel, the blur is not overly dramatic in a single pass. We could make it more blurry by using a larger kernel or, as we do here, by applying the blur filter multiple times in successive passes. Figure 23.5 shows the results of the blur filter in Listings 23.11 and 23.12 after five passes.

Listing 23.11. Post-Process Blur High-Level Fragment Shader

// blur.fs
//
// blur (low-pass) 3x3 kernel

uniform sampler2D sampler0;
uniform vec2 tc_offset[9];

void main(void)
{
    vec4 sample[9];

    for (int i = 0; i < 9; i++)
    {
        sample[i] = texture2D(sampler0,
                              gl_TexCoord[0].st + tc_offset[i]);
    }

    //   1 2 1
    //   2 1 2   / 13
    //   1 2 1

    gl_FragColor = (sample[0] + (2.0*sample[1]) + sample[2] +
                    (2.0*sample[3]) + sample[4] + (2.0*sample[5]) +
                    sample[6] + (2.0*sample[7]) + sample[8]) / 13.0;
}

Listing 23.12. Post-Process Blur Low-Level Fragment Shader

!!ARBfp1.0
# blur.fp
#
# blur (low-pass) 3x3 kernel

ATTRIB iTC0 = fragment.texcoord[0];       # input texcoord
OUTPUT oPrC = result.color;               # output color
TEMP tc0, tc1, tc2, tc3, tc4, tc5, tc6, tc7, tc8;

ADD tc0, iTC0, program.local[0];
ADD tc1, iTC0, program.local[1];
ADD tc2, iTC0, program.local[2];
ADD tc3, iTC0, program.local[3];
ADD tc4, iTC0, program.local[4];
ADD tc5, iTC0, program.local[5];
ADD tc6, iTC0, program.local[6];
ADD tc7, iTC0, program.local[7];
ADD tc8, iTC0, program.local[8];

TEX tc0, tc0, texture[0], 2D;
TEX tc1, tc1, texture[0], 2D;
TEX tc2, tc2, texture[0], 2D;
TEX tc3, tc3, texture[0], 2D;
TEX tc4, tc4, texture[0], 2D;
TEX tc5, tc5, texture[0], 2D;
TEX tc6, tc6, texture[0], 2D;
TEX tc7, tc7, texture[0], 2D;
TEX tc8, tc8, texture[0], 2D;

#   1 2 1
#   2 1 2   / 13
#   1 2 1

ADD tc0, tc0, tc2;
ADD tc2, tc4, tc6;
ADD tc0, tc0, tc2;
ADD tc0, tc0, tc8;
ADD tc1, tc1, tc3;
ADD tc3, tc5, tc7;
ADD tc1, tc1, tc3;
MAD tc0, tc1, 2.0, tc0;
MUL oPrC, tc0, 0.076923;                  # 1/13

END

The first thing we do in the blur shaders is generate our nine texture coordinates. This is accomplished by adding precomputed constant offsets to the interpolated base texture coordinate. The offsets were computed taking into account the size of the texture such that the neighboring texels to the north, south, east, west, northeast, southeast, northwest, and southwest could be obtained by a simple 2D texture lookup. In the low-level version, texture[0] indicates that the texture lookup takes place on texture unit 0. In the GLSL version, you have to use a special-purpose uniform called a sampler, just as we did in the heat signature shader. The application sets the sampler's value to the number of the texture unit in use.
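One way to compute `tc_offset` on the application side is sketched below in C. It assumes the offsets are in normalized texture coordinates (one texel is 1/width by 1/height) and that entry 4 is the center, which matches the sharpen and Laplacian shaders treating `sample[4]` as the center; the ordering of the other eight entries in the sample code is an assumption, and the symmetric kernels in this chapter do not depend on it.

```c
#include <assert.h>

/* Fill offsets[9][2] with texture-coordinate offsets for a 3x3
   neighborhood of a width x height texture. Entry 4 is the center
   (0, 0); one texel is 1/width horizontally and 1/height vertically. */
static void compute_tc_offsets(int width, int height, float offsets[9][2])
{
    assert(width > 0 && height > 0);
    float xInc = 1.0f / (float)width;
    float yInc = 1.0f / (float)height;
    for (int i = 0; i < 3; i++)          /* horizontal: -1, 0, +1 texel */
        for (int j = 0; j < 3; j++) {    /* vertical:   -1, 0, +1 texel */
            offsets[i * 3 + j][0] = (float)(i - 1) * xInc;
            offsets[i * 3 + j][1] = (float)(j - 1) * yInc;
        }
}
```

In the GLSL path, the 18 floats could then be uploaded with glUniform2fv(location, 9, &offsets[0][0]) after looking up the location of `tc_offset`; in the ARB path, each pair would go into program.local[0] through program.local[8] with glProgramLocalParameter4fARB, with z and w set to 0 so that the ADD leaves the other coordinate components unchanged.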

This neighborhood is obtained the same way in all our image processing shaders. It is the filter applied to the neighborhood that differs in each shader. In the case of the blur filter, the texel neighborhood is multiplied by a 3×3 kernel of coefficients (1s and 2s), which add up to 13. The resulting values are all summed and averaged by dividing by 13, resulting in the new color for the texel. Note that we could have made the kernel coefficient values 1/13 and 2/13 instead of 1 and 2, but that would have required many extra multiplies. It is simpler and cheaper for us to factor out the 1/13 and just apply it at the end.

Try experimenting with the filter coefficients. What if, for example, you put a weight of 1 at each corner and then divide by 4? Notice what happens when you divide by more or less than the sum of the coefficients: The scene grows darker or lighter. That makes sense. If your scene were all white, you would be effectively multiplying the filter coefficients by 1 and adding them up. If you don't divide by the sum of the coefficients, you'll end up with a color other than white.
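The neighborhood-times-kernel idea, and the effect of the divisor, can be tried on the CPU. The C sketch below applies an arbitrary 3×3 kernel to one channel of a small image, clamping at the borders in the spirit of GL_CLAMP_TO_EDGE (an assumption; the shaders rely on whatever wrap mode the texture uses). `convolve3x3` is a hypothetical helper, not part of the sample code.

```c
#include <assert.h>

/* Apply a 3x3 kernel (row-major) to one channel of a w x h image at
   (x, y) and divide by 'divisor'. Border samples are clamped. */
static float convolve3x3(const float *img, int w, int h, int x, int y,
                         const float kernel[9], float divisor)
{
    assert(divisor != 0.0f);
    float sum = 0.0f;
    for (int ky = -1; ky <= 1; ky++)
        for (int kx = -1; kx <= 1; kx++) {
            int sx = x + kx, sy = y + ky;
            sx = sx < 0 ? 0 : (sx >= w ? w - 1 : sx);
            sy = sy < 0 ? 0 : (sy >= h ? h - 1 : sy);
            sum += kernel[(ky + 1) * 3 + (kx + 1)] * img[sy * w + sx];
        }
    return sum / divisor;
}

/* Blur kernel from Listings 23.11 and 23.12: weights sum to 13. */
static const float BLUR[9] = { 1, 2, 1,
                               2, 1, 2,
                               1, 2, 1 };
```

Dividing an all-white neighborhood by 4 instead of 13 yields 3.25, which a fixed-point framebuffer would clamp to full white; dividing by more than 13 would darken it instead.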

Sharpening is the opposite of blurring. Some examples of its use include making edges more pronounced and making text more readable. Figure 23.6 illustrates the use of sharpening, applying the filter in two passes.

Here is the GLSL code for applying the sharpen filter:

// sharpen.fs
//
// 3x3 sharpen kernel

uniform sampler2D sampler0;
uniform vec2 tc_offset[9];

void main(void)
{
    vec4 sample[9];

    for (int i = 0; i < 9; i++)
    {
        sample[i] = texture2D(sampler0,
                              gl_TexCoord[0].st + tc_offset[i]);
    }

    //   -1 -1 -1
    //   -1  9 -1
    //   -1 -1 -1

    gl_FragColor = (sample[4] * 9.0) -
                   (sample[0] + sample[1] + sample[2] +
                    sample[3] + sample[5] +
                    sample[6] + sample[7] + sample[8]);
}

All our image processing low-level shaders look the same up to and including the part where they perform the texture lookups, so we'll concentrate on the parts that are different: the application of the convolution kernel. The low-level code for sharpening is as follows:

!!ARBfp1.0
# sharpen.fp
#
# 3x3 sharpen kernel

...

#   -1 -1 -1
#   -1  9 -1
#   -1 -1 -1

ADD tc0, -tc0, -tc1;
ADD tc0, tc0, -tc2;
ADD tc0, tc0, -tc3;
ADD tc0, tc0, -tc5;
ADD tc0, tc0, -tc6;
ADD tc0, tc0, -tc7;
ADD tc0, tc0, -tc8;
MAD oPrC, tc4, 9.0, tc0;

END

Notice how this kernel also sums to 1, as did the blur filter once its coefficients were divided by 13. This property guarantees that, on average, the filter neither increases nor decreases the brightness. It only exaggerates local changes in brightness, as desired.
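The claim that a kernel summing to 1 preserves average brightness is easy to check numerically. In this C sketch, the sharpen kernel leaves a flat patch unchanged but overshoots on the bright side of an edge and undershoots on the dark side; a fixed-point framebuffer would then clamp those to 1 and 0, which is the extra contrast you see. The helper names are illustrative.

```c
#include <assert.h>

/* Sharpen kernel from sharpen.fs: center 9, neighbors -1; sum = 1. */
static const float SHARPEN[9] = { -1, -1, -1,
                                  -1,  9, -1,
                                  -1, -1, -1 };

/* Weighted sum of a 3x3 neighborhood n[9] (row-major, center n[4]). */
static float apply3x3(const float n[9], const float k[9])
{
    float sum = 0.0f;
    for (int i = 0; i < 9; i++)
        sum += k[i] * n[i];
    return sum;
}

/* Sum of the kernel coefficients. */
static float kernel_sum(const float k[9])
{
    float s = 0.0f;
    for (int i = 0; i < 9; i++)
        s += k[i];
    return s;
}
```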

Dilation and erosion are morphological filters, meaning they alter the shape of objects. Dilation grows the size of bright objects, whereas erosion shrinks the size of bright objects. (They each have the reverse effect on dark objects.) Figures 23.7 and 23.8 show the effects of three passes of dilation and erosion, respectively.

Dilation simply finds the maximum value in the neighborhood:

// dilation.fs
//
// maximum of 3x3 kernel

uniform sampler2D sampler0;
uniform vec2 tc_offset[9];

void main(void)
{
    vec4 sample[9];
    vec4 maxValue = vec4(0.0);

    for (int i = 0; i < 9; i++)
    {
        sample[i] = texture2D(sampler0,
                              gl_TexCoord[0].st + tc_offset[i]);
        maxValue = max(sample[i], maxValue);
    }

    gl_FragColor = maxValue;
}

!!ARBfp1.0
# dilation.fp
#
# maximum of 3x3 kernel

...

MAX tc0, tc0, tc1;
MAX tc0, tc0, tc2;
MAX tc0, tc0, tc3;
MAX tc0, tc0, tc4;
MAX tc0, tc0, tc5;
MAX tc0, tc0, tc6;
MAX tc0, tc0, tc7;
MAX oPrC, tc0, tc8;

END

Erosion conversely finds the minimum value in the neighborhood:

// erosion.fs
//
// minimum of 3x3 kernel

uniform sampler2D sampler0;
uniform vec2 tc_offset[9];

void main(void)
{
    vec4 sample[9];
    vec4 minValue = vec4(1.0);

    for (int i = 0; i < 9; i++)
    {
        sample[i] = texture2D(sampler0,
                              gl_TexCoord[0].st + tc_offset[i]);
        minValue = min(sample[i], minValue);
    }

    gl_FragColor = minValue;
}

!!ARBfp1.0
# erosion.fp
#
# minimum of 3x3 kernel

...

MIN tc0, tc0, tc1;
MIN tc0, tc0, tc2;
MIN tc0, tc0, tc3;
MIN tc0, tc0, tc4;
MIN tc0, tc0, tc5;
MIN tc0, tc0, tc6;
MIN tc0, tc0, tc7;
MIN oPrC, tc0, tc8;

END
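The effect of one pass on a single bright pixel shows the shape change directly. This C sketch runs one 3×3 max (dilation) or min (erosion) pass over a small single-channel image on the CPU, clamping at the borders; it starts from the center value instead of 0 or 1, which gives the same result for colors in [0,1]. `morph3x3` is a hypothetical helper.

```c
#include <assert.h>

/* One 3x3 dilation (dilate != 0, max) or erosion (dilate == 0, min)
   pass over a w x h single-channel image; borders are clamped. */
static void morph3x3(const float *src, float *dst, int w, int h, int dilate)
{
    assert(w > 0 && h > 0);
    for (int y = 0; y < h; y++)
        for (int x = 0; x < w; x++) {
            float v = src[y * w + x];
            for (int dy = -1; dy <= 1; dy++)
                for (int dx = -1; dx <= 1; dx++) {
                    int sx = x + dx, sy = y + dy;
                    sx = sx < 0 ? 0 : (sx >= w ? w - 1 : sx);
                    sy = sy < 0 ? 0 : (sy >= h ? h - 1 : sy);
                    float s = src[sy * w + sx];
                    if (dilate ? s > v : s < v)
                        v = s;
                }
            dst[y * w + x] = v;
        }
}
```

A lone bright pixel grows into a 3×3 block after one dilation pass and disappears entirely after one erosion pass.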

One last filter class worthy of mention here is edge detectors. They do just what you would expect—detect edges. Edges are simply places in an image where the color changes rapidly, and edge detection filters pick up on these rapid changes and highlight them.

Three widely used edge detectors are Laplacian, Sobel, and Prewitt. Sobel and Prewitt are gradient filters: each kernel approximates the first derivative of each color channel's intensity, but only in a single direction. Laplacian, on the other hand, approximates the second derivative and detects its zero-crossings, where the intensity gradient suddenly changes from getting darker to getting lighter, or vice versa. It works for edges of any orientation.
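The directional behavior can be seen by applying the kernels to a vertical edge and a horizontal edge. The C sketch below uses the standard x-direction Sobel and Prewitt kernels (the y-direction versions are their transposes) alongside the Laplacian from the listings; the sample code's gradient kernels may be oriented differently.

```c
#include <assert.h>

/* 3x3 kernels, row-major, center at index 4. The x-direction gradient
   kernels respond only to vertical edges; the Laplacian responds to
   both the vertical and the horizontal edge. */
static const float SOBEL_X[9]   = { -1, 0, 1,  -2, 0, 2,  -1, 0, 1 };
static const float PREWITT_X[9] = { -1, 0, 1,  -1, 0, 1,  -1, 0, 1 };
static const float LAPLACIAN[9] = { -1, -1, -1, -1, 8, -1, -1, -1, -1 };

/* Weighted sum of a 3x3 neighborhood n[9] under kernel k[9]. */
static float apply3x3(const float n[9], const float k[9])
{
    float sum = 0.0f;
    for (int i = 0; i < 9; i++)
        sum += k[i] * n[i];
    return sum;
}
```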

Because the differences in their results are subtle, Figure 23.9 shows the results from only one of them, the Laplacian filter. Try out the others and examine their shaders at your leisure in the accompanying sample code.

The Laplacian's filter code is almost identical to the sharpen code we just looked at:

// laplacian.fs
//
// Laplacian edge detection

uniform sampler2D sampler0;
uniform vec2 tc_offset[9];

void main(void)
{
    vec4 sample[9];

    for (int i = 0; i < 9; i++)
    {
        sample[i] = texture2D(sampler0,
                              gl_TexCoord[0].st + tc_offset[i]);
    }

    //   -1 -1 -1
    //   -1  8 -1
    //   -1 -1 -1

    gl_FragColor = (sample[4] * 8.0) -
                   (sample[0] + sample[1] + sample[2] +
                    sample[3] + sample[5] +
                    sample[6] + sample[7] + sample[8]);
}

!!ARBfp1.0
# laplacian.fp
#
# Laplacian edge detection

...

#   -1 -1 -1
#   -1  8 -1
#   -1 -1 -1

ADD tc0, -tc0, -tc1;
ADD tc0, tc0, -tc2;
ADD tc0, tc0, -tc3;
ADD tc0, tc0, -tc5;
ADD tc0, tc0, -tc6;
ADD tc0, tc0, -tc7;
ADD tc0, tc0, -tc8;
MAD oPrC, tc4, 8.0, tc0;

END

The difference, of course, is that the center kernel value is 8 rather than the 9 present in the sharpen kernel. The coefficients sum up to 0 rather than 1. This explains the blackness of the image. Instead of, on average, retaining its original brightness, the edge detection kernel will produce 0 in areas of the image with no color change.
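Because the Laplacian coefficients sum to 0, any flat patch produces 0 no matter how bright it is. This C sketch also clamps the response to [0,1] the way a fixed-point color buffer would, which shows that on the dark side of an edge the response is negative and is stored as black as well. `laplacian_clamped` is an illustrative name.

```c
#include <assert.h>

static const float LAPLACIAN[9] = { -1, -1, -1,
                                    -1,  8, -1,
                                    -1, -1, -1 };

/* Laplacian response at the center of a 3x3 neighborhood n[9],
   clamped to [0,1] as a fixed-point color buffer would store it. */
static float laplacian_clamped(const float n[9])
{
    float sum = 0.0f;
    for (int i = 0; i < 9; i++)
        sum += LAPLACIAN[i] * n[i];
    return sum < 0.0f ? 0.0f : (sum > 1.0f ? 1.0f : sum);
}
```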

Welcome back to another discussion of lighting shaders. In the preceding chapter, we covered per-vertex lighting. We also described a couple of per-fragment fixed functionality tricks to improve the per-vertex results: separate specular with color sum and power function texture for specular exponent. In this chapter, we perform all our lighting calculations in the fragment shader to obtain the greatest accuracy.

The shaders here will look very familiar. The same lighting equations are implemented, so the code is virtually identical. One new thing is the use of vertex shaders and fragment shaders together. The vertex shader sets up the data that needs to be interpolated across the line or triangle, such as normals and light vectors. The fragment shader then proceeds to do most of the work, resulting in a final color.

As a refresher, the equation for diffuse lighting follows:

$$C_{\mathrm{diff}} = \max\{\mathbf{N} \cdot \mathbf{L},\ 0\} \cdot C_{\mathrm{mat}} \cdot C_{\mathrm{li}}$$

You need a vertex shader that generates both normal and light vectors. Listings 23.13 and 23.14 contain the high-level and low-level vertex shader source to generate these necessary interpolants for diffuse lighting.

Listing 23.13. Diffuse Lighting Interpolant Generating High-Level Vertex Shader

// diffuse.vs
//
// setup interpolants for diffuse lighting

uniform vec3 lightPos0;
varying vec3 N, L;

void main(void)
{
    // vertex MVP transform
    gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;

    // eye-space normal
    N = gl_NormalMatrix * gl_Normal;

    // eye-space light vector
    vec4 V = gl_ModelViewMatrix * gl_Vertex;
    L = lightPos0 - V.xyz;

    // Copy the primary color
    gl_FrontColor = gl_Color;
}

Listing 23.14. Diffuse Lighting Interpolant Generating Low-Level Vertex Shader

!!ARBvp1.0
# diffuse.vp
#
# setup interpolants for diffuse lighting

ATTRIB iPos = vertex.position;            # input position
ATTRIB iPrC = vertex.color.primary;       # input primary color
ATTRIB iNrm = vertex.normal;              # input normal
OUTPUT oPos = result.position;            # output position
OUTPUT oPrC = result.color.primary;       # output primary color
OUTPUT oTC0 = result.texcoord[0];         # output texcoord 0
OUTPUT oTC1 = result.texcoord[1];         # output texcoord 1
PARAM mvp[4] = { state.matrix.mvp };      # model-view * projection matrix
PARAM mv[4] = { state.matrix.modelview }; # model-view matrix
# inverse transpose of model-view matrix:
PARAM mvIT[4] = { state.matrix.modelview.invtrans };
PARAM lightPos = program.local[0];        # light pos in eye space
TEMP V;                                   # temporary register

DP4 oPos.x, iPos, mvp[0];                 # xform input pos by MVP
DP4 oPos.y, iPos, mvp[1];
DP4 oPos.z, iPos, mvp[2];
DP4 oPos.w, iPos, mvp[3];

DP4 V.x, iPos, mv[0];                     # xform input pos by MV
DP4 V.y, iPos, mv[1];
DP4 V.z, iPos, mv[2];
DP4 V.w, iPos, mv[3];

DP3 oTC0.x, iNrm, mvIT[0];                # xform norm to eye space
DP3 oTC0.y, iNrm, mvIT[1];
DP3 oTC0.z, iNrm, mvIT[2];                # put N in texcoord 0

SUB oTC1, lightPos, V;                    # light vector in texcoord 1
MOV oPrC, iPrC;                           # copy primary color in to out

END

When using low-level shaders, we're stuck tossing the normal and light vector into a standard texture coordinate for interpolation. However, notice how we are able to give descriptive names N and L to our interpolants, known as varyings in GLSL. They have to match the names used in the fragment shader. All in all, this feature makes the high-level shaders much more readable and less error-prone. For example, if we're not careful in the low-level shaders, we might accidentally output L into texture coordinate 0, whereas the fragment shader is expecting it in texture coordinate 1. No compile error would be thrown. GLSL, on the other hand, matches them up automatically by name, keeping us out of trouble and at the same time avoiding the need for tedious comments in code explaining the contents of each interpolant.

The diffuse lighting fragment shaders resulting in Figure 23.10 follow in Listings 23.15 and 23.16. Unlike colors produced by specular lighting, diffuse lit colors do not change rapidly across a line or triangle, so you will probably not be able to distinguish between per-vertex and per-fragment diffuse lighting. For this reason, in general, it would be more efficient to perform diffuse lighting in the vertex shader, as we did in the preceding chapter. We perform it here per-fragment simply as a learning exercise.

Listing 23.15. Diffuse Lighting High-Level Fragment Shader

// diffuse.fs
//
// per-pixel diffuse lighting

varying vec3 N, L;

void main(void)
{
    // output the diffuse color
    float intensity = max(0.0,
                          dot(normalize(N), normalize(L)));

    gl_FragColor = gl_Color;
    gl_FragColor.rgb *= intensity;
}

Listing 23.16. Diffuse Lighting Low-Level Fragment Shader

!!ARBfp1.0
# diffuse.fp
#
# per-pixel diffuse lighting

ATTRIB iPrC = fragment.color.primary;     # input primary color
ATTRIB iTC0 = fragment.texcoord[0];       # normal (N)
ATTRIB iTC1 = fragment.texcoord[1];       # light vector (L)
OUTPUT oPrC = result.color;               # output color
TEMP N, L, NdotL;

DP3 N.w, iTC0, iTC0;                      # normalize normal
RSQ N.w, N.w;
MUL N, iTC0, N.w;

DP3 L.w, iTC1, iTC1;                      # normalize light vec
RSQ L.w, L.w;
MUL L, iTC1, L.w;

DP3 NdotL, N, L;                          # N . L
MAX NdotL, NdotL, 0.0;                    # max(N . L, 0)
MUL oPrC.rgb, iPrC, NdotL;                # diffuse color
MOV oPrC.a, iPrC.a;                       # preserve alpha

END

First, we normalize the interpolated normal and light vectors. Then one more dot product, a maximum, and a multiply, and we're finished. Because we want a white light, we can save ourselves the additional multiply by the light color Cli = {1, 1, 1, 1}.

Rather than cover specular lighting and multiple lights independently, we'll cover both at the same time. As a refresher, the specular lighting equation is

$$C_{\mathrm{spec}} = \max\{\mathbf{N} \cdot \mathbf{H},\ 0\}^{S_{\mathrm{exp}}} \cdot C_{\mathrm{mat}} \cdot C_{\mathrm{li}}$$

The vertex shaders need to generate light vector interpolants for all three lights, in addition to the normal vector. We'll calculate the half-angle vector in the fragment shader. Listings 23.17 and 23.18 show the vertex shaders for the three diffuse and specular lights.

Listing 23.17. Three Lights High-Level Vertex Shader

// 3lights.vs
//
// setup interpolants for 3 specular lights

uniform vec3 lightPos0;
uniform vec3 lightPos1;
uniform vec3 lightPos2;
varying vec3 N, L[3];

void main(void)
{
    // vertex MVP transform
    gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;

    vec4 V = gl_ModelViewMatrix * gl_Vertex;

    // eye-space normal
    N = gl_NormalMatrix * gl_Normal;

    // Light vectors
    L[0] = lightPos0 - V.xyz;
    L[1] = lightPos1 - V.xyz;
    L[2] = lightPos2 - V.xyz;

    // Copy the primary color
    gl_FrontColor = gl_Color;
}

Listing 23.18. Three Lights Low-Level Vertex Shader

!!ARBvp1.0
# 3lights.vp
#
# setup interpolants for 3 specular lights

ATTRIB iPos = vertex.position;            # input position
ATTRIB iPrC = vertex.color.primary;       # input primary color
ATTRIB iNrm = vertex.normal;              # input normal
OUTPUT oPos = result.position;            # output position
OUTPUT oPrC = result.color.primary;       # output primary color
OUTPUT oTC0 = result.texcoord[0];         # output texcoord 0
OUTPUT oTC1 = result.texcoord[1];         # output texcoord 1
OUTPUT oTC2 = result.texcoord[2];         # output texcoord 2
OUTPUT oTC3 = result.texcoord[3];         # output texcoord 3
PARAM mvp[4] = { state.matrix.mvp };      # model-view * projection matrix
PARAM mv[4] = { state.matrix.modelview }; # model-view matrix
# inverse transpose of model-view matrix:
PARAM mvIT[4] = { state.matrix.modelview.invtrans };
PARAM lightPos0 = program.local[0];       # light pos 0 in eye space
PARAM lightPos1 = program.local[1];       # light pos 1 in eye space
PARAM lightPos2 = program.local[2];       # light pos 2 in eye space
TEMP V;                                   # temporary register

DP4 oPos.x, iPos, mvp[0];                 # xform input pos by MVP
DP4 oPos.y, iPos, mvp[1];
DP4 oPos.z, iPos, mvp[2];
DP4 oPos.w, iPos, mvp[3];

DP4 V.x, iPos, mv[0];                     # xform input pos by MV
DP4 V.y, iPos, mv[1];
DP4 V.z, iPos, mv[2];
DP4 V.w, iPos, mv[3];

DP3 oTC0.x, iNrm, mvIT[0];                # xform norm to eye space
DP3 oTC0.y, iNrm, mvIT[1];
DP3 oTC0.z, iNrm, mvIT[2];                # put N in texcoord 0

SUB oTC1, lightPos0, V;                   # light vector 0 in texcoord 1
SUB oTC2, lightPos1, V;                   # light vector 1 in texcoord 2
SUB oTC3, lightPos2, V;                   # light vector 2 in texcoord 3
MOV oPrC, iPrC;                           # copy primary color in to out

END

The fragment shaders will be doing most of the heavy lifting. Figure 23.11 shows the result of Listings 23.19 and 23.20.

Listing 23.19. Three Diffuse and Specular Lights High-Level Fragment Shader

// 3lights.fs
//
// 3 specular lights

varying vec3 N, L[3];

void main(void)
{
    const float specularExp = 128.0;

    vec3 NN = normalize(N);

    // Light colors
    vec3 lightCol[3];
    lightCol[0] = vec3(1.0, 0.25, 0.25);
    lightCol[1] = vec3(0.25, 1.0, 0.25);
    lightCol[2] = vec3(0.25, 0.25, 1.0);

    gl_FragColor = vec4(0.0);

    for (int i = 0; i < 3; i++)
    {
        vec3 NL = normalize(L[i]);
        vec3 NH = normalize(NL + vec3(0.0, 0.0, 1.0));

        // Accumulate the diffuse contributions
        gl_FragColor.rgb += gl_Color.rgb * lightCol[i] *
                            max(0.0, dot(NN, NL));

        // Accumulate the specular contributions
        gl_FragColor.rgb += lightCol[i] *
                            pow(max(0.0, dot(NN, NH)), specularExp);
    }

    gl_FragColor.a = gl_Color.a;
}

Example 23.20. Three Diffuse and Specular Lights Low-Level Fragment Shader

!!ARBfp1.0

# 3lights.fp

#

# 3 specular lights

ATTRIB iPrC = fragment.color.primary;# input primary color

ATTRIB iTC0 = fragment.texcoord[0]; # normal (N)

ATTRIB iTC1 = fragment.texcoord[1]; # light vector (L) 0

ATTRIB iTC2 = fragment.texcoord[2]; # light vector (L) 1

ATTRIB iTC3 = fragment.texcoord[3]; # light vector (L) 2

OUTPUT oPrC = result.color; # output color

PARAM lightCol0 = { 1.0, 0.25, 0.25, 1.0 }; # light 0 color

PARAM lightCol1 = { 0.25, 1.0, 0.25, 1.0 }; # light 1 color

PARAM lightCol2 = { 0.25, 0.25, 1.0, 1.0 }; # light 2 color

TEMP N, L, H, NdotL, NdotH, finalColor;

ALIAS diffuse = NdotL;

ALIAS specular = NdotH;

DP3 N.w, iTC0, iTC0; # normalize normal

RSQ N.w, N.w;

MUL N, iTC0, N.w;

DP3 L.w, iTC1, iTC1; # normalize light vec 0

RSQ L.w, L.w;

MUL L, iTC1, L.w;

ADD H, L, {0, 0, 1}; # half-angle vector 0

DP3 H.w, H, H; # normalize it

RSQ H.w, H.w;

MUL H, H, H.w;

DP3 NdotL, N, L; # N . L0

MAX NdotL, NdotL, 0.0; # max(N . L, 0)

MUL diffuse, iPrC, NdotL; # diffuse color

MUL finalColor, diffuse, lightCol0;

DP3 NdotH, N, H; # N . H0

MAX NdotH, NdotH, 0.0; # max(N . H, 0)

POW specular, NdotH.x, 128.0.x; # NdotH^128

MAD finalColor, specular, lightCol0, finalColor;

DP3 L.w, iTC2, iTC2; # normalize light vec 1

RSQ L.w, L.w;

MUL L, iTC2, L.w;

ADD H, L, {0, 0, 1}; # half-angle vector 1

DP3 H.w, H, H; # normalize it

RSQ H.w, H.w;

MUL H, H, H.w;

DP3 NdotL, N, L; # N . L1

MAX NdotL, NdotL, 0.0; # max(N . L, 0)

MUL diffuse, iPrC, NdotL; # diffuse color

MAD finalColor, diffuse, lightCol1, finalColor;

DP3 NdotH, N, H; # N . H1

MAX NdotH, NdotH, 0.0; # max(N . H, 0)

POW specular, NdotH.x, 128.0.x; # NdotH^128

MAD finalColor, specular, lightCol1, finalColor;

DP3 L.w, iTC3, iTC3; # normalize light vec 2

RSQ L.w, L.w;

MUL L, iTC3, L.w;

ADD H, L, {0, 0, 1}; # half-angle vector 2

DP3 H.w, H, H; # normalize it

RSQ H.w, H.w;

MUL H, H, H.w;

DP3 NdotL, N, L; # N . L2

MAX NdotL, NdotL, 0.0; # max(N . L, 0)

MUL diffuse, iPrC, NdotL; # diffuse color

MAD finalColor, diffuse, lightCol2, finalColor;

DP3 NdotH, N, H; # N . H2

MAX NdotH, NdotH, 0.0; # max(N . H, 0)

POW specular, NdotH.x, 128.0.x; # NdotH^128

MAD oPrC.rgb, specular, lightCol2, finalColor;

MOV oPrC.a, iPrC.a; # preserve alpha

END

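This time, we made each of the three lights a different color instead of white. That requires an additional multiply by each light's color: lightCol[i] in GLSL, and lightCol0, lightCol1, and lightCol2 in the low-level shader. The low-level language has no loops, and it shows. The per-light code has to be written out three times, which makes the low-level shader more than three times as long as its GLSL counterpart.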

When can you texture map an object without using any textures? When you're using procedural texture maps. This technique enables you to apply colors or other surface properties to an object, just like using conventional texture maps. With conventional texture maps, you load a texture image into OpenGL with glTexImage; then you perform a texture lookup within your fragment shader. However, with procedural texture mapping, you skip the texture loading and texture lookup and instead describe algorithmically what the texture looks like.

Procedural texture mapping has advantages and disadvantages. One advantage is that its storage requirements are measured in terms of a few shader instructions rather than the megabytes of texture memory and/or system memory consumed by conventional textures. This frees your storage for other uses, such as the vertex buffer objects discussed in Chapter 16, “Buffer Objects: It's Your Video Memory; You Manage It!”

Another benefit is its virtually limitless resolution. Like vector drawings versus raster drawings, procedural textures scale to any size without loss of quality. Conventional textures require you to increase texture image sizes to improve quality when greatly magnified. Eventually, you'll hit a hardware limit. The only hardware limit affecting procedural texture quality is the floating-point precision of the shader processors, which are required to be at least 24-bit for OpenGL.

A disadvantage of procedural texture maps, and the reason they're not used more frequently, is that the complexity of the texture you want to represent requires an equally complex fragment shader. Everything from simple shapes and colors all the way to complex plasma, fire, smoke, marble, or wood grain can be achieved with procedural textures, given enough shader instructions to work with. But sometimes you just want the company logo or a satellite map or someone's face textured onto your scene. Certainly, conventional textures will always serve a purpose!

Enough discussion. Let's warm up with our first procedural texture: a 3D checkerboard. Our object will appear to be cut out of a block of alternating white and black cubes. Sounds simple enough, right?

We'll use the object-space position at each fragment to decide what color to make that fragment. So we need a vertex shader that, in addition to transforming the object-space position to clip-space as usual, also copies that object-space position into an interpolant so it becomes available to the fragment shader. While we're at it, we might as well add diffuse and specular lighting, so our vertex shader needs to output the normal and light vector as well.
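Here is a rough CPU-side illustration of those per-vertex outputs in C. It computes the eye-space light vector and passes the object-space position through untouched. The `mat4`/`vec4` types and `procedural_vertex` are hypothetical names. The sketch assumes, as OpenGL does, a column-major model-view matrix and a light position that is already in eye space. The normal transform is left out for brevity.

```c
#include <assert.h>

/* Hypothetical helper types for this sketch. */
typedef struct { float x, y, z, w; } vec4;
typedef struct { float m[16]; } mat4;   /* column-major: element (row r, col c) is m[c*4 + r] */

static vec4 mat4_mul(const mat4 *M, vec4 v)
{
    const float *m = M->m;
    vec4 r = {
        m[0] * v.x + m[4] * v.y + m[8]  * v.z + m[12] * v.w,
        m[1] * v.x + m[5] * v.y + m[9]  * v.z + m[13] * v.w,
        m[2] * v.x + m[6] * v.y + m[10] * v.z + m[14] * v.w,
        m[3] * v.x + m[7] * v.y + m[11] * v.z + m[15] * v.w
    };
    return r;
}

/* Mirrors two of checkerboard.vs's outputs: the eye-space light vector L
   and the object-space position V (normalized later, per fragment). */
static void procedural_vertex(const mat4 *modelView, vec4 objPos,
                              const float lightPosEye[3],
                              float L[3], float V[3])
{
    assert(modelView);
    vec4 eye = mat4_mul(modelView, objPos);   /* gl_ModelViewMatrix * gl_Vertex */
    L[0] = lightPosEye[0] - eye.x;
    L[1] = lightPosEye[1] - eye.y;
    L[2] = lightPosEye[2] - eye.z;
    V[0] = objPos.x;                          /* object space: no matrix applied */
    V[1] = objPos.y;
    V[2] = objPos.z;
}
```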

Listings 23.21 and 23.22 show the high-level and low-level versions of this vertex shader. We'll use it for all three of our procedural texture mapping samples.

Example 23.21. Procedural Texture Mapping High-Level Vertex Shader

// checkerboard.vs

//

// Generic vertex transformation,

// copy object-space position and

// lighting vectors out to interpolants

uniform vec3 lightPos;

varying vec3 N, L, V;

void main(void)

{

// normal MVP transform

gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;

// pass object-space position through; the fragment shader normalizes it

V = gl_Vertex.xyz;

// eye-space normal

N = gl_NormalMatrix * gl_Normal;

// eye-space light vector

vec4 Veye = gl_ModelViewMatrix * gl_Vertex;

L = lightPos - Veye.xyz;

}

Example 23.22. Procedural Texture Mapping Low-Level Vertex Shader

!!ARBvp1.0

# checkerboard.vp

#

# Generic vertex transformation,

# copy object-space position and

# light vectors out to interpolants

ATTRIB iPos = vertex.position; # input position

ATTRIB iNrm = vertex.normal; # input normal

OUTPUT oPos = result.position; # output position

OUTPUT oTC0 = result.texcoord[0]; # output texcoord 0: N

OUTPUT oTC1 = result.texcoord[1]; # output texcoord 1: L

OUTPUT oTC2 = result.texcoord[2]; # output texcoord 2: V

PARAM mvp[4] = { state.matrix.mvp }; # model-view * proj matrix

PARAM mv[4] = { state.matrix.modelview }; # model-view matrix

# inverse transpose of model-view matrix:

PARAM mvIT[4] = { state.matrix.modelview.invtrans };

PARAM lightPos = program.local[0]; # light pos in eye space

TEMP V; # temporary register

DP4 oPos.x, iPos, mvp[0]; # xform input pos by MVP

DP4 oPos.y, iPos, mvp[1];

DP4 oPos.z, iPos, mvp[2];

DP4 oPos.w, iPos, mvp[3];

DP4 V.x, iPos, mv[0]; # xform input pos by MV

DP4 V.y, iPos, mv[1];

DP4 V.z, iPos, mv[2];

DP4 V.w, iPos, mv[3];

DP3 oTC0.x, iNrm, mvIT[0]; # xform norm to eye space

DP3 oTC0.y, iNrm, mvIT[1];

DP3 oTC0.z, iNrm, mvIT[2]; # put N in texcoord 0

SUB oTC1, lightPos, V; # light vector in texcoord 1

MOV oTC2, iPos; # put objPos in texcoord 2

END

The object we're using for our samples is a sphere centered at the object-space origin. The size of the sphere doesn't matter because we normalize the object-space position at the beginning of the fragment shader, projecting it onto the unit sphere. This means that every component of the positions we deal with in the fragment shader will be in the range [–1,1].

Our strategy for the fragment shader will be to break up the range [–1,1] into eight alternating blocks along each axis. Each block will be assigned an alternating value of 0 or 1 for each axis, as illustrated in Figure 23.12. If the total of the three values is even, we paint it black; otherwise, we paint it white.

Figure 23.13 shows the result of Listings 23.23 and 23.24, which implement our checkerboard procedural texture mapping algorithm.

Example 23.23. Checkerboard High-Level Fragment Shader

// checkerboard.fs

//

// 3D solid checker grid

varying vec3 V; // object-space position

varying vec3 N; // eye-space normal

varying vec3 L; // eye-space light vector

const vec3 onColor = vec3(1.0, 1.0, 1.0);

const vec3 offColor = vec3(0.0, 0.0, 0.0);

const float ambientLighting = 0.2;

const float specularExp = 60.0;

const float specularIntensity = 0.75;

const int numSquaresPerSide = 8;

void main (void)

{

// Normalize vectors

vec3 NN = normalize(N);

vec3 NL = normalize(L);

vec3 NV = normalize(V);

vec3 NH = normalize(NL + vec3(0.0, 0.0, 1.0));

// Map -1,1 to 0,numSquaresPerSide

vec3 onOrOff = ((NV + 1.0) * float(numSquaresPerSide)) / 2.0;

// mod 2 >= 1

onOrOff = step(1.0, mod(onOrOff, 2.0));

// 3-way xor

onOrOff.x = step(0.5,

mod(onOrOff.x + onOrOff.y + onOrOff.z, 2.0));

// checkerboard grid

vec3 surfColor = mix(offColor, onColor, onOrOff.x);

// calculate diffuse lighting + 20% ambient

surfColor *= (ambientLighting + vec3(max(0.0, dot(NN, NL))));

// calculate specular lighting w/ 75% intensity

surfColor += (specularIntensity *

vec3(pow(max(0.0, dot(NN, NH)), specularExp)));

gl_FragColor = vec4(surfColor, 1.0);

}

Example 23.24. Checkerboard Low-Level Fragment Shader

!!ARBfp1.0

# checkerboard.fp

#

# 3D solid checker grid

ATTRIB N = fragment.texcoord[0];

ATTRIB L = fragment.texcoord[1];

ATTRIB V = fragment.texcoord[2]; # obj-space position

OUTPUT oPrC = result.color; # output color

PARAM onColor = {1.0, 1.0, 1.0, 1.0};

PARAM offColor = {0.0, 0.0, 0.0, 1.0};

# 0.25 * squares per side, ambient lighting,

# specular exponent, specular intensity

PARAM misc = {2.0, 0.2, 60.0, 0.75};

TEMP NV, NN, NL, NH, NdotL, NdotH, surfColor, onOrOff;

ALIAS specular = NdotH;

DP3 NV.w, V, V; # normalize vertex pos

RSQ NV.w, NV.w;

MUL NV, V, NV.w;

# Map position from -1,1 to 0,numSquaresPerSide/2

MAD onOrOff, NV, misc.x, misc.x;

# mod2 by doubling FRC, then subtract 1 for >= 1 compare

FRC onOrOff, onOrOff;

MAD onOrOff, onOrOff, 2.0, -1.0;

CMP onOrOff, onOrOff, 0.0, 1.0;

# perform xor by adding all 3 axes' onoroff values,

# then mod2 again

DP3 onOrOff, onOrOff, 1.0;

MUL onOrOff, onOrOff, 0.5;

FRC onOrOff, onOrOff;

MAD onOrOff, onOrOff, 2.0, -1.0;

CMP onOrOff, onOrOff, 0.0, 1.0;

# checkerboard grid

LRP surfColor, onOrOff, onColor, offColor;

DP3 NN.w, N, N; # normalize normal

RSQ NN.w, NN.w;

MUL NN, N, NN.w;

DP3 NL.w, L, L; # normalize light vec

RSQ NL.w, NL.w;

MUL NL, L, NL.w;

ADD NH, NL, {0, 0, 1}; # half-angle vector

DP3 NH.w, NH, NH; # normalize it

RSQ NH.w, NH.w;

MUL NH, NH, NH.w;

# diffuse lighting

DP3 NdotL, NN, NL; # N . L

MAX NdotL, NdotL, 0.0; # max(N . L, 0)

ADD NdotL, NdotL, misc.y; # 20% ambient

MUL surfColor, surfColor, NdotL; # factor in diffuse color

# specular lighting

DP3 NdotH, NN, NH; # N . H

MAX NdotH, NdotH, 0.0; # max(N . H, 0)

POW specular, NdotH.x, misc.z; # NdotH^60

MAD oPrC, misc.w, specular, surfColor; # 75% specular intensity

END

GLSL has a built-in modulo function (mod), which we use to create the alternating blocks. In the low-level fragment shader, however, we have to work a little harder to perform the modulo 2 operation. We divide the value by 2, use the FRC instruction to get the fractional part, and then multiply that by 2. What we're left with is a value in the range [0,2). For the first modulo in Listing 23.24, the division by 2 is folded into the MAD that maps the position into range.

Next, we must determine whether the value is within [0,1] or [1,2]. We do this in GLSL using the step function, which returns 1 if the second argument is greater than or equal to the first, and 0 otherwise. In the low-level shader, we can do this using the CMP instruction, but because it compares the first argument to 0, not 1, we first subtract 1 from the argument.

Now that we have a value of 0 or 1 on each axis, we sum those three values and again perform modulo 2 and a greater than or equal to comparison. That way, we can assign colors of black or white based on whether the final sum is even or odd. We accomplish this with mix in the high-level shader or LRP in the low-level version.

You can very easily alter the shaders to change the checkerboard colors or to adjust the number of blocks per row. Give it a try!

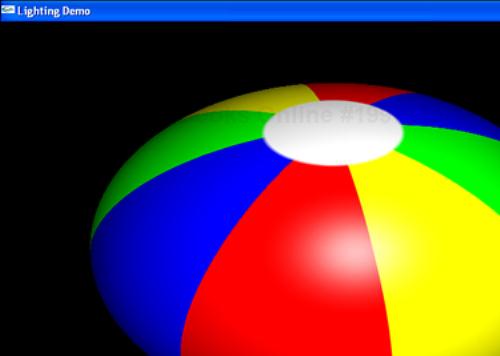

In this next sample, we're going to turn our sphere into a beach ball. The ball will have eight longitudinal stripes with alternating primary colors. The north and south poles of the ball will be painted white. Let's get started!

Look at the ball from above. We'll slice it with three planes through its center, each splitting the ball into a pair of half spaces: north/south, northeast/southwest, and northwest/southeast. See Figure 23.14 for a visual depiction. The north slices are assigned full red values, while south slices are assigned no red. The two slices that lie in both the southeast half space and the northeast half space are assigned full green, while all other slices receive no green. Notice how the overlapping red and green slice becomes yellow. Finally, all slices in the southwest half space are assigned the color blue.

The east slices nicely alternate from red to yellow to green to blue. But what about the west slices? The easiest way to handle them is to copy the east slices and rotate them 180 degrees. We're looking down at the ball from the positive y-axis. If the object-space position's x coordinate is greater than or equal to 0, the position is used as-is. If the x coordinate is less than 0, we negate both the x and z coordinates. This rotates the position 180 degrees about the y-axis onto the east half of the ball, so west slices reuse the colors of the east slices opposite them. The shaders call this step mirroring.

The white caps at the poles are simple to add in. After coloring the rest of the ball with stripes, we replace that color with white whenever the absolute value of the y-axis position is close to 1. Figure 23.15 shows the result of the beach ball shaders in Listings 23.25 and 23.26.
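Putting the three steps together, here is a CPU-side sketch in C of the stripe-and-cap coloring. It works on a position already normalized onto the unit sphere. It uses hard 0/1 edges, as the low-level shader does with CMP, rather than smoothstep. Lighting is omitted, and `beachball_color`, `inside`, and `mix_rgb` are hypothetical names.

```c
#include <assert.h>
#include <math.h>

typedef struct { float r, g, b; } rgb;

/* Hard 0/1 half-space test: 1 when the signed distance d is >= 0. */
static float inside(float d) { return d < 0.0f ? 0.0f : 1.0f; }

/* GLSL mix(a, b, t) = a*(1-t) + b*t, applied per channel. */
static rgb mix_rgb(rgb a, rgb b, float t)
{
    rgb r = { a.r * (1.0f - t) + b.r * t,
              a.g * (1.0f - t) + b.g * t,
              a.b * (1.0f - t) + b.b * t };
    return r;
}

/* Viewed from +y: +z is north, +x is east. (x, y, z) is assumed normalized. */
static rgb beachball_color(float x, float y, float z, float capSize)
{
    assert(capSize >= 0.0f && capSize <= 1.0f);
    const rgb red = { 1.0f, 0.0f, 0.0f }, green = { 0.0f, 1.0f, 0.0f };
    const rgb blue = { 0.0f, 0.0f, 1.0f }, white = { 1.0f, 1.0f, 1.0f };
    const rgb black = { 0.0f, 0.0f, 0.0f };

    if (x < 0.0f) { x = -x; z = -z; }             /* rotate west half onto east half */

    const float s = 0.707f;                        /* ~cos 45 degrees, as in the listings */
    float north     = inside(z);                   /* dot with (0, 0, 1)  */
    float northeast = inside(s * x + s * z);       /* dot with (s, 0, s)  */
    float northwest = inside(-s * x + s * z);      /* dot with (-s, 0, s) */
    float cap       = inside(fabsf(y) - 1.0f + capSize);

    rgb c = mix_rgb(black, red, north);                              /* north: red */
    rgb g = mix_rgb(black, green, northeast * (1.0f - northwest));   /* NE and SE: green */
    c.r += g.r; c.g += g.g; c.b += g.b;                              /* red + green = yellow */
    c = mix_rgb(c, blue, 1.0f - northeast);                          /* SW: blue */
    return mix_rgb(c, white, cap);                                   /* white polar caps */
}
```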

Example 23.25. Beach Ball High-Level Fragment Shader

// beachball.fs
//
// Longitudinal stripes, end caps

varying vec3 V; // object-space position
varying vec3 N; // eye-space normal
varying vec3 L; // eye-space light vector

const vec3 myRed = vec3(1.0, 0.0, 0.0);
const vec3 myYellow = vec3(1.0, 1.0, 0.0);
const vec3 myGreen = vec3(0.0, 1.0, 0.0);
const vec3 myBlue = vec3(0.0, 0.0, 1.0);
const vec3 myWhite = vec3(1.0, 1.0, 1.0);
const vec3 myBlack = vec3(0.0, 0.0, 0.0);

const vec3 northHalfSpace = vec3(0.0, 0.0, 1.0);
const vec3 northeastHalfSpace = vec3(0.707, 0.0, 0.707);
const vec3 northwestHalfSpace = vec3(-0.707, 0.0, 0.707);

const float capSize = 0.03; // 0 to 1
const float smoothEdgeTol = 0.005;
const float ambientLighting = 0.2;
const float specularExp = 60.0;
const float specularIntensity = 0.75;

void main (void)
{
    // Normalize vectors
    vec3 NN = normalize(N);
    vec3 NL = normalize(L);
    vec3 NH = normalize(NL + vec3(0.0, 0.0, 1.0));
    vec3 NV = normalize(V);

    // Mirror half of ball across X and Z axes
    float mirror = (NV.x >= 0.0) ? 1.0 : -1.0;
    NV.xz *= mirror;

    // Check for north/south, east/west,
    // northeast/southwest, northwest/southeast
    vec4 distance;
    distance.x = dot(NV, northHalfSpace);
    distance.y = dot(NV, northeastHalfSpace);
    distance.z = dot(NV, northwestHalfSpace);

    // setup for white caps on top and bottom
    distance.w = abs(NV.y) - 1.0 + capSize;

    distance = smoothstep(vec4(0.0), vec4(smoothEdgeTol), distance);

    // red, green, red+green=yellow, and blue stripes
    vec3 surfColor = mix(myBlack, myRed, distance.x);
    surfColor += mix(myBlack, myGreen, distance.y*(1.0-distance.z));
    surfColor = mix(surfColor, myBlue, 1.0-distance.y);

    // white caps on top and bottom
    surfColor = mix(surfColor, myWhite, distance.w);

    // calculate diffuse lighting + 20% ambient
    surfColor *= (ambientLighting + vec3(max(0.0, dot(NN, NL))));

    // calculate specular lighting w/ 75% intensity
    surfColor += (specularIntensity *
                  vec3(pow(max(0.0, dot(NN, NH)), specularExp)));

    gl_FragColor = vec4(surfColor, 1.0);
}

Example 23.26. Beach Ball Low-Level Fragment Shader

!!ARBfp1.0
# beachball.fp
#
# Longitudinal stripes, end caps

ATTRIB N = fragment.texcoord[0];
ATTRIB L = fragment.texcoord[1];
ATTRIB V = fragment.texcoord[2]; # obj-space position
OUTPUT oPrC = result.color; # output color

PARAM myRed = {1.0, 0.0, 0.0, 1.0};
PARAM myYellow = {1.0, 1.0, 0.0, 1.0};
PARAM myGreen = {0.0, 1.0, 0.0, 1.0};
PARAM myBlue = {0.0, 0.0, 1.0, 1.0};
PARAM myWhite = {1.0, 1.0, 1.0, 1.0};
PARAM myBlack = {0.0, 0.0, 0.0, 1.0};
PARAM northHalfSpace = {0.0, 0.0, 1.0};
PARAM northeastHalfSpace = {0.707, 0.0, 0.707};
PARAM northwestHalfSpace = {-0.707, 0.0, 0.707};

# cap size minus one, ambient lighting,
# specular exponent, specular intensity
PARAM misc = {-0.97, 0.2, 60.0, 0.75};

TEMP NV, NN, NL, NH, NdotL, NdotH, surfColor, distance, mirror;
ALIAS specular = NdotH;
ALIAS redColor = NV;

DP3 NV.w, V, V; # normalize vertex pos
RSQ NV.w, NV.w;
MUL NV, V, NV.w;

# Mirror half of ball across X and Z axes
CMP mirror, NV.x, -1.0, 1.0;
MUL NV.xz, NV, mirror;

# Check for north/south, east/west,
# northeast/southwest, northwest/southeast
DP3 distance.x, NV, northHalfSpace;
DP3 distance.y, NV, northeastHalfSpace;
DP3 distance.z, NV, northwestHalfSpace;

# setup for white caps on top and bottom
ABS distance.w, NV.y;
ADD distance.w, distance.w, misc.x;

CMP distance, distance, 0.0, 1.0;

# red, green, red+green=yellow, and blue stripes
LRP redColor, distance.x, myRed, myBlack;
MAD distance.z, -distance.y, distance.z, distance.y;
LRP surfColor, distance.z, myGreen, myBlack;
ADD surfColor, surfColor, redColor;
SUB distance.y, 1.0, distance.y;
LRP surfColor, distance.y, myBlue, surfColor;

# white caps on top and bottom
LRP surfColor, distance.w, myWhite, surfColor;

DP3 NN.w, N, N; # normalize normal
RSQ NN.w, NN.w;
MUL NN, N, NN.w;

DP3 NL.w, L, L; # normalize light vec
RSQ NL.w, NL.w;
MUL NL, L, NL.w;

ADD NH, NL, {0, 0, 1}; # half-angle vector
DP3 NH.w, NH, NH; # normalize it
RSQ NH.w, NH.w;
MUL NH, NH, NH.w;

# diffuse lighting
DP3 NdotL, NN, NL; # N . L
MAX NdotL, NdotL, 0.0; # max(N . L, 0)
ADD NdotL, NdotL, misc.y; # 20% ambient
MUL surfColor, surfColor, NdotL; # factor in diffuse color

# specular lighting
DP3 NdotH, NN, NH; # N . H
MAX NdotH, NdotH, 0.0; # max(N . H, 0)
POW specular, NdotH.x, misc.z; # NdotH^60
MAD oPrC, misc.w, specular, surfColor; # 75% specular intensity

END

After remapping all negative x positions as described earlier, we use dot products to determine which side of each dividing plane the current object-space coordinate falls on. The sign of the dot product with the plane's normal tells us which half space is in play. In the low-level shader, we use the CMP instruction to perform this greater than or equal to 0 comparison. In the GLSL shader, however, we don't use the built-in step function this time. Instead, we introduce a new and improved version: smoothstep.

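Instead of jumping directly from 0 to 1 at the edge of a half space, smoothstep(edge0, edge1, x) returns 0 when x is at or below edge0 and 1 when x is at or above edge1. Between the two edges it follows a smooth curve from 0 to 1. The shaders use 0 and smoothEdgeTol as the edges, so colors blend across a narrow band at each boundary. Switch back and forth between the high-level and low-level versions and you'll see how smoothstep helps reduce the aliasing jaggies.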

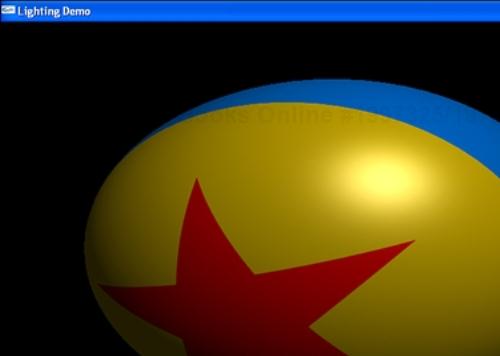

For our final procedural texture mapping feat, we'll transform our sphere into a familiar toy ball, again using no conventional texture images. This ball will have a red star on a yellow background circumscribed by a blue stripe. We will describe all this inside a fragment shader.

The tricky part is obviously the star shape. For each fragment, the shader must determine whether the fragment is within the star, in which case it's painted red, or whether it remains outside the star, in which case it's painted yellow. To make this determination, we first detect whether the fragment is inside or outside five different half spaces, as shown in Figure 23.16.

Figure 23.16. This diagram illustrates the determination of whether a fragment is inside or outside the star as described by 5 half spaces.

Any fragment that is inside at least four of the five half spaces is inside the star. We'll start a counter at –3 and increment it for every half space that the fragment falls within. Then we'll clamp it to the range [0,1]. A 0 indicates that we're outside the star and should paint the fragment yellow. A 1 indicates that we're inside the star and should paint the fragment red.

Adding the blue stripe, like the white caps on the beach ball, is an easy last step. Instead of repainting fragments close to the ends of the ball, we repaint them close to the center, this time along the z-axis. Figure 23.17 illustrates the result of the toy ball shaders in Listings 23.27 and 23.28.

Example 23.27. Toy Ball High-Level Fragment Shader

// toyball.fs
//
// Based on shader by Bill Licea-Kane

varying vec3 V; // object-space position
varying vec3 N; // eye-space normal
varying vec3 L; // eye-space light vector

const vec3 myRed = vec3(0.6, 0.0, 0.0);
const vec3 myYellow = vec3(0.6, 0.5, 0.0);
const vec3 myBlue = vec3(0.0, 0.3, 0.6);

const vec3 myHalfSpace0 = vec3(0.31, 0.95, 0.0);
const vec3 myHalfSpace1 = vec3(-0.81, 0.59, 0.0);
const vec3 myHalfSpace2 = vec3(-0.81, -0.59, 0.0);
const vec3 myHalfSpace3 = vec3(0.31, -0.95, 0.0);
const vec3 myHalfSpace4 = vec3(1.0, 0.0, 0.0);

const float stripeThickness = 0.4; // 0 to 1
const float starSize = 0.2; // 0 to ~0.3
const float smoothEdgeTol = 0.005;
const float ambientLighting = 0.2;
const float specularExp = 60.0;
const float specularIntensity = 0.5;

void main (void)
{
    vec4 distVector;
    float distScalar;

    // Normalize vectors
    vec3 NN = normalize(N);
    vec3 NL = normalize(L);
    vec3 NH = normalize(NL + vec3(0.0, 0.0, 1.0));
    vec3 NV = normalize(V);

    // Each flat edge of the star defines a half-space. The interior
    // of the star is any point within at least 4 out of 5 of them.
    // Start with -3 so that it takes adding 4 ins to equal 1.
    float myInOut = -3.0;

    // We need to perform 5 dot products, one for each edge of
    // the star. Perform first 4 in vector, 5th in scalar.
    distVector.x = dot(NV, myHalfSpace0);
    distVector.y = dot(NV, myHalfSpace1);
    distVector.z = dot(NV, myHalfSpace2);
    distVector.w = dot(NV, myHalfSpace3);
    distScalar = dot(NV, myHalfSpace4);

    // The half-space planes all intersect the origin. We must
    // offset them in order to give the star some size.
    distVector += starSize;
    distScalar += starSize;

    distVector = smoothstep(0.0, smoothEdgeTol, distVector);
    distScalar = smoothstep(0.0, smoothEdgeTol, distScalar);

    myInOut += dot(distVector, vec4(1.0));
    myInOut += distScalar;
    myInOut = clamp(myInOut, 0.0, 1.0);

    // red star on yellow background
    vec3 surfColor = mix(myYellow, myRed, myInOut);

    // blue stripe down middle
    myInOut = smoothstep(0.0, smoothEdgeTol, abs(NV.z) - stripeThickness);
    surfColor = mix(myBlue, surfColor, myInOut);

    // calculate diffuse lighting + 20% ambient
    surfColor *= (ambientLighting + vec3(max(0.0, dot(NN, NL))));

    // calculate specular lighting w/ 50% intensity
    surfColor += (specularIntensity *
                  vec3(pow(max(0.0, dot(NN, NH)), specularExp)));

    gl_FragColor = vec4(surfColor, 1.0);
}

Example 23.28. Toy Ball Low-Level Fragment Shader

!!ARBfp1.0

# toyball.fp

#

# Based on shader by Bill Licea-Kane

ATTRIB N = fragment.texcoord[0];

ATTRIB L = fragment.texcoord[1];

ATTRIB V = fragment.texcoord[2]; # obj-space position

OUTPUT oPrC = result.color; # output color

PARAM myRed = {0.6, 0.0, 0.0, 1.0};

PARAM myYellow = {0.6, 0.5, 0.0, 1.0};

PARAM myBlue = {0.0, 0.3, 0.6, 1.0};

PARAM myHalfSpace0 = {0.31, 0.95, 0.0};

PARAM myHalfSpace1 = {-0.81, 0.59, 0.0};

PARAM myHalfSpace2 = {-0.81, -0.59, 0.0};

PARAM myHalfSpace3 = {0.31, -0.95, 0.0};

PARAM myHalfSpace4 = {1.0, 0.0, 0.0};

# stripe thickness, star size & ambient lighting,

# specular exponent, specular intensity

PARAM misc = {0.4, 0.2, 60.0, 0.5};

TEMP NV, NN, NL, NH, NdotL, NdotH, surfColor, distance, myInOut;

ALIAS specular = NdotH;

DP3 NV.w, V, V; # normalize vertex pos

RSQ NV.w, NV.w;

MUL NV, V, NV.w;

# Each flat edge of the star defines a half-space. The interior

# of the star is any point within at least 4 out of 5 of them.

# Start with -3 so that it takes adding 4 ins to equal 1.

MOV myInOut, -3.0;

# We need to perform 5 dot products, one for each edge of

# the star. Perform first 4 in vector, 5th in a second

# vector along with the blue stripe.

DP3 distance.x, NV, myHalfSpace0;

DP3 distance.y, NV, myHalfSpace1;

DP3 distance.z, NV, myHalfSpace2;

DP3 distance.w, NV, myHalfSpace3;

# The half-space planes all intersect the origin. We must

# offset them in order to give the star some size.

ADD distance, distance, misc.y;

CMP distance, distance, 0.0, 1.0;

DP4 distance, distance, 1.0;

ADD myInOut, myInOut, distance;

# set up last star edge and blue stripe

DP3 distance.x, NV, myHalfSpace4;

ADD distance.x, distance.x, misc.y;

ABS distance.y, NV.z;

SUB distance.y, distance.y, misc.x;

CMP distance, distance, 0.0, 1.0;

ADD_SAT myInOut, myInOut, distance.x;

# red star on yellow background

LRP surfColor, myInOut, myRed, myYellow;

# blue stripe down middle

LRP surfColor, distance.y, surfColor, myBlue;

DP3 NN.w, N, N; # normalize normal

RSQ NN.w, NN.w;

MUL NN, N, NN.w;

DP3 NL.w, L, L; # normalize light vec

RSQ NL.w, NL.w;

MUL NL, L, NL.w;

ADD NH, NL, {0, 0, 1}; # half-angle vector

DP3 NH.w, NH, NH; # normalize it

RSQ NH.w, NH.w;

MUL NH, NH, NH.w;

# diffuse lighting

DP3 NdotL, NN, NL; # N . L

MAX NdotL, NdotL, 0.0; # max(N . L, 0)

ADD NdotL, NdotL, misc.y; # 20% ambient

MUL surfColor, surfColor, NdotL; # factor in diffuse color

# specular lighting

DP3 NdotH, NN, NH; # N . H

MAX NdotH, NdotH, 0.0; # max(N . H, 0)

POW specular, NdotH.x, misc.z; # NdotH^60

MAD oPrC, misc.w, specular, surfColor; # 50% specular intensity

END

The half spaces cut through the center of the sphere. This is what we wanted for the beach ball, but for the star we need them offset from the center slightly. This is why we add an extra constant distance to the result of the half space dot products. The larger you make this constant, the larger your star will be.

Again, we use smoothstep in the GLSL shader and CMP in the low-level shader when picking between inside and outside. For efficiency, we put the inside/outside results of the first four half spaces into a four-component vector. This way, we can sum the four components with a single four-component dot product against the vector {1, 1, 1, 1}. The fifth half space's inside/outside value goes into a lonely float and is added to the other four separately because no five-component vector type is available. You could create such a type yourself out of a structure, but you would likely sacrifice performance on most implementations, which natively favor four-component vectors.

If you want to toy with this shader, try this exercise: Convert the star into a six-pointed star by adding another half space and adjusting the existing half space planes. Work out how many of the half spaces a fragment must now fall within to be inside the star, and adjust the myInOut counter's initial value accordingly.

The possible applications of vertex and fragment shaders are limited only by your imagination. We've introduced a few just to spark your creativity and to provide you with some basic building blocks so you can easily jump right in and start creating your own shaders. Feel free to take these shaders, hack and slash them beyond recognition, and invent and discover better ways of doing things while you're at it. Don't forget the main objective of this book: Make pretty pictures. So get to it!