9.5 Augmented Reality

Computer graphics can provide a virtual environment (VE) that immerses a user in a synthetic world, but the user cannot see the real world around him or her. Augmented reality (AR), a variation on VE, lets the user see the real world with virtual objects added to it. AR therefore supplements reality with a mix of real and virtual objects rather than replacing it. AR also provides a middle ground between VE (completely synthetic) and telepresence (completely real) [24, 25]. AR can enhance a user's perception to help them perform real-world tasks with the assistance of the virtual objects. Azuma [26] defines AR as a system with the following three characteristics:

- combines real and virtual objects and scenes

- allows interaction with the real and virtual objects in real time

- registers interactions and objects in the 3D world

The fundamental functions of an AR system are the ability to add objects to and remove objects from the real world and the ability to provide haptic feedback. Current research focuses on adding virtual objects to a real environment and blending virtual and real images seamlessly for display. However, the display or overlay should also be able to remove and hide real objects. Vision is also not the only human sense: hearing, touch, smell, and taste provide important cues for interacting with a scene. Touch in particular gives the user the sense of interacting with objects, so proper haptic feedback should be generated and fed back to the user. In general, an AR system consists of the following three basic subsystems:

- Scene generator

Whereas VE systems replace the real world with a virtual environment, AR uses virtual images to supplement the real world. Only a few virtual objects therefore need to be drawn, and photorealism is not required to provide proper interaction. Adding virtual objects to the real world with a scene generator is thus the fundamental function for AR interaction, whereas seamlessly compositing virtual objects into the real world is not the critical issue for AR applications.

- Display device

Because the composited images must be delivered to the user through a display device, the display is an important part of an AR system. Its requirements may be less stringent than those of a VE system, however, because AR does not replace the real world. For example, the image overlay described in Section 9.5.1 composites a virtual image of the MRI-scanned brain onto the patient's head. The overlay may not reach the image quality expected in entertainment, but it provides useful information for the surgeon.

- Tracking and sensing

For the previous two subsystems, AR has lower requirements than VE, but its requirements for tracking and sensing are much stricter than those of VE systems. Virtual objects must be aligned properly with the real world to provide the correct information the user needs to achieve his or her goal; without that alignment, AR is only a nice-looking application. Tracking and sensing can also be viewed as a registration problem. To register virtual objects correctly in the real world, the AR system must reconstruct or analyze the real world, but 3D scene reconstruction is still a difficult problem. Tracking and sensing thus remain the key to pushing AR applications to the next level.
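To see why tracking is so demanding, consider the standard pinhole camera model, in which a world point X projects to pixel coordinates proportional to K(RX + t). The sketch below assumes a hypothetical 640 × 480 camera with a 500-pixel focal length; it shows that a tracker misreporting the viewer's yaw by only 1° shifts a virtual object anchored 2 m straight ahead by 500·tan 1°, about 8.7 pixels.

```python
import numpy as np

def rot_y(theta):
    """Rotation about the vertical (y) axis, i.e. a yaw of theta radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def project(K, R, t, X):
    """Pinhole projection: pixel = dehomogenize(K @ (R @ X + t))."""
    x = K @ (R @ X + t)
    return x[:2] / x[2]

# Hypothetical intrinsics: focal length 500 px, principal point at the image centre.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
anchor = np.array([0.0, 0.0, 2.0])        # virtual object 2 m straight ahead
t = np.zeros(3)

correct = project(K, np.eye(3), t, anchor)                 # where the object should appear
drifted = project(K, rot_y(np.radians(1.0)), t, anchor)    # tracker yaw off by 1 degree
error_px = float(np.linalg.norm(drifted - correct))
print(correct, drifted, round(error_px, 1))                # error is about 8.7 px
```

Even this small offset is enough to make an overlay visibly slide off its real-world anchor, which illustrates why registration is the strictest requirement of the three subsystems.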

A basic design decision in building an AR system is how to accomplish the task of combining real and virtual objects on the same display device. Currently there are two techniques to achieve this goal:

- Optical augmented display

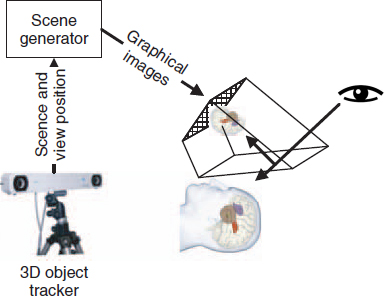

As shown in Figure 9.8, an optical augmented display system lets the user see the real world through optical combiners placed in front of the user. These combiners are partially transmissive, so the user can look directly through them at the real world, while the virtual objects are composited onto the view by reflection. The medical image overlay system in Section 9.5.1 illustrates this concept.

- Video augmented display

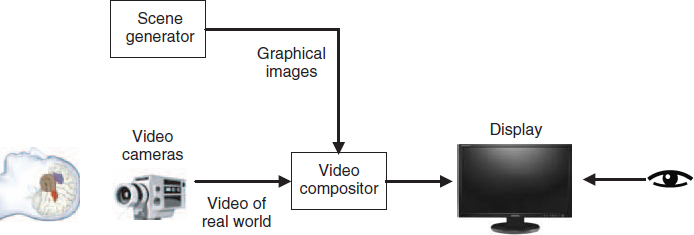

As shown in Figure 9.9, a video augmented display system does not allow any direct view of the real world. Instead, a single video camera or a set of cameras provides the view of the real world. The virtual images generated by the scene generator are superimposed on the real-world video, and the composite result is sent to the display device for visual perception of the augmented world. There is more than one way to composite virtual images with real-world video. Blue-screen (chroma-key) compositing is one of them: a specific key color, say green, marks the unimportant background pixels of the virtual image, and compositing replaces those pixels with the corresponding pixels of the real-world video. The overall effect is to superimpose the virtual objects over the real world. Another compositing algorithm uses depth information to place each virtual object at the proper location: the virtual object is composited into the real world by a pixel-by-pixel depth comparison, which allows real objects to occlude virtual objects and vice versa. Reconstructing the depth of the world is therefore critical for proper AR interaction and for synthesizing 3D stereoscopic views. The mobile AR systems in Section 9.5.2 illustrate this concept.
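The two display techniques differ in how real and virtual light are combined. The sketch below contrasts them under simplifying assumptions: the optical combiner is modelled as a purely additive mix with hypothetical transmittance and reflectance values, and the video path uses the chroma-key and per-pixel depth-test compositing described above. The function names, key color, tolerance, and coefficients are illustrative choices, not taken from any particular system.

```python
import numpy as np

def optical_see_through(real, virtual, transmittance=0.7, reflectance=0.3):
    """Light reaching the eye through a partially transmissive combiner.

    Inputs are linear intensities in [0, 1]. The combiner passes part of the real scene
    and reflects part of the display, and the two simply add: a black virtual pixel
    contributes nothing, so it cannot hide the real object behind it.
    """
    mixed = transmittance * np.asarray(real, float) + reflectance * np.asarray(virtual, float)
    return np.clip(mixed, 0.0, 1.0)

def chroma_key_composite(virtual_rgb, real_rgb, key=(0, 255, 0), tol=30):
    """Replace key-colored (background) pixels of the rendered image with the real video.

    virtual_rgb, real_rgb: uint8 arrays of shape (H, W, 3). A pixel is background if
    every channel is within `tol` of the key color.
    """
    diff = np.abs(virtual_rgb.astype(int) - np.array(key, dtype=int))
    background = np.all(diff <= tol, axis=-1)            # (H, W) boolean mask
    out = virtual_rgb.copy()
    out[background] = real_rgb[background]
    return out

def depth_composite(virtual_rgb, virtual_depth, real_rgb, real_depth):
    """Per-pixel depth test: show whichever surface is closer to the camera.

    Smaller depth means closer. Pixels with no virtual object should carry np.inf
    in virtual_depth so the real video always wins there.
    """
    virtual_in_front = virtual_depth < real_depth        # (H, W) boolean mask
    return np.where(virtual_in_front[..., None], virtual_rgb, real_rgb)

print(optical_see_through([0.2, 0.8], [0.0, 1.0]))       # the black virtual pixel adds nothing

real = np.array([[[10, 10, 10], [200, 200, 200]]], dtype=np.uint8)
virt = np.array([[[0, 255, 0], [255, 0, 0]]], dtype=np.uint8)   # green = key color
print(chroma_key_composite(virt, real))      # pixel 0 comes from the video, pixel 1 stays red

virt_obj = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)
print(depth_composite(virt_obj, np.array([[1.0, 3.0]]), real, np.array([[2.0, 2.0]])))
```

The additive model shows why an optical display struggles to remove real objects, whereas the video path can replace any pixel outright; the depth test is what lets real objects occlude virtual ones.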

Possible applications currently include medical visualization, maintenance and repair, annotation, robot path planning, entertainment, and military aircraft navigation and targeting. Since this section focuses on 3D applications, we concentrate on two applications of augmented reality; interested readers can refer to [26] for details on the others.

Figure 9.8 The optical augmented display system concept. The subject is directly under the transmissive display device. A tracking device tracks the real world for registration of the virtual objects. The scene generator generates the virtual objects, which are then composited onto the transmissive display.

Figure 9.9 The video camera captures the real world. The 3D tracker tracks the real world for registration. The scene generator generates virtual objects. The compositor combines the real world video with the virtual object using the registration information from the 3D tracker.

9.5.1 Medical Visualization

Minimally invasive surgical techniques are currently popular because they reduce damage to the patient's tissues, which leads to faster recovery and better outcomes. However, these techniques have a major drawback: the surgeon's reduced ability to see the surgical site makes the procedure more difficult. AR can help relieve this problem. AR first collects 3D datasets of a patient in real time using noninvasive sensors such as magnetic resonance imaging (MRI), computed tomography (CT), or ultrasound imaging. These datasets are then input to the scene generator to create virtual images, which are superimposed on the view of the real patient. This effectively gives a surgeon “X-ray vision” inside the patient, which would be very helpful during minimally invasive surgery. AR can even help in traditional surgery, because certain medical features are hard to detect with the naked eye but easy to detect with MRI or CT scans, and vice versa. AR can use the collected datasets to generate accentuated views of the operation site and target tissues to help surgeons precisely remove problematic tissue and cure the disease. AR might also help guide tasks that need high precision, such as showing where to drill a hole into the skull for brain surgery or where to perform a needle biopsy of a tiny tumor. The information from the noninvasive sensors would be displayed directly on the patient to show exactly where to operate. Several AR medical systems have been proposed in [27–32].

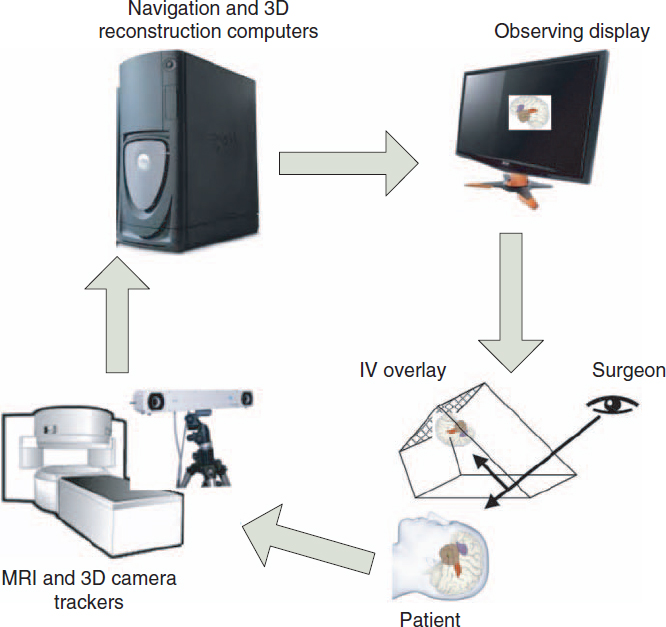

As shown in Figure 9.10, Liao et al. [33] proposed a 3D augmented reality navigation system that consists of an integral videography (IV) overlay device, a 3D data scanner (MRI), a position tracking device, and computers for image rendering and display. The IV overlay device [33–36] uses autostereoscopic techniques for 3D perception and is aligned with a half-silvered mirror to display the 3D structure of the patient to the surgeon. The half-silvered mirror reflects the autostereoscopic image on the IV display back to the surgeon; looking through the mirror, the surgeon sees the IV image formed at the corresponding location in the body. In this way, the spatially projected 3D images are superimposed onto the patient. Using this image overlay with reconstructed 3D medical images, a surgeon can “see through” the patient's body, with the image positioned exactly within the patient's anatomy. This system potentially enhances the surgeon's ability to perform complex procedures. To align the MRI virtual images properly with the patient's body, the system uses a set of spatial test patterns to calibrate the displayed IV image and a set of fiducial markers to register 3D points in space. A set of anatomic or fiducial markers (more than four markers located in different planes) is used to track the position of the patient's body. The same markers can be detected in the MRI images and localized in the position-sensor space by computing their 3D coordinates. In principle, this procedure registers the reflected spatial 3D image with the target object.
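The text states that the markers are localized both in MRI space and in tracker space but does not say how the two point sets are aligned. A widely used technique for such paired-point registration is the least-squares rigid fit computed with a singular value decomposition (often attributed to Arun et al. and to Kabsch). The sketch below applies it to five hypothetical, noise-free marker positions; it is an illustration of the technique, not necessarily the method used in [33].

```python
import numpy as np

def rigid_register(P, Q):
    """Least-squares rigid transform (R, t) with R @ P[i] + t ≈ Q[i] (SVD / Kabsch method).

    P: (N, 3) marker positions in MRI image space.
    Q: (N, 3) the same markers localized in tracker space; N >= 3, not all collinear.
    """
    P = np.asarray(P, float)
    Q = np.asarray(Q, float)
    p_mean, q_mean = P.mean(axis=0), Q.mean(axis=0)
    H = (P - p_mean).T @ (Q - q_mean)             # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T       # d = -1 guards against a reflection
    t = q_mean - R @ p_mean
    return R, t

def fiducial_registration_error(P, Q, R, t):
    """Root-mean-square distance between mapped image-space markers and tracked markers."""
    residuals = np.asarray(P, float) @ R.T + t - np.asarray(Q, float)
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))

# Five hypothetical markers (mm) and a known transform, to check the recovery.
rng = np.random.default_rng(0)
P = rng.uniform(-50.0, 50.0, size=(5, 3))
a = np.radians(20.0)
R_true = np.array([[np.cos(a), -np.sin(a), 0.0],
                   [np.sin(a),  np.cos(a), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([10.0, -5.0, 30.0])
Q = P @ R_true.T + t_true                       # markers as the tracker would report them

R, t = rigid_register(P, Q)
print(np.allclose(R, R_true), np.allclose(t, t_true), fiducial_registration_error(P, Q, R, t))
```

With real, noisy marker localizations the residual (the fiducial registration error) would be nonzero and can serve as one sanity check on the alignment, although it is not the same as the error at the surgical target.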

Figure 9.10 MRI scans the brain of the patient to create the 3D brain structure. The 3D tracker uses test patterns to register the patient's location. The computer combines the MRI images and tracking information to place the virtual object at the proper location on the image overlay. The doctor can directly see both the guidance image and the patient.

Because surgical safety is critical, the following workflow is used to check the safety and precision of MRI-guided surgery with an IV image overlay:

- The spatial position of the IV must be calibrated preoperatively with a designed pattern.

- Sterile fiducial markers are placed on the patient's body. The images of the target area on the patient's body are scanned by open MRI.

- The markers and images are used to perform intraoperative segmentation and patient-to-image registration.

- The IV images are rendered and transferred to the overlay device for superimposing with the image captured from the patient.

- The IV image is updated according to the registration results, and the alignment of the overlay image with the patient is verified.

- The surgical treatment can be guided with the help of the IV image overlay to adjust the position and orientation of the instrument.

- After finishing the treatment, the patient is scanned by the MRI to confirm whether the entire tumor was resected or the target was punctured.

The procedure can be repeated until the evaluation of the surgery is successful.

9.5.2 Mobile Phone Applications

With the advance of modern smartphones and the rapid adoption of wireless broadband technologies, AR has moved out of the lab into the real world and become feasible on consumer devices. Although smartphones are not ideal platforms for AR research, they provide several functions that have made them a successful platform for AR. AR applications on mobile devices basically follow the video augmented display technique to deliver the augmented view to users. However, AR applications are still not as popular as researchers expected a decade ago, for two reasons. First, currently available technology cannot meet all consumers' expectations. Imagination and popular culture have led consumers to believe that immersive 3D and smart-object technologies can deliver fantastic interaction, but current technology is only halfway there, so a gap remains between expectation and reality. Second, immersive 3D technologies are impressive, but they have few real-life uses. For example, smart lamps and desks do not fit our current lifestyles, meet our needs, or provide any perceived benefit. Careful observation shows that past AR work has centered on three aspects. The first is “vision”, meaning the predominance of displays, graphics, and cameras and their effects on the human visual system. The second is “space”, meaning the physical and conceptual registration of objects in the real world to create a convincing AR experience. The third is “technology”, meaning the special techniques and equipment built for AR systems, which make them expensive and hard for the general public to accept. Given the limited success of AR on smartphones, AR applications should focus on the emerging technology that is available. For future applications, however, the three aspects should be extended: vision becomes perception, space becomes place, and technologies become capabilities [37].

- Vision becomes perception

AR has always focused on perception and representation in the hybrid space of real and virtual objects. Although the focus has traditionally been on visual representations, AR can engage more than vision alone to help people comprehend the hybrid space. The information perceived should convey the distinct characteristics of the hybrid space that matter for particular human activities. One simple example is tabletop AR games [37], which work very well technically and ship with new generations of handheld gaming devices such as the Nintendo 3DS. However, AR research should go beyond demonstrating what we might use AR for and focus more explicitly on understanding the elements of perception and representation needed to accomplish each of these tasks. With that understanding, AR designers and researchers can learn how to blend and adapt information with the available technologies to help users accomplish their goals. This will be critical for the success of AR.

- Space becomes place

Smartphone users increasingly store and access information in the cloud, and the cloud has made the place of computing disappear. For example, you can no longer assume that a researcher simulating complex phenomena is at home or in an office. The distinctive characteristic of AR is its ability to define a hybrid space, but there is more to creating a place than the space itself: a place is a space with meaning. HCI researchers have used this distinction to argue that seemingly inconsequential elements, such as furniture and artwork, are actually vital to effective collaboration and telepresence technologies [38] because they help create a sense of place that frames appropriate behavior. The same distinction can help us adapt AR research for the future. Mobile computing calls for web-based services that are inherently location-aware and context-aware, and the kinds of data captured, and the ways that data is structured and accessed, need to evolve accordingly. The main challenges are to determine what data is relevant, how to collect it, when to retrieve it, and how to represent it once retrieved. These are fundamentally questions about understanding humans rather than technologies.
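Proximity is the simplest possible answer to the question of what data is relevant to a place. The sketch below is an illustration only, not a design from [37] or [38]: it filters hypothetical geotagged items (`GeoItem` is a made-up type) to those within a radius of the user, using the haversine great-circle distance on a spherical Earth.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical approximation

@dataclass
class GeoItem:
    """Hypothetical geotagged content, e.g. a note or review pinned to a place."""
    label: str
    lat: float
    lon: float

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))  # clamp rounding error

def nearby(items, lat, lon, radius_m):
    """Items within radius_m of the user, nearest first (proximity as the relevance test)."""
    scored = sorted(((haversine_m(lat, lon, it.lat, it.lon), it) for it in items),
                    key=lambda pair: pair[0])
    return [it for dist, it in scored if dist <= radius_m]

items = [GeoItem("cafe", 0.0, 0.001), GeoItem("museum", 0.01, 0.0)]
print([it.label for it in nearby(items, 0.0, 0.0, 500.0)])   # ['cafe'] (about 111 m away)
```

A place-aware service would need much richer signals than distance, but even this toy version shows that location has to be part of the data model before it can be queried.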

- Technologies become capabilities

In the past, AR researchers focused their efforts on developing expensive and cumbersome equipment to create a proper AR interaction environment [39–41]. These environments were built for specific tasks or scenarios, and the drawbacks of such schemes are that the systems are expensive and hard to standardize. Although smartphones have made AR popular, they are not explicitly designed for AR and are not ideal for delivering AR content. Many smartphone applications provide AR by overlaying text on a “magic window”, the live camera view of the physical world, using no more than the sensors built into the device (compass, GPS, accelerometers, gyroscopes) to position it. However, this sensor-only scheme has its limitations. Future generations of smartphone systems must create new AR interaction and application models to meet users' expectations. These models must present AR information in the real space around the user, which in turn requires the information to be aligned rigidly with the user's view of the physical space. Such precise alignment requires fusing sensor information with vision processing of camera-captured video [42, 43], which is beyond the capabilities of current technologies.
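The sensor-only “magic window” can be sketched in two steps: estimate the device heading by fusing the gyroscope with the compass, then convert the bearing to a point of interest into a horizontal screen position. The complementary filter, the 60° field of view, and the 640-pixel width are assumptions for illustration. This is not the vision-based fusion of [42, 43], and it ignores pitch, roll, and the camera image entirely, which is exactly why such overlays only loosely align with the physical scene.

```python
import math

def wrap_deg(angle):
    """Wrap an angle in degrees to [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0

def fuse_heading(prev_heading, gyro_rate_dps, compass_heading, dt, alpha=0.98):
    """One step of a complementary filter for heading (degrees clockwise from north).

    The integrated gyro rate is smooth but drifts; the compass is drift-free but noisy.
    The compass correction uses the wrapped difference, so 359 and 1 are 2 degrees apart.
    """
    predicted = prev_heading + gyro_rate_dps * dt
    return (predicted + (1.0 - alpha) * wrap_deg(compass_heading - predicted)) % 360.0

def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2, degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlmb = math.radians(lon2 - lon1)
    x = math.sin(dlmb) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    return math.degrees(math.atan2(x, y)) % 360.0

def label_x(heading, poi_bearing, image_width=640, hfov_deg=60.0):
    """Horizontal pixel for a point-of-interest label in the camera view, or None if off-screen."""
    rel = wrap_deg(poi_bearing - heading)
    if abs(rel) >= hfov_deg / 2:
        return None
    f = (image_width / 2) / math.tan(math.radians(hfov_deg / 2))   # focal length in pixels
    return image_width / 2 + f * math.tan(math.radians(rel))

heading = fuse_heading(prev_heading=359.0, gyro_rate_dps=0.0, compass_heading=1.0, dt=0.01)
poi = bearing_deg(0.0, 0.0, 0.0, 0.01)        # a point due east -> 90 degrees
print(round(heading, 2), round(poi, 1), label_x(75.0, poi))
```

The filter constant `alpha` trades gyro smoothness against compass drift correction; any residual heading error maps directly to a sideways slip of the label on screen.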

These observations suggest that 3D technologies can push mobile technologies to the next level. First, autostereoscopic display devices can give the viewer a more precise perception of the world. Second, video-plus-depth is an important content delivery scheme in 3D technologies, and the delivered depth map can be used to register virtual objects better in AR applications. Therefore, 2D-to-3D reconstruction is the key to the success of 3D and AR technologies.
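The text notes that a delivered depth map can improve the registration of virtual objects. The sketch below shows two standard geometric steps involved, under hypothetical camera parameters: converting stereo disparity to depth with Z = fB/d for a rectified pair (one possible source of a depth map), and back-projecting a pixel and its depth into a 3D point on which a virtual object can be anchored.

```python
import numpy as np

def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Depth Z = f * B / d for a rectified stereo pair; non-positive disparity -> infinity."""
    d = np.asarray(disparity_px, dtype=float)
    with np.errstate(divide="ignore"):
        return np.where(d > 0, focal_px * baseline_m / d, np.inf)

def backproject(u, v, depth, K):
    """Lift pixel (u, v) with depth Z along the optical axis to a camera-space 3D point."""
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    return np.array([(u - cx) * depth / fx, (v - cy) * depth / fy, depth])

# Hypothetical camera: f = 500 px, principal point (320, 240), 3.5 cm stereo baseline.
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
Z = float(disparity_to_depth(10.0, focal_px=500.0, baseline_m=0.035))  # 1.75 m
anchor = backproject(420.0, 240.0, Z, K)   # 3D point on which to place a virtual object
print(Z, anchor)
```

Because depth is inversely proportional to disparity, a one-pixel disparity error matters much more for distant surfaces than for nearby ones, which is one reason tabletop-scale AR is easier to register than large scenes.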

9.5.2.1 Nintendo 3DS System

The Nintendo 3DS is a portable game console produced by Nintendo. It was the first handheld game console with glasses-free (autostereoscopic) 3D released by a major game company. The company claims that it is a mobile gaming device where “games can be enjoyed with 3D effects without the need of any special glasses”. The system was revealed at the E3 show in Los Angeles in June 2010 and released in Japan on 26 February 2011, in Europe on 25 March 2011, in North America on 27 March 2011, and in Australia on 31 March 2011. The console is the successor to Nintendo's DS handheld systems, which were the primary competitors of Sony's PlayStation Portable (PSP). The system also explores motion-sensing elements and 3D display for portable devices. Several important specifications of the system follow:

- Graphics processing unit (GPU): The Nintendo 3DS is based on a custom PICA200 graphics processor from a Japanese start-up, Digital Media Professionals (DMP). The company develops optimized 2D and 3D graphics technologies that support the OpenGL ES specifications, which target the embedded market.

- Two screens: The 3DS has two screens, both capable of displaying 16.77 million colors. The top screen is the device's biggest selling point: it uses parallax barrier technology to give gamers a sense of space and of the depth of objects. The 3D effect helps gamers find and aim at objects more easily, enhancing the game experience. However, the 3D effect can be perceived well only within a limited viewing zone; outside this optimal zone, depth perception distorts and artifacts appear. The 3DS therefore provides a 3D view controller that lets users adjust the intensity of the 3D display to fine-tune the level of depth. Although this adjustment relieves some 3D problems, other issues remain: players may experience eye strain or discomfort when perceiving the 3D effect, and, generally, most users feel discomfort after an extended play session.

- Connectivity: The system supports only 2.4 GHz 802.11 Wi-Fi, with WPA2 security. Mobile (cellular) connectivity is not provided.

- Motion sensor and gyro sensor: Gyro sensors and motion sensors have become standard components in smartphones because they provide additional means of human–machine interaction. These sensors track the motion and tilt of the device so that a game can react to the user's control, which may enable new and unique gameplay mechanics.

- 3D cameras: There are two cameras on the outside of the device, synchronized to take stereoscopic image pairs. In addition, a camera on the top screen facing the player takes 2D images and shoots 2D video. All three cameras have a resolution of 640 × 480 pixels (0.3 megapixels). The two outer cameras simulate human eyes to see the world in 3D and allow the creation of 3D photos, which can be shown on the stereoscopic screen in the same way as 3D games.

- 3D videos: In addition to 3D games and photos, the stereoscopic screen also has the ability to show 3D videos.

- Augmented reality games: The sense of AR can be enhanced by the stereoscopic camera pair together with the motion and gyro sensors. The 3DS uses the outer cameras and AR cards to let a video game unfold in the real world, such as on your kitchen table or the floor of your living room. Typically, an AR card is placed on a surface in the real world; the camera reads the card and launches the game stages and characters right before your eyes.
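The text does not describe how the 3DS reads its AR cards. A common approach for a planar marker is to detect the card's four corners in the camera image and estimate the homography that maps card coordinates to image coordinates; virtual content defined on the card can then be drawn at the mapped positions, and with known camera intrinsics the homography can also be decomposed into the card's 3D pose. The sketch below shows only the estimation step, via the direct linear transform (DLT), assuming the corners have already been detected; all coordinates are hypothetical.

```python
import numpy as np

def homography_dlt(src, dst):
    """Estimate H (3x3) with dst ~ H @ src from >= 4 point pairs by the direct linear transform.

    src, dst: (N, 2) arrays. No coordinate normalization is applied, so this sketch is
    only suitable for small, well-conditioned examples.
    """
    rows = []
    for (x, y), (u, v) in zip(np.asarray(src, float), np.asarray(dst, float)):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    _, _, Vt = np.linalg.svd(np.array(rows))
    H = Vt[-1].reshape(3, 3)      # right singular vector of the smallest singular value
    return H / H[2, 2]

def apply_homography(H, pts):
    """Map (N, 2) points through H, including the perspective divide."""
    pts = np.asarray(pts, float)
    mapped = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return mapped[:, :2] / mapped[:, 2:3]

# Card corners in card coordinates (unit square) and hypothetical detected image corners.
card = np.array([[0.0, 0.0], [1.0, 0.0], [1.0, 1.0], [0.0, 1.0]])
image = np.array([[100.0, 100.0], [300.0, 120.0], [280.0, 330.0], [90.0, 300.0]])
H = homography_dlt(card, image)
print(apply_homography(H, [[0.5, 0.5]]))   # where the card centre appears in the image
```

Production code would normalize coordinates and use more than four points with a robust estimator, but the four-corner case already pins down where a game character standing on the card should be drawn.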

This glasses-free 3D screen can provide new gaming experiences, but 3D artifact issues still need to be solved. Furthermore, a “killer app” for 3D vision is still needed for the console to be overwhelmingly accepted by gamers.
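The limited viewing zone of the top screen follows from the geometry of a two-view parallax barrier. From similar triangles, with image column pitch p, eye separation e, and design viewing distance D, the barrier must sit a gap g = pD/e in front of the pixels, and its slit pitch must be b = 2pD/(D + g), slightly less than two columns. The numbers below are hypothetical and are not the 3DS's actual panel parameters.

```python
def parallax_barrier(pixel_pitch_mm, eye_sep_mm, view_dist_mm):
    """Two-view front parallax barrier geometry from similar triangles.

    pixel_pitch_mm: width of one image column (columns alternate left/right view).
    eye_sep_mm: interocular distance; view_dist_mm: design eye-to-barrier distance.
    Returns (gap_mm, slit_pitch_mm).
    """
    # Adjacent left/right columns seen through one slit must land one eye separation apart.
    gap = pixel_pitch_mm * view_dist_mm / eye_sep_mm
    # Seen from one eye, adjacent slits must project onto the pixels two columns apart.
    slit_pitch = 2.0 * pixel_pitch_mm * view_dist_mm / (view_dist_mm + gap)
    return gap, slit_pitch

gap, pitch = parallax_barrier(pixel_pitch_mm=0.1, eye_sep_mm=65.0, view_dist_mm=300.0)
print(round(gap, 3), round(pitch, 5))   # 0.462 0.19969
```

In this idealized model the left- and right-eye zones alternate at the design distance, each about one eye separation wide, so moving the head sideways by roughly that amount puts each eye in the other's zone and reverses the depth, while moving nearer or farther than D lets the views bleed into each other. This is consistent with the sweet spot and artifacts described above.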