Security Assessment and Testing

Domain Objectives

• 6.1 Design and validate assessment, test, and audit strategies.

• 6.2 Conduct security control testing.

• 6.3 Collect security process data (e.g., technical and administrative).

• 6.4 Analyze test output and generate report.

• 6.5 Conduct or facilitate security audits.

Domain 6 addresses the important topic of security assessment and testing. Security assessment and testing enable cybersecurity professionals to determine if security controls implemented to protect assets are functioning properly and to the standards they were designed to meet. We will discuss several types of assessments and tests during the course of this domain, and address situations and reasons why we would use one over another. This domain covers designing and validating assessment, test, and audit strategies; conducting security control testing; collecting the security process data used to carry out a test; examining test output and generating test reports; and conducting security audits.

Design and validate assessment, test, and audit strategies

Before conducting any type of security assessment, test, or audit, the organization must pinpoint the reason it wants to conduct the activity in order to select the right process to meet its needs. Information security professionals conduct different types of assessments, tests, and audits for various reasons, with distinctive goals, and sometimes use different approaches for each. In this objective we will examine the reasons why an organization may want to conduct specific types of assessments, tests, and audits, including internal, external, and third-party, and discuss the strategies for each.

Defining Assessments, Tests, and Audits

Before we discuss the reasons for, and the strategies related to, conducting assessments, tests, and audits, it’s useful to define what each of these processes means. Although the terms assessment, test, and audit are frequently used interchangeably, each has a distinctive meaning. Note that each of these security processes consists of activities that can overlap; in fact, any or all three of these processes can be conducted simultaneously as part of the same effort, so it can sometimes be difficult to differentiate one from another or to separate them as distinct events.

NOTE Although “assessment” is the term that you will hear most often even when referring to tests and audits, for the purposes of this objective we will use the term “evaluation” to avoid any confusion when generally referencing security assessments, tests, and audits.

A test is a defined procedure that records properties, characteristics, and behaviors of the system being tested. Tests can be conducted using manual checklists, automated software such as vulnerability scanners, or a combination of both. Typically, there is a specific goal of the test, and the data collected is used to determine if the objective is met. The test results may be compared to regulatory standards or compliance frameworks to see if those results meet or exceed the requirements. Most security-related tests are technical in nature, but they don’t necessarily have to be. For example, a social engineering test can help determine if users are sufficiently trained to identify and resist social engineering attempts. Other examples of tests that we will discuss later in the domain include vulnerability tests and penetration tests.

An assessment is a collection of related tests, usually designed to determine if a system meets specified security standards. The collection of tests does not have to be all technical; a control assessment, for example, may consist of technical testing, documentation reviews, interviews with key personnel, and so on. Other assessments that we will discuss later in the domain include vulnerability assessments and compliance assessments.

Cross-Reference

The realm of security assessments also includes risk assessments, which were discussed in Objective 1.10.

Audits are conducted to measure the effectiveness of security controls. Auditing as a routine business process is conducted on a continual basis. Auditing as a detective control usually involves looking at audit trails such as log files to discover wrongdoing or violations of security policy. On the other hand, an audit as a specific event consists of a tailored, systematic assessment of a particular system or process to determine if it meets a particular standard. Audits can be used to analyze specific occurrences within the infrastructure, such as transactions, and auditors may also be specifically tasked with reviewing a particular incident, event, or process.

Designing and Validating Evaluations

Evaluations are designed to achieve specific results, whether the evaluation is a compliance assessment, a security process audit, or a system security test. While most of these types of evaluations use similar processes and procedures, the information and results that the organization needs to gain from the evaluation may affect how it is designed. For example, a test that must yield technical configuration findings must be designed to scan a system, collect security configuration information in a commonly used format, and report those results in a consistent manner that has been validated as accurate and acceptable. Design and validation of evaluations are critical to the consistency and usefulness of the results.

Goals and Strategies

The type of security evaluation an organization chooses to use depends on its goals. What does the organization want to get out of the assessment? What does it need to test? How does it need to conduct an audit, and why does it need to conduct it in a specific way? Different strategies apply depending on the type of security evaluation the organization uses. For the purposes of covering exam objective 6.1, we will discuss goals and strategies that involve the use of internal personnel, external personnel, and third-party teams for assessments, tests, and audits.

Use of Internal, External, and Third-Party Assessors

Any type of evaluation can be resource intensive; a simple system test, even using internal personnel, costs time and labor hours. A larger assessment that covers multiple systems or an audit that looks at several different security business processes can be very expensive, especially when it involves the use of many different personnel and requires system downtime. An organization may be able to reduce cost by making use of available internal resources in an effective and efficient way, especially since the labor hours of those resources are already budgeted. However, internal teams are not always the best choice to perform an evaluation, as they can have confirmation biases. Sometimes the use of external teams or third-party assessors is necessary to get a truly objective evaluation.

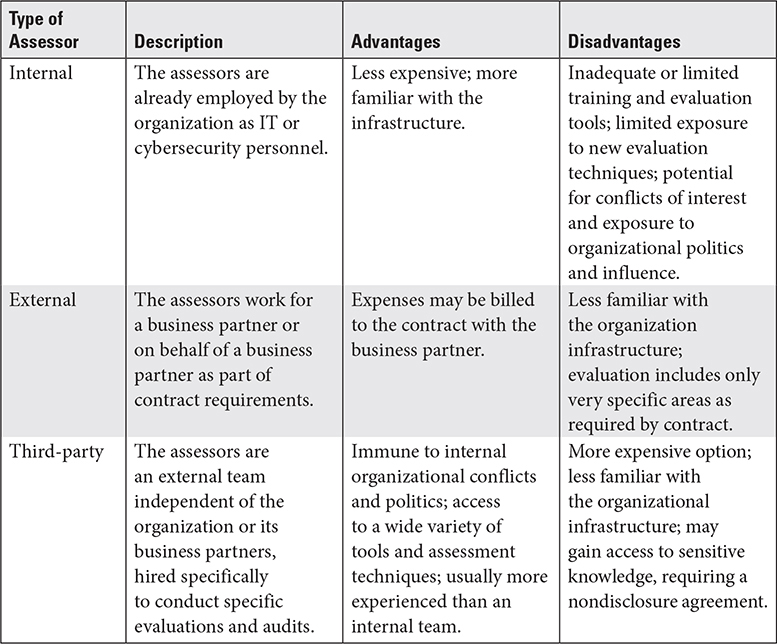

Table 6.1-1 summarizes the use of internal assessors versus external (second party) assessors and third-party assessors, as well as their advantages and disadvantages.

TABLE 6.1-1 Types of Assessors and Their Characteristics

EXAM TIP Although the difference between internal and other types of assessors seems simple, the difference between external and third-party assessors may be less so. Internal assessors work for the organization; external assessors work for a business partner or someone connected to the organization. Third-party assessors are completely independent of the organization and any partners. Typically, they work for an independent outside entity such as a regulatory agency.

REVIEW

Objective 6.1: Design and validate assessment, test, and audit strategies In this objective we defined and discussed assessments, tests, and audits. We also looked at three key strategies for the use of internal, external, and third-party assessors. The choice of which of these three types of assessors to use depends on several factors: the organization’s security budget, the potential for conflicts of interest or politics if internal auditors are used, and assessor familiarity with the infrastructure. An additional consideration is whether the assessors bring a broader knowledge of assessment techniques and access to more advanced tools.

6.1 QUESTIONS

1. Your manager has tasked you to determine if a specific control for a system is functional and effective. This effort will be very limited in scope and focus only on specific objectives using a defined set of procedures. Which of the following would be the most appropriate method to use?

A. Assessment

B. Test

C. Audit

D. Review

2. You have a major security compliance assessment that must be completed on one of your company’s systems. There is a very large budget for this assessment, and it must be conducted independently of organizational influence. Which of the following types of assessment teams would be the most appropriate to conduct the assessment?

A. Third-party assessment team

B. Internal assessment team

C. External assessment team

D. Second-party assessment team

6.1 ANSWERS

1. B A test would be the most appropriate method to use, since it is very limited in scope and involves only the list of procedures used to check for the control’s functionality and effectiveness. An assessment consists of several tests and is much broader in scope. An audit focuses on a specific event or business process. A review is not one of the three types of evaluations defined in this objective.

2. A A third-party assessment team would be the most appropriate to conduct a major security compliance assessment. This type of assessment team is independent from the organization’s influence, but it’s usually also the most expensive type of team to employ for this effort. However, the organization has a large budget for this effort. Internal assessment teams are not independent of organizational influence but are usually less expensive. “External assessment team” and “second-party assessment team” are two terms for the same type of team, usually employed as part of a contract between two entities, such as business partners, and used only to assess specific requirements of the contract.

Conduct security control testing

Objective 6.2 delves into the details of security control testing using various techniques and test methods. Security control testing involves testing a control for three main reasons: to evaluate the effectiveness of the control as implemented, to determine if the control is compliant with governance, and to see how well the control reduces or mitigates risk for the asset.

Security Control Testing

In this objective we will discuss various tests that serve to verify that security controls are functioning the way they were designed to, as well as validate that they are effective in fulfilling their purpose. Each of these tests serves to verify and validate security controls. We will examine the different types of testing, such as vulnerability assessment and penetration testing. We will also take a look at reviewing audit trails in logs, which describe what happened during a test. We will review other aspects of security control testing, such as synthetic transactions used during testing, as well as some of the more specific types of tests we can conduct on infrastructure assets. These include code review and testing, use and misuse case testing, interface testing, simulating a breach attack, and compliance testing. During this discussion, we will also address test coverage analysis and exactly how much testing is enough for a given assessment.

Vulnerability Assessment

A vulnerability assessment is a type of test that involves identifying the vulnerabilities, or weaknesses, present in an individual component of a system, an entire system, or even in different areas of the organization. While we most often look at vulnerability assessments as technical types of tests, vulnerability assessments can be performed on people and processes as well. Recall from Objective 1.10 that a vulnerability is not only a weakness inherent to an asset, but also the absence of a security control, or a security control that is not very effective in protecting an asset or reducing risk.

Vulnerability assessments can be performed using a variety of methods, but most technical assessments involve the use of network scanning tools, such as Nmap, OpenVAS, Qualys, or Nessus. These vulnerability scanners give us information about the infrastructure, such as live hosts on the network, open ports, protocols and services on those hosts, misconfigurations, missing patches, and a wealth of other information that can, when combined with other types of tests, inform us as to what weaknesses and vulnerabilities are present on our network.

EXAM TIP Vulnerability assessments are distinguished from penetration tests, discussed next, in that during a vulnerability assessment we only look for vulnerabilities; we don’t act on them by attempting to exploit them.

Vulnerability mitigation is also covered in other areas in this book, but understand that you must prioritize the vulnerabilities you discover for mitigation. Most often, mitigations include patching, reconfiguring devices or applications, or implementing additional security controls in the infrastructure.
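To make the idea of prioritization concrete, here is a minimal Python sketch that orders scan findings for mitigation. The `Finding` fields, the 0.0 to 10.0 severity scale, and the rule "critical assets first, then higher severity" are all assumptions made for illustration; a real organization would apply its own risk criteria.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    host: str
    title: str
    severity: float       # hypothetical 0.0-10.0 score taken from a scanner report
    asset_critical: bool  # whether the affected asset is business critical

def prioritize(findings):
    """Order findings so critical assets come first, then higher severity scores."""
    return sorted(findings, key=lambda f: (f.asset_critical, f.severity), reverse=True)

# Hypothetical findings, not real scanner output
findings = [
    Finding("web01", "Missing OS patch", 7.5, True),
    Finding("dev03", "Default credentials", 9.8, False),
    Finding("db01", "Weak TLS configuration", 5.3, True),
]

for f in prioritize(findings):
    print(f"{f.host}: {f.title} (severity {f.severity})")
```

Under this assumed rule, the two findings on critical assets are listed ahead of the higher-scoring finding on a noncritical development host.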

Penetration Testing

A penetration test can be thought of as the next logical extension of a vulnerability assessment, with some key differences. First, while a vulnerability assessment looks for weaknesses that theoretically can be exploited, a penetration test takes it one step further by proving that those weaknesses can be exploited. It’s one thing to discover a vulnerability that a scanning tool reports as being severe, but it’s quite another to have the tools and techniques or the knowledge necessary to actually exploit the weakness. In other words, there’s often a big difference between discovering a severe vulnerability and the ability of an attacker to take advantage of it. Penetration testing attempts to exploit discovered vulnerabilities and effect system changes. Penetration tests are more precise in that they demonstrate the true weaknesses you should be concerned about on the network. This helps you to better apply resources and mitigations in an effort to eliminate those vulnerabilities that can truly be exploited, versus the ones that may not easily be exploitable.

CAUTION Penetration tests can be very intrusive on an organization and can result in the failure or degradation of infrastructure components, such as systems, networks, and applications. Before performing a penetration test, all parties should be aware of the risks involved, and there should be a documented agreement between the penetration test team and the client that explicitly states so, with the client signing that they understand the potential risks.

There are several different ways to categorize both penetration testers and penetration tests. First, you should be aware of the different types of testers (sometimes referred to as hackers) normally involved in penetration tests:

• White-hat (ethical) hackers Security professionals who test a system to determine its weaknesses so they can be mitigated and the system better secured

• Black-hat (unethical) hackers Malicious entities who hack systems to infiltrate them, access sensitive data, or disrupt infrastructure operations

• Gray-hat hackers Hackers who go back and forth between the white-hat and black-hat worlds, sometimes selling their expertise for the good of an organization, and sometimes engaging in unethical or illegal activities

NOTE Black-hat hackers (and gray-hat hackers operating as black-hat hackers) aren’t really considered penetration testers, as their motives are not to test security controls to improve system security.

Note that ethical hackers or testers may be internal security professionals who work for the organization on a continual basis or they may be external security professionals who provide valuable services as an independent team contracted by organizations.

In addition to the various colors of hats we have in penetration testing, there are also red teams, blue teams, and white cells, which we encounter during the course of a security test or exercise. The term red team is merely another name for the penetration testing team, the attacking group of testers. A blue team is the name of the group of people who serve as the defenders of the infrastructure and are tasked with detecting and responding to an attack. Finally, the white cell operates as an independent entity facilitating communication and coordination between the blue and red teams and organizational management. The white cell is usually the team managing the exercise, serving as liaison to all stakeholders, and having final decision-making authority over any conflicts during the exercise.

Just as there are different categories of testers, there are also different categories of tests. Each category has its own distinctive characteristics and advantages. They are summarized here as key terms and definitions to help you remember them for the exam:

• Full-knowledge (aka white box) test A penetration test in which the test team has full knowledge about the infrastructure and how it is architected, including operating systems, network segmentation, devices, and their vulnerabilities. This type of test is useful for enabling the team to focus on specific areas of interest or particular vulnerabilities.

• Zero-knowledge (aka blind or black box) test A penetration test in which the team has no knowledge of the infrastructure and must rely on any open source intelligence it can discover to determine network characteristics. This type of test is useful in discovering the network architecture and its vulnerabilities from an attacker’s point of view, since these are the circumstances a real attacker will most likely face. In this scenario, the defenders may be aware that the infrastructure is being attacked by a test team and will react accordingly per the test scenarios.

• Partial-knowledge (aka gray box) test A penetration test that takes place somewhere between full- and zero-knowledge testing, where the test team has only some limited useful knowledge about the infrastructure.

• Double-blind test A penetration test that is zero-knowledge for the attacking team, but also one in which the defenders are not aware of an assessment. The advantage to this type of test is that the defenders are also assessed on their detection and response capabilities, and tend to react in a more realistic manner.

• Targeted test A test that focuses on specific areas of interest by organizational management, and may be carried out by any of the mentioned types of testers or teams.

EXAM TIP You should never carry out a penetration test unless you are properly authorized to do so. Organizational management and the test team should jointly develop a set of “rules of engagement” that both clearly defines the test parameters and grants you permission to perform the test in writing.

Log Reviews

Log reviews serve several functions, both during normal security business processes and during security assessments. On a broader scope, log reviews take place on a daily basis to inspect the different types of transactions that occur within the network, such as auditing user actions or other events of interest. Logs are reviewed on a frequent basis to determine if any anomalous or malicious behavior has occurred. During a security assessment, log reviews serve the same function and also record the results of security testing. Logs are reviewed both during and after security tests to ensure that the test happened according to its designed parameters and produced predictable results. When test results occur that were not predicted, logs can be useful in tracing the reason.
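As a simple illustration of reviewing logs for anomalous behavior, the following Python sketch counts failed logins per source address and flags sources that reach a threshold. The log line format, the threshold of three, and the function name `flag_repeated_failures` are assumptions for this example, not a standard.

```python
from collections import Counter

def flag_repeated_failures(lines, threshold=3):
    """Return source addresses with at least `threshold` failed logins."""
    failures = Counter()
    for line in lines:
        if "Failed login" in line:
            # Assumed format: the source address is the last token on the line
            failures[line.rsplit(" ", 1)[-1]] += 1
    return sorted(src for src, count in failures.items() if count >= threshold)

# Hypothetical authentication log entries
auth_log = [
    "2024-01-01T10:00:07 Failed login for admin from 10.0.0.5",
    "2024-01-01T10:00:08 Failed login for admin from 10.0.0.5",
    "2024-01-01T10:00:09 Failed login for root from 10.0.0.5",
    "2024-01-01T10:01:00 Failed login for jsmith from 10.0.0.7",
    "2024-01-01T10:01:30 Successful login for jsmith from 10.0.0.7",
]

flagged = flag_repeated_failures(auth_log)
print(flagged)
```

During a security test, the same kind of check can confirm that the test activity appears in the logs as expected.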

Logs can be manually reviewed, or ingested by automated systems, such as security information and event management (SIEM) systems, for aggregation, correlation, and analysis. Logs are useful in reconstructing timelines and an order of events, particularly when they are combined from different sources that may present various perspectives.
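The timeline reconstruction described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular SIEM works; the two log sources, their line format (an ISO timestamp followed by a message), and the `parse` helper are assumptions.

```python
from datetime import datetime
import heapq

def parse(source, lines):
    """Yield (timestamp, source, message) tuples from 'ISO-timestamp message' lines."""
    for line in lines:
        stamp, message = line.split(" ", 1)
        yield datetime.fromisoformat(stamp), source, message

# Hypothetical entries from two different sources
firewall_log = [
    "2024-01-01T10:00:05 ALLOW tcp 10.0.0.5 -> 10.0.0.9:22",
    "2024-01-01T10:02:30 DENY tcp 10.0.0.5 -> 10.0.0.9:3389",
]
auth_log = [
    "2024-01-01T10:00:07 Failed login for admin from 10.0.0.5",
    "2024-01-01T10:00:09 Successful login for admin from 10.0.0.5",
]

# heapq.merge assumes each source is already in chronological order
timeline = list(heapq.merge(parse("firewall", firewall_log), parse("auth", auth_log)))

for stamp, source, message in timeline:
    print(stamp.isoformat(), f"[{source}]", message)
```

Interleaving the two sources shows the allowed connection, the login attempts that followed it, and the later denied connection as one ordered sequence of events.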

Synthetic Transactions

Information systems normally operate on a system of transactions. A transaction is a collection of individual events that occur in some sort of serial or parallel sequence to produce a desired behavior. A transaction that occurs when a user account is created, for example, consists of several individual events, such as creating the username, then its password, assigning other attributes to the account, and finally adding the account to a group of users that have specific privileges. Synthetic transactions are automated or scripted events that are designed to simulate the behaviors of real users and processes. They allow security professionals to systematically test how critical security services behave under certain conditions. A synthetic transaction could consist of several scripted events that create objects with specific properties and execute code against those objects to cause specific behaviors. Synthetic transactions have the advantage of being controlled, predictable, and repeatable.
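The account-creation example above can be turned into a small scripted transaction. The sketch below runs against an in-memory stand-in rather than a real directory service; `FakeDirectory`, its methods, the 12-character password rule, and the account and group names are all hypothetical.

```python
class FakeDirectory:
    """In-memory stand-in for an account service (hypothetical, for illustration)."""
    def __init__(self):
        self.accounts = {}
        self.groups = {}

    def create_user(self, username):
        if username in self.accounts:
            raise ValueError("duplicate username")
        self.accounts[username] = {"password_set": False, "attributes": {}}

    def set_password(self, username, password):
        if len(password) < 12:
            raise ValueError("password too short")
        self.accounts[username]["password_set"] = True

    def set_attribute(self, username, key, value):
        self.accounts[username]["attributes"][key] = value

    def add_to_group(self, username, group):
        self.groups.setdefault(group, set()).add(username)

def synthetic_account_creation(directory, username):
    """Run the scripted sequence of events and record the outcome of each step."""
    steps = [
        ("create_user", lambda: directory.create_user(username)),
        ("set_password", lambda: directory.set_password(username, "not-a-real-password")),
        ("set_attribute", lambda: directory.set_attribute(username, "dept", "test")),
        ("add_to_group", lambda: directory.add_to_group(username, "testers")),
    ]
    results = []
    for name, action in steps:
        try:
            action()
            results.append((name, "ok"))
        except ValueError as err:
            results.append((name, f"failed: {err}"))
            break  # stop the transaction at the first failed event
    return results

directory = FakeDirectory()
first_run = synthetic_account_creation(directory, "synthetic_user_01")
second_run = synthetic_account_creation(directory, "synthetic_user_01")
print(first_run)
print(second_run)
```

Because the script is controlled and repeatable, running it a second time with the same username is an easy way to confirm that the service rejects a duplicate account.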

Code Review and Testing

Code review consists of examining developed software code’s functional, performance, and security characteristics. Code reviews are designed to determine if code meets specific standards set by the organization. There are several methods for code review, including manual reviews conducted by people and automated reviews conducted by code review software and scripts. Think of code review as proofreading software code before it is published and put into production in operational systems. Some of the items reviewed in code include structure and syntax, variables, interaction with system resources such as memory and CPU, security issues such as embedded passwords and encryption modules, and so on.

In addition to review, code is also tested using both manual and automated tools. Some of the security aspects of code testing involve

• Input validation

• Secure data storage

• Encryption and authentication mechanisms

• Secure transmission

• Reliance on unsecured or unknown resources, such as library files

• Interaction with system resources such as memory and CPU

• Bounds checking

• Error conditions resulting in a nonsecure application or system state
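Two of the items in this list, input validation and bounds checking, are easy to show in code. The following Python sketch is one possible approach, assuming an allow-list pattern for usernames and a list-backed record store; the pattern and function names are illustrative choices, not requirements from any standard.

```python
import re

# Assumed rule: lowercase letter first, then 2-15 letters, digits, or underscores
USERNAME_PATTERN = re.compile(r"[a-z][a-z0-9_]{2,15}")

def validate_username(value):
    """Input validation: accept only values that match a strict allow-list pattern."""
    if not isinstance(value, str) or not USERNAME_PATTERN.fullmatch(value):
        raise ValueError("invalid username")
    return value

def read_record(records, index):
    """Bounds checking: refuse indexes outside the valid range."""
    # Python would silently accept a negative index, so check explicitly
    if not 0 <= index < len(records):
        raise IndexError("index out of range")
    return records[index]

print(validate_username("alice_01"))
print(read_record(["a", "b"], 1))
```

Code testing would then exercise both functions with values on and just beyond each boundary to confirm that invalid input is rejected rather than processed.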

Misuse Case Testing

Use case testing is employed to assess all the different possible scenarios under which software may be correctly used from a functional perspective. Misuse case testing involves assessing the different scenarios where software may be misused or abused. A misuse case is a type of use case that tests how software code can be interacted with in an unauthorized, malicious, or destructive way. Misuse cases are used to determine all the various ways that a malicious entity, such as a hacker, could exploit weaknesses in a software application or system.
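A misuse case can be written alongside ordinary use cases as a small table of inputs. In this Python sketch, the function under test, `resolve_report`, the base directory, and the sample file names are all hypothetical; the misuse cases are path traversal attempts that a malicious user might try.

```python
from pathlib import PurePosixPath

BASE = PurePosixPath("/srv/reports")  # hypothetical report directory

def resolve_report(name):
    """Return the path of a report, rejecting names that escape the base directory."""
    candidate = PurePosixPath(name)
    if candidate.is_absolute() or ".." in candidate.parts:
        raise PermissionError("path escapes report directory")
    return BASE / candidate

use_cases = ["q1.pdf", "2024/q2.pdf"]
misuse_cases = ["../etc/passwd", "/etc/shadow", "2024/../../secret.txt"]

def run_cases():
    """Run every case and record whether the function behaved as intended."""
    results = {}
    for name in use_cases:
        results[name] = str(resolve_report(name))
    for name in misuse_cases:
        try:
            resolve_report(name)
            results[name] = "ACCEPTED (defect)"
        except PermissionError:
            results[name] = "rejected"
    return results

results = run_cases()
for name, outcome in results.items():
    print(f"{name!r}: {outcome}")
```

The use cases confirm that legitimate requests still work, while each misuse case passes only if the software refuses the request.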

Test Coverage Analysis

When performing an assessment, you should determine which parts of a system, application, or process will be covered by the assessment, how much you’re going to test, and to what depth or level of detail. This is referred to as test coverage analysis and is performed to derive a percentage measurement of how much of a system is going to be examined by a test or overall assessment. This limit is something that IT professionals, security professionals, and management want to know before approving and conducting an assessment.

Test coverage analysis considers many factors, such as the cost of the assessment, personnel availability, and system criticality. Highly critical systems require more test coverage than noncritical systems, to ensure that all possible scenarios and exceptions are analyzed. Coverage can also be spread over time; for example, an organization could conduct in-depth vulnerability testing on 25 percent of its assets each week so that after four weeks all assets have been covered, and then the process repeats. This approach ensures that no piece of the infrastructure is left untested.
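The arithmetic behind the rotating 25 percent example can be sketched as follows. The asset names and the four-week cycle are assumptions chosen to match the example; `coverage_percent` and `weekly_batches` are hypothetical helper names.

```python
def coverage_percent(tested, total):
    """Percentage of in-scope items that have been examined."""
    return 100.0 * tested / total

def weekly_batches(assets, weeks=4):
    """Split assets into one batch per week so each asset is tested once per cycle."""
    return [assets[i::weeks] for i in range(weeks)]

assets = [f"host{n:02d}" for n in range(1, 21)]  # 20 hypothetical assets
batches = weekly_batches(assets)

for week, batch in enumerate(batches, start=1):
    done = sum(len(b) for b in batches[:week])
    print(f"week {week}: test {len(batch)} assets, "
          f"cumulative coverage {coverage_percent(done, len(assets)):.0f}%")
```

With 20 assets, each weekly batch holds 5 of them, so cumulative coverage rises by 25 percentage points per week and reaches 100 percent at the end of the fourth week.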

Interface Testing

Interfaces are connections and entry/exit points between hardware and software. Examples of interfaces include a graphical user interface (GUI) that helps a human user communicate with a system and a network interface that connects systems to networks to facilitate the exchange of data. Interfaces can also include security mechanisms, application programming interfaces (APIs), database tables, and a multitude of other examples. In any event, interfaces represent critical junction points for communications and data exchange and should be tested for security.

Security issues with interfaces include data movement from a more secure environment to a less secure one, introduction of malware into a system, and unauthorized access by a user or process. Interfaces are often responsible for not only exchanging data between systems or networks, but also data transformation, which can affect data integrity. Interface testing should address all of these issues and ensure that data exchanged between entities is of the right format and transferred with the correct access controls.
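One part of interface testing, checking that exchanged data is in the right format, might look like the Python sketch below. The message fields and their types are invented for illustration; a real interface would be tested against its own documented specification, and access control checks would be tested separately.

```python
# Hypothetical message specification for an interface between two systems
REQUIRED_FIELDS = {"account_id": int, "amount": float, "currency": str}

def validate_message(message):
    """Return a list of problems found in a message crossing the interface."""
    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in message:
            problems.append(f"missing field: {field}")
        elif not isinstance(message[field], expected_type):
            problems.append(f"wrong type for {field}")
    # Fields outside the specification may indicate data leaking across the interface
    for field in sorted(set(message) - set(REQUIRED_FIELDS)):
        problems.append(f"unexpected field: {field}")
    return problems

good = {"account_id": 1, "amount": 9.5, "currency": "USD"}
bad = {"account_id": "1", "amount": 9.5, "debug": True}

print(validate_message(good))
print(validate_message(bad))
```

A well-formed message produces an empty list, while the malformed one reports a wrong type, a missing field, and an unexpected field.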

Breach Attack Simulations

Breach attack simulations are a form of automated penetration test that the organization may conduct on itself, targeting various systems, network segments, or even applications. A breach attack simulation is normally done on a regular schedule and lies somewhere along the spectrum between vulnerability testing and penetration testing, since it attempts to find vulnerabilities that are in fact exploitable. Normally, a breach attack simulation will not actually exploit a vulnerability, unless it is purposely configured to do so; it may run automated tests and synthetic transactions to show that the vulnerability was, in fact, exploitable, without actually executing the attack. An advantage of a breach attack simulation is that since it’s performed on a regular basis, it’s not simply a point-in-time assessment, as are penetration tests. Breach attack simulations often come as part of a software package, sometimes as part of a subscription- or cloud-based security suite.

Compliance Checks

Compliance checks are tests or assessments designed to determine if a control or security process complies with governance requirements. While compliance checks are conducted in pretty much the same way as the other types of assessments discussed so far, the real difference is that the results are checked against sometimes detailed requirements passed down through laws, regulations, or mandatory standards that the control must meet. For example, it may not be enough to confirm that the control ensures that data is encrypted during transmission; the governance requirement may mandate that the encryption strength be at a certain level or use a specific algorithm, so the control must be checked to determine if it meets that requirement.
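The encryption example above can be expressed as a small automated check. In this Python sketch, the mandated algorithm and minimum key length are placeholders rather than values from any actual regulation, and the `control` dictionary is a hypothetical description of a control's configuration.

```python
# Hypothetical governance requirement; real values come from the applicable mandate
REQUIREMENT = {"algorithm": "AES", "min_key_bits": 256}

def check_encryption(control):
    """Compare a control's encryption settings with the governance requirement."""
    findings = []
    if not control.get("encrypts_in_transit"):
        findings.append("data is not encrypted in transit")
        return findings
    if control.get("algorithm") != REQUIREMENT["algorithm"]:
        findings.append("algorithm does not match the mandated algorithm")
    if control.get("key_bits", 0) < REQUIREMENT["min_key_bits"]:
        findings.append("key length is below the mandated minimum")
    return findings

# A control that encrypts data but falls short of the assumed requirement
vpn = {"encrypts_in_transit": True, "algorithm": "AES", "key_bits": 128}
print(check_encryption(vpn))
```

Here the control does encrypt data in transit, yet it still produces a compliance finding because its key length is below the assumed minimum.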

NOTE While definitely interconnected, the terms secure and compliant are not synonymous. A control could be secure, but not necessarily compliant due to a lack of documentation or a consistent process. The opposite is also true.

Most assessments examine not only the security of a control and how well it protects an asset, but also if it complies with prescribed governance. An individual test, such as a vulnerability assessment performed by a network scanner, may only determine if the control is secure. It’s up to the security analyst performing the overall assessment to determine its compliance with governance requirements.

REVIEW

Objective 6.2: Conduct security control testing In this objective we looked a bit more in depth at security control testing. We discussed overall assessments, such as vulnerability assessments and penetration testing. Vulnerability assessments look for weaknesses in systems but do not attempt to exploit them. Penetration testing, on the other hand, not only finds vulnerabilities but assesses their severity by attempting to exploit them. Penetration testing can come in different flavors and can be performed by different types of testers, such as ethical hackers or internal and external testers. Penetration testing can be categorized in different ways, such as full-knowledge, zero-knowledge, and partial-knowledge tests.

Different tools and methods can be used for assessments but should include various methods to verify and validate security controls. Verification means that the control is tested to determine if it is working as designed; validation means that the control’s actual effectiveness in performing that function is determined. Security control testing includes:

• Log reviews to determine if the results of the tests are consistent with what we expect.

• Synthetic transactions, which are scripted sets of events that can be used to test different aspects of functionality or security with a system.

• Code review and testing, which are critical to ensuring that software code is error-free and employs strong security mechanisms.

• Misuse case testing, which looks at how users might abuse systems, resulting in unauthorized access to information or system compromise.

• Test coverage analysis, which measures, as a percentage, how much of a system or application is tested.

• Interface testing, which examines the different connections and data exchange mechanisms between systems, networks, applications, and other components.

• Breach attack simulation, which is an automated periodic process that not only finds vulnerabilities but more closely examines whether or not they can be exploited, without actually doing so.

• Compliance checks, which ensure that controls are not only secure but also meet governance requirements.

6.2 QUESTIONS

1. You are a cybersecurity analyst who has been tasked with determining what weaknesses are on the network. Your supervisor has specifically said that regardless of the weaknesses you find, you must not disrupt operations by determining if they can be exploited. Which of the following is the best type of assessment you could perform that meets these requirements?

A. Penetration test

B. Vulnerability assessment

C. Zero-knowledge test

D. Compliance check

2. Your organization has performed several point-in-time tests to determine what weaknesses are in the infrastructure and if they can be exploited. However, these types of tests are expensive and require a lot of planning to execute. Which of the following would be a better way to determine on a regular basis not only weaknesses but if they could be exploited?

A. Perform a vulnerability assessment

B. Perform a zero-knowledge penetration test

C. Perform a full-knowledge penetration test

D. Perform a breach attack simulation

6.2 ANSWERS

1. B Of the choices given, performing a vulnerability assessment is the preferred type of test, since it discovers vulnerabilities in the infrastructure without disrupting operations by attempting to exploit them.

2. D A breach attack simulation is an automated method of periodically scanning and testing systems for vulnerabilities, as well as running scripts and other automated methods to determine if those vulnerabilities can be exploited. A breach attack simulation does not actually perform the exploitation on vulnerabilities, however.

Collect security process data (e.g., technical and administrative)

To address objective 6.3, we will discuss the importance of collecting and using security process data for purposes of security assessment and testing. Security process data is any type of data generated that is relevant to managing the security program, whether it is routine data resulting from normal security processes or data that comes from specific events, such as incidents or test events. In the context of this domain, we will focus on data generated as a result of security business processes.

Security Data

There are so many sources of data that can be collected and used during security processes that they could not possibly be covered in this limited space. In general, you will want to collect both technical and administrative process data, whether it is electronic data, written data, or narrative data. The various data collection methods include ingesting data from log files, configuration files, and so on, as well as manual documentation reviews and interviews with key personnel.

EXAM TIP Security process data can be technical or administrative. Administrative data consists of analyzed or narrative data from administrative processes (such as metrics, for example), and technical data comes from technical tools used to collect data from systems.

Security Process Data

Although there are some generally accepted categories of information that we use for security, any data could be “security data” if it supports the organization’s security function. Obviously, security data includes logs, configuration files, and audit trail data, but other types of data, such as security process data (data that results from an administrative or technical process), risk reports, and other information can be useful in refining security processes as well. Beneficial data that is not specifically created for security purposes includes architectural, electric circuit, and building diagrams, as well as videos or voice recordings and information collected for unrelated objectives. Some of this information may be used to create a timeline for an incident during an investigation, for example, or to support a security decision. In the next few paragraphs, we will cover different sources of security process data and how they apply to the security function.

Data Sources

Data may be received and collected from a wide variety of sources within the infrastructure through automated means or manual collection. Technical data sources can be agent-based software on devices, or data can be collected by running a program or script that retrieves it from a host manually. Indeed, you should look at any source that will provide information regarding the effectiveness of a security control, the severity of a vulnerability, or an event that should be investigated and monitored. Valuable information may be gathered from a variety of sources, including electronic log files, paper access logs, vulnerability scan results, and configuration data. In a mature organization, a centralized security information and event management (SIEM) system may collect, aggregate, and correlate all of this information.
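As a rough illustration of the aggregation step described above, the following Python sketch merges events from two sources into a single time-ordered list. The `Event` structure, the field names, and the sample records are all hypothetical; a real SIEM performs far more normalization and correlation than this.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Event:
    """A normalized event record (hypothetical structure for illustration)."""
    timestamp: datetime
    source: str
    subject: str
    detail: str


def merge_sources(*sources):
    """Combine events from several sources into one time-ordered list."""
    merged = [event for source in sources for event in source]
    return sorted(merged, key=lambda e: e.timestamp)


# Hypothetical sample data: a paper or electronic badge log and a system log.
badge_log = [
    Event(datetime(2024, 1, 8, 8, 55), "badge", "jdoe", "entered server room"),
]
auth_log = [
    Event(datetime(2024, 1, 8, 9, 2), "auth", "jdoe", "login to file server"),
    Event(datetime(2024, 1, 8, 8, 40), "auth", "asmith", "login to workstation"),
]

timeline = merge_sources(badge_log, auth_log)
# Filter the merged timeline down to a single subject of interest.
jdoe_timeline = [e for e in timeline if e.subject == "jdoe"]
```

Filtering the merged list by subject, as in `jdoe_timeline`, is one simple way to view everything recorded about a single individual across sources.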

Cross-Reference

Objective 7.2 covers SIEM in more depth.

Account Management

Account management data is of critical importance to security personnel. It includes details on user accounts, such as their rights, permissions, and other privileges, as well as other user attributes. Account usage history usually comes in electronic form as an account listing, but it could also come from paper records that are signed by supervisors to grant accounts to new individuals or increase their privileges. This information can be used to match user identities with audit trails, which facilitates auditing and accountability.
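To make the idea of matching identities to audit trails concrete, here is a minimal Python sketch. The account listing, the audit entries, and the `review_audit_trail` function are hypothetical examples, not the format of any particular directory service or logging product.

```python
# Hypothetical account listing: user name mapped to granted privileges.
accounts = {
    "jdoe": {"role": "analyst", "privileges": {"read_reports"}},
    "asmith": {"role": "admin", "privileges": {"read_reports", "modify_accounts"}},
}

# Hypothetical audit trail entries recorded by a system.
audit_trail = [
    {"user": "jdoe", "action": "read_reports"},
    {"user": "jdoe", "action": "modify_accounts"},
    {"user": "ghost", "action": "read_reports"},
]


def review_audit_trail(accounts, audit_trail):
    """Flag audit entries that do not match the account management data."""
    findings = []
    for entry in audit_trail:
        account = accounts.get(entry["user"])
        if account is None:
            # Activity from an identity with no account record.
            findings.append((entry["user"], entry["action"], "unknown account"))
        elif entry["action"] not in account["privileges"]:
            # Activity that the account was never granted.
            findings.append((entry["user"], entry["action"], "privilege not granted"))
    return findings


findings = review_audit_trail(accounts, audit_trail)
```

Each finding is a candidate for follow-up rather than proof of wrongdoing; the account records themselves may be out of date.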

Backup Verification Data

Backup verification data is important in that the organization needs to be sure that its information is being properly backed up, secured, transported, and stored. Backup verification data can come either from a written log in which a cybersecurity analyst or IT professional has manually recorded that backups have taken place or, more commonly, from transaction logs produced by the backup application or system. All critical information should be backed up in the event that an incident occurs that renders data unusable or damages a system. The event that causes a data loss should be well documented, along with the complete restore process for the backed-up data.
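One way backup transaction logs might be reviewed is sketched below in Python. The log structure, the system names, and the 24-hour threshold are assumptions made for illustration; actual backup applications record this information in their own formats.

```python
from datetime import datetime, timedelta


def stale_backups(job_log, critical_systems, now, max_age=timedelta(hours=24)):
    """Return critical systems with no successful backup within max_age."""
    last_success = {}
    for job in job_log:
        if job["status"] == "success":
            previous = last_success.get(job["system"])
            if previous is None or job["finished"] > previous:
                last_success[job["system"]] = job["finished"]
    return sorted(
        system
        for system in critical_systems
        if system not in last_success or now - last_success[system] > max_age
    )


# Hypothetical backup job records.
job_log = [
    {"system": "db01", "status": "success", "finished": datetime(2024, 1, 10, 1, 0)},
    {"system": "files01", "status": "failed", "finished": datetime(2024, 1, 10, 1, 30)},
    {"system": "files01", "status": "success", "finished": datetime(2024, 1, 8, 1, 0)},
]

needs_attention = stale_backups(
    job_log,
    critical_systems=["db01", "files01", "mail01"],
    now=datetime(2024, 1, 10, 6, 0),
)
```

In this sample, `files01` is flagged because its last successful backup is too old, and `mail01` is flagged because the log contains no record of it at all.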

Training and Awareness

Since security training is the most critical piece of managing the human element, individuals should have training records that reflect all forms of security training completed. When the organization understands how well its personnel are trained, how often, and on which topics, this data can later be compared to trends that indicate whether training is responsible for the number or severity of security incidents increasing or decreasing. Training data can be recorded electronically in a database so that the effectiveness of training can be analyzed based on its frequency, delivery method, and/or facilitator. Security training data is a good indicator of how well personnel understand their security responsibilities.

Disaster Recovery and Business Continuity Data

The ability to restore data from backups is important. However, the bigger picture is how well the organization reacts to and recovers from a negative event, such as an incident or disaster. Data regarding how quickly a team responds to an incident or how well the team is organized and equipped is essential to assessing readiness to meet recovery objectives. Disaster recovery (DR) and business continuity (BC) data provide details of critical recovery point objectives, recovery time objectives, and maximum allowable downtime. The organization should develop these metrics before a disaster occurs. The most important data is how well the organization actually meets these objectives during an event.
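The comparison between planned objectives and actual performance can be sketched in Python as follows. The system names, objective values, and exercise results are invented for illustration, and the data layout is an assumption rather than a standard format.

```python
from datetime import timedelta

# Hypothetical objectives defined before any disaster occurs.
objectives = {
    "payroll": {"rto": timedelta(hours=4), "rpo": timedelta(hours=1)},
    "email": {"rto": timedelta(hours=8), "rpo": timedelta(hours=4)},
}

# Hypothetical measurements taken during an exercise or real event.
exercise_results = {
    "payroll": {
        "recovery_time": timedelta(hours=5),
        "data_loss_window": timedelta(minutes=30),
    },
    "email": {
        "recovery_time": timedelta(hours=6),
        "data_loss_window": timedelta(hours=2),
    },
}


def evaluate_recovery(objectives, results):
    """Report whether each system met its recovery time and point objectives."""
    report = {}
    for system, target in objectives.items():
        actual = results[system]
        report[system] = {
            "rto_met": actual["recovery_time"] <= target["rto"],
            "rpo_met": actual["data_loss_window"] <= target["rpo"],
        }
    return report


recovery_report = evaluate_recovery(objectives, exercise_results)
```

Here the hypothetical payroll system recovered an hour later than its recovery time objective allowed, which is the kind of gap this data is meant to surface.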

Cross-Reference

Refer to Objective 1.8 for coverage of BC requirements and to Objective 7.11 for coverage of DR processes.

Key Performance and Risk Indicators

Many of the data points we have discussed so far may not be very useful alone. Data that you collect must be aggregated and correlated with other types of data to create information. Data that is considered useful should also match measurements or metrics that have been previously developed by the organization. Metrics can be used to develop key indicators. Key indicators show overall progress toward goals or deficiencies that must be addressed. Key indicators come in four common forms:

• Key performance indicators (KPIs) Metrics that show how well a business process or even a system is doing with regard to its expected performance.

• Key risk indicators (KRIs) Can show upward or downward trends in singular or aggregated risk for a system, process, or other area of interest.

• Key control indicators (KCIs) Show how well a control is functioning and performing.

• Key goal indicators (KGIs) Overall indicators that may use the other indicators to show how well organizational goals are being met.

Most of these indicators are created by aggregating, correlating, and analyzing relevant security process data, to include both technical and administrative data. Examples of data that can be used to produce these metrics include vulnerabilities discovered during a technical scan, risk analysis, and summaries of user habits and incidents.
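As a simple sketch of how raw data points might be turned into indicators, the Python below derives a control indicator (the share of vulnerabilities remediated within a target window) and a risk trend. The 30-day target, the sample numbers, and both function names are assumptions; each organization defines its own metrics.

```python
def patch_compliance_rate(remediation_days, sla_days):
    """Percentage of vulnerabilities remediated within the target window."""
    if not remediation_days:
        return None
    within = sum(1 for days in remediation_days if days <= sla_days)
    return 100.0 * within / len(remediation_days)


def trend(values):
    """Describe the direction of a series by comparing last to first value."""
    if len(values) < 2 or values[-1] == values[0]:
        return "flat"
    return "up" if values[-1] > values[0] else "down"


# Hypothetical data: days taken to remediate five vulnerabilities.
remediation_days = [3, 10, 21, 45, 7]
kci = patch_compliance_rate(remediation_days, sla_days=30)

# Hypothetical data: open high-risk findings over three reporting periods.
open_high_risk = [12, 15, 19]
kri = trend(open_high_risk)
```

With these sample values, four of the five vulnerabilities fall within the 30-day target and the count of open high-risk findings is rising.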

Management Review and Approval

An important data source that should be collected, and also kept for governance reasons, is the paper trail that results from management review and approval of assessments, reports, security actions, and so on. This information is important so that both the organization and any external entities understand the detailed process completed to approve a policy, implement a control, or respond to a risk or security issue. Not only is this documentation trail important for audits and governance, but it also establishes that the security program has management approval and involvement. It can also establish due diligence and due care in the event that any liabilities come from overall management of the security process.

REVIEW

Objective 6.3: Collect security process data (e.g., technical and administrative) In this objective we looked at a sampling of data points that a security program should collect (and the sources from which to collect them) to maintain and manage its security program. Data can come from a variety of sources, including technical data from applications, devices, log files, and SIEM systems. Relevant data can also come from written sources, such as visitor access control logs, or in the form of process documentation such as infrastructure diagrams, configuration files, vulnerability assessment results, and so on. Even narrative data based on interviews with personnel can be useful. Types of data that are critical to collect include, but are not limited to, account management data, backup verification data, and disaster recovery and response data. Metrics involve the use of specific data to create key indicators, which are specific data points of interest to management. Finally, a critical source of information comes from documentation reflecting management review and approval of security processes and actions.

6.3 QUESTIONS

1. You are an incident response analyst for your company. You are investigating an incident involving an employee who accessed restricted information he was not authorized to view. In addition to reviewing device and application logs, you also wish to establish the events for the timeline starting when the individual entered the facility and various other physical actions he took. Which of the following sources of information could help you establish these events in the incident timeline? (Choose two.)

A. Electronic badge access logs

B. Active Directory event logs

C. Closed-circuit television video files

D. Workstation audit logs

2. Which of the following is an aggregate of data points that can show management how well a control is performing or how risk is trending in the organization?

A. Quantitative analysis

B. Security process data

C. Metrics

D. Key indicators

6.3 ANSWERS

1. A C Badge access logs and video surveillance logs can help establish the events of the incident timeline that show the employee’s physical activities.

2. D Key indicators are aggregates of data points that have been collected, correlated, and analyzed to represent important metrics regarding performance, goals, and risk in the organization.

Analyze test output and generate report

In this objective we will address what you should do with test output and results, and how you should report those results. Different organizations and regulations have various reporting requirements, but in this objective we will discuss the key pieces of the analysis and reporting process you should know for the CISSP exam, as well as remediation, exception handling, and ethical disclosure.

Test Results and Reporting

Once an organization has collected all relevant data from a test, it should analyze the test outputs and report those results to all stakeholders. Stakeholders include management, but may also include business partners, regulatory agencies, and so on. Each organization has its own internal reporting requirements, such as routing the report to different departments and managers, edits and changes that must be made, and reporting format. All of these requirements should be taken into consideration when reporting, but the key take-away here is that the primary stakeholders (i.e., senior management and other key organizational personnel) should be informed as to the results of the test, how it affects the security posture and risk of the organization, and what the path forward is for reducing the risk and strengthening the security posture.

Analyzing the Test Results

Analyzing the results of a test involves gathering all relevant data, as discussed in Objective 6.3, analyzing the results and output of tests that were executed during the evaluation, and distilling all this data into actionable information. Regardless of the evaluation methodology or the amount of data that goes into the analysis, properly evaluating the test results should provide the following:

• Analysis that is thorough, clear, and concise

• Reduction or elimination of repetitive or unnecessary data

• Prioritization of vulnerabilities or other issues according to severity, risk, cost, etc.

• Information that shows what is going on in the infrastructure and how it affects the organization’s security posture and risk

• Root causes of any issues, when possible

• Business impact to the organization

• Mitigation alternatives or compensating controls

• Identification of changes to key performance indicators (KPIs), key goal indicators (KGIs), and key risk indicators (KRIs), as well as other metrics defined by the organization
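The reduction of repetitive data mentioned in the list above can be illustrated with a short Python sketch that collapses duplicate scanner findings into one entry per vulnerability. The finding fields and the `VULN-` identifiers are hypothetical and do not reflect any specific scanner's output format.

```python
from collections import defaultdict

# Hypothetical raw output from repeated vulnerability scans.
raw_findings = [
    {"host": "web01", "vuln_id": "VULN-001", "severity": "high"},
    {"host": "web01", "vuln_id": "VULN-001", "severity": "high"},  # repeat
    {"host": "web02", "vuln_id": "VULN-001", "severity": "high"},
    {"host": "db01", "vuln_id": "VULN-002", "severity": "low"},
]


def consolidate(findings):
    """Collapse repeated findings into one entry per vulnerability."""
    hosts_by_vuln = defaultdict(set)
    severity = {}
    for finding in findings:
        hosts_by_vuln[finding["vuln_id"]].add(finding["host"])
        severity[finding["vuln_id"]] = finding["severity"]
    return [
        {"vuln_id": vuln_id, "severity": severity[vuln_id], "hosts": sorted(hosts)}
        for vuln_id, hosts in sorted(hosts_by_vuln.items())
    ]


summary = consolidate(raw_findings)
```

The consolidated summary lists each vulnerability once along with the affected hosts, which is usually easier for stakeholders to act on than the raw scan output.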

Cross-Reference

KPIs, KGIs, and KRIs were defined in Objective 6.3.

Reporting

After completing the test, security personnel report the results and any recommendations to management and other stakeholders. The goal of a report is to inform stakeholders of the actual situation with regard to any issues, shortcomings, or vulnerabilities that may have been uncovered during the test. The report should include historical analysis, root causes, and any negative trends that management should know about. Additionally, any positive results, such as reduced risk and good security practices, should also be highlighted in the report. The findings should be discussed in technical terms for those who have the knowledge and experience to implement mitigations for any discovered vulnerabilities, but often a nontechnical summary of the analysis is needed in the final report for senior management and other nontechnical stakeholders to understand.

The report should clearly convey the organization’s security posture, compliance status, and risk incurred by systems or the organization, depending on the scope and context of the report. Relevant metrics that have been formally defined by the organization, such as the aforementioned indicator metrics, should also be reviewed. Finally, recommendations and other mitigations should be included in the report to justify expenditures of resources (money, time, equipment, and people) needed to mitigate any issues.

Remediation, Exception Handling, and Ethical Disclosure

In addition to analyzing test results and reporting, exam objective 6.4 requires that we examine three critical areas related to the results of evaluations. Along with the other requirements detailed throughout this domain and other parts of the book, you must understand requirements for remediating vulnerabilities, handling exceptions to vulnerability mitigation management, and disclosing vulnerabilities as part of your ethical professional behavior.

Remediation

As a general rule, all vulnerabilities should be identified as soon as possible and mitigated in short order. This may mean patching software, swapping out a hardware component, requiring additional training for personnel, developing a new policy, or even altering a business process. It’s generally not cost-effective for an organization to try to mitigate all discovered vulnerabilities at once; instead, the organization should prioritize the vulnerabilities according to several factors. Severity is a top priority, closely followed by cost to mitigate, level of actual risk to the organization and its systems, and scope of the vulnerability. For example, a vulnerability that presents a low risk to an organization because it only affects a single system that is not connected to the outside world may be prioritized lower for remediation than a vulnerability that affects several critical systems and presents a higher risk.
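The prioritization factors named above can be expressed as a simple sort, sketched here in Python. The numeric scales, the sample vulnerabilities, and the exact tie-breaking order (severity, then cost to mitigate, then risk, then scope) are assumptions for illustration; an organization would weigh these factors according to its own risk management process.

```python
# Hypothetical vulnerabilities scored on assumed scales
# (severity and risk from 1 to 10, cost in currency units).
vulns = [
    {"id": "V1", "severity": 9, "cost": 5000, "risk": 8, "systems_affected": 12},
    {"id": "V2", "severity": 9, "cost": 500, "risk": 7, "systems_affected": 3},
    {"id": "V3", "severity": 4, "cost": 100, "risk": 2, "systems_affected": 1},
]


def prioritize(vulns):
    """Order vulnerabilities for remediation.

    Higher severity comes first; ties are broken by lower cost to mitigate,
    then higher risk, then wider scope.
    """
    return sorted(
        vulns,
        key=lambda v: (-v["severity"], v["cost"], -v["risk"], -v["systems_affected"]),
    )


remediation_order = [v["id"] for v in prioritize(vulns)]
```

In this sample, V1 and V2 share the same severity, so the cheaper fix (V2) is scheduled first, and the low-severity V3 comes last.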

Remediation actions should be carefully considered by management and included in the formal change and configuration management processes. Actions should also be formally documented in process or system maintenance records, as well as risk documentation.

Exception Handling

As mentioned earlier, vulnerabilities should be mitigated as soon as practically possible, based mainly on severity of the vulnerability. However, there are times when a vulnerability cannot be easily mitigated for various reasons, including lack of resources, system downtime, regulations, or other reasons. Exception handling refers to how vulnerabilities are handled when they cannot be immediately remediated. For example, discovering vulnerabilities in a medical device that cannot be easily patched due to U.S. Food and Drug Administration (FDA) regulations requires that the organization develop an exception-handling process to mitigate vulnerabilities by employing compensating controls.

The exception process should start with notifying the appropriate individuals who can make the decision regarding mitigation options, typically senior management; documenting the exception and the reasons for it; and determining compensating controls that can reduce the risk of not directly mitigating the vulnerability. There should also be a follow-up plan to look at the long-term viability of mitigating the vulnerability on a more permanent basis, which can include upgrading or replacing the system, changing the control, or even altering business processes.
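The elements of the exception process described above (approval, documented reason, compensating controls, and follow-up) could be captured in a record such as the one in this Python sketch. The class, its field names, and the sample entries are hypothetical and not drawn from any standard or tool.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class VulnerabilityException:
    """A documented exception to immediate remediation (illustrative fields)."""
    vuln_id: str
    system: str
    reason: str
    approved_by: str
    compensating_controls: list[str]
    next_review: date


def due_for_review(exceptions, today):
    """Return the IDs of exceptions whose follow-up review date has arrived."""
    return [e.vuln_id for e in exceptions if e.next_review <= today]


# Hypothetical exception records.
exceptions = [
    VulnerabilityException(
        vuln_id="VULN-010",
        system="infusion-pump-07",
        reason="patch cannot be applied under current regulatory constraints",
        approved_by="senior management",
        compensating_controls=["network segmentation", "enhanced monitoring"],
        next_review=date(2024, 3, 1),
    ),
    VulnerabilityException(
        vuln_id="VULN-011",
        system="hr-app",
        reason="no downtime window available",
        approved_by="senior management",
        compensating_controls=["vulnerable service disabled"],
        next_review=date(2024, 9, 1),
    ),
]

overdue = due_for_review(exceptions, today=date(2024, 6, 1))
```

Recording a review date for every exception supports the periodic follow-up the process calls for, so that a temporary exception does not quietly become permanent.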

EXAM TIP Understand how exceptions to policy, such as a vulnerability that cannot be immediately remediated, are handled through compensating controls, documentation, and follow-up on a periodic basis.

Ethical Disclosure

Ethical disclosure refers to a cybersecurity professional’s ethical obligation to disclose the discovery of vulnerabilities to the organization’s stakeholders. This ethical obligation applies whether you are an employee of the organization and discover a vulnerability during your routine duties or are an outside assessor employed to conduct an assessment or audit on an organization. In either case (or any other scenario), if you discover a vulnerability in a software or hardware product, you have an ethical obligation to disclose it. You should disclose any discovered vulnerabilities to organizations using the system or product, the creator/developers of the product, and, when necessary, the appropriate professional communities. As a professional courtesy, you should not disclose a newly discovered vulnerability to the general population without first disclosing it to those entities mentioned, since the vulnerability could be used by malicious entities to compromise systems before there is a mitigation for it.

REVIEW

Objective 6.4: Analyze test output and generate report This objective summarized what you should consider when analyzing and reporting evaluation results, to include general requirements for analyzing test output and reporting test results to all stakeholders. This objective also addressed vulnerability remediation, exception handling, and ethical disclosure of vulnerabilities.

6.4 QUESTIONS

1. You have discovered a vulnerability in a software product your organization uses. While researching patches or other mitigations for the vulnerability, you find that this vulnerability has never been documented. Which of the following should you do as a professional cybersecurity analyst? (Choose all that apply.)

A. Contact the software vendor directly to report the vulnerability.

B. Immediately post information about the vulnerability on public security sites.

C. Say nothing; if no one knows the vulnerability exists, then no one will attempt to exploit it.

D. Inform your supervisor.

2. After a routine vulnerability scan, you find that several critical servers have an operating system vulnerability that exposes the organization to a high risk of exploitation. The vulnerability is in a service that is not used by the servers but is running by default. There is a patch for the vulnerability, but it involves taking the servers down, which is not acceptable due to high data processing volumes during this time of the year. Which of the following is the best course of action to address this vulnerability?

A. Do nothing; since the servers don’t use that particular service, the vulnerability can’t affect them.

B. Take the servers down immediately and patch the vulnerability on each one.

C. Disable the service from running on the critical systems, and once the high processing times have passed, then patch the vulnerability.

D. Disable the service and do not worry about patching the vulnerability, since the servers don’t use that service.

6.4 ANSWERS

1. A D You should contact the software vendor and report the vulnerability so that a patch or other mitigation can be developed for it. You should also contact your supervisor so that management is aware of the vulnerability and can determine any mitigations necessary to protect the organization’s assets.

2. C The vulnerability must be patched eventually, but in the short term, simply disabling the service may mitigate or reduce the risk somewhat until the high data processing time has passed; then the servers can undergo the downtime required to patch the vulnerability. Doing nothing is not an option; even if the servers do not use that particular service, the vulnerability can be exploited. Taking down the servers immediately and patching the vulnerability on each one is generally not an option since it is a time of high-volume data processing and this may severely impact the business.

Conduct or facilitate security audits

To conclude our discussion on security assessments, tests, and audits, in this objective we will address conducting and facilitating security audits, specifically using internal, external, and third-party auditors. We will reiterate the definition of auditing as a process, as well as audits as distinct events, and provide some examples of audits and the circumstances under which different types of audit teams would be most beneficial.

Conducting Security Audits

In Objective 6.1, we briefly described audits under two different contexts. First, auditing is a detective control and a process that should take place on an ongoing basis. It is a security business process that involves continuously monitoring and reviewing data to detect violations of security policy, illegal activities, and deviations from standards in how systems and information are accessed. However, security audits can also be distinct events that are planned to review specific areas within an organization, such as a system, a particular technology, and even a set of business processes. A security audit can also be used to review events such as incidents, as well as to ensure that organizations are following standardized procedures in accordance with compliance or governance requirements.

NOTE For our purposes here, assume that “audits” and “auditors” refer specifically to “security audits” and “security auditors” throughout this objective, unless otherwise specified.

For the purposes of this objective we will look at three different types of auditing teams that can be used to conduct an audit: internal auditors, external auditors, and third-party auditors. These are similar to the three types of assessment teams discussed in Objective 6.1, so you’ll notice some overlap in this discussion.

EXAM TIP There is little difference between internal, external, and third-party assessors, also discussed briefly in Objective 6.1, and the three types of auditors discussed here, except where there are minor nuances in the types of assessments versus audits. The teams that can perform them have the same characteristics.

Internal Security Auditors

Internal auditors are likely the most cost-effective to use compared to external and third-party audit teams. Internal auditors work full time for the organization in question, so auditing is already part of their normal daily duties. Cybersecurity auditors are tasked with reviewing logs and other data on a daily basis to detect policy violations, illegal activities, and anomalies in the infrastructure. However, auditors can be assigned to specific events, such as auditing the account management process or auditing the results of an incident response or a business continuity exercise.

The advantages of using internal auditors include

• Cost effectiveness, since auditors already work for the organization

• Less difficulty in scheduling an internal audit versus an external audit

• Familiarity with the organization, its personnel, processes, and systems

• Reduced exposure of sensitive data to outsiders

There are, however, disadvantages to using internal audit teams, and indeed there are circumstances when this may not be permitted, particularly when the audit has been directed by an outside entity, such as a regulatory agency. Disadvantages of using an internal audit team include

• May be influenced by organizational politics and conflicts of interest

• May not be full-time auditors and likely have other duties that must be performed during an audit event

• Lack of independence from organizational structure and management

• Not as experienced in a wide variety of auditing tools and techniques

• May not be allowed by external agencies if governance requires an independent audit

External Security Auditors

Just like an external assessment team, as mentioned in Objective 6.1, an external audit team normally works for a business partner entity of the organization. Contracts or other legal agreements between organizations may stipulate that certain systems, activities, or processes must be audited on a regular basis. An external auditor, also considered a second-party auditor, will periodically come into the organization and audit specific systems or processes to meet the requirements of the contract.

As with internal audit teams, using external auditors has disadvantages and advantages. Advantages include

• Cost may be higher than for an internal audit team, but it is typically already budgeted and built into the contract

• Not as easily influenced by organizational politics or conflicts of interest

• Defined schedule due to contract requirements (e.g., annually)

Disadvantages of using an external team include

• Lack of familiarity with the organization’s people, internal processes, and infrastructure

• Lack of independence (allegiances to the business partner, not the organization)

• May be influenced by the business partner’s internal conflicts of interest or politics

• May incur some of the same limitations as an internal team, such as split time between a regular IT or cybersecurity job, lack of access to advanced auditing tools and techniques, etc.

Third-Party Security Auditors

The third and final option relevant to this objective is the use of third-party auditors. Third-party auditors do not work for the organization or any of its business partners. A third-party audit team is normally required for independence from any internal stakeholder entity. This is the type of team that may be employed by a regulatory agency, for example, to ensure compliance with laws or regulations. Depending on the type of audit, an organization may not have a choice about whether to use a third-party audit team; it may be levied as part of external governance requirements.

As with the other two types of teams, using third-party audit teams has advantages and disadvantages. Advantages of using a third-party audit team include

• True independence from any organizational conflict of interest or politics

• Access to advanced auditing tools and techniques

• Establishes unbiased proof of compliance or noncompliance with regulations or standards

The disadvantages of using a third-party audit team include

• Most expensive option of the three types of audit teams; the expense cannot always be predicted or budgeted

• Sometimes difficult to schedule due to other auditing commitments, even if required on a recurring basis

• Lack of familiarity with the organization’s personnel, processes, and infrastructure

EXAM TIP Remember that external auditors work for business partners or stakeholders outside of the organization. Third-party auditors work for independent organizations, such as regulatory agencies.

REVIEW

Objective 6.5: Conduct or facilitate security audits In this objective we discussed conducting security audits. Auditing is an ongoing business process that looks for wrongdoing and anomalies in operations. However, an audit is also an event used to review compliance standards for systems, processes, and other activities. Audits can be performed by one of three types of audit teams: internal teams, external teams, and third-party teams. Internal teams are more cost-effective but lack independence from the organization and may not have access to the right audit tools and techniques. External teams work for a business partner or other stakeholder and audit processes and activities as required by a contract. Third-party audit teams are more expensive but allow some level of independence from organizational stakeholders and may be required in the event of regulatory audits.

6.5 QUESTIONS

1. You are a cybersecurity analyst for your company. You have been tasked with auditing account management in another division of the company. Which of the following types of auditing would this be considered?

A. Third-party audit

B. External audit

C. Internal audit

D. Second-party audit

2. Your company must be audited for compliance with regulations that protect healthcare information. Which of the following would be the most appropriate type of auditors to perform this task?

A. External auditors

B. Internal auditors

C. Second-party auditors

D. Third-party auditors

6.5 ANSWERS

1. C An internal audit uses auditors from within an organization to assess another part of the organization.

2. D For a compliance audit, third-party auditors are usually the most appropriate type of audit team to conduct the effort, due to their independence.