Security Operations

Domain Objectives

• 7.1 Understand and comply with investigations.

• 7.2 Conduct logging and monitoring activities.

• 7.3 Perform Configuration Management (CM) (e.g., provisioning, baselining, automation).

• 7.4 Apply foundational security operations concepts.

• 7.5 Apply resource protection.

• 7.6 Conduct incident management.

• 7.7 Operate and maintain detective and preventative measures.

• 7.8 Implement and support patch and vulnerability management.

• 7.9 Understand and participate in change management processes.

• 7.10 Implement recovery strategies.

• 7.11 Implement Disaster Recovery (DR) processes.

• 7.12 Test Disaster Recovery Plans (DRP).

• 7.13 Participate in Business Continuity (BC) planning and exercises.

• 7.14 Implement and manage physical security.

• 7.15 Address personnel safety and security concerns.

Domain 7 is unique in that it has the most objectives of any of the CISSP domains, and it accounts for approximately 13 percent of the exam questions. You’ll find that many of the objectives covered in this domain, Security Operations, have also been briefly discussed throughout the entire book. This is because security operations are diverse and overarching activities that span multiple areas within security.

In this domain we will examine a wide range of subjects, including those that are reactive in nature, such as investigations, logging and monitoring, vulnerability management, and incident management. We will also look at the details of how to ensure a secure change and configuration management process that is supported by patch management.

We will review some of the foundational security operations concepts that we also discussed in Domain 1 and apply some of those concepts to resource protection. We will also look at some of the more technical details of detective and preventive measures, such as firewalls and intrusion detection systems. Four of the objectives address business continuity planning and disaster recovery, and we will discuss the strategies involved with each topic as well as how to implement and test the plans associated with these processes. Finally, we will review physical and personnel safety and security concerns.

Understand and comply with investigations

Understand and comply with investigations

In Objective 1.6 we briefly touched on the types of investigations you may encounter in security, and we also reviewed the related topics of legal and regulatory issues in Objective 1.5. These two objectives go hand-in-hand with Objective 7.1, which carries our discussion a bit further by focusing on how investigations are conducted.

Investigations

Recall from Objective 1.6 that the four primary types of investigations are administrative, regulatory, civil, and criminal investigations. Regardless of the type of investigation, however, most of the activities, processes, and techniques that are used are common across all of them. This includes how to collect and handle evidence; reporting and documenting the investigation; the investigative techniques that are used; the digital forensics tools, tactics, and procedures that are implemented; and the artifacts that are discovered on computing devices using those tools, tactics, and procedures. These common activities are the focus of this objective.

Cross-Reference

The types of investigations you may encounter in cybersecurity were discussed at length in Objective 1.6.

Forensic Investigations

Computers, mobile devices, network devices, applications, and data files all contain potential evidence. In the event of an incident involving any of them, they must all be investigated. Computing devices can be part of an incident in three different ways:

• As the target of the incident (e.g., as an attack on a system)

• As the tool of the incident (used to directly perpetrate a crime, such as a hacking attack)

• Incidental to the event (part of the event but not the direct target or tool used, such as researching how to commit a murder, for instance)

Computer forensics is the science of the identification, preservation, acquisition, analysis, discovery, documentation, and presentation of evidence to a court of law (either civil or criminal), corporate management, or even to a routine customer. Computer forensic investigations are carried out by personnel who are uniquely qualified to perform them. E-discovery is another term with which you should be familiar; it is the process of discovering digital evidence and preparing that information to be presented in court. The most important part of the computer forensics process is how evidence is collected and handled, discussed next.

Evidence Collection and Handling

Evidence preservation is the most crucial part of an investigation. Even if an unskilled investigator is not properly trained to analyze evidence, the investigation can be saved by ensuring that the evidence is preserved and protected at all stages of the investigation. Evidence preservation involves proper collection and handling, including chain of custody, physical protection, and logical protection. We will discuss proper collection and handling procedures in the next few sections. However, chain of custody is an important one to discuss first.

Chain of custody refers to the consistent, formalized documentation of who is in possession of evidence at all stages of the investigation, up to and including when the evidence is presented in court or another proceeding. Chain of custody starts at the time evidence is obtained. The individual collecting the evidence initiates a chain of custody form that describes the evidence and documents the time and location it was collected, who collected it, and its transfer. From then on, every individual who takes possession of the evidence, relocates it, or pulls it out of storage for analysis must document those actions on the chain of custody form. This ensures that an uninterrupted sequence of events, timelines, and locations for the evidence is maintained throughout the entire investigation. Chain of custody assures evidence integrity and protects against tampering. This is one of the most crucial pieces of documentation you must initiate and maintain throughout the entire investigative cycle.

Evidence Life Cycle

Evidence has a defined life cycle. This means that the moment evidence is identified during the initial response to the investigation, it is collected and handled carefully, according to strict procedures. Chain of custody begins this critical process, but it doesn’t end with that event. Evidence must be protected throughout the investigation against tampering; even the appearance of intentional or inadvertent changes to evidence may call its investigative value and admissibility into court into question.

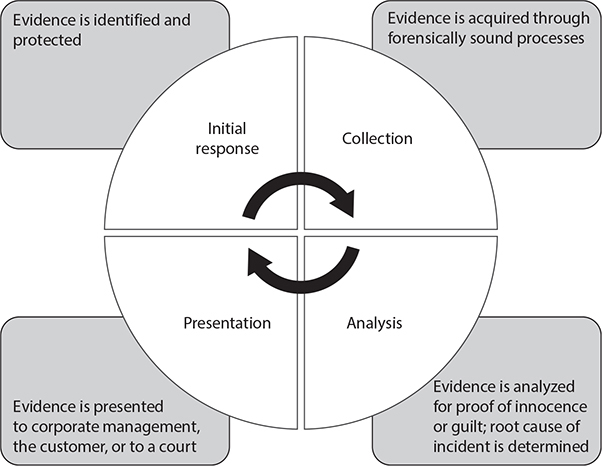

The evidence life cycle consists of four major phases, summarized as follows:

• Initial response Evidence is identified and protected; a chain of custody is initiated.

• Collection Evidence is acquired through forensically sound processes; evidence integrity is preserved.

• Analysis All evidence is analyzed at the technical level to determine the timeline and chain of events. Determining innocence or guilt of a suspect is the goal as well as identifying the root cause of the incident.

• Presentation Evidence is summarized in the correct format for presentation and reporting for corporate management, a customer, or to a court of law.

NOTE This life cycle, as with all other life cycles, may be different depending upon the methodology or standard used, since there are many different life cycles that exist in the investigative world. However, all of them agree on fundamental evidence collection and handling processes, which are standardized all over the world.

Obviously, there are more in-depth processes and procedures that must take place at each of these phases, and we will discuss those at length in the next section. Figure 7.1-1 summarizes the evidence life cycle.

FIGURE 7.1-1 The evidence life cycle

Evidence Collection and Handling Procedures

There are standardized evidence collection and handling procedures used throughout the world, regardless of the type of investigation. These have all been adopted as formal standards by different law enforcement agencies, security firms, and professional organizations. They are summarized as follows:

• Secure the scene of the crime or incident against unauthorized personnel.

• Photograph the scene before it is disturbed in any way.

• Don’t arbitrarily power off devices until any available live evidence has been gathered from them.

• Obtain legal authorization from law enforcement or an asset owner before removing items from the scene.

• Inventory all items removed from the scene.

• Transport and store all evidence items in protected containers and store them in secure areas.

• Maintain a strong chain of custody at all times.

• Don’t perform a forensic analysis on original evidence items; forensically duplicate the evidence item and perform an analysis on the duplicate to avoid destroying or compromising the original.

EXAM TIP Once evidence is obtained from the source, such as a device, logs, and so on, that source may be placed on what is known as legal hold. Legal hold ensures that any devices or media that contain the original evidence must be kept in secure storage, and access must be controlled. These items cannot be reused, destroyed, or released to anyone outside the chain of custody until cleared by a legal department or court.

Artifacts

Artifacts are any items of potential evidentiary value obtained from a system. They are usually discrete pieces of information in the form of files, such as documents, pictures, executables, e-mails, text messages, and so on that are found on computers, mobile devices, or networks. However, they can also be information such as screenshots, the contents of RAM, and storage media images.

Artifacts are used as evidence of activities in investigations and can serve to support audit trails. For example, the Internet history files from a computer can support an audit log that indicates an individual visited a prohibited website. Files such as pictures or documents can indicate whether individuals are performing illegal activities on their system.

Note that artifacts by themselves are not indicative of an individual’s guilt or innocence; the presence of artifacts on a system mutually corroborates audit trails and other sources of information during an investigation. Artifacts must be investigated on their own merit before they are determined to meet the requirements of evidence. As discussed earlier in the objective, digital artifacts, as potential evidence, must be collected and handled with care.

Digital Forensics Tools, Tactics, and Procedures

While knowledge and experience with evidence collection and handling procedures are among the most crucial skills forensic investigators can have, they should also have core technical analysis skills and knowledge of a variety of subjects, including how storage media is constructed and operates, operating system architecture, networking, programming or scripting, and security. If you are conducting a forensic investigation, these skill sets will assist you greatly when performing some of the following forensic tasks:

• Data acquisition from volatile memory or hard drives using forensic techniques

• Establishing and maintaining evidence integrity through hashing tools to ensure artifacts are not intentionally or inadvertently changed

• Data carving (the process used to “carve” discrete data or files from raw data on a system using forensics processes) to locate and preserve artifacts that have been deleted or hidden

We are long past the days when computer forensics was performed mostly on simply end-user desktop computers or servers. In today’s environment, devices and data are integrated all the way from end-user mobile devices, into the cloud, and back to the organization’s infrastructure. While core forensics knowledge and skills are still necessary, so too are knowledge and skills related to specific procedures that are tied to more narrowly focused areas within forensics. These areas often require specialized knowledge and tools in addition to generalized forensics skills. These areas of expertise include

• Cloud forensics

• Mobile device forensics

• Virtual machine forensics

The choice of tools that a forensic investigator uses is important. There are specific tools that are used for specific actions, including data acquisition, log aggregation review, and so on. Each investigator or organization typically has favorite tools they use to perform all of these tasks. Some tools are proprietary, commercial-off-the-shelf enterprise-level software suites sold specifically for forensics processes, but many are simply individual tools that come with the operating system itself, such as utilities or built-in applications. Many forensics tools may also be internally developed utilities, to include scripts, for instance, or even open-source software utilities or applications.

Regardless of which digital forensics tools an organization uses, the following are some key things to remember about a forensics tool set:

• The tools should be standardized and thoroughly documented. An organization should have established procedures for the investigator to follow when using a tool.

• Forensics tools are often validated by professional organizations or national standards agencies; these tools should be preferred over tools developed in-house or tools whose origins and effectiveness can’t be easily verified.

• Tools that can offer repeatable and verifiable results should be used; if a tool does not image the same hard drive consistently every single time, for example, then its usefulness may be limited since its integrity cannot be trusted.

While this objective can’t possibly cover every single tool available to you during your forensic investigation, you can generally categorize tools in the following areas:

• Network tools (protocol analyzers or sniffers such as Wireshark and tcpdump)

• System tools (used to obtain technical configuration information for a system)

• File analysis tools

• Storage media imaging tools (both hardware and software tools)

• Log aggregation and analysis tools

• Memory acquisition and analysis tools

• Mobile device forensics tools

Investigative Techniques

Investigations attempt to discover what happened before, during, and after an incident. The goal of investigators is to identify the root cause of an incident and help ensure someone is held accountable for illegal acts or those that violate policy. Investigators also want to answer questions such as the who, what, where, when, and how of an incident. Investigators have the primary tasks of

• Collecting and preserving all evidence

• Determining the timeline and sequence of events

• Determining the root cause of and methods used during an incident

• Performing a technical analysis of evidence

• Submitting a complete, comprehensive, unbiased report

Investigators should always treat all investigations as if the results will eventually be presented in a court of law. This is because many investigations, even ones that seemingly start out as innocuous policy violations, may go to court if the evidence indicates that a violation of the law has occurred.

During an investigation, there are key points to keep in mind:

• Remain unbiased; don’t go into an investigation automatically presuming guilt or innocence.

• Always have another investigator validate work you have performed.

• Maintain documented, verifiable forensic procedures.

• Keep all investigation information confidential to the extent possible; it should only be shared with key personnel such as senior corporate managers or law enforcement.

• Only perform procedures on evidence that you are trained and qualified to perform; don’t undertake any actions for which you are not qualified.

• Ensure you have the proper tools to perform forensic activities on evidence; using the wrong tool could inadvertently destroy or compromise the integrity of evidence.

Reporting and Documentation

Documentation is one of the most critical aspects of investigations. An investigator should document every action they take during an investigation. The documentation for investigations should meet legal requirements and be thorough and complete. The content of documentation includes

• All actions involving evidence and witnesses (chain of custody, artifacts collected, witness interviews, etc.)

• Dates, times, and relevant events

• All forensic analysis of evidence

Reports and relevant documentation are usually delivered formally to the corporate legal department, human resources, or lawyers for all parties, as well as law enforcement investigators. Reports and investigation documentation should be clear and concise and present only the facts regarding an incident.

A good report includes investigative events, timelines, and evidence. The analysis portion of the report includes determination of the root cause(s), attack methods used during the incident, and the assertion of proof of guilt or innocence of the accused.

Investigative reports usually are formatted according to the desires of the corporate management or the court or agency that maintains jurisdiction over the investigation. In general, however, the investigation report should consist of an executive summary, the details of the events of the investigation, any findings and supporting evidence, and conclusions regarding the root cause of the case. Characteristics of a well-written investigative report include

• Clear, concise, and nontechnical

• Well written and well researched

• Answers to critical investigative questions of who, what, where, when, why, and how

• Conclusions supported by evidence

• Unbiased analysis

In addition to documentation and reporting, investigations also may make use of witness depositions or testimony. Witnesses are often asked to testify if they have direct knowledge of the facts of the case. Investigators can also be required to testify in court to detail the facts of the investigation.

REVIEW

Objective 7.1: Understand and comply with investigations This objective provided an opportunity to discuss details of investigations in more depth. Whereas Objective 1.6 covered the different types of investigations, in this objective we discussed the details of how investigations are conducted. We examined in particular forensic investigations, which involve gathering evidentiary data from computing systems.

Evidence collection and handling is the most crucial part of an investigation, since once evidence has been destroyed or compromised, it may not be recovered or trusted. The evidence life cycle consists of four general phases: initial response, collection, analysis, and presentation. The most critical part of evidence collection and handling is to establish a chain of custody that follows the evidence over its entire life cycle. Chain of custody assures that the evidence is always accounted for during transfer, storage, and analysis, and helps to rebut claims that the evidence has been tampered with or is unreliable. Other critical evidence handling activities include securing the scene of the incident or crime, photographing all evidence before it is removed, securely transporting and storing the evidence, and conducting analysis using only verifiable forensic procedures.

Artifacts are any items of potential evidentiary value obtained from a system, including files, logs, screen images, media, network traffic, and the contents of volatile memory. Artifacts are used to support a legal case and corroborate with other sources of information.

Digital forensics consists of a wide variety of tools, techniques, and procedures. The forensic investigator should be well-versed in a variety of disciplines, including networking, operating systems, programming, and other specific areas such as cloud computing, mobile device forensics, and virtual machine technology. Digital forensics tools can be categorized in terms of network tools, system tools, file analysis tools, storage media imaging tools, log aggregation analysis tools, memory acquisition and analysis tools, and mobile device tools.

Investigative techniques include solid knowledge of legal and forensic procedures with regard to evidence collection and handling, as well as technical areas of expertise. Investigators should also understand how to present an analysis of evidence in a court of law and should conduct all investigations as if they will proceed in that direction. Investigators should also approach every incident with an open mind with no bias as to the guilt or innocence of a suspect.

Forensic reports and documentation must be thorough and complete; they must follow the format prescribed by the corporate entity, customer, or the court of jurisdiction. They should include an executive summary, technical findings, and analysis of the evidence that supports those findings. They should also propose a conclusion and list any relevant facts pertinent to the case. Reports should also be clear and understandable to nontechnical personnel.

7.1 QUESTIONS

1. You have been called to investigate an incident of an employee who has violated corporate security policies by downloading copyrighted materials from the Internet. You must collect all evidence relating to the incident for the investigation, including the employee’s workstation. Which one of the following is the most critical aspect of the response?

A. Establishing a chain of custody

B. Analyzing the workstation’s hard drive

C. Creating a forensic duplicate of the workstation’s hard drive

D. Creating a formal report for management

2. Which of the following best describes one of the primary tasks a forensic investigator must complete?

A. Ensuring that the evidence proves a suspect is guilty

B. Determining a timeline and sequence of events

C. Performing the investigation alone to ensure confidentiality

D. Manually analyzing device logs

7.1 ANSWERS

1. A During the initial response, creating a solid chain of custody is critical for evidence integrity and preservation. The other choices refer to processes that normally take place after the initial response.

2. B One of the primary tasks of the investigator is to determine a timeline and sequence of events that occurred during an incident. The other choices indicate things an investigator should not do, such as only looking for evidence that proves guilt, performing an investigation alone, or manually analyzing logs.

Conduct logging and monitoring activities

Conduct logging and monitoring activities

This objective covers the more technical aspects of logging and monitoring the network infrastructure and traffic. Although we have touched on these topics throughout the book, this objective addresses the need for and the process of collecting data from different sources all over the network, aggregating that data, and then performing analysis and correlation to determine the overall security picture for the network.

Logging and Monitoring

Remember that in Objective 6.1 you learned about security audits; auditing is directly enabled by logging network activity and monitoring the infrastructure for negative events and anomalous behavior. However, there’s more to these critical activities than that. Logging and monitoring are necessary to maintain understanding and visibility of what’s going on in the network infrastructure, which includes network devices, traffic, hosts, their applications, and user activity. You need to understand not only what’s going on in the network at any given moment, but also what’s happening over time, so that you can perform historical analysis and predict potentially negative trends.

Monitoring includes not only security monitoring but also performance monitoring, function monitoring, and user behavior monitoring. The purpose for all of this logging and monitoring is to collect small pieces of data that, when put together and given context, generate information that enables you to take proactive measures to defend the network. Some key elements of the infrastructure that you must monitor include

• Network devices and their performance

• Servers

• Endpoint security

• Bandwidth utilization and network traffic

• User behavior

• Infrastructure changes or departures from normal baselines

Much of this information is generated by logs, particularly from network and host devices. Logs that cybersecurity analysts review on almost a daily basis include firewall logs, proxy logs, and intrusion detection and prevention system logs. In this objective we will discuss many of the technologies that enable logging and monitoring, as well as how they are implemented.

Cross-Reference

Objective 7.7 provides a broader overview of firewalls and intrusion detection and prevention systems.

Continuous Monitoring

Continuous monitoring requires a resilient infrastructure that is able to collect, adjust, and analyze data on multiple levels, including both network-based data (e.g., traffic characteristics and patterns) and host-based data (such as host communications, processes, applications, and user activity). Continuous monitoring involves the use of IDS/IPSs and security information and event management systems (SIEMs).

We discuss continuous monitoring here in two different contexts. The first is more relevant to logging and monitoring the infrastructure and involves proactive monitoring of both the network infrastructure and its connected hosts to detect anomalies in configured baselines, as well as potentially malicious activities. The second context is not as technical but equally as important: monitoring overall system and organizational risk. Risk is monitored and measured on a continual basis so that any changes in the organization’s risk posture can be quickly identified and adjusted if needed. Risk changes frequently due to several factors, which include the threat landscape, the organization’s operating environment, technologies, the industry or market segment, and even the organization itself. All of these risk factors must be monitored to ensure risk does not exceed appetite or tolerance levels for the organization.

Intrusion Detection and Prevention

Historically, network and security personnel focused on simple intrusion detection capabilities. Modern security devices function as both intrusion detection and prevention systems (IDS/IPSs). They not only can detect and categorize a potential attack, but can also take actions to halt traffic that may be malicious in nature. An IDS/IPS may perform this function by dynamically rerouting network traffic, shunting connections, or even isolating hosts.

Intrusion detection typically relies on a combination of one or more of three models to detect problems in the infrastructure:

• Signature- or pattern-based (rule-based) detection Uses well-known signatures or patterns of behavior that match attack characteristics (e.g., traffic inbound on a particular port from a specific domain or IP address)

• Anomaly- or behavior-based analysis Detects deviations in normal behavior baselines

• Heuristic analysis Also detects deviations in normal behavior baselines, but matches those deviations to potential attack characteristics

EXAM TIP There is a difference between behavior-based analysis and heuristic analysis, although they are very similar. With behavior-based analysis, any deviations in the normal behavior baseline will be flagged and security personnel will be alerted. However, even deviations can be explained under certain circumstances. Heuristic analysis takes it a step further by looking to see what those abnormal behaviors might do, such as accessing protected memory areas, changing operating system files, or writing data to a hard drive.

Security Information and Event Management

With properly configured monitoring and logging, a large network, or even a medium-sized network, likely collects millions of pieces of data daily from a variety of network sources. These pieces of data come from hosts, network devices, user activity, network traffic, and so on. It would be impossible for a single person or even several people to sift through the data to make sense out of it and gain meaningful information from it. A good amount of data that comes from logging and monitoring may be insignificant; it’s up to a security analyst to determine which of the millions of pieces of data are important and what they mean to the overall security of the network.

Fortunately, the daunting process of collecting, aggregating, correlating, and analyzing this data is not simply left up to human beings to perform. This is where automation significantly contributes to the security process. We have already discussed how security tool automation is critical to the security process, but here we are talking specifically about security information and event management (SIEM) systems.

A SIEM system is a multifunctional security device whose purpose is to collect data from various sources, aggregate it, and assist security analysts in analyzing it to produce actionable information regarding what is happening on the network. SIEM systems are often the central data collection point for all log files, traffic captures, and other forms of data, sometimes disparate. A SIEM system ingests all of this data and correlates seemingly unrelated data to connect data points and show how they actually do relate to each other. This device helps you to make intelligent, risk-based decisions in almost real time about the security of the network.

SIEM systems use a concept known as a dashboard to display information to security analysts and allow them to run extensive queries on information to get very detailed analysis from all of these different sources of information.

Egress Monitoring

Egress monitoring specifically examines traffic that is leaving the network. Egress monitoring is typically performed by firewall, proxy, intrusion detection, or data loss prevention systems. For the most part this will be routine traffic, but egress monitoring looks for specific security issues. Obviously, a major issue is malware. Often an attack may come in the form of a distributed denial-of-service (DDoS) attack carried out by a botnet that uses the network against itself by infecting different hosts, which then attack other hosts on the network or even hosts on an external network. Egress monitoring looks for signs that internal hosts have been compromised and are being controlled by an external malicious entity and are communicating with it.

In addition to malware, another issue egress monitoring is useful for detecting is data exfiltration. This usually involves sensitive data that is being illegally sent outside the network, in an uncontrolled manner, to unauthorized entities. Egress monitoring uses several different technologies to detect this issue; in addition to data loss prevention (DLP) technologies deployed on both network devices and user endpoints, security devices such as firewalls implement rule sets that look for large volumes of data as well as files with particular extensions, sizes, and other characteristics.

Cross-Reference

Data loss prevention was discussed in Objective 2.6.

Log Management

If an organization does not monitor its logs and react to them properly, the logs serve no useful function. Given that there may be thousands of devices writing logs, managing logs can seem like a daunting task. Again, this is where automation comes into play. Logs are usually automatically sent to central collection points, such as the aforementioned SIEM system, or even a syslog server, for examination. Often, manual log review must occur to solve a particular problem, research a specific event, or gain more details about what is going on with the network. However, these are usually the exceptions, and most of the log management process can be automated, as mentioned previously.

Most devices generate what are known generically as event logs. An event log records an occurrence of an activity or happening. An event is usually something that is considered on a singular basis and has definable characteristics. Basic information for an event in a log includes

• Event definition

• System or resource the event effects

• Identifying information for a host, such as hostname, IP address, or MAC address

• The user or other entity that initiated or caused the event

• Date, time, and duration of the event

• Event action (e.g., file deletion, privilege use, etc.)

EXAM TIP You should be familiar with the general contents of an event log entry, which typically includes an event definition, the system affected, host information, user information, the action that was taken, and the date and time of the event.

Log analysis, also primarily an automated task performed by SIEM systems, has the goal of looking through various logs to connect data points and ascertain any patterns between those aggregated data points.

Threat Intelligence

Threat intelligence is the process of collecting and analyzing raw threat data in an effort to identify potential or actual threats to the organization. This may involve determining threat trends to predict what a threat will do, historical analysis of threat data to recognize what happened during a particular event, or behavioral analysis to understand how a threat reacted under certain circumstances to the environment.

Note that the terms threat data and threat intelligence are similar but not the same thing. Threat data refers to raw pieces of information, typically without context, which may or may not be related to each other. An example is an IP address or a log entry that shows a connection between two hosts. Threat data only becomes threat intelligence when it is analyzed and correlated to gain useful insight into how the data relates to the organization’s assets. Threat intelligence can come from various sources, called threat feeds, which include open-source, proprietary, and closed-source information.

Characteristics of Threat Intelligence

The effectiveness of threat intelligence can be evaluated based on the following three different characteristics, which will determine the quality or usefulness of the intelligence to the organization:

• Timeliness The intelligence must be obtained as soon as it is needed to be of any value in countering a threat.

• Accuracy The intelligence must be factually correct, accurate, and not contribute to false negatives or positives.

• Relevance The intelligence must be related to the given threat problem at hand and considered in the correct context when viewed with other factors.

Two other characteristics of threat intelligence are

• Threat rating Indicates the threat’s potential danger level. Typically, the higher the rating, the more dangerous the threat.

• Confidence level The trust placed in the source of the threat intelligence and the belief that the threat rating is accurate.

Both threat ratings and confidence levels can be expressed on qualitative scales, from least dangerous to most dangerous, for example, or least level of confidence to highest level of confidence, respectively. Often threat ratings and threat confidence levels directly relate to the sources from which we gain intelligence, as some are more dependable than others. Threat intelligence sources are discussed next.

EXAM TIP Make sure you are familiar with the characteristics of threat intelligence timeliness, accuracy, and relevance, and that you understand the concepts of threat rating and confidence level.

Open-Source Intelligence

Open-source intelligence (OSINT) comes from sources that are available to the general public. Examples include public databases, websites, and general news. While open-source intelligence is very useful, it is typically broader and describes very general characteristics of threats, which may not apply to your particular assets, vulnerabilities, or overall organization. Open-source intelligence comes in great volumes, which must be reduced, sorted, prioritized, and analyzed to determine its relevance to the organization. (Threat modeling, discussed a bit later, is useful for distilling OSINT.)

Closed-Source Intelligence

Closed-source intelligence comes from threat feeds that may be restricted in their availability. Consider classified government intelligence feeds, for example. These are not readily available to the general public due to data sensitivity or the sensitivity of their source, such as from an agent operating covertly in a foreign country or obtained with secret technology. Another key differentiator for closed-source intelligence versus OSINT is that typically closed-source intelligence is more accurate, more thoroughly authenticated, and holds a higher confidence level. Closed-source intelligence also often provides greater detail and fidelity about the threat, particularly as the intel is often focused on specific organizations, assets, and vulnerabilities that are targeted.

Proprietary Intelligence

Proprietary intelligence can be thought of as a closed-source intelligence feed, but it is usually developed by a private organization and sold, via subscription, to any organization that wishes to purchase it. This makes it more of an intelligence commodity as opposed to being restricted from the general public based on sensitivity. Many organizations purchase proprietary threat intelligence feeds from other companies, sometimes tailored to their specific market or circumstances.

Threat Hunting

Threat hunting is the active effort to determine whether various threats exist in an infrastructure. In some cases, an analyst may be looking to determine if specific threats or threat actors have already infiltrated the infrastructure and continue to maintain a presence. In other cases, threat hunting is more geared toward looking for a variety of threats on a continual basis to ensure that they don’t ever get into the infrastructure in the first place. Threat hunting uses both threat intelligence feeds and threat modeling to determine more precisely which threats are more likely to target which assets in the infrastructure, rather than looking for generic threats. Then the threat hunters make a concerted effort to look for those specific threats or threat actors in the network.

Threat Modeling Methodologies

Several formalized methodologies have been developed to address the different characteristics and components of threats. Some address threat indicators, some address attack methods that threat sources can use against organizations (called threat vectors), and some allow for in-depth threat modeling and analysis. All these methodologies allow the organization to formally manage threats and are critical components of the threat modeling process. A few examples are listed and described in Table 7.2-1.

TABLE 7.2-1 Various Threat Modeling Methodologies

While an in-depth discussion on any of these threat methodologies is beyond the scope of this book, you should have basic knowledge about them for the CISSP exam.

Cross-Reference

We also discussed threat modeling in Objective 1.11.

User and Entity Behavior Analytics

User and entity behavior analytics (UEBA) focuses on patterns of behavior from users and other entities (e.g., service accounts or processes). UEBA goes beyond simply reviewing a log of user actions; it looks at user behavioral patterns over time to detect when those patterns of behavior change. These behavioral patterns include when a user normally logs on or off of a system, which resources they access, and how they interact with the system as a whole.

When a pattern of behavior deviates from the normal baseline, it may be an indicator of compromise (IoC). It may indicate one of several possibilities that merit further investigation, such as:

• The user is violating a policy or doing something illegal

• The system itself is functioning but performing in a less than optimal manner

• The system is under attack from a malicious entity

As with all the other types of data in the infrastructure, user behavior data must be initially collected, aggregated, and analyzed to determine normal baselines of behavior.

REVIEW

Objective 7.2: Conduct logging and monitoring activities In this objective we discussed details of technical logging and monitoring. Logging and monitoring contribute to the auditing function by providing data to connect events to entities. Logs can come from various sources, including network devices, hosts, applications, and so on. Event log entries normally include details regarding the user that initiated the event, the identifying host information, a description or definition of the event, the date and time of the event, and what actually happened.

Continuous monitoring is a proactive way of ensuring that you not only have continuous visibility into what is happening in the network but also are able to perform historical analysis and trend prediction. Continuous monitoring also means the organization is continually monitoring its risk posture.

Intrusion detection and prevention systems use three methods of detection, sometimes in combination with each other: signature or pattern-based detection, behavioral-based detection, and heuristic detection.

Logs and other data from across the infrastructure can be fed into an automated system that aggregates and correlates all of this information, known as a SIEM system. SIEM systems allow instant visibility into the security posture of the network through dashboards and complex queries.

Egress monitoring allows security personnel to detect malware attacks that may make use of botnets and cause hosts to attack each other or, worse, attack external networks not owned by the organization. Egress monitoring also allows the organization to detect data exfiltration through secure device rule sets and data loss prevention systems.

Log management means that administrators actually review logs to detect malicious events, poor network performance, or negative trends. Most modern log management is automated through SIEM systems.

We also revisited and expanded upon the basic concepts of threat modeling. Threat modeling goes beyond simply listing generic threats that could be applicable to any organization; threat modeling takes a more in-depth, detailed look at how specific threats may affect an organization’s assets and vulnerabilities. Threat modeling uses threat intelligence that is timely, relevant, and accurate, and that intelligence may come from a variety of threat feeds, such as open-source, closed-source, or proprietary sources. Various threat management and modeling methodologies exist, including STRIDE, VAST, PASTA, and many others.

Finally, we examined user and entity behavior analytics (UEBA), which looks for abnormal behavioral patterns from users, system accounts, and processes. These deviations of normal behavior patterns could indicate an issue with a user, the system, or an attack.

7.2 QUESTIONS

1. You are designing a new intrusion detection and prevention system for your company. You want to ensure that it has the capability to accept security feeds from the system’s vendor to allow you to detect intrusions based on known attack patterns. Which one of the following detection models must you include in the system design?

A. Behavior-based detection

B. Heuristic detection

C. Signature-based detection

D. Intelligence-based detection

2. You are a cybersecurity analyst who works at a major research facility. As part of the organization’s effort to perform threat modeling for its systems, you need to look at various proprietary intelligence feeds and determine which ones would be most likely to help in this effort. Which of the following is not an important characteristic of threat intelligence you should consider when selecting threat feeds?

A. Timeliness

B. Methodology

C. Accuracy

D. Relevance

3. Nichole is a cybersecurity analyst who works for O’Brien Enterprises, a small cybersecurity firm. She is recommending various threat methodologies to one of her customers, who wants to develop customized applications for Microsoft Windows. Her customer would like to incorporate a threat modeling methodology to help them with secure code development. Which of the following should Nichole recommend to her customer?

A. PASTA

B. TRIKE

C. VAST

D. STRIDE

7.2 ANSWERS

1. C Signature-based detection allows the system to detect attacks based on known patterns or signatures.

2. B Methodology is not a consideration in evaluating intelligence feeds. To be useful to the organization, threat intelligence should be timely, relevant, and accurate.

3. D STRIDE (Spoofing, Tampering, Repudiation, Information disclosure, Denial of service, and Elevation of privilege) is a threat modeling methodology created by Microsoft for incorporating security into application development. None of the other methodologies listed are specific to application development, except for VAST (Visual, Agile, and Simple Threat Modeling), but it is not specific to Windows application development.

Perform Configuration Management (CM) (e.g., provisioning, baselining, automation)

Perform Configuration Management (CM) (e.g., provisioning, baselining, automation)

Configuration management (CM) is a set of activities and processes performed by the change management program of an organization to ensure system configurations are consistent and secure. In this objective we will discuss topics directly related to CM, including provisioning initial configuration, maintaining baseline configurations, and automating the CM process.

Configuration Management Activities

Configuration management is part of the larger change management process. Change management is concerned with overarching strategic changes to the planning and design of the infrastructure, as well as the operational level of managing the infrastructure, while configuration management is more focused at the system level and is usually part of the tactical or day-to-day activities. Configuration management covers initial provisioning and ongoing baseline configurations of systems, applications, and other components, especially through automated processes whenever possible.

Cross-Reference

Configuration management is very closely related to patch and vulnerability management, covered in Objective 7.8, and change management, discussed in detail in Objective 7.9.

Provisioning

Just as user accounts are provisioned, as discussed in Objective 5.5, systems are also provisioned. In this context, however, provisioning is the initial installation and configuration of a system. Provisioning may require manual installation of operating systems and applications, as well as changing configuration settings to make sure that the system is both functional and secure. However, as discussed a bit later in this objective, automation can make provisioning a system far more efficient and ensure that the configuration meets its initial required baseline (discussed next).

Provisioning often uses baseline images, which are preapproved configurations that meet the organization’s requirements for hardware and software settings, to quickly deploy operating systems and software, cutting down the time and margin for error required to install a system.

Baselining

The default settings for most systems are unsecure and often do not meet the functional needs of the organization. Therefore, the initial default configuration and settings need to be changed to better suit the organization’s functional and security requirements. Baselining means ensuring that the configuration of a system is set according to established organizational standards and remains that way even throughout configuration and change processes. This doesn’t mean that the baseline for a system won’t sometimes change; baselines often change in an organization as system functions are changed, systems are upgraded, patches are applied, and the operating environment for the organization changes. Changing baselines is part of the entire change management process and must be approached with careful planning, testing, and implementation.

An organization could have several established baselines that apply to specific hosts. For example, an organization may have a workstation baseline that applies to all end-user workstations and a separate server baseline that applies to servers. It may also have baselines for network devices, and even mobile devices. The point here is that for a given device, the organization should have a baseline design that details the versions of operating systems and applications installed on the device, as well as carefully controlled configuration settings that should be standardized across all like devices.

All baseline configurations should be documented and checked periodically. There are automated software solutions, some of which are part of an operating system, that can alert an administrator if a system deviates from the baseline. Legitimate changes to baselines could be a new piece of software or even a patch that is applied to the host; these valid changes, once tested and accepted, then become part of the updated baseline configuration. It’s the nonstandard or unknown changes to the baseline that must be paid attention to, however, as these may come in the form of unauthorized changes or even malware.

Baseline configuration settings often include

• Standardized versions of operating systems and applications

• Secure configuration settings, including only allowed ports, protocols, and services

• Removal or change of default account and password settings

• Removal of unused applications and services

• Operating system and application patching

EXAM TIP You should keep in mind for the exam that baselines are critical in maintaining secure configuration of all systems in the infrastructure. Secure baselines include controlled versions of operating systems and applications, as well as their security settings. An organization may have multiple baselines, depending on the type of device in question.

Automating the Configuration Management Process

Most systems in the infrastructure are complex and have a myriad of configuration settings that must be carefully set in order to maintain their security and functionality. It would be impractical for administrators to manually set all of these configuration settings and expect to have time for any other of their daily tasks. Additionally, misconfigurations occur due to human error, and many of these configuration settings may render a system nonfunctional or not secure if not set correctly. This is where automation is fundamental in maintaining configuration baselines.

Hundreds of automated tools are available to assist with configuration management. Some of them are built into the operating system itself, and many others are free or third-party utilities that come with management software. For example, Windows Server includes Active Directory (AD), which has group policy settings that enable change management administrators to manage configuration baselines in an AD domain. Linux has its own built-in configuration management utilities as well. Additionally, organizations can use powerful customized scripts, such as those written in PowerShell or Python, as well as enterprise-level management systems. We will discuss many of the tools used to configure and maintain security settings in Objective 8.2. Using automated tools to perform configuration management can help reduce issues caused by human error, ensure standardization of configuration settings across the enterprise, and make configuration changes much more efficient.

Cross-Reference

Tool sets, software configuration management, and security orchestration, automation, and response (SOAR) are related to automating the configuration management process and are discussed in depth in Objective 8.2.

REVIEW

Objective 7.3: Perform Configuration Management (CM) (e.g., provisioning, baselining, automation) This objective reviewed configuration management processes. Configuration management is a subset of change management and is closely related to both vulnerability management and patch management. The provisioning process is where the initial installation and configuration of systems and applications occur. It’s important to establish a standardized baseline to use for devices across the organization, and there may be multiple baselines to address different types of devices. Baselines also change occasionally, as the environment changes or systems and applications change. Configuration management is made much more efficient and easier by using automated tools that can help reduce human error and ensure configuration baselines are maintained.

7.3 QUESTIONS

1. Your company is creating a secure baseline for its end-user workstations. The workstations should only be able to communicate with specific applications and hosts on the network. Which of the following should be included in the secure baseline for the workstations to ensure enforcement of these restrictive communications requirements?

A. Operating system version

B. Application version

C. Limited open ports, protocols, and services

D. Default passwords

2. Riley has been manually provisioning several hosts for a secure subnet that will process sensitive data in the company. These systems are scanned before being taken out of the test environment and connected to the production network. The scans indicate a wide variety of differences in configuration settings for the hosts that have been manually provisioned. Which of the following should Riley do so that the configuration settings will be consistent and follow the secure baseline?

A. Provision the systems using automated means, such as baseline images

B. Manually configure the systems using vendor-supplied recommendations

C. Back up a generic system on a network and restore the backup to the new systems so they will be configured identically

D. Manually configure the systems using a secure baseline checklist

7.3 ANSWERS

1. C Any open ports, protocols, and services affect how the workstation communicates with other applications on the network or other hosts. These should be carefully considered and controlled for the secure baseline. The other choices are also considerations for the secure baseline, but do not necessarily affect communicating with only specific applications or hosts on the network.

2. A Riley should use an automated means to provision the secure hosts; an OS image with a secure baseline could be deployed to make the job much easier and more efficient and ensure that the configuration settings are standardized.

Apply foundational security operations concepts

Apply foundational security operations concepts

In this objective we reexamine some foundational security concepts that we covered in previous domains, albeit in this objective from an operations context. These concepts include need-to-know, least privilege, separation of duties, privileged account management, job rotation, and service level agreements.

Security Operations

Security operations describes the day-to-day running of the security functions and programs. When you first learned about security theories, models, definitions, and terms, it may not have been clear as to how these things apply in the course of a security professional’s normal day. Now you are going to apply the fundamental knowledge and concepts you learned earlier in the book to the operational world.

Need-to-Know/Least Privilege

Two of the important fundamental concepts introduced in Domain 1, and emphasized throughout the book, are need-to-know and the principle of least privilege. These concepts ensure that entities do not have unnecessary access to information or systems.

Need-to-Know

Recall from previous discussions that need-to-know means that an individual should have access only to information or systems required to perform their job functions. In other words, if their job does not require access, then they don’t have the need-to-know for information, and by extension, the systems that process it. This limitation helps support and enforce the security goal of confidentiality. The need-to-know concept is applied operationally throughout security activities. Examples include restrictive permissions, rights, and privileges; the requirement for need-to-know in mandatory access control models; and the need to keep privacy information confidential.

A new employee’s need-to-know should be assumed to be the minimum required to fulfill the functions of their job. As time progresses, an individual may require more access, depending on changing job requirements and the operating environment. Only then should additional access be granted. Need-to-know should be carefully considered and approved by someone with the authority to do so; normally that might mean the individual’s supervisor, a data or system owner, or a senior manager. Need-to-know should also be periodically reviewed to see if the individual still has validated requirements to access systems and information. If the job requirements change or the operating environment no longer requires the individual to have the need-to-know, then access should be revoked or reduced.

Principle of Least Privilege

The principle of least privilege, as we have discussed in other objectives, essentially means that an individual should only have the rights, permissions, privileges, and access to systems and information that they need to perform their job. This may sound similar to the concept of need-to-know, but there is a subtle difference that you must be aware of for the exam. With need-to-know, an individual may or may not have access at all to a system or information. The principle of least privilege states that if an individual does have access to system or information, they can only perform certain actions. So, it becomes a matter of no access at all (need-to-know) or minimal access necessary (least privilege).

EXAM TIP Need-to-know determines what you can access. Least privilege regulates what you can do when you have access.

The principle of least privilege is applied at the operational level by only allowing individuals, ranging from normal users to administrators and executives, to perform tasks at the minimal level of permissions necessary. For example, an ordinary user should not be able to perform privileged administrative tasks on a workstation. Even a senior executive should not be able to perform those tasks since they do not relate to their duties.

Separation of Duties and Responsibilities

The concept of separation of duties (SoD) prevents a single individual from performing a critical function that may cause damage to the organization. In practice, this means that an individual should perform only certain activities, but not others that may involve a conflict of interest or allow one person to have too much power. For example, an administrator should not be able to audit their own activities, since they could conceivably delete any audit trails that record any evidence of wrongdoing. A separate auditor should be checking the activities of administrators.

Related to the concept of separation of duties are the concepts of multiperson control and m-of-n control, which require more than one person to work in concert to perform a critical task.

Multiperson Control

Multiperson control means that performing an action or task requires more than one person acting jointly. It doesn’t necessarily imply that the individuals have the same or different privileges, just that the action or task requires multiple people to perform it, for the sake of checks and balances.

A classic example of multiperson control is when an individual bank teller signs a check for over a certain amount of money, and then a manager or supervisor must countersign the check authorizing the transaction. In this manner, no single individual can use this method to steal a large amount of funds. A bank teller and bank manager could secretly agree to commit the crime, known as collusion, but it may be less likely because the odds of getting caught increase. Another example would be a situation that requires three people to witness and sign off on the destruction of sensitive media. One person alone can’t be empowered to do this, since assigning only one person to be responsible for destroying the media could allow that person to steal the media and claim that they destroyed it. But assigning three people to witness the destruction of sensitive media would reduce the possibility of collusion and reduce the risk that the media was destroyed improperly or accessed by unauthorized individuals.

M-of-N Control

M-of-n control is the same as multiperson control, except it doesn’t require all designated individuals to be present to perform a task. There may be a given number of people, “n,” that have the ability to perform a task, but only so many of them (the “m”) are required out of that number. For example, a secure piece of software may designate that five people are allowed to override a critical financial transaction, but only three of the five are necessary for the override to take place. This means that any three of the five people could input their credentials signifying that they agree to an override for it to take place. This doesn’t necessarily imply that they all have different rights, privileges, or permissions (although in the practical world, that is often the case); it could simply mean that a single person alone can’t make that decision.

Note that separation of duties does not require multiperson control or m-of-n control. An individual can have separate duties and perform those tasks daily without having to work with anyone. Multiperson or m-of-n control only comes into play when a task must be completed by multiple people working together at that moment or in a defined sequence to make a decision or complete a sensitive or critical task.

Privileged Account Management

Privileged accounts require special care and attention. In addition to carefully vetting individuals before assigning them higher-level accounts with special privileges, those accounts should be approved by the management chain. The individual should have the proper need-to-know and security clearance for a privileged account, as well as additional training that emphasizes the special procedures for safeguarding the account and the potential dire security consequences of failing to do so. Note that it’s not only administrators who receive accounts with higher-level privileges; sometimes users receive accounts with additional privileges for legitimate business reasons.

Once a privileged account has been granted to an individual, they should also be carefully scrutinized for the correct authorizations. Even with a privileged account, the principles of separation of duties and least privilege still apply. Having a privileged account is not an all-or-nothing prospect; the account should still have only the privileges necessary to perform the functions related to the individual’s job description, and no more. Having a privileged account also does not mean that the individual has access to all resources and objects. There still may be sensitive data that they do not need to access, which falls under the principle of need-to-know, as mentioned earlier.

EXAM TIP Even privileged accounts are still subject to the principle of least privilege; not every privileged account requires full administrator privileges over the system or application. Privileged accounts can still be assigned only the limited rights, privileges, and permissions required to perform specific functions.

Individuals with privileged accounts should only use those accounts for specific privileged functions, and for only a limited amount of time. They should not be constantly logged into the privileged account, since that increases the attack surface for the account and the resources they are accessing. Privileged account holders should also maintain a routine user account and use it for the majority of their duties, especially for mundane tasks such as e-mail, Internet access, and so on. Using the methods described in Objective 5.2, the organization should employ just-in-time authorization; that is to say, the privileged account should only be used when and if necessary, and then the individual should revert to their basic account.

Cross-Reference

Just-in-time identification and authorization was discussed in Objective 5.2 and described the use of utilities such as sudo and runas to affect temporary privileged account access.

Privileged account management also lends itself to role-based authorization. Rather than granting additional privileges to a user account, security administrators can place the user in a role that allows additional privileges. Their membership in that role group should require that their account be audited more frequently and to a greater level of detail. This approach might be a better alternative than granting a user a separate privileged account if the majority of that user’s daily job requirements necessitate use of the additional privileges. Again, the key here is frequent reviews and management approval for any additional privileges and system or information access.

Job Rotation

An organization with a job rotation policy rotates employees periodically through various positions so that a single individual is not in a position sufficiently long to conduct fraud or other malicious acts to a degree that could substantially impair the organization’s ability to continue operations. Job rotation serves not only as a detective control but also as a deterrent control, because employees know that someone else will be filling their job role after a certain period of time and will be able to discover any wrongdoing. Implementing a job rotation policy in larger organizations usually is easier than in smaller organizations, which may lack multiple people with the necessary qualifications to perform the job. Even when there is no suspicion of malicious acts or policy violations, it can be difficult to rotate someone out of a job position for normal professional growth and development since they may be so ingrained in that role that no one else can do their job. This is why planned, periodic cross-training and leveraging multiple people to understand exactly what is involved with a particular job requirement is necessary. The organization should never depend on one person only to perform a job function; this would make it very difficult to rotate a person out of that position in the event they were suspected of fraud, theft, complacency, incompetency, or other negative behaviors, let alone the critical need for having someone trained in a position for business continuity in the event an individual came to harm or departed the organization for some reason.

Mandatory Vacations

Somewhat related to job rotation is the principle of mandatory vacations—forcing an individual to take leave from a job position or even the organization for a short period of time. Frequently, if an individual simply has been performing the job function for a long period of time without a break, company policy may require that they take vacation time for rest and rehabilitation. Usually, this part of the policy allows an individual to be away from the organization for an allotted number of vacation days annually, whenever they choose. This is likely one of the more positive aspects of a mandatory vacation policy.

However, a mandatory vacation policy can also be used to force someone who is suspected of malicious acts to step away from the job position temporarily so an investigation can occur. You will often see this type of action in the news if someone in a position of public trust, for example, is suspected of wrongdoing. People are often placed on “administrative leave,” with or without pay, pending an investigation. This is the same thing as a mandatory vacation. The individual may be allowed to return to their duties after the investigation completes, or they may be reassigned or even terminated from the organization.

CAUTION Organizations that actively use a mandatory vacation policy should also have, by necessity, some level of cross-training or job rotation as part of their policy and procedures.

Service Level Agreements

A service level agreement (SLA) exists between a third-party service provider and the organization. Third parties offer services, such as infrastructure, data management, maintenance, and a variety of other services, that can be provided to the organization under a contract. Note that third-party services can also include those offered by cloud providers. Service level agreements impact the security posture of an organization by affecting the security goal of availability, more often than not, but can also affect data confidentiality if a third-party has access to sensitive information. Poor performance of services can impact the organization’s efficient operations, performance, and security, so it’s important to have agreements in place that ensure consistent levels of function and performance for those third-party provided services.

In addition to the legal contract documentation that will likely be included in a contract with a third-party service provider, the SLA is critical in specifying the responsibilities of both parties. This document is used to protect both the organization and the third-party service provider. The SLA can be used to guarantee specific levels of performance and function on the part of the third-party service provider, as well as delineate the security responsibilities between the customer and the provider with regard to protecting systems and data. Failing to meet SLA requirements often incurs a financial penalty.

REVIEW

Objective 7.4: Apply foundational security operations concepts In this objective we reviewed several foundational security operations concepts, including need-to-know, least privilege, separation of duties, privileged account management, job rotation, and service level agreements. Each of these concepts has been discussed in at least one previous objective, but here we framed them in the context of security operations.

Need-to-know means that an individual does not have any access to systems or information unless their job requires that access. Contrast this to the principle of least privilege, which means that once granted access to a system or information, an individual should only be allowed to perform the minimal tasks necessary to fulfill their job responsibilities. Separation of duties means that one individual should not be able to perform all the duties required to complete a critical task, thereby preventing fraudulent or malicious activity absent the collusion of two more people. This is also further demonstrated by the concepts of multiperson control and m-of-n control, which require at least a minimum number of designated, authorized individuals present to approve or perform a critical task.

Privileged account management requires that any individual having privileges above a normal user level should be vetted and approved by management for those privileges. Privileged accounts granted to these individuals should not be used for routine user functions, but only for the privileged functions they were created to perform. Privileged accounts should also be reviewed periodically to ensure they are still valid.

Job rotation is used to replace an individual in a job function periodically so that the person’s activities can be audited for any malicious or wrongful acts. This is similar to mandatory vacations, which is only temporary and usually implemented while an individual is under investigation.

Service level agreements are used to protect both a third-party service provider and the organization by specifying the required performance and function levels in the contract, including security, for each party.

7.4 QUESTIONS

1. Which of the following is the best example of implementing need-to-know in an organization?

A. Denying an individual access to a shared folder of sensitive information because the individual does not have job duties that require the access

B. Allowing an individual to have read permissions, but not write permissions, to a shared folder containing sensitive information

C. Requiring the concurrence of three people out of four who are authorized to approve a deletion of audit logs

D. Routinely reassigning personnel to different security positions that each require access to different sensitive information

2. Audit trails for a sensitive system have been deleted. Only a few people in the company have the level of training and privileged access required to perform that action. Although a particular person is suspected of performing the malicious act, all people who have access must be removed from their position, at least on a temporary basis, during the investigation. Which of the following does this action describe?

A. Separation of duties

B. Job rotation

C. Mandatory vacation

D. M-of-n control

7.4 ANSWERS

1. A Need-to-know is typically a deny or allow situation; denying access to a shared folder containing sensitive information that the user does not require for their job duties is based on need-to-know.

2. C Since only a few people have that level of access, they must all be temporarily removed from their positions during the investigation and placed on administrative leave, a form of mandatory vacation. Job rotation is not an option if there are only a few people who can perform the job function and they are all under investigation.

Apply resource protection

Apply resource protection

We have discussed protecting resources throughout this entire book, but in this objective we’re going to focus specifically on one area we have not previously addressed—media management and protection. Media is often associated with backup tapes, but it also includes hard drive arrays, CD-ROM and Blu-ray discs, storage area networks (SANs), network-attached storage (NAS), and portable media such as USB thumb drives, regardless of whether they are local or remote storage. This objective focuses on managing the wide variety of media and the specific security measures used to protect it.

Media Management and Protection

Regardless of the type of media your organization is using, you should carefully consider several key protection activities related to media management. These include administrative, technical, and physical security controls. Each type of control is applied to protect media from unauthorized access, theft, damage, and destruction. We’ll discuss some of these controls in the upcoming sections.

Media Management

Media management primarily uses administrative controls, such as policies and procedures, associated with dictating how media will be used in the organization. Management should create a media protection and use policy that outlines the requirements for proper care and use of storage media in the organization. This policy could also be closely tied to the organization’s data sensitivity policy, in that the data residing on media should be protected at the highest level of sensitivity dictated by the policy.

Media management requirements detailed in the policy should include

• All media must be maintained under inventory control procedures and secured during storage, transportation, and use.

• Proper access controls, such as object permissions, must be assigned to media.

• Only authorized portable media should be used in organizational systems, and portable media must be encrypted.

• Media should only be reused if sensitive data can be adequately wiped from it.

• Media should be considered for destruction if it cannot be reused due to the sensitivity of data stored on it.

Media Protection Techniques

Media management sets administrative policy controls for the use, storage, transport, and disposal of various types of media in the organization. It also dictates the practical controls expected for those activities. Protection techniques for media include technical and physical controls implemented during access, transport, storage, and disposal.

Media Access Controls

Media should be treated with care and handling commensurate with the level of sensitivity of data stored on the media. This again should be in accordance with the data sensitivity policies determined by management. These controls include

• Sensitive data stored on media should be encrypted.

• Access control permissions granted to authorized users should be based upon their job duties and need-to-know; the principles of least privilege and separation of duties should also be included in these access controls.

• Strong authentication mechanisms required to access media must be used even for authorized users.

Media Storage and Transportation

Physical controls are the primary type of control used to secure media while it is stored and during transport to prevent access by unauthorized personnel. Physical controls for media storage and transport must include

• Secure media storage areas (e.g., locked closets and rooms)

• Physical access control lists of personnel authorized to enter media storage areas

• Proper temperature and humidity controls for media storage locations

• Media inventory and accountability systems

• Proper labeling of all media, including point-of-contact information (i.e., data or system owner), sensitivity level, archival or backup date, and any special handling instructions