C H A P T E R 12

Evolutionary Data Modeling

I worked for Lockheed right after college. In my first year, I was part of a team assigned to evaluate Manufacturing Resources Planning (MRP) methods and tools. I was familiar with MRP; it had been part of the required curriculum for my bachelor's degree, plus I had some prior experience with MRP systems. However, MRP was a very new idea at Lockheed, and it was the antithesis of the philosophy behind the existing systems. My boss and mentor, Skip Christopherson, had been at Lockheed more years than I'd been on the planet and he knew every aspect of Lockheed's scheduling system, down to the very smallest detail. You attended classes in MRP together after work and it was like a classic comedy scene with the smart-mouthed rookie breezing through the material while the grizzled older employee who actually knows how things work struggles to grasp the point of this new fangled technology with all its bells and whistles. At the end of the class each night, you'd walk back to our windowless office while Skip talked about all that was wrong with this inane idea that a system could process changes and regenerate the schedule every night without throwing the assembly line into utter chaos. I'd been learning about how Lockheed approached scheduling at the same time you were taking the MRP class and I'd begun to understand and appreciate how it worked. Skip was right: MRP was exactly opposite our process flow model, which was based on math, statistics, and learning curves. Using the knowledge gained from decades of successful aircraft programs, you calculated the boundaries for the specific phases of the assembly process and tied them to positions on the shop floor. These positions and dates created a window in time for each crew to contain their contribution to the assembly. Within those boundaries, parts and schedules might shuffle madly while the crew worked insane hours, but the men on line knew that meeting the scheduled move date for the next position was critical and they'd learned to make it happen no matter what. The plane always moved forward, even if an assembly crew three positions further down the line had to rip out four positions worth of work to add a fabricated part that hadn't been available when it was needed.

As our class got more intense, Skip's concerns about MRP heightened and his after-class rants got more animated. He knew everything the assembly line went through to make their schedules now. If the schedules changed nightly, how could workers possibly keep up with the influx of information, much less the actual parts and planes? One night, I considered my words carefully before I expressed what I was thinking to my mentor. Once I had worked up my courage, I said, “Skip, the schedule is already changing every day. The only difference is that you'll be able to see it if it's it the system.” He suddenly got very quiet and that was the last time I ever heard him complain about MRP. Later he went on to champion the MRP system implementation and became an expert in both the technology and the implementation process.

Change happens, whether you're building airplanes or software. You have a choice: you either manage it or you get blindsided.

Lessons from Two Decades of System Development

I met Skip in 1989 when he interviewed me on recruitment trip to my college and he taught me many things in the time I worked for him. Since that first post-college job, I've been a contributor on many different kinds of software system projects. Some of them were small enough that I could design and build the entire application myself, while others involved global teams with hundreds of people. Most of the projects I've been involved with were complex, and every one of them was expected to meet an expedited design and implementation schedule. In spite of the differences, there have been some basic realities of system development that were always the same.

- Customers don't know what they want until they see it. Only once they see what can be done with their data will they begin to understand the real possibilities of a new application. This is also the point in time when they will begin to shower the project with change requests. If you want to build an exceptional product, the last thing you want to do is shut down their ideas by refusing to change.

- To paraphrase Gregory House, MD, customers lie. Ask a customer to describe their process, and most of the time, they will answer with the documented version of the process. Customers don't intend to mislead us, but the ISO certification process has taught them to answer questions about process by describing the currently documented steps. However, many processes are flawed and there are likely to be additional steps that may not be included in the written documentation. Watch what a customer does and you will see the real process. When you see the steps that are not in the documentation, that's where you will find the best opportunities to build an innovative product.

- If you build a system that makes a user's job easier, they will use it and demand more: more options, more functionality, and more performance. Build an application that creates work without providing sufficient benefit, and users will work around the application, using it in ways that were never intended or avoiding it all together. This is a perfectly reasonable way for them to react: users have a job to do and the application and code that is so important to us as the creators of the application may only be a small part of the users' workday. When users have to work around an application to get their job done, more bugs will be reported and data will become inaccurate over time. You need to remember as database and software developers that your customers are not as fascinated with the details of the technology as you are. Users need tools that work consistently and predictably.

- If you cannot provide a tool or a product quickly, someone else will. This applies to both the end product and the development environment it is built in. If a product is late to market, potential customers will find another solution and another vendor. If the database is not available to support the application developers, they will find another solution to get their job done. Even if that solution does not work as well as it might have using the database, it can be very difficult to remove or optimize it later. Usually, such solutions have long ranging repercussions in application functionality, performance, or both.

Database management systems excel at storing, searching, and transforming data. When combined with a well-designed data model, they will perform each of these tasks faster than any application method. Here is the challenge: a database system must be well designed to perform and to continue to perform even as it is placed under increasing demands of more users or more data. It takes time to design a database system that will scale to support these demands. When shortcuts are taken in the design process, the database system will fail to meet performance requirements; this usually leads to a major redesign or hardware expenditure. Both of these options represent waste and inefficiency: the redesign requires extra time and effort, and even if the hardware is able to bring the system up to acceptable performance levels, think of how much more capable the system could have been with a good design AND powerful hardware.

This all sounds quite discouraging: if customers don't know what they want, don't accurately describe their processes, and will misuse a tool to minimize their workload, software developers and the applications they build seem doomed to fail. You can't create a good design up front because you don't have a complete picture of what the application and data will eventually become, and even if you did have complete and accurate information about the final requirements at the beginning of the project, you don't have time for data modeling and system design when the competition is aiming for the same customer base. Yet if the database design is flawed, your users will be unhappy with system response time and your project will be plagued with slow response time and system crashes.

There is another option. You can do something revolutionary: you can recognize that change is inevitable, prepare for it and manage it. If you expect change, or better yet, encourage it and learn from it, you open up the possibility that you will create exactly what the customer needs. If you watch the end user perform their work rather than ask them to describe it, you will find the pain points in the current process and you may be able to remove those pain points easily. This gives you an opportunity to truly innovate and build tools your users need but no one has thought of. If you build your applications with customer involvement, getting new functionality in their hands early in the process, requesting feedback and then using that feedback to improve the application early in the development process, the result is a collaborative process and a better product. If you watch the customer use the tools you build, you will find even more ways to improve your products. This is called iterative design and I believe it leads to better products, happier customers, and a more satisfying work environment for software developers.

The Database and Agile Development

Iterative development is not a new concept: there are a multitude of books and experts covering software development and iterative design. However, when it comes to the database, there is little useful information about how to manage iterative database design. At the same time, more and more application databases are created without an adequate database design. Whether it is the conceptual, logical, or physical design, the one common thread is that intentional design is absent in far too many cases. This leads to a far-too-common end result: the database and application do not perform to expectations. The application might pass functional test requirements, but once it is placed under the expected user load, performance may be far from acceptable. While it is possible to make a poorly designed application perform better, to build a database that will continue to perform to expectations under an increasing number of concurrent users with an ever-growing amount of data, you MUST have a well designed schema. You may be able to add more hardware resources and improve performance, but think about it. What portion of those hardware resources are simply compensating for a bad design? And how much more could you get out of your hardware investment if the design flaws didn't exist? When the schema is flawed, the amount of rework required in the application code increases. This creates a very big problem as the database schema remains frozen in time while the application functionality continues to expand. As change requests continue to flow in and additional fields are tacked on to the already problematic schema design, performance problems become increasingly difficult to repair.

The net result of these kinds of problems is that architects and developers have come to the conclusion that the solution is to put as little functionality in the database as possible. Perhaps this is understandable: because they've learned to see database changes as painful, they attempt to avoid the pain by avoiding the database. They even avoid using the database for the tasks the database does best because they are afraid they may have to change it someday. The desire to be database agnostic adds to the problem as some of the best features and functions in the DBMS go unused. The unfortunate and somewhat ironic result is that a very expensive DBMS sits idle while teams of workers struggle to recreate functionality that is available and paid for under their existing license. Choosing to license a powerful product and then design the application as if you'd purchased the lowest common denominator is one of the most expensive mistakes a development team can make. This is true whether the DBMS is Oracle, MySQL, Postgres, or SQL Server. Good developers are hard to find. Why spend their time recreating what has already been built by another company and purchased by yours? If the goal is to build a competitive product, one that will give the customer what they need while maximizing the return on our investment, you should be fully exploiting every last useful feature available in the DBMS platform you've purchased.

Thus far, I've listed the contradictory goals for software development projects: you need to build it fast, you need to proceed before you have sufficient knowledge, you need to design to support the long term performance goals and those performance goals are probably unknown as well. If you don't have a product yet, how do you know how many users you will attract someday? If you create something amazing and innovative, you will have more users than you ever thought possible in a much shorter time frame than you expected. Then your enthusiastic users will promptly overload the system, resulting in one of those front-page stories about the latest software product experiencing a very public failure. You need to aim for stability and yet encourage change through an iterative design process. You want your systems to be transparent and simple to use so users are able to do their work without having to relearn the steps every time they connect, but you must recognize that your understanding of the user's work may be inaccurate. Considering all the potential ways a project can fail to meet these challenges, how do software projects ever succeed? The one thing you can't do is turn back the clock to times when development didn't move so fast. Instead, you must expect that designs will change, and equip database administrators, database developers, and application developers to manage today's change while remaining focused on the longer term impacts these changes will have on the system as a whole.

![]() Note In this chapter, there will be references to database development and application development. Both of these labels can refer to development of the same application, and one developer may be responsible for both layers of development. My intention for this chapter is to differentiate between the code that accesses and processes the data, and the code that builds the tool to present the data to the end user, not to suggest that an additional organizational layer or group of people is required.

Note In this chapter, there will be references to database development and application development. Both of these labels can refer to development of the same application, and one developer may be responsible for both layers of development. My intention for this chapter is to differentiate between the code that accesses and processes the data, and the code that builds the tool to present the data to the end user, not to suggest that an additional organizational layer or group of people is required.

Evolutionary Data Modeling

In spite of the challenging picture I've painted, I believe it is possible to achieve your goals—but it's not by doing less in the DBMS. The best way to create both an agile development environment and a truly agile application database is by coding more functionality inside the database and exploiting the DBMS for the kind of work it does best. Your database designs need to evolve and adapt to the changing needs of the development team, the customer, and the end users. You need to walk a fine line of building what is needed now, while keeping your eyes on the future and preparing for it. This is why I prefer the term evolutionary data modeling to iterative data modeling. The term iterative implies that the design cycle is repeated throughout the development process: this is a good start but it's not enough. Evolutionary also implies ongoing change, but with evolution, each change builds on the foundation created thus far. When the design evolves, you recognize that it should be capable of extending to meet needs and requirements that are not fully defined. You still don't want to build functionality before it is required, but neither should you make choices that limit options in the name of YGANI (You Ain't Gonna Need It). Some design decisions you make create more options for future design phases, while others remove options and limit the potential solutions in the next round of development. Until you are certain about what is coming next, make the choice that keeps as many options open as possible.

![]() Note To the best of my knowledge, the term “Evolutionary Data Modeling” originated with Scott W. Ambler on his website at

Note To the best of my knowledge, the term “Evolutionary Data Modeling” originated with Scott W. Ambler on his website at agiledata.org. He also coauthored a book, Refactoring Databases: Evolutionary Database Design, which includes some excellent methodologies for Agile development. The approach described in this chapter for achieving an easily refactorable database differs from any recommendation I've seen by Mr. Ambler to date.

For example, the database for one development project had been loaded from a .csv file provided by the customer. About half of the columns in the file did not have a defined use in the first round of functional specifications. The development team was divided into two schools of thought as to what should be done with the extraneous fields. One side stated that all the columns that were not required should be removed as they were not being actively used. The other side recognized that although these fields were not in use, the values had a clear relationship to the data values that were central to the application. They thought the fields might have value in helping the team better understand the required data and how it was processed by the end users.

The unnecessary fields were retained and although they appeared to be parent values of the data in a central table, the solution was to leave the fields as attributes so the relationships could be further evaluated without creating work, and eventually rework, for the database developers. This decision broke several rules of traditional data modeling: the table contained unnecessary data and some of the data in the schema was not normalized. Since the central table was still small and reasonably narrow, the impact of the rule breaking was negligible. A few releases later, the customer began to make references to these unused fields, which confirmed the previously suspected relationships. Gradually, those data values were incorporated into the application as new functionality was requested, with some data values moving to parent tables while others were left as attributes. Removing the data too soon would have limited the team's options. Retaining the data could have created rework if the data had been formally incorporated into the schema too early. Keeping the data in form that minimized the impact on the application while leaving the door open for later use was the better decision in this case.

In the next section, you'll see code that illustrates a simple schema change and how such changes can ripple through an average development environment. Next, you'll examine why using PL/SQL as the API supports evolutionary design by making it easier to refactor the database design without impacting other application components. Later, you'll review some agile development concepts and discuss how evolutionary data modeling and a PL/SQL API supports the values and principles of agile development.

Refactoring the Database

You will use the Human Resources (HR) schema from Oracle's sample schemas to illustrate a simple change to a database schema and demonstrate how those changes can have an unexpected impact on other functionality within the application. The default HR schema as delivered by Oracle contains one phone number field in the employee table. The customer has requested that the application be modified to store and display both an office and a mobile phone number for each employee. Screens and reports will need to be changed, as some will continue to require one specific phone number while others will need to utilize both phone numbers. For example, the company directory may show both the employees' desk and mobile numbers, while a facilities report only needs the desk numbers.

The original structure of the HR schema is shown in Figure 12-1. You need to determine where to store the new data and how to present the information to the user. You should consider multiple plausible solutions and decide which one (or ones) should be tested.

Figure 12-1. Human Resourse schema, unchanged

A common solution to this kind of request would be to add an additional column to the EMPLOYEES table (EMPLOYEES.MOBILE_NUMBER). While this may resolve the initial requirement, there are better ways to solve the problem as this approach only addresses the immediate need. What happens when HR decides they need the employee's home number as well? You could continue to add columns but if you solve every request for new data by adding a column to the end of the EMPLOYEES table, your table will soon be very wide with sparsely distributed data. This first option is also a relatively limited solution. What if an employee works from two different offices and has two different office numbers? What if one employee prefers to be reached on their mobile while another is easier to contact at the office, and the department administrator needs a report of all the employees and the best way to reach them? You could add another column to store an indicator of the kind of phone the employee prefers and then have the application loop through the data pulling the office number for some employees and the mobile number for others. This type of design process is a good example of how performance problems get started. Columns continue to get tacked on to tables and more application code is needed to process the data in those columns. Eventually, the tables grow very wide, code to access the data becomes increasingly complex as code outside the database does more of the sorting and grouping that should be in the DBMS, and performance starts its downward spiral.

A better solution is to create another table that will store multiple phones number and use the EMPLOYEE_ID to link back to the EMPLOYEES table. This approach offers a few advantages over the first proposal: the database will not artificially limit the number of phone numbers that can be stored for each employee, plus you can store additional attributes about the phone numbers such as phone type and preferred phone.

Listing 12-1 shows how to add a table to store the employee ID, phone numbers, phone types, and an indicator of which phone number is the employee's preferred method of contact. This code will create the new table and populate it from the existing table. All phone numbers will be set to a default type “Office” and the preferred indicator is set to “Y”.

Then you'll populate the new table using the data from the EMPLOYEES table. Since all employees have had only one phone in the system, you can set the default phone type and the preferred indicator to the same values for all employees.

Listing 12-1. Create Table and Populate EMP_PHONE_NUMBER

SQL> CREATE TABLE emp_phone_numbers

(employee_id NUMBER(6) NOT NULL,

phone_number VARCHAR2(20) NOT NULL,

preferred VARCHAR2(1) DEFAULT 'N' NOT NULL,

phone_type VARCHAR2(10) NOT NULL,

CONSTRAINT emp_phone_numbers_pk

PRIMARY KEY (employee_id, phone_number)

) ;

Table created.

SQL> alter table EMP_PHONE_NUMBERS add constraint emp_phone_numbers_fk1

foreign key (employee_id)

references EMPLOYEES (employee_id) ;

Table altered.

SQL> insert into emp_phone_numbers (

select employee_id, phone_number, 'Y', 'Office' from employees) ;

107 rows created.

SQL> commit;

Commit complete.

Some approaches to database refactoring suggest that you should keep the old column in the database, populating both data points until you are certain all references to the old column have been resolved. Anytime you store the same data in multiple places in a database, you run the risk that the data will get out of sync. Then you need to reconcile it, determine which values are correct, and repair the data. In a real HR database, data reconciliation and repair can be a huge effort. There are options to minimize the risk of such issues, but they usually involve duplicating the data from one source to the other, which may not prevent the target column from being updated independently. If all of the changes to the database require maintaining multiple data sources, even temporarily, the net result is a development environment that is very difficult to manage. This approach becomes even more complex when you realize that the typical development team is responding to multiple change requests at any given time. As the developers are making other change requests, will they have to build their new code to support both the old and the new field? And how long might these leftover data values remain in the system? The key to good performance is to eliminate unnecessary work: this is as true for processes as it is for systems. If the development team is going to be agile, you must minimize the amount of work they need to do. Maintaining multiple code paths for weeks, months, or years is not the best use of your development resources.

The changes required to update a query to use the new table are simple: you add another table to the from clause and connect the EMPLOYEES table and the EMP_PHONE_NUMBERS table via the EMPLOYEE_ID in the where clause. If the application has been written with SQL calls directly in the application code layer (Java, PHP, etc) then queries like the first one shown in Listing 12-2 could be found throughout the application code in every module that references basic employee information. Listing 12-2 shows a query to get employee information including the employee's phone number before the change to the table design, and how that query would be changed to request the same data after the table changes were complete.

Listing 12-2. Employee Data Request

SQL> select dept.department_name,

loc.street_address,

loc.city,

loc.state_province,

loc.postal_code,

cty.country_name ,

emp.employee_id ,

emp.last_name,

emp.first_name,

emp.email,

emp.phone_number

from departments dept,

locations loc,

countries cty,

employees emp

where dept.department_id = &DepartmentID

and dept.location_id = loc.location_id

and loc.country_id = cty.country_id

and dept.department_id = emp.department_id

order by dept.department_name, emp.last_name, emp.first_name ;

SQL> select dept.department_name,

loc.street_address,

loc.city,

loc.state_province,

loc.postal_code,

cty.country_name ,

emp.employee_id EMP_ID,

emp.last_name,

emp.first_name,

emp.email,

epn.phone_number

from departments dept,

locations loc,

countries cty,

employees emp,

emp_phone_numbers epn

where dept.department_id = &DepartmentID

and dept.location_id = loc.location_id

and loc.country_id = cty.country_id

and dept.department_id = emp.department_id

and emp.employee_id = epn.employee_id

order by dept.department_name, emp.last_name, emp.first_name ;

Since only one phone number is currently populated for each employee, the result sets for the Purchasing Department are exactly the same for both queries. At this point, either query will return the data shown in Listing 12-3.

Listing 12-3. Abbreviated Employee Data for Department 30 (Purchasing)

DEPT_NAME EMP_ID LAST_NAME FIRST_NAME EMAIL PHONE_NO

Purchasing 114 Raphaely Den DRAPHEAL 515.127.4561

Purchasing 115 Khoo Alexander AKHOO 515.127.4562

Purchasing 116 Baida Shelli SBAIDA 515.127.4563

Purchasing 117 Tobias Sigal STOBIAS 515.127.4564

Purchasing 118 Himuro Guy GHIMURO 515.127.4565

Purchasing 119 Colmenares Karen KCOLMENA 515.127.4566

![]() Note To keep the examples readable, results sets may be edited to shorten column names and/or to remove data that is not significant to the example. Results that are not critical to the example may not be shown.

Note To keep the examples readable, results sets may be edited to shorten column names and/or to remove data that is not significant to the example. Results that are not critical to the example may not be shown.

The changes in the table structure create several potential problems for your application, problems that are likely to impact the user interface and generate new bug reports. Resolving these issues will require feedback from the functional side of the business, as you need to know how the customer wants the application to behave. The following are a few questions you need answered:

- How does the customer expect to input employee phone number data? The answer to this question will help you determine your options for keeping the two data sources synchronized until you are sure it is safe to remove the original column.

- Should you add the phone type and preferred flag to the queries in question? Having this information in the query will give you more options for your screens and reports.

- Which queries need to return one phone number per employee, and which queries should return multiple phone numbers? Once the employee's mobile numbers are in the system, the results sets from the queries in Listing 12-2 will NOT be identical, as the original query would only return one row, while the new query could return multiple rows for one employee.

- If there are views built on queries like these, will adding additional fields to the views break the application? If someone has used a

Select * from EMPLOYEES_BY_DEPT_VWto request the data, it will.

Once you've answered these questions, you need to confirm the same information for all queries or updates that reference the EMPLOYEES table and use the phone number data. Otherwise, your small change may impact the application front end in places not intended by the customer's initial request.

There may be many similar but slightly different queries within your application: a different column order, different result sets due to different where clauses, different selections of included columns. Every piece of code that uses the original phone number field needs to be found, updated, and tested. Keeping the old column and synchronizing the data will give you more time to find all the code that needs correction, but all that work still needs to be done.

The single, biggest challenge in refactoring a database schema is finding and changing all of the components that reference the tables you alter. If the developers have been diligent, perhaps they've built reusable modules to handle requesting EMPLOYEE data and you will have less code to find. But can you really be sure that someone hasn't written a small but unknown query or update that will fail when the table structure changes? In this example, you've only considered one select statement. In a real HR system, there will be extensive application code to add employees, change employee data, and produce reports that use the phone number data. Looking at the schema diagram in Figure 12-1, you'll notice there is a circular reference, as the employee's manager is identified in the employee record by the manager's employee ID. Queries, updates, and reports that include employee and manager phone numbers may require more complex changes. And just to make things really interesting, the Order Entry schema also makes use of the EMPLOYEES table, using the EMPLOYEE_ID to indicate the sales representative that made the sale so your very small changes now impact multiple schemas and multiple application modules. When you think of how simple this schema is, and imagine how complex most data stores can be, the fear created by the mention of a schema change becomes a little more understandable.

Creating an Access Layer via PL/SQL

What if you created your modular, reusable code in the database itself, so that all access to your application data was encapsulated within PL/SQL packages, procedures, and functions? The query referenced in Listing 12-2 would instead be incorporated into a procedure as shown in Listing 12-4. Listing 12-5 shows the code to execute that procedure, and Listing 12-6 shows the results from an example execution. This exercise creates a procedure that can be called by components outside the database to return the same data that is returned by the first query in Listing 12-2.

Listing 12-4. Procedure to Return Employee Data by Department

CREATE OR REPLACE PROCEDURE GetEmployeeData(

p_dept_id IN NUMBER,

p_emp_cursor OUT SYS_REFCURSOR,

p_status_code OUT NUMBER,

p_status_message OUT VARCHAR2) IS

/********************************************************************************

NAME: GetEmployeeData

PURPOSE: This procedure returns contact information for employees by department

NOTES:

p_status_code status_message

============= ======================================================

0 Success

-20102 Input parameter p_dept_id must not be null.

***************************************************************************************/

BEGIN -- GetEmployeeData

--validate parameters

IF ((p_department_id) IS NULL ) THEN

RAISE_APPLICATION_ERROR(-20102, Input parameter p_dept_id must not be null.',TRUE);

END IF;

OPEN p_emp_cursor FOR

SELECT

dept.department_id,

dept.department_name,

loc.street_address,

loc.city,

loc.state_province,

loc.postal_code,

cty.country_name,

CURSOR (select emp.employee_id,

emp.last_name,

emp.first_name,

emp.email,

emp.phone_number

from employees emp

where emp.department_id = dept.department_id) emp_cursor

FROM

departments dept, locations loc, countries cty

WHERE

dept.department_id = p_dept_id

AND dept.location_id = loc.location_id

AND loc.country_id = cty.country_id

ORDER BY 2,3;

p_status_code:= 0;

p_status_message:= NULL;

EXCEPTION

WHEN OTHERS THEN

p_status_code:= SQLCODE;

p_status_message:= SQLERRM;

END GetEmployeeData;

/Listing 12-5. Procedure Execution Script

set serveroutput on

var p_main_cursor REFCURSOR

DECLARE

v_dept_id departments.department_id%TYPE;

v_status_code NUMBER;

v_status_message VARCHAR2(200);

t0 timestamp;

t1 timestamp;

BEGIN

t0:= systimestamp;

v_dept_id := &Department_ID;

GetEmployeeData

(v_dept_id, :p_main_cursor, v_status_code, v_status_message);

t1:= systimestamp;

DBMS_OUTPUT.PUT_LINE('Code=' || v_status_code || 'Message=' || v_status_message);

DBMS_OUTPUT.PUT_LINE('ela=' || (t0 - t1) );

END;

/

print :p_main_cursor

Listing 12-6. Procedure Execution and Results Set

SQL> @exec_GetEmployeeData.prc

Enter value for department_id: 30

old 13: v_dept_id := &Department_ID;

new 13: v_dept_id := 30;

status_code=0 status_message

ela=-000000000 00:00:00.000091000

PL/SQL procedure successfully completed.

DEPT_ID DEPT_NAME STREET_ADDRESS CITY STATE EMP_CURSOR

30 Purchasing 2004 Charade Rd Seattle Washington CURSOR STATEMENT : 8

CURSOR STATEMENT : 8

EMPLOYEE_ID LAST_NAME FIRST_NAME EMAIL PHONE_NUMBER

114 Raphaely Den DRAPHEAL 515.127.4561

115 Khoo Alexander AKHOO 515.127.4562

116 Baida Shelli SBAIDA 515.127.4563

117 Tobias Sigal STOBIAS 515.127.4564

118 Himuro Guy GHIMURO 515.127.4565

119 Colmenares Karen COLMENA 515.127.4566

6 rows selected.

With a procedure in place, your refactoring exercise changes and becomes more complete. This time you can create and populate the new table, and once you are sure the data has been correctly populated, drop the old column, removing the duplicate data and the potential for inconsistent entries. You can also use your execution script to complete the first round of testing, verifying that the procedure executes and returns the expected results set. While you can always test a query in the database, that test is of limited value if the application can contain its own version of the code. Later in this chapter you learn how to use schema permissions to ensure that the procedures have been used consistently throughout the application so you can remain confident that refactoring the database will not impact the application layer. For now, execute the code in Listing 12-7.

Listing 12-7. Refactoring Exercise Using a PL/SQL API

SQL> CREATE TABLE emp_phone_numbers

(employee_id NUMBER(6) NOT NULL,

phone_number VARCHAR2(20) NOT NULL,

preferred VARCHAR2(1) DEFAULT 'N' NOT NULL,

phone_type VARCHAR2(10) NOT NULL,

CONSTRAINT emp_phone_numbers_pk

PRIMARY KEY (employee_id, phone_number)

) ;

Table created.

SQL> alter table EMP_PHONE_NUMBERS add constraint emp_phone_numbers_fk1

foreign key (employee_id)

references EMPLOYEES (employee_id) ;

Table altered.

SQL> insert into emp_phone_numbers (

select employee_id, phone_number, 'Y', 'Office'

from employees) ;

107 rows created.

SQL> commit;

Commit complete.

SQL> alter table employees drop column phone_number;

Table altered.

With the changes shown in Listing 12-7 complete, you achieved your target design immediately. The new table has been created and populated and the old data column as been removed. There is no need to code to keep duplicate values synchronized or to revisit this change later to remove the old column and the code built to support it. You've reduced the work that must be done by both the application and by the development team. Figure 12-2 shows your new schema design.

Figure 12-2. Human Resourse schema, target design

Once you've populated the new table and dropped the old column, be sure to check for any objects in the application schema that have been invalidated by your changes. Listing 12-8 will show that not only is your GetEmployeeData procedure invalid, as expected, but two triggers have been invalidated. The first thing you need to do is see what these triggers do and how they are associated to the EMPLOYEES table.

As part of verifying your changes, select the source text for each invalid trigger and evaluate how they are using the EMPLOYEES table. In Listing 12-8, you'll use the data dictionary to see how the changes made in Listing 12-7 impacted other objects in the schema. You will recompile the invalid objects and ensure all objects are valid. The UPDATE_JOB_HISTORY trigger references the EMPLOYEES table, but not the column you've moved to another table. The SECURE_EMPLOYEES trigger references another procedure. You'll check the SECURE_DML procedure and verify that it is not using the phone number data either. This means both triggers can be recompiled as is to restore them to a valid state.

Listing 12-8. Verifying and Recompiling Related Objects

SQL> select object_type, object_name, status

from user_objects

where status = 'INVALID'

order by object_type, object_name ;

OBJECT_TYPE OBJECT_NAME STATUS

------------- --------------------- -------

PROCEDURE GETEMPLOYEEDATA INVALID

TRIGGER SECURE_EMPLOYEES INVALID

TRIGGER UPDATE_JOB_HISTORY INVALID

3 rows selected.

SQL> select text

from user_source

where name = 'UPDATE_JOB_HISTORY'

and type = 'TRIGGER'

order by line ;

TEXT

-------------------------------------------------------------------------

TRIGGER update_job_history

AFTER UPDATE OF job_id, department_id ON employees

FOR EACH ROW

BEGIN

add_job_history(:old.employee_id,

:old.hire_date,

sysdate,

:old.job_id,

:old.department_id);

END;

7 rows selected.

SQL> alter trigger update_job_history compile ;

Trigger altered.

SQL> select text

from user_source

where name = 'SECURE_EMPLOYEES'

and type = 'TRIGGER'

order by line ;

TEXT

--------------------------------------------------------------------------

TRIGGER secure_employees

BEFORE INSERT OR UPDATE OR DELETE ON employees

BEGIN

secure_dml;

END secure_employees;

5 rows selected.

SQL> select object_type, object_name, status

from user_objects

where object_name = 'SECURE_DML' ;

OBJECT_TYPE OBJECT_NAME STATUS

------------------- ----------------------- -------

PROCEDURE SECURE_DML VALID

1 row selected.

SQL> select text

from user_source

where name = 'SECURE_DML'

order by line ;

TEXT

-------------------------------------------------------------------------

PROCEDURE secure_dml

IS

BEGIN

IF TO_CHAR (SYSDATE, 'HH24:MI') NOT BETWEEN '08:00' AND '18:00'

OR TO_CHAR (SYSDATE, 'DY') IN ('SAT', 'SUN') THEN

RAISE_APPLICATION_ERROR (-20205,

'You may only make changes during normal office hours'),

END IF;

END secure_dml;

9 rows selected.

SQL> alter trigger secure_employees compile;

Trigger altered.

SQL> select object_type, object_name, status

from user_objects

where status = 'INVALID'

order by object_type, object_name ;

OBJECT_TYPE OBJECT_NAME STATUS

------------- ----------------- ------------

PROCEDURE GETEMPLOYEEDATA INVALID

1 row selected.

Now you are ready to update the GetEmployeeData procedure to use the EMP_PHONE_NUMBERS table; here again, using procedures gives you options that you don't have when you code SQL directly into your application layer. Instead of altering this procedure to provide the new functionality requested by your customer, you will change the select statement so the procedure returns the same results set as in the past but from the new data source. This is accomplished by adding an additional limiting factor to the where clause, which will now specify that you wish to receive the preferred phone number for each employee. An alternative would be to specify that the procedure always return the office number for the employee. The best way to make this determination is to offer the customer a choice in behavior.

Pause here for a moment and consider what you have accomplished. You refactored the database, changing the table definitions and the location of the data without impacting the front-end application in any way. Every time the application code calls the GetEmployeeData procedure, it will behave exactly as it always has. If the application uses only procedures, then you know there are no unfound bits of select statements missed during your analysis and testing. Even better, since you are able to test the execution and the results in the database before you deliver the changes to development, you can be certain that development can be immediately productive with the real work they need to complete, which is incorporating the new functionality.

Now it's time to make an application change, or at least to change the procedure that the application invokes. Listing 12-9 shows how to modify the GetEmployeeData procedure to use the new table. You'll change the GetEmployeeData procedure to use the new EMP_PHONE_NUMBERS table to return the employees preferred phone number. You'll then execute the test script from Listing 12-5 to confirm the procedure works as expected.

Listing 12-9. Alter the GetEmployeeData Procedure

SQL> CREATE OR REPLACE PROCEDURE GetEmployeeData(

p_dept_id IN NUMBER,

p_emp_cursor OUT SYS_REFCURSOR,

p_status_code OUT NUMBER,

p_status_message OUT VARCHAR2) IS

/**************************************************************************************

NAME: GetEmployeeData

PURPOSE: This procedure returns contact information for employees by department

NOTES:

p_status_code status_message

============= ===========================================================

0 Success

-20102 'Input parameter p_dept_id must not be null.'

***************************************************************************************/

BEGIN -- GetEmployeeData

--validate parameters

IF ((p_dept_id) IS NULL ) THEN

RAISE_APPLICATION_ERROR(-20102, 'Input parameter p_dept_id must not be null.', TRUE);

END IF;

OPEN p_emp_cursor FOR

SELECT

dept.department_id, dept.department_name,

loc.street_address,

loc.city, loc.state_province, loc.postal_code,

cty.country_name,

CURSOR (select emp.employee_id,

emp.last_name,

emp.first_name,

emp.email,

eph.phone_number

from employees emp, emp_phone_numbers eph

where emp.department_id = dept.department_id

and emp.employee_id = eph.employee_id

and eph.preferred = 'Y'

order by emp.employee_id) emp_cursor

FROM

departments dept, locations loc, countries cty

WHERE dept.department_id = p_dept_id

AND dept.location_id = loc.location_id

AND loc.country_id = cty.country_id ;

p_status_code:= 0;

p_status_message:= NULL;

EXCEPTION

WHEN OTHERS THEN

p_status_code:= SQLCODE;

p_status_message:= SQLERRM;

END GetEmployeeData;

/

---- Confirm that all schema objects are valid:

SQL> select object_type, object_name, status

from user_objects

where status = 'INVALID'

order by object_type, object_name ;

no rows selected

---- Execute test script and confirm the procedure works as expected:

SQL> @exec_GetEmployeeData.prc

Enter value for department_id: 30

old 13: v_dept_id := &Department_ID;

new 13: v_dept_id := 30;

status_code=0 status_message=

ela=-000000000 00:00:00.000128000

PL/SQL procedure successfully completed.

DEPT_ID DEPT_NAME STREET_ADDRESS CITY STATE EMP_CURSOR

30 Purchasing 2004 Charade Rd Seattle Washington CURSOR STATEMENT : 8

CURSOR STATEMENT : 8

EMPLOYEE_ID LAST_NAME FIRST_NAME EMAIL PHONE_NUMBER

114 Raphaely Den DRAPHEAL 515.127.4561

115 Khoo Alexander AKHOO 515.127.4562

116 Baida Shelli SBAIDA 515.127.4563

117 Tobias Sigal STOBIAS 515.127.4564

118 Himuro Guy GHIMURO 515.127.4565

119 Colmenares Karen KCOLMENA 515.127.4566

6 rows selected.

At this point, you made the schema design changes and you completed several steps to test and confirm your changes. You know that all schema objects that were impacted by the table changes have been found, recompiled, and are now valid. The only change you made to the GetEmployeeData procedure ensures that it will continue to behave as it always has. The next step is to incorporate the requested functionality change and provide the employee data by department with multiple phone numbers. To accomplish this, you will create a new procedure, GetEmployeeData_MultiPhoneNo, as shown in Listing 12-10. Listing 12-11 then shows how to test the new procedure.

![]() Note There is another, more elegant approach to solving this problem: you add a new parameter to the original procedure to indicate which phone number or combination of phone numbers should be returned to the application. The new parameter should default to returning a single phone number, either the preferred phone number or the office number, whichever option was chosen by your customer. This alternative approach ensures that the procedure will continue to produce the same results set with no changes to the application, and when development is ready to include the new functionality, they will use the same procedure but specify the phone number behavior via one additional value in the procedure call.

Note There is another, more elegant approach to solving this problem: you add a new parameter to the original procedure to indicate which phone number or combination of phone numbers should be returned to the application. The new parameter should default to returning a single phone number, either the preferred phone number or the office number, whichever option was chosen by your customer. This alternative approach ensures that the procedure will continue to produce the same results set with no changes to the application, and when development is ready to include the new functionality, they will use the same procedure but specify the phone number behavior via one additional value in the procedure call.

Listing 12-10. Create the GetEmployeeData_MultiPhoneNo Procedure

SQL> CREATE OR REPLACE PROCEDURE GetEmployeeData_MultiPhoneNo(

p_dept_id IN NUMBER,

p_emp_cursor OUT SYS_REFCURSOR,

p_status_code OUT NUMBER,

p_status_message OUT VARCHAR2) IS

/***************************************************************************************

NAME: GetEmployeeData_MultiPhoneNo

PURPOSE: This procedure returns contact information for employees by department

NOTES:

p_status_code status_message

============= ===========================================================

0 Success

-20102 Input parameter p_dept_id must not be null.

***************************************************************************************/

BEGIN -- GetEmployeeData_MultiPhoneNo

--validate parameters

IF ((p_dept_id) IS NULL ) THEN

RAISE_APPLICATION_ERROR(-20102, 'Input parameter p_dept_id must not be null.', TRUE);

END IF;

OPEN p_emp_cursor FOR

SELECT

dept.department_id,

dept.department_name,

loc.street_address,

loc.city,

loc.state_province,

loc.postal_code,

cty.country_name,

CURSOR (select emp.employee_id,

emp.last_name,

emp.first_name,

emp.email,

eph.preferred,

eph.phone_type,

eph.phone_number

from employees emp, emp_phone_numbers eph

where emp.department_id = dept.department_id

and emp.employee_id = eph.employee_id

order by emp.employee_id) emp_cursor

FROM

departments dept, locations loc, countries cty

WHERE dept.department_id = p_dept_id

AND dept.location_id = loc.location_id

AND loc.country_id = cty.country_id ;

p_status_code:= 0;

p_status_message:= NULL;

EXCEPTION

WHEN OTHERS THEN

p_status_code:= SQLCODE;

p_status_message:= SQLERRM;

END GetEmployeeData_MultiPhoneNo;

/

---- Create new test script to execute this procedure

---- only the procedure name is changed

set serveroutput on

var p_main_cursor REFCURSOR

DECLARE

v_dept_id departments.department_id%TYPE;

v_status_code NUMBER;

v_status_message VARCHAR2(200);

t0 timestamp;

t1 timestamp;

BEGIN

t0:= systimestamp;

v_dept_id := &Department_ID;

GetEmployeeData_MultiPhoneNo

(v_dept_id, :p_main_cursor, v_status_code, v_status_message);

t1:= systimestamp;

DBMS_OUTPUT.PUT_LINE('code=' || v_status_code || 'message=' || v_status_message);

DBMS_OUTPUT.PUT_LINE('ela=' || (t0 - t1) );

END;

/

print :p_main_cursor

At this point, the results set from the new procedure looks exactly like the old procedure as none of the employees actually have more than one phone number in the system. In Listing 12-11, you will add multiple phone numbers for one employee and confirm the correct results are returned from the original test script. You'll add two phone numbers for one of your executives, Mr. Steven King. He'll now have three phone numbers: office, mobile, and home. You will also switch his mobile number to become his preferred number and confirm that his mobile number will be returned from the original GetEmployeeData procedure.

Listing 12-11. Extended Phone Number Functionality Testing

SQL> select * from emp_phone_numbers

where employee_id = 100;

EMPLOYEE_ID PHONE_NUMBER P PHONE_TYPE

----------- ----------------- - ----------

100 515.123.4567 Y Office

SQL> 1 row selected.

SQL> insert into emp_phone_numbers

values (100,'555.312.9876','N','Mobile'),

1 row created.

SQL> insert into emp_phone_numbers

values (100,'555.321.7345','N','Home'),

1 row created.

Commit complete.

SQL> select * from emp_phone_numbers

where employee_id = 100;

EMPLOYEE_ID PHONE_NUMBER P PHONE_TYPE

----------- ----------------- - ----------

100 515.123.4567 Y Office

100 555.312.9876 N Mobile

100 555.321.7345 N Home

SQL> 3 rows selected.

SQL> @exec_GetEmployeeData_MultiPhoneNo.sql

Enter value for department_id: 90

old 13: v_dept_id := &Department_ID;

new 13: v_dept_id := 90;

status_code=0 status_message=

ela=-000000000 00:00:00.000110000

PL/SQL procedure successfully completed.

DEPT_ID DEPT_NAME STREET_ADDRESS CITY STATE EMP_CURSOR

90 Executive 2004 Charade Rd Seattle Washington CURSOR STATEMENT : 8

CURSOR STATEMENT : 8

EMPLOYEE_ID LAST_NAME FIRST_NAME EMAIL P PHONE_TYPE PHONE_NUMBER

100 King Steven SKING Y Office 515.123.4567

100 King Steven SKING N Mobile 555.312.9876

100 King Steven SKING N Home 555.321.7345

101 Kochhar Neena NKOCHHAR Y Office 515.123.4568

102 De Haan Lex LDEHAAN Y Office 515.123.4569

---- Set Mr. Kings' mobile number to be his preferred number

---- Execute the GetEmployeeData procedure

---- Confirm default behavior returns preferred number

SQL> update emp_phone_numbers set preferred = 'N'

where employee_id = 100

and phone_type = 'Office';

1 row updated.

SQL> update emp_phone_numbers set preferred = 'Y'

where employee_id = 100

and phone_type = 'Mobile';

1 row updated.

SQL> commit ;

Commit complete.

SQL> select * from emp_phone_numbers

where employee_id = 100;

EMPLOYEE_ID PHONE_NUMBER P PHONE_TYPE

----------- ----------------- - ------------------

100 515.123.4567 N Office

100 555.312.9876 Y Mobile

100 555.321.7345 N Home

3 rows selected.

SQL> @exec_GetEmployeeData

Enter value for department_id: 90

old 13: v_dept_id := &Department_ID;

new 13: v_dept_id := 90;

status_code=0 status_message=

ela=-000000000 00:00:00.002270000

PL/SQL procedure successfully completed.

DEPT_ID DEPT_NAME STREET_ADDRESS CITY STATE EMP_CURSOR

90 Executive 2004 Charade Rd Seattle Washington CURSOR STATEMENT : 8

CURSOR STATEMENT : 8

EMPLOYEE_ID LAST_NAME FIRST_NAME EMAIL PHONE_NUMBER

100 King Steven SKING 555.312.9876

101 Kochhar Neena NKOCHHAR 515.123.4568

102 De Haan Lex LDEHAAN 515.123.4569

If you compare this results set to the one just above it, you'll notice that phone number listed for Mr. King is his mobile number. Your goal in any refactoring exercise should be to identify the desired behavior in the majority of existing application screens and reports, and code the original procedure to return those results. The unique cases can be dealt with by adding new procedures or by adding additional parameters set to default to the commonly requested value. This will minimize the number of application code changes needed to incorporate the changes.

By approaching the database design and refactor steps to minimize the impact on front-end application development, you have truly decoupled the database from the application. There is a clean line between database development and application development; for the database, this line can be verified and tested in the schema or schemas containing application objects. This allows the application developers to incorporate new functionality at their own pace, prioritizing their tasks independent of the database changes. The database changes will have no impact on the application until the developer chooses to call the procedure that returns the new version of the data. `

Does this mean that application developers, database developers, and DBAs no longer need to work together and communicate? Far from it. In the next section, you'll take a look at the values behind agile and consider some guidelines for making the most of PL/SQL in an agile database development environment.

The Agile Manifesto

A few years ago, I found myself very puzzled by the phrase agile development. I heard many new terms like scrum and sprint, bumped into groups of people standing in hallways (usually blocking access to wherever I was trying to go), and noticed a steady deluge of e-mail about build candidates. At the same time, copies of a Dilbert comic strips explaining that specifications, documentation, and training were no longer needed because everyone had been trained to be agile appeared throughout cubicle land. All of this was vaguely entertaining to me until I noticed that the Entity Relationship Diagram (ERD) and well-designed database schemas had also gone missing in this new world. At that point, I decided it was time to learn something about agile.

Unfortunately, most of my reading turned up very little information about databases. The few sources I did find seemed very limited in their understanding of all the options available in modern database products and how the use of those options impacted database performance. I wondered if the lack of attention to database design had been intended by the creators of the agile movement because they did not consider it necessary, or if database design had been overlooked because the typical use cases in most books dealt with building an application on pre-existing databases.

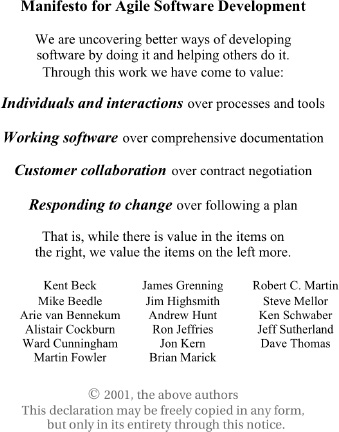

So I started at the beginning by looking at The Agile Manifesto, shown in Figure 12-3. I found that I agreed wholeheartedly with both the Manifesto and every one of The Twelve Principles supporting it. I also found no reference to scrum, sprints, standup meetings, or any desire to obliterate database design. I've copied The Agile Manifesto below, you can find The Twelve Principles at agilemanifesto.org.

Figure 12-3. The Agile Manifesto

The Agile Manifesto lists four values: Individuals and Interaction, Working Software, Customer Collaboration, and Response to Change. These first four values are comparable to four traditional values: Processes and Tools, Comprehensive Documentation, Contract Negotiation, and Following a Plan. Notice the phrasing of each line. The Manifesto doesn't state that the second set of values is no longer necessary; it simply says that the first set of values are more important. Nor does the Manifesto tell you exactly how to achieve agile and there is a reason for that. Teams are different, and what works well for one may be detrimental to another. Since individuals and interactions are more important than tools and processes and are the first value pair listed, you need to recognize that tools and processes should support the individuals on the team and encourage interaction among the team members. When existing tools and processes do not meet this goal, the tools and processes should be changed.

People who hear me say this are usually surprised. After all, I wrote a paper back in 2008 called “An Industrial Engineer's Guide to Database Management.” In my paper, I noted ways that good database management practices were similar to Industrial Engineering, and processes and standardization were very heavily emphasized. I still believe that process and standardization are very important to building a reliable product with a repeatable build but if the existing process is not making it easier for the team to get their job done, the process is flawed, not the team. Just like a user will readily use an application that makes it easier to do their job, a team will follow a process that makes it easier to do theirs. The first sign of a flawed process is that people don't like to follow it. The best fix is to ensure that the process is adding value and that the benefits are visible. If the process is adding value but using the process is painful, then you need tools to make it easier to comply. Processes are most beneficial when they are consistently followed by everyone on the team, and teams are generally very willing to comply with processes that make it easy to comply with a known requirement.

This is, of course, a circular argument but that's not a bad thing. In this case, you have a healthy feedback loop in that people will follow a process that adds value; as more people follow the process, the more valuable it becomes. Early Industrial Engineering techniques, i.e. Taylorism, recognized the importance of finding and eliminating bottlenecks in process (system) optimization but they did so at the expense of the humans involved in the process. Later techniques, such as those proposed by Dr. W. Edwards Deming and used in Lean Manufacturing, recognized the value of the individuals within the process. By changing processes to optimize the team's ability to get their job done in the simplest and most expedient way possible, Toyota was able to build better quality cars faster at a lower cost than the companies who followed the recognized processes of that time period. This is what agile is all about: focus on the values and the goals of the team, define the principals that support those goals, and then create the process (practices) that ensure members are able to achieve their goals as effectively and as efficiently as possible.

Using PL/SQL with Evolutionary Data Modeling

In this section, I'll list some of the techniques that have I have either experienced or seen to be effective. This isn't an exhaustive list, nor is it intended to be a list of commandments that must be followed. As you read through these techniques, consider the values in The Agile Manifesto. Each of the techniques below aligns with at least one of the core values and supports the team's ability adapt to changes, keep change visible, and continuously improve their software products.

Define the Interface

Rather than beginning by designing a database schema, start by defining the interface between the database and the front-end application. What is the functionality the application must perform? Define the data needed by the users and the values they will enter to request the result set. Define the data they need to update and how they will confirm their updates. If data needs to be manipulated or transformed, determine what needs to happen to it and how the transformation can be verified. This process should include database architects, database developers, and application developers, and when it's complete, the end result is an agreement of the functionality the database will provide for the application. The agreement must include names of any object the application team will call. If views are needed, column names and data types should be included. Procedure definitions should include all required input and output parameters. Once an agreement is reached, everyone should have what they need to focus on optimizing the design within their area of responsibility. Any changes must be negotiated. If bugs are identified later that indicate a mismatch between the database and application, a review of the interface agreement will help identify how the bug should be resolved. The process of defining the interface increases the direct interaction between team members and engages them on the most important topic: the functionality needed by the end user.

Think Extensible

One of the principles behind The Agile Manifesto is simplicity and minimizing the work to be done. Some interpretations of agile use the idea of minimizing work to insist that nothing but the minimal code to provide for the immediate needs should be written. The problem with this kind of thinking is that performance and scalability can't be added to the build when the customer notices response time is slowing. I've heard people say “The customer isn't paying for a Cadillac. Just build the Yugo for now.” However, has anyone ever successfully turned a Yugo into a Cadillac? Anyone who knows cars—or software—knows that it just doesn't work that way.

Instead, you need to start by building a solid and extensible frame. Structural changes are major changes for cars, houses, or software systems. If you have a solid foundation, you can add functionality so that your Yugo/Cadillac begins to take shape and gives the customer an initial feel for what is possible. Maybe the customer will be happy with a Yugo; maybe the application will be so successful it will eventually become a Cadillac. Using an evolutionary approach to database design and using PL/SQL as an API allows you to adapt the database to changing needs while controlling how and when changes are implemented in the next layer of the application.

Many proponents of agile mention how easy it is to make the changes later when new customer needs are identified. This depends on which system components are considered part of the design. If the team has an architect and that architect is responsible for the design of the application only, this may be true. However, architect is a widely used term these days, and if the architect in question is responsible for the hardware or software platform, making changes later can become a very complicated and expensive option. The goal should be to focus on the essential: the features and functionality that will provide the results the customer requested while planning for the likely direction of the final product. Short release cycles and iterative development provide you with feedback to ensure you are interpreting our customer's requests accurately. The feedback you receive provides the first clues when your product's direction needs to shift.

Test Driven Development

One of the most beneficial tools to come from agile and Extreme Programming is test driven development (TDD). The concept is simple: before any code is written, design the test that will prove that the planned code changes have achieved their goal. Some people wonder how a test can be written for software functionality that doesn't exist yet, but turn that question around. How can code be written if the measures of success or failure have not been defined?

When using PL/SQL as the API, the first step to determining the necessary tests is to consider the agreed upon interface. The interface provides the information about the procedure name, the parameters, and the desired results. Testing changes made to a procedure should include verifying the accuracy of the results, of course, but what other kinds of errors might influence the procedure's behavior? As you consider this question, you will see options to improve the code. Some error conditions can be captured, and then the procedure can return a message to the front end application. What will happen if the application passes a completely unexpected value? What happens when the results are not what are expected? What happens when the application session calling a procedure goes away without completing its task? Each of these conditions represents a real possibility for any application but when the code is able to capture errors and handle them in a way that helps the developer identify why the input was invalid or how the results were influenced, the resulting application becomes more robust and stable for the end user, which increases the software's value significantly.

Each procedural test should be scripted and repeatable. These repeatable scripts form the foundation for regression testing, providing a tool to confirm that changes to one procedure did not impact other areas of the application. As a side benefit, these scripts can be made available to others, providing a tool for troubleshooting or verifying system integration. Once tests are repeatable, then they can be incorporated into a test harness, allowing an entire series of tests to be executed as a package. If the test scripts can be executed using shell script or PL/SQL code, then the database developer can confirm that all the functionality required by the interface agreement is working as expected before the application development side begins working with it. This will minimized the issues the front end development team experiences as they incorporate their changes with the database changes. Better yet, if an application developer does hit an error, the results from the database only level testing can be compared to the parameters and results at the next level of development. This expedites troubleshooting and error correction, making both sides of this development process more agile.

Use Schemas and Users Wisely

One of the advantages of using PL/SQL as the interface layer is that structural objects can be changed, database code can be recompiled, and the data dictionary can be checked to confirm that the entire schema is valid. If the new changes had any impact on other packages, procedures, or views, it will be visible immediately and “bugs” can be repaired before they've propagated into other application layers. In order to be able to do this, there should be one application schema containing the all the application objects. In a complex application, the functionality can be divided into modules that will allow subsets of the code to be tested independently of each other. This does not mean that complete system integration testing should not be performed, or that it should be delayed until late in the project. But problems can often be solve more easily and quickly if the scope of the problem can be narrowed.

Create a distinct application user and grant that user permission to execute the procedures defined in the interface agreement and central schema(s). If you grant more access to the application user than this, you run the risk that someone will build a direct SQL statement into another part of the application and this will defeat the compile test. It will also make it more difficult to identify how much application code is impacted by a proposed change, adding complexity and uncertainty to the planning process.

While limiting the access and permissions of the application user IDs provides benefits, it's important to note that the developers need access to schema objects and this is not just true for database developers. The more the front-end application developers know and understand about the DBMS and the data within it, the better use they will be able to make of this toolset. Developers should be encouraged to safely explore the database schema, and there are a couple of options for achieving this goal. Provide as much access as possible to the database early in the development cycle and reduce access as changes are migrated from the development databases, through functionality testing, systems integration testing, and quality testing. How this access is provided depends on the skill of the team. If the team is knowledgeable about the database, allow direct access to the schema. If they are inexperienced with the DBMS and database techniques, create additional users with different kinds of permissions. This also provides benefits in monitoring: if you see a runaway query but the process was initiated by the read only user rather than the user expected to execute the procedures in the application, the process can be safely terminated without impacting the application. If developers cannot be trusted to NOT change code or data without authorization or at the wrong stage of the migration process, they should not be given direct access. Auditing provides an accurate and easy way to make all changes made within the database transparent.

Summary

Agility is being able to adapt quickly to changing conditions. More than that, being able to adapt and evolve to meet changing conditions and requirements is critical to your ability to remain competitive. The DBMS software doesn't limit your ability to implement change, but how you choose to use the software and how you design our applications can. First, you need to acknowledge and prepare for the inevitable change that will come your way. Then you need to evaluate the goals of your projects and the tools you have to meet these goals. It is possible to support an agile development process AND have a well designed data model. Using PL/SQL as an API can buffer some of the changes that will need to be made by providing a means to allow the database and the application to evolve and support changing customer needs.