3

Fundamentals of Software Engineering for Games

In this chapter, we’ll discuss the foundational knowledge needed by any professional game programmer. We’ll explore numeric bases and representations, the components and architecture of a typical computer and its CPU, machine and assembly language, and the C++ programming language. We’ll review some key concepts in object-oriented programming (OOP), and then delve into some advanced topics that should prove invaluable in any software engineering endeavor (and especially when creating games). As with Chapter 2, some of this material may already be familiar to some readers. However, I highly recommend that all readers at least skim this chapter, so that we all embark on our journey with the same set of tools and supplies.

3.1 C++ Review and Best Practices

Because C++ is arguably the most commonly used language in the game industry, we will focus primarily on C++ in this book. However, most of the concepts we’ll cover apply equally well to any object-oriented programming language. Certainly a great many other languages are used in the game industry—imperative languages like C; object-oriented languages like C# and Java; scripting languages like Python, Lua and Perl; functional languages like Lisp, Scheme and F#, and the list goes on. I highly recommend that every programmer learn at least two high-level languages (the more the merrier), as well as learning at least some assembly language programming (see Section 3.4.7.3). Every new language that you learn further expands your horizons and allows you to think in a more profound and proficient way about programming overall. That being said, let’s turn our attention now to object-oriented programming concepts in general, and C++ in particular.

3.1.1 Brief Review of Object-Oriented Programming

Much of what we’ll discuss in this book assumes you have a solid understanding of the principles of object-oriented design. If you’re a bit rusty, the following section should serve as a pleasant and quick review. If you have no idea what I’m talking about in this section, I recommend you pick up a book or two on object-oriented programming (e.g., [7]) and C++ in particular (e.g., [46] and [36]) before continuing.

3.1.1.1 Classes and Objects

A class is a collection of attributes (data) and behaviors (code) that together form a useful, meaningful whole. A class is a specification describing how individual instances of the class, known as objects, should be constructed. For example, your pet Rover is an instance of the class “dog.” Thus, there is a one-to-many relationship between a class and its instances.

3.1.1.2 Encapsulation

Encapsulation means that an object presents only a limited interface to the outside world; the object’s internal state and implementation details are kept hidden. Encapsulation simplifies life for the user of the class, because he or she need only understand the class’ limited interface, not the potentially intricate details of its implementation. It also allows the programmer who wrote the class to ensure that its instances are always in a logically consistent state.
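To make this concrete, here is a minimal sketch of an encapsulated class. The Health class and its member names are hypothetical, invented purely for illustration. Its data members are private, so the only way to change the state is through a small interface that keeps the value within a valid range.

```cpp
#include <cassert>

// Hypothetical example: a hit-point counter that can never leave [0, max].
class Health
{
public:
    explicit Health(int maxPoints)
        : m_max(maxPoints), m_current(maxPoints)
    {
        assert(maxPoints > 0); // a non-positive maximum is a programmer error
    }

    void ApplyDamage(int amount)
    {
        if (amount < 0) return; // ignore nonsensical input
        m_current = (amount >= m_current) ? 0 : m_current - amount;
    }

    void Heal(int amount)
    {
        if (amount < 0) return;
        m_current = (amount >= m_max - m_current) ? m_max : m_current + amount;
    }

    int  Current() const { return m_current; }
    bool IsDead()  const { return m_current == 0; }

private:
    int m_max;     // invariant: m_max > 0
    int m_current; // invariant: 0 <= m_current <= m_max
};
```

Because callers cannot write to m_current directly, the clamping logic lives in exactly one place, and the author of the class can change the internal representation later without touching any client code.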

3.1.1.3 Inheritance

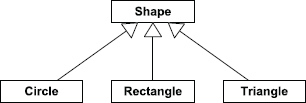

Inheritance allows new classes to be defined as extensions to preexisting classes. The new class modifies or extends the data, interface and/or behavior of the existing class. If class Child extends class Parent, we say that Child inherits from or is derived from Parent. In this relationship, the class Parent is known as the base class or superclass, and the class Child is the derived class or subclass. Clearly, inheritance leads to hierarchical (tree-structured) relationships between classes.

Inheritance creates an “is-a” relationship between classes. For example, a circle is a type of shape. So, if we were writing a 2D drawing application, it would probably make sense to derive our Circle class from a base class called Shape.

We can draw diagrams of class hierarchies using the conventions defined by the Unified Modeling Language (UML). In this notation, a rectangle represents a class, and an arrow with a hollow triangular head represents inheritance. The inheritance arrow points from child class to parent. See Figure 3.1 for an example of a simple class hierarchy represented as a UML static class diagram.

Multiple Inheritance

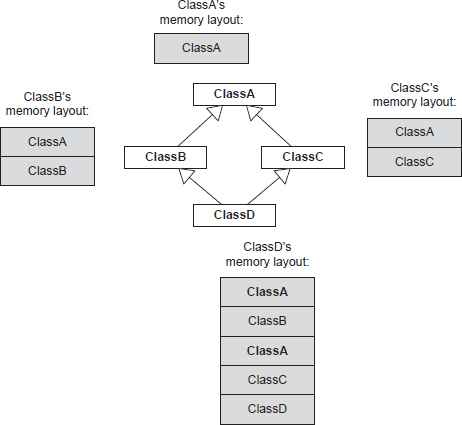

Some languages support multiple inheritance (MI), meaning that a class can have more than one parent class. In theory MI can be quite elegant, but in practice this kind of design usually gives rise to a lot of confusion and technical difficulties (see http://en.wikipedia.org/wiki/Multiple_inheritance). This is because multiple inheritance transforms a simple tree of classes into a potentially complex graph. A class graph can have all sorts of problems that never plague a simple tree. One example is the deadly diamond (http://en.wikipedia.org/wiki/Diamond_problem), in which a derived class ends up containing two copies of a grandparent base class (see Figure 3.2). (In C++, virtual inheritance allows one to avoid this doubling of the grandparent’s data.) Multiple inheritance also complicates casting, because the address held by a pointer may change depending on which base class it is cast to. This happens because each base class occupies its own subobject at a different offset within the derived object, each with its own vtable pointer when the bases have virtual functions.
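The effect of multiple inheritance on pointer values can be observed directly. The following is a small sketch using hypothetical classes (Drawable, Collidable and Sprite are not part of any real engine). Converting a Sprite pointer to each of its two base classes yields two different addresses, and casting back down recovers the original one.

```cpp
#include <cassert>

// Two unrelated, hypothetical base classes, each with its own data.
struct Drawable   { virtual ~Drawable() {}   int drawOrder = 0; };
struct Collidable { virtual ~Collidable() {} int collisionMask = 0; };

// Sprite contains one Drawable subobject and one Collidable subobject.
struct Sprite : public Drawable, public Collidable {};

// Returns true if the two base-class views of one object live at different addresses.
bool BaseAddressesDiffer(Sprite& sprite)
{
    Drawable*   pDrawable   = &sprite; // implicit upcast
    Collidable* pCollidable = &sprite; // implicit upcast; the compiler adjusts the pointer
    return static_cast<void*>(pDrawable) != static_cast<void*>(pCollidable);
}

// Usage sketch.
void CastingExample()
{
    Sprite sprite;
    assert(BaseAddressesDiffer(sprite));

    // static_cast applies the reverse adjustment when casting back down.
    Collidable* pCollidable = &sprite;
    assert(static_cast<Sprite*>(pCollidable) == &sprite);
}
```

This is one reason why casting such pointers through void* or with reinterpret_cast is dangerous: those casts skip the adjustment that static_cast performs.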

Most C++ software developers avoid multiple inheritance completely or only permit it in a limited form. A common rule of thumb is to allow only simple, parentless classes to be multiply inherited into an otherwise strictly single-inheritance hierarchy. Such classes are sometimes called mix-in classes because they can be used to introduce new functionality at arbitrary points in a class tree. See Figure 3.3 for a somewhat contrived example of a mix-in class.

3.1.1.4 Polymorphism

Polymorphism is a language feature that allows a collection of objects of different types to be manipulated through a single common interface. The common interface makes a heterogeneous collection of objects appear to be homogeneous, from the point of view of the code using the interface.

For example, a 2D painting program might be given a list of various shapes to draw on-screen. One way to draw this heterogeneous collection of shapes is to use a switch statement to perform different drawing commands for each distinct type of shape.

    void drawShapes(std::list<Shape*>& shapes)
    {
        std::list<Shape*>::iterator pShape = shapes.begin();
        std::list<Shape*>::iterator pEnd = shapes.end();
        for (; pShape != pEnd; pShape++)
        {
            // the iterator refers to a Shape*, so dereference it first
            switch ((*pShape)->mType)
            {
            case CIRCLE:
                // draw shape as a circle
                break;
            case RECTANGLE:
                // draw shape as a rectangle
                break;
            case TRIANGLE:
                // draw shape as a triangle
                break;
            //…
            }
        }
    }

The problem with this approach is that the drawShapes() function needs to “know” about all of the kinds of shapes that can be drawn. This is fine in a simple example, but as our code grows in size and complexity, it can become difficult to add new types of shapes to the system. Whenever a new shape type is added, one must find every place in the code base where knowledge of the set of shape types is embedded—like this switch statement—and add a case to handle the new type.

The solution is to insulate the majority of our code from any knowledge of the types of objects with which it might be dealing. To accomplish this, we can define classes for each of the types of shapes we wish to support. All of these classes would inherit from the common base class Shape. A virtual function—the C++ language’s primary polymorphism mechanism—would be defined called Draw(), and each distinct shape class would implement this function in a different way. Without “knowing” what specific types of shapes it has been given, the drawing function can now simply call each shape’s Draw() function in turn.

    struct Shape
    {
        virtual void Draw() = 0; // pure virtual function
        virtual ~Shape() {} // ensure derived dtors are virtual
    };

    struct Circle : public Shape
    {
        virtual void Draw()
        {
            // draw shape as a circle
        }
    };

    struct Rectangle : public Shape
    {
        virtual void Draw()
        {
            // draw shape as a rectangle
        }
    };

    struct Triangle : public Shape
    {
        virtual void Draw()
        {
            // draw shape as a triangle
        }
    };

    void drawShapes(std::list<Shape*>& shapes)
    {
        std::list<Shape*>::iterator pShape = shapes.begin();
        std::list<Shape*>::iterator pEnd = shapes.end();
        for (; pShape != pEnd; pShape++)
        {
            // the iterator refers to a Shape*, so dereference it first
            (*pShape)->Draw(); // call virtual function
        }
    }

3.1.1.5 Composition and Aggregation

Composition is the practice of using a group of interacting objects to accomplish a high-level task. Composition creates a “has-a” or “uses-a” relationship between classes. (Technically speaking, the “has-a” relationship is called composition, while the “uses-a” relationship is called aggregation.) For example, a spaceship has an engine, which in turn has a fuel tank. Composition/aggregation usually results in the individual classes being simpler and more focused. Inexperienced object-oriented programmers often rely too heavily on inheritance and tend to underutilize aggregation and composition.

As an example, imagine that we are designing a graphical user interface for our game’s front end. We have a class Window that represents any rectangular GUI element. We also have a class called Rectangle that encapsulates the mathematical concept of a rectangle. A naïve programmer might derive the Window class from the Rectangle class (using an “is-a” relationship). But in a more flexible and well-encapsulated design, the Window class would refer to or contain a Rectangle (employing a “has-a” or “uses-a” relationship). This makes both classes simpler and more focused and allows the classes to be more easily tested, debugged and reused.
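A minimal sketch of the “has-a” version of this design might look as follows. The member names, the Contains() and HitTest() functions and the visibility flag are all assumptions made for illustration. The Rectangle here is the purely mathematical one from this example, not the Shape-derived class shown earlier.

```cpp
#include <cassert>

// A mathematical rectangle; it knows nothing about GUIs.
struct Rectangle
{
    float x, y, width, height;

    bool Contains(float px, float py) const
    {
        return px >= x && px < x + width
            && py >= y && py < y + height;
    }
};

// A GUI element that HAS a rectangle rather than BEING one.
class Window
{
public:
    explicit Window(const Rectangle& bounds)
        : m_bounds(bounds), m_visible(true)
    {
        assert(bounds.width >= 0.0f && bounds.height >= 0.0f);
    }

    void Hide() { m_visible = false; }

    // Hit testing delegates the geometry to the contained Rectangle.
    bool HitTest(float px, float py) const
    {
        return m_visible && m_bounds.Contains(px, py);
    }

private:
    Rectangle m_bounds;
    bool      m_visible;
};
```

Rectangle can now be tested and reused on its own, and Window exposes only the operations that make sense for a GUI element instead of inheriting every member of Rectangle.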

3.1.1.6 Design Patterns

When the same type of problem arises over and over, and many different programmers employ a very similar solution to that problem, we say that a design pattern has arisen. In object-oriented programming, a number of common design patterns have been identified and described by various authors. The most well-known book on this topic is probably the “Gang of Four” book [19].

Here are a few examples of common general-purpose design patterns.

The game industry has its own set of design patterns for addressing problems in every realm from rendering to collision to animation to audio. In a sense, this book is all about the high-level design patterns prevalent in modern 3D game engine design.

Janitors and RAII

As one very useful example of a design pattern, let’s have a brief look at the “resource acquisition is initialization” pattern (RAII). In this pattern, the acquisition and release of a resource (such as a file, a block of dynamically allocated memory, or a mutex lock) are bound to the constructor and destructor of a class, respectively. This prevents programmers from accidentally forgetting to release the resource—you simply construct a local instance of the class to acquire the resource, and let it fall out of scope to release it automatically. At Naughty Dog, we call such classes janitors because they “clean up” after you.

For example, whenever we need to allocate memory from a particular type of allocator, we push that allocator onto a global allocator stack, and when we’re done allocating we must always remember to pop the allocator off the stack. To make this more convenient and less error-prone, we use an allocation janitor. This tiny class’s constructor pushes the allocator, and its destructor pops the allocator:

    class AllocJanitor
    {
    public:
        explicit AllocJanitor(mem::Context context)
        {
            mem::PushAllocator(context);
        }

        ~AllocJanitor()
        {
            mem::PopAllocator();
        }
    };

To use the janitor class, we simply construct a local instance of it. When this instance drops out of scope, the allocator will be popped automatically:

    void f()
    {
        // do some work…

        // allocate temp buffers from single-frame allocator
        {
            AllocJanitor janitor(mem::Context::kSingleFrame);

            U8* pByteBuffer = new U8[SIZE];
            float* pFloatBuffer = new float[SIZE];

            // use buffers…

            // (NOTE: no need to free the memory because we
            // used a single-frame allocator)
        } // janitor pops allocator when it drops out of scope

        // do more work…
    }

See http://en.cppreference.com/w/cpp/language/raii for more information on the highly useful RAII pattern.

3.1.2 C++ Language Standardization

Since its inception in 1979, the C++ language has been continually evolving. Bjarne Stroustrup, its inventor, originally named the language “C with Classes”, but it was renamed “C++” in 1983. The International Organization for Standardization (ISO, www.iso.org) first standardized the language in 1998—this version is known today as C++98. Since then, the ISO has been periodically publishing updated standards for the C++ language, with the goals of making the language more powerful, easier to use, and less ambiguous. These goals are achieved by refining the semantics and rules of the language, by adding new, more-powerful language features, and by deprecating, or removing entirely, those aspects of the language which have proven problematic or unpopular.

The most-recent variant of the C++ programming language standard is called C++17, which was published in December 2017. The next iteration of the standard, C++2a, was in development at the time of this publication. The various versions of the C++ standard are summarized in chronological order below.

C++11 introduced, among other features:

- the nullptr literal, to replace the bug-prone NULL macro that had been inherited from the C language;
- the auto and decltype keywords for type inference;
- a trailing return type syntax, which allows the decltype of a function’s input arguments to be used to describe the return type of that function;
- the override and final keywords for improved expressiveness when defining and overriding virtual functions;
- the constexpr keyword for defining compile-time constant values by evaluating expressions at compile time;
- standardized attribute specifiers, to replace compiler-specific specifiers such as __attribute__((…)) and __declspec().

C++11 also introduced an improved and expanded standard library, including support for threading (concurrent programming), improved smart pointer facilities and an expanded set of generic algorithms.

C++14 added, among other features:

- return type deduction, which lets a function’s return type be declared with the auto keyword, without the need for the verbose trailing decltype expression required by C++11;
- generic lambdas, which allow a lambda to use auto to declare its input arguments;
- binary literals, written with the prefix 0b (e.g., 0b10110110);
- digit separators in numeric literals (e.g., 1'000'000 instead of 1000000);
- relaxed restrictions on constexpr, including the ability to use if, switch and loops within constant expressions.

C++17 added or changed, among other things:

- removal of outdated features, including the register keyword, and the already-deprecated auto_ptr smart pointer class;
- exception specifications that are part of the function type, so that void f() noexcept(true); and void f() noexcept(false); are now distinct types;
- new literals, including UTF-8 character literals (e.g., u8'x'), and floating-point literals with a hexadecimal base and decimal exponent (e.g., 0xC.68p+2);
- structured bindings (e.g., auto [a, b] = func_that_returns_a_pair();), a syntax that is strikingly similar to that of returning multiple values from a function via a tuple in Python;
- standardized attributes such as [[fallthrough]], which allows you to explicitly document the fact that a missing break statement in a switch is intentional, thereby suppressing the warning that would otherwise be generated.

3.1.2.1 Further Reading

There are plenty of great online resources and books that describe the features of C++11, C++14 and C++17 in detail, so we won’t attempt to cover them here. Here are a few useful references:

auto and decltype.

3.1.2.2 Which Language Features to Use?

As you read about all the cool new features being added to C++, it’s tempting to think that you need to use all of these features in your engine or game. However, just because a feature exists doesn’t mean your team needs to immediately start making use of it.

At Naughty Dog, we tend to take a conservative approach to adopting new language features into our codebase. As part of our studio’s coding standards, we have a list of those C++ language features that are approved for use in our runtime code, and another somewhat more liberal list of language features that are allowed in our offline tools code. There are a number of reasons for this cautious approach, which I’ll outline in the following sections.

Lack of Full Feature Support

The “bleeding edge” features may not be fully supported by your compiler. For example, LLVM/Clang, the C++ compiler used on the Sony PlayStation 4, currently supports the entire C++11 standard in versions 3.3 and later, and all of C++14 in versions 3.4 and later. But its support for C++17 is spread across Clang versions 3.5 through 4.0, and it currently has no support for the draft C++2a standard. Also, Clang compiles code in C++98 mode by default. Some features from the more-advanced standards are accepted as extensions, but in order to enable full support one must pass specific command-line arguments to the compiler (for example, -std=c++11). See https://clang.llvm.org/cxx_status.html for details.

Cost of Switching between Standards

There’s a non-zero cost to switching your codebase from one standard to another. Because of this, it’s important for a game studio to decide on the most-advanced C++ standard to support, and then stick with it for a reasonable length of time (e.g., for the duration of one project). At Naughty Dog, we adopted the C++11 standard only relatively recently, and we only allowed its use in the code branch in which The Last of Us Part II is being developed. The code in the branch used for Uncharted 4: A Thief’s End and Uncharted: The Lost Legacy had been written originally using the C++98 standard, and we decided that the relatively minor benefits of adopting C++11 features in that codebase did not outweigh the cost and risks of doing so.

Risk versus Reward

Not every C++ language feature is created equal. Some features are useful and pretty much universally acceptable, like nullptr. Others may have benefits, but also associated negatives. Still other language features may be deemed inappropriate for use in runtime engine code altogether.

As an example of a language feature with both benefits and downsides, consider the new C++11 interpretation of the auto keyword. This keyword certainly makes variables and functions more convenient to write. But the Naughty Dog programmers recognized that over-use of auto can lead to obfuscated code: As an extreme example, imagine trying to read a .cpp file written by somebody else, in which virtually every variable, function argument and return value is declared auto. It would be like reading a dynamically typed language such as Python or Lisp. One of the benefits of a statically typed language like C++ is the programmer’s ability to quickly and easily determine the types of all variables. As such, we decided to adopt a simple rule: auto may only be used when declaring iterators, in situations where no other approach works (such as within template definitions), or in special cases when code clarity, readability and maintainability are significantly improved by its use. In all other cases, we require the use of explicit type declarations.
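As a rough illustration of how such a rule plays out in practice, consider the sketch below. TotalScore() is a hypothetical helper invented for this example, not code from any real engine. The iterator is declared auto because its full type adds noise without insight, while the values the reader actually cares about keep explicit types.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical helper: sums the values in a name-to-score table.
int TotalScore(const std::map<std::string, int>& scores)
{
    int total = 0; // explicit type: obviously an int

    // auto is reasonable here; spelling out
    // std::map<std::string, int>::const_iterator would not aid the reader.
    for (auto it = scores.begin(); it != scores.end(); ++it)
    {
        const int score = it->second; // explicit type for the value itself
        total += score;
    }
    return total;
}

// Usage sketch.
void TotalScoreExample()
{
    std::map<std::string, int> scores;
    scores["nate"] = 10;
    scores["elena"] = 32;
    assert(TotalScore(scores) == 42);
}
```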

As an example of a language feature that could be deemed inappropriate for use in a commercial product like a game, consider template metaprogramming. Andrei Alexandrescu’s Loki library [3] makes heavy use of template metaprogramming to do some pretty interesting and amazing things. However, the resulting code is tough to read, is sometimes non-portable, and presents programmers with an extremely high barrier to understanding. The programming leads at Naughty Dog believe that any programmer should be able to jump in and debug a problem on short notice, even in code with which he or she may not be very familiar. As such, Naughty Dog prohibits complex template metaprogramming in runtime engine code, with exceptions made only on a case-by-case basis where the benefits are deemed to outweigh the costs.

In summary, remember that when you have a hammer, everything can tend to look like a nail. Don’t be tempted to use features of your language just because they’re there (or because they’re new). A judicious and carefully considered approach will result in a stable codebase that’s as easy as possible to understand, reason about, debug and maintain.

3.1.3 Coding Standards: Why and How Much?

Discussions of coding conventions among engineers can often lead to heated “religious” debates. I do not wish to spark any such debate here, but I will go so far as to suggest that following at least a minimal set of coding standards is a good idea. Coding standards exist for two primary reasons.

In my opinion, the most important things to achieve in your coding conventions are the following.

Name #defined symbols with extra care; remember that C++ preprocessor macros are really just text substitutions, so they cut across all C/C++ scope and namespace boundaries.

3.2 Catching and Handling Errors

There are a number of ways to catch and handle error conditions in a game engine. As a game programmer, it’s important to understand these different mechanisms, their pros and cons and when to use each one.

3.2.1 Types of Errors

In any software project there are two basic kinds of error conditions: user errors and programmer errors. A user error occurs when the user of the program does something incorrect, such as typing an invalid input, attempting to open a file that does not exist, etc. A programmer error is the result of a bug in the code itself. Although it may be triggered by something the user has done, the essence of a programmer error is that the problem could have been avoided if the programmer had not made a mistake, and the user has a reasonable expectation that the program should have handled the situation gracefully.

Of course, the definition of “user” changes depending on context. In the context of a game project, user errors can be roughly divided into two categories: errors caused by the person playing the game and errors caused by the people who are making the game during development. It is important to keep track of which type of user is affected by a particular error and handle the error appropriately.

There’s actually a third kind of user—the other programmers on your team. (And if you are writing a piece of game middleware software, like Havok or OpenGL, this third category extends to other programmers all over the world who are using your library.) This is where the line between user errors and programmer errors gets blurry. Let’s imagine that programmer A writes a function f(), and programmer B tries to call it. If B calls f() with invalid arguments (e.g., a null pointer, or an out-of-range array index), then this could be seen as a user error by programmer A, but it would be a programmer error from B’s point of view. (Of course, one can also argue that programmer A should have anticipated the passing of invalid arguments and should have handled them gracefully, so the problem really is a programmer error, on A’s part.) The key thing to remember here is that the line between user and programmer can shift depending on context—it is rarely a black-and-white distinction.

3.2.2 Handling Errors

When handling errors, the requirements differ significantly between the two types. It is best to handle user errors as gracefully as possible, displaying some helpful information to the user and then allowing him or her to continue working—or in the case of a game, to continue playing. Programmer errors, on the other hand, should not be handled with a graceful “inform and continue” policy. Instead, it is usually best to halt the program and provide detailed low-level debugging information, so that a programmer can quickly identify and fix the problem. In an ideal world, all programmer errors would be caught and fixed before the software ships to the public.

3.2.2.1 Handling Player Errors

When the “user” is the person playing your game, errors should obviously be handled within the context of gameplay. For example, if the player attempts to reload a weapon when no ammo is available, an audio cue and/or an animation can indicate this problem to the player without taking him or her “out of the game.”

3.2.2.2 Handling Developer Errors

When the “user” is someone who is making the game, such as an artist, animator or game designer, errors may be caused by an invalid asset of some sort. For example, an animation might be associated with the wrong skeleton, or a texture might be the wrong size, or an audio file might have been sampled at an unsupported sample rate. For these kinds of developer errors, there are two competing camps of thought.

On the one hand, it seems important to prevent bad game assets from persisting for too long. A game typically contains literally thousands of assets, and a problem asset might get “lost,” in which case one risks the possibility of the bad asset surviving all the way into the final shipping game. If one takes this point of view to an extreme, then the best way to handle bad game assets is to prevent the entire game from running whenever even a single problematic asset is encountered. This is certainly a strong incentive for the developer who created the invalid asset to remove or fix it immediately.

On the other hand, game development is a messy and iterative process, and generating “perfect” assets the first time around is rare indeed. By this line of thought, a game engine should be robust to almost any kind of problem imaginable, so that work can continue even in the face of totally invalid game asset data. But this too is not ideal, because the game engine would become bloated with error-catching and error-handling code that won’t be needed once the development pace settles down and the game ships. And the probability of shipping the product with “bad” assets becomes too high.

In my experience, the best approach is to find a middle ground between these two extremes. When a developer error occurs, I like to make the error obvious and then allow the team to continue to work in the presence of the problem. It is extremely costly to prevent all the other developers on the team from working, just because one developer tried to add an invalid asset to the game. A game studio pays its employees well, and when multiple team members experience downtime, the costs are multiplied by the number of people who are prevented from working. Of course, we should only handle errors in this way when it is practical to do so, without spending inordinate amounts of engineering time, or bloating the code.

As an example, let’s suppose that a particular mesh cannot be loaded. In my view, it’s best to draw a big red box in the game world at the places that mesh would have been located, perhaps with a text string hovering over each one that reads, “Mesh blah-dee-blah failed to load.” This is superior to printing an easy-to-miss message to an error log. And it’s far better than just crashing the game, because then no one will be able to work until that one mesh reference has been repaired. Of course, for particularly egregious problems it’s fine to just spew an error message and crash. There’s no silver bullet for all kinds of problems, and your judgment about what type of error handling approach to apply to a given situation will improve with experience.
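The “big red box” idea can be sketched in a few lines. Everything in the following example is hypothetical, including the Mesh and MeshLibrary types and the name of the placeholder asset; a real engine’s asset system will look quite different. The point is only that a failed lookup returns a highly visible stand-in rather than crashing or failing silently.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical mesh record; a real one would hold vertex data and so on.
struct Mesh
{
    std::string name;
    bool        isPlaceholder; // true if this is the "big red box" stand-in
    std::string debugLabel;    // text to draw hovering over the placeholder
};

class MeshLibrary
{
public:
    void Register(const std::string& name)
    {
        assert(!name.empty()); // an unnamed mesh is a programmer error
        m_loaded[name] = Mesh{ name, false, "" };
    }

    // Never fails outright: a missing mesh yields an obvious placeholder.
    Mesh Find(const std::string& name) const
    {
        std::map<std::string, Mesh>::const_iterator it = m_loaded.find(name);
        if (it != m_loaded.end())
            return it->second;

        return Mesh{ "debug_red_box", true, "Mesh " + name + " failed to load." };
    }

private:
    std::map<std::string, Mesh> m_loaded;
};
```

The renderer can then draw the placeholder and its label wherever the missing mesh was referenced, so the problem is hard to overlook but nobody else on the team is blocked by it.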

3.2.2.3 Handling Programmer Errors

The best way to detect and handle programmer errors (a.k.a. bugs) is often to embed error-checking code into your source code and arrange for failed error checks to halt the program. Such a mechanism is known as an assertion system; we’ll investigate assertions in detail in Section 3.2.3.3. Of course, as we said above, one programmer’s user error is another programmer’s bug; hence, assertions are not always the right way to handle every programmer error. Making a judicious choice between an assertion and a more graceful error-handling technique is a skill that one develops over time.

3.2.3 Implementation of Error Detection and Handling

We’ve looked at some philosophical approaches to handling errors. Now let’s turn our attention to the choices we have as programmers when it comes to implementing error detection and handling code.

3.2.3.1 Error Return Codes

A common approach to handling errors is to return some kind of failure code from the function in which the problem is first detected. This could be a Boolean value indicating success or failure, or it could be an “impossible” value, one that is outside the range of normally returned results. For example, a function that returns a positive integer or floating-point value could return a negative value to indicate that an error occurred. Even better than a Boolean or an “impossible” return value, the function could be designed to return an enumerated value to indicate success or failure. This clearly separates the error code from the output(s) of the function, and the exact nature of the problem can be indicated on failure (e.g., enum Error {kSuccess, kAssetNotFound, kInvalidRange, …}).

The calling function should intercept error return codes and act appropriately. It might handle the error immediately. Or, it might work around the problem, complete its own execution and then pass the error code on to whatever function called it.
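Here is a small sketch of the enumerated-error-code approach. The Error enumeration follows the one mentioned above, while ReadSample() and AverageOfTwo() are hypothetical functions invented for illustration. The low-level function reports the exact nature of the problem, and the caller, which cannot fix the problem itself, passes the code on to its own caller.

```cpp
#include <cassert>
#include <cstddef>

enum Error { kSuccess, kAssetNotFound, kInvalidRange };

// Hypothetical low-level function. The result goes to an output parameter,
// so the return value is free to carry the error code.
Error ReadSample(const float* pSamples, std::size_t count,
                 std::size_t index, float& outValue)
{
    if (pSamples == nullptr) return kAssetNotFound;
    if (index >= count)      return kInvalidRange;
    outValue = pSamples[index];
    return kSuccess;
}

// Hypothetical caller: on failure it stops and propagates the error code.
Error AverageOfTwo(const float* pSamples, std::size_t count,
                   std::size_t i, std::size_t j, float& outAverage)
{
    float a = 0.0f;
    float b = 0.0f;

    Error err = ReadSample(pSamples, count, i, a);
    if (err != kSuccess) return err;

    err = ReadSample(pSamples, count, j, b);
    if (err != kSuccess) return err;

    outAverage = 0.5f * (a + b);
    return kSuccess;
}

// Usage sketch.
void ErrorCodeExample()
{
    const float samples[] = { 1.0f, 3.0f, 5.0f };
    float average = 0.0f;
    assert(AverageOfTwo(samples, 3, 0, 1, average) == kSuccess);
    assert(AverageOfTwo(samples, 3, 0, 7, average) == kInvalidRange);
}
```

Note how every function between the point of detection and the point of handling must cooperate by checking and forwarding the code.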

3.2.3.2 Exceptions

Error return codes are a simple and reliable way to communicate and respond to error conditions. However, error return codes have their drawbacks. Perhaps the biggest problem with error return codes is that the function that detects an error may be totally unrelated to the function that is capable of handling the problem. In the worst-case scenario, a function that is 40 calls deep in the call stack might detect a problem that can only be handled by the top-level game loop, or by main(). In this scenario, every one of the 40 functions on the call stack would need to be written so that it can pass an appropriate error code all the way back up to the top-level error-handling function.

One way to solve this problem is to throw an exception. Exception handling is a very powerful feature of C++. It allows the function that detects a problem to communicate the error to the rest of the code without knowing anything about which function might handle the error. When an exception is thrown, relevant information about the error is placed into a data object of the programmer’s choice known as an exception object. The call stack is then automatically unwound, in search of a calling function that has wrapped its call in a try-catch block. If a try-catch block is found, the exception object is matched against all possible catch clauses, and if a match is found, the corresponding catch’s code block is executed. The destructors of any automatic variables are called as needed during the stack unwinding process.
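The mechanics described above can be seen in the following sketch, in which all function and type names are hypothetical. A low-level function throws, an intermediate function that knows nothing about errors is unwound, and a top-level function catches the exception. A global counter tracks the automatic variables so that we can confirm their destructors ran during unwinding.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>

int g_liveScopes = 0; // number of ScopeTracker objects currently alive

// An automatic variable whose destructor runs during stack unwinding.
struct ScopeTracker
{
    ScopeTracker()  { ++g_liveScopes; }
    ~ScopeTracker() { --g_liveScopes; }
};

// Detects the problem, but has no idea who will handle it.
void LoadLevelData(bool corrupt)
{
    ScopeTracker tracker;
    if (corrupt)
        throw std::runtime_error("level data is corrupt"); // the exception object
}

// Neither throws nor catches, yet still takes part in unwinding.
void LoadLevel(bool corrupt)
{
    ScopeTracker tracker;
    LoadLevelData(corrupt);
}

// Top-level code: the only place that knows how to respond.
std::string TryLoadLevel(bool corrupt)
{
    try
    {
        LoadLevel(corrupt);
        return "ok";
    }
    catch (const std::runtime_error& e)
    {
        return e.what();
    }
}

// Usage sketch.
void ExceptionExample()
{
    assert(TryLoadLevel(true) == "level data is corrupt");
    assert(g_liveScopes == 0); // both trackers were destroyed during unwinding
}
```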

The ability to separate error detection from error handling in such a clean way is certainly attractive, and exception handling is an excellent choice for some software projects. However, exception handling does add some overhead to the program. The stack frame of any function that contains a try-catch block must be augmented to contain additional information required by the stack unwinding process. Also, if even one function in your program (or a library that your program links with) uses exception handling, your entire program must use exception handling—the compiler can’t know which functions might be above you on the call stack when you throw an exception.

That said, it is possible to “sandbox” a library or libraries that make use of exception handling, in order to avoid your entire game engine having to be written with exceptions enabled. To do this, you would wrap all the API calls into the libraries in question in functions that are implemented in a translation unit that has exception handling enabled. Each of these functions would catch all possible exceptions in a try/catch block and convert them into error return codes. Any code that links with your wrapper library can therefore safely disable exception handling.
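A wrapper of this kind might be sketched as follows. ThirdPartyParseInt() is a stand-in for a call into some exception-throwing library, and the Error values and SafeParseInt() are likewise assumptions made for this example. Only the translation unit containing the wrapper would need to be compiled with exception handling enabled.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>

// Stand-in for a third-party call that reports failure by throwing.
// (Hypothetical; substitute the real library's API.)
int ThirdPartyParseInt(const std::string& text)
{
    if (text.empty())
        throw std::invalid_argument("empty string");
    return std::stoi(text); // may throw std::invalid_argument or std::out_of_range
}

enum Error { kSuccess, kInvalidArgument, kUnknownFailure };

// The wrapper catches everything and converts it into an error code.
// noexcept documents that no exception escapes into the rest of the engine.
Error SafeParseInt(const std::string& text, int& outValue) noexcept
{
    try
    {
        outValue = ThirdPartyParseInt(text);
        return kSuccess;
    }
    catch (const std::invalid_argument&)
    {
        return kInvalidArgument;
    }
    catch (...) // any other exception type
    {
        return kUnknownFailure;
    }
}

// Usage sketch.
void SandboxExample()
{
    int value = 0;
    assert(SafeParseInt("42", value) == kSuccess && value == 42);
    assert(SafeParseInt("abc", value) == kInvalidArgument);
}
```

The final catch (...) clause matters: if even one exception type slips past the wrapper, it will propagate into code that was compiled without the ability to unwind.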

Arguably more important than the overhead issue is the fact that exceptions are in some ways no better than goto statements. Joel Spolsky of Microsoft and Fog Creek Software fame argues that exceptions are in fact worse than gotos because they aren’t easily seen in the source code. A function that neither throws nor catches exceptions may nevertheless become involved in the stack-unwinding process, if it finds itself sandwiched between such functions in the call stack. And the unwinding process is itself imperfect: Your software can easily be left in an invalid state unless the programmer considers every possible way that an exception can be thrown, and handles it appropriately. This can make writing robust software difficult. When the possibility for exception throwing exists, pretty much every function in your codebase needs to be robust to the carpet being pulled out from under it and all its local objects destroyed whenever it makes a function call.

Another issue with exception handling is its cost. Although in theory modern exception handling frameworks don’t introduce additional runtime overhead in the error-free case, this is not necessarily true in practice. For example, the code that the compiler adds to your functions for unwinding the call stack when an exception occurs tends to produce an overall increase in code size. This might degrade I-cache performance, or cause the compiler to decide not to inline a function that it otherwise would have.

Clearly there are some pretty strong arguments for turning off exception handling in your game engine altogether. This is the approach employed at Naughty Dog and also on most of the projects I’ve worked on at Electronic Arts and Midway. In his capacity as Engine Director at Insomniac Games, Mike Acton has clearly stated his objection to the use of exception handling in runtime game code on numerous occasions. JPL and NASA also disallow exception handling in their mission-critical embedded software, presumably for the same reasons we tend to avoid it in the game industry. That said, your mileage certainly may vary. There is no perfect tool and no one right way to do anything. When used judiciously, exceptions can make your code easier to write and work with; just be careful out there!

There are many interesting articles on this topic on the web. Here’s one good thread that covers most of the key issues on both sides of the debate:

Exceptions and RAII

The “resource acquisition is initialization” pattern (RAII, see Section 3.1.1.6) is often used in conjunction with exception handling: The constructor attempts to acquire the desired resource, and throws an exception if it fails to do so. This is done to avoid the need for an if check to test the status of the object after it has been created: if the constructor returns without throwing an exception, we know for certain that the resource was successfully acquired.

However, the RAII pattern can be used even without exceptions. All it requires is a little discipline to check the status of each new resource object when it is first created. After that, all of the other benefits of RAII can be reaped. (Exceptions can also be replaced by assertion failures to signal the failure of some kinds of resource acquisitions.)
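As a rough sketch of RAII without exceptions, consider a small wrapper around a C file handle. The class name FileHandle, its isValid() member and the writeGreeting() function are hypothetical names chosen for this illustration; the only discipline required is the single validity check made immediately after construction.

```cpp
#include <cassert>
#include <cstdio>

// Minimal RAII wrapper around a C FILE handle. It never throws.
class FileHandle
{
public:
    FileHandle(const char* path, const char* mode)
        : m_file(std::fopen(path, mode)) // acquisition may fail silently
    {
    }

    ~FileHandle()
    {
        if (m_file)
            std::fclose(m_file); // released automatically on scope exit
    }

    // non-copyable, so the handle has exactly one owner
    FileHandle(const FileHandle&) = delete;
    FileHandle& operator=(const FileHandle&) = delete;

    bool isValid() const { return m_file != nullptr; }
    std::FILE* get() const { return m_file; }

private:
    std::FILE* m_file;
};

// Returns true on success, false if the file could not be opened or written.
bool writeGreeting(const char* path)
{
    FileHandle file(path, "w");
    if (!file.isValid()) // the one status check RAII-without-exceptions needs
        return false;
    return std::fputs("hello\n", file.get()) >= 0;
}
```

Every exit path from writeGreeting() closes the file if it was opened, which is the main benefit of RAII regardless of how failure is reported.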

3.2.3.3 Assertions

An assertion is a line of code that checks an expression. If the expression evaluates to true, nothing happens. But if the expression evaluates to false, the program is stopped, a message is printed and the debugger is invoked if possible.

Assertions check a programmer’s assumptions. They act like land mines for bugs. They check the code when it is first written to ensure that it is functioning properly. They also ensure that the original assumptions continue to hold for long periods of time, even when the code around them is constantly changing and evolving. For example, if a programmer changes code that used to work, but accidentally violates its original assumptions, they’ll hit the land mine. This immediately informs the programmer of the problem and permits him or her to rectify the situation with minimum fuss. Without assertions, bugs have a tendency to “hide out” and manifest themselves later in ways that are difficult and time-consuming to track down. But with assertions embedded in the code, bugs announce themselves the moment they are introduced—which is usually the best moment to fix the problem, while the code changes that caused the problem are fresh in the programmer’s mind. Steve Maguire provides an in-depth discussion of assertions in his must-read book, Writing Solid Code [35].

The cost of the assertion checks can usually be tolerated during development, but stripping out the assertions prior to shipping the game can buy back that little bit of crucial performance if necessary. For this reason assertions are generally implemented in such a way as to allow the checks to be stripped out of the executable in non-debug build configurations. In C, an assert() macro is provided by the standard library header file <assert.h>; in C++, it’s provided by the <cassert> header.

The standard library’s assert() is controlled by a single preprocessor symbol: its checks are active by default, and they are stripped whenever the NDEBUG symbol is defined at the point where the header is included, which is what most build systems arrange for non-debug builds. In a game engine, you may want finer-grained control over which build configurations retain assertions, and which configurations strip them out. For example, your game might support more than just debug and development build configurations; you might also have a shipping build with global optimizations enabled, and perhaps even a PGO build for use by profile-guided optimization tools (see Section 2.2.4). Or you might want to define different “flavors” of assertions: some that are always retained even in the shipping version of your game, and others that are stripped out of the shipping build. For these reasons, let’s take a look at how you can implement your own ASSERT() macro using the C/C++ preprocessor.

Assertion Implementation

Assertions are usually implemented via a combination of a #defined macro that evaluates to an if/else clause, a function that is called when the assertion fails (the expression evaluates to false), and a bit of assembly code that halts the program and breaks into the debugger when one is attached. Here’s a typical implementation:

#if ASSERTIONS_ENABLED

// define some inline assembly that causes a break

// into the debugger -- this will be different on each

// target CPU

#define debugBreak() asm { int 3 }

// check the expression and fail if it is false

#define ASSERT(expr) \

if (expr) { } \

else \

{ \

reportAssertionFailure(#expr, \

__FILE__, __LINE__); \

debugBreak(); \

}

#else

#define ASSERT(expr) // evaluates to nothing

#endif

Let’s break down this definition so we can see how it works:

The outer #if/#else/#endif construct is used to strip assertions from the code base. When ASSERTIONS_ENABLED is nonzero, the ASSERT() macro is defined in its full glory, and all assertion checks in the code will be included in the program. But when assertions are turned off, ASSERT(expr) evaluates to nothing, and all instances of it in the code are effectively removed.

The debugBreak() macro evaluates to whatever assembly-language instructions are required in order to cause the program to halt and the debugger to take charge (if one is connected). This differs from CPU to CPU, but it is usually a single assembly instruction.

The ASSERT() macro itself is defined using a full if/else statement (as opposed to a lone if). This is done so that the macro can be used in any context, even within other unbracketed if/else statements.

Here’s an example of what would happen if ASSERT() were defined using a solitary if:

// WARNING: NOT A GOOD IDEA!

#define ASSERT(expr) if (!(expr)) debugBreak()

void f()

{

if (a < 5)

ASSERT(a >= 0);

else

doSomething(a);

}

This expands to the following incorrect code:

void f()

{

if (a < 5)

if (!(a >= 0))

debugBreak();

else // oops! bound to the wrong if()!

doSomething(a);

}

The else clause of the ASSERT() macro does two things. It displays some kind of message to the programmer indicating what went wrong, and then it breaks into the debugger.

Notice the use of #expr as the first argument to the message display function. The pound (#) preprocessor operator causes the expression expr to be turned into a string, thereby allowing it to be printed out as part of the assertion failure message.

Notice also the use of __FILE__ and __LINE__. These compiler-defined macros expand to the name of the source file and the line number of the line of code on which they appear. By passing them into our message display function, we can print the exact location of the problem.

I highly recommend the use of assertions in your code. However, it’s important to be aware of their performance cost. You may want to consider defining two kinds of assertion macros. The regular ASSERT() macro can be left active in all builds, so that errors are easily caught even when not running in debug mode. A second assertion macro, perhaps called SLOW_ASSERT(), could be activated only in debug builds. This macro could then be used in places where the cost of assertion checking is too high to permit inclusion in release builds. Obviously SLOW_ASSERT() is of lower utility, because it is stripped out of the version of the game that your testers play every day. But at least these assertions become active when programmers are debugging their code.
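Here is one way the two-tier scheme might look. Treat it as a sketch rather than a definitive implementation: the switch SLOW_ASSERTIONS_ENABLED and the counter g_failureCount are hypothetical, the reporting function merely logs instead of halting so that the example can be run safely, and the macro is written as a conditional expression rather than the if/else form so that it behaves like an ordinary expression statement.

```cpp
#include <cassert>
#include <cstdio>

// Hypothetical configuration switches; a build system would normally set these.
#define ASSERTIONS_ENABLED 1
#define SLOW_ASSERTIONS_ENABLED 0

static int g_failureCount = 0; // for illustration only

// Hypothetical reporting hook. A real engine would also break into
// the debugger or halt the game here.
inline void reportAssertionFailure(const char* expr, const char* file, int line)
{
    ++g_failureCount;
    std::fprintf(stderr, "%s(%d): assertion failed: %s\n", file, line, expr);
}

#if ASSERTIONS_ENABLED
    // cheap checks: retained in every configuration that enables assertions
    #define ASSERT(expr) \
        ((expr) ? (void)0 : reportAssertionFailure(#expr, __FILE__, __LINE__))
#else
    #define ASSERT(expr) ((void)0)
#endif

#if ASSERTIONS_ENABLED && SLOW_ASSERTIONS_ENABLED
    // expensive checks: only present when explicitly switched on
    #define SLOW_ASSERT(expr) ASSERT(expr)
#else
    #define SLOW_ASSERT(expr) ((void)0)
#endif
```

With the settings shown, ASSERT(index < count) is checked, while SLOW_ASSERT(isSorted(list)) compiles away entirely, so its argument is never evaluated.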

It’s also extremely important to use assertions properly. They should be used to catch bugs in the program itself—never to catch user errors. Also, assertions should always cause the entire game to halt when they fail. It’s usually a bad idea to allow assertions to be skipped by testers, artists, designers and other non-engineers. (This is a bit like the boy who cried wolf: if assertions can be skipped, then they cease to have any significance, rendering them ineffective.) In other words, assertions should only be used to catch fatal errors. If it’s OK to continue past an assertion, then it’s probably better to notify the user of the error in some other way, such as with an on-screen message, or some ugly bright-orange 3D graphics.

Compile-Time Assertions

One weakness of assertions, as we’ve discussed them thus far, is that the conditions encoded within them are only checked at runtime. We have to run the program, and the code path in question must actually execute, in order for an assertion’s condition to be checked.

Sometimes the condition we’re checking within an assertion involves information that is entirely known at compile time. For example, let’s say we’re defining a struct that for some reason needs to be exactly 128 bytes in size. We want to add an assertion so that if another programmer (or a future version of yourself) decides to change the size of the struct, the compiler will give us an error message. In other words, we’d like to write something like this:

struct NeedsToBe128Bytes

{

U32 m_a;

F32 m_b;

// etc.

};

// sadly this doesn’t work…

ASSERT(sizeof(NeedsToBe128Bytes) == 128);

The problem of course is that the ASSERT() (or assert()) macro needs to be executable at runtime, and one can’t even put executable code at global scope in a .cpp file outside of a function definition. The solution to this problem is a compile-time assertion, also known as a static assertion.

Starting with C++11, the language itself provides a built-in static_assert declaration for exactly this purpose. So we can re-write the example above as follows:

struct NeedsToBe128Bytes

{

U32 m_a;

F32 m_b;

// etc.

};

static_assert(sizeof(NeedsToBe128Bytes) == 128,

"wrong size");

If you’re not using C++11, you can always roll your own STATIC_ASSERT() macro. It can be implemented in a number of different ways, but the basic idea is always the same: The macro places a declaration into your code that (a) is legal at file scope, (b) evaluates the desired expression at compile time rather than runtime, and (c) produces a compile error if and only if the expression is false. Some methods of defining STATIC_ASSERT() rely on compiler-specific details, but here’s one reasonably portable way to define it:

#define _ASSERT_GLUE(a, b) a ## b

#define ASSERT_GLUE(a, b) _ASSERT_GLUE(a, b)

#define STATIC_ASSERT(expr) \

enum \

{ \

ASSERT_GLUE(g_assert_fail_, __LINE__) \

= 1 / (int)(!!(expr)) \

}

STATIC_ASSERT(sizeof(int) == 4); // should pass

STATIC_ASSERT(sizeof(float) == 1); // should fail

This works by defining an anonymous enumeration containing a single enumerator. The name of the enumerator is made unique (within the translation unit) by “gluing” a fixed prefix such as g_assert_fail_ to a unique suffix—in this case, the line number on which the STATIC_ASSERT() macro is invoked. The value of the enumerator is set to 1 / (!!(expr)). The double negation !! ensures that expr has a Boolean value. This value is then cast to an int, yielding either 1 or 0 depending on whether the expression is true or false, respectively. If the expression is true, the enumerator will be set to the value 1/1 which is one. But if the expression is false, we’ll be asking the compiler to set the enumerator to the value 1/0 which is illegal, and will trigger a compile error.

When our STATIC_ASSERT() macro as defined above fails, Visual Studio 2015 produces a compile-time error message like this:

1>test.cpp(48): error C2131: expression did not evaluate to a constant

1> test.cpp(48): note: failure was caused by an undefined arithmetic operation

Here’s another way to define STATIC_ASSERT() using template specialization. In this example, we first check to see if we’re using C++11 or beyond. If so, we use the language’s built-in static_assert for maximum portability. Otherwise we fall back to our custom implementation.

#ifdef __cplusplus

#if __cplusplus >= 201103L

#define STATIC_ASSERT(expr) \

static_assert(expr, \

"static assert failed: " \

#expr)

#else

// declare a template but only define the

// true case (via specialization)

template<bool> class TStaticAssert;

template<> class TStaticAssert<true> {};

// ASSERT_GLUE() is the helper macro defined earlier

#define STATIC_ASSERT(expr) \

enum \

{ \

ASSERT_GLUE(g_assert_fail_, __LINE__) \

= sizeof(TStaticAssert<!!(expr)>) \

}

#endif

#endif

This implementation, using template specialization, may be preferable to the previous one using division by zero, because it produces a slightly better error message in Visual Studio 2015:

1>test.cpp(48): error C2027: use of undefined type 'TStaticAssert<false>'

1>test.cpp(48): note: see declaration of 'TStaticAssert<false>'

However, each compiler handles error reporting differently, so your mileage may vary. For more implementation ideas for compile-time assertions, one good reference is http://www.pixelbeat.org/programming/gcc/static_assert.html.

3.3 Data, Code and Memory Layout

3.3.1 Numeric Representations

Numbers are at the heart of everything that we do in game engine development (and software development in general). Every software engineer should understand how numbers are represented and stored by a computer. This section will provide you with the basics you’ll need throughout the rest of the book.

3.3.1.1 Numeric Bases

People think most naturally in base ten, also known as decimal notation. In this notation, ten distinct digits are used (0 through 9), and each digit from right to left represents the next highest power of 10. For example, the number $7803 = (7 \times 10^3) + (8 \times 10^2) + (0 \times 10^1) + (3 \times 10^0) = 7000 + 800 + 0 + 3$.

In computer science, mathematical quantities such as integers and real-valued numbers need to be stored in the computer’s memory. And as we know, computers store numbers in binary format, meaning that only the two digits 0 and 1 are available. We call this a base-two representation, because each digit from right to left represents the next highest power of 2. Computer scientists sometimes use a prefix of “0b” to represent binary numbers. For example, the binary number 0b1101 is equivalent to decimal 13, because 0b1101 = $(1 \times 2^3) + (1 \times 2^2) + (0 \times 2^1) + (1 \times 2^0) = 8 + 4 + 0 + 1 = 13$.

Another common notation popular in computing circles is hexadecimal, or base 16. In this notation, the 10 digits 0 through 9 and the six letters A through F are used; the letters A through F replace the decimal values 10 through 15, respectively. A prefix of “0x” is used to denote hex numbers in the C and C++ programming languages. This notation is popular because computers generally store data in groups of 8 bits known as bytes, and since a single hexadecimal digit represents 4 bits exactly, a pair of hex digits represents a byte. For example, the value 0xFF = 0b11111111 = 255 is the largest number that can be stored in 8 bits (1 byte). Each digit in a hexadecimal number, from right to left, represents the next power of 16. So, for example, 0xB052 = $(11 \times 16^3) + (0 \times 16^2) + (5 \times 16^1) + (2 \times 16^0) = (11 \times 4096) + (0 \times 256) + (5 \times 16) + (2 \times 1) = 45{,}138$.
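The positional rule shared by all three bases can be expressed in a few lines of code. The helper below, parseDigits(), is a hypothetical function written for this illustration; it accumulates a value digit by digit, multiplying by the base at each step, which is equivalent to summing each digit times the appropriate power of the base.

```cpp
#include <cassert>
#include <cstdint>

// Evaluates a string of digits in the given base (2 through 16).
// Assumes the string contains only valid digits for that base.
std::uint32_t parseDigits(const char* digits, std::uint32_t base)
{
    std::uint32_t value = 0;
    for (const char* p = digits; *p != '\0'; ++p)
    {
        const char c = *p;
        std::uint32_t digit = 0;
        if (c >= '0' && c <= '9')
            digit = static_cast<std::uint32_t>(c - '0');
        else if (c >= 'A' && c <= 'F')
            digit = 10u + static_cast<std::uint32_t>(c - 'A');
        else if (c >= 'a' && c <= 'f')
            digit = 10u + static_cast<std::uint32_t>(c - 'a');

        // shifting the running total up by one position multiplies it by
        // the base, so the leftmost digit ends up with the highest power
        value = value * base + digit;
    }
    return value;
}

// the compiler applies the same rule to hexadecimal literals
static_assert(0xB052 == 45138, "0xB052 should equal 45,138");
static_assert(0xFF == 255, "0xFF should be the largest 8-bit value");
```

For instance, parseDigits("1101", 2) and parseDigits("B052", 16) reproduce the two worked examples above.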

3.3.1.2 Signed and Unsigned Integers

In computer science, we use both signed and unsigned integers. Of course, the term “unsigned integer” is actually a bit of a misnomer—in mathematics, the whole numbers or natural numbers range from 0 (or 1) up to positive infinity, while the integers range from negative infinity to positive infinity. Nevertheless, we’ll use computer science lingo in this book and stick with the terms “signed integer” and “unsigned integer.”

Most modern personal computers and game consoles work most easily with integers that are 32 bits or 64 bits wide (although 8- and 16-bit integers are also used a great deal in game programming). To represent a 32-bit unsigned integer, we simply encode the value using binary notation (see above). The range of possible values for a 32-bit unsigned integer is 0x00000000 (0) to 0xFFFFFFFF (4,294,967,295).

To represent a signed integer in 32 bits, we need a way to differentiate between positive and negative values. One simple approach called the sign and magnitude encoding reserves the most significant bit as a sign bit. When this bit is zero, the value is positive, and when it is one, the value is negative. This leaves us 31 bits to represent the magnitude of the value, effectively cutting the range of possible magnitudes in half (but allowing both positive and negative forms of every distinct magnitude, including zero).

Most microprocessors use a slightly more efficient technique for encoding negative integers, called two’s complement notation. This notation has only one representation for the value zero, as opposed to the two representations possible with a simple sign bit (positive zero and negative zero). In 32-bit two’s complement notation, the value 0xFFFFFFFF is interpreted to mean −1, and negative values count down from there. Any value with the most significant bit set is considered negative. So values from 0x00000000 (0) to 0x7FFFFFFF (2,147,483,647) represent non-negative integers, and 0x80000000 (−2,147,483,648) to 0xFFFFFFFF (−1) represent negative integers.
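To make the two encodings concrete, the following sketch interprets the same 32-bit patterns both ways. The function names asTwosComplement() and decodeSignMagnitude() are hypothetical; the first relies on the fact that std::int32_t is required to be a two’s complement type.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <limits>

// Reinterprets a 32-bit pattern as a two's complement signed integer
// by copying the raw bytes into a std::int32_t.
std::int32_t asTwosComplement(std::uint32_t bits)
{
    std::int32_t value;
    std::memcpy(&value, &bits, sizeof(value));
    return value;
}

// Decodes the same pattern under the sign-and-magnitude scheme:
// bit 31 is the sign, bits 30..0 are the magnitude.
std::int32_t decodeSignMagnitude(std::uint32_t bits)
{
    const std::int32_t magnitude =
        static_cast<std::int32_t>(bits & 0x7FFFFFFFu);
    return (bits & 0x80000000u) ? -magnitude : magnitude;
}
```

Note how 0x80000000 decodes to “negative zero” under sign and magnitude, but to the most negative representable value under two’s complement.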

3.3.1.3 Fixed-Point Notation

Integers are great for representing whole numbers, but to represent fractions and irrational numbers we need a different format that expresses the concept of a decimal point.

One early approach taken by computer scientists was to use fixed-point notation. In this notation, one arbitrarily chooses how many bits will be used to represent the whole part of the number, and the rest of the bits are used to represent the fractional part. As we move from left to right (i.e., from the most significant bit to the least significant bit), the magnitude bits represent decreasing powers of two (…, 16, 8, 4, 2, 1), while the fractional bits represent decreasing inverse powers of two (½, ¼, ⅛, 1/16, …). For example, to store the number −173.25 in 32-bit fixed-point notation with one sign bit, 16 bits for the magnitude and 15 bits for the fraction, we first convert the sign, the whole part and the fractional part into their binary equivalents individually (negative = 0b1, 173 = 0b0000000010101101 and 0.25 = ¼ = 0b010000000000000). Then we pack those values together into a 32-bit integer. The final result is 0x8056A000. This is illustrated in Figure 3.4.

The problem with fixed-point notation is that it constrains both the range of magnitudes that can be represented and the amount of precision we can achieve in the fractional part. Consider a 32-bit fixed-point value with 16 bits for the magnitude, 15 bits for the fraction and a sign bit. This format can only represent magnitudes up to ±65,535, which isn’t particularly large. To overcome this problem, we employ a floating-point representation.
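The packing of −173.25 described above can be reproduced with a short sketch. The functions encodeFixed() and decodeFixed() are hypothetical helpers for the specific layout used in the text (1 sign bit, 16 magnitude bits, 15 fraction bits); they make no attempt to handle out-of-range inputs.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Layout from the text: 1 sign bit, 16 magnitude bits, 15 fraction bits.
constexpr int kFractionBits = 15;
constexpr std::uint32_t kSignBit = 0x80000000u;

// Assumes |value| < 65536. Fraction bits beyond the 15th are truncated.
std::uint32_t encodeFixed(double value)
{
    const std::uint32_t sign = (value < 0.0) ? kSignBit : 0u;
    // multiplying by 2^15 moves the binary point past the fraction bits
    const double scaled =
        std::fabs(value) * static_cast<double>(1u << kFractionBits);
    return sign | static_cast<std::uint32_t>(scaled);
}

double decodeFixed(std::uint32_t bits)
{
    const double magnitude =
        static_cast<double>(bits & ~kSignBit)
        / static_cast<double>(1u << kFractionBits);
    return (bits & kSignBit) ? -magnitude : magnitude;
}
```

Encoding −173.25 this way yields 0x8056A000, matching the result in the text.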

3.3.1.4 Floating-Point Notation

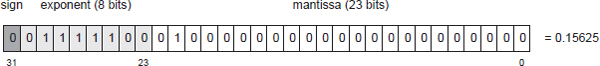

In floating-point notation, the position of the decimal place is arbitrary and is specified with the help of an exponent. A floating-point number is broken into three parts: the mantissa, which contains the relevant digits of the number on both sides of the decimal point, the exponent, which indicates where in that string of digits the decimal point lies, and a sign bit, which of course indicates whether the value is positive or negative. There are all sorts of different ways to lay out these three components in memory, but the most common standard is IEEE-754. It states that a 32-bit floating-point number will be represented with the sign in the most significant bit, followed by 8 bits of exponent and finally 23 bits of mantissa.

The value v represented by a sign bit s, an exponent e and a mantissa m is $v = s \times 2^{(e-127)} \times (1 + m)$.

The sign bit s has the value +1 or −1. The exponent e is biased by 127 so that negative exponents can be easily represented. The mantissa begins with an implicit 1 that is not actually stored in memory, and the rest of the bits are interpreted as inverse powers of two. Hence the value represented is really 1 + m, where m is the fractional value stored in the mantissa.

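For example, the bit pattern shown in Figure 3.5 represents the value 0.15625, because s = 0 (indicating a positive number), e = 0b01111100 = 124 and m = 0b0100… = $0 \times 2^{-1} + 1 \times 2^{-2}$ = ¼. Therefore, $v = (+1) \times 2^{(124-127)} \times (1 + \tfrac{1}{4}) = 2^{-3} \times \tfrac{5}{4} = 0.125 \times 1.25 = 0.15625$.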
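We can check this arithmetic by pulling a float apart in code. The sketch below assumes that float is a 32-bit IEEE-754 type (verified by the static_asserts); FloatParts, splitFloat() and evaluate() are hypothetical names, and evaluate() only handles ordinary normalized values.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <cstring>
#include <limits>

static_assert(sizeof(float) == 4, "sketch assumes a 32-bit float");
static_assert(std::numeric_limits<float>::is_iec559,
              "sketch assumes IEEE-754 floats");

struct FloatParts
{
    std::uint32_t sign;     // 1 bit
    std::uint32_t exponent; // 8 bits, biased by 127
    std::uint32_t mantissa; // 23 bits, implicit leading 1 not included
};

FloatParts splitFloat(float f)
{
    std::uint32_t bits;
    std::memcpy(&bits, &f, sizeof(bits)); // well-defined way to read the bits
    return { bits >> 31, (bits >> 23) & 0xFFu, bits & 0x7FFFFFu };
}

// Applies v = s * 2^(e-127) * (1 + m) to a normalized value
// (biased exponent between 1 and 254).
double evaluate(const FloatParts& p)
{
    const double s = p.sign ? -1.0 : 1.0;
    const double m = static_cast<double>(p.mantissa) / 8388608.0; // 2^23
    return s * std::ldexp(1.0 + m, static_cast<int>(p.exponent) - 127);
}
```

Splitting 0.15625f gives a sign of 0, a biased exponent of 124 and a mantissa field of 0x200000 (that is, m = ¼), and evaluate() turns those parts back into 0.15625.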

The Trade-Off between Magnitude and Precision

The precision of a floating-point number increases as the magnitude decreases, and vice versa. This is because there are a fixed number of bits in the mantissa, and these bits must be shared between the whole part and the fractional part of the number. If a large percentage of the bits are spent representing a large magnitude, then a small percentage of bits are available to provide fractional precision. In physics the term significant digits is typically used to describe this concept (http://en.wikipedia.org/wiki/Significant_digits).

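To understand the trade-off between magnitude and precision, let’s look at the largest possible floating-point value, FLT_MAX ≈ $3.403 \times 10^{38}$, whose representation in 32-bit IEEE floating-point format is 0x7F7FFFFF. Let’s break this down:

The 23 mantissa bits are all ones. Together with the implicit leading one, that gives 24 consecutive binary ones, or 0x00FFFFFF in hexadecimal, which is the largest value the mantissa can hold.

The 8-bit exponent field is 0xFE = 254. An exponent field of 255 is reserved in IEEE-754 for special values such as infinity and not-a-number (NaN), so 254 is the largest exponent available to ordinary numbers; subtracting the bias of 127 yields an exponent of 127.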

So FLT_MAX is 0x00FFFFFF × $2^{127}$ (reading the 24 ones as the binary value 1.11…1), which equals 0xFFFFFF00000000000000000000000000. In other words, our 24 binary ones were shifted up by 127 bit positions, leaving 127 − 23 = 104 binary zeros (or 104/4 = 26 hexadecimal zeros) after the least significant digit of the mantissa. Those trailing zeros don’t correspond to any actual bits in our 32-bit floating-point value; they just appear out of thin air because of the exponent. If we were to subtract a small number (where “small” means any number composed of fewer than 26 hexadecimal digits) from FLT_MAX, the result would still be FLT_MAX, because those 26 least significant hexadecimal digits don’t really exist!

The opposite effect occurs for floating-point values whose magnitudes are much less than one. In this case, the exponent is large but negative, and the significant digits are shifted in the opposite direction. We trade the ability to represent large magnitudes for high precision. In summary, we always have the same number of significant digits (or really significant bits) in our floating-point numbers, and the exponent can be used to shift those significant bits into higher or lower ranges of magnitude.
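The loss of precision at large magnitudes is easy to observe directly. In this sketch, floatBits() and absorbedByFltMax() are hypothetical helpers; the volatile qualifier is used only to force the result to be rounded to a genuine 32-bit float before the comparison, and the code assumes the default round-to-nearest mode.

```cpp
#include <cassert>
#include <cfloat>
#include <cstdint>
#include <cstring>

// Returns the raw bit pattern of a 32-bit float.
std::uint32_t floatBits(float f)
{
    static_assert(sizeof(float) == sizeof(std::uint32_t),
                  "sketch assumes a 32-bit float");
    std::uint32_t bits;
    std::memcpy(&bits, &f, sizeof(bits));
    return bits;
}

// Returns true if subtracting delta from FLT_MAX leaves FLT_MAX unchanged.
bool absorbedByFltMax(float delta)
{
    volatile float result = FLT_MAX - delta; // force rounding to float
    return result == FLT_MAX;
}
```

A value as large as 1.0e20f vanishes when subtracted from FLT_MAX, whereas 1.0e32f, which is several times larger than the spacing between adjacent floats at that magnitude, does change the result.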

Subnormal Values

Another subtlety to notice is that there is a finite gap between zero and the smallest nonzero value we can represent with floating-point notation (as it has been described thus far). The smallest nonzero magnitude we can represent is FLT_MIN = $2^{-126} \approx 1.175 \times 10^{-38}$, which has a binary representation of 0x00800000 (i.e., the exponent is 0x01, or −126 after subtracting the bias, and the mantissa is all zeros except for the implicit leading one). The next smallest valid value is zero, so there is a finite gap between the values −FLT_MIN and +FLT_MIN. This underscores the fact that the real number line is quantized when using a floating-point representation. (Note that the C++ standard library exposes FLT_MIN as the rather more verbose std::numeric_limits<float>::min(). We’ll stick to FLT_MIN in this book for brevity.)

The gap around zero can be filled by employing an extension to the floating-point representation known as denormalized values, also known as subnormal values. With this extension, any floating-point value with a biased exponent of 0 is interpreted as a subnormal number. The exponent is treated as if it had been a 1 instead of a 0, and the implicit leading 1 that normally sits in front of the bits of the mantissa is changed to a 0. This has the effect of filling the gap between −FLT_MIN and +FLT_MIN with a linear sequence of evenly-spaced subnormal values. The positive subnormal float that is closest to zero is represented by the constant FLT_TRUE_MIN.

The benefit of using subnormal values is that it provides greater precision near zero. For example, it ensures that the following two expressions are equivalent, even for values of a and b that are very close to FLT_MIN:

if (a == b) {…}

if (a - b == 0.0f) {…}

Without subnormal values, the expression a − b could evaluate to zero even when a != b.
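The following sketch demonstrates this behavior for two values near FLT_MIN. It assumes that subnormals are enabled, which is the default on most desktop platforms unless flush-to-zero has been switched on (for example by aggressive fast-math compiler options). The helper names are hypothetical, and std::numeric_limits<float>::denorm_min() is used as the standard C++11 spelling of the smallest positive subnormal.

```cpp
#include <cassert>
#include <cfloat>
#include <cmath>
#include <limits>

// Returns true if a - b, rounded to a 32-bit float, is nonzero.
bool differenceIsNonzero(float a, float b)
{
    volatile float diff = a - b; // force rounding to float
    return diff != 0.0f;
}

// Returns true if x is a subnormal (denormalized) value.
bool isSubnormal(float x)
{
    return std::fpclassify(x) == FP_SUBNORMAL;
}

// Smallest positive subnormal float.
float smallestSubnormal()
{
    return std::numeric_limits<float>::denorm_min();
}
```

With a = 1.5f * FLT_MIN and b = FLT_MIN, the difference is half of FLT_MIN, which is too small to be a normalized float but is exactly representable as a subnormal, so a − b is nonzero just as a != b promises.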

Machine Epsilon

For a particular floating-point representation, the machine epsilon is defined to be the smallest floating-point value ε that satisfies the equation, 1 + ε ≠ 1. For an IEEE-754 floating-point number, with its 23 bits of precision, the value of ε is $2^{-23}$, which is approximately $1.192 \times 10^{-7}$. The most significant digit of ε falls just inside the range of significant digits in the value 1.0, so adding any value smaller than ε to 1.0 has no effect. In other words, any new bits contributed by adding a value smaller than ε will get “chopped off” when we try to fit the sum into a mantissa with only 23 bits.
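The standard library exposes the machine epsilon as FLT_EPSILON and std::numeric_limits<float>::epsilon(), so the claim is easy to test. One caveat on this sketch: under the default round-to-nearest mode, a value a little larger than ε/2 can still round the sum up to the next float, so the example uses ε/4 as its “too small” value. The helper name changesOne() is hypothetical.

```cpp
#include <cassert>
#include <cfloat>
#include <cmath>
#include <limits>

// Returns true if adding delta to 1.0f produces a different float.
bool changesOne(float delta)
{
    volatile float sum = 1.0f + delta; // force rounding to float
    return sum != 1.0f;
}

// Machine epsilon for float, as reported by the standard library.
float machineEpsilon()
{
    return std::numeric_limits<float>::epsilon();
}
```

On an IEEE-754 platform machineEpsilon() equals 2^-23, changesOne(machineEpsilon()) is true, and changesOne(machineEpsilon() / 4.0f) is false.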

Units in the Last Place (ULP)

Consider two floating-point numbers which are identical in all respects except for the value of the least-significant bit in their mantissas. These two values are said to differ by one unit in the last place (1 ULP). The actual value of 1 ULP changes depending on the exponent. For example, the floating-point value 1.0f has an unbiased exponent of zero, and a mantissa in which all bits are zero (except for the implicit leading 1). At this exponent, 1 ULP is equal to the machine epsilon ($2^{-23}$). If we change the exponent to a 1, yielding the value 2.0f, the value of 1 ULP becomes equal to two times the machine epsilon. And if the exponent is 2, yielding the value 4.0f, the value of 1 ULP is four times the machine epsilon. In general, if a floating-point value’s unbiased exponent is x, then $1\ \text{ULP} = 2^x \cdot \varepsilon$.

The concept of units in the last place illustrates the idea that the precision of a floating-point number depends on its exponent, and is useful for quantifying the error inherent in any floating-point calculation. It can also be useful for finding the floating-point value that is the next largest representable value relative to a known value, or conversely the next smallest representable value relative to that value. This in turn can be useful for converting a greater-than-or-equal comparison into a greater-than comparison. Mathematically, the condition a ≥ b is equivalent to the condition a + 1 ULP > b. We use this little “trick” in the Naughty Dog engine to simplify some logic in our character dialog system. In this system, simple comparisons can be used to select different lines of dialog for the characters to say. Rather than supporting all possible comparison operators, we only support greater-than and less-than checks, and we handle greater-than-or-equal-to and less-than-or-equal-to by adding or subtracting 1 ULP to or from the value being compared.
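In C++11 and later, std::nextafter() returns the adjacent representable value in a given direction, which makes both ideas easy to sketch. The functions ulpAt() and greaterOrEqualViaUlp() are hypothetical names for this illustration and are not taken from the Naughty Dog engine; they assume finite inputs.

```cpp
#include <cassert>
#include <cmath>
#include <limits>

// Size of 1 ULP at x: the gap between x and the next larger float.
float ulpAt(float x)
{
    return std::nextafter(x, std::numeric_limits<float>::infinity()) - x;
}

// Implements a >= b using only a greater-than comparison,
// by nudging a up by exactly 1 ULP first.
bool greaterOrEqualViaUlp(float a, float b)
{
    const float aPlusOneUlp =
        std::nextafter(a, std::numeric_limits<float>::infinity());
    return aPlusOneUlp > b;
}
```

Evaluating ulpAt() at 1.0f, 2.0f and 4.0f yields ε, 2ε and 4ε respectively, in agreement with the rule above.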

Impact of Floating-Point Precision on Software

The concepts of limited precision and the machine epsilon have real impacts on game software. For example, let’s say we use a floating-point variable to track absolute game time in seconds. How long can we run our game before the magnitude of our clock variable gets so large that adding 1/30th of a second to it no longer changes its value? The answer is about 12.14 days, or $2^{20}$ seconds. That’s longer than most games will be left running, so we can probably get away with using a 32-bit floating-point clock measured in seconds in a game. But clearly it’s important to understand the limitations of the floating-point format so that we can predict potential problems and take steps to avoid them when necessary.
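This figure can be verified with a couple of lines of code. In the sketch below, clockStillAdvances() and secondsToDays() are hypothetical helpers, and the default round-to-nearest mode is assumed. At 2^20 seconds, 1 ULP is 2^20 × 2^-23 = 1/8 of a second, so a time step of 1/30 of a second is less than half an ULP and is rounded away.

```cpp
#include <cassert>
#include <cmath>

// Returns true if adding dt to the clock value changes it.
bool clockStillAdvances(float seconds, float dt)
{
    volatile float next = seconds + dt; // force rounding to float
    return next != seconds;
}

// Converts a duration in seconds to days.
double secondsToDays(double seconds)
{
    return seconds / (60.0 * 60.0 * 24.0);
}
```

At 2^19 seconds (524,288) the clock still advances, although only in coarse steps, while at 2^20 seconds (1,048,576) it stops moving altogether.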

IEEE Floating-Point Bit Tricks

See [9, Section 2.1] for a few really useful IEEE floating-point “bit tricks” that can make certain floating-point calculations lightning fast.

3.3.2 Primitive Data Types

C and C++ provide a number of primitive data types. The C and C++ standards provide guidelines on the relative sizes and signedness of these data types, but each compiler is free to define the types slightly differently in order to provide maximum performance on the target hardware.

char. A char is usually 8 bits and is generally large enough to hold an ASCII or UTF-8 character (see Section 6.4.4.1). Some compilers define char to be signed, while others use unsigned chars by default.

int, short, long. An int is supposed to hold a signed integer value that is the most efficient size for the target platform; it is usually defined to be 32 bits wide on a 32-bit CPU architecture, such as Pentium 4. On 64-bit architectures, such as Intel Core i7, most mainstream compilers still define int to be 32 bits wide, although the size of an int is also dependent upon other factors such as compiler options and the target operating system. A short is intended to be smaller than an int and is 16 bits on many machines. A long is as large as or larger than an int and may be 32 or 64 bits wide, or even wider, again depending on CPU architecture, compiler options and the target OS.

float. On most modern compilers, a float is a 32-bit IEEE-754 floating-point value.

double. A double is a double-precision (i.e., 64-bit) IEEE-754 floating-point value.

bool. A bool is a true/false value. The size of a bool varies widely across different compilers and hardware architectures. It is never implemented as a single bit, but some compilers define it to be 8 bits while others use a full 32 bits.

Portable Sized Types

The built-in primitive data types in C and C++ were designed to be portable and therefore nonspecific. However, in many software engineering endeavors, including game engine programming, it is often important to know exactly how wide a particular variable is.

Before C++11, programmers had to rely on non-portable sized types provided by their compiler. For example, the Visual Studio C/C++ compiler defined the following extended keywords for declaring variables that are an explicit number of bits wide: __int8, __int16, __int32 and __int64. Most other compilers have their own “sized” data types, with similar semantics but slightly different syntax.

Because of these differences between compilers, most game engines achieved source code portability by defining their own custom sized types. For example, at Naughty Dog we use the following sized types:

F32 is a 32-bit IEEE-754 floating-point value.

U8, I8, U16, I16, U32, I32, U64 and I64 are unsigned and signed 8-, 16-, 32- and 64-bit integers, respectively.

U32F and I32F are “fast” unsigned and signed 32-bit values, respectively. Each of these data types contains a value that is at least 32 bits wide, but may be wider if that would result in faster code on the target CPU.

<cstdint>

The C++11 standard library introduces a set of standardized sized integer types. They are declared in the <cstdint> header, and they include the signed types std::int8_t, std::int16_t, std::int32_t and std::int64_t and the unsigned types std::uint8_t, std::uint16_t, std::uint32_t and std::uint64_t, along with “fast” variants (like the I32F and U32F types we defined at Naughty Dog). These types free the programmer from having to “wrap” compiler-specific types in order to achieve portability. For a complete list of these sized types, see http://en.cppreference.com/w/cpp/types/integer.
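As an illustration, engine-style aliases like the ones described earlier can be layered on top of <cstdint> in a few lines. The alias names below mirror the naming convention mentioned in the text, but the definitions themselves and the PackedHeader struct are assumptions made for this sketch, not the actual Naughty Dog declarations.

```cpp
#include <cassert>
#include <climits>
#include <cstdint>
#include <limits>

// Hypothetical engine-style aliases built on the standard sized types.
using U8  = std::uint8_t;
using I8  = std::int8_t;
using U16 = std::uint16_t;
using I16 = std::int16_t;
using U32 = std::uint32_t;
using I32 = std::int32_t;
using U64 = std::uint64_t;
using I64 = std::int64_t;
using F32 = float;

// "Fast" variants: at least 32 bits, possibly wider.
using U32F = std::uint_fast32_t;
using I32F = std::int_fast32_t;

// Compile-time checks that the widths are what we expect.
static_assert(sizeof(U32) * CHAR_BIT == 32, "U32 must be exactly 32 bits");
static_assert(sizeof(I64) * CHAR_BIT == 64, "I64 must be exactly 64 bits");
static_assert(sizeof(U32F) * CHAR_BIT >= 32, "U32F must be at least 32 bits");
static_assert(sizeof(F32) * CHAR_BIT == 32, "F32 is assumed to be 32 bits");

// Sized types make the layout of a structure predictable.
struct PackedHeader
{
    U32 m_magic;
    U16 m_version;
    U16 m_flags;
};
static_assert(sizeof(PackedHeader) == 8, "PackedHeader should be 8 bytes");
```

Combining sized types with static assertions in this way documents layout assumptions right next to the data structures that depend on them.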

OGRE’s Primitive Data Types

OGRE defines a number of sized types of its own. Ogre::uint8, Ogre::uint16 and Ogre::uint32 are the basic unsigned sized integral types.

Ogre::Real defines a real floating-point value. It is usually defined to be 32 bits wide (equivalent to a float), but it can be redefined globally to be 64 bits wide (like a double) by defining the preprocessor macro OGRE_DOUBLE_PRECISION to 1. This ability to change the meaning of Ogre::Real is generally only used if one’s game has a particular requirement for double-precision math, which is rare. Graphics chips (GPUs) typically perform their math with 32-bit or 16-bit floats, the CPU/FPU is also usually faster when working in single-precision, and SIMD vector instructions operate on 128-bit registers that contain four 32-bit floats each. Hence, most games tend to stick to single-precision floating-point math.

The data types Ogre::uchar, Ogre::ushort, Ogre::uint and Ogre::ulong are just shorthand notations for C/C++’s unsigned char, unsigned short, unsigned int and unsigned long, respectively. As such, they are no more or less useful than their native C/C++ counterparts.

The types Ogre::Radian and Ogre::Degree are particularly interesting. These classes are wrappers around a simple Ogre::Real value. The primary role of these types is to permit the angular units of hard-coded literal constants to be documented and to provide automatic conversion between the two unit systems. In addition, the type Ogre::Angle represents an angle in the current “default” angle unit. The programmer can define whether the default will be radians or degrees when the OGRE application first starts up.
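To illustrate the idea of unit-carrying angle types, here is a minimal sketch of two wrapper classes that convert between each other. These are hypothetical classes in a namespace called sketch, written only to show the technique; they are not OGRE's actual implementation, and arcLength() is likewise an invented example function.

```cpp
namespace sketch {

constexpr float kPi = 3.14159265f;

class Degree;  // forward declaration so that Radian can convert from it

// Hypothetical radian wrapper (not OGRE's actual class).
class Radian
{
public:
    constexpr explicit Radian(float radians) : m_value(radians) {}
    constexpr Radian(const Degree& degrees);  // implicit unit conversion
    constexpr float value() const { return m_value; }
private:
    float m_value;
};

// Hypothetical degree wrapper (not OGRE's actual class).
class Degree
{
public:
    constexpr explicit Degree(float degrees) : m_value(degrees) {}
    constexpr Degree(const Radian& radians)
        : m_value(radians.value() * 180.0f / kPi) {}
    constexpr float value() const { return m_value; }
private:
    float m_value;
};

constexpr Radian::Radian(const Degree& degrees)
    : m_value(degrees.value() * kPi / 180.0f) {}

// A function that wants radians documents that fact in its signature.
constexpr float arcLength(float radius, Radian angle)
{
    return radius * angle.value();
}

} // namespace sketch
```

Because the float constructors are explicit, a call such as arcLength(2.0f, 1.5f) does not compile; the caller must write Radian(1.5f) or Degree(90.0f), which documents the unit of the literal at the call site.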

Perhaps surprisingly, OGRE does not provide a number of sized primitive data types that are commonplace in other game engines. For example, it defines no signed 8-, 16- or 64-bit integral types. If you are writing a game engine on top of OGRE, you will probably find yourself defining these types manually at some point.

3.3.2.1 Multibyte Values and Endianness

Values that are larger than eight bits (one byte) wide are called multibyte quantities. They’re commonplace on any software project that makes use of integers and floating-point values that are 16 bits or wider. For example, the integer value 4660 = 0x1234 is represented by the two bytes 0x12 and 0x34. We call 0x12 the most significant byte and 0x34 the least significant byte. In a 32-bit value, such as 0xABCD1234, the most-significant byte is 0xAB and the least-significant is 0x34. The same concepts apply to 64-bit integers and to 32- and 64-bit floating-point values as well.

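Multibyte integers can be stored into memory in one of two ways, and different microprocessors may differ in their choice of storage method (see Figure 3.6). A little-endian processor stores the least significant byte of a multibyte value at the lowest memory address, whereas a big-endian processor stores the most significant byte at the lowest memory address.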

U32 value = 0xABCD1234;

U8* pBytes = (U8*)&value;

Figure 3.6. Big- and little-endian representations of the value 0xABCD1234.
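The byte layout in the figure can be inspected at runtime. The sketch below looks at the first byte of 0xABCD1234 in memory to decide which ordering the host uses; the function names are hypothetical and chosen only for this illustration.

```cpp
#include <cassert>
#include <cstdint>

// Returns true if the least significant byte sits at the lowest address.
inline bool isLittleEndian()
{
    const std::uint32_t value = 0xABCD1234u;
    // Inspecting an object through an unsigned char pointer is permitted.
    const unsigned char* pBytes = reinterpret_cast<const unsigned char*>(&value);
    return pBytes[0] == 0x34;
}

// Returns true if the most significant byte sits at the lowest address.
inline bool isBigEndian()
{
    const std::uint32_t value = 0xABCD1234u;
    const unsigned char* pBytes = reinterpret_cast<const unsigned char*>(&value);
    return pBytes[0] == 0xAB;
}

// Sanity check: a conventional host is exactly one of the two.
inline void checkHostByteOrder()
{
    assert(isLittleEndian() != isBigEndian());
}
```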

Most programmers don’t need to think much about endianness. However, when you’re a game programmer, endianness can become a bit of a thorn in your side. This is because games are usually developed on a Windows or Linux machine running an Intel Pentium processor (which is little-endian), but run on a console such as the Wii, Xbox 360 or PlayStation 3—all three of which utilize a variant of the PowerPC processor (which can be configured to use either endianness, but is big-endian by default). Now imagine what happens when you generate a data file for consumption by your game engine on an Intel processor and then try to load that data file into your engine running on a PowerPC processor. Any multibyte value that you wrote out into that data file will be stored in little-endian format. But when the game engine reads the file, it expects all of its data to be in big-endian format. The result? You’ll write 0xABCD1234, but you’ll read 0x3412CDAB, and that’s clearly not what you intended!

There are at least two solutions to this problem.

Integer Endian-Swapping

Endian-swapping an integer is not conceptually difficult. You simply start at the most significant byte of the value and swap it with the least significant byte; you continue this process until you reach the halfway point in the value. For example, 0xA7891023 would become 0x231089A7.

The only tricky part is knowing which bytes to swap. Let’s say you’re writing the contents of a C struct or C++ class from memory out to a file. To properly endian-swap this data, you need to keep track of the locations and sizes of each data member in the struct and swap each one appropriately based on its size. For example, the structure

struct Example

{

U32 m_a;

U16 m_b;

U32 m_c;

};

might be written out to a data file as follows:

void writeExampleStruct(Example& ex, Stream& stream)

{

stream.writeU32(swapU32(ex.m_a));

stream.writeU16(swapU16(ex.m_b));

stream.writeU32(swapU32(ex.m_c));

}

and the swap functions might be defined like this:

inline U16 swapU16(U16 value)

{

return ((value & 0x00FF) << 8)

| ((value & 0xFF00) >> 8);

}

inline U32 swapU32(U32 value)

{

return ((value & 0x000000FF) << 24)

| ((value & 0x0000FF00) << 8)

| ((value & 0x00FF0000) >> 8)

| ((value & 0xFF000000) >> 24);

}

You cannot simply cast the Example object into an array of bytes and blindly swap the bytes using a single general-purpose function. We need to know both which data members to swap and how wide each member is, and each data member must be swapped individually.
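To make the per-member requirement concrete, the sketch below swaps an Example one field at a time. swapExample() is a hypothetical helper that does not appear in the listings above, and the type aliases assume <cstdint>; the swap functions are constexpr only so that the results can be checked at compile time.

```cpp
#include <cstdint>

using U16 = std::uint16_t;
using U32 = std::uint32_t;

struct Example
{
    U32 m_a;
    U16 m_b;
    U32 m_c;
};

constexpr U16 swapU16(U16 value)
{
    return static_cast<U16>(((value & 0x00FFu) << 8)
                          | ((value & 0xFF00u) >> 8));
}

constexpr U32 swapU32(U32 value)
{
    return ((value & 0x000000FFu) << 24)
         | ((value & 0x0000FF00u) << 8)
         | ((value & 0x00FF0000u) >> 8)
         | ((value & 0xFF000000u) >> 24);
}

// Hypothetical helper: swaps each member according to its own width.
constexpr Example swapExample(const Example& ex)
{
    return Example{ swapU32(ex.m_a), swapU16(ex.m_b), swapU32(ex.m_c) };
}

// Each member is reversed within its own bounds; the members stay in order.
static_assert(swapExample(Example{0xABCD1234u, 0x1234u, 0xA7891023u}).m_a
              == 0x3412CDABu, "m_a swapped as a 32-bit value");
static_assert(swapExample(Example{0xABCD1234u, 0x1234u, 0xA7891023u}).m_b
              == 0x3412u, "m_b swapped as a 16-bit value");
```

Reversing all of the bytes of the struct in one pass would instead move m_c's bytes to the front and scramble any padding between the members, which is why the swap has to follow the layout of the data.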

Some compilers provide built-in endian-swapping functions, freeing you from having to write your own. For example, gcc offers a family of built-in functions named __builtin_bswapXX() for performing 16-, 32- and 64-bit endian swaps. However, such compiler-specific facilities are of course non-portable.
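One way to benefit from a compiler built-in without giving up portability is to hide it behind a wrapper. The following is a minimal sketch under that assumption; swapU32Portable() is a hypothetical name, and only the GCC/Clang built-in mentioned above is used, with a shift-and-mask fallback for every other compiler.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical wrapper: uses the GCC/Clang built-in when it is available
// and falls back to shifts and masks everywhere else.
inline std::uint32_t swapU32Portable(std::uint32_t value)
{
#if defined(__GNUC__) || defined(__clang__)
    return __builtin_bswap32(value);
#else
    return ((value & 0x000000FFu) << 24)
         | ((value & 0x0000FF00u) << 8)
         | ((value & 0x00FF0000u) >> 8)
         | ((value & 0xFF000000u) >> 24);
#endif
}

// Both code paths must agree with the worked examples in the text.
inline void checkSwapU32Portable()
{
    assert(swapU32Portable(0xABCD1234u) == 0x3412CDABu);
    assert(swapU32Portable(swapU32Portable(0xA7891023u)) == 0xA7891023u);
}
```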

Floating-Point Endian-Swapping

As we’ve seen, an IEEE-754 floating-point value has a detailed internal structure involving some bits for the mantissa, some bits for the exponent and a sign bit. However, you can endian-swap it just as if it were an integer, because bytes are bytes. You merely reinterpret the bit pattern of your float as if it were a std::int32_t, perform the endian swapping operation, and then reinterpret the result as a float again.

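You can reinterpret floats as integers by using C++’s reinterpret_cast operator on a pointer to the float, and then dereferencing the type-cast pointer; this is known as type punning. But punning can lead to optimization bugs when strict aliasing is enabled. (See http://www.cocoawithlove.com/2008/04/using-pointers-to-recast-in-c-is-bad.html for an excellent description of this problem.) A widely used alternative is a union. Strictly speaking, the C++ standard leaves the result of reading a union member other than the one most recently written undefined, so the fully portable approach is to copy the bytes with std::memcpy; in practice, however, the major compilers support the union idiom, which looks like this: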

union U32F32

{

U32 m_asU32;

F32 m_asF32;

};

inline F32 swapF32(F32 value)

{

U32F32 u;

u.m_asF32 = value;

// endian-swap as integer

u.m_asU32 = swapU32(u.m_asU32);

return u.m_asF32;

}
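Because reading a union member other than the one last written is not sanctioned by the C++ standard, a variant based on std::memcpy is sometimes preferred. The following is a minimal sketch of that approach rather than a replacement for the listing above; it assumes that float is 32 bits wide, and it uses the <cstdint> types directly instead of the U32 and F32 aliases.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

inline std::uint32_t swapU32(std::uint32_t value)
{
    return ((value & 0x000000FFu) << 24)
         | ((value & 0x0000FF00u) << 8)
         | ((value & 0x00FF0000u) >> 8)
         | ((value & 0xFF000000u) >> 24);
}

inline float swapF32(float value)
{
    static_assert(sizeof(float) == sizeof(std::uint32_t),
                  "this sketch assumes a 32-bit float");

    std::uint32_t bits;
    std::memcpy(&bits, &value, sizeof(bits));     // float -> raw bits
    bits = swapU32(bits);                         // endian-swap as integer

    float result;
    std::memcpy(&result, &bits, sizeof(result));  // raw bits -> float
    return result;
}

// Swapping twice must restore the original value.
inline void checkSwapF32()
{
    assert(swapF32(swapF32(1.5f)) == 1.5f);
}
```

Optimizing compilers generally turn these fixed-size memcpy calls into plain register moves, so this form is not expected to cost more than the union.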

3.3.3 Kilobytes versus Kibibytes

You’ve probably used metric (SI) units like kilobytes (kB) and megabytes (MB) to describe quantities of memory. However, the use of these units to describe quantities of memory that are measured in powers of two isn’t strictly correct. When a computer programmer speaks of a “kilobyte,” she or he usually means 1024 bytes. But SI units define the prefix “kilo” to mean 10³ or 1000, not 1024.

To resolve this ambiguity, the International Electrotechnical Commission (IEC) in 1998 established a new set of SI-like prefixes for use in computer science. These prefixes are defined in terms of powers of two rather than powers of ten, so that computer engineers can precisely and conveniently specify quantities that are powers of two. In this new system, instead of kilobyte (1000 bytes), we say kibibyte (1024 bytes, abbreviated KiB). And instead of megabyte (1,000,000 bytes), we say mebibyte (1024 × 1024 = 1,048,576 bytes, abbreviated MiB). Table 3.1 summarizes the sizes, prefixes and names of the most commonly used byte quantity units in both the SI and IEC systems. We’ll use IEC units throughout this book.

Table 3.1. Comparison of metric (SI) units and IEC units for describing quantities of bytes.
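The difference between the two systems is easy to express in code. The constants below are a small sketch with hypothetical names (kKiB, kMB and so on) and an invented helper, mebibytes(); the static_assert lines show how the gap between the SI and IEC units grows with each prefix.

```cpp
#include <cstdint>

// IEC (binary) units: powers of two.
constexpr std::uint64_t kKiB = 1024;
constexpr std::uint64_t kMiB = 1024 * kKiB;
constexpr std::uint64_t kGiB = 1024 * kMiB;

// SI (decimal) units: powers of ten.
constexpr std::uint64_t kKB = 1000;
constexpr std::uint64_t kMB = 1000 * kKB;
constexpr std::uint64_t kGB = 1000 * kMB;

// Hypothetical helper for sizing buffers in mebibytes.
constexpr std::uint64_t mebibytes(std::uint64_t count)
{
    return count * kMiB;
}

// The gap between the two systems grows with each prefix.
static_assert(kKiB - kKB == 24, "1 KiB is 24 bytes larger than 1 kB");
static_assert(kMiB - kMB == 48576, "1 MiB is 48,576 bytes larger than 1 MB");
static_assert(kGiB - kGB == 73741824, "1 GiB is 73,741,824 bytes larger than 1 GB");
```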

3.3.4 Declarations, Definitions and Linkage

3.3.4.1 Translation Units Revisited

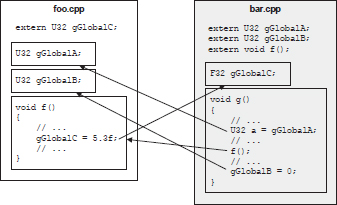

As we saw in Chapter 2, a C or C++ program is composed of translation units. The compiler translates one .cpp file at a time, and for each one it generates an output file called an object file (.o or .obj). A .cpp file is the smallest unit of translation operated on by the compiler; hence, the name “translation unit.” An object file contains not only the compiled machine code for all of the functions defined in the .cpp file, but also all of its global and static variables. In addition, an object file may contain unresolved references to functions and global variables defined in other .cpp files.

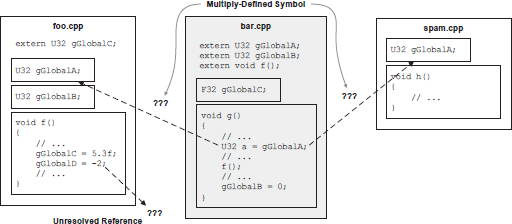

The compiler only operates on one translation unit at a time, so whenever it encounters a reference to an external global variable or function, it must “go on faith” and assume that the entity in question really exists, as shown in Figure 3.7. It is the linker’s job to combine all of the object files into a final executable image. In doing so, the linker reads all of the object files and attempts to resolve all of the unresolved cross-references between them. If it is successful, an executable image is generated containing all of the functions, global variables and static variables, with all cross-translation-unit references properly resolved. This is depicted in Figure 3.8.

The linker’s primary job is to resolve external references, and in this capacity it can generate only two kinds of errors:

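The target of an extern reference might not be found, in which case the linker generates an “unresolved symbol” error.

The linker might find more than one variable or function with the same name, in which case it generates a “multiply defined symbol” error.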

These two situations are shown in Figure 3.9.

3.3.4.2 Declaration versus Definition

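In the C and C++ languages, variables and functions must be declared and defined before they can be used. It is important to understand the difference between a declaration and a definition in C and C++. A declaration describes a data object or function: it gives the compiler the name of the entity and its data type or function signature. A definition, on the other hand, describes a unique region of memory in the program, which holds either the storage for a variable or the machine code of a function.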

In other words, a declaration is a reference to an entity, while a definition is the entity itself. A definition is always a declaration, but the reverse is not always the case—it is possible to write a pure declaration in C and C++ that is not a definition.

Functions are defined by writing the body of the function immediately after the signature, enclosed in curly braces:

foo.cpp

// definition of the max() function

int max(int a, int b)

{

return (a > b)? a : b;

}

// definition of the min() function

int min(int a, int b)

{

return (a <= b)? a : b;

}

A pure declaration can be provided for a function so that it can be used in other translation units (or later in the same translation unit). This is done by writing a function signature followed by a semicolon, with an optional prefix of extern:

foo.h

extern int max(int a, int b); // a function declaration

int min(int a, int b); // also a declaration (the extern is optional/assumed)

Variables and instances of classes and structs are defined by writing the data type followed by the name of the variable or instance and an optional array specifier in square brackets:

foo.cpp

// All of these are variable definitions:

U32 gGlobalInteger = 5;

F32 gGlobalFloatArray[16];

MyClass gGlobalInstance;

A global variable defined in one translation unit can optionally be declared for use in other translation units by using the extern keyword:

foo.h

// These are all pure declarations:

extern U32 gGlobalInteger;

extern F32 gGlobalFloatArray[16];

extern MyClass gGlobalInstance;

Multiplicity of Declarations and Definitions

Not surprisingly, any particular data object or function in a C/C++ program can have multiple identical declarations, but each can have only one definition. If two or more identical definitions exist in a single translation unit, the compiler will notice that multiple entities have the same name and flag an error. If two or more identical definitions exist in different translation units, the compiler will not be able to identify the problem, because it operates on one translation unit at a time. But in this case, the linker will give us a “multiply defined symbol” error when it tries to resolve the cross-references.
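The single-translation-unit case can be sketched in one file. In the example below, the repeated declarations are accepted, while the commented-out line marks where a second definition would be rejected by the compiler. The function addToGlobal() is a hypothetical name used only for this illustration, and int is used in place of U32 to keep the file self-contained.

```cpp
#include <cassert>

// Pure declarations: these may be repeated as often as we like.
extern int gGlobalInteger;
extern int gGlobalInteger;        // identical redeclaration, still fine
int addToGlobal(int amount);
int addToGlobal(int amount);      // identical redeclaration, still fine

// Definitions: exactly one of each is allowed in the whole program.
int gGlobalInteger = 5;

int addToGlobal(int amount)
{
    return gGlobalInteger + amount;
}

// Uncommenting the next line adds a second definition to this translation
// unit, which the compiler flags as a redefinition error:
// int gGlobalInteger = 7;

inline void checkDefinitions()
{
    assert(addToGlobal(2) == 7);
}
```

If the second definition were placed in a different .cpp file instead, each file would compile cleanly on its own and the conflict would only surface at link time.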

Definitions in Header Files and Inlining

It is usually dangerous to place definitions in header files. The reason for this should be pretty obvious: if a header file containing a definition is #included into more than one .cpp file, it’s a sure-fire way of generating a “multiply defined symbol” linker error.

Inline function definitions are an exception to this rule, because each invocation of an inline function gives rise to a brand new copy of that function’s machine code, embedded directly into the calling function. In fact, inline function definitions must be placed in header files if they are to be used in more than one translation unit. Note that it is not sufficient to tag a function declaration with the inline keyword in a .h file and then place the body of that function in a .cpp file. The compiler must be able to “see” the body of the function in order to inline it. For example:

foo.h

// This function definition will be inlined properly.

inline int max(int a, int b)

{

return (a > b)? a : b;

}

// This declaration cannot be inlined because the