So far, we have built a neural network that is fairly simple. A traditional neural network would have a few more parameters that can be varied to achieve a better predictive power.

Let's understand them by using the classic MNIST dataset. MNIST is a handwritten digit dataset that contains images of size 28 x 28 pixels that are represented as NumPy arrays of 28 x 28 dimensions.

Each image is of a digit and the challenge in hand is to predict the digit the image corresponds to.

Let's download and explore some of the images present in the MNIST dataset, as follows:

In the preceding code snippet, we are importing the MNIST object and downloading the MNIST dataset using the load_data function.

Also note that the load_data function helps in automatically splitting the MNIST dataset into train and test datasets.

Let's visualize one of the images within the train dataset:

Note that the preceding digit is 5 and the grid that we are seeing is 28 x 28 in size.

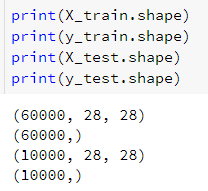

Let's look at the shapes of input and output to further understand the datasets:

Given that each input image is 28 x 28 in size, let's flatten it to get the scores of the 784 pixel values:

The output layer needs to predict whether the image corresponds to one of the digits from 0 to 9. Thus, the output layer consists of 10 units corresponding to each of the 10 different digits:

In the preceding code, to_categorical provides a one hot-encoded version of the label.

Now that we have the train and test datasets in place, let's go ahead and build the architecture of neural network in the following section:

Note that batch_size in the preceding screenshot refers to the number of data points that are considered to update weights. The intuition for batch size is:

"If, in a dataset of 1,000 data points, the batch size is 100, then there are 10 weight updates while sweeping through the whole data".

Note that the accuracy in predicting the labels on the test dataset is ∼91%.

This accuracy increases to 94.9% once the number of epochs reaches 300. Note that for an accuracy of 94.9% on the test dataset, the accuracy on the train dataset is ∼99%.

This is a classic case of overfitting, and the ways to deal with it will be discussed in subsequent chapters.