This chapter will focus on:

- Decision trees

- How to create decision trees for your application

- Understanding truth tables

- Visual intuition regarding false negatives and false positives

Before we dive right in, let's cover some background information that will help us.

For a decision tree to be complete and effective, it must cover every possibility, meaning every pathway in and out. The event sequences must also be supplied and must be mutually exclusive: if one event happens, the other cannot.

Decision trees are a form of supervised machine learning: we must supply the inputs together with the outputs (labels) they should produce. A tree is made up of decision nodes and leaves. The decision nodes are where the data is split, and the leaves hold the decisions.
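To make this vocabulary concrete, here is a minimal sketch in Python (not Accord.NET, which we use later) of a tree built from decision nodes and leaves. The class names `Leaf` and `DecisionNode` and the `classify` function are hypothetical, chosen only for illustration. Classifying an example means following exactly one branch per node until a leaf is reached, which is why the branches must be mutually exclusive; a value with no matching branch (an incomplete tree) surfaces as a `KeyError`. The hand-built tree encodes the XOR of two binary attributes, a1 and a2.

```python
from dataclasses import dataclass, field
from typing import Dict, Union


@dataclass
class Leaf:
    """A leaf holds a decision: the predicted class label."""
    label: int


@dataclass
class DecisionNode:
    """A decision node splits on one attribute; each attribute value leads to one child."""
    attribute: str
    children: Dict[object, "Tree"] = field(default_factory=dict)


Tree = Union[Leaf, DecisionNode]


def classify(tree: Tree, example: Dict[str, object]) -> int:
    """Walk from the root, taking the single branch that matches the example, until a leaf."""
    while isinstance(tree, DecisionNode):
        # A KeyError here means the tree does not cover this value (it is incomplete).
        tree = tree.children[example[tree.attribute]]
    return tree.label


# Hypothetical hand-built tree for label = a1 XOR a2.
xor_tree = DecisionNode("a1", {
    0: DecisionNode("a2", {0: Leaf(0), 1: Leaf(1)}),
    1: DecisionNode("a2", {0: Leaf(1), 1: Leaf(0)}),
})

print(classify(xor_tree, {"a1": 1, "a2": 0}))  # 1
```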

Although many algorithms are available to us, we are going to use the Iterative Dichotomiser 3 (ID3) algorithm. At each recursive step, the attribute that best classifies the current set of inputs is selected according to a splitting criterion (ID3 uses information gain; other algorithms use criteria such as gain ratio). It must be pointed out that, regardless of the algorithm we use, none is guaranteed to produce the smallest possible tree. This has direct implications for the performance of our algorithm. Keep in mind that decision tree learning is greedy and heuristic: each split is chosen because it looks best at that moment, not because it optimizes the tree as a whole. Let's use an example to explain this further.
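The selection step can be sketched in a few lines of Python. This is a minimal sketch of ID3 with information gain as the criterion, assuming categorical attributes and examples stored as dictionaries; the function names and the nested-dictionary tree format are hypothetical, and this is not Accord.NET's implementation. Information gain is the entropy of the current set minus the size-weighted entropy of the subsets a split produces. Note that `max` simply takes the locally best attribute at each step (breaking ties by list order) and never revisits that choice, which is exactly the greedy, heuristic behavior described above.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence

Example = Dict[str, object]


def entropy(labels: Sequence[object]) -> float:
    """Shannon entropy, in bits, of a non-empty list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(examples: List[Example], labels: List[object], attribute: str) -> float:
    """H(S) minus the size-weighted entropy of the subsets produced by splitting on attribute."""
    n = len(labels)
    remainder = 0.0
    for value in set(ex[attribute] for ex in examples):
        subset = [y for ex, y in zip(examples, labels) if ex[attribute] == value]
        remainder += len(subset) / n * entropy(subset)
    return entropy(labels) - remainder


def id3(examples: List[Example], labels: List[object], attributes: List[str]):
    """Return a leaf label, or a node of the form {attribute: {value: subtree}}."""
    if len(set(labels)) == 1:   # pure subset: make a leaf
        return labels[0]
    if not attributes:          # nothing left to split on: majority-label leaf
        return Counter(labels).most_common(1)[0][0]
    # Greedy step: choose the attribute with the highest information gain right now.
    best = max(attributes, key=lambda a: information_gain(examples, labels, a))
    remaining = [a for a in attributes if a != best]
    node = {best: {}}
    for value in sorted(set(ex[best] for ex in examples)):
        rows = [i for i, ex in enumerate(examples) if ex[best] == value]
        node[best][value] = id3([examples[i] for i in rows], [labels[i] for i in rows], remaining)
    return node


# Hypothetical toy data: the label simply copies a1, and a2 is irrelevant.
data = [{"a1": 0, "a2": 0}, {"a1": 0, "a2": 1}, {"a1": 1, "a2": 0}, {"a1": 1, "a2": 1}]
print(id3(data, [0, 0, 1, 1], ["a1", "a2"]))  # {'a1': {0: 0, 1: 1}}
```

Swapping in gain ratio would mean dividing each gain by the entropy of the split itself (the distribution of the attribute's values); the rest of the recursion stays the same.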

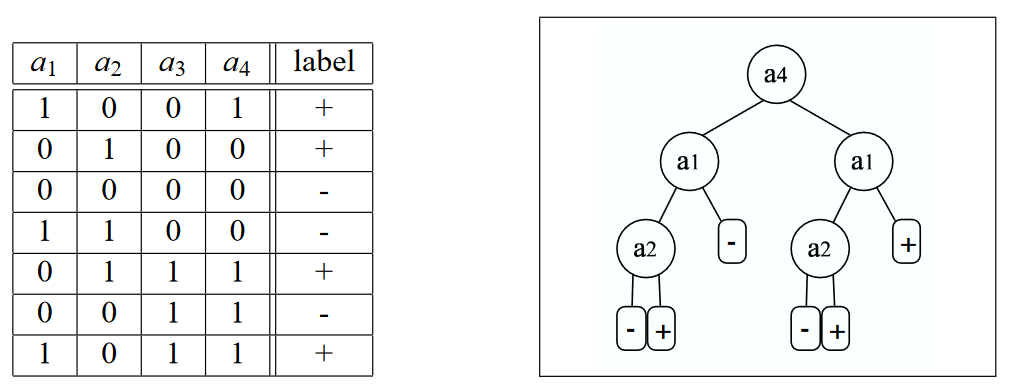

The following example is from http://jmlr.csail.mit.edu/papers/volume8/esmeir07a/esmeir07a.pdf, and it illustrates the XOR learning concept, which all of us developers are (or should be) familiar with. You will see this happen in a later example as well, but for now, a3 and a4 are completely irrelevant to the problem we are trying to solve. They have zero influence on our answer. That being said, the ID3 algorithm will select one of them to belong to the tree, and in fact, it will use a4 as the root! Remember that this is heuristic learning of the algorithm and not optimized findings:
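We can get a feel for why this happens with a short Python calculation. The data below is hypothetical and is not the dataset from the paper: the label is a1 XOR a2, and a3 plays the role of an irrelevant attribute. On the full truth table, no single attribute has any information gain, so a greedy criterion cannot tell the relevant attributes from the irrelevant one. On a small sample that is still perfectly consistent with a1 XOR a2, the irrelevant a3 happens to correlate with the label and scores the highest gain, so ID3 would choose it as the root.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy, in bits, of a non-empty list of class labels."""
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())


def gain(rows, labels, col):
    """Information gain of splitting (rows, labels) on the attribute at index col."""
    n = len(labels)
    remainder = 0.0
    for v in set(r[col] for r in rows):
        subset = [y for r, y in zip(rows, labels) if r[col] == v]
        remainder += len(subset) / n * entropy(subset)
    return entropy(labels) - remainder


names = ["a1", "a2", "a3"]

# Full truth table: label = a1 XOR a2, and a3 is irrelevant.
full = [(a1, a2, a3) for a1 in (0, 1) for a2 in (0, 1) for a3 in (0, 1)]
full_y = [a1 ^ a2 for a1, a2, _ in full]
print({name: round(gain(full, full_y, i), 3) for i, name in enumerate(names)})
# {'a1': 0.0, 'a2': 0.0, 'a3': 0.0}

# Hypothetical small sample, still consistent with a1 XOR a2, in which a3
# happens to correlate with the label.
sample = [(0, 0, 0), (0, 1, 1), (1, 0, 1), (1, 1, 0), (0, 0, 1), (1, 1, 1)]
sample_y = [a1 ^ a2 for a1, a2, _ in sample]
print({name: round(gain(sample, sample_y, i), 3) for i, name in enumerate(names)})
# {'a1': 0.0, 'a2': 0.0, 'a3': 0.252}
```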

Hopefully the figure makes it easier to understand what we mean. The goal here isn't to go too deep into decision tree mechanics and theory. After all of that, you might be asking why we are even talking about decision trees. Despite any issues they may have, decision trees serve as the basis for many algorithms, especially those whose results must be described to a human. They are also the basis for the Viola & Jones (2001) real-time face detection algorithm we used in an earlier chapter. Perhaps a better example is Microsoft's Kinect for Xbox 360, which also uses decision trees.

Once again, we will turn to the open source Accord.NET framework to illustrate our concept. In our sample, we'll be working with the following decision tree objects, so it's best that we discuss them up front.