Real Time 3D Face-Ear Recognition on Mobile Devices: New Scenarios for 3D Biometrics “in-the-Wild”

Michele Nappi⁎; Stefano Ricciardi⁎⁎; Massimo Tistarelli⁎⁎⁎ ⁎Department of Information Technology, University of Salerno, Fisciano, Italy

⁎⁎Department of Biosciences, University of Molise, Italy

⁎⁎⁎Department of Communication Sciences and Information Technology, University of Sassari, Sassari, Italy

Abstract

The recent availability of compact and inexpensive depth cameras or the combination of high frame-rate cameras aboard the last generation of smartphones and tablets with computer vision techniques is enabling new applicative scenarios for 3D biometrics. In this chapter, new methods for performing real time 3D face and ear acquisition for biometric purposes, by means of non-specialized devices coupled with computer vision algorithms, are presented and discussed. To this regard, algorithms addressing the Synchronous Location and Mapping (SLAM) problem, like Parallel Tracking and Mapping (PTAM) and Dynamic Tracking and Mapping (DTAM) or the more recent MonoFusion and MobileFusion could make 3D biometrics much more exploitable than in the past, allowing even a not trained user to capture 3D facial features on the fly for improved recognition accuracy and/or reliability. This chapter also highlights the relevance of an efficient implementation of these inherently computationally intensive techniques, which can greatly benefit from GPU-based parallel processing, in making these solutions approachable in real-time on mobile hardware architectures. To this aim, the robustness of recognition algorithms to lower resolution, noisy face geometry, would result in a very desirable feature.

Keywords

3D biometrics; Ear recognition; Face recognition; Real-time 3D scanning; Mobile devices; Commodity hardware

3.1 Introduction

3D face recognition can be considered as an extension of the 2D recognition problem and, at the cost of an extra dimension to deal with, it provides a more accurate representation of the actual face geometry (which is inherently 3D) instead of its projection in two dimensions. In a 3D face model indeed, facial features are represented by local and global curvatures that can be considered as the real signature identifying a person, potentially leading to a higher discriminating power and increased robustness to both intra-class and extra-class variations.

3D methods for face recognition have been thoroughly investigated in the last decade as witnessed by a few comprehensive surveys released through the years [7,9,24,48,55], and also by 3D face recognition contests such as Face Recognition Grand Challenge (FRGC) [41] and SHape REtrieval Contest (SHREC 08) [13] which indeed demonstrated the high recognition accuracy achievable by means of three dimensional features. The most recent and effective approaches exploit a variety of metrics and matching techniques including normal maps [1–3], 3D weighted walkthroughs (3DWWs) and isogeodesic stripes [5], simulated annealing (SA) and surface-interpenetration measure (SIM) [44], collective shape difference classifier (CSDC) and signed shape difference map (SSDM) [50], anthropometric features [19], annotated face model fitting and facial symmetry [40], robust sparse bounding sphere representation (RSBSR) [33], multiscale extended Local Binary Patterns (eLBP) and local feature hybrid matching [20], elastic shape analysis and Riemannian metric [17], iterative closest normal point [34], pose adaptive filters [54], sparse matching of salient facial curves [6], deep networks [47], angular radial signature (ARS) and Kernel Principal Component Analysis (KPCA) [30], robust regional bounding spherical descriptor (RBSR) [32]. However, it has to be remarked that most of the methods proposed so far have been designed, developed, and tested on face scans or range images mainly created by means of dedicated devices such as laser scanners or stereoscopic cameras often requiring manual or semi-automatic data cleaning and optimization to produce usable 3D geometry. Moreover, these systems present the limitations of a strict configuration and require the user's cooperation to work properly, besides still being expensive.

Similar considerations could also apply to ear biometric that, thanks to the well-known studies originally conducted by Lannarelli [28], is acknowledged for its discriminant power due to the uniqueness of the ear shape, for its stability throughout most of the lifetime and for its invariance to facial expressions [14], but unfortunately shares the same limitations and constraints highlighted above for the face when it is captured in 3D. The first 3D method for ear recognition [11] was proposed in 2004 and exploited the Local Surface Patch (LSP) representation and the Iterative Closest Point (ICP) algorithm that was also used [52,53] for matching ear models obtained as range images or 3D mesh. The use of 3D representations, providing more faithful characterization of ear geometry, has led over time to the proposal and development of 3D approaches delivering very good recognition performances [21,22,43,46,56]. A 2.5D approach has also been explored exploiting surveillance videos and pseudo 3D information extracted by means of the Shape From Shading (SFS) scheme [8]. It is also worth mentioning two recent approaches in 3D ear recognition, based on the EGI representation [10] and on the 2D appearance 3D multi-view approach [15]. 3D ear could also represent a good ally for 3D multi-biometric recognition systems based on head capture [12]. However, most of the studies presented so far involved 3D ear representations (in the form of range images, point clouds, or even polygonal meshes).

So, while everybody in the field may agree that the potential is high, the practical usage of 3D face and 3D ear biometrics is still considerably limited for several applicative contexts and particularly for “in the wild” scenarios, where specialized equipment and manual data refinement is not practical.

Hopefully, this is going to change thanks to advances in both hardware and computer vision techniques which are described in the course of this chapter. There is, indeed, a consolidated technological trend according to which the technical specs of the imaging devices (sensors and optics) aboard smartphones, tablets and even electronic entertainment platforms (e.g., Kinect and the likes) are constantly improving (higher resolution, higher frame-rate, higher optics quality, lower noise, depth imaging capabilities, etc.) as well as the processing power (multicore mobile CPUs, highly parallel GPUs, etc.). In this chapter we try to elaborate about how it is becoming possible to address some of the limitations of conventional 3D capture hardware for biometric applications “in-the-wild” by exploiting computationally intensive algorithms such as Monocular SLAM [16,35], Parallel Tracking and Mapping (PTAM) [25,26], the more advanced Dynamic Tracking and Mapping (DTAM) [36], and the recently presented MonoFusion [42] on the affordable and almost ubiquitous mobile hardware.

The rest of this chapter paper is organized as follows. Section 3.2 briefly summarizes the main characteristics and principles of operation of the most established 3D scanning equipment also highlighting their limitations in the context of 3D biometrics. Section 3.3 reports about the most interesting features in the latest generation mobile devices and their exploitation for biometric applications. Section 3.4 reviews a group of techniques and methods for real-time 3D capture of shape and color suitable to 3D face and ear recognition on-the-go and, finally, Section 3.5 draws the conclusions and provides hints for near future applicative scenarios of 3D biometrics in-the-wild.

3.2 3D Capture of Face and Ear: CURRENT Methods and Suitable Options

As remarked in the introduction, both 3D face and 3D ear public dataset available so far have been mainly captured by means of laser scanners that along with structured light scanners and stereophotogrammetry represent the most suited methods and technologies for capturing tridimensional shape and color for biometric purposes.

3.2.1 Laser Scanners

The principle of operation of a laser 3D scanner is based on laser triangulation. An object is scanned by a plane of laser light coming from the source aperture. The plane of light is swept across the field of view by a mirror, rotated by a precise galvanometer. The laser light is reflected from the surface of the scanned object. Each scan line is observed by a single frame, captured by the CCD camera. The contour of the surface is derived from the shape of the image of each reflected scan line. The entire area is captured in a few seconds or even fractions of a second, and the surface shape is converted to a lattice containing up to millions of 3D points. A polygonal-mesh is eventually created by properly connecting the captured points and eliminating geometric ambiguities and improving detail capture. A 24-bit color image is captured at the same time by the same CCD and eventually mapped onto the polygonal mesh. The primary advantage of laser scanning is that the process is non-contact, fast, and results in accurate coordinate locations that lie directly on the surface of the scanned object.

Cost considerations aside, the main disadvantages for biometric applications may include the need for the subject to be captured to stay still during the scansion (though the latest generation of devices can bear subtle subject's movements), and may require a certain degree of caution to avoid staring at the laser beam. The renowned FRGC dataset of 3D faces (often including the ear region also) has been collected by extensively using such a laser scanner (Vivid 910 by Konica Minolta, shown in Fig. 3.1).

3.2.2 Structured Light Scanners

Structured light 3D scanners project a narrow band of light onto a three-dimensionally shaped surface producing a line of illumination that appears distorted from all perspectives other than that of the projector, and can be used for an exact geometric reconstruction of the surface shape (light section).

A faster and more versatile method is the projection of patterns consisting of many stripes at once, or of arbitrary fringes, as this allows for the acquisition of a multitude of samples simultaneously. Seen from different viewpoints, the pattern appears geometrically distorted due to the surface shape of the object. Although many other variants of structured light projection are possible, patterns of parallel stripes are widely used (see Fig. 3.2). The picture shows the geometrical deformation of a single stripe projected onto a simple 3D surface. The displacement of the stripes allows for an exact retrieval of the 3D coordinates of any details on the object's surface.

Two main methods of stripe pattern generation are used: laser interference and projection. The laser interference method works with two wide planar laser beam fronts. Their interference results in regular, equidistant line patterns. The projection method uses non-coherent light and basically works like a beamer projecting patterns generated by a display. Invisible structured light uses structured light without interfering with other computer vision tasks for which the projected pattern will be confusing.

Example methods use infrared light or extremely high frame-rates alternating between two exact opposite patterns. Since structured light scanners can measure shapes from only one perspective at a time, complete 3D shapes have to be combined from different measurements at different angles by stitching them together through a semi-automatic or automatic procedure (if reference landmarks are positioned on or surrounding the subject to be captured).

The popular Microsoft Kinect and the more recent Kinect 2 (Fig. 3.3, center) exploit a pattern of projected infrared points (Fig. 3.3, left) to generate a dense 3D image thus producing range data and also real-time mesh reconstruction for objects and environment within its field of view [23]. A wide range of research works and applications have been presented since its introduction in 2010, arguably making this peripheral for the Xbox videogame console the world's most diffused 3D sensing device. In this context, one of most notable contributions is [36,37] concerning a method which permits real-time, dense volumetric reconstruction of complex room-sized scenes using a handheld Kinect depth sensor. Users can simply pick up and move a Kinect device to generate a continuously updating, smooth, fully fused 3D surface reconstruction. Using only depth data, the system continuously tracks the 6 degrees-of-freedom (6DoF) pose of the sensor using all of the live data available from the Kinect sensor rather than an abstracted feature subset, and integrates depth measurements into a global dense volumetric model. By using only depth data, the proposed system can work in complete darkness, mitigating any issues concerning low light conditions, problematic for passive camera and RGB-D based systems.

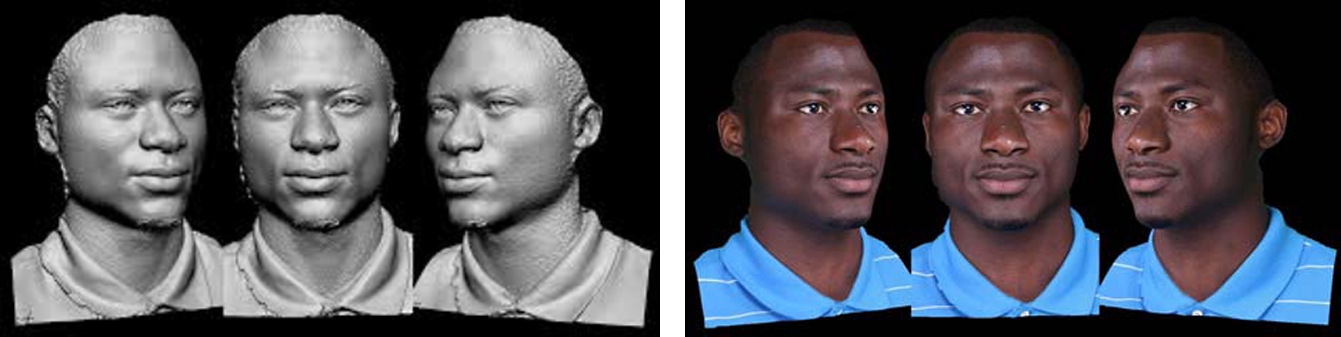

The Kinect has also proved to be capable of capturing 3D human anatomy (Fig. 3.3, right) with a level of detail more than adequate for delivering biometric applications. To this regard Li et al. [31] presented an approach to 3D face recognition entirely based on the Kinect and robust to simultaneous variations in pose, expression, illumination, and disguise. They exploit facial symmetry to addressing face recognition under non-frontal view while helping to smooth out noisy depth data. Although 3D data provided by the Kinect happens to be very noisy, it still retains sufficiently discriminant info for face recognition. In the proposed approach, 3D information is used to preprocess the texture, improving face recognition accuracy significantly in situation of extreme pose variations. The preprocessed depth map also improves face recognition, especially under low ambient lighting condition and sunglasses disguise. According to the experiments conducted, the proposed system relying on RGB-D information achieves an overall recognition rate of 96.7% that drops to 88.7% when using depth-data alone. These results suggest that non-intrusive face recognition can be performed well with high-speed low-cost 3D sensors, even though they have low depth resolution.

3.2.3 Stereophotogrammetry

Stereophotogrammetry, a special case of photogrammetry, is a technique often adopted for achieving accurate 3D reconstruction and involves estimating the three-dimensional coordinates of points on an object employing measurements made in two or more photographic images taken from different positions. Common points are identified on each image. A ray can be constructed from the camera location to the point on the object. The triangulation produced by the intersection of these rays determines the three-dimensional location of the point. More sophisticated algorithms can exploit a priori knowledge about the scene, such as symmetries, in some cases allowing reconstructions of 3-D coordinates from only one camera position. (See Fig. 3.4.)

Disadvantages in using this method for capturing biometric features are related mainly to the nontrivial configuration of the cameras involved and also to the time required from multiple-image capture to final mesh production that is not suited to real time applications. Moreover, the neutral backdrop often required for simplifying the segmentation of the main subject to be reconstructed and the need for a controlled lighting pose further constraints to the capture process.

To sum it up, all the methods exposed above can produce 3D representation suited to biometric applications in terms of shape and color accuracy, but they are all practically non-exploitable “in-the-wild” or under uncontrolled conditions (e.g., outdoor lighting) due to both their specific operating requirements and the computing hardware involved (typically desktop/notebook computers or videogame consoles).

3.3 Mobile Devices for Ubiquitous Face–Ear Recognition

The worldwide diffusion of smartphones and tablets has been characterized by an unprecedented speed

and pervasiveness, resulting in the first form of really ubiquitous computing and communication devices.

The most recent statistics depict a scenario in which almost five billion people (roughly accounting for 66% of the global population) have been using a mobile phone in 2015, with a fraction of more than 2 billion using a smartphone. Market experts estimate smartphone adoption will continue at fast pace, and it is likely to reach 50% of the mobile users in the next three years, while in Europe and North America these figures could be conservative. Along with their rapidly increasing diffusion, the latest generation of smartphones and tablets have undergone uninterrupted feature enhancements (see Table 3.1), particularly in terms of screen resolution, number of embedded sensors and accuracy, imaging capabilities of embedded cameras, and CPU/GPU computing power. The latter two advances are the most relevant due to their usage as “commodity” 3D sensing platforms.

Table 3.1

Technical specifications of latest-generation mobile devices with regard to image capturing and processing

| SAMSUNG Galaxy Note 5 | SAMSUNG Galaxy S6 Edge | APPLE IPhone 6 Plus | |

| Display size | 5.7 inches | 5.7 inches | 5.5 inches |

| Screen resolution | 2560 × 1440 | 2560 × 1440 | 1920 × 1080 |

| 518 ppi | 518 ppi | 401 ppi | |

| Processor | Octa-core Exynos | Octa-core Exynos | Apple A8 with M8 |

| 7420 | 7420 | motion coprocessor | |

| 64-bit | Yes | Yes | Yes |

| Front camera | 5 MP | 5 MP | 1.2 MP |

| Rear camera | 16 MP | 16 MP | 8 MP |

| Video recording | 2160p@30fps | 2160p@30fps | 1080p@60fps |

| 1080p@60fps | 1080p@60fps | 720p240fps | |

| 720p120fps | 720p120fps | optical stabilization | |

| optical stabilization | optical stabilization | ||

| Mobile payment | Samsung Pay | Samsung Pay | Apple Pay |

| Fingerprint sensor | Yes | Yes | Yes |

| Internal storage | 32/64 GB | 32/64 GB | 16/64/128 GB |

| Expandable storage | No | No | No |

More specifically, new, more sophisticated optics have been adopted (see Fig. 3.5), reducing the main defects of typical phone-captured images (vignetting, barrel deformations, etc.) coupled to optical stabilizers for reduced motion blurring and to highly optimized imaging sensors capable of lower noise and of resolution up to 16 megapixels in still capture mode, or even WQHD (![]() ) resolution in video capture mode. According to a new trend set by action cameras, unprecedentedly high frame-rate video capture modes are also available, ranging from 60fps in Full HD (

) resolution in video capture mode. According to a new trend set by action cameras, unprecedentedly high frame-rate video capture modes are also available, ranging from 60fps in Full HD (![]() ) to 240fps in HD (

) to 240fps in HD (![]() ), providing a premise for real-time advanced and robust image processing applications. Additionally, multiple shot sequences can also be taken (bracketing). In this case multiple fast-shutter frames at full resolution and advanced exposition metering can further improve image quality. Along with the ability to produce high quality image content data, the processing capability of this new generation of products has grown as well (see Table 3.1).

), providing a premise for real-time advanced and robust image processing applications. Additionally, multiple shot sequences can also be taken (bracketing). In this case multiple fast-shutter frames at full resolution and advanced exposition metering can further improve image quality. Along with the ability to produce high quality image content data, the processing capability of this new generation of products has grown as well (see Table 3.1).

Multicore processors are now exploited in almost any smartphone or tablet, featuring up to eight cores which can be very useful not only for generic multitasking but particularly for multithreaded implementation of image processing algorithms. This is even more true for the vector processors embedded in the most advanced mobile versions of GPU typically provided by Nvidia of AMD, whose potential is becoming to be exploited not only for videogame applications but also for compute-intensive tasks. For all these reasons, mobile devices might represent a new opportunity for 3D biometrics, greatly fostering the diffusion of 3D approaches for verification and recognition on-the-go. Among the few works presented on the topic so far, Kramer et al. [27] explore the efficacy of face recognition using smartphones by prototyping and testing a face recognition tool for blind users. The tool utilizes mobile technology in conjunction with a wireless network to provide audio feedback of the people in front of the blind user. Testing indicated that the developed face recognition method can tolerate up to a 40 degree angle between the direction a person is looking and the camera axis, and can achieve a 96% success rate with no false positives.

In the same line of research, Wang and Cheng [49], Wang et al. [51] focus on mapping compute-intensive biometric applications to a smartphone platform optimized for maximizing per-energy user experience. The case-study application is a face recognition system based on Gabor face feature representation. A baseline implementation of the application on Nvidia Tegra platform takes 8.5 s to recognize a person. The study highlights two approaches for both performance and energy optimization. The first one involves tuning the algorithmic parameters and characterizing the accuracy–runtime–energy trade-offs, while the second one focuses on a better utilization of the mobile GPU, to reduce the computational load and improve energy efficiency. The implementation based on the best algorithmic configuration and GPU implementation achieves 71% reduction in computation time and 70% saving in energy consumption, particularly for the most time-consuming task, namely face feature extraction.

Also concerning ear recognition, there are only a few studies presented so far. In [18] the authors assume that ear images can be seen as a composition of micro-patterns that can be well described by Linear Binary Patterns (LBP). In order to get geometric features, they use the idea that a smartphone user can adjust the location of the ear center, and then they combine geometric features with LBP to achieve a descriptor representing the ear. Finally, ear recognition is performed using a nearest neighbor classifier in the computed feature space with Euclidean distance as a similarity measure.

Bargal et al. [4] describe the development of an image-based smartphone application prototype for ear biometrics. The application targets the public health problem of managing medical records at on-site medical clinics in less developed countries where many individuals do not hold IDs. The domain presents challenges for an ear biometric system, including varying scale, rotation, and illumination. After performing a comparative study of three ear biometric extraction techniques, Scale Invariant Feature Transform (SIFT) was used to develop an iOS application prototype to establish the identity of an individual using a smartphone camera image.

Finally, Raghavendra et al. [45] propose the first example of multi-modal 3D biometric recognition system on smartphone, based on a novel approach for reconstructing 3D face in real-life scenarios by addressing the most challenging issue that involves reconstructing depth information from a video recorded from the smartphone's frontal camera. Such videos, indeed, pose lots of challenges, such as motion blur, non-frontal perspectives, and low resolution. This limits the applicability of the state-of-the-art algorithms, which are mostly based on landmark detection. This situation is addressed with the SIFT followed by feature matching to generate consistent tracks. These tracks are further processed to generate a 3D point cloud using Point/Cluster based Multi-view stereo (PMVS/CMVS). The usage of PMVS/CMVS will however fail to generate a dense 3D cloud points on the weak surfaces of face (such as cheeks, nose, and forehead). This issue is addressed by multi-view reconstruction of these weakly supported surfaces using Visual-Hull. The method proceeds further to perform person identification using either face or ear biometric by first checking whether the frontal face can be detected from the 3D reconstructed face. In a positive case, person identification is performed based on the face; otherwise ear detected from the reconstructed 3D face profile is used instead. The effectiveness of the proposed method has been evaluated on a specifically collected dataset, which simulates a realistic identification scenario using a smartphone, achieving a recognition rate of 80.0% for the multimodal face+ear experiment which drops to 68.00% for the unimodal face/ear version. According to the authors, the proposed approach can be seen as a proof of concept for future authentication systems which are more accurate and more robust against spoofing than existing approaches.

3.4 The Next Step: Mobile Devices for 3D Sensing Aiming at 3D Biometric Applications

Though we highlighted in the previous section the almost total lack of 3D methods for smartphone powered biometrics, the last five years have seen a number of achievements concerning the topic of real-time 3D scene reconstruction on generic computing hardware and on mobile architecture as well, that open the way for 3D face and ear recognition “in-the-wild”. One of the first examples of the interest shown by the research community to the smartphone platform is due to Klein and Murray [25] which attempt to implement the first keyframe-based SLAM system on the 2008 Apple iPhone 3G (a device widely outperformed by current generation of smartphones) showing this hardware is capable to generate and augment small maps in real time and at full frame-rate. To achieve this goal, the authors propose various adaptations to the Parallel Tracking and Mapping method to cope with the device's imaging deficiencies (e.g., low capture frame-rate, narrow field of view, smearing artifacts due to rolling shutter, and relevant motion-blur), delivering a system capable of interesting tracking and mapping performances, though not comparable to the implementations of SLAM on a PC-class computing hardware. A limiting factor of any RGB approach is that texture is required for both tracking and depth estimation, so if the captured surface has few features then the accuracy of estimates drops.

Aiming at improving accuracy and robustness of the PTAM, Newcombe et al. presented in 2011 the Dynamic Tracking and Mapping algorithm. DTAM [36,37] enables real-time camera tracking and reconstruction exploiting dense, every pixel methods instead of feature extraction. By simply moving a single color camera around the scene to be captured, on selected keyframes the algorithm estimates detailed textured depth maps by dense multi-view stereo reconstruction (with sub-pixel accuracy), resulting in a texture-mapped scene model with millions of vertices. The method exploits the video stream (composed by hundreds of images) as the input to each depth map. Photometric information is sequentially gathered in a cost volume, and is incrementally solved for regularized depth maps by means of an optimization framework. At the same time the camera's 6DoF motion is also tracked with precision at least comparable to feature-based methods but with much greater robustness in case of motion blur or camera defocus, benefitting from the predictive capabilities of a dense model with regard to occlusion handling and multiscale operation The DTAM method is highly parallelizable, and as such it delivers real-time performance when executed on relatively inexpensive GPU hardware.

In Lee et al. [29] the authors propose an efficient method for creating a photorealistic 3D face model on a smartphone by using an active contour model (ACM) and deformable iterative closest point (ICP) methods without the need for any calibration of the phone's built-in camera. By automatically extracting the features of a human face such as eyes, nose, lip, cheek, chin, and a profile from the front and side images of the captured subject, a 3D face model is generated by deforming a generic model using RBF (radial basis function) interpolation method so that the 3D face model is correctly assigned to the extracted facial features. Skin texture map is therefore obtained from the front image mapped onto the warped 3D face model. The whole procedure has been implemented and optimized to run on a smartphone with limited processing power and memory capability. According to the conducted experiments, a photorealistic 3D face model can be created in an average time of 6 s on a smartphone from the year 2010. In addition, the proposed approach is quite robust to common operating context in which smartphones are used.

More recently, Pradeep et al. [42] proposed a method called MonoFusion for building dense 3D reconstructions of the surrounding environment in real-time by means of only a single camera for scene capturing. Tablet and mobile phone cameras or similar commodity devices can be used for this purpose both in indoor and outdoor environments whereas other methods based on power intensive active sensors do not work robustly in natural outdoor lighting. The captured video stream is used to estimate the six degrees-of-freedom (6DoF) pose of the camera using a hybrid sparse and dense tracking method, while poses are used for efficient dense stereo matching between a pair composed by the input frame and a previously extracted key frame. By adopting a computationally inexpensive technique, the dense depth maps obtained in the previous step are fused into a voxel-based implicit model and then surfaces are extracted per frame. Additionally, the proposed approach presents capability of recovering from tracking failures and also of filtering out capture noise from the 3D reconstruction. A remarkable advantage of MonoFusion is that it does not require computationally expensive global optimization methods for depth computation or fusion such as those involved in DTAM. Further, it removes the need for a memory and compute-intensive cost volume for depth computation. Experimental results show high quality reconstructions almost visually comparable to those achieved using active depth sensor-based systems such as the previously mentioned KinectFusion. The whole method implementation and optimization is based on GPU and requires a desktop-class PC or a high end laptop to perform efficiently. However, the authors suggest that a client–server approach could be used to exploit mobile devices as capture terminals thanks to their built-in sensors and their video streaming capabilities, leaving the most compelling tasks to a remote host that could also send back the resulting data to the mobiles.

The evolution of MonoFusion specifically targeted to mobile architectures is MobileFusion [39] that describes a comprehensive pipeline for real-time scanning of 3D surface models and 6DoF camera tracking optimized to run on commodity mobile architectures, like smartphones and tablets, without any hardware modifications. The proposed method exploits the built-in RGB camera to allow users to scan any kind of object in the physical environment within a few seconds, also providing real-time visual feedback during the capture process. The whole reconstruction process takes place entirely on the phone (unlike other approaches in the literature which produce only point-based 3D models, or require cloud-based processing) and includes 25 frames-per-second dense camera tracking, key frame selection, dense stereo matching, volumetric depth map fusion, and raycast-based surface extraction. More specifically, the hybrid GPU/CPU system pipeline operates through five main steps: dense image alignment for pose estimation, key frame selection, dense stereo matching, volumetric update, and raytracing for surface extraction. The tracker estimates the 6DoF camera pose by aligning the entire RGB frame (in a given video stream) with a projection of the current volumetric model. Thanks to an efficient and robust metric that evaluates the overlap between frames, key-frames are selected from live input. For the purpose of performing dense stereo matching, the camera pose is used to select a key-frame that can be used along with the live frame once it has been rectified. The output depth map is then fused into a single global volumetric model. While scanning, a visualization of the current model from camera perspective is displayed to the user for live feedback and then passed into the camera tracking phase for pose estimation. The authors show the results from experiments conducted on different objects featuring both geometrical and organic shapes (such as a human head), producing fully connected 3D scans whose accuracy is inferior to that achieved by active-sensor based method (e.g., KinectFusion), but comparable to the state-of-the-art point-based mobile phone method, with the remarkable advantage of an order of magnitude faster scanning times (average capture-building time for objects featuring geometrical complexity comparable to a human head is in the range of 15–20 s on a latest generation smartphone).

Based on this last group of approaches, it is arguable that the potential for mobile-based 3D face verification/recognition is real, though its current exploitation could still be limited by the processing power available today. Indeed, we have to consider that the capture of 3D biometric data (that is somewhat addressed by the methods summarized above) is only the first stage of the process required to perform identity check, so its cost has to be added to those (not negligible) related to feature extraction, feature matching, and decision making algorithms. This is even more true for recognition (one-to-many) applications. To this regard, the idea of a client–server architecture or even a cloud-computing approach could be beneficial in light of effectively exploiting the ubiquity of mobile hardware and its connectivity capabilities.

According to this approach, a viable solution for the ubiquitous exploitation of 3D multi-biometric data could be based on a heterogeneous network composed by a front-end (mobile devices as main terminals for on-the-field capturing of face and/or ear, pre-processing and sharing of biometric info) and a back-end (remote hosting for computing intensive tasks such as multi-biometric templates matching and subsequent decision-making according to static and/or dynamic fusion-rules) as schematically depicted in Fig. 3.6. The overall operative paradigm can be summarized as follows: in a typical in-the-wild enrollment scenario, video stream of face/ear acquired by the camera embedded in a mobile device could be uploaded on a host (remote) computing platform for GPU-based real-time 3D surface reconstruction and subsequent feature extraction and (multi)biometric template generation (eventually weighted according to capturing conditions and resulting quality), encryption, and storage. For the verification/recognition scenario, a new capture of the considered biometrics will enable the remote matching by means of context and application adaptive decision rules.

3.5 Conclusions and Future Scenarios

The overall vision clearly emerging from the previous sections is that we are very close to the point in which the imaging quality and the computing performance of mobile devices will be adequate to computer-vision tasks such as those required for 3D biometrics capturing and recognition and for a number of market reasons the technology trend driven by the major industry players is going in that direction. There is still a relevant performance gap between the most powerful mobile devices and a basic desktop computer, but though it is reasonable to expect that this distance will never be reduced to zero, there will be enough power for a number of applications previously unfeasible. In a world in which biometrics empowered applications are diffusing at a fast pace in any field of the life and in which more and more communications are based on mobile devices, it is easy to foresee that 3D approaches will become much more viable, provided optimizations will be developed specifically for this class of products.