In this chapter

Introduction

Client/server performance

Transaction performance

Performance configuration options

Coding patterns for performance

Performance monitoring tools

Performance is often an afterthought for development teams. Frequently, performance is not considered until late in the development process or, more critically, until after a customer reports severe performance problems in a production environment. After a feature is implemented, making more than minor performance improvements is often too difficult. But if you know how to use the performance optimization features in Microsoft Dynamics AX, you can create designs that allow for optimal performance within the boundaries of the Microsoft Dynamics AX development and run-time environments.

This chapter discusses some of the most important facets of optimizing performance, and it provides an overview of performance configuration options and performance monitoring tools. For the latest information about how to optimize performance in Microsoft Dynamics AX, check the Microsoft Dynamics AX Performance Team blog at http://blogs.msdn.com/axperf. The Performance Team updates this blog regularly with new information. Specific blog entries are referenced throughout this chapter to supplement the information provided here.

Client/server communication is one of the key areas that you can optimize for Microsoft Dynamics AX. This section details the best practices, patterns, and programming techniques that yield optimal communication between the client and the server.

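The following three techniques can significantly reduce round-trips in many scenarios: caching display and edit methods, structuring RunBase classes so that their dialog boxes are not driven from the server, and using the client-side data cache.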

Display and edit methods are used on forms to display data that must be derived or calculated from other information in the underlying table. These methods can be written on either the table or the form. By default, these methods are calculated one by one. If a method needs to go to the server when it runs, as it usually does, each method makes its own call to the server. The fields associated with these methods are recalculated every time a refresh is triggered on the form, which can occur when a user edits fields, uses menu items, or presses F5. This behavior is expensive in terms of both round-trips and the number of calls that the Application Object Server (AOS) places to the database.

Caching cannot be performed for display and edit methods that are declared on the data source for a form because the methods require access to the form metadata. If possible, you should move these methods to the table. For display and edit methods that are declared on a table, use the FormDataSource.cacheAddMethod method to enable caching. This method allows the form’s engine to calculate all the necessary fields in one round-trip to the server and then cache the results. To use cacheAddMethod, in the init method of a data source that uses display or edit methods, call cacheAddMethod on that data source and pass in the method string for the display or edit method. For example, look at the SalesLine data source of the SalesTable form. In the init method, you will find the following code:

```xpp
public void init()
{
    super();

    // Second argument (updateOnWrite): whether the cached value is recalculated
    // when the record is written.
    salesLine_ds.cacheAddMethod(tableMethodStr(SalesLine, invoicedInTotal), false);
    salesLine_ds.cacheAddMethod(tableMethodStr(SalesLine, deliveredInTotal), false);
    salesLine_ds.cacheAddMethod(tableMethodStr(SalesLine, itemName), false);
    salesLine_ds.cacheAddMethod(tableMethodStr(SalesLine, timeZoneSite), true);
}
```

If you were to comment out this code, each display method would be computed separately for every operation on the form data source. This would increase both the number of round-trips to the AOS and the number of calls to the database server. For more information about cacheAddMethod, see http://msdn.microsoft.com/en-us/library/formdatasource.cacheaddmethod.aspx.

Note

Do not register display or edit methods that are not used on the form. Those methods are calculated for each record, even though the values are never shown.

In Microsoft Dynamics AX 2009, Microsoft made a significant investment in the infrastructure of cacheAddMethod. In previous releases, this method worked only for display fields and only on form load. Beginning with Microsoft Dynamics AX 2009, the cache is used for both display and edit fields, and it is used throughout the lifetime of the form, including for reread, write, and refresh operations. It also works for any other method that reloads the data behind the form. With all of these methods, the fields are refreshed, but the kernel now refreshes them all at once instead of individually. In Microsoft Dynamics AX 2012, these features have been extended by another newly added feature—declarative display method caching.

You can use the declarative display method caching feature to add a display method to the display method cache by setting the CacheDataMethod property on a form control to Yes. Figure 13-1 shows the CacheDataMethod property.

The new property has three values: Auto, Yes, and No. The default is Auto. Auto equates to Yes when the data method is hosted on a read-only form data source, which primarily applies to list pages. If the same data method is bound to multiple controls on a form, the method is cached if at least one of those controls equates to Yes.

RunBase classes form the basis for most business logic in Microsoft Dynamics AX. RunBase provides much of the basic functionality necessary to execute a business process, such as displaying a dialog box, running the business logic, and running the business logic in batches.

Note

Microsoft Dynamics AX 2012 introduces the SysOperation framework, which provides much of the functionality of the RunBase framework and will eventually replace it. For more information about the SysOperation framework in general, see Chapter 14. For more information about optimizing performance when you use the SysOperation framework, see The SysOperation framework, later in this chapter.

When business logic executes through the RunBase framework, the logic flows as shown in Figure 13-2.

Most of the round-trip problems of the RunBase framework originate with the dialog box. For security reasons, the RunBase class should be running on the server because it accesses a large amount of data from the database and writes it back. But a problem occurs when the RunBase class is marked to run on the server. When the RunBase class runs on the server, the dialog box is created and driven from the server, causing excessive round-trips.

To avoid these round-trips, set the RunOn property of the RunBase class to Called From, which means that the class runs on whichever tier calls it. Then mark either the construct method of the RunBase class or the menu item to run on the server. With Called From, the RunBase framework can marshal the class back and forth between the client and the server without driving the dialog box from the server, which significantly reduces the number of round-trips. Keep in mind that you must implement the pack and unpack methods in a way that allows this serialization to happen.
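The following sketch shows the general shape of a RunBase class that can be marshaled between tiers. It is a minimal illustration and is not taken from the Microsoft Dynamics AX application. The class name MyRunBaseSketch and its fromDate member are hypothetical, and the class is assumed to have its RunOn property set to Called From. The run, dialog, and getFromDialog methods are omitted. The pack and unpack methods follow the common #CurrentVersion/#CurrentList macro pattern, and the construct method is marked server.

```xpp
// Hypothetical class; RunOn is assumed to be set to Called From in the AOT.
class MyRunBaseSketch extends RunBase
{
    TransDate fromDate;     // Hypothetical value collected by the dialog box

    #define.CurrentVersion(1)
    #localmacro.CurrentList
        fromDate
    #endmacro
}

// Serializes the state so the framework can move the object between tiers.
public container pack()
{
    return [#CurrentVersion, #CurrentList];
}

// Restores the state on the receiving tier.
public boolean unpack(container _packedClass)
{
    Version version = RunBase::getVersion(_packedClass);

    switch (version)
    {
        case #CurrentVersion:
            [version, #CurrentList] = _packedClass;
            break;
        default:
            return false;
    }
    return true;
}

// Marked server, so the instance is created on the AOS.
public static server MyRunBaseSketch construct()
{
    return new MyRunBaseSketch();
}
```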

For an in-depth guide to implementing the RunBase framework to handle round-trips optimally between the client and the server, refer to the Microsoft Dynamics AX 2009 white paper, “RunBase Patterns,” at http://www.microsoft.com/en-us/download/details.aspx?id=19517.

Microsoft Dynamics AX has a data caching framework on the client that can help you greatly reduce the number of times the client goes to the server. In Microsoft Dynamics AX, the cache operates across all of the unique keys in a table. Therefore, if a piece of code accesses data from the client, the code should use a unique key if possible. Also, you need to ensure that all unique keys are marked as such in the Application Object Tree (AOT). You can use the Best Practices tool to ensure that all of your tables have a primary key. For more information about the Best Practices tool, see Chapter 2.

Setting the CacheLookup property correctly is a prerequisite for using the cache on the client. Table 13-1 shows the possible values for CacheLookup. These settings are discussed in greater detail in the Caching section later in this chapter.

Table 13-1. Settings for the CacheLookup property.

| Cache setting | Description |
|---|---|
| Found | If a table is accessed through a primary key or a unique index, the value is cached for the duration of the session or until the record is updated. If another instance of the AOS updates this record, all AOS instances will flush their caches. This cache setting is appropriate for master data. |
| NotInTTS | Works the same way as Found, except that every time a transaction is started, the cache is flushed and the query goes to the database. This cache setting is appropriate for transactional tables. |
| FoundAndEmpty | Works the same way as Found, except that if the query cannot find a record, the absence of the record is stored. This cache setting is appropriate for region-specific master data or master data that isn’t always present. |
| EntireTable | The entire table is cached in memory on the AOS, and the client treats this cache as Found. This cache setting is appropriate for tables with a known, limited number of records, such as parameter tables. |
| None | No caching occurs. This setting is appropriate in only a few cases, such as when optimistic concurrency control must be disabled. |

A record can be cached only if the where clause specifies the columns of a unique index. The unique index join cache is a new feature that is discussed in The unique index join cache in the Transaction performance section later in this chapter. This cache supports 1:1 relations only. In other words, caching won’t work if a 1:n join is present or if the query is a cross-company query. In Microsoft Dynamics AX 2012, caching is supported even if the query contains range operations, as long as the query also contains a unique key lookup.
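The following sketch illustrates the difference. It assumes that CustTable has CacheLookup set to Found and a unique index on AccountNum, so check both in the AOT for your installation. Under those assumptions, repeated lookups in the first select can be answered from the record cache. The second select cannot use the cache, because its where clause does not specify a unique key. The job name and the account number '4000' are hypothetical.

```xpp
static void UniqueKeyCacheSketch(Args _args)
{
    CustTable custTable;

    // Equality on the unique key (assumed: AccountNum). With CacheLookup = Found,
    // a repeated lookup of the same key can be served from the cache.
    select firstonly custTable
        where custTable.AccountNum == '4000';

    // No unique key in the where clause: this query always goes to the database.
    select firstonly custTable
        where custTable.CustGroup == '20';
}
```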

A cache that is set to EntireTable stores the entire contents of a table on the server, but the cache is treated as a Found cache on the client. For tables that have only one row for each company, such as parameter tables, add a key column that always has a known value, such as 0. This allows the client to use the cache when accessing these tables. For an example of the use of a key column in Microsoft Dynamics AX, see the CustParameters table.
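A find method for such a parameter table might look like the following sketch. MyParameters is a hypothetical table. It is assumed to have CacheLookup set to EntireTable, an integer Key field that always holds 0, and a unique index on Key. The pattern resembles the one that CustParameters uses, but the code is illustrative and is not copied from that table.

```xpp
// Hypothetical table method on MyParameters (CacheLookup = EntireTable).
// Assumes an integer field named Key that is always 0, with a unique index on it.
public static MyParameters find(boolean _forUpdate = false)
{
    MyParameters parameters;

    parameters.selectForUpdate(_forUpdate);

    // Equality on the unique Key field lets the client treat the
    // EntireTable cache as a Found cache for this lookup.
    select firstonly parameters
        where parameters.Key == 0;

    return parameters;
}
```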

When you’re writing code, be aware of the tier that the code will run on and the tier that the objects you’re accessing are on. Here are some things to be aware of:

Note that if you mark classes to always run on either the client or the server by setting the RunOn property to Client or Server, you can’t serialize them to another tier by using the pack and unpack methods. If you attempt to serialize a server class to the client, you get a new object on the server with the same values. Static methods run on whatever tier is specified by the client, server, or client server keywords in their declaration.
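The following sketch shows how these keywords are used. It declares one static method that always runs on the server and one that always runs on the client. MyTierDemo is a hypothetical class and is assumed to have RunOn set to Called From. Only the int64 count crosses the tier boundary.

```xpp
// Hypothetical class; its RunOn property is assumed to be Called From.
class MyTierDemo
{
}

// The server keyword: this static method always executes on the AOS.
public static server int64 countCustomers()
{
    CustTable custTable;

    select count(RecId) from custTable;   // The count is returned in the RecId field
    return custTable.RecId;
}

// The client keyword: this static method always executes on the client.
public static client void showCustomerCount()
{
    // One call to the server; only the int64 result crosses the tier boundary.
    info(int642str(MyTierDemo::countCustomers()));
}
```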

Temporary tables can be a common source of both client callbacks and calls to the server. Unlike regular table buffers, temporary tables are located on the tier on which the first record was inserted. For example, suppose a temporary table is declared on the server and the first record is inserted on the client, while the rest of the records are inserted on the server. In that case, the table lives on the client, so every access to it from the server results in a call to the client.

It’s best to populate a temporary table on the server because the data that you need is probably coming from the database. Still, you must be careful when you want to iterate through the data to populate a form. The easiest way to achieve this efficiently is to populate the temporary table on the server, serialize the entire table to a container, and then read the records from the container into a temporary table on the client.
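The container technique might be sketched as follows. MyTmpTransfer is a hypothetical class, and MyTmpTable is a hypothetical InMemory temporary table with a single string field named Value. The loop that generates 100 rows stands in for real data that would normally come from the database. To keep the example short, it copies individual field values rather than whole buffers.

```xpp
// Hypothetical class MyTmpTransfer; MyTmpTable is a hypothetical InMemory
// temporary table with one string field named Value.
public static server container packTmpValues()
{
    MyTmpTable tmp;
    container  packed;
    int        i;

    // Populate on the server, where the source data normally lives.
    for (i = 1; i <= 100; i++)
    {
        tmp.Value = int2str(i);
        tmp.insert();
    }

    // Copy the field values into a container, which is passed by value.
    while select Value from tmp
    {
        packed += [tmp.Value];
    }
    return packed;
}

public static client void fillClientTmp(MyTmpTable _clientTmp)
{
    // A single call to the server returns all of the data.
    container packed = MyTmpTransfer::packTmpValues();
    int       i;

    // The first insert happens on the client, so this table lives on the client.
    for (i = 1; i <= conLen(packed); i++)
    {
        _clientTmp.Value = conPeek(packed, i);
        _clientTmp.insert();
    }
}
```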

Avoid joining inMemory temporary tables with regular database tables whenever possible, because the AOS will first fetch all of the data in the database table of the current company and then combine the results in memory. This is an expensive, time-consuming process.

Try to avoid the type of code shown in the following example:

```xpp
public static server void InMemTempTableDemo()
{
    RealTable      rt;
    InMemTempTable tt;
    int            i;

    // Populate temp table
    ttsBegin;
    for (i = 0; i < 1000; i++)
    {
        tt.Value = int2str(i);
        tt.insert();
    }
    ttsCommit;

    // Inefficient join to database table. If the temporary table is an inMemory
    // temp table, this join causes 1,000 select statements on the database table and with
    // that, 1,000 round-trips to the database.
    select count(RecId) from tt join rt where tt.Value == rt.Value;
    info(int642str(tt.RecId));
}
```

If you decide to use inMemory temporary tables, indexing them correctly for the queries that you plan to run on them will improve performance significantly. Indexing differs in one way from indexing for queries against regular tables: the fields must be in the same order as in the query itself. For example, the following query will benefit significantly from an index on the AccountMain, ColumnId, and PeriodCode fields in the TmpDimTransExtract table:

```sql
SELECT SUM(AmountMSTDebCred) FROM TmpDimTransExtract
WHERE ((AccountMain>=N'11011201' AND AccountMain<=N'11011299'))
  AND ((ColumnId = 1)) AND ((PeriodCode = 1))
```

You can use TempDB temporary tables to replace inMemory temporary table structures easily. TempDB temporary tables have the following advantages over InMemory temporary tables:

You can join TempDB temporary tables to database tables efficiently.

You can use set-based operations to populate TempDB temporary tables, reducing the number of round-trips to the database.

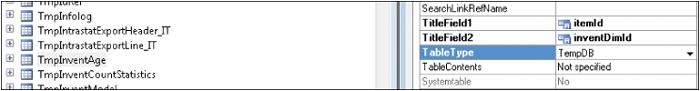

To create a TempDB temporary table, set the TableType property to TempDB, as shown in Figure 13-3.

Tip

Although temporary tables are truncated and reused, rather than dropped, when the current code goes out of scope, you should still minimize the number of temporary tables that must be created. Creating a temporary table has a cost, so use them only when you need them.

If you use TempDB temporary tables, don’t populate them by using line-based operations, as shown in the following example:

```xpp
public static server void SQLTempTableDemo1()
{
    SQLTempTable tt;
    int          i;

    // Populate temporary table; this will cause 1,000 round-trips to the database
    ttsBegin;
    for (i = 0; i < 1000; i++)
    {
        tt.Value = int2str(i);
        tt.insert();
    }
    ttsCommit;
}
```

Instead, use set-based operations. The following example shows how to use a set-based operation to create an efficient join to a database table:

```xpp
public static server void SQLTempTableDemo2()
{
    RealTable    rt;
    SQLTempTable tt;

    // Populate the temporary table with only one round-trip to the database.
    ttsBegin;
    insert_recordset tt (Value)
        select Value from rt;
    ttsCommit;

    // Efficient join to database table causes only one round-trip. If the temporary table
    // is an inMemory temp table, this join would cause 1,000 select statements on the
    // database table.
    select count(RecId) from tt join rt where tt.Value == rt.Value;
    info(int642str(tt.RecId));
}
```

A client callback occurs when the client places a call to a server-bound method and the server then places a call to a client-bound method. These calls can happen for two reasons. First, they occur if the client doesn’t send enough information to the server during its call or if the client sends the server a client object that encapsulates the information. Second, they occur when the server is either updating or accessing a form.

To eliminate the first kind of callback, ensure that you send all of the information that the server needs in a serializable format, such as packed containers or value types (for example, int, str, real, or boolean). When the server accesses these types, it doesn’t need to go back to the client the way that it does if you use an object type.

To eliminate the second type of callback, send any necessary information about the form to the method, and manipulate the form only when the call returns, instead of directly from the server. One of the best ways to defer operations on the client is by using the pack and unpack methods. With pack and unpack, you can serialize a class to a container and then deserialize it at the destination.

To ensure the minimum number of round-trips between the client and the server, group calls into one static server method and pass in the state necessary to perform the operation.
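For example, a single static server method can do all of the work and return value types in a container, instead of the client reading several values one call at a time. In the following sketch, MyCustHelper and its method names are hypothetical. CustTable::find and the CustGroup, CreditMax, and Currency fields are assumed to be available as in a standard Microsoft Dynamics AX 2012 installation.

```xpp
// Hypothetical helper class MyCustHelper.
// All lookups happen on the server; only value types come back.
public static server container getCreditInfo(CustAccount _accountNum)
{
    CustTable custTable = CustTable::find(_accountNum);

    return [custTable.CustGroup, custTable.CreditMax, custTable.Currency];
}

// Client code: one call to the server instead of one per value.
public static client void showCreditInfo(CustAccount _accountNum)
{
    CustGroupId  custGroup;
    AmountMST    creditMax;
    CurrencyCode currency;

    [custGroup, creditMax, currency] = MyCustHelper::getCreditInfo(_accountNum);
    info(strFmt("%1 %2 %3", custGroup, creditMax, currency));
}
```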

The NumberSeq::getNextNumForRefParmId method is an example of a static server method that is used for this purpose. This method contains the following line of code:

```xpp
return NumberSeq::newGetNum(CompanyInfo::numRefParmId()).num();
```

If this code ran on the client, it would cause four remote procedure call (RPC) round-trips: one for newGetNum, one for numRefParmId, one for num, and one to clean up the NumberSeq object that was created. By using a static server method, you can complete this operation in one RPC round-trip.

Another common example of grouping calls into chunks occurs when the client performs transaction tracking system (TTS) operations. Frequently, a developer writes code similar to that in the following example:

```xpp
ttsBegin;
record.update();
ttsCommit;
```

You can save two round-trips if you group this code into one static server call. TTS operations are always initiated on the server. To take advantage of this, do not call ttsBegin and ttsCommit from the client to start a database transaction when the ttsLevel is 0. Instead, perform the whole transaction inside a static server method.
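The following minimal sketch shows this grouping. It assumes a hypothetical helper class named MyCustHelper. The client makes one call, and the transaction starts, updates the record, and commits entirely on the server.

```xpp
// Hypothetical helper class MyCustHelper.
// The whole transaction runs on the server in a single call.
public static server void setCreditMax(CustAccount _accountNum, AmountMST _creditMax)
{
    CustTable custTable;

    ttsBegin;
    custTable = CustTable::find(_accountNum, true);   // Select for update
    custTable.CreditMax = _creditMax;
    custTable.update();
    ttsCommit;
}
```

From the client, the update then costs a single call, for example `MyCustHelper::setCreditMax('4000', 1000);`, where the account number is hypothetical.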

The global methods buf2con and con2buf are used in X++ to convert table buffers into containers, and vice versa. New functionality has been added to these methods, and they have been improved to run much faster than in previous versions of Microsoft Dynamics AX.

Converting table buffers into containers is useful if you need to send a table buffer across tiers (for example, between the client and the server). Sending a container is better than sending a table buffer because containers are passed by value and table buffers are passed by reference. Passing objects by reference across tiers causes a high number of RPC calls and degrades the performance of your application. Referencing objects that were created on different tiers causes an RPC call every time the other tier invokes one of the instance methods of the remote object. To improve performance, you can eliminate a callback by creating local copies of the table buffers, using buf2con to pack the table and con2buf to unpack it.

The following example shows a form running on the client and transferring data to the server for updating. The example illustrates how to transfer a buffer efficiently with a minimum number of RPC calls:

```xpp
public void updateResultField(Buf2conExample clientRecord)
{
    container packedRecord;

    // Pack the record before sending it to the server.
    packedRecord = buf2Con(clientRecord);

    // Send the packed record to the server and receive a container with the result.
    packedRecord = Buf2ConExampleServerClass::modifyResultFromPackedRecord(packedRecord);

    // Unpack the returned container into the client record.
    con2Buf(packedRecord, clientRecord);

    Buf2conExample_ds.refresh();
}
```

Modify the data on the server tier and then send a container back:

```xpp
public static server container modifyResultFromPackedRecord(container _packedRecord)
{
    Buf2conExample recordServerCopy = con2Buf(_packedRecord);

    Buf2ConExampleServerClass::modifyResult(recordServerCopy);
    return buf2Con(recordServerCopy);
}

public static server void modifyResult(Buf2conExample _clientTmpRecord)
{
    int n = _clientTmpRecord.A;

    _clientTmpRecord.Result = 0;
    while (n > 0)
    {
        _clientTmpRecord.Result = Buf2ConExampleServerClass::add(_clientTmpRecord);
        n--;
    }
}
```

The preceding section focused on limiting traffic between the client and server tiers. When a Microsoft Dynamics AX application runs, however, these are just two of the three tiers that are involved. The third tier is the database tier. You must optimize the exchange of packages between the server tier and the database tier, just as you do between the client tier and the server tier. This section explains how you can optimize transactions.

The Microsoft Dynamics AX run time helps you minimize calls made from the server tier to the database tier by supporting set-based operators and data caching. However, you should also do your part by reducing the amount of data that you send from the database tier to the server tier. The less data you send, the faster it is retrieved from the database and the fewer packages are sent back. These reductions also mean that less memory is consumed. All of these efforts promote faster execution of application logic, which results in smaller transaction scope, less locking and blocking, and improved concurrency and throughput.

Note

You can improve transaction performance further through the design of your application logic. For example, ensuring that various tables and records are always modified in the same order helps prevent deadlocks and ensuing retries. Spending time preparing the transactions to be as brief as possible before starting a transaction scope can reduce the locking scope and resulting blocking, ultimately improving the concurrency of the transactions. Database design factors, such as index design and use, are also important. However, these topics are beyond the scope of this book.

The X++ language contains operators and classes to enable set-based manipulation of the database. Set-based constructs have an advantage over record-based constructs—they make fewer round-trips to the database. The following X++ code example, which selects several records in the CustTable table and updates each record with a new value in the CreditMax field, illustrates how a round-trip is required when the select statement executes and each time the update statement executes:

```xpp
static void UpdateCustomers(Args _args)
{
    CustTable custTable;

    ttsBegin;
    while select forUpdate custTable
        where custTable.CustGroup == '20'   // Round-trips to the database
    {
        custTable.CreditMax = 1000;
        custTable.update();                 // Round-trip to the database
    }
    ttsCommit;
}
```

In a scenario in which 100 CustTable records qualify for the update because the CustGroup field value equals 20, the number of round-trips would be 101 (1 for the select statement and 100 for the update statements). The number of round-trips for the select statement might actually be slightly higher, depending on the number of CustTable records that can be retrieved simultaneously from the database and sent to the AOS.

Theoretically, you could rewrite the code in the preceding example to result in only one round-trip to the database by changing the X++ code, as indicated in the following example. This example shows how to use the set-based update_recordset operator, resulting in a single Transact-SQL UPDATE statement being passed to the database:

```xpp
static void UpdateCustomers(Args _args)
{
    CustTable custTable;

    ttsBegin;
    update_recordset custTable setting CreditMax = 1000
        where custTable.CustGroup == '20';   // Single round-trip to the database
    ttsCommit;
}
```

For several reasons, however, using a record buffer for the CustTable table doesn’t necessarily result in only one round-trip. The reasons are explained in the following sections about the set-based constructs that the Microsoft Dynamics AX run time supports. These sections also describe features that you can use to ensure a single round-trip to the database, even when you’re using a record buffer for the table.

Important

The set-based operations described in the following sections do not improve performance when used on inMemory temporary tables. The Microsoft Dynamics AX run time always downgrades set-based operations on inMemory temporary tables to record-based operations. This downgrade happens regardless of how the table became a temporary table (whether specified in metadata in the table’s properties, disabled because of the configuration of the Microsoft Dynamics AX application, or explicitly stated in the X++ code that references the table). Also, the downgrade always invokes the doInsert, doUpdate, and doDelete methods on the record buffer, so no application logic in the overridden methods is executed.

A set-based operation such as insert_recordset, update_recordset, or delete_from is not downgraded to a record-based operation on a subtype or supertype table unless a condition that would cause the operation to be downgraded is met. Both insert_recordset and update_recordset can insert or update all qualifying records in the specified table and its supertype tables, but not in any derived tables. The delete_from operator is treated differently: it deletes all qualifying records from the current table and its subtype and supertype tables to guarantee that the record is deleted completely from the database. For more information about the conditions that cause a downgrade, review the following sections.

The insert_recordset operator enables the insertion of multiple records into a table in one round-trip to the database. The following X++ code illustrates the use of insert_recordset. The code copies entries for one item in the InventTable table and the InventSum table into a temporary table for future use:

```xpp
static void CopyItemInfo(Args _args)
{
    InventTable                inventTable;
    InventSum                  inventSum;
    InsertInventTableInventSum insertInventTableInventSum;

    // insert_recordset uses only one round-trip for the copy operation.
    // A record-based insert would need one round-trip per record in InventSum.
    ttsBegin;
    insert_recordset insertInventTableInventSum (ItemId, AltItemId, PhysicalValue, PostedValue)
        select ItemId, AltItemId from inventTable
            where inventTable.ItemId == '1001'
        join PhysicalValue, PostedValue from inventSum
            where inventSum.ItemId == inventTable.ItemId;
    ttsCommit;

    select count(RecId) from insertInventTableInventSum;
    info(int642str(insertInventTableInventSum.RecId));

    // Additional code to use the copied data.
}
```

The round-trip to the database involves the execution of three statements in the database:

The select part of the insert_recordset statement executes, and the selected rows are inserted into a new temporary table in the database. In Transact-SQL, the syntax of this statement is similar to SELECT <field list> INTO <temporary table> FROM <source tables> WHERE <predicates>.

The records from the temporary table are inserted directly into the target table using syntax such as INSERT INTO <target table> (<field list>) SELECT <field list> FROM <temporary table>.

The temporary table is dropped with the execution of DROP TABLE <temporary table>.

This approach has a tremendous performance advantage over inserting the records one by one, as shown in the following X++ code, which addresses the same scenario:

```xpp
static void CopyItemInfoLineBased(Args _args)
{
    InventTable                inventTable;
    InventSum                  inventSum;
    InsertInventTableInventSum insertInventTableInventSum;

    ttsBegin;
    while select ItemId, AltItemId from inventTable
        where inventTable.ItemId == '1001'
        join PhysicalValue, PostedValue from inventSum
            where inventSum.ItemId == inventTable.ItemId
    {
        insertInventTableInventSum.ItemId        = inventTable.ItemId;
        insertInventTableInventSum.AltItemId     = inventTable.AltItemId;
        insertInventTableInventSum.PhysicalValue = inventSum.PhysicalValue;
        insertInventTableInventSum.PostedValue   = inventSum.PostedValue;
        insertInventTableInventSum.insert();    // One round-trip per record
    }
    ttsCommit;

    select count(RecId) from insertInventTableInventSum;
    info(int642str(insertInventTableInventSum.RecId));

    // ... Additional code to use the copied data
}
```

If the InventSum table contains 10 entries for which ItemId equals 1001, this scenario would result in one round-trip for the select statement and an additional 10 round-trips for the inserts, totaling 11 round-trips.

The insert_recordset operation can be downgraded from a set-based operation to a record-based operation if any of the following conditions is true:

The table is cached by using the EntireTable setting.

The insert method or the aosValidateInsert method is overridden on the target table.

Alerts are set to be triggered by inserts into the target table.

The database log is configured to log inserts into the target table.

Record-level security (RLS) is enabled on the target table. If RLS is enabled only on the source table or tables, insert_recordset isn’t downgraded to a row-by-row operation.

The ValidTimeStateFieldType property for the target table is not set to None.

The Microsoft Dynamics AX run time automatically handles the downgrade and internally executes a scenario similar to the while select scenario shown in the preceding example.

Important

When the Microsoft Dynamics AX run time checks for overridden methods, it determines only whether the methods are implemented. It doesn’t determine whether the overridden methods contain only the default X++ code. A method is therefore considered to be overridden by the run time even though it contains the following X++ code:

```xpp
public void insert()
{
    super();
}
```

Any set-based insert is then downgraded.

Unless a table is cached by using the EntireTable setting, you can avoid the downgrade caused by the other conditions mentioned earlier. The record buffer contains methods that turn off the checks that the run time performs when determining whether to downgrade the insert_recordset operation:

Calling skipDataMethods(true) prevents the check that determines whether the insert method is overridden.

Calling skipAosValidation(true) prevents the check on the aosValidateInsert method.

Calling skipDatabaseLog(true) prevents the check that determines whether the database log is configured to log inserts into the table.

Calling skipEvents(true) prevents the check that determines whether any alerts have been set to be triggered by the insert event on the table.
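The following sketch shows all four calls together on the target buffer of an insert_recordset statement. It reuses the tables from the preceding examples, and the job name is hypothetical. These calls do not help when the table is cached by using the EntireTable setting. The cautions in the Important note later in this section apply to every one of these calls.

```xpp
static void CopyItemInfoSkipAllChecks(Args _args)
{
    InventTable                inventTable;
    InventSum                  inventSum;
    InsertInventTableInventSum insertInventTableInventSum;

    ttsBegin;
    // Each call turns off one run-time check that could downgrade the operation.
    insertInventTableInventSum.skipDataMethods(true);    // Overridden insert method
    insertInventTableInventSum.skipAosValidation(true);  // aosValidateInsert method
    insertInventTableInventSum.skipDatabaseLog(true);    // Database log for inserts
    insertInventTableInventSum.skipEvents(true);         // Alerts on insert

    insert_recordset insertInventTableInventSum (ItemId, AltItemId, PhysicalValue, PostedValue)
        select ItemId, AltItemId from inventTable
            where inventTable.ItemId == '1001'
        join PhysicalValue, PostedValue from inventSum
            where inventSum.ItemId == inventTable.ItemId;
    ttsCommit;
}
```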

The following X++ code includes a call to skipDataMethods(true). This call ensures that the insert_recordset operation is not downgraded, even though the insert method is overridden on the InsertInventTableInventSum table:

```xpp
static void CopyItemInfoSkipDataMethod(Args _args)
{
    InventTable                inventTable;
    InventSum                  inventSum;
    InsertInventTableInventSum insertInventTableInventSum;

    ttsBegin;
    // Skip override check on insert.
    insertInventTableInventSum.skipDataMethods(true);

    insert_recordset insertInventTableInventSum (ItemId, AltItemId, PhysicalValue, PostedValue)
        select ItemId, AltItemId from inventTable
            where inventTable.ItemId == '1001'
        join PhysicalValue, PostedValue from inventSum
            where inventSum.ItemId == inventTable.ItemId;
    ttsCommit;

    select count(RecId) from insertInventTableInventSum;
    info(int642str(insertInventTableInventSum.RecId));

    // ... Additional code to use the copied data
}
```

Important

Use the skip methods with extreme caution because they can prevent the logic in the insert method from being executed, prevent events from being raised, and potentially, prevent the database log from being written to.

If you override the insert method, use the cross-reference system to determine whether any X++ code calls skipDataMethods(true). If you don’t, you might not notice code that bypasses your insert method. Also, when you implement calls to skipDataMethods(true), make sure that skipping the X++ code in the overridden insert method will not cause data inconsistency.

You can use skip methods only to influence whether the insert_recordset operation is downgraded. If you call skipDataMethods(true) to prevent a downgrade because the insert method is overridden, use the Microsoft Dynamics AX Trace Parser to make sure that the operation has not been downgraded. The operation is still downgraded if, for example, the database log is configured to log inserts into the table. In the previous example, if the database log were configured to log inserts into the InsertInventTableInventSum table, the insert_recordset operation would revert to a while select scenario. The overridden insert method on that table would then be called for each record. For more information about the Trace Parser, see the section Performance monitoring tools later in this chapter.

Since the Microsoft Dynamics AX 2009 release, the insert_recordset operator has supported literals. Support for literals was introduced primarily to support upgrade scenarios in which the target table is populated with records from one or more source tables (using joins) and one or more columns in the target table must be populated with a literal value that doesn’t exist in the source. The following code example illustrates the use of literals in insert_recordset:

static void CopyItemInfoLiteralSample(Args _args)
{
    InventTable                 inventTable;
    InventSum                   inventSum;
    InsertInventTableInventSum  insertInventTableInventSum;
    boolean                     flag = true;    // Literal value for the Flag column

    ttsBegin;

    // The local variable flag supplies a value that doesn't exist in the source tables.
    insert_recordset insertInventTableInventSum
        (ItemId, AltItemId, PhysicalValue, PostedValue, Flag)
        select ItemId, AltItemId from inventTable
            where inventTable.ItemId == '1001'
        join PhysicalValue, PostedValue, flag from inventSum
            where inventSum.ItemId == inventTable.ItemId;

    ttsCommit;

    select firstonly ItemId, Flag from insertInventTableInventSum;

    info(strFmt('%1,%2', insertInventTableInventSum.ItemId, insertInventTableInventSum.Flag));

    // ... Additional code to utilize the copied data
}

The behavior of the update_recordset operator is similar to that of the insert_recordset operator. This similarity is illustrated by the following X++ code, in which all rows that have been inserted for one ItemId are updated and flagged for further processing:

static void UpdateCopiedData(Args _args)
{
    InventTable                 inventTable;
    InventSum                   inventSum;
    InsertInventTableInventSum  insertInventTableInventSum;

    // Code assumes InsertInventTableInventSum is populated.

    // Set-based update operation.
    ttsBegin;

    update_recordSet insertInventTableInventSum setting Flag = true
        where insertInventTableInventSum.ItemId == '1001';

    ttsCommit;
}

The execution of update_recordset results in one statement being passed to the database, which in Transact-SQL uses syntax similar to UPDATE <table> SET <field and expression list> WHERE <predicates>. As with insert_recordset, update_recordset provides a tremendous performance improvement over the record-based version that updates each record individually. This improvement is shown in the following X++ code, which serves the same purpose as the preceding example. The code selects all of the records that qualify for update, sets the Flag field to true, and updates each record:

static void UpdateCopiedDataLineBased(Args _args)
{
    InventTable                 inventTable;
    InventSum                   inventSum;
    InsertInventTableInventSum  insertInventTableInventSum;

    // ... Code assumes InsertInventTableInventSum is populated

    ttsBegin;

    while select forUpdate insertInventTableInventSum
        where insertInventTableInventSum.ItemId == '1001'
    {
        insertInventTableInventSum.Flag = true;
        insertInventTableInventSum.update();
    }

    ttsCommit;
}

If ten records qualify for the update, one select statement and ten update statements are passed to the database, rather than the single update statement that would be passed with update_recordset.
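The statement counts above can be checked without an AOS. The following Python sketch uses SQLite (the standard-library sqlite3 module) as a stand-in database and counts the statements it receives through Connection.set_trace_callback. The table and column names are hypothetical simplifications of InsertInventTableInventSum. SQLite is not the database that Microsoft Dynamics AX uses, so only the statement counts carry over, not the timings.

```python
import sqlite3


def count_statements(use_set_based: bool, qualifying_rows: int = 10) -> int:
    """Return how many SELECT/UPDATE statements one flagging job sends to SQLite."""
    # isolation_level=None means autocommit, so no implicit BEGIN statements are traced.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute("CREATE TABLE copied (item_id TEXT, flag INTEGER DEFAULT 0)")
    rows = [("1001",)] * qualifying_rows + [("2002",)] * 5
    conn.executemany("INSERT INTO copied (item_id) VALUES (?)", rows)

    sent = []
    conn.set_trace_callback(sent.append)  # record every statement SQLite runs from here on

    if use_set_based:
        # Counterpart of update_recordset: one statement for all qualifying rows.
        conn.execute("UPDATE copied SET flag = 1 WHERE item_id = '1001'")
    else:
        # Counterpart of while select forupdate ... update(): one SELECT, then one UPDATE per row.
        ids = [r[0] for r in conn.execute(
            "SELECT rowid FROM copied WHERE item_id = '1001'").fetchall()]
        for rowid in ids:
            conn.execute("UPDATE copied SET flag = 1 WHERE rowid = ?", (rowid,))

    conn.set_trace_callback(None)
    flagged = conn.execute("SELECT COUNT(*) FROM copied WHERE flag = 1").fetchone()[0]
    assert flagged == qualifying_rows  # both versions leave the same data behind
    conn.close()
    return sum(1 for s in sent if s.lstrip().upper().startswith(("SELECT", "UPDATE")))
```

With ten qualifying rows, the set-based variant sends one statement. The row-by-row variant sends one select plus ten updates, which matches the counts described in the text.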

The update_recordset operation can be downgraded if specific methods are overridden or if Microsoft Dynamics AX is configured in specific ways. The update_recordset operation is downgraded if any of the following conditions is true:

The table is cached by using the EntireTable setting.

The update method, the aosValidateUpdate method, or the aosValidateRead method is overridden on the target table.

Alerts are set up to be triggered by update queries on the target table.

The database log is configured to log update queries on the target table.

RLS is enabled on the target table.

The ValidTimeStateFieldType property for a table is not set to None.

The Microsoft Dynamics AX run time automatically handles the downgrade and internally executes a scenario similar to the while select scenario shown in the earlier example.

As with the insert_recordset operator, you can avoid a downgrade unless the table is cached by using the EntireTable setting. The record buffer contains methods that turn off the checks that the run time performs when determining whether to downgrade the update_recordset operation:

Calling skipDataMethods(true) prevents the check that determines whether the update method is overridden.

Calling skipAosValidation(true) prevents the checks on the aosValidateUpdate and aosValidateRead methods.

Calling skipDatabaseLog(true) prevents the check that determines whether the database log is configured to log updates to records in the table.

Calling skipEvents(true) prevents the check to determine whether any alerts have been set to be triggered by the update event on the table.

As explained earlier, use the skip methods with caution. Again, using the skip methods only influences whether the update_recordset operation is downgraded to a while select operation. If the operation is downgraded, database logging, alerting, and execution of overridden methods occur even though the respective skip methods have been called.
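The downgrade rules for update_recordset can be summarized as a small decision function. The following is a hypothetical Python model of the conditions listed in this section, not an AX API. It assumes that record-level security and the ValidTimeStateFieldType setting always force a downgrade, because the text names no skip method for them.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TableSetup:
    """Hypothetical description of the target table's configuration."""
    entire_table_cache: bool = False
    overrides_update: bool = False
    overrides_aos_validate: bool = False   # aosValidateUpdate or aosValidateRead
    update_alerts: bool = False
    database_log_updates: bool = False
    rls_enabled: bool = False
    valid_time_state: bool = False         # ValidTimeStateFieldType is not None


@dataclass
class Skips:
    """Which skip methods were called on the record buffer before update_recordset."""
    data_methods: bool = False     # skipDataMethods(true)
    aos_validation: bool = False   # skipAosValidation(true)
    database_log: bool = False     # skipDatabaseLog(true)
    events: bool = False           # skipEvents(true)


def downgrade_reasons(t: TableSetup, s: Skips) -> List[str]:
    """Return why update_recordset would fall back to while select (empty list: no downgrade)."""
    reasons = []
    if t.entire_table_cache:
        reasons.append("EntireTable cache")         # no skip method avoids this one
    if t.overrides_update and not s.data_methods:
        reasons.append("update overridden")
    if t.overrides_aos_validate and not s.aos_validation:
        reasons.append("aosValidate* overridden")
    if t.update_alerts and not s.events:
        reasons.append("alerts on update")
    if t.database_log_updates and not s.database_log:
        reasons.append("database log on update")
    if t.rls_enabled:
        reasons.append("RLS enabled")               # assumption: not skippable
    if t.valid_time_state:
        reasons.append("valid time state table")    # assumption: not skippable
    return reasons
```

The model shows why a Trace Parser check is still needed after calling skipDataMethods(true). Any other condition that has not been skipped, such as the database log, still causes the downgrade.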

Tip

If an update_recordset operation is downgraded, the select statement uses the concurrency model specified at the table level. You can apply the optimisticlock and pessimisticlock keywords to the update_recordset statements and enforce a specific concurrency model to be used in case of a downgrade.

Microsoft Dynamics AX supports inner and outer joins in update_recordset. The support for joins in update_recordset enables an application to perform set-based operations when the source data is fetched from more than one related data source.

The following example illustrates the use of joins with update_recordset:

static void UpdateCopiedDataJoin(Args _args)
{
    InventTable                 inventTable;
    InventSum                   inventSum;
    InsertInventTableInventSum  insertInventTableInventSum;

    // ... Code assumes InsertInventTableInventSum is populated

    // Set-based update operation with join.
    ttsBegin;

    update_recordSet insertInventTableInventSum
        setting Flag = true,
                DiffAvailOrderedPhysical = inventSum.AvailOrdered - inventSum.AvailPhysical
        join inventSum
            where inventSum.ItemId == insertInventTableInventSum.ItemId &&
                  inventSum.AvailOrdered > inventSum.AvailPhysical;

    ttsCommit;
}

The delete_from operator is similar to the insert_recordset and update_recordset operators in that it passes a single statement to the database to delete multiple rows, as shown in the following code:

static void DeleteCopiedData(Args _args)
{
    InventTable                 inventTable;
    InventSum                   inventSum;
    InsertInventTableInventSum  insertInventTableInventSum;

    // ... Code assumes InsertInventTableInventSum is populated

    // Set-based delete operation
    ttsBegin;

    delete_from insertInventTableInventSum
        where insertInventTableInventSum.ItemId == '1001';

    ttsCommit;
}

This code passes a statement to Microsoft SQL Server in a syntax similar to DELETE <table> WHERE <predicates> and performs the same actions as the following X++ code, which uses record-by-record deletes:

static void DeleteCopiedDataLineBased(Args _args)
{
    InventTable                 inventTable;
    InventSum                   inventSum;
    InsertInventTableInventSum  insertInventTableInventSum;

    // ... Code assumes InsertInventTableInventSum is populated

    ttsBegin;

    while select forUpdate insertInventTableInventSum
        where insertInventTableInventSum.ItemId == '1001'
    {
        insertInventTableInventSum.delete();
    }

    ttsCommit;
}

Again, delete_from is preferable for performance because it passes a single statement to the database instead of the multiple statements that the record-by-record version passes.

As with the insert_recordset and update_recordset operations, the delete_from operation can be downgraded—and for similar reasons. A downgrade occurs if any of the following conditions is true:

The table is cached by using the EntireTable setting.

The delete method, the aosValidateDelete method, or the aosValidateRead method is overridden on the target table.

Alerts are set up to be triggered by deletions from the target table.

The database log is configured to log deletions from the target table.

The ValidTimeStateFieldType property for a table is not set to None.

A downgrade also occurs if delete actions are defined on the table. The Microsoft Dynamics AX run time automatically handles the downgrade and internally executes a scenario similar to the while select operation shown in the earlier example.

You can avoid a downgrade caused by these conditions unless the table is cached by using the EntireTable setting. The record buffer contains methods that turn off the checks that the run time performs when determining whether to downgrade the delete_from operation, as follows:

Calling skipDataMethods(true) prevents the check that determines whether the delete method is overridden.

Calling skipAosValidation(true) prevents the checks on the aosValidateDelete and aosValidateRead methods.

Calling skipDatabaseLog(true) prevents the check that determines whether the database log is configured to log the deletion of records in the table.

Calling skipEvents(true) prevents the check that determines whether any alerts have been set to be triggered by the delete event on the table.

The preceding descriptions about the use of the skip methods, the no-skipping behavior in the event of downgrade, and the concurrency model for the update_recordset operator are equally valid for the use of the delete_from operator.

Note

The record buffer also contains a skipDeleteMethod method. Calling the method as skipDeleteMethod(true) has the same effect as calling skipDataMethods(true). It invokes the same Microsoft Dynamics AX run-time logic, so you can use skipDeleteMethod in combination with insert_recordset and update_recordset, although it might not improve the readability of the X++ code.

In addition to the set-based operators, you can use the RecordInsertList and RecordSortedList classes when inserting multiple records into a table. When the records are ready to be inserted, the Microsoft Dynamics AX run time packs multiple records into a single package and sends it to the database. The database then executes an individual insert operation for each record in the package. The following example illustrates this process. A RecordInsertList object is instantiated, and each record to be inserted into the database is added to it. When all records have been added to the object, the insertDatabase method is called to ensure that all remaining records are inserted into the database.

static void CopyItemInfoRIL(Args _args)
{
    InventTable                     inventTable;
    InventSum                       inventSum;
    InsertInventTableInventSumRT    insertInventTableInventSumRT;
    RecordInsertList                ril;

    ttsBegin;

    ril = new RecordInsertList(tableNum(InsertInventTableInventSumRT));

    while select ItemId, AltItemId from inventTable where inventTable.ItemId == '1001'
        join PhysicalValue, PostedValue from inventSum
            where inventSum.ItemId == inventTable.ItemId
    {
        insertInventTableInventSumRT.ItemId        = inventTable.ItemId;
        insertInventTableInventSumRT.AltItemId     = inventTable.AltItemId;
        insertInventTableInventSumRT.PhysicalValue = inventSum.PhysicalValue;
        insertInventTableInventSumRT.PostedValue   = inventSum.PostedValue;

        // Insert records if package is full
        ril.add(insertInventTableInventSumRT);
    }

    // Insert remaining records into database
    ril.insertDatabase();

    ttsCommit;

    select count(RecId) from insertInventTableInventSumRT;
    info(int642str(insertInventTableInventSumRT.RecId));

    // Additional code to use the copied data.
}

Based on the maximum buffer size that is configured for the server, the Microsoft Dynamics AX run time determines the number of records in a buffer as a function of the size of the records and the buffer size. If the buffer is full, the records in the RecordInsertList object are packed, passed to the database, and inserted individually on the database tier. This check is made when the add method is called. When the insertDatabase method is called from application logic, the remaining records are inserted with the same mechanism.

Using these classes has an advantage over using while select: fewer round-trips are made from the AOS to the database because multiple records are sent simultaneously. However, the number of INSERT statements in the database remains the same.
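The following Python sketch models the batching behavior in a few lines. It rests on simplifying assumptions. Capacity is counted in records, whereas the run time derives it from the record size and the configured buffer size. InsertBufferModel is a hypothetical name, not part of any AX API.

```python
from typing import Any, List


class InsertBufferModel:
    """Toy model of RecordInsertList: buffer records, send a package when the buffer is full."""

    def __init__(self, capacity: int):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity          # assumption: measured in records, not bytes
        self.pending: List[Any] = []
        self.stored: List[Any] = []       # what the database would return to a select
        self.round_trips = 0              # packages sent from the AOS to the database
        self.insert_statements = 0        # the database still runs one INSERT per record

    def _flush(self) -> None:
        if not self.pending:
            return
        self.round_trips += 1
        self.insert_statements += len(self.pending)
        self.stored.extend(self.pending)
        self.pending = []

    def add(self, record: Any) -> None:
        self.pending.append(record)
        if len(self.pending) >= self.capacity:   # the check happens inside add
            self._flush()

    def insert_database(self) -> int:
        self._flush()                            # send whatever is still buffered
        return len(self.stored)
```

After 25 calls to add with a capacity of 10, only 20 records are stored, which is why the later Note warns against selecting records before insertDatabase runs. The call to insert_database then sends the last 5 records in a third round-trip. All 25 INSERT statements are still executed.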

Note

Because the timing of insertion into the database depends on the size of the record buffer and the package, don’t expect a record to be selectable from the database until the insertDatabase method has been called.

You can rewrite the preceding example by using the RecordSortedList class instead of RecordInsertList, as shown in the following X++ code:

public static server void CopyItemInfoRSL()
{
    InventTable                     inventTable;
    InventSum                       inventSum;
    InsertInventTableInventSumRT    insertInventTableInventSumRT;
    RecordSortedList                rsl;

    ttsBegin;

    rsl = new RecordSortedList(tableNum(InsertInventTableInventSumRT));
    rsl.sortOrder(fieldNum(InsertInventTableInventSumRT, PostedValue));

    while select ItemId, AltItemId from inventTable where inventTable.ItemId == '1001'
        join PhysicalValue, PostedValue from inventSum
            where inventSum.ItemId == inventTable.ItemId
    {
        insertInventTableInventSumRT.ItemId        = inventTable.ItemId;
        insertInventTableInventSumRT.AltItemId     = inventTable.AltItemId;
        insertInventTableInventSumRT.PhysicalValue = inventSum.PhysicalValue;
        insertInventTableInventSumRT.PostedValue   = inventSum.PostedValue;

        // No records are inserted yet.
        rsl.ins(insertInventTableInventSumRT);
    }

    // All records are inserted into the database.
    rsl.insertDatabase();

    ttsCommit;

    select count(RecId) from insertInventTableInventSumRT;
    info(int642str(insertInventTableInventSumRT.RecId));

    // Additional code to utilize the copied data
}

When the application logic uses a RecordSortedList object, the records aren't passed and inserted in the database until the insertDatabase method is called.
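RecordSortedList differs from RecordInsertList in when records are sent. The following hypothetical Python model reflects the description above: ins only collects records, and nothing reaches the database until insert_database runs. The model also assumes that the records are written in the order that sortOrder defines. The class and method names are illustrative, not the AX API.

```python
from typing import Any, Callable, List


class SortedListModel:
    """Toy model of RecordSortedList: hold every record until insert_database is called."""

    def __init__(self, sort_key: Callable[[Any], Any]):
        self.sort_key = sort_key        # plays the role of rsl.sortOrder(...)
        self.pending: List[Any] = []
        self.stored: List[Any] = []     # what the database would return to a select

    def ins(self, record: Any) -> None:
        self.pending.append(record)     # nothing is sent to the database here

    def insert_database(self) -> int:
        batch = sorted(self.pending, key=self.sort_key)   # assumption: sorted insert order
        self.stored.extend(batch)
        self.pending = []
        return len(batch)
```

Unlike the buffered model of RecordInsertList, this model never flushes early, no matter how many records are collected.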

Both RecordInsertList objects and RecordSortedList objects can be downgraded in application logic to record-by-record inserts, in which each record is sent in a separate round-trip to the database and the INSERT statement is then executed. A downgrade occurs if the insert method or the aosValidateInsert method is overridden, or if the table contains fields of the type container or memo. However, no downgrade occurs if the database log is configured to log inserts or if alerts are set to be triggered by the insert event on the table. The one exception is when logging or alerts have been configured and the table contains CreatedDateTime or ModifiedDateTime columns; in this case, record-by-record inserts are performed. The database logging and alerts occur on a record-by-record basis after the records have been sent and inserted into the database.

When instantiating the RecordInsertList object, you can specify that the insert and aosValidateInsert methods be skipped. You can also specify that the database logging and eventing be skipped if the operation isn’t downgraded.
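The rules in the two preceding paragraphs can be condensed into a single decision function. This is a hypothetical Python model, and all parameter names are illustrative. It assumes two things that the text does not state outright. First, skipping the insert and aosValidateInsert methods at instantiation removes those two downgrade causes. Second, skipping logging or eventing does not affect the CreatedDateTime/ModifiedDateTime exception.

```python
def batched_insert_mode(overrides_insert: bool = False,
                        overrides_aos_validate_insert: bool = False,
                        has_container_or_memo: bool = False,
                        database_log_inserts: bool = False,
                        insert_alerts: bool = False,
                        has_created_or_modified_datetime: bool = False,
                        skip_insert_method: bool = False,
                        skip_aos_validation: bool = False) -> str:
    """Return 'batched' or 'record-by-record' for a RecordInsertList/RecordSortedList insert."""
    if has_container_or_memo:
        return "record-by-record"
    if overrides_insert and not skip_insert_method:
        return "record-by-record"
    if overrides_aos_validate_insert and not skip_aos_validation:
        return "record-by-record"
    # Logging or alerts alone don't downgrade; combined with the datetime columns they do.
    if (database_log_inserts or insert_alerts) and has_created_or_modified_datetime:
        return "record-by-record"
    return "batched"
```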

Code is often not converted to a set-based operation because the logic seems too complex. However, an if condition, for example, can often be moved into the where clause of a query. If a scenario requires an if/else decision, you can express it with two queries, such as two update_recordset statements with complementary where clauses. Information needed from other tables can be obtained through joins instead of being looked up with a find method. In Microsoft Dynamics AX 2012, insert_recordset and TempDB temporary tables further extend the possibilities for moving code into set-based operations.
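The following sketch shows the if/else-to-two-updates rewrite using SQLite (Python's sqlite3) as a stand-in database. The tables, the 10,000 threshold, and the 20,000 "else" value are hypothetical. The row-by-row version looks up a balance per record, like a find method, and branches in code. The set-based version replaces the branch with two UPDATE statements whose where clauses are complementary, and it replaces the per-row lookup with a subquery against the balance table.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE cust (accountnum TEXT PRIMARY KEY, creditmax INTEGER DEFAULT 0);
        CREATE TABLE balance (accountnum TEXT PRIMARY KEY, amount INTEGER);
        INSERT INTO cust (accountnum) VALUES ('1101'), ('1102'), ('1103');
        INSERT INTO balance VALUES ('1101', 5000), ('1102', 25000), ('1103', 9999);
    """)
    return conn


def row_by_row(conn: sqlite3.Connection) -> None:
    for (acc,) in conn.execute("SELECT accountnum FROM cust").fetchall():
        # Per-row lookup, comparable to calling a find method for every record.
        amount = conn.execute("SELECT amount FROM balance WHERE accountnum = ?",
                              (acc,)).fetchone()[0]
        new_max = 50000 if amount < 10000 else 20000     # the if/else lives in code
        conn.execute("UPDATE cust SET creditmax = ? WHERE accountnum = ?", (new_max, acc))
    conn.commit()


def set_based(conn: sqlite3.Connection) -> None:
    # The if branch and the else branch become two updates with complementary predicates.
    conn.execute("UPDATE cust SET creditmax = 50000 WHERE accountnum IN "
                 "(SELECT accountnum FROM balance WHERE amount < 10000)")
    conn.execute("UPDATE cust SET creditmax = 20000 WHERE accountnum IN "
                 "(SELECT accountnum FROM balance WHERE amount >= 10000)")
    conn.commit()


def result(conn: sqlite3.Connection) -> dict:
    return dict(conn.execute("SELECT accountnum, creditmax FROM cust ORDER BY accountnum"))
```

Both functions leave identical data. The set-based version always issues two statements, however many customers there are.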

Some things still might seem difficult to transfer to a set-based operation, such as performing calculations on the columns in a select statement. For this reason, Microsoft Dynamics AX 2012 offers a feature for views that is called computed columns, and you can use this feature to transfer even fairly complex logic into set-based operations. Computed columns can also provide performance advantages when used as an alternative to display methods on read-only data sources. Imagine the following task: Find all customers who bought products for more than $100,000 and all customers who bought products for more than $1,000,000. Those customers are treated as VIP customers who then get certain rebates.

In earlier versions of Microsoft Dynamics AX, the X++ code to set these values would have looked like the following example:

public static server void demoOld()
{
    SalesLine sl;
    CustTable ct;
    vipparm   vp;
    int64     total;

    vp = vipparm::find();

    ttsBegin;

    // One + n round-trips per Customer Account in the salesline table.
    while select CustAccount, sum(SalesQty), sum(SalesPrice) from sl group by sl.CustAccount
    {
        // Necessary to select for update causing n additional round-trips.
        ct = CustTable::find(sl.CustAccount, true);
        ct.VIPStatus = 0;

        if ((sl.SalesQty * sl.SalesPrice) >= vp.UltimateVIP)
            ct.VIPStatus = 2;
        else if ((sl.SalesQty * sl.SalesPrice) >= vp.VIP)
            ct.VIPStatus = 1;

        // Another n round-trips for the update.
        if (ct.VIPStatus != 0)
            ct.update();
    }

    ttsCommit;
}

You could easily replace this code with two direct Transact-SQL statements to make it far more efficient. The statement for the highest status (2) would look like the following; the statement for status 1 differs only in the status value:

UPDATE CUSTTABLE SET VIPSTATUS = 2
FROM (SELECT CUSTACCOUNT,
             SUM(SALESQTY)*SUM(SALESPRICE) AS TOTAL,
             VIPSTATUS = CASE WHEN SUM(SALESQTY)*SUM(SALESPRICE) > 1000000 THEN 2
                              WHEN SUM(SALESQTY)*SUM(SALESPRICE) > 100000 THEN 1
                              ELSE 0 END
      FROM SALESLINE
      GROUP BY CUSTACCOUNT) AS VC
WHERE VC.VIPSTATUS = 2
  AND CUSTTABLE.ACCOUNTNUM = VC.CUSTACCOUNT
  AND DATAAREAID = N'CEU'

Note

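This code filters on dataAreaId only for CUSTTABLE, not for SALESLINE, and it ignores the Partition field. These omissions highlight the weakness of direct Transact-SQL: the data access logic of the Microsoft Dynamics AX run time is not enforced.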

In Microsoft Dynamics AX 2012, with the help of computed columns, you can replace this code with two set-based statements.

To create these statements, you first need to create an AOT query because views themselves cannot contain a group by statement. Further, you need a parameter table that holds the information about who counts as a VIP customer for each company (Figure 13-4). Then, you need to join this information together so that it is available at run time.

The code for the computed column is shown here:

private static server str compColQtyPrice()
{
    str sReturn, sQty, sPrice, ultimateVIP, VIP;
    Map m = new Map(Types::String, Types::String);

    sQty = SysComputedColumn::returnField(tableStr(mySalesLineView),
                                          identifierStr(SalesLine_1),
                                          fieldStr(SalesLine, SalesQty));

    sPrice = SysComputedColumn::returnField(tableStr(mySalesLineView),
                                            identifierStr(SalesLine_1),
                                            fieldStr(SalesLine, SalesPrice));

    ultimateVIP = SysComputedColumn::returnField(tableStr(mySalesLineView),
                                                 identifierStr(Vipparm_1),
                                                 fieldStr(vipparm, ultimateVIP));

    VIP = SysComputedColumn::returnField(tableStr(mySalesLineView),
                                         identifierStr(Vipparm_1),
                                         fieldStr(vipparm, VIP));

    m.insert(SysComputedColumn::sum(sQty) + '*' + SysComputedColumn::sum(sPrice) +
             ' > ' + ultimateVIP, int2str(VipStatus::UltimateVIP));

    m.insert(SysComputedColumn::sum(sQty) + '*' + SysComputedColumn::sum(sPrice) +
             ' > ' + VIP, int2str(VipStatus::VIP));

    return SysComputedColumn::switch('', m, '0');
}

The next step is to add the parameter table to a view and create the necessary computed column, as shown in Figure 13-5.

The view in SQL Server looks like this:

SELECT T1.CUSTACCOUNT AS CUSTACCOUNT, T1.DATAAREAID AS DATAAREAID, 1010 AS RECID,
       T2.DATAAREAID AS DATAAREAID#2, T2.VIP AS VIP, T2.ULTIMATEVIP AS ULTIMATEVIP,
       (CAST((CASE WHEN SUM(T1.SALESQTY)*SUM(T1.SALESPRICE) > T2.ULTIMATEVIP THEN 2
                   WHEN SUM(T1.SALESQTY)*SUM(T1.SALESPRICE) > T2.VIP THEN 1
                   ELSE 0 END) AS NVARCHAR(10))) AS VIPSTATUS
FROM SALESLINE T1
CROSS JOIN VIPPARM T2
GROUP BY T1.CUSTACCOUNT, T1.DATAAREAID, T2.DATAAREAID, T2.VIP, T2.ULTIMATEVIP

Now you can turn the record-based update code used earlier into efficient, working set-based code:

public static server void demoNew()
{
    mySalesLineView mySLV;
    CustTable       ct;

    ct.skipDataMethods(true);

    update_recordSet ct setting VipStatus = VipStatus::UltimateVIP
        join mySLV
            where ct.AccountNum == mySLV.CustAccount &&
                  mySLV.VipStatus == int2str(enum2int(VipStatus::UltimateVIP));

    update_recordSet ct setting VipStatus = VipStatus::VIP
        join mySLV
            where ct.AccountNum == mySLV.CustAccount &&
                  mySLV.VipStatus == int2str(enum2int(VipStatus::VIP));
}

Executing the code shows the difference in timing:

public static void main(Args _args)
{
    int tickcnt;

    DemoClass::resetCusttable();
    tickcnt = WinAPI::getTickCount();
    DemoClass::demoOld();
    info('Line based: ' + int2str(WinAPI::getTickCount() - tickcnt));

    DemoClass::resetCusttable();
    tickcnt = WinAPI::getTickCount();
    DemoClass::demoNew();
    info('Set based: ' + int2str(WinAPI::getTickCount() - tickcnt));
}

The execution time of the operation is as follows:

Record-based 1,514 milliseconds

Set-based 171 milliseconds

Note that this code ran on demo data. Imagine running similar code on an actual database with hundreds of thousands of sales orders and customers.
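The following sketch shows the shape of the set-based VIP classification in plain SQL, using SQLite (Python's sqlite3) as a stand-in database. It is a simplified illustration, not the computed-column approach itself, and it makes several assumptions. It issues one UPDATE with a CASE expression instead of two statements. The thresholds are hard-coded instead of read from vipparm. There is no company or partition filtering. It keeps the chapter's SUM(SALESQTY)*SUM(SALESPRICE) formula.

```python
import sqlite3

ULTIMATE_VIP = 1_000_000   # in the chapter these come from the vipparm table
VIP = 100_000


def demo_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE custtable (accountnum TEXT PRIMARY KEY, vipstatus INTEGER DEFAULT 0);
        CREATE TABLE salesline (custaccount TEXT, salesqty REAL, salesprice REAL);
        INSERT INTO custtable (accountnum) VALUES ('A'), ('B'), ('C'), ('D');
        INSERT INTO salesline VALUES ('A', 5, 10000), ('A', 5, 10000),
                                     ('B', 10, 200000), ('C', 1, 100);
    """)
    return conn


def classify(conn: sqlite3.Connection) -> None:
    # One set-based statement: aggregate per customer and map the total to a status.
    # A customer without sales lines gets NULL sums, so the CASE falls through to 0.
    conn.execute("""
        UPDATE custtable SET vipstatus = (
            SELECT CASE WHEN SUM(sl.salesqty) * SUM(sl.salesprice) > ? THEN 2
                        WHEN SUM(sl.salesqty) * SUM(sl.salesprice) > ? THEN 1
                        ELSE 0 END
            FROM salesline AS sl
            WHERE sl.custaccount = custtable.accountnum)
    """, (ULTIMATE_VIP, VIP))
    conn.commit()


def statuses(conn: sqlite3.Connection) -> dict:
    return dict(conn.execute("SELECT accountnum, vipstatus FROM custtable ORDER BY accountnum"))
```

Customer A totals 10 × 20,000 = 200,000 and becomes VIP (1). Customer B totals 2,000,000 and becomes UltimateVIP (2). Customers C and D stay at 0.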

Another operation that might seem difficult to convert to a set-based operation is an update that requires aggregation and group by, because the update_recordset operator doesn't support them. You can work around this limitation by using TempDB temporary tables and a combination of insert_recordset and update_recordset.

Note

The amount of data that you need to modify determines whether this pattern is beneficial. For example, if you just want to update 10 rows, a while select statement might be more efficient. But if you are updating hundreds or thousands of rows, this pattern can be more efficient. You’ll need to evaluate and test each pattern individually to determine which one provides better performance.

The following example first populates a table and then updates the values in it based on group by and sum operations. Note that deleting and populating the data takes longer than executing the subsequent insert_recordset and update_recordset statements.

public static server void PopulateTable()
{
    MyUpdRecordsetTestTable MyUpdRecordsetTestTable;
    int myGrouping, myKey, mySum;
    RecordInsertList ril = new RecordInsertList(tablenum(MyUpdRecordsetTestTable));

    delete_from MyUpdRecordsetTestTable;

    for (myKey = 0; myKey <= 100000; myKey++)
    {
        MyUpdRecordsetTestTable.Key = myKey;

        if (myKey mod 10 == 0)
        {
            myGrouping += 10;
            mySum += 10;
        }

        MyUpdRecordsetTestTable.fieldForGrouping = myGrouping;
        MyUpdRecordsetTestTable.theSum = mySum;
        ril.add(MyUpdRecordsetTestTable);
    }

    ril.insertDatabase();
}

Combine TempDB temporary tables, insert_recordset, and update_recordset to update the table:

public static void InsertAndUpdate()
{
    MyUpdRecordsetTestTable     MyUpdRecordsetTestTable;
    MyUpdRecordsetTestTableTmp  MyUpdRecordsetTestTableTmp;
    int                         tc;

    // Aggregate once into the TempDB table; insert_recordset accepts group by.
    tc = WinAPI::getTickCount();
    insert_recordset MyUpdRecordsetTestTableTmp (fieldForGrouping, theSum)
        select fieldForGrouping, sum(theSum) from MyUpdRecordsetTestTable
            group by MyUpdRecordsetTestTable.fieldForGrouping;
    info("Time needed: " + int2str(WinAPI::getTickCount() - tc));

    // Write the aggregated values back with a set-based update joined to the temporary table.
    tc = WinAPI::getTickCount();
    update_recordSet MyUpdRecordsetTestTable setting theSum = MyUpdRecordsetTestTableTmp.theSum
        join MyUpdRecordsetTestTableTmp
            where MyUpdRecordsetTestTable.fieldForGrouping == MyUpdRecordsetTestTableTmp.fieldForGrouping;
    info("Time needed: " + int2str(WinAPI::getTickCount() - tc));
}

When this code ran on demo data, the execution time of the operation was as follows:

insert_recordset statement 1,685 milliseconds

update_recordset statement 3,697 milliseconds
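The following sketch restates the same two-step pattern in plain SQL, using SQLite (Python's sqlite3) as a stand-in for SQL Server and TempDB. A temporary table receives the grouped sums through one INSERT ... SELECT ... GROUP BY. One UPDATE then copies each group's total back to its rows. A correlated subquery plays the role of the X++ join, which is an assumption made to stay compatible with older SQLite versions. The table is much smaller than in the chapter, and the column names are hypothetical.

```python
import sqlite3


def populate(conn: sqlite3.Connection, last_key: int) -> None:
    """Mirror of PopulateTable: every 10 keys, the grouping value and the sum grow by 10."""
    conn.execute("CREATE TABLE testtable (rec_key INTEGER PRIMARY KEY, grp INTEGER, thesum INTEGER)")
    grouping = total = 0
    rows = []
    for key in range(last_key + 1):
        if key % 10 == 0:
            grouping += 10
            total += 10
        rows.append((key, grouping, total))
    conn.executemany("INSERT INTO testtable VALUES (?, ?, ?)", rows)


def insert_and_update(conn: sqlite3.Connection) -> None:
    # Step 1 (insert_recordset into a temporary table): aggregate once with GROUP BY.
    conn.execute("CREATE TEMP TABLE tmp (grp INTEGER PRIMARY KEY, thesum INTEGER)")
    conn.execute("INSERT INTO tmp (grp, thesum) "
                 "SELECT grp, SUM(thesum) FROM testtable GROUP BY grp")
    # Step 2 (update_recordset ... join): write each group's total back to its rows.
    conn.execute("UPDATE testtable SET thesum = "
                 "(SELECT tmp.thesum FROM tmp WHERE tmp.grp = testtable.grp)")
    conn.commit()
```

With keys 0 through 29, each group of ten rows holds the value 10, 20, or 30. After the update, every row in a group carries the group total of 100, 200, or 300.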

In many scenarios in Microsoft Dynamics AX, application logic manipulates multiple rows from the same table. Some scenarios require that all rows be manipulated within the scope of a single transaction. In such a scenario, if something fails and the transaction is canceled, all modifications are rolled back, and the job can be restarted manually or automatically. Other scenarios commit the changes on a record-by-record basis. If a failure occurs in these scenarios, only the changes to the current record are rolled back, and all previously manipulated records remain committed. When a job is restarted in this scenario, it resumes where it left off by skipping the records that have already been changed.

An example of the first scenario is shown in the following code, in which all update queries to records in the CustTable table are wrapped into the scope of a single transaction:

static void UpdateCreditMax(Args _args)
{
    CustTable custTable;

    ttsBegin;

    while select forupdate custTable where custTable.CreditMax == 0
    {
        if (custTable.balanceMST() < 10000)
        {
            custTable.CreditMax = 50000;
            custTable.update();
        }
    }

    ttsCommit;
}

An example of the second scenario, executing the same logic, is shown in the following code, in which the transaction scope is handled on a record-by-record basis. You must reselect each individual CustTable record inside the transaction for the Microsoft Dynamics AX run time to allow the record to be updated:

static void UpdateCreditMax(Args _args)
{
    CustTable custTable;
    CustTable updateableCustTable;

    while select custTable where custTable.CreditMax == 0
    {
        if (custTable.balanceMST() < 10000)
        {
            ttsBegin;

            select forupdate updateableCustTable
                where updateableCustTable.AccountNum == custTable.AccountNum;

            updateableCustTable.CreditMax = 50000;
            updateableCustTable.update();

            ttsCommit;
        }
    }
}

In a scenario in which 100 CustTable records qualify for the update, the first example would involve 1 select statement and 100 update statements being passed to the database, and the second example would involve 1 large select query and 100 additional select queries, plus the 100 update statements. The code in the first scenario would execute faster than the code in the second, but the first scenario would also hold the locks on the updated CustTable records longer because they wouldn't be committed on a record-by-record basis. The second example demonstrates superior concurrency over the first example because locks are held for a shorter time.
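The arithmetic in the preceding paragraph can be captured in a small model. This hypothetical Python function returns statement and transaction counts for the two patterns. It also returns a rough "peak locks" figure, which assumes one lock per updated record held until the enclosing commit. It is a model of the reasoning, not a measurement.

```python
def transaction_profile(pattern: str, qualifying: int) -> dict:
    """Statements, transactions, and peak locked records for n qualifying CustTable rows."""
    if qualifying < 0:
        raise ValueError("qualifying must be non-negative")
    if pattern == "single_transaction":
        # One select forupdate, n updates, and every updated row stays locked until ttsCommit.
        return {"selects": 1, "updates": qualifying,
                "transactions": 1, "peak_locks": qualifying}
    if pattern == "per_record":
        # One plain select, a reselect forupdate per row, and one row locked at a time.
        return {"selects": 1 + qualifying, "updates": qualifying,
                "transactions": qualifying, "peak_locks": min(qualifying, 1)}
    raise ValueError("unknown pattern: " + pattern)
```

For 100 qualifying records, the per-record pattern costs 100 extra selects. In exchange, at most one record is locked at any moment, compared with 100 in the single-transaction pattern.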

With the optimistic concurrency model in Microsoft Dynamics AX, you can take advantage of the benefits offered by both of the preceding examples. You can select records outside a transaction scope and update records inside a transaction scope—but only if the records are selected optimistically. In the following example, the optimisticlock keyword is applied to the select statement while maintaining a per-record transaction scope. Because the records are selected with the optimisticlock keyword, it isn’t necessary to reselect each record individually within the transaction scope.

static void UpdateCreditMax(Args _args)
{
    CustTable custTable;

    while select optimisticlock custTable where custTable.CreditMax == 0
    {
        if (custTable.balanceMST() < 10000)
        {
            ttsBegin;

            custTable.CreditMax = 50000;
            custTable.update();

            ttsCommit;
        }
    }
}

This approach passes the same number of statements to the database as the first example, but it has the improved concurrency of the second example because records are committed individually. The code in this example still doesn't perform as fast as the code in the first example because it carries the extra burden of per-record transaction management. You could optimize the example even further by committing records in batches, larger than a single record but smaller than the whole set, without decreasing concurrency considerably. However, the appropriate commit frequency always depends on the circumstances of the job.
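The following sketch illustrates the batching idea using SQLite (Python's sqlite3) as a stand-in database. Rows are read outside any transaction and then updated in explicit BEGIN/COMMIT batches of a chosen size. The table and account names are hypothetical. Re-checking creditmax = 0 in the UPDATE is only a crude stand-in for optimistic concurrency. Microsoft Dynamics AX detects conflicts differently.

```python
import sqlite3


def make_db(rows: int) -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:", isolation_level=None)  # we issue BEGIN/COMMIT ourselves
    conn.execute("CREATE TABLE cust (accountnum TEXT PRIMARY KEY, creditmax INTEGER)")
    conn.executemany("INSERT INTO cust VALUES (?, 0)", [(f"C{i:03d}",) for i in range(rows)])
    return conn


def update_in_batches(conn: sqlite3.Connection, batch_size: int) -> int:
    """Update qualifying rows, committing every batch_size rows; return the number of commits."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    # Read outside any transaction, like the optimisticlock select in the X++ example.
    pending = [r[0] for r in conn.execute(
        "SELECT accountnum FROM cust WHERE creditmax = 0 ORDER BY accountnum")]
    commits = 0
    for start in range(0, len(pending), batch_size):
        conn.execute("BEGIN")
        for acc in pending[start:start + batch_size]:
            # Skip the row if someone else changed it since it was read.
            conn.execute("UPDATE cust SET creditmax = 50000 "
                         "WHERE accountnum = ? AND creditmax = 0", (acc,))
        conn.execute("COMMIT")
        commits += 1
    return commits
```

A batch size of 1 reproduces the per-record pattern. A batch size equal to the row count reproduces the single-transaction pattern. Because committed rows no longer match creditmax = 0, a restarted run skips them.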

Tip

You can use the forupdate keyword when selecting records outside the transaction if the table has been enabled for optimistic concurrency at the table level. The best practice, however, is to use the optimisticlock keyword explicitly because the scenario won’t fail if the table-level setting is changed. Using the optimisticlock keyword also improves the readability of the X++ code because the explicit intention of the developer is stated in the code.

The Microsoft Dynamics AX run time supports both single-record and set-based record caching. You can enable set-based caching in metadata by switching a property on a table definition or writing explicit X++ code that instantiates a cache. Regardless of how you set up caching, you don’t need to know which caching method is used because the run time handles the cache transparently. To optimize the use of the cache, however, you must understand how each caching mechanism works.

Microsoft Dynamics AX 2012 introduces some important new features for caching. For example, record-based caching works not only for a single record but for joins as well. This mechanism is described in the Record caching section, which follows next. Also, even if range operations are used in a query, caching is supported so long as the query contains a unique key lookup.

The Microsoft Dynamics AX 2012 software development kit (SDK) contains a good description of the individual caching options and how they are set up. See the topic “Record Caching” at http://msdn.microsoft.com/en-us/library/bb278240.aspx.

This section focuses on how the caches are implemented in the Microsoft Dynamics AX run time and what to expect when using specific caching mechanisms.

You can set up three types of record caching on a table by setting the CacheLookup property on the table definition: Found, FoundAndEmpty, and NotInTTS. An additional value (besides None) is EntireTable—a set-based caching option. These settings were introduced briefly in the section Caching and indexing, earlier in this chapter, and are discussed in greater detail in this section.

The three types of record caching are fundamentally the same. The differences are found in what is cached and when cached values are flushed. For example, the Found and FoundAndEmpty caches are preserved across transaction boundaries, but a table that uses the NotInTTS cache doesn’t use the cache when the cache is first accessed inside a transaction scope. Instead, the cache is used in consecutive select statements unless a forupdate keyword is applied to the select statement. (The forupdate keyword forces the run time to look up the record in the database because the previously cached record wasn’t selected with the forupdate keyword applied.)

The following X++ code example illustrates when the cache is used inside a transaction scope when a table uses the NotInTTS caching mechanism. The AccountNum field is the primary key. The code comments indicate when the cache is used. In the example, the first two select statements after the ttsbegin command don’t use the cache. The first statement doesn’t use the cache because it’s the first statement inside the transaction scope; the second doesn’t use the cache because the forupdate keyword is applied to the statement.

static void NotInTTSCache(Args _args)
{
    CustTable custTable;

    select custTable                              // Look up in cache. If record
        where custTable.AccountNum == '1101';     // does not exist, look up
                                                  // in database.

    ttsBegin;                                     // Start transaction.

    select custTable                              // Cache is invalid. Look up in
        where custTable.AccountNum == '1101';     // database and place in cache.

    select forupdate custTable                    // Look up in database because
        where custTable.AccountNum == '1101';     // forupdate keyword is applied.

    select custTable                              // Cache will be used.
        where custTable.AccountNum == '1101';     // No lookup in database.

    select forupdate custTable                    // Cache will be used because
        where custTable.AccountNum == '1101';     // forupdate keyword was used
                                                  // previously.

    ttsCommit;                                    // End transaction.

    select custTable                              // Cache will be used.
        where custTable.AccountNum == '1101';
}

If the table in the preceding example had been set up with Found or FoundAndEmpty caching, the cache would have been used when the first select statement was executed inside the transaction, but not when the first select forupdate statement was executed.

Note

By default, all Microsoft Dynamics AX system tables are set up using a Found cache. This cannot be changed.

For all three caching mechanisms, the cache is used only if the select statement contains equal-to (==) predicates in the where clause that exactly match all of the fields in the primary index of the table or in one of the unique indexes defined for the table. Therefore, the table's PrimaryIndex property must be set to the unique index that application logic uses when it accesses the cache. For all other unique indexes, the kernel automatically uses the cache, without any additional settings in metadata, if the record is already cached.

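The following X++ code example shows when the Microsoft Dynamics AX run time tries to use the cache. The first select statement uses the cache because its where clause matches the primary index exactly. The second and third statements can't use the cache because they don't specify equal-to predicates on the primary index fields, so they perform lookups in the database. The fourth statement contains more predicates than the primary key, but it can still use the cache if the record has already been cached.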

```xpp
static void UtilizeCache(Args _args)
{
    CustTable custTable;

    select custTable                          // Will use cache because only
        where custTable.AccountNum == '1101'; // the primary key is used as
                                              // predicate.

    select custTable;                         // Cannot use cache because no
                                              // "where" clause exists.

    select custTable                          // Cannot use cache because
        where custTable.AccountNum > '1101';  // equal-to (==) is not used.

    select custTable                          // Will use cache even if
        where custTable.AccountNum == '1101'  // where clause contains more
           && custTable.CustGroup == '20';    // predicates than the primary
                                              // key. This assumes that the
                                              // record has been successfully
                                              // cached before. See the next
                                              // sample.
}
```

Note

The RecId index, which is always unique on a table, can be set as the PrimaryIndex in the table’s properties. You can therefore set up caching by using the RecId field.
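As a sketch of the unique-index rule described earlier, the following job (its name is hypothetical) first fetches a customer through the primary key and then looks up the same record through the unique RecId index. It assumes the Contoso demo data used in the other examples and that CustTable has record caching enabled. Whether the second lookup is actually served from the cache depends on those settings, so the job only reports the result of wasCached rather than presuming it.

```xpp
static void LookupThroughRecIdIndex(Args _args)
{
    CustTable custTable;
    RecId     custRecId;

    // Primary-key lookup; on a cache miss the full record is fetched
    // from the database and placed in the cache.
    select custTable
        where custTable.AccountNum == '1101';
    custRecId = custTable.RecId;

    // Lookup through the unique RecId index. The kernel can use the
    // cached record here if it is already present.
    select custTable
        where custTable.RecId == custRecId;
    info(enum2str(custTable.wasCached()));
}
```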

The following example illustrates how the improved caching mechanics in Microsoft Dynamics AX 2012 work when the where clause of the query contains more than just the unique index key columns:

```xpp
static void whenRecordDoesGetCached(Args _args)
{
    CustTable custTable, custTable2;

    // Using Contoso demo data.

    // The following select statement will not cache a record when the table
    // uses the Found cache, because the lookup will not return a record.
    // It would cache the empty result if the cache setting were FoundAndEmpty.
    select custTable
        where custTable.AccountNum == '1101'
           && custTable.CustGroup == '20';

    // The following query will cache the record.
    select custTable
        where custTable.AccountNum == '1101';

    // The following query will be cached too, because the lookup
    // returns a record.
    select custTable2
        where custTable2.AccountNum == '1101'
           && custTable2.CustGroup == '10';

    // If you rerun the job, everything will come from the cache.
}
```

The following X++ code example shows how unique index caching works in the Microsoft Dynamics AX run time. The InventDim table in the base application has InventDimId as the primary key and a combination of fields (inventBatchId, wmsLocationId, wmsPalletId, inventSerialId, inventLocationId, configId, inventSizeId, inventColorId, and inventSiteId) as a unique index on the table.

Note

This sample is based on Microsoft Dynamics AX 2012. The index has been changed for Microsoft Dynamics AX 2012 R2.

```xpp
static void UtilizeUniqueIndexCache(Args _args)
{
    InventDim inventDim;
    InventDim inventDim2;

    select firstonly * from inventDim2;

    // Will use the cache because only the primary key is used as predicate.
    select inventDim
        where inventDim.InventDimId == inventDim2.InventDimId;
    info(enum2str(inventDim.wasCached()));

    // Will use the cache because the column list in the where clause matches
    // that of a unique index for the InventDim table, and the key values point
    // to the same record as the primary key fetch.
    select inventDim
        where inventDim.inventBatchId    == inventDim2.inventBatchId
           && inventDim.wmsLocationId    == inventDim2.wmsLocationId
           && inventDim.wmsPalletId      == inventDim2.wmsPalletId
           && inventDim.inventSerialId   == inventDim2.inventSerialId
           && inventDim.inventLocationId == inventDim2.inventLocationId
           && inventDim.ConfigId         == inventDim2.ConfigId
           && inventDim.inventSizeId     == inventDim2.inventSizeId
           && inventDim.inventColorId    == inventDim2.inventColorId
           && inventDim.inventSiteId     == inventDim2.inventSiteId;
    info(enum2str(inventDim.wasCached()));

    // Cannot use the cache because the where clause matches neither the
    // unique index column list nor the primary key.
    select firstonly inventDim
        where inventDim.inventLocationId == inventDim2.inventLocationId
           && inventDim.ConfigId         == inventDim2.ConfigId
           && inventDim.inventSiteId     == inventDim2.inventSiteId;
    info(enum2str(inventDim.wasCached()));
}
```

The Microsoft Dynamics AX run time ensures that all fields in a record are selected before they are cached. Therefore, if the run time can't find the record in the cache, it always modifies the field list to include all fields in the table before submitting the SELECT statement to the database. The following X++ code illustrates this behavior:

```xpp
static void expandingFieldList(Args _args)
{
    CustTable custTable;

    select CreditRating                       // The field list will be
        from custTable                        // expanded to all fields.
        where custTable.AccountNum == '1101';
}
```

Expanding the field list ensures that the record fetched from the database contains values for all fields before the record is inserted into the cache. Although fetching all fields performs worse than fetching only a few, this approach is acceptable because the performance gained from subsequent cache hits outweighs the initial cost of populating the cache.

Tip

You can avoid using the cache by calling the disableCache method on the record buffer with a Boolean parameter of true. This method forces the run time to look up the record in the database, and it also prevents the run time from expanding the field list.
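A minimal sketch of this Tip, assuming the same CustTable record '1101' used in the earlier examples (the job name is hypothetical). Because disableCache(true) is called on the buffer before the select, the lookup goes to the database and, as the Tip states, the field list isn't expanded, so only CreditRating is fetched.

```xpp
static void BypassRecordCache(Args _args)
{
    CustTable custTable;

    // Bypass the record cache for this buffer: the lookup goes straight to
    // the database, and the field list stays limited to CreditRating.
    custTable.disableCache(true);

    select CreditRating
        from custTable
        where custTable.AccountNum == '1101';

    info(custTable.CreditRating);
}
```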

The Microsoft Dynamics AX run time creates and uses caches on both the client tier and the server tier. The client-side cache is local to the Microsoft Dynamics AX client, and the server-side cache is shared among all connections to the server, including connections coming from Microsoft Dynamics AX Windows clients, web clients, the .NET Business Connector, and any other connection.

The cache that is used depends on the tier that the lookup is made from. If the lookup is executed on the server tier, the server-side cache is used. If the lookup is executed on the client tier, the client first looks in the client-side cache. If no record is found in the client-side cache, it executes a lookup in the server-side cache. If no record is found, a lookup is made in the database. When the database returns the record to the server and sends it on to the client, the record is inserted into both the server-side cache and the client-side cache.
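Because the cache that is consulted depends on where the lookup runs, one way to influence it is to control the tier of the calling method. The following sketch uses a hypothetical class, CacheTierDemo, with the server and client modifiers on static methods. It is shown as one listing for readability; in the AOT, the class declaration and each method are separate nodes. The comments only restate the lookup order described above.

```xpp
// Hypothetical class used only for illustration (classDeclaration node).
class CacheTierDemo
{
}

// Runs on the AOS: the lookup consults the server-side cache and,
// on a miss, the database.
server static CustGroupId custGroupOnServer(CustAccount _accountNum)
{
    return CustTable::find(_accountNum).CustGroup;
}

// Runs on the client: the lookup checks the client-side cache first,
// then the server-side cache, and finally the database.
client static CustGroupId custGroupOnClient(CustAccount _accountNum)
{
    return CustTable::find(_accountNum).CustGroup;
}
```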

In Microsoft Dynamics AX 2009, when caching was enabled, the client cached up to 100 records per table and the AOS cached up to 2,000 records per table. In Microsoft Dynamics AX 2012, you can configure the cache by using the Server Configuration form (System Administration > Setup > Server Configuration). For more information, see the section "Performance configuration options" later in this chapter.

Scenarios that perform multiple lookups on the same records and expect to find them in the cache can suffer performance degradation if the cache is continuously full, for two reasons: records that the aging scheme has removed from the cache force lookups in the database, and the run time constantly scans the cache tree to remove the oldest records. The following X++ code shows an example in which all SalesTable records are iterated through twice, and each loop looks up the associated CustTable record. If this code were executed on the server and it looked up more than 2,000 CustTable records (assuming that the server-side cache is set to 2,000 records), the oldest records would be removed from the cache, and the cache would no longer contain all of the CustTable records when the first loop ended. When the code iterates through the SalesTable records again, the records might not be in the cache, and the run time would go to the database to look up the CustTable records. The scenario would therefore perform much better with fewer than 2,000 CustTable records in the database.

```xpp
static void AgingScheme(Args _args)
{
    SalesTable salesTable;
    CustTable  custTable;

    while select salesTable order by CustAccount
    {
        select custTable                      // Fill up cache.
            where custTable.AccountNum == salesTable.CustAccount;
        // More code here.
    }

    while select salesTable order by CustAccount
    {
        select custTable                      // Record might not be in cache.
            where custTable.AccountNum == salesTable.CustAccount;
        // More code here.
    }
}
```

Important

Test performance improvements of record caching only on a database where the database size and data distribution resemble the production environment. (As the preceding example shows, whether lookups hit the cache depends on how many records the scenario touches.)
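Applying the idea of reusing record buffers to the aging-scheme example, one option is to look up a customer only when the account number changes. Because the loop orders SalesTable by CustAccount, consecutive sales orders for the same customer can share one CustTable buffer, so each loop performs one lookup per distinct customer instead of one per sales order. This is a sketch (the job name is hypothetical); it reduces, but does not eliminate, reliance on the record cache.

```xpp
static void ReuseBufferInLoop(Args _args)
{
    SalesTable salesTable;
    CustTable  custTable;

    while select salesTable order by CustAccount
    {
        // Records arrive sorted by CustAccount, so fetch the customer only
        // when the account changes; otherwise keep the buffer in hand.
        if (custTable.AccountNum != salesTable.CustAccount)
        {
            custTable = CustTable::find(salesTable.CustAccount);
        }

        // More code here.
    }
}
```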

Before the Microsoft Dynamics AX run time searches for, inserts, updates, or deletes records in the cache, it places a mutually exclusive lock that isn't released until the operation is complete. As a result, two processes running on the same server can't perform these operations in the cache at the same time: only one process can hold the lock at any given time, and the remaining processes are blocked. Blocking occurs only when the run time accesses the server-side cache. So although the caching capabilities of the run time are useful, you should use them only when appropriate. If you can reuse a record buffer that has already been fetched, do so. The following X++ code shows the same record fetched multiple times. The subsequent fetch operations use the cache, even though the code could have reused the record buffer from the first fetch.

```xpp
static void ReuseRecordBuffer(Args _args)
{
    CustTable    custTable;
    CurrencyCode myCustCurrency;
    CustGroupId  myCustGroupId;
    PaymTermId   myCustPaymTermId;

    // Bad coding pattern: the same record is looked up three times.
    myCustGroupId    = CustTable::find('1101').CustGroup;
    myCustPaymTermId = CustTable::find('1101').PaymTermId;
    myCustCurrency   = CustTable::find('1101').Currency;

    // The cache will be used for these lookups, but it is much more
    // efficient to reuse the buffer, because even cache lookups are not "free."

    // Good coding pattern: look up once, then read fields from the buffer.
    custTable        = CustTable::find('1101');
    myCustGroupId    = custTable.CustGroup;
    myCustPaymTermId = custTable.PaymTermId;
    myCustCurrency   = custTable.Currency;
}
```

The unique index join cache is new to Microsoft Dynamics AX 2012 and allows caching of subtype and supertype tables, one-to-one relation joins with a unique lookup, or a combination of both. A key constraint with this type of cache is that you can look up only one record through a unique index and you can join only over unique columns.

The following example illustrates all three possible variations:

    public static void main(Args args)
    {
        SalesTable    header;
        SalesLine     line;
        DirPartyTable party;
        CustTable     customer;
        int           i;

        // Subtype, supertype table caching
        for (i = 0; i < 1000; i++)
            select party where party.RecId == 5637144829;

        // 1:1 join data caching
        for (i = 0; i < 1000; i++)
            select line
                join header
                where line.RecId == 5637144586
                   && line.SalesId == header.SalesId;

        // Combination of subtype, supertype, and 1:1 join caching
        for (i = 0; i < 1000; i++)
            select customer
                join party
                where customer.AccountNum == '4000'
                   && customer.Party == party.RecId;