Chapter 2. Supervised Learning

As we mentioned earlier, supervised machine learning is one of the most commonly used and successful types of machine learning. In this chapter, we will describe supervised learning in more detail and explain several popular supervised learning algorithms. We already saw an application of supervised machine learning in Chapter 1: classifying iris flowers into several species using physical measurements of the flowers.

Remember that supervised learning is used whenever we want to predict a certain outcome from a given input, and we have examples of input/output pairs. We build a machine learning model from these input/output pairs, which comprise our training set. Our goal is to make accurate predictions for new, never-before-seen data. Supervised learning often requires human effort to build the training set, but afterward automates and often speeds up an otherwise laborious or infeasible task.

2.1 Classification and Regression

There are two major types of supervised machine learning problems, called classification and regression.

In classification, the goal is to predict a class label, which is a choice from a predefined list of possibilities. In Chapter 1 we used the example of classifying irises into one of three possible species. Classification is sometimes separated into binary classification, which is the special case of distinguishing between exactly two classes, and multiclass classification, which is classification between more than two classes. You can think of binary classification as trying to answer a yes/no question. Classifying emails as either spam or not spam is an example of a binary classification problem. In this binary classification task, the yes/no question being asked would be “Is this email spam?”

Note

In binary classification we often speak of one class being the positive class and the other class being the negative class. Here, positive doesn’t represent having benefit or value, but rather what the object of the study is. So, when looking for spam, “positive” could mean the spam class. Which of the two classes is called positive is often a subjective matter, and specific to the domain.

The iris example, on the other hand, is an example of a multiclass classification problem. Another example is predicting what language a website is in from the text on the website. The classes here would be a pre-defined list of possible languages.

For regression tasks, the goal is to predict a continuous number, or a floating-point number in programming terms (or real number in mathematical terms). Predicting a person’s annual income from their education, their age, and where they live is an example of a regression task. When predicting income, the predicted value is an amount, and can be any number in a given range. Another example of a regression task is predicting the yield of a corn farm given attributes such as previous yields, weather, and number of employees working on the farm. The yield again can be an arbitrary number.

An easy way to distinguish between classification and regression tasks is to ask whether there is some kind of continuity in the output. If there is continuity between possible outcomes, then the problem is a regression problem. Think about predicting annual income. There is a clear continuity in the output. Whether a person makes $40,000 or $40,001 a year does not make a tangible difference, even though these are different amounts of money; if our algorithm predicts $39,999 or $40,001 when it should have predicted $40,000, we don’t mind that much.

By contrast, for the task of recognizing the language of a website (which is a classification problem), there is no matter of degree. A website is in one language, or it is in another. There is no continuity between languages, and there is no language that is between English and French.1

2.2 Generalization, Overfitting, and Underfitting

In supervised learning, we want to build a model on the training data and then be able to make accurate predictions on new, unseen data that has the same characteristics as the training set that we used. If a model is able to make accurate predictions on unseen data, we say it is able to generalize from the training set to the test set. We want to build a model that is able to generalize as accurately as possible.

Usually we build a model in such a way that it can make accurate predictions on the training set. If the training and test sets have enough in common, we expect the model to also be accurate on the test set. However, there are some cases where this can go wrong. For example, if we allow ourselves to build very complex models, we can always be as accurate as we like on the training set.

Let’s take a look at a made-up example to illustrate this point. Say a novice data scientist wants to predict whether a customer will buy a boat, given records of previous boat buyers and customers who we know are not interested in buying a boat.2 The goal is to send out promotional emails to people who are likely to actually make a purchase, but not bother those customers who won’t be interested.

Suppose we have the customer records shown in Table 2-1.

| Age | Number of cars owned |

Owns house | Number of children | Marital status | Owns a dog | Bought a boat |

|---|---|---|---|---|---|---|

66 |

1 |

yes |

2 |

widowed |

no |

yes |

52 |

2 |

yes |

3 |

married |

no |

yes |

22 |

0 |

no |

0 |

married |

yes |

no |

25 |

1 |

no |

1 |

single |

no |

no |

44 |

0 |

no |

2 |

divorced |

yes |

no |

39 |

1 |

yes |

2 |

married |

yes |

no |

26 |

1 |

no |

2 |

single |

no |

no |

40 |

3 |

yes |

1 |

married |

yes |

no |

53 |

2 |

yes |

2 |

divorced |

no |

yes |

64 |

2 |

yes |

3 |

divorced |

no |

no |

58 |

2 |

yes |

2 |

married |

yes |

yes |

33 |

1 |

no |

1 |

single |

no |

no |

After looking at the data for a while, our novice data scientist comes up with the following rule: “If the customer is older than 45, and has less than 3 children or is not divorced, then they want to buy a boat.” When asked how well this rule of his does, our data scientist answers, “It’s 100 percent accurate!” And indeed, on the data that is in the table, the rule is perfectly accurate. There are many possible rules we could come up with that would explain perfectly if someone in this dataset wants to buy a boat. No age appears twice in the data, so we could say people who are 66, 52, 53, or 58 years old want to buy a boat, while all others don’t. While we can make up many rules that work well on this data, remember that we are not interested in making predictions for this dataset; we already know the answers for these customers. We want to know if new customers are likely to buy a boat. We therefore want to find a rule that will work well for new customers, and achieving 100 percent accuracy on the training set does not help us there. We might not expect that the rule our data scientist came up with will work very well on new customers. It seems too complex, and it is supported by very little data. For example, the “or is not divorced” part of the rule hinges on a single customer.

The only measure of whether an algorithm will perform well on new data is the evaluation on the test set. However, intuitively3 we expect simple models to generalize better to new data. If the rule was “People older than 50 want to buy a boat,” and this would explain the behavior of all the customers, we would trust it more than the rule involving children and marital status in addition to age. Therefore, we always want to find the simplest model. Building a model that is too complex for the amount of information we have, as our novice data scientist did, is called overfitting. Overfitting occurs when you fit a model too closely to the particularities of the training set and obtain a model that works well on the training set but is not able to generalize to new data. On the other hand, if your model is too simple—say, “Everybody who owns a house buys a boat”—then you might not be able to capture all the aspects of and variability in the data, and your model will do badly even on the training set. Choosing too simple a model is called underfitting.

The more complex we allow our model to be, the better we will be able to predict on the training data. However, if our model becomes too complex, we start focusing too much on each individual data point in our training set, and the model will not generalize well to new data.

There is a sweet spot in between that will yield the best generalization performance. This is the model we want to find.

The trade-off between overfitting and underfitting is illustrated in Figure 2-1.

Figure 2-1. Trade-off of model complexity against training and test accuracy

2.2.1 Relation of Model Complexity to Dataset Size

It’s important to note that model complexity is intimately tied to the variation of inputs contained in your training dataset: the larger variety of data points your dataset contains, the more complex a model you can use without overfitting. Usually, collecting more data points will yield more variety, so larger datasets allow building more complex models. However, simply duplicating the same data points or collecting very similar data will not help.

Going back to the boat selling example, if we saw 10,000 more rows of customer data, and all of them complied with the rule “If the customer is older than 45, and has less than 3 children or is not divorced, then they want to buy a boat,” we would be much more likely to believe this to be a good rule than when it was developed using only the 12 rows in Table 2-1.

Having more data and building appropriately more complex models can often work wonders for supervised learning tasks. In this book, we will focus on working with datasets of fixed sizes. In the real world, you often have the ability to decide how much data to collect, which might be more beneficial than tweaking and tuning your model. Never underestimate the power of more data.

2.3 Supervised Machine Learning Algorithms

We will now review the most popular machine learning algorithms and explain how they learn from data and how they make predictions. We will also discuss how the concept of model complexity plays out for each of these models, and provide an overview of how each algorithm builds a model. We will examine the strengths and weaknesses of each algorithm, and what kind of data they can best be applied to. We will also explain the meaning of the most important parameters and options.4 Many algorithms have a classification and a regression variant, and we will describe both.

It is not necessary to read through the descriptions of each algorithm in detail, but understanding the models will give you a better feeling for the different ways machine learning algorithms can work. This chapter can also be used as a reference guide, and you can come back to it when you are unsure about the workings of any of the algorithms.

2.3.1 Some Sample Datasets

We will use several datasets to illustrate the different algorithms. Some of the datasets will be small and synthetic (meaning made-up), designed to highlight particular aspects of the algorithms. Other datasets will be large, real-world examples.

An example of a synthetic two-class classification dataset is the

forge dataset, which has two features. The following code creates a

scatter plot (Figure 2-2) visualizing all of the data points in this dataset. The

plot has the first feature on the x-axis and the second feature on the

y-axis. As is always the case in scatter plots, each data point is

represented as one dot. The color and shape of the dot indicates its

class:

In[1]:

# generate datasetX,y=mglearn.datasets.make_forge()# plot datasetmglearn.discrete_scatter(X[:,0],X[:,1],y)plt.legend(["Class 0","Class 1"],loc=4)plt.xlabel("First feature")plt.ylabel("Second feature")("X.shape:",X.shape)

Out[1]:

X.shape: (26, 2)

Figure 2-2. Scatter plot of the forge dataset

As you can see from X.shape, this dataset consists of 26 data points,

with 2 features.

To illustrate regression algorithms, we will use the synthetic wave

dataset. The wave dataset has a single input feature and a continuous

target variable (or response) that we want to model. The plot created

here (Figure 2-3) shows the single

feature on the x-axis and the regression target (the output) on the

y-axis:

In[2]:

X,y=mglearn.datasets.make_wave(n_samples=40)plt.plot(X,y,'o')plt.ylim(-3,3)plt.xlabel("Feature")plt.ylabel("Target")

Figure 2-3. Plot of the wave dataset, with the x-axis showing the feature and the y-axis showing the regression target

We are using these very simple, low-dimensional datasets because we can easily visualize them—a printed page has two dimensions, so data with more than two features is hard to show. Any intuition derived from datasets with few features (also called low-dimensional datasets) might not hold in datasets with many features (high-dimensional datasets). As long as you keep that in mind, inspecting algorithms on low-dimensional datasets can be very instructive.

We will complement these small synthetic datasets with two real-world

datasets that are included in scikit-learn. One is the Wisconsin

Breast Cancer dataset (cancer, for short), which records clinical

measurements of breast cancer tumors. Each tumor is labeled as “benign”

(for harmless tumors) or “malignant” (for cancerous tumors), and the

task is to learn to predict whether a tumor is malignant based on the

measurements of the tissue.

The data can be loaded using the load_breast_cancer function from

scikit-learn:

In[3]:

fromsklearn.datasetsimportload_breast_cancercancer=load_breast_cancer()("cancer.keys():",cancer.keys())

Out[3]:

cancer.keys(): dict_keys(['data', 'target', 'target_names', 'DESCR', 'feature_names', 'filename'])

Note

Datasets that are included in scikit-learn are usually stored

as Bunch objects, which contain some information about the dataset as

well as the actual data. All you need to know about Bunch objects is

that they behave like dictionaries, with the added benefit that you can

access values using a dot (as in bunch.key instead of bunch['key']).

The dataset consists of 569 data points, with 30 features each:

In[4]:

("Shape of cancer data:",cancer.data.shape)

Out[4]:

Shape of cancer data: (569, 30)

Of these 569 data points, 212 are labeled as malignant and 357 as benign:

In[5]:

("Sample counts per class:",{n:vforn,vinzip(cancer.target_names,np.bincount(cancer.target))})

Out[5]:

Sample counts per class:

{'malignant': 212, 'benign': 357}

To get a description of the semantic meaning of each feature, we can

have a look at the feature_names attribute:

In[6]:

("Feature names:",cancer.feature_names)

Out[6]:

Feature names: ['mean radius' 'mean texture' 'mean perimeter' 'mean area' 'mean smoothness' 'mean compactness' 'mean concavity' 'mean concave points' 'mean symmetry' 'mean fractal dimension' 'radius error' 'texture error' 'perimeter error' 'area error' 'smoothness error' 'compactness error' 'concavity error' 'concave points error' 'symmetry error' 'fractal dimension error' 'worst radius' 'worst texture' 'worst perimeter' 'worst area' 'worst smoothness' 'worst compactness' 'worst concavity' 'worst concave points' 'worst symmetry' 'worst fractal dimension']

You can find out more about the data by reading cancer.DESCR if you

are interested.

We will also be using a real-world regression dataset, the Boston Housing dataset. The task associated with this dataset is to predict the median value of homes in several Boston neighborhoods in the 1970s, using information such as crime rate, proximity to the Charles River, highway accessibility, and so on. The dataset contains 506 data points, described by 13 features:

In[7]:

fromsklearn.datasetsimportload_bostonboston=load_boston()("Data shape:",boston.data.shape)

Out[7]:

Data shape: (506, 13)

Again, you can get more information about the dataset by reading the

DESCR attribute of boston. For our purposes here, we will actually

expand this dataset by not only considering these 13 measurements as

input features, but also looking at all products (also called

interactions) between features. In other words, we will not only

consider crime rate and highway accessibility as features, but also the

product of crime rate and highway accessibility. Including derived

feature like these is called feature engineering, which we will

discuss in more detail in

Chapter 4. This derived dataset can be loaded using the

load_extended_boston function:

In[8]:

X,y=mglearn.datasets.load_extended_boston()("X.shape:",X.shape)

Out[8]:

X.shape: (506, 104)

The resulting 104 features are the 13 original features together with the 91 possible combinations of two features within those 13 (with replacement).5

We will use these datasets to explain and illustrate the properties of the different machine learning algorithms. But for now, let’s get to the algorithms themselves. First, we will revisit the k-nearest neighbors (k-NN) algorithm that we saw in the previous chapter.

2.3.2 k-Nearest Neighbors

The k-NN algorithm is arguably the simplest machine learning algorithm. Building the model consists only of storing the training dataset. To make a prediction for a new data point, the algorithm finds the closest data points in the training dataset—its “nearest neighbors.”

k-Neighbors classification

In its simplest version, the k-NN algorithm only considers exactly one

nearest neighbor, which is the closest training data point to the point

we want to make a prediction for. The prediction is then simply the

known output for this training point. Figure 2-4 illustrates this for the case of classification on

the forge dataset:

In[9]:

mglearn.plots.plot_knn_classification(n_neighbors=1)

Figure 2-4. Predictions made by the one-nearest-neighbor model on the forge dataset

Here, we added three new data points, shown as stars. For each of them, we marked the closest point in the training set. The prediction of the one-nearest-neighbor algorithm is the label of that point (shown by the color of the cross).

Instead of considering only the closest neighbor, we can also consider an arbitrary number, k, of neighbors. This is where the name of the k-nearest neighbors algorithm comes from. When considering more than one neighbor, we use voting to assign a label. This means that for each test point, we count how many neighbors belong to class 0 and how many neighbors belong to class 1. We then assign the class that is more frequent: in other words, the majority class among the k-nearest neighbors. The following example (Figure 2-5) uses the three closest neighbors:

In[10]:

mglearn.plots.plot_knn_classification(n_neighbors=3)

Figure 2-5. Predictions made by the three-nearest-neighbors model on the forge dataset

Again, the prediction is shown as the color of the cross. You can see that the prediction for the new data point at the top left is not the same as the prediction when we used only one neighbor.

While this illustration is for a binary classification problem, this method can be applied to datasets with any number of classes. For more classes, we count how many neighbors belong to each class and again predict the most common class.

Now let’s look at how we can apply the k-nearest neighbors

algorithm using scikit-learn. First, we split our data into a training

and a test set so we can evaluate generalization performance, as

discussed in Chapter 1:

In[11]:

fromsklearn.model_selectionimporttrain_test_splitX,y=mglearn.datasets.make_forge()X_train,X_test,y_train,y_test=train_test_split(X,y,random_state=0)

Next, we import and instantiate the class. This is when we can set parameters, like the number of neighbors to use. Here, we set it to 3:

In[12]:

fromsklearn.neighborsimportKNeighborsClassifierclf=KNeighborsClassifier(n_neighbors=3)

Now, we fit the classifier using the training set. For

KNeighborsClassifier this means storing the dataset, so we can compute

neighbors during prediction:

In[13]:

clf.fit(X_train,y_train)

To make predictions on the test data, we call the predict method. For

each data point in the test set, this computes its nearest neighbors in

the training set and finds the most common class among these:

In[14]:

("Test set predictions:",clf.predict(X_test))

Out[14]:

Test set predictions: [1 0 1 0 1 0 0]

To evaluate how well our model generalizes, we can call the score

method with the test data together with the test labels:

In[15]:

("Test set accuracy: {:.2f}".format(clf.score(X_test,y_test)))

Out[15]:

Test set accuracy: 0.86

We see that our model is about 86% accurate, meaning the model predicted the class correctly for 86% of the samples in the test dataset.

Analyzing KNeighborsClassifier

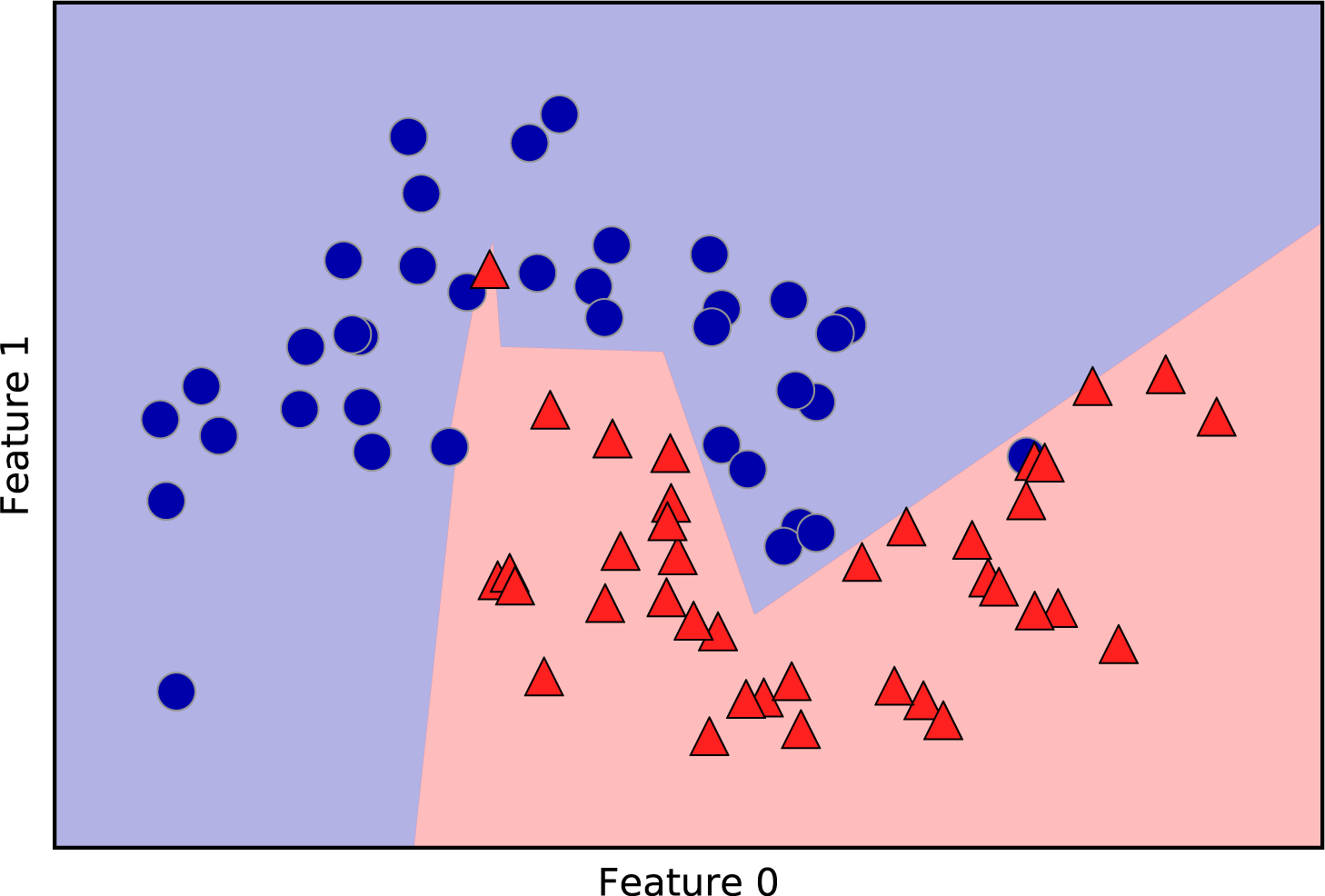

For two-dimensional datasets, we can also illustrate the prediction for all possible test points in the xy-plane. We color the plane according to the class that would be assigned to a point in this region. This lets us view the decision boundary, which is the divide between where the algorithm assigns class 0 versus where it assigns class 1. The following code produces the visualizations of the decision boundaries for one, three, and nine neighbors shown in Figure 2-6:

In[16]:

fig,axes=plt.subplots(1,3,figsize=(10,3))forn_neighbors,axinzip([1,3,9],axes):# the fit method returns the object self, so we can instantiate# and fit in one lineclf=KNeighborsClassifier(n_neighbors=n_neighbors).fit(X,y)mglearn.plots.plot_2d_separator(clf,X,fill=True,eps=0.5,ax=ax,alpha=.4)mglearn.discrete_scatter(X[:,0],X[:,1],y,ax=ax)ax.set_title("{} neighbor(s)".format(n_neighbors))ax.set_xlabel("feature 0")ax.set_ylabel("feature 1")axes[0].legend(loc=3)

Figure 2-6. Decision boundaries created by the nearest neighbors model for different values of n_neighbors

As you can see on the left in the figure, using a single neighbor results in a decision boundary that follows the training data closely. Considering more and more neighbors leads to a smoother decision boundary. A smoother boundary corresponds to a simpler model. In other words, using few neighbors corresponds to high model complexity (as shown on the right side of Figure 2-1), and using many neighbors corresponds to low model complexity (as shown on the left side of Figure 2-1). If you consider the extreme case where the number of neighbors is the number of all data points in the training set, each test point would have exactly the same neighbors (all training points) and all predictions would be the same: the class that is most frequent in the training set.

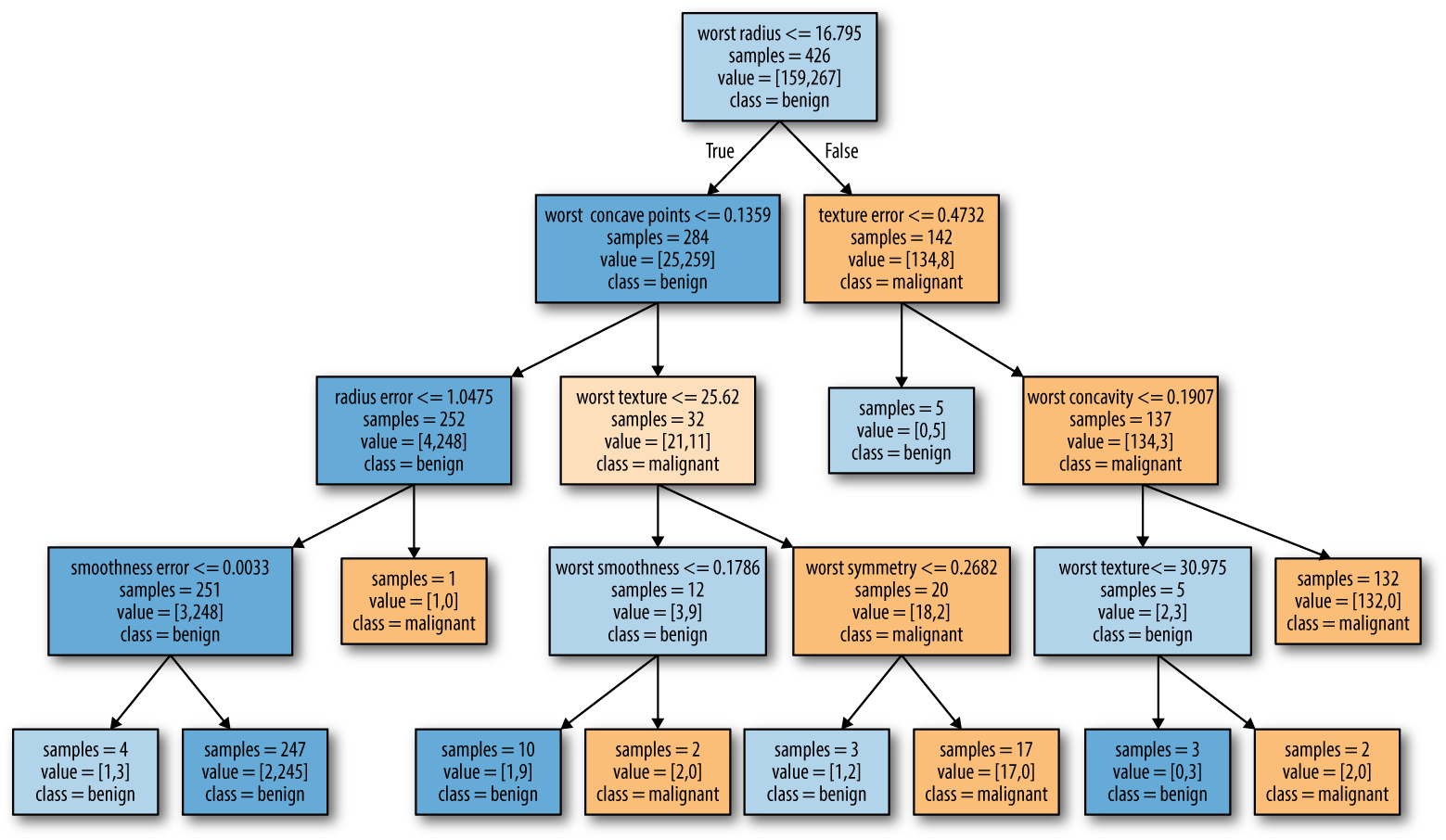

Let’s investigate whether we can confirm the connection between model complexity and generalization that we discussed earlier. We will do this on the real-world Breast Cancer dataset. We begin by splitting the dataset into a training and a test set. Then we evaluate training and test set performance with different numbers of neighbors. The results are shown in Figure 2-7:

In[17]:

fromsklearn.datasetsimportload_breast_cancercancer=load_breast_cancer()X_train,X_test,y_train,y_test=train_test_split(cancer.data,cancer.target,stratify=cancer.target,random_state=66)training_accuracy=[]test_accuracy=[]# try n_neighbors from 1 to 10neighbors_settings=range(1,11)forn_neighborsinneighbors_settings:# build the modelclf=KNeighborsClassifier(n_neighbors=n_neighbors)clf.fit(X_train,y_train)# record training set accuracytraining_accuracy.append(clf.score(X_train,y_train))# record generalization accuracytest_accuracy.append(clf.score(X_test,y_test))plt.plot(neighbors_settings,training_accuracy,label="training accuracy")plt.plot(neighbors_settings,test_accuracy,label="test accuracy")plt.ylabel("Accuracy")plt.xlabel("n_neighbors")plt.legend()

The plot shows the training and test set accuracy on the y-axis against

the setting of n_neighbors on the x-axis. While real-world plots

are rarely very smooth, we can still recognize some of the

characteristics of overfitting and underfitting (note that because considering

fewer neighbors corresponds to a more complex model, the plot is

horizontally flipped relative to the illustration in

Figure 2-1). Considering a single nearest neighbor, the prediction

on the training set is perfect. But when more neighbors are considered, the model

becomes simpler and the training accuracy drops. The test set

accuracy for using a single neighbor is lower than when using more

neighbors, indicating that using the single nearest neighbor leads to a

model that is too complex. On the other hand, when considering 10

neighbors, the model is too simple and performance is even worse. The

best performance is somewhere in the middle, using around six neighbors.

Still, it is good to keep the scale of the plot in mind. The worst

performance is around 88% accuracy, which might still be acceptable.

Figure 2-7. Comparison of training and test accuracy as a function of n_neighbors

k-neighbors regression

There is also a regression variant of the k-nearest neighbors algorithm.

Again, let’s start by using the single nearest neighbor, this time using

the wave dataset. We’ve added three test data points as green stars on

the x-axis. The prediction using a single neighbor is just the target

value of the nearest neighbor. These are shown as blue stars in

Figure 2-8:

In[18]:

mglearn.plots.plot_knn_regression(n_neighbors=1)

Figure 2-8. Predictions made by one-nearest-neighbor regression on the wave dataset

Again, we can use more than the single closest neighbor for regression. When using multiple nearest neighbors, the prediction is the average, or mean, of the relevant neighbors (Figure 2-9):

In[19]:

mglearn.plots.plot_knn_regression(n_neighbors=3)

Figure 2-9. Predictions made by three-nearest-neighbors regression on the wave dataset

The k-nearest neighbors algorithm for regression is implemented in the

KNeighborsRegressor class in scikit-learn. It’s used similarly to

KNeighborsClassifier:

In[20]:

fromsklearn.neighborsimportKNeighborsRegressorX,y=mglearn.datasets.make_wave(n_samples=40)# split the wave dataset into a training and a test setX_train,X_test,y_train,y_test=train_test_split(X,y,random_state=0)# instantiate the model and set the number of neighbors to consider to 3reg=KNeighborsRegressor(n_neighbors=3)# fit the model using the training data and training targetsreg.fit(X_train,y_train)

Now we can make predictions on the test set:

In[21]:

("Test set predictions:",reg.predict(X_test))

Out[21]:

Test set predictions: [-0.054 0.357 1.137 -1.894 -1.139 -1.631 0.357 0.912 -0.447 -1.139]

We can also evaluate the model using the score method, which for

regressors returns the R2 score. The R2

score, also known as the coefficient of determination, is a measure of

goodness of a prediction for a regression model, and yields a score

that’s usually between 0 and 1. A value of 1 corresponds to a perfect

prediction, and a value of 0 corresponds to a constant model that just

predicts the mean of the training set responses, y_train. The

formulation of R2 used here can even be negative, which

can indicate anticorrelated predictions.

In[22]:

("Test set R^2: {:.2f}".format(reg.score(X_test,y_test)))

Out[22]:

Test set R^2: 0.83

Here, the score is 0.83, which indicates a relatively good model fit.

Analyzing KNeighborsRegressor

For our one-dimensional dataset, we can see what the predictions look like for all possible feature values (Figure 2-10). To do this, we create a test dataset consisting of many points on the x-axis, which corresponds to the single feature:

In[23]:

fig,axes=plt.subplots(1,3,figsize=(15,4))# create 1,000 data points, evenly spaced between -3 and 3line=np.linspace(-3,3,1000).reshape(-1,1)forn_neighbors,axinzip([1,3,9],axes):# make predictions using 1, 3, or 9 neighborsreg=KNeighborsRegressor(n_neighbors=n_neighbors)reg.fit(X_train,y_train)ax.plot(line,reg.predict(line))ax.plot(X_train,y_train,'^',c=mglearn.cm2(0),markersize=8)ax.plot(X_test,y_test,'v',c=mglearn.cm2(1),markersize=8)ax.set_title("{} neighbor(s)train score: {:.2f} test score: {:.2f}".format(n_neighbors,reg.score(X_train,y_train),reg.score(X_test,y_test)))ax.set_xlabel("Feature")ax.set_ylabel("Target")axes[0].legend(["Model predictions","Training data/target","Test data/target"],loc="best")

Figure 2-10. Comparing predictions made by nearest neighbors regression for different values of n_neighbors

As we can see from the plot, using only a single neighbor, each point in the training set has an obvious influence on the predictions, and the predicted values go through all of the data points. This leads to a very unsteady prediction. Considering more neighbors leads to smoother predictions, but these do not fit the training data as well.

Strengths, weaknesses, and parameters

In principle, there are two important parameters to the KNeighbors

classifier: the number of neighbors and how you measure distance between

data points. In practice, using a small number of neighbors like three

or five often works well, but you should certainly adjust this

parameter. Choosing the right distance measure is somewhat beyond the

scope of this book. By default, Euclidean distance is used, which works

well in many settings.

One of the strengths of k-NN is that the model is very easy to understand, and often gives reasonable performance without a lot of adjustments. Using this algorithm is a good baseline method to try before considering more advanced techniques. Building the nearest neighbors model is usually very fast, but when your training set is very large (either in number of features or in number of samples) prediction can be slow. When using the k-NN algorithm, it’s important to preprocess your data (see Chapter 3). This approach often does not perform well on datasets with many features (hundreds or more), and it does particularly badly with datasets where most features are 0 most of the time (so-called sparse datasets).

So, while the k-nearest neighbors algorithm is easy to understand, it is not often used in practice, due to prediction being slow and its inability to handle many features. The method we discuss next has neither of these drawbacks.

2.3.3 Linear Models

Linear models are a class of models that are widely used in practice and have been studied extensively in the last few decades, with roots going back over a hundred years. Linear models make a prediction using a linear function of the input features, which we will explain shortly.

Linear models for regression

For regression, the general prediction formula for a linear model looks as follows:

- ŷ = w[0] * x[0] + w[1] * x[1] + ... + w[p] * x[p] + b

Here, x[0] to x[p] denotes the features (in this example, the number of features is p+1) of a single data point, w and b are parameters of the model that are learned, and ŷ is the prediction the model makes. For a dataset with a single feature, this is:

- ŷ = w[0] * x[0] + b

which you might remember from high school mathematics as the equation for a line. Here, w[0] is the slope and b is the y-axis offset. For more features, w contains the slopes along each feature axis. Alternatively, you can think of the predicted response as being a weighted sum of the input features, with weights (which can be negative) given by the entries of w.

Trying to learn the parameters w[0] and b on

our one-dimensional wave dataset might lead to the following line (see Figure 2-11):

In[24]:

mglearn.plots.plot_linear_regression_wave()

Out[24]:

w[0]: 0.393906 b: -0.031804

Figure 2-11. Predictions of a linear model on the wave dataset

We added a coordinate cross into the plot to make it easier to

understand the line. Looking at w[0] we see that the slope should be

around 0.4, which we can confirm visually in the plot. The intercept is

where the prediction line should cross the y-axis: this is slightly

below zero, which you can also confirm in the image.

Linear models for regression can be characterized as regression models for which the prediction is a line for a single feature, a plane when using two features, or a hyperplane in higher dimensions (that is, when using more features).

If you compare the predictions made by the straight line with those made

by the KNeighborsRegressor in Figure 2-10, using

a straight line to make predictions seems very restrictive. It looks

like all the fine details of the data are lost. In a sense, this is true.

It is a strong (and somewhat unrealistic) assumption that our target

y is a linear combination of the features. But looking at

one-dimensional data gives a somewhat skewed perspective. For datasets

with many features, linear models can be very powerful. In particular,

if you have more features than training data points, any target

y can be perfectly modeled (on the training set) as a

linear function.6

There are many different linear models for regression. The difference between these models lies in how the model parameters w and b are learned from the training data, and how model complexity can be controlled. We will now take a look at the most popular linear models for regression.

Linear regression (aka ordinary least squares)

Linear regression, or ordinary least squares (OLS), is the simplest and most classic linear method for regression. Linear regression finds the parameters w and b that minimize the mean squared error between predictions and the true regression targets, y, on the training set. The mean squared error is the sum of the squared differences between the predictions and the true values, divided by the number of samples. Linear regression has no parameters, which is a benefit, but it also has no way to control model complexity.

Here is the code that produces the model you can see in Figure 2-11:

In[25]:

fromsklearn.linear_modelimportLinearRegressionX,y=mglearn.datasets.make_wave(n_samples=60)X_train,X_test,y_train,y_test=train_test_split(X,y,random_state=42)lr=LinearRegression().fit(X_train,y_train)

The “slope” parameters (w), also called weights or coefficients, are

stored in the coef_ attribute, while the offset or intercept (b) is

stored in the intercept_ attribute:

In[26]:

("lr.coef_:",lr.coef_)("lr.intercept_:",lr.intercept_)

Out[26]:

lr.coef_: [0.394] lr.intercept_: -0.031804343026759746

Note

You might notice the strange-looking trailing underscore at the

end of coef_ and intercept_. scikit-learn always stores anything

that is derived from the training data in attributes that end with a

trailing underscore. That is to separate them from parameters that are

set by the user.

The intercept_ attribute is always a single float number, while the

coef_ attribute is a NumPy array with one entry per input feature. As

we only have a single input feature in the wave dataset, lr.coef_

only has a single entry.

Let’s look at the training set and test set performance:

In[27]:

("Training set score: {:.2f}".format(lr.score(X_train,y_train)))("Test set score: {:.2f}".format(lr.score(X_test,y_test)))

Out[27]:

Training set score: 0.67 Test set score: 0.66

An R2 of around 0.66 is not very good, but we can see

that the scores on the training and test sets are very close together. This

means we are likely underfitting, not overfitting. For this

one-dimensional dataset, there is little danger of overfitting, as the

model is very simple (or restricted). However, with higher-dimensional

datasets (meaning datasets with a large number of features), linear

models become more powerful, and there is a higher chance of

overfitting. Let’s take a look at how LinearRegression performs on a

more complex dataset, like the Boston Housing dataset. Remember that

this dataset has 506 samples and 104 derived features. First, we load

the dataset and split it into a training and a test set. Then we build

the linear regression model as before:

In[28]:

X,y=mglearn.datasets.load_extended_boston()X_train,X_test,y_train,y_test=train_test_split(X,y,random_state=0)lr=LinearRegression().fit(X_train,y_train)

When comparing training set and test set scores, we find that we predict very accurately on the training set, but the R2 on the test set is much worse:

In[29]:

("Training set score: {:.2f}".format(lr.score(X_train,y_train)))("Test set score: {:.2f}".format(lr.score(X_test,y_test)))

Out[29]:

Training set score: 0.95 Test set score: 0.61

This discrepancy between performance on the training set and the test set is a clear sign of overfitting, and therefore we should try to find a model that allows us to control complexity. One of the most commonly used alternatives to standard linear regression is ridge regression, which we will look into next.

Ridge regression

Ridge regression is also a linear model for regression, so the formula it uses to make predictions is the same one used for ordinary least squares. In ridge regression, though, the coefficients (w) are chosen not only so that they predict well on the training data, but also to fit an additional constraint. We also want the magnitude of coefficients to be as small as possible; in other words, all entries of w should be close to zero. Intuitively, this means each feature should have as little effect on the outcome as possible (which translates to having a small slope), while still predicting well. This constraint is an example of what is called regularization. Regularization means explicitly restricting a model to avoid overfitting. The particular kind used by ridge regression is known as L2 regularization.7

Ridge regression is implemented in linear_model.Ridge. Let’s see how

well it does on the extended Boston Housing dataset:

In[30]:

fromsklearn.linear_modelimportRidgeridge=Ridge().fit(X_train,y_train)("Training set score: {:.2f}".format(ridge.score(X_train,y_train)))("Test set score: {:.2f}".format(ridge.score(X_test,y_test)))

Out[30]:

Training set score: 0.89 Test set score: 0.75

As you can see, the training set score of Ridge is lower than for

LinearRegression, while the test set score is higher. This is

consistent with our expectation. With linear regression, we were

overfitting our data. Ridge is a more restricted model, so we are less

likely to overfit. A less complex model means worse performance on the

training set, but better generalization. As we are only interested in

generalization performance, we should choose the Ridge model over the

LinearRegression model.

The Ridge model makes a trade-off between the simplicity of the model

(near-zero coefficients) and its performance on the training set. How

much importance the model places on simplicity versus training set

performance can be specified by the user, using the alpha parameter.

In the previous example, we used the default parameter alpha=1.0.

There is no reason why this will give us the best trade-off, though. The

optimum setting of alpha depends on the particular dataset we are

using. Increasing alpha forces coefficients to move more toward zero,

which decreases training set performance but might help generalization.

For example:

In[31]:

ridge10=Ridge(alpha=10).fit(X_train,y_train)("Training set score: {:.2f}".format(ridge10.score(X_train,y_train)))("Test set score: {:.2f}".format(ridge10.score(X_test,y_test)))

Out[31]:

Training set score: 0.79 Test set score: 0.64

Decreasing alpha allows the coefficients to be less restricted,

meaning we move right in Figure 2-1. For very small values of alpha, coefficients are barely

restricted at all, and we end up with a model that resembles

LinearRegression:

In[32]:

ridge01=Ridge(alpha=0.1).fit(X_train,y_train)("Training set score: {:.2f}".format(ridge01.score(X_train,y_train)))("Test set score: {:.2f}".format(ridge01.score(X_test,y_test)))

Out[32]:

Training set score: 0.93 Test set score: 0.77

Here, alpha=0.1 seems to be working well. We could try decreasing

alpha even more to improve generalization. For now, notice how the

parameter alpha corresponds to the model complexity as shown in

Figure 2-1. We will discuss methods

to properly select parameters in

Chapter 5.

We can also get a more qualitative insight into how the alpha

parameter changes the model by inspecting the coef_ attribute of

models with different values of alpha. A higher alpha means a more

restricted model, so we expect the entries of coef_ to have smaller

magnitude for a high value of alpha than for a low value of alpha.

This is confirmed in the plot in Figure 2-12:

In[33]:

plt.plot(ridge.coef_,'s',label="Ridge alpha=1")plt.plot(ridge10.coef_,'^',label="Ridge alpha=10")plt.plot(ridge01.coef_,'v',label="Ridge alpha=0.1")plt.plot(lr.coef_,'o',label="LinearRegression")plt.xlabel("Coefficient index")plt.ylabel("Coefficient magnitude")plt.hlines(0,0,len(lr.coef_))plt.ylim(-25,25)plt.legend()

Figure 2-12. Comparing coefficient magnitudes for ridge regression with different values of alpha and linear regression

Here, the x-axis enumerates the entries of coef_: x=0 shows the

coefficient associated with the first feature, x=1 the coefficient

associated with the second feature, and so on up to x=100. The y-axis

shows the numeric values of the corresponding values of the coefficients.

The main takeaway here is that for alpha=10, the coefficients are

mostly between around –3 and 3. The coefficients for the Ridge model

with alpha=1, are somewhat larger. The dots corresponding to

alpha=0.1 have larger magnitude still, and many of the dots

corresponding to linear regression without any regularization (which

would be alpha=0) are so large they are outside of the chart.

Another way to understand the influence of regularization is to fix a

value of alpha but vary the amount of training data available. For

Figure 2-13, we subsampled the Boston

Housing dataset and evaluated LinearRegression and Ridge(alpha=1) on

subsets of increasing size (plots that show model performance as a

function of dataset size are called learning curves):

In[34]:

mglearn.plots.plot_ridge_n_samples()

Figure 2-13. Learning curves for ridge regression and linear regression on the Boston Housing dataset

As one would expect, the training score is higher than the test score for all dataset sizes, for both ridge and linear regression. Because ridge is regularized, the training score of ridge is lower than the training score for linear regression across the board. However, the test score for ridge is better, particularly for small subsets of the data. For less than 400 data points, linear regression is not able to learn anything. As more and more data becomes available to the model, both models improve, and linear regression catches up with ridge in the end. The lesson here is that with enough training data, regularization becomes less important, and given enough data, ridge and linear regression will have the same performance (the fact that this happens here when using the full dataset is just by chance). Another interesting aspect of Figure 2-13 is the decrease in training performance for linear regression. If more data is added, it becomes harder for a model to overfit, or memorize the data.

Lasso

An alternative to Ridge for regularizing linear regression is Lasso.

As with ridge regression, the lasso also restricts coefficients to be

close to zero, but in a slightly different way, called L1

regularization.8 The consequence of L1 regularization is that when

using the lasso, some coefficients are exactly zero. This means some

features are entirely ignored by the model. This can be seen as a form

of automatic feature selection. Having some coefficients be exactly zero

often makes a model easier to interpret, and can reveal the most

important features of your model.

Let’s apply the lasso to the extended Boston Housing dataset:

In[35]:

fromsklearn.linear_modelimportLassolasso=Lasso().fit(X_train,y_train)("Training set score: {:.2f}".format(lasso.score(X_train,y_train)))("Test set score: {:.2f}".format(lasso.score(X_test,y_test)))("Number of features used:",np.sum(lasso.coef_!=0))

Out[35]:

Training set score: 0.29 Test set score: 0.21 Number of features used: 4

As you can see, Lasso does quite badly, both on the training and the

test set. This indicates that we are underfitting, and we find that it

used only 4 of the 104 features. Similarly to Ridge, the Lasso also has

a regularization parameter, alpha, that controls how strongly

coefficients are pushed toward zero. In the previous example, we used

the default of alpha=1.0. To reduce underfitting, let’s try decreasing

alpha. When we do this, we also need to increase the default setting

of max_iter (the maximum number of iterations to run):

In[36]:

# we increase the default setting of "max_iter",# otherwise the model would warn us that we should increase max_iter.lasso001=Lasso(alpha=0.01,max_iter=100000).fit(X_train,y_train)("Training set score: {:.2f}".format(lasso001.score(X_train,y_train)))("Test set score: {:.2f}".format(lasso001.score(X_test,y_test)))("Number of features used:",np.sum(lasso001.coef_!=0))

Out[36]:

Training set score: 0.90 Test set score: 0.77 Number of features used: 33

A lower alpha allowed us to fit a more complex model, which worked

better on the training and test data. The performance is slightly better

than using Ridge, and we are using only 33 of the 104 features. This

makes this model potentially easier to understand.

If we set alpha too low, however, we again remove the effect of

regularization and end up overfitting, with a result similar to

LinearRegression:

In[37]:

lasso00001=Lasso(alpha=0.0001,max_iter=100000).fit(X_train,y_train)("Training set score: {:.2f}".format(lasso00001.score(X_train,y_train)))("Test set score: {:.2f}".format(lasso00001.score(X_test,y_test)))("Number of features used:",np.sum(lasso00001.coef_!=0))

Out[37]:

Training set score: 0.95 Test set score: 0.64 Number of features used: 94

Again, we can plot the coefficients of the different models, similarly to Figure 2-12. The result is shown in Figure 2-14:

In[38]:

plt.plot(lasso.coef_,'s',label="Lasso alpha=1")plt.plot(lasso001.coef_,'^',label="Lasso alpha=0.01")plt.plot(lasso00001.coef_,'v',label="Lasso alpha=0.0001")plt.plot(ridge01.coef_,'o',label="Ridge alpha=0.1")plt.legend(ncol=2,loc=(0,1.05))plt.ylim(-25,25)plt.xlabel("Coefficient index")plt.ylabel("Coefficient magnitude")

Figure 2-14. Comparing coefficient magnitudes for lasso regression with different values of alpha and ridge regression

For alpha=1, we not only see that most of the coefficients are zero (which we already

knew), but that the remaining coefficients are also small in magnitude.

Decreasing alpha to 0.01, we obtain the solution shown as an upward pointing triangle,

which causes most features to be exactly zero. Using alpha=0.0001, we get

a model that is quite unregularized, with most coefficients nonzero and

of large magnitude. For comparison, the best Ridge solution is shown as circles. The Ridge model with alpha=0.1 has similar predictive performance

as the lasso model with alpha=0.01, but using Ridge, all coefficients

are nonzero.

In practice, ridge regression is usually the first choice between these

two models. However, if you have a large amount of features and expect

only a few of them to be important, Lasso might be a better choice.

Similarly, if you would like to have a model that is easy to interpret,

Lasso will provide a model that is easier to understand, as it will

select only a subset of the input features. scikit-learn also provides

the ElasticNet class, which combines the penalties of Lasso and

Ridge. In practice, this combination works best, though at the price

of having two parameters to adjust: one for the L1 regularization, and

one for the L2 regularization.

Linear models for classification

Linear models are also extensively used for classification. Let’s look at binary classification first. In this case, a prediction is made using the following formula:

- ŷ = w[0] * x[0] + w[1] * x[1] + ... + w[p] * x[p] + b > 0

The formula looks very similar to the one for linear regression, but instead of just returning the weighted sum of the features, we threshold the predicted value at zero. If the function is smaller than zero, we predict the class –1; if it is larger than zero, we predict the class +1. This prediction rule is common to all linear models for classification. Again, there are many different ways to find the coefficients (w) and the intercept (b).

For linear models for regression, the output, ŷ, is a linear function of the features: a line, plane, or hyperplane (in higher dimensions). For linear models for classification, the decision boundary is a linear function of the input. In other words, a (binary) linear classifier is a classifier that separates two classes using a line, a plane, or a hyperplane. We will see examples of that in this section.

There are many algorithms for learning linear models. These algorithms all differ in the following two ways:

-

The way in which they measure how well a particular combination of coefficients and intercept fits the training data

-

If and what kind of regularization they use

Different algorithms choose different ways to measure what “fitting the training set well” means. For technical mathematical reasons, it is not possible to adjust w and b to minimize the number of misclassifications the algorithms produce, as one might hope. For our purposes, and many applications, the different choices for item 1 in the preceding list (called loss functions) are of little significance.

The two most common linear classification algorithms are logistic

regression, implemented in linear_model.LogisticRegression, and

linear support vector machines (linear SVMs), implemented in

svm.LinearSVC (SVC stands for support vector classifier). Despite its

name, LogisticRegression is a classification algorithm and not a

regression algorithm, and it should not be confused with

LinearRegression.

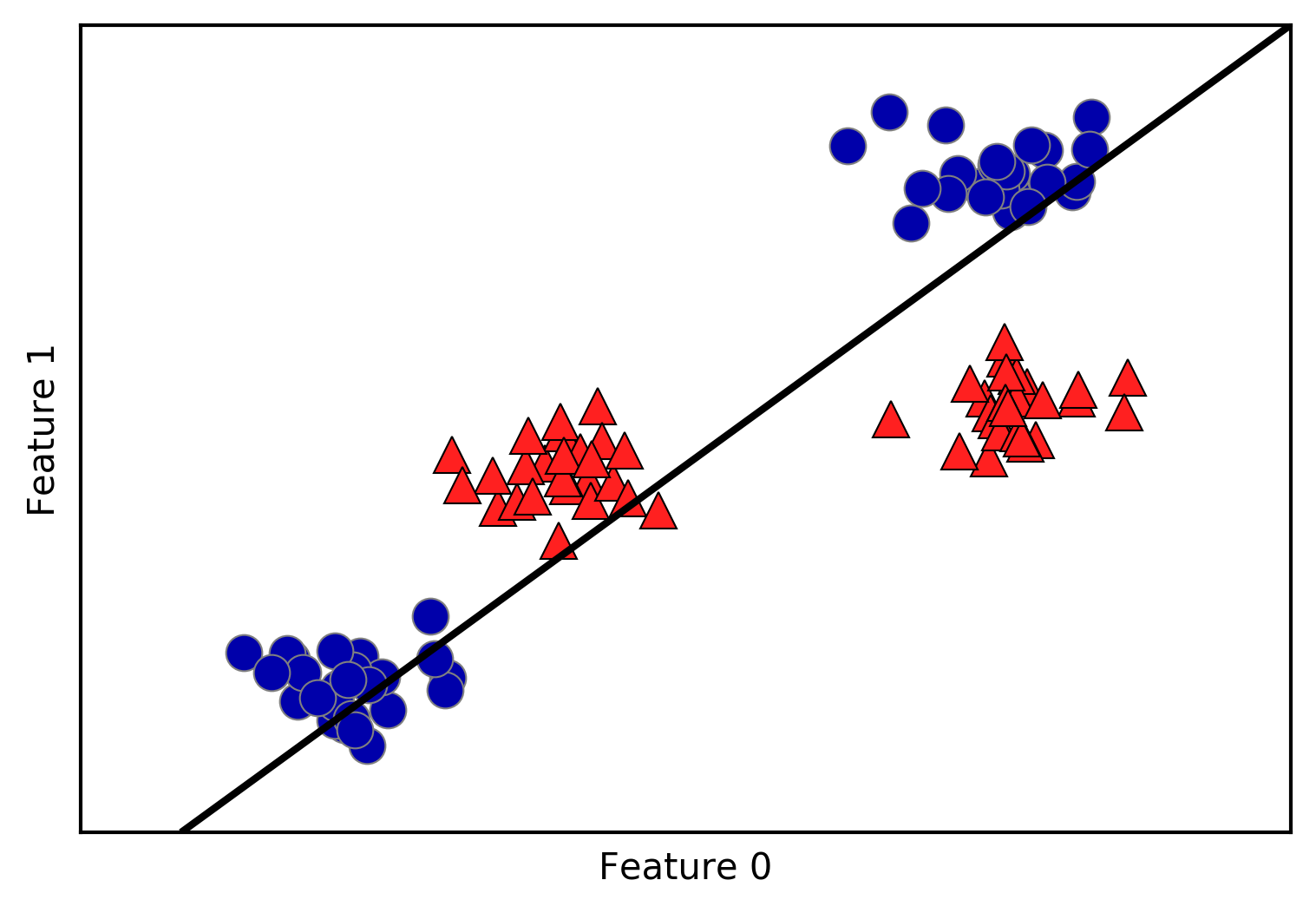

We can apply the LogisticRegression and LinearSVC models to the

forge dataset, and visualize the decision boundary as found by the

linear models (Figure 2-15):

In[39]:

fromsklearn.linear_modelimportLogisticRegressionfromsklearn.svmimportLinearSVCX,y=mglearn.datasets.make_forge()fig,axes=plt.subplots(1,2,figsize=(10,3))formodel,axinzip([LinearSVC(),LogisticRegression()],axes):clf=model.fit(X,y)mglearn.plots.plot_2d_separator(clf,X,fill=False,eps=0.5,ax=ax,alpha=.7)mglearn.discrete_scatter(X[:,0],X[:,1],y,ax=ax)ax.set_title(clf.__class__.__name__)ax.set_xlabel("Feature 0")ax.set_ylabel("Feature 1")axes[0].legend()

Figure 2-15. Decision boundaries of a linear SVM and logistic regression on the forge dataset with the default parameters

In this figure, we have the first feature of the forge dataset on the

x-axis and the second feature on the y-axis, as before. We display the

decision boundaries found by LinearSVC and LogisticRegression

respectively as straight lines, separating the area classified as class

1 on the top from the area classified as class 0 on the bottom. In other

words, any new data point that lies above the black line will be

classified as class 1 by the respective classifier, while any point that

lies below the black line will be classified as class 0.

The two models come up with similar decision boundaries. Note that both

misclassify two of the points. By default, both models apply an L2

regularization, in the same way that Ridge does for regression.

For LogisticRegression and LinearSVC the trade-off parameter that

determines the strength of the regularization is called C, and higher

values of C correspond to less regularization. In other words, when

you use a high value for the parameter C, LogisticRegression and

LinearSVC try to fit the training set as best as possible, while with

low values of the parameter C, the models put more emphasis on finding

a coefficient vector (w) that is close to zero.

There is another interesting aspect of how the parameter C acts. Using

low values of C will cause the algorithms to try to adjust to the

“majority” of data points, while using a higher value of C stresses

the importance that each individual data point be classified correctly.

Here is an illustration using LinearSVC (Figure 2-16):

In[40]:

mglearn.plots.plot_linear_svc_regularization()

Figure 2-16. Decision boundaries of a linear SVM on the forge dataset for different values of C

On the lefthand side, we have a very small C corresponding to a lot of

regularization. Most of the points in class 0 are at the bottom, and

most of the points in class 1 are at the top. The strongly regularized

model chooses a relatively horizontal line, misclassifying two points.

In the center plot, C is slightly higher, and the model focuses more

on the two misclassified samples, tilting the decision boundary.

Finally, on the righthand side, the very high value of C in the model

tilts the decision boundary a lot, now correctly classifying all points

in class 0. One of the points in class 1 is still misclassified, as it

is not possible to correctly classify all points in this dataset using a

straight line. The model illustrated on the righthand side tries hard to

correctly classify all points, but might not capture the overall layout

of the classes well. In other words, this model is likely overfitting.

Similarly to the case of regression, linear models for classification might seem very restrictive in low-dimensional spaces, only allowing for decision boundaries that are straight lines or planes. Again, in high dimensions, linear models for classification become very powerful, and guarding against overfitting becomes increasingly important when considering more features.

Let’s analyze LogisticRegression in more detail on the Breast Cancer

dataset:

In[41]:

fromsklearn.datasetsimportload_breast_cancercancer=load_breast_cancer()X_train,X_test,y_train,y_test=train_test_split(cancer.data,cancer.target,stratify=cancer.target,random_state=42)logreg=LogisticRegression().fit(X_train,y_train)("Training set score: {:.3f}".format(logreg.score(X_train,y_train)))("Test set score: {:.3f}".format(logreg.score(X_test,y_test)))

Out[41]:

Training set score: 0.953 Test set score: 0.958

The default value of C=1 provides quite good performance, with 95%

accuracy on both the training and the test set. But as training and test

set performance are very close, it is likely that we are underfitting.

Let’s try to increase C to fit a more flexible model:

In[42]:

logreg100=LogisticRegression(C=100).fit(X_train,y_train)("Training set score: {:.3f}".format(logreg100.score(X_train,y_train)))("Test set score: {:.3f}".format(logreg100.score(X_test,y_test)))

Out[42]:

Training set score: 0.972 Test set score: 0.965

Using C=100 results in higher training set accuracy, and also a

slightly increased test set accuracy, confirming our intuition that a

more complex model should perform better.

We can also investigate what happens if we use an even more regularized

model than the default of C=1, by setting C=0.01:

In[43]:

logreg001=LogisticRegression(C=0.01).fit(X_train,y_train)("Training set score: {:.3f}".format(logreg001.score(X_train,y_train)))("Test set score: {:.3f}".format(logreg001.score(X_test,y_test)))

Out[43]:

Training set score: 0.934 Test set score: 0.930

As expected, when moving more to the left along the scale shown in Figure 2-1 from an already underfit model, both training and test set accuracy decrease relative to the default parameters.

Finally, let’s look at the coefficients learned by the models with the

three different settings of the regularization parameter C (Figure 2-17):

In[44]:

plt.plot(logreg.coef_.T,'o',label="C=1")plt.plot(logreg100.coef_.T,'^',label="C=100")plt.plot(logreg001.coef_.T,'v',label="C=0.001")plt.xticks(range(cancer.data.shape[1]),cancer.feature_names,rotation=90)plt.hlines(0,0,cancer.data.shape[1])plt.ylim(-5,5)plt.xlabel("Feature")plt.ylabel("Coefficient magnitude")plt.legend()

Warning

As LogisticRegression applies an L2 regularization by default, the

result looks similar to that produced by Ridge in Figure 2-12. Stronger

regularization pushes coefficients more and more toward zero, though

coefficients never become exactly zero. Inspecting the plot more

closely, we can also see an interesting effect in the third coefficient,

for “mean perimeter.” For C=100 and C=1, the coefficient is negative,

while for C=0.001, the coefficient is positive, with a magnitude that is

even larger than for C=1. Interpreting a model like this, one might

think the coefficient tells us which class a feature is associated with.

For example, one might think that a high “texture error” feature is

related to a sample being “malignant.” However, the change of sign in

the coefficient for “mean perimeter” means that depending on which model

we look at, a high “mean perimeter” could be taken as being either

indicative of “benign” or indicative of “malignant.” This illustrates

that interpretations of coefficients of linear models should always be

taken with a grain of salt.

Figure 2-17. Coefficients learned by logistic regression on the Breast Cancer dataset for different values of C

If we desire a more interpretable model, using L1 regularization might help, as it limits the model to using only a few features. Here is the coefficient plot and classification accuracies for L1 regularization (Figure 2-18):

In[45]:

forC,markerinzip([0.001,1,100],['o','^','v']):lr_l1=LogisticRegression(C=C,penalty="l1").fit(X_train,y_train)("Training accuracy of l1 logreg with C={:.3f}: {:.2f}".format(C,lr_l1.score(X_train,y_train)))("Test accuracy of l1 logreg with C={:.3f}: {:.2f}".format(C,lr_l1.score(X_test,y_test)))plt.plot(lr_l1.coef_.T,marker,label="C={:.3f}".format(C))plt.xticks(range(cancer.data.shape[1]),cancer.feature_names,rotation=90)plt.hlines(0,0,cancer.data.shape[1])plt.xlabel("Feature")plt.ylabel("Coefficient magnitude")plt.ylim(-5,5)plt.legend(loc=3)

Out[45]:

Training accuracy of l1 logreg with C=0.001: 0.91 Test accuracy of l1 logreg with C=0.001: 0.92 Training accuracy of l1 logreg with C=1.000: 0.96 Test accuracy of l1 logreg with C=1.000: 0.96 Training accuracy of l1 logreg with C=100.000: 0.99 Test accuracy of l1 logreg with C=100.000: 0.98

As you can see, there are many parallels between linear models for

binary classification and linear models for regression. As in

regression, the main difference between the models is the penalty parameter, which

influences the regularization and whether the model will use all

available features or select only a subset.

Figure 2-18. Coefficients learned by logistic regression with L1 penalty on the Breast Cancer dataset for different values of C

Linear models for multiclass classification

Many linear classification models are for binary classification only, and don’t extend naturally to the multiclass case (with the exception of logistic regression). A common technique to extend a binary classification algorithm to a multiclass classification algorithm is the one-vs.-rest approach. In the one-vs.-rest approach, a binary model is learned for each class that tries to separate that class from all of the other classes, resulting in as many binary models as there are classes. To make a prediction, all binary classifiers are run on a test point. The classifier that has the highest score on its single class “wins,” and this class label is returned as the prediction.

Having one binary classifier per class results in having one vector of coefficients (w) and one intercept (b) for each class. The class for which the result of the classification confidence formula given here is highest is the assigned class label:

- w[0] * x[0] + w[1] * x[1] + ... + w[p] * x[p] + b

The mathematics behind multiclass logistic regression differ somewhat from the one-vs.-rest approach, but they also result in one coefficient vector and intercept per class, and the same method of making a prediction is applied.

Let’s apply the one-vs.-rest method to a simple three-class classification dataset. We use a two-dimensional dataset, where each class is given by data sampled from a Gaussian distribution (see Figure 2-19):

In[46]:

fromsklearn.datasetsimportmake_blobsX,y=make_blobs(random_state=42)mglearn.discrete_scatter(X[:,0],X[:,1],y)plt.xlabel("Feature 0")plt.ylabel("Feature 1")plt.legend(["Class 0","Class 1","Class 2"])

Figure 2-19. Two-dimensional toy dataset containing three classes

Now, we train a LinearSVC classifier on the dataset:

In[47]:

linear_svm=LinearSVC().fit(X,y)("Coefficient shape: ",linear_svm.coef_.shape)("Intercept shape: ",linear_svm.intercept_.shape)

Out[47]:

Coefficient shape: (3, 2) Intercept shape: (3,)

We see that the shape of the coef_ is (3, 2), meaning that each row

of coef_ contains the coefficient vector for one of the three classes

and each column holds the coefficient value for a specific feature

(there are two in this dataset). The intercept_ is now a

one-dimensional array, storing the intercepts for each class.

Let’s visualize the lines given by the three binary classifiers (Figure 2-20):

In[48]:

mglearn.discrete_scatter(X[:,0],X[:,1],y)line=np.linspace(-15,15)forcoef,intercept,colorinzip(linear_svm.coef_,linear_svm.intercept_,mglearn.cm3.colors):plt.plot(line,-(line*coef[0]+intercept)/coef[1],c=color)plt.ylim(-10,15)plt.xlim(-10,8)plt.xlabel("Feature 0")plt.ylabel("Feature 1")plt.legend(['Class 0','Class 1','Class 2','Line class 0','Line class 1','Line class 2'],loc=(1.01,0.3))

You can see that all the points belonging to class 0 in the training data are above the line corresponding to class 0, which means they are on the “class 0” side of this binary classifier. The points in class 0 are above the line corresponding to class 2, which means they are classified as “rest” by the binary classifier for class 2. The points belonging to class 0 are to the left of the line corresponding to class 1, which means the binary classifier for class 1 also classifies them as “rest.” Therefore, any point in this area will be classified as class 0 by the final classifier (the result of the classification confidence formula for classifier 0 is greater than zero, while it is smaller than zero for the other two classes).

But what about the triangle in the middle of the plot? All three binary classifiers classify points there as “rest.” Which class would a point there be assigned to? The answer is the one with the highest value for the classification formula: the class of the closest line.

Figure 2-20. Decision boundaries learned by the three one-vs.-rest classifiers

The following example (Figure 2-21) shows the predictions for all regions of the 2D space:

In[49]:

mglearn.plots.plot_2d_classification(linear_svm,X,fill=True,alpha=.7)mglearn.discrete_scatter(X[:,0],X[:,1],y)line=np.linspace(-15,15)forcoef,intercept,colorinzip(linear_svm.coef_,linear_svm.intercept_,mglearn.cm3.colors):plt.plot(line,-(line*coef[0]+intercept)/coef[1],c=color)plt.legend(['Class 0','Class 1','Class 2','Line class 0','Line class 1','Line class 2'],loc=(1.01,0.3))plt.xlabel("Feature 0")plt.ylabel("Feature 1")

Figure 2-21. Multiclass decision boundaries derived from the three one-vs.-rest classifiers

Strengths, weaknesses, and parameters

The main parameter of linear models is the regularization parameter,

called alpha in the regression models and C in LinearSVC and

LogisticRegression. Large values for alpha or small values for C mean simple models. In

particular for the regression models, tuning these parameters is quite

important. Usually C and alpha are searched for on a logarithmic

scale. The other decision you have to make is whether you want to use L1

regularization or L2 regularization. If you assume that only a few of your

features are actually important, you should use L1. Otherwise, you

should default to L2. L1 can also be useful if interpretability of the

model is important. As L1 will use only a few features, it is easier to

explain which features are important to the model, and what the effects

of these features are.

Linear models are very fast to train, and also fast to predict. They

scale to very large datasets and work well with sparse data. If your

data consists of hundreds of thousands or millions of samples, you might

want to investigate using the solver='sag' option in

LogisticRegression and Ridge, which can be faster than the default

on large datasets. Other options are the SGDClassifier class and the

SGDRegressor class, which implement even more scalable versions of the

linear models described here.

Another strength of linear models is that they make it relatively easy to understand how a prediction is made, using the formulas we saw earlier for regression and classification. Unfortunately, it is often not entirely clear why coefficients are the way they are. This is particularly true if your dataset has highly correlated features; in these cases, the coefficients might be hard to interpret.

Linear models often perform well when the number of features is large compared to the number of samples. They are also often used on very large datasets, simply because it’s not feasible to train other models. However, in lower-dimensional spaces, other models might yield better generalization performance. We will look at some examples in which linear models fail in Section 2.3.7.

2.3.4 Naive Bayes Classifiers

Naive Bayes classifiers are a family of classifiers that are quite

similar to the linear models discussed in the previous section. However, they tend to be

even faster in training. The price paid for this efficiency is that

naive Bayes models often provide generalization performance that is

slightly worse than that of linear classifiers like LogisticRegression and

LinearSVC.

The reason that naive Bayes models are so efficient is that they learn

parameters by looking at each feature individually and collect simple

per-class statistics from each feature. There are three kinds of naive

Bayes classifiers implemented in scikit-learn: GaussianNB,

BernoulliNB, and MultinomialNB. GaussianNB can be applied to any

continuous data, while BernoulliNB assumes binary data and

MultinomialNB assumes count data (that is, that each feature represents an

integer count of something, like how often a word appears in a

sentence). BernoulliNB and MultinomialNB are mostly used in text

data classification.

The BernoulliNB classifier counts how often every feature of each

class is not zero. This is most easily understood with an example:

In[53]:

X=np.array([[0,1,0,1],[1,0,1,1],[0,0,0,1],[1,0,1,0]])y=np.array([0,1,0,1])

Here, we have four data points, with four binary features each. There are two classes, 0 and 1. For class 0 (the first and third data points), the first feature is zero two times and nonzero zero times, the second feature is zero one time and nonzero one time, and so on. These same counts are then calculated for the data points in the second class. Counting the nonzero entries per class in essence looks like this:

In[54]:

counts={}forlabelinnp.unique(y):# iterate over each class# count (sum) entries of 1 per featurecounts[label]=X[y==label].sum(axis=0)("Feature counts:",counts)

Out[54]:

Feature counts:

{0: array([0, 1, 0, 2]), 1: array([2, 0, 2, 1])}

The other two naive Bayes models, MultinomialNB and GaussianNB, are

slightly different in what kinds of statistics they compute.

MultinomialNB takes into account the average value of each feature for

each class, while GaussianNB stores the average value as well as the

standard deviation of each feature for each class.

To make a prediction, a data point is compared to the statistics for

each of the classes, and the best matching class is predicted.

Interestingly, for both MultinomialNB and BernoulliNB, this leads to a

prediction formula that is of the same form as in the linear models

(see “Linear models for classification”). Unfortunately, coef_ for the naive Bayes models has a

somewhat different meaning than in the linear models, in that coef_ is

not the same as w.

Strengths, weaknesses, and parameters

MultinomialNB

and BernoulliNB have a single parameter, alpha,

which controls model complexity. The way alpha works is that the

algorithm adds to the data alpha many virtual data points that have

positive values for all the features. This results in a “smoothing” of

the statistics. A large alpha means more smoothing, resulting in less

complex models. The algorithm’s performance is relatively robust to the

setting of alpha, meaning that setting alpha is not critical for good

performance. However, tuning it usually improves accuracy somewhat.

GaussianNB is mostly used on very high-dimensional data, while the

other two variants of naive Bayes are widely used for sparse count data

such as text. MultinomialNB usually performs better than BernoulliNB, particularly on datasets with a relatively large number of nonzero

features (i.e., large documents).

The naive Bayes models share many of the strengths and weaknesses of the linear models. They are very fast to train and to predict, and the training procedure is easy to understand. The models work very well with high-dimensional sparse data and are relatively robust to the parameters. Naive Bayes models are great baseline models and are often used on very large datasets, where training even a linear model might take too long.

2.3.5 Decision Trees

Decision trees are widely used models for classification and regression tasks. Essentially, they learn a hierarchy of if/else questions, leading to a decision.

These questions are similar to the questions you might ask in a game of 20 Questions. Imagine you want to distinguish between the following four animals: bears, hawks, penguins, and dolphins. Your goal is to get to the right answer by asking as few if/else questions as possible. You might start off by asking whether the animal has feathers, a question that narrows down your possible animals to just two. If the answer is “yes,” you can ask another question that could help you distinguish between hawks and penguins. For example, you could ask whether the animal can fly. If the animal doesn’t have feathers, your possible animal choices are dolphins and bears, and you will need to ask a question to distinguish between these two animals—for example, asking whether the animal has fins.

This series of questions can be expressed as a decision tree, as shown in Figure 2-22.

In[55]:

mglearn.plots.plot_animal_tree()

Figure 2-22. A decision tree to distinguish among several animals

In this illustration, each node in the tree either represents a question or a terminal node (also called a leaf) that contains the answer. The edges connect the answers to a question with the next question you would ask.

In machine learning parlance, we built a model to distinguish between four classes of animals (hawks, penguins, dolphins, and bears) using the three features “has feathers,” “can fly,” and “has fins.” Instead of building these models by hand, we can learn them from data using supervised learning.

Building decision trees

Let’s go through the process of building a decision tree for the 2D

classification dataset shown in Figure 2-23. The

dataset consists of two half-moon shapes, with each class consisting of

75 data points. We will refer to this dataset as two_moons.

Learning a decision tree means learning the sequence of if/else questions that gets us to the true answer most quickly. In the machine learning setting, these questions are called tests (not to be confused with the test set, which is the data we use to test to see how generalizable our model is). Usually data does not come in the form of binary yes/no features as in the animal example, but is instead represented as continuous features such as in the 2D dataset shown in Figure 2-23. The tests that are used on continuous data are of the form “Is feature i larger than value a?”

Figure 2-23. Two-moons dataset on which the decision tree will be built

To build a tree, the algorithm searches over all possible tests and

finds the one that is most informative about the target variable. Figure 2-24 shows the first

test that is picked. Splitting the dataset horizontally at x[1]=0.0596

yields the most information; it best separates the points in class 0

from the points in class 1. The top node, also called the root,

represents the whole dataset, consisting of 50 points belonging to class

0 and 50 points belonging to class 1. The split is done by testing whether

x[1] <= 0.0596, indicated by a black line. If the test is true, a

point is assigned to the left node, which contains 2 points belonging to

class 0 and 32 points belonging to class 1. Otherwise the point is

assigned to the right node, which contains 48 points belonging to class

0 and 18 points belonging to class 1. These two nodes correspond to the

top and bottom regions shown in Figure 2-24. Even though the first

split did a good job of separating the two classes, the bottom region still

contains points belonging to class 0, and the top region still contains

points belonging to class 1.

We can build a more accurate model by repeating the process of looking

for the best test in both regions. Figure 2-25 shows that the most informative next split for the left

and the right region is based on x[0].

Figure 2-24. Decision boundary of tree with depth 1 (left) and corresponding tree (right)

Figure 2-25. Decision boundary of tree with depth 2 (left) and corresponding decision tree (right)

This recursive process yields a binary tree of decisions, with each node containing a test. Alternatively, you can think of each test as splitting the part of the data that is currently being considered along one axis. This yields a view of the algorithm as building a hierarchical partition. As each test concerns only a single feature, the regions in the resulting partition always have axis-parallel boundaries.

The recursive partitioning of the data is repeated until each region in the partition (each leaf in the decision tree) only contains a single target value (a single class or a single regression value). A leaf of the tree that contains data points that all share the same target value is called pure. The final partitioning for this dataset is shown in Figure 2-26.

Figure 2-26. Decision boundary of tree with depth 9 (left) and part of the corresponding tree (right); the full tree is quite large and hard to visualize

A prediction on a new data point is made by checking which region of the partition of the feature space the point lies in, and then predicting the majority target (or the single target in the case of pure leaves) in that region. The region can be found by traversing the tree from the root and going left or right, depending on whether the test is fulfilled or not.

It is also possible to use trees for regression tasks, using exactly the same technique. To make a prediction, we traverse the tree based on the tests in each node and find the leaf the new data point falls into. The output for this data point is the mean target of the training points in this leaf.

Controlling complexity of decision trees